UPDATED February 11, 2020: The blog has been updated with new information and the recommended solution.

Some of my customers have run into a problem where they can't add ESXi hosts to vCenter after upgrading to 6.7 Update 3/3a and adding new ESXi 6.7 Update 3 hosts.

When they try to add a host to vCenter, they get this error:

A general system error occurred: Unable to push CA certificates and CRLs to host <hostname/IP>

The problem is mentioned in the release notes (“You might be unable to add a self-signed certificate to the ESXi trust store and fail to add an ESXi host to the vCenter Server system”), but that is not a very good description.

There are two solutions: the one in the release notes, and the workaround I had already published.

NOTE: This is fixed in later versions, so if you still see the problem, check NTP and the time on the ESXi host, and make sure the host certificate is not issued with a start date in the future.
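To check whether a host certificate's start date lies in the future, you can read it with `openssl s_client -connect esx01.domain.local:443 </dev/null 2>/dev/null | openssl x509 -noout -startdate`. That command prints a line such as `notBefore=Feb 11 08:00:00 2020 GMT`. The Python sketch below parses that line with the standard library's `ssl.cert_time_to_seconds` and compares it with the local UTC clock. The function names and the optional `tolerance` parameter are illustrative helpers, not part of any VMware tool.

```python
import ssl
from datetime import datetime, timedelta, timezone
from typing import Optional


def parse_not_before(openssl_line: str) -> datetime:
    """Parse output of `openssl x509 -noout -startdate`, e.g. 'notBefore=Feb 11 08:00:00 2020 GMT'."""
    # Drop the 'notBefore=' prefix if present
    value = openssl_line.strip().split("=", 1)[-1].strip()
    # cert_time_to_seconds expects 'Mon DD HH:MM:SS YYYY GMT' and returns seconds since the epoch (UTC)
    return datetime.fromtimestamp(ssl.cert_time_to_seconds(value), tz=timezone.utc)


def not_before_in_future(openssl_line: str,
                         now: Optional[datetime] = None,
                         tolerance: timedelta = timedelta(0)) -> bool:
    """True if the certificate is not yet valid at `now` (defaults to the local UTC clock)."""
    now = now or datetime.now(timezone.utc)
    return parse_not_before(openssl_line) > now + tolerance
```

If `not_before_in_future` returns True for a host, fix NTP/time on that host before trying to add it again.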

The workaround: change an advanced setting on vCenter under vCenter -> Configure -> Settings -> Advanced Settings:

vpxd.certmgmt.mode = thumbprint

This may also affect other operations on the ESXi hosts. I have not checked, but I believe it also means that you cannot push new certificates to hosts that are already added, and it may affect other things as well.

Note: This workaround can create new problems later; see the blog.

The solution in the VMware release notes is to change an advanced setting: “The fix adds the advanced option Config.HostAgent.ssl.keyStore.allowSelfSigned. If you already face the issue, set this option to TRUE to add a self-signed server certificate to the ESXi trust store”. What they forget to mention is that you then need to restart the management agents (“services.sh restart”) through the server console or SSH, or reboot the hosts.

To make this a little easier, I have made a script that does it from PowerCLI, using plink.exe for the restart. The script is just a sample that you can modify for your own use case.

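```powershell
# Sample only: enable Config.HostAgent.ssl.keyStore.allowSelfSigned on one host,
# then restart the management agents over SSH with plink.exe
$cmd      = "services.sh restart"
$hostname = "esx01.domain.local"
$plink    = "C:\temp\plink.exe"   # adjust to where plink.exe lives

# Plain text is needed because the password is passed to plink on the command line
$esx_Password = Read-Host "Input ESXi root password"

# Connect directly to the host and set the advanced option from the release notes
$vi  = Connect-VIServer -Server $hostname -User root -Password $esx_Password
$esx = Get-VMHost -Server $vi
Get-AdvancedSetting -Entity $esx -Name "Config.HostAgent.ssl.keyStore.allowSelfSigned" |
    Set-AdvancedSetting -Value $true -Confirm:$false

# Temporarily start SSH so plink can run the restart command
$sshService = Get-VMHostService -VMHost $esx | Where-Object { $_.Key -eq "TSM-SSH" }
Start-VMHostService -HostService $sshService -Confirm:$false

# "echo y" answers plink's host key prompt on the first connection
cmd /c "echo y | $plink -ssh -pw $esx_Password root@$hostname $cmd"

# services.sh restart drops the PowerCLI session, so reconnect once hostd is back
Disconnect-VIServer $vi -Confirm:$false
Start-Sleep -Seconds 60   # assumption: adjust to how long the agents take to restart
$vi = Connect-VIServer -Server $hostname -User root -Password $esx_Password

# Stop SSH again
$sshService = Get-VMHost -Server $vi | Get-VMHostService | Where-Object { $_.Key -eq "TSM-SSH" }
Stop-VMHostService -HostService $sshService -Confirm:$false

Disconnect-VIServer $vi -Confirm:$false
```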

Hope this will help you.

The best solution would be not to use self-signed certificates.

Note: Check that the time is correct on the ESXi hosts; a wrong clock can cause similar problems.
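One way to spot a wrong clock is to compare the host's time with a trusted reference. The Python sketch below assumes you already have the host's time as an ISO 8601 UTC string such as `2020-02-11T10:15:30Z`, for example from `esxcli system time get` on the host. It flags the host when its time differs from the reference by more than a threshold. The 5-minute default threshold and the function names are illustrative assumptions, not VMware values.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def parse_host_time(value: str) -> datetime:
    """Parse an ISO 8601 UTC timestamp such as '2020-02-11T10:15:30Z'."""
    return datetime.strptime(value.strip(), "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def clock_skew(host_time: str, reference: Optional[datetime] = None) -> timedelta:
    """Host time minus reference time; positive means the host clock is ahead."""
    reference = reference or datetime.now(timezone.utc)
    return parse_host_time(host_time) - reference


def clock_ok(host_time: str,
             reference: Optional[datetime] = None,
             max_skew: timedelta = timedelta(minutes=5)) -> bool:
    """True if the absolute skew is within max_skew (hypothetical threshold)."""
    return abs(clock_skew(host_time, reference)) <= max_skew
```

Any host that fails this check should have its NTP configuration fixed before you retry adding it.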

vSphere 6: Start Time Error (70034) When Adding ESXi Hosts

When adding an ESXi host to a cluster, you might see this error:

A general system error occurred: Unable to get signed certificate for host <your host>. Error: Start Time Error (70034).

You are more likely to see this in a lab environment, but you may also see it in production if you have just generated the certificate that replaces the VMware Certificate Authority (VMCA) root certificate, to make VMCA an intermediate authority within your lab/organisation. I like to do that pretty much first thing after installation, before adding any ESXi hosts, so that from that point forward all compliant services get their certificates from VMCA.

However, according to this article, VMCA backdates the ESXi certificates by 24 hours when adding hosts to the environment, to avoid time-synchronisation issues. If you've just generated VMCA's own certificate, its start time may be later than the start time of the ESXi certificates it generates, hence the error. The suggested fix is either to wait 24 hours or to add the hosts before replacing VMCA's root certificate. Given the choice, I prefer the first route, as avoiding manual handling of certificates going forward is the whole point here.

Once it’s left for 24 hours, adding hosts works fine without any problems.
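The timing rule above can be sketched as a simple check. This is a simplified model with two assumptions. First, VMCA gives a new ESXi certificate a start time of "now minus `minutesBefore`", which is 1440 minutes (24 hours) by default and is controlled by the vCenter advanced setting `vpxd.certmgmt.certs.minutesBefore`. Second, signing fails when that start time is earlier than the start time of VMCA's own signing certificate. The function names are illustrative.

```python
from datetime import datetime, timedelta, timezone


def leaf_not_before(now: datetime, minutes_before: int = 1440) -> datetime:
    """Start time VMCA is assumed to give a new ESXi certificate: backdated by minutes_before."""
    return now - timedelta(minutes=minutes_before)


def start_time_error(ca_not_before: datetime, now: datetime, minutes_before: int = 1440) -> bool:
    """True if the backdated host certificate would start before the CA certificate does."""
    return leaf_not_before(now, minutes_before) < ca_not_before


def earliest_safe_time(ca_not_before: datetime, minutes_before: int = 1440) -> datetime:
    """First moment at which the backdated start time no longer precedes the CA's start time."""
    return ca_not_before + timedelta(minutes=minutes_before)
```

With the default of 1440 minutes, the check only passes 24 hours after the new VMCA certificate was created, which matches the wait described above. A smaller `minutesBefore` shortens that window.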

Hope this helps!


Being in the industry for over 25 years, Ather has been a vExpert for 10 years running and is also vExpert NSX/HCX/CloudProvider. He has also been an official VMware blogger at VMworld EU and US and is one of the founding members and contributor to Open HomeLab Wiki and co-hosts @OpenTechCast as well.

Ather’s natural habitat is tech events like VMworld, Cloud (and other) Field Days, VMUGs etc. and he thrives on meeting like-minded people and having a good old chat about technology. He’s friendly and not dangerous at all so please do interact with him whenever you spot him in such surroundings.


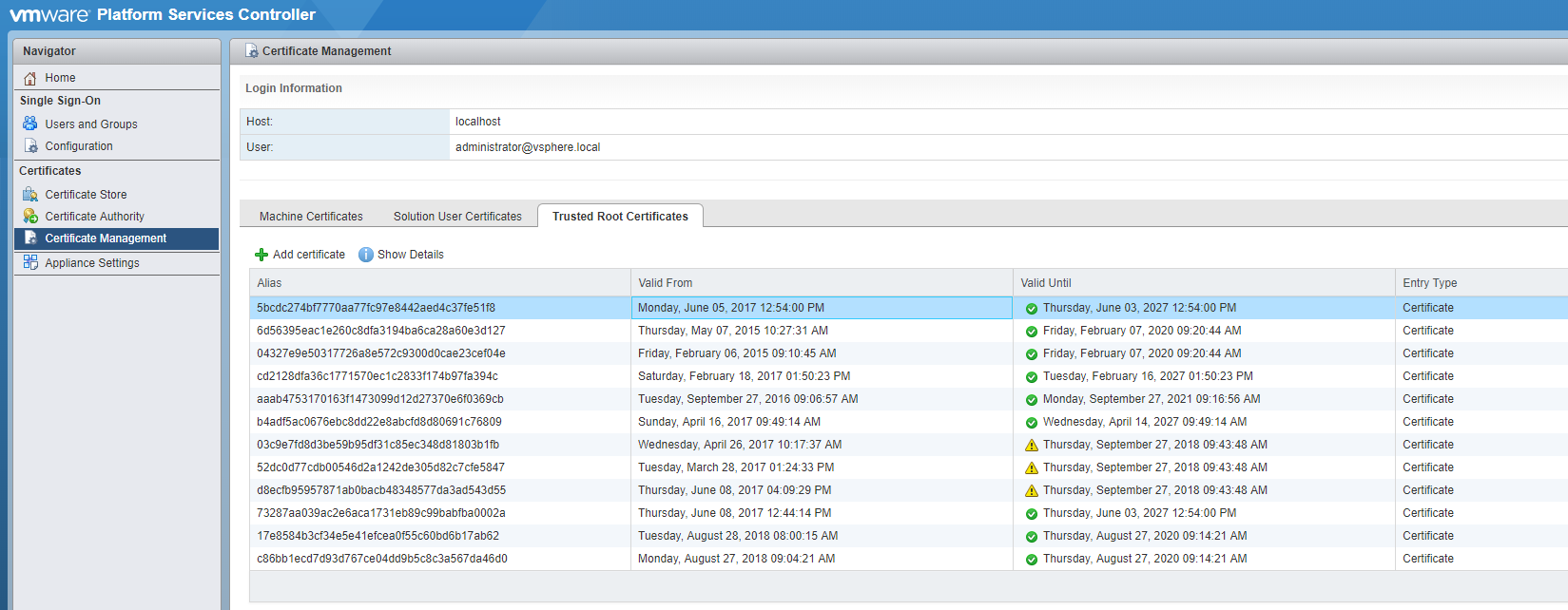

Managing certificates in a large vSphere environment has never been particularly fun. Admittedly, it has improved vastly since the release of 6.x and the integrated certificate authority, but it can still be a chore to update a large environment. I recently had to update the PSC, vCenter, and ESXi host certificates due to a looming expiration date on the CA certificate and ran into a strange issue that ultimately VMware Support helped me to track down. I figured it was worth sharing the problem for future reference. The TL;DR version of this story is that having too many old trusted root certificates in the VCSA’s trusted root store may cause this issue.

In this environment the VMCA acts as a subordinate or intermediate CA to an internal Microsoft Certificate Services infrastructure. There is a great VMware blog post that walks through some different approaches to managing certificates with the VMCA. For what it’s worth, the next time around I’m going to try the hybrid approach that is outlined there.

Initially, things were going pretty well. I issued a new subordinate CA certificate for the VMCA and updated it on the external PSC. I then issued a new machine certificate for vCenter and issued new solution certificates. All of this completed with no issues, as far as I could tell. For reference, here are the VMware docs covering both of these steps.

Creating a Microsoft Certificate Authority Template for SSL certificate creation in vSphere 6.x

Replace VMCA Root Certificate with Custom Signing Certificate and Replace All Certificates

After the vCenter services restarted, I tried to access the vSphere Web Client and was presented with the following error:

**A server error occurred.**

[400] An error occurred while sending an authentication request to the vCenter Single Sign-On server - An error occurred when processing the metadata during vCenter Single Sign-On setup - javax.net.ssl.SSLException: java.lang.RuntimeException: Unexpected error: java.security.InvalidAlgorithmParameterException: the trustAnchors parameter must be non-empty.

*Check the vSphere Web Client server logs for details.*

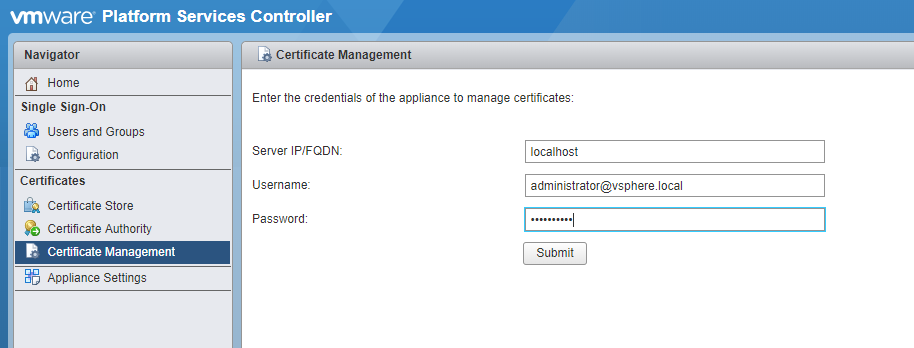

I double-checked all of the certificates. The machine certificate and solution certificates all had a new expiration date, showed the full CA chain correctly, and had no issues. The PSC has a handy certificate management UI where you can easily view the certificates for both the PSC and any vCenters.

The certificate manager script also has an option to revert to the previous certificates. I tried this, and although I confirmed that it restored the previous certificates successfully, I was still getting the web client error. After a healthy amount of searching and combing through logs, I opened a support ticket with VMware. As usual, the VMware support tech was very helpful, and after checking my certificates and consulting with his colleagues, he wanted to try deleting some of the trusted root certificates that were no longer needed.

This has to be done using an LDAP client/explorer, and JXplorer is recommended. This KB article, though written for a different issue, walks you through using JXplorer:

How to use JXplorer to update the LDAP string

In JXplorer, browse to Configuration -> Certificate-Authorities and view the details for each of the certificate IDs listed. Using the DNs shown there, you can identify which certificates to delete. Make sure you take a snapshot of the PSC and any linked PSCs before deleting anything. After deleting the unneeded certificates, you'll probably need to restart the PSC and vCenter. If you prefer to just restart the services, these commands can be run in the shell:

```shell
service-control --stop --all
service-control --start --all
service-control --status --all
```

With the PSC and vCenter certificates renewed and the web client functioning, it was time to update the hosts. Renewing a host certificate immediately after replacing the vCenter certificate results in:

A general system error occurred: Unable to get signed certificate for host: esxi_hostname. Error: Start Time Error (70034)

This error is easy to fix: just change the vCenter advanced setting ‘vpxd.certmgmt.certs.minutesBefore’ to ‘10’. This is documented here: “Signed certificate could not be retrieved due to a start time error” when adding ESXi host to vCenter Server 6.0

Many host certificate renewals later, my adventures in certificate management had finally ended. Whew! Hopefully this information helps someone, possibly even my future self.