🐛 Bug

To Reproduce

Steps to reproduce the behavior:

The two examples below reproduce a bug I hit while training the official torchvision Faster R-CNN on the Pascal VOC dataset.

example1:

    import torch
    from torchvision.models.detection import fasterrcnn_resnet50_fpn


    def main():
        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
        print("using {}".format(device.type))

        img = [torch.rand((3, 442, 500), dtype=torch.float32, device=device)]
        target = [{
            'boxes': torch.as_tensor([[122., 1., 230., 176.], [336., 1., 443., 150.]],
                                     dtype=torch.float32, device=device),
            'labels': torch.as_tensor([5, 5], dtype=torch.int64, device=device),
            'image_id': torch.as_tensor([1], dtype=torch.int64, device=device),
            'area': torch.as_tensor([18900., 15943.], dtype=torch.float32, device=device),
            'iscrowd': torch.as_tensor([0, 0], dtype=torch.int64, device=device),
        }]

        model = fasterrcnn_resnet50_fpn(pretrained=False, pretrained_backbone=False).to(device)
        model.train()

        res = model(img, target)
        print(res)


    if __name__ == '__main__':
        main()

example2:

    import torch
    from torchvision.models.detection import fasterrcnn_resnet50_fpn


    def main():
        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
        print("using {}".format(device.type))

        img = [torch.rand((3, 442, 500), dtype=torch.float32, device=device)]
        target = [{
            'boxes': torch.as_tensor([[29., 87., 447., 420.], [211., 44., 342., 167.]],
                                     dtype=torch.float32, device=device),
            'labels': torch.as_tensor([13, 15], dtype=torch.int64, device=device),
            'image_id': torch.as_tensor([0], dtype=torch.int64, device=device),
            'area': torch.as_tensor([139194., 16113.], dtype=torch.float32, device=device),
            'iscrowd': torch.as_tensor([0, 0], dtype=torch.int64, device=device),
        }]

        model = fasterrcnn_resnet50_fpn(pretrained=False, pretrained_backbone=False).to(device)
        model.train()

        with torch.cuda.amp.autocast(enabled=True):
            re = model(img, target)
        print(re)


    if __name__ == '__main__':
        main()

In this example, the bug disappears when I remove the with torch.cuda.amp.autocast(enabled=True): line or change it to with torch.cuda.amp.autocast(enabled=False):.

The error messages are as follows:

using cuda

/pytorch/aten/src/ATen/native/cuda/IndexKernel.cu:142: operator(): block: [0,0,0], thread: [32,0,0] Assertion `index >= -sizes[i] && index < sizes[i] && "index out of bounds"` failed.

(The same assertion is then reported for threads [33,0,0] through [35,0,0], [75,0,0], [76,0,0], [78,0,0] through [127,0,0], and [0,0,0] through [31,0,0] of block [0,0,0].)

    Traceback (most recent call last):
      File "bbbbbbbbug.py", line 34, in <module>
        main()
      File "bbbbbbbbug.py", line 28, in main
        re = model(img, target2)
      File "/usr/local/miniconda3/envs/torch18/lib/python3.8/site-packages/torch/nn/modules/module.py", line 889, in _call_impl
        result = self.forward(*input, **kwargs)
      File "/usr/local/miniconda3/envs/torch18/lib/python3.8/site-packages/torchvision/models/detection/generalized_rcnn.py", line 97, in forward
        proposals, proposal_losses = self.rpn(images, features, targets)
      File "/usr/local/miniconda3/envs/torch18/lib/python3.8/site-packages/torch/nn/modules/module.py", line 889, in _call_impl
        result = self.forward(*input, **kwargs)
      File "/usr/local/miniconda3/envs/torch18/lib/python3.8/site-packages/torchvision/models/detection/rpn.py", line 364, in forward
        loss_objectness, loss_rpn_box_reg = self.compute_loss(
      File "/usr/local/miniconda3/envs/torch18/lib/python3.8/site-packages/torchvision/models/detection/rpn.py", line 297, in compute_loss
        sampled_pos_inds = torch.where(torch.cat(sampled_pos_inds, dim=0))[0]
    RuntimeError: CUDA error: device-side assert triggered

Expected behavior

The model should run without errors.

With torch 1.6 or 1.7.1 and CUDA 10.1, the bug does not occur.

Environment

- PyTorch Version (e.g., 1.0): 1.8.1

- OS (e.g., Linux): Centos7

- How you installed PyTorch (conda, pip, source): pip install

- Build command you used (if compiling from source): no

- Python version: 3.8

- CUDA/cuDNN version: cuda:11.1

- GPU models and configuration: tesla v100

- GPU driver: 460.32.03

cc @ezyang @gchanan @zou3519 @bdhirsh @jbschlosser @anjali411 @ngimel @mcarilli @ptrblck

If you happen to run into this error — cuda runtime error (59): device-side assert triggered — you know how frustrating it can be. The most frustrating part for me was the lack of a clear, step-by-step solution to this problem. This could be due to the fact that PyTorch is still relatively new.

I first encountered this problem while working on the Stanford car data set during a hackathon for the Udacity Pytorch Challenge. It took me a while to fix it, and it didn’t help that I was using Kaggle Kernels, which presented its own challenges in regards to GPU.

CUDA error: device-side assert triggered is often caused by an inconsistency between the number of labels and the number of output units, or by passing the loss function an input outside the range it accepts. To solve it, make sure your output units match the number of classes and that your output layer returns values in the range of the loss function (criterion) that you chose.

What Causes a CUDA Error: Device-Side Assert Triggered?

Two common causes of this error are:

- Inconsistency between the number of labels/classes and the number of output units

- The input of the loss function may be incorrect.

Let’s unpack these reasons and their solutions below.

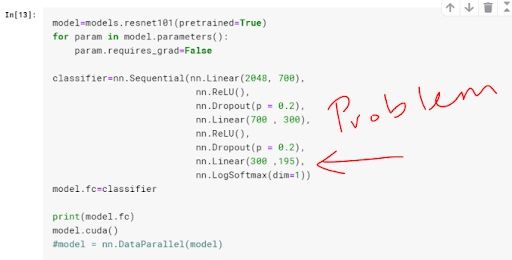

Reason 1: Inconsistency Between the Number of Labels and Output Units

When defining the final fully connected layer of my model, instead of putting 196 — the total number of classes for the Stanford car data set — as the number of output units, I put 195.

The error is usually identified in the line where you do the backpropagation. Your loss function will be comparing the output from your model and the label of that observation in your data set. Just in case you are confused between labels and output, I define them as:

- Label: These are the tags associated with an observation. When working on a classification problem, the label is the class. For example, in the classic dog vs. cat problem, the labels are cat and dog.

- Output: This is the predicted “label” from your model. You give your model a set of features from an observation, and it gives out a prediction called “output” in the PyTorch ecosystem. Think of it as a predicted label.

In my case, some of the labels had a value of 195, which was beyond the range of output for my model in which the greatest possible value was 194 (you start counting from zero). This triggered the error.
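A quick way to catch this kind of mismatch before it reaches the GPU is to compare the label values against the number of output units. The sketch below is only an illustration: `find_out_of_range_labels` is a hypothetical helper, not part of PyTorch, and it assumes the labels are available as plain Python integers (for a tensor, something like `labels.tolist()` would provide them).

```python
def find_out_of_range_labels(labels, num_outputs):
    """Return the sorted distinct labels that are not valid class indices.

    Valid class indices for a layer with `num_outputs` units are
    0, 1, ..., num_outputs - 1.
    """
    return sorted({label for label in labels if not 0 <= label < num_outputs})


# Hypothetical data: 196 classes labelled 0..195, but only 195 output units.
labels = list(range(196))
bad = find_out_of_range_labels(labels, num_outputs=195)
print(bad)  # [195]
```

An empty result means every label can be used as a class index for a layer of that size. Negative labels such as -1 are reported as well.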

How Do You Fix This Error?

Make sure the number of output units matches the number of classes. In my case, that meant changing the classifier as follows:

    classifier = nn.Sequential(nn.Linear(2048, 700),
                               nn.ReLU(),
                               nn.Dropout(p=0.2),
                               nn.Linear(700, 300),
                               nn.ReLU(),
                               nn.Dropout(p=0.2),
                               nn.Linear(300, 196),  # changed this from 195 to 196
                               nn.LogSoftmax(dim=1))

    model.fc = classifier

This is how you get the number of classes in your data set programmatically:

    # .classes returns a list of the classes in your dataset,
    # usually numbered from 0 to number_of_classes - 1
    len(dataset.classes)
    # Calling len() on the list returns the number of classes in your dataset

Reason 2: Wrong Input for the Loss Function

Loss functions have different ranges for the possible inputs that they can accept. If you choose an incompatible activation function for your output layer, it will trigger this error. For example, BCELoss() requires its input to be between zero and one. If the input (output from your model) is beyond the acceptable range for that particular loss function, the error will get triggered.
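To see why the range matters, it can help to write binary cross-entropy out by hand. The sketch below is plain Python, not PyTorch's implementation, and the function names are illustrative. The loss takes the logarithm of the prediction p and of 1 - p, so a prediction outside the interval has no defined loss. As a simplification, this sketch also rejects the endpoints 0 and 1.

```python
import math


def sigmoid(x):
    """Map any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def binary_cross_entropy(p, y):
    """Hand-written BCE for one prediction p and one target y (0 or 1)."""
    if not 0.0 < p < 1.0:
        # log(p) or log(1 - p) would be undefined here.
        raise ValueError(f"prediction {p} is outside (0, 1)")
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


raw_output = 1.7  # hypothetical raw score from a linear layer

try:
    binary_cross_entropy(raw_output, 1)
except ValueError as err:
    print("rejected:", err)

# Squashing the raw score with a sigmoid first makes the loss well defined.
loss = binary_cross_entropy(sigmoid(raw_output), 1)
print(round(loss, 4))
```

The raw score is rejected, while the same score passed through the sigmoid yields a finite loss.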

What Are Activation and Loss Functions?

Activation functions are the mathematical equations that determine the output of your neural network. The purpose of the activation function is to introduce non-linearity into the output of a model, thus making a model capable of learning and performing more complex tasks. In turn, they determine how accurate your network will be.

Loss functions are the equations that compute the error that is used to learn via backpropagation.

How to Resolve a CUDA Error: Device-Side Assert Triggered in PyTorch

Make sure your output layer returns values in the range of the loss function (criterion) that you chose. This implies that you’re using the appropriate activation function (sigmoid, softmax, LogSoftmax) in your final output layer.

Example of Problematic Code

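    import torch
    import torch.nn as nn

    model = nn.Linear(2, 1)
    input = torch.randn(128, 2)
    output = model(input).squeeze(1)  # raw scores, not limited to [0, 1]
    criterion = nn.BCELoss()
    target = torch.empty(128).random_(2)
    loss = criterion(output, target)

If the model and tensors are on a GPU, the code above can trigger CUDA runtime error 59, because the raw output of the linear layer is not restricted to the range [0, 1] that BCELoss() expects. You can fix it by passing your output through the sigmoid function or by using BCEWithLogitsLoss().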

Solution 1: Pass the Results Through Sigmoid Function

    # The sigmoid can also be built into the model:
    # model = nn.Sequential(nn.Linear(2, 1), nn.Sigmoid())
    model = nn.Linear(2, 1)
    input = torch.randn(128, 2)
    output = model(input).squeeze(1)
    criterion = nn.BCELoss()
    target = torch.empty(128).random_(2)
    loss = criterion(torch.sigmoid(output), target)

Solution 2: Using “BCEWithLogitsLoss()”

    model = nn.Linear(2, 1)
    input = torch.randn(128, 2)
    output = model(input).squeeze(1)
    criterion = nn.BCEWithLogitsLoss()  # changed from BCELoss
    target = torch.empty(128).random_(2)
    loss = criterion(output, target)

Fixing CUDA Error: Device-Side Assert Triggered on Kaggle

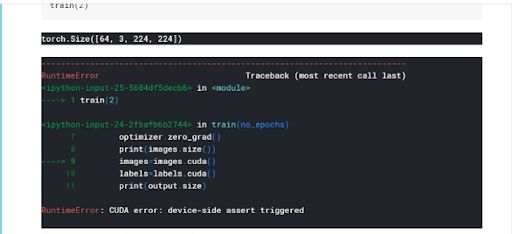

Once that error is triggered, you cannot continue using your GPU. Even after changing the problematic line and reloading the entire kernel, you will still get the error, presented in different forms. The form depends on which line is the first one to attempt to use the GPU. The image above gives an idea.

The reason this happens is that even though you may have fixed the bug in your code, once the runtime error 59 is triggered, you are blocked from using the GPU entirely during the same GPU session in which the error was triggered.

The Solution

Stop your current kernel session and start a new one.

To do this, follow the steps below:

- From your kernel, click the ‘K’ on the top left. It automatically takes you to your kernels.

- You will see a list of your kernels. Each has an edit option, and the ones currently running also have a stop option.

- Click “Stop kernel.”

- Restart your kernel session fresh. Every variable should reset, and you should have a brand new GPU session.

CUDA Error: Device-Side Assert Triggered Tips

The error messages you get when running into this error may not be very descriptive. To get a complete and useful stack trace, set the environment variable CUDA_LAUNCH_BLOCKING to "1" at the very beginning of your code, before anything else runs.
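In a script or notebook, one way to set this variable from Python is through `os.environ`, as in the minimal sketch below. It assumes this is the first code to run in a fresh process or kernel, so that PyTorch has not yet initialized CUDA. The PyTorch import is left as a comment to keep the snippet self-contained.

```python
import os

# Must run before PyTorch initializes CUDA; the safest place is
# before `import torch`.
os.environ["CUDA_LAUNCH_BLOCKING"] = "1"

# import torch  # import PyTorch only after the variable is set
print(os.environ["CUDA_LAUNCH_BLOCKING"])
```

When launching from a shell instead, the variable can be set on the command line, for example `CUDA_LAUNCH_BLOCKING=1 python train.py`, where `train.py` is a placeholder script name.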

I am trying to initialize a tensor on Google Colab with GPU enabled.

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

t = torch.tensor([1,2], device=device)

But I am getting this strange error.

RuntimeError: CUDA error: device-side assert triggered

CUDA kernel errors might be asynchronously reported at some other API call,so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1

Even setting that environment variable to 1 does not seem to show any further details.

Has anyone run into this issue?

Mateen Ulhaq

asked Jun 28, 2021 at 16:12

I tried your code and it did not give me an error. That said, the best practice for debugging CUDA runtime errors such as your device-side assert is usually to switch Colab to CPU and reproduce the error there. It will give you a more useful traceback.

Most of the time, CUDA runtime errors are caused by some index mismatch, for example training a network with 10 output nodes on a dataset with 15 labels. The catch with this CUDA error is that once you hit it, you will receive it for every operation you do with torch tensors, which forces you to restart your notebook.

I suggest you restart your notebook, get a more accurate traceback by moving to CPU, and check the rest of your code, especially if you train a model on a set of targets somewhere.

answered Jun 28, 2021 at 20:24

SarthakJain

As the other respondents indicated, running it on CPU reveals the error. My target labels were {1, 2}; I changed them to {0, 1}. This solved it for me.
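A relabelling like this can be done with a small mapping from the distinct label values to consecutive indices starting at zero. The sketch below is a generic illustration and not taken from the answer: `remap_labels` is a hypothetical helper operating on a plain Python list.

```python
def remap_labels(labels):
    """Map arbitrary distinct label values to 0, 1, ..., K - 1."""
    mapping = {value: index for index, value in enumerate(sorted(set(labels)))}
    return [mapping[value] for value in labels], mapping


labels = [1, 2, 2, 1, 2]  # hypothetical targets using {1, 2}
remapped, mapping = remap_labels(labels)
print(remapped)  # [0, 1, 1, 0, 1]
print(mapping)   # {1: 0, 2: 1}
```

Keeping the returned mapping around makes it possible to translate predicted indices back to the original label values later.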

answered Jan 28, 2022 at 21:26

tschomacker

Double-check the GPU index. Normally, it should be gpu=0 unless you have more than one GPU.

answered Jun 3, 2022 at 21:38

Hoyeol Kim

In some cases, this is caused by forgetting to apply a sigmoid activation before passing the logits to BCELoss.

Hope this helps.

answered Apr 27, 2022 at 9:01

I also encountered this problem and found the cause: the vocabulary dimension is 8000, but the embedding dimension in my model was set to 5000.

answered Apr 30, 2022 at 11:41

1st time:

I got the same error while using the simpletransformers library to fine-tune a transformer-based model for a multi-class classification problem. simpletransformers is a library written on top of the transformers library.

I changed my labels from string representations to numbers and it worked.

2nd time:

I faced the same error again while training another transformer-based model with the transformers library, for text classification.

I had 4 labels in the dataset, named 0, 1, 2, and 3. But the last layer (a linear layer) of my model class had only two neurons: nn.Linear(*, 2). I had to replace it with nn.Linear(*, 4) because I had four labels in total.

answered Nov 23, 2021 at 17:06

I am a filthy casual coming from the VQGAN+CLIP "ai-art" community. I get this error when I already have a session running in another tab. Killing all sessions from the session manager clears it up and lets you connect with the new tab, which is nice if you have fiddled with a lot of settings you don’t want to lose.

answered Feb 28, 2022 at 21:27

I had the same problem on Colab as well.

If your code runs normally on device("cpu"), try deleting the current Colab runtime and starting a new one. This worked for me.

answered Aug 2, 2022 at 10:05

I figured it out.

When using CUDA, just set num_workers=0, and it works!

answered Jan 7 at 13:02

Today, while using the PyTorch framework to train a simple classifier, I got the following error message:

RuntimeError: CUDA error: device-side assert triggered

I have had a similar experience before and solved it successfully, but I don’t remember how.

This is the disadvantage of not taking notes.

After restarting my remote server, the error still exists, so I think we can rule out hardware problems.

I will record possible solutions below.

Exception exclusion

Step 1: Use CPU to test

First, I looked through the discussions on GitHub issues. Some people recommend running on the CPU to check whether the same problem still occurs.

(But I still got this error.)

Step 2: Check your labels

The next suggestion I saw was to check whether -1 exists in the labels of the training data.

I labelled my data myself, so this should have been impossible, but I went to check my labels anyway.

Then I found the problem: the labels I had assigned ran from 1 to 3140, but the final layer had only 3139 output neurons.

After I increased the last layer to 3140 neurons, the problem was solved!

References

- https://discuss.pytorch.org/t/runtimeerror-cuda-runtime-error-710-device-side-assert-triggered-at/64019

- https://discuss.pytorch.org/t/runtimeerror-cuda-error-device-side-assert-triggered/34213

Debugging CUDA device-side assert in PyTorch

June 15, 2018

The beautiful thing about PyTorch’s immediate execution model is that you can actually debug your programs.

Sometimes, however, the asynchronous nature of CUDA execution makes it hard. Here is a little trick to debug your programs.

When you run a PyTorch program using CUDA operations, the program usually doesn’t wait until the computation finishes but continues to throw instructions at the GPU until it actually needs a result (e.g. to evaluate using .item() or .cpu() or printing).

While this behaviour is key to the blazing performance of PyTorch programs, there is a downside: When a cuda operation fails, your program has long gone on to do other stuff. The usual symptom is that you get a very non-descript error at a more or less random place somewhere after the instruction that triggered the error.

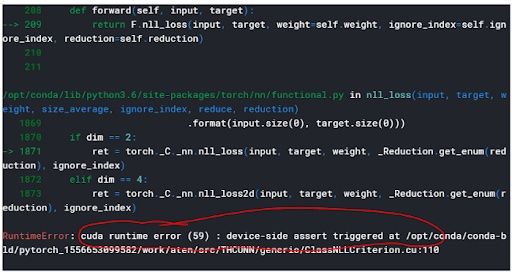

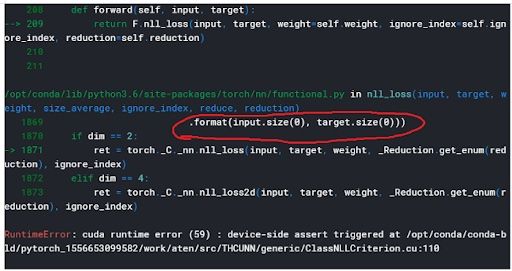

It typically looks like this:

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

<ipython-input-4-3d8a992c81ab> in <module>()

1 loss = torch.nn.functional.cross_entropy(activations, labels)

2 average = loss/4

----> 3 print(average.item())

RuntimeError: cuda runtime error (59) : device-side assert triggered at /home/tv/pytorch/pytorch/aten/src/THC/generic/THCStorage.cpp:36

Well, that is hard to understand, I’m sure that printing my results is a legitimate course of action. So a device-side assert means I just noticed something went wrong somewhere.

Here is the faulty program causing this output:

import torch

device = torch.device('cuda:0')

activations = torch.randn(4,3, device=device) # usually we get our activations in a more refined way...

labels = torch.arange(4, device=device)

loss = torch.nn.functional.cross_entropy(activations, labels)

average = loss/4

print(average.item())

One option in debugging is to move things to CPU. But often, we use libraries or have complex things where that isn’t an option. So what now? If we could only get a good traceback, we should find the problem in no time.

This is how to get a good traceback: You can launch the program with the environment variable CUDA_LAUNCH_BLOCKING set to 1. But as you can see, I like to use Jupyter for a lot of my work, so that is not as easy as one would like. But this can be solved, too: At the very top of your program, before you import anything (and in particular PyTorch), insert

import os

os.environ['CUDA_LAUNCH_BLOCKING'] = "1"

With this addition, we get a better traceback:

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

<ipython-input-4-3d8a992c81ab> in <module>()

----> 1 loss = torch.nn.functional.cross_entropy(activations, labels)

2 average = loss/4

3 print(average.item())

/usr/local/lib/python3.6/dist-packages/torch/nn/functional.py in cross_entropy(input, target, weight, size_average, ignore_index, reduce)

1472 >>> loss.backward()

1473 """

-> 1474 return nll_loss(log_softmax(input, 1), target, weight, size_average, ignore_index, reduce)

1475

1476

/usr/local/lib/python3.6/dist-packages/torch/nn/functional.py in nll_loss(input, target, weight, size_average, ignore_index, reduce)

1362 .format(input.size(0), target.size(0)))

1363 if dim == 2:

-> 1364 return torch._C._nn.nll_loss(input, target, weight, size_average, ignore_index, reduce)

1365 elif dim == 4:

1366 return torch._C._nn.nll_loss2d(input, target, weight, size_average, ignore_index, reduce)

RuntimeError: cuda runtime error (59) : device-side assert triggered at /home/tv/pytorch/pytorch/aten/src/THCUNN/generic/ClassNLLCriterion.cu:116

So apparently, the loss does not like what we pass it. In fact, our activations have shape batch x 3, so we only allow for three categories (0, 1, 2), but the labels run to 3!

The best part is that this also works for nontrivial examples. Now if only we could recover the non-GPU bits of our calculation instead of needing a complete restart…
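When moving things to the CPU is an option, the same mistake tends to produce a much more direct message. Here is a minimal sketch of that route, reusing the shapes from the faulty program above; it assumes PyTorch is installed, and the exact exception type and wording depend on the PyTorch version.

```python
import torch
import torch.nn.functional as F

# Same shapes as the faulty program, but kept on the CPU.
activations = torch.randn(4, 3)  # 3 classes, so valid labels are 0, 1, 2
labels = torch.arange(4)         # contains the invalid label 3

cpu_error = None
try:
    F.cross_entropy(activations, labels)
except (IndexError, RuntimeError) as exc:
    # The exception type and wording vary between PyTorch versions.
    cpu_error = exc

print(type(cpu_error).__name__, cpu_error)
```

Because the CPU call is synchronous, the exception is raised at the cross_entropy line itself rather than at a later, unrelated call.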


If you have ever run into this error, you know how frustrating it can be. The most frustrating part for me was the lack of a clear, step-by-step solution to the problem. This may be because PyTorch is still relatively new.

A bit of background…

I was working on the Stanford Cars dataset as part of a hackathon that grew out of my participation in the Udacity PyTorch Challenge when I ran into this problem. It took me a long time to fix, and it was made worse by the fact that I was using Kaggle kernels, which brought their own problems with regard to the GPU.

What is the error?

This error arises from the following two causes:

- A mismatch between the number of labels/classes and the number of output units

- The input to the loss function may be incorrect.

Let's unpack these causes and their solutions below:

Reason 1: Mismatch between the number of labels and the number of output units

When defining the final fully connected layer of my model, I chose 195 as the number of output units instead of 196 (the total number of classes in the Stanford Cars dataset).

The error is usually reported at the line where you do backpropagation. Your loss function compares the output of your model with the label of that observation in your dataset. In case you are confused about the difference between labels and outputs, here is how I define them:

Label: These are the tags associated with an observation. When working on a classification problem, the label is the class. For example, in the classic dog vs. cat problem, the labels are cat and dog.

Output: This is the "label" predicted by your model. You give your model a set of features from an observation, and it returns a prediction, which is called the output in the PyTorch ecosystem. Think of it as the predicted label.

In my case, some labels had the value 195, which is outside the output range of my model, whose largest possible value is 194 (counting starts from zero). This is what triggers the error.

How can you fix it?

Make sure the number of output units matches the number of your classes.

This changes my classifier as follows:

classifier = nn.Sequential(nn.Linear(2048, 700),

nn.ReLU(),

nn.Dropout(p = 0.2),

nn.Linear(700 , 300),

nn.ReLU(),

nn.Dropout(p = 0.2),

nn.Linear(300 ,196), #changed this from 195 to 196

nn.LogSoftmax(dim = 1))

model.fc = classifier

Here is how to get the number of classes in your dataset programmatically:

# .classes returns a list of the classes in your dataset, usually
# numbered from 0 to number_of_classes - 1
len(dataset.classes)  # calling len() on the list returns the number of classes in your dataset

Reason 2: Incorrect input to the loss function

Loss functions have different ranges for the inputs they can accept. If you choose an incompatible activation function for the output layer, this error will be triggered. For example, BCELoss requires its input to be in the range 0 to 1. If the input (your model's output) falls outside the allowed range for that particular loss function, the error occurs.

A recap of activation and loss functions

Activation functions are mathematical equations that determine the output of your neural network. The purpose of an activation function is to introduce non-linearity into the model's output, making the model capable of learning and performing more complex tasks. They in turn affect how accurate your network will be.

Loss functions are equations that compute the error used for learning through backpropagation.

For a detailed explanation of loss functions and how they work, see this article and the PyTorch documentation. You can find information about activation functions here.

How can you fix it?

Make sure your output layer returns values in the range of the loss function (criterion) you have chosen. This means using an appropriate activation function (Sigmoid, Softmax, LogSoftmax) in your final output layer.

Example of problematic code

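import torch
import torch.nn as nn

model = nn.Linear(2, 1)  # sizes assumed: 2 input features, 1 output
input = torch.randn(128, 2)
output = model(input).squeeze(1)  # raw, unbounded values of shape (128,)
criterion = nn.BCELoss()
target = torch.empty(128).random_(2)
loss = criterion(output, target)  # BCELoss expects inputs in [0, 1]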

The code above will trigger runtime error 59 if we use the GPU. You can fix it by passing your output through the sigmoid function or by using BCEWithLogitsLoss().
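To see why the sigmoid matters here, it can help to write binary cross-entropy out by hand. The following is a plain-Python sketch for a single prediction, not PyTorch's implementation; `sigmoid` and `binary_cross_entropy` are illustrative helpers, and the explicit range check is an assumption standing in for the check the library performs.

```python
import math


def sigmoid(x):
    """Map any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def binary_cross_entropy(p, y):
    """Plain-Python BCE for one prediction p and one target y (0 or 1)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError(f"prediction {p} is outside [0, 1]")
    eps = 1e-12  # keep the logarithm away from log(0)
    p = min(max(p, eps), 1.0 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


logit = 2.5  # a raw linear-layer output, not a probability

try:
    binary_cross_entropy(logit, 1)
    raw_accepted = True
except ValueError:
    raw_accepted = False

loss = binary_cross_entropy(sigmoid(logit), 1)
print(raw_accepted, loss)
```

The raw value 2.5 is rejected because log(1 - p) is undefined for p above 1, while the same value passed through the sigmoid gives a small, finite loss.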

Fix 1: Pass the output through the Sigmoid function

model = nn.Linear(2, 1)  # the sigmoid can also be built in: nn.Sequential(nn.Linear(2, 1), nn.Sigmoid())
input = torch.randn(128, 2)
output = model(input).squeeze(1)
criterion = nn.BCELoss()
target = torch.empty(128).random_(2)
loss = criterion(torch.sigmoid(output), target)

Fix 2: Use BCEWithLogitsLoss() instead

model = nn.Linear(2, 1)
input = torch.randn(128, 2)
output = model(input).squeeze(1)
criterion = nn.BCEWithLogitsLoss()  # changed from BCELoss
target = torch.empty(128).random_(2)
loss = criterion(output, target)

Working on Kaggle? Here is why you may still be struggling even after following the steps above

Once this error has been triggered, you cannot keep using your GPU. Even after changing the problematic line and reloading the whole kernel, you will still get the error, presented in different forms. The form depends on which line is the first to try to use the GPU. See the image below for an idea.

The reason this happens even though you have fixed the bug in your code is that once runtime error 59 has been triggered, you cannot use the GPU at all for the rest of the GPU session in which the error was raised.

How can you fix it?

Stop the current kernel session and start a new one.

To do this, follow these steps:

- In your kernel, click the "K" in the top left corner. This automatically takes you to your kernels.

- You will see a list of your kernels, with options to edit them and, for those currently running, to stop them.

- Click stop kernel.

- Reopen the kernel. All variables will be reset and you will have a new GPU session.

Extra tip

The error messages you get when you hit this error may not be very descriptive. To make sure you get a complete and useful stack trace, put the following at the very beginning of your code and run it before anything else:

import os

os.environ['CUDA_LAUNCH_BLOCKING'] = "1"

References

[1] Author, GPU lost after error #1010 (2018), PyTorch GitHub Issues page

[2] Lernapparat, Debugging CUDA device-side assert in PyTorch (2018), https://lernapparat.de/

I hope this article has helped you. Feel free to leave your comments on any aspect of this tutorial in the responses section below.

The questions are as follows:

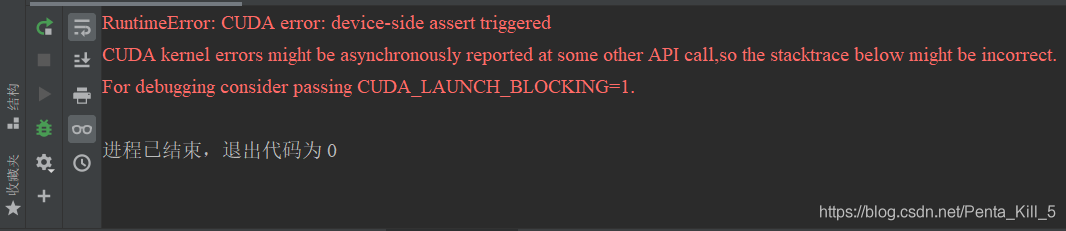

1. The problems are as follows:

RuntimeError: CUDA error: device-side assert triggered

CUDA kernel errors might be asynchronously reported at some other API call,so the stacktrace below might be incorrect.

For debugging consider passing CUDA_ LAUNCH_ BLOCKING=1.

2. Solution:

(1) At first, I searched for solutions on the Internet. Most of the solutions posted by other users are similar to this:

Some people say the cause of this problem is that the training data contains labels that exceed the number of categories in a classification task. For example, if you set up a total of 8 classes but a label of 9 appears in the training data, this error will be reported. Those posts also describe a trap: they claim that labels containing 0 trigger the same error and recommend adding 1 to every label when numbering starts from 0. Be careful with that advice, because PyTorch's classification losses such as cross_entropy expect class labels in the range 0 to C-1 for C classes, so it is labels below 0 or labels of C and above that fall out of range.

PyTorch scans each folder under train_path itself (the pictures of each class sit in their own category folder) and maps each class to a numerical value. For example, with four categories the labels are [0,1,2,3]. In the posts' example, the labels were mapped to [0,1] for two-class classification but to [1,2,3,4] for four-class classification, so an error was reported.

(2) In fact, none of this helped me: I still got the error. Later, I read through the code carefully and found that the problem was not that the labels didn't match the categories, but that the code of the last layer of the network was wrong. The output size of the last layer must equal the number of categories you want to classify.

self.outlayer = nn.Linear(256 * 1 * 1, 3) # The final fully connected layer

# The original code was written for 3 categories, while my data has 5; correcting this solved the problem

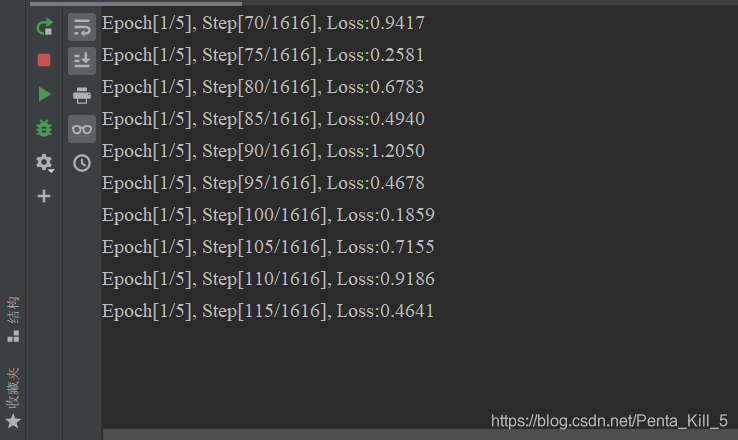

self.outlayer = nn.Linear(256 * 1 * 1, 5) # The last fully connected layer

(3) It is actually a small problem, but it took me a long time to solve, so I am recording it here.
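If the labels in a dataset do not already run from 0 to C-1 (for example the [1,2,3,4] case mentioned in (1)), one option is to remap them before training and read the number of classes from the result. This is a minimal plain-Python sketch under that assumption; `remap_to_zero_based` is a hypothetical helper, not part of PyTorch.

```python
def remap_to_zero_based(labels):
    """Map arbitrary integer labels to contiguous indices 0..C-1."""
    classes = sorted(set(labels))
    index_of = {label: i for i, label in enumerate(classes)}
    return [index_of[y] for y in labels], len(classes)


raw_labels = [1, 2, 3, 4, 2, 4]  # four classes numbered from 1
targets, num_classes = remap_to_zero_based(raw_labels)
print(targets, num_classes)  # [0, 1, 2, 3, 1, 3] 4
```

The returned `num_classes` is then the value to use as the output size of the final layer, i.e. the second argument of nn.Linear in the snippet above.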

Cause of my case

In my case, the cause was a .ipynb_checkpoints directory.

mtcnn_detect_resized/
├ train/
│ ├ REAL/
│ │ ├ 8537.png
│ │ └ ...
│ ├ FAKE/
│ │ ├ 2857.png
│ │ └ ...
│ ├ .ipynb_checkpoints

How I noticed it

I checked the train image labels and validation image labels with the code below.

# load library
import os

import torch
import torch.nn as nn
import torch.optim as optim
from torch.optim import lr_scheduler
import numpy as np
import torchvision
from torchvision import datasets, models, transforms

data_transforms = {
    'train': transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))
    ]),
    'val': transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))
    ]),
}

data_dir = './mtcnn_detect_resized'
image_datasets = {
    x: datasets.ImageFolder(os.path.join(data_dir, x), data_transforms[x])
    for x in ['train', 'val']
}
dataloaders = {
    x: torch.utils.data.DataLoader(image_datasets[x], batch_size=4,
                                   shuffle=True, num_workers=4)
    for x in ['train', 'val']
}
dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'val']}
class_names = image_datasets['train'].classes
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

When I check the train classes, the labels are "FAKE" and "REAL".

image_datasets['train'].classes
['FAKE', 'REAL']

When I check the validation classes, the labels are "FAKE" and "REAL", plus a strange ".ipynb_checkpoints".

This is not a label I want to classify.

image_datasets['val'].classes
['.ipynb_checkpoints', 'FAKE', 'REAL']

Solution of my case

Search for .ipynb_checkpoints

mtcnn_detect_resized/val$ sudo find ./ -name .ipynb_checkpoints
./.ipynb_checkpoints

Delete .ipynb_checkpoints

mtcnn_detect_resized/val$ rm -rf ./.ipynb_checkpoints

After this, the error is gone.

This is the solution for when .ipynb_checkpoints interferes with the classes PyTorch detects.
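The same check can be done from Python before building the datasets. The sketch below uses only the standard library and a throwaway temporary directory that mimics the val/ folder above; `visible_class_dirs` is a hypothetical helper, and skipping every dot-prefixed directory is an assumption about what counts as a stray folder.

```python
import os
import tempfile


def visible_class_dirs(root):
    """List subdirectory names under root, skipping hidden ones such as .ipynb_checkpoints."""
    return sorted(
        entry.name
        for entry in os.scandir(root)
        if entry.is_dir() and not entry.name.startswith(".")
    )


# Build a throwaway directory tree that mimics the val/ folder above.
with tempfile.TemporaryDirectory() as root:
    for name in ("REAL", "FAKE", ".ipynb_checkpoints"):
        os.makedirs(os.path.join(root, name))
    all_dirs = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
    class_dirs = visible_class_dirs(root)

print(all_dirs)    # ['.ipynb_checkpoints', 'FAKE', 'REAL']
print(class_dirs)  # ['FAKE', 'REAL']
```

Comparing the two lists for both train/ and val/ shows any stray directory that would otherwise end up as an extra class.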

Things to check if you still can't solve this problem

- Is the image shape correct?

- Are the labels/classes correct?

- Is the input to the loss function correct?