Why is Docker trying to create the folder that I’m mounting?

If I cd to C:\Users\szx\Projects and run

docker run --rm -it -v "${PWD}:/src" ubuntu /bin/bash

This command exits with the following error:

C:\Program Files\Docker Toolbox\docker.exe: Error response from daemon: error while creating mount source path '/c/Users/szx/Projects': mkdir /c/Users/szx/Projects: file exists.

I’m using Docker Toolbox on Windows 10 Home.

asked Jun 12, 2018 at 13:05

szx


For anyone running macOS and encountering this: I restarted Docker Desktop to resolve the issue.

Edit: It appears this also fixes the issue on Windows 10.

answered Dec 4, 2020 at 22:30

lancepants


I got this error after changing my Windows password. I had to go into Docker settings, click "Reset credentials" under "Shared Drives", and then restart Docker.

answered Dec 17, 2018 at 22:00

melicent


My trouble was a FUSE-mounted volume (e.g. sshfs) that got mounted again into the container. It didn’t help that the FUSE mount had the same ownership as the user inside the container.

I assume the underlying problem is that the Docker/root supervising process also needs access to the FUSE mount when it sets up the container.

Eventually, mounting the FUSE volume with the allow_other option helped. Be aware that this opens access to all users. allow_root might be the better choice (not tested, as it was blocked for other reasons).

answered May 8, 2020 at 19:39

pico_prob
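If you suspect this cause, first confirm that the host directory really is a FUSE mount. The bash sketch below looks the directory up in the kernel mount table. It assumes a Linux host with /proc/self/mounts. is_fuse_mount is a hypothetical helper name, and it does not handle mount points containing spaces (the kernel writes those as \040).

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if DIR is the mount point of a FUSE file system
# (fstype "fuse", "fuseblk" or "fuse.<name>", e.g. fuse.sshfs).
# TABLE defaults to the Linux mount table; pass another file for testing.
is_fuse_mount() {
  local dir="$1" table="${2:-/proc/self/mounts}"
  local _src mnt fstype _rest
  [[ "$dir" != / ]] && dir="${dir%/}"      # ignore a trailing slash
  # Each line: <source> <mount point> <fstype> <options> <dump> <pass>
  while read -r _src mnt fstype _rest; do
    [[ "$mnt" == "$dir" ]] || continue
    case "$fstype" in
      fuse|fuseblk|fuse.*) return 0 ;;
    esac
  done < "$table"
  return 1
}

# Example: is_fuse_mount /mnt/remote && echo "FUSE mount: consider allow_other/allow_root"
```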


Make sure the folder is being shared with the docker embedded VM. This differs with the various types of docker for desktop installs. With toolbox, I believe you can find the shared folders in the VirtualBox configuration. You should also note that these directories are case sensitive. One way to debug is to try:

docker run --rm -it -v "/:/host" ubuntu /bin/bash

And see what the filesystem looks like under "/host".

answered Nov 28, 2018 at 16:31

BMitch


I encountered this problem with Docker (Windows) after upgrading to 2.2.0.0 (42247). The issue was the casing of the folder name I had provided in my arguments to the docker command.

answered Jan 22, 2020 at 10:10

Victor F


Did you use this container before? You could try removing the dangling Docker volumes before re-running your command:

docker volume rm $(docker volume ls -qf dangling=true)

I tried your command locally (MacOS) without any error.

answered Jun 12, 2018 at 14:38

Mornor


I met this problem too.

I used to run the following command to share the folder with container

docker run ... -v c:/seleniumplus:/dev/seleniumplus ...

But it no longer works.

I am using Windows 10 as the host.

My Docker was recently upgraded to "19.03.5 build 633a0e".

I did change my Windows password recently.

I followed the instructions to re-share the "C" drive, restarted Docker, and even restarted the computer, but it didn’t work :-(.

All of a sudden, I noticed that File Explorer shows the folder as C:\SeleniumPlus, so I ran

docker run ... -v C:/SeleniumPlus:/dev/seleniumplus ...

And it worked. So the Windows shared-folder path is case-sensitive when specified in the latest Docker ("19.03.5 build 633a0e").

answered Feb 7, 2020 at 8:44

lei wang
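Several answers here trace the error to letter case, so it may help to look up how a path is actually spelled on disk before passing it to -v. This is a minimal bash sketch (bash 4+, for the ${var,,} lower-casing). ondisk_case is a hypothetical helper, and it assumes an absolute path without . or .. components.

```shell
#!/usr/bin/env bash
# Hypothetical helper (bash 4+): print an absolute path with every component
# spelled exactly as it is stored on disk, matching names case-insensitively.
# Prefers an exact-case match; returns 1 if a component cannot be found.
ondisk_case() {
  local path="$1" result="" comp entry name match restore
  local -a parts
  IFS=/ read -r -a parts <<< "$path"
  restore=$(shopt -p nullglob dotglob || true)   # remember glob settings
  shopt -s nullglob dotglob                        # also list hidden entries
  for comp in "${parts[@]}"; do
    [[ -z "$comp" ]] && continue                   # skip the leading "/"
    match=""
    for entry in "$result"/*; do
      name="${entry##*/}"
      if [[ "$name" == "$comp" ]]; then match="$name"; break; fi
      if [[ -z "$match" && "${name,,}" == "${comp,,}" ]]; then match="$name"; fi
    done
    if [[ -z "$match" ]]; then eval "$restore"; return 1; fi
    result="$result/$match"
  done
  eval "$restore"
  printf '%s\n' "${result:-/}"
}
```

For example, under WSL, ondisk_case /mnt/c/seleniumplus should print /mnt/c/SeleniumPlus if that is how the folder is spelled on disk.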


I am working in Linux (WSL2 under Windows, to be more precise) and my problem was that there existed a symlink for that folder on my host:

# docker run --rm -it -v /etc/localtime:/etc/localtime ...

docker: Error response from daemon: mkdir /etc/localtime: file exists.

# ls -al /etc/localtime

lrwxrwxrwx 1 root root 25 May 23 2019 /etc/localtime -> ../usr/share/zoneinfo/UTC

Bind-mounting the symlink’s target, /usr/share/zoneinfo/UTC, instead worked for me.

answered Dec 8, 2020 at 11:07
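Following the answer above, you can resolve the symlink to its real target before using it as a mount source. This is a minimal bash sketch that assumes GNU coreutils readlink -f. mount_source is a hypothetical helper name, and the docker command in the final comment is only an illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the fully resolved path of SRC so that the
# bind-mount source is the real file, not a symlink pointing at it.
# Needs GNU coreutils readlink -f.
mount_source() {
  local src="$1" resolved
  resolved=$(readlink -f -- "$src") || return 1
  if [[ ! -e "$resolved" ]]; then
    echo "mount_source: $src resolves to missing $resolved" >&2
    return 1
  fi
  printf '%s\n' "$resolved"
}

# Example (not run here):
#   docker run --rm -it -v "$(mount_source /etc/localtime):/etc/localtime:ro" ubuntu date
```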

I had the exact same error. In my case, I had typed c instead of C when changing into my directory.

answered Feb 6, 2020 at 18:26

Andrii


I had this issue when I was working with Docker in a CryFS-encrypted directory on Ubuntu 20.04 LTS. The same probably happens on other UNIX-like operating systems.

The problem was that, by default, root cannot access the CryFS-mounted virtual directory, but the Docker daemon runs as root. The solution is to enable root access for FUSE-mounted volumes by editing /etc/fuse.conf: just uncomment the user_allow_other setting in it. Then mount the encrypted directory with the command cryfs <secretdir> <opendir> -o allow_root (where <secretdir> and <opendir> are the encrypted directory and the FUSE mount point for the decrypted virtual directory, respectively).

Credits to the author of this comment on GitHub for calling my attention to the -o allow_root option.

answered Apr 15, 2021 at 12:06

András Aszódi
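The /etc/fuse.conf change described in the answer above can be scripted. This bash sketch uncomments #user_allow_other if it is present and appends the setting otherwise. enable_user_allow_other is a hypothetical helper, it relies on GNU sed -i, and editing the real /etc/fuse.conf requires root. You still need to remount the encrypted directory with -o allow_root afterwards.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure "user_allow_other" is active in a fuse.conf.
# Uncomments "#user_allow_other" if present, otherwise appends the line.
# CONF defaults to /etc/fuse.conf (editing it requires root); uses GNU sed -i.
enable_user_allow_other() {
  local conf="${1:-/etc/fuse.conf}"
  if grep -qE '^[[:space:]]*user_allow_other[[:space:]]*$' "$conf"; then
    return 0                                             # already enabled
  elif grep -qE '^[[:space:]]*#[[:space:]]*user_allow_other' "$conf"; then
    sed -i -E 's/^[[:space:]]*#[[:space:]]*(user_allow_other)/\1/' "$conf"
  else
    # Add a newline first if the file does not already end with one.
    if [[ -s "$conf" && -n "$(tail -c 1 -- "$conf")" ]]; then
      printf '\n' >> "$conf"
    fi
    printf 'user_allow_other\n' >> "$conf"
  fi
}

# Example (as root): enable_user_allow_other && cryfs <secretdir> <opendir> -o allow_root
```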


I solved this by restarting docker and rebuilding the images.

answered Feb 10, 2022 at 10:59

If you share the drive (usually C:) through a separate Windows user, make sure that user has access to the folders you are working with, including their parent folders up to your home directory.

Also make sure that EFS (Encrypting File System) is disabled for the shared folders.

See also my answer here.

answered Aug 7, 2019 at 15:16

TheOperator


I had the same issue when developing with Docker. After I moved the project folder locally, Docker could not mount files that were listed with relative paths, and tried to create directories instead.

Pruning docker volumes / images / containers did not solve the issue. A simple restart of docker-desktop did the job.

answered Dec 10, 2020 at 9:43

I added user_allow_other to /etc/fuse.conf.

Then mounting with allow_other, as in the example below, solved the problem:

$ sshfs -o allow_other user@remote_server:/directory/ <mountpoint>

answered Dec 3, 2021 at 10:17

James


I had this issue in WSL, likely caused by leaving some containers alive too long. None of the advice here worked for me. Finally, based on this blog post, I fixed it with the following commands, which remove all containers and wipe all volumes so you can start fresh.

docker-compose down

docker rm -f $(docker ps -a -q)

docker volume rm $(docker volume ls -q)

docker-compose up

Then, I restarted WSL (wsl --shutdown), restarted docker desktop, and tried my command again.

answered Jul 16, 2022 at 23:26

Omer Raviv


This error cropped up for me because my docker-compose file referenced the APPDATA path, and I was on macOS. macOS doesn’t have an APPDATA environment variable, so I just created a .env file with the contents:

APPDATA=~/Library/

And my problem was solved.

answered Nov 14, 2022 at 23:24

cr1pto


I faced this error when another running container was already using the folder being mounted in the docker run command. Check for such a container and, if it isn’t needed, stop it. The best solution is to use a volume, created with the following command:

docker volume create

Then mount this volume in each container that needs to share it.

answered Feb 2, 2019 at 17:08

Abhishek Jain


For anyone having this issue on a Linux-based OS, try remounting the remote folders that the Docker container uses. This helped me on Ubuntu:

sudo mount -a

answered Oct 29, 2019 at 6:02

Ahmet Cetin


I am running Docker Desktop (Docker Engine v20.10.5) on Windows 10 and faced a similar error. I removed the existing image from the Docker Desktop UI, deleted the folder in question (deleting it was an option for me because I was only doing some local testing), removed the existing container, and restarted Docker, and it worked.

answered Apr 27, 2021 at 19:35

JavaTec


In my case, my volume path (in a .env file for docker-compose) had a space in it:

/Volumes/some thing/folder

This worked on Docker 3 but not after updating to Docker 4, so I had to set my environment variable to:

"/Volumes/some thing/folder"

answered Nov 22, 2021 at 9:22

Alucard
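The same rule applies on a plain shell command line: a path containing a space has to reach docker as a single argument. This bash snippet only illustrates shell word splitting, not how Compose parses .env files. /data is a hypothetical container path.

```shell
#!/usr/bin/env bash
# Path from the answer above; "/data" is a hypothetical container path.
host_dir='/Volumes/some thing/folder'

# Unquoted, the shell splits the value at the space into two words.
unquoted=(-v $host_dir:/data)
# Quoted, docker receives "-v" plus a single mount specification.
quoted=(-v "$host_dir:/data")

echo "${#unquoted[@]} ${#quoted[@]}"   # prints: 3 2
# docker run --rm -it "${quoted[@]}" ubuntu ls /data   (not run here)
```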


I had this problem when the directory on my host was inside a directory mounted with gocryptfs. By default, even root can’t see a directory mounted by gocryptfs; only the user who ran the gocryptfs command can. To fix this, add user_allow_other to /etc/fuse.conf and use the -allow_other flag, e.g. gocryptfs -allow_other encrypted mnt

answered Oct 15, 2021 at 20:57

katsu
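To check whether an existing FUSE mount (gocryptfs, sshfs, CryFS, gcsfuse, and so on) was really mounted with allow_other, one option on Linux is to look for that flag among the mount's options in /proc/self/mounts, where the kernel's FUSE driver lists it. has_allow_other is a hypothetical helper in this bash sketch.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if the mount at DIR lists "allow_other" among its
# options. TABLE defaults to the Linux mount table; pass another file to test.
has_allow_other() {
  local dir="$1" table="${2:-/proc/self/mounts}"
  local _src mnt _fstype opts _rest
  # Each line: <source> <mount point> <fstype> <options> <dump> <pass>
  while read -r _src mnt _fstype opts _rest; do
    [[ "$mnt" == "$dir" ]] || continue
    [[ ",$opts," == *,allow_other,* ]] && return 0
  done < "$table"
  return 1
}

# Example: has_allow_other "$HOME/mnt" || echo "remount with -allow_other"
```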


In my specific instance, Windows couldn’t tell me who owned my SSL certs (probably Docker). I took ownership of the SSL certs again under Properties, added read permission for docker-users and my user, and that seems to have fixed the problem. After tearing my hair out for 3 days with nothing but the "Daemon: Access Denied" error, I finally got a meaningful error on a mounted file (the SSL cert): the "mkdir failed" error (or whatever it was exactly) mentioned in another answer above.

answered Oct 8, 2022 at 3:29

I’m facing the same problem right now.

I’ve been trying the latest Docker Desktop for Windows as well as Docker Desktop from the edge channel.

My setup details:

OS Name: Microsoft Windows 10 Pro

OS Version: 10.0.18363 N/A Build 18363

> docker version

Client: Docker Engine - Community

Version: 19.03.5

API version: 1.40

Go version: go1.12.12

Git commit: 633a0ea

Built: Wed Nov 13 07:22:37 2019

OS/Arch: windows/amd64

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 19.03.5

API version: 1.40 (minimum version 1.12)

Go version: go1.12.12

Git commit: 633a0ea

Built: Wed Nov 13 07:29:19 2019

OS/Arch: linux/amd64

Experimental: true

containerd:

Version: v1.2.10

GitCommit: b34a5c8af56e510852c35414db4c1f4fa6172339

runc:

Version: 1.0.0-rc8+dev

GitCommit: 3e425f80a8c931f88e6d94a8c831b9d5aa481657

docker-init:

Version: 0.18.0

GitCommit: fec3683

> docker-compose version

docker-compose version 1.25.1-rc1, build d92e9bee

docker-py version: 4.1.0

CPython version: 3.7.5

OpenSSL version: OpenSSL 1.1.1d 10 Sep 2019

When I try to uncheck and re-check the shared drives under Settings > Resources > File Sharing, I’m prompted for the user password over and over (and I do enter the correct password). This happens only AFTER I hit the mkdir /host_mnt/c: file exists error. After Docker is restarted, I can (un)check shared drives successfully again, but as soon as the error comes back, it fails again (it keeps prompting for the password).

Edit:

After several tries and restarts, running docker-compose up gives me a new error from SSHD:

ERROR: for nginx Cannot start service nginx: error while creating mount source path '/host_mnt/c/Users/samm/Desktop/wordpress-nginx-docker/logs/nginx': mkdir /host_mnt/c/Users/samm/Desktop/wordpress-nginx-docker/logs: transport endpoint is not connected

To reproduce, simply clone and docker-compose this repo.

Contents

- Troubleshoot topics

- Topics for all platforms

- Make sure certificates are set up correctly

- Topics for Linux and Mac

- Volume mounting requires file sharing for any project directories outside of $HOME

- Docker Desktop fails to start on MacOS or Linux platforms

- Topics for Mac

- Incompatible CPU detected

- Topics for Windows

- Volumes

- Permissions errors on data directories for shared volumes

- Volume mounting requires shared folders for Linux containers

- Support for symlinks

- Avoid unexpected syntax errors, use Unix style line endings for files in containers

- Path conversion on Windows

- Working with Git Bash

- Virtualization

- WSL 2 and Windows Home

- Hyper-V

- Virtualization must be enabled

- Hypervisor enabled at Windows startup

- Windows containers and Windows Server

- Networking issues

- Error response from daemon: error while creating mount source path, mkdir /run/desktop/mnt/host/c/Users/. file exists #12403

- Comments

- Actual behavior

- Expected behavior

- Information

- Steps to reproduce the behavior

- Error when mounting host path with Docker for Windows - "Permission Denied" #3385

- Comments

- Expected behavior

- Actual behavior

- Information

- Steps to reproduce the behavior

Troubleshoot topics

Topics for all platforms

Make sure certificates are set up correctly

Docker Desktop ignores certificates listed under insecure registries and does not send client certificates to them. Commands like docker run that attempt to pull from the registry produce error messages on the command line, like this:

As well as on the registry. For example:

Topics for Linux and Mac

Volume mounting requires file sharing for any project directories outside of $HOME

If you are using mounted volumes and get runtime errors indicating an application file is not found, access to a volume mount is denied, or a service cannot start, such as when using Docker Compose, you might need to enable file sharing.

Volume mounting requires shared drives for projects that live outside of the /home/ directory. From Settings, select Resources and then File sharing. Share the drive that contains the Dockerfile and volume.

Docker Desktop fails to start on MacOS or Linux platforms

On MacOS and Linux, Docker Desktop creates Unix domain sockets used for inter-process communication.

Docker fails to start if the absolute path length of any of these sockets exceeds the OS limit, which is 104 characters on MacOS and 108 characters on Linux. These sockets are created under the user’s home directory. If the user ID is long enough that the absolute path of a socket exceeds the OS path length limit, Docker Desktop cannot create the socket and fails to start. The workaround is to shorten the user ID; we recommend a maximum length of 33 characters on MacOS and 55 characters on Linux.
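A rough pre-check for this limit is to compare the length of a prospective socket path against the numbers above. The bash sketch below assumes the 104/108-character limits quoted in this section. socket_path_fits is a hypothetical helper, and the example socket name is made up, since this section does not list the exact socket locations Docker Desktop uses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if PATH is no longer than LIMIT characters.
# LIMIT defaults to the figures quoted above: 104 on macOS, 108 otherwise (Linux).
socket_path_fits() {
  local path="$1" limit="${2:-}"
  if [[ -z "$limit" ]]; then
    case "$(uname -s)" in
      Darwin) limit=104 ;;
      *)      limit=108 ;;
    esac
  fi
  (( ${#path} <= limit ))
}

# Example with a made-up socket name:
#   socket_path_fits "$HOME/.docker/example.sock" || echo "home path too long"
```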

Following are the examples of errors on MacOS which indicate that the startup failure was due to exceeding the above mentioned OS limitation:

Topics for Mac

Incompatible CPU detected

Docker Desktop requires a processor (CPU) that supports virtualization and, more specifically, the Apple Hypervisor framework. Docker Desktop is only compatible with Mac systems that have a CPU that supports the Hypervisor framework. Most Macs built in 2010 and later support it, as described in the Apple Hypervisor Framework documentation about supported hardware:

Generally, machines with an Intel VT-x feature set that includes Extended Page Tables (EPT) and Unrestricted Mode are supported.

To check if your Mac supports the Hypervisor framework, run the following command in a terminal window.

If your Mac supports the Hypervisor Framework, the command prints kern.hv_support: 1.

If not, the command prints kern.hv_support: 0.
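For scripts, the same check can be turned into an exit status. This bash sketch assumes the Mac-side command is sysctl kern.hv_support, which prints the kern.hv_support: N line shown above. hv_supported_from_output is a hypothetical helper that only parses that line, so the sketch can be exercised on any system.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if a "kern.hv_support: N" line reports 1.
hv_supported_from_output() {
  local line="$1"
  [[ "${line##*: }" == 1 ]]      # keep only the text after the last ": "
}

# On macOS (not run here):
#   hv_supported_from_output "$(sysctl kern.hv_support)" && echo "Hypervisor framework supported"
```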

See also, Hypervisor Framework Reference in the Apple documentation, and Docker Desktop Mac system requirements.

Topics for Windows

Volumes

Permissions errors on data directories for shared volumes

When sharing files from Windows, Docker Desktop sets permissions on shared volumes to a default value of 0777 (read, write, and execute permissions for user, group, and others).

The default permissions on shared volumes are not configurable. If you are working with applications that require permissions different from the shared volume defaults at container runtime, you need to either use non-host-mounted volumes or find a way to make the applications work with the default file permissions.
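To see what 0777 means in practice, the following bash snippet applies it to a scratch file and prints the octal and symbolic modes. It assumes GNU coreutils stat; the BSD stat on macOS takes different flags. show_mode is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the octal and symbolic mode of a file
# (GNU coreutils stat syntax).
show_mode() { stat -c '%a %A' -- "$1"; }

demo=$(mktemp)
chmod 0777 "$demo"
show_mode "$demo"    # prints: 777 -rwxrwxrwx (rwx for user, group and others)
rm -f -- "$demo"
```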

Volume mounting requires shared folders for Linux containers

If you are using mounted volumes and get runtime errors indicating an application file is not found, access is denied to a volume mount, or a service cannot start, such as when using Docker Compose, you might need to enable shared folders.

With the Hyper-V backend, mounting files from Windows requires shared folders for Linux containers. From Settings, select Shared Folders and share the folder that contains the Dockerfile and volume.

Support for symlinks

Symlinks work within and across containers. To learn more, see How do symlinks work on Windows?.

Avoid unexpected syntax errors, use Unix style line endings for files in containers

Any file destined to run inside a container must use Unix style \n line endings. This includes files referenced at the command line for builds and in RUN commands in Dockerfiles.

Docker containers and docker build run in a Unix environment, so files in containers must use Unix style line endings: \n, not Windows style: \r\n. Keep this in mind when authoring files such as shell scripts with Windows tools, where the default is likely to be Windows style line endings. These commands ultimately get passed to Unix commands inside a Unix based container (for example, a shell script passed to /bin/sh). If Windows style line endings are used, docker run fails with syntax errors.

For an example of this issue and the resolution, see this issue on GitHub: Docker RUN fails to execute shell script.
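Here is a minimal bash sketch for catching this before a build. It detects carriage returns in a file and strips them. has_crlf and to_lf are hypothetical helper names. to_lf uses GNU sed -i and GNU sed's \r escape; the dos2unix tool does the same job if it is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: detect and remove Windows (CRLF) line endings.
has_crlf() { grep -q -e $'\r' -- "$1"; }          # any carriage return present?
to_lf()    { sed -i -e 's/\r$//' -- "$1"; }       # GNU sed: drop CR before each LF

# Example (hypothetical file name):
#   has_crlf entrypoint.sh && to_lf entrypoint.sh
```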

Path conversion on Windows

On Linux, the system takes care of mounting a path to another path. For example, when you run the following command on Linux:

It adds a /work directory to the target container to mirror the specified path.

However, on Windows, you must update the source path. For example, if you are using the legacy Windows shell (cmd.exe), you can use the following command:

This starts the container and ensures the volume becomes usable. This is possible because Docker Desktop detects the Windows-style path and provides the appropriate conversion to mount the directory.

Docker Desktop also accepts Unix-style paths and converts them to the appropriate format. For example:
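As a hypothetical illustration (not the command from the original documentation), the Unix-style form of a Windows path can be derived mechanically. The result has the same shape as the /c/Users/szx/Projects path in the Docker Toolbox error message in the question. This bash sketch only mimics that shape and is not Docker Desktop's own conversion logic; win_to_unix_path is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a Windows path such as C:\Users\szx\Projects into
# the /c/Users/szx/Projects form. Only the drive letter is lower-cased; the
# rest keeps its exact case. This mimics the shape, not Docker's own logic.
win_to_unix_path() {
  local p="$1" drive rest
  drive=${p%%:*}                                    # "C"
  rest=${p#*:}                                      # "\Users\szx\Projects"
  drive=$(tr '[:upper:]' '[:lower:]' <<< "$drive")  # "c"
  rest=$(tr '\\' '/' <<< "$rest")                   # "/Users/szx/Projects"
  printf '/%s%s\n' "$drive" "$rest"
}

# Example: win_to_unix_path 'C:\Users\szx\Projects'   # prints: /c/Users/szx/Projects
```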

Working with Git Bash

Git Bash (or MSYS) provides a Unix-like environment on Windows. These tools apply their own preprocessing on the command line. For example, if you run the following command in Git Bash, it gives an error:

This is because the character has a special meaning in Git Bash. If you are using Git Bash, you must neutralize it using \ :
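This behavior is ordinary POSIX shell quoting, so it can be reproduced in any bash, Git Bash included: an unquoted backslash escapes the next character and is itself removed. The path in this snippet is hypothetical.

```shell
#!/usr/bin/env bash
# Unquoted: each backslash escapes the following letter and disappears.
printf '%s\n' C:\Users\user\work        # prints: C:Usersuserwork
# Doubled backslashes (or single quotes) keep them literal.
printf '%s\n' C:\\Users\\user\\work     # prints: C:\Users\user\work
printf '%s\n' 'C:\Users\user\work'      # prints: C:\Users\user\work
```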

Also, in scripts, the pwd command is used to avoid hardcoding file system locations. Its output is a Unix-style path.

Combined with the $() syntax, the command below works on Linux; however, it fails in Git Bash.

You can work around this issue by using an extra /

Portability of the scripts is not affected, as Linux treats multiple / as a single entry. Each occurrence of paths on a single line must be neutralized.

In this example, the $(pwd) is not converted because of the preceding '/'. However, the second '/work' is transformed by the POSIX layer before it is passed to Docker Desktop. You can also work around this issue by using an extra /.

To verify whether the errors are generated from your script, or from another source, you can use an environment variable. For example:

It only expects the environment variable here. The value doesn’t matter.

In some cases, MSYS also transforms colons to semicolon. Similar conversions can also occur when using

because the POSIX layer translates it to a DOS path. MSYS_NO_PATHCONV also works in this case.

Virtualization

Your machine must have the following features for Docker Desktop to function correctly.

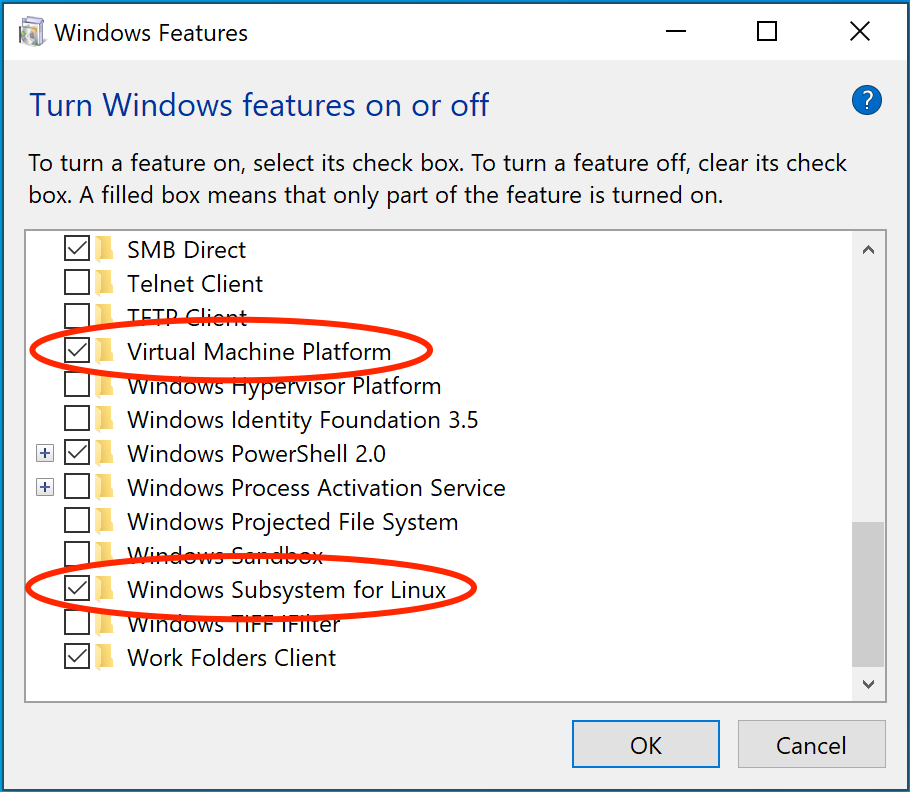

WSL 2 and Windows Home

- Virtual Machine Platform

- Windows Subsystem for Linux

- Virtualization enabled in the BIOS

- Hypervisor enabled at Windows startup

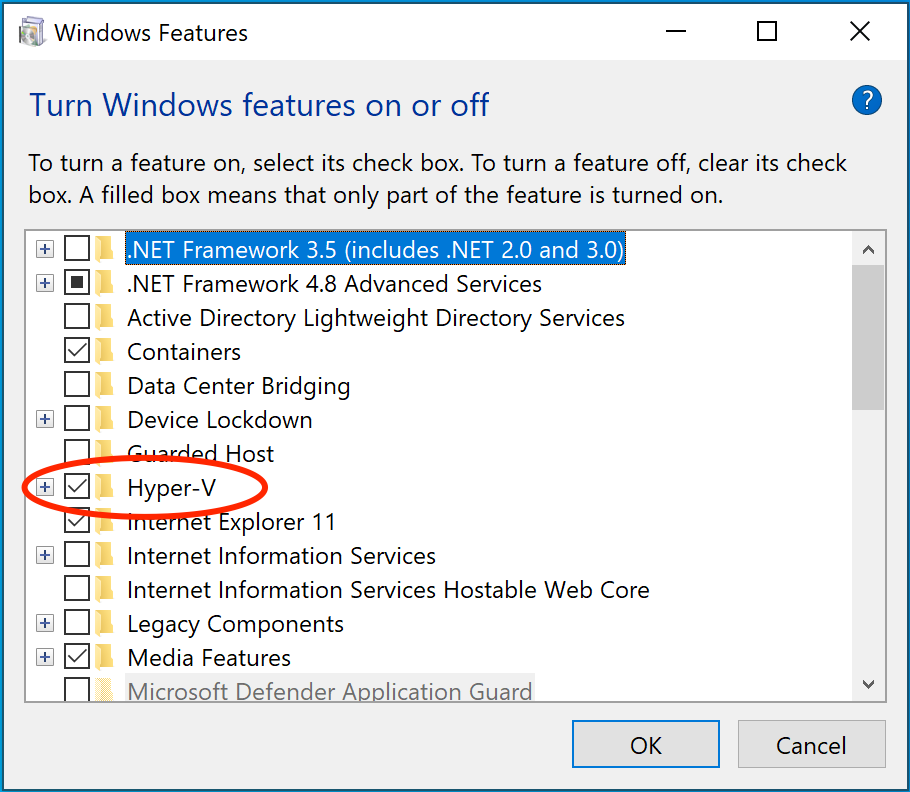

Hyper-V

On Windows 10 Pro or Enterprise, you can also use Hyper-V with the following features enabled:

Docker Desktop requires Hyper-V as well as the Hyper-V Module for Windows PowerShell to be installed and enabled. The Docker Desktop installer enables it for you.

Docker Desktop also needs two CPU hardware features to use Hyper-V: Virtualization and Second Level Address Translation (SLAT), which is also called Rapid Virtualization Indexing (RVI). On some systems, Virtualization must be enabled in the BIOS. The steps required are vendor-specific, but typically the BIOS option is called Virtualization Technology (VTx) or something similar. Run the command systeminfo to check all required Hyper-V features. See Pre-requisites for Hyper-V on Windows 10 for more details.

To install Hyper-V manually, see Install Hyper-V on Windows 10. A reboot is required after installation. If you install Hyper-V without rebooting, Docker Desktop does not work correctly.

From the start menu, type Turn Windows features on or off and press enter. In the subsequent screen, verify that Hyper-V is enabled.

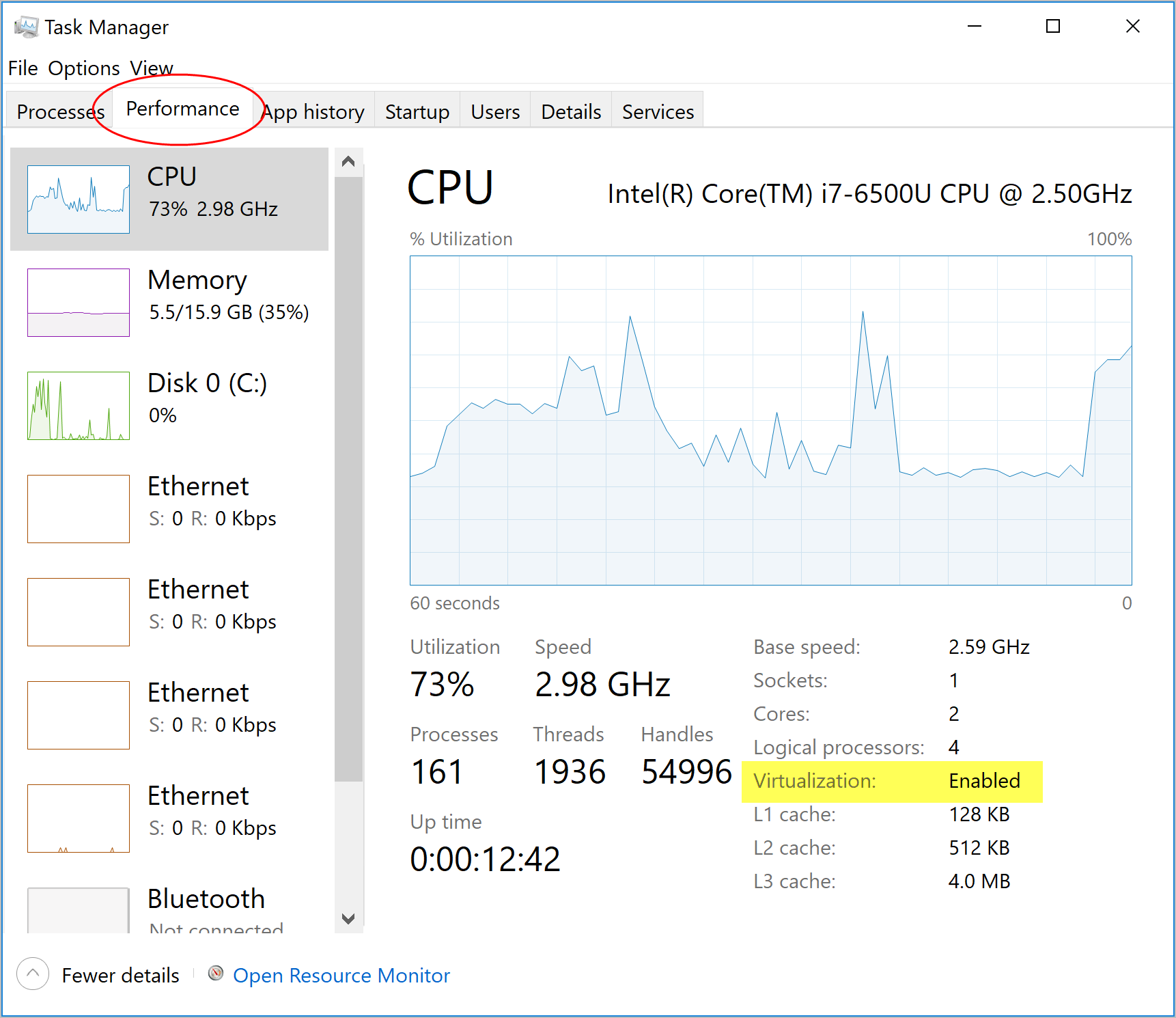

Virtualization must be enabled

In addition to Hyper-V or WSL 2, virtualization must be enabled. Check the Performance tab on the Task Manager:

If you manually uninstall Hyper-V, WSL 2 or disable virtualization, Docker Desktop cannot start. See Unable to run Docker for Windows on Windows 10 Enterprise.

Hypervisor enabled at Windows startup

If you have completed the steps described above and are still experiencing Docker Desktop startup issues, the Hypervisor may be installed but not launched during Windows startup. Some tools (such as older versions of VirtualBox) and video game installers disable the hypervisor on boot. To re-enable it:

- Open an administrative console prompt.

- Run bcdedit /set hypervisorlaunchtype auto .

- Restart Windows.

You can also refer to the Microsoft TechNet article on Control Flow Guard (CFG) settings.

Windows containers and Windows Server

Docker Desktop is not supported on Windows Server. If you have questions about how to run Windows containers on Windows 10, see Switch between Windows and Linux containers.

You can install a native Windows binary which allows you to develop and run Windows containers without Docker Desktop. However, if you install Docker this way, you cannot develop or run Linux containers. If you try to run a Linux container on the native Docker daemon, an error occurs:

Networking issues

IPv6 is not (yet) supported on Docker Desktop.


Error response from daemon: error while creating mount source path, mkdir /run/desktop/mnt/host/c/Users/. file exists #12403

- I have tried with the latest version of Docker Desktop

- I have tried disabling enabled experimental features

- I have uploaded Diagnostics

- Diagnostics ID:

Actual behavior

When I want to run the image with this command:

I get this error message:

Expected behavior

The container should be started and the mount point should appear in /var/www/ .

Information

- Windows Version: Microsoft Windows [Version 10.0.19043.1348]

- Docker Desktop Version: Docker Desktop 4.2.0 (70708)

- WSL2 or Hyper-V backend? WSL2

- Are you running inside a virtualized Windows e.g. on a cloud server or a VM: No.

Steps to reproduce the behavior

- I have two physical drives, C: and E:.

- On E:, each user has their own working directory, like E:\<username>. This is because I don’t like the spaces in the user name Windows creates for me.

- There is a file E:\<username>\Projektek\src2\my-file.html.

I created a junction in CMD.EXE, run as Administrator: mklink /J "c:\Users\<Windows user name>\src6" e:\<username>\Projektek\src2. Note: <username> has no spaces in it, while <Windows user name> is my first name and my last name, separated by a space.

stops with an error message:

Why is it an error that src6 exists? Of course it does; I want to see its content under /var/www/.

This problem seems to be similar to #5516 but the solution written there does not solve the problem for me.



Error when mounting host path with Docker for Windows - "Permission Denied" #3385

Expected behavior

I’m not able to do a docker run … I get the error below. I’m trying to run a Linux container on Windows 10.

error while creating mount source path ‘/host_mnt/c/Users/xyz/AppData/Roaming/Microsoft/UserSecrets’: mkdir /host_mnt/c/Users/xyz/AppData: permission denied.

Actual behavior

Run the container successfully

Information

- Windows Version: Windows 10

- Docker for Windows Version: 2.0.0.0-win78 (28905)

I checked the Drive sharing config and Drive sharing is enabled with credentials that have local admin rights on my machine.

Steps to reproduce the behavior


Same problem here.

Same here.

For context, I have an Azure Active Directory account and I had to create another local one with the same name to be able to use Docker AT ALL. But it seems to still be unable to mount folders inside the user directories.

Also have this problem. Volumes are created fine once you add the local account; the container just can’t access them. It doesn’t seem to matter what rights I put on the .docker folder for the new account user, either.

Also see "Error response from daemon: error while creating mount source path '/host_mnt/c/Users//.docker/Volumes/elasticdata-elasticsearch-0/pvc-64d22745-7883-11e9-9d67-00155d021b09': mkdir /host_mnt/c/Users//.docker: permission denied" when inspecting the pod. The file exists in the user directory, and there seems to be no issue with the volumes themselves.

Tried unsuccessfully to find a way to change the directory for these volumes to somewhere I would have more control as having them on another drive would be useful also.

The solution seems to be to add the Users group to the .docker folder and grant full access. Just the new local account access wasn’t enough for me.

@DarrenV79 could you please explain in more details how to add Users group to .docker folder and grant full access? thanks in advance

@daton89 To add a group to a directory in Windows, follow these steps:

1. Right-click the directory.

2. Click Properties.

3. Click the Security tab.

4. Click the "Edit" button.

5. Click the Add button.

6. Type "users" in the text box.

7. Click "Check Names" (it should auto-fill/update for you).

8. Click OK.

9. Highlight your newly added group.

10. Under "Permissions for [User/Groupname]", select Full control.

11. Click Apply.

12. Click "OK".

13. Click "OK".

With all that out of the way, I can report that adding the "Users" group to just the .docker folder in my C:\Users\[home]\.docker dir did not work.

What did work was having the Users group added to at least the .docker, the great great great grandparent dir of my working dir, and the local dir I was trying to mount to my container.

Same problem. Trying to give access to users.

@freerider7777 were you able to resolve your issue?

@alexkoepke Yes, I gave permissions from @DarrenV79’s workaround and it worked

What did work was having the Users group added to at least the .docker, the great great great grandparent dir of my working dir, and the local dir I was trying to mount to my container.

@alexkoepke this solved it for me as well

@DarrenV79's answer solved my issue as well.

Giving full permissions only to the .docker folder didn’t seem to work for me. Giving full permissions to the entire User folder seemed to do the trick, even though it is nasty.

My setup is as follows:

- I have a primary account called "User", which I’m currently logged in to and do my development work in

- I have a user called "DockerDiskSharing" with full admin rights, which I intend Docker to run with

The steps I took were:

- I opened a command prompt with admin rights and added the DockerDiskSharing user to the docker-users group by running:

net localgroup docker-users DockerDiskSharing /add

- I went to Docker -> Settings -> Shared Drives, clicked "Reset credentials", and entered the DockerDiskSharing user’s credentials. I ticked the C drive (my main partition) and the D drive (where my project is located).

- I opened a command prompt with admin rights and gave full access permissions to the entire Users/User folder by running:

icacls "C:\Users\User" /q /c /t /grant docker-users:F

I’m still not sure which folders under Users/User it actually needs read/write permissions for, but it worked.


This post goes over how to resolve Docker error “no space left on device”.

Problem

If Docker throws one of the following errors:

Error response from daemon: failed to mkdir /var/lib/docker/volumes/...: mkdir /var/lib/docker/volumes/...: no space left on device

Error response from daemon: failed to copy files: failed to open target /var/lib/docker/volumes/...: open /var/lib/docker/volumes/...: no space left on device

failed to solve: rpc error: code = Unknown desc = failed to mkdir /var/lib/docker/...: mkdir /var/lib/docker/...: no space left on device

It means your Docker has run out of space.

Solution

docker system prune

Run docker system prune to remove all unused data:

To prune without prompting for confirmation:

docker system prune --force

It will return the freed space at the end:

Total reclaimed space: 4.20GB
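To see how much space pruning actually frees on a Linux host, you can check the file system that holds Docker's data before and after. This bash sketch assumes the default data directory /var/lib/docker. With Docker Desktop, images and volumes live inside the VM disk image instead, which is what the Disk image size setting controls. free_kib is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the space still available, in KiB, on the file
# system that holds DIR (default: /var/lib/docker, Docker's data directory
# on a Linux host).
free_kib() {
  local dir="${1:-/var/lib/docker}"
  # df -P guarantees one line per file system; field 4 is "Available".
  df -Pk -- "$dir" | awk 'NR == 2 { print $4 }'
}

# Example: run free_kib before and after `docker system prune` to compare.
```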

docker volume prune

Run docker volume prune to remove all unused local volumes:

WARNING: Be careful since this can remove local database data.

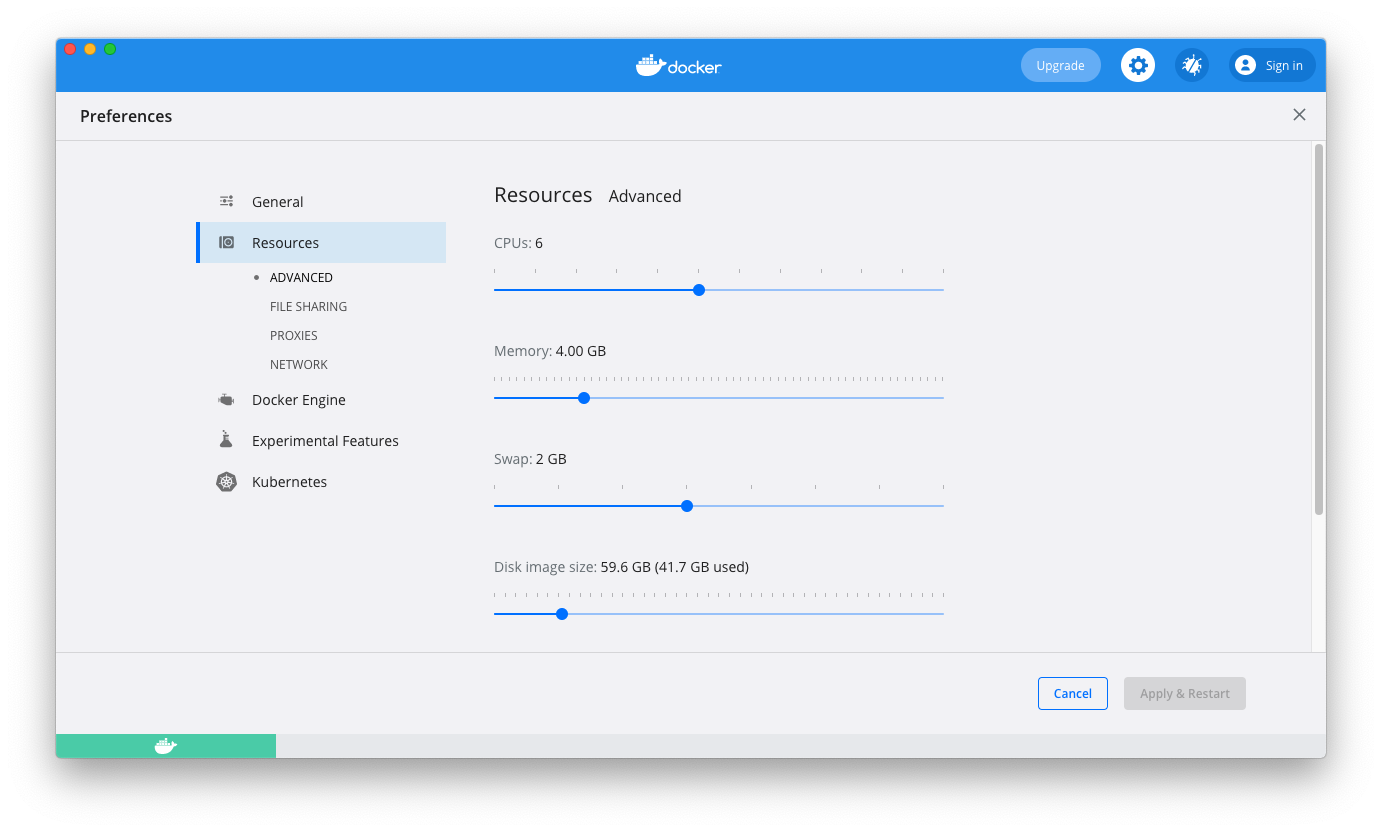

Disk image size

Increase disk space in Docker Desktop > Settings > Resources > Advanced > Disk image size:


Phenomenon

After stopping the MySQL container that mounts volumes, starting it again fails:

$ docker-compose up -d

Creating volume "sample_db-store" with default driver

Creating hoge_db_1 ... error

ERROR: for sample_db_1 Cannot start service db: error while creating mount source path '/host_mnt/Users/~/docker/mysql/sql': mkdir /host_mnt/Users/~/docker/mysql/sql: file exists

ERROR: Encountered errors while bringing up the project.

An error indicating that the host directory cannot be mounted is displayed, and the container cannot be started.

Operating environment

Solutions

If you are using Docker Desktop for Windows

Docker Desktop for Windows → [Settings] → [Shared Drives] → [Reset Credentials] → Reauthentication (check the corresponding path)

[reference]

https://www.nuits.jp/entry/docker-for-windows-mkdir-file-exists

If you are using Docker Desktop for Mac

Docker Desktop for Mac → [preferences…] →[Resources] →[File Sharing]

Delete /Users, then click [Apply & Restart].

Then add /Users back and click [Apply & Restart].

What I tried before solving

Operating background

With docker-compose, I was building a MySQL container as follows.

docker-compose.yml

version: '3'
services:
  db:
    image: mysql:8.0
    volumes:
      - db-store:/var/lib/mysql
      - ./logs:/var/log/mysql
      - ./docker/mysql/my.cnf:/etc/mysql/conf.d/my.cnf
      - ./docker/mysql/sql:/docker-entrypoint-initdb.d
    environment:
      - MYSQL_DATABASE=${DB_NAME}
      - MYSQL_USER=${DB_USER}
      - MYSQL_PASSWORD=${DB_PASS}
      - MYSQL_ROOT_PASSWORD=${DB_PASS}
      - TZ=${TZ}
    ports:
      - ${DB_PORT}:3306
volumes:
  db-store:

In volumes, in addition to the data and log mounts, docker-compose.yml defines the following entry:

- ./docker/mysql/sql:/docker-entrypoint-initdb.d

With the MySQL image, when a host directory is mounted at /docker-entrypoint-initdb.d, the .sql and .sh files in it are executed when the container is started for the first time.

https://hub.docker.com/_/mysql/

I therefore placed the table-creation SQL and a shell script that runs it in the host directory ./docker/mysql/sql, which is mounted into the container. On the first startup everything worked: the SQL and the shell script ran without any problems.

However, after the container was stopped with docker-compose stop, this error occurred when starting it again with docker-compose up.

Tried (1): Delete volume

I found information that a similar behavior could be solved by deleting the volume, so I tried deleting the container and the named volume.

$ docker-compose down --volumes

Removing sample_db_1 ... done

Removing volume sample_db-store

$ docker-compose up -d

It still failed.

Tried (2): Update the authentication information of the shared folder of Docker Desktop for Mac (this solution)

A similar Docker daemon error was reported when using Docker Desktop for Windows:

docker: Error response from daemon: error while creating mount source path '/host_mnt/c/tmp': mkdir /host_mnt/c: file exists.

However, I couldn’t find any reports of similar errors in Docker Desktop for Mac.

The flow differs a little from Windows, but when I tried to imitate the solution to the above error with Docker Desktop for Mac, it worked.

Cause

I suspect that something went wrong with Docker Desktop’s volume sharing settings, so that the directory on the host side could not be accessed, but I don’t know the details.

If you know the root cause, please let me know.

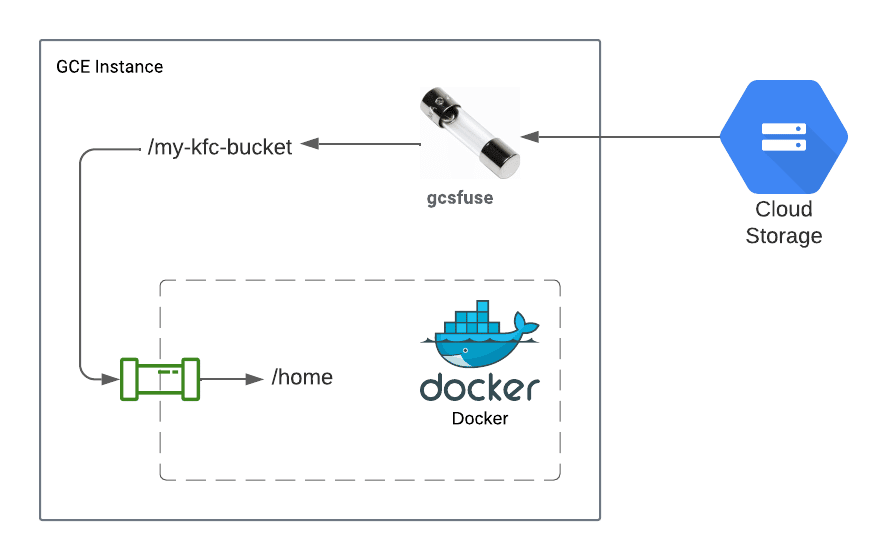

This post illustrates how you can mount a GCS bucket using GCSFuse on your host machine and expose it as a volume to a Docker container.

PROBLEM

You want to volume mount a FUSE-mounted directory to a container, for example:

When attempting to run the container…

docker run -it --rm -v /my-kfc-bucket:/home busybox

… an error occurred:

docker: Error response from daemon: error while creating mount source path '/my-kfc-bucket': mkdir /my-kfc-bucket: file exists.

SOLUTION

Unmount the existing FUSE-mounted directory.

sudo umount /my-kfc-bucket

Mount it back with the following options. Because this command with -o allow_other must be executed with sudo, the mounted files would otherwise be owned by root. Set the ownership to yourself (via --uid and --gid) so that you can easily read and write within the directory.

sudo gcsfuse -o allow_other --uid $(id -u) --gid $(id -g) gcs-bucket /my-kfc-bucket

If it is successful, the output should look like this:

Start gcsfuse/0.40.0 (Go version go1.17.6) for app "" using mount point: /my-kfc-bucket Opening GCS connection... Mounting file system "gcs-bucket"... File system has been successfully mounted.

Rerun the docker container.

docker run -it --rm -v /my-kfc-bucket:/home busybox

Now, you can read/write the GCS bucket’s data from the container. In this example, the GCS bucket’s data is located in /home.