
Question


I am new to Azure Machine Learning and I am trying to create some experiments to get familiar with it. I have a Train Model, then a Score Model, and finally an Evaluate Model. However, the last one gives me this error:

There is no label column in «Scored dataset». (Error 0024)

Answers


Hi,

For Evaluate Model to work, the scored dataset must contain the ground-truth label column, and its absence is what the module is throwing the error for. Can you visualize the titanic-test.csv data from the Execute Python Script module and make sure that it contains the column you set as the label column in Train Model, with the ground-truth values? Can you also verify that this column has the same name in the training and scoring datasets?

Thank you,
Ilya
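One quick way to act on this suggestion outside Studio is to compare the header rows of the training and scoring files. The sketch below assumes both are plain CSV text. The helper name `label_column_problems` and the label name `Survived` are hypothetical, because the thread does not say which column was used as the label.

```python
import csv
import io


def label_column_problems(train_csv, score_csv, label):
    """Return a list of problems with the label column (empty list if none).

    Both arguments are CSV text; only the header row of each is inspected.
    Column names are compared exactly, as a pipeline would compare them.
    """
    problems = []
    train_header = next(csv.reader(io.StringIO(train_csv)))
    score_header = next(csv.reader(io.StringIO(score_csv)))
    if label not in train_header:
        problems.append(f"label {label!r} not in training header")
    if label not in score_header:
        # Point out near misses such as different case or stray spaces.
        near = [c for c in score_header if c.strip().lower() == label.strip().lower()]
        hint = f" (did you mean {near[0]!r}?)" if near else ""
        problems.append(f"label {label!r} not in scoring header{hint}")
    return problems


train = "PassengerId,Pclass,Survived\n1,3,0\n"
test = "PassengerId,Pclass\n2,1\n"
print(label_column_problems(train, test, "Survived"))
```

An empty list means the label column is present under the same name in both files. It does not prove that the column is marked as the label in Studio's metadata.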

Edited Monday, September 28, 2015 7:59 PM

Proposed as answer by neerajkh_MSFT, Tuesday, September 29, 2015 4:09 PM

Marked as answer by TasosVentouris, Tuesday, September 29, 2015 5:40 PM


R-bloggers

R news and tutorials contributed by hundreds of R bloggers

How to evaluate R models in Azure Machine Learning Studio

Posted on August 24, 2016 by Constantinos Voglis in R bloggers

Azure Machine Learning Studio is a GUI-based integrated development environment for constructing and operationalizing machine learning workflows. The basic computational unit of an Azure ML Studio workflow (or Experiment) is a module which implements machine learning algorithms, data conversion and transformation functions etc. Modules can be connected by data flows, thus implementing a machine learning pipeline. A typical pipeline in Azure ML Studio is shown below:

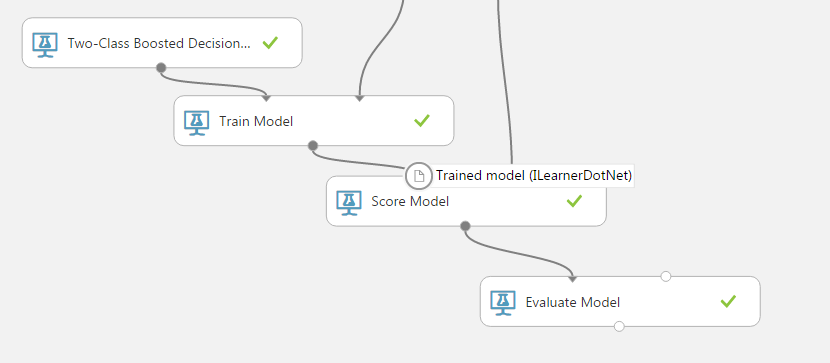

In the figure above, a native Azure ML Studio model (Two-Class Boosted Decision Trees) is connected successively to a Train Model which performs the training procedure, to a Score Model to predict the outcome on test data, and finally to an Evaluate Model that will assess the overall classification quality.

Microsoft currently offers a Create R Model module, which allows you to create custom ML models written in R and integrate them into Azure ML Studio pipelines, opening up a vast area of possibilities. However, the Evaluate Model module currently cannot be used with a Create R Model module (i.e. through the pipeline model -> train -> score -> evaluate). Quoting from the documentation:

Warning:

Currently it is not possible to pass the scored results of an R model to Evaluate Model or Cross-Validate Model. If you need to evaluate a model, you can write custom R script and run it using the Execute R Script module.

This is bad news for several reasons. First, the quality of a Create R Model module cannot be assessed uniformly and cannot be directly compared to native Azure models (Evaluate Model is used for comparison purposes too, as we will demonstrate below), so the user is forced to write custom comparison scripts, which is a tedious and non-standard process. Second, the Evaluate Model module offers handy out-of-the-box visualizations, which we would like to have available because they help rapid prototyping. Finally, it is much more straightforward to save trained models in the Azure ML Studio workspace using the Create R Model module (without the need to work directly with Azure Blob storage) than with the alternative option of Execute R Script.

So, in this post we will present a tweak that bypasses this limitation, allowing the use of an Evaluate Model module with a Create R Model one.

Demonstrating the issue

We will use as our starting point an example offered by Microsoft, which uses a two-class Naïve Bayes classifier from the e1071 R package.

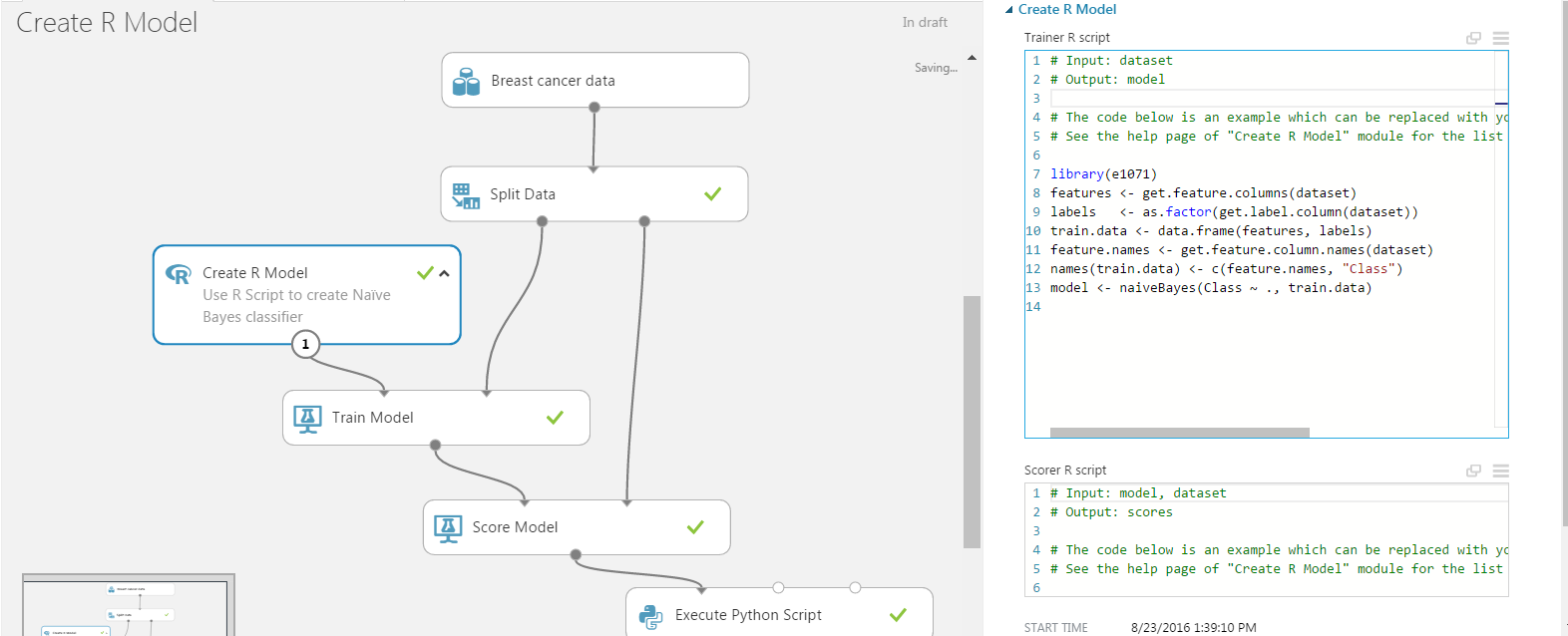

Here is the ‘Training Script’ of the Create R Model:

and the respective ‘Scoring Script’:

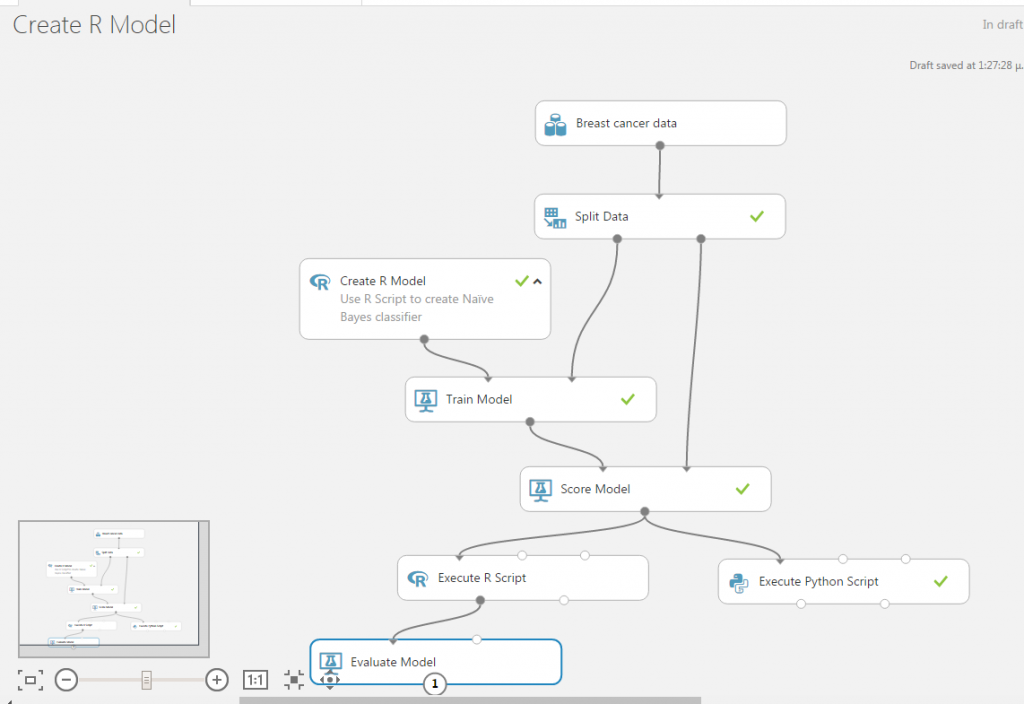

The complete experiment graph is shown in the figure below:

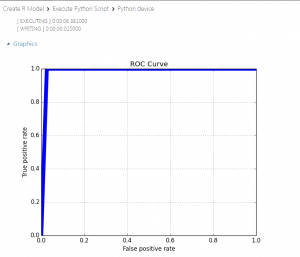

The experiment ends with an Execute Python Script module that performs the model evaluation programmatically (in Python!). This script calculates quantities like “Accuracy”, “Precision”, “Recall”, and “AUC”, and produces a PNG plot of the ROC curve as shown below:
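The evaluation script itself is not reproduced here. The following is a rough, standard-library-only sketch of the same four quantities. It assumes 0/1 true labels and one class-1 probability per row, and applies a 0.5 threshold like the example's scoring script. `evaluate` is a hypothetical name, and the AUC is computed with the pairwise (Mann-Whitney) formulation rather than by integrating a plotted curve.

```python
def evaluate(labels, probs, threshold=0.5):
    """Compute accuracy, precision, recall and ROC AUC for a binary classifier.

    labels: 0/1 ground-truth values.
    probs: scores for class 1 (higher means more likely positive).
    Needs at least one positive and one negative example for the AUC.
    """
    labels, probs = list(labels), list(probs)
    preds = [1 if p >= threshold else 0 for p in probs]
    pairs = list(zip(labels, preds))
    tp = sum(1 for y, yhat in pairs if y == 1 and yhat == 1)
    tn = sum(1 for y, yhat in pairs if y == 0 and yhat == 0)
    fp = sum(1 for y, yhat in pairs if y == 0 and yhat == 1)
    fn = sum(1 for y, yhat in pairs if y == 1 and yhat == 0)
    accuracy = (tp + tn) / len(labels)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # AUC = probability that a random positive outscores a random negative,
    # counting ties as one half (quadratic, fine for small examples).
    pos = [p for y, p in zip(labels, probs) if y == 1]
    neg = [p for y, p in zip(labels, probs) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    auc = wins / (len(pos) * len(neg))
    return {"Accuracy": accuracy, "Precision": precision, "Recall": recall, "AUC": auc}


print(evaluate([1, 0, 1, 0], [0.9, 0.4, 0.35, 0.1]))
```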

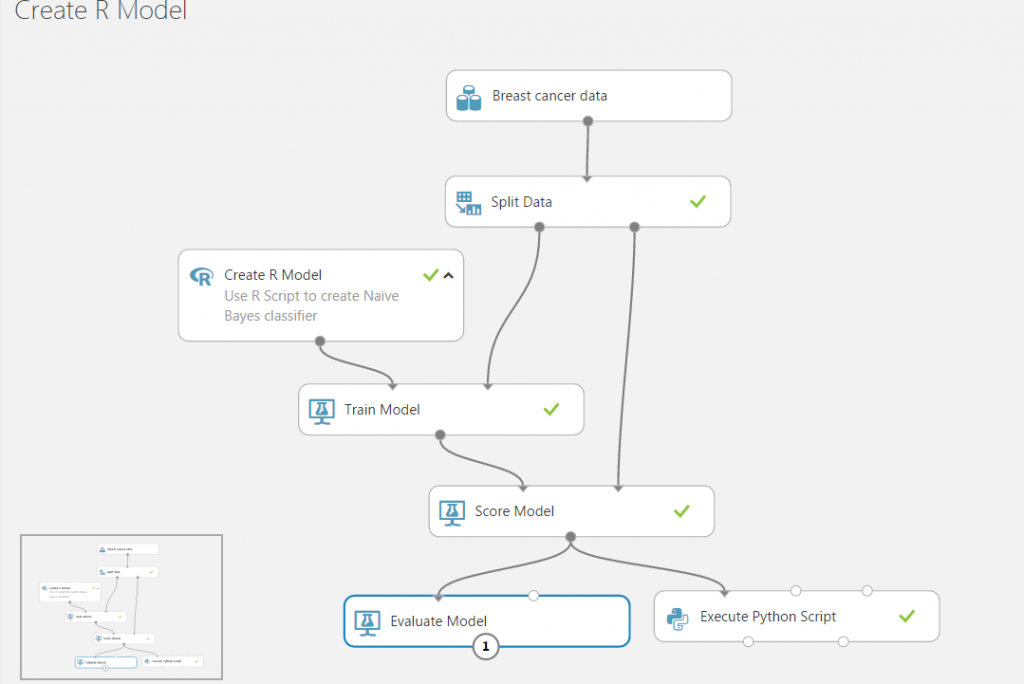

Let us first confirm Microsoft’s warning by adding an Evaluate Model module and connecting it to the Score Model like this:

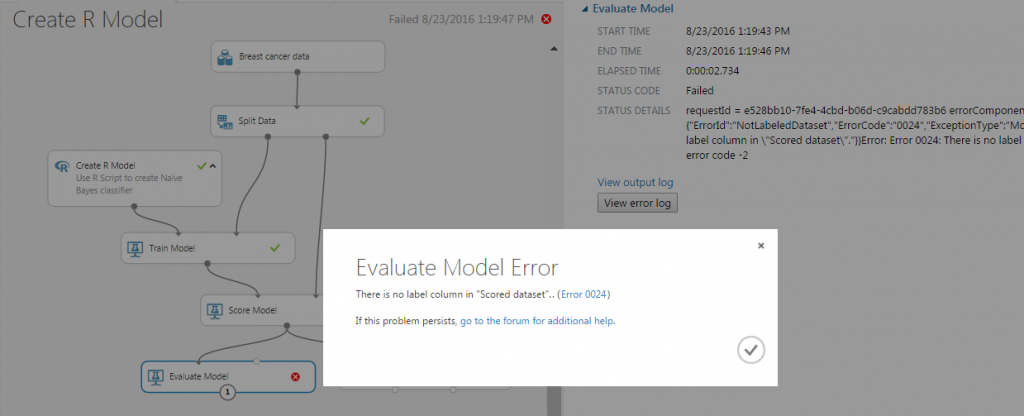

When we try to run the experiment we get the following error:

It seems that the data passed from the Score Model to the Evaluate Model lack a special annotation for the scored columns. The error message states: There is no label column in «Scored dataset».

Solving the issue

Fortunately R again comes to the rescue. From the error message we deduce that Evaluate Model is missing a label column from the incoming dataset. Score Model on the other hand creates all the necessary information. This indicates a possible metadata issue. The appropriate columns do exist but need to be further annotated. The solution emerges when reading the appropriate metadata documentation. Typical metadata information includes:

- Treating Boolean or numeric columns as categorical values

- Indicating which column contains the true label, the assigned label or the classification score

- Marking columns as features

- Changing date/time values to a numeric value

- Adding or changing column names

We need to mark these special columns (true label, assigned label, and classification score) in the dataset passed between the scoring and evaluation modules.
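To make the idea concrete, here is a toy Python model of what the annotation changes. It is not Azure ML's internal representation. It attaches to named columns the same attribute names and values that the bridge R script in this example sets (`label.type` = `True Labels`, `score.type` = `Assigned Labels` / `Calibrated Score`). It then imitates an evaluator that refuses to run without a true-label column. `Column` and `find_roles` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Column:
    name: str
    values: list
    # Free-form metadata, keyed like the R attributes in the bridge script.
    attrs: dict = field(default_factory=dict)


def find_roles(columns):
    """Return the (true label, assigned label, calibrated score) columns.

    Raises ValueError with an Error 0024-style message when no column is
    annotated as the true label; this only imitates Evaluate Model's check.
    """
    roles = {}
    for col in columns:
        if col.attrs.get("label.type") == "True Labels":
            roles["true"] = col
        score_type = col.attrs.get("score.type")
        if score_type == "Assigned Labels":
            roles["assigned"] = col
        elif score_type == "Calibrated Score":
            roles["score"] = col
    if "true" not in roles:
        raise ValueError("There is no label column in dataset.")
    return roles["true"], roles.get("assigned"), roles.get("score")


# The raw Score Model output: right data, no annotations, so the check fails.
cols = [Column("Class", [1, 0]), Column("classes", [1, 1]), Column("probabilities", [0.8, 0.6])]
try:
    find_roles(cols)
except ValueError as err:
    print(err)

# After annotating, the same columns are accepted.
cols[0].attrs["label.type"] = "True Labels"
cols[1].attrs["score.type"] = "Assigned Labels"
cols[2].attrs["score.type"] = "Calibrated Score"
print([c.name for c in find_roles(cols)])
```

The data never changes in this sketch. Only the metadata decides whether the evaluator can find the label, which is the point of the workaround.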

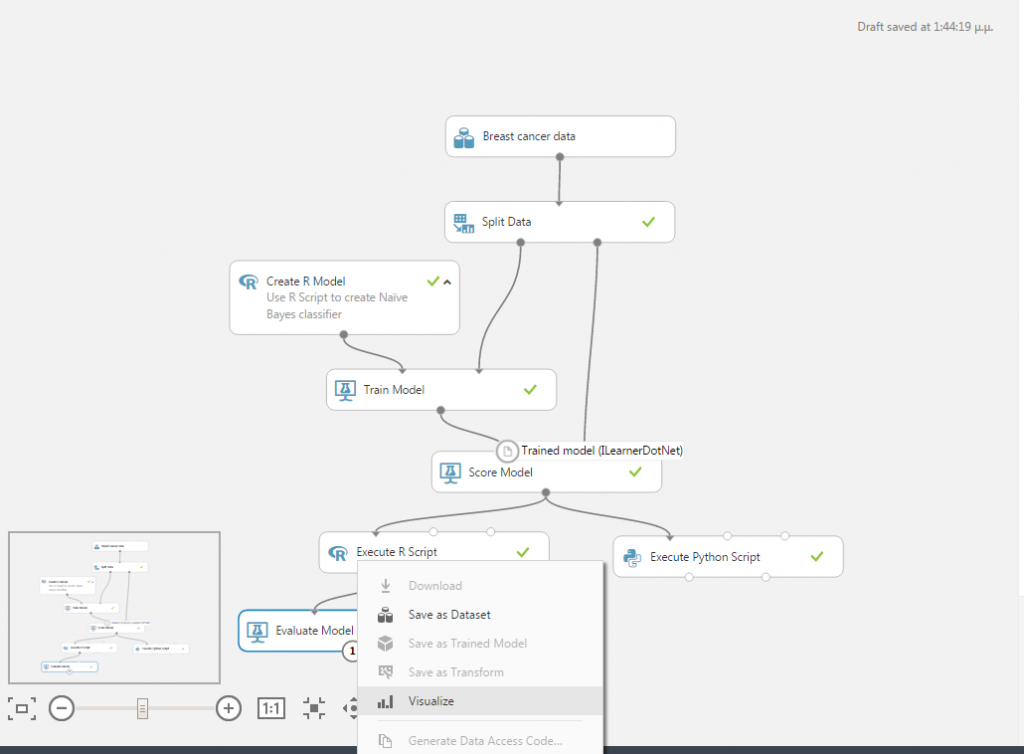

The simplest way to do this is to use an Execute R Script as a bridge between the Score and the Evaluate modules. Our experiment now looks as shown below:

Before we provide the code of the Execute R Script module, we must make three important observations:

- In the ‘Training Script’ of the example we can see that the classification column (true label) is called Class.

- The ‘Scoring Script’ of the example ends with scores <- data.frame(classes, probabilities). The first column corresponds to the assigned label and the second to the classification score.

- The Score Model module has the option Append score columns to output checked, so we expect the ‘Scoring Script’ to add two extra columns to the input dataset: classes and probabilities.

The final R script that will bridge the Score and Evaluation modules is as follows:

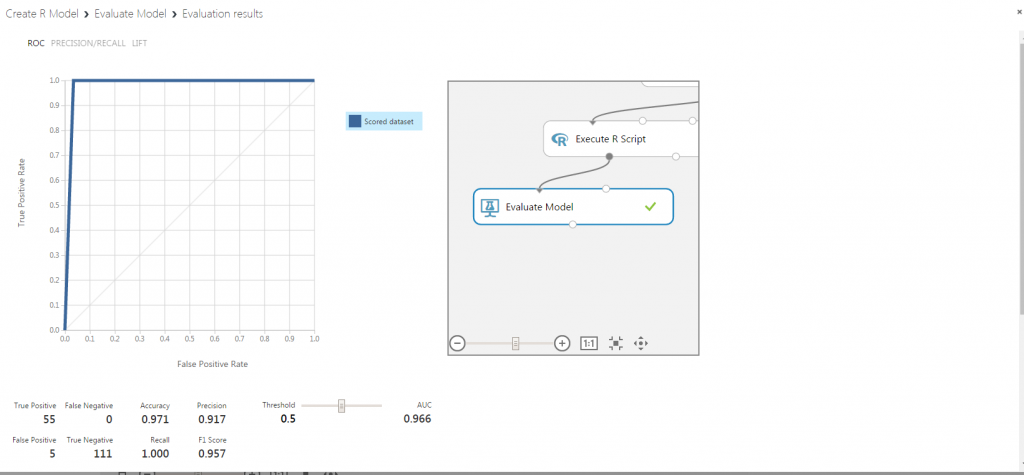

We run the experiment and see that everything works. The Evaluate Model module is now operational, and we can click to visualize:

The result of the visualization is shown below:

This is indeed the same ROC curve we got from the Execute Python Script output. There is a big difference, though: the result of Evaluate Model is interactive, and the user can try different threshold values on the fly.
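Conceptually, moving the threshold recomputes the confusion matrix at a new cutoff, and each cutoff gives one point on the ROC curve. A minimal sketch of such a sweep follows. It assumes 0/1 labels, class-1 probabilities, and at least one example of each class. `sweep` is a hypothetical helper, not part of Azure ML.

```python
def sweep(labels, probs, thresholds):
    """Return (threshold, true positive rate, false positive rate) per cutoff."""
    pos = sum(1 for y in labels if y == 1)
    neg = len(labels) - pos
    points = []
    for t in thresholds:
        # A row is predicted positive when its probability reaches the cutoff.
        tp = sum(1 for y, p in zip(labels, probs) if y == 1 and p >= t)
        fp = sum(1 for y, p in zip(labels, probs) if y == 0 and p >= t)
        points.append((t, tp / pos, fp / neg))
    return points


print(sweep([1, 0, 1, 0], [0.9, 0.4, 0.35, 0.1], [0.3, 0.5]))
```

Lowering the cutoff from 0.5 to 0.3 in this toy data catches every positive but also lets in half of the negatives. That is the trade-off the interactive view lets you explore.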

The R code provided above is tailored for the specific example; nevertheless, it is easily customized by identifying the corresponding columns and making the appropriate modifications. Moreover, we can even integrate this code into the ‘Scoring Script’ part of our Create R Model module, hence eliminating the extra Execute R Script module altogether (we leave this as an exercise for the interested reader).

Application: Comparing against native Azure ML Studio algorithms

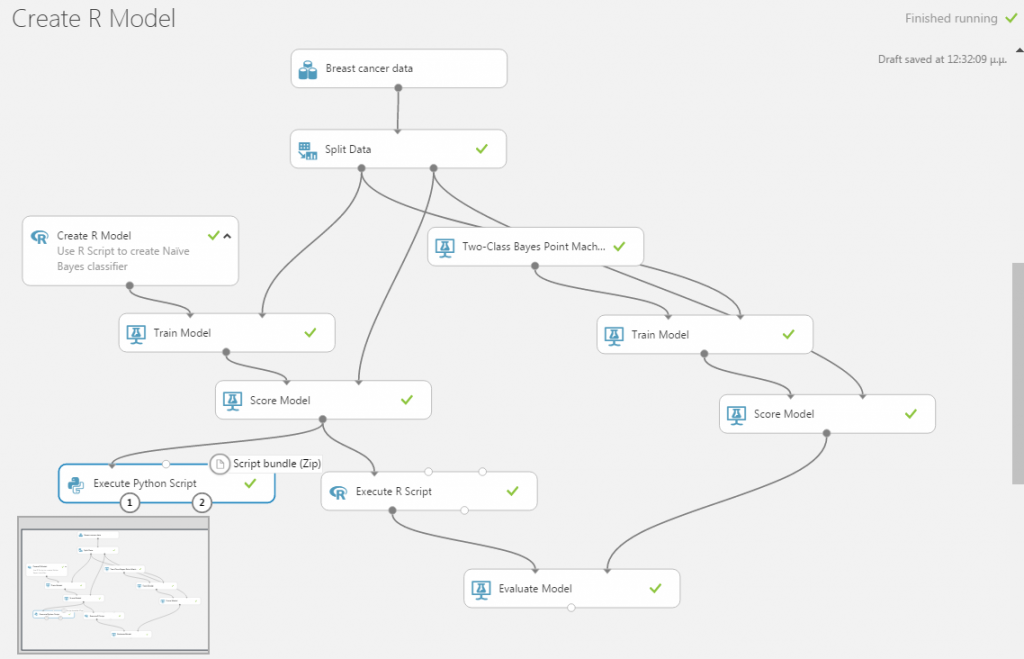

To demonstrate the usefulness of our workaround, we extend the existing experiment by adding a separate training workflow starting from a native Azure ML Studio algorithm. We then attach this secondary flow to our Evaluate Model module and perform a comparison. We have selected the native Two-Class Bayes Point Machine, and the experiment now looks like the figure below:

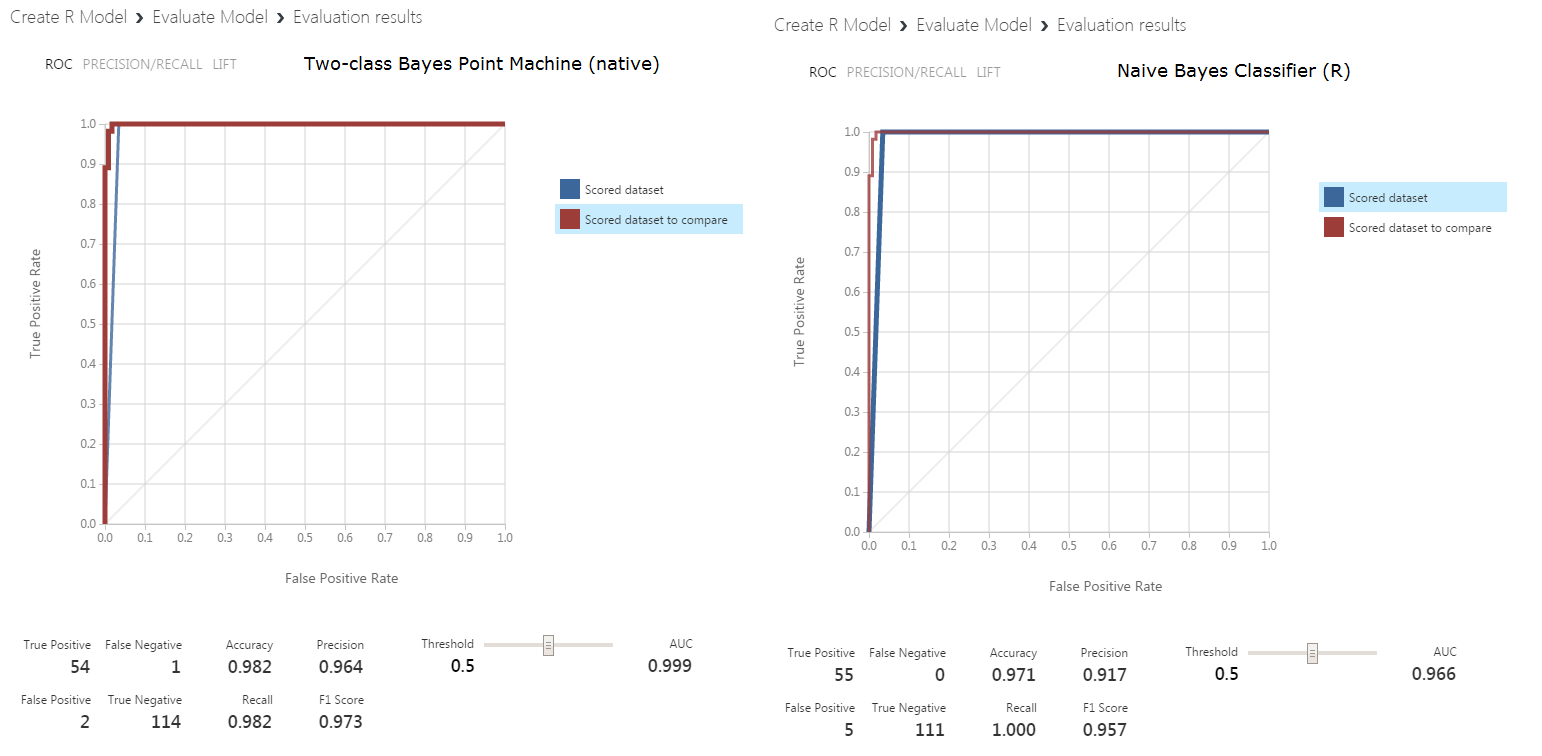

We can run the experiment and visualize the output of the Evaluate Model. In the figure below we present the two results side by side:
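For readers who want a rough offline counterpart to this side-by-side view, the sketch below ranks models by AUC on the same test rows. It assumes you have exported each model's class-1 probabilities, aligned with one shared list of 0/1 labels. The model names and numbers are made up and are not results from this post. `auc` and `compare` are hypothetical helpers.

```python
def auc(labels, probs):
    """ROC AUC via pairwise comparison (Mann-Whitney); ties count one half."""
    pos = [p for y, p in zip(labels, probs) if y == 1]
    neg = [p for y, p in zip(labels, probs) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def compare(labels, scored_by_model):
    """Return (model name, AUC) pairs sorted from best to worst AUC."""
    results = [(name, auc(labels, probs)) for name, probs in scored_by_model.items()]
    return sorted(results, key=lambda item: item[1], reverse=True)


labels = [1, 0, 1, 0]
print(compare(labels, {
    "naive_bayes": [0.9, 0.4, 0.35, 0.1],        # toy numbers
    "bayes_point_machine": [0.8, 0.3, 0.6, 0.2],  # toy numbers
}))
```

A single AUC number hides where on the curve the models differ. The interactive Evaluate Model view is still more informative for picking a threshold.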

Summary

We have presented a workaround to connect scoring results from a Create R Model module to an Evaluate Model module in Azure ML Studio. This connection is not allowed in the current version of Azure ML Studio, so model evaluation has to be performed manually with user-written scripts. The workaround consists of a rather simple R script, added to the existing ML pipeline between Score Model and Evaluate Model, that alters the metadata of the scored dataset. With this modification, Azure ML Studio users can enjoy uniform evaluation of both native and custom machine learning models.



Error 0024




| Field | Value |
|---|---|
| title | Error 0024 \| Microsoft Docs |
| titleSuffix | Azure Machine Learning Studio |
| ms.date | 06/14/2017 |
| ms.service | machine-learning |
| ms.component | studio |
| ms.topic | reference |
| ms.assetid | 60822377-da7a-40b8-0024-d185d1509344 |
| caps.latest.revision | 8 |
| author | rastala |
| ms.author | roastala |
| manager | cgronlun |

Exception occurs if dataset does not contain a label column.

This error in Azure Machine Learning occurs when the module requires a label column and the dataset does not have a label column. For example, evaluation of a scored dataset usually requires that a label column be present to compute accuracy metrics.

It can also happen that a label column is present in the dataset, but not detected correctly by Azure Machine Learning.

Resolution

- Open the module that generated the error, and determine if a label column is present. The name or data type of the column doesn’t matter, as long as the column contains a single outcome (or dependent variable) that you are trying to predict. If you are not sure which column has the label, look for a generic name such as Class or Target.

- If the dataset does not include a label column, it is possible that the label column was explicitly or accidentally removed upstream. It could also be that the dataset is not the output of an upstream scoring module.

- To explicitly mark the column as the label column, add the Edit Metadata module and connect the dataset. Select only the label column, and select Label from the Fields dropdown list.

- If the wrong column is chosen as the label, you can select Clear label from the Fields to fix the metadata on the column.
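If you have the data as a CSV file, the "look for a generic name" step can be scripted as a first pass. This is only a heuristic sketch with hypothetical names (`guess_label_columns`, `GENERIC_LABEL_NAMES`). It helps you find a candidate column, but you still mark it as the label with Edit Metadata as described above.

```python
import csv
import io

# Generic label names mentioned in the resolution steps; extend as needed.
GENERIC_LABEL_NAMES = ("class", "target")


def guess_label_columns(csv_text, candidates=GENERIC_LABEL_NAMES):
    """Return header names that look like a label column (case-insensitive)."""
    header = next(csv.reader(io.StringIO(csv_text)))
    return [name for name in header if name.strip().lower() in candidates]


print(guess_label_columns("Age,Income,Class\n30,1,0\n"))
```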

| Exception Messages |
|---|
| There is no label column in dataset. |
| There is no label column in «{0}». |

[!TIP] Need more help or troubleshooting tips for Azure Machine Learning? Try these resources:

- Troubleshooting guide: Create and connect to a Machine Learning workspace

- Azure Machine Learning Frequently Asked Questions (FAQ)

See also

Module error codes

[This article was first published on R – Nodalpoint, and kindly contributed to R-bloggers.]



Here is the ‘Training Script’ of the Create R Model:

```r
library(e1071)
features <- get.feature.columns(dataset)
labels <- as.factor(get.label.column(dataset))
train.data <- data.frame(features, labels)
feature.names <- get.feature.column.names(dataset)
names(train.data) <- c(feature.names, "Class")
model <- naiveBayes(Class ~ ., train.data)
```

and the respective ‘Scoring Script’:

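```r
library(e1071)
# Probability of the second class for each row
probabilities <- predict(model, dataset, type="raw")[,2]
# Assigned label: 1 if the probability is at least 0.5, else 0
classes <- as.factor(as.numeric(probabilities >= 0.5))
scores <- data.frame(classes, probabilities)
```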


The final R script that will bridge the Score and Evaluation modules is as follows:

```r
# Map 1-based optional input ports to variables
dataset1 <- maml.mapInputPort(1) # class: data.frame

# Create a three-column data.frame with columns:
#   true_labels:      actual classes
#   assigned_label:   predicted classes with threshold set to 0.5
#   calibrated_score: probability output from the Bayes classifier
data.set <- data.frame(true_labels=dataset1$Class,
                       assigned_label=dataset1$classes,
                       calibrated_score=dataset1$probabilities)

# Set the "label.type" attribute of the true-label column (originally "Class") to "True Labels"
attr(data.set$true_labels, "label.type") <- "True Labels"

# Set the "feature.channel" and "score.type" attributes of the assigned-label column
attr(data.set$assigned_label, "feature.channel") <- "Binary Classification Scores"
attr(data.set$assigned_label, "score.type") <- "Assigned Labels"

# Set the "feature.channel" and "score.type" attributes of the probability column
attr(data.set$calibrated_score, "feature.channel") <- "Binary Classification Scores"
attr(data.set$calibrated_score, "score.type") <- "Calibrated Score"

# Rename the columns
names(data.set) <- c("Class", "Scored Labels", "Scored Probabilities")

# Export the dataset
maml.mapOutputPort("data.set");
```



I built a multiclass SVM model in R and used the Create R Model module in Azure to train it and to score my testing dataset. Here are the trainer and scoring R scripts.

Trainer R script:

```r
library(e1071)
features <- get.feature.columns(dataset)
labels <- as.factor(get.label.column(dataset))
train.data <- data.frame(features, labels)
feature.names <- get.feature.column.names(dataset)
names(train.data) <- c(feature.names, "Class")
model <- svm(Class ~ ., train.data)
```

Scores R script:

```r
library(e1071)
classes <- predict(model, dataset)
classes <- as.factor(classes)
res <- data.frame(classes, probabilities = 0.5)
print(str(res))
print(res)
scores <- res
```

Note that I hardcoded the probability values to simplify the code.

Here is my component design in Azure:

When I run the experiment, all the components work fine. However, the scored dataset port of the Score Model does not show the predicted values; it only shows the feature values from the testing dataset. In the output log of the Score Model I can see that the model has predicted the testing data as expected (I added print commands to the scoring R script). But this is not enough: I need the Score Model to return the predictions so I can pass them via the API.

Has anyone faced this issue before?


I am trying to predict scored labels using regression. But when I try to get the results from the Azure ML web service in Excel 2016, no results appear in the Scored Labels column. How should I fix this? Below is my whole process…

This is the problem I always get: as you can see, there are no results in the Scored Labels column when I try to predict.

asked Oct 15, 2017 at 9:39


Test the web service in the web client (under Configure) to see whether it works.

If it does, there might be something wrong with the client.

Stephen Rauch

answered Jul 25, 2018 at 6:41


In this article, we will discuss how to fix the KeyError in pandas. A pandas KeyError occurs when we try to access a column or row label that doesn’t exist in our DataFrame. Usually, this error occurs when you misspell a column/row name or include an unwanted space before or after it.

The link to dataset used is here

Example

```python
import pandas as pd

df = pd.read_csv('data.csv')
```

Output:

Reproducing the KeyError by accessing a misspelled column, `df['country']`:

Output:

```
KeyError: 'country'
```

Since there is no column named country, we get a KeyError.

How to Fix the KeyError?

We can fix the error simply by correcting the spelling of the key. If we are not sure about the spelling, we can print the list of all column names and cross-check.

```python
print(df.columns.tolist())
```

Output:

```
['Country', 'Age', 'Salary', 'Purchased']
```
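The cross-check can also be automated. The sketch below is a hypothetical helper, not part of pandas. You would pass it `df.columns.tolist()`. It tries an exact match first, then a match that ignores case and surrounding spaces (the two causes named above), and finally the closest spelling according to the standard library's `difflib`.

```python
import difflib


def suggest_column(wanted, columns):
    """Return the actual column name that `wanted` most likely refers to, or None."""
    if wanted in columns:
        return wanted
    # Map normalized names back to the real ones.
    normalized = {c.strip().lower(): c for c in columns}
    key = wanted.strip().lower()
    if key in normalized:
        return normalized[key]
    close = difflib.get_close_matches(key, list(normalized), n=1, cutoff=0.8)
    return normalized[close[0]] if close else None


cols = ['Country', 'Age', 'Salary', 'Purchased']
print(suggest_column('country', cols))
print(suggest_column('Salry', cols))
```

The 0.8 cutoff is an arbitrary choice. Lower it to get more aggressive suggestions, at the risk of matching the wrong column.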

Using the Correct Spelling of the Column

Accessing `df['Country']` instead returns the column:

Output:

```
0     France
1      Spain
2    Germany
3      Spain
4    Germany
5     France
6      Spain
7     France
8    Germany
9     France
Name: Country, dtype: object
```

If we want to avoid an error when an invalid key is passed, we can use df.get('your column') to retrieve the column. No error is raised if the key is invalid; get() returns None, or the default value we supply.

Syntax: `DataFrame.get('column_name', default=default_value_if_column_is_not_present)`

```python
df.get('country', default="no_country")
```

Output:

```
'no_country'
```

With the correct spelling, we get the column’s values instead of the default value:

```python
df.get('Country', default="no_country")
```

Output:

```
0     France
1      Spain
2    Germany
3      Spain
4    Germany
5     France
6      Spain
7     France
8    Germany
9     France
Name: Country, dtype: object
```
