Содержание

- canceling autovacuum task error

- Don’t stop PostgreSQL’s autovacuum with your application

- The problem

- Unfinished transactions

- Exclusive table locks

- Summary

- Re: Getting «ERROR: canceling autovacuum task»

- In response to

- Browse pgsql-admin by date

- Обсуждение: Autovacuum Truncation Phase Loop?

- Autovacuum Truncation Phase Loop?

- Re: Autovacuum Truncation Phase Loop?

- Обсуждение: Timeout error on pgstat

- Timeout error on pgstat

- Re: Timeout error on pgstat

- Re: Timeout error on pgstat

- Re: Timeout error on pgstat

- Re: Timeout error on pgstat

canceling autovacuum task error

| From: | tamanna madaan |

|---|---|

| To: | pgsql-general(at)postgresql(dot)org |

| Subject: | canceling autovacuum task error |

| Date: | 2011-08-10 05:07:05 |

| Message-ID: | CAD4qJ_JVsq4tu68+EVEKe0_RFejVxttxOfHKq6qBGuZo0gxh5w@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Thread: | |

| Lists: | pgsql-general |

I am using a cluster setup having postgres-8.4.0 and slon 2.0.4 is being

used for replication . It happened that the autovacuum was not running

successfully on one of the nodes in cluster and was giving error :

2011-05-13 23:07:42 CDTERROR: canceling autovacuum task

2011-05-13 23:07:42 CDTCONTEXT: automatic vacuum of table

«abc.abc.sometablename»

2011-05-13 23:07:42 CDTERROR: could not open relation with OID 141231 at

character 87

sometimes it was giving a different error as below :

2011-05-13 04:45:05 CDTERROR: canceling autovacuum task

2011-05-13 04:45:05 CDTCONTEXT: automatic analyze of table

«abc.abc.sometablename»

2011-05-13 04:45:05 CDTLOG: could not receive data from client: Connection

reset by peer

2011-05-13 04:45:05 CDTLOG: unexpected EOF on client connection

2011-05-13 04:45:05 CDTERROR: duplicate key value violates unique

constraint «sl_nodelock-pkey»

2011-05-13 04:45:05 CDTSTATEMENT: select «_schemaname».cleanupNodelock();

insert into «_mswcluster».sl_nodelock values ( 2, 0,

«pg_catalog».pg_backend_pid());

Can see the below log also in postgres logs :

«checkpoints are occurring too frequently (19 seconds apart)»

I am not sure when these all errors started coming . Just noticed these

when database size grew huge and it became slow.

Can anybody shed some light on it if these errors are related or what could

be the reason for these errors .

Источник

Don’t stop PostgreSQL’s autovacuum with your application

The problem

Some weeks ago, we received a complaint from a customer about bad PostgreSQL performance for a specific application. I took a look into the database and found strange things going on: the query planner was executing “interesting” query plans, tables were bloated with lots of dead rows (one was 6 times as big as it should be), and so on.

The cause revealed itself when looking at pg_stat_user_tables:

Despite of heavy write activity on the database, no table had ever seen autovacuum or autoanalyze. But why?

As I delved into it, I noticed that PostgreSQL’s autovacuum/autoanalyze was practically stopped in two ways by the application. I’d like to share our findings to help other programmers not to get trapped in situations like this.

Unfinished transactions

It turned out that the application had one component which connected to the database and opened a transaction right after startup, but never finished that transaction:

Note that the database server was started about 11 ¾ hours ago in this example. Vacuuming (whether automatic or manual) stops at the oldest transaction id that is still in use. Otherwise it would be vacuuming active transactions, which is not sensible at all. In our example, vacuuming is stopped right away since the oldest running transaction is only one minute older than the running server instance. At least this is easy to resolve: we got the developers to fix the application. Now it finishes every transaction in a sensible amount of time with either COMMIT or ABORT.

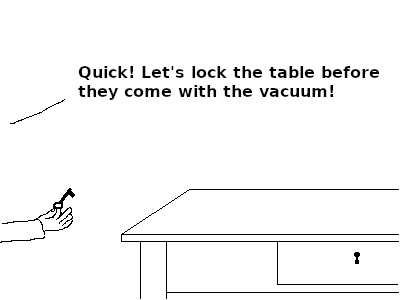

Exclusive table locks

Unfortunately, this was not all of it: autovacuum was working now but quite sporadically. A little bit of research revealed that autovacuum will abort if it is not able to obtain a table lock within one second – and guess what: the application made quite heavy use of table locks. We found a hint that something suspicious is going on in the PostgreSQL log:

Searching the application source brought up several places where table locks were used. Example:

The textindex code was particularly problematic as it dealt often with large documents. Statements like the one above could easily place load on the database server high enough to cause frequent autovacuum aborts.

The developers said that they have introduced the locks because of concurrency issues. As we could not get rid of them, I have installed a nightly cron job to force-vacuum the database. PostgreSQL has shown much improved query responses since then. Some queries’ completion times even improved by a factor of 10. I’ve been told that in the meantime they have found a way to remove the locks so the cron job is not necessary anymore.

Summary

PostgreSQL shows good auto-tuning and is a pretty low-maintenance database server if you allow it to perform its autovacuum/autoanalyze tasks regularly. We have seen that application programs may put autovacuum effectively out of business. In this particular case, unfinished transactions and extensive use of table locks were the show-stoppers. After we have identified and removed these causes, our PostgreSQL database is running smoothly again.

We are currently in the process of integrating some of the most obvious signs of trouble into the standard database monitoring on our managed hosting platform to catch those problems quickly as they show up.

Источник

Re: Getting «ERROR: canceling autovacuum task»

| From: | Alvaro Herrera |

|---|---|

| To: | «Dean Gibson (DB Administrator)» |

Cc: pgsql-admin(at)postgresql(dot)org Subject: Re: Getting «ERROR: canceling autovacuum task» Date: 2008-02-10 23:47:56 Message-ID: 20080210234756.GA7093@alvh.no-ip.org Views: Raw Message | Whole Thread | Download mbox | Resend email Thread: Lists: pgsql-admin

Dean Gibson (DB Administrator) wrote:

> I’m getting this loading some large (1 million row) tables. Is this

> anything to be concerned about?

No, it’s normal. It means the autovacuum task was cancelled in order to

avoid blocking your regular Postgres sessions.

If it’s only during table loading, there’s no problem — the table will

be processed later eventually. If it happens all the time, I would

advise setting a cron job to carry out the vacuum task.

—

Alvaro Herrera http://www.CommandPrompt.com/

The PostgreSQL Company — Command Prompt, Inc.

In response to

- Getting «ERROR: canceling autovacuum task» at 2008-02-10 17:30:22 from Dean Gibson (DB Administrator)

Browse pgsql-admin by date

| From | Date | Subject | |

|---|---|---|---|

| Next Message | Phillip Smith | 2008-02-10 23:56:55 | Re: Postgres Backup and Restore |

| Previous Message | Tom Lane | 2008-02-10 23:40:21 | Re: Postgres Backup and Restore |

Copyright © 1996-2023 The PostgreSQL Global Development Group

Источник

Обсуждение: Autovacuum Truncation Phase Loop?

Autovacuum Truncation Phase Loop?

We recently upgraded a 17 TB database from Postgres 9.6 to 12 using pg_upgrade. After this upgrade, we started observing that autovacuum would get in a loop about every 5 seconds for certain tables. This usually happened to be the toast table of the relation. This causes the performance of the table to decrease substantially. A manual VACUUM of the table resolves the issue. Here is an example of what we see in the log:

2020-11-04 16:34:38.131 UTC [892980-1] ERROR: canceling autovacuum task

2020-11-04 16:34:38.131 UTC [892980-2] CONTEXT: automatic vacuum of table «x.pg_toast.pg_toast_981540»

2020-11-04 16:34:41.878 UTC [893355-1] ERROR: canceling autovacuum task

2020-11-04 16:34:41.878 UTC [893355-2] CONTEXT: automatic vacuum of table «x.pg_toast.pg_toast_981540»

2020-11-04 16:34:45.208 UTC [893972-1] ERROR: canceling autovacuum task

2020-11-04 16:34:45.208 UTC [893972-2] CONTEXT: automatic vacuum of table «x.pg_toast.pg_toast_981540»

2020-11-04 16:34:47.635 UTC [894681-1] ERROR: canceling autovacuum task

2020-11-04 16:34:47.635 UTC [894681-2] CONTEXT: automatic vacuum of table «x.pg_toast.pg_toast_981540»

Based upon Googling, we suspect it is the truncation step of autovacuum and its ACCESS EXCLUSIVE lock attempt(s).

Re: Autovacuum Truncation Phase Loop?

We recently upgraded a 17 TB database from Postgres 9.6 to 12 using pg_upgrade. After this upgrade, we started observing that autovacuum would get in a loop about every 5 seconds for certain tables. This usually happened to be the toast table of the relation. This causes the performance of the table to decrease substantially. A manual VACUUM of the table resolves the issue. Here is an example of what we see in the log:

2020-11-04 16:34:38.131 UTC [892980-1] ERROR: canceling autovacuum task

2020-11-04 16:34:38.131 UTC [892980-2] CONTEXT: automatic vacuum of table «x.pg_toast.pg_toast_981540»

2020-11-04 16:34:41.878 UTC [893355-1] ERROR: canceling autovacuum task

2020-11-04 16:34:41.878 UTC [893355-2] CONTEXT: automatic vacuum of table «x.pg_toast.pg_toast_981540»

2020-11-04 16:34:45.208 UTC [893972-1] ERROR: canceling autovacuum task

2020-11-04 16:34:45.208 UTC [893972-2] CONTEXT: automatic vacuum of table «x.pg_toast.pg_toast_981540»

2020-11-04 16:34:47.635 UTC [894681-1] ERROR: canceling autovacuum task

2020-11-04 16:34:47.635 UTC [894681-2] CONTEXT: automatic vacuum of table «x.pg_toast.pg_toast_981540»

Based upon Googling, we suspect it is the truncation step of autovacuum and its ACCESS EXCLUSIVE lock attempt(s).

Источник

Обсуждение: Timeout error on pgstat

Timeout error on pgstat

I have a lot (maybe 1 every 10 seconds) of this error WARNING: pgstat wait timeout

ERROR: canceling autovacuum task

In the pg_stat_activity show an autovacuum process over a very used table that runs about 1 hour and then this vacuum is cancelled (according to log)

I have Postgres 9.0.3 on a windows 2008 R2 running for about 1 year in same conditions, but this error is occurring about 1 week ago.

Re: Timeout error on pgstat

I have a lot (maybe 1 every 10 seconds) of this error WARNING: pgstat wait timeout

A quick search suggests this can be due to excessive I/O. However, this thread:

sounds very similar to your issue. I’m wondering if there’s a bug lurking in there somewhere.

I have Postgres 9.0.3 on a windows 2008 R2 running for about 1 year in same conditions, but this error is occurring about 1 week ago.

The current 9.0 release is 9.0.8, so you’re missing a bunch of bug fixes.

Consider updating. You don’t need to do a dump and reload or use pg_upgrade, since it’s only a minor version update. Stop the DB, install the new binaries, start the DB.

However, I don’t see any fixes related to the stats writer in the relnotes from the 9.0 series.

Re: Timeout error on pgstat

Re: Timeout error on pgstat

Craig, those lines appear between pgstat timeout

ERROR: canceling autovacuum task

CONTEXT: automatic vacuum of table «XXX»

The table XXX is a table with about 200 insert p/ second. No update or delete.

The problem apparently is just with this table because there are others autovacuum running and working fin over others tables

The only difference is that this table XXX has about 5millions of insert daily and all those 5millons are deleted in the night (cleanup process).

De: Craig Ringer [mailto:]

Enviado el: miércoles, 01 de agosto de 2012 10:01 p.m.

Para: Anibal David Acosta

CC:

Asunto: Re: [ADMIN] Timeout error on pgstat

On 08/02/2012 04:27 AM, Anibal David Acosta wrote:

I have a lot (maybe 1 every 10 seconds) of this error WARNING: pgstat wait timeout

A quick search suggests this can be due to excessive I/O. However, this thread:

sounds very similar to your issue. I’m wondering if there’s a bug lurking in there somewhere.

In the pg_stat_activity show an autovacuum process over a very used table that runs about 1 hour and then this vacuum is cancelled (according to log)

Was there any context to the `cancelling autovacuum task’ message?

I have Postgres 9.0.3 on a windows 2008 R2 running for about 1 year in same conditions, but this error is occurring about 1 week ago.

The current 9.0 release is 9.0.8, so you’re missing a bunch of bug fixes.

Consider updating. You don’t need to do a dump and reload or use pg_upgrade, since it’s only a minor version update. Stop the DB, install the new binaries, start the DB.

However, I don’t see any fixes related to the stats writer in the relnotes from the 9.0 series.

Re: Timeout error on pgstat

Maybe this can contribute…

When I run a query over this table XXX, and immediately try to cancel the query, the cancel never completes.

I found that this situation was fixed in last release (9.0.8)

· Ensure sequential scans check for query cancel reasonably often (Merlin Moncure)

A scan encountering many consecutive pages that contain no live tuples would not respond to interrupts meanwhile

Maybe in the autovacuum happen something similar?

De: Anibal David Acosta [mailto:]

Enviado el: jueves, 02 de agosto de 2012 10:52 a.m.

Para: ‘Craig Ringer’

CC: »

Asunto: RE: [ADMIN] Timeout error on pgstat

Craig, those lines appear between pgstat timeout

ERROR: canceling autovacuum task

CONTEXT: automatic vacuum of table «XXX»

The table XXX is a table with about 200 insert p/ second. No update or delete.

The problem apparently is just with this table because there are others autovacuum running and working fin over others tables

The only difference is that this table XXX has about 5millions of insert daily and all those 5millons are deleted in the night (cleanup process).

De: Craig Ringer [mailto:]

Enviado el: miércoles, 01 de agosto de 2012 10:01 p.m.

Para: Anibal David Acosta

CC:

Asunto: Re: [ADMIN] Timeout error on pgstat

On 08/02/2012 04:27 AM, Anibal David Acosta wrote:

I have a lot (maybe 1 every 10 seconds) of this error WARNING: pgstat wait timeout

A quick search suggests this can be due to excessive I/O. However, this thread:

sounds very similar to your issue. I’m wondering if there’s a bug lurking in there somewhere.

In the pg_stat_activity show an autovacuum process over a very used table that runs about 1 hour and then this vacuum is cancelled (according to log)

Was there any context to the `cancelling autovacuum task’ message?

I have Postgres 9.0.3 on a windows 2008 R2 running for about 1 year in same conditions, but this error is occurring about 1 week ago.

The current 9.0 release is 9.0.8, so you’re missing a bunch of bug fixes.

Consider updating. You don’t need to do a dump and reload or use pg_upgrade, since it’s only a minor version update. Stop the DB, install the new binaries, start the DB.

However, I don’t see any fixes related to the stats writer in the relnotes from the 9.0 series.

Источник

I am trying to restore database in PostgreSQL docker container using pg_restore from a shellscript that will be called from docker file. I’m getting following error «ERROR: canceling autovacuum task

CONTEXT: automatic analyze of table ‘tablename'».

DockerFile:

FROM postgres:9.3

ENV POSTGRES_USER postgres

ENV POSTGRES_PASSWORD Abcd1234

ENV POSTGRES_DB Clarion1

COPY DB.backup /var/lib/postgresql/backup/DB.backup

COPY initialize.sh /docker-entrypoint-initdb.d/initialize.sh

initialize.sh

#!/bin/bash

set -e

set -x

echo "******PostgreSQL initialisation******"

pg_restore -C -d DB /var/lib/postgresql/backup/DB.backup

Log:

server started

CREATE DATABASE

/docker-entrypoint.sh: running /docker-entrypoint-initdb.d/initialize.sh

++ echo '******PostgreSQL initialisation******'

++ pg_restore -C -d Clarion1 /var/lib/postgresql/backup/Clarion53.backup

******PostgreSQL initialisation******

ERROR: canceling autovacuum task

But if I try to restore DB from command prompt in host machine from same backup file , it is working fine.

Uwe Allner

3,3519 gold badges38 silver badges49 bronze badges

asked Sep 1, 2016 at 9:11

1

Here is a way to restore from a file located on the host machine:

docker exec -i container_name pg_restore -U postgres_user -v -d database_name < /dir_backup_outside_container/file_name.tar

answered Sep 17, 2019 at 15:48

2

I don’t think the backup restore can be done during the initialization phase. Start your container and then upload the db.

docker run -d --name mydb mypgimage

docker exec mydb sh -c "pg_restore -C -d DB /var/lib/postgresql/backup/DB.backup"

answered Sep 1, 2016 at 15:10

BernardBernard

15.3k12 gold badges64 silver badges62 bronze badges

Combine the most voted and Heroku’s guide, I came up with this:

docker exec -i mohe-bc_db_1 pg_restore --verbose --clean --no-acl --no-owner -U postgres -d mohe-bc_development < ~/Downloads/de8dc786-b133-4ae2-a040-dcf34f12c3de

mohe-bc_db_1: pg container name showed by docker ps NAMES column

postgres: pg username

mohe-bc_development: db name

~/Downloads/de8dc786-b133-4ae2-a040-dcf34f12c3de: file path of pg db dump

And it works:

pg_restore: connecting to database for restore

pg_restore: dropping CONSTRAINT webhooks webhooks_pkey

pg_restore: dropping CONSTRAINT schema_migrations schema_migrations_pkey

pg_restore: dropping CONSTRAINT ar_internal_metadata ar_internal_metadata_pkey

pg_restore: dropping DEFAULT webhooks id

pg_restore: dropping SEQUENCE webhooks_id_seq

pg_restore: dropping TABLE webhooks

pg_restore: dropping TABLE schema_migrations

pg_restore: dropping TABLE ar_internal_metadata

pg_restore: creating TABLE "public.ar_internal_metadata"

pg_restore: creating TABLE "public.schema_migrations"

pg_restore: creating TABLE "public.webhooks"

pg_restore: creating SEQUENCE "public.webhooks_id_seq"

pg_restore: creating SEQUENCE OWNED BY "public.webhooks_id_seq"

pg_restore: creating DEFAULT "public.webhooks id"

pg_restore: processing data for table "public.ar_internal_metadata"

pg_restore: processing data for table "public.schema_migrations"

pg_restore: processing data for table "public.webhooks"

pg_restore: executing SEQUENCE SET webhooks_id_seq

pg_restore: creating CONSTRAINT "public.ar_internal_metadata ar_internal_metadata_pkey"

pg_restore: creating CONSTRAINT "public.schema_migrations schema_migrations_pkey"

pg_restore: creating CONSTRAINT "public.webhooks webhooks_pkey"

answered Jan 5, 2022 at 6:35

xofredxofred

1241 silver badge6 bronze badges

This one worked for me from a pg_dump -Fc ‘pg_dump_Fc_file’ custom (compressed) database dump:

docker exec -i container_name pg_restore -Fc -U admin_username -d database_name < pg_dump_Fc_file

answered Oct 15, 2021 at 8:21

jufxjufx

1542 silver badges7 bronze badges

(Adding this just for windows users, < is not supported by powerShell). Under powershell:

Get-Content C:pathToDumpFolderMydump.sql | docker exec -i containername psql -U username -v -d dbname

answered Jul 17, 2022 at 16:56

Cetin BasozCetin Basoz

21.2k2 gold badges28 silver badges38 bronze badges

Another variant in case all the shorter ones don’t work:

docker exec -i container_name pg_restore -U db_user --verbose --clean --no-acl --no-owner -h localhost -d db_name < db_backup_file

Also, pay attention to the --format option.

answered Jan 27, 2022 at 9:57

Hi All

I am using a cluster setup having postgres-8.4.0 and slon 2.0.4 is being

used for replication . It happened that the autovacuum was not running

successfully on one of the nodes in cluster and was giving error :

2011-05-13 23:07:42 CDTERROR: canceling autovacuum task

2011-05-13 23:07:42 CDTCONTEXT: automatic vacuum of table

«abc.abc.sometablename»

2011-05-13 23:07:42 CDTERROR: could not open relation with OID 141231 at

character 87

sometimes it was giving a different error as below :

2011-05-13 04:45:05 CDTERROR: canceling autovacuum task

2011-05-13 04:45:05 CDTCONTEXT: automatic analyze of table

«abc.abc.sometablename»

2011-05-13 04:45:05 CDTLOG: could not receive data from client: Connection

reset by peer

2011-05-13 04:45:05 CDTLOG: unexpected EOF on client connection

2011-05-13 04:45:05 CDTERROR: duplicate key value violates unique

constraint «sl_nodelock-pkey»

2011-05-13 04:45:05 CDTSTATEMENT: select «_schemaname».cleanupNodelock();

insert into «_mswcluster».sl_nodelock values ( 2, 0,

«pg_catalog».pg_backend_pid());

Can see the below log also in postgres logs :

«checkpoints are occurring too frequently (19 seconds apart)»

I am not sure when these all errors started coming . Just noticed these

when database size grew huge and it became slow.

Can anybody shed some light on it if these errors are related or what could

be the reason for these errors .

Thanks..

Tamanna

Many people know that explicit table locks with LOCK TABLE are bad style and usually a consequence of bad design. The main reason is that they hamper concurrency and hence performance.

Through a recent support case I learned that there are even worse effects of explicit table locks.

Table locks

Before an SQL statement uses a table, it takes the appropriate table lock. This prevents concurrent use that would conflict with its operation. For example, reading from a table will take a ACCESS SHARE lock which will conflict with the ACCESS EXCLUSIVE lock that TRUNCATE needs.

You can find a description of the individual lock levels in the documentation. There is also the matrix that shows which lock levels conflict with each other.

You don’t have to perform these table locks explicitly, PostgreSQL does it for you automatically.

Explicit table locks with the LOCK TABLE statement

You can also explicitly request locks on a table with the LOCK statement:

LOCK [ TABLE ] [ ONLY ] name [ * ] [, ...] [ IN lockmode MODE ] [ NOWAIT ]

There are some cases where it is useful and indicated to use such an explicit table lock. One example is a bulk update of a table, where you want to avoid deadlocks with other transactions that modify the table at the same time. In that case you would use a SHARE lock on the table that prevents concurrent data modifications:

LOCK atable IN SHARE MODE;

Typical mistakes with LOCK TABLE

Unfortunately, most people don’t think hard enough and just use “LOCK atable” without thinking that the default lock mode is ACCESS EXCLUSIVE, which blocks all concurrent access to the table, even read access. This harms performance more than necessary.

But most of the time, tables are locked because developers don’t know that there are less restrictive ways to achieve what they want:

- You don’t want concurrent transactions to modify a row between the time you read it and the time you update it? Use

SELECT ... FOR UPDATE!

If concurrent modifications are unlikely and you are not sure that you are actually going to modify the row, aREPEATABLE READtransaction may be even better. That means that you have to be ready to retry the operation if theUPDATEfails due to a serialization error. - You want to perform several

SELECTs on the table and want to be sure that nobody modifies the table between your statements? Use a transaction withREPEATABLE READisolation level, so that you see a consistent snapshot of the database! - You want to get a row from a table, process it and then remove it? Use

DELETE ... RETURNING, then the row will be locked immediately! - You want to implement a queue where workers should grab different items and process them? Use

SELECT ... LIMIT 1 FOR UPDATE SKIP LOCKED! - You want to synchronize concurrent processes with database techniques? Use advisory locks!

LOCK TABLE versus autovacuum

It is necessary that autovacuum processes a table from time to time so that

- dead tuples are removed so that the space can be reused

- the free space map and the visibility map of the table are maintained

- table rows get “frozen” (marked as unconditionally all-visible) before the transaction counter wraps around

Now VACUUM requires a SHARE UPDATE EXCLUSIVE lock on the table. This conflicts with the lock levels people typically use to explicitly lock tables, namely SHARED and ACCESS EXCLUSIVE. (As I said, the latter lock is usually used by mistake.)

Now autovacuum is designed to be non-intrusive. If any transaction that that wants to lock a table is blocked by autovacuum, the deadlock detector will cancel the autovacuum process after a second of waiting. You will see this message in the database log:

ERROR: canceling autovacuum task DETAIL: automatic vacuum of table "xyz"

The autovacuum launcher process will soon start another autovacuum worker for this table, so this is normally no big problem. Note that “normal” table modifications like INSERT, UPDATE and DELETE do not require locks that conflict with VACUUM!

How things can go wrong

If you use LOCK on a table frequently, there is a good chance that autovacuum will never be able to successfully process that table. This is because it is designed to run slowly, again in an attempt not to be intrusive.

Then dead tuples won’t get removed, live tuples won’t get frozen, and the table will grow (“get bloated” in PostgreSQL jargon). The bigger the table grows, the less likely it becomes that autoacuum can finish processing it. This can go undetected for a long time unless you monitor the number of dead tuples for each table.

The ugly end

Eventually, though, the sticky brown substance is going to hit the ventilation device. This will happen when there are non-frozen live rows in the table that are older than autovacuum_freeze_max_age. Then PostgreSQL knows that something has to be done to prevent data corruption due to transaction counter wrap-around. It will start autovacuum in “anti-wraparound mode” (you can see that in pg_stat_activity in recent PostgreSQL versions).

Such an anti-wraparound autovacuum will not back down if it blocks other processes. The next LOCK statement will block until autovacuum is done, and if it is an ACCESS EXCLUSIVElock, all other transactions will queue behind it. Processing will come to a sudden stop. Since by now the table is probably bloated out of proportion and autovacuum is slow, this will take a long time.

If you cancel the autovacuum process or restart the database, the autovacuum will just start running again. Even if you disable autovacuum (which is a really bad idea), PostgreSQL will launch the anti-wraparound autovacuum. The only way to resume operation for a while is to increase autovacuum_freeze_max_age, but that will only make things worse eventually: 1 million transactions before the point at which you would suffer data corruption from transaction counter wrap-around, PostgreSQL will shut down and can only be started in single-user mode for a manual VACUUM.

How can I avoid this problem?

First, if you already have the problem, declare downtime, launch an explicit VACUUM (FULL, FREEZE) on the table and wait until it is done.

To avoid the problem:

- Don’t use

LOCKon a routine basis. Once a day for the nightly bulk load is fine, as long as autovacuum has enough time to finish during the day. - Tune autovacuum to run more aggressively and hence faster. This can be done by increasing

autovacuum_vacuum_cost_limitand reducingautovacuum_vacuum_cost_delay. - Use PostgreSQL 9.6 or later. Anti-wraparound autovacuum has been improved in 9.6; it now skips pages with only frozen rows to speed up processing.

I am trying to help a team of junior, senior, principal and chief (mostly JEE) developers to be more data-centric and data-aware. In some cases we look into the data-processing costs, complexity of the algorithms, predictability of the results and statistical robustness of the estimates for query plans. In other cases we blindly believe that use of indexes is always great and scan of the tables is always bad. Sometimes we just opportunistically throw gazillions of insert, update, delete queries into the DB and hope for the best. We run load tests afterwards and we notice that our tables and indexes are bloated beyond imagination, the tables became pretty much unmanageable in size and chaos rules the area.

A good way to proceed is to train and learn complexity classes, understand the costs, have the right attitude. This change is very fruitful but hard and slow. As long as I am breathing, I’ll continue this journey.

For now we are trying to understand why autovacuum for some tables kicks in so seldomly. We’ve got a postgres server (v9.5 I believe) running in azure cloud (test environment). We pay for 10K IOPS, and we use them fully (we write to the DB like hell). In the last 24 hours I see that autovacuum was run only 2 times for two large tables through

select * from pg_stat_all_tables order by last_autovacuum desc

In order to trigger an autovacuum, I created:

create table a(a int)

ALTER TABLE a SET (autovacuum_vacuum_scale_factor = 0.0 );

ALTER TABLE a SET (autovacuum_vacuum_threshold = 10 );

ALTER TABLE a SET (autovacuum_analyze_scale_factor = 0.0 );

ALTER TABLE a SET (autovacuum_analyz_threshold = 10 );

and ran the following two statements multiple times:

delete from a;

insert into a (a) select generate_series(1,10);

This should have triggered an autovacuum on the table, but pg_stat_all_tables has NULL for last_autovacuum column for table a.

We also set log_autovacuum_min_duration to a very low value (like 250ms or even 0), but the only two entries in the logs are:

postgresql-2021-02-18_010000.log:2021-02-18 01:56:29 UTC-602a2e9c.284-LOG: automatic vacuum of table "asc_rs.pg_toast.pg_toast_3760410": index scans: 1

postgresql-2021-02-18_060000.log:2021-02-18 06:35:47 UTC-602a2e9c.284-LOG: automatic vacuum of table "asc_rs.pg_toast.pg_toast_3112937": index scans: 1

Our settings are:

We have a feeling that autovacuum is killed on large tables because of row-locks. Can we log this information in any way? Can we also log (failing) autovacuum attempts? How does postgres decide to start an autovacuum job (or more generally speaking tradeoffs between regular changes in the DB vs. maintenance jobs) on a very high load system? If parameters for kicking off of autovacuum are meat, would it definitely be kicked off or wait until the load of IOs decrease?

EDIT:

We do not see any errors/breaks w.r.t. the autovacuums in the logs (thanks Laurenz Albe)

DETAIL: while scanning block 5756 of relation "laurenz.vacme"