Block Codes

Takahiro Yamada, in Essentials of Error-Control Coding Techniques, 1990

3.5.2 Product and Concatenated Codes

In order to obtain a code that has high error-correction capability and can be decoded with a relatively simple decoder, we often combine two codes. Product codes and concatenated codes are the most basic methods for combining codes.

A product code is encoded as follows. First, arrange the k1k2 message symbols in a k1 × k2 array and encode each column with an (n1, k1) code C1, as shown in Fig. 3.7A. Then we have an n1 × k2 array. Next, encode each row of this array with an (n2, k2) code C2 (Fig. 3.7B). Thus, we have an n1 × n2 array, which is the codeword of the product code. The resulting product code is an (n1n2, k1k2) code. Let d1 and d2 be the minimum distance of C1 and C2, respectively. Then the minimum distance of the product code is d1d2.

Fig. 3.7. Encoding a product code. (A) Encoding with C1; (B) encoding with C2.

For example, if C1 is an (n1, n1 − 1) parity check code and C2 is an (n2, n2 − 1) parity check code, both with minimum distance 2, the resulting product code is an (n1n2,(n1 − 1)(n2 − 1)) code with minimum distance 4.

A concatenated code (Forney, 1966) is encoded as follows. First, arrange the k1k2 binary message symbols in a k1 × k2 array as in encoding a product code. Then regard each row of this array as an element of GF(2k2), so this array is assumed to be a column vector of length k1, over GF(2k2).

Encode this vector with an (n1, k1) code C1 over GF(2k2) as shown in Fig. 3.8A. Next, we regard the resulting codewords of C1 as an n1 × k2 array over GF(2), and we encode each row with an (n2, k2) code C2 over GF(2) (Fig. 3.8B). The resulting n1 × n2 array is the codeword of the concatenated code, which is an (n1n2, k1k2) code over GF(2). Let d1 and d2 be the minimum distance of C1 and C2, respectively. Then the minimum distance of the concatenated code is d1d2.

Fig. 3.8. Encoding of a concatenated code. (A) Encoding with C1; (B) encoding with C2.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780123707208500076

Quantum Error Correction

Ivan Djordjevic, in Quantum Information Processing and Quantum Error Correction, 2012

7.4.1 Quantum Hamming Bound

The quantum Hamming bound for an [N,K] QECC of error correction capability t is given by

(7.52)∑j=0t(Nj)3j2K≤2N,

where K is the information word length, and N is the codeword length. This inequality is quite straightforward to prove. The number of information words can be found as 2K, the number of error locations is N chooses j, and the number of possible errors {X,Y,Z} at every location is 3j. The total number of errors for all codewords that can be corrected cannot be larger than the code space, which is 2N-dimensional. Analogously to classical codes, the QECCs satisfying the Hamming inequality with equality can be called perfect codes. For t = 1, the quantum Hamming bound becomes (1 + 3N)2K ≤ 2N. For an [N,1] quantum code with t = 1, the quantum Hamming bound is simply 2(1 + 3N) ≤ 2N. The smallest possible N to satisfy the Hamming bound is N = 5, which represents the perfect code. This cyclic code has already been introduced in Section 7.2. It is interesting to note that the quantum Hamming bound is identical to the classical Hamming bound for q-ary linear block codes (LBCs) (see Section 6.7.2) by setting q = 4, corresponding to the cardinality of set of errors {I,X,Y,Z}. This is consistent with the connection we established between QECCs and classical codes over GF(4).

The asymptotic quantum Hamming bound can be obtained by letting N → ∞. For very large N, the last term in summation (7.52) dominates and we can write (Nt)3t2K≤2N. By taking the log2( ) from both sides of the inequality we obtain: log2(Nt)+tlog23+K≤N. By using the approximation log2(Nt)≃NH(t/N), where H(p) is the binary entropy function H(p)=−plogp−(1−p)log(1−p), we obtain the following asymptotic quantum Hamming bound:

(7.53)KN≤1−H(t/N)−tNlog23.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780123854919000071

Turbo-Like Codes Constructions

Sergio Benedetto, … Guido Montorsi, in Academic Press Library in Mobile and Wireless Communications, 2014

5.1 Interleaver theory

Interleavers are devices that permute sequences of symbols: they are widely used for improving error correction capabilities of coding schemes over bursty channels [37]. Their basic theory has received relatively limited attention in the past, apart from some classical papers ([38,39]). Since the introduction of turbo codes [1], where interleavers play a fundamental role, researchers have dedicated many efforts to the interleaver design (e.g., [49]). However, the misunderstanding of the basic interleaver theory often causes confusion in turbo code literature.

In this section, interleaver theory is revisited. The intent is twofold: first, to establish a clear mathematical framework which encompasses old definitions and results on causal interleavers. Second, to extend this theory to noncausal interleavers, which can be useful for turbo codes.

We begin by a proper definition of the key quantities that characterize an interleaver, like its minimum/maximum delay, its characteristic latency, and its period. Then, interleaver equivalence and deinterleavers are carefully studied to derive physically realizable interleavers. Connections between interleaver quantities are then explored, especially those concerning latency, a key parameter for applications. Next, the class of convolutional interleavers is considered and block interleavers, which are the basis of most concatenated code schemes, are introduced as a special case. Finally, we describe the classes of interleavers that have been used in practical and standard turbo codes construction.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780123964991000029

Speech Coding and Channel Coding

Vijay K. Garg, in Wireless Communications & Networking, 2007

8.6.1 Reed-Solomon (RS) Codes

RS coding is a type of FEC. It has been widely used because of its relatively large error correction capability when weighed against its minimal added overhead. RS codes are also easily scaled up or down in error correction capability to match the error rates expected in a given system. It provides a robust error control method for many common types of data transfer mediums, particularly those that are one-way or noisy and sure to produce errors.

In block codes a sequence of K information symbols is encoded in a block of N symbols, N > K, to be transmitted over the channel. For a data source that delivers the information bits at the rate B bps, every T seconds the encoder receives a sequence of K = BT bits which defines a message. After K information bits have entered the encoder, the encoder generates a sequence of coded symbols of length N to be transmitted over the channel. In this transmitted sequence or codeword, N must be greater or equal to K in order to guarantee a unique relationship between each codeword and each of the possible 2K messages. Such a code which maps a block of K information symbols into a block of N coded symbols is called an (N,K) block code. The code rate is r = K/N bits/symbol, N is called the block length.

RS codes are an example of a block coding technique. The data stream to be transmitted is broken up into blocks and redundant data is then added to each block. The size of these blocks and the amount of redundant data added to each block is either specified for a particular application or can be user-defined for a closed system. Within these blocks, the data is further subdivided into a number of symbols, which are generally from 6 to 10 bits in size. The redundant data then consists of additional symbols being added to the end of the transmission. The system-level block diagram for an RS codec is shown in Figure 8.5.

Figure 8.5. RS system level block diagram.

The original data, which is a block consisting of N-R symbols, is run through an RS encoder and R check symbols are added to form a code word of length N. Since RS can be done on any message length and can add any number of check symbols, a particular RS code is expressed as RS (N, N-R) code. N is the total number of symbols per code word; R is the number of check symbols per code word, and N-R is the number of actual information symbols per code word.

RS encoding consists of the generation of check symbols from the original data. The process is based upon finite field arithmetic. The variables to generate a particular RS code include field polynomial and generator polynomial starting roots. The field polynomial is used to determine the order of the elements in the finite field.

Another system-level characteristic of RS coding is whether the implementation is systematic or nonsystematic. A systematic implementation produces a code word that contains the unaltered original input data stream in the first R symbols of the code word. In contrast, in a nonsystematic implementation, the input data stream is altered during the encoding process. Most specifications require systematic coding.

The simplified schematic representation for a systematic RS encoder is shown in Figure 8.6. The input data stream is immediately clocked back out of the function into the check symbol generation circuitry. The fact that the input data stream is clocked out immediately without being altered means that the implementation is systematic. A series of finite fields adds and multiplies results in each register containing one check symbol after the entire input stream has been entered. At that point, the output select is switched over to the check symbol registers, and the check symbols are shifted out at the end of the original message.

Figure 8.6. Systematic RS encoder schematic representation.

The size of the encoder is most heavily affected by the number of check symbols required for the target RS code. The total message length, as well as the field polynomial and first root value, do not have any appreciable effect on the device performance.

A typical RS decode algorithm consists of several major blocks. The first of these blocks is the syndrome calculation, where the incoming symbols are divided into the generator polynomial, which is known from the parameters of the decoder. The check symbols, which form the remainder in the encoder section, will cause the syndrome calculation to be zero in the case of no errors. If there are errors, the resulting polynomial is passed to the Euclid algorithm, where the factors of the remainder are found (see Figure 8.7). The result is evaluated for each of the incoming symbols over many iterations, and any errors are found and corrected. The corrected code word is the output from the decoder. If there are more errors in the code word than can be corrected by the RS code used, then the received code word is output with no changes and a flag is set, stating that the error correction has failed for that code word.

Figure 8.7. RS decoder block diagram.

The error correction capability of a given RS code is a function of the number of check bits appended to the message. In general, it may be assumed that correcting an error requires one check symbol to find the location of the error, and a second check symbol to correct the error. In general then, a given RS code can correct R/2 symbol errors, where R is the number of check symbols in the given RS code. Since RS codes are generally described as an RS (N, N-R) value, the number of errors correctable by this code is [N- (N-R)]/2. This error control capability can be enhanced by use of erasures, a technique that helps to determine the location of an error without using one of the check symbols. An RS implementation supporting erasures would then be able to correct up to R errors.

Since RS codes work on symbols (most commonly equal to one 8-bit byte) as opposed to individual data bits, the number of correctable errors refers to symbol errors. This means that a symbol with all of the bits corrupted is no different than a symbol with only one of its bits corrupted, and error control capability refers to the number of corrupted symbols that can be corrected. RS codes are more suitable to correct consecutive bits. RS codes are generally combined with other coding methods such as Viterbi, which is more suited to correcting evenly distributed errors.

The effective throughput of an RS decoder is a combination of the number of clock cycles required to locate and correct errors after the code word has been received and the speed at which the design can be clocked. Knowing the latency and clock speed allows the user to determine how many symbols per second may be processed by the decoder. In the RS code, there are two RS decoder choices: a high-speed decoder and a low-speed decoder. The trade-off is that the low-speed decoder is usually approximately 20% smaller in device utilization. Note that both decoders operate at the same clock rate, but the low-speed decoder has a longer latency period, resulting in a slower effective symbol rate. As the number of check symbols decreases, the complexity of the decoder decreases, resulting in a smaller design and an increase in performance.

In a real-life RS coding implementation, functions that tend to reside on either side of the RS encoder or decoder are often implemented in programmable logic. One function that often resides after an RS encoder is an interleaver. The task of an interleaver is to scramble the symbols in several RS code words before transmission, effectively spreading any burst error that occurs during transmission over several code words. Spreading this burst error over several code words increases the chance of each code word being able to correct all of its induced errors.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780123735805500429

The Fading Channel Problem and Its Impact on Wireless Communication Systems in Uganda

L.L. Kaluuba, … D. Waigumbulizi, in Proceedings from the International Conference on Advances in Engineering and Technology, 2006

6.1 Adaptive Coding

When a link is experiencing fading, the introduction of additional redundant bits to the information bits to improve error correction capabilities (FEC) allows to maintain the nominal BER while leading to a reduction of the required energy per information bit. Adaptive coding consists in implementing variable coding rate in order to match impairments due to propagation conditions. A gain of varying from 2 to 10 dB can be achieved depending on the coding rate. The limitations of this fade mitigation technique are linked to additional bandwidth requirements for FDMA and larger bursts in the same frame for TDMA. Adaptive coding at constant information data rate then translates in a reduction of the total system throughput when various links are experiencing fading simultaneously.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780080453125500686

Introduction

Hideki Imai, in Essentials of Error-Control Coding Techniques, 1990

1.4.1 Applications to Audio Systems

Since the error rate of devices is high and both random and burst errors occur in digital audio systems, large error-correction capability is required. However, since the correlation between adjacent data is relatively high for audio signals, we can estimate the correct value of erroneous data by using the values of the data before and after the erroneous data. Miscorrection by the decoder causing a click noise must be strictly avoided. Therefore, it is desirable to estimate the correct values of data that are likely to be miscorrected as described previously, instead of correcting any errors at the decoder. Doubly coded Reed—Solomon or cyclic codes with interleaving are often used.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780123707208500052

High-level Design Tools for Complex DSP Applications

Yang Sun, … Tai Ly, in DSP for Embedded and Real-Time Systems, 2012

LDPC decoder design example using PICO

Low-density parity-check (LDPC) codes [6] have received tremendous attention in the coding community because of their excellent error correction capability and near-capacity performance. Some randomly constructed LDPC codes, measured in Bit Error Rate (BER), come very close to the Shannon limit for the AWGN channel with iterative decoding and very long block sizes (on the order of 106 to 107). The remarkable error correction capabilities of LDPC codes have led to their recent adoption in many standards, such as IEEE 802.11n, IEEE 802.16e, and IEEE 802.15.3c.

As wireless standards are rapidly changing and different wireless standards employ different types of LDPC codes, it is very important to design a flexible and scalable LDPC decoder that can be tailored to different wireless applications. In this section, we will explore the design space of efficient implementations of LDPC decoders using the PICO high level synthesis methodology. Under the guidance of the designers, PICO can effectively exploit the parallelism of a given algorithm, and then create an area-time-power efficient hardware architecture for the algorithm. We will present a partial-parallel LDPC decoder implementation using PICO.

A binary LDPC code is a linear block code specified by a very sparse binary M by N parity check matrix:

H·xT = 0,

where x is a codeword and H can be viewed as a bipartite graph where each column and row in H represents a variable node and a check node, respectively. Each element of the parity check matrix is either a zero or a one, where nonzero entries are typically placed at random to achieve good performance. During the encoding process, N-K redundant bits are added to the K information bits to create a codeword length of N bits. The code rate is the ratio of the information bits to the total bits in a codeword. LDPC codes are often represented by a bi-partite graph called a Tanner graph. There are two types of nodes in a Tanner graph, variable nodes and check nodes. A variable node corresponds to a coded bit or a column of the parity check matrix, and a check node corresponds to a parity check equation or a row of the parity check matrix. There is an edge between each pair of nodes if there is a one in the corresponding parity check matrix entry. The number of nonzero elements in each row or column of a parity check matrix is called the degree of that node. An LDPC code is regular or irregular based on the node degrees. If variable or check nodes have different degrees, then the LDPC code is called irregular, otherwise, it is called regular. Generally, irregular codes have better performance than regular codes. On the other hand, irregularity of the code will result in more complex hardware architecture.

Non-zero elements in H are typically placed at random positions to achieve good coding performance. However, this randomness is unfavorable for efficient VLSI implementation that calls for structured design. To address this issue, block-structured quasi-cyclic LDPC codes are recently proposed for several new communication standards such as IEEE 802.11n, IEEE 802.16e, and DVB-S2. As shown in Figure 8-6, the parity check matrix can be viewed as a 2-D array of square sub matrices. Each sub matrix is either a zero matrix or a cyclically shifted identity matrix Ix. Generally, the block-structured parity check matrix H consists of a j-by-k array of z-by-z cyclically shifted identity matrices with random shift values x (0 = < x < = z).

Figure 8-6. A block structured parity check matrix with block rows (or layers) j = 4 and block columns k = 8, where the sub-matrix size is z-by-z.

A good tradeoff between design complexity and decoding throughput is partially parallel decoding by grouping a certain number of variable and check nodes into a cluster for parallel processing. Furthermore, the layered decoding algorithm [7] can be applied to improve the decoding convergence time by a factor of two and hence increases the throughput by two times.

In a block-structured parity-check matrix, which is a j by k array of z by z sub-matrices, each sub-matrix is either a zero or a shifted identity matrix with random shift value. In every layer, each column has at most one 1, which satisfies that there are no data dependencies between the variable node messages, so that the messages flow in tandem only between the adjacent layers. The block size z is variable corresponding to the code definition in the standards.

To simplify the hardware implementation, the scaled min-sum algorithm [8] is used. This algorithm is summarized as follows. Let Qmn denote the variable node log likelihood ratio (LLR) message sent from variable node n to the check node m, Rmn denote the check node LLR message sent from the check node m to the variable node n, and APPn denote the a posteriori probability ratio (APP) for variable node n, then:

Qmn=APPn−RmnRmn′=s×∏j:j≠nsign(Qmj)×(minj:j≠n|Qmj|)APPn′=Qmn+Rmn

where s is a scaling factor. The APP messages are initialized with the channel reliability values of the coded bits.

Hard decisions can be made after every horizontal layer based on the sign of APPn. If all parity-check equations are satisfied or the pre-determined maximum number of iterations is reached, then the decoding algorithm stops. Otherwise, the algorithm repeats for the next horizontal layer.

To implement this algorithm in hardware, we use a block-serial decoding method [9]: data in each layer is processed block-column by block-column. The decoder first reads APP and R messages from memory, calculates Q, and then finds the minimum and the second minimum values for each row m over all column n. Then, the decoder computes the new R and APP values based on the two minimum values, and writes the new R and APP values back to memory. The algorithm is coded in an un-timed C code. A section of the C code is shown in Figure 8-7, which depicts the PPA architecture generated by the PICO C compiler. The parallelism of this architecture is at the level of the sub-matrix size z. Note that the ‘pragma unroll’ statement in the C code will be used by the PICO C compiler to determine the parallelism level. Multiple instances of the decoding cores are generated by the PICO C compiler to achieve a large decoder parallelism.

Figure 8-7. Pipelined LDPC decoder architecture generated by PICO.

As a case study, a flexible LDPC decoder which fully supports the IEEE 802.16e standard was described in an un-timed C procedure, and then the PICO software was used to create synthesizable RTLs. The generated RTLs were synthesized using Synopsys Design Compiler, and placed & routed using Cadence SoC Encounter on a TSMC 65nm 0.9V 8-metal layer CMOS technology. Table 8-1 summarizes the main features of this decoder.

Table 8-1. ASIC synthesis result.

| Core area | 1.2 mm2 |

| Clock frequency | 400 MHz |

| Power consumption | 180 mW |

| Maximum throughput | 415 Mbps |

| Maximum latency | 2.8 μs |

Compared to the manual RTL designs [10, 11] which usually took 6 months to finish, the C based design using PICO technology only took 2 weeks to complete, and is able to achieve high performance in terms of area, power, and throughput. The area overhead is about 15% compared to the manual LDPC decoders [10, 11] that we have implemented before at Rice University.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780123865359000081

Design Technique for an Error-Control Scheme

Tohru Inoue, in Essentials of Error-Control Coding Techniques, 1990

Publisher Summary

This chapter presents design technique for an error-control scheme. To implement actual encoders and decoders for error-correcting codes, it is necessary first to know the specifications required and then to take advantage of the characteristics of the channel. There is a trade-off between error-correction capability and redundancy required, concerning the amount of encoding and decoding hardware, which is also related to the code rate. Errors are classified into two types: (1) random errors and (2) burst errors. In addition to occurring individually, errors can exist as the mixed mode of both types. The chapter describes the method to obtain performance for various important and widely used codes. Cyclic redundancy check is a widely known typical error-detecting code. The decoding procedure for binary Bose, Chaudhuri and Hocquenghem (BCH) codes consists of three steps: (1) calculation of syndromes, (2) calculation of error-locator polynomial using syndromes, and (3) calculation of error locations induced by the error-locator polynomial. BCH decoders, correcting two or three errors, are usually designed to determine the locations of errors directly from the syndrome.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B978012370720850009X

Video Transmission over Networks

John W. Woods, in Multidimensional Signal, Image, and Video Processing and Coding (Second Edition), 2012

Forward Error Control Coding

The FEC technique is used in many communication systems. In the simplest case, it consists of a block coding wherein a number n − k of parity bits are added to k binary information bits to create a binary channel codeword of length n. The Hamming codes are examples of binary linear codes, where the codewords exist in n-dimensional binary space. Each code is characterized by its minimum Hamming distance dmin, defined as the minimum number of bit differences between two different codewords. Thus, it takes dmin bit errors to change one codeword into another. So the error detection capability of a code is dmin−1, and the error correction capability of a code is ⌊dmin/2⌋, where ⌊⋅⌋ is the least integer function. This last is so because if fewer than ⌊dmin/2⌋ errors occur, the received string is still closer (in Hamming distance) to its error-free version than to any other codeword. Reed-Solomon (RS) codes are also linear, but operate on symbols in a so-called Galois field with 2l elements. Codewords, parity words, and minimal distance are all computed using the arithmetic of this field. An example is l = 4, which corresponds to hexadecimal arithmetic with 16 symbols. The (n, k) = (15,9) RS code has hexadecimal symbols and can correct 3 symbol errors. It codes 9 hexadecimal information symbols (36 bits) into 15 symbol codewords (60 bits) [16]. The RS codes are perfect codes, meaning that the minimum distance between codewords attains the maximum value dmin = n − k + 1 [17]. Thus an (n, k) RS code can detect up to n − k symbol errors. The RS codes are very good for bursts of errors since a short symbol error burst translates into an l times longer binary error burst, when the symbols are written in terms of their l–bit binary code [18]. These RS codes are used in the CD and DVD standards to correct error bursts on decoding.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780123814203000138

Network coding and its applications to satellite systems

Fausto Vieira, Daniel E. Lucani, in Cooperative and Cognitive Satellite Systems, 2015

9.3.5 Overview of broadband multibeam satellites scenarios

In the first scenario, network coding is proposed for providing efficient soft-handover capabilities to terminals over a broadband multibeam satellite networks. The focus is unicast traffic on the forward link. It is assumed that terminals are capable of multibeam reception and that are mounted in high speed platforms such as trains and planes, where handover between (narrow) beams is a common occurrence within the duration of the trip. The network coding gains can be measured in terms of delay gain in recovering from losses, where initial results point to gains up to 70% when comparing with Reed-Solomon codes. Note that this is achieved by exploiting the multipath and FEC capabilities of network coding algorithms, and therefore the algorithms do not employ feedback mechanisms on the return link. Future work would have to focus not only of the optimization of the algorithms for generating the coded packets, but also the cross-layer design required to seamlessly integrate the network coding with the soft-handover management at the lower layers.

In the second scenario, network coding is proposed for broadband multibeam satellite and terminals capable of multibeam reception. The focus is also unicast traffic on the forward link, over multiple beams and the network coding algorithms do not employ feedback mechanisms on the return link. It is assumed that a terminal covered by multiple beams may have different packet loss probabilities per beam. The network coding gains would be in compensating the different packet loss probabilities by providing unequal levels of FEC protection over different beams. Future work would have to analyze if there are efficiencies in not providing the same packet loss probabilities over multiple beams but rather different levels of network coding resilience. Furthermore, the network coding algorithms and the cross-layer design would also need to be explored in detail.

In the third scenario, network coding is proposed for providing beam level load balancing mechanisms for broadband multibeam satellite networks. It is assumed that terminals are capable of multibeam reception and that many are covered by multiple beams. The network coding gains are expected to be around 50% in terms of the aggregate data rates that can be provided over a satellite with a conventional payload with realistic asymmetric demand patterns per beam. Future work would have to focus on the optimization of the network coding algorithms, the cross-layer design for integrating the multibeam reception, the resource allocation mechanisms, and the deployment strategies for terminals with multibeam reception capabilities.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780127999487000098

- error-correcting capability

-

- возможность исправления ошибок

возможность исправления ошибок

исправляющая способность

—

[Л.Г.Суменко. Англо-русский словарь по информационным технологиям. М.: ГП ЦНИИС, 2003.]Тематики

- информационные технологии в целом

Синонимы

- исправляющая способность

EN

- error-correcting capability

Англо-русский словарь нормативно-технической терминологии.

.

2015.

Смотреть что такое «error-correcting capability» в других словарях:

-

Time-Limited Error Recovery — (TLER) is a name used by Western Digital for a hard drive feature that allows improved error handling in a RAID environment. In some cases, there is a conflict whether error handling should be undertaken by the hard drive or by the RAID… … Wikipedia

-

Advanced Audio Coding — AAC redirects here. For other uses, see AAC (disambiguation). Advanced Audio Codings iTunes standard AAC file icon Filename extension .m4a, .m4b, .m4p, .m4v, .m4r, .3gp, .mp4, .aac Internet media type audio/aac, audio/aacp, au … Wikipedia

-

Корректирующая способность — (англ. error correcting capability) характеристика кода , описывающая возможность исправить ошибки в кодовых словах. Определяется как целое число, меньшее половины от минимального расстояния между кодовыми словами минус один в принятой… … Википедия

-

возможность исправления ошибок — исправляющая способность — [Л.Г.Суменко. Англо русский словарь по информационным технологиям. М.: ГП ЦНИИС, 2003.] Тематики информационные технологии в целом Синонимы исправляющая способность EN error correcting capability … Справочник технического переводчика

-

information theory — the mathematical theory concerned with the content, transmission, storage, and retrieval of information, usually in the form of messages or data, and esp. by means of computers. [1945 50] * * * ▪ mathematics Introduction a mathematical… … Universalium

-

Mathematics and Physical Sciences — ▪ 2003 Introduction Mathematics Mathematics in 2002 was marked by two discoveries in number theory. The first may have practical implications; the second satisfied a 150 year old curiosity. Computer scientist Manindra Agrawal of the… … Universalium

-

Abkürzungen/Computer — Dies ist eine Liste technischer Abkürzungen, die im IT Bereich verwendet werden. A [nach oben] AA Antialiasing AAA authentication, authorization and accounting, siehe Triple A System AAC Advanced Audio Coding AACS … Deutsch Wikipedia

-

Liste der Abkürzungen (Computer) — Dies ist eine Liste technischer Abkürzungen, die im IT Bereich verwendet werden. A [nach oben] AA Antialiasing AAA authentication, authorization and accounting, siehe Triple A System AAC Advanced Audio Coding AACS … Deutsch Wikipedia

-

Packet (information technology) — In information technology, a packet is a formatted unit of data carried by a packet mode computer network. Computer communications links that do not support packets, such as traditional point to point telecommunications links, simply transmit… … Wikipedia

-

Network packet — In computer networking, a packet is a formatted unit of data carried by a packet mode computer network. Computer communications links that do not support packets, such as traditional point to point telecommunications links, simply transmit data… … Wikipedia

-

Convolutional code — In telecommunication, a convolutional code is a type of error correcting code in which each m bit information symbol (each m bit string) to be encoded is transformed into an n bit symbol, where m/n is the code rate (n ≥ m) and the transformation… … Wikipedia

«Interleaver» redirects here. For the fiber-optic device, see optical interleaver.

In computing, telecommunication, information theory, and coding theory, forward error correction (FEC) or channel coding[1][2][3] is a technique used for controlling errors in data transmission over unreliable or noisy communication channels.

The central idea is that the sender encodes the message in a redundant way, most often by using an error correction code or error correcting code, (ECC).[4][5] The redundancy allows the receiver not only to detect errors that may occur anywhere in the message, but often to correct a limited number of errors. Therefore a reverse channel to request re-transmission may not be needed. The cost is a fixed, higher forward channel bandwidth.

The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code.[5]

FEC can be applied in situations where re-transmissions are costly or impossible, such as one-way communication links or when transmitting to multiple receivers in multicast.

Long-latency connections also benefit; in the case of a satellite orbiting Uranus, retransmission due to errors can create a delay of five hours. FEC is widely used in modems and in cellular networks, as well.

FEC processing in a receiver may be applied to a digital bit stream or in the demodulation of a digitally modulated carrier. For the latter, FEC is an integral part of the initial analog-to-digital conversion in the receiver. The Viterbi decoder implements a soft-decision algorithm to demodulate digital data from an analog signal corrupted by noise. Many FEC decoders can also generate a bit-error rate (BER) signal which can be used as feedback to fine-tune the analog receiving electronics.

FEC information is added to mass storage (magnetic, optical and solid state/flash based) devices to enable recovery of corrupted data, and is used as ECC computer memory on systems that require special provisions for reliability.

The maximum proportion of errors or missing bits that can be corrected is determined by the design of the ECC, so different forward error correcting codes are suitable for different conditions. In general, a stronger code induces more redundancy that needs to be transmitted using the available bandwidth, which reduces the effective bit-rate while improving the received effective signal-to-noise ratio. The noisy-channel coding theorem of Claude Shannon can be used to compute the maximum achievable communication bandwidth for a given maximum acceptable error probability. This establishes bounds on the theoretical maximum information transfer rate of a channel with some given base noise level. However, the proof is not constructive, and hence gives no insight of how to build a capacity achieving code. After years of research, some advanced FEC systems like polar code[3] come very close to the theoretical maximum given by the Shannon channel capacity under the hypothesis of an infinite length frame.

How it works[edit]

ECC is accomplished by adding redundancy to the transmitted information using an algorithm. A redundant bit may be a complex function of many original information bits. The original information may or may not appear literally in the encoded output; codes that include the unmodified input in the output are systematic, while those that do not are non-systematic.

A simplistic example of ECC is to transmit each data bit 3 times, which is known as a (3,1) repetition code. Through a noisy channel, a receiver might see 8 versions of the output, see table below.

| Triplet received | Interpreted as |

|---|---|

| 000 | 0 (error-free) |

| 001 | 0 |

| 010 | 0 |

| 100 | 0 |

| 111 | 1 (error-free) |

| 110 | 1 |

| 101 | 1 |

| 011 | 1 |

This allows an error in any one of the three samples to be corrected by «majority vote», or «democratic voting». The correcting ability of this ECC is:

- Up to 1 bit of triplet in error, or

- up to 2 bits of triplet omitted (cases not shown in table).

Though simple to implement and widely used, this triple modular redundancy is a relatively inefficient ECC. Better ECC codes typically examine the last several tens or even the last several hundreds of previously received bits to determine how to decode the current small handful of bits (typically in groups of 2 to 8 bits).

Averaging noise to reduce errors[edit]

ECC could be said to work by «averaging noise»; since each data bit affects many transmitted symbols, the corruption of some symbols by noise usually allows the original user data to be extracted from the other, uncorrupted received symbols that also depend on the same user data.

- Because of this «risk-pooling» effect, digital communication systems that use ECC tend to work well above a certain minimum signal-to-noise ratio and not at all below it.

- This all-or-nothing tendency – the cliff effect – becomes more pronounced as stronger codes are used that more closely approach the theoretical Shannon limit.

- Interleaving ECC coded data can reduce the all or nothing properties of transmitted ECC codes when the channel errors tend to occur in bursts. However, this method has limits; it is best used on narrowband data.

Most telecommunication systems use a fixed channel code designed to tolerate the expected worst-case bit error rate, and then fail to work at all if the bit error rate is ever worse.

However, some systems adapt to the given channel error conditions: some instances of hybrid automatic repeat-request use a fixed ECC method as long as the ECC can handle the error rate, then switch to ARQ when the error rate gets too high;

adaptive modulation and coding uses a variety of ECC rates, adding more error-correction bits per packet when there are higher error rates in the channel, or taking them out when they are not needed.

Types of ECC[edit]

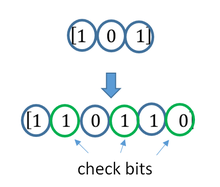

A block code (specifically a Hamming code) where redundant bits are added as a block to the end of the initial message

A continuous code convolutional code where redundant bits are added continuously into the structure of the code word

The two main categories of ECC codes are block codes and convolutional codes.

- Block codes work on fixed-size blocks (packets) of bits or symbols of predetermined size. Practical block codes can generally be hard-decoded in polynomial time to their block length.

- Convolutional codes work on bit or symbol streams of arbitrary length. They are most often soft decoded with the Viterbi algorithm, though other algorithms are sometimes used. Viterbi decoding allows asymptotically optimal decoding efficiency with increasing constraint length of the convolutional code, but at the expense of exponentially increasing complexity. A convolutional code that is terminated is also a ‘block code’ in that it encodes a block of input data, but the block size of a convolutional code is generally arbitrary, while block codes have a fixed size dictated by their algebraic characteristics. Types of termination for convolutional codes include «tail-biting» and «bit-flushing».

There are many types of block codes; Reed–Solomon coding is noteworthy for its widespread use in compact discs, DVDs, and hard disk drives. Other examples of classical block codes include Golay, BCH, Multidimensional parity, and Hamming codes.

Hamming ECC is commonly used to correct NAND flash memory errors.[6]

This provides single-bit error correction and 2-bit error detection.

Hamming codes are only suitable for more reliable single-level cell (SLC) NAND.

Denser multi-level cell (MLC) NAND may use multi-bit correcting ECC such as BCH or Reed–Solomon.[7][8] NOR Flash typically does not use any error correction.[7]

Classical block codes are usually decoded using hard-decision algorithms,[9] which means that for every input and output signal a hard decision is made whether it corresponds to a one or a zero bit. In contrast, convolutional codes are typically decoded using soft-decision algorithms like the Viterbi, MAP or BCJR algorithms, which process (discretized) analog signals, and which allow for much higher error-correction performance than hard-decision decoding.

Nearly all classical block codes apply the algebraic properties of finite fields. Hence classical block codes are often referred to as algebraic codes.

In contrast to classical block codes that often specify an error-detecting or error-correcting ability, many modern block codes such as LDPC codes lack such guarantees. Instead, modern codes are evaluated in terms of their bit error rates.

Most forward error correction codes correct only bit-flips, but not bit-insertions or bit-deletions.

In this setting, the Hamming distance is the appropriate way to measure the bit error rate.

A few forward error correction codes are designed to correct bit-insertions and bit-deletions, such as Marker Codes and Watermark Codes.

The Levenshtein distance is a more appropriate way to measure the bit error rate when using such codes.

[10]

Code-rate and the tradeoff between reliability and data rate[edit]

The fundamental principle of ECC is to add redundant bits in order to help the decoder to find out the true message that was encoded by the transmitter. The code-rate of a given ECC system is defined as the ratio between the number of information bits and the total number of bits (i.e., information plus redundancy bits) in a given communication package. The code-rate is hence a real number. A low code-rate close to zero implies a strong code that uses many redundant bits to achieve a good performance, while a large code-rate close to 1 implies a weak code.

The redundant bits that protect the information have to be transferred using the same communication resources that they are trying to protect. This causes a fundamental tradeoff between reliability and data rate.[11] In one extreme, a strong code (with low code-rate) can induce an important increase in the receiver SNR (signal-to-noise-ratio) decreasing the bit error rate, at the cost of reducing the effective data rate. On the other extreme, not using any ECC (i.e., a code-rate equal to 1) uses the full channel for information transfer purposes, at the cost of leaving the bits without any additional protection.

One interesting question is the following: how efficient in terms of information transfer can an ECC be that has a negligible decoding error rate? This question was answered by Claude Shannon with his second theorem, which says that the channel capacity is the maximum bit rate achievable by any ECC whose error rate tends to zero:[12] His proof relies on Gaussian random coding, which is not suitable to real-world applications. The upper bound given by Shannon’s work inspired a long journey in designing ECCs that can come close to the ultimate performance boundary. Various codes today can attain almost the Shannon limit. However, capacity achieving ECCs are usually extremely complex to implement.

The most popular ECCs have a trade-off between performance and computational complexity. Usually, their parameters give a range of possible code rates, which can be optimized depending on the scenario. Usually, this optimization is done in order to achieve a low decoding error probability while minimizing the impact to the data rate. Another criterion for optimizing the code rate is to balance low error rate and retransmissions number in order to the energy cost of the communication.[13]

Concatenated ECC codes for improved performance[edit]

Classical (algebraic) block codes and convolutional codes are frequently combined in concatenated coding schemes in which a short constraint-length Viterbi-decoded convolutional code does most of the work and a block code (usually Reed–Solomon) with larger symbol size and block length «mops up» any errors made by the convolutional decoder. Single pass decoding with this family of error correction codes can yield very low error rates, but for long range transmission conditions (like deep space) iterative decoding is recommended.

Concatenated codes have been standard practice in satellite and deep space communications since Voyager 2 first used the technique in its 1986 encounter with Uranus. The Galileo craft used iterative concatenated codes to compensate for the very high error rate conditions caused by having a failed antenna.

Low-density parity-check (LDPC)[edit]

Low-density parity-check (LDPC) codes are a class of highly efficient linear block

codes made from many single parity check (SPC) codes. They can provide performance very close to the channel capacity (the theoretical maximum) using an iterated soft-decision decoding approach, at linear time complexity in terms of their block length. Practical implementations rely heavily on decoding the constituent SPC codes in parallel.

LDPC codes were first introduced by Robert G. Gallager in his PhD thesis in 1960,

but due to the computational effort in implementing encoder and decoder and the introduction of Reed–Solomon codes,

they were mostly ignored until the 1990s.

LDPC codes are now used in many recent high-speed communication standards, such as DVB-S2 (Digital Video Broadcasting – Satellite – Second Generation), WiMAX (IEEE 802.16e standard for microwave communications), High-Speed Wireless LAN (IEEE 802.11n),[14] 10GBase-T Ethernet (802.3an) and G.hn/G.9960 (ITU-T Standard for networking over power lines, phone lines and coaxial cable). Other LDPC codes are standardized for wireless communication standards within 3GPP MBMS (see fountain codes).

Turbo codes[edit]

Turbo coding is an iterated soft-decoding scheme that combines two or more relatively simple convolutional codes and an interleaver to produce a block code that can perform to within a fraction of a decibel of the Shannon limit. Predating LDPC codes in terms of practical application, they now provide similar performance.

One of the earliest commercial applications of turbo coding was the CDMA2000 1x (TIA IS-2000) digital cellular technology developed by Qualcomm and sold by Verizon Wireless, Sprint, and other carriers. It is also used for the evolution of CDMA2000 1x specifically for Internet access, 1xEV-DO (TIA IS-856). Like 1x, EV-DO was developed by Qualcomm, and is sold by Verizon Wireless, Sprint, and other carriers (Verizon’s marketing name for 1xEV-DO is Broadband Access, Sprint’s consumer and business marketing names for 1xEV-DO are Power Vision and Mobile Broadband, respectively).

Local decoding and testing of codes[edit]

Sometimes it is only necessary to decode single bits of the message, or to check whether a given signal is a codeword, and do so without looking at the entire signal. This can make sense in a streaming setting, where codewords are too large to be classically decoded fast enough and where only a few bits of the message are of interest for now. Also such codes have become an important tool in computational complexity theory, e.g., for the design of probabilistically checkable proofs.

Locally decodable codes are error-correcting codes for which single bits of the message can be probabilistically recovered by only looking at a small (say constant) number of positions of a codeword, even after the codeword has been corrupted at some constant fraction of positions. Locally testable codes are error-correcting codes for which it can be checked probabilistically whether a signal is close to a codeword by only looking at a small number of positions of the signal.

Interleaving[edit]

«Interleaver» redirects here. For the fiber-optic device, see optical interleaver.

A short illustration of interleaving idea

Interleaving is frequently used in digital communication and storage systems to improve the performance of forward error correcting codes. Many communication channels are not memoryless: errors typically occur in bursts rather than independently. If the number of errors within a code word exceeds the error-correcting code’s capability, it fails to recover the original code word. Interleaving alleviates this problem by shuffling source symbols across several code words, thereby creating a more uniform distribution of errors.[15] Therefore, interleaving is widely used for burst error-correction.

The analysis of modern iterated codes, like turbo codes and LDPC codes, typically assumes an independent distribution of errors.[16] Systems using LDPC codes therefore typically employ additional interleaving across the symbols within a code word.[17]

For turbo codes, an interleaver is an integral component and its proper design is crucial for good performance.[15][18] The iterative decoding algorithm works best when there are not short cycles in the factor graph that represents the decoder; the interleaver is chosen to avoid short cycles.

Interleaver designs include:

- rectangular (or uniform) interleavers (similar to the method using skip factors described above)

- convolutional interleavers

- random interleavers (where the interleaver is a known random permutation)

- S-random interleaver (where the interleaver is a known random permutation with the constraint that no input symbols within distance S appear within a distance of S in the output).[19]

- a contention-free quadratic permutation polynomial (QPP).[20] An example of use is in the 3GPP Long Term Evolution mobile telecommunication standard.[21]

In multi-carrier communication systems, interleaving across carriers may be employed to provide frequency diversity, e.g., to mitigate frequency-selective fading or narrowband interference.[22]

Example[edit]

Transmission without interleaving:

Error-free message: aaaabbbbccccddddeeeeffffgggg Transmission with a burst error: aaaabbbbccc____deeeeffffgggg

Here, each group of the same letter represents a 4-bit one-bit error-correcting codeword. The codeword cccc is altered in one bit and can be corrected, but the codeword dddd is altered in three bits, so either it cannot be decoded at all or it might be decoded incorrectly.

With interleaving:

Error-free code words: aaaabbbbccccddddeeeeffffgggg Interleaved: abcdefgabcdefgabcdefgabcdefg Transmission with a burst error: abcdefgabcd____bcdefgabcdefg Received code words after deinterleaving: aa_abbbbccccdddde_eef_ffg_gg

In each of the codewords «aaaa», «eeee», «ffff», and «gggg», only one bit is altered, so one-bit error-correcting code will decode everything correctly.

Transmission without interleaving:

Original transmitted sentence: ThisIsAnExampleOfInterleaving Received sentence with a burst error: ThisIs______pleOfInterleaving

The term «AnExample» ends up mostly unintelligible and difficult to correct.

With interleaving:

Transmitted sentence: ThisIsAnExampleOfInterleaving... Error-free transmission: TIEpfeaghsxlIrv.iAaenli.snmOten. Received sentence with a burst error: TIEpfe______Irv.iAaenli.snmOten. Received sentence after deinterleaving: T_isI_AnE_amp_eOfInterle_vin_...

No word is completely lost and the missing letters can be recovered with minimal guesswork.

Disadvantages of interleaving[edit]

Use of interleaving techniques increases total delay. This is because the entire interleaved block must be received before the packets can be decoded.[23] Also interleavers hide the structure of errors; without an interleaver, more advanced decoding algorithms can take advantage of the error structure and achieve more reliable communication than a simpler decoder combined with an interleaver[citation needed]. An example of such an algorithm is based on neural network[24] structures.

Software for error-correcting codes[edit]

Simulating the behaviour of error-correcting codes (ECCs) in software is a common practice to design, validate and improve ECCs. The upcoming wireless 5G standard raises a new range of applications for the software ECCs: the Cloud Radio Access Networks (C-RAN) in a Software-defined radio (SDR) context. The idea is to directly use software ECCs in the communications. For instance in the 5G, the software ECCs could be located in the cloud and the antennas connected to this computing resources: improving this way the flexibility of the communication network and eventually increasing the energy efficiency of the system.

In this context, there are various available Open-source software listed below (non exhaustive).

- AFF3CT(A Fast Forward Error Correction Toolbox): a full communication chain in C++ (many supported codes like Turbo, LDPC, Polar codes, etc.), very fast and specialized on channel coding (can be used as a program for simulations or as a library for the SDR).

- IT++: a C++ library of classes and functions for linear algebra, numerical optimization, signal processing, communications, and statistics.

- OpenAir: implementation (in C) of the 3GPP specifications concerning the Evolved Packet Core Networks.

List of error-correcting codes[edit]

| Distance | Code |

|---|---|

| 2 (single-error detecting) | Parity |

| 3 (single-error correcting) | Triple modular redundancy |

| 3 (single-error correcting) | perfect Hamming such as Hamming(7,4) |

| 4 (SECDED) | Extended Hamming |

| 5 (double-error correcting) | |

| 6 (double-error correct-/triple error detect) | Nordstrom-Robinson code |

| 7 (three-error correcting) | perfect binary Golay code |

| 8 (TECFED) | extended binary Golay code |

- AN codes

- BCH code, which can be designed to correct any arbitrary number of errors per code block.

- Barker code used for radar, telemetry, ultra sound, Wifi, DSSS mobile phone networks, GPS etc.

- Berger code

- Constant-weight code

- Convolutional code

- Expander codes

- Group codes

- Golay codes, of which the Binary Golay code is of practical interest

- Goppa code, used in the McEliece cryptosystem

- Hadamard code

- Hagelbarger code

- Hamming code

- Latin square based code for non-white noise (prevalent for example in broadband over powerlines)

- Lexicographic code

- Linear Network Coding, a type of erasure correcting code across networks instead of point-to-point links

- Long code

- Low-density parity-check code, also known as Gallager code, as the archetype for sparse graph codes

- LT code, which is a near-optimal rateless erasure correcting code (Fountain code)

- m of n codes

- Nordstrom-Robinson code, used in Geometry and Group Theory[25]

- Online code, a near-optimal rateless erasure correcting code

- Polar code (coding theory)

- Raptor code, a near-optimal rateless erasure correcting code

- Reed–Solomon error correction

- Reed–Muller code

- Repeat-accumulate code

- Repetition codes, such as Triple modular redundancy

- Spinal code, a rateless, nonlinear code based on pseudo-random hash functions[26]

- Tornado code, a near-optimal erasure correcting code, and the precursor to Fountain codes

- Turbo code

- Walsh–Hadamard code

- Cyclic redundancy checks (CRCs) can correct 1-bit errors for messages at most

bits long for optimal generator polynomials of degree

, see Mathematics of cyclic redundancy checks#Bitfilters

See also[edit]

- Code rate

- Erasure codes

- Soft-decision decoder

- Burst error-correcting code

- Error detection and correction

- Error-correcting codes with feedback

References[edit]

- ^ Charles Wang; Dean Sklar; Diana Johnson (Winter 2001–2002). «Forward Error-Correction Coding». Crosslink. The Aerospace Corporation. 3 (1). Archived from the original on 14 March 2012. Retrieved 5 March 2006.

- ^ Charles Wang; Dean Sklar; Diana Johnson (Winter 2001–2002). «Forward Error-Correction Coding». Crosslink. The Aerospace Corporation. 3 (1). Archived from the original on 14 March 2012. Retrieved 5 March 2006.

How Forward Error-Correcting Codes Work]

- ^ a b Maunder, Robert (2016). «Overview of Channel Coding».

- ^ Glover, Neal; Dudley, Trent (1990). Practical Error Correction Design For Engineers (Revision 1.1, 2nd ed.). CO, USA: Cirrus Logic. ISBN 0-927239-00-0.

- ^ a b Hamming, Richard Wesley (April 1950). «Error Detecting and Error Correcting Codes». Bell System Technical Journal. USA: AT&T. 29 (2): 147–160. doi:10.1002/j.1538-7305.1950.tb00463.x. S2CID 61141773.

- ^ «Hamming codes for NAND flash memory devices» Archived 21 August 2016 at the Wayback Machine. EE Times-Asia. Apparently based on «Micron Technical Note TN-29-08: Hamming Codes for NAND Flash Memory Devices». 2005. Both say: «The Hamming algorithm is an industry-accepted method for error detection and correction in many SLC NAND flash-based applications.»

- ^ a b «What Types of ECC Should Be Used on Flash Memory?» (Application note). Spansion. 2011.

Both Reed–Solomon algorithm and BCH algorithm are common ECC choices for MLC NAND flash. … Hamming based block codes are the most commonly used ECC for SLC…. both Reed–Solomon and BCH are able to handle multiple errors and are widely used on MLC flash.

- ^ Jim Cooke (August 2007). «The Inconvenient Truths of NAND Flash Memory» (PDF). p. 28.

For SLC, a code with a correction threshold of 1 is sufficient. t=4 required … for MLC.

- ^ Baldi, M.; Chiaraluce, F. (2008). «A Simple Scheme for Belief Propagation Decoding of BCH and RS Codes in Multimedia Transmissions». International Journal of Digital Multimedia Broadcasting. 2008: 1–12. doi:10.1155/2008/957846.

- ^ Shah, Gaurav; Molina, Andres; Blaze, Matt (2006). «Keyboards and covert channels». USENIX. Retrieved 20 December 2018.

- ^ Tse, David; Viswanath, Pramod (2005), Fundamentals of Wireless Communication, Cambridge University Press, UK

- ^ Shannon, C. E. (1948). «A mathematical theory of communication» (PDF). Bell System Technical Journal. 27 (3–4): 379–423 & 623–656. doi:10.1002/j.1538-7305.1948.tb01338.x. hdl:11858/00-001M-0000-002C-4314-2.

- ^ Rosas, F.; Brante, G.; Souza, R. D.; Oberli, C. (2014). «Optimizing the code rate for achieving energy-efficient wireless communications». Proceedings of the IEEE Wireless Communications and Networking Conference (WCNC). pp. 775–780. doi:10.1109/WCNC.2014.6952166. ISBN 978-1-4799-3083-8.

- ^ IEEE Standard, section 20.3.11.6 «802.11n-2009» Archived 3 February 2013 at the Wayback Machine, IEEE, 29 October 2009, accessed 21 March 2011.

- ^ a b Vucetic, B.; Yuan, J. (2000). Turbo codes: principles and applications. Springer Verlag. ISBN 978-0-7923-7868-6.

- ^ Luby, Michael; Mitzenmacher, M.; Shokrollahi, A.; Spielman, D.; Stemann, V. (1997). «Practical Loss-Resilient Codes». Proc. 29th Annual Association for Computing Machinery (ACM) Symposium on Theory of Computation.

- ^ «Digital Video Broadcast (DVB); Second generation framing structure, channel coding and modulation systems for Broadcasting, Interactive Services, News Gathering and other satellite broadband applications (DVB-S2)». En 302 307. ETSI (V1.2.1). April 2009.

- ^ Andrews, K. S.; Divsalar, D.; Dolinar, S.; Hamkins, J.; Jones, C. R.; Pollara, F. (November 2007). «The Development of Turbo and LDPC Codes for Deep-Space Applications». Proceedings of the IEEE. 95 (11): 2142–2156. doi:10.1109/JPROC.2007.905132. S2CID 9289140.

- ^ Dolinar, S.; Divsalar, D. (15 August 1995). «Weight Distributions for Turbo Codes Using Random and Nonrandom Permutations». TDA Progress Report. 122: 42–122. Bibcode:1995TDAPR.122…56D. CiteSeerX 10.1.1.105.6640.

- ^ Takeshita, Oscar (2006). «Permutation Polynomial Interleavers: An Algebraic-Geometric Perspective». IEEE Transactions on Information Theory. 53 (6): 2116–2132. arXiv:cs/0601048. Bibcode:2006cs……..1048T. doi:10.1109/TIT.2007.896870. S2CID 660.

- ^ 3GPP TS 36.212, version 8.8.0, page 14

- ^ «Digital Video Broadcast (DVB); Frame structure, channel coding and modulation for a second generation digital terrestrial television broadcasting system (DVB-T2)». En 302 755. ETSI (V1.1.1). September 2009.

- ^ Techie (3 June 2010). «Explaining Interleaving». W3 Techie Blog. Retrieved 3 June 2010.

- ^ Krastanov, Stefan; Jiang, Liang (8 September 2017). «Deep Neural Network Probabilistic Decoder for Stabilizer Codes». Scientific Reports. 7 (1): 11003. arXiv:1705.09334. Bibcode:2017NatSR…711003K. doi:10.1038/s41598-017-11266-1. PMC 5591216. PMID 28887480.

- ^ Nordstrom, A.W.; Robinson, J.P. (1967), «An optimum nonlinear code», Information and Control, 11 (5–6): 613–616, doi:10.1016/S0019-9958(67)90835-2

- ^ Perry, Jonathan; Balakrishnan, Hari; Shah, Devavrat (2011). «Rateless Spinal Codes». Proceedings of the 10th ACM Workshop on Hot Topics in Networks. pp. 1–6. doi:10.1145/2070562.2070568. hdl:1721.1/79676. ISBN 9781450310598.

Further reading[edit]

- MacWilliams, Florence Jessiem; Sloane, Neil James Alexander (2007) [1977]. Written at AT&T Shannon Labs, Florham Park, New Jersey, USA. The Theory of Error-Correcting Codes. North-Holland Mathematical Library. Vol. 16 (digital print of 12th impression, 1st ed.). Amsterdam / London / New York / Tokyo: North-Holland / Elsevier BV. ISBN 978-0-444-85193-2. LCCN 76-41296. (xxii+762+6 pages)

- Clark, Jr., George C.; Cain, J. Bibb (1981). Error-Correction Coding for Digital Communications. New York, USA: Plenum Press. ISBN 0-306-40615-2.

- Arazi, Benjamin (1987). Swetman, Herb (ed.). A Commonsense Approach to the Theory of Error Correcting Codes. MIT Press Series in Computer Systems. Vol. 10 (1 ed.). Cambridge, Massachusetts, USA / London, UK: Massachusetts Institute of Technology. ISBN 0-262-01098-4. LCCN 87-21889. (x+2+208+4 pages)

- Wicker, Stephen B. (1995). Error Control Systems for Digital Communication and Storage. Englewood Cliffs, New Jersey, USA: Prentice-Hall. ISBN 0-13-200809-2.

- Wilson, Stephen G. (1996). Digital Modulation and Coding. Englewood Cliffs, New Jersey, USA: Prentice-Hall. ISBN 0-13-210071-1.

- «Error Correction Code in Single Level Cell NAND Flash memories» 2007-02-16

- «Error Correction Code in NAND Flash memories» 2004-11-29

- Observations on Errors, Corrections, & Trust of Dependent Systems, by James Hamilton, 2012-02-26

- Sphere Packings, Lattices and Groups, By J. H. Conway, Neil James Alexander Sloane, Springer Science & Business Media, 2013-03-09 – Mathematics – 682 pages.

External links[edit]

- Morelos-Zaragoza, Robert (2004). «The Correcting Codes (ECC) Page». Retrieved 5 March 2006.

- lpdec: library for LP decoding and related things (Python)

Cyclic Redundancy Check Codes

-

CRC-Code Features

-

CRC Non-Direct Algorithm

-

Example Using CRC Non-Direct Algorithm

-

CRC Direct Algorithm

-

Selected Bibliography for CRC Coding

CRC-Code Features

Cyclic redundancy check (CRC) coding is an error-control coding technique for

detecting errors that occur when a message is transmitted. Unlike block or

convolutional codes, CRC codes do not have a built-in error-correction

capability. Instead, when a communications system detects an error in a received

message word, the receiver requests the sender to retransmit the message word.

In CRC coding, the transmitter applies a rule to each message word to create

extra bits, called the checksum, or

syndrome, and then appends the checksum to the message

word. After receiving a transmitted word, the receiver applies the same rule to

the received word. If the resulting checksum is nonzero, an error has occurred,

and the transmitter should resend the message word.

Open the Error Detection and Correction library by double-clicking its icon in

the main Communications Toolbox™ block library. Open the CRC sublibrary by double-clicking on its

icon in the Error Detection and Correction library.

Communications Toolbox supports CRC Coding using Simulink® blocks, System objects, or MATLAB® objects. These CRC coding features are listed in Error Detection and Correction.

CRC Non-Direct Algorithm

The CRC non-direct algorithm accepts a binary data vector, corresponding to a

polynomial M, and appends a checksum of r bits, corresponding

to a polynomial C. The concatenation of the input vector and

the checksum then corresponds to the polynomial T =

M*xr

+ C, since multiplying by

xr corresponds to shifting the

input vector r bits to the left. The algorithm chooses the

checksum C so that T is divisible by a

predefined polynomial P of degree r,

called the generator polynomial.

The algorithm divides T by P, and sets

the checksum equal to the binary vector corresponding to the remainder. That is,

if T =

Q*P +

R, where R is a polynomial of degree

less than r, the checksum is the binary vector corresponding

to R. If necessary, the algorithm prepends zeros to the

checksum so that it has length r.

The CRC generation feature, which implements the transmission phase of the CRC

algorithm, does the following:

-

Left shifts the input data vector by r bits and

divides the corresponding polynomial by P. -

Sets the checksum equal to the binary vector of length

r, corresponding to the remainder from step

1. -

Appends the checksum to the input data vector. The result is the

output vector.

The CRC detection feature computes the checksum for its entire input vector,

as described above.

The CRC algorithm uses binary vectors to represent binary polynomials, in

descending order of powers. For example, the vector [1 1 0 1]

represents the polynomial x3 +

x2 + 1.

Note

The implementation described in this section is one of many valid

implementations of the CRC algorithm. Different implementations can yield

different numerical results.

Bits enter the linear feedback shift register (LFSR) from the lowest index bit

to the highest index bit. The sequence of input message bits represents the

coefficients of a message polynomial in order of decreasing powers. The message

vector is augmented with r zeros to flush out the LFSR, where

r is the degree of the generator polynomial. If the

output from the leftmost register stage d(1) is a 1, then the bits in the shift

register are XORed with the coefficients of the generator polynomial. When the

augmented message sequence is completely sent through the LFSR, the register

contains the checksum [d(1) d(2) . . . d(r)]. This is an implementation of

binary long division, in which the message sequence is the divisor (numerator)

and the polynomial is the dividend (denominator). The CRC checksum is the

remainder of the division operation.

Example Using CRC Non-Direct Algorithm

Suppose the input frame is [1 1 0 0 1 1 0]', corresponding

to the polynomial M = x6

+x

5 + x2

+ x, and the generator polynomial is

P = x3 +

x2 + 1, of degree

r = 3. By polynomial division,

M*x3

= (x6 +

x3 +

x)*P +

x. The remainder is R =

x, so that the checksum is then [0 1. An extra 0 is added on the left to make the checksum have

0]'

length 3.

CRC Direct Algorithm

where

Message Block Input is m0, m1, … , mk−1

Code Word Output is c0, c1,… , cn−1=m0, m1,… ,mk−1,︸Xd0,d1, … , dn−k−1︸Y

The initial step of the direct CRC encoding occurs with the three switches in

position X. The algorithm feeds k message bits to the

encoder. These bits are the first k bits of the code word

output. Simultaneously, the algorithm sends k bits to the

linear feedback shift register (LFSR). When the system completely feeds the

kth message bit to the LFSR, the switches move to

position Y. Here, the LFSR contains the mathematical remainder from the

polynomial division. These bits are shifted out of the LFSR and they are the

remaining bits (checksum) of the code word output.

Selected Bibliography for CRC Coding

[1] Sklar, Bernard., Digital

Communications: Fundamentals and Applications, Englewood

Cliffs, NJ, Prentice Hall, 1988.

[2] Wicker, Stephen B., Error Control

Systems for Digital Communication and Storage, Upper Saddle

River, NJ, Prentice Hall, 1995.

Block Codes

-

Block-Coding Features

-

Terminology

-

Data Formats for Block Coding

-

Using Block Encoders and Decoders Within a Model

-

Examples of Block Coding

-

Notes on Specific Block-Coding Techniques

-

Shortening, Puncturing, and Erasures

-

Reed-Solomon Code in Integer Format

-

Find a Generator Polynomial

-

Performing Other Block Code Tasks

-

Selected Bibliography for Block Coding

Block-Coding Features

Error-control coding techniques detect, and possibly correct, errors that

occur when messages are transmitted in a digital communication system. To

accomplish this, the encoder transmits not only the information symbols but also

extra redundant symbols. The decoder interprets what it receives, using the

redundant symbols to detect and possibly correct whatever errors occurred during

transmission. You might use error-control coding if your transmission channel is

very noisy or if your data is very sensitive to noise. Depending on the nature

of the data or noise, you might choose a specific type of error-control

coding.

Block coding is a special case of error-control coding. Block-coding

techniques map a fixed number of message symbols to a fixed number of code

symbols. A block coder treats each block of data independently and is a

memoryless device. Communications Toolbox contains block-coding capabilities by providing Simulink blocks, System objects, and MATLAB functions.

The class of block-coding techniques includes categories shown in the diagram

below.

Communications Toolbox supports general linear block codes. It also process cyclic, BCH,

Hamming, and Reed-Solomon codes (which are all special kinds of linear block

codes). Blocks in the product can encode or decode a message using one of the

previously mentioned techniques. The Reed-Solomon and BCH decoders indicate how

many errors they detected while decoding. The Reed-Solomon coding blocks also

let you decide whether to use symbols or bits as your data.

Note

The blocks and functions in Communications Toolbox are designed for error-control codes that use an alphabet

having 2 or 2m symbols.

Communications Toolbox Support Functions. Functions in Communications Toolbox can support simulation blocks by

-

Determining characteristics of a technique, such as

error-correction capability or possible message lengths -

Performing lower-level computations associated with a technique,

such as-

Computing a truth table

-

Computing a generator or parity-check matrix

-

Converting between generator and parity-check

matrices -

Computing a generator polynomial

-

For more information about error-control coding capabilities, see Block

Codes.

Terminology

Throughout this section, the information to be encoded consists of

message symbols and the code that is produced consists

of codewords.

Each block of K message symbols is encoded into a codeword

that consists of N message symbols. K is

called the message length, N is called the codeword length,

and the code is called an [N,K]

code.

Data Formats for Block Coding

Each message or codeword is an ordered grouping of symbols. Each block in the

Block Coding sublibrary processes one word in each time step, as described in

the following section, Binary Format (All Coding Methods). Reed-Solomon coding blocks also

let you choose between binary and integer data, as described in Integer Format (Reed-Solomon Only).

Binary Format (All Coding Methods). You can structure messages and codewords as binary

vector signals, where each vector represents a

message word or a codeword. At a given time, the encoder receives an entire

message word, encodes it, and outputs the entire codeword. The message and

code signals operate over the same sample time.

This example illustrates the encoder receiving a four-bit message and

producing a five-bit codeword at time 0. It repeats this process with a new

message at time 1.

For all coding techniques except Reed-Solomon using

binary input, the message vector must have length K and the corresponding

code vector has length N. For Reed-Solomon codes with binary input, the

symbols for the code are binary sequences of length M, corresponding to

elements of the Galois field GF(2M). In this

case, the message vector must have length M*K and the corresponding code

vector has length M*N. The Binary-Input RS Encoder block and the

Binary-Output RS Decoder block use this format for messages and

codewords.

If the input to a block-coding block is a frame-based vector, it must be a

column vector instead of a row vector.

To produce sample-based messages in the binary format, you can configure

the Bernoulli Binary Generator block

so that its Probability of a zero parameter is a vector

whose length is that of the signal you want to create. To produce

frame-based messages in the binary format, you can configure the same block

so that its Probability of a zero parameter is a scalar

and its Samples per frame parameter is the length of

the signal you want to create.

Using Serial Signals

If you prefer to structure messages and codewords as scalar signals, where

several samples jointly form a message word or codeword, you can use the

Buffer and Unbuffer blocks. Buffering

involves latency and multirate processing. If your model computes error

rates, the initial delay in the coding-buffering combination influences the

Receive delay parameter in the Error Rate Calculation block.

You can display the sample times of signals in your model. On the

tab, expand . In the

section, select . Alternatively, you can

attach Probe (Simulink) blocks to connector

lines to help evaluate sample timing, buffering, and delays.

Integer Format (Reed-Solomon Only). A message word for an [N,K] Reed-Solomon code consists of M*K bits, which

you can interpret as K symbols from 0 to 2M. The

symbols are binary sequences of length M, corresponding to elements of the

Galois field GF(2M), in descending order of

powers. The integer format for Reed-Solomon codes lets you structure

messages and codewords as integer signals instead of

binary signals. (The input must be a frame-based column vector.)

Note

In this context, Simulink expects the first bit

to be the most significant bit in the symbol, as well as the smallest

index in a vector or the smallest time for a series of scalars.

The following figure illustrates the equivalence between binary and

integer signals for a Reed-Solomon encoder. The case for the decoder is

similar.

To produce sample-based messages in the integer format, you can configure

the Random Integer Generator block so that M-ary number

and Initial seed parameters are vectors of

the desired length and all entries of the M-ary number

vector are 2M. To produce frame-based messages in

the integer format, you can configure the same block so that its

M-ary number and Initial seed

parameters are scalars and its Samples per frame

parameter is the length of the signal you want to create.

Using Block Encoders and Decoders Within a Model

Once you have configured the coding blocks, a few tips can help you place them

correctly within your model:

-

If a block has multiple outputs, the first one is always the stream of

coding data.The Reed-Solomon and BCH blocks have an error counter as a second

output. -

Be sure that the signal sizes are appropriate for the mask parameters.

For example, if you use the Binary Cyclic Encoder block and set

Message length K to4,

the input signal must be a vector of length 4.You can display the size of signals in your model. On the

tab, expand

. In the

section, select

.