51 06-02-2014 01:09:01

- MAG79

- SVP developer

- Offline

- Thanks: 1084

Re: Configuring the madVR renderer

Noweol

Yes, it worked. It sustains resolution doubling for 1280x720p at 24 fps. This requires driver 327.23 or earlier; the latest drivers did not work with NNEDI3 over OpenCL. madshi has started porting the hardware-accelerated algorithms from OpenCL to DirectCompute (D3D11-compatible video cards are supported, so Nvidia 2xx and AMD 4xxx no longer qualify). So far he has ported only Error Diffusion dithering. This halved the amount of video memory required and increased speed by 10-25%.

Download madVR 0.87.4 beta 3 with Error Diffusion on DirectCompute: http://madshi.net/madVRdirectCompute3.rar

madshi is now working on porting NNEDI3 from OpenCL to DirectCompute, so I have postponed the measurements for now.

52 Reply by MAG79 06-02-2014 01:48:10

- MAG79

- SVP developer

- Offline

- Thanks: 1084

Re: Configuring the madVR renderer

On the quality of the NNEDI3 doubler. Here is a clip comparing 4x upscaling quality for the Nearest Neighbor, Jinc3 and NNEDI3 algorithms.

As you can see, NNEDI3 smooths diagonal lines better and removes pixelation almost completely. Sharpness does not drop as much as it does with Jinc3.

Post’s attachments

Mario_NNEDI3.gif 95.82 kb, 1023 downloads since 2014-02-05

53 Reply by NightFox 07-02-2014 01:45:03

- NightFox

- Beta Tester

- Offline

- Thanks: 42

Re: Configuring the madVR renderer

It would be nice to bolt this onto emulators.

54 Reply by nemoW 07-02-2014 15:19:42

- nemoW

- Beta Tester

- Offline

- Thanks: 47

- Thanks for the post: 1

Re: Configuring the madVR renderer

http://madshi.net/madVRdirectCompute5.rar

In short, on an R7850 it is either SVP or NNEDI. Together they produce nothing but dropped frames.

55 Reply by Noweol 07-02-2014 15:58:21

- Noweol

- Beta Tester

- Offline

- Thanks: 133

Re: Configuring the madVR renderer

And that is sad… The card is one of the top ones, after all…

56 Reply by MAG79 08-02-2014 16:14:50

- MAG79

- SVP developer

- Offline

- Thanks: 1084

- Thanks for the post: 1

Re: Настройка отрисовщика madVR

madVRdirectCompute5.rar все еще с OpenCL реализацией NNEDI3. madshi пишет, что пока ему не удается решить проблему 10-ти кратного падения производительности алгоритма NNEDI3, портированного на DirectCompute. Он взял тайм-аут. Учитывая не скорое появление обещанного быстрого аппаратно-ускоренного NNEDI3, я померял имеющийся OpenCL-алгоритм NNEDI3.

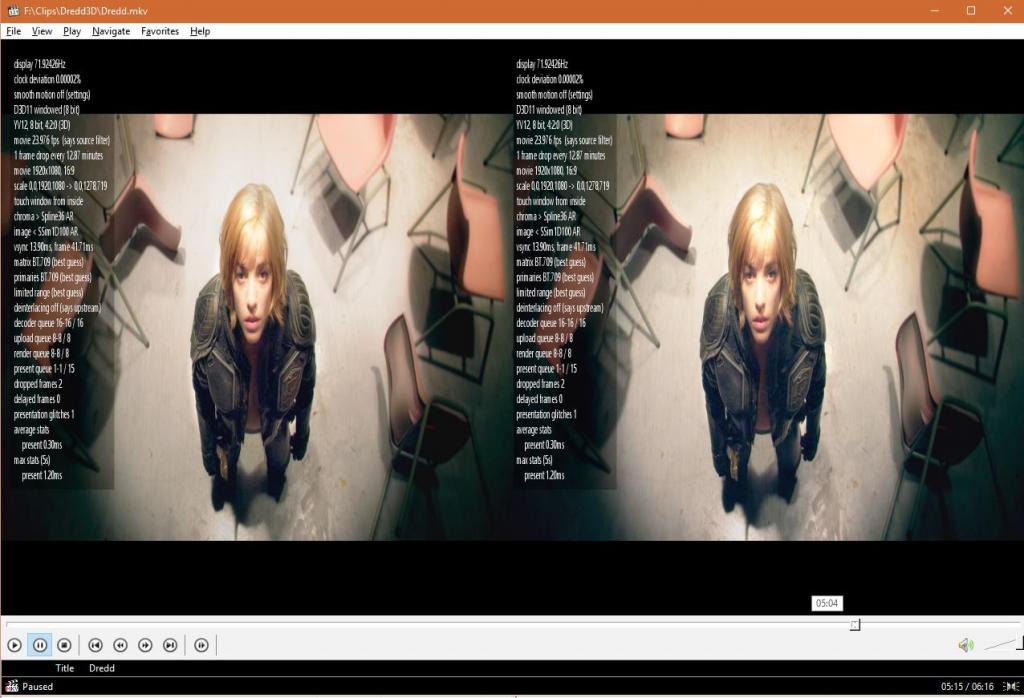

софт: SVP 3.1.5, MPC-HC 1.7.3, madVR 0.87.4 directCompute5, настройки по-умолчанию, включено NNEDI3 удвоение разрешения

железо: core i5-3570K, GTX 660 Ti (GeForce driver 327.23)

Максимальное разрешение, которое смогла удвоить эта конфигурация через NNEDI3 без дропов:

видео 1280×720 60 к/сек, увеличение до 1920×1080. загрузка GPU 77%, загрузка Memory Controller 30%, загрузка CPU 33%

(поток 55,3 МПикс/сек)

видео 1024×432 120 к/сек, увеличение до 1920×1080. загрузка GPU 80%, загрузка Memory Controller 35%, загрузка CPU 33%

(поток 53,1 МПикс/сек)

видео 1152×448 120 к/сек, увеличение до 1920×1080. загрузка GPU 89%, загрузка Memory Controller 36%, загрузка CPU 38%

(поток 61,9 МПикс/сек)

Максимальные цифры отмечены красным.
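
The throughput figures in this post follow from width × height × frame rate of the source video. A minimal Python sketch that reproduces them (the function name is made up for illustration and is not part of madVR or SVP):

```python
def pixel_throughput_mpix(width: int, height: int, fps: float) -> float:
    """Source pixels processed per second, in millions (MPix/s)."""
    return width * height * fps / 1e6


# The three configurations measured in the post.
for width, height, fps in [(1280, 720, 60), (1024, 432, 120), (1152, 448, 120)]:
    rate = pixel_throughput_mpix(width, height, fps)
    print(f"{width}x{height} @ {fps} fps: {rate:.1f} MPix/s")
```

This counts source pixels only; the cost of the final scaling step to 1920×1080 is not included.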

57 Reply by Noweol 08-02-2014 16:45:20

- Noweol

- Beta Tester

- Offline

- Thanks: 133

Re: Configuring the madVR renderer

Good numbers. They mean that with a mid-range video card NNEDI3 is actually usable.

58 Reply by Chainik 08-02-2014 21:13:39

- Chainik

- SVP developer

- Offline

- Thanks: 1457

Re: Configuring the madVR renderer

MAG79

It would be good to compare images that are somewhat more realistic than pixel-art cartoon synthetics, and with two resizes involved (that is, the “1280×720 upscaled to 1920×1080” case).

Also, for a clean experiment, compare the speed against software NNEDI3 in AviSynth.

59 Reply by fakel 14-02-2014 17:40:34 (edited by fakel 14-02-2014 18:49:10)

- fakel

- Member

- Offline

- Thanks: 73

Re: Configuring the madVR renderer

Good afternoon. Could you tell me whether the “enable automatic exclusive fullscreen mode” checkbox affects picture quality? My setup is SVP 3.1.5 + madVR 0.87.4 + PotPlayer (7sh3 build 1.5.44465 x86). With this checkbox enabled, the player hangs completely when the playlist automatically advances to the next file. Also, has anything changed since this guide was published: svp-team.com/wiki/Настройка_MadVR? Could someone share their settings and explain how to configure it properly (for maximum quality)? i5 2500K | 4Gb | MSI GTX560 Ti | Win 8.1 64.

60 Reply by MAG79 15-02-2014 04:33:57

- MAG79

- SVP developer

- Offline

- Thanks: 1084

- Thanks for the post: 1

Re: Configuring the madVR renderer

fakel

has anything changed since this guide was published: svp-team.com/wiki/Настройка_MadVR?

It has. The guide is out of date.

Could someone share their settings and explain how to configure it properly (for maximum quality)? i5 2500K | 4Gb | MSI GTX560 Ti | Win 8.1 64

The best guide is to reset the madVR settings to their defaults and change only what you understand. Everything else has already been tuned by the developer and his army of fans on doom9.org.

whether the “enable automatic exclusive fullscreen mode” checkbox affects picture quality?

Its purpose is to prevent dropped frames. See the statistics shown by Ctrl+J. If it does not help, you can enable “enable windowed overlay” or “disable desktop composition” instead. They pursue the same goal, just with a different implementation.

61 Reply by fakel 15-02-2014 09:49:26

- fakel

- Member

- Offline

- Thanks: 73

Re: Configuring the madVR renderer

MAG79

Thank you very much, and sorry if the questions are silly, I am trying to understand…

62 Reply by psnsergey 01-03-2014 01:52:40 (edited by psnsergey 01-03-2014 01:54:06)

- psnsergey

- Member

- Offline

Re: Configuring the madVR renderer

MAG79 wrote:

On the quality of the NNEDI3 doubler.

There is also this link: http://www.infognition.com/articles/vid … otout.html

They compute PSNR for a source picture that was downscaled by a factor of four and then restored by various renderers. NNEDI3 trails near the very end there, while Lanczos with a sharpener is among the favorites.

Higher is better

Post’s attachments

Ресайзеры.png 14.93 kb, 985 downloads since 2014-02-28
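
For readers unfamiliar with the metric used in the linked comparison: PSNR is derived from the mean squared error between the reference image and the restored one. A minimal pure-Python sketch, assuming 8-bit samples passed as flat sequences (the function name and input layout are illustrative assumptions, not the linked article's code):

```python
import math


def psnr(reference, restored, peak=255):
    """Peak signal-to-noise ratio in dB between two equal-length sample sequences."""
    if len(reference) != len(restored):
        raise ValueError("images must have the same number of samples")
    # Mean squared error over all samples.
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak ** 2 / mse)


# Every sample is off by exactly 1, so the MSE is 1.
print(psnr([100, 100, 100, 100], [101, 99, 101, 99]))
```

Because PSNR only averages per-sample differences, it does not single out ringing or aliasing specifically.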

63 Reply by Noweol 01-03-2014 05:58:12 (edited by Noweol 01-03-2014 06:02:33)

- Noweol

- Beta Tester

- Offline

- Thanks: 133

Re: Configuring the madVR renderer

This is exactly why people say PSNR is a poor yardstick. First, Lanczos produces ringing; second, sharpening only amplifies it; third, Lanczos also adds aliasing. NNEDI3 has none of this.

VideoEnhancer did intrigue me, though. Judging by the bjorn.avi screenshots, VideoEnhancer manages to restore the window frames, which NNEDI3 fails to do. None of the other algorithms come close. Overall I like the VideoEnhancer result: on average it yields more detail, though there are misses too (Shrek and Panasonic).

P.S. Ha! Their bicubic and Lanczos results are indistinguishable from each other. That gave me a laugh.

64 Reply by psnsergey 01-03-2014 09:54:08

- psnsergey

- Member

- Offline

Re: Configuring the madVR renderer

Noweol wrote:

VideoEnhancer did intrigue me, though. Judging by the bjorn.avi screenshots, VideoEnhancer manages to restore the window frames, which NNEDI3 fails to do. None of the other algorithms come close.

Funny that the main computational cost of the algorithm lies in the very same detection of object motion within the frame that the CPU already performs in SVP…

65 Reply by Chainik 01-03-2014 20:02:22

- Chainik

- SVP developer

- Offline

- Thanks: 1457

Re: Configuring the madVR renderer

Noweol

VideoEnhancer did intrigue me, though.

I believe this thing was debunked on doom9 long ago.

In essence, what it does is denoise (over three frames) + sharpen, and that is about it.

Anyone can reproduce this themselves.

66 Reply by psnsergey 02-03-2014 02:18:29 (edited by psnsergey 02-03-2014 02:34:06)

- psnsergey

- Member

- Offline

Re: Configuring the madVR renderer

Chainik wrote:

In essence, what it does is denoise (over three frames) + sharpen, and that is about it.

Anyone can reproduce this themselves.

You appear to be right (from the same place, http://forum.doom9.org/archive/index.php/t-142704.html ):

Tempter57

26th November 2012, 11:07

@Jenyok

The problem is to discriminate between what is noise and what isn’t. But the sharpening stage will sharpen also noise, which will be blend later inside the other frames. So you can’t escape the temporal denoising, more efficient than spatial denoising, combined with motion compensation.

Are there ways to do this, or something similar, during video playback? I know about the MVTools plugins, but their speed (unless you use a cluster of 10 machines) is depressing… I mean with shaders. SVP already determines the motion vectors of objects, and the Super Resolution task would become much easier if they could be used not only to “build intermediate frames at the source resolution” on the video card (as SVP does now), but also to:

1. build the source frames upscaled to screen resolution on the video card via Super Resolution (that same temporal denoising followed by sharpening), using the computed motion vectors;

2. build the intermediate frames at screen resolution on the video card from the source frames upscaled in step 1, using the same motion vectors.

That way one could beat madVR on picture quality so thoroughly that no NNEDI would save it…

I suspect this could be done with an AviSynth script for the current SVP, but when it comes to .avs I am rather rusty… Has anyone done something like this?

67 Reply by gaunt 02-03-2014 06:14:52

- gaunt

- Beta Tester

- Offline

- Thanks: 153

Re: Configuring the madVR renderer

psnsergey

1. build the source frames upscaled to screen resolution on the video card via Super Resolution (that same temporal denoising followed by sharpening), using the computed motion vectors; 2. build the intermediate frames at screen resolution on the video card from the source frames upscaled in step 1, using the same motion vectors.

Upscale the picture before SVP, even though only a plain spline is used there…

Mind you, in all intermediate frames you will then see actually computed pixels instead of a resized image.

To be honest, for a long time now I have been upscaling frames to twice the monitor size, then applying shaders to the resulting frame, then letting the renderer downscale to the monitor size.

The picture is exceptional. No pixels stand out, plus the construction is finer than quarter-pixel.

With an i5 and a video card of the 5750 class, it is quite realistic to crank SVP up to around 1500p.

68 Reply by psnsergey 02-03-2014 07:06:16 (edited by psnsergey 02-03-2014 11:12:02)

- psnsergey

- Member

- Offline

Re: Configuring the madVR renderer

gaunt wrote:

Upscale the picture before SVP, even though only a plain spline is used there…

1. That is exactly the point: you can cheaply (given a mid-range video card) reduce noise by many dB thanks to temporal denoising. The vectors are already there!..

Although if the source is poor, the noise in it consists of compression artifacts, and those will be roughly the same in neighboring frames, because compression relies on the very same motion compensation.

2. Resizing before SVP is not quite right in general, IMHO. SVP first has to compute the motion vectors, and that is best done on the source picture before it is distorted by a resize (and a resize is always a distortion, since it alters the spectrum of the picture). Only afterwards, once the source frames have reached the video card, should they be resized, and then the resized frames should be both sent to the TV and used to build the intermediate frames.

gaunt wrote:

Mind you, in all intermediate frames you will then see actually computed pixels instead of a resized image.

How so? Does the resize in SVP apply only to SVP's vector search and not to building the intermediate frames? In other words, does the chain look like this:

for source frames: resize (followed by building the motion vector mask), output;

for intermediate frames: build from the source-size picture using the motion vector mask, resize, output?

I assumed that once the image has been upscaled to HD before SVP, that is it: SVP deals with the interpolated picture and has no access to the original SD one.

69 Reply by gaunt 02-03-2014 11:33:06

- gaunt

- Beta Tester

- Offline

- Thanks: 153

Re: Configuring the madVR renderer

psnsergey

2. Resizing before SVP is not quite right in general, IMHO. SVP first has to compute the motion vectors, and that is best done on the source picture before it is distorted by a resize (and a resize is always a distortion, since it alters the spectrum of the picture). Only afterwards, once the source frames have reached the video card, should they be resized, and then the resized frames should be both sent to the TV and used to build the intermediate frames.

This is a common misconception, and not only yours.

Keep in mind that vectors are searched not by neighboring pixels but by whole blocks.

That is, an 8*8 block takes 64 pixels into account. It could not care less which upscaling resize was used.

A 16*16 block already contains 256 pixels…

On the other hand, there is the vector search on the coarse levels of the pyramid. Here you have to understand that the whole search can only use integer coordinates.

That is, an 8*8 block with a radius of 2 pixels (a quarter of the block size),

16*16 with a radius of 4 pixels (the same quarter),

32*32 with a radius of 8 pixels (a quarter again).

All of this is essentially the same thing. BUT how many coordinates can a radius of 2 pixels give? 0, 1 and 2.

4 pixels: 0, 1, 2, 3, 4.

8 pixels: 0 through 8.

That is, a search with a 32*32 block will be 4 times more precise than a search with an 8*8 block. And those are linear dimensions; by area it is 16 times.

That said, even an 8*8 block is precise enough.

So by enlarging the frame you gain the ability to find and output pixels in their natural form, because the search is integer-based.

The same goes for the monitor: a real monitor pixel is also an integer unit.

Without motion it really does not matter where you resize, before or after.

But with motion there is a chance to output a real monitor pixel exactly where it should be.

That is, precision simply grows in the intermediate (computed) frames, which shows up as better rendering of detail.

Is that not an advanced resize?

And building with excess precision will by definition reduce noise, because the search works on blocks, where one or two pixels make no difference. During construction we get blending: noise is reduced, and contours are emphasized (strengthened).

If you apply shaders to a real frame that is much larger than the actual monitor image, you get a shader with half-pixel precision. That is, you get a relatively safe increase in sharpness.

Of course, much will depend on the quality of the downscale back. For example, EVR custom with bicubic 0.6,075 lets you apply Sharpen Complex 2 only once. With madVR and Lanczos you can easily run it twice in a row. To repeat: this holds when the video size after SVP is larger than the real pixel count of the monitor.
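
The integer block search described in this post can be sketched as an exhaustive search that minimizes the sum of absolute differences (SAD). This is only an illustration of the general technique under simplifying assumptions (a signed search window, a single pyramid level, frames as lists of rows); it is not SVP's actual algorithm, and all names are invented:

```python
def sad(cur, prev, cx, cy, px, py, size):
    """Sum of absolute differences between a block in cur and a block in prev."""
    return sum(
        abs(cur[cy + j][cx + i] - prev[py + j][px + i])
        for j in range(size)
        for i in range(size)
    )


def best_vector(prev, cur, x, y, size, radius):
    """Integer (dx, dy) within +/-radius whose block in prev best matches cur's block."""
    height, width = len(prev), len(prev[0])
    best = None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            px, py = x + dx, y + dy
            # Skip candidates that fall outside the previous frame.
            if px < 0 or py < 0 or px + size > width or py + size > height:
                continue
            cost = sad(cur, prev, x, y, px, py, size)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best[1], best[2]


# Synthetic test frames: cur is prev shifted one pixel to the right.
prev = [[17 * x + 31 * y for x in range(8)] for y in range(8)]
cur = [[row[x - 1] if x > 0 else 0 for x in range(8)] for row in prev]
print(best_vector(prev, cur, x=3, y=3, size=2, radius=2))
```

Because the candidate offsets are whole pixels, upscaling the frame before the search makes each integer step correspond to a smaller fraction of a source pixel, which is the effect the post relies on.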

70 Reply by Vovanchik 05-03-2014 19:20:06

- Vovanchik

- SVP developer

- Offline

- Thanks: 92

- Thanks for the post: 1

Re: Configuring the madVR renderer

madVR v0.87.5

* error diffusion now uses DirectCompute (DX11 GPU needed) instead of OpenCL

* added fast and reasonably high quality «ordered dithering» algorithm

* added «rendering\dithering» settings page with many new options

* new default dithering is now ordered dithering instead of random dithering

* madTPG now always uses monochromatic ordered dithering

* fixed: #107: XySubFilter: reducing CPU queue size during playback -> crash

* fixed: #112: 120fps clip resulted in 23Hz being selected instead of 60Hz

* fixed: #119: installation resulted in «might not have installed correctly»

* fixed: #123: XySubFilter: Nearest Neighbor/Bilinear distorted subtitles

* fixed: #125: forced film mode with unsupported FOURCCs: graphical corruption

* fixed: #133: XySubFilter: opaque black box when smooth motion was enabled

* fixed: #136: when playback is stopped, madVR now always turns the video off

* fixed: #137: Nearest Neighbor/Bilinear has problems with post-resize shaders

* fixed: #138: smooth motion FRC flickered when using Nearest Neighbor

* fixed: #145: DCI-P3 was using an incorrect white point

* fixed: #155: screenshots sometimes had an added black border

* fixed: #159: specifying DCI-P3 as the calibrated gamut -> green screen

* fixed: #160: corruption with uncompressed 4096×2304 v210 in AVI

* fixed: #161: YUV 4:4:4 videos with weird resolutions crashed madVR

* fixed: #165: overlay mode restricted madVR to single player window

* fixed: #167: dithering produced dithering noise on pure black areas

* fixed: #169: dithering produced dithering noise on pure white areas

* fixed: #170: Overlay mode sometimes unnecessarily cleared GPU gamma ramps

* fixed: Overlay mode applied 3dlut and gamma ramps in wrong order

* fixed: crash reporting didn’t catch exceptions in private threads, anymore

* fixed: crash when using XySubFilter with small GPU queue size

* fixed: DVD navigator was not released properly by madVR

* fixed: Run/Seek hooks also affected secondary DirectShow graphs

* fixed: profile key shortcuts only worked for «scaling» profiles

* fixed: full range YCbCr input produced slightly incorrect colors

* reduced Overlay mode graphical corruption when resizing media player

* exclusive -> windowed switch now shows a black frame instead of an old one

* removed XySubFilter auto-loading functionality, it’s now XySubFilter’s job

* disabled resolution based DCI-P3 auto detection

* changed default luma doubling value to 32 neurons

* display bitdepth can be set to as low as 3bit (just for testing)

71 Reply by nemoW 07-03-2014 20:21:22

- nemoW

- Beta Tester

- Offline

- Thanks: 47

Re: Configuring the madVR renderer

madVR v0.87.6

* fixed: #090: FSE mode switched to 23Hz instead of 24Hz in Windows 8

* fixed: #127: crash when jumping to next video file on secondary monitor

* fixed: #173: overlay: exiting multiple windows in same order -> black screen

* madTPG now forces ordered dither, but you can en/disable colored & dynamic

* added support for new subtitle API ISubRenderConsumer2::Clear()

72 Reply by fakel 15-03-2014 14:08:11

- fakel

- Member

- Offline

- Thanks: 73

- Thanks for the post: 1

Re: Configuring the madVR renderer

madVR v0.87.7

* added linear light processing for ordered dithering and error diffusion

* added «trade quality for performance» option for linear light dithering

* fixed: #175: Banding appears if bitdepth is set to ‘7 bit’

* fixed: crash in MC19 when switching videos with native DXVA decoding

* fixed: rare overlay stability problems introduced in v0.87.5

* random dithering doesn’t round down to less than 8bit, anymore

* «present several frames in advance = off» now auto-disables error diffusion

* display bitdepth can be set to as low as 1bit (just for testing)

* added silent exception handling for Intel OpenCL initialization crashes

* madTPG now optionally supports APL windows (gamma and linear light)

* madTPG now has a minimum image area of 4% instead of 10%

* madTPG now draws a 20 pixel black border around the measurement area

* madTPG now properly supports dynamic dithering (didn’t before)

* madTPG dithering was optimized to not dither for integer test patterns

* madTPG headers and demo projects updated

* madVR in a media player no longer supports test pattern, only madTPG does

73 Reply by fakel 31-03-2014 12:02:59

- fakel

- Member

- Offline

- Thanks: 73

- Thanks for the post: 1

Re: Configuring the madVR renderer

madVR v0.87.8

* added workaround for NVidia OpenCL <-> D3D9 interop driver bug

* fixed: #158: NNEDI3 chroma upscaling + DXVA deint + NVidia -> green image

* fixed: DirectCompute rendering resources weren’t properly released

* fixed: some multi monitor problems introduced in v0.87.7

* fixed: smooth motion frc sometimes incorrectly dropped frames

* fixed: toggling subtitle «trade quality» option required restart

* some DirectCompute stability improvements

* added vendor based OpenCL device filtering

* non-DX11-GPUs: error diffusion now falls back to ordered dithering

* improved Windows 8.1 FSE mode refresh rate hack

74 Reply by fakel 03-04-2014 11:19:04

- fakel

- Member

- Offline

- Thanks: 73

- Thanks for the post: 1

Re: Configuring the madVR renderer

madVR v0.87.9

* fixed: NNEDI3 didn’t work properly on AMD/Intel (introduced in v0.87.8)

* fixed: native DXVA decoding + NNEDI3 chroma up + NVidia -> green color cast

* fixed: #032: Smooth Motion FRC sometimes failed to activate

* fixed: #096: Smooth Motion FRC resulted in last/only frame being hidden

* fixed: #097: Smooth Motion FRC didn’t respect «treat 25p movies as 24p»

* fixed: #098: «Treat 25p movies as 24p» now only activates up to 25.5fps

* fixed: #104: «Delay playback until …» failed when toggling subtitles

* fixed: #113: film mode key shortcut didn’t enable Smooth Motion FRC

* fixed: #124: videoLUTs were not properly restored in multi monitor setup

* fixed: #132: Image corruption when leaving FSE with 3dlut loaded

* fixed: #171: film mode activation with display mode change could crash

* fixed: #178: RGB/YUV 4:4:4 with mod2 height showed black screen

* fixed: #182: NNEDI3 chroma up neuron count wasn’t properly memorized

* fixed: #187: switching subtitles triggered a short black screen

* fixed: #189: Smooth Motion FRC sometimes activated when not needed

* NNEDI3 no longer offsets by 0.5 pixel if Luma needs to be resampled, anyway

* added «HKCU\Software\madshi\madVR\OpenCL\forceVendor» override option

* modified madLevelsTweaker GUI to make intended multi monitor usage clearer

75 Reply by fakel 22-04-2014 22:18:19

- fakel

- Member

- Offline

- Thanks: 73

- Thanks for the post: 1

Re: Configuring the madVR renderer

madVR v0.87.10

* added some optimizations to reduce AMD OpenCL interop cost

* added new windowed presentation path («present several frames in advance»)

* added support for decimating 50p/60p movies to 25p/24p

* added profile strings «filePath/Name/Ext», with wild char («?», «*») support

* fixed: #181: profile auto switching sometimes invalidated file name tags

* fixed: #192: black flashing with Smooth Motion + NNEDI3 chroma doubling

* fixed: #193: image corruption when up&down scale is needed at the same time

* fixed: crash on Vista when trying to activate error diffusion

* fixed: Intel OpenCL CPU driver sometimes crashed

* OpenCL should now automatically prefer NVidia GPUs on Optimus laptops

* refresh rate hack is now only installed on Windows 8 (and newer)

* «Pause» OSD message no longer blocked

* file «madshi.net/madVR/version.txt» lists the current version number

* file «madshi.net/madVR/sha1.txt» lists SHA1 hash of the current «madVR.zip»
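
The last changelog entry makes it possible to verify a downloaded archive. A minimal sketch of such a check in Python; it assumes you have already downloaded «madVR.zip» and copied the hash string from «sha1.txt» by hand, and the exact layout of that file is an assumption here (the helper names are invented):

```python
import hashlib


def sha1_of_file(path, chunk_size=1 << 20):
    """Return the SHA1 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha1()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path, published_hex):
    """Compare a local file against a published hex hash (whitespace and case ignored)."""
    return sha1_of_file(path) == published_hex.strip().lower()
```

For example, `matches_published_hash("madVR.zip", text_from_sha1_txt)` would return `True` only if the archive is byte-for-byte identical to the one the hash was computed from.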

Contents

- madVR: how to squeeze maximum quality out of video

- Secrets of high-quality video playback on a computer. Part 3: Configuring smooth playback in the madVR renderer

- Configuring the madVR renderer

madVR: how to squeeze maximum quality out of video

The main features of madVR include:

- smooth rendering of motion in the frame (smooth motion rendering)

- high-quality chroma upsampling

- high-quality YCbCr -> RGB conversion

- high-quality video scaling algorithms (bicubic, mitchell, lanczos, spline, etc.)

- gamut and gamma correction for different types of monitors

- lossless 16-bit video processing on the GPU

As I have already mentioned, MPC-HC has supported madVR for quite a while, as have KMPlayer and Zoom Player.

All you need to do to try this video renderer is unpack the downloaded zip file (link to the latest version) into a separate folder and install it by running install.bat.

After that, select madVR in the player settings (Settings - Playback - Output).

The settings of madVR itself are available once a video is playing, via right click, Filters - madVR Renderer. Everything works fine for me with the default settings, but there are plenty of options if you want to tune it to your taste and the power of your video card.

Discussion of madVR, feature requests to the developer and bug reports can be posted in the dedicated thread on the Doom9 forum. The first page of that thread also has a quality comparison with competing renderers.

Secrets of high-quality video playback on a computer. Part 3: Configuring smooth playback in the madVR renderer

Hello, dear readers of the www.ithabits.ru blog. In the previous articles we established that, as long as the computer is fast enough, playback smoothness depends mainly on timing characteristics. First and foremost, that means a mismatch between the display refresh rate and the frame rate of the video being played.
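
To make the mismatch concrete, the following Python sketch counts how many display refreshes each source frame occupies when frames are simply held until the next one is due. It is a simplified model that ignores clock drift and renderer queues, and the function name is invented for illustration:

```python
import math
from fractions import Fraction


def cadence(frame_rate, refresh_rate, frames):
    """Number of display refreshes each of the first `frames` source frames is shown for."""
    refreshes_per_frame = Fraction(refresh_rate) / Fraction(frame_rate)
    counts = []
    for index in range(frames):
        first = math.floor(index * refreshes_per_frame)
        last = math.floor((index + 1) * refreshes_per_frame)
        counts.append(last - first)
    return counts


print(cadence(24, 60, 4))  # uneven hold times: the source of judder
print(cadence(24, 24, 4))  # matched rates: every frame is shown exactly once
```

With 24 fps video on a 60 Hz display, frames alternate between two and three refreshes, so motion advances in uneven steps. Exact rates such as 24000/1001 can be passed as `Fraction(24000, 1001)`.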

Last time we looked at a way to improve film playback quality by changing the display refresh rate accordingly.

Unfortunately, this very simple and effective method is fully available only when the video monitor (a computer display, a TV, a projector) offers the necessary set of refresh rates.

What can be done if the list of available modes lacks 24p, or if the display supports only a single refresh rate of 60 Hz?

The world film industry is not going to abandon the 24 frames per second shooting standard any time soon.

However, as we have already said, there is a solution. As the old Eastern saying goes: “If the mountain will not come to Muhammad, Muhammad must go to the mountain.”

In our case this means that if the refresh rate of the monitor cannot be changed, then the frame rate of the video being played has to be changed.

Is that possible? Not only is it possible, it is already widely used, for example in many modern TVs.

This technology can be loosely called “Smooth motion”. Different video equipment manufacturers give it different names, for example Motionflow in Sony TVs and Clear Motion Rate at Samsung, but the essence does not change. It consists of adding the missing intermediate frames by interpolating neighboring frames of the source video on the fly, as the video is being output to the screen.
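
As a rough illustration of what “adding intermediate frames” means, the sketch below computes, for each output frame, which two source frames surround it in time and how strongly the later one should be weighted. It uses plain linear blending, which is a deliberate simplification: TVs and SVP use motion-compensated interpolation, and the function names here are invented:

```python
import math
from fractions import Fraction


def blend_plan(source_fps, output_fps, output_frames):
    """For each output frame: (earlier source index, later source index, weight of the later one)."""
    step = Fraction(source_fps, output_fps)  # source frames advanced per output frame
    plan = []
    for k in range(output_frames):
        position = k * step
        left = math.floor(position)
        plan.append((left, left + 1, position - left))
    return plan


def blend(frame_a, frame_b, weight_b):
    """Linear mix of two frames given as equal-length sequences of sample values."""
    return [a * (1 - weight_b) + b * weight_b for a, b in zip(frame_a, frame_b)]


for left, right, weight in blend_plan(24, 60, 5):
    print(f"source frames {left} and {right}, weight of the later frame: {weight}")
```

A weight of 0 means the output frame coincides with a source frame and needs no blending.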

Since far from everyone has a TV that supports this technology today, let us see how it can be implemented on a computer.

It should be noted right away that not all viewers regard raising the film frame rate from 24 to 60, 100 or more frames per second as an unquestionable benefit. Some point out that it completely changes the character of perception and the familiar feel of cinema is lost. This is sometimes even called the soap opera effect.

And indeed, this opinion is not unfounded. I do not know about you, but ever since childhood a single glance at a TV screen was enough for me to tell instantly whether it was showing a feature film or a television production. And the difference was only a factor of two: 25p versus 50i (for how 24p films are shown in PAL/SECAM television systems, see the previous part).

In any case, it makes sense to try it and only then decide how best to watch films. And if the video output device does not support 24p, there is not much to think about.

Below we will consider three possible ways to implement Smooth motion:

- Smooth motion in the madVR renderer;

- SmoothVideo Project (SVP);

- the Splash PRO EX media player.

Because of the amount of material, this article describes only the first of them, namely configuring the madVR renderer. The other two follow in the next part.

Configuring the madVR renderer

Last time we already began getting acquainted with the madVR software.

As a reminder, madVR is a project aimed at improving the quality of video processing and rendering by using accurate color space conversion and high-quality image scaling during output, relying on the capabilities of the video adapter.

Let us go through the main settings of the madVR renderer. (Downloading and installing this software was covered earlier.)

This module has a large number of settings. Keep in mind that the higher the desired processing quality, the higher the load on the graphics processor. Ultimately, changing certain settings may lead to the GPU no longer keeping up with the video stream, which is unacceptable. At the same time, the actual improvement of the picture may turn out to be very small.

In general, the following approach to configuring this filter can be recommended.

Collect several video files with different horizontal resolutions (720, 1280, 1440, 1920 px) and frame rates (23.976, 24, 29.97, 50, 59.94 fps).

The whole set does not have to be present, but a single “extreme” 1080p60 file will not be enough. In my case, for instance, the “heaviest” files turned out to be, of all things, MPEG 1440x1080i50 files from a camcorder. Why will soon become clear.

For reasonably objective tuning of the output you will need to monitor the load on the graphics processor.

Owners of AMD video cards (though not all of them) can use the AMD OverDrive tab in Catalyst Control Center for this purpose.

Everyone else can use the TechPowerUp GPU-Z utility. It can be downloaded directly from the developer's site.

The “Sensor” tab shows the state of the video adapter at the moment of interest. We are primarily interested in the processor load (GPU Load). Information on video memory usage (Memory Used) will help in choosing the number of video buffers.

Below are the madVR settings that are optimal in terms of quality versus performance for a computer with an i7-950 processor (3.06 GHz) and an NVIDIA GeForce GTS 450 video card.

They are by no means dogma or something that must be followed. Depending on the performance of your video adapter, adjust them one way or the other, guided by the GPU load observed while playing videos of various formats.

As a reminder, the madVR settings can only be opened while a video is playing in a media player that supports this type of DirectShow output, either via the corresponding tray icon or via the menu “Play –> Filters –> madVR” (for MPC-HC). The madVR settings can also be opened from the context menu by right-clicking anywhere on the player screen.

In the context of today's topic we are interested in one single setting, but skipping all the others would not be right. So let us go in order, from top to bottom.

- Devices (output devices)

For each monitor connected to the computer's video card, specify its type.

Calibration

On the “Calibration” tab it makes sense to choose “this display is already calibrated”. madVR allows extended calibration characteristics to be specified, but in the vast majority of cases it is better to set everything as shown. The renderer needs these parameters to convert color spaces correctly.

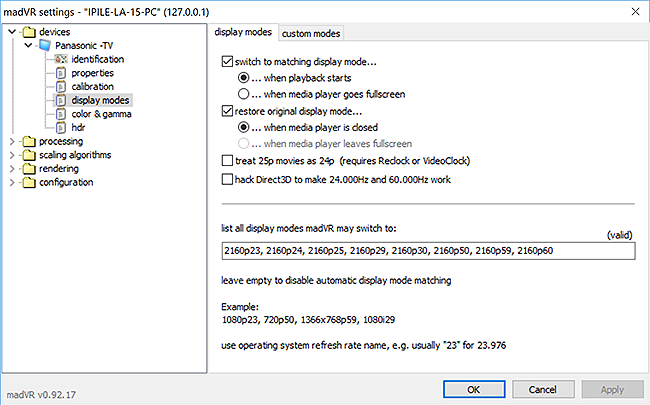

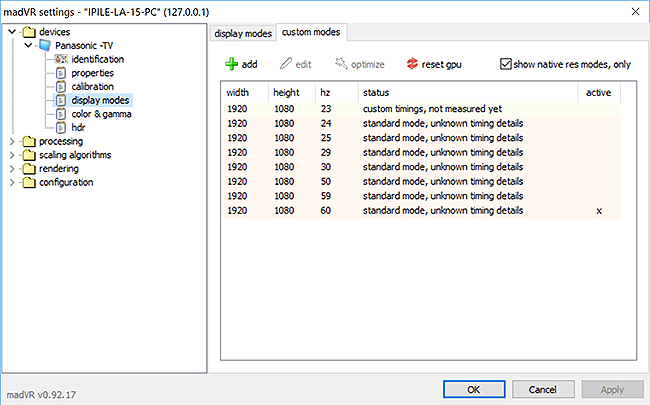

Display modes

We already covered this tab last time. In the “list all display modes…” field, enter the modes supported by the display and choose when to switch its refresh rate: at the start of playback or when going fullscreen.

We skip the option to treat 25p video as 24p (using this option requires additional software to be installed).

- Processing

Decoding (video decoding)

We assume that all video decoding questions have been resolved by this point, so we skip the “decoding” tab, where decoding via ffmpeg/libav can be enabled separately for MPEG2, VC1 and h264.

Many free media players, MPC included, have switched to the LAV decoder. Today this seems justified and sensible.

If the computer's CPU struggles with performance, then, provided the video adapter is capable, hardware video decoding on the GPU can be enabled in the LAV decoder settings to offload it.

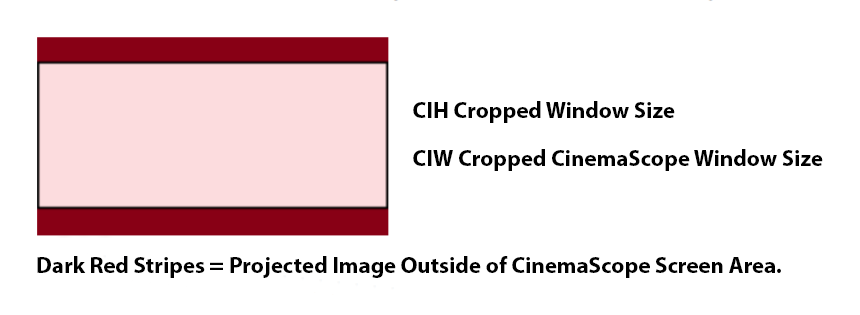

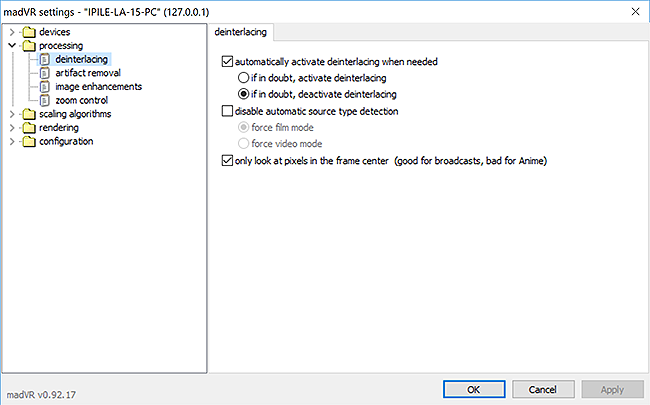

Deinterlacing

The very first article in this series already devoted considerable attention to interlaced video.

The wording of the options under “automatically activate deinterlacing when needed” may seem somewhat unexpected: “if in doubt, activate deinterlacing” and “if in doubt, deactivate deinterlacing”. What doubt or uncertainty could there be?

The point is that decoders do not always identify the video type correctly, and LAV is no exception. For example, with the settings shown here, a problem unexpectedly arose with deinterlacing of ordinary PAL DV video.

It is hard to say what causes this, just as it is impossible to give a single recommendation for every type of interlaced video. In general, the following approach can be recommended: enable deinterlacing, software or hardware, in the codec settings, and set up a “safety net” in the madVR renderer, as shown in the figure.

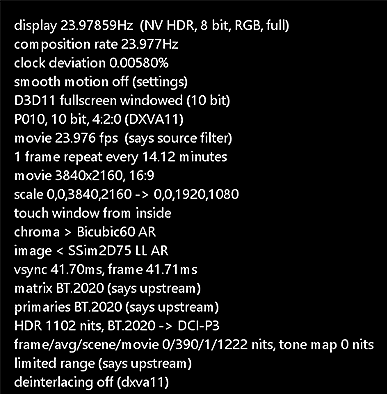

Whether deinterlacing is active in madVR can be seen in the information displayed with “Ctrl+J”, and the interlaced video processing modes can be switched on the fly with “Ctrl+Alt+Shift+T”.

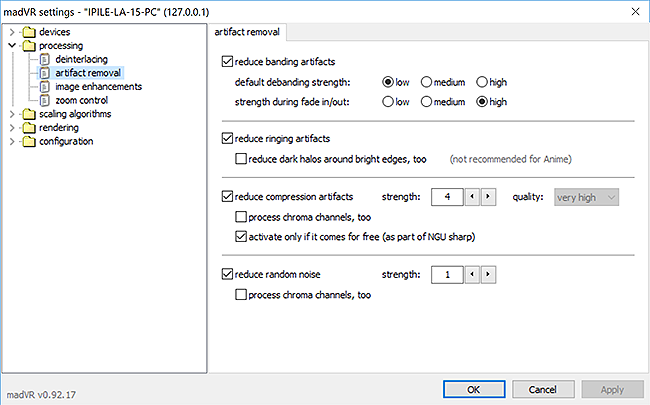

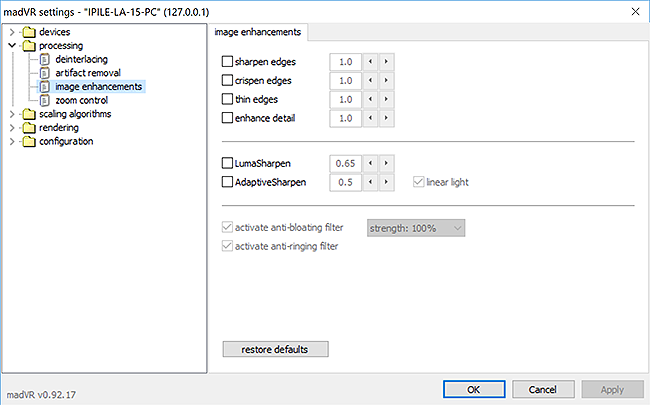

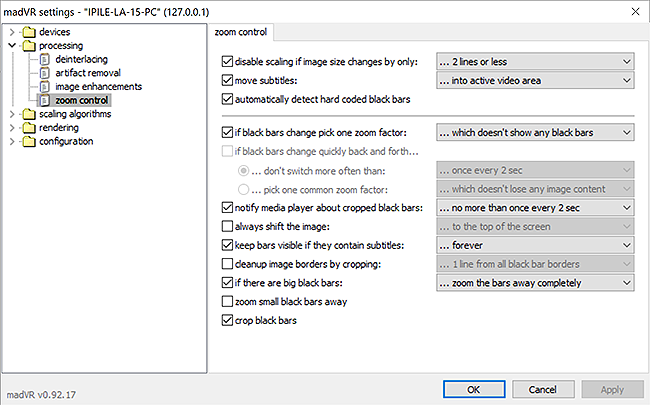

Artifact removal

This concerns an unpleasant artifact known as banding, which the debanding option is meant to reduce. It shows up as stepped gradients on uniform surfaces. If the video card is fast enough, it definitely makes sense to enable this option.

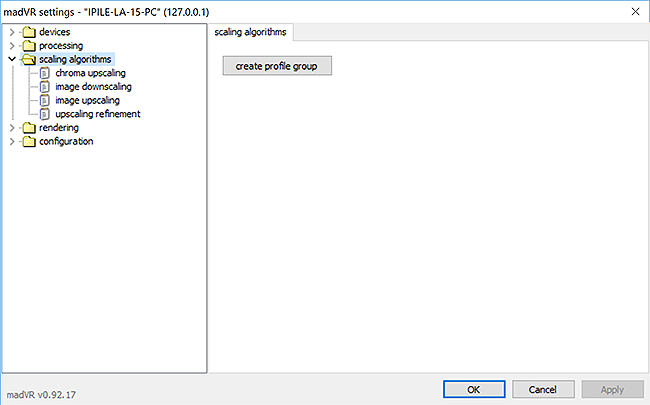

- Scaling algorithms

The very high quality of image scaling in madVR is one of the main reasons to use it.

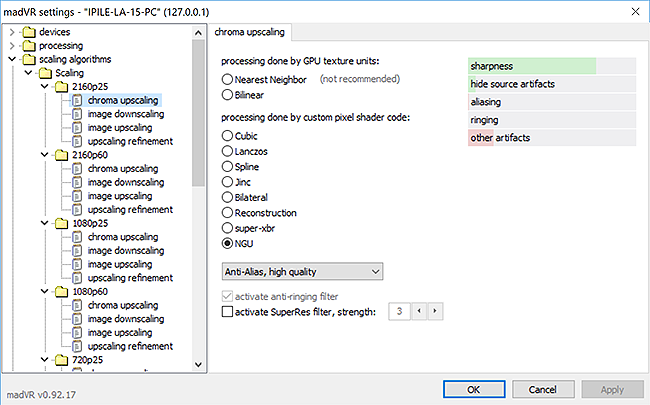

Chroma upscaling

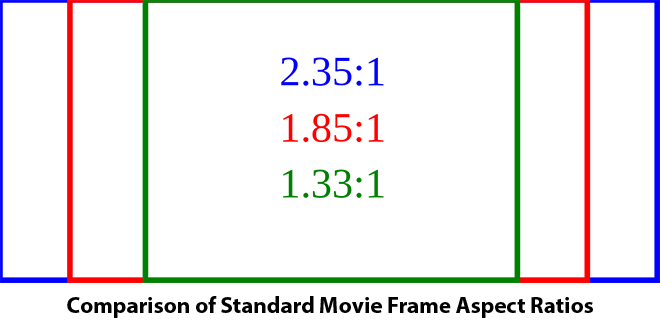

Most digital video is encoded with 4:2:0 chroma subsampling. This means that the black-and-white image (luma) is stored at full resolution, while the color image (chroma) has half the resolution both horizontally and vertically. In this representation, four neighboring pixels (a 2x2 square) share the same color.

Compared with the unsubsampled representation (4:4:4), 4:2:0 reduces the bitrate and, accordingly, the final size of the video file by roughly a factor of two.

Some video formats use 4:1:1 chroma subsampling, which also halves the bitrate, but there four pixels in a horizontal row share the same color.
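The factor of two can be checked with a little arithmetic on uncompressed frames. The sketch below is only an illustration: the function names are hypothetical, and it counts raw samples, so the saving in a compressed file will only be approximately the same.

```python
from fractions import Fraction

# Fraction of full resolution kept by each of the two chroma planes.
CHROMA_FRACTION = {
    "4:4:4": Fraction(1, 1),   # chroma at full resolution
    "4:2:0": Fraction(1, 4),   # halved horizontally and vertically
    "4:1:1": Fraction(1, 4),   # quartered horizontally only
}

def frame_bytes(width, height, scheme, bits=8):
    """Uncompressed size of one frame in bytes."""
    # One luma sample per pixel plus two (possibly reduced) chroma planes.
    samples_per_pixel = 1 + 2 * CHROMA_FRACTION[scheme]
    return int(width * height * samples_per_pixel * bits / 8)

def ratio_to_444(scheme):
    """Data volume relative to 4:4:4."""
    return float((1 + 2 * CHROMA_FRACTION[scheme]) / 3)

for scheme in CHROMA_FRACTION:
    print(scheme, frame_bytes(1920, 1080, scheme), ratio_to_444(scheme))
```

Both 4:2:0 and 4:1:1 store 1.5 samples per pixel instead of 3, which is where the halving comes from.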

This is possible because the detail of the black-and-white image largely masks the low resolution of the color image. Nevertheless, object edges end up with uneven, stepped coloring.

This brings to mind the once-legendary ZX Spectrum home computer. Older readers may remember it. Its picture had a graphics resolution of 256x192 pixels, but color attributes were set per 8x8-pixel character cell. Thanks to this design, video memory took up only about 7 KB. Of course, this made drawing color images somewhat tricky, and the results sometimes looked rather amusing.

For the reason noted above, madVR always upscales the color image (Chroma upscaling) to the resolution of the video. This noticeably improves perceived quality.

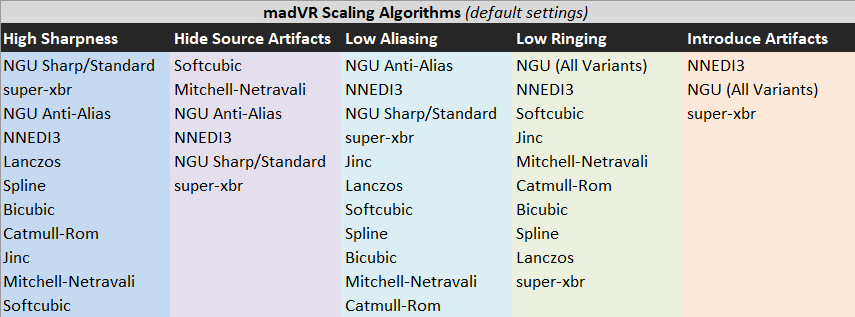

The “Scaling algorithms” section has a user-friendly interface. The available scaling algorithms are listed on the left. As a general rule, quality increases from top to bottom of the list. Naturally, so do the demands on the computer's performance.

Thus the most primitive, but also the fastest and lightest, algorithm, “Nearest Neighbor”, sits at the very top.

At the bottom you can see NNEDI3, an OpenCL chroma scaling algorithm added to madVR not long ago and currently one of the best. However, only owners of fairly powerful video cards will be able to afford it.

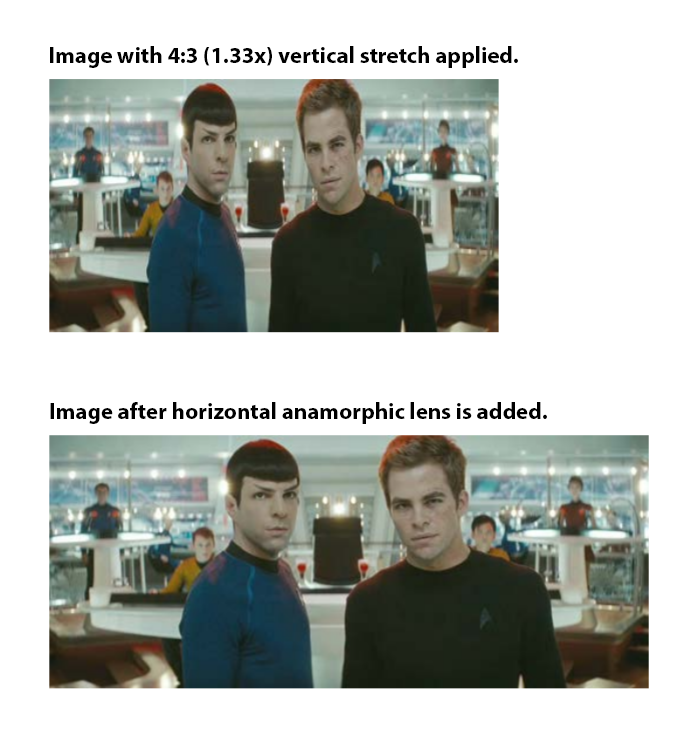

In my configuration with an aging NVIDIA GeForce GTS 450, and likewise with its classmate the AMD Radeon HD 5770, selecting NNEDI3 instantly drives GPU load close to 100%, with all the consequences that follow in the form of stuttering video.

This is exactly the case where the best becomes the enemy of the good. If the computer cannot play the video smoothly, any talk of scaling quality is pointless. Smooth playback takes absolute priority.

In the end I settled on the Jinc method, but to reduce GPU load I had to disable hardware decoding in the LAV settings (roughly –10% for 1080p50/60 and, correspondingly, a +10…15% increase in CPU load).

There are no ideal scaling algorithms. Each has its strengths and weaknesses.

To make the choice easier, the upper right part of the window shows their main characteristics as colored bars. The larger the area of green bars the better; the more red, the worse.

The positive (green) characteristics are:

Sharpness. This needs no comment.

Hide source artifacts: the algorithm's ability to hide defects (artifacts) in the source picture.

The negative (red) characteristics are:

Aliasing: a broad class of unwanted visual effects (artifacts) that in computer graphics show up, in the simplest case, as jagged, uneven, stair-stepped lines that are not parallel to the edges of the screen.

In a broader sense, aliasing means an incorrect or distorted representation of a real object by its digital model, caused by an insufficient sampling rate. In our case the sampling rate is determined by the image resolution and the video frame rate.

Classic examples of this kind of aliasing are the odd patterns that appear in place of horizontal window blinds, or video footage of a fast-spinning aircraft propeller.
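The propeller effect can be put into numbers. Here is a minimal sketch, assuming an idealized periodic pattern sampled at a fixed frame rate; the function name is hypothetical and the model ignores shutter time and motion blur.

```python
def apparent_rate(true_rate_hz, sample_rate_hz):
    """Rate (in Hz) that a periodic motion appears to have after sampling.

    Frequencies fold into the range [-fs/2, fs/2]; a negative result
    means the motion appears to run backwards.
    """
    folds = round(true_rate_hz / sample_rate_hz)
    return true_rate_hz - folds * sample_rate_hz

# A pattern repeating 25 times per second, filmed at 24 fps,
# appears to creep forward once per second.
print(apparent_rate(25, 24))
# At 23 repetitions per second it appears to creep backwards.
print(apparent_rate(23, 24))
```

Whenever the true rate exceeds half the frame rate, the camera records a slower and possibly reversed motion, which is the distortion described above.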

Ringing: a transient in the form of decaying oscillations. It takes its name from the sound of a bell after it is struck. In digital image processing, ringing produces artifacts that appear as spurious signals near sharp transitions. Visually they show up as bands or “ghosts” near object edges.
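Ringing is easy to reproduce in one dimension. The sketch below upscales a sharp edge by a factor of two with a 3-lobe Lanczos kernel; it is a textbook formulation written for illustration, not madVR's implementation, and the helper names are hypothetical.

```python
import math

def lanczos(x, a=3):
    """Lanczos kernel with `a` lobes: sinc(x) * sinc(x / a)."""
    if x == 0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)

def resample(samples, position, a=3):
    """Interpolate `samples` at a fractional position, clamping at the borders."""
    base = math.floor(position)
    total = weight_sum = 0.0
    for i in range(base - a + 1, base + a + 1):
        w = lanczos(position - i, a)
        clamped = min(max(i, 0), len(samples) - 1)
        total += w * samples[clamped]
        weight_sum += w
    return total / weight_sum   # normalize so flat areas stay flat

step = [0.0] * 8 + [1.0] * 8            # a sharp dark-to-bright edge
upscaled = [resample(step, i / 2) for i in range(2 * len(step) - 1)]

print("brightest value:", max(upscaled))  # above 1.0: overshoot
print("darkest value:", min(upscaled))    # below 0.0: undershoot
```

The source contains only the values 0 and 1, yet the result swings past both next to the edge. Those swings are the bands that anti-ringing filters try to suppress.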

Other artifacts: any other distortions.

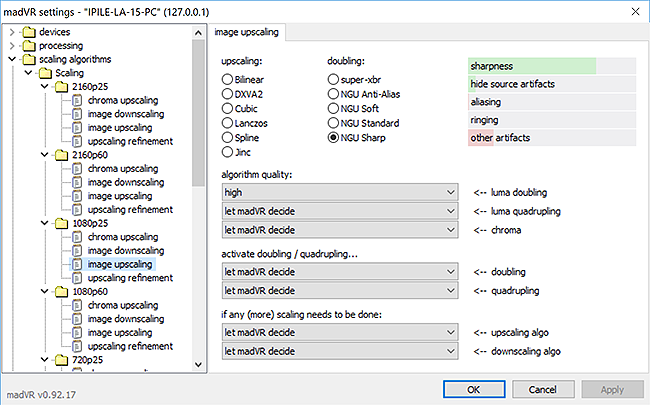

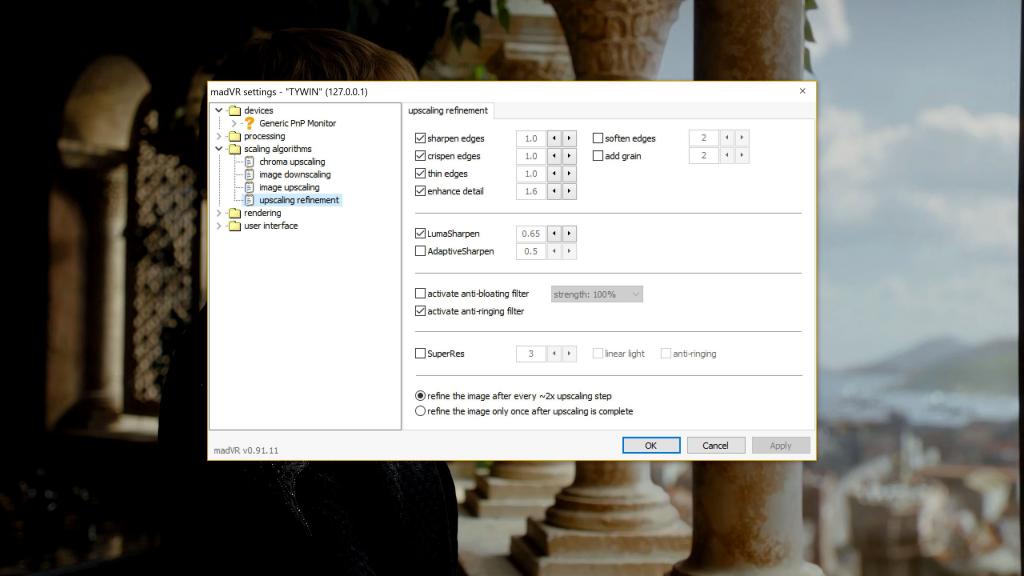

Image upscaling

To choose the right scaling algorithm for your hardware, you will need the video files in different formats and the TechPowerUp GPU-Z utility mentioned above.

We will select by the quality/performance criterion.

For mid-range video cards it makes sense to start right away with the “Bicubic” method. By many accounts it gives perfectly acceptable quality in most cases when increasing resolution (Image upscaling).

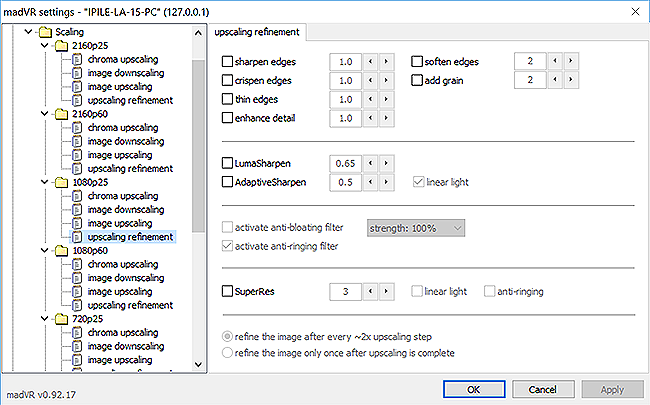

Check the GPU load while playing the selected video files. If it is low, try other options further down the list or enable additional options. For example, activate the anti-ringing filter, as shown in the illustration.

Keep in mind that when 1920x1080 video is played on a display of the same resolution, Image upscaling is not used. That is why, as I said above, a single “worst-case” 1080p60 file is not enough for tuning madVR.

Image downscaling

Proceed as for the two previous settings, but it is better to start right away with the “Lanczos” method, which copes better with Image downscaling, that is, scaling the image down.

For the filter to be active, the resolution of the window or monitor must be lower than the resolution of the video. If the display is 1920x1080, Image downscaling can only be triggered in the player's windowed mode. Remember this while tuning.

All in all, tuning madVR for the highest possible image processing quality while keeping the performance needed for smooth playback is quite an engaging task.

- Rendering

Some madVR settings in the Rendering section can have a noticeable effect on performance.

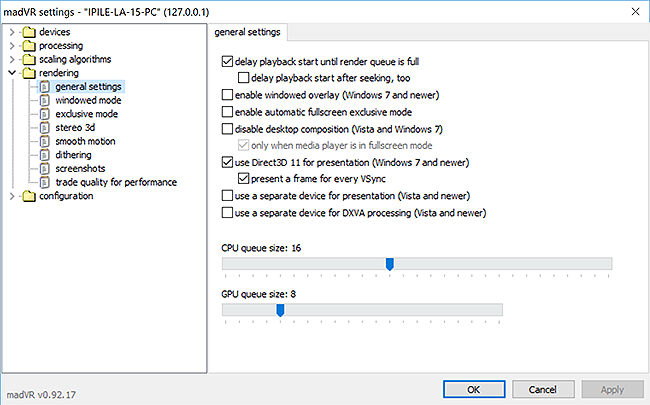

General settings

Without going into detail, enabling the “windowed overlay” and “automatic fullscreen exclusive mode” options should in principle improve performance. Try them. Personally I found nothing useful in these modes. In addition, fullscreen exclusive mode prevents windows of any other applications from being shown on screen, and “Print Screen” will not work either.

The General settings section has a setting for the length of the processing queues on the CPU (decoder queue) and GPU (upload/render queue). If playback is not smooth, increasing the queues should in theory help. Keep in mind, however, that the longer the queues, the more memory madVR needs.

Available video memory can be monitored with the TechPowerUp GPU-Z utility.

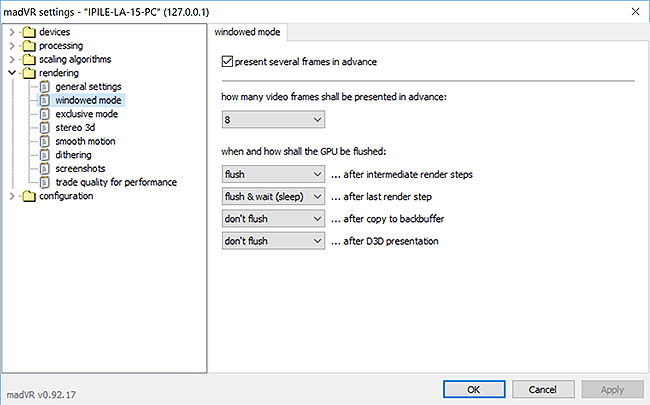

Windowed mode settings

As the name suggests, these parameters apply when madVR runs in windowed mode. With fullscreen exclusive mode disabled, these settings are used both in a window and when switching to fullscreen.

This setting determines the number of buffered frames and can potentially affect playback smoothness. By default, 3 frames are preloaded into memory. For ordinary playback this is quite enough. However, if you plan to enable Smooth motion, it makes sense to increase the buffer, for example to 8 frames.

Remember that this will use more memory.

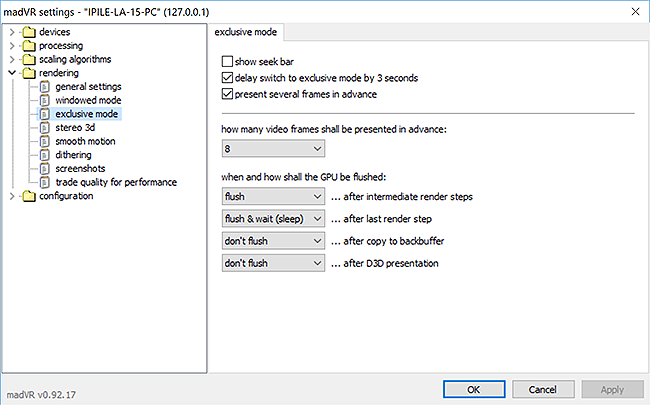

Exclusive mode settings

Same as Windowed mode settings.

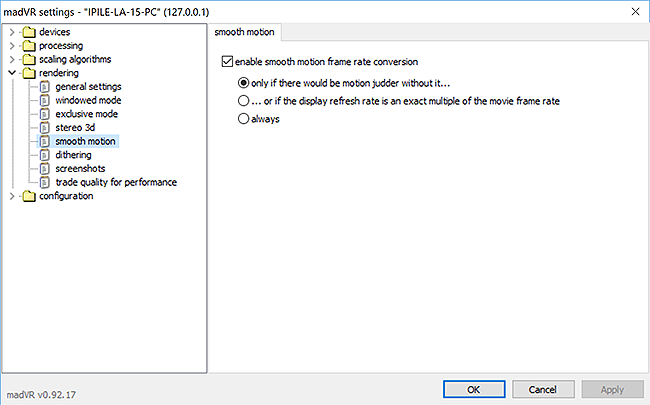

Smooth motion

At last we come to the main purpose of today's article: the Smooth motion option.

Smooth motion in madVR is not quite what we discussed at the beginning of the article. It is not an unconditional frame interpolation system of the kind used in TVs or in SVP, which will be covered in the next part.

In madVR, Smooth motion is intended to provide smooth playback when the frame rate of the source video does not match any of the monitor's refresh rates.

Because frame blending is used to reconcile the rates, a halo can sometimes be seen around moving objects. This happens fairly rarely, though, and certainly does not outweigh the value of smoother playback.

If you set things up as shown in the illustration, with “enable smooth motion frame rate conversion only if there would be motion judder without it”, the function will be activated only when it is really needed. For example, when playing 23/24/25/50 fps video on a 60 Hz monitor. For the NTSC rates of 30/60 frames per second, smooth motion will remain inactive in this case.

If your display can refresh at 50 Hz, be sure to use that capability. In that case smooth motion will kick in only for 23/24 fps films.
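The rule of thumb above (judder appears when the refresh rate is not a whole multiple of the frame rate) and the idea of frame blending can be sketched in a few lines. This is a simplified model written for illustration, not madVR's actual algorithm: the function names and the 0.01 tolerance are assumptions, and `blend_weights` expects integer rates.

```python
from fractions import Fraction

def has_judder(fps, refresh_hz, tolerance=0.01):
    """True when the refresh rate is not (close to) a whole multiple of fps."""
    ratio = refresh_hz / fps
    return abs(ratio - round(ratio)) > tolerance

def blend_weights(fps, refresh_hz, refreshes):
    """For each display refresh, the share of each source frame it overlaps.

    Both rates must be integers. Returns one dict per refresh,
    mapping source frame index to its blending weight.
    """
    out = []
    for n in range(refreshes):
        start = Fraction(n, refresh_hz)
        end = Fraction(n + 1, refresh_hz)
        frame = int(start * fps)            # source frame visible at `start`
        weights = {}
        while Fraction(frame, fps) < end:
            lo = max(start, Fraction(frame, fps))
            hi = min(end, Fraction(frame + 1, fps))
            weights[frame] = (hi - lo) * refresh_hz
            frame += 1
        out.append(weights)
    return out

for fps in (23.976, 24, 25, 29.97, 30, 50, 60):
    print(fps, "fps on 60 Hz, judder:", has_judder(fps, 60))

for n, w in enumerate(blend_weights(24, 60, 5)):
    print("refresh", n, {k: float(v) for k, v in w.items()})
```

For 24 fps on a 60 Hz display, every fifth refresh falls across two source frames and would show an equal mix of both. Such mixed refreshes are where the occasional halo around moving objects comes from.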

In general this setup can be considered optimal.

I can say that films with the most common frame rate of 23.976 fps look quite good on a 60 Hz monitor with madVR's smooth motion enabled.

Another plus of madVR's smooth motion implementation is its low resource usage. The rule of thumb is: if you managed to set up and run madVR at all, smooth motion will work too.

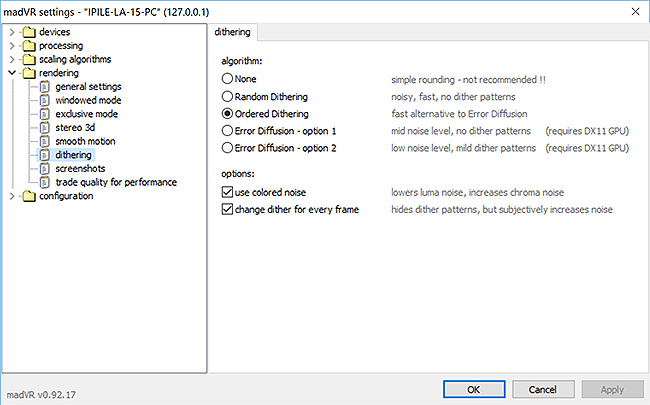

Dithering

The choice of dithering method in this section can have a decisive effect on performance. Recent versions of madVR include an implementation of a very high-quality dithering algorithm known as Error Diffusion. Unfortunately, like NNEDI3, it is exactly the kind of thing that can instantly bring your video card to its knees. Incidentally, Error Diffusion requires DX11 support from the GPU.

As the comments on the listed algorithms indicate, Ordered Dithering is the fast alternative to Error Diffusion.

Enabling the additional options “use colored noise” and “change dither for every frame” has no noticeable effect on GPU load. Their effect on the picture, however, is not clear-cut. Choose whichever you prefer, or leave them at their initial settings.

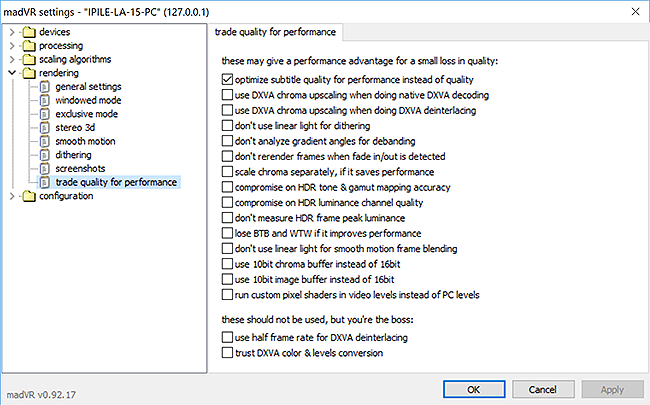

Trade quality for performance

If performance problems still remain, you can try to solve them at the cost of a slight loss in image processing quality.

Tick the listed options one at a time from top to bottom until playback is as smooth as needed.

- User interface

Keyboard shortcuts

Here you can view the keyboard shortcuts madVR uses for quick access to some settings.

I wish everyone enjoyable movie watching, for example in MPC-HC, with the madVR renderer.

Next time we will look at changing the frame rate of the source video with the SmoothVideo Project (SVP) software. Don't miss the most interesting part.

Source

Posts: 3,823

Joined: Feb 2014

Reputation:

220

2016-02-08, 05:37

(This post was last modified: 2019-08-04, 21:15 by Warner306.)

madVR Set up Guide (for Kodi DSPlayer and Media Player Classic)

madVR v0.92.17

LAV Filters 0.74

Last Updated: Aug 04, 2019

Please inform me of any dead links as there are countless external links spread throughout this guide. It is also helpful to point out any typos you find or technical information that appears to be misstated or incorrect. It is always an option to use a description from a better and more reliable source.

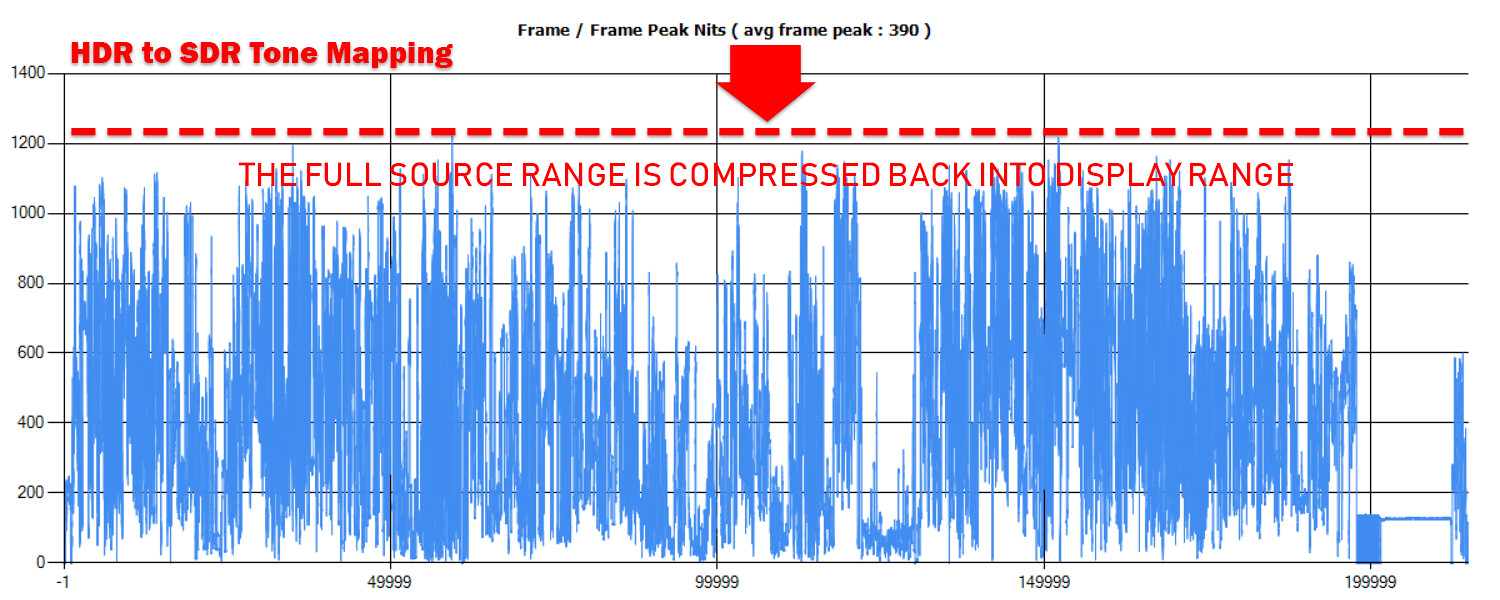

Follow current madVR development at AVS Forum: Thread: Improving HDR -> SDR Tone Mapping for Projectors

New to Kodi? Try this Quick Start Guide.

What Is madVR?

How to Configure LAV Filters

This guide is an additional resource for those using Kodi DSPlayer or MPC. Set up for madVR is a lengthy topic and its configuration will remain fairly consistent regardless of the chosen media player.

Table of Contents:

- Devices;

- Processing;

- Scaling Algorithms;

- Rendering;

- Measuring Performance & Troubleshooting;

- Sample Settings Profiles & Profile Rules;

- Other Resources.

…………..

Devices

Identification, Properties, Calibration, Display Modes, Color & Gamma, HDR and Screen Config.

Processing

Deinterlacing, Artifact Removal, Image Enhancements and Zoom Control.

Scaling Algorithms

Chroma Upscaling, Image Downscaling, Image Upscaling and Upscaling Refinement.

Rendering

General Settings, Windowed Mode Settings, Exclusive Mode Settings, Stereo 3D, Smooth Motion, Dithering and Trade Quality for Performance.

…………..

Credit goes to Asmodian’s madVR Options Explained, JRiver Media Center MADVR Expert Guide and madshi for most technical descriptions.

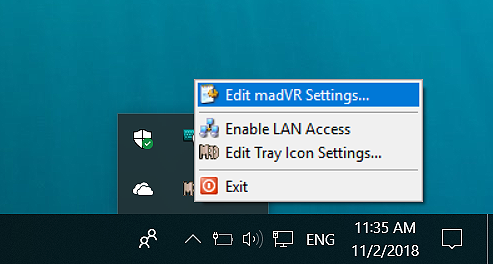

To access the control panel, open madHcCtrl in the installation folder:

Double-click the tray icon or select Edit madVR Settings…

During Video Playback:

Ctrl + S opens the control panel. I suggest mapping this shortcut to your media remote.

…………..

Resource Use of Each Setting

madVR can be very demanding on most graphics cards. Accordingly, each setting is ranked based on the amount of processing resources consumed: Minimum, Low, Medium, High and Maximum. Users of integrated graphics cards should not combine too many features labelled Medium and will be unable to use features labelled High or Maximum without performance problems.

This performance scale only relates to processing features requiring use of the GPU.

…………..

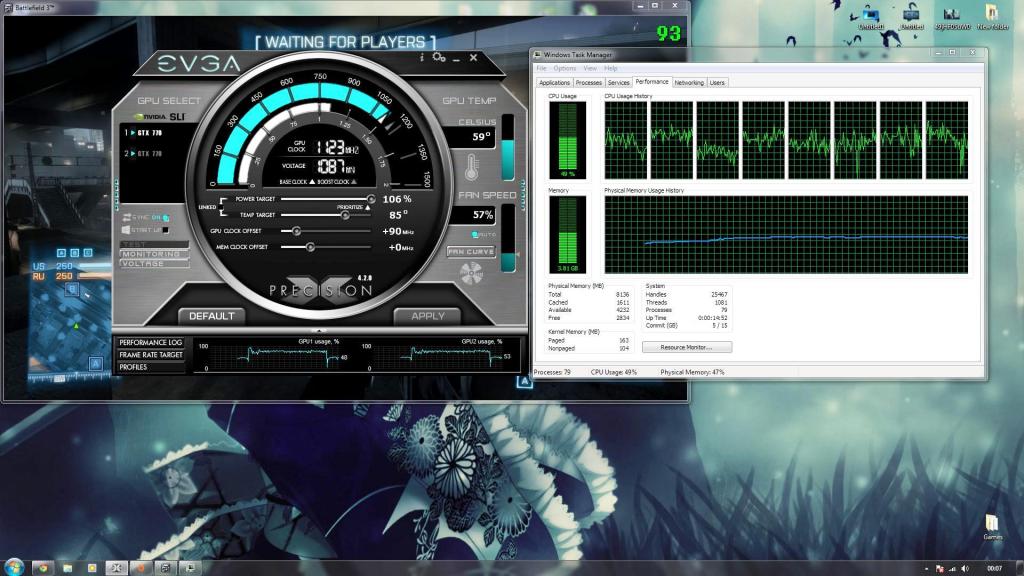

GPU Overclocking

Overclocking the GPU with a utility such as MSI Afterburner can improve the performance of madVR. Increasing the memory clock speed alone is a simple adjustment that is often beneficial in lowering rendering times. Most overclocking utilities also offer the ability to create custom fan curves to reduce fan noise.

…………..

Video Drivers

Most issues with madVR can be traced to changes in video drivers (e.g., broken HDR passthrough, playback stutter, 10-bit output support, color tints, etc.). Those using an HTPC only for video playback do not require frequent driver upgrades or updates. The majority of basic features such as HDR passthrough will work for many years with older drivers, and frequent driver releases are solely intended to improve video game performance, not video playback performance. As such, users of HTPCs and madVR are advised to find a stable video driver that serves their needs and stick with it. It is easy to disable the automatic driver updates that coincide with Windows updates, and Intel, AMD and Nvidia all provide download links to installers for legacy drivers that can be kept in case the video drivers need to be reinstalled.

…………..

Image gallery of madVR image processing settings

…………..

Summary of the rendering process:

Source


2016-02-08, 05:50

(This post was last modified: 2019-11-30, 14:36 by Warner306.)

1. DEVICES

- Identification

- Properties

- Calibration

- Display Modes

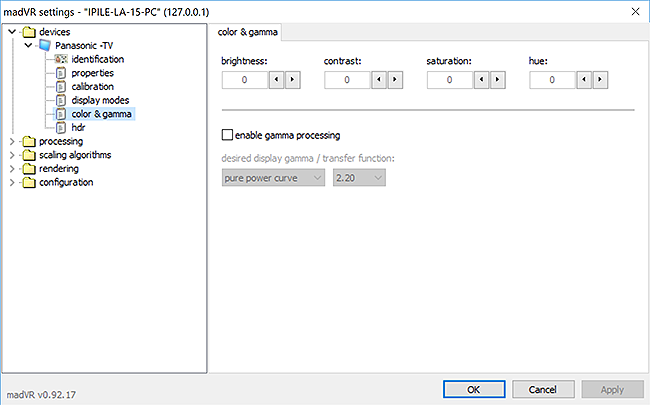

- Color & Gamma

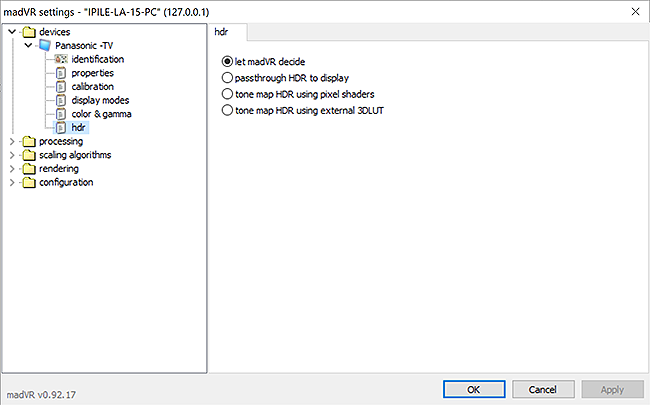

- HDR

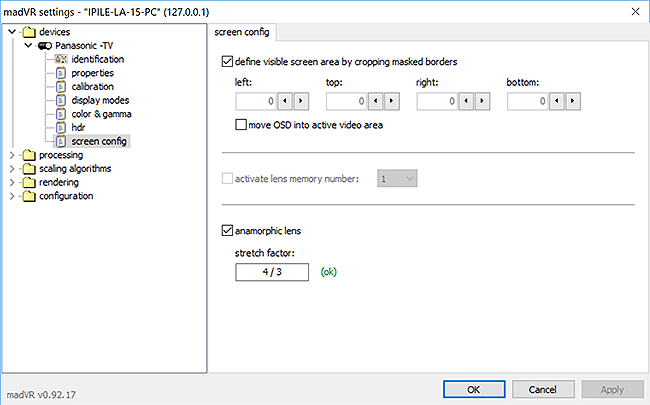

- Screen Config

Devices contains settings necessary to describe the capabilities of your display, including: color space, bit depth, 3D support, calibration, display modes, HDR support and screen type.

device name

Customizable device name. The default name is taken from the device’s EDID (Extended Display Identification Data).

device type

The device type is only important when using a Digital Projector or a Receiver, Processor or Switch. If Digital Projector is selected, a new screen config section becomes available under devices.

Identification

The identification tab displays a summary of the EDID (Extended Display Identification Data) that identifies any connected display devices and outlines their playback capabilities.

Before continuing on, it can be helpful to have a refresher on basic video terminology. These two sections are optional references:

Common Video Source Specifications & Definitions

Reading & Understanding Display Calibration Charts

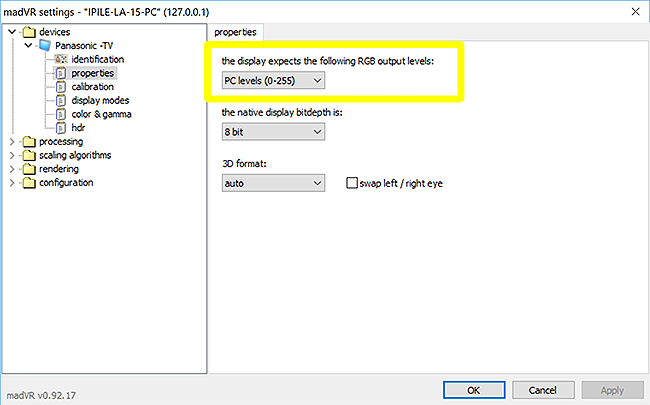

Properties – RGB Output Levels

Step one is to configure video output levels, so black and white are shown correctly.

What Are Video Levels?

PC and consumer video use different video levels. At 8 bits, video levels are either full range RGB 0-255 (PC) or limited range RGB 16-235 (Video). Reference black sits at 0 (PC) or 16 (Video) and reference white at 255 (PC) or 235 (Video), but the same content looks identical in either range when it is displayed correctly. The ideal output path maintains the same video levels from the media player to the display without any unwanted video levels or color space conversions. What the display does with this input is another matter…as long as black and white are the same as when they left the media player, you can’t ask for much more.

Note: The RGB Output levels checkboxes in LAV Video will not impact these conversions.

Option 1:

If you just connect an HDMI cable from PC to TV, chances are you’ll end up with a signal path like this:

(madVR) PC levels (0-255) -> (GPU) Limited Range RGB 16-235 -> (Display) Output as RGB 16-235

madVR expands the 16-235 source to full range RGB and it is converted back to 16-235 by the graphics card. Expanding the source prevents the GPU from clipping the levels when outputting 16-235. Both videos and the desktop will look accurate. However, it is possible to introduce banding if the GPU fails to use dithering when compressing 0-255 to 16-235. The range is converted twice: by madVR and the GPU.

This option isn’t recommended because of the range compression by the GPU and should only be used if no other suitable option is possible.

If your graphics card doesn’t allow for a full range setting (like many Intel iGPUs or older Nvidia cards), then this may be your only choice. If so, it may be worth running madLevelsTweaker.exe in the madVR installation folder to see if you can force full range output from the GPU.

Option 2:

If your PC is a dedicated HTPC, you might consider this approach:

(madVR) TV levels (16-235) -> (media front-end) Use limited color range (16-235) -> (GPU) Full Range RGB 0-255 -> (Display) Output as RGB 16-235

In this configuration, the signal remains 16-235 all the way to the display. A GPU set to 0-255 will passthrough all output from the media player without clipping the levels. If a media front-end is used, it should also be configured to use 16-235 to match the media player.

When set to 16-235, madVR does not clip Blacker-than-Black (0-15) and Whiter-than-White (236-255) if the source video includes these values. Black and white clipping patterns should be used to adjust brightness and contrast until 16-235 are the only visible bars.

This can be the best option for GPUs that output full range to a display that only accepts limited range RGB. Banding should not occur as madVR handles the only conversion (YCbCr -> RGB) and the GPU is bypassed. However, the desktop and other applications will output incorrect levels. PC applications render black at 0,0,0, while the display expects 16,16,16. The result is crushed blacks. This sacrifice improves the quality of the video player at the expense of all other computing.

Option 3:

A final option involves setting all sources to full range — identical to a traditional PC and computer monitor:

(madVR) PC levels (0-255) -> (GPU) Full Range RGB 0-255 -> (Display) Output as RGB 0-255

madVR expands 16-235 to 0-255 and it is presented in full range by the display. The display’s HDMI black level must be toggled to display full range RGB (Set to High or Normal (0-255) vs. Low (16-235)).

When expanding 16-235 to 0-255, madVR clips both 0-15 and 236-255, as reference black, 16, is mapped to 0, and reference white, 235, is mapped to 255. Clipping both BtB and WtW is acceptable as long as a correct grayscale is maintained. The use of black and white clipping patterns can confirm video levels (16-235) are displayed accurately.
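The range conversions discussed in the three options can be sketched with plain 8-bit arithmetic. This is an illustration only: the function names are hypothetical, and madVR works at a much higher internal precision and dithers its output, so it does not round to 8-bit integers like this.

```python
def expand_to_full_range(value):
    """Map an 8-bit limited-range (16-235) level to full range (0-255)."""
    scaled = (value - 16) * 255 / 219
    # Blacker-than-black and whiter-than-white land outside 0-255 and clip.
    return min(255, max(0, round(scaled)))

def compress_to_limited_range(value):
    """Map an 8-bit full-range (0-255) level to limited range (16-235)."""
    return round(16 + value * 219 / 255)

print(expand_to_full_range(16), expand_to_full_range(235))   # reference black / white
print(expand_to_full_range(4), expand_to_full_range(250))    # BtB / WtW are clipped

# Compressing without dithering squeezes 256 input levels into 220 output
# levels, so some neighbouring shades merge. This is the banding risk of Option 1.
distinct = len({compress_to_limited_range(v) for v in range(256)})
print(distinct)
```

Reference black and white map exactly onto 0 and 255, while anything below 16 or above 235 is lost. This is why clipping patterns are useful for checking the result.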

This is usually the optimal setting for those with displays and GPUs supporting full range output (the majority of users). Both videos and the desktop will look correct and banding is unlikely as madVR handles the only required conversion. A PC must already convert from a video color space (YCbCr) to a PC color space (RGB), so the conversion of 16-235 to 0-255 is simply done with a YCbCr -> RGB conversion matrix that converts directly from limited range YCbCr to full range RGB. No additional scaling step is necessary.
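To illustrate how the range expansion can be folded into the color conversion itself, here is a minimal sketch for 8-bit input. It assumes the BT.709 coefficients used for HD video (SD content typically uses BT.601), and the function name is hypothetical. The final rounding to 8-bit integers is only there to make the output readable; it is not how madVR finishes its processing.

```python
KR, KB = 0.2126, 0.0722          # BT.709 luma coefficients (assumed: HD source)
KG = 1.0 - KR - KB

def ycbcr_limited_to_rgb_full(y, cb, cr):
    """Convert 8-bit limited-range YCbCr to full-range RGB in one step."""
    yn = (y - 16) / 219            # luma: 16..235 -> 0..1
    pb = (cb - 128) / 224          # chroma: 16..240 -> -0.5..0.5
    pr = (cr - 128) / 224
    r = yn + 2 * (1 - KR) * pr
    b = yn + 2 * (1 - KB) * pb
    g = (yn - KR * r - KB * b) / KG
    # Clip anything outside the full range and round for display.
    return tuple(min(255, max(0, round(c * 255))) for c in (r, g, b))

print(ycbcr_limited_to_rgb_full(16, 128, 128))    # reference black
print(ycbcr_limited_to_rgb_full(235, 128, 128))   # reference white
print(ycbcr_limited_to_rgb_full(126, 128, 128))   # mid grey
```

The normalization of Y, Cb and Cr already maps 16-235 onto 0-1, so no separate scaling pass is needed after the matrix.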

Recommended Use (RGB output levels):

Banding is prevented when the GPU passes all sources through untouched, which happens when it is set to RGB 0-255. Both Option 2 and Option 3 configure the GPU to 0-255. Option 3 should be considered the default option because it maintains correct output levels for all PC applications, while Option 2 only benefits video playback.

To confirm accurate video levels, it is a good idea to use some test patterns. This may require some adjustment to the display’s brightness and contrast controls to eliminate any black crush or white clipping. For testing, start with these AVS Forum Black and White Clipping Patterns (under Basic Settings) to confirm the display of 16-25 and 230-235, and move on to these videos that can be used to fine-tune «black 16» and «white 235.»

Discussion from madshi on RGB vs. YCbCr

How to Configure a Display and GPU for a HTPC

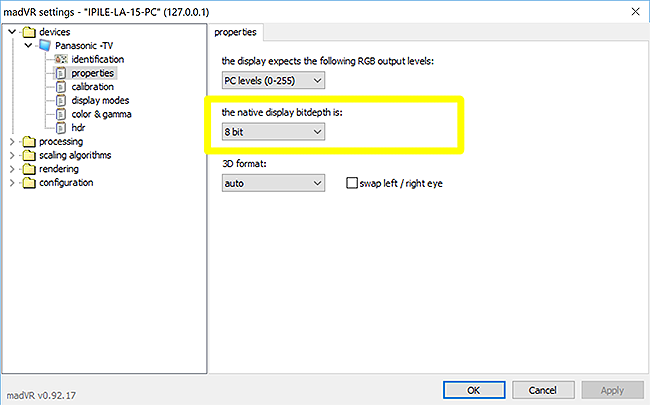

Properties – Native Display Bit Depth

The native display bit depth is the value output from madVR to the GPU. Internal math in madVR is calculated at 32-bits and the final result is dithered to the output bit depth selected here.

What Is a Bit Depth?

Every display panel is manufactured to a specific bit depth. Most displays are either 8-bit or 10-bit. Nearly all 1080p displays are 8-bit and nearly all UHD displays are 10-bit. This doesn’t necessarily mean the display panel is native 8-bit or 10-bit, but that it is capable of displaying detail in gradients up to that bit depth. For example, many current UHD displays are advertised as 10-bit panels, but are actually 8-bit panels that can quickly flash two adjacent colors together to create the illusion of a 10-bit color value (known as Frame Rate Control or FRC temporal dithering — typical of many VA 120 Hz LED TVs). The odd high-end, 1080p computer monitor, TV or projector can also display 10-bit color values, either natively or via FRC. So the display either represents color detail at 8-bits or 10-bits and converts all sources to match this native bit depth.
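The principle behind FRC can be shown with a toy model: a 10-bit level that falls between two 8-bit levels is approximated by alternating the two neighbours over successive refreshes. The function below is a hypothetical sketch; real panels use their own spatial and temporal patterns.

```python
def frc_sequence(value_10bit, frames=4):
    """8-bit levels to flash over `frames` refreshes so that their average
    approximates a 10-bit level (four 10-bit steps per 8-bit step)."""
    base, remainder = divmod(value_10bit, 4)
    # Show the next-higher 8-bit level on `remainder` out of every 4 frames.
    return [min(255, base + 1) if (i % 4) < remainder else base
            for i in range(frames)]

seq = frc_sequence(514)            # 10-bit 514 sits between 8-bit 128 and 129
print(seq, sum(seq) / len(seq))    # average of the four flashed levels
```

Averaged over four refreshes, the eye sees a level halfway between 128 and 129, which an 8-bit panel cannot show in a single frame.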

If you want to determine if your display can natively represent a 10-bit gradient, try using this test protocol along with this gradient test image and these videos. Omit the instructions to use fullscreen exclusive mode for the test if using Windows 10.

10-bit output requires the following is checked in general settings:

- use Direct3D 11 for presentation (Windows 7 and newer)

Other required options:

- Windows 7/8: enable automatic fullscreen exclusive mode;

- Windows 10: 10-bit output is possible in both windowed mode and fullscreen exclusive mode.

If there are no settings conflicts, the output bit depth should be set to match the display’s native bit depth (either 8-bit or 10-bit). Feeding a 10-bit or 12-bit input to an 8-bit display without FRC temporal dithering will lead to one of two outcomes: low-quality dithering noise or color banding. If unsure, test both 8-bit and 10-bit output with the gradient tests linked above, with and without dithering enabled, to determine whether both look the same or one is superior.

Some factors that may force you to choose 8-bit output:

- You are unable to find any official specs for the display’s native bit depth;

- The best option for 4K UHD 60 Hz output is 8-bit RGB due to the bandwidth limitations of HDMI 2.0;

- You have created a custom resolution in madVR that has forced 8-bit output;

- Display mode switching to 12-bits at 23-24 Hz is not working correctly with certain Nvidia video drivers;

- The display has poor processing and creates banding with a 10/12-bit input even though it is a native 10-bit panel.

So is it a good idea to output a 10-bit source at 8-bits?

The answer to this depends on an understanding of madVR’s processing.

A bit depth represents a fixed scale of visible luminance steps. High bit depths are used in image processing to create sources free of banding without having to manipulate the source steps. This ensures content survives the capture, mastering and compression processes without introducing any color banding into the SOURCE VIDEO.

madVR takes the 10-bit YCbCr source values and converts them to 32-bit floating point RGB data. These additional bits are not invented but available to assist in rounding from one color space to another. This high bit depth is maintained until the final processing result, which is dithered in the highest-quality possible. So the end result is a 10-bit source upconverted to 32-bits and then downconverted for display.

madVR is designed to preserve the information from its processing and the initial data provided by the YCbCr to RGB conversion to lower bit depths, so it should never introduce banding at any stage because the data is kept all the way to the final output. This all depends on the quality of the source and whether it had banding to begin with.

Color gamuts are fixed at the top and bottom. Manipulating the source bit depth will not add any new colors. You simply get more shades or steps for each color when the bit depth is increased; everything in between becomes smoother, not more colorful.

madVR can represent any output bit depth with smooth gradients by adding nearly invisible noise, called dithering, to the image before output. Dithering can make most output bit depths appear nearly indistinguishable from each other by using the information from the higher source bit depth to add missing color steps to lower bit depths. Dithering replicates any missing color steps by combining available colors to approximate the missing color values. This creates a random or repetitive offset pattern at places where banding would otherwise occur to create smooth transitions between every color shade. The higher the output bit depth, the more invisible any noise created by dithering and the dithering pattern itself becomes. By the time the bit depth is increased to 8-bits, the dithering pattern becomes so small that 8-bit color detail and 10-bit (or higher) color detail will appear virtually identical to the human eye. This is why many 8-bit FRC display panels still exist in the display market that employ high-quality dithering to display 10-bit videos.

There is an argument that when capturing something with a digital camera there is no value in using 10-bits if the noise captured by the camera is not below a certain threshold (the signal-to-noise ratio). If it is above this threshold, then the dithering added at 8-bits will be indiscernible from the noise captured at 10-bits. That is really what you are measuring when it comes to bit depths as high as 8-bits: detectable dithering noise. If dithering noise is not detectable, then an 8-bit panel is an acceptable way to show 10-bit content. Dithering noise can be particularly hard to detect at 4K UHD resolutions, especially using madVR’s low-noise dithering algorithms.

Take a look at these images that show the impact of dithering to a bit depth as low as 2-bits:

Dithering — 8-bits (16.8 million color shades) to 2-bits (64 color shades):

2 bit Ordered Dithering

2 bit No Dithering

*Best viewed at 100% browser zoom for the dithering to look most accurate.

Seems remarkable? As the bit depth is increased, the shading created by dithering becomes more and more seamless to the point where the output bit depth becomes somewhat unimportant as gradients will always remain smooth without introducing any color banding not found in the source values.

Dithering is designed to spread out and erase any quantization (digital rounding) errors, so it is not designed to remove banding from the source video. Rather, if the source is free of banding, that information can always be maintained faithfully at lower display bit depths with dithering.

Recommended Use (native display bitdepth):

Those with native 8-bit displays should stick with 8-bit output, as the additional detail of higher bit depths cannot be represented by the display panel and will only result in added image noise. On the other hand, those with 10-bit displays have a choice between either 8-bit or 10-bit output, with each providing nearly identical image quality due to the use of madVR’s excellent dithering algorithms. The high bit depths used for image processing will prevent any loss of color detail from the source video to bit depths of 8-10 bits when the final 16-bit processing result is dithered to the output bit depth (with any remaining differences masked by the blending of colors created by these higher bit depths).

While 10-bit output could be considered the default option for a native 10-bit display panel, simply setting madVR and the GPU to 8-bit RGB can greatly simplify HTPC configuration for HDMI 2.0 devices. HDMI 2.0 limits 60 Hz 4K UHD output to 8-bit RGB, and RGB output is always preferred over YCbCr on a PC. Several common issues can also be encountered when the GPU is set to output 10 or 12 bits. First, display mode switching from 8-bit RGB @ 60 Hz to 12-bit RGB @ 23-24 Hz is finicky with Nvidia video drivers, and sometimes the video driver won’t switch correctly from 8 bits to 12 bits. Second, Nvidia’s API for custom resolutions is locked to 8 bits, so Nvidia users needing a custom resolution must use 8 bits. Third, certain GPU drivers are known to create color banding when set to output 10 or 12 bits, and 8-bit output can avoid this banding. In each of these cases, 8-bit output would be preferred. Those using madVR for the first time may not be accustomed to a video renderer that uses dithering, but it should be stated again: both 8-bit and 10-bit output offer virtually indistinguishable visual quality as a result of high-quality dithering added to all bit depths.
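The HDMI 2.0 limit mentioned above follows from simple arithmetic, sketched below. The figures are assumptions taken from the published standards rather than from this guide: a 600 MHz maximum TMDS character rate for HDMI 2.0, and the standard CTA-861 pixel clocks of 594 MHz (4K UHD at 60 Hz) and 297 MHz (4K UHD at 24 Hz). The sketch only covers RGB / 4:4:4 output and ignores chroma-subsampled YCbCr modes.

```python
HDMI_2_0_MAX_TMDS_MHZ = 600.0   # assumed HDMI 2.0 maximum TMDS character rate
PIXEL_CLOCK_4K60_MHZ = 594.0    # 4400 x 2250 total pixels x 60 Hz
PIXEL_CLOCK_4K24_MHZ = 297.0    # 5500 x 2250 total pixels x 24 Hz

def tmds_clock_mhz(pixel_clock_mhz, bits_per_channel):
    """For RGB / 4:4:4, deep color raises the TMDS clock in proportion to bit depth."""
    return pixel_clock_mhz * bits_per_channel / 8

def fits_hdmi_2_0(pixel_clock_mhz, bits_per_channel):
    """True if the mode stays within the assumed HDMI 2.0 TMDS limit."""
    return tmds_clock_mhz(pixel_clock_mhz, bits_per_channel) <= HDMI_2_0_MAX_TMDS_MHZ

for label, clock in (("4K60", PIXEL_CLOCK_4K60_MHZ), ("4K24", PIXEL_CLOCK_4K24_MHZ)):
    for bits in (8, 10, 12):
        print(label, f"{bits}-bit RGB", tmds_clock_mhz(clock, bits), fits_hdmi_2_0(clock, bits))
```

This is why 12-bit RGB is only reachable at 23-24 Hz on such links, and why a driver may need to switch bit depth when the refresh rate changes.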

When the bit depth is set below the display’s native bit depth, the only visual change occurs in the noise floor of the image, and this subtlety can be invisible. Setting madVR to 8-bits might even be beneficial for some 10-bit displays (like some LG OLEDs). Providing the display with a good 8-bits as opposed to 10 or 12-bits can sometimes make for less work for the display and a reduced chance of introducing quantization errors. The odd UHD display may struggle with high bit depths due to the use of low bit depths for its internal video processing, not applying dithering correctly when converting 12-bits to 10-bits or some other unknown display deficiency. This is not meant to discourage anyone from choosing 10-bit output; the highest bit depth should produce the highest perceived quality, but your eyes are often the best judge of what bit depth works best for the display.

Regardless of output bit depth, it is advised to check if the GPU or display processing is adding any color banding to the image by using a good high-bit depth gradient test image (such as those linked above). Other good tests for color banding include scenes with open blue skies and animated films with large patches of blended color shades.

Determining Display-Panel Bit Depth

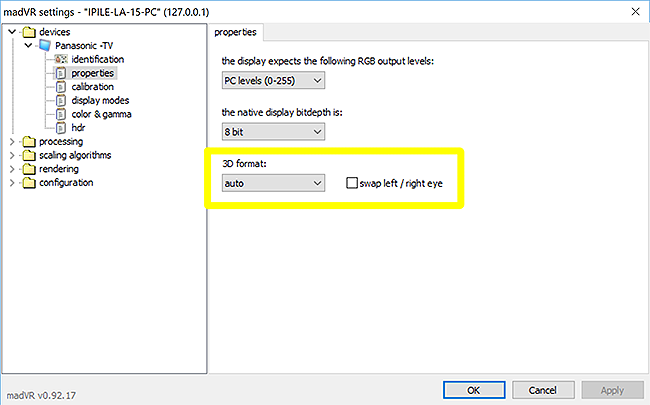

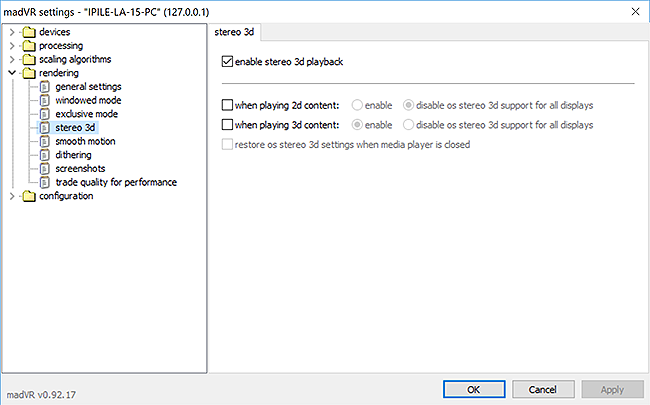

Properties – 3D Format

3D support in madVR is limited to MPEG4-MVC 3D Blu-ray. MVC 3D mkvs can be created from frame packed 3D Blu-rays with software such as MakeMKV.

The input 3D format must be frame packed MPEG4-MVC. The output format depends on the operating system, HDMI spec and display type. 3D formats with the left and right images on the same frame will be sent out as 2D images.

3D playback requires four ingredients:

- enable stereo 3d playback is checked in the madVR control panel (rendering -> stereo 3d);

- A 3D video decoder is used (e.g., LAV Filters 0.68+ with 3D software decoder installation checked);

- A 3D-capable display is used (with its 3D mode enabled);

- Windows 8.1 or Windows 10 is used as the operating system.

In addition, it may be necessary to check enable automatic fullscreen exclusive mode in general settings if MPEG4-MVC videos play in 2D rather than 3D.

Stereoscopic 3D is designed to capture separate images of the same object from slightly different angles to create an image for the left eye and right eye. The brain is able to combine the two images into one, which leads to a sense of enhanced depth.

What Is the Difference Between an Active 3D TV and Passive 3D TV?

auto

The default output format is frame packed 3D Blu-ray. The output is an extra-tall (1920 x 2205 — with padding) frame containing the left eye and right eye images stacked on top of each other at full resolution.
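The 1920 x 2205 figure can be checked with a short calculation. The 45-line gap ("active space") between the two eye images is an assumption based on the HDMI 1.4 frame packing layout for 1080p, and the function name is purely illustrative.

```python
def frame_packed_size(width=1920, eye_height=1080, active_space=45):
    """Size of a frame-packed 3D frame: left eye, blank active space, then right eye."""
    return width, eye_height + active_space + eye_height

print(frame_packed_size())
```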

auto – (Windows 8+, GPU — HDMI 1.4+, Display — HDMI 1.4+): Receives the full resolution, frame packed output. On an active 3D display, each frame is split and shown sequentially. A passive 3D display interweaves the two images as a single image.

auto – (Windows+, GPU — HDMI 1.3, Display — HDMI 1.3): Receives a downconverted, half side-by-side format. On an active 3D display, each frame is split, upscaled and shown sequentially. A passive 3D display upscales the two images and then combines them as a single frame.

The above default behavior can be overridden by converting the frame packed source to any format that places the left eye and right eye images on the same frame. These 2D formats function without active GPU stereoscopic 3D and are compatible with all Windows versions and HDMI specifications.

Force 3D format below:

side-by-side

Side-by-side (SbS) stacks the left eye and right eye images horizontally. madVR outputs half SbS, where each eye is stored at half its horizontal resolution (960 x 1080) to fit on one 2D frame. The display splits each frame and scales each image back to its original resolution.

An active 3D display shows half SbS sequentially. Passive 3D displays will split the screen into odd and even horizontal lines. The left eye and right eye odd sections are combined. Then the left eye and right eye even sections are combined. This weaving creates the perception of two separate images.

top-and-bottom

Top-and-bottom (TaB) stacks the left eye and right eye images vertically. madVR outputs half TaB, where each eye is stored at half its vertical resolution (1920 x 540) to fit on one 2D frame. The display splits each frame and scales each image back to its original resolution.

An active 3D display shows half TaB sequentially. Passive 3D displays will split the screen into odd and even horizontal lines. The left eye and right eye odd sections are combined. Then the left eye and right eye even sections are combined. This weaving creates the perception of two separate images.
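The per-eye resolutions quoted for half side-by-side and half top-and-bottom can be sketched as follows. The helper names are hypothetical, and the packing function simply drops every other column for brevity; a real renderer would scale with proper filtering rather than discard pixels.

```python
def half_format_eye_size(fmt, width=1920, height=1080):
    """Per-eye resolution once both eyes are squeezed into one 2D frame."""
    if fmt == "side-by-side":
        return width // 2, height
    if fmt == "top-and-bottom":
        return width, height // 2
    raise ValueError(f"unsupported 3D format: {fmt}")

def pack_half_sbs(left, right):
    """Naive half SbS packing: keep every other column of each eye, place them side by side."""
    return [l_row[::2] + r_row[::2] for l_row, r_row in zip(left, right)]

# Tiny 4x2 example frames, with 'L' and 'R' standing in for pixel values.
left = [["L"] * 4 for _ in range(2)]
right = [["R"] * 4 for _ in range(2)]
packed = pack_half_sbs(left, right)

print(half_format_eye_size("side-by-side"), half_format_eye_size("top-and-bottom"))
print(packed)
```

The packed frame keeps the original width and height, which is why these formats pass through the GPU and HDMI link as ordinary 2D video.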

line alternative

Line alternative is an interlaced 3D format designed for passive 3D displays. Each frame contains a left odd field and right odd field. The next frame contains a left even field and right even field. 3D glasses make the appropriate lines visible for the left eye or right eye. For line alternative to function, the display must be set to its native resolution without any visible over or underscan.

column alternative

Column alternative is another interlaced 3D format similar to line alternative, except the frames are matched vertically as opposed to horizontally. This is another passive 3D format. One frame contains a left odd field and right odd field. The next frame contains a left even field and right even field. 3D glasses make the appropriate lines visible for the left or right eye. The display must be set to its native resolution without any visible over or underscan.

Further Detail on the Various 3D Formats

swap left / right eye

Swaps the order in which frames are displayed. This can correct the behavior of some displays that show the left eye and right eye images in the incorrect order. Incorrect eye order can be fixed for all formats, including line and column alternative. Many displays can also swap the eye order in their picture menus.

3D glasses must be synchronized with the display before playback. If the image appears blurry (particularly, the background elements), your 3D glasses are likely not enabled.

Recommended Use (3D format):

AMD and Intel users can safely set 3D format to auto. When functioning correctly, stereoscopic 3D should trigger in the GPU control panel at playback start and the display’s 3D mode should take over from there. Nvidia, on the other hand, no longer offers support for MVC 3D in its official drivers. Nvidia’s official support for 3D playback ended with driver v425.31 (April 11, 2019) and only the 18 series drivers are to receive legacy updates and patches to keep MVC 3D operable with current Windows builds (recommended: v385.28 or v418.91). Nvidia 3D Vision, which enables stereoscopic 3D, is incompatible with the newest drivers, and manual installation of 3D Vision will not provide any added functionality.

Manual Workaround to Install 3D Vision with Recent Nvidia Drivers

Users of Nvidia drivers after v425.31 must convert MVC 3D to a two-dimensional 3D format (where both 3D images are reduced in resolution and combined into a single frame) using any of the supported 3D formats listed under 3D format. Then 3D content can be passed through to the display without any need for active GPU stereoscopic 3D. The display’s user manual should be consulted for a list of supported 3D formats.
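The rule of thumb above can be written as a small check. This is only an illustration of the decision described in this section; the function names are hypothetical, and the version string is assumed to be entered by hand rather than queried from the driver.

```python
LAST_MVC_3D_DRIVER = (425, 31)  # last Nvidia driver with official 3D playback support

def parse_driver_version(text):
    """Turn a string such as 'v425.31' into a comparable (major, minor) tuple."""
    major, minor = text.lstrip("vV").split(".")
    return int(major), int(minor)

def needs_forced_3d_format(gpu_vendor, driver_version):
    """True if 3D format should be forced to a 2D-packed format instead of auto."""
    if gpu_vendor.lower() != "nvidia":
        return False  # AMD and Intel users can leave 3D format on auto
    return parse_driver_version(driver_version) > LAST_MVC_3D_DRIVER

print(needs_forced_3d_format("Nvidia", "v430.86"))
print(needs_forced_3d_format("Nvidia", "v418.91"))
```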

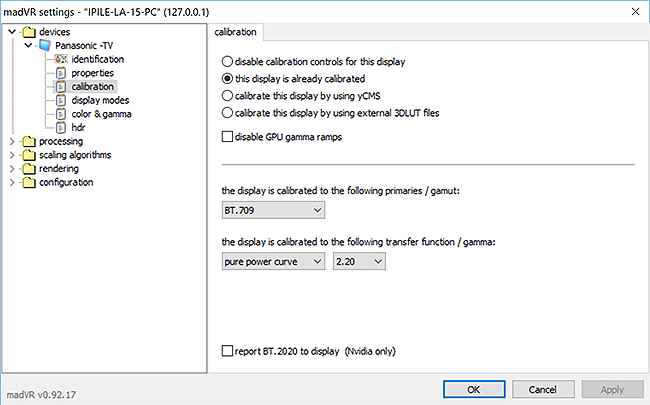

Calibration

When doing any kind of gamut mapping or transfer function conversion, madVR uses the values in calibration as the target. This requires that you know your display’s calibrated color gamut and gamma curve and that you attach any available yCMS or 3D LUT calibration files.

What Is a Color Gamut?

Most 4K UHD displays have separate display modes for HDR and SDR. Calibration settings in madVR only apply to the display’s default SDR mode. BT.2020 HDR content is passed through unless a special setting in hdr is enabled such as converting HDR to SDR.

disable calibration controls for this display

Turns off calibration controls for gamut and transfer function conversions.

If you purchased your display and went through only basic calibration without any knowledge of its calibrated gamma or color gamut, this is the safest choice.

Turning off calibration controls defaults to:

- primaries / gamut: BT.709

- transfer function / gamma: pure power curve 2.20
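For reference, a pure power curve of 2.20 is the simplest possible transfer function. The sketch below shows the relationship between a normalized signal value and linear light under that assumption; the function names are illustrative and not part of madVR.

```python
GAMMA = 2.20  # pure power curve, as in the default above

def signal_to_linear(signal):
    """Display response: normalized signal (0.0-1.0) to relative linear light."""
    return signal ** GAMMA

def linear_to_signal(linear):
    """Inverse of the display response: linear light back to a signal value."""
    return linear ** (1.0 / GAMMA)

half_signal_light = signal_to_linear(0.5)          # a 50% signal is far less than 50% light
round_trip = linear_to_signal(signal_to_linear(0.18))

print(round(half_signal_light, 4), round(round_trip, 4))
```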

this display is already calibrated

This enables calibration options used to map content with a different gamut than the calibrated display color profile. For example, a BT.2020 source, such as a UHD Blu-ray, may need to be mapped to the BT.709 color space of an SDR display, or a BT.709 source could be mapped to a UHD display calibrated to BT.2020. Displays with an Automatic color space setting can select the appropriate color profile to match the source, but all other displays require that the input gamut match the calibrated gamut to track the color coordinates correctly and prevent any over- or undersaturation. madVR should convert any source gamut that doesn’t match the calibrated gamut.
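As a rough illustration of what a gamut conversion involves, the sketch below converts linear-light BT.2020 RGB to BT.709 RGB with the 3x3 matrix published in ITU-R BT.2087. It is an assumption that this resembles what happens inside madVR; the actual renderer also has to deal with out-of-gamut colors, which this sketch merely detects.

```python
# Linear-light BT.2020 RGB -> BT.709 RGB (coefficients as published in ITU-R BT.2087).
BT2020_TO_BT709 = [
    [1.6605, -0.5876, -0.0728],
    [-0.1246, 1.1329, -0.0083],
    [-0.0182, -0.1006, 1.1187],
]

def convert_gamut(rgb, matrix=BT2020_TO_BT709):
    """Multiply a linear RGB triple by a 3x3 primaries conversion matrix."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in matrix)

def in_gamut(rgb, tolerance=1e-3):
    """True if every channel lands inside the 0.0-1.0 range of the target gamut."""
    return all(-tolerance <= c <= 1.0 + tolerance for c in rgb)

white = convert_gamut((1.0, 1.0, 1.0))   # white stays white in both gamuts
green = convert_gamut((0.0, 1.0, 0.0))   # pure BT.2020 green lies outside BT.709

print(white, in_gamut(white))
print(green, in_gamut(green))
```

If this conversion is skipped and BT.2020 values are sent to a BT.709 display unchanged, colors look undersaturated; the reverse mismatch looks oversaturated.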

If you want to use this feature but are unsure of how your display is calibrated, try the following values that are most common.

1080p Display:

- primaries / gamut: BT.709

- transfer function / gamma: pure power curve 2.20

4K UHD Display:

- primaries / gamut: BT.709 (Auto/Normal) / BT.2020 (Wide/Extended/Native)

- transfer function / gamma: pure power curve 2.20

Note: transfer function / gamma is only used if enable gamma processing is checked under color & gamma. Gamma processing is unnecessary as madVR will always use the same gamma as the encoded source mastering monitor. The transfer function is only applied by default for the conversion of HDR to SDR because madVR must convert a PQ HDR source to match the known calibrated SDR gamma of the display.

HDR to SDR Instructions: Mapping Wide Color Gamuts | Choosing a Gamma Curve

calibrate this display by using yCMS

Medium Processing

yCMS and 3DLUT files are forms of color management that use the GPU for gamut and transfer function correction. yCMS is the simpler of the two, only requiring a few measurements with a colorimeter and appropriate software. This is a lengthy topic beyond the scope of this guide.

yCMS files can be created with HCFR. If you are going this route, it may be better to use the more accurate 3D LUT.

calibrate this display by using external 3DLUT files

Medium — High Processing

Display calibration software such as ArgyllCMS/DisplayCal, CalMAN or LightSpace CMS is used along with madVR to create up to a 256 x 256 x 256 3D LUT.

A 3D LUT (3D lookup table) is a fast and automated form of display calibration that uses the GPU to produce corrected color values for sophisticated grayscale, transfer function and primary color calibration.
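Conceptually, a 3D LUT is a cube of pre-computed output colors indexed by input red, green and blue, with values between grid points filled in by interpolation. The sketch below uses a tiny identity cube and trilinear interpolation as an assumption for illustration; it does not read madVR's .3dlut file format, and the function names are hypothetical.

```python
def make_identity_lut(size):
    """Build a size^3 cube (size >= 2) where every node maps a color to itself."""
    step = size - 1
    return [[[(r / step, g / step, b / step) for b in range(size)]
             for g in range(size)]
            for r in range(size)]

def apply_lut(lut, rgb):
    """Look up an RGB triple (0.0-1.0 per channel) using trilinear interpolation."""
    size = len(lut)
    pos = [min(max(c, 0.0), 1.0) * (size - 1) for c in rgb]
    low = [min(int(p), size - 2) for p in pos]     # lower corner of the enclosing cell
    frac = [p - l for p, l in zip(pos, low)]       # position inside that cell
    out = [0.0, 0.0, 0.0]
    for dr in (0, 1):
        for dg in (0, 1):
            for db in (0, 1):
                weight = ((frac[0] if dr else 1 - frac[0])
                          * (frac[1] if dg else 1 - frac[1])
                          * (frac[2] if db else 1 - frac[2]))
                node = lut[low[0] + dr][low[1] + dg][low[2] + db]
                for i in range(3):
                    out[i] += weight * node[i]
    return tuple(out)

lut = make_identity_lut(5)          # a real calibration LUT holds measured corrections
result = apply_lut(lut, (0.3, 0.6, 0.9))
full_size_entries = 256 ** 3        # node count of a 256 x 256 x 256 LUT

print(result, full_size_entries)
```

A calibration package replaces the identity values with corrected colors derived from measurements, so a single lookup applies grayscale, gamma and gamut corrections together.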

What Is a 3D LUT?