I just built an application with Express.js for an institution where they upload video tutorials. At first the videos were uploaded to the same server, but I later switched to Amazon; only the videos are uploaded to Amazon. Now, whenever I try to upload, I get this error: ENOSPC: no space left on device. I have cleared the tmp files to no avail. I have searched extensively about this issue, but none of the solutions seems to work for me.

asked May 2, 2018 at 19:21

Cleaning up the Docker system fixed this for me:

$ docker system prune

See the official Docker documentation for docker system prune.

answered Aug 29, 2019 at 8:09

Renjith

In my case, I got the error ‘npm WARN tar ENOSPC: no space left on device’ while running Node.js in Docker. I used the command below to reclaim space. Note that -a removes all unused images, not only dangling ones, and -f skips the confirmation prompt.

sudo docker system prune -af

answered Apr 12, 2019 at 16:49

Hailin Tan

I had the same problem. Take a look at the accepted answer to this Stack Overflow question:

Node.JS Error: ENOSPC

Here is the command I used (my OS is Linux Mint 18.3 Sylvia, an Ubuntu/Debian-based Linux system):

echo fs.inotify.max_user_watches=524288 | sudo tee -a /etc/sysctl.conf && sudo sysctl -p

answered May 9, 2018 at 17:37

omt66

I came across a similar situation where the disk had free space but the system could not create new files. I use forever to run my Node app, and forever needs to open a file to keep track of the Node process it is running.

If you have free storage space on your system but keep getting error messages such as “No space left on device”, you have likely run out of free inodes.

Run df -i, which reports IUse% like this:

Filesystem  Inodes  IUsed  IFree   IUse%  Mounted on
udev        992637  537    992100  1%     /dev
tmpfs       998601  1023   997578  1%     /run

If IUse% reaches 100%, your inode table is exhausted.

Identify junk or otherwise unnecessary files on the system and delete them.

answered Nov 6, 2019 at 6:31

I got this error when my script was trying to create a new file. It may look like you’ve got lots of space on the disk, but if you’ve got millions of tiny files on the disk then you could have used up all the available inodes. Run df -hi to see how many inodes are free.
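The inode check described above can be scripted. The following is a minimal sketch, assuming a POSIX shell and a `df` that accepts `-P` and `-i` (GNU coreutils does). The function name `inodes_over` is hypothetical, and it reads `df` output on stdin so it can be tried on canned data. Mount points containing spaces are not handled.

```shell
# Print "<mount point> <IUse%>" for every filesystem whose inode usage
# is at or above the given percentage. Expects `df -Pi` output on stdin.
inodes_over() {
    threshold="$1"
    awk -v t="$threshold" 'NR > 1 && $5 ~ /^[0-9]+%$/ {
        pct = $5
        sub(/%/, "", pct)
        if (pct + 0 >= t + 0) print $6, pct "%"
    }'
}

# Typical use on a live system:
#   df -Pi | inodes_over 90
```

Filesystems that do not report inode counts show `-` in the IUse% column and are skipped by the pattern match.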

answered Aug 23, 2018 at 17:05

alnorth29

I had the same problem. You can empty the trash if you haven’t already; it worked for me:

(I found this command on a forum, so read about it before you decide to use it. I’m a beginner and just copied it; I don’t know the full scope of what it does.)

$ rm -rf ~/.local/share/Trash/*

The command is from this forum:

https://askubuntu.com/questions/468721/how-can-i-empty-the-trash-using-terminal

answered May 20, 2021 at 20:16

Xelphin

In my own case, what actually happened was that while the files were being uploaded to Amazon Web Services, I wasn’t deleting them from the temp folder. When files are uploaded to a server, they are initially stored in the temp folder before being copied to whichever folder you want (I know this is true for Node.js and PHP). So try deleting the contents of your temp folder, and make sure your upload method clears its temp files immediately after every upload.
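The real fix belongs in the upload handler, which should remove each temp file once the transfer to Amazon has finished. As a safety net, a periodic cleanup can also catch leftovers. This is a minimal sketch, assuming a `find` that supports `-mmin` and `-delete` (GNU and BSD find do); the function name `purge_stale_uploads` and the directory in the usage comment are hypothetical.

```shell
# Delete regular files under directory $1 that were last modified more
# than $2 minutes ago (default: 60). Returns 1 if $1 is not a directory.
purge_stale_uploads() {
    dir="$1"
    max_age_min="${2:-60}"
    [ -d "$dir" ] || return 1
    find "$dir" -type f -mmin +"$max_age_min" -delete
}

# Example (hypothetical path), e.g. from an hourly cron job:
#   purge_stale_uploads /tmp/uploads 120
```

Choose an age threshold comfortably longer than your slowest upload, so that files still in transit are never removed.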

answered Jun 20, 2018 at 13:45

Yinka

You can set a new limit temporarily with:

sudo sysctl fs.inotify.max_user_watches=524288

sudo sysctl -p

If you want to make the limit permanent, use:

echo fs.inotify.max_user_watches=524288 | sudo tee -a /etc/sysctl.conf

sudo sysctl -p
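The reason this setting matters for an ENOSPC error is that the kernel also returns ENOSPC when a user runs out of inotify watches, even if the disk has plenty of room. Before raising the limit it can help to check the current value. This is a minimal sketch, assuming Linux and a POSIX shell; the function name `watch_limit_at_least` is hypothetical, and the optional second argument exists only so the function can be exercised against a test file.

```shell
# Succeed if the current inotify watch limit is at least $1.
# $2 optionally overrides the file to read (default: the kernel's value).
# Returns 2 if the file cannot be read.
watch_limit_at_least() {
    wanted="$1"
    file="${2:-/proc/sys/fs/inotify/max_user_watches}"
    [ -r "$file" ] || return 2
    current=$(cat "$file")
    [ "$current" -ge "$wanted" ]
}

# Example:
#   watch_limit_at_least 524288 || echo "inotify watch limit is below 524288"
```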

answered Jun 27, 2019 at 7:02

vipinlalrv

Adding to the discussion, the above command works even when the program is not run from Docker.

Repeating that command:

sudo sysctl fs.inotify.max_user_watches=524288

docker system prune

answered Aug 12, 2021 at 13:17

The previous answers fixed my problem for a short period of time.

I had to find the big files that weren’t being used and were filling my disk.

On the host computer I ran: df

I got this; my problem was /dev/nvme0n1p3:

Filesystem      1K-blocks       Used  Available  Use%  Mounted on
udev             32790508          0   32790508    0%  /dev
tmpfs             6563764     239412    6324352    4%  /run
/dev/nvme0n1p3  978611404  928877724          0  100%  /
tmpfs            32818816     196812   32622004    1%  /dev/shm
tmpfs                5120          4       5116    1%  /run/lock
tmpfs            32818816          0   32818816    0%  /sys/fs/cgroup
/dev/nvme0n1p1     610304      28728     581576    5%  /boot/efi
tmpfs             6563764         44    6563720    1%  /run/user/1000

I installed ncdu and ran it against the root directory. You may need to manually delete a small file to make space for ncdu; if that is not possible, you can use du to find the files manually:

sudo apt-get install ncdu

sudo ncdu /

That helped me identify the big files. In my case they were in the /tmp folder, so I used this command to delete the ones that hadn’t been accessed in the last 10 days:

sudo find /tmp -type f -atime +10 -delete
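If the disk is too full to install ncdu, plain `du` can do a rougher version of the same job. This is a minimal sketch, assuming a POSIX shell; the function name `largest_entries` is hypothetical. Sizes are printed in KiB, and `-x` keeps `du` on a single filesystem so other mounts are not counted.

```shell
# List the largest files and directories under $1, biggest first.
# $2 is the number of entries to show (default: 10).
largest_entries() {
    dir="$1"
    count="${2:-10}"
    du -akx "$dir" 2>/dev/null | sort -rn | head -n "$count"
}

# Example:
#   sudo sh -c '. ./largest.sh; largest_entries / 20'
# or simply, for a directory you can read:
#   largest_entries /tmp 20
```

The first line of the output is always the directory itself, since its total includes everything below it.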

answered Sep 4, 2020 at 20:13

TL;DR:

Restart Docker Desktop

The only thing that fixed this for me was quitting and restarting Docker Desktop.

I tried docker system prune, removed as many volumes as I could safely do, removed all containers and many images and nothing worked until I quit and restarted Docker Desktop.

Before restarting Docker Desktop the system prune removed 2GB but after restarting it removed 12GB.

So, if you tried to run system prune and it didn’t work, try restarting Docker and running the system prune again.

That’s what I did and it worked. I can’t say I understand why it worked.

answered Jan 30, 2022 at 2:50

Joshua Dyck

This worked for me:

sudo docker system prune -af

answered Dec 5, 2022 at 21:42

- Open Docker Desktop
- Go to Troubleshoot
- Click Reset to factory defaults

answered Jul 11, 2020 at 9:05

The issue was actually caused by the temp folder not being cleared after each upload, so all the videos uploaded so far were still in the temp folder and the disk space was exhausted. The temp folder has now been cleared and everything works fine.

answered May 11, 2018 at 15:00

Yinka

I struggled hard with this. For some time, the following command worked:

docker system prune

But then I checked the volume and it was full. I inspected it and found that node_modules was the real trouble.

So I deleted node_modules, ran npm install again, and it worked like a charm.

Note: this worked for me on Node.js and React projects.

answered May 8, 2021 at 18:34

Zia Ullah

In my case, on a Linux ext4 file system, the large_dir feature needed to be enabled.

# check whether it's enabled (replace /dev/sda with your own device)

sudo tune2fs -l /dev/sda | grep large_dir

# enable it

sudo tune2fs -O large_dir /dev/sda

On Ubuntu, an ext4 file system has a 64M limit on the number of files in a single directory by default, unless large_dir is enabled.

answered Jun 7, 2021 at 2:25

spikeyang

I first checked free disk space with this command (-h gives human-readable output):

df -h

Then I reclaimed more space with the command below, which reported "Total reclaimed space: 2.77GB" (up from 0.94GB):

sudo docker system prune -af

This worked for me.

answered Nov 7, 2022 at 8:23

Description

When trying to deploy a Yarn workspace monorepo (free plan), I receive "ENOSPC: no space left on device, write" while just installing dependencies:

11/15 10:53 AM (4h)  installing to /tmp/252defbc/user/packages/enum-server-api
11/15 10:53 AM (4h)  yarn install v1.12.3
11/15 10:53 AM (4h)  [1/5] Validating package.json...
11/15 10:53 AM (4h)  [2/5] Resolving packages...
11/15 10:53 AM (4h)  [3/5] Fetching packages...
11/15 10:53 AM (4h)  info fsevents@1.2.4: The platform "linux" is incompatible with this module.
11/15 10:53 AM (4h)  info "fsevents@1.2.4" is an optional dependency and failed compatibility check. Excluding it from installation.
11/15 10:53 AM (4h)  [4/5] Linking dependencies...
11/15 10:53 AM (4h)  error Could not write file "/tmp/252defbc/user/packages/enum-server-api/yarn-error.log": "ENOSPC: no space left on device, write"

Is there any limitation on dependency size or on working with monorepos?

How to reproduce

To reproduce you can simply try to deploy this branch:

https://github.com/nitzano/enum-server/tree/now_deploy

My now.json setup:

{
  "version": 2,
  "name": "enum-server",
  "builds": [
    {
      "src": "packages/enum-server-api/package.json",
      "use": "@now/node-server"
    }
  ]
}

Suggestions for better-than-ext4 choices for storing masses of small files:

If you’re using the filesystem as an object store, you might want to look at using a filesystem that specializes in that, possibly to the detriment of other characteristics. A quick Google search found Ceph, which appears to be open source, and can be mounted as a POSIX filesystem, but also accessed with other APIs. I don’t know if it’s worth using on a single host, without taking advantage of replication.

Another object-storage system is OpenStack’s Swift. Its design docs say it stores each object as a separate file, with metadata in xattrs. Here’s an article about it. Their deployment guide says they found XFS gave the best performance for object storage. So even though the workload isn’t what XFS is best at, it was apparently better than the competitors when RackSpace was testing things. Possibly Swift favours XFS because XFS has good / fast support for extended attributes. It might be that ext3/ext4 would do ok on single disks as an object-store backend if extra metadata wasn’t needed (or if it was kept inside the binary file).

Swift does the replication / load-balancing for you, and suggests that you give it filesystems made on raw disks, not RAID. It points out that its workload is essentially worst-case for RAID5 (which makes sense if we’re talking about a workload with writes of small files. XFS typically doesn’t quite pack them head-to-tail, so you don’t get full-stripe writes, and RAID5 has to do some reads to update the parity stripe). Swift docs also talk about using 100 partitions per drive. I assume that’s a Swift term, and isn’t talking about making 100 different XFS filesystems on each SATA disk.

Running a separate XFS for every disk is actually a huge difference. Instead of one gigantic free-inode map, each disk will have a separate XFS with separate free-lists. Also, it avoids the RAID5 penalty for small writes.

If you already have your software built to use a filesystem directly as an object store, rather than going through something like Swift to handle the replication / load-balancing, then you can at least avoid having all your files in a single directory. (I didn’t see Swift docs say how they lay out their files into multiple directories, but I’m certain they do.)

With almost any normal filesystem, it will help to use a structure like

1234/5678 # nested medium-size directories instead of

./12345678 # one giant directory

Probably about 10k entries is reasonable, so taking a well-distributed 4 characters of your object names and using them as directories is an easy solution. It doesn’t have to be very well balanced. The odd 100k directory probably won’t be a noticeable issue, and neither will some empty directories.
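The directory split described above can be sketched in a few lines. This is a minimal example, assuming a POSIX shell and object names of at least five characters. The function name `shard_path` is hypothetical, and using the first four characters is only one possible choice of "well-distributed" characters.

```shell
# Map an object name to a two-level path: the first four characters
# become the directory, the remainder becomes the file name.
shard_path() {
    name="$1"
    prefix=$(printf '%s' "$name" | cut -c1-4)
    rest=$(printf '%s' "$name" | cut -c5-)
    printf '%s/%s\n' "$prefix" "$rest"
}

# Example:
#   shard_path 12345678    # prints 1234/5678
#   mkdir -p "$(dirname "$(shard_path 12345678)")"
```

If your object names share a common prefix, the leading characters will not be well distributed; in that case it would make sense to take the directory characters from a hash of the name instead.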

XFS is not ideal for huge masses of small files. It does what it can, but it’s more optimized for streaming writes of larger files. It’s very good overall for general use, though. It doesn’t have ENOSPC on collisions in its directory indexing (AFAIK), and can handle having one directory with millions of entries. (But it’s still better to use at least a one-level tree.)

Dave Chinner had some comments on XFS performance with huge numbers of inodes allocated, leading to slow-ish touch performance. Finding a free inode to allocate starts taking more CPU time, as the free inode bitmap gets fragmented. Note that this is not an issue of one-big-directory vs. multiple directories, but rather an issue of many used inodes over the whole filesystem. Splitting your files into multiple directories helps with some problems, like the one that ext4 choked on in the OP, but not the whole-disk problem of keeping track of free space. Swift’s separate-filesystem-per-disk helps with this, compared to one giant XFS on a RAID5.

I don’t know if btrfs is good at this, but I think it may be. I think Facebook employs its lead developer for a reason.

I know reiserfs was optimized for this case, but it’s barely, if at all, maintained anymore. I really can’t recommend going with reiser4. It might be interesting to experiment, though. But it’s by far the least future-proof choice. I’ve also seen reports of performance degradation on aged reiserFS, and there’s no good defrag tool. (google filesystem millions of small files, and look at some of the existing stackexchange answers.)

I’m probably missing something, so final recommendation: ask about this on serverfault! If I had to pick something right now, I’d say give BTRFS a try, but make sure you have backups (esp. if you use BTRFS’s built-in multiple disk redundancy, instead of running it on top of RAID. The performance advantages could be big, since small files are bad news for RAID5, unless it’s a read-mostly workload).

The "No space left on device" error in Linux can occur when using various programs or services. In graphical programs the error may appear in a pop-up message, while for services it usually shows up in the logs. In every case it means the same thing: the disk partition the program is trying to write to has run out of free space.

You can avoid this problem as early as the planning stage of a system installation. Give the /home directory its own partition; then, if you fill all the space with your own files, it will not interfere with the operation of the system. Also allocate more than 20 GB to the root partition so that all programs are sure to have enough room. But what do you do if the problem has already happened? Let's look at how to free up disk space on Linux.

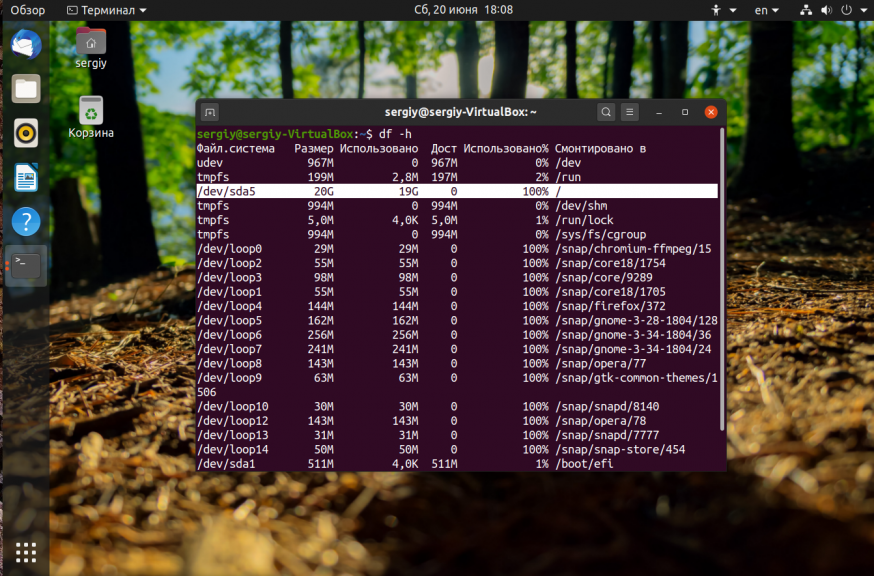

First you need to find out which partition has run out of space. You can use the df utility for this. It ships with the system, so there should be no problem running it:

df -h

You can ignore mount points that begin with the word snap. The command shows the total size of each filesystem, the used and available space, and the percentage used. In this case the root partition, /dev/sda5, is 100% full. Of course, you need to work out which program or file took up all the space and fix that, but first you have to get the system back into a working state. To do that, you need to free up a little space. Let's look at what can be done to free some space in an emergency.

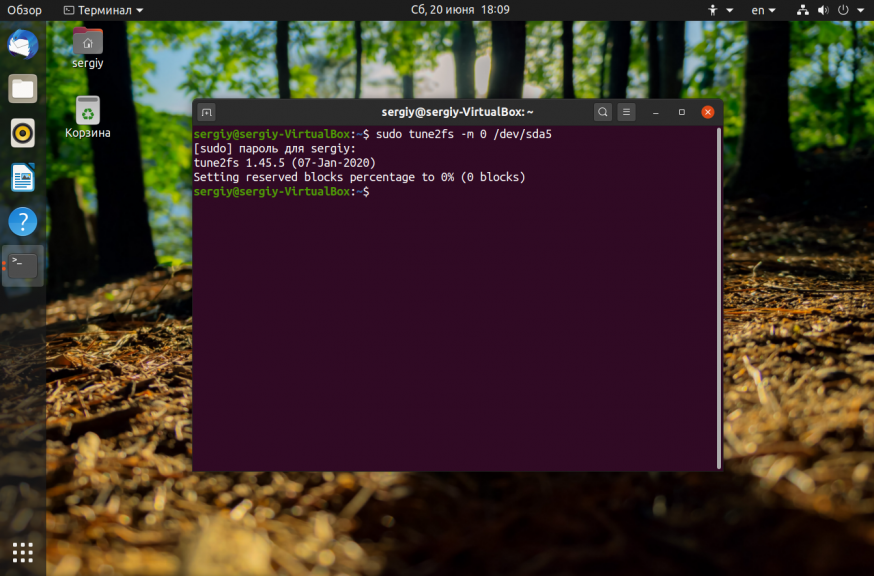

1. Disable the space reserved for root

Filesystems of the ext family, which are the most common choice for both the root and home partitions, usually reserve 5% of the space for the root user in case the disk fills up. You can release this space and use it. To do so, run:

sudo tune2fs -m 0 /dev/sda5

Here the -m option sets the percentage of reserved space, and /dev/sda5 is the partition you want to adjust. After this, more space should be available.
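Before changing the reservation, it can be useful to see how much is actually reserved. `tune2fs -l` prints a "Block count" and a "Reserved block count" line, and the ratio of the two gives the percentage. This is a minimal sketch, assuming a POSIX shell with awk; the function name `reserved_pct` is hypothetical, and it reads the `tune2fs -l` output on stdin.

```shell
# Print the percentage of blocks reserved for root, computed from
# `tune2fs -l` output supplied on stdin.
reserved_pct() {
    awk -F: '
        $1 == "Block count"          { total = $2 + 0 }
        $1 == "Reserved block count" { reserved = $2 + 0 }
        END { if (total > 0) printf "%.1f\n", reserved * 100 / total }
    '
}

# Example (device name taken from the article):
#   sudo tune2fs -l /dev/sda5 | reserved_pct
```

Keep in mind that the reservation is what lets root-owned processes keep running on a full disk, so you may prefer to lower it (for example to 1) rather than set it to 0 on a root partition.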

2. Clear the package manager cache

A package manager, whether apt or yum, usually keeps a cache of packages, repository metadata, and other temporary files on disk. Some of these are unnecessary, and others are needed but can be downloaded again on demand. If you urgently need disk space, you can clear this cache. To clear the apt cache, run:

sudo apt clean

sudo apt autoclean

To clear the yum cache, use:

yum clean all

3. Clear the filesystem cache

You may have deleted some large files, yet the space was not freed afterwards. This problem is typical of servers that have been running for a long time without a reboot. It happens when a running process still holds the deleted file open, so the kernel cannot release its blocks until that process exits. To free the space completely, reboot the server. Simply restart it and more disk space will become available.
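A full reboot is not always necessary. Deleted files keep occupying space only while some process still has them open, so finding and restarting that process is usually enough. This is a minimal sketch, assuming Linux with /proc mounted and a `readlink` command; the function name `list_deleted_open_files` is hypothetical. Run it as root to see every process. If lsof is installed, `lsof +L1` reports similar information.

```shell
# Print "<pid><TAB><path> (deleted)" for every open file descriptor
# that points at a file which has already been unlinked.
list_deleted_open_files() {
    for fd in /proc/[0-9]*/fd/*; do
        # Skip descriptors we cannot read or that vanished meanwhile.
        target=$(readlink "$fd" 2>/dev/null) || continue
        case "$target" in
            *" (deleted)")
                pid=${fd#/proc/}
                pid=${pid%%/*}
                printf '%s\t%s\n' "$pid" "$target"
                ;;
        esac
    done
}

# Example:
#   sudo sh -c '. ./deleted.sh; list_deleted_open_files'
```

Restarting the service that owns the listed PID releases the space; a long-running daemon holding a rotated log file is a typical culprit.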

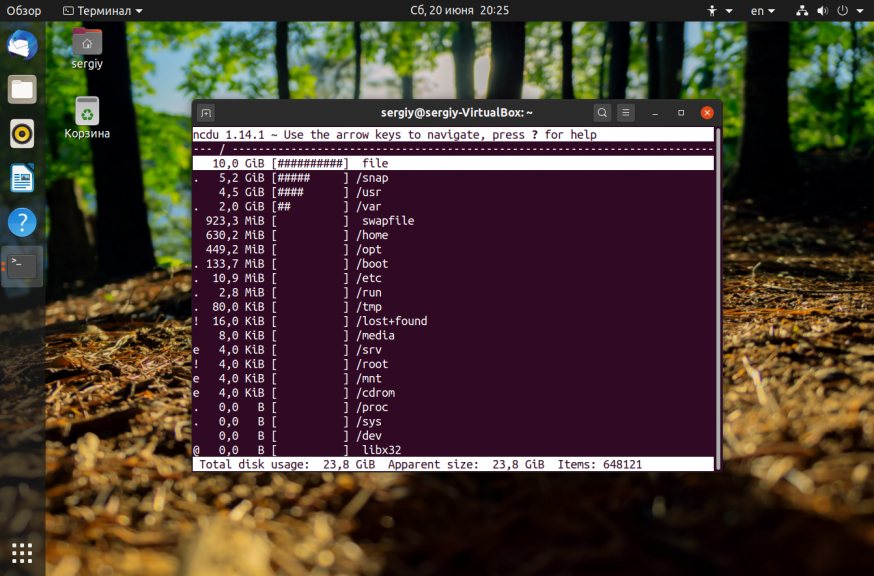

4. Find large files

After following all the recommendations above, you should have enough free space to install dedicated cleanup utilities. To begin with, you can try to find the largest files and delete them if they are not needed. Perhaps some program created a huge log file that took up all the space. To find out what is taking up disk space on Linux, you can use the ncdu utility:

sudo apt install ncdu

It scans all files and displays them by size:

For more on finding large files, see the separate article.

5. Find duplicate files

With the BleachBit utility you can find and remove duplicate files. This will also help save disk space.

6. Remove old kernels

The Linux kernel is updated quite often, and old kernels remain in the /boot directory and take up space. If you gave this directory its own partition, this can soon become a problem: you will get an error during an update because the program simply cannot write the new kernel to the directory. The solution is simple: remove old kernel versions that are no longer needed.

Conclusions

Now you know why the No space left on device error occurs, how to prevent it, and how to fix it if it has already happened to you. Freeing up disk space on Linux is not that hard, but you need to understand why all the space was used up and fix the cause, because next time not all of these methods may help.

This article is distributed under the Creative Commons ShareAlike 4.0 license; a link to the source is required when copying the material.

About the author

Founder and administrator of losst.ru. I am keen on open source software and the Linux operating system, and currently use Ubuntu as my main OS. Besides Linux, I am interested in everything related to information technology and modern science.