@gaos1

Here are the settings on the container

foo=> select * from gp_toolkit.gp_resgroup_config;

-[ RECORD 1 ]----------------+--------------

groupid | 6437

groupname | default_group

concurrency | 20

proposed_concurrency | 20

cpu_rate_limit | 30

memory_limit | 30

proposed_memory_limit | 30

memory_shared_quota | 50

proposed_memory_shared_quota | 50

memory_spill_ratio | 20

proposed_memory_spill_ratio | 20

-[ RECORD 2 ]----------------+--------------

groupid | 6438

groupname | admin_group

concurrency | 10

proposed_concurrency | 10

cpu_rate_limit | 10

memory_limit | 10

proposed_memory_limit | 10

memory_shared_quota | 50

proposed_memory_shared_quota | 50

memory_spill_ratio | 20

proposed_memory_spill_ratio | 20

Here is the explain analyze from the admin_group

foo=# explain analyze CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);

NOTICE: Table doesn't have 'DISTRIBUTED BY' clause. Creating a NULL policy entry.

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------

--------------------------------

Result (cost=0.00..0.00 rows=0 width=0)

Rows out: 2 rows (seg0) with 1.317 ms to first row, 1.637 ms to end.

-> Redistribute Motion 2:2 (slice2; segments: 2) (cost=0.00..2586.14 rows=2 width=20)

Rows out: 2 rows at destination (seg0) with 1.314 ms to first row, 1.632 ms to end.

-> Result (cost=0.00..2586.00 rows=2 width=24)

Rows out: Avg 1.0 rows x 2 workers. Max 1 rows (seg0) with 2.458 ms to first row, 3.518 ms to en

d.

-> Sequence (cost=0.00..2586.00 rows=2 width=24)

Rows out: Avg 1.0 rows x 2 workers. Max 1 rows (seg0) with 2.448 ms to first row, 3.508 ms

to end.

-> Shared Scan (share slice:id 2:0) (cost=0.00..431.00 rows=1 width=1)

Rows out: 1 rows (seg0) with 1.453 ms to end.

-> Materialize (cost=0.00..431.00 rows=1 width=1)

Rows out: 0 rows (seg0) with 1.452 ms to end.

-> GroupAggregate (cost=0.00..431.00 rows=1 width=12)

Group By: public.edge.dest

Rows out: 1 rows (seg0) with 1.361 ms to end.

-> Sort (cost=0.00..431.00 rows=1 width=12)

Sort Key: public.edge.dest

Sort Method: quicksort Max Memory: 33KB Avg Memory: 33KB (2 segme

nts)

Rows out: 1 rows (seg0) with 1.357 ms to end.

Executor memory: 33K bytes avg, 33K bytes max (seg0).

Work_mem used: 33K bytes avg, 33K bytes max (seg0). Workfile: (0 sp

illing)

-> Redistribute Motion 2:2 (slice1; segments: 2) (cost=0.00..431.

00 rows=1 width=12)

Hash Key: public.edge.dest

Rows out: 1 rows at destination (seg0) with 1.339 ms to first

row, 1.340 ms to end.

-> Result (cost=0.00..431.00 rows=1 width=12)

Rows out: 1 rows (seg1) with 0.034 ms to first row, 0.0

35 ms to end.

-> GroupAggregate (cost=0.00..431.00 rows=1 width=12)

Group By: public.edge.dest

Rows out: 1 rows (seg1) with 0.034 ms to end.

-> Sort (cost=0.00..431.00 rows=1 width=4)

Sort Key: public.edge.dest

Sort Method: quicksort Max Memory: 33KB A

vg Memory: 33KB (2 segments)

Rows out: 1 rows (seg1) with 0.030 ms to fi

rst row, 0.031 ms to end.

Executor memory: 33K bytes avg, 33K bytes m

ax (seg0).

Work_mem used: 33K bytes avg, 33K bytes max

(seg0). Workfile: (0 spilling)

-> Table Scan on edge (cost=0.00..431.00 r

ows=1 width=4)

Filter: src <> dest AND NOT src IS NUL

L AND NOT dest IS NULL

Rows out: 1 rows (seg1) with 0.021 ms

to first row, 0.022 ms to end.

-> Sequence (cost=0.00..2155.00 rows=2 width=24)

Rows out: Avg 1.0 rows x 2 workers. Max 1 rows (seg0) with 0.993 ms to first row, 2.

052 ms to end.

-> Shared Scan (share slice:id 2:1) (cost=0.00..431.00 rows=1 width=1)

Rows out: 1 rows (seg1) with 0.113 ms to end.

-> Materialize (cost=0.00..431.00 rows=1 width=1)

Rows out: 0 rows (seg0) with 0.112 ms to end.

-> GroupAggregate (cost=0.00..431.00 rows=1 width=12)

Group By: public.edge.src

Rows out: 1 rows (seg1) with 0.033 ms to end.

-> Sort (cost=0.00..431.00 rows=1 width=4)

Sort Key: public.edge.src

Sort Method: quicksort Max Memory: 33KB Avg Memory: 33KB (2

segments)

Rows out: 1 rows (seg1) with 0.031 ms to end.

Executor memory: 33K bytes avg, 33K bytes max (seg0).

Work_mem used: 33K bytes avg, 33K bytes max (seg0). Workfile:

(0 spilling)

-> Table Scan on edge (cost=0.00..431.00 rows=1 width=4)

Filter: src <> dest AND NOT src IS NULL AND NOT dest IS

NULL

Rows out: 1 rows (seg1) with 0.020 ms to first row, 0.0

21 ms to end.

-> Append (cost=0.00..1724.00 rows=2 width=24)

Rows out: Avg 1.0 rows x 2 workers. Max 1 rows (seg0) with 0.891 ms to first r

ow, 1.949 ms to end.

-> Hash Left Join (cost=0.00..862.00 rows=1 width=24)

Hash Cond: share0_ref2.dest = share1_ref2.src

Rows out: 1 rows (seg0) with 0.890 ms to first row, 1.190 ms to end.

Executor memory: 1K bytes.

Work_mem used: 1K bytes. Workfile: (0 spilling)

-> Shared Scan (share slice:id 2:0) (cost=0.00..431.00 rows=1 width=12)

Rows out: 1 rows (seg0) with 0.001 ms to first row, 0.011 ms to end

.

-> Hash (cost=431.00..431.00 rows=1 width=12)

Rows in: 1 rows (seg1) with 0.004 ms to end, start offset by 7.783

ms.

-> Shared Scan (share slice:id 2:1) (cost=0.00..431.00 rows=1 widt

h=12)

Rows out: 1 rows (seg1) with 0.003 ms to end.

-> Result (cost=0.00..862.00 rows=1 width=24)

Rows out: 1 rows (seg1) with 0.532 ms to first row, 0.682 ms to end.

-> Hash Left Anti Semi Join (cost=0.00..862.00 rows=1 width=12)

Hash Cond: share1_ref3.src = share0_ref3.dest

Rows out: 1 rows (seg1) with 0.531 ms to first row, 0.681 ms to end

.

Executor memory: 1K bytes.

Work_mem used: 1K bytes. Workfile: (0 spilling)

-> Shared Scan (share slice:id 2:1) (cost=0.00..431.00 rows=1 widt

h=12)

Rows out: 1 rows (seg1) with 0.001 ms to end.

-> Hash (cost=431.00..431.00 rows=1 width=4)

Rows in: 1 rows (seg0) with 0.003 ms to end, start offset by

8.335 ms.

-> Shared Scan (share slice:id 2:0) (cost=0.00..431.00 rows=

1 width=4)

Rows out: 1 rows (seg0) with 0.001 ms to end.

Slice statistics:

(slice0) Executor memory: 172K bytes avg x 2 workers, 172K bytes max (seg0).

(slice1) Executor memory: 207K bytes avg x 2 workers, 207K bytes max (seg0). Work_mem: 33K bytes max.

(slice2) Executor memory: 2523K bytes avg x 2 workers, 2531K bytes max (seg0). Work_mem: 33K bytes max.

Statement statistics:

Memory used: 0K bytes

Optimizer status: PQO version 3.9.0

Total runtime: 10.635 ms

(90 rows)

Here is the master log for the isolated non MADlib query failure with default_group. I set client_min_messages and log_min_messages to debug5.

2018-11-29 22:21:54.465997 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd3,seg-1,,,,,"DEBUG5","00000","First char: 'Q'; gp_role = 'dispatch'.",,,,,,,0,,"postgres.c",4940,

2018-11-29 22:21:54.466071 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd3,seg-1,,,,,"DEBUG1","00000","Message type Q received by from libpq, len = 523",,,,,,,0,,"postgres.c",4948,

2018-11-29 22:21:54.466337 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd3,seg-1,,,,,"DEBUG5","00000","Simple query stmt: CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);.",,,,,,,0,,"postgres.c",4955,

2018-11-29 22:21:54.466415 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd3,seg-1,,,,,"DEBUG5","00000","setupRegularDtxContext leaving with DistributedTransactionContext = 'Master Distributed-Capable'.",,,,,,,0,,"cdbtm.c",3452,

2018-11-29 22:21:54.466424 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,,,,"DEBUG3","00000","StartTransactionCommand",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"postgres.c",3202,

2018-11-29 22:21:54.466506 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","createDtx created new distributed transaction gid = 1543277087-0000020569, gxid = 20569.",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"cdbtm.c",2601,

2018-11-29 22:21:54.466556 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","setting SharedLocalSnapshotSlot->startTimestamp = 596845314466010[old=596845308900717])",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"xact.c",2331,

2018-11-29 22:21:54.466624 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","[Distributed Snapshot #0] *StartTransaction* (gxid = 20569, xid = 0, 'Master Distributed-Capable')",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"xact.c",2446,

2018-11-29 22:21:54.466685 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG3","00000","StartTransaction",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"xact.c",5656,

2018-11-29 22:21:54.466745 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG3","00000","name: unnamed; blockState: DEFAULT; state: INPROGR, xid/subid/cid: 0/1/0, nestlvl: 1, children:",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"xact.c",5694,

2018-11-29 22:21:54.466837 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","StartTransaction in DTX Context = 'Master Distributed-Capable', distributed transaction {timestamp 0, xid 0} for local xid 0",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"xact.c",2531,

2018-11-29 22:21:54.466915 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"LOG","00000","statement: CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"postgres.c",1590,

2018-11-29 22:21:54.467039 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","setupRegularDtxContext leaving with DistributedTransactionContext = 'Master Distributed-Capable'.",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"cdbtm.c",3452,

2018-11-29 22:21:54.467101 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","GetSnapshotData maxCount 250, inProgressEntryArray 0x2f70c70",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"procarray.c",1066,

2018-11-29 22:21:54.467178 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","GetSnapshotData serializable true, xmin 0",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"procarray.c",1300,

2018-11-29 22:21:54.467248 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","GetSnapshotData setting globalxmin and xmin to 38984",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"procarray.c",1321,

2018-11-29 22:21:54.467315 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","FillInDistributedSnapshot DTX Context = 'Master Distributed-Capable'",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"procarray.c",884,

2018-11-29 22:21:54.467377 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","createDtxSnapshot distributed snapshot has xmin = 20569, count = 0, xmax = 20569.",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"cdbtm.c",2529,

2018-11-29 22:21:54.467458 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","[Distributed Snapshot #265320] *Create* (gxid = 20569, 'Master Distributed-Capable')",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"cdbtm.c",2534,

2018-11-29 22:21:54.467535 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","Got distributed snapshot from DistributedSnapshotWithLocalXids_Create = true",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"procarray.c",907,

2018-11-29 22:21:54.467623 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","GetSnapshotData(): WRITER currentcommandid 0 curcid 0 segmatesync 0",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"procarray.c",1490,

2018-11-29 22:21:54.468126 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG2","00000","Rolling over previous outstanding Optimizer allocated memory 0",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"memaccounting.c",700,

2018-11-29 22:21:54.472845 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG2","00000","

[OPT]: Using default search strategy",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"COptTasks.cpp",758,

2018-11-29 22:21:54.476000 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"NOTICE","00000","Table doesn't have 'DISTRIBUTED BY' clause. Creating a NULL policy entry.",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"CTranslatorQueryToDXL.cpp",844,

2018-11-29 22:21:54.529863 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG1","00000","GPORCA produced plan",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"orca.c",50,

2018-11-29 22:21:54.530004 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG3","00000","ProcessQuery",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"pquery.c",215,

2018-11-29 22:21:54.530063 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","[Distributed Snapshot #0] (gxid = 20569, 'Master Distributed-Capable')",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"tqual.c",1407,

2018-11-29 22:21:54.530130 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","GetSnapshotData maxCount 0, inProgressEntryArray (nil)",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"procarray.c",1066,

2018-11-29 22:21:54.530196 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","GetSnapshotData serializable false, xmin 38984",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"procarray.c",1300,

2018-11-29 22:21:54.530252 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","GetSnapshotData setting globalxmin and xmin to 38984",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"procarray.c",1321,

2018-11-29 22:21:54.530299 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","FillInDistributedSnapshot DTX Context = 'Master Distributed-Capable'",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"procarray.c",884,

2018-11-29 22:21:54.530362 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","createDtxSnapshot distributed snapshot has xmin = 20569, count = 0, xmax = 20569.",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"cdbtm.c",2529,

2018-11-29 22:21:54.530420 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","[Distributed Snapshot #265321] *Create* (gxid = 20569, 'Master Distributed-Capable')",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"cdbtm.c",2534,

2018-11-29 22:21:54.530488 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","Got distributed snapshot from DistributedSnapshotWithLocalXids_Create = true",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"procarray.c",907,

2018-11-29 22:21:54.530544 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd4,seg-1,,dx20569,,sx1,"DEBUG5","00000","GetSnapshotData(): WRITER currentcommandid 0 curcid 0 segmatesync 0",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"procarray.c",1490,

2018-11-29 22:21:54.530591 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd5,seg-1,,dx20569,,sx1,"ERROR","53000","insufficient memory reserved for statement",,,,,,"CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",0,,"memquota.c",1023,

2018-11-29 22:21:54.530640 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd5,seg-1,,dx20569,,sx1,"LOG","00000","An exception was encountered during the execution of statement: CREATE TABLE out_degrees AS

SELECT

coalesce(in_q.vertex, out_q.vertex) as id,

coalesce(indegree, 0) as indegree,

coalesce(outdegree, 0) as outdegree

FROM

(

SELECT

dest as vertex,

count(*) as indegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

dest

) as in_q

FULL OUTER JOIN

(

SELECT

src as vertex,

count(*) as outdegree

FROM edge

WHERE src != dest AND

src IS NOT NULL AND

dest IS NOT NULL

GROUP BY

src

) as out_q

USING ( vertex);",,,,,,,0,,,,

2018-11-29 22:21:54.530686 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd5,seg-1,,dx20569,,sx1,"DEBUG5","00000","rollbackDtxTransaction called with state = Active Not Distributed, gid = 1543277087-0000020569",,,,,,,0,,"cdbtm.c",1155,

2018-11-29 22:21:54.530693 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd5,seg-1,,dx20569,,sx1,"DEBUG5","00000","releaseGxact called for gid = 1543277087-0000020569 (index = 0)",,,,,,,0,,"cdbtm.c",2622,

2018-11-29 22:21:54.530700 UTC,"gpadmin","foo",p41439,th-157141184,"[local]",,2018-11-29 22:21:34 UTC,0,con3858,cmd5,seg-1,,,,,"DEBUG5","00000","finishDistributedTransactionContext called to change DistributedTransactionContext from Master Distributed-Capable to Local Only (caller = CleanupTransaction, gxid = 0)",,,,,,,0,,"cdbtm.c",3747,

@gaos1 The logs that @goutamtadi1 posted in the above comment are for a madlib query . This query is different than the one in the description but fails for the same insufficient memory error. It will be much easier to debug the non MADlib query because then you don’t need to install MADlib.

- Remove From My Forums

-

Вопрос

-

Hi i am getting the following error when replacing the existing query with parameterised query.

ERROR [XX000] ERROR: insufficient memory reserved for statement (memquota.c:228);

No query has been executed with that handleHave anyone has got this error ? if so kindly let me know !

————————— Radhai Krish | Golden Age is no more far | —————————

Ответы

-

-

Помечено в качестве ответа

Lola Wang

7 декабря 2011 г. 9:10

-

Помечено в качестве ответа

Все ответы

-

Hi Radhai

Can you tell me whether you conver MDX or SQL to parameterised query.

when replacing the existing query with parameterised query. Have you add the Paramter like below screen ?

Suhas | MCP | MCTS

Mark as Answer if this resolves your problem or «Vote as Helpful» if you find it helpful.

-

Hi Suhas !

No its not an MDX query.. its PostgreSQL.

The parameters are not defined as like you ve displayed. I just pasted parameterised query to the dataset property under ‘Query’ and hit ‘Refresh Fields’ .. immediately the error was thrown..

till now unable to modify the query..

please help !

————————— Radhai Krish | Golden Age is no more far | —————————

-

-

Помечено в качестве ответа

Lola Wang

7 декабря 2011 г. 9:10

-

Помечено в качестве ответа

-

Hi Radhai,

This is a common issue, which usually occurs by complex query statement. I suggest you to simplify the query statement as possible as you can, and increase the max server memory inthe meantime.

A ralative thread:

http://blog.sqlauthority.com/2007/03/27/sql-server-fix-error-701-there-is-insufficient-system-memory-to-run-this-query/

Please remember to mark the replies as answers if they help.

We found the issue arised due to the RDL file size got increased , which was got blocked with the settings of SSRS.

Also found a solution somewhere like to increase the value of some kind of <<MaxlifeTime>> in the web.config file and we tried that solution too ..

Finally we did created new rdls and implemented.

————————— Radhai Krish | Golden Age is no more far | —————————

|

0 / 0 / 0 Регистрация: 30.04.2007 Сообщений: 64 |

|

|

1 |

|

|

15.01.2010, 21:13. Показов 38621. Ответов 10

Доброго времени суток. Люди искал на форуме но так ничего не нашел… выходит ошибка insufficient memory и пользователь не может нормально работать до перезагрузки сервера… думал из за размеров индексов бд. бд-30 гб общий размер индексов 9 гб. есть представление с размером индекса в 800 мб. так же на сервере по событию запускаются агенты… Фоновый Агент на локальной машине пинает агент на серваке что бы пользователь не ждал… таким образом на серваке «рождается» больше объектов… количество клиентов около 150-200 чел. Люди SOS!!!! Кто знает просветите.

__________________

0 |

|

0 / 0 / 0 Регистрация: 04.11.2007 Сообщений: 3,019 |

|

|

17.01.2010, 10:23 |

2 |

|

MAdy

0 |

|

0 / 0 / 0 Регистрация: 30.04.2007 Сообщений: 64 |

|

|

17.01.2010, 10:51 |

3 |

|

MAdy клиент 6,5

0 |

|

0 / 0 / 0 Регистрация: 04.11.2007 Сообщений: 3,019 |

|

|

17.01.2010, 10:56 |

4 |

|

Фоновый Агент на локальной машине пинает агент на серваке что бы пользователь не ждал 150 человев одномоменто пинают сервак?

0 |

|

0 / 0 / 0 Регистрация: 30.04.2007 Сообщений: 64 |

|

|

17.01.2010, 11:01 |

5 |

|

не одновременно. дело в том что пользователи видят те доки которые им нужно видеть…. могут отписать док тому кто должен его увидеть соответственно, тот кто должен увидеть должен получить права, вот этот маленький агент запускается на клиентской машине в режиме run in background client thread. Он и запускает агент на серваке.

0 |

|

0 / 0 / 0 Регистрация: 15.12.2006 Сообщений: 641 |

|

|

17.01.2010, 13:34 |

6 |

|

Код обоих агентов в студию. Без этого врядли сможем что-то понять.

0 |

|

dyw |

|

|

25.01.2010, 11:07 |

7 |

|

insufficient memory — очень неприятная ошибка. Но мы ее получали только на клиенте (6.5, 7.0.x). Симптомы везде одинаковые: в форме при рефреше начинают пропадать кнопки, меняется шрифт (становится больше и жирным), в статус-баре пишется ошибка «insufficient memory». Получилось ли у Вас забороть или хотя бы понять из-за чего происходит ошибка? |

|

0 / 0 / 0 Регистрация: 30.04.2007 Сообщений: 64 |

|

|

25.01.2010, 20:17 |

8 |

|

у нас ошибка выходила не в статус баре, а MesssageBox-ом на рабочем месте клиента. Пока ситуация под контролем…

0 |

|

0 / 0 / 0 Регистрация: 04.11.2007 Сообщений: 3,019 |

|

|

26.01.2010, 09:49 |

9 |

|

MAdy 1. Сократили индексы представлений 1 — убили виды? многие ситуации можно пересматривать и предусматривать куда более грамотные варианты?

0 |

|

0 / 0 / 0 Регистрация: 30.04.2007 Сообщений: 64 |

|

|

26.01.2010, 17:46 |

10 |

|

1 — убили виды? не убили а создали дополнительные виды с другой сортировкой, до этого в видах была сортировка по заголовку. таких колонок было 4, размер индекса весил 800 Мб после создания дополнительного вида, размер индекса вида сал весить 300 Мб.

2 — ушли от использования агентов? Не ушли от использования было просто не правильно его запускать в таком виде… по сути, Агент работает не по действию RunOnServer, а по расписанию… insufficient memory — не стандартная ошибка. Еще она может выходит из за того что объекты не успевают удалиться с памяти….

0 |

|

0 / 0 / 0 Регистрация: 28.06.2009 Сообщений: 1,567 |

|

|

26.01.2010, 17:54 |

11 |

|

insufficient memory — не стандартная ошибка но достаточно популярная… примерно как cannot allocate space

0 |

SQL Server 2008 Enterprise SQL Server 2008 Developer SQL Server 2008 Standard SQL Server 2008 Workgroup SQL Server 2008 R2 Datacenter SQL Server 2008 R2 Developer SQL Server 2008 R2 Enterprise SQL Server 2008 R2 Standard SQL Server 2008 R2 Workgroup More…Less

Microsoft distributes Microsoft SQL Server 2008 and Microsoft SQL Server 2008 R2 fixes as one downloadable file. Because the fixes are cumulative, each new release contains all the hotfixes and all the security fixes that were included with the previous SQL Server 2008 or SQL Server 2008 fix release.

Symptoms

When you run a Full-Text query that uses compound words in Microsoft SQL Server 2008 or in Microsoft SQL Server 2008 R2, you receive the following error message in the Error log file:

Date Time spidID Error: 701, Severity: 17, State: 123.

Date Time spidID There is insufficient system memory in resource pool ‘internal’ to run this query.

Additionally, if you run a Full-Text query successfully after this problem occurs, you receive the same error message.

Cause

This problem occurs for all the following reasons:

-

There is a bug in the code that is used to evaluate how many rowsets are required to perform a sorting operation. Therefore, the code greatly overestimates the numbers of rowsets. The required grant estimate in full-text is 64 pages for each rowset that is required to sort.

-

There is a large request that blocks the system from using the memory that is reserved for the full-text query.

Resolution

Cumulative update information

SQL Server 2008 Service Pack 1

The fix for this issue was first released in Cumulative Update 9 for SQL Server 2008 Service Pack 1. For more information about this cumulative update package, click the following article number to view the article in the Microsoft Knowledge Base:

2083921 cumulative update 9 for SQL Server 2008 Service Pack 1Note Because the builds are cumulative, each new fix release contains all the hotfixes and all the security fixes that were included with the previous SQL Server 2008 fix release. Microsoft recommends that you consider applying the most recent fix release that contains this hotfix. For more information, click the following article number to view the article in the Microsoft Knowledge Base:

970365 The SQL Server 2008 builds that were released after SQL Server 2008 Service Pack 1 was released Microsoft SQL Server 2008 hotfixes are created for specific SQL Server service packs. You must apply a SQL Server 2008 Service Pack 1 hotfix to an installation of SQL Server 2008 Service Pack 1. By default, any hotfix that is provided in a SQL Server service pack is included in the next SQL Server service pack.

SQL Server 2008 Service Pack 2

The fix for this issue was first released in Cumulative Update 1 for SQL Server 2008 Service Pack 2. For more information about this cumulative update package, click the following article number to view the article in the Microsoft Knowledge Base:

2289254 Cumulative update 1 for SQL Server 2008 Service Pack 2Note Because the builds are cumulative, each new fix release contains all the hotfixes and all the security fixes that were included with the previous SQL Server 2008 fix release. We recommend that you consider applying the most recent fix release that contains this hotfix. For more information, click the following article number to view the article in the Microsoft Knowledge Base:

2402659 The SQL Server 2008 builds that were released after SQL Server 2008 Service Pack 2 was released

SQL Server 2008 R2

The fix for this issue was first released in Cumulative Update 4. For more information about how to obtain this cumulative update package for SQL Server 2008 R2, click the following article number to view the article in the Microsoft Knowledge Base:

2345451 Cumulative Update package 4 for SQL Server 2008 R2 Note Because the builds are cumulative, each new fix release contains all the hotfixes and all the security fixes that were included with the previous SQL Server 2008 R2 fix release. We recommend that you consider applying the most recent fix release that contains this hotfix. For more information, click the following article number to view the article in the Microsoft Knowledge Base:

981356 The SQL Server 2008 R2 builds that were released after SQL Server 2008 R2 was released

Status

Microsoft has confirmed that this is a problem in the Microsoft products that are listed in the «Applies to» section.

References

For more information about the Incremental Servicing Model for SQL Server, click the following article number to view the article in the Microsoft Knowledge Base:

935897An Incremental Servicing Model is available from the SQL Server team to deliver hotfixes for reported problems

For more information about the naming schema for SQL Server updates, click the following article number to view the article in the Microsoft Knowledge Base:

822499New naming schema for Microsoft SQL Server software update packagesFor more information about Full-Text Search, visit the following Microsoft Developer Network (MSDN) website:

Full-Text Search (SQL Server)For more information about software update terminology, click the following article number to view the article in the Microsoft Knowledge Base:

824684 Description of the standard terminology that is used to describe Microsoft software updates

Need more help?

I get the following error in SQL Server 2008:

There is insufficient system memory in resource pool 'internal' to run this query.

I recently migrated from SQL Server 2000 to SQL Server 2008, and the system is Windows Server 2K8.

asked Feb 3, 2010 at 13:29

5

There are 2 connect bugs reported: One (archive) and Two (archive)

At least two SO questions: One and Two

These may offer something

froque

4322 silver badges13 bronze badges

answered Mar 20, 2010 at 20:22

gbngbn

417k81 gold badges581 silver badges670 bronze badges

Try using the -g switch as described in this MS KB article to increase the amount of virtual memory reserved for sql server.

answered Feb 5, 2010 at 15:05

Nick KavadiasNick Kavadias

7,4622 gold badges36 silver badges45 bronze badges

The are a -G parameter at sqlserver comfiguration to increse the memtoleave portion memory, reponsable area to execute queries, backups, openqueries, etc. By default, the inital value is 256mb. I suggest increase by 128Mb, but take care about that value. Try to serialize simultaneous executions.

answered Feb 24, 2010 at 21:08

You need to take a serious look at your queries. This could happen if you’re doing and ‘IN’/’NOT IN’ compare on a large string value passed into a query. Rather do it on a lookup table/temp table

answered Mar 16, 2010 at 8:53

baldybaldy

5,4844 gold badges21 silver badges18 bronze badges

There is insufficient system memory in resource pool ‘internal’ to run this query

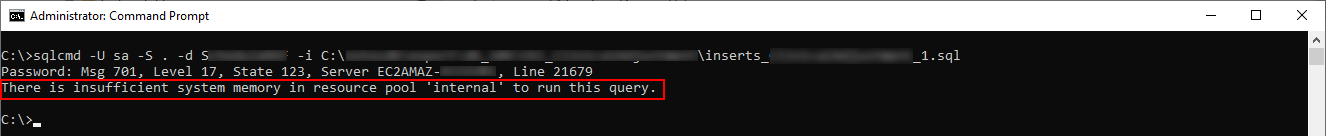

Did you ever try to import a large SQL script in SQL server using SQLCMD utility and got There is insufficient system memory in resource pool ‘internal’ to run this query error as shown in this screenshot.

This error is most likely to occur when there are a lot of consecutive INSERT statements in your SQL script. The SQLCMD utility tries to insert all the records as a single batch and you get this error. No matter how much memory your physical machine has or how much memory you allocate to your SQL server instance, this error will not go away.

Resolution

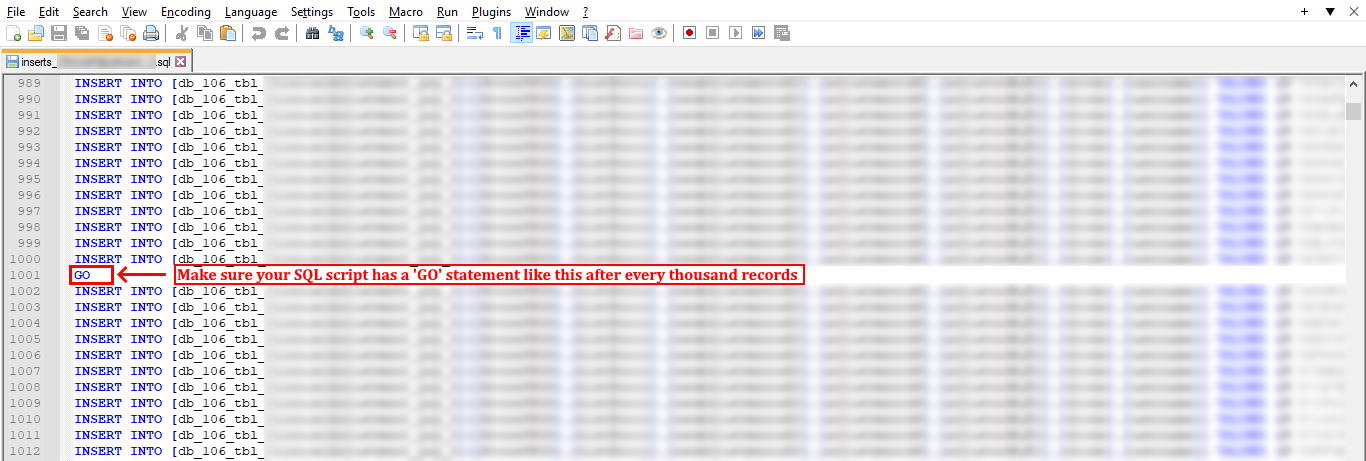

To resolve this error, you just need to insert a GO statement after every few hundred or thousand rows to instruct SQLCMD to treat the rows following the GO statement as a single batch. Make sure your SQL script looks like the one shown in the screenshot:

Now if you’re importing a single SQL script having a few thousand rows, you can do it manually but what if your script has a millions of records or maybe there are hundreds of SQL scripts? In that case, manual process is simply not feasible. Well, PowerShell is here to the rescue.

The following PowerShell script takes all the files having .sql extension recursively from an input directory, inserts a GO statement after every 1000 rows in each file and then saves each file in an output directory with the same name while leaving the original files intact.

<#

.SYNOPSIS

Bulk inserts a GO statement in SQL files

.NOTES

Author : Surender Kumar

Author URI : https://www.techtutsonline.com/staff/surender-kumar/

Version : 1.0

Purpose : Bulk inserts a GO statement in SQL files

#>

$inputDir = "C:inputDir"

$outputDir = "C:outputDir"

$sqlFiles = Get-ChildItem -Path $inputDir -File *.sql -Recurse

$insertLine = @'

GO

'@

foreach($file in $sqlFiles){

Get-Content -Path $file.FullName -ReadCount 1000 | ForEach-Object { $_,$insertLine | Add-Content $outputDir$($file.name) -Force}

}

To save time, you can download my Bulk-InsertLine.ps1 script. You can modify it to meet your own needs. At the end, your SQL script will have a GO statement after every thousand rows. After modifying your SQL script, try importing it again and it will work without error now.

From the output you posted I can see

MEMORYCLERK_SQLQERESERVATIONS 39874 (MB)

The value is 38G which is huge. Why is SQL Server operations(sortand hash) requesting so much memory

What is SQLRESERVATIONS

SQL Server memory allocated during query execution for Sort and Hash operations. Do you really think 38 G is what the sort and hash operations require. This is the problem.

Looking at your resource governor configuration

CREATE WORKLOAD GROUP [fm] WITH(GROUP_MAX_REQUESTS=0,

IMPORTANCE=HIGH,

REQUEST_MAX_CPU_TIME_SEC=0,

REQUEST_MAX_MEMORY_GRANT_PERCENT=100,

REQUEST_MEMORY_GRANT_TIMEOUT_SEC=0,

MAX_DOP=8) USING [fm]

GO

Just have a look at REQUEST_MAX_MEMORY_GRANT_PERCENT=100 now this seems a very wrong configuration to me. According to BOL document

REQUEST_MAX_MEMORY_GRANT_PERCENT = value Specifies the maximum amount

of memory that a single request can take from the pool. This

percentage is relative to the resource pool size specified by

MAX_MEMORY_PERCENT

So this means when queries run on this workload they can request almost ALL memory as memory grant for the execution there by forcing others to starve for memory. And this is may definitely cause OOM error.

Further more what Microsoft says is

We do not recommend setting value greater than 70 because the server

may be unable to set aside enough free memory if other concurrent

queries are running. This may eventually lead to query time-out error

8645

Can you also share the output of

select total_request_count,blocked_task_count,max_request_grant_memory_kb,requested

from sys.dm_resource_governor_workload_groups

SELECT mg.granted_memory_kb, mg.session_id, t.text, qp.query_plan

FROM sys.dm_exec_query_memory_grants AS mg

CROSS APPLY sys.dm_exec_sql_text(mg.sql_handle) AS t

CROSS APPLY sys.dm_exec_query_plan(mg.plan_handle) AS qp

ORDER BY 1 DESC OPTION (MAXDOP 1)

USE master

GO

;WITH cte

AS ( SELECT RP.pool_id ,

RP.Name ,

RP.min_memory_percent ,

RP.max_memory_percent ,

CAST (RP.max_memory_kb / 1024. / 1024.

AS NUMERIC(12, 2)) AS max_memory_gb ,

CAST (RP.used_memory_kb / 1024. / 1024.

AS NUMERIC(12, 2)) AS used_memory_gb ,

CAST (RP.target_memory_kb / 1024. / 1024.

AS NUMERIC(12,2)) AS target_memory_gb,

CAST (SI.committed_target_kb / 1024. / 1024.

AS NUMERIC(12, 2)) AS committed_target_kb

FROM sys.dm_resource_governor_resource_pools RP

CROSS JOIN sys.dm_os_sys_info SI

)

SELECT c.pool_id ,

c.Name ,

c.min_memory_percent ,

c.max_memory_percent ,

c.max_memory_gb ,

c.used_memory_gb ,

c.target_memory_gb ,

CAST(c.committed_target_kb *

CASE WHEN c.committed_target_kb <= 8 THEN 0.7

WHEN c.committed_target_kb < 16 THEN 0.75

WHEN c.committed_target_kb < 32 THEN 0.8

WHEN c.committed_target_kb <= 96 THEN 0.85

WHEN c.committed_target_kb > 96 THEN 0.9

END * c.max_memory_percent /100 AS NUMERIC(12,2))

AS [Max_for_InMemory_Objects_gb]

FROM cte c

EDIT:

From the output you posted

total_request_count blocked_task_count max_request_grant_memory_kb

-------------------- ------------------ ---------------------------

553 0 18000

1633564 0 19344744

0 0 0

89509 0 23448232

2073 0 8032

24999 0 4485384

861807 0 3149248

236419 0 32241240

293 0 32241240

1735195 0 1889544

Now you can see there are so many requests which your workgroup handles and see the amount of memory they requested all ranges from 19G to 32 G. This can be a problem the query requesting huge memory grants can deprive others from memory and also note the memory for workgroup wont be shared.

You can also see

granted_memory_kb session_id

-------------------- ----------

19344744 210

Session ID 210 was granted 19G of memory. This is blunder. just consider a scenario if 5-10 such queries start running.

Can you also add output of

select pool_id,cache_memory_kb,used_memory_kb,out_of_memory_count,used_memgrant_kb from sys.dm_resource_governor_resource_pools