I am running a Spark program in IntelliJ and getting the following error:

«object apache is not a member of package org».

I have used these import statements in the code:

import org.apache.spark.SparkContext
import org.apache.spark.SparkContext._
import org.apache.spark.SparkConf

These import statements fail at the sbt prompt too.

The corresponding library appears to be missing, but I am not sure how to add it or which path to copy it to.

asked Mar 31, 2017 at 7:10


Make sure you have entries like these in your build.sbt:

scalaVersion := "2.11.8"

libraryDependencies ++= Seq(
  "org.apache.spark" %% "spark-core" % "2.1.0",
  "org.apache.spark" %% "spark-sql" % "2.1.0"
)

Then make sure IntelliJ knows about these libraries by either enabling «auto-import» or doing it manually by clicking the refresh-looking button on the SBT panel.

answered Mar 31, 2017 at 23:29

Vidya


About five years after the previous answer I had the same issue, and that answer did not work for me. Hopefully this one helps anyone who finds themselves in the same position.

I was able to run my Scala program from the sbt shell, but not from IntelliJ. This is what I did to fix it:

- Import the build.sbt file as a project: File -> Open -> select build.sbt -> choose the «Project» option.

- Install the sbt plugin and restart IntelliJ: File -> Settings -> Plugins -> search for sbt and install it.

- Run sbt: click View -> Tool Windows -> sbt, then click the refresh button in the sbt window. The project should load successfully. Rebuild the project.

- Select your file and click «Run». It should now work.

answered Jan 6, 2022 at 14:02

Sarvavyapi


Mo Ro

Posted on May 14, 2020, updated on Jun 1, 2020

When I started the Udemy course «Streaming Big Data with Spark Streaming & Scala — Hands On!» by Sundog, I was sure that running the first task would be easy.

As I took my first steps in the Scala world, I realised there is a learning curve to the common tools such as SBT and IntelliJ.

Installing IntelliJ as an IDE for Scala development should be straightforward, but the latest version has some quirks. Here is everything in one place for others to go through when needed:

- Install IntelliJ IDEA Community (currently 2020.1.1).

- On first startup, install the Scala plugin. Alternatively, later in the «Create Project» window, go to Configure -> Plugins, look for the Scala plugin and install it.

- When creating the project, go to the «Scala» tab and make sure you use Scala 2.12.11 (explanation below) and the latest SBT.

- Create the project.

- Wait until the indexing process completes. You can see the progress bar at the bottom of the IntelliJ window.

- After the project is created, right-click the root name -> click ‘Add Framework Support…’ -> add Scala.

- Right-click the src folder and choose Mark Directory as -> Sources Root.

- Now everything should work. Put this in src/main/scala:

object Main {
  def main(args: Array[String]): Unit = {
    println("hi")
  }
}


Right-click the file and click «Run».

This code has no dependencies and should run ok.

Adding dependencies

I learned that adding dependencies is not straightforward. SBT downloads dependency files from repositories, and when a dependency fails you need to «debug» why.

As an example, let’s go through the process of adding a dependency with SBT.

Add this import statement to the code above:

import org.apache.spark.streaming.StreamingContext

object Main {
  def main(args: Array[String]): Unit = {
    println("hi")
  }
}


When you click Run, you should get this error:

Error:(1, 12) object apache is not a member of package org
import org.apache.spark.streaming.StreamingContext

This means the dependency is missing.

Let’s simulate a broken dependency and see how to fix it.

Say you found this dependency line on some website and added it to your build.sbt file:

libraryDependencies += "org.apache.spark" %% "spark-streaming" % "2.3.8"


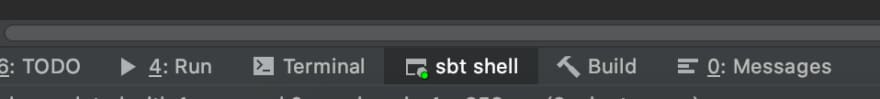

Go to the sbt shell tab and run reload, then run update. You should see an error:

[error] stack trace is suppressed; run last update for the full output
[error] (update) sbt.librarymanagement.ResolveException: Error downloading org.apache.spark:spark-streaming_2.12:2.3.8
[error] Not found
[error] Not found
[error] not found: /Users/mrot/.ivy2/local/org.apache.spark/spark-streaming_2.12/2.3.8/ivys/ivy.xml
[error] not found: https://repo1.maven.org/maven2/org/apache/spark/spark-streaming_2.12/2.3.8/spark-streaming_2.12-2.3.8.pom


Browse to the Maven repository from the URL in the last line:

https://repo1.maven.org/maven2/org/apache/spark/

and then through the directories until you find the version you need.

There you can see that you need to update the Spark version of the dependency to at least 2.4.0, the first Spark release published for Scala 2.12.

Let’s change the dependency to:

libraryDependencies += "org.apache.spark" %% "spark-streaming" % "2.4.0"

You can also use the sbt side window, but I didn’t find it as useful as the sbt shell.

We use Scala 2.12.11 because I found that most packages support it.
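The artifact name in the error comes from how %% works: it appends the Scala binary version to the artifact name, so with Scala 2.12.11 the dependency "spark-streaming" is looked up as spark-streaming_2.12. The sketch below rebuilds the two locations listed in the update error from the dependency coordinates, which is a quick way to see which directory to browse to. MavenPath and its methods are hypothetical helpers for illustration, not part of sbt, and the two-component binary version only holds for Scala 2.x (Scala 3 artifacts use a plain _3 suffix).

```scala
// Hypothetical helpers (not part of sbt's API) that rebuild the two paths
// shown in the "sbt update" error for a dependency declared with %%.
object MavenPath {
  // %% appends the Scala *binary* version: "2.12.11" -> "2.12" (Scala 2.x only)
  def binaryVersion(scalaVersion: String): String =
    scalaVersion.split('.').take(2).mkString(".")

  // "spark-streaming" with Scala 2.12.11 -> "spark-streaming_2.12"
  def crossName(artifact: String, scalaVersion: String): String =
    s"${artifact}_${binaryVersion(scalaVersion)}"

  // Maven layout: group dots become slashes, then artifact/version/artifact-version.pom
  def mavenCentralPom(org: String, artifact: String, version: String, scalaVersion: String): String = {
    val name = crossName(artifact, scalaVersion)
    val orgPath = org.replace('.', '/')
    s"https://repo1.maven.org/maven2/$orgPath/$name/$version/$name-$version.pom"
  }

  // Local Ivy layout: the organisation keeps its dots, the descriptor is ivys/ivy.xml
  def localIvyXml(home: String, org: String, artifact: String, version: String, scalaVersion: String): String =
    s"$home/.ivy2/local/$org/${crossName(artifact, scalaVersion)}/$version/ivys/ivy.xml"

  def main(args: Array[String]): Unit = {
    val home = sys.props("user.home")
    println(localIvyXml(home, "org.apache.spark", "spark-streaming", "2.3.8", "2.12.11"))
    println(mavenCentralPom("org.apache.spark", "spark-streaming", "2.3.8", "2.12.11"))
  }
}
```

Running main prints the same two paths as the log above, with your own home directory in the first one. Swapping in a different Spark or Scala version shows which directory to look for on Maven Central before editing build.sbt.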


scala, 05-03-2021

Question

import org.apache.tools.ant.Project

object HelloWorld {
  def main(args: Array[String]) {
    println("Hello, world!")
  }
}

I tried to run this code using the following command:

java -cp D:\tools\apache-ant-1.7.0\lib\ant.jar;D:\tools\scala-2.9.1.final\lib\scala-compiler.jar;D:\tools\scala-2.9.1.final\lib\scala-library.jar -Dscala.usejavacp=true scala.tools.nsc.MainGenericRunner D:\test\scala\ant.scala

I get the following error:

D:\test\scala\ant.scala:1: error: object apache is not a member of package org
import org.apache.tools.ant.Project
^
one error found

What is wrong?

UPDATE:

As far as I can see, it is impossible to import any org.xxx package.

The same problem occurs with javax.xml.xxx packages:

D:\test\test2.scala:2: error: object crypto is not a member of package javax.xml
import javax.xml.crypto.Data
^
one error found

Actually, I cannot import anything!

D:\test\test3.scala:3: error: object test is not a member of package com
import com.test.utils.ant.taskdefs.SqlExt
^
one error found

Solution

I experimented with scala.bat (uncommenting the echo of the final command line; see the line starting with echo "%_JAVACMD%" ...) and found that this should work. Here the Ant jar is passed to the Scala runner's own -cp option, after MainGenericRunner, rather than to the JVM:

java -Dscala.usejavacp=true -cp d:\Dev\scala-2.9.1.final\lib\scala-compiler.jar;d:\Dev\scala-2.9.1.final\lib\scala-library.jar scala.tools.nsc.MainGenericRunner -cp d:\Dev\apache-ant-1.8.2\lib\ant.jar D:\test\scala\ant.scala

OTHER TIPS

You haven’t included the ant jar file in your classpath.

The compiler effectively builds objects representing the nested package structure. There is already a top-level package named org from the JDK (org.xml, for example), but without additional jars org.apache is not there.
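A rough run-time analogue of this (an illustration only; scalac resolves imports against the compile-time classpath, not through a class loader) is to ask the JVM whether a class can be loaded. ClasspathProbe is a hypothetical helper: org.xml.sax.XMLReader ships with the JDK, so it is always found, while the Ant and Spark classes are only found when their jars are on the classpath.

```scala
import scala.util.Try

// Hypothetical helper: reports whether a fully qualified class name can be
// loaded from the classpath this program was started with.
object ClasspathProbe {
  def isOnClasspath(className: String): Boolean =
    // initialize = false: only locate and load the class, do not run static initializers
    Try(Class.forName(className, false, getClass.getClassLoader)).isSuccess

  def main(args: Array[String]): Unit = {
    val names = Seq(
      "org.xml.sax.XMLReader",                       // part of the JDK: the org package always exists
      "org.apache.tools.ant.Project",                // only present if ant.jar is on the classpath
      "org.apache.spark.streaming.StreamingContext"  // only present if spark-streaming is a dependency
    )
    names.foreach { n =>
      println(s"$n -> ${if (isOnClasspath(n)) "found" else "missing"}")
    }
  }
}
```

If the second or third line prints "missing" when run with the same classpath you compile with, the jar never made it onto that classpath, which is exactly the situation that produces «object apache is not a member of package org».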

I found that, in general, when an error of the form «object XXX is not a member of package YYY» occurs, you should:

- Check that all your files are in a package, i.e. not in the top-level src/ directory.

I would check that ‘D:\tools\apache-ant-1.7.0\lib\ant.jar’ exists and that it is not corrupt (zero length, or impossible to open with ‘jar tf D:\tools\apache-ant-1.7.0\lib\ant.jar’).

Run it with the scala command instead:

scala -cp D:\tools\apache-ant-1.7.0\lib\ant.jar;D:\tools\scala-2.9.1.final\lib\scala-compiler.jar;D:\tools\scala-2.9.1.final\lib\scala-library.jar D:\test\scala\ant.scala

When I run sbt package on the code below, I get the following errors:

- object apache is not a member of package org
- not found: value SparkSession

My Spark version: 2.4.4

My Scala version: 2.11.12

My build.sbt:

name := "simpleApp"
version := "1.0"
scalaVersion := "2.11.12"

//libraryDependencies += "org.apache.spark" %% "spark-core" % "2.4.4"
libraryDependencies ++= {
  val sparkVersion = "2.4.4"
  Seq("org.apache.spark" %% "spark-core" % sparkVersion)
}

My Scala project:

import org.apache.spark.sql.SparkSession

object demoapp {
  def main(args: Array[String]) {
    val logfile = "C:/SUPPLENTA_INFORMATICS/demo/hello.txt"
    val spark = SparkSession.builder.appName("Simple App in Scala").getOrCreate()
    val logData = spark.read.textFile(logfile).cache()
    val numAs = logData.filter(line => line.contains("Washington")).count()
    println(s"Lines are: $numAs")
    spark.stop()
  }
}

asked 19 hours ago


If you want to use Spark SQL, you also have to add the spark-sql module to the dependencies:

// https://mvnrepository.com/artifact/org.apache.spark/spark-sql
libraryDependencies += "org.apache.spark" %% "spark-sql" % "2.4.4"

Also, note that you have to reload your project in SBT after changing the build definition and import the changes in IntelliJ.
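Combined with the build.sbt from the question, the full build definition might look like this. It is a sketch that keeps the question's name and versions; SparkSession lives in the spark-sql module, and both Spark modules share one sparkVersion value so they cannot drift apart.

```scala
name := "simpleApp"
version := "1.0"
scalaVersion := "2.11.12"

// Keep every Spark module on the same version; SparkSession comes from spark-sql
libraryDependencies ++= {
  val sparkVersion = "2.4.4"
  Seq(
    "org.apache.spark" %% "spark-core" % sparkVersion,
    "org.apache.spark" %% "spark-sql"  % sparkVersion
  )
}
```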

answered 18 hours ago

cbley
