Hello all,

I just installed Proxmox on a new OptiPlex to act as a new node for my little homelab. It has one NIC and one SSD. I installed and configured the OS and it all seems to be working. I was able to create a cluster and then join the new node to it without any issues. The problem arises when I try to move a live VM from one node to another. I get this error:

Code:

2020-03-21 10:48:19 starting migration of VM 113 to node 'proxmox-pve-optiplex980' (192.168.1.14)

2020-03-21 10:48:20 found local disk 'Storage:113/vm-113-disk-0.raw' (in current VM config)

2020-03-21 10:48:20 found local disk 'Storage:113/vm-113-disk-1.raw' (in current VM config)

2020-03-21 10:48:20 copying local disk images

2020-03-21 10:48:20 starting VM 113 on remote node 'proxmox-pve-optiplex980'

2020-03-21 10:48:21 [proxmox-pve-optiplex980] lvcreate 'pve/vm-113-disk-0' error: Run `lvcreate --help' for more information.

2020-03-21 10:48:21 ERROR: online migrate failure - remote command failed with exit code 255

2020-03-21 10:48:21 aborting phase 2 - cleanup resources

2020-03-21 10:48:21 migrate_cancel

2020-03-21 10:48:22 ERROR: migration finished with problems (duration 00:00:03)

TASK ERROR: migration problems

Here is the output of pveversion -v on the new node:

Code:

root@proxmox-pve-optiplex980:~# pveversion -v

proxmox-ve: 6.1-2 (running kernel: 5.3.18-2-pve)

pve-manager: 6.1-8 (running version: 6.1-8/806edfe1)

pve-kernel-helper: 6.1-7

pve-kernel-5.3: 6.1-5

pve-kernel-5.0: 6.0-11

pve-kernel-5.3.18-2-pve: 5.3.18-2

pve-kernel-5.0.21-5-pve: 5.0.21-10

pve-kernel-5.0.15-1-pve: 5.0.15-1

ceph-fuse: 12.2.11+dfsg1-2.1+b1

corosync: 3.0.3-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.15-pve1

libpve-access-control: 6.0-6

libpve-apiclient-perl: 3.0-3

libpve-common-perl: 6.0-17

libpve-guest-common-perl: 3.0-5

libpve-http-server-perl: 3.0-5

libpve-storage-perl: 6.1-5

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 3.2.1-1

lxcfs: 3.0.3-pve60

novnc-pve: 1.1.0-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.1-3

pve-cluster: 6.1-4

pve-container: 3.0-22

pve-docs: 6.1-6

pve-edk2-firmware: 2.20200229-1

pve-firewall: 4.0-10

pve-firmware: 3.0-6

pve-ha-manager: 3.0-9

pve-i18n: 2.0-4

pve-qemu-kvm: 4.1.1-4

pve-xtermjs: 4.3.0-1

qemu-server: 6.1-7

smartmontools: 7.1-pve2

spiceterm: 3.1-1

vncterm: 1.6-1

zfsutils-linux: 0.8.3-pve1

And here is the output of the cat /etc/pve/storage.cfg command:

Code:

root@proxmox-pve-optiplex980:~# cat /etc/pve/storage.cfg

dir: local

path /var/lib/vz

content vztmpl,iso,backup

lvmthin: local-lvm

thinpool data

vgname pve

content images,rootdir

dir: Storage

path /mnt/pve/Storage

content iso,rootdir,vztmpl,snippets,images

is_mountpoint 1

nodes proxmox-pve

shared 0

lvmthin: VM-Storage

thinpool VM-Storage

vgname VM-Storage

content images,rootdir

nodes proxmox-pve

Does anyone have any ideas what the issue might be? I am sure it has something to do with my storage configuration, but I am not very familiar with Linux storage configs. Any help or advice would be great!
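One detail visible in the config above is that both 'Storage' and 'VM-Storage' carry a `nodes proxmox-pve` restriction, so neither is defined for the new node. Whether that is what makes lvcreate fail is not established here. The following is a minimal sketch for listing each storage with its node restriction; `storage_nodes` is a hypothetical helper name, and the parsing assumes section headers of the form `<type>: <id>` and a `nodes` property line, as in the output shown.

```shell
# List every storage in a storage.cfg-style file with its node restriction.
# Assumption: headers look like "<type>: <id>", properties like "nodes a,b".
# With no argument the function reads from standard input.
storage_nodes() {
    awk '
        /^[a-z]+: / {
            # A new section starts: flush the previous one first.
            if (id != "") print id, nodes
            id = $2
            nodes = "(all nodes)"
            next
        }
        $1 == "nodes" { nodes = $2 }
        END { if (id != "") print id, nodes }
    ' "$@"
}
```

It could be run as `storage_nodes /etc/pve/storage.cfg`. Any storage that holds a VM disk but does not list the migration target would be a candidate to look at more closely.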

G’day there,

With the help of the Proxmox community and @Dominic we were able to migrate our ESXi VMs across to PVE — thank you!

https://forum.proxmox.com/threads/migrating-vms-from-esxi-to-pve-ovftool.80655 (for anybody interested)

The migrated (ex-ESXi) VMs are now part of a 3-node PVE cluster, though being the New Year holidays there had to be trouble!

Strangely, we’re unable to move these VMs imported from ESXi to other nodes in the newly made PVE cluster. All imported VMs are currently on node #2, because nodes #1 and #3 had to be reclaimed from ESXi, reinstalled, and joined to the PVE cluster (they had to hold zero guests to do so).

The VMs are all operational on the PVE node that they were imported to, and boot/reboot without issue.

Our problem is isolated to attempting to migrate them.

Problem we’re seeing is:

2020-12-21 00:58:27 starting migration of VM 222 to node 'pve1' (x.y.x.y)

2020-12-21 00:58:27 found local disk 'local-lvm:vm-222-disk-0' (in current VM config)

2020-12-21 00:58:27 copying local disk images

2020-12-21 00:58:27 starting VM 222 on remote node 'pve1'

2020-12-21 00:58:29 [pve1] lvcreate 'pve/vm-222-disk-0' error: Run `lvcreate --help' for more information.

2020-12-21 00:58:29 ERROR: online migrate failure - remote command failed with exit code 255

2020-12-21 00:58:29 aborting phase 2 - cleanup resources

2020-12-21 00:58:29 migrate_cancel

2020-12-21 00:58:30 ERROR: migration finished with problems (duration 00:00:03)

TASK ERROR: migration problems

Error 255 & attempt to migrate to other host:

Sadly, it looks like it should be reporting a more useful error than what appears to be the final line of lvcreate’s error output: "Run `lvcreate --help' for more information". Looking through other Proxmox Forum threads, the 255 error code seems to cover a few situations, so we’re unclear as to exactly what’s gone wrong.

The error flow above is the same if we attempt with another of the 4x imported VMs, even if we try to send them to the alternative spare host. Does that likely point to a setting/issue that has to do with the migration in from ESXi? Whether it’s a setting, an incompatibility or otherwise is unclear.

Only peculiarity that we can locate:

All 4x of the imported VMs have disks attached that Proxmox seems to not know the size of. Each VM only has 1x disk, which were carried over via ovftool from ESXi. I’m not sure if that’s potentially causing lvcreate on the target node/s to fail due to the disk size not being specified?

EXAMPLE — Imported from ESXi to PVE:

Hard Disk (scsi0) — local-lvm:vm-222-disk-0

EXAMPLE — Created on PVE, never migrated:

Hard Disk (scsi0) — local-lvm:vm-106-disk-0,backup=0,size=800G,ssd=1
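To check every imported VM for this peculiarity without clicking through the GUI, something like the sketch below might help. It is only an illustration: `disks_without_size` is a hypothetical helper name, and it assumes input in the `key: value` form that `qm config <vmid>` prints, with disks on scsi, sata, ide, or virtio buses.

```shell
# Print disk entries of a VM config that carry no size= option.
# Assumption: input is `qm config <vmid>` style text, one "key: value" per line.
# With no argument the function reads from standard input.
disks_without_size() {
    grep -E '^(scsi|sata|ide|virtio)[0-9]+: ' "$@" |
        grep -v 'media=cdrom' |   # CD-ROM drives are not migrated disks
        grep -v ',size='          # keep only entries lacking a size
}
```

Usage would be `qm config 222 | disks_without_size`. If it prints a line, `qm rescan --vmid 222` is documented as updating disk sizes in the VM config; whether that clears the lvcreate failure on the target node is an untested assumption.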

Has anyone here had any experience with this? We’ve made some suggestions in the other thread (linked at the top of this post) about ovftool in the PVE wiki based on our experience. The ESXi/ovftool part of the page looks to have been added into the wiki quite recently.

I can add in other logs/files/etc — not overly sure where to look as log-searching for the job ID didn’t give us much additional info.

Hopefully someone is kind enough to shed some light on this for us! Thank you so much, and Happy Holidays!

Cheers,

LinuxOz

Cause of this Error?

The Proxmox VM migration failed with the error “Permission denied (publickey,password)” because of how my cluster was rebuilt. I previously had four nodes in my Proxmox cluster. To do some testing, I removed three of the physical nodes. Once the testing was complete, I needed to join them back to the Proxmox cluster.

Before being added back, the nodes were rebuilt from scratch. Adding them to the cluster worked without any issue. However, the new SSH authorized keys were appended below the existing keys, which creates a conflict. Removing the offending key and then adding the new key with the node's alias name resolves this issue.

The Actual Error

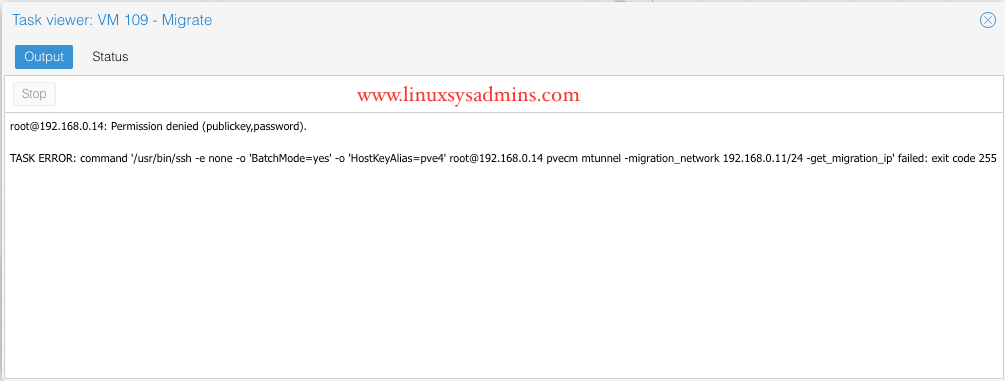

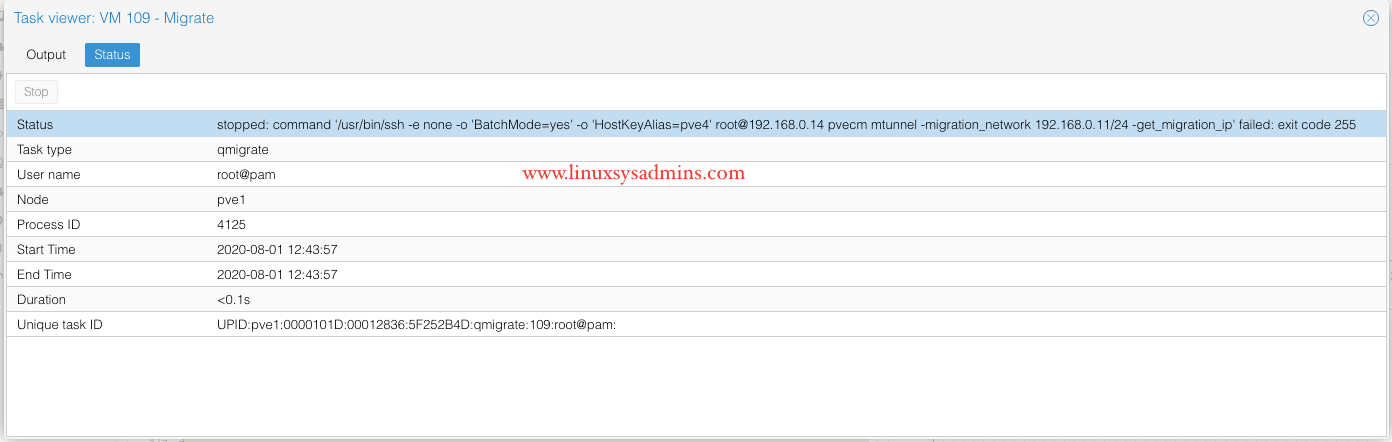

While trying to migrate a VM from the graphical console, we get the error below.

root@192.168.0.14: Permission denied (publickey,password).

TASK ERROR: command '/usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=pve4' root@192.168.0.14 pvecm mtunnel -migration_network 192.168.0.11/24 -get_migration_ip' failed: exit code 255

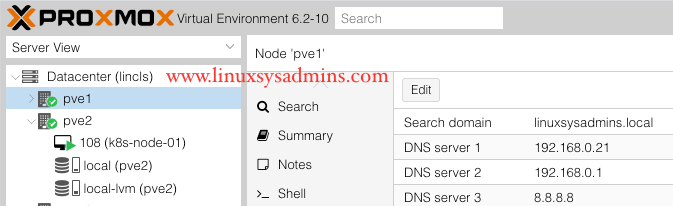

First, verify whether DNS is resolving on the first node in your Proxmox cluster.

# cat /etc/resolv.conf

Or, from the GUI, go to Datacenter –> pve1 –> DNS. The DNS entry should appear in the right-hand pane.

In my case, the DNS entry was missing. I added it and restarted the networking service.

Remove the offending key

SSH to the destination server to remove the duplicate offending key.

# ssh root@192.168.0.14

Remove the old authorized keys from .ssh/authorized_keys

root@pve4:~# vim .ssh/authorized_keys

Save and exit using :wq!
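Before editing, it can help to see which entries are actually duplicated. The sketch below is one possible way to do that: `duplicate_key_comments` is a hypothetical helper name, and it assumes each key line has no leading options, so that the comment (for example root@pve4) is the third field.

```shell
# Show key comments (e.g. root@pve4) that occur more than once
# in an authorized_keys file.
# Assumption: lines look like "<type> <base64-key> <comment>".
duplicate_key_comments() {
    awk 'NF >= 3 && $1 !~ /^#/ { print $3 }' "$1" | sort | uniq -d
}
```

Running `duplicate_key_comments .ssh/authorized_keys` on the destination node would print each comment that appears on two or more lines; the older of those lines is the likely candidate for removal.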

Copy the new Key

From the first PVE node, copy the key using the -o option to provide an alias name for the specific IP.

root@pve1:~# ssh-copy-id -o 'HostKeyAlias=pve4' root@192.168.0.14

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@pve4's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh -o 'HostKeyAlias=pve4' 'root@192.168.0.14'"

and check to make sure that only the key(s) you wanted were added.

root@pve1:~#

Verify SSH Connection

Now verify the SSH connection once again.

root@pve1:~# ssh root@pve4

Linux pve4 5.4.34-1-pve #1 SMP PVE 5.4.34-2 (Thu, 07 May 2020 10:02:02 +0200) x86_64

The programs included with the Debian GNU/Linux system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent

permitted by applicable law.

Last login: Sat Aug 1 12:47:26 2020 from 192.168.0.11

root@pve4:~#

Migrate and Verify

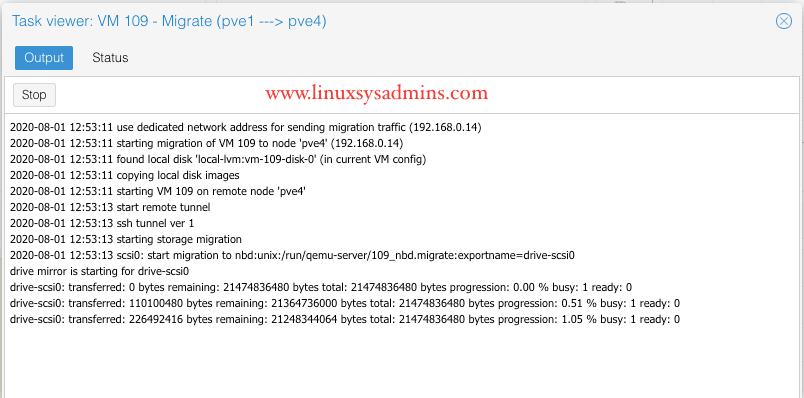

Try to migrate a VM from PVE1 to PVE4. I will apply the same fix to my remaining nodes after this.

The long output below is truncated.

2020-08-01 12:53:11 use dedicated network address for sending migration traffic (192.168.0.14)

2020-08-01 12:53:11 starting migration of VM 109 to node 'pve4' (192.168.0.14)

2020-08-01 12:53:11 found local disk 'local-lvm:vm-109-disk-0' (in current VM config)

2020-08-01 12:53:11 copying local disk images

2020-08-01 12:53:11 starting VM 109 on remote node 'pve4'

2020-08-01 12:53:13 start remote tunnel

2020-08-01 12:53:13 ssh tunnel ver 1

2020-08-01 12:53:13 starting storage migration

2020-08-01 12:53:13 scsi0: start migration to nbd:unix:/run/qemu-server/109_nbd.migrate:exportname=drive-scsi0

drive mirror is starting for drive-scsi0

all mirroring jobs are ready

2020-08-01 12:56:18 volume 'local-lvm:vm-109-disk-0' is 'local-lvm:vm-109-disk-0' on the target

2020-08-01 12:56:18 starting online/live migration on unix:/run/qemu-server/109.migrate

2020-08-01 12:56:18 set migration_caps

2020-08-01 12:56:18 migration speed limit: 8589934592 B/s

2020-08-01 12:56:18 migration downtime limit: 100 ms

2020-08-01 12:56:18 migration cachesize: 536870912 B

2020-08-01 12:56:18 set migration parameters

2020-08-01 12:56:18 start migrate command to unix:/run/qemu-server/109.migrate

2020-08-01 12:56:30 migration speed: 20.79 MB/s - downtime 27 ms

2020-08-01 12:56:30 migration status: completed

drive-scsi0: transferred: 21475295232 bytes remaining: 0 bytes total: 21475295232 bytes progression: 100.00 % busy: 0 ready: 1

all mirroring jobs are ready

drive-scsi0: Completing block job...

drive-scsi0: Completed successfully.

drive-scsi0 : finished

2020-08-01 12:56:31 # /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=pve4' root@192.168.0.14 pvesr set-state 109 ''{}''

2020-08-01 12:56:32 stopping NBD storage migration server on target.

Logical volume "vm-109-disk-0" successfully removed

2020-08-01 12:56:36 migration finished successfully (duration 00:03:26)

TASK OK

That’s it, it works. We have resolved the Proxmox VM migration failure with “Permission denied (publickey,password)”.

Conclusion

If we reinstall any node in a Proxmox cluster, we need to make sure to clear the old authorized keys and use the correct alias for each node. Copying the key from the first node to the other nodes with the alias fixes the failed Proxmox VM migration.