vepatel added a commit that referenced this issue on Apr 26, 2021:

# This is the 1st commit message: add ingress mtls test
# This is the commit message #2: add std vs
# This is the commit message #3: change vs host
# This is the commit message #4: Update tls secret
# This is the commit message #5: update certs with host
# This is the commit message #6: modify get_cert
# This is the commit message #7: Adding encoded cert
# This is the commit message #8: Update secrets
# This is the commit message #9: Add correct cert and SNI module
# This is the commit message #10: Bump styfle/cancel-workflow-action from 0.8.0 to 0.9.0 (#1527)
Bumps [styfle/cancel-workflow-action](https://github.com/styfle/cancel-workflow-action) from 0.8.0 to 0.9.0.
- [Release notes](https://github.com/styfle/cancel-workflow-action/releases)
- [Commits](styfle/cancel-workflow-action@0.8.0...89f242e)
Signed-off-by: dependabot[bot] <support@github.com>
Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>
# This is the commit message #11: Remove patch version from Docker image for tests (#1534)
# This is the commit message #12: Add tests for Ingress TLS termination
# This is the commit message #13: Improve assertion of TLS errors in tests
When NGINX terminates a TLS connection for a server with a missing/invalid TLS secret, we expect NGINX to reject the connection with the error TLSV1_UNRECOGNIZED_NAME. In this commit we:
* ensure the specific error
* rename the assertion function to be more specific
# This is the commit message #14: Bump k8s.io/client-go from 0.20.5 to 0.21.0 (#1530)
Bumps [k8s.io/client-go](https://github.com/kubernetes/client-go) from 0.20.5 to 0.21.0.
- [Release notes](https://github.com/kubernetes/client-go/releases)
- [Changelog](https://github.com/kubernetes/client-go/blob/master/CHANGELOG.md)
- [Commits](kubernetes/client-go@v0.20.5...v0.21.0)
Signed-off-by: dependabot[bot] <support@github.com>
Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>
# This is the commit message #15: Improve tests Dockerfile
* Reorganize layers so that changes to the tests do not cause a full image rebuild
* Use .dockerignore to ignore cache folders
* Convert spaces to tabs for consistency with the other Dockerfiles
# This is the commit message #16: Upgrade kubernetes-python client to 12.0.1 (#1522)
* Upgrade kubernetes-python client to 12.0.1
Co-authored-by: Venktesh Patel <ve.patel@f5.com>
# This is the commit message #17: Bump k8s.io/code-generator from 0.20.5 to 0.21.0 (#1531)
Bumps [k8s.io/code-generator](https://github.com/kubernetes/code-generator) from 0.20.5 to 0.21.0.
- [Release notes](https://github.com/kubernetes/code-generator/releases)
- [Commits](kubernetes/code-generator@v0.20.5...v0.21.0)
Signed-off-by: dependabot[bot] <support@github.com>
Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>
# This is the commit message #18: Test all images (#1533)
* Test on all images
* Update nightly to test all images
* Run all test markers on debian plus also
* Update .github/workflows/nightly.yml
# This is the commit message #19: Add tests for default server
# This is the commit message #20: Support running tests in kind
# This is the commit message #21: Update badge for Fossa (#1546)
# This is the commit message #22: Fix ensuring connection in tests
* Add timeout for establishing a connection to prevent potential "hangs" of the test runs. The problem was noticeable when running tests in kind.
* Increase the number of tries to make sure the Ingress Controller pod has enough time to get ready. When running tests in kind locally, the number of tries was sometimes not enough.
# This is the commit message #23: Ensure connection in Ingress TLS tests
Ensure connection to NGINX before running tests. Without this, the first connection to NGINX would sometimes hang (timeout). The problem is noticeable when running tests in kind.
# This is the commit message #24: Revert changes in nightly for now (#1547)
# This is the commit message #25: Bump actions/cache from v2.1.4 to v2.1.5 (#1541)
Bumps [actions/cache](https://github.com/actions/cache) from v2.1.4 to v2.1.5.
- [Release notes](https://github.com/actions/cache/releases)
- [Commits](actions/cache@v2.1.4...1a9e213)
Signed-off-by: dependabot[bot] <support@github.com>
Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>
# This is the commit message #26: Create release workflow
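Commits #22 and #23 describe making sure a connection can be established before the tests run. The idea is to put a timeout on each attempt so that a stalled connect cannot hang the run, and to retry a bounded number of times so the Ingress Controller pod has time to become ready. Below is a minimal Python sketch of that pattern. It assumes a plain TCP reachability check, and the helper `ensure_connection` and its parameters are hypothetical, not the test suite's actual code:

```python
import socket
import time


def ensure_connection(host: str, port: int, tries: int = 10,
                      timeout: float = 5.0, delay: float = 1.0) -> bool:
    """Return True as soon as a TCP connection to host:port succeeds.

    Hypothetical helper: each attempt is bounded by `timeout` seconds so a
    stalled connect cannot hang the run, and up to `tries` attempts are made,
    sleeping `delay` seconds between them to give the pod time to get ready.
    """
    for attempt in range(1, tries + 1):
        try:
            # create_connection raises OSError (socket.timeout included)
            # when the connection cannot be established within `timeout`.
            with socket.create_connection((host, port), timeout=timeout):
                return True
        except OSError:
            if attempt < tries:
                time.sleep(delay)
    return False


if __name__ == "__main__":
    # Start a throwaway listener on a free local port and check it is reachable.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))
    server.listen()
    port = server.getsockname()[1]
    print(ensure_connection("127.0.0.1", port, tries=3, timeout=1.0, delay=0.1))
    server.close()
```

Bounding both the per-attempt timeout and the number of tries gives a hard upper limit on how long the check can block. In the worst case, that limit is roughly `tries * timeout + (tries - 1) * delay`.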


-

I am getting the following error when trying to push my Docker image to this registry. Any suggestions on how to fix it?

error parsing HTTP 413 response body: invalid character '<' looking for beginning of value: "<html>\r\n<head><title>413 Request Entity Too Large</title></head>\r\n<body bgcolor="white">\r\n<center><h1>413 Request Entity Too Large</h1></center>\r\n<hr><center>nginx/1.14.0 (Ubuntu)</center>\r\n</body>\r\n</html>\r\n"

This means you’re not on the latest version; I believe there’s already a fix for that.

-

@msbt Thanks! Looks like I missed the latest update. It’s working fine now.

-

@msbt I am still getting this error for very large images now, while smaller images seem to get pushed without issues. Is there some associated parameter that controls the size of images that can be pushed?

-

@esawtooth Can you confirm you are on Cloudron 6.1.2 and also using the docker registry package 1.0.0 ? The entity too large was specifically fixed in package 1.0.0.

-

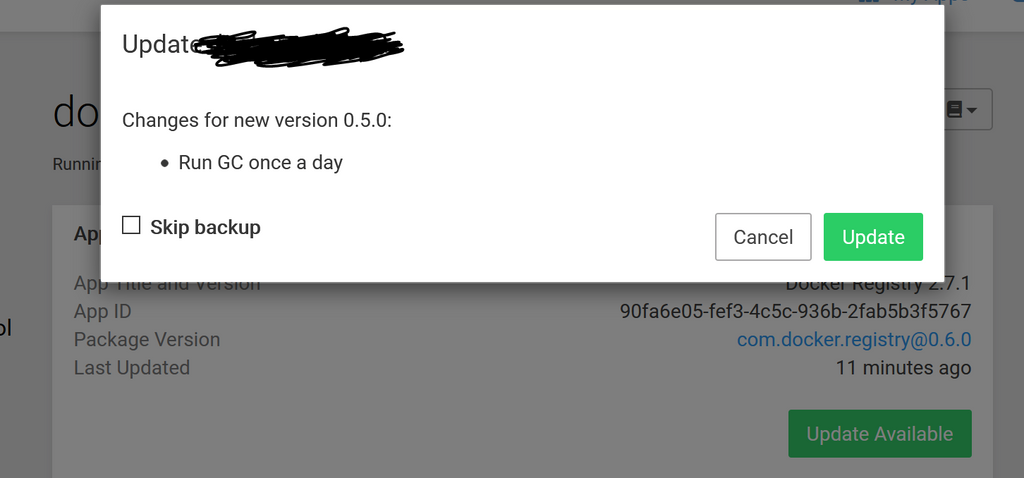

@girish The platform version is 6.1.2. The package version is listed as 0.6.0 (see the screenshot). An update seems to be available, but for some reason the updated version is shown as 0.5.0, which cannot be "updated" to.

Clearly, this is not 1.0.0, but I am not sure why I am not getting that app update. I updated this app successfully a while ago, but I’m afraid I did not note the package version I was updating to at the time.

-

Refreshing the UI a couple of times got me an available 1.0.0 update. It looks like some weird bug. In any case, I’ll make sure my package version is 1.0.0 and check again.

Update: This is working now. Tested with a few large images. Thanks!

When setting up nginx ingress on Kubernetes for a private Docker Registry, I ran into an error when trying to push an image to it.

Error parsing HTTP response: invalid character '<' looking for beginning of value: "<html>\r\n<head><title>413 Request Entity Too Large</title></head>\r\n<body bgcolor="white">\r\n<center><h1>413 Request Entity Too Large</h1></center>\r\n<hr><center>nginx/1.9.14</center>\r\n</body>\r\n</html>\r\n"

Many people who run nginx as a standard web server or proxy will already know the “413 Request Entity Too Large” error. nginx limits the size of the request body it will accept, such as a file uploaded via POST. This limit is set by the client_max_body_size directive, which defaults to 1 MB, and it helps mitigate some DoS attacks. The default is easy to adjust in the nginx configuration. In the Kubernetes world, however, things are a bit different. I prefer to do things the Kubernetes way, but established configuration idioms are still lacking, since part of Kubernetes’ appeal is its flexibility. One thousand different ways of doing something means ten thousand variations of remedies for every potential problem. Googling errors in the Kubernetes world leads to a mess of solutions that are not always explicitly tied to an implementation. I hope that, as Kubernetes continues to gain popularity, more care will be taken to provide context for solutions to common problems.
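Here is how the limit works in concrete terms. nginx compares the request body size against `client_max_body_size`. It accepts the size suffixes `k`, `m` and `g`, treats `0` as "no limit", and answers 413 only when the body is strictly larger than the limit. The Python sketch below models just that comparison. It is an illustration, not nginx code, and the helper names are hypothetical:

```python
import re

# nginx size suffixes: none (bytes), k/K, m/M, g/G
_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_nginx_size(value: str) -> int:
    """Convert an nginx-style size such as "1m", "512k" or "4g" to bytes."""
    match = re.fullmatch(r"\s*(\d+)\s*([kKmMgG]?)\s*", value)
    if match is None:
        raise ValueError(f"not an nginx size: {value!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit.lower()]


def is_rejected_with_413(body_bytes: int, client_max_body_size: str = "1m") -> bool:
    """Model nginx's check: a body larger than the limit gets 413; "0" disables it."""
    limit = parse_nginx_size(client_max_body_size)
    return limit != 0 and body_bytes > limit


if __name__ == "__main__":
    layer = 300 * 1024 ** 2  # a hypothetical 300 MB image layer
    print(is_rejected_with_413(layer))          # True: exceeds the 1 MB default
    print(is_rejected_with_413(layer, "0"))     # False: the check is disabled
    print(is_rejected_with_413(layer, "512m"))  # False: fits under 512 MB
```

An image push sends each layer as a request body, so a single layer larger than the limit is enough to trigger the 413.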


Context

I now use the official NGINX Ingress Controller. There are quite a few ingress controllers out there, so this solution applies only to the official NGINX Ingress Controller. If you don’t already have it, you can follow its straightforward deployment instructions.

I found this solution in the Ingress examples folder of the GitHub repository. I probably should have started there on my journey to set up a private Docker registry on my Kubernetes cluster.

Using annotations you can customize various configuration settings. In the case of nginx upload limits, use the annotation below:

nginx.ingress.kubernetes.io/proxy-body-size: "0"

The annotation (see its context in the gist: Registry Ingress Example above) removes any restriction on upload size. Of course, you can set this to a size appropriate to your situation.
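Here is a hedged sketch of where the annotation sits in a registry Ingress. It builds a `networking.k8s.io/v1` Ingress as a Python dict and prints it as JSON, which `kubectl apply -f` also accepts. Older clusters may need a different Ingress API version. The host `registry.example.com`, the Service name `docker-registry`, port 5000 and the `nginx` ingress class are all hypothetical placeholders:

```python
import json


def registry_ingress(host: str, service: str, port: int,
                     body_size: str = "0") -> dict:
    """Build a networking.k8s.io/v1 Ingress routing `host` to a registry Service.

    All names are hypothetical placeholders. The proxy-body-size annotation is
    read by the NGINX Ingress Controller; "0" disables the body-size check.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": f"{service}-ingress",
            "annotations": {
                "nginx.ingress.kubernetes.io/proxy-body-size": body_size,
            },
        },
        "spec": {
            "ingressClassName": "nginx",  # assumed IngressClass name
            "rules": [{
                "host": host,
                "http": {
                    "paths": [{
                        "path": "/",
                        "pathType": "Prefix",
                        "backend": {
                            "service": {"name": service, "port": {"number": port}},
                        },
                    }],
                },
            }],
        },
    }


if __name__ == "__main__":
    manifest = registry_ingress("registry.example.com", "docker-registry", 5000)
    # Save the output to a file and apply it with: kubectl apply -f <file>
    print(json.dumps(manifest, indent=2))
```

To cap uploads instead of removing the limit, pass a size such as `body_size="4g"`.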

Still Not Working?

Maybe you installed the NGINX Ingress Controller incorrectly, as I did. I was rushing to get a project done at 3 am, cutting and pasting commands like a wild monkey. I installed the non-RBAC configuration, but my cluster uses RBAC. To make things worse, everything else worked fine for some reason (or at least seemed to) until this configuration issue came up and the annotations had no effect. Double-check your install steps.

Not the Ingress Controller you are using?

Here are some solutions for alternative implementations:

- 413 Request Entity Too Large NGINX Ingress Controller by nginx

- File upload limit in Kubernetes & Nginx

This blog post, titled "Kubernetes — 413 Request Entity Too Large: Configuring the NGINX Ingress Controller", by Craig Johnston, is licensed under a Creative Commons Attribution 4.0 International License.

SUPPORT

Order my new Kubernetes book: Advanced Platform Development with Kubernetes: Enabling Data Management, the Internet of Things, Blockchain, and Machine Learning


I have a docker-compose file:

version: '3'
services:
  nginx-balancer:
    build:
      context: ./nginx-balancer
      dockerfile: Dockerfile
    container_name: nginx-balancer
    image: localapp/nginx-balancer
    ports:
      - "80:80"
      - "443:443"
    depends_on:
      - nginx-webserver1
      - nginx-webserver2
    networks:
      - app-network
  nginx-webserver1:
    build:
      context: ./nginx-webserver
      dockerfile: Dockerfile
    container_name: nginx-webserver1
    image: localapp/nginx-webserver
    ports:
      - "8081:80"
    volumes:
      - ./code:/var/www/application.local
    networks:
      - app-network
  nginx-webserver2:
    build:
      context: ./nginx-webserver
      dockerfile: Dockerfile
    container_name: nginx-webserver2
    image: localapp/nginx-webserver
    ports:
      - "8082:80"
    volumes:
      - ./code:/var/www/application.local
    networks:
      - app-network
  php1:
    build:
      context: ./php-fpm
      dockerfile: Dockerfile
    image: localapp/php
    container_name: php1
    volumes:
      - ./code:/var/www/application.local
    networks:
      - app-network
  php2:
    build:
      context: ./php-fpm
      dockerfile: Dockerfile
    image: localapp/php
    container_name: php2
    volumes:
      - ./code:/var/www/application.local
    networks:
      - app-network
  elastic_search:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.16.3
    container_name: elastic_search
    ports:
      - "9200:9200"
      - "9300:9300"
    environment:
      - "discovery.type=single-node"
    networks:
      - app-network
  kibana:
    image: docker.elastic.co/kibana/kibana:7.16.3
    container_name: kibana
    ports:
      - "5601:5601"
    environment:
      - "ELASTICSEARCH_HOSTS=http://elastic_search:9200"
    networks:
      - app-network
    depends_on:
      - elastic_search
networks:
  app-network:
    driver: bridge

But when I run docker-compose up, I get an error while pulling the ElasticSearch image:

ERROR: error pulling image configuration: error parsing HTTP 403 response body: invalid character '<' looking for beginning of value: "<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">\n<HTML><HEAD><META HTTP-EQUIV="Content-Type" CONTENT="text/html; charset=iso-8859-1">\n<TITLE>ERROR: The request could not be satisfied</TITLE>\n</HEAD><BODY>\n<H1>403 ERROR</H1>\n<H2>The request could not be satisfied.</H2>\n<HR noshade size="1px">\nThe Amazon CloudFront distribution is configured to block access from your country.\nWe can't connect to the server for this app or website at this time. There might be too much traffic or a configuration error. Try again later, or contact the app or website owner.\n<BR clear="all">\nIf you provide content to customers through CloudFront, you can find steps to troubleshoot and help prevent this error by reviewing the CloudFront documentation.\n<BR clear="all">\n<HR noshade size="1px">\n<PRE>\nGenerated by cloudfront (CloudFront)\nRequest ID: jJDE7TvrCqKBhC8W5tRNl6ajh8oICSXm3EidpZCnSViCHgV7jjtY6A==\n</PRE>\n<ADDRESS>\n</ADDRESS>\n</BODY></HTML>"

Any idea what the problem might be?
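All of the errors quoted on this page share the same shape. The Docker client expects a JSON error document of the form `{"errors": [...]}` from the registry. Here, a proxy in front of the registry (nginx in the 413 cases, Amazon CloudFront in this one) answered with an HTML page instead, so JSON parsing fails on the first `<`. The Python sketch below mimics that situation and pulls the HTML `<title>` out as a readable summary. The function name `describe_error_body` is hypothetical:

```python
import json
import re


def describe_error_body(body: str) -> str:
    """Summarize a registry error response body.

    A registry returns JSON such as {"errors": [{"code": ..., "message": ...}]}.
    A proxy in front of it may return an HTML page instead; parsing that as JSON
    fails on the leading '<', which is what the Docker client reports.
    """
    try:
        payload = json.loads(body)
    except json.JSONDecodeError as exc:
        # Not JSON: fall back to the HTML <title>, if there is one.
        title = re.search(r"<title>(.*?)</title>", body, re.IGNORECASE | re.DOTALL)
        summary = title.group(1).strip() if title else "no <title> found"
        return f"non-JSON body ({exc.msg}): {summary}"
    if not isinstance(payload, dict):
        return "unexpected JSON body"
    messages = [e.get("message", "") for e in payload.get("errors", [])]
    return "registry error: " + "; ".join(messages)


if __name__ == "__main__":
    html = "<html>\r\n<head><title>413 Request Entity Too Large</title></head></html>"
    print(describe_error_body(html))
    # -> non-JSON body (Expecting value): 413 Request Entity Too Large
    print(describe_error_body('{"errors": [{"code": "DENIED", "message": "denied"}]}'))
    # -> registry error: denied
```

When the summary is an HTML title, it points at whatever sits between the client and the registry rather than at the registry itself. In this case, that is the CloudFront geo-block.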

I use a Google model (a binary file of about 3 GB) in my Dockerfile, and then use Jenkins to build and deploy it to the production server. The rest of the code is pulled from a Bitbucket repository.

Here is the excerpt from the Dockerfile where I download and unpack the file. This happens only once, after which the command is cached.

FROM python:2.7.13-onbuild

RUN mkdir -p /usr/src/app
WORKDIR /usr/src/app

ARG DEBIAN_FRONTEND=noninteractive

# Refresh the package index once, install everything in a single layer,
# and clean the apt lists to keep the image smaller
RUN apt-get update \
    && apt-get install -y apt-utils curl unzip \
    && rm -rf /var/lib/apt/lists/*

# Download the model and decompress it; the trailing backslash keeps the
# pipe to gunzip inside the same RUN instruction
RUN curl -o - https://s3.amazonaws.com/dl4j-distribution/GoogleNews-vectors-negative300.bin.gz \
    | gunzip > /usr/src/app/GoogleNews-vectors-negative300.bin

Everything works fine when I build and run the Docker image on my local machine. However, when I cut a patch release to push these changes to the production server via Jenkins, the build fails. The install, build, and test phases run fine, but the post-build phase fails. (The build process pushes the changes to the repository, and according to the logs all the commands in the Dockerfile also run fine.) After that, something happens and I get the following error in the logs:

18:49:27 654f45ecb7e3: Layer already exists
18:49:27 2c40c66f7667: Layer already exists
18:49:27 97108d083e01: Pushed
18:49:31 35a4b123c0a3: Pushed
18:50:10 1e730b4fb0a6: Pushed
18:53:46 error parsing HTTP 413 response body: invalid character '<' looking for beginning of value: "<html>\r\n<head><title>413 Request Entity Too Large</title></head>\r\n<body bgcolor="white">\r\n<center><h1>413 Request Entity Too Large</h1></center>\r\n<hr><center>nginx/1.10.1</center>\r\n</body>\r\n</html>\r\n"

Could the file be too large?

Before I added this file, everything with Docker and Jenkins worked fine.

I wonder whether Docker or Jenkins has any limits when handling a large file like this, or whether I’m breaking something in the way I’m approaching it.

Update:

Increasing client_max_body_size fixed this particular error. However, I get another error when running ssh -o StrictHostKeyChecking=no [email protected] "cd /root/ourapi && docker-compose pull api && docker-compose -p somefolder up -d"

The docker pull fails at this point. It tries to download the image (1.6 GB) but aborts when it has almost reached that size, then retries, and the retry ends with an EOF error.

Which brings me back to my original question: is there something special about handling large files in this situation?

Update 2:

Problem solved. I needed to increase client_max_body_size to 4 GB and also increase the timeout for pulling from our own repository server. Adjusting these two settings solved the problem.
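The fix combines two settings. The body-size limit must be large enough for the biggest upload, and the timeout must be long enough for the slowest transfer. Both checks can be sketched as back-of-the-envelope arithmetic in Python. The 10 MiB/s throughput and the 2x safety factor below are hypothetical figures, not measurements from this setup, and the post does not name the specific timeout setting that was raised:

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def fits_limit(upload_bytes: int, limit_bytes: int) -> bool:
    """True if an upload of this size passes a body-size limit (0 means unlimited)."""
    return limit_bytes == 0 or upload_bytes <= limit_bytes


def min_timeout_seconds(transfer_bytes: int, bytes_per_second: float,
                        safety_factor: float = 2.0) -> float:
    """Rough lower bound for a transfer timeout, padded by a safety factor."""
    return transfer_bytes / bytes_per_second * safety_factor


if __name__ == "__main__":
    limit = 4 * GIB          # client_max_body_size 4g
    model_file = 3 * GIB     # the ~3 GB model file from the question
    image = 1600 * MIB       # the ~1.6 GB image being pulled
    throughput = 10 * MIB    # hypothetical 10 MiB/s link
    print(fits_limit(model_file, limit))             # True
    print(min_timeout_seconds(image, throughput))    # 320.0
```

At the assumed rate, a 1.6 GB pull needs about 160 seconds of raw transfer time. Any timeout near or below that value would cut the download off close to completion, which matches the aborted pulls described above.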