I’ve run my configuration without using Docker and it works, so this seems to be a docker-workflow issue.

Make sure you have updated all relevant plugins, especially durable-task, as there have been diagnostic improvements. Typically the problem is that the Docker daemon does not share a filesystem with the client, which is a prerequisite for Image.inside to work.

Jesse Glick

added a comment - 2016-07-25 17:55

Not enough information here.

I’m seeing a similar issue where the sh command inside a docker.image block always terminates after some delay. The point at which Jenkins errors out within the sh command varies, which leads me to believe that Jenkins is causing the problem. I’m on the latest version of Jenkins and all plugins. Any tips on how to debug this?

Ben Mathews

added a comment - 2016-10-31 17:26

Typically it means workspace sharing between host & container is failing. If the agent itself is inside a container, make sure --volumes-from is included on the command line; if not, nothing will work.
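One way to check this is to look at the docker run line that Image.inside echoes into the build log. The sketch below is only illustrative: the function name mount_check and its output words are made up here, and it does nothing more than scan a command-line string for --volumes-from or for a -v mount of the workspace path.

```shell
# Hypothetical helper (not part of Jenkins or Docker): classify a
# "docker run ..." line copied from the build log.
#   $1 = the full command line, $2 = the workspace path on the agent
mount_check() {
    cmdline=$1
    workspace=$2
    case "$cmdline" in
        *"--volumes-from "*) echo "volumes-from" ;;
        *"-v $workspace:"*)  echo "bind-mount" ;;
        *)                   echo "no-workspace-mount" ;;
    esac
}
```

A result of no-workspace-mount would suggest that the container cannot see the workspace at all. The other two results only show that a mount was requested, not that it points at the same files the agent sees.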

Jesse Glick

added a comment - 2016-10-31 18:42

The workspace is getting mounted (the --volumes-from param is present) and is being interacted with. I’ve got a shell script that should take a couple of minutes to complete, but within a couple of seconds the script terminates and Jenkins returns the above-mentioned error:

ERROR: script returned exit code -1
Finished: FAILURE

The Jenkins log has the call stack:

hudson.AbortException: script returned exit code -1
    at org.jenkinsci.plugins.workflow.steps.durable_task.DurableTaskStep$Execution.check(DurableTaskStep.java:285)
    at org.jenkinsci.plugins.workflow.steps.durable_task.DurableTaskStep$Execution.run(DurableTaskStep.java:234)
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
    at java.lang.Thread.run(Thread.java:745)

Looking at the pipeline code in the call stack, it appears that Jenkins thinks my process is exiting. But (a) it never fails when I launch the process directly with "docker run …" and (b) it fails at a different spot every time.

I’ve replaced my script with "for i in `seq 1 20`; do echo $i;date;sleep 5;done" and it never fails. So it is apparent that something in the interaction between Jenkins and my script is failing.

FWIW, the script is a series of "python setup.py develop" commands.

Ben Mathews

added a comment - 2016-11-01 21:02

benm Hmm, does not sound like a familiar issue. The fake exit status -1 means that Jenkins cannot find the PID of the controller sh script which tracks the output and exit code of your actual script (also sh, unless you specified a #!/bin/command). The typical reason for the failure to find this PID when using Image.inside is that the container does not share the right mount with the agent, for example because --volumes-from was not passed when it should have been. But in your case it was, and you say other shell scripts work, so something trickier is happening. If you can narrow it down to a reproducible test case, that would help a lot of course. Otherwise you will need to inspect the process tree inside the container, to see if the wrapper script is really still running or not; and inspect the …job@tmp sibling workspace that holds the control directory with the PID, output, and exit code.
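For the second inspection step, a small helper can report whether a PID recorded in a file still refers to a live process. This is a sketch under assumptions: the function name pid_file_status is made up, and the path of the PID file is left to the caller because the exact layout of the control directory is not spelled out in this thread.

```shell
# Hypothetical helper: report whether the PID stored in a file refers to a
# live process. The file path is supplied by the caller; no file name inside
# the control directory is assumed here.
pid_file_status() {
    pid_file=$1
    if [ ! -r "$pid_file" ]; then
        echo "missing"
        return 0
    fi
    pid=$(cat "$pid_file")
    # kill -0 sends no signal; it only checks that the process exists
    # and that we are allowed to signal it.
    if kill -0 "$pid" 2>/dev/null; then
        echo "alive"
    else
        echo "dead"
    fi
}
```

Because PIDs are only meaningful inside the namespace that created them, a check like this would have to run in the same container as the script, for example through docker exec, rather than on the host.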

Jesse Glick

added a comment - 2016-11-01 21:49

An update….

I cut the problem down to this repro:

stage('run unit tests') {
    node() {
        docker.image("centos:6.7").inside() {
            sh 'for i in `seq 1 50`; do echo $i;date;sleep 2;done'
        }
    }
}

This fails both on a Jenkins container using https://hub.docker.com/r/axltxl/jenkins-dood/ and on Jenkins installed on my Ubuntu laptop via apt-get.

Before creating a ticket, I made one final test on our production Jenkins server and it passed with flying colors four times consecutively. Obviously, something is strange with my box. Not sure what, though. Any ideas would be appreciated. I’ll keep poking around.

$ uname -a
Linux mathewslaptop 4.4.0-45-generic #66-Ubuntu SMP Wed Oct 19 14:12:37 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux
$ cat /etc/os-release
NAME="Ubuntu"
VERSION="16.04.1 LTS (Xenial Xerus)"
ID=ubuntu
ID_LIKE=debian
PRETTY_NAME="Ubuntu 16.04.1 LTS"
VERSION_ID="16.04"
HOME_URL="http://www.ubuntu.com/"
SUPPORT_URL="http://help.ubuntu.com/"
BUG_REPORT_URL="http://bugs.launchpad.net/ubuntu/"
UBUNTU_CODENAME=xenial
$ docker -v
Docker version 1.12.1, build 23cf638

Ben Mathews

added a comment - 2016-11-03 17:11

Are you using a (remote) agent on the production server but not in the test environment, or vice-versa? Generally should not matter except to the extent that it is the functioning of the docker command on the agent where the build is running which matters.

Jesse Glick

added a comment - 2016-11-08 18:01

The production server is running the builds on a centos 7 slave. My test environment is running jenkins in a docker container that is mounting /var/run/docker.sock from my laptop.

Ben Mathews

added a comment - 2016-12-24 16:36

benm did you eventually solve this problem? I’m seeing the same problem, where sh scripts are failing with -1.

I am also using:

docker.image(….).inside {

}

As a further note, my Jenkins instance is also a docker container.

Craig Rodrigues

added a comment - 2017-03-16 01:58

Sorry, I should have followed up back in Dec. I’ve forgotten what happened with this. I stopped trying to do development locally, so I probably never resolved it.

Ben Mathews

added a comment - 2017-03-16 17:13

benm fair enough. I’m running into the same problem so was just curious.

My understanding of the problem is that if your Jenkins server is running inside a Docker container, and then you try to start a docker container on the same server (so you are doing Docker container inside Docker container), then that doesn’t work so well.

The Durable Task plugin used by the pipeline sh step has some complicated logic for how it figures out the process ID (pid) of the shell script that has been executed, and this logic gets confused when you do Docker inside Docker, and returns -1, even though your shell script is still running. I ran into the -1 problems in JENKINS-32264 (not in a Docker context).
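Whether an agent is itself running in a container can be checked with a small heuristic. The sketch below is an assumption for illustration, not necessarily how the Docker Pipeline plugin decides: it looks for a 64-character hexadecimal container ID in a cgroup file, and the function name container_id_from_cgroup is made up.

```shell
# Hypothetical helper: print the first 64-character hex ID found in a cgroup
# file, or nothing if there is none.
#   $1 = path to the cgroup file (defaults to /proc/self/cgroup)
container_id_from_cgroup() {
    cgroup_file=${1:-/proc/self/cgroup}
    [ -r "$cgroup_file" ] || return 0
    grep -Eo '[0-9a-f]{64}' "$cgroup_file" | head -n 1
}
```

An empty result is not conclusive, since on some hosts the cgroup file may not contain the container ID even when the process is containerized.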

Craig Rodrigues

added a comment - 2017-03-16 19:20

I have been having the same problems as benm with the shell script returning -1 if it is run inside a docker.inside block in a pipeline.

I took Ben’s testcase here: https://issues.jenkins-ci.org/browse/JENKINS-35370?focusedCommentId=275803&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-275803

and could reproduce the problem.

The environment I used to reproduce the problem was:

- host environment was a VM running Debian stretch

- docker version:

Client:
 Version: 1.13.1
 API version: 1.26
 Go version: go1.7.5
 Git commit: 092cba372
 Built: Wed Feb 8 06:44:30 2017
 OS/Arch: linux/amd64

Server:
 Version: 1.13.1
 API version: 1.26 (minimum version 1.12)
 Go version: go1.7.5
 Git commit: 092cba372
 Built: Wed Feb 8 06:44:30 2017
 OS/Arch: linux/amd64

So the host environment, and the docker container are on the same machine and sharing the same file system.

I’m also using:

- Jenkins 2.51

- Pipeline 2.5

- Durable task plugin 1.13

- Docker pipeline 1.10

Craig Rodrigues

added a comment - 2017-03-20 23:28

I’m seeing this as well. It only shows whenever there is a failing build, though.

I’m experiencing the same issue and it seems to depend on the docker image being used

I have a simple pipeline setup:

node('docker') {
    stage('test') {
        docker.image('r-base:3.4.0').inside() {
            sh(script: 'ping -c 2 jenkins.io')
        }
    }
}

which fails in all of about 100 test runs.

Log output:

Started by user Mathias Rühle

[Pipeline] node

Running on slave-2 in /home/jenkins/workspace/pipeline-test

[Pipeline] {

[Pipeline] stage

[Pipeline] { (test)

[Pipeline] sh

[pipeline-test] Running shell script

+ docker inspect -f . r-base:3.4.0

.

[Pipeline] withDockerContainer

slave-2 does not seem to be running inside a container

$ docker run -t -d -u 1007:1007 -w /home/jenkins/workspace/pipeline-test -v /home/jenkins/workspace/pipeline-test:/home/jenkins/workspace/pipeline-test:rw -v /home/jenkins/workspace/pipeline-test@tmp:/home/jenkins/workspace/pipeline-test@tmp:rw -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** --entrypoint cat r-base:3.4.0

[Pipeline] {

[Pipeline] sh

[pipeline-test] Running shell script

+ ping -c 2 jenkins.io

PING jenkins.io (140.211.15.101): 56 data bytes

64 bytes from 140.211.15.101: icmp_seq=0 ttl=44 time=163.801 ms

[Pipeline] }

$ docker stop --time=1 352151927bb3d4123f3bcfc467ab1a137b2829599a623807d22e18f9497cc742

$ docker rm -f 352151927bb3d4123f3bcfc467ab1a137b2829599a623807d22e18f9497cc742

[Pipeline] // withDockerContainer

[Pipeline] }

[Pipeline] // stage

[Pipeline] }

[Pipeline] // node

[Pipeline] End of Pipeline

ERROR: script returned exit code -1

Finished: FAILURE

When I change the image version to 3.1.2:

node('docker') {
    stage('test') {
        docker.image('r-base:3.1.2').inside() {
            sh(script: 'ping -c 2 jenkins.io')
        }
    }
}

it succeeds in all of 20 test runs.

Log output:

Started by user Mathias Rühle

[Pipeline] node

Running on slave-2 in /home/jenkins/workspace/pipeline-test

[Pipeline] {

[Pipeline] stage

[Pipeline] { (test)

[Pipeline] sh

[pipeline-test] Running shell script

+ docker inspect -f . r-base:3.1.2

.

[Pipeline] withDockerContainer

slave-2 does not seem to be running inside a container

$ docker run -t -d -u 1007:1007 -w /home/jenkins/workspace/pipeline-test -v /home/jenkins/workspace/pipeline-test:/home/jenkins/workspace/pipeline-test:rw -v /home/jenkins/workspace/pipeline-test@tmp:/home/jenkins/workspace/pipeline-test@tmp:rw -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** -e ******** --entrypoint cat r-base:3.1.2

[Pipeline] {

[Pipeline] sh

[pipeline-test] Running shell script

+ ping -c 2 jenkins.io

PING jenkins.io (140.211.15.101): 56 data bytes

64 bytes from 140.211.15.101: icmp_seq=0 ttl=44 time=163.756 ms

64 bytes from 140.211.15.101: icmp_seq=1 ttl=44 time=163.624 ms

--- jenkins.io ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 163.624/163.690/163.756/0.066 ms

[Pipeline] }

$ docker stop --time=1 443c8c93c17cc07d08c85be69304100b306191772ff4d5770537d1074b9d3679

$ docker rm -f 443c8c93c17cc07d08c85be69304100b306191772ff4d5770537d1074b9d3679

[Pipeline] // withDockerContainer

[Pipeline] }

[Pipeline] // stage

[Pipeline] }

[Pipeline] // node

[Pipeline] End of Pipeline

Finished: SUCCESS

Another working docker image for me is perl:5.24.1.

I used two different setups. The first is on my development machine, running Jenkins via mvn hpi:run, with a Docker container (a customized image based on ubuntu:14.04) running an ssh daemon as the slave node. The slave node contains a statically linked docker executable (https://get.docker.com/builds/Linux/x86_64/docker-1.12.1.tgz), and the DOCKER_HOST environment variable is set to the Docker network bridge IP 172.17.0.1 (the actual host machine). I would consider this a docker-in-docker setup.

The second setup is a Jenkins instance running inside Docker using the image jenkins:2.46.2. The Jenkins slave is a separate VM running Ubuntu 14.04 with Docker 1.12.1 installed. In my opinion this is not a docker-in-docker setup, because the Jenkins slave is a real VM.

I get the same results on both setups.

Mathias Rühle

added a comment - 2017-05-11 12:04 - edited

I encountered the same bug (at least, that is what I suspect). Digging further and debugging the plugin in Eclipse, I wondered whether the fault lay in the docker plugin or in the durable-task plugin instead; my debugging ended up at exitStatus() in the ShellController class (see the org.jenkinsci.plugins.durabletask.BourneShellScript class).

Actually, I found out that this bug seemed to appear on our side after the recent Debian Stretch release, and surprisingly:

$ docker run -it --rm debian:stretch ps

docker: Error response from daemon: Container command 'ps' not found or does not exist..

$ docker run -it --rm debian:jessie ps

PID TTY TIME CMD

1 ? 00:00:00 ps

The durable-task plugin assumes that the ps command is available to check whether the process is still alive, which does not seem to be the case in the new debian:stretch image. Note that if the sh command finishes before Jenkins needs to check whether the process is still alive, you won’t encounter the issue.
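A quick way to vet an image before using it with inside is to check, from a shell in that image, that the tools in question are on the PATH. The helper below is a hypothetical sketch (the name missing_commands is made up); which commands to pass is up to you, with ps being the one discussed here.

```shell
# Hypothetical helper: print each named command that is not found on PATH.
# Prints nothing when all of them are available.
missing_commands() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
    done
}
```

The same check can be run against an image without a helper, for example with docker run --rm --entrypoint sh debian:stretch -c 'command -v ps || echo "ps missing"'.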

Pierre Mauduit

added a comment - 2017-06-29 12:00

Pierre Mauduit

added a comment - 2017-06-29 16:23

Following my previous comment, I think that even with the ps command available in the underlying Docker container, the Java code in ShellController might fail to detect the process return code. I am getting some commands returning -2 as the status code where they are not supposed to, so I suspect I am falling into the following condition:

https://github.com/jenkinsci/durable-task-plugin/blob/master/src/main/java/org/jenkinsci/plugins/durabletask/BourneShellScript.java#L207-L209

Jesse Glick

added a comment - 2017-06-29 20:08

pmauduit yes, that is the well-known JENKINS-40101. For now these images are not supported for inside.

jglick is there any Docker image that can be used to circumvent the issue?

I can reproduce it even with images that have ps installed.

Something really weird (at least, I cannot explain it): first clicking "Scan Repository Now" before launching the actual build seems to solve my issue.

[edit] Actually, it is still failing after other build attempts.

Pierre Mauduit

added a comment - 2017-07-17 16:09 - edited

Hi,

I am using docker.image.inside() with a centos:7.3.1611 image for building a Maven project.

It was working perfectly when my Docker host was running Fedora 25.

I changed the Docker host OS from Fedora to CentOS 7 and started seeing this issue.

- The centos image has ps inside.

- The Jenkins server is running as docker container

- The pipeline is executed on a jenkins slave that runs on the same server

I can reproduce the issue with the example code from above:

node('build') {
    docker.image("builder-centos-7.3.1611-maven-3.5-jdk-8:2.2.0").inside() {
        sh 'for i in `seq 1 50`; do echo $i;date;sleep 2;done'
    }
}

The image above is based on CentOS 7 but has the JDK and Maven installed.

Any hints? My whole environment is down right now…..

Pavel Georgiev

added a comment - 2017-08-10 08:00 - edited

My issue was resolved by either rebooting the server or making sure that the home folder of the user running the jenkins slave is exactly the same as the folder inside the centos image.

Hope that helps someone.

p.s.

The example code above also passes: the command was executed 50 times without failure.

Pavel Georgiev

added a comment - 2017-08-10 09:44

pgeorgiev, what do you mean by

home folder of the user running the jenkins slave is exactly the same as the folder inside the centos image

My setup is as follows:

node('alpine-node') {
    docker.withServer('tcp://dockerhost:2375') {
        docker.image('centos-slave').inside('--net=bridge') {
            sh '''
                for i in $(seq 3); do sleep 1; echo $i; done
            '''
        }
    }
}

alpine-node is run from the same dockerhost as the centos-slave.

Should I map the home folder from dockerhost to the centos-slave?

Misha Yesiev

added a comment - 2017-08-17 14:01

Do not do such one-off mapping.

Probably this is a bug, possibly duplicate. The workaround is as always to just avoid inside. You can accomplish similar goals more portably and transparently using plain docker CLI commands.

Jesse Glick

added a comment - 2017-08-21 16:05

Misha Yesiev

added a comment - 2017-08-23 19:25

jglick, thanks for your response.

I managed to fix the issue by rebuilding the Durable Task plugin with the following change:

https://github.com/jenkinsci/durable-task-plugin/pull/40

emishas thanks for the tip. After compiling the plugin with PR #40, Durable Task is able to keep track of the mvn process in my use case, and the pipeline job executes properly.

Bruno Didot

added a comment - 2017-08-23 20:44

jglick,

The workaround is as always to just avoid inside. You can accomplish similar goals more portably and transparently using plain docker CLI commands.

This does work when I just use ‘sh’ inside the .inside{}, then it would be like:

docker -H <dockerhost> exec <container> curl blablabla

But how do I execute other DSLs (like archiveArtifacts) inside the container?

Thanks!

Misha Yesiev

added a comment - 2017-09-07 18:25

"how do I execute other DSLs (like archiveArtifacts) inside the container?"

You cannot, but you can docker cp files out of the container, etc.

Jesse Glick

added a comment - 2017-09-18 20:46
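A minimal sketch of that approach, assuming a hypothetical image my-build-image, a build that writes /src/build/output.tar.gz inside the container, and a Docker CLI on the agent that already talks to the right daemon (for a remote daemon, add -H <dockerhost> as in the command above). The container is driven with plain docker commands, and archiveArtifacts runs on the agent against the copied file:

```groovy
node {
    // Hypothetical image name and paths, for illustration only.
    def cid = sh(returnStdout: true,
                 script: 'docker run -d my-build-image sleep 3600').trim()
    try {
        // Run the build inside the container with plain docker CLI commands.
        sh "docker exec ${cid} make -C /src"
        // Copy the result out of the container into the agent workspace.
        sh "docker cp ${cid}:/src/build/output.tar.gz ."
        // Ordinary pipeline steps now work on the workspace copy.
        archiveArtifacts artifacts: 'output.tar.gz'
    } finally {
        // Always remove the helper container, even if a step failed.
        sh "docker rm -f ${cid}"
    }
}
```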

I am encountering this same problem as well. I see there is at least one pull request associated with this issue, emishas. Has the fix been released in an updated plugin somewhere?

Kevin Phillips

added a comment - 2017-09-20 12:35

Just to be clear, here are a few specifics of the behavior I’m seeing in our production environment in case it helps isolate the problem further:

- For some reason we only started experiencing this issue about 2 weeks ago. Stranger still, the trigger seems to have been a reboot of our Jenkins master instance. Based on my review, the agents remained unchanged during this outage, and the master remained the same (same core version, same plugin versions, same OS packages, same pipeline DSL code, etc.), but for some reason the reboot caused this problem to start happening.

- For an example of the DSL code we’re using, see the issue I created and then resolved as a duplicate of this one: JENKINS-46969

- Our environment builds a Docker image from a Dockerfile in the workspace and runs the build operations inside a container launched from this image, which is slightly different from pulling an existing image from a Docker registry.

- Our Docker image is based on a RHEL 7.2 base image.

- I’ve confirmed our container has the ‘ps’ command-line tool installed (there were some mentions that the Jenkins Docker APIs require this tool to be installed to work correctly).

- Using the exact same Docker image and pipeline code results in successful builds about 75% of the time, so the failures are intermittent, and yet frequent enough to affect production work.

- We’ve reproduced the bug on 2 different Jenkins farms, one running core v2.43.3 and the other running v2.60.2. There are a variety of plugins installed on each of our farms, so if there are particular plugins that may play a part in this bug, feel free to let me know and I’ll compile a list of the versions of those plugins for review.

- The agents attached to these masters are running one of the following host OSes: CentOS 7.3, RHEL 7.3, RHEL 7.4

- The agents are running one of the following versions of Docker: 17.03.1-ce, 17.06.0-ce

As mentioned, these failures are affecting our production builds so any assistance with resolving them would be appreciated.

Kevin Phillips

added a comment - 2017-09-20 12:51

jglick, I do apologize if this comment comes across as cynical, but telling people to just not use the built-in Docker APIs provided by the Jenkins Pipeline infrastructure doesn’t seem very helpful to me. CloudBees seems to have made it very clear that they expect everyone to adopt the new Pipeline subsystem as the new standard for Jenkins automation, and part of that infrastructure is a set of APIs for orchestrating Docker containers. Suggesting that these APIs are unstable and should simply not be used seems to contradict that stance. Further, as emishas has already pointed out, trying to get other build steps / plugins to interact correctly with a Docker container managed in this way is going to be fragile at best, and impossible at worst. While this might be a reasonable workaround for the trivial case of running simple shell commands within the container, it does not seem to me to be a reasonable workaround for the general case.

Kevin Phillips

added a comment - 2017-09-20 13:00

Is there any way to generate verbose output from Jenkins and / or Docker to help debug this issue more effectively?

For example, there are some mentions above that Jenkins may be doing some ‘ps’ operations to detect whether the scripts running within the container have finished execution or not. Is there any way to get details as to what commands are being run, what their command line options are at the time they are run, what their stdout/stderr messages are, what their return codes are, etc.? Similarly, if there are Docker tools being used to orchestrate these operations, is there any way to see which commands are being issued and when, and what their inputs/outputs are at runtime?

Based on my current evaluation, none of the system logs provide any sort of feedback in this regard. I’ve enabled verbose logging for Jenkins and Docker, examined their logs on both the master and the agents, looked at the sys logs, etc., and none of them give any indication of how or why the containers are being closed. In fact the only indication of any error happening at all is the message in the build log, "ERROR: script returned exit code -1", which is misleading at best. It appears that the script (as in, the one being run as part of the ‘sh’ build step) isn’t actually returning that error code. Perhaps it’s an error code produced by a Docker command run by the Jenkins API under the hood. Not sure. Either way it appears to be of little to no help in debugging this problem.

Any suggestions on how to gather more intel on the problem would be appreciated.

Kevin Phillips

added a comment - 2017-09-20 13:42

I had the same issue in my build, where a Jenkins container is running a Jenkinsfile which in turn runs commands inside another container, based on the latest ubuntu image, with the withDockerContainer or docker.image.inside command. A script that would take a few minutes checking out some git repositories would end prematurely with ERROR: script returned exit code -1.

There is some discussion on Stack Overflow where it is mentioned that this issue is related to ps not being installed in the container you want to run commands in. Indeed, manually running my container with docker exec -it mycontainer bash, I noticed that running ps failed with command not found. I am not sure what the exact connection is, but I can confirm that adding apt-get install -y procps to the Dockerfile of the container that I want to run commands in solved the issue for me, at least as a temporary workaround.

Ioannis Iosifidis

added a comment - 2017-10-24 15:19
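One way to test the ps hypothesis before a long build is to probe the image from the agent with a plain docker command. A minimal sketch, assuming a hypothetical image name my-build-image, an image that contains a POSIX sh, and a Docker CLI available on the agent:

```groovy
node {
    // 'my-build-image' is a hypothetical image name; replace it with your own.
    def image = 'my-build-image'
    // Override the entrypoint so the probe runs regardless of the image's default command.
    int status = sh(returnStatus: true,
                    script: "docker run --rm --entrypoint sh ${image} -c 'command -v ps'")
    if (status != 0) {
        error "ps is not available in ${image}; on Debian/Ubuntu images, install the procps package"
    }
}
```

The probe deliberately avoids inside, so a missing ps produces a clear error message rather than an unexplained exit code -1.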

I’m seeing this issue in Jenkins ver. 2.63. I am running a python script inside a docker container and getting:

...

[Pipeline] stage

[Pipeline] { (Publish Classes)

[Pipeline] sh

[***] Running shell script

+ ./post_classes.py

...

ERROR: script returned exit code -1

Finished: FAILURE

I’ve tried to reproduce it with a simpler script, but I can’t. It happens only with certain builds.

Update:

The docker container I was building didn’t contain ps (thank you iiosifidis for your message above). Adding ps fixed the issue.

Cosmin Stroe

added a comment - 2018-06-08 04:30 - edited

I had the same issue, and I checked my docker container: the “ps” command can run in it, so I think it is unrelated to whether the “ps” command is installed in the docker image. Any other solutions?

wei lan

added a comment - 2018-09-26 06:38

There could be many reasons, not necessarily related to one another.

Contents

- 1 External

- 2 Internal

- 3 Scripted Pipeline

- 3.1 Scripted Pipeline at Runtime

- 3.2 Scripted Pipeline Failure Handling

- 4 Declarative Pipeline

- 4.1 Declarative Pipeline Directives

- 4.1.1 environment

- 4.1.2 parameters

- 4.2 Declarative Pipeline Failure Handling

- 5 Parameters

- 6 Environment Variables

- 7 Pipeline Steps

- 7.1 node

- 7.2 stage

- 7.3 parallel

- 7.4 sh

- 7.4.1 sh — Script Return Status

- 7.4.2 sh — Script stdout

- 7.4.3 sh — Obtaining both the Return Status and stdout

- 7.4.4 sh — Obtaining stdout and Preventing the Pipeline to Fail on Error

- 7.4.5 sh — Label

- 7.5 ws

- 7.6 build

- 7.7 junit

- 7.8 checkout

- 7.8.1 Git Plugin

- 7.9 withCredentials

- 7.10 Basic Steps

- 7.10.1 echo

- 7.10.2 error

- 7.10.3 stash

- 7.10.4 input

- 7.10.5 timeout

- 7.10.6 withEnv

- 7.10.7 catchError

- 7.11 Basic Steps that Deal with Files

- 7.11.1 dir

- 7.11.2 deleteDir

- 7.11.3 pwd

- 7.11.4 readFile

- 7.11.5 writeFile

- 7.11.6 fileExists

- 7.11.7 findFiles

- 7.12 Core

- 7.12.1 archiveArtifacts

- 7.12.2 fingerprint

- 8 Obtaining the Current Pipeline Build Number

- 9 FlowInterruptedException

- 10 Navigating the Project Model Hierarchy

- 11 Passing an Environment Variable from Downstream Build to Upstream Build

- 12 @NonCPS

- 13 Build Summary

- 14 Dynamically Loaded Classes and Constructors

- 15 Fail a Build

- 16 Dynamically Loading Groovy Code from Repository into a Pipeline

- 17 Groovy on Jenkins Idiosyncrasies

- 17.1 Prefix Static Method Invocations with Declaring Class Name when Calling from Subclass

External

- https://jenkins.io/doc/book/pipeline/syntax/

- https://jenkins.io/doc/pipeline/steps/

- https://jenkins.io/doc/pipeline/steps/core/

Internal

- Jenkins Concepts

- Writing a Jenkins Pipeline

- Simple Pipeline Configuration

Scripted Pipeline

- https://www.jenkins.io/doc/book/pipeline/syntax/#scripted-pipeline

Scripted Pipeline is the classical way of declaring a Jenkins Pipeline, preceding Declarative Pipeline. Unlike the Declarative Pipeline, the Scripted Pipeline is a general-purpose DSL built with Groovy. The pipelines are declared in Jenkinsfiles and executed from the top of the Jenkinsfile downwards, like most traditional scripts in Groovy. Groovy syntax is available directly in the Scripted Pipeline declaration. The flow control can be declared with if/else conditionals or via Groovy’s exception handling support with try/catch/finally.

The simplest pipeline declaration:
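A minimal sketch of such a declaration, assuming any available agent and a single illustrative echo step:

```groovy
// Allocate any available executor and run one step on it.
node {
    echo 'Hello from a Scripted Pipeline'
}
```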

A more complex one:

    node('some-worker-label') {
        echo 'Pipeline logic starts'
        stage('Build') {
            if (env.BRANCH_NAME == 'master') {
                echo 'this is only executed on master'
            } else {
                echo 'this is executed elsewhere'
            }
        }
        stage('Test') {
            // ...
        }
        stage('Deploy') {
            // ...
        }
        stage('Example') {
            try {
                sh 'exit 1'
            } catch (ex) {
                echo 'something failed'
                // rethrow so the failure still reaches the pipeline layer
                throw ex
            }
        }
    }

The basic building block of the Scripted Pipeline syntax is the step. The Scripted Pipeline does not introduce any steps that are specific to its syntax. The generic pipeline steps, such as node, stage, parallel, etc. are available here: Pipeline Steps.

Scripted Pipeline at Runtime

When the Jenkins server starts to execute the pipeline, it pulls the Jenkinsfile either from a repository, following a checkout sequence similar to the one shown here, or from the pipeline configuration, if it is specified in-line. Then the Jenkins instance instantiates a WorkflowScript (org.jenkinsci.plugins.workflow.cps.CpsScript.java) instance. The "script" instance can be used to access the following state elements:

- pipeline parameters, with this.params, which is a Map.
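As a small illustration, the sketch below reads one parameter both through the unqualified params variable and through this.params. GREETING is a hypothetical parameter name; it has to be defined on the job, otherwise the lookup yields null.

```groovy
node {
    // GREETING is a hypothetical build parameter, used only for illustration.
    echo "GREETING via params: ${params.GREETING}"
    echo "GREETING via this.params: ${this.params.GREETING}"
}
```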

Scripted Pipeline Failure Handling

A Scripted Pipeline fails when an exception is thrown and reaches the pipeline layer. The pipeline code can use try/catch/finally semantics to control this behavior, by catching the exceptions and preventing them from reaching the pipeline layer.

    stage('Some Stage') {
        try {
            throw new Exception("the build has failed")
        } catch (Exception e) {
            // squelch the exception, the pipeline will not fail
        }
    }

The pipeline also fails when a command invoked with sh exits with a non-zero exit code. The underlying implementation throws an exception and that makes the build fail. It is possible to configure sh to not fail the build automatically on non-zero exit code, with its returnStatus option.

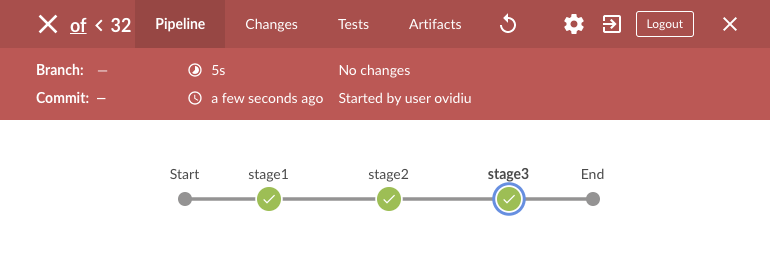

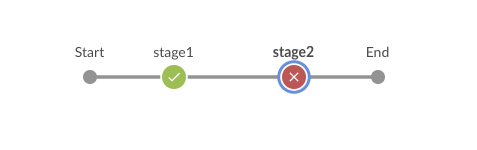

The first failure in a sequential execution stops the build; no subsequent stages are executed. The stage that caused the failure will be shown in red in Blue Ocean (in the example below, there were three sequential stages but stage3 did not get executed):

In this case the corresponding stage and the entire build will be marked as ‘FAILURE’.

A build can be programmatically marked as failed by setting the value of the currentBuild.result variable:

currentBuild.result = 'FAILURE'

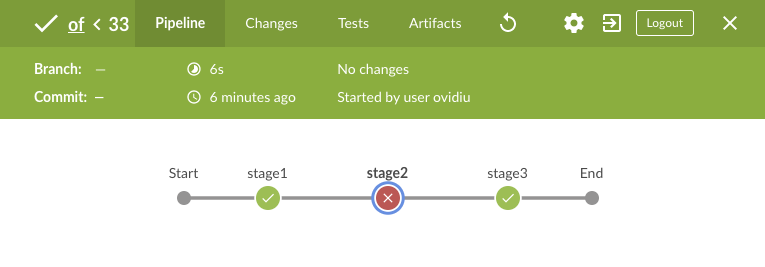

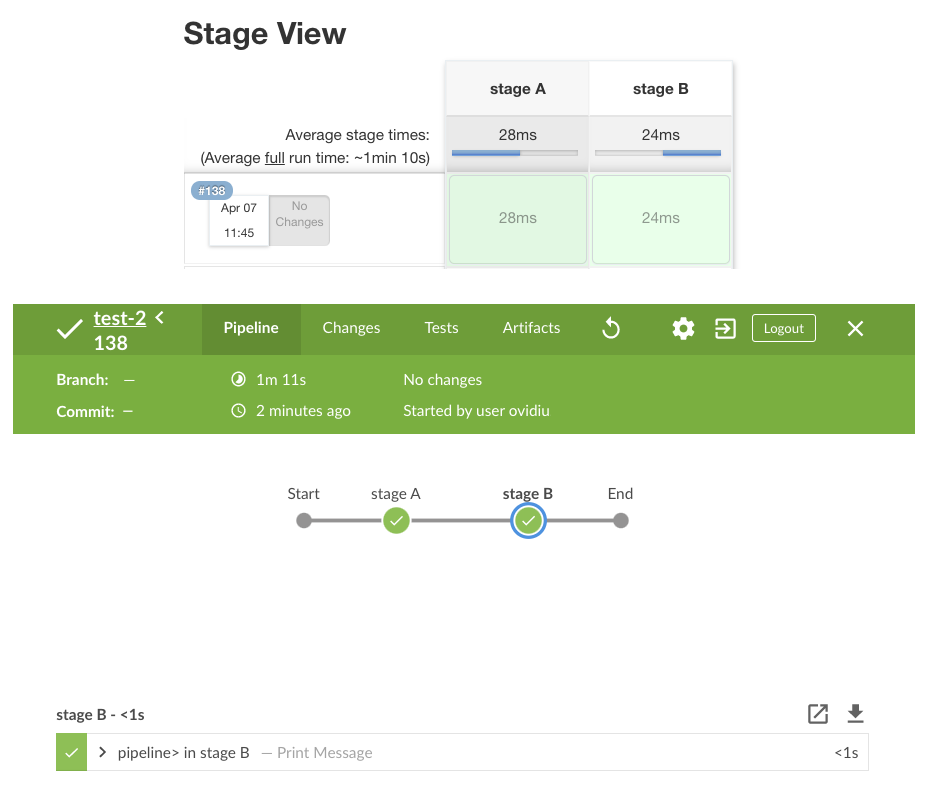

The entire build will be marked as failed (‘FAILURE’, ‘red’ build), but the stage in which the variable assignment was done, stage2 in this case, will not show as failed:

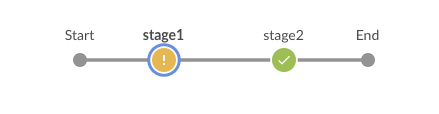

The opposite behavior of marking a specific stage as failed (‘FAILURE’), but allowing the overall build to be successful can be obtained by using the catchError basic step:

    stage('stage2') {
        catchError(buildResult: 'SUCCESS', stageResult: 'FAILURE') {
            throw new RuntimeException("synthetic")
        }
    }

The result is a failed ‘stage2’ but a successful build:

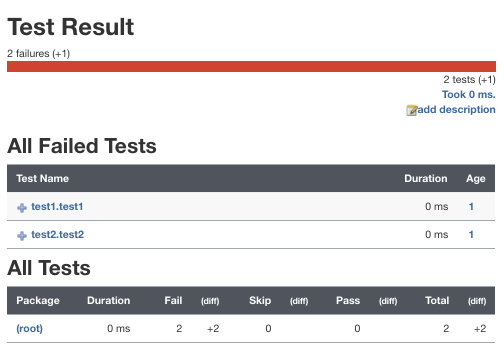

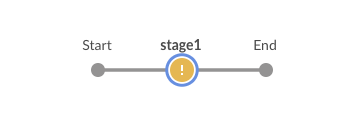

A stage result and the entire build result may also be influenced by the JUnit test report produced for the stage, if any. If the test report exists and is processed by the junit step, and if the report contains test errors or failures, both are handled as "instability" of the build, and the corresponding stage and the entire build will be marked as UNSTABLE. The classic view and Blue Ocean will render a yellow stage and build:

Also see junit below and:

- currentBuild.result

- currentBuild.currentResult

Declarative Pipeline

- https://www.jenkins.io/doc/book/pipeline/syntax/#declarative-pipeline

Declarative Pipeline is a newer way of declaring Jenkins pipelines, with a simpler and more opinionated syntax. Declarative Pipeline is an alternative to Scripted Pipeline.

    pipeline {
        agent any
        options {
            skipStagesAfterUnstable()
        }
        stages {
            stage('Build') {
                steps {
                    sh 'make'
                }
            }
            stage('Test') {
                steps {
                    sh 'make check'
                    junit 'reports/**/*.xml'
                }
            }
            stage('Deploy') {
                steps {
                    sh 'make publish'
                }
            }
        }
    }

Declarative Pipeline Directives

environment

- https://jenkins.io/doc/book/pipeline/syntax/#environment

See:

- Jenkins Pipeline Environment Variables

parameters

- https://jenkins.io/doc/book/pipeline/syntax/#parameters

See:

- Jenkins Pipeline Parameters

Declarative Pipeline Failure Handling

TODO: https://www.jenkins.io/doc/book/pipeline/jenkinsfile/#handling-failure
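The linked page handles failures in Declarative Pipeline with the post section, whose conditions (such as always and failure) run after the stages complete. A minimal sketch, assuming the same make check target and reports directory as in the Declarative Pipeline example above:

```groovy
pipeline {
    agent any
    stages {
        stage('Test') {
            steps {
                sh 'make check'
            }
        }
    }
    post {
        always {
            // Runs whether the stages succeeded or failed.
            junit 'reports/**/*.xml'
        }
        failure {
            // Runs only when the build result is FAILURE.
            echo 'The build failed'
        }
    }
}
```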

Parameters

- Jenkins Pipeline Parameters

Environment Variables

- Jenkins Pipeline Environment Variables

Pipeline Steps

- https://jenkins.io/doc/pipeline/steps/

node

- https://jenkins.io/doc/pipeline/steps/workflow-durable-task-step/#-node-allocate-node

Allocates an executor or a node, typically a worker, and runs the enclosed code in the context of the workspace of that worker. Node may take a label name, computer name or an expression. The labels are declared on workers when they are defined in the master configuration, in their respective "clouds".

    String NODE_LABEL = 'infra-worker'
    node(NODE_LABEL) {
        sh 'uname -a'
    }

stage

- https://www.jenkins.io/doc/pipeline/steps/pipeline-stage-step/#stage-stage

The stage step defines a logical stage of the pipeline. The stage creates a labeled block in the pipeline and allows executing a closure in the context of that block:

    stage('stage A') {
        print 'pipeline> in stage A'
    }
    stage('stage B') {
        print 'pipeline> in stage B'
    }

Nested stages, as in this example, are possible, and they will execute correctly, but they do not render well in Blue Ocean (Stage A.1 and Stage A.2 are not represented, just Stage A and B):

    stage('stage A') {
        print('pipeline> in stage A')
        stage('stage A.1') {
            print('pipeline> in stage A.1')
        }
        stage('stage A.2') {
            print('pipeline> in stage A.2')
        }
    }
    stage('stage B') {
        print('pipeline> in stage B')
    }

To control failure behavior at stage level, use the catchError step, described below.

parallel

- https://www.jenkins.io/doc/pipeline/steps/workflow-cps/#parallel-execute-in-parallel

Takes a map from branch names to closures and an optional argument failFast, and executes the closure code in parallel.

    parallel firstBranch: {
        // do something
    }, secondBranch: {
        // do something else
    },
    failFast: true|false

    stage("tests") {
        parallel(
            "unit tests": {
                // run unit tests
            },
            "coverage tests": {
                // run coverage tests
            }
        )
    }

Allocation to different nodes can be performed inside the closure:

    def tasks = [:]
    tasks["branch-1"] = {
        stage("task-1") {
            node('node_1') {
                sh 'echo $NODE_NAME'
            }
        }
    }
    tasks["branch-2"] = {
        stage("task-2") {
            node('node_1') {
                sh 'echo $NODE_NAME'
            }
        }
    }
    parallel tasks

sh

- https://www.jenkins.io/doc/pipeline/steps/workflow-durable-task-step/#sh-shell-script

- Playground sh

Execute a shell script command, or multiple commands, on multiple lines. It can be specified in-line or it can refer to a file available on the filesystem exposed to the Jenkins node. It needs to be enclosed by a node to work.

The metacharacter $ must be escaped as \${LOGDIR} when it refers to a shell variable inside a double-quoted (""") Groovy string; it is left unescaped only when it refers to a variable from the Groovy context. Inside a single-quoted (''') string no escaping is needed, because Groovy performs no interpolation there.

Example:

    stage.sh """
        LOGDIR=${fileName}-logs
        mkdir -p \${LOGDIR}/something
    """.stripIndent()

    stage.sh '''
        LOGDIR=some-logs
        mkdir -p ${LOGDIR}/something
    '''.stripIndent()

Both """...""" and '''...''' Groovy constructs can be used. For more details on representing multi-line strings with """ or ''', see:

- Groovy | Multi-Line_Strings

sh — Script Return Status

By default, a script that exits with a non-zero return code causes the step and the pipeline to fail with an exception:

    ERROR: script returned exit code 1

To prevent that, set returnStatus to true, and the step will return the exit value of the script instead of failing on a non-zero exit value. You may then compare it to zero and decide whether to fail the pipeline (throw an exception) or not from the Groovy layer that invoked sh.

    int exitCode = sh(returnStatus: true, script: './bin/do-something')
    if (exitCode != 0) {
        throw new RuntimeException('my script failed')
    }

The pipeline log result on failure looks similar to:

    [Pipeline] End of Pipeline
    java.lang.RuntimeException: my script failed
        at WorkflowScript.run(WorkflowScript:17)
        ...

Also see Scripted Pipeline Failure Handling section above.

sh — Script stdout

By default, the standard output of the script is sent to the log. If returnStdout is set to true, the script’s standard output is returned as a String, as the step value. Call trim() to strip off the trailing newline.

The script’s stderr is always sent to the log.

String result = sh(returnStdout: true, script: './bin/do-something').trim()

sh — Obtaining both the Return Status and stdout

If both returnStatus and returnStdout are turned on, returnStatus takes priority and the function returns the exit code. ⚠️ The stdout is discarded.
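When both values are needed, one common workaround is to redirect the script’s stdout to a file in the workspace and read it back with readFile. A minimal sketch, assuming the same ./bin/do-something script as above and a hypothetical scratch file name do-something.out:

```groovy
node {
    // The exit code is returned by the step; stdout goes to a scratch file in the workspace.
    int status = sh(returnStatus: true, script: './bin/do-something > do-something.out')
    // Read the captured stdout back into the pipeline.
    String output = readFile('do-something.out').trim()
    echo "exit code: ${status}, stdout: ${output}"
}
```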

sh — Obtaining stdout and Preventing the Pipeline to Fail on Error

In case you want to use the external shell command to return a result to the pipeline, but not fail the pipeline when the external command fails, use this pattern:

    try {
        String stdout = sh(returnStdout: true, returnStatus: false, script: 'my-script')
        // use the content returned by stdout in the pipeline
        print "we got this as result of sh invocation: ${stdout.trim()}"
    } catch (Exception e) {
        // catching the error will prevent pipeline failure, both stdout and stderr are captured in the pipeline log
        print "the invocation failed"
    }

If the command fails, both its stdout and stderr are captured in the pipeline log.

sh — Label

If a "label" argument is specified, the stage will render that label in the Jenkins and Blue Ocean logs:

sh(script: './bin/do-something', label: 'this will show in logs')

ws

- https://jenkins.io/doc/pipeline/steps/workflow-durable-task-step/#-ws-allocate-workspace

Allocate workspace.

build

- https://jenkins.io/doc/pipeline/steps/pipeline-build-step/

This is how a main pipeline launches a subordinate pipeline.

This is how we may be able to return the result: https://support.cloudbees.com/hc/en-us/articles/218554077-How-to-set-current-build-result-in-Pipeline
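A minimal sketch of launching a subordinate pipeline and inspecting its result, assuming a hypothetical job named downstream-job that accepts a hypothetical string parameter TARGET_ENV:

```groovy
// 'downstream-job' and TARGET_ENV are hypothetical names, used only for illustration.
def downstream = build(
    job: 'downstream-job',
    parameters: [string(name: 'TARGET_ENV', value: 'staging')],
    propagate: false   // do not fail this pipeline automatically if the downstream build fails
)
echo "downstream result: ${downstream.result}"
if (downstream.result != 'SUCCESS') {
    // Decide in the main pipeline how a downstream failure should be reflected.
    currentBuild.result = 'UNSTABLE'
}
```

With propagate left at its default of true, a failed downstream build fails the build step itself, and the result check is not needed.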

junit

- https://jenkins.io/doc/pipeline/steps/junit/#-junit-archive-junit-formatted-test-results

Jenkins understands the JUnit test report XML format (which is also used by TestNG). To use this feature, set up the build to run tests, which will generate their test reports into a local agent directory, then specify the path to the test reports in Ant glob syntax to the JUnit plugin pipeline step junit:

stage.junit '**/target/*-report/TEST-*.xml'

⚠️ Do not specify the path to a single test report file. The junit step will not load the file, even if it exists and it is a valid report, and will print an error message similar to:

    [Pipeline] junit
    Recording test results
    No test report files were found. Configuration error?

Always use Ant glob syntax to specify how the report(s) are to be located:

Jenkins uses this step to ingest the test results, process them and provide historical test result trends, a web UI for viewing test reports, tracking failures, etc.

Both JUnit errors and failures are reported by the junit step as "failures", even if the JUnit XML report distinguishes between errors and failures. The following JUnit report:

    <testsuite name="testsuite1" tests="2" errors="1" failures="1">
      <testcase name="test1" classname="test1">
        <error message="I have errored out"></error>
      </testcase>
      <testcase name="test2" classname="test2">
        <failure message="I have failed"></failure>
      </testcase>
    </testsuite>

produces this Jenkins report:

The presence of at least one JUnit failure marks the corresponding stage, and the entire build, as "UNSTABLE". The stage is rendered in yellow in the classical view and also in Blue Ocean:

Also see: Scripted Pipeline Failure Handling above.

checkout

- https://www.jenkins.io/doc/pipeline/steps/workflow-scm-step/

The "checkout" step is provided by the pipeline "SCM" plugin.

Git Plugin

- https://plugins.jenkins.io/git/

    checkout([
        $class: 'GitSCM',
        branches: [[name: 'develop']],
        doGenerateSubmoduleConfigurations: false,
        extensions: [
            [$class: 'GitLFSPull'],
            [$class: 'CloneOption', noTags: true, reference: '', timeout: 40, depth: 1],
            [$class: 'PruneStaleBranch']
        ],
        submoduleCfg: [],
        userRemoteConfigs: [
            [url: 'git@github.com:some-org/some-project.git', credentialsId: 'someCredId']
        ]
    ])

The simplest configuration that works:

    checkout([
        $class: 'GitSCM',
        branches: [[name: 'master']],
        userRemoteConfigs: [
            [url: 'https://github.com/ovidiuf/playground.git']
        ]
    ])

The step checks out the repository into the current directory; it does not create a top-level directory (‘some-project’ in this case). .git will be created in the current directory. If the current directory is the workspace, .git will be created in the workspace root.

withCredentials

A step that allows using credentials defined in the Jenkins server. See:

- Jenkins Credentials Binding Plugin
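A minimal sketch of the step with a username/password credential, assuming a hypothetical credential ID someCredId of type "Username with password" and a placeholder URL. The variable names SVC_USER and SVC_PASS are also illustrative:

```groovy
node {
    // 'someCredId' is a hypothetical ID of a "Username with password" credential.
    withCredentials([usernamePassword(credentialsId: 'someCredId',
                                      usernameVariable: 'SVC_USER',
                                      passwordVariable: 'SVC_PASS')]) {
        // Single quotes: the shell, not Groovy, expands the variables,
        // so the secret is not interpolated into the script text.
        sh 'curl -u "$SVC_USER:$SVC_PASS" https://example.com/api'
    }
}
```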

Basic Steps

These basic steps are used by invoking them with the "stage." prefix. In a Jenkinsfile, inside a stage, invoke them with the "this." prefix, or simply invoke them directly, without a qualifier.

echo

echo "pod memory limit: ${params.POD_MEMORY_LIMIT_Gi}"

    echo """
    Run Configuration:
        something: ${SOMETHING}
        something else: ${SOMETHING_ELSE}
    """

error

- https://jenkins.io/doc/pipeline/steps/workflow-basic-steps/#error-error-signal

This step signals an error and fails the pipeline.
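A minimal sketch, assuming an illustrative precondition that the workspace must contain a pom.xml file:

```groovy
node {
    // 'pom.xml' is only an illustrative file name.
    if (!fileExists('pom.xml')) {
        // Fails the pipeline with the given message.
        error 'pom.xml not found in the workspace'
    }
}
```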

Alternatively, you can simply:

throw new Exception("some message")

stash

- https://jenkins.io/doc/pipeline/steps/workflow-basic-steps/#stash-stash-some-files-to-be-used-later-in-the-build

input

- https://jenkins.io/doc/pipeline/steps/pipeline-input-step/

In its basic form, renders a "Proceed"/"Abort" input box with a custom message. Selecting "Proceed" passes the control to the next step in the pipeline. Selecting "Abort" throws an org.jenkinsci.plugins.workflow.steps.FlowInterruptedException, which produces "gray" pipelines.

    input(
        id: 'Proceed1',
        message: "If the manual test is successful, select 'Proceed'. Otherwise, you can abort the pipeline."
    )

timeout

- https://jenkins.io/doc/pipeline/steps/workflow-basic-steps/#-timeout-enforce-time-limit

Upon timeout, an org.jenkinsci.plugins.workflow.steps.FlowInterruptedException is thrown from the closure that is being executed, and not from the timeout() invocation. The code shown below prints "A", "B", "D":

    timeout(time: 5, unit: 'SECONDS') {
        echo "A"
        try {
            echo "B"
            doSomething() // this step takes a very long time and will time out
            echo "C"
        } catch (org.jenkinsci.plugins.workflow.steps.FlowInterruptedException e) {
            // if this exception propagates up without being caught, the pipeline gets aborted
            echo "D"
        }
    }

withEnv

- https://jenkins.io/doc/pipeline/steps/workflow-basic-steps/#withenv-set-environment-variables

Sets one or more environment variables within a block, making them available to any external process initiated within that scope. If a variable value contains spaces, it does not need any additional quoting inside the sequence, as shown below:

    node {
        withEnv(['VAR_A=something', 'VAR_B=something else']) {
            sh 'echo "VAR_A: ${VAR_A}, VAR_B: ${VAR_B}"'
        }
    }

catchError

- https://www.jenkins.io/doc/pipeline/steps/workflow-basic-steps/#catcherror-catch-error-and-set-build-result-to-failure

catchError { sh 'some-command-that-might-fail' }

If the body throws an exception, mark the build as a failure, but continue to execute the pipeline from the statement following catchError step. If an exception is thrown, the behavior can be configured to:

- print a message

- set the build result other than failure

- change the stage result

- ignore certain kinds of exceptions that are used to interrupt the build

If catchError is used, there’s no need for finally, as the exception is caught and does not propagate up.

The alternative is to use plain try/catch/finally blocks.
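As a rough sketch of that alternative, assuming a scripted pipeline and the same placeholder command used above, the failure can be recorded manually while the cleanup runs unconditionally:

```groovy
node {
    try {
        sh 'some-command-that-might-fail'
    } catch (Exception e) {
        // Record the failure ourselves; catchError would do this for us
        currentBuild.result = 'FAILURE'
        echo "caught: ${e.message}"
    } finally {
        // Runs whether or not the command failed
        echo 'cleanup'
    }
    // Execution continues here because the exception was not rethrown
    echo 'after try/catch/finally'
}
```

Note that a broad catch like this one also swallows interruption exceptions (manual abort, timeout), so it may be preferable to rethrow those.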

Configuration:

catchError(message: 'some message', stageResult: 'FAILURE'|'SUCCESS'|... , buildResult: 'FAILURE'|'SUCCESS'|..., catchInterruptions: true) { sh 'some-command-that-might-fail' }

- message: an optional String message that will be logged to the console. If the stage result is specified, the message will also be associated with that result and may be shown in visualizations.

- stageResult: an optional String that will be set as the stage result when an error is caught. Use SUCCESS or null to keep the stage result from being set when an error is caught.

- buildResult an optional String that will be set as overall build result when an error is caught. Note that the build result can only get worse, so you cannot change the result to SUCCESS if the current result is UNSTABLE or worse. Use SUCCESS or null to keep the build result from being set when an error is caught.

- catchInterruptions If true, certain types of exceptions that are used to interrupt the flow of execution for Pipelines will be caught and handled by the step. If false, those types of exceptions will be caught and immediately rethrown. Examples of these types of exceptions include those thrown when a build is manually aborted through the UI and those thrown by the timeout step.
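A concrete invocation might look like the sketch below. The stage names and message are made up for illustration; the command is the placeholder used above. The failing stage is marked FAILURE while the build as a whole is only downgraded to UNSTABLE, and the following stage still runs:

```groovy
node {
    stage('integration-tests') {
        catchError(message: 'integration tests failed',
                   buildResult: 'UNSTABLE',
                   stageResult: 'FAILURE') {
            sh 'some-command-that-might-fail'
        }
    }
    stage('report') {
        // Still executed, because catchError did not let the exception propagate
        echo "build result so far: ${currentBuild.currentResult}"
    }
}
```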

The default behavior for catchInterruptions is "true": the code executing inside catchError() will be interrupted, whether it is an external command or pipeline code, and the code immediately following the catchError() closure is executed.

stage('stage1') {
    catchError() {
        sh 'jenkins/pipelines/failure/long-running'
    }
    print ">>>> post catchError()"
}

[Pipeline] stage

[Pipeline] { (stage1)

[Pipeline] catchError

[Pipeline] {

[Pipeline] sh

entering long running ....

sleeping for 60 secs

Aborted by ovidiu

Sending interrupt signal to process

jenkins/pipelines/failure/long-running: line 6: 5147 Terminated sleep ${sleep_secs}

done sleeping, exiting long running ....

[Pipeline] }

[Pipeline] // catchError

[Pipeline] echo

>>>> post catchError()

However, it seems that the same behavior occurs if catchError() is invoked with catchInterruptions: true, so it’s not clear what the difference is.

Probably the safest way to invoke it is to use the following pattern. This way, even if some exceptions bubble up, the cleanup work is still performed:

stage('stage1') {
    try {
        catchError() {
            sh 'jenkins/pipelines/failure/long-running'
        }
    } finally {
        print ">>>> execute mandatory cleanup code"
    }
}

Also see Scripted Pipeline Failure Handling section above.

Basic Steps that Deal with Files

dir

- https://www.jenkins.io/doc/pipeline/steps/workflow-basic-steps/#dir-change-current-directory

Change current directory, on the node, while the pipeline code runs on the master.

If the dir() argument is a relative directory, the new directory available to the code in the closure is relative to the current directory before the call, obtained with pwd():

dir("dirA") {
    // execute in the context of "pwd()/dirA", where pwd() is the current
    // directory before the call and a subdirectory of the workspace
}

If the dir() argument is an absolute directory, the new directory available to the code in the closure is the absolute directory specified as argument:

dir("/tmp") {
    // execute in /tmp
}

In both cases, the current directory is restored upon closure exit.

Also see:

- Pipeline and Files

deleteDir

- https://www.jenkins.io/doc/pipeline/steps/workflow-basic-steps/#deletedir-recursively-delete-the-current-directory-from-the-workspace

To recursively delete a directory and all its contents, step into the directory with dir() and then use deleteDir():

dir('tmp') {
    // ...
    deleteDir()
}

This will delete ./tmp content and the ./tmp directory itself.

pwd

- https://www.jenkins.io/doc/pipeline/steps/workflow-basic-steps/#pwd-determine-current-directory

Return the current directory path on node as a string, while the pipeline code runs on the master.

Parameters:

tmp (boolean, optional) If selected, return a temporary directory associated with the workspace rather than the workspace itself. This is an appropriate place to put temporary files which should not clutter a source checkout; local repositories or caches; etc.

println "current directory: ${pwd()}"

println "temporary directory: ${pwd(tmp: true)}"

Also see:

- Pipeline and Files

readFile

- https://jenkins.io/doc/pipeline/steps/workflow-basic-steps/#readfile-read-file-from-workspace

Reads a file from the workspace, on the node in whose context the step executes.

String versionFile = readFile("${stage.WORKSPACE}/terraform/my-module/VERSION")

If the file does not exist, the step throws java.nio.file.NoSuchFileException: /no/such/file.txt
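To avoid that exception, the read can be guarded with fileExists. This is a sketch only: the relative path is hypothetical and is resolved against the current directory on the node.

```groovy
node {
    // Hypothetical path, relative to the current directory (the workspace here)
    def versionPath = 'terraform/my-module/VERSION'
    if (fileExists(versionPath)) {
        // trim() drops the trailing newline most editors add
        def version = readFile(versionPath).trim()
        echo "version: ${version}"
    } else {
        // Fail with a readable message instead of a NoSuchFileException stack trace
        error("version file not found: ${versionPath}")
    }
}
```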

writeFile

- https://jenkins.io/doc/pipeline/steps/workflow-basic-steps/#writefile-write-file-to-workspace

writeFile will create any intermediate directory if necessary.

To create a directory, dir() into the nonexistent directory, then create a dummy file with writeFile(file: '.dummy', text: ''):

dir('tmp') { writeFile(file: '.dummy', text: '') }

Alternatively, the directory can be created with a shell command, for example sh 'mkdir -p tmp'.

Also see:

- Pipeline and Files

fileExists

- https://www.jenkins.io/doc/pipeline/steps/workflow-basic-steps/#fileexists-verify-if-file-exists-in-workspace

Also see:

- Pipeline and Files

fileExists can be used on directories as well. This is how to check whether a directory exists:

dir('dirA') {
    if (fileExists('/')) {
        println "directory exists"
    } else {
        println "directory does not exist"
    }
}

fileExists('/'), fileExists('.') and fileExists('') are equivalent: they all check for the existence of the directory that the last dir() stepped into. The last form, fileExists(''), issues a warning, so it is not preferred:

The fileExists step was called with a null or empty string, so the current directory will be checked instead.

findFiles

- https://www.jenkins.io/doc/pipeline/steps/pipeline-utility-steps/#findfiles-find-files-in-the-workspace

Find files in workspace:

def files = findFiles(glob: '**/Test-*.xml', excludes: '')

Uses Ant style pattern: https://ant.apache.org/manual/dirtasks.html#patterns

Returns an array of file instances, for which the following attributes are available:

- name: the name of the file and extension, without any path component (e.g. "test1.bats")

- path: the relative path to the current directory set with dir(), including the name of the file (e.g. "dirA/subDirA/test1.bats")

- directory: a boolean which is true if the file is a directory, false otherwise.

- length: length in bytes.

- lastModified: the last modification time, in milliseconds since the epoch (e.g. 1617772442000)

Example:

dir('test') {
    def files = findFiles(glob: '**/*.bats')
    for (def f : files) {
        print "name: ${f.name}, path: ${f.path}, directory: ${f.directory}, length: ${f.length}, lastModified: ${f.lastModified}"
    }
}

Playground:

- playground/jenkins/pipelines/findFiles

Core

- https://jenkins.io/doc/pipeline/steps/core/

archiveArtifacts

- https://jenkins.io/doc/pipeline/steps/core/#-archiveartifacts-archive-the-artifacts

Archives the build artifacts (for example, distribution zip files or jar files) so that they can be downloaded later. Archived files will be accessible from the Jenkins webpage. Normally, Jenkins keeps artifacts for a build as long as a build log itself is kept. Note that the Maven job type automatically archives any produced Maven artifacts. Any artifacts configured here will be archived on top of that. Automatic artifact archiving can be disabled under the advanced Maven options.
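A typical invocation from a scripted pipeline could look like the sketch below. The glob patterns are hypothetical and depend on the build layout:

```groovy
node {
    // ... build steps that produce files under build/libs ...
    archiveArtifacts(
        artifacts: 'build/libs/*.jar',   // Ant-style pattern, comma-separated if several
        excludes: 'build/libs/*-sources.jar',
        fingerprint: true,               // also record fingerprints of the archived files
        allowEmptyArchive: true          // do not fail the build if nothing matches
    )
}
```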

fingerprint

Obtaining the Current Pipeline Build Number

def buildNumber = currentBuild.rawBuild.getNumber()
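Access to rawBuild usually requires script approval when the pipeline runs in the Groovy sandbox. As a sketch of a sandbox-friendly alternative, the same number is exposed directly on currentBuild and as an environment variable:

```groovy
// Integer value, no access to the underlying Run object needed
def buildNumber = currentBuild.number

// String value, as exported to the build environment
def buildNumberAsString = env.BUILD_NUMBER

echo "build number: ${buildNumber} (env: ${buildNumberAsString})"
```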

FlowInterruptedException

throw new FlowInterruptedException(Result.ABORTED)

Navigating the Project Model Hierarchy

String branch = "..."
String projectName = JOB_NAME.substring(0, JOB_NAME.size() - JOB_BASE_NAME.size() - 1)
WorkflowMultiBranchProject project = Jenkins.instance.getItemByFullName("${projectName}")
if (project == null) {
    ...
}
WorkflowJob job = project.getBranch(branch)
if (job == null) {
    ...
}
WorkflowRun run = job.getLastSuccessfulBuild()
if (run == null) {
    ...
}
List<Run.Artifact> artifacts = run.getArtifacts()
...

Passing an Environment Variable from Downstream Build to Upstream Build

Upstream build:

...
def result = build(job: jobName, parameters: params, quietPeriod: 0, propagate: true, wait: true)
result.getBuildVariables()["SOME_VAR"]
...

Downstream build:

env.SOME_VAR = "something"

@NonCPS

- Jenkins Concepts | CPS

Build Summary

//
// write /tmp/summary-section-1.html
//
def summarySection1 = util.catFile('/tmp/summary-section-1.html')
if (summarySection1) {
    def summary = manager.createSummary('document.png')
    summary.appendText(summarySection1, false)
}
//
// write /tmp/summary-section-2.html
//
def summarySection2 = util.catFile('/tmp/summary-section-2.html')
if (summarySection2) {
    def summary = manager.createSummary('document.png')
    summary.appendText(summarySection2, false)
}

Dynamically Loaded Classes and Constructors

If classes are loaded dynamically in the Jenkinsfile, do not use constructors and new. Use MyClass.newInstance(…).

Fail a Build

See error above.

Dynamically Loading Groovy Code from Repository into a Pipeline

This playground example shows how to dynamically load Groovy classes stored in a GitHub repository into a pipeline.

The example is not complete, in that invocation of a static method from Jenkinsfile does not work yet.

- https://github.com/ovidiuf/playground/tree/master/jenkins/pipelines/dynamic-groovy-loader

Groovy on Jenkins Idiosyncrasies

Prefix Static Method Invocations with Declaring Class Name when Calling from Subclass

Prefix the static method calls with the class name that declares them when calling from a subclass, otherwise you’ll get a:

hudson.remoting.ProxyException: groovy.lang.MissingMethodException: No signature of method: java.lang.Class.locateOverlay() is applicable for argument types: (WorkflowScript, playground.jenkins.kubernetes.KubernetesCluster, playground.jenkins.PlatformVersion, java.lang.String, java.lang.String) values: [WorkflowScript@1db9ab90, <playground.jenkins.kubernetes.KubernetesCluster@376dc438>, ...]

I am defining a pipeline with Jenkins Blue Ocean.

I am trying to run a simple Python PEP 8 coding-convention check. If I go into the shell and type the command directly, it works fine.

But when the same command is run in the pipeline, it executes, but "script returned exit code 1" is displayed at the end. Because of this error code, the pipeline does not proceed to the next step.

Is there a workaround?

using credential github

> git rev-parse --is-inside-work-tree # timeout=10

Fetching changes from the remote Git repository

> git config remote.origin.url https://github.com/YunTaeIl/jenkins_retest.git # timeout=10

Cleaning workspace

> git rev-parse --verify HEAD # timeout=10

Resetting working tree

> git reset --hard # timeout=10

> git clean -fdx # timeout=10

Fetching without tags

Fetching upstream changes from https://github.com/YunTaeIl/jenkins_retest.git

> git --version # timeout=10

using GIT_ASKPASS to set credentials GitHub Access Token

> git fetch --no-tags --progress -- https://github.com/YunTaeIl/jenkins_retest.git +refs/heads/master:refs/remotes/origin/master # timeout=10

Checking out Revision fe49ddf379732305a7a50f014ab4b25f9382c913 (master)

> git config core.sparsecheckout # timeout=10

> git checkout -f fe49ddf379732305a7a50f014ab4b25f9382c913 # timeout=10

> git branch -a -v --no-abbrev # timeout=10