
incaller / prometheus_bot


Telegram bot for prometheus alerting

License: MIT License

Shell 5.95%

Makefile 0.74%

Dockerfile 3.18%

prometheus_bot’s Introduction

- prometheus_bot

- Compile

- Usage

- Configuring alert manager

- Test

- Create your own test

- Customising messages with template

- Template extra functions

- Support this functions list


- Production example

This bot is designed to forward alert messages from Alertmanager to Telegram.

Compile

See the GOPATH-related documentation.

```shell
export GOPATH="your go path"
make clean
make
```

Usage

- Create a Telegram bot with BotFather; it will return your bot token.

- Specify the Telegram token in `config.yaml`:

```yaml
telegram_token: "token goes here"
# ONLY IF YOU ARE USING THE DATE FORMATTING FUNCTION. NOTE for developers: required, or the tests fail
time_outdata: "02/01/2006 15:04:05"
template_path: "template.tmpl" # ONLY IF YOU ARE USING A TEMPLATE
time_zone: "Europe/Rome" # ONLY IF YOU ARE USING A TEMPLATE
split_msg_byte: 4000
send_only: true # use the bot only to send messages
```

- Run `prometheus_bot`. See `prometheus_bot --help` for command-line options.

- Get the chat ID in one of two ways:

  - Start a conversation and send a message to the bot mentioning it.

  - Add your bot to a group. It should report the group ID now. To get the ID of a group the bot is already a member of, send a message that starts with `/`.

Configuring alert manager

Alertmanager configuration file:

```yaml
receivers:
- name: 'admins'
  webhook_configs:
  - send_resolved: True
    url: http://127.0.0.1:9087/alert/chat_id
```

Replace `chat_id` with the value your bot reported, including everything inside the quotes.

(Some chat IDs start with `-`; in that case, you must also include the `-` in the URL.)

To use multiple chats, add more receivers.
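To check the webhook URL by hand, the sketch below builds it from a chat ID and posts a JSON payload the way Alertmanager's webhook does. It assumes the bot listens on `127.0.0.1:9087` as in the configuration above. `alertURL` and `postAlert` are hypothetical helpers for manual testing, and the chat IDs are the example values quoted in one of the issues on this page. Formatting the signed integer keeps the leading `-` of group chat IDs automatically.

```go
package main

import (
	"bytes"
	"fmt"
	"net/http"
	"strconv"
)

// alertURL builds the webhook URL for a chat. Group chat IDs are negative,
// so formatting the signed integer keeps the leading "-" automatically;
// private chat IDs are positive and have none.
func alertURL(base string, chatID int64) string {
	return base + "/alert/" + strconv.FormatInt(chatID, 10)
}

// postAlert sends a payload the way Alertmanager's webhook does:
// an HTTP POST with a JSON body. It returns the HTTP status code.
func postAlert(url string, payload []byte) (int, error) {
	resp, err := http.Post(url, "application/json", bytes.NewReader(payload))
	if err != nil {
		return 0, err
	}
	defer resp.Body.Close()
	return resp.StatusCode, nil
}

func main() {
	fmt.Println(alertURL("http://127.0.0.1:9087", -5678901)) // group chat
	fmt.Println(alertURL("http://127.0.0.1:9087", 12345678)) // private chat
}
```

To send a real test message, pass the contents of one of the `testdata/*.json` files to `postAlert` while the bot is running.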

Test

To run the tests with `make test`, you have to:

- Create `config.yml` with a valid Telegram API key and time zone in the project directory

- Build the `prometheus_bot` executable binary in the project directory

- Define the chat ID with the `TELEGRAM_CHATID` environment variable

- Ensure port 9087 on localhost is available to bind to

```shell
export TELEGRAM_CHATID="YOUR TELEGRAM CHAT ID"
make test
```

Create your own test

When Alertmanager sends an alert to the bot, the bot dumps the JSON of that alert to stdout, but only if it runs with the debug flag `-d`.

You can copy and paste this JSON into a new `.json` file for your test.

The test sends every `*.json` file in the `testdata` folder to the bot. Run it as shown above, or set the chat ID inline:

```shell
TELEGRAM_CHATID="YOUR TELEGRAM CHAT ID" make test
```
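Before saving a captured payload into `testdata`, it can help to confirm that it decodes cleanly. The sketch below is a minimal Go check. The `Alert` and `Alerts` types are illustrative and only mirror field names visible in the JSON dumps on this page; they are not copied from the bot's source. Unknown fields such as `groupKey` are simply ignored by `encoding/json`.

```go
package main

import (
	"encoding/json"
	"fmt"
	"time"
)

// Alert mirrors one entry of the "alerts" array (illustrative subset).
type Alert struct {
	Status       string            `json:"status"`
	Labels       map[string]string `json:"labels"`
	Annotations  map[string]string `json:"annotations"`
	StartsAt     time.Time         `json:"startsAt"`
	EndsAt       time.Time         `json:"endsAt"`
	GeneratorURL string            `json:"generatorURL"`
}

// Alerts mirrors the top-level webhook payload (illustrative subset).
type Alerts struct {
	Alerts            []Alert           `json:"alerts"`
	CommonAnnotations map[string]string `json:"commonAnnotations"`
	CommonLabels      map[string]string `json:"commonLabels"`
	GroupLabels       map[string]string `json:"groupLabels"`
	ExternalURL       string            `json:"externalURL"`
	Receiver          string            `json:"receiver"`
	Status            string            `json:"status"`
}

// decodeAlerts parses a payload copied from the bot's debug output.
func decodeAlerts(raw []byte) (Alerts, error) {
	var a Alerts
	err := json.Unmarshal(raw, &a)
	return a, err
}

func main() {
	raw := []byte(`{"alerts":[{"annotations":{"summary":"Oops, something happend!"},
		"endsAt":"0001-01-01T00:00:00Z","generatorURL":"https://example.com/graph#...",
		"labels":{"alertname":"something_happend","instance":"server01.int:9100"},
		"startsAt":"2016-04-27T20:46:37.903Z","status":"firing"}],
		"receiver":"admins","status":"firing"}`)
	a, err := decodeAlerts(raw)
	if err != nil {
		panic(err)
	}
	fmt.Println(a.Status, len(a.Alerts), a.Alerts[0].Labels["alertname"])
}
```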

Customising messages with template

This bot supports the Go templating language. Use it to customise your messages.

To enable templates, set these options in your `config.yaml`; otherwise the template is skipped.

```yaml
telegram_token: "token here"
template_path: "template.tmpl" # your template file name
time_zone: "Europe/Rome" # your time zone, check the WIKI list
split_token: "|" # token used to split the measure label
disable_notification: true # disable notifications for messages
```

You can also pass the template path with the `-t` command-line argument; it takes priority over the config option.

WIKI List of tz database time zones

The best way to build your custom template is:

- Start the bot with the `-d` flag

- Catch some of your alerts in JSON, then copy them from the bot's STDOUT

- Save the JSON in `testdata/yourname.json`

- Run `make test`

The `-d` option enables debug mode, in which the template file is reloaded for every message; otherwise the template is loaded once at startup.

A default template file with all possible variables is provided.

Remember that Telegram bots support HTML tags. Check the Telegram documentation for the list of available tags.
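The sketch below shows how helper functions can be exposed to a Go template. It assumes Go's `html/template` package, which auto-escapes interpolated values so that a stray `<` in a label cannot break Telegram's HTML parser. The helper implementations and the `data` struct are illustrative stand-ins named after the functions listed in this README, not the bot's own code.

```go
package main

import (
	"fmt"
	"html/template"
	"strings"
)

// funcs registers helpers under names used in this README.
// These implementations are illustrative sketches, not the bot's code.
var funcs = template.FuncMap{
	"str_UpperCase": strings.ToUpper,
	"str_LowerCase": strings.ToLower,
	"HasKey": func(m map[string]string, key string) bool {
		_, ok := m[key]
		return ok
	},
}

const tmplText = `<b>{{str_UpperCase .Status}}</b> for {{str_LowerCase .Receiver}}
{{if HasKey .CommonAnnotations "summary"}}{{.CommonAnnotations.summary}}{{end}}`

// data is a hypothetical subset of what the bot passes to templates.
type data struct {
	Status            string
	Receiver          string
	CommonAnnotations map[string]string
}

// render parses the template with the helpers and executes it.
func render(d data) (string, error) {
	t, err := template.New("msg").Funcs(funcs).Parse(tmplText)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := t.Execute(&sb, d); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	out, err := render(data{Status: "firing", Receiver: "Admins",
		CommonAnnotations: map[string]string{"summary": "disk < 10% left"}})
	if err != nil {
		panic(err)
	}
	fmt.Println(out) // the "<" in the summary comes out as "&lt;"
}
```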

Template extra functions

The template language supports many different functions for text, number and date formatting.

Support this functions list

- `str_UpperCase`: convert a string to uppercase

- `str_LowerCase`: convert a string to lowercase

- `str_Title`: convert a string to title case, «title» → «Title»; the first letter becomes uppercase

- `str_Format_Byte` (DEPRECATED): convert a number expressed in bytes to a number in the related measure unit. It uses `strconv.ParseFloat(..., 64)`; see the related Go documentation for the accepted input formats. Usually anything like ‘35.95e+06’ is converted correctly.

  Example:

  - 35’000’000 [Kb] converts to ’35 Gb’

  - 89’000 [Kb] converts to ’89 Mb’

- `str_Format_MeasureUnit`: convert a string to a scaled number and append a measure unit label. To add the measure unit label, put it in the Prometheus alerting rule. Example: 8e10 becomes 80G. You can also start from a different scale, for example kilo: «s|g|3». Check the production example for a complete implementation. Requires `split_token: "|"` in `config.yaml`

- `HasKey`: parameters `dict map, key_search string`; checks whether the requested key exists in the map

- `str_FormatDate`: convert a Prometheus date string to your preferred date-time format; the config parameter `time_outdata` sets the format you want

  Requires more settings in your `config.yaml`:

  ```yaml
  time_zone: "Europe/Rome"
  time_outdata: "02/01/2006 15:04:05"
  ```
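The two numeric and date helpers above can be approximated in plain Go, as a sketch of the behaviour described in this README. `formatMeasureUnit` only scales by powers of 1000 with SI prefixes and ignores the `split_token` label parsing. `formatDate` assumes Alertmanager timestamps are RFC 3339, as in the JSON dumps on this page. Both names are hypothetical. Note that `time_outdata` is a Go reference-time layout, so `"02/01/2006 15:04:05"` means day/month/year.

```go
package main

import (
	"fmt"
	"strconv"
	"time"
	_ "time/tzdata" // embed the tz database so LoadLocation works without system zoneinfo
)

// formatMeasureUnit scales v by powers of 1000 and appends an SI prefix,
// e.g. 8e10 -> "80G". Sketch only: no split_token label handling.
func formatMeasureUnit(v float64) string {
	prefixes := []string{"", "k", "M", "G", "T", "P"}
	i := 0
	for (v >= 1000 || v <= -1000) && i < len(prefixes)-1 {
		v /= 1000
		i++
	}
	return strconv.FormatFloat(v, 'f', -1, 64) + prefixes[i]
}

// formatDate converts an RFC 3339 timestamp into the time_outdata layout,
// expressed in the time_zone location.
func formatDate(ts, zone, layout string) (string, error) {
	t, err := time.Parse(time.RFC3339Nano, ts)
	if err != nil {
		return "", err
	}
	loc, err := time.LoadLocation(zone)
	if err != nil {
		return "", err
	}
	return t.In(loc).Format(layout), nil
}

func main() {
	fmt.Println(formatMeasureUnit(8e10))
	s, err := formatDate("2016-04-27T20:46:37.903Z", "Europe/Rome", "02/01/2006 15:04:05")
	if err != nil {
		panic(err)
	}
	fmt.Println(s)
}
```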

WIKI List of tz database time zones

Production example

The production example shows what a real template could look like:

testdata/production_example.json

testdata/production_example.tmpl

It can serve as a base for building a real template, or you can simply copy parts of it and see how the functions are used.

Sysadmins usually love to copy.


prometheus_bot’s Issues

How can I insert emoji in a template?

go 1.8.3: function «str_Format_MeasureUnit» not defined

Hey @AndreaGreco, I can see tests failing with go 1.8.3, any ideas how to fix this?

Problem reading parsing template file: template: production_example.tmpl:20: function "str_Format_MeasureUnit" not defined

TLS handshake timeout

Template works only from current directory

template_path: "testdata/default.tmpl" doesn’t work.

Undefined license

There’s no license defined for this project (or I just didn’t find one), so it cannot be used, modified or distributed legally.

Could you please define it?

Not all chat_id’s contain `-`

I started a private chat with the bot, and got the following message: Chat id is '12345678'.

Per README instructions, I then configured alertmanager with webhook url http://some.host.name/alert/-12345678, resulting in Chat not found error messages from the bot.

Broke my head over this, until I invited the bot into a group chat, and I got a chat_id with - included (Chat id is '-5678901'), which did work with url http://some.host.name/alert/-5678901. Then trying to use the ‘private’ chat_id without - in the url (http://some.host.name/alert/12345678) also worked.

So I suggest updating README to something like:

### Configuring alert manager Alert manager configuration file: - name: 'admins' webhook_configs: - send_resolved: True url: http://127.0.0.1:9087/alert/chat_id Replace chat_id with the number you got from your bot, ***with everything inside the quotes*** (Some chat_id's start with `-`, so if there is a `-` in your chat_id, make sure to include it in the url). To use multiple chats just add more receivers.

Not Found

So I did a git clone, go get and go build, and I got the executable. But when I run the executable it just says `2019/02/03 16:08:24 Not Found`. What could I be doing wrong? I have set up the config.yaml as described in your README.

Why GOGC=off?

Just wondering: why does the Dockerfile compile the code with the Go garbage collector disabled? There is no explanation of this decision…

Besides, running a build that prints the escape analysis reports a lot of heap allocations:

Command:

go build -gcflags="-m" main.go 2>&1 | grep "escapes to heap"

Result:

./main.go:205:21: str_fl escapes to heap

./main.go:205:21: str_Size escapes to heap

./main.go:251:21: str_fl escapes to heap

./main.go:251:21: str_Size escapes to heap

./main.go:134:16: "Could not convert value to int" escapes to heap

./main.go:270:15: "template_time_zone is not set, if you use template and `str_FormatDa... escapes to heap

./main.go:275:15: "template_time_outdata param is not set, if you use template and `str... escapes to heap

./main.go:287:13: (interface {})(string("time: missing Location in call to Time.In")) escapes to heap

./main.go:330:104: update.Message.Chat.ID escapes to heap

./main.go:334:11: msg escapes to heap

./main.go:340:16: update escapes to heap

./main.go:364:14: tmplPath escapes to heap

./main.go:372:18: []string{} escapes to heap

./main.go:377:13: sub + string(r) escapes to heap

./main.go:462:48: string(~R0) escapes to heap

./main.go:463:46: gin.H{...} escapes to heap

./main.go:464:4: fmt.Sprint(... argument...) escapes to heap

./main.go:463:9: render.Render(render.JSON{...}) escapes to heap

./main.go:469:13: chatid escapes to heap

./main.go:470:25: chatid escapes to heap

./main.go:475:26: msg escapes to heap

./main.go:477:11: render.Render(render.String{...}) escapes to heap

./main.go:479:38: gin.H{...} escapes to heap

./main.go:480:4: fmt.Sprint(... argument...) escapes to heap

./main.go:481:4: sendmsg escapes to heap

./main.go:479:9: render.Render(render.JSON{...}) escapes to heap

./main.go:487:14: make([]string, 0, len(alerts.GroupLabels)) escapes to heap

./main.go:491:14: sort.Interface(sort.StringSlice(sort.x)) escapes to heap

./main.go:492:21: make([]string, 0, len(alerts.GroupLabels)) escapes to heap

./main.go:494:49: k escapes to heap

./main.go:497:13: make([]string, 0, len(alerts.CommonLabels)) escapes to heap

./main.go:501:14: sort.Interface(sort.StringSlice(sort.x)) escapes to heap

./main.go:502:22: make([]string, 0, len(alerts.CommonLabels)) escapes to heap

./main.go:505:52: k escapes to heap

./main.go:509:13: make([]string, 0, len(alerts.CommonAnnotations)) escapes to heap

./main.go:513:14: sort.Interface(sort.StringSlice(sort.x)) escapes to heap

./main.go:514:27: make([]string, 0, len(alerts.CommonAnnotations)) escapes to heap

./main.go:516:61: k escapes to heap

./main.go:519:22: make([]string, len(alerts.Alerts)) escapes to heap

./main.go:529:58: a.GeneratorURL escapes to heap

./main.go:529:85: alertDetails[i] escapes to heap

./main.go:534:9: alerts.ExternalURL escapes to heap

./main.go:535:9: alerts.Receiver escapes to heap

./main.go:536:18: strings.ToUpper(alerts.Status) escapes to heap

./main.go:537:6: len(alerts.Alerts) escapes to heap

./main.go:538:15: strings.Join(groupLabels, ", ") escapes to heap

./main.go:539:15: strings.Join(commonLabels, ", ") escapes to heap

./main.go:540:15: strings.Join(commonAnnotations, "") escapes to heap

./main.go:541:15: strings.Join(alertDetails, ", ") escapes to heap

./main.go:558:20: alerts escapes to heap

./main.go:565:25: string(bytes.b.buf[bytes.b.off:]) escapes to heap

./main.go:571:24: &strings.Reader{...} escapes to heap

./main.go:582:16: "HTML is valid, sending it..." escapes to heap

./main.go:587:16: "HTML is not valid, strip all tags to prevent error" escapes to heap

./main.go:607:13: chatid escapes to heap

./main.go:610:48: string(~R0) escapes to heap

./main.go:611:46: gin.H{...} escapes to heap

./main.go:612:4: fmt.Sprint(... argument...) escapes to heap

./main.go:611:9: render.Render(render.JSON{...}) escapes to heap

./main.go:619:24: alerts escapes to heap

./main.go:625:14: "+------------------ A L E R T J S O N -------------------+" escapes to heap

./main.go:626:13: s escapes to heap

./main.go:627:14: "+-----------------------------------------------------------+\n\n" escapes to heap

./main.go:643:15: "+--------------- F I N A L M E S S A G E ---------------+" escapes to heap

./main.go:644:14: subString escapes to heap

./main.go:645:15: "+-----------------------------------------------------------+" escapes to heap

./main.go:652:27: msg escapes to heap

./main.go:654:12: render.Render(render.String{...}) escapes to heap

./main.go:657:47: gin.H{...} escapes to heap

./main.go:658:5: fmt.Sprint(... argument...) escapes to heap

./main.go:659:5: sendmsg escapes to heap

./main.go:660:5: fmt.Sprint(... argument...) escapes to heap

./main.go:660:26: msgtext escapes to heap

./main.go:657:10: render.Render(render.JSON{...}) escapes to heap

./main.go:666:12: msg escapes to heap

./main.go:410:47: string(content) escapes to heap

./main.go:417:36: &http.Client{} escapes to heap

./main.go:432:9: -1 escapes to heap

./main.go:440:14: (interface {})(string("gin mode unknown: ") + gin.value) escapes to heap

./main.go:440:14: string("gin mode unknown: ") + gin.value escapes to heap

./main.go:443:49: bot.Self.UserName escapes to heap

./main.go:453:12: []gin.HandlerFunc{...} escapes to heap

./main.go:454:13: []gin.HandlerFunc{...} escapes to heap

./main.go:309:31: "html/template".FuncMap{...} escapes to heap

Error sending message: Bad Request: Wrong message entity: Wrong URL host

Occurs when the generator URL is empty.
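This report says the error appears when the alert's generator URL is empty. One way to avoid it is to emit the `<a>` tag only when there is a URL to link to. The sketch below is a minimal Go helper for that. `alertLink` is hypothetical and not part of the bot. It also HTML-escapes both the text and the URL so that characters like `&` in PromQL query strings stay valid inside the attribute.

```go
package main

import (
	"fmt"
	"html"
)

// alertLink renders text as an HTML link only when generatorURL is set.
// With an empty URL it returns the escaped text alone, so no empty
// href is ever sent to Telegram. Hypothetical helper for illustration.
func alertLink(text, generatorURL string) string {
	escaped := html.EscapeString(text)
	if generatorURL == "" {
		return escaped
	}
	return `<a href="` + html.EscapeString(generatorURL) + `">` + escaped + `</a>`
}

func main() {
	fmt.Println(alertLink("server01.int[node]", "https://example.com/graph#..."))
	fmt.Println(alertLink("server01.int[node]", ""))
}
```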

thank you / hint for http 400 response on windows

Thank you for your work. I am now using this bot in my Prometheus installation.

I had to change the deserialization of alerts. Although it worked, it always had a 400 HTTP error set, and at least in my Windows environment the subsequent «c.AbortWithStatus(http.StatusOK)» did not take effect, so Alertmanager got a 400 back and retried the message endlessly.

So I changed:

```go
var alerts Alerts
// c.BindJSON(&alerts)
binding.JSON.Bind(c.Request, &alerts)
```

Up check — /metrics endpoint

Hi,

I am wondering if there is a way to let Prometheus check whether the prometheus_bot service is running fine.

Basically, I need an entry into the prometheus up metric, for example:

up{instance="prometheus_bot_instance_name", job="my_job"}

This is useful to allow the monitoring of the monitoring infrastructure.

The use case is the following:

- if the monitoring infrastructure is not working, then I will not receive alerts

- so I need to monitor the monitoring infrastructure by using a second monitoring infrastructure

- this second infrastructure will just monitor Prometheus, Alertmanager, and prometheus_bot of the first infrastructure

- finally, to ensure the second infrastructure is working, the first infrastructure will monitor the second one!

This ensures that as soon as one of the monitoring infrastructures stops working, the other one will fire a warning.

To have the required up metric, I believe it suffices to provide a /metrics endpoint.

Regards

[FEATURE REQUEST] Please add a /healthz endpoint

Hi!

Thank you for your bot application.

Could you please add an endpoint for a readiness probe to your bot?

It would be great if the probe did not create access log records.

Build error

I checked out this project on the master branch. When I run the command «make», the result is:

can’t load package: package .: code in directory /Users/mac/Documents/prometheusbot/prometheus_bot expects import «github.com/inCaller/prometheus_bot»

make: *** [all] Error 1

unknown field ‘SameSite’ in struct literal of type http.Cookie

Hello.

I have copied your Dockerfile and executed:

docker build -t prometheus_bot:latest .

Then I received this error message:

...

github.com/ugorji/go/codec

github.com/gin-gonic/gin/binding

github.com/gin-gonic/gin/render

github.com/gin-gonic/gin

# github.com/gin-gonic/gin

/go/src/github.com/gin-gonic/gin/context.go:778:91: undefined: http.SameSite

/go/src/github.com/gin-gonic/gin/context.go:788:11: unknown field 'SameSite' in struct literal of type http.Cookie

The command '/bin/sh -c cd / && apk update && apk add --no-cache git ca-certificates make tzdata && git clone https://github.com/inCaller/prometheus_bot && cd prometheus_bot && go get -d -v && CGO_ENABLED=0 GOOS=linux go build -v -a -installsuffix cgo -o prometheus_bot' returned a non-zero code: 2

prometheus_bot fails with panic with newly created bot

I’m trying prometheus_bot from master branch (271ff17) compiled with Go 1.6.2 from Ubuntu 16.04 64-bit and have observed panic during handling of first requests with newly created Telegram bot.

I can’t reproduce this issue currently and I believe the issue is related to freshly created Telegram bot or some issue on Telegram side.

I want to leave this issue here for the reference, in case anyone else will observe it later.

Here is what I’ve done.

- Created new Telegram bot.

- Created new Chat, invited myself and bot in it.

- Started `./prometheus_bot -c config.yml`.

- Ran `make test` without specifying `TELEGRAM_CHATID`:

$ make test

bash t/curl.t

1..2

not ok 1 - simpe.json

# 404

not ok 2 - noGenURL.json

# 404

This led to 404 responses from prometheus_bot.

- Found out the chat ID and ran the tests with `TELEGRAM_CHATID`:

$ make test TELEGRAM_CHATID=-...

bash t/curl.t

1..2

not ok 1 - simpe.json

not ok 2 - noGenURL.json

This led to a panic. Here is the prometheus_bot log for the whole session:

$ ./prometheus_bot -c config.yml

Authorized on account SomeBot

[GIN-debug] [WARNING] Running in "debug" mode. Switch to "release" mode in production.

- using env: export GIN_MODE=release

- using code: gin.SetMode(gin.ReleaseMode)

[GIN-debug] GET /ping/:chatid --> main.GET_Handling (3 handlers)

[GIN-debug] POST /alert/:chatid --> main.POST_Handling (3 handlers)

[GIN-debug] Listening and serving HTTP on :9087

[GIN] 2017/03/06 - 22:17:30 | 404 | 645ns | 127.0.0.1 | POST /alert/

[GIN] 2017/03/06 - 22:17:30 | 404 | 628ns | 127.0.0.1 | POST /alert/

panic: runtime error: invalid memory address or nil pointer dereference

[signal 0xb code=0x1 addr=0xc8 pc=0x4012aa]

goroutine 50 [running]:

panic(0x8f5180, 0xc820010170)

/usr/lib/go-1.6/src/runtime/panic.go:481 +0x3e6

main.telegramBot(0xc8200771a0)

/home/bob/stuff/kubernetes/prometheus_bot/main.go:88 +0x2aa

created by main.main

/home/bob/stuff/kubernetes/prometheus_bot/main.go:138 +0x8f7

panic: runtime error: invalid memory address or nil pointer dereference

Hi,

I have loaded the current code onto my Raspberry Pi4 and then compiled it.

However, after a make test I get the following error when using the templates from this project.

Excerpts from the bot.log

2022/03/16 20:07:04 HTML is valid, sending it...

2022/03/16 20:07:04 +--------------- F I N A L M E S S A G E ---------------+

2022/03/16 20:07:04 <a href='https://alert-manager.example.com/#/alerts?receiver=admins'>[FIRING:1]</a>

grouped by: alertname=<code>something_happend</code>, instance=<code>server01.int:9100</code>

labels: env=<code>prod</code>, job=<code>node</code>, service=<code>prometheus_bot</code>, severity=<code>warning</code>, supervisor=<code>runit</code>

summary: <code>runit service prometheus_bot restarted, server01.int:9100</code>

<a href='https://example.com/graph#...'>server01.int[node]</a>

2022/03/16 20:07:04 +-----------------------------------------------------------+

[GIN] 2022/03/16 - 20:07:04 | 200 | 65.982888ms | 127.0.0.1 | POST "/alert/-4*******3"

****** Run prometheus_bot with template testdata/default.tmpl ******

panic: runtime error: invalid memory address or nil pointer dereference

[signal SIGSEGV: segmentation violation code=0x1 addr=0x10 pc=0x45db1c]

goroutine 1 [running]:

main.main()

/usr/src/prometheus_bot/main.go:418 +0x31c

This error only occurs in conjunction with the templates. Sending without using a template works.

Excerpts from the bot.log

2022/03/16 20:07:04 HTML is valid, sending it...

2022/03/16 20:07:04 +--------------- F I N A L M E S S A G E ---------------+

2022/03/16 20:07:04 <a href='http://alert.greco.cf/alert-manager/#/alerts?receiver=telegram_bot'>[FIRING:11]</a>

grouped by: scada_uuid=<code>483b197c-7fe8-11e6-b772-acb57db47f23</code>

labels:

<a href='http://localhost.localdomain:9090/graph?g0.expr=linux_loadavg%7Bmode%3D%2215min%22%7D+%3E+%283+%2A+10+%2A+4%29&g0.tab=0'>localhost[statsd]</a>, <a href='http://localhost.localdomain:9090/graph?g0.expr=linux_memory%7Bmode%3D%22memavailable%22%7D+%3E+%281024+%2A+100%29&g0.tab=0'>localhost[statsd]</a>, <a href='http://localhost.localdomain:9090/graph?g0.expr=100+-+%28avg%28irate%28linux_stats_cpu%7Bmode%3D%22idle%22%7D%5B2m%5D%29%29+BY+%28scada_uuid%29%29+%3E+60&g0.tab=0'></a>, <a href='http://localhost.localdomain:9090/graph?g0.expr=linux_loadavg%7Bmode%3D%221min%22%7D+%3E+%288+%2A+10+%2A+4%29&g0.tab=0'>localhost[statsd]</a>, <a href='http://localhost.localdomain:9090/graph?g0.expr=linux_loadavg%7Bmode%3D%221min%22%7D+%3E+%2810+%2A+10+%2A+4%29&g0.tab=0'>localhost[statsd]</a>, <a href='http://localhost.localdomain:9090/graph?g0.expr=linux_loadavg%7Bmode%3D%225min%22%7D+%3E+%285+%2A+10+%2A+4%29&g0.tab=0'>localhost[statsd]</a>, <a href='http://localhost.localdomain:9090/graph?g0.expr=linux_loadavg%7Bmode%3D%225min%22%7D+%3E+%288+%2A+10+%2A+4%29&g0.tab=0'>localhost[statsd]</a>, <a href='http://localhost.localdomain:9090/graph?g0.expr=linux_loadavg%7Bmode%3D%2215min%22%7D+%3E+%282+%2A+10+%2A+4%29&g0.tab=0'>localhost[statsd]</a>, <a href='http://localhost.localdomain:9090/graph?g0.expr=linux_loadavg%7Bmode%3D%2215min%22%7D+%3E+%282+%2A+10+%2A+4%29&g0.tab=0'>localhost[statsd]</a>, <a href='http://localhost.localdomain:9090/graph?g0.expr=linux_loadavg%7Bmode%3D%2215min%22%7D+%3E+%282+%2A+10+%2A+4%29&g0.tab=0'>localhost[statsd]</a>, <a href='http://localhost.localdomain:9090/graph?g0.expr=linux_loadavg%7Bmode%3D%2215min%22%7D+%3E+%282+%2A+10+%2A+4%29&g0.tab=0'>localhost[statsd]</a>

2022/03/16 20:07:04 +-----------------------------------------------------------+

[GIN] 2022/03/16 - 20:07:04 | 200 | 53.328811ms | 127.0.0.1 | POST "/alert/-4*******3"

2022/03/16 20:07:04 Bot alert post: -4*******3

2022/03/16 20:07:04 +------------------ A L E R T J S O N -------------------+

2022/03/16 20:07:04 {"alerts":[{"annotations":{"summary":"Oops, something happend!"},"endsAt":"0001-01-01T00:00:00Z","generatorURL":"https://example.com/graph#...","labels":{"alertname":"something_happend","env":"prod","instance":"server01.int:9100","job":"node","service":"prometheus_bot","severity":"warning","supervisor":"runit"},"startsAt":"2016-04-27T20:46:37.903Z","status":"firing"}],"commonAnnotations":{"summary":"runit service prometheus_bot restarted, server01.int:9100"},"commonLabels":{"alertname":"something_happend","env":"prod","instance":"server01.int:9100","job":"node","service":"prometheus_bot","severity":"warning","supervisor":"runit"},"externalURL":"https://alert-manager.example.com","groupKey":0,"groupLabels":{"alertname":"something_happend","instance":"server01.int:9100"},"receiver":"admins","status":"firing","version":0}

2022/03/16 20:07:04 +-----------------------------------------------------------+

Output after make test

make test

go build -o prometheus_bot

prove -v

t/curl.t ..

1..25

ok 1 - noGenURL.json template none

ok 2 - emptyValue.json template none

ok 3 - production_example.json template none

ok 4 - simpe.json template none

ok 5 - big_output.json template none

not ok 6 - noGenURL.json template default.tmpl

not ok 7 - emptyValue.json template default.tmpl

not ok 8 - production_example.json template default.tmpl

not ok 9 - simpe.json template default.tmpl

not ok 10 - big_output.json template default.tmpl

not ok 11 - noGenURL.json template malformed_html.tmpl

not ok 12 - emptyValue.json template malformed_html.tmpl

not ok 13 - production_example.json template malformed_html.tmpl

not ok 14 - simpe.json template malformed_html.tmpl

not ok 15 - big_output.json template malformed_html.tmpl

not ok 16 - noGenURL.json template detailed_vars.tmpl

not ok 17 - emptyValue.json template detailed_vars.tmpl

not ok 18 - production_example.json template detailed_vars.tmpl

not ok 19 - simpe.json template detailed_vars.tmpl

not ok 20 - big_output.json template detailed_vars.tmpl

not ok 21 - noGenURL.json template production_example.tmpl

not ok 22 - emptyValue.json template production_example.tmpl

not ok 23 - production_example.json template production_example.tmpl

not ok 24 - simpe.json template production_example.tmpl

not ok 25 - big_output.json template production_example.tmpl

Failed 20/25 subtests

Test Summary Report

-------------------

t/curl.t (Wstat: 0 Tests: 25 Failed: 20)

Failed tests: 6-25

Files=1, Tests=25, 16 wallclock secs ( 0.06 usr 0.02 sys + 0.73 cusr 0.79 csys = 1.60 CPU)

Result: FAIL

make: *** [Makefile:6: test] Fehler 1

I have already used this code in July 2021.

The commit at that time was:

commit 2dc161747f6a6ee8afbc8afd1b7e02f0bc195daf (HEAD -> master, origin/master, origin/HEAD)

Merge: ac4533f 1d366fe

Author: Roman Belyakovsky <[email protected]>

Date: Sun May 30 23:20:07 2021 +0300

Merge pull request #64 from dmaes/master

Clarify documentation about `-` in chat_id and url

This code from May 2021 still works today.

I can compile it in the same system environment and use it with the same templates.

There are no errors.

What is the difference from today’s version?

My current environment

$ uname -a

Linux node5.local 5.10.92-v8+ #1514 SMP PREEMPT Mon Jan 17 17:39:38 GMT 2022 aarch64 GNU/Linux

$ cat /etc/*rel*

PRETTY_NAME="Debian GNU/Linux 11 (bullseye)"

NAME="Debian GNU/Linux"

VERSION_ID="11"

VERSION="11 (bullseye)"

VERSION_CODENAME=bullseye

ID=debian

HOME_URL="https://www.debian.org/"

SUPPORT_URL="https://www.debian.org/support"

BUG_REPORT_URL="https://bugs.debian.org/"

$ go version

go version go1.17.2 linux/arm64

Can you help me fix the problem?

Greetings…

Stefan

Getting an error while trying to parse big JSON

Hello!

I’m using the bot in Docker, and there is a problem with big alert messages: in the Telegram chat we get «Error sending message, checkout logs».

In the Docker logs there are errors «Error sending message: Bad Request: can’t parse entities: Unexpected end tag at byte offset 0», with different numbers at the end.

JSON data is here: https://pastebin.com/31uZTPZa

Add support for topics

Telegram introduced forums: a chat that has multiple topics inside (technically, it is one big chat). Bots also support this feature. I’d like to send a notification to a specific topic, but can’t find a setting for it, so I guess it’s not implemented.

Adding this feature is as simple as adding one more parameter named message_thread_id to the Telegram Bot API request.
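`message_thread_id` is a parameter of the Telegram Bot API `sendMessage` method, alongside `chat_id`, `text` and `parse_mode`. The sketch below builds such a request body with only the standard library. `sendMessageForm` and the chat and topic IDs are hypothetical illustrations. The bot itself talks to Telegram through `gopkg.in/telegram-bot-api.v4` (visible in a build log on this page), and that older library may not expose this field, so the real change could need a library upgrade or a raw API call.

```go
package main

import (
	"fmt"
	"net/url"
	"strconv"
)

// sendMessageForm builds the form body for the Bot API sendMessage method.
// threadID == 0 means "no topic", so message_thread_id is omitted.
// Hypothetical helper for illustration.
func sendMessageForm(chatID int64, threadID int, text string) url.Values {
	v := url.Values{}
	v.Set("chat_id", strconv.FormatInt(chatID, 10))
	v.Set("text", text)
	v.Set("parse_mode", "HTML")
	if threadID != 0 {
		v.Set("message_thread_id", strconv.Itoa(threadID))
	}
	return v
}

func main() {
	form := sendMessageForm(-1001234567890, 42, "<b>FIRING</b>")
	// This body would be POSTed to https://api.telegram.org/bot<token>/sendMessage
	fmt.Println(form.Encode())
}
```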

Mix of firing and resolved alerts is displayed incorrectly

I’m migrating all alerts from Slack to Telegram right now. My testing shows wrong output from prometheus_bot.

Slack:

Telegram:

How to reproduce. The easiest way to trigger an alert is to turn off an exporter (node exporter in my case).

- turn off some exporters.

- wait for an alert to come.

- turn on those exporters and turn off some others at the same time.

- wait for a wrong alert.

Expected behavior. Firing and resolved alerts are not mixed together. I don’t see the way to achieve it with custom templates.

My environment.

All running in docker:

- alertmanager:v0.16.1

- prometheus_bot:v2

- prometheus:v2.7.2

slack alerts are configured using these custom templates.
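Each entry in the webhook's `alerts` array carries its own `status`, as the JSON dumps on this page show. That suggests one possible template-side approach: range over the alerts twice, once per status. The sketch below is written in Go with `text/template` and a simplified, hypothetical `Alert`/`Payload` type. Whether the bot exposes a per-alert `.Status` to custom templates in exactly this shape is an assumption to verify against `testdata/default.tmpl`.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// Alert is a simplified, hypothetical view of one "alerts" entry.
type Alert struct {
	Status string
	Labels map[string]string
}

// Payload stands in for the data passed to the template.
type Payload struct {
	Alerts []Alert
}

// Two passes over .Alerts: the first prints only firing alerts,
// the second only resolved ones, so the two groups never interleave.
const tmplText = `{{range .Alerts}}{{if eq .Status "firing"}}FIRING {{.Labels.instance}}
{{end}}{{end}}{{range .Alerts}}{{if eq .Status "resolved"}}RESOLVED {{.Labels.instance}}
{{end}}{{end}}`

func render(p Payload) (string, error) {
	t, err := template.New("grouped").Parse(tmplText)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := t.Execute(&sb, p); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	out, err := render(Payload{Alerts: []Alert{
		{Status: "firing", Labels: map[string]string{"instance": "a:9100"}},
		{Status: "resolved", Labels: map[string]string{"instance": "b:9100"}},
		{Status: "firing", Labels: map[string]string{"instance": "c:9100"}},
	}})
	if err != nil {
		panic(err)
	}
	fmt.Print(out)
}
```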

Container build fails

[email protected]:~/prometheus_bot$ podman build -t incaller_prometheus_bot .

[truncated output]

# github.com/gin-gonic/gin

/go/src/github.com/gin-gonic/gin/context.go:77:11: undefined: http.SameSite

github.com/technoweenie/multipartstreamer

gopkg.in/telegram-bot-api.v4

Error: error building at STEP "RUN cd / && apk update && apk add --no-cache git ca-certificates make tzdata && git clone https://github.com/inCaller/prometheus_bot && cd prometheus_bot && go get -d -v && CGO_ENABLED=0 GOOS=linux go build -v -a -installsuffix cgo -o prometheus_bot": error while running runtime: exit status 2

Change your configuration to use my library gostructor

Hey! I would like to help you with the configuration. I see that you use global variables in your code, and I didn’t really like that. Let me integrate the gostructor configuration library into your bot. It lets you load configuration from different sources, including secrets from Vault, and support for Kubernetes secrets is planned.

The library is here: https://github.com/goreflect/gostructor

I would be glad to receive suggestions and comments on my library.

Create docker build for bot

It would be nice to have a container like that.

Or at least have a binary executable in the releases.

Conflict: can’t use getUpdates method while webhook is active

prometheus_bot Version: Latest commit 3767980 on Jul 26

2018/10/05 16:48:41 getUpdates resp: {"ok":false,"error_code":409,"description":"Conflict: can't use getUpdates method while webhook is active"}

2018/10/05 16:48:41 Conflict: can’t use getUpdates method while webhook is active

2018/10/05 16:48:41 Failed to get updates, retrying in 3 seconds…

Bot responds.

Is it possible to make the bot stop reading input from the channel? Is one-way communication possible? Right now, when I type something in the chat, the bot replies with my chat ID.

Golden output test missing

As we have lots of pull requests adding more message styles, it would be good to ensure the original formats are not affected.

Send messages to multiple telegram groups

Is it possible to send messages to several groups if multiple receivers are configured in the Alertmanager? Listing several telegram_token separated by commas does not work. It also fails to run by pointing to several configuration files (-c config1.yaml -c config2.yaml). Thanks.

Docker container constantly in restarting state

Hello,

I have set up the Telegram bot following the manual steps, but after starting up, the container stays in the restarting phase, and because of this I am not able to receive any alerts on the Telegram channel.

What I can see in the logs is:

2021/06/21 13:38:45 Load template file:/etc/telegrambot/template.tmpl

2021/06/21 13:38:45 Authorised on account mine_alerts_bot

Can anyone help here, please?

put on dockerhub?

Hi, thanks for making this telegram adapter! It looks like just the thing I need. Could you publish it on Docker Hub to make it easier to try out? Thanks!

Flood by «Error sending message, checkout logs» messages

Steps to reproduce

- Use a template with map fields, for example (here we read the `summary` field of `CommonAnnotations`):

```
Alert {{.Status}}
{{.CommonAnnotations.summary}}
```

- Send JSON with an empty map, for example:

```
###skipped###
        "startsAt": "2017-03-15T09:23:37.861+03:00"
    }
],
"commonAnnotations": {
},
"commonLabels": {
    "alertname": "low_disk_space",
###skipped###
```

Expected behavior: the bot sends an empty string instead of {{.CommonAnnotations.summary}}.

Real behavior:

- With the test suite we got one «Error sending message, checkout logs» message and a 503 error in the logs:

+--------------- F I N A L M E S S A G E ---------------+

Alert firing

<no value>

+-----------------------------------------------------------+

Error sending message: Bad Request

[GIN] 2017/03/15 - 17:06:04 | 503 | 1.585562539s | 127.0.0.1 | POST /alert/-XXXXXX

- With a real Alertmanager, we got an endless flood of Error sending message, checkout logs messages. It looks like Alertmanager sends a POST, gets a 503, resends the POST, gets a 503 again, and so on.
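The `<no value>` line is what Go's text/template prints for a missing map key. Telegram's HTML parser then likely rejects it as a malformed tag, which would explain the Bad Request.

The sketch below assumes annotations are decoded into a `map[string]string`, as in Alertmanager's webhook payload; the bot's own types may differ. It shows that the standard `missingkey=zero` template option renders an empty string instead. `Payload` and `renderMsg` are hypothetical names. With a `map[string]interface{}`, the zero value is a nil interface, which should still print as `<no value>`.

```go
package main

import (
	"bytes"
	"fmt"
	"text/template"
)

// Payload mirrors only the fields this example needs (assumed shape,
// not prometheus_bot's real type).
type Payload struct {
	Status            string
	CommonAnnotations map[string]string
}

// renderMsg executes tmplText against p. With zeroMissing set, a missing
// map key yields the element type's zero value ("" for strings) instead
// of the "<no value>" placeholder.
func renderMsg(tmplText string, p Payload, zeroMissing bool) (string, error) {
	t := template.New("msg")
	if zeroMissing {
		t = t.Option("missingkey=zero")
	}
	t, err := t.Parse(tmplText)
	if err != nil {
		return "", err
	}
	var buf bytes.Buffer
	if err := t.Execute(&buf, p); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	const tmpl = "Alert {{.Status}}\n{{.CommonAnnotations.summary}}"
	p := Payload{Status: "firing", CommonAnnotations: map[string]string{}}

	def, _ := renderMsg(tmpl, p, false)
	zero, _ := renderMsg(tmpl, p, true)
	fmt.Printf("%q\n%q\n", def, zero)
}
```

Without changing any options, you can wrap the field as `{{ with .CommonAnnotations.summary }}{{ . }}{{ end }}`. This also prints nothing for a missing key, because `with` skips its body when the value is empty or absent.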

Error sending message: Bad Request: can’t parse entities: Expected end tag at byte offset 472

log:

2019/10/31 11:51:38 Bot alert post: 638489237

2019/10/31 11:51:38 +------------------ A L E R T J S O N -------------------+

2019/10/31 11:51:38 {"alerts":[{"annotations":{"description":"Disk is almost running out of available space (\u003c 10% left)\n VALUE = 3.102\n LABELS: map[datacenter:fr device:/dev/mapper/san1ve-alertmanager fstype:xfs instance:st-csb2.fr:9100 job:node_exporter mountpoint:/mnt/san_volumes/alertmanager]","summary":"Out of disk space (instance st-csb2.fr:9100)"},"endsAt":"0001-01-01T00:00:00Z","generatorURL":"http://prometheus.local:9090/graph?g0.expr=node_filesystem_free_bytes+%2F+node_filesystem_size_bytes+%2A+100+%3C+10\u0026g0.tab=1","labels":{"alertname":"OutOfDiskSpace","datacenter":"fr","device":"/dev/mapper/san1ve-alertmanager","fstype":"xfs","instance":"st-csb2.fr:9100","job":"node_exporter","mountpoint":"/mnt/san_volumes/alertmanager","severity":"warning"},"startsAt":"2019-10-31T11:49:35.277221523Z"}],"commonAnnotations":{"description":"Disk is almost running out of available space (\u003c 10% left)\n VALUE = 3.102\n LABELS: map[datacenter:fr device:/dev/mapper/san1ve-alertmanager fstype:xfs instance:st-csb2.fr:9100 job:node_exporter mountpoint:/mnt/san_volumes/alertmanager]","summary":"Out of disk space (instance st-csb2.fr:9100)"},"commonLabels":{"alertname":"OutOfDiskSpace","datacenter":"fr","device":"/dev/mapper/san1ve-alertmanager","fstype":"xfs","instance":"st-csb2.fr:9100","job":"node_exporter","mountpoint":"/mnt/san_volumes/alertmanager","severity":"warning"},"externalURL":"http://alertmanager.fr.local:9093","groupKey":0,"groupLabels":{"alertname":"OutOfDiskSpace","instance":"st-csb2.fr:9100"},"receiver":"adm","status":"firing","version":0}

2019/10/31 11:51:38 +-----------------------------------------------------------+

2019/10/31 11:51:38 +--------------- F I N A L M E S S A G E ---------------+

2019/10/31 11:51:38 <a href='http://alertmanager.fr.local:9093/#/alerts?receiver=adm'>[FIRING:1]</a>

grouped by: alertname=<code>OutOfDiskSpace</code>, instance=<code>st-csb2.fr:9100</code>

labels: datacenter=<code>fr</code>, device=<code>/dev/mapper/san1ve-alertmanager</code>, fstype=<code>xfs</code>, job=<code>node_exporter</code>, mountpoint=<code>/mnt/san_volumes/alertmanager</code>, severity=<code>warning</code>

description: <code>Disk is almost running out of available space (< 10% left)

VALUE = 3.102

LABELS: map[datacenter:fr device:/dev/mapper/san1ve-alertmanager fstype:xfs instance:st-csb2.fr:9100 job:node_exporter mountpoint:/mnt/san_volumes/alertmanager]</code>

summary: <code>Out of disk space (instance st-csb2.fr:9100)</code>

<a href='http://prometheus.local:9090/graph?g0.expr=node_filesystem_free_bytes+%2F+node_filesystem_size_bytes+%2A+100+%3C+10&g0.tab=1'>st-csb2.fr[node_exporter]</a>

2019/10/31 11:51:38 +-----------------------------------------------------------+

2019/10/31 11:51:38 Error sending message: Bad Request: can't parse entities: Expected end tag at byte offset 472

[GIN] 2019/10/31 - 11:51:38 | 503 | 66.474775ms | 192.168.100.128 | POST /alert/638489237

config.yaml :

telegram_token: "5327342:GVJsadjgvwequJHSAGh"

time_zone: "Europe/Sofia"

split_token: "|"

Prometheus alerting rule:

- alert: OutOfDiskSpace
  expr: node_filesystem_free_bytes / node_filesystem_size_bytes * 100 < 10
  for: 5m
  labels:
    severity: warning
  annotations:
    summary: "Out of disk space (instance {{ $labels.instance }})"
    description: "Disk is almost running out of available space (< 10% left)\n VALUE = {{ $value | humanize }}\n LABELS: {{ $labels }}"
    # description: "Disk is almost full (< 10% left)\n {{ $labels.instance_short }}\n {{ $labels.mountpoint }}\n VALUE = {{ printf "node_filesystem_avail_bytes{mountpoint='%s'}" .Labels.mountpoint | query | first | value | humanize1024 }}"

The problem is the description: the special symbol in (< 10% left) cannot be parsed.

P.S. I am not a programmer and I don't know how to fix it, so I can't make a PR, sorry.
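Telegram's HTML parse mode treats a bare `<` as the start of a tag, which matches the "Expected end tag" error. The minimal sketch below assumes the message is rendered with Go's text/template. It pipes values through the standard built-in `html` function, which turns `<`, `>` and `&` into entities before the text reaches Telegram. `renderDesc` is a hypothetical name, and the `<code>` wrapper only mimics the bot output shown in the log above.

```go
package main

import (
	"bytes"
	"fmt"
	"text/template"
)

// descTmpl wraps the description in <code>, but escapes the value first
// with text/template's built-in html function.
const descTmpl = `description: <code>{{ .CommonAnnotations.description | html }}</code>`

// renderDesc renders descTmpl for the given annotations map.
func renderDesc(ann map[string]string) (string, error) {
	t, err := template.New("desc").Parse(descTmpl)
	if err != nil {
		return "", err
	}
	var buf bytes.Buffer
	err = t.Execute(&buf, map[string]any{"CommonAnnotations": ann})
	return buf.String(), err
}

func main() {
	out, err := renderDesc(map[string]string{
		"description": "Disk is almost running out of available space (< 10% left)",
	})
	if err != nil {
		panic(err)
	}
	// prints: description: <code>Disk is almost running out of available space (&lt; 10% left)</code>
	fmt.Println(out)
}
```

You can add the same pipe to any field that may carry free text, for example `{{ .Annotations.description | html }}` inside a range over alerts.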

Value null

Why are Type, Summary, and Instance empty?

config template.tmpl:

Type: "{{ .CommonAnnotations.description }}"

Summary: "{{.CommonAnnotations.summary}}"

Alertname: "{{ .CommonLabels.alertname }}"

Instance: "{{ .CommonLabels.instance }}"

Severity: {{ .CommonLabels.severity}}

Status: {{ .Status }}

output:

Type: ""

Summary: ""

Alertname: "HostOutOfMemory"

Instance: ""

Severity: warning

Status: firing

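This happens because CommonAnnotations and CommonLabels only contain the key-value pairs that are identical across all alerts in the notification. Every time series is uniquely identified by its metric name and a set of labels, and every unique combination of label pairs is a separate alert. When a group contains alerts from several instances, keys such as instance (and annotations that embed it) differ between alerts and are therefore left out.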

Error sending message: Bad Request: chat not found

Hello, I completed the setup, and my chat ID contains a minus sign (-).

I put it in Alertmanager as described in the manual, including the minus sign, but I keep getting this error:

Error sending message: Bad Request: chat not found

In my Alertmanager config I put it as:

'http://prometheus-bot:9087/alert/-1001719930860'

Why did the test fail?

$ cat config.yaml
telegram_token: "xxx:xxx"
# ONLY IF YOU USING DATA FORMATTING FUNCTION, NOTE for developer: important or test fail
time_outdata: "02/01/2006 15:04:05"
#template_path: "template.tmpl" # ONLY IF YOU USING TEMPLATE
time_zone: "Asia/Shanghai" # ONLY IF YOU USING TEMPLATE
split_msg_byte: 4000
send_only: true # use bot only to send messages.

$ TELEGRAM_CHATID="-100xxx" make test
go build -o prometheus_bot
prove -v t/curl.t ..
1..25
head: illegal line count -- -1
ok 1 - emptyValue.json template none
head: illegal line count -- -1
ok 2 - simpe.json template none
head: illegal line count -- -1
ok 3 - big_output.json template none
head: illegal line count -- -1
ok 4 - production_example.json template none
head: illegal line count -- -1
ok 5 - noGenURL.json template none
head: illegal line count -- -1
ok 6 - emptyValue.json template production_example.tmpl
head: illegal line count -- -1
ok 7 - simpe.json template production_example.tmpl
head: illegal line count -- -1
ok 8 - big_output.json template production_example.tmpl
head: illegal line count -- -1
ok 9 - production_example.json template production_example.tmpl
head: illegal line count -- -1
ok 10 - noGenURL.json template production_example.tmpl
head: illegal line count -- -1
ok 11 - emptyValue.json template default.tmpl
head: illegal line count -- -1
ok 12 - simpe.json template default.tmpl
head: illegal line count -- -1
ok 13 - big_output.json template default.tmpl
head: illegal line count -- -1
ok 14 - production_example.json template default.tmpl
head: illegal line count -- -1
ok 15 - noGenURL.json template default.tmpl
head: illegal line count -- -1
ok 16 - emptyValue.json template malformed_html.tmpl
head: illegal line count -- -1
ok 17 - simpe.json template malformed_html.tmpl
head: illegal line count -- -1
not ok 18 - big_output.json template malformed_html.tmpl
head: illegal line count -- -1
not ok 19 - production_example.json template malformed_html.tmpl
head: illegal line count -- -1
not ok 20 - noGenURL.json template malformed_html.tmpl
head: illegal line count -- -1
not ok 21 - emptyValue.json template detailed_vars.tmpl
head: illegal line count -- -1
not ok 22 - simpe.json template detailed_vars.tmpl
head: illegal line count -- -1
not ok 23 - big_output.json template detailed_vars.tmpl
head: illegal line count -- -1
not ok 24 - production_example.json template detailed_vars.tmpl
head: illegal line count -- -1
not ok 25 - noGenURL.json template detailed_vars.tmpl
Failed 8/25 subtests

Test Summary Report
-------------------
t/curl.t (Wstat: 0 Tests: 25 Failed: 8)
Failed tests: 18-25
Files=1, Tests=25, 40 wallclock secs ( 0.01 usr 0.01 sys + 1.29 cusr 0.66 csys = 1.97 CPU)
Result: FAIL
make: *** [test] Error 1

Can I use the Alertmanager templates?

Hello, thanks for prometheus_bot.

Can I use the Alertmanager templates for the Telegram templates?

thank you~

prometheus_bot fails when the message is too long

In some cases we have very long alert messages. For example, we have an alert for under-replicated Kafka topics, grouped by instance, and an entire instance with 40 topics failed.

When prometheus_bot tries to send such a message, it fails and floods the channel with Error sending message, checkout logs messages.

The Telegram API has a message length limit of 4096 UTF-8 characters (https://core.telegram.org/method/messages.sendMessage). It looks like your bot doesn't check the message length.

How to get instance of servers down

Hi!

I have run into a problem: the alert I receive via Telegram only looks like this:

Status: FIRING 🔥

Active Alert List:

Alert:

Current value:Severity: critical

Active from:

How can I get the instance of the server that is down?

Thank you so much!!

Tag releases and regularly publish on Dockerhub

Can you tag releases and regularly build and publish a Docker image? It would make it easier to include this in a docker-compose based Prometheus deployment.

Automate publish docker image to github registry

Hi, I noticed that the project has no automated build and does not publish images to the GitHub registry. Would you like help with this? I can open an MR if you agree.

What would it give? People would not have to build the project locally; they could pull the image straight into their local or production environment.

[FEATURE REQUEST] Please create binary release

Hello! Thanks for prometheus_bot.

Please create binary release.

Introduction

In Part 1, we successfully set up Prometheus and exporters. In this part, we are going to set up Alertmanager and send our first alert.

Alertmanager is maintained by the Prometheus project and is written in Go. It receives the alerts that Prometheus sends and takes care of deduplicating and grouping them and routing them to the correct receiver integration, such as email, PagerDuty, or OpsGenie. It also takes care of silencing and inhibiting alerts.

Let's set up Alertmanager :)

Installation:

The prerequisites are the same as in Part 1. We will download the precompiled Alertmanager binary, although a Docker image is also available if you prefer to use it.

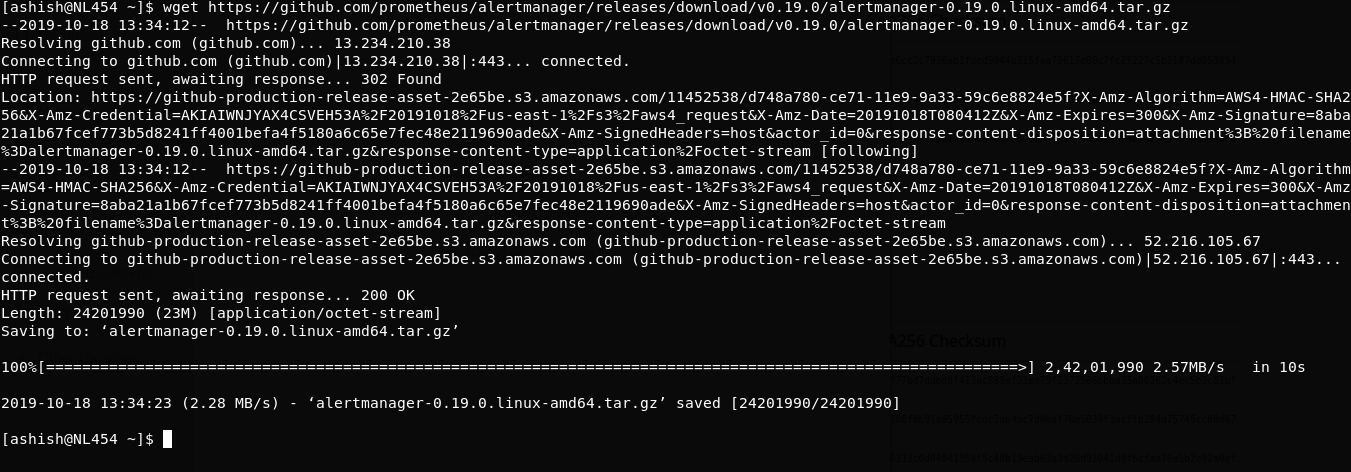

Step 1: Download alertmanager

$ wget https://github.com/prometheus/alertmanager/releases/download/v0.19.0/alertmanager-0.19.0.linux-amd64.tar.gz

Step 2: Extract the archive

$ tar -xvzf alertmanager-0.19.0.linux-amd64.tar.gz

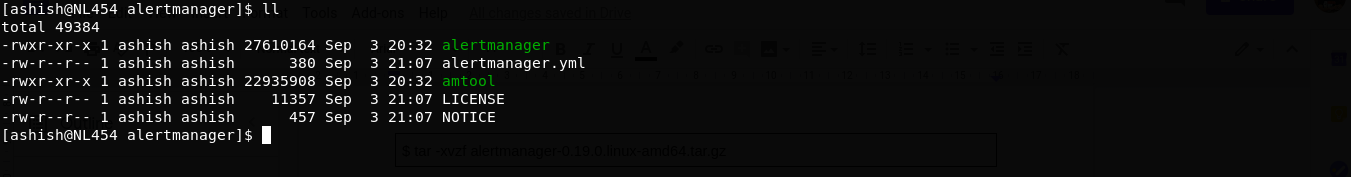

Step 3: Folder structure

$ mv alertmanager-0.19.0.linux-amd64 alertmanager

$ cd alertmanager/

$ ls -l

The folder contains the following files:

- alertmanager: the binary that runs the core Alertmanager daemon.
- alertmanager.yml: the configuration file for the Alertmanager service.
- amtool: another binary, a command-line utility for managing alerts and silences on Alertmanager.

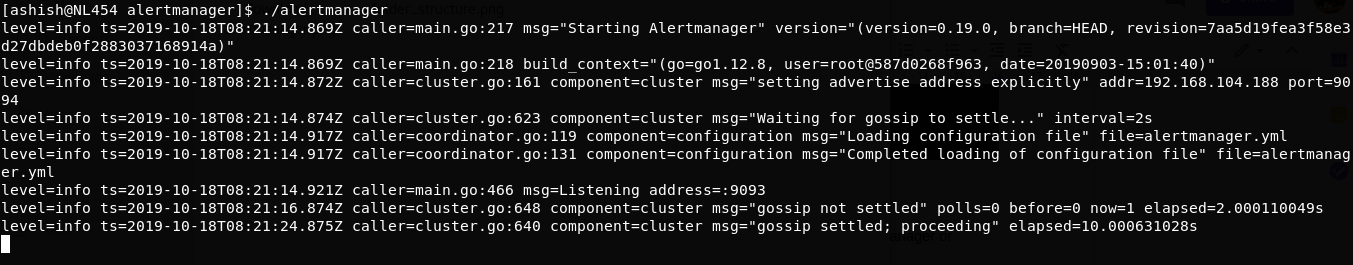

Step 4: Run alertmanager

Execute the binary:

$ ./alertmanager

Visit localhost:9093 in your browser:

Your Alertmanager is up.

By default, Alertmanager stores its data in the data/ folder of the working directory.

To check Alertmanager metrics, visit localhost:9093/metrics.

My production command is:

~/alertmanager/alertmanager --config.file=$HOME/alertmanager/alertmanager.yml --storage.path=/var/lib/alertmanager --web.external-url=http://myurl.com:9093

- --storage.path: the directory where Alertmanager stores its data.
- --web.external-url: the URL under which Alertmanager is reachable from outside; it is used to build links back to Alertmanager, for example in notifications.

Step 5: Run alertmanager as service

- Create file

vim /etc/systemd/system/alertmanager.service

- Paste Below code

[Unit]

Description=AlertManager Server Service

Wants=network-online.target

After=network-online.target

[Service]

User=root

Type=simple

ExecStart=~/alertmanager/alertmanager --config.file=~/alertmanager/alertmanager.yml --storage.path=/var/lib/alertmanager --web.external-url=http://myurl.com:9093

[Install]

WantedBy=multi-user.target

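Save and exit. systemd does not expand ~, so replace it in ExecStart with the absolute path of your alertmanager directory.

The service will not work yet, because we have not written our alertmanager.yml; it is defined below.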

- Reload the systemd daemon:

$ sudo systemctl daemon-reload

- Start the alertmanager service:

$ sudo systemctl start alertmanager

Set Up Alerts

Step 1: Add settings in prometheus.yml

Open the prometheus.yml file:

$ vim ~/prometheus/prometheus.yml

Add the following code:

scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'prometheus'
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ['localhost:9090']

  # Add below block for node_exporter
  - job_name: node_exporter
    scrape_interval: 1m
    scrape_timeout: 1m
    metrics_path: "/metrics"
    static_configs:
      - targets: ['localhost:9100']

# Alertmanager settings
rule_files:
  - 'alert.rules.yml' # resolved relative to prometheus.yml; Prometheus does not expand ~

alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - 'myurl.com:9093'

- rule_files: These are the files that contain the rules. Prometheus has its own syntax for defining rules; we will see it below.
- alerting: This is where we define the Alertmanager configuration. In targets, we have defined myurl.com:9093, which is the address and port where our Alertmanager is running.

Step 2: How will the Prometheus service work?

In the scrape_configs above we have defined the scrape configurations. In this example, Prometheus scrapes the node_exporter job every minute (scrape_interval is 1m). It collects everything available on localhost:9100/metrics and, in general, on every target listed in the targets array.

It stores the data in its internal time-series database.

It also evaluates the alert rules defined in alert.rules.yml at a regular interval (evaluation_interval, 1m by default). As soon as an alert rule's expression becomes true, Prometheus sends the alert to Alertmanager at myurl.com:9093.

Once Alertmanager receives an alert, it checks alertmanager.yml to decide which channel (Slack, email, PagerDuty, etc.) the alert should go to, and sends the notification through that channel. Now let's define two files:

- alert.rules.yml
- alertmanager.yml

Step 3: Create alert.rules.yml

Create the ~/prometheus/alert.rules.yml file and paste the following sample rules:

groups:
  - name: Disk-usage
    rules:
      - alert: 'Low data disk space'
        expr: ceil(((node_filesystem_size_bytes{mountpoint!="/boot"} - node_filesystem_free_bytes{mountpoint!="/boot"}) / node_filesystem_size_bytes{mountpoint!="/boot"} * 100)) > 95
        labels:
          severity: 'critical'
        annotations:
          title: "Disk Usage"
          description: 'Partition : {{$labels.mountpoint}}'
          summary: "Disk usage is `{{humanize $value}}%`"
          host: "{{$labels.instance}}"
  - name: Memory-usage
    rules:
      - alert: 'High memory usage'
        expr: ceil((((node_memory_MemTotal_bytes - node_memory_MemFree_bytes - node_memory_Buffers_bytes - node_memory_Cached_bytes) / node_memory_MemTotal_bytes) * 100)) > 80
        labels:
          severity: 'critical'
        annotations:
          title: "Memory Usage"
          description: 'Memory usage threshold set to `80%`.'
          summary: "Memory usage is `{{humanize $value}}%`"
          host: "{{$labels.instance}}"

Here we have defined two rules:

- If memory utilization exceeds 80%, it triggers an email.
- If the usage of any disk partition exceeds 95%, it triggers an email.
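Both expr lines are plain arithmetic on node_exporter gauges. This Go sketch mirrors them so you can check the thresholds by hand. The function names are hypothetical, and the inputs stand in for the metric values Prometheus would read from one target.

```go
package main

import (
	"fmt"
	"math"
)

// diskUsedPercent mirrors
// ceil((size - free) / size * 100) from the 'Low data disk space' rule.
func diskUsedPercent(sizeBytes, freeBytes float64) float64 {
	return math.Ceil((sizeBytes - freeBytes) / sizeBytes * 100)
}

// memUsedPercent mirrors
// ceil((MemTotal - MemFree - Buffers - Cached) / MemTotal * 100)
// from the 'High memory usage' rule.
func memUsedPercent(total, free, buffers, cached float64) float64 {
	return math.Ceil((total - free - buffers - cached) / total * 100)
}

func main() {
	// 100 GiB disk with 4 GiB free: 96% used, above the 95% threshold.
	disk := diskUsedPercent(100<<30, 4<<30)
	fmt.Println(disk, disk > 95)

	// 16 GiB RAM, 2 GiB free, 1 GiB buffers, 1 GiB cache: 75% used, below 80%.
	mem := memUsedPercent(16<<30, 2<<30, 1<<30, 1<<30)
	fmt.Println(mem, mem > 80)
}
```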

You will get more insights on defining alerting rules here.

Now you might be wondering what node_filesystem_size_bytes and node_memory_MemTotal_bytes are.

Recall from Part 1 that when we set up node_exporter and visited localhost:9100/metrics, it listed a set of metrics; the names above come from that list. Whichever exporter you use, you build the expr of an alert rule from the metrics it exposes.
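To see where these names come from, you can pick a sample out of the text that localhost:9100/metrics returns. The simplified Go sketch below uses a hypothetical `sampleValue` helper. The sample lines are illustrative, not real output.

The helper skips `# HELP`/`# TYPE` comments, matches the metric name with or without labels, and ignores an optional timestamp. It does not handle label values that contain spaces. For real use, an exposition-format parser such as the expfmt package from github.com/prometheus/common is more robust.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// sampleValue scans Prometheus text-format output and returns the value
// of the first sample whose series is name or starts with name + "{".
func sampleValue(exposition, name string) (float64, bool) {
	sc := bufio.NewScanner(strings.NewReader(exposition))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if line == "" || strings.HasPrefix(line, "#") {
			continue // blank line or HELP/TYPE comment
		}
		fields := strings.Fields(line) // series, value, optional timestamp
		if len(fields) < 2 {
			continue
		}
		series := fields[0]
		if series != name && !strings.HasPrefix(series, name+"{") {
			continue
		}
		v, err := strconv.ParseFloat(fields[1], 64)
		if err != nil {
			return 0, false
		}
		return v, true
	}
	return 0, false
}

func main() {
	// Illustrative lines in the Prometheus text format.
	const metrics = `# HELP node_memory_MemTotal_bytes Memory information field MemTotal_bytes.
# TYPE node_memory_MemTotal_bytes gauge
node_memory_MemTotal_bytes 1.6777216e+10
node_filesystem_size_bytes{device="/dev/sda1",fstype="ext4",mountpoint="/"} 1.073741824e+11
`
	for _, name := range []string{"node_memory_MemTotal_bytes", "node_filesystem_size_bytes"} {
		if v, ok := sampleValue(metrics, name); ok {
			fmt.Printf("%s = %g\n", name, v)
		}
	}
}
```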

You can validate your alert rule file:

$ promtool check rules alert.rules.yml

promtool is a binary that ships in the Prometheus folder we extracted in Part 1.

You can explore some sample rules here.

Step 4: Create alertmanager.yml

Create the ~/alertmanager/alertmanager.yml file and paste the following code:

global:
  slack_api_url: "https://hooks.slack.com/services/XXXXXXXXXXXXXXX"

route:
  receiver: "default"
  routes:
    - match:
        severity: info
      receiver: slack
    - match:
        severity: critical
      receiver: email
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 5m

receivers:
  - name: default
    email_configs:
      - to: 'to@youremail.com'
        from: 'default-alerts@yourdomain.com'
        smarthost: 'smtp.host.com:2525'
        auth_username: "smtpusername"
        auth_password: "smtppassword"
        html: '{{ template "email" .}}'
  - name: slack
    slack_configs:
      - send_resolved: true
        username: '{{ template "slack.default.username" . }}'
        color: '{{ if eq .Status "firing" }}danger{{ else }}good{{ end }}'
        title: '{{ template "slack.default.title" . }}'
        title_link: '{{ template "slack.default.titlelink" . }}'
        pretext: '{{ .CommonAnnotations.summary }}'
        text: >-
          {{ range .Alerts }}
            *Alert:* {{ .Annotations.summary }} - `{{ .Labels.severity }}`📊
            *Description:* {{ .Annotations.description }}
            *Details:*
            {{ range .Labels.SortedPairs }} • *{{ .Name }}:* `{{ .Value }}`
            {{ end }}
          {{ end }}
  - name: email
    email_configs:
      - to: 'to@youremail.com'
        from: 'default-alerts@yourdomain.com'
        smarthost: 'smtp.host.com:2525'
        auth_username: "smtpusername"
        auth_password: "smtppassword"
        html: '{{ template "email" .}}'

templates:
  - '../prometheus/alert.tmpl' # relative to alertmanager.yml; Alertmanager does not expand ~

Here we have set up two alert channels, email and Slack. The alertmanager.yml file has four main components:

- global: Here we can define global settings, such as slack_api_url.
- route: The routing block. I route on severity, but you can route on whichever label you choose. Here, if severity == 'info', the alert goes to Slack; if severity == 'critical', it goes via email. Alerts that match neither go to the default receiver.
- receivers: Here we define the channels that alerts are sent through. For now I have defined only email and Slack. You can explore more receivers here.
- templates: Alert templates; here I have defined the HTML template for the email alert. Templates are not restricted to email; you can define one for any channel. Explore more details about templates here.
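The routing follows a simple rule. Child routes are checked in order, and the first one whose match block fits the alert's labels wins. An alert that matches none falls back to the top-level receiver.

Below is a simplified Go sketch of that lookup with hypothetical types. Real Alertmanager routing also supports regex matchers, `continue`, nested routes and grouping, none of which are modelled here.

```go
package main

import "fmt"

// Route is a simplified stand-in for one entry under route.routes.
type Route struct {
	Match    map[string]string // every pair must be present in the alert's labels
	Receiver string
}

// matches reports whether all Match pairs are present with equal values.
func (r Route) matches(labels map[string]string) bool {
	for k, v := range r.Match {
		if got, ok := labels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

// pickReceiver returns the receiver of the first matching child route,
// falling back to the top-level receiver when none matches.
func pickReceiver(defaultReceiver string, routes []Route, labels map[string]string) string {
	for _, r := range routes {
		if r.matches(labels) {
			return r.Receiver
		}
	}
	return defaultReceiver
}

func main() {
	routes := []Route{
		{Match: map[string]string{"severity": "info"}, Receiver: "slack"},
		{Match: map[string]string{"severity": "critical"}, Receiver: "email"},
	}
	for _, sev := range []string{"info", "critical", "warning"} {
		fmt.Println(sev, "->", pickReceiver("default", routes, map[string]string{"severity": sev}))
	}
}
```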

Step 5: Sample template

Create ~/prometheus/alert.tmpl and paste the following code:

{{ define "email" }}

<html>

<head>

<style type="text/css">

table {

font-family: verdana,arial,sans-serif;

font-size:11px;

color:#333333;

border-width: 1px;

border-color: #999999;

border-collapse: collapse;

}

table th {

background-color:#ff6961;

border-width: 1px;

padding: 8px;

border-style: solid;

border-color: #F54C44;

}

table td {

border-width: 1px;

padding: 8px;

border-style: solid;

border-color: #F54C44;

text-align: right;

}

</style>

</head>

<body>

<table border=1>

<thead>

<tr>

<th>Alert name</th>

<th>Host</th>

<th>Summary</th>

<th>Description</th>

</tr>

</thead>

<tbody>

{{ range .Alerts }}

<tr>

<td>{{ .Labels.alertname }}</td>

<td>{{ .Annotations.host }}</td>

<td>{{ .Annotations.summary }}</td>

<td>{{ .Annotations.description }}</td>

</tr>

{{ end }}

</tbody>

</table>

</body>

</html>

{{end}}

Step 6: Start the alertmanager service and restart Prometheus

$ sudo systemctl start alertmanager

$ sudo systemctl restart prometheus

Test the complete integration:

If everything is set up correctly and an alert rule's expression evaluates to true, an alert is triggered. For debugging, you can start Alertmanager with --log.level=debug to get more detailed logs.

To test an alert, simply lower a threshold to a very small value such as 0% or 1%. After the next rule evaluation and the 30s group_wait, the alert should be sent.

Visit localhost:9093. Any active alerts are listed on the dashboard.

We have now successfully set up alerting with Alertmanager. In Part 3, I explain how you can write your own custom exporters, and Part 4 covers integrating Grafana with Prometheus.