I am facing the error below. I resolved some other errors myself, but I am stuck on this one. Please help me resolve it.

- I have created a "spark" service account with RBAC permissions for the pods, and I am passing it in my manifest file.
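If the job is launched with spark-submit instead of a manifest, the service account is normally given to the driver through the `spark.kubernetes.authenticate.driver.serviceAccountName` property. The sketch below only assembles such an argument list. The API server URL, image name and application name are hypothetical placeholders. The pi.py path is the one from the traceback in this post.

```python
from typing import List


def k8s_submit_args(api_server: str, image: str, service_account: str = "spark",
                    namespace: str = "default",
                    app: str = "local:///opt/spark/examples/src/main/python/pi.py") -> List[str]:
    """Build spark-submit arguments (excluding the executable) for Kubernetes cluster mode."""
    return [
        "--master", f"k8s://{api_server}",
        "--deploy-mode", "cluster",
        "--name", "spark-py",  # hypothetical application name
        "--conf", f"spark.kubernetes.namespace={namespace}",
        "--conf", f"spark.kubernetes.container.image={image}",
        # The driver pod talks to the API server as this service account
        # when it looks up its own pod and requests executor pods.
        "--conf", f"spark.kubernetes.authenticate.driver.serviceAccountName={service_account}",
        app,  # local:// means the file already exists inside the container image
    ]


if __name__ == "__main__":
    # Hypothetical API server address and image name.
    args = k8s_submit_args("https://kubernetes.example.com:6443", "example/spark-py:latest")
    print("spark-submit " + " ".join(args))
```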

Error:

20/05/12 16:57:39 ERROR SparkContext: Error initializing SparkContext.

org.apache.spark.SparkException: External scheduler cannot be instantiated

at org.apache.spark.SparkContext$.org$apache$spark$SparkContext$$createTaskScheduler(SparkContext.scala:2934)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:548)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Thread.java:748)

Caused by: io.fabric8.kubernetes.client.KubernetesClientException: Operation: [get] for kind: [Pod] with name: [spark-py-driver] in namespace: [default] failed.

at io.fabric8.kubernetes.client.KubernetesClientException.launderThrowable(KubernetesClientException.java:64)

at io.fabric8.kubernetes.client.KubernetesClientException.launderThrowable(KubernetesClientException.java:72)

at io.fabric8.kubernetes.client.dsl.base.BaseOperation.getMandatory(BaseOperation.java:237)

at io.fabric8.kubernetes.client.dsl.base.BaseOperation.get(BaseOperation.java:170)

at org.apache.spark.scheduler.cluster.k8s.ExecutorPodsAllocator.$anonfun$driverPod$1(ExecutorPodsAllocator.scala:59)

at scala.Option.map(Option.scala:230)

at org.apache.spark.scheduler.cluster.k8s.ExecutorPodsAllocator.<init>(ExecutorPodsAllocator.scala:58)

at org.apache.spark.scheduler.cluster.k8s.KubernetesClusterManager.createSchedulerBackend(KubernetesClusterManager.scala:113)

at org.apache.spark.SparkContext$.org$apache$spark$SparkContext$$createTaskScheduler(SparkContext.scala:2928)

... 13 more

Caused by: java.net.SocketException: Broken pipe (Write failed)

at java.net.SocketOutputStream.socketWrite0(Native Method)

at java.net.SocketOutputStream.socketWrite(SocketOutputStream.java:111)

at java.net.SocketOutputStream.write(SocketOutputStream.java:155)

at sun.security.ssl.OutputRecord.writeBuffer(OutputRecord.java:431)

at sun.security.ssl.OutputRecord.write(OutputRecord.java:417)

at sun.security.ssl.SSLSocketImpl.writeRecordInternal(SSLSocketImpl.java:894)

at sun.security.ssl.SSLSocketImpl.writeRecord(SSLSocketImpl.java:865)

at sun.security.ssl.AppOutputStream.write(AppOutputStream.java:123)

at okio.Okio$1.write(Okio.java:79)

at okio.AsyncTimeout$1.write(AsyncTimeout.java:180)

at okio.RealBufferedSink.flush(RealBufferedSink.java:224)

at okhttp3.internal.http2.Http2Writer.settings(Http2Writer.java:203)

at okhttp3.internal.http2.Http2Connection.start(Http2Connection.java:514)

at okhttp3.internal.http2.Http2Connection.start(Http2Connection.java:504)

at okhttp3.internal.connection.RealConnection.startHttp2(RealConnection.java:299)

at okhttp3.internal.connection.RealConnection.establishProtocol(RealConnection.java:288)

at okhttp3.internal.connection.RealConnection.connect(RealConnection.java:169)

at okhttp3.internal.connection.StreamAllocation.findConnection(StreamAllocation.java:258)

at okhttp3.internal.connection.StreamAllocation.findHealthyConnection(StreamAllocation.java:135)

at okhttp3.internal.connection.StreamAllocation.newStream(StreamAllocation.java:114)

at okhttp3.internal.connection.ConnectInterceptor.intercept(ConnectInterceptor.java:42)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:121)

at okhttp3.internal.cache.CacheInterceptor.intercept(CacheInterceptor.java:93)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:121)

at okhttp3.internal.http.BridgeInterceptor.intercept(BridgeInterceptor.java:93)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RetryAndFollowUpInterceptor.intercept(RetryAndFollowUpInterceptor.java:127)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:121)

at io.fabric8.kubernetes.client.utils.BackwardsCompatibilityInterceptor.intercept(BackwardsCompatibilityInterceptor.java:119)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:121)

at io.fabric8.kubernetes.client.utils.ImpersonatorInterceptor.intercept(ImpersonatorInterceptor.java:68)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:121)

at io.fabric8.kubernetes.client.utils.HttpClientUtils.lambda$createHttpClient$3(HttpClientUtils.java:111)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:121)

at okhttp3.RealCall.getResponseWithInterceptorChain(RealCall.java:257)

at okhttp3.RealCall.execute(RealCall.java:93)

at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleResponse(OperationSupport.java:411)

at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleResponse(OperationSupport.java:372)

at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleGet(OperationSupport.java:337)

at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleGet(OperationSupport.java:318)

at io.fabric8.kubernetes.client.dsl.base.BaseOperation.handleGet(BaseOperation.java:833)

at io.fabric8.kubernetes.client.dsl.base.BaseOperation.getMandatory(BaseOperation.java:226)

... 19 more

20/05/12 16:57:39 INFO SparkUI: Stopped Spark web UI at http://spark-py-1589302652405-driver-svc.default.svc:4040

20/05/12 16:57:39 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

20/05/12 16:57:39 INFO MemoryStore: MemoryStore cleared

20/05/12 16:57:39 INFO BlockManager: BlockManager stopped

20/05/12 16:57:39 INFO BlockManagerMaster: BlockManagerMaster stopped

20/05/12 16:57:39 WARN MetricsSystem: Stopping a MetricsSystem that is not running

20/05/12 16:57:39 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

20/05/12 16:57:39 INFO SparkContext: Successfully stopped SparkContext

Traceback (most recent call last):

File "/opt/spark/examples/src/main/python/pi.py", line 33, in <module>

.appName("PythonPi")

File "/opt/spark/python/lib/pyspark.zip/pyspark/sql/session.py", line 183, in getOrCreate

File "/opt/spark/python/lib/pyspark.zip/pyspark/context.py", line 370, in getOrCreate

File "/opt/spark/python/lib/pyspark.zip/pyspark/context.py", line 130, in __init__

File "/opt/spark/python/lib/pyspark.zip/pyspark/context.py", line 192, in _do_init

File "/opt/spark/python/lib/pyspark.zip/pyspark/context.py", line 309, in _initialize_context

File "/opt/spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1554, in __call__

File "/opt/spark/python/lib/py4j-0.10.8.1-src.zip/py4j/protocol.py", line 328, in get_return_value

py4j.protocol.Py4JJavaError: An error occurred while calling None.org.apache.spark.api.java.JavaSparkContext.

: org.apache.spark.SparkException: External scheduler cannot be instantiated

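In a nested Java stack trace like this one, the last "Caused by:" entry is usually closest to the actual failure. Here that entry is `java.net.SocketException: Broken pipe (Write failed)`. It was raised while the fabric8 client was opening an HTTP/2 connection to the Kubernetes API server. That suggests a connection-level problem rather than an RBAC rejection, since RBAC denials typically come back as an HTTP 403 response. Below is a minimal sketch for pulling out the cause chain. The helper names are hypothetical, and the sample is abridged from the trace in this post.

```python
from typing import List

# Abridged from the trace in this post.
SAMPLE_TRACE = """\
org.apache.spark.SparkException: External scheduler cannot be instantiated
at org.apache.spark.SparkContext.<init>(SparkContext.scala:548)
Caused by: io.fabric8.kubernetes.client.KubernetesClientException: Operation: [get] for kind: [Pod] with name: [spark-py-driver] in namespace: [default] failed.
at io.fabric8.kubernetes.client.dsl.base.BaseOperation.get(BaseOperation.java:170)
Caused by: java.net.SocketException: Broken pipe (Write failed)
at java.net.SocketOutputStream.socketWrite0(Native Method)
"""


def cause_chain(trace: str) -> List[str]:
    """Return the first non-empty line followed by each 'Caused by:' message, in order."""
    lines = [line.strip() for line in trace.splitlines() if line.strip()]
    if not lines:
        return []
    chain = [lines[0]]
    for line in lines[1:]:
        if line.startswith("Caused by:"):
            chain.append(line[len("Caused by:"):].strip())
    return chain


def root_cause(trace: str) -> str:
    """The innermost cause is the last entry of the chain ('' for an empty trace)."""
    chain = cause_chain(trace)
    return chain[-1] if chain else ""


if __name__ == "__main__":
    print(root_cause(SAMPLE_TRACE))
```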

I am trying to run my Spark job in Hadoop YARN client mode, using the following command:

$ /usr/hdp/current/spark-client/bin/spark-submit --master yarn-client --driver-memory 1g --executor-memory 1g --executor-cores 1 --files param1 --jars param1 param2 --class com.dc.analysis.jobs.AggregationJob sparkanalytics.jar param1 param2 param3
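In Spark 2.x the `yarn-client` master URL is deprecated in favor of `--master yarn --deploy-mode client`. Also, `--jars` and `--files` each expect a single comma-separated list rather than space-separated paths. The sketch below rebuilds the same command in that form. The helper name is hypothetical, and param1/param2/param3 are the placeholders from the post.

```python
import shlex
from typing import List, Sequence

SPARK_SUBMIT = "/usr/hdp/current/spark-client/bin/spark-submit"


def yarn_client_cmd(app_jar: str, main_class: str, jars: Sequence[str], files: Sequence[str],
                    app_args: Sequence[str], driver_memory: str = "1g",
                    executor_memory: str = "1g", executor_cores: int = 1) -> List[str]:
    """Build a spark-submit command for YARN client mode."""
    return [
        SPARK_SUBMIT,
        "--master", "yarn",
        "--deploy-mode", "client",      # replaces the deprecated yarn-client master URL
        "--driver-memory", driver_memory,
        "--executor-memory", executor_memory,
        "--executor-cores", str(executor_cores),
        "--files", ",".join(files),     # comma-separated, no spaces
        "--jars", ",".join(jars),       # comma-separated, no spaces
        "--class", main_class,
        app_jar,                        # arguments after the application jar go to main()
        *app_args,
    ]


cmd = yarn_client_cmd("sparkanalytics.jar", "com.dc.analysis.jobs.AggregationJob",
                      jars=["param1", "param2"], files=["param1"],
                      app_args=["param1", "param2", "param3"])
print(" ".join(shlex.quote(part) for part in cmd))
```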

spark-defaults.conf:

spark.driver.extraJavaOptions -Dhdp.version=2.6.1.0-129

spark.driver.extraLibraryPath /usr/hdp/current/hadoop-client/lib/native:/usr/hdp/current/hadoop-client/lib/native/Linux-amd64-64

spark.eventLog.dir hdfs:///spark-history

spark.eventLog.enabled true

spark.executor.extraLibraryPath /usr/hdp/current/hadoop-client/lib/native:/usr/hdp/current/hadoop-client/lib/native/Linux-amd64-64

spark.history.fs.logDirectory hdfs:///spark-history

spark.history.kerberos.keytab none

spark.history.kerberos.principal none

spark.history.provider org.apache.spark.deploy.history.FsHistoryProvider

spark.history.ui.port 18080

spark.yarn.am.extraJavaOptions -Dhdp.version=2.6.1.0-129

spark.yarn.containerLauncherMaxThreads 25

spark.yarn.driver.memoryOverhead 384

spark.yarn.executor.memoryOverhead 384

spark.yarn.historyServer.address clustername:18080

spark.yarn.preserve.staging.files false

spark.yarn.queue default

spark.yarn.scheduler.heartbeat.interval-ms 5000

spark.yarn.submit.file.replication 3
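Misspelled keys or option names in this file are typically not reported. An `-Dhdp.version` option typed slightly differently, for example, just sets a system property nobody reads. It can therefore help to parse the file and check the entries you depend on. The sketch below is simplified: it assumes one whitespace-separated key/value pair per line and `#` comment lines. Spark itself loads the file with `java.util.Properties`, which also accepts `=` and `:` separators, so this is not a full reimplementation. The check for `-Dhdp.version` in the driver and AM Java options follows the HDP convention used in this config. The function names are hypothetical.

```python
from typing import Dict, List


def parse_spark_defaults(text: str) -> Dict[str, str]:
    """Parse spark-defaults.conf-style text into {key: value}."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)  # first token is the key, the rest of the line is the value
        props[parts[0]] = parts[1].strip() if len(parts) > 1 else ""
    return props


def missing_hdp_version(props: Dict[str, str]) -> List[str]:
    """Return the Java-options keys that do not set -Dhdp.version=..."""
    keys = ("spark.driver.extraJavaOptions", "spark.yarn.am.extraJavaOptions")
    return [key for key in keys if "-Dhdp.version=" not in props.get(key, "")]


if __name__ == "__main__":
    sample = (
        "spark.driver.extraJavaOptions -Dhdp.verion=2.6.1.0-129\n"  # deliberately misspelled
        "spark.yarn.am.extraJavaOptions -Dhdp.version=2.6.1.0-129\n"
        "spark.eventLog.enabled true\n"
    )
    print(missing_hdp_version(parse_spark_defaults(sample)))
```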

I am getting the error below (see attachment).

Attachment: error-logs.txt

I can see the error below in the YARN application log:

$ yarn logs -applicationId application_1510129660245_0004

Attachment: application-1510129660245-0004-log.txt

Exception in thread "main" java.lang.ExceptionInInitializerError

at javax.crypto.JceSecurityManager.<clinit>(JceSecurityManager.java:65)

at javax.crypto.Cipher.getConfiguredPermission(Cipher.java:2587)

at javax.crypto.Cipher.getMaxAllowedKeyLength(Cipher.java:2611)

at sun.security.ssl.CipherSuite$BulkCipher.isUnlimited(Unknown Source)

at sun.security.ssl.CipherSuite$BulkCipher.<init>(Unknown Source)

at sun.security.ssl.CipherSuite.<clinit>(Unknown Source)

at sun.security.ssl.SSLContextImpl.getApplicableCipherSuiteList(Unknown Source)

at sun.security.ssl.SSLContextImpl.access$100(Unknown Source)

at sun.security.ssl.SSLContextImpl$AbstractTLSContext.<clinit>(Unknown Source)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Unknown Source)

at java.security.Provider$Service.getImplClass(Unknown Source)

at java.security.Provider$Service.newInstance(Unknown Source)

at sun.security.jca.GetInstance.getInstance(Unknown Source)

at sun.security.jca.GetInstance.getInstance(Unknown Source)
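Stack traces copied out of YARN logs or web pages often arrive flattened onto a single line, which hides the frame order. Below is a minimal sketch that splits such text back into one entry per line. It assumes frames look like `at <qualified.name>(...)` and that nested exceptions start with `Caused by: `. The function name is hypothetical.

```python
import re
from typing import List

# Start a new line before each "at <frame>(" and before each "Caused by: ".
_BOUNDARY = re.compile(r"\s+(?=at \S+\(|Caused by: )")


def split_flattened_trace(text: str) -> List[str]:
    """Split a Java stack trace that was collapsed onto one line into one entry per line."""
    return [part.strip() for part in _BOUNDARY.split(text.strip()) if part.strip()]


if __name__ == "__main__":
    flat = ('Exception in thread "main" java.lang.ExceptionInInitializerError '
            'at javax.crypto.JceSecurityManager.<clinit>(JceSecurityManager.java:65) '
            'at javax.crypto.Cipher.getConfiguredPermission(Cipher.java:2587)')
    print("\n".join(split_flattened_trace(flat)))
```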

Kindly suggest what is going wrong.

java.net.BindException is a common exception when Spark tries to initialize the SparkContext. It is especially common when you run Spark locally.

16/01/04 13:49:40 ERROR SparkContext: Error initializing SparkContext.

java.net.BindException: Can't assign requested address: Service 'sparkDriver' failed after 16 retries!

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:444)

at sun.nio.ch.Net.bind(Net.java:436)

at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:214)

at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74)

at io.netty.channel.socket.nio.NioServerSocketChannel.doBind(NioServerSocketChannel.java:125)

at io.netty.channel.AbstractChannel$AbstractUnsafe.bind(AbstractChannel.java:485)

at io.netty.channel.DefaultChannelPipeline$HeadContext.bind(DefaultChannelPipeline.java:1089)

at io.netty.channel.AbstractChannelHandlerContext.invokeBind(AbstractChannelHandlerContext.java:430)

at io.netty.channel.AbstractChannelHandlerContext.bind(AbstractChannelHandlerContext.java:415)

at io.netty.channel.DefaultChannelPipeline.bind(DefaultChannelPipeline.java:903)

at io.netty.channel.AbstractChannel.bind(AbstractChannel.java:198)

at io.netty.bootstrap.AbstractBootstrap$2.run(AbstractBootstrap.java:348)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:357)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:357)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

at java.lang.Thread.run(Thread.java:745)

Reason

The most common reason is that Spark is trying to bind to the local host (that is, your computer) for the driver (the 'sparkDriver' service in the log) and is not able to do so.

Solution

Find the hostname of your computer and add it to /etc/hosts.

Find hostname

The hostname command prints the hostname:

[osboxes@wk1 ~]$ hostname

wk1.hirw.com

Add hostname to hosts file

Add an entry like the one below to your /etc/hosts file:

[osboxes@wk1 ~]$ cat /etc/hosts

127.0.0.1 wk1.hirw.com

If you are using Windows, the hosts file is under C:\Windows\System32\drivers\etc.

With this entry in place, the hostname resolves to 127.0.0.1, so Spark is able to bind to that address.
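The two checks described above can be scripted. This sketch assumes an IPv4 setup. It resolves the machine's hostname through the system resolver, which consults /etc/hosts, and then tries to bind a TCP socket to the resulting address. A bind failure here corresponds to the "Can't assign requested address" error in the log. The function names are hypothetical, and Spark's own address selection has more rules than this.

```python
import socket
from typing import Optional


def resolve_hostname(name: Optional[str] = None) -> Optional[str]:
    """Resolve `name` (default: this machine's hostname) to IPv4, or None if it does not resolve."""
    name = name or socket.gethostname()
    try:
        return socket.gethostbyname(name)  # system resolver, including /etc/hosts
    except OSError:
        return None


def can_bind(address: str) -> bool:
    """Return True if a TCP socket can be bound to `address` on an OS-chosen free port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((address, 0))  # port 0: let the OS pick a free port
            return True
        except OSError:  # e.g. EADDRNOTAVAIL, "Can't assign requested address"
            return False


if __name__ == "__main__":
    host = socket.gethostname()
    ip = resolve_hostname(host)
    if ip is None:
        print(f"{host} does not resolve; add it to /etc/hosts")
    else:
        print(f"{host} -> {ip}, bindable: {can_bind(ip)}")
```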

Spark reported an error when submitting a Spark job

./spark-shell

19/05/14 05:37:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

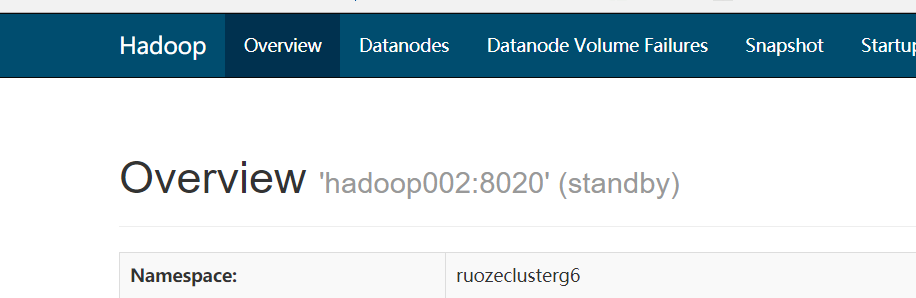

19/05/14 05:37:49 ERROR spark.SparkContext: Error initializing SparkContext.

org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.StandbyException): Operation category READ is not supported in state standby. Visit https://s.apache.org/sbnn-error

at org.apache.hadoop.hdfs.server.namenode.ha.StandbyState.checkOperation(StandbyState.java:88)

at org.apache.hadoop.hdfs.server.namenode.NameNode$NameNodeHAContext.checkOperation(NameNode.java:1826)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkOperation(FSNamesystem.java:1404)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:4208)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:895)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.getFileInfo(AuthorizationProviderProxyClientProtocol.java:527)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:824)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2086)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2082)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1693)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2080)

Cause analysis

Today I enabled Spark's history server. It worked well during testing, but later I found that Spark jobs could not be submitted because the SparkContext could not be started.

By analyzing the log and checking the HDFS web interface, I found that Spark could not connect to the active NameNode. The only HDFS access Spark needs when it starts is writing the job (event) log. So I checked spark-defaults.conf, which specifies the event log path, and sure enough, the path pointed to the standby NameNode:

spark.eventLog.dir hdfs://hadoop002:8020/g6_direcory

So Spark cannot write logs to HDFS, because it is connecting to the standby NameNode.

Solution

Change the log directory path in spark-defaults.conf and spark-env.sh from a single NameNode to the HA nameservice.

My nameservice (fs.defaultFS in core-site.xml) is:

<property>

<name>fs.defaultFS</name>

<value>hdfs://ruozeclusterg6</value>

</property>

Modify spark-defaults.conf

spark.eventLog.enabled true

spark.eventLog.dir hdfs://ruozeclusterg6:8020/g6_direcory

Modify spark-env.sh

SPARK_HISTORY_OPTS="-Dspark.history.fs.logDirectory=hdfs://ruozeclusterg6:8020/g6_direcory"
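The underlying rule is that any HDFS path Spark touches at startup should name the HA nameservice from fs.defaultFS, not one particular NameNode host. That way the HDFS client can fail over to whichever NameNode is active. Below is a small check along those lines, as a sketch. It only compares host names in the URIs; it does not contact HDFS or determine which NameNode is currently active. The function name is hypothetical, and the paths are the ones used in this post.

```python
from urllib.parse import urlsplit

DEFAULT_FS = "hdfs://ruozeclusterg6"  # fs.defaultFS from core-site.xml


def uses_nameservice(path_uri: str, default_fs: str = DEFAULT_FS) -> bool:
    """True if an hdfs:// URI points at the same host (nameservice) as fs.defaultFS."""
    path, fs = urlsplit(path_uri), urlsplit(default_fs)
    return path.scheme == fs.scheme == "hdfs" and path.hostname == fs.hostname


if __name__ == "__main__":
    for uri in ("hdfs://hadoop002:8020/g6_direcory",        # single NameNode (the standby here)
                "hdfs://ruozeclusterg6:8020/g6_direcory"):  # nameservice
        print(uri, uses_nameservice(uri))
```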

Test

[hadoop@hadoop002 spark]$ spark-shell

19/05/14 06:00:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://hadoop002:4040

Spark context available as 'sc' (master = local[*], app id = local-1557828013138).

Spark session available as 'spark'.

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.4.2
      /_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_131)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

Solved!

Type: Bug

Status: Resolved

Priority: Major

Resolution: Not A Problem

Affects Version/s: None

Fix Version/s: None

Component/s: EC2

Hi, I'm trying to start Spark with yarn-client, like this: "spark-shell --master yarn-client", but I'm getting the error below.

If I start Spark with just "spark-shell", everything works fine.

I have a single-node machine where all the Hadoop processes and a Hive metastore server are running.

I have already tried more than 30 different configurations, but nothing works. The config I have now is:

core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://masternode:9000</value>

</property>

</configuration>

hdfs-site.xml:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

yarn-site.xml:

<configuration>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>masternode:8031</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>masternode:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>masternode:8030</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>masternode:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>masternode:8088</value>

</property>

</configuration>
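The log below shows the client connecting to "ResourceManager at localhost/127.0.0.1:8032" and staging files on hdfs://localhost:9000, whereas the files above name masternode. One way to check which values a given config directory actually contains is to parse its *-site.xml files. This sketch assumes the standard `<configuration><property><name/><value/></property></configuration>` layout and reads the directory from the HADOOP_CONF_DIR environment variable. The function names are hypothetical.

```python
import glob
import os
import xml.etree.ElementTree as ET
from typing import Dict


def properties_from_root(root: ET.Element) -> Dict[str, str]:
    """Return {name: value} for each <property> under a parsed Hadoop configuration element."""
    props: Dict[str, str] = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def hadoop_properties(xml_text: str) -> Dict[str, str]:
    """Parse *-site.xml content given as a string."""
    return properties_from_root(ET.fromstring(xml_text))


if __name__ == "__main__":
    conf_dir = os.environ.get("HADOOP_CONF_DIR")
    if conf_dir:
        for path in sorted(glob.glob(os.path.join(conf_dir, "*-site.xml"))):
            props = properties_from_root(ET.parse(path).getroot())
            for key in ("fs.defaultFS", "yarn.resourcemanager.address"):
                if key in props:
                    print(f"{os.path.basename(path)}: {key} = {props[key]}")
```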

Spark configuration:

spark-env.sh:

HADOOP_CONF_DIR=/usr/local/hadoop-2.7.1/hadoop

SPARK_MASTER_IP=masternode

spark-defaults.conf

spark.master spark://masternode:7077

spark.serializer org.apache.spark.serializer.KryoSerializer

Do you know why this is happening?

hadoopadmin@mn:~$ spark-shell --master yarn-client

16/05/14 23:21:07 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

16/05/14 23:21:07 INFO spark.SecurityManager: Changing view acls to: hadoopadmin

16/05/14 23:21:07 INFO spark.SecurityManager: Changing modify acls to: hadoopadmin

16/05/14 23:21:07 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoopadmin); users with modify permissions: Set(hadoopadmin)

16/05/14 23:21:08 INFO spark.HttpServer: Starting HTTP Server

16/05/14 23:21:08 INFO server.Server: jetty-8.y.z-SNAPSHOT

16/05/14 23:21:08 INFO server.AbstractConnector: Started SocketConnector@0.0.0.0:36979

16/05/14 23:21:08 INFO util.Utils: Successfully started service 'HTTP class server' on port 36979.

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 1.6.1
      /_/

Using Scala version 2.10.5 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_77)

Type in expressions to have them evaluated.

Type :help for more information.

16/05/14 23:21:12 INFO spark.SparkContext: Running Spark version 1.6.1

16/05/14 23:21:12 INFO spark.SecurityManager: Changing view acls to: hadoopadmin

16/05/14 23:21:12 INFO spark.SecurityManager: Changing modify acls to: hadoopadmin

16/05/14 23:21:12 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoopadmin); users with modify permissions: Set(hadoopadmin)

16/05/14 23:21:12 INFO util.Utils: Successfully started service 'sparkDriver' on port 33128.

16/05/14 23:21:13 INFO slf4j.Slf4jLogger: Slf4jLogger started

16/05/14 23:21:13 INFO Remoting: Starting remoting

16/05/14 23:21:13 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriverActorSystem@10.15.0.11:34382]

16/05/14 23:21:13 INFO util.Utils: Successfully started service 'sparkDriverActorSystem' on port 34382.

16/05/14 23:21:13 INFO spark.SparkEnv: Registering MapOutputTracker

16/05/14 23:21:13 INFO spark.SparkEnv: Registering BlockManagerMaster

16/05/14 23:21:13 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-a0048199-bf2f-404b-9cd2-b5988367783f

16/05/14 23:21:13 INFO storage.MemoryStore: MemoryStore started with capacity 511.1 MB

16/05/14 23:21:13 INFO spark.SparkEnv: Registering OutputCommitCoordinator

16/05/14 23:21:13 INFO server.Server: jetty-8.y.z-SNAPSHOT

16/05/14 23:21:13 INFO server.AbstractConnector: Started SelectChannelConnector@0.0.0.0:4040

16/05/14 23:21:13 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.

16/05/14 23:21:13 INFO ui.SparkUI: Started SparkUI at http://10.15.0.11:4040

16/05/14 23:21:14 INFO client.RMProxy: Connecting to ResourceManager at localhost/127.0.0.1:8032

16/05/14 23:21:14 INFO yarn.Client: Requesting a new application from cluster with 1 NodeManagers

16/05/14 23:21:14 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)

16/05/14 23:21:14 INFO yarn.Client: Will allocate AM container, with 896 MB memory including 384 MB overhead

16/05/14 23:21:14 INFO yarn.Client: Setting up container launch context for our AM

16/05/14 23:21:14 INFO yarn.Client: Setting up the launch environment for our AM container

16/05/14 23:21:14 INFO yarn.Client: Preparing resources for our AM container

16/05/14 23:21:15 INFO yarn.Client: Uploading resource file:/usr/local/spark-1.6.1-bin-hadoop2.6/lib/spark-assembly-1.6.1-hadoop2.6.0.jar -> hdfs://localhost:9000/user/hadoopadmin/.sparkStaging/application_1463264445515_0001/spark-assembly-1.6.1-hadoop2.6.0.jar

16/05/14 23:21:17 INFO yarn.Client: Uploading resource file:/tmp/spark-3df9a858-4bdb-4c3f-87cb-8768fb2987e7/__spark_conf__6806563942591505644.zip -> hdfs://localhost:9000/user/hadoopadmin/.sparkStaging/application_1463264445515_0001/__spark_conf__6806563942591505644.zip

16/05/14 23:21:17 INFO spark.SecurityManager: Changing view acls to: hadoopadmin

16/05/14 23:21:17 INFO spark.SecurityManager: Changing modify acls to: hadoopadmin

16/05/14 23:21:17 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoopadmin); users with modify permissions: Set(hadoopadmin)

16/05/14 23:21:17 INFO yarn.Client: Submitting application 1 to ResourceManager

16/05/14 23:21:17 INFO impl.YarnClientImpl: Submitted application application_1463264445515_0001

16/05/14 23:21:19 INFO yarn.Client: Application report for application_1463264445515_0001 (state: ACCEPTED)

16/05/14 23:21:19 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: default

start time: 1463264477898

final status: UNDEFINED

tracking URL: http://masternode:8088/proxy/application_1463264445515_0001/

user: hadoopadmin

16/05/14 23:21:20 INFO yarn.Client: Application report for application_1463264445515_0001 (state: ACCEPTED)

16/05/14 23:21:21 INFO yarn.Client: Application report for application_1463264445515_0001 (state: ACCEPTED)

16/05/14 23:21:22 INFO yarn.Client: Application report for application_1463264445515_0001 (state: ACCEPTED)

16/05/14 23:21:23 INFO yarn.Client: Application report for application_1463264445515_0001 (state: ACCEPTED)

16/05/14 23:21:24 INFO yarn.Client: Application report for application_1463264445515_0001 (state: ACCEPTED)

16/05/14 23:21:24 INFO cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as NettyRpcEndpointRef(null)

16/05/14 23:21:24 INFO cluster.YarnClientSchedulerBackend: Add WebUI Filter. org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter, Map(PROXY_HOSTS -> masternode, PROXY_URI_BASES -> http://masternode:8088/proxy/application_1463264445515_0001), /proxy/application_1463264445515_0001

16/05/14 23:21:24 INFO ui.JettyUtils: Adding filter: org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter

16/05/14 23:21:25 INFO yarn.Client: Application report for application_1463264445515_0001 (state: RUNNING)

16/05/14 23:21:25 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: 10.15.0.11

ApplicationMaster RPC port: 0

queue: default

start time: 1463264477898

final status: UNDEFINED

tracking URL: http://masternode:8088/proxy/application_1463264445515_0001/

user: hadoopadmin

16/05/14 23:21:25 INFO cluster.YarnClientSchedulerBackend: Application application_1463264445515_0001 has started running.

16/05/14 23:21:25 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 45282.

16/05/14 23:21:25 INFO netty.NettyBlockTransferService: Server created on 45282

16/05/14 23:21:25 INFO storage.BlockManagerMaster: Trying to register BlockManager

16/05/14 23:21:25 INFO storage.BlockManagerMasterEndpoint: Registering block manager 10.15.0.11:45282 with 511.1 MB RAM, BlockManagerId(driver, 10.15.0.11, 45282)

16/05/14 23:21:25 INFO storage.BlockManagerMaster: Registered BlockManager

16/05/14 23:21:31 INFO cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as NettyRpcEndpointRef(null)

16/05/14 23:21:31 INFO cluster.YarnClientSchedulerBackend: Add WebUI Filter. org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter, Map(PROXY_HOSTS -> masternode, PROXY_URI_BASES -> http://masternode:8088/proxy/application_1463264445515_0001), /proxy/application_1463264445515_0001

16/05/14 23:21:31 INFO ui.JettyUtils: Adding filter: org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter

16/05/14 23:21:34 ERROR cluster.YarnClientSchedulerBackend: Yarn application has already exited with state FINISHED!

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/metrics/json,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage/kill,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/api,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/static,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/threadDump/json,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/threadDump,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/json,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/environment/json,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/environment,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/rdd/json,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/rdd,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/json,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/pool/json,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/pool,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage/json,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/json,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/job/json,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/job,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/json,null}

16/05/14 23:21:34 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs,null}

16/05/14 23:21:34 INFO ui.SparkUI: Stopped Spark web UI at http://10.15.0.11:4040

16/05/14 23:21:34 INFO cluster.YarnClientSchedulerBackend: Shutting down all executors

16/05/14 23:21:34 INFO cluster.YarnClientSchedulerBackend: Asking each executor to shut down

16/05/14 23:21:34 INFO cluster.YarnClientSchedulerBackend: Stopped

16/05/14 23:21:34 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

16/05/14 23:21:34 INFO storage.MemoryStore: MemoryStore cleared

16/05/14 23:21:34 INFO storage.BlockManager: BlockManager stopped

16/05/14 23:21:34 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

16/05/14 23:21:34 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

16/05/14 23:21:34 INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

16/05/14 23:21:34 INFO spark.SparkContext: Successfully stopped SparkContext

16/05/14 23:21:34 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

16/05/14 23:21:34 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remoting shut down.

16/05/14 23:21:44 INFO cluster.YarnClientSchedulerBackend: SchedulerBackend is ready for scheduling beginning after waiting maxRegisteredResourcesWaitingTime: 30000(ms)

16/05/14 23:21:44 ERROR spark.SparkContext: Error initializing SparkContext.

java.lang.NullPointerException

at org.apache.spark.SparkContext.<init>(SparkContext.scala:584)

at org.apache.spark.repl.SparkILoop.createSparkContext(SparkILoop.scala:1017)

at $line3.$read$$iwC$$iwC.<init>(<console>:15)

at $line3.$read$$iwC.<init>(<console>:24)

at $line3.$read.<init>(<console>:26)

at $line3.$read$.<init>(<console>:30)

at $line3.$read$.<clinit>(<console>)

at $line3.$eval$.<init>(<console>:7)

at $line3.$eval$.<clinit>(<console>)

at $line3.$eval.$print(<console>)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.repl.SparkIMain$ReadEvalPrint.call(SparkIMain.scala:1065)

at org.apache.spark.repl.SparkIMain$Request.loadAndRun(SparkIMain.scala:1346)

at org.apache.spark.repl.SparkIMain.loadAndRunReq$1(SparkIMain.scala:840)

at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:871)

at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:819)

at org.apache.spark.repl.SparkILoop.reallyInterpret$1(SparkILoop.scala:857)

at org.apache.spark.repl.SparkILoop.interpretStartingWith(SparkILoop.scala:902)

at org.apache.spark.repl.SparkILoop.command(SparkILoop.scala:814)

at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:125)

at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:124)

at org.apache.spark.repl.SparkIMain.beQuietDuring(SparkIMain.scala:324)

at org.apache.spark.repl.SparkILoopInit$class.initializeSpark(SparkILoopInit.scala:124)

at org.apache.spark.repl.SparkILoop.initializeSpark(SparkILoop.scala:64)

at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1$$anonfun$apply$mcZ$sp$5.apply$mcV$sp(SparkILoop.scala:974)

at org.apache.spark.repl.SparkILoopInit$class.runThunks(SparkILoopInit.scala:159)

at org.apache.spark.repl.SparkILoop.runThunks(SparkILoop.scala:64)

at org.apache.spark.repl.SparkILoopInit$class.postInitialization(SparkILoopInit.scala:108)

at org.apache.spark.repl.SparkILoop.postInitialization(SparkILoop.scala:64)

at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply$mcZ$sp(SparkILoop.scala:991)

at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)

at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)

at scala.tools.nsc.util.ScalaClassLoader$.savingContextLoader(ScalaClassLoader.scala:135)

at org.apache.spark.repl.SparkILoop.org$apache$spark$repl$SparkILoop$$process(SparkILoop.scala:945)

at org.apache.spark.repl.SparkILoop.process(SparkILoop.scala:1059)

at org.apache.spark.repl.Main$.main(Main.scala:31)

at org.apache.spark.repl.Main.main(Main.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:731)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:181)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:206)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:121)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

16/05/14 23:21:44 INFO spark.SparkContext: SparkContext already stopped.

java.lang.NullPointerException

at org.apache.spark.SparkContext.<init>(SparkContext.scala:584)

at org.apache.spark.repl.SparkILoop.createSparkContext(SparkILoop.scala:1017)

at $iwC$$iwC.<init>(<console>:15)

at $iwC.<init>(<console>:24)

at <init>(<console>:26)

at .<init>(<console>:30)

at .<clinit>(<console>)

at .<init>(<console>:7)

at .<clinit>(<console>)

at $print(<console>)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.repl.SparkIMain$ReadEvalPrint.call(SparkIMain.scala:1065)

at org.apache.spark.repl.SparkIMain$Request.loadAndRun(SparkIMain.scala:1346)

at org.apache.spark.repl.SparkIMain.loadAndRunReq$1(SparkIMain.scala:840)

at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:871)

at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:819)

at org.apache.spark.repl.SparkILoop.reallyInterpret$1(SparkILoop.scala:857)

at org.apache.spark.repl.SparkILoop.interpretStartingWith(SparkILoop.scala:902)

at org.apache.spark.repl.SparkILoop.command(SparkILoop.scala:814)

at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:125)

at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:124)

at org.apache.spark.repl.SparkIMain.beQuietDuring(SparkIMain.scala:324)

at org.apache.spark.repl.SparkILoopInit$class.initializeSpark(SparkILoopInit.scala:124)

at org.apache.spark.repl.SparkILoop.initializeSpark(SparkILoop.scala:64)

at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1$$anonfun$apply$mcZ$sp$5.apply$mcV$sp(SparkILoop.scala:974)

at org.apache.spark.repl.SparkILoopInit$class.runThunks(SparkILoopInit.scala:159)

at org.apache.spark.repl.SparkILoop.runThunks(SparkILoop.scala:64)

at org.apache.spark.repl.SparkILoopInit$class.postInitialization(SparkILoopInit.scala:108)

at org.apache.spark.repl.SparkILoop.postInitialization(SparkILoop.scala:64)

at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply$mcZ$sp(SparkILoop.scala:991)

at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)

at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)

at scala.tools.nsc.util.ScalaClassLoader$.savingContextLoader(ScalaClassLoader.scala:135)

at org.apache.spark.repl.SparkILoop.org$apache$spark$repl$SparkILoop$$process(SparkILoop.scala:945)

at org.apache.spark.repl.SparkILoop.process(SparkILoop.scala:1059)

at org.apache.spark.repl.Main$.main(Main.scala:31)

at org.apache.spark.repl.Main.main(Main.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:731)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:181)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:206)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:121)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

java.lang.NullPointerException

at org.apache.spark.sql.SQLContext$.createListenerAndUI(SQLContext.scala:1367)

at org.apache.spark.sql.hive.HiveContext.<init>(HiveContext.scala:101)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.spark.repl.SparkILoop.createSQLContext(SparkILoop.scala:1028)

at $iwC$$iwC.<init>(<console>:15)

at $iwC.<init>(<console>:24)

at <init>(<console>:26)

at .<init>(<console>:30)

at .<clinit>(<console>)

at .<init>(<console>:7)

at .<clinit>(<console>)

at $print(<console>)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.repl.SparkIMain$ReadEvalPrint.call(SparkIMain.scala:1065)

at org.apache.spark.repl.SparkIMain$Request.loadAndRun(SparkIMain.scala:1346)

at org.apache.spark.repl.SparkIMain.loadAndRunReq$1(SparkIMain.scala:840)

at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:871)

at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:819)

at org.apache.spark.repl.SparkILoop.reallyInterpret$1(SparkILoop.scala:857)

at org.apache.spark.repl.SparkILoop.interpretStartingWith(SparkILoop.scala:902)

at org.apache.spark.repl.SparkILoop.command(SparkILoop.scala:814)

at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:132)

at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:124)

at org.apache.spark.repl.SparkIMain.beQuietDuring(SparkIMain.scala:324)

at org.apache.spark.repl.SparkILoopInit$class.initializeSpark(SparkILoopInit.scala:124)

at org.apache.spark.repl.SparkILoop.initializeSpark(SparkILoop.scala:64)

at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1$$anonfun$apply$mcZ$sp$5.apply$mcV$sp(SparkILoop.scala:974)

at org.apache.spark.repl.SparkILoopInit$class.runThunks(SparkILoopInit.scala:159)

at org.apache.spark.repl.SparkILoop.runThunks(SparkILoop.scala:64)

at org.apache.spark.repl.SparkILoopInit$class.postInitialization(SparkILoopInit.scala:108)

at org.apache.spark.repl.SparkILoop.postInitialization(SparkILoop.scala:64)

at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply$mcZ$sp(SparkILoop.scala:991)

at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)

at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)

at scala.tools.nsc.util.ScalaClassLoader$.savingContextLoader(ScalaClassLoader.scala:135)

at org.apache.spark.repl.SparkILoop.org$apache$spark$repl$SparkILoop$$process(SparkILoop.scala:945)

at org.apache.spark.repl.SparkILoop.process(SparkILoop.scala:1059)

at org.apache.spark.repl.Main$.main(Main.scala:31)

at org.apache.spark.repl.Main.main(Main.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:731)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:181)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:206)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:121)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

<console>:16: error: not found: value sqlContext

import sqlContext.implicits._

^

<console>:16: error: not found: value sqlContext

import sqlContext.sql

Versions:

spark-1.6.1-bin-hadoop2.6.tgz and hadoop-2.7.1
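In the NodeManager log below, the ApplicationMaster container (container_1463267120616_0001_01_000001) is killed for "running beyond virtual memory limits", which is likely what the Spark shell reports as the YARN application having "already exited with state FINISHED". The logged limit (Limit=2254857728) can be reproduced from the 1 GB physical allocation. The sketch below is a minimal check under two assumptions inferred from the numbers, not read from this cluster's configuration: yarn.nodemanager.vmem-pmem-ratio is at its default of 2.1, and YARN holds that ratio as a 32-bit float before multiplying. The helper names are hypothetical.

```python
import struct

# Numbers copied from the NodeManager WARN lines for
# container_1463267120616_0001_01_000001 (the ApplicationMaster).
PMEM_BYTES = 1 * 1024 ** 3        # "1 GB physical memory" allocated to the container
LOGGED_LIMIT = 2254857728         # "Limit=2254857728"
LOGGED_USAGE = 2331357184         # "current usage = 2331357184"

# Assumption: yarn.nodemanager.vmem-pmem-ratio left at its default of 2.1.
VMEM_PMEM_RATIO = 2.1


def to_float32(x: float) -> float:
    """Round a Python float (a double) to the nearest IEEE-754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def vmem_limit(pmem_bytes: int, ratio: float) -> int:
    """Hypothetical helper: physical allocation times the ratio, with the ratio
    held as a 32-bit float and the product truncated to whole bytes."""
    return int(pmem_bytes * to_float32(ratio))


limit = vmem_limit(PMEM_BYTES, VMEM_PMEM_RATIO)
overshoot = LOGGED_USAGE - limit

print(f"computed limit: {limit} bytes (logged: {LOGGED_LIMIT})")
print(f"usage over limit by {overshoot} bytes ({overshoot / 1024 ** 2:.1f} MiB)")
print("container killed" if LOGGED_USAGE > limit else "container within limit")
```

The single-precision step is what makes the numbers line up: in plain double precision, int(1024**3 * 2.1) evaluates to 2254857830, which does not match the logged limit, while the float32 ratio gives 2254857728 exactly. The AM was therefore only about 73 MiB of virtual memory over its cap while using 264.2 MB of its 1 GB physical allocation.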

YARN NodeManager logs:

2016-05-15 00:06:03,188 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000001 transitioned from LOCALIZING to LOCALIZED

2016-05-15 00:06:03,234 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000001 transitioned from LOCALIZED to RUNNING

2016-05-15 00:06:03,243 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: launchContainer: [bash, /tmp/hadoop-hadoopadmin/nm-local-dir/usercache/hadoopadmin/appcache/application_1463267120616_0001/container_1463267120616_0001_01_000001/default_container_executor.sh]

2016-05-15 00:06:05,144 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Starting resource-monitoring for container_1463267120616_0001_01_000001

2016-05-15 00:06:05,271 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Memory usage of ProcessTree 10000 for container-id container_1463267120616_0001_01_000001: 125.3 MB of 1 GB physical memory used; 2.1 GB of 2.1 GB virtual memory used

2016-05-15 00:06:07,045 INFO SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for appattempt_1463267120616_0001_000001 (auth:SIMPLE)

2016-05-15 00:06:07,063 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.ContainerManagerImpl: Start request for container_1463267120616_0001_01_000002 by user hadoopadmin

2016-05-15 00:06:07,064 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Adding container_1463267120616_0001_01_000002 to application application_1463267120616_0001

2016-05-15 00:06:07,065 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000002 transitioned from NEW to LOCALIZING

2016-05-15 00:06:07,065 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event CONTAINER_INIT for appId application_1463267120616_0001

2016-05-15 00:06:07,065 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000002 transitioned from LOCALIZING to LOCALIZED

2016-05-15 00:06:07,064 INFO org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=hadoopadmin IP=10.15.0.11 OPERATION=Start Container Request TARGET=ContainerManageImpl RESULT=SUCCESS APPID=application_1463267120616_0001 CONTAINERID=container_1463267120616_0001_01_000002

2016-05-15 00:06:07,192 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000002 transitioned from LOCALIZED to RUNNING

2016-05-15 00:06:07,213 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: launchContainer: [bash, /tmp/hadoop-hadoopadmin/nm-local-dir/usercache/hadoopadmin/appcache/application_1463267120616_0001/container_1463267120616_0001_01_000002/default_container_executor.sh]

2016-05-15 00:06:07,972 INFO SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for appattempt_1463267120616_0001_000001 (auth:SIMPLE)

2016-05-15 00:06:07,987 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.ContainerManagerImpl: Start request for container_1463267120616_0001_01_000003 by user hadoopadmin

2016-05-15 00:06:07,988 INFO org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=hadoopadmin IP=10.15.0.11 OPERATION=Start Container Request TARGET=ContainerManageImpl RESULT=SUCCESS APPID=application_1463267120616_0001 CONTAINERID=container_1463267120616_0001_01_000003

2016-05-15 00:06:07,988 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Adding container_1463267120616_0001_01_000003 to application application_1463267120616_0001

2016-05-15 00:06:07,988 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000003 transitioned from NEW to LOCALIZING

2016-05-15 00:06:07,989 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event CONTAINER_INIT for appId application_1463267120616_0001

2016-05-15 00:06:07,989 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000003 transitioned from LOCALIZING to LOCALIZED

2016-05-15 00:06:08,099 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000003 transitioned from LOCALIZED to RUNNING

2016-05-15 00:06:08,117 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: launchContainer: [bash, /tmp/hadoop-hadoopadmin/nm-local-dir/usercache/hadoopadmin/appcache/application_1463267120616_0001/container_1463267120616_0001_01_000003/default_container_executor.sh]

2016-05-15 00:06:08,271 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Starting resource-monitoring for container_1463267120616_0001_01_000002

2016-05-15 00:06:08,272 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Starting resource-monitoring for container_1463267120616_0001_01_000003

2016-05-15 00:06:08,368 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Memory usage of ProcessTree 10000 for container-id container_1463267120616_0001_01_000001: 264.2 MB of 1 GB physical memory used; 2.2 GB of 2.1 GB virtual memory used

2016-05-15 00:06:08,368 WARN org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Process tree for container: container_1463267120616_0001_01_000001 has processes older than 1 iteration running over the configured limit. Limit=2254857728, current usage = 2331357184

2016-05-15 00:06:08,374 WARN org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Container [pid=10000,containerID=container_1463267120616_0001_01_000001] is running beyond virtual memory limits. Current usage: 264.2 MB of 1 GB physical memory used; 2.2 GB of 2.1 GB virtual memory used. Killing container.

Dump of the process-tree for container_1463267120616_0001_01_000001 :

|

|

|

2016-05-15 00:06:08,382 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000001 transitioned from RUNNING to KILLING

2016-05-15 00:06:08,382 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch: Cleaning up container container_1463267120616_0001_01_000001

2016-05-15 00:06:08,383 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Removed ProcessTree with root 10000

2016-05-15 00:06:08,457 WARN org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Exit code from container container_1463267120616_0001_01_000001 is : 143

2016-05-15 00:06:08,516 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Memory usage of ProcessTree 10043 for container-id container_1463267120616_0001_01_000002: 83.0 MB of 2 GB physical memory used; 2.6 GB of 4.2 GB virtual memory used

2016-05-15 00:06:08,562 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000001 transitioned from KILLING to CONTAINER_CLEANEDUP_AFTER_KILL

2016-05-15 00:06:08,582 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Memory usage of ProcessTree 10067 for container-id container_1463267120616_0001_01_000003: 43.2 MB of 2 GB physical memory used; 2.6 GB of 4.2 GB virtual memory used

2016-05-15 00:06:08,583 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Deleting absolute path : /tmp/hadoop-hadoopadmin/nm-local-dir/usercache/hadoopadmin/appcache/application_1463267120616_0001/container_1463267120616_0001_01_000001

2016-05-15 00:06:08,585 INFO org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=hadoopadmin OPERATION=Container Finished - Killed TARGET=ContainerImpl RESULT=SUCCESS APPID=application_1463267120616_0001 CONTAINERID=container_1463267120616_0001_01_000001

2016-05-15 00:06:08,593 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000001 transitioned from CONTAINER_CLEANEDUP_AFTER_KILL to DONE

2016-05-15 00:06:08,593 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Removing container_1463267120616_0001_01_000001 from application application_1463267120616_0001

2016-05-15 00:06:08,593 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event CONTAINER_STOP for appId application_1463267120616_0001

2016-05-15 00:06:09,574 INFO SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for appattempt_1463267120616_0001_000001 (auth:SIMPLE)

2016-05-15 00:06:09,601 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.ContainerManagerImpl: Stopping container with container Id: container_1463267120616_0001_01_000001

2016-05-15 00:06:09,601 INFO org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=hadoopadmin IP=10.15.0.11 OPERATION=Stop Container Request TARGET=ContainerManageImpl RESULT=SUCCESS APPID=application_1463267120616_0001 CONTAINERID=container_1463267120616_0001_01_000001

2016-05-15 00:06:09,608 INFO org.apache.hadoop.yarn.server.nodemanager.NodeStatusUpdaterImpl: Removed completed containers from NM context: [container_1463267120616_0001_01_000001]

2016-05-15 00:06:09,609 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000002 transitioned from RUNNING to KILLING

2016-05-15 00:06:09,609 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000003 transitioned from RUNNING to KILLING

2016-05-15 00:06:09,609 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch: Cleaning up container container_1463267120616_0001_01_000002

2016-05-15 00:06:09,661 INFO SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for appattempt_1463267120616_0001_000002 (auth:SIMPLE)

2016-05-15 00:06:09,689 WARN org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Exit code from container container_1463267120616_0001_01_000002 is : 143

2016-05-15 00:06:09,710 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.ContainerManagerImpl: Start request for container_1463267120616_0001_02_000001 by user hadoopadmin

2016-05-15 00:06:09,710 INFO org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=hadoopadmin IP=10.15.0.11 OPERATION=Start Container Request TARGET=ContainerManageImpl RESULT=SUCCESS APPID=application_1463267120616_0001 CONTAINERID=container_1463267120616_0001_02_000001

2016-05-15 00:06:09,734 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch: Cleaning up container container_1463267120616_0001_01_000003

2016-05-15 00:06:09,767 WARN org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Exit code from container container_1463267120616_0001_01_000003 is : 143

2016-05-15 00:06:09,796 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000002 transitioned from KILLING to CONTAINER_CLEANEDUP_AFTER_KILL

2016-05-15 00:06:09,796 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Adding container_1463267120616_0001_02_000001 to application application_1463267120616_0001

2016-05-15 00:06:09,796 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000003 transitioned from KILLING to CONTAINER_CLEANEDUP_AFTER_KILL

2016-05-15 00:06:09,796 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Deleting absolute path : /tmp/hadoop-hadoopadmin/nm-local-dir/usercache/hadoopadmin/appcache/application_1463267120616_0001/container_1463267120616_0001_01_000002

2016-05-15 00:06:09,797 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_02_000001 transitioned from NEW to LOCALIZING

2016-05-15 00:06:09,797 INFO org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=hadoopadmin OPERATION=Container Finished - Killed TARGET=ContainerImpl RESULT=SUCCESS APPID=application_1463267120616_0001 CONTAINERID=container_1463267120616_0001_01_000002

2016-05-15 00:06:09,797 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000002 transitioned from CONTAINER_CLEANEDUP_AFTER_KILL to DONE

2016-05-15 00:06:09,797 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event CONTAINER_INIT for appId application_1463267120616_0001

2016-05-15 00:06:09,797 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Deleting absolute path : /tmp/hadoop-hadoopadmin/nm-local-dir/usercache/hadoopadmin/appcache/application_1463267120616_0001/container_1463267120616_0001_01_000003

2016-05-15 00:06:09,798 INFO org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=hadoopadmin OPERATION=Container Finished - Killed TARGET=ContainerImpl RESULT=SUCCESS APPID=application_1463267120616_0001 CONTAINERID=container_1463267120616_0001_01_000003

2016-05-15 00:06:09,798 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_01_000003 transitioned from CONTAINER_CLEANEDUP_AFTER_KILL to DONE

2016-05-15 00:06:09,798 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Removing container_1463267120616_0001_01_000002 from application application_1463267120616_0001

2016-05-15 00:06:09,798 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event CONTAINER_STOP for appId application_1463267120616_0001

2016-05-15 00:06:09,798 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_02_000001 transitioned from LOCALIZING to LOCALIZED

2016-05-15 00:06:09,798 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Removing container_1463267120616_0001_01_000003 from application application_1463267120616_0001

2016-05-15 00:06:09,798 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event CONTAINER_STOP for appId application_1463267120616_0001

2016-05-15 00:06:09,821 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_02_000001 transitioned from LOCALIZED to RUNNING

2016-05-15 00:06:09,827 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: launchContainer: [bash, /tmp/hadoop-hadoopadmin/nm-local-dir/usercache/hadoopadmin/appcache/application_1463267120616_0001/container_1463267120616_0001_02_000001/default_container_executor.sh]

2016-05-15 00:06:11,583 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Starting resource-monitoring for container_1463267120616_0001_02_000001

2016-05-15 00:06:11,583 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Stopping resource-monitoring for container_1463267120616_0001_01_000001

2016-05-15 00:06:11,583 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Stopping resource-monitoring for container_1463267120616_0001_01_000002

2016-05-15 00:06:11,583 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Stopping resource-monitoring for container_1463267120616_0001_01_000003

2016-05-15 00:06:11,668 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Memory usage of ProcessTree 10121 for container-id container_1463267120616_0001_02_000001: 121.8 MB of 1 GB physical memory used; 2.1 GB of 2.1 GB virtual memory used

2016-05-15 00:06:13,645 INFO org.apache.hadoop.yarn.server.nodemanager.NodeStatusUpdaterImpl: Removed completed containers from NM context: [container_1463267120616_0001_01_000002, container_1463267120616_0001_01_000003]

2016-05-15 00:06:14,567 INFO SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for appattempt_1463267120616_0001_000002 (auth:SIMPLE)

2016-05-15 00:06:14,571 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.ContainerManagerImpl: Start request for container_1463267120616_0001_02_000002 by user hadoopadmin

2016-05-15 00:06:14,572 INFO org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=hadoopadmin IP=10.15.0.11 OPERATION=Start Container Request TARGET=ContainerManageImpl RESULT=SUCCESS APPID=application_1463267120616_0001 CONTAINERID=container_1463267120616_0001_02_000002

2016-05-15 00:06:14,572 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Adding container_1463267120616_0001_02_000002 to application application_1463267120616_0001

2016-05-15 00:06:14,572 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_02_000002 transitioned from NEW to LOCALIZING

2016-05-15 00:06:14,572 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event CONTAINER_INIT for appId application_1463267120616_0001

2016-05-15 00:06:14,573 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_02_000002 transitioned from LOCALIZING to LOCALIZED

2016-05-15 00:06:14,594 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_02_000002 transitioned from LOCALIZED to RUNNING

2016-05-15 00:06:14,597 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: launchContainer: [bash, /tmp/hadoop-hadoopadmin/nm-local-dir/usercache/hadoopadmin/appcache/application_1463267120616_0001/container_1463267120616_0001_02_000002/default_container_executor.sh]

2016-05-15 00:06:14,668 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Starting resource-monitoring for container_1463267120616_0001_02_000002

2016-05-15 00:06:14,700 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Memory usage of ProcessTree 10159 for container-id container_1463267120616_0001_02_000002: 23.0 MB of 2 GB physical memory used; 2.6 GB of 4.2 GB virtual memory used

2016-05-15 00:06:14,722 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Memory usage of ProcessTree 10121 for container-id container_1463267120616_0001_02_000001: 222.1 MB of 1 GB physical memory used; 2.1 GB of 2.1 GB virtual memory used

2016-05-15 00:06:14,722 WARN org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Process tree for container: container_1463267120616_0001_02_000001 has processes older than 1 iteration running over the configured limit. Limit=2254857728, current usage = 2285281280

2016-05-15 00:06:14,723 WARN org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Container [pid=10121,containerID=container_1463267120616_0001_02_000001] is running beyond virtual memory limits. Current usage: 222.1 MB of 1 GB physical memory used; 2.1 GB of 2.1 GB virtual memory used. Killing container.

Dump of the process-tree for container_1463267120616_0001_02_000001 :

2016-05-15 00:06:14,723 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_02_000001 transitioned from RUNNING to KILLING

2016-05-15 00:06:14,723 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch: Cleaning up container container_1463267120616_0001_02_000001

2016-05-15 00:06:14,724 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Removed ProcessTree with root 10121

2016-05-15 00:06:14,762 WARN org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Exit code from container container_1463267120616_0001_02_000001 is : 143

2016-05-15 00:06:14,784 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_02_000001 transitioned from KILLING to CONTAINER_CLEANEDUP_AFTER_KILL

2016-05-15 00:06:14,785 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Deleting absolute path : /tmp/hadoop-hadoopadmin/nm-local-dir/usercache/hadoopadmin/appcache/application_1463267120616_0001/container_1463267120616_0001_02_000001

2016-05-15 00:06:14,791 INFO org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=hadoopadmin OPERATION=Container Finished - Killed TARGET=ContainerImpl RESULT=SUCCESS APPID=application_1463267120616_0001 CONTAINERID=container_1463267120616_0001_02_000001

2016-05-15 00:06:14,791 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_02_000001 transitioned from CONTAINER_CLEANEDUP_AFTER_KILL to DONE

2016-05-15 00:06:14,791 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Removing container_1463267120616_0001_02_000001 from application application_1463267120616_0001

2016-05-15 00:06:14,792 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event CONTAINER_STOP for appId application_1463267120616_0001

2016-05-15 00:06:15,685 INFO SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for appattempt_1463267120616_0001_000002 (auth:SIMPLE)

2016-05-15 00:06:15,716 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.ContainerManagerImpl: Stopping container with container Id: container_1463267120616_0001_02_000001

2016-05-15 00:06:15,717 INFO org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=hadoopadmin IP=10.15.0.11 OPERATION=Stop Container Request TARGET=ContainerManageImpl RESULT=SUCCESS APPID=application_1463267120616_0001 CONTAINERID=container_1463267120616_0001_02_000001

2016-05-15 00:06:15,720 INFO org.apache.hadoop.yarn.server.nodemanager.NodeStatusUpdaterImpl: Removed completed containers from NM context: [container_1463267120616_0001_02_000001]

2016-05-15 00:06:15,724 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Application application_1463267120616_0001 transitioned from RUNNING to FINISHING_CONTAINERS_WAIT

2016-05-15 00:06:15,724 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_02_000002 transitioned from RUNNING to KILLING

2016-05-15 00:06:15,724 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch: Cleaning up container container_1463267120616_0001_02_000002

2016-05-15 00:06:15,759 WARN org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Exit code from container container_1463267120616_0001_02_000002 is : 143

2016-05-15 00:06:15,776 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_02_000002 transitioned from KILLING to CONTAINER_CLEANEDUP_AFTER_KILL

2016-05-15 00:06:15,777 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Deleting absolute path : /tmp/hadoop-hadoopadmin/nm-local-dir/usercache/hadoopadmin/appcache/application_1463267120616_0001/container_1463267120616_0001_02_000002

2016-05-15 00:06:15,778 INFO org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=hadoopadmin OPERATION=Container Finished - Killed TARGET=ContainerImpl RESULT=SUCCESS APPID=application_1463267120616_0001 CONTAINERID=container_1463267120616_0001_02_000002

2016-05-15 00:06:15,778 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1463267120616_0001_02_000002 transitioned from CONTAINER_CLEANEDUP_AFTER_KILL to DONE

2016-05-15 00:06:15,778 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Removing container_1463267120616_0001_02_000002 from application application_1463267120616_0001

2016-05-15 00:06:15,778 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Application application_1463267120616_0001 transitioned from FINISHING_CONTAINERS_WAIT to APPLICATION_RESOURCES_CLEANINGUP

2016-05-15 00:06:15,778 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event CONTAINER_STOP for appId application_1463267120616_0001

2016-05-15 00:06:15,779 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Deleting absolute path : /tmp/hadoop-hadoopadmin/nm-local-dir/usercache/hadoopadmin/appcache/application_1463267120616_0001

2016-05-15 00:06:15,779 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event APPLICATION_STOP for appId application_1463267120616_0001

2016-05-15 00:06:15,779 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Application application_1463267120616_0001 transitioned from APPLICATION_RESOURCES_CLEANINGUP to FINISHED

2016-05-15 00:06:15,779 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.loghandler.NonAggregatingLogHandler: Scheduling Log Deletion for application: application_1463267120616_0001, with delay of 10800 seconds

2016-05-15 00:06:16,726 WARN org.apache.hadoop.yarn.server.nodemanager.containermanager.ContainerManagerImpl: Event EventType: KILL_CONTAINER sent to absent container container_1463267120616_0001_02_000002

2016-05-15 00:06:17,724 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Stopping resource-monitoring for container_1463267120616_0001_02_000001

2016-05-15 00:06:17,725 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Stopping resource-monitoring for container_1463267120616_0001_02_000002

2016-05-15 03:06:15,785 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Deleting path : /usr/local/hadoop-2.7.1/logs/userlogs/application_1463267120616_0001

2016-05-16 00:05:20,714 INFO org.apache.hadoop.yarn.server.nodemanager.security.NMContainerTokenSecretManager: Rolling master-key for container-tokens, got key with id -22032173

2016-05-16 00:05:20,714 INFO org.apache.hadoop.yarn.server.nodemanager.security.NMTokenSecretMan