In this tutorial, we’ll see how to solve a common Pandas read_csv() error – Error Tokenizing Data. The full error is something like:

ParserError: Error tokenizing data. C error: Expected 2 fields in line 4, saw 4

The Pandas parser error when reading csv is very common but difficult to investigate and solve for big CSV files.

There could be many different reasons for this error:

- «wrong» data in the file

- different number of columns

- mixed data

- several data files stored as a single file

- nested separators

- wrong parsing parameters for read_csv()

- different separator

- line terminators

- wrong parsing engine

Let’s cover the steps to diagnose and solve this error.

Step 1: Analyze and Skip Bad Lines for Error Tokenizing Data

Suppose we have a CSV file like:

col_1,col_2,col_3

11,12,13

21,22,23

31,32,33,44

which we are going to read with the read_csv() method:

import pandas as pd

pd.read_csv('test.csv')

We will get an error:

ParserError: Error tokenizing data. C error: Expected 3 fields in line 4, saw 4

We can easily see where the problem is. But what should be the solution in this case? Remove the 44 or add a new column? It depends on the context of this data.

If we don’t need the bad data we can pass the parameter on_bad_lines='skip' in order to skip bad lines:

pd.read_csv('test.csv', on_bad_lines='skip')

For Pandas versions older than 1.3 you may need to use error_bad_lines=False instead. That parameter was deprecated in Pandas 1.3 and removed in Pandas 2.0.

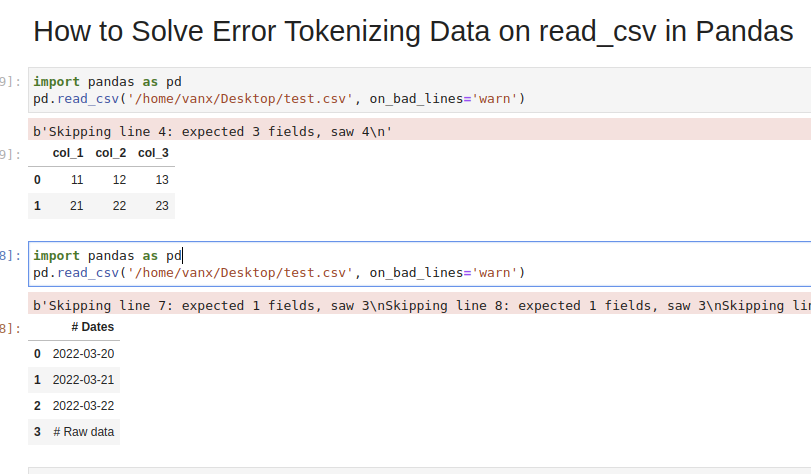

Using 'warn' instead of 'skip':

pd.read_csv('test.csv', on_bad_lines='warn')

will still skip the bad line but produce a warning like:

b'Skipping line 4: expected 3 fields, saw 4\n'

To find more about how to drop bad lines with read_csv() read the linked article.
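Before choosing between skipping and repairing, it can help to list which lines have an unexpected number of fields. The following is a minimal sketch using only the standard csv module. The inline sample text and the helper name find_bad_lines are assumptions made for illustration and are not part of Pandas.

```python
import csv
import io

# Inline copy of the sample file from this step (assumed content).
raw = "col_1,col_2,col_3\n11,12,13\n21,22,23\n31,32,33,44\n"


def find_bad_lines(text, sep=","):
    """Return (line_number, field_count) for rows whose width differs from the header."""
    reader = csv.reader(io.StringIO(text), delimiter=sep)
    expected = len(next(reader))  # the header defines the expected width
    return [(reader.line_num, len(row)) for row in reader if len(row) != expected]


bad = find_bad_lines(raw)
print(bad)  # [(4, 4)]
```

For a real file, the same helper could be fed the file contents, or adapted to iterate over an open file object.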

Step 2: Use correct separator to solve Pandas tokenizing error

In some cases the reason could be the separator used to read the CSV file. In this case we can open the file and check its content.

If you don’t know how to read huge files in Windows or Linux — then check the article.

Depending on your OS and CSV file you may need to use different parameters like:

- sep

- lineterminator

- engine

More information on the parameters can be found in Pandas doc for read_csv()

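If the CSV file has tab as a separator and we want to set the line ending explicitly we can use the call below. Note that lineterminator accepts only a single character and is only supported by the C engine, so the Windows ending '\r\n' cannot be passed directly:

import pandas as pd

pd.read_csv('test.csv', sep='\t', lineterminator='\n')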

Note that delimiter is an alias for sep.
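To see the effect of the separator without touching a file on disk, here is a small sketch that reads the same tab-separated text twice. The inline data and variable names are assumptions made for the example.

```python
import io

import pandas as pd

# Assumed sample: a tab-separated table held in memory.
tsv = "col_1\tcol_2\tcol_3\n11\t12\t13\n21\t22\t23\n"

# With the default sep=',' every line ends up in a single column.
df_wrong = pd.read_csv(io.StringIO(tsv))

# With sep='\t' the three columns are recovered.
df_right = pd.read_csv(io.StringIO(tsv), sep="\t")

print(df_wrong.shape)  # (2, 1)
print(df_right.shape)  # (2, 3)
```

A single wide column after reading is often a hint that the separator is wrong, even when no error is raised.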

Step 3: Use different engine for read_csv()

The default C engine cannot automatically detect the separator, but the python parsing engine can.

There are 3 engines in the latest version of Pandas:

- c

- python

- pyarrow

Python engine is the slowest one but the most feature-complete.

Using python as engine together with sep=None lets Pandas detect the delimiter and can solve the Error Tokenizing Data:

pd.read_csv('test.csv', sep=None, engine='python')
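As a quick illustration of delimiter detection, the sketch below reads semicolon-separated text with sep=None and the python engine. The inline data is an assumption. Detection is heuristic, so it is worth checking the resulting columns on real files.

```python
import io

import pandas as pd

# Assumed sample: semicolon-separated data held in memory.
raw = "col_1;col_2;col_3\n11;12;13\n21;22;23\n"

# sep=None asks the python engine to sniff the delimiter.
df = pd.read_csv(io.StringIO(raw), sep=None, engine="python")

print(list(df.columns))
```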

Step 4: Check the headers

Sometimes the Error Tokenizing Data problem may be related to the headers.

For example multiline headers or additional data can produce the error. In this case we can skip the first or last few lines by:

pd.read_csv('test.csv', skiprows=1, engine='python')

or skipping the last lines:

pd.read_csv('test.csv', skipfooter=1, engine='python')

Note that the skipfooter parameter requires python as the read_csv() engine.

In some cases header=None can help in order to solve the error:

pd.read_csv('test.csv', header=None)
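The header and footer options can be combined. The following sketch assumes a file with one title line above the header and one summary line at the end. The sample text is invented for the example.

```python
import io

import pandas as pd

# Assumed sample: a title line, the real header, two data rows, and a footer line.
raw = (
    "Report exported 2022-03-22\n"
    "col_1,col_2\n"
    "11,12\n"
    "21,22\n"
    "end of report\n"
)

# Skip the title line and the footer line; skipfooter needs the python engine.
df = pd.read_csv(io.StringIO(raw), skiprows=1, skipfooter=1, engine="python")

print(df.shape)  # (2, 2)
```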

Step 5: Autodetect skiprows for read_csv()

Suppose we have a CSV file like:

Dates

2022-03-20

2022-03-21

2022-03-22

Raw data

col_1,col_2,col_3

11,12,13

21,22,23

31,32,33

We are interested in reading the data stored after the line «Raw data». If the position of this line changes between files, we need to autodetect it.

To search for a line containing some match in Pandas we can read the file with a separator which is not present in the file, such as «@@», so that every line ends up in a single column.

In case of multiple occurrences we can take the biggest index. To autodetect the skiprows parameter in Pandas read_csv() use:

df_temp = pd.read_csv('test.csv', sep='@@', engine='python', names=['col'])

ix_last_start = df_temp[df_temp['col'].str.contains('Raw data')].index.max()

result is:

4

Finding the starting line can also be done by visually inspecting the file in a text editor.

Then we can simply read the file, skipping everything up to and including the marker line:

df_analytics = pd.read_csv('test.csv', skiprows=ix_last_start + 1)
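The same idea can be sketched without the temporary DataFrame by scanning the raw lines for the marker. The helper name find_marker_line and the inline text are assumptions made for illustration.

```python
import io

import pandas as pd

# Assumed in-memory copy of the sample file from this step.
text = (
    "Dates\n2022-03-20\n2022-03-21\n2022-03-22\n"
    "Raw data\n"
    "col_1,col_2,col_3\n11,12,13\n21,22,23\n31,32,33\n"
)


def find_marker_line(lines, marker):
    """Return the zero-based index of the last line containing marker, or None."""
    last = None
    for i, line in enumerate(lines):
        if marker in line:
            last = i
    return last


ix = find_marker_line(text.splitlines(), "Raw data")

# Skip everything up to and including the marker line.
df = pd.read_csv(io.StringIO(text), skiprows=ix + 1)

print(ix)        # 4
print(df.shape)  # (3, 3)
```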

Conclusion

In this post we saw how to investigate the error:

ParserError: Error tokenizing data. C error: Expected 3 fields in line 4, saw 4

We covered different reasons and solutions in order to read any CSV file with Pandas.

Some solutions will warn and skip problematic lines. Others will try to resolve the problem automatically.

While reading a CSV file, you may get the “Pandas Error Tokenizing Data”. This mostly occurs due to incorrect data in the CSV file.

You can solve the pandas error tokenizing data error by ignoring the offending lines using on_bad_lines='skip' (error_bad_lines=False in pandas versions before 1.3).

In this tutorial, you’ll learn the cause and how to solve the error tokenizing data error.

If You’re in a Hurry

You can use the below code snippet to solve the tokenizing error. You can solve the error by ignoring the offending lines and suppressing errors.

Snippet

import pandas as pd

# on_bad_lines requires pandas 1.3+; older versions used error_bad_lines=False

df = pd.read_csv('sample.csv', on_bad_lines='skip', engine='python')

df

If You Want to Understand Details, Read on…

In this tutorial, you’ll learn the causes for the exception “Error Tokenizing Data” and how it can be solved.

Cause of the Problem

- CSV file has two header lines

- Different separator is used

- \r is a carriage return character; when it is present in column names, it makes subsequent column names be read as the next line

- Lines of the CSV file have an inconsistent number of columns

In the case of invalid rows which have an inconsistent number of columns, you’ll see an error such as Expected 1 fields in line 12, saw m. This means the parser expected only 1 field per row but saw m values on line 12 after tokenizing it. Hence, it doesn’t know how the tokenized values need to be handled. You can solve the errors by using one of the options below.
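The numbers in the message can also be read programmatically. Here is a minimal sketch that triggers the error on assumed inline data and extracts the expected field count, the line number, and the field count seen. The regular expression matches the message format quoted above and may need adjusting if the wording differs in your pandas version.

```python
import io
import re

import pandas as pd

# Assumed sample: the last line has one field too many.
raw = "col_1,col_2,col_3\n11,12,13\n21,22,23\n31,32,33,44\n"

info = None
try:
    pd.read_csv(io.StringIO(raw))
except pd.errors.ParserError as exc:
    # Pull (expected, line, seen) out of the error text.
    match = re.search(r"Expected (\d+) fields in line (\d+), saw (\d+)", str(exc))
    if match:
        info = tuple(int(group) for group in match.groups())

print(info)  # (3, 4, 4)
```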

Finding the Problematic Line (Optional)

If you want to identify the line which is creating the problem while reading, you can use the below code snippet.

It uses the CSV reader, hence it is similar to the read_csv() method.

Snippet

import csv

# Open in text mode; newline="" lets the csv module handle line endings itself

with open("sample.csv", newline="") as file_obj:

    reader = csv.reader(file_obj)

    line_no = 1

    try:

        for row in reader:

            line_no += 1

    except Exception as e:

        print("Error in the line number %d: %s %s" % (line_no, type(e), e))

Using Err_Bad_Lines Parameter

When any of the rows has more fields than expected, the tokenizing error will occur.

You can skip such invalid rows by using the error_bad_lines parameter within the read_csv() method. From pandas 1.3 onwards it is replaced by on_bad_lines, and error_bad_lines was removed in pandas 2.0.

This parameter controls what needs to be done when a bad line occurs in the file being read.

If error_bad_lines is set to,

- False – Errors will be suppressed for invalid lines

- True – Errors will be thrown for invalid lines

The on_bad_lines equivalents are 'skip' or 'warn' (suppress the error) and 'error' (throw it).

Use the below snippet to read the CSV file and ignore the invalid lines. Only a warning will be shown with the line number when an invalid line is found.

Snippet

import pandas as pd

# pandas < 1.3: pd.read_csv('sample.csv', error_bad_lines=False)

df = pd.read_csv('sample.csv', on_bad_lines='warn')

df

In this case, the offending lines will be skipped and only the valid lines will be read from CSV and a dataframe will be created.
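Skipping is not the only option. In pandas 1.4 and later, on_bad_lines can also be a function when the python engine is used, which allows a bad line to be repaired instead of dropped. The sketch below is only an illustration: the repair rule (keep the first three fields) and the helper name keep_expected_fields are assumptions, and the right rule depends on your data.

```python
import io

import pandas as pd

# Assumed sample: the last line has one field too many.
raw = "col_1,col_2,col_3\n11,12,13\n21,22,23\n31,32,33,44\n"


def keep_expected_fields(fields):
    """Hypothetical repair rule: keep only the first three fields of a bad line."""
    return fields[:3]


# A callable on_bad_lines needs pandas 1.4+ and engine="python".
df = pd.read_csv(io.StringIO(raw), on_bad_lines=keep_expected_fields, engine="python")

print(df)
```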

Using Python Engine

There are two main engines supported in reading a CSV file: the C engine and the Python engine. Newer pandas versions also offer a pyarrow engine.

C Engine

- Faster

- Uses C language to parse the CSV file

- Supports float_precision

- Cannot automatically detect the separator

- Doesn’t support skipping footer

Python Engine

- Slower when compared to C engine but it’s feature complete

- Uses Python language to parse the CSV file

- Doesn’t support float_precision. Not required with Python

- Can automatically detect the separator

- Supports skipping footer

Using the python engine can solve the problems faced while parsing the files.

For example, when you try to parse large CSV files, you may face the Error tokenizing data. c error out of memory. Using the python engine may avoid this error in some cases, although it is slower than the C engine.

Use the below snippet to use the Python engine for reading the CSV file.

Snippet

import pandas as pd

# on_bad_lines requires pandas 1.3+; older versions used error_bad_lines=False

df = pd.read_csv('sample.csv', engine='python', on_bad_lines='skip')

df

This is how you can use the python engine to parse the CSV file.

Optionally, this could also solve the error Error tokenizing data. c error out of memory when parsing the big CSV files.
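For memory problems with big files, another commonly used option is to read the file in pieces with the chunksize parameter of read_csv(), which returns an iterator of DataFrames instead of one large DataFrame. The sketch below uses a small assumed in-memory table so it can run anywhere. The chunk size of 4 is arbitrary.

```python
import io

import pandas as pd

# Assumed sample: ten data rows generated in memory.
raw = "col_1,col_2\n" + "".join(f"{i},{i * 2}\n" for i in range(10))

total_rows = 0
# Each chunk is a regular DataFrame with at most 4 rows.
for chunk in pd.read_csv(io.StringIO(raw), chunksize=4):
    total_rows += len(chunk)

print(total_rows)  # 10
```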

Using Proper Separator

CSV files can have different separators such as tab separator or any other special character such as ;. In this case, an error will be thrown when reading the CSV file, if the default C engine is used.

You can parse the file successfully by specifying the separator explicitly using the sep parameter.

As an alternative, you can also use the python engine together with sep=None, which will automatically detect the separator and parse the file accordingly.

Snippet

import pandas as pd

df = pd.read_csv('sample.csv', sep='\t')

df

This is how you can specify the separator explicitly which can solve the tokenizing errors while reading the CSV files.

Using Line Terminator

A CSV file can contain the carriage return \r inside its lines in addition to the line separator \n.

In this case, you’ll face CParserError: Error tokenizing data. C error: Buffer overflow caught - possible malformed input file, because by default the parser also treats \r as the end of a line.

You can solve this error by specifying the line terminator explicitly using the lineterminator parameter.

Snippet

df = pd.read_csv('sample.csv',

    lineterminator='\n')

This is how you can use the line terminator to parse the files that contain stray \r characters.
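A small sketch of this situation, using assumed inline data in which one field contains a stray \r. With lineterminator='\n' only \n ends a row, so the \r stays inside the field.

```python
import io

import pandas as pd

# Assumed sample: the value in the first data row contains a stray carriage return.
raw = "a,b\n1,x\ry\n2,z\n"

# Only "\n" is treated as the end of a row, so "\r" is kept as data.
df_fixed = pd.read_csv(io.StringIO(raw), lineterminator="\n")

print(len(df_fixed))              # 2
print(repr(df_fixed.loc[0, "b"]))  # 'x\ry'
```

If the stray characters are not wanted in the data, they can be removed afterwards, for example with str.replace on the affected column.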

Using header=None

CSV files can have incomplete headers which can cause tokenizing errors while parsing the file.

You can use header=None to stop pandas from treating the first line as headers while reading the CSV files.

This will parse the CSV file without headers and create a data frame. You can also supply your own column names by passing the names parameter to the read_csv() method.

Snippet

import pandas as pd

# on_bad_lines requires pandas 1.3+; older versions used error_bad_lines=False

df = pd.read_csv('sample.csv', header=None, on_bad_lines='skip')

df

This is how you can ignore the headers which are incomplete and cause problems while reading the file.

Using Skiprows

CSV files can have headers in more than one row. This can happen when data is grouped into different sections and each group is having a name and has columns in each section.

In this case, you can ignore such rows by using the skiprows parameter. You can pass the number of rows to be skipped and the data will be read after skipping those number of rows.

Use the below snippet to skip the first two rows while reading the CSV file.

Snippet

import pandas as pd

# on_bad_lines requires pandas 1.3+; older versions used error_bad_lines=False

df = pd.read_csv('sample.csv', header=None, skiprows=2, on_bad_lines='skip')

df

This is how you can skip or ignore the erroneous headers while reading the CSV file.

Reading As Lines and Separating

In a CSV file, you may have a different number of columns in each row. This can occur when some of the columns in the row are considered optional. You may need to parse such files without any problems during tokenizing.

In this case, you can read the file as lines and separate it later using the delimiter and create a dataframe out of it. This is helpful when you have varying lengths of rows.

In the below example,

- the file is read as lines with plain Python and loaded into a dataframe with a single column. Older tutorials pass sep='\n' to read_csv() for this, but recent pandas versions reject a line terminator as the separator.

- You can split the lines using the separator or regex and create different columns out of it.

- expand=True expands the split string into multiple columns.

Use the below snippet to read the file as lines and separate it using the separator.

Snippet

import pandas as pd

# Read the raw lines; each line becomes one row in column 0

with open('sample.csv') as f:

    df = pd.DataFrame(f.read().splitlines())

# Split each line on ' | ' (whitespace, pipe, whitespace)

df = df[0].str.split(r'\s\|\s', expand=True)

df

This is how you can read the file as lines and later separate it to avoid problems while parsing the lines with an inconsistent number of columns.
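To show what the split produces for rows of different lengths, here is a self-contained sketch with assumed inline lines instead of a file. Rows with fewer fields are padded with missing values.

```python
import pandas as pd

# Assumed sample: pipe-separated lines with 3, 2 and 4 fields.
lines = ["a | b | c", "d | e", "f | g | h | i"]

# One column of raw lines, then split on ' | ' into as many columns as needed.
df = pd.DataFrame(lines)[0].str.split(r"\s\|\s", expand=True)

print(df.shape)  # (3, 4)
```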

Conclusion

To summarize, you’ve learned the causes of the Python Pandas Error tokenizing data and the different methods to solve it in different scenarios.

Different Errors while tokenizing data are,

- Error tokenizing data. C error: Buffer overflow caught - possible malformed input file

- ParserError: Expected n fields in line x, saw m

- Error tokenizing data. c error out of memory

You’ve also learned about the different engines available in the read_csv() method to parse the CSV file and their advantages and disadvantages.

You’ve also learned when to use the different methods appropriately.


If engine='python' is used, curiously, it loads the DataFrame without any hiccups though. I used the following snippet to extract the first 3 lines in the file and 3 of the offending lines (got the line numbers from the error message).

from csv import reader

N = int(input('What line do you need? > '))

with open(filename) as f:

    print(next((x for i, x in enumerate(reader(f)) if i == N), None))

Lines 1-3:

['08', '8', '7', '5', '0', '12', '54', '0', '11', '1', '58', '9', '68', '48.2', '0.756', '11.6', '17.5', '13.3', '4.3', '11.3', '32.2', '6.4', '4.1', '5.6', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '', '', '', '', '', '', '', '', '', '', '', '32']

['08', '8', '7', '5', '0', '15', '80', '0', '11', '1', '62', '9', '69', '77.8', '3.267', '11.2', '17.7', '14.8', '4.2', '15.2', '29.1', '18.4', '10.0', '18.1', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '', '', '', '', '', '', '', '', '', '', '', '32']

['08', '8', '7', '5', '0', '21', '52', '0', '11', '1', '61', '11', '51', '29.4', '0.076', '4.1', '13.8', '8.3', '21.5', '5.3', '3.1', '5.7', '3.0', '6.1', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '', '', '', '', '', '', '', '', '', '', '', '32']

Offending lines:

['09', '9', '15', '22', '46', '9', '51', '0', '11', '1', '57', '9', '70', '36.3', '0.242', '11.8', '16.2', '6.4', '4.1', '5.8', '31.3', '5.5', '3.9', '6.8', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '', '', '', '', '', '', '', '', '', '', '', '32']

['09', '9', '15', '22', '46', '25', '31', '0', '11', '1', '70', '9', '73', '67.8', '2.196', '10.4', '17.0', '13.4', '4.4', '12.2', '31.8', '15.6', '4.2', '16.2', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '', '', '', '', '', '', '', '', '', '', '', '32']

['09', '9', '15', '22', '46', '28', '41', '0', '11', '1', '70', '5', '22', '7.4', '0.003', '4.0', '13.1', '3.4', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '0.0', '', '', '', '', '', '', '', '', '', '', '', '32']

As you suggested, I will try to read the file, then modify the DataFrame (rename columns, delete unnecessary ones etc.) or simply use the python engine (long processing time).

Common reasons for ParserError: Error tokenizing data when initiating a Pandas DataFrame include:

- Using the wrong delimiter

- Number of fields in certain rows do not match with header

To resolve the error we can try the following:

- Specifying the delimiter through the sep parameter in read_csv(~)

- Fixing the original source file

- Skipping bad rows

Examples

Specifying sep

By default the read_csv(~) method assumes sep=",". Therefore when reading files that use a different delimiter, make sure to explicitly specify the delimiter to use.

Consider the following slash-delimited file called test.txt:

col1/col2/col3

1/A/4

2/B/5

3/C,D,E/6

To initialize a DataFrame using default sep=",":

import pandas as pd

df = pd.read_csv('test.txt')

ParserError: Error tokenizing data. C error: Expected 1 fields in line 4, saw 3

An error is raised as the first line in the file does not contain any commas, so read_csv(~) expects all lines in the file to only contain 1 field. However, line 4 has 3 fields as it contains two commas, which results in the ParserError.

To initialize the DataFrame by correctly specifying slash (/) as the delimiter:

df = pd.read_csv('test.txt', sep='/')

df

col1 col2 col3

0 1 A 4

1 2 B 5

2 3 C,D,E 6

We can now see the DataFrame is initialized as expected with each line containing the 3 fields which were separated by slashes (/) in the original file.

Fixing original source file

Consider the following comma-separated file called test.csv:

col1,col2,col3

1,A,4

2,B,5,

3,C,6

To initialize a DataFrame using the above file:

import pandas as pd

df = pd.read_csv('test.csv')

ParserError: Error tokenizing data. C error: Expected 3 fields in line 3, saw 4

Here there is an error on the 3rd line as 4 fields are observed instead of 3, caused by the additional comma at the end of the line.

To resolve this error, we can correct the original file by removing the extra comma at the end of line 3:

col1,col2,col3

1,A,4

2,B,5

3,C,6

To now initialize the DataFrame again using the corrected file:

df = pd.read_csv('test.csv')

df

col1 col2 col3

0 1 A 4

1 2 B 5

2 3 C 6

Skipping bad rows

Consider the following comma-separated file called test.csv:

col1,col2,col3

1,A,4

2,B,5,

3,C,6

To initialize a DataFrame using the above file:

import pandas as pd

df = pd.read_csv('test.csv')

ParserError: Error tokenizing data. C error: Expected 3 fields in line 3, saw 4

Here there is an error on the 3rd line as 4 fields are observed instead of 3, caused by the additional comma at the end of the line.

To skip bad rows pass on_bad_lines='skip' to read_csv(~):

df = pd.read_csv('test.csv', on_bad_lines='skip')

df

col1 col2 col3

0 1 A 4

1 3 C 6

Notice how the problematic third line in the original file has been skipped in the resulting DataFrame.

WARNING

This should be your last resort as valuable information could be contained within the problematic lines. Skipping these rows means you lose this information. As much as possible try to identify the root cause of the error and fix the underlying problem.
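One way to avoid losing the skipped information is to record the bad lines while skipping them, so they can be reviewed later. The sketch below assumes pandas 1.4 or later, where on_bad_lines accepts a function with the python engine. The helper name remember_bad_line and the inline data are assumptions made for illustration.

```python
import io

import pandas as pd

# Assumed in-memory copy of the sample file with the trailing comma on line 3.
raw = "col1,col2,col3\n1,A,4\n2,B,5,\n3,C,6\n"

rejected = []


def remember_bad_line(fields):
    """Store the fields of a bad line and return None so the line is skipped."""
    rejected.append(fields)
    return None


# A callable on_bad_lines needs pandas 1.4+ and engine="python".
df = pd.read_csv(io.StringIO(raw), on_bad_lines=remember_bad_line, engine="python")

print(len(df))        # 2
print(len(rejected))  # 1
```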


When working with data for any purpose, it is important to clean it first, which means filling the null values and removing invalid entries so that they don’t affect the results and the program runs smoothly.

Furthermore, the ParserError: Error tokenizing data. C error can be caused by wrong data in the files, like mixed data, a different number of columns, or several data files stored as a single file.

You can also encounter this error if you read a CSV file with read_csv() but the file uses a different separator or line terminator from the ones you provide.

What Is the ParserError: Error tokenizing data. C error in Python

As discussed, the ParserError: Error tokenizing data. C error occurs when pandas parses CSV data but encounters a row it cannot split into the expected number of fields, for example a row with extra values or extra separators.

Let’s say we have this data in the data.csv file, and we are using it to read with the help of pandas, although it has an error.

Name,Roll,Course,Marks,CGPA

Ali,1,SE,87,3

John,2,CS,78,

Maria,3,DS,13,,

Code example:

import pandas as pd

pd.read_csv('data.csv')

Output:

ParserError: Error tokenizing data. C error: Expected 5 fields in line 4, saw 6

As you can see, the above code has thrown a ParserError: Error tokenizing data. C error while reading data from the data.csv file, which says that the parser was expecting 5 fields in line 4 but got 6 instead.

The error itself is self-explanatory; it indicates the exact point of the error and shows the reason for the error, too, so we can fix it.

How to Fix the ParserError: Error tokenizing data. C error in Python

So far, we have understood the ParserError: Error tokenizing data. C error in Python; now let’s see how we can fix it.

It is always recommended to clean the data before analyzing it because dirty data may affect the results or cause your program to fail.

Data cleansing helps in removing invalid data inputs, null values, and invalid entries; basically, it is a pre-processing stage of the data analysis.

In Python, we have different functions and parameters that help clean the data and avoid errors.

Skip Rows to Fix the ParserError: Error tokenizing data. C error

This is one of the most common techniques: skip the row that causes the error. As you can see from the above data, the last line was causing the error.

With the argument on_bad_lines='skip', pandas ignores the bad row and stores the remaining rows in the data frame df.

import pandas as pd

df = pd.read_csv('data.csv', on_bad_lines='skip')

df

Output:

Name Roll Course Marks CGPA

0 Ali 1 SE 87 3.0

1 John 2 CS 78 NaN

The above code skips all the lines that cause errors and reads the others; as you can see in the output, the last line is skipped because it was causing the error.

But we are getting the NaN values that need to be fixed; otherwise, it will affect the results of our statistical analysis.

Use the Correct Separator to Fix the ParserError: Error tokenizing data. C error

Using an invalid separator can also cause the ParserError, so it is important to use the correct and suitable separator depending on the data you provide.

Sometimes we use tab to separate the CSV data or space, so it is important to specify that separator in your program too.

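import pandas as pd

pd.read_csv('data.csv', sep=',', on_bad_lines='skip', lineterminator='\n')

Output:

Name Roll Course Marks CGPA\r

0 Ali 1 SE 87 3\r

1 John 2 CS 78 \r

The separator is , which is why we have passed sep=',' and lineterminator='\n' because our lines end with \n. The trailing \r in the output shows that the lines of this file actually end with \r\n, so with lineterminator='\n' the \r is kept as part of the last column.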
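The effect of the line terminator on \r\n files can be reproduced with a few lines. This is a sketch on assumed inline data: the default settings handle \r\n, while lineterminator='\n' leaves the \r attached to the last column, where it can be stripped from the column names if needed.

```python
import io

import pandas as pd

# Assumed sample: a small file with Windows-style \r\n line endings.
raw = "Name,CGPA\r\nAli,3\r\nJohn,\r\n"

# Default settings: \r\n is recognised as a line ending.
df_default = pd.read_csv(io.StringIO(raw))

# Forcing "\n" only: the "\r" stays at the end of the last column.
df_lf = pd.read_csv(io.StringIO(raw), lineterminator="\n")

print(list(df_default.columns))  # ['Name', 'CGPA']
print(list(df_lf.columns))       # ['Name', 'CGPA\r']

# Clean the column names after the fact.
cleaned = list(df_lf.columns.str.rstrip("\r"))
```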

Use dropna() to Fix the ParserError: Error tokenizing data. C error

The dropna function is used to drop all the rows that contain any Null or NaN values.

import pandas as pd

df = pd.read_csv('data.csv', on_bad_lines='skip')

print(" **** Before dropna ****")

print(df)

print("\n **** After dropna ****")

print(df.dropna())

Output:

**** Before dropna ****

Name Roll Course Marks CGPA

0 Ali 1 SE 87 3.0

1 John 2 CS 78 NaN

**** After dropna ****

Name Roll Course Marks CGPA

0 Ali 1 SE 87 3.0

Since we have only two rows, and one row has all the attributes while the second has a NaN value, the dropna() function has dropped the row with the NaN value and displayed just a single row.

Use the fillna() Function to Fill Up the NaN Values

When you get NaN values in your data, you can use the fillna() function to replace them with another value; the example below uses 0.

Code Example:

import pandas as pd

print(" **** Before fillna ****")

df = pd.read_csv('data.csv', on_bad_lines='skip')

print(df, "\n\n")

print(" **** After fillna ****")

print(df.fillna(0)) # using 0 inplace of NaN

Output:

**** Before fillna ****

Name Roll Course Marks CGPA

0 Ali 1 SE 87 3.0

1 John 2 CS 78 NaN

**** After fillna ****

Name Roll Course Marks CGPA

0 Ali 1 SE 87 3.0

1 John 2 CS 78 0.0

The fillna() has replaced the NaN with 0 so we can analyze the data properly.
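Both functions can also be limited to specific columns, which is often safer than changing the whole table. The sketch below uses an assumed in-memory copy of the two valid rows from data.csv. Whether 0 is a sensible replacement for a missing CGPA is a judgment call that depends on the analysis.

```python
import io

import pandas as pd

# Assumed sample: the two valid rows of data.csv, one with a missing CGPA.
raw = "Name,Roll,Course,Marks,CGPA\nAli,1,SE,87,3\nJohn,2,CS,78,\n"
df = pd.read_csv(io.StringIO(raw))

# Drop rows only when CGPA is missing.
dropped = df.dropna(subset=["CGPA"])

# Fill missing values in CGPA only, leaving other columns untouched.
filled = df.fillna({"CGPA": 0})

print(len(dropped))           # 1
print(filled.loc[1, "CGPA"])  # 0.0
```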