Updated on 07/13/2016. See the update at the end of this post: I may have found the ultimate solution, even though I am still not sure what causes the issue.

Recently I had trouble vMotioning some VMs between the hosts of a vSphere 6.x cluster. Long story short, here are the symptoms:

- I consistently got the error “Failed waiting for data. Error 195887167. Connection closed by remote host, possibly due to timeout” when vMotioning some VMs (host only, no storage vMotion), particularly the vCenter Server Appliance (vCSA) VM. I had two vCSA VMs, and both had the same issue. But vCSA was not the only VM that got the error.

- I could successfully vMotion other VMs between the same hosts, so the vMotion network configuration should be okay.

- In other words, some VMs are okay and some are not. The size (CPU, RAM, storage) of the VMs does not seem to be the problem: the successfully vMotioned VMs can have more or less CPU, RAM, and storage than the failed VMs.

- The failed VMs have more disks than the other VMs. The vCSA deployment creates 11 disks by default.

- When the VMs were powered off, the migration succeeded.

- Restarted the hosts and restarted the VMs. No difference.

- Verified there were no IP address conflicts.

- Tried both a single VMkernel adapter for management and vMotion and a dedicated VMkernel adapter for vMotion. No difference.

- Tested vmkping successfully between the hosts.

- In the vmkernel.log of the hosts, the destination host logs errors like “2016-05-18T22:47:34.959Z cpu15:39089)WARNING: Migrate: 270: 1463611379229538 D: Failed: Connection closed by remote host, possibly due to timeout (0xbad003f) @0x418000e149ee”, and the source host logs errors like “2016-05-19T18:29:23.930Z cpu1:130133)WARNING: Migrate: 270: 1463682486286991 S: Failed: Migration determined a failure by the VMX (0xbad0092) @0x41803a7f6993”.
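vCenter reports the error as a decimal number, while vmkernel.log shows a hex status. They are the same value written in different bases: 195887167 is 0xbad003f. The small Python sketch below converts between the two, so you can match a vCenter task error to the corresponding log line. The helper names are my own and not part of any VMware tool.

```python
def to_vmk_status(decimal_code: int) -> str:
    """Render a vCenter decimal error code as the hex status seen in vmkernel.log."""
    return hex(decimal_code)


def to_decimal(hex_status: str) -> int:
    """Parse a hex status such as '0xbad003f' or 'bad0021' back to the decimal code."""
    return int(hex_status, 16)


if __name__ == "__main__":
    # Decimal codes quoted by vCenter on this page.
    for code in (195887167, 195887137, 195887371):
        print(code, "->", to_vmk_status(code))
    # Hex status from the source host's vmkernel.log above.
    print("0xbad0092 ->", to_decimal("0xbad0092"))
```

This prints `195887167 -> 0xbad003f`, which matches the vmkernel.log status. It also maps 195887137 to `0xbad0021`, the "Error bad0021" that appears in a VMX log further down this page.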

Possible solutions

- Remove the snapshot on the VM if it has one

- After removing the snapshot on one of the vCSA VMs, vMotion worked fine. But the other vCSA had no snapshot, and it still failed.

- Try using the vSphere Client instead of the vSphere Web Client. This worked on some VMs, but not always.

- Assign the VM’s network adapter to a different port group, then change it back to its original port group

- This seems to be the ultimate fix. After doing this, the vCSA VMs, which had failed vMotion consistently, migrated successfully.

Conclusion

- I’m not sure of the root cause of this issue, but it may be related to the network settings on the vSwitch or port group. A related hint: vMotion fails with the general system error: 0xbad003f (KB2008394)

- These KBs are not the solution in my case:

- vMotion fails with the error: Error bad003F (KB1036834)

- vMotion fails with connection errors (KB1030389)

- Understanding and troubleshooting VMware vMotion (KB1003734)

07/13/2016 update

- I run into the same error when migrating some VMs, including the vCenter 6.x appliance (vCSA), between hosts in the VMware cluster. I am able to migrate other VMs, which leads me to believe the problem is on the VM rather than in the VMware infrastructure.

- Of the VMs with this error, the ones I can power off migrate successfully while powered off. However, I cannot power down the vCSA VM, because I cannot perform the migration without vCenter available.

- I try assigning the vCSA VM’s NIC to a different port group and changing it back. However, I cannot do that, because this VM is the vCenter Server and is connected to a distributed switch. If I move the vCSA to a different port group (configured with a different VLAN), the vCenter Server goes down, because its NIC is then on the wrong port group with the wrong VLAN. After that, I can no longer change it back to the original port group with the right VLAN.

- I try connecting directly to the ESXi host running the vCSA VM with the vSphere C# client and assigning the vCSA VM’s NIC to a different port group. I cannot do that either: because the host is configured only with distributed switches, no other port group is available for selection in this situation.

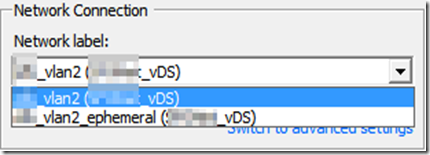

- I try creating a new port group with ephemeral port binding on the distributed switch. This new ephemeral port group is available in the vSphere C# client when connecting to the host directly. I assign the VM to the ephemeral port group and change it back. However, the migration still fails with the same error.

- Since I fixed this issue last time by changing the port group and changing it back, I guess that somehow reset the NIC on the VM or the virtual switch port the VM is connected to. That gives me the idea to manually assign the VM to another virtual switch port.

Solutions

- In the screenshot above, the NIC of the vCSA is assigned to port 214 on the vSwitch.

- Log back in to vCenter with the vSphere C# client or the Web Client. (When connected directly to the ESXi host with the vSphere C# client, I can neither see the ports on the distributed switch nor change the port assigned to the VM’s NIC.)

- I find an unused port in the same port group (port 423 in my case).

- Edit the vCSA VM settings and assign its NIC to the unused port.

- Then I can successfully migrate the vCSA VM to another host.
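The port selection itself is done in the vSphere client, but the rule behind it is simple: stay in the same port group and pick a port that nothing is connected to. The Python sketch below shows that rule. It uses a hypothetical mapping of port keys to connected VM names, which is not a vSphere API; you would fill it in by hand from the switch's port list. The VM names and the extra port keys in it are made up.

```python
from typing import Optional


def pick_unused_port(ports: dict[str, Optional[str]], current: str) -> Optional[str]:
    """Pick a free port in the same port group, never the one the NIC already uses.

    `ports` is a hypothetical inventory: port key -> name of the connected VM,
    or None if nothing is connected. Numeric keys are assumed.
    """
    free = [key for key, vm in ports.items() if vm is None and key != current]
    # Lowest port number first (compared as integers), so the choice is predictable.
    return min(free, key=int) if free else None


# Hypothetical port group contents, modeled on the example in this post.
ports = {"214": "vcsa01", "215": "app01", "423": None, "512": None}
print(pick_unused_port(ports, current="214"))
```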

Conclusion

- Changing the NIC to an unused port will be my first attempt when this issue happens again (I bet it will happen).

- I still don’t know the cause of this issue.

10/15/2019 update

- I got the exact same error again when doing a Storage vMotion of a VM across two vCenter Servers (from vCSA 6.0 to vCSA 6.5). I deleted the VM snapshot, reran the vMotion, and it completed successfully.


Eddie’s Blog

Never be afraid of sharing what you’ve done and being proud of good, quality work


Troubleshoot vMotion Error 195887167


Migration to host failed with error connection closed by remote host possibly due to timeout

My vSphere environment stopped migrating servers while they are powered on; migration only works when they are turned off.

I have a cluster with HA and DRS enabled, and all datastores are visible on all the VMware servers, but the disks show:

Multipathing Status = Partial/No redundancy

The disk on the storage is 80 GB, and the virtual servers’ disks are thin provisioned, also at 80 GB.

The error when I try to migrate a powered-on machine is:

Migration to host > failed with error Connection closed by remote host, possibly due to timeout (195887167).

Timed out waiting for migration data.

If this same machine is turned off, the migration occurs without problem.

How can I resolve this?

Thank you all.

3 servers with VMware ESXi 5.0.0, build 623860


Did you already verify the vMotion network, i.e. vmkping from one host to the others?


Andre, thank you for the fast response.

The vMotion switch on each server has the same configuration:

Server 01 192.168.11.11

Server 02 192.168.11.12

Server 03 192.168.11.13

With 2 network cards working as a team. The MTU is configured as 9000.

They connect to one dedicated VLAN, and the Dell PowerConnect switch is configured for jumbo frames as well.

The options chosen on each vMotion switch are:

General: 120, 9000

Security: reject, accept, accept

Traffic shaping: disabled

NIC teaming: route based on the originating virtual port ID, link status only, yes, yes

Both network cards are active.

How do I run the command you mentioned?


You can run vmkping from the command prompt of the ESXi hosts.

Regarding your answer: you wrote “With 2 network cards working as a team”. How is this team configured on the physical switch?

Anyway, I’d recommend taking a look at Multi-NIC vMotion (http://kb.vmware.com/kb/2007467), which was introduced with vSphere 5.0 and takes advantage of both NICs.


Have you also verified that the vMotion tag is enabled on each VMkernel interface on the hosts?

You could also check that there are no duplicate IP addresses; those could cause timeouts. As for the physical switches, you mention that jumbo frames are configured on them, but I would recommend double-checking this. Perhaps the configuration was not saved and the switches rebooted, which would break the end-to-end jumbo frame requirement.
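To test jumbo frames end to end, vmkping has to send a packet that fills the MTU with fragmentation disabled (`-d`). The payload passed with `-s` is the MTU minus the 20-byte IPv4 header and the 8-byte ICMP header. That is why a 1500-byte test uses `-s 1472` and gets 1480-byte replies. The Python sketch below only builds the argument list; it does not run anything. The interface name `vmk1` is a placeholder, and the target IP is taken from the post above.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
ICMP_HEADER = 8   # bytes


def max_ping_payload(mtu: int) -> int:
    """Largest ICMP payload that fits in one unfragmented IPv4 packet of this MTU."""
    payload = mtu - IPV4_HEADER - ICMP_HEADER
    if payload <= 0:
        raise ValueError(f"MTU {mtu} is too small")
    return payload


def vmkping_args(vmk: str, target: str, mtu: int) -> list[str]:
    """Build a vmkping command line that only succeeds if the path carries `mtu` bytes."""
    return ["vmkping", "-I", vmk, "-d", "-s", str(max_ping_payload(mtu)), target]


print(" ".join(vmkping_args("vmk1", "192.168.11.12", 9000)))
```

For MTU 9000 this yields `-s 8972`. If that ping fails while `-s 1472` succeeds, some hop in the path is not passing jumbo frames.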


Migration to host failed with error connection closed by remote host possibly due to timeout

I am trying to do a compute and svMotion of a multi-TB VM (FTT=1) from one vSAN cluster to another over a 1 Gbit uplink.

After several hours (around 10 hours, with the migration more than 50% complete), it fails with the event: Migration to host xx.xx.xx.xx failed with error Connection closed by remote host, possibly due to timeout (195887167)

The source vSAN is 6.2 U2 and the destination vSAN is 6.7 U2.

vmkping -I does not show any ping loss from source to destination vmk when I test (RTT min=0.173, avg=0.460, max=1.218).

Both clusters/hosts are connected to the same switch.

The VM is not busy but is still powered on. Would it help to try again with the VM powered off?

I selected “Schedule vMotion with high priority (recommended)”. Would selecting “Schedule regular vMotion” instead help?

I am thinking the migration might be saturating the uplink and timing out. Could that be the case, and if so, wouldn’t that just lower the transfer speed?

Are there specific logs I should check, or other methods I can try for the migration?

I tested a migration (compute and svMotion) of a very small VM from the same cluster (same source host) to the same destination host in other cluster and migration worked fine, so think it must be failing because of its size.

In the source host’s vmkernel.log I see events related to:

S: failed to read stream keepalive: Connection reset by peer

S: Migration considered a failure by the VMX. It is most likely a timeout.

Destroying Device for world xxxxxxxx

Destroying Device for world xxxxxxxx

disabled port xxxxxxxxxxxx

XVMotion: 2479 Timout out while waiting for disk 2’s queue count to drop below the minimum limit of 32768 blocks. This could indicate network or storage problems.
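A rough estimate helps judge how long the 1 Gbit uplink needs for a copy this size. The Python sketch below assumes decimal terabytes and a constant throughput. The efficiency factor is an illustrative assumption, not a measured value. The post does not give the exact VM size, so the 4 TB used below is hypothetical.

```python
def transfer_hours(size_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours needed to copy size_tb (decimal TB) over a link of link_gbps.

    `efficiency` is the assumed fraction of line rate actually achieved (0 < efficiency <= 1).
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


# Hypothetical 4 TB VM over a 1 Gbit uplink, at full and at 60% of line rate.
for eff in (1.0, 0.6):
    print(f"{eff:.0%} of line rate: {transfer_hours(4, 1, eff):.1f} h")
```

Even at full line rate, 1 Gbit/s moves at most about 4.5 TB in 10 hours. A multi-TB migration over this link is therefore a long-running job, whether or not the uplink is saturated.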


You should consider moving this to the general vSphere sub-forum. While there is vSAN at either end of the vMotion, this is likely failing for generic reasons (unless, of course, the cluster at either end is having some issues; do check this).

«vmkping -I does not show any ping loss from source to destination vmk when I test»

Unless you were pinging for the whole 10 hours that the migration was running, this is not exactly a valid test: seeing no connectivity issue now does not mean one did not occur.

Using high priority or regular shouldn’t matter unless you are vMotioning other VMs at the same time.

If the migration goes over 1Gb uplinks and the management network (which a cold migration would use) also has 1Gb uplinks, then doing this cold may be beneficial, as the data will be static.

Does the VM consist of multiple VMDKs or one relatively large one? If multiple, you could consider using the advanced vMotion options to move one or a few disks at a time (which, by the law of odds, has less chance of timing out).

«I tested a migration (compute and svMotion) of a very small VM from the same cluster (same source host) to the same destination host in other cluster and migration worked fine, so think it must be failing because of its size.»

Yes, it surely is. If there is an X chance of something timing out per hour (for whatever reason), and you multiply the duration by several orders of magnitude, the odds of a timeout occurring become several orders of magnitude higher.
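The reply's argument can be made concrete with a simplified model. Assume each hour of transfer carries the same independent chance p of a timeout. The chance of at least one timeout over T hours is then 1 - (1 - p)^T. For small p this is roughly T·p, so it grows about in proportion to the migration's duration. The 1% per-hour figure below is made up for illustration.

```python
def failure_probability(p_per_hour: float, hours: float) -> float:
    """Chance of at least one timeout during a transfer lasting `hours`,
    assuming an independent, constant per-hour timeout chance `p_per_hour`."""
    if not 0.0 <= p_per_hour <= 1.0:
        raise ValueError("p_per_hour must be between 0 and 1")
    return 1.0 - (1.0 - p_per_hour) ** hours


# Hypothetical 1% chance per hour: a 6-minute migration vs a 10-hour one.
short_run = failure_probability(0.01, 0.1)
long_run = failure_probability(0.01, 10)
print(f"short: {short_run:.2%}, long: {long_run:.2%}")
```

With these numbers the short migration fails about 0.10% of the time and the 10-hour one about 9.56%, roughly 95 times as often.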


Migration to host failed with error connection closed by remote host possibly due to timeout

At my customer’s site there is a new server infrastructure (4 ESXi 6.0 U2 hosts, vCenter VCSA, and shared storage already used by the old servers).

The configuration is:

4 x 1 Gbps NICs used for VM networks

2 x 10 Gbps NICs used only for vMotion

2 hosts in the production site (host1a, host2a) and 2 hosts in the DR site (host1b, host2b), about 200 m apart

Everything is working as expected except vMotion, that:

from host1a to all other hosts

from host2a to host1b and host2b

from host1b to host2a and host2b

from host2b to host2a and host1b

from host2a, host1b, host2b to host1a

vmkping is working from and to all hosts.

The error messages are the following:

Failed waiting for data. Error 195887371. The ESX hosts failed to connect over the VMotion network.

vMotion migration [168435367:1473922772312347] failed to read stream keepalive: Connection closed by remote host, possibly due to timeout

Migration to host failed with error Connection closed by remote host, possibly due to timeout (195887167).

Migration [168435367:1473922772312347] failed to connect to remote host from host : Timeout.

vMotion migration [168435367:1473922772312347] failed to create a connection with remote host : The ESX hosts failed to connect over the VMotion network

Can anyone help me find where the problem is?

Thanks in advance
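When vMotion fails only between some host pairs, a useful first check is whether one host takes part in every failing migration. Here that turned out to be the case: reinstalling ESXi on one server fixed it. The Python sketch below applies that check. The failing pairs in it are hypothetical, because the post's list of directions does not say which ones worked.

```python
from collections import Counter


def suspect_hosts(failed_pairs: list[tuple[str, str]]) -> list[str]:
    """Return the host(s) involved in every failing (source, destination) vMotion."""
    if not failed_pairs:
        return []
    counts = Counter()
    for src, dst in failed_pairs:
        counts.update({src, dst})  # a set, so each host counts once per pair
    return sorted(host for host, n in counts.items() if n == len(failed_pairs))


# Hypothetical results: every failing migration has host1a on one end.
failed = [("host1a", "host2a"), ("host1a", "host1b"), ("host2b", "host1a")]
print(suspect_hosts(failed))
```

If the result is a single host, that host's vMotion vmknic, cabling, and switch ports are the first places to look. An empty result points more toward a shared component.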


Thank you for your detailed explanation.

On the switch side everything is OK; otherwise the other three hosts would not work either.

Not having more time to search for the problem, I reinstalled ESXi on the server, and now vMotion works on this host too.


Let us go back to basics.

You have 10 Gb for vMotion and 1 Gb for VMs. Actually, I would switch that myself, unless you are also planning to use the vMotion network hardware for SMP-FT or VSAN. Something to consider.

That aside, I would look at your switches. In the past, switch ports either had to be hardcoded to a speed or set to autonegotiate, and if they were not set properly, vMotion would stall or fail outright. Look at the switches for any errors associated with the ports on which vMotion is configured. Also, ensure any VLANs are trunked to those ports correctly. I would (a) clear any errors on the switch, (b) verify the VLANs are trunked properly, (c) run a vMotion, and (d) look at the switch error counts.

From the ESXi side of the shop, there is actually not much you can do. It is either connected or it is not; not much tuning is needed there. That being said, ensure all the cables are plugged in properly (unplugging and replugging them is my suggestion), and look for any network card errors in the vSphere log files (Log Insight is extremely helpful for this). If there are errors, fix those if possible.

Ensure that ONLY ESXi hosts are connected to the vMotion network VLAN/switch. If anything else is connected it can contribute to failure. This is one of the sacrosanct networks.

Note that most vMotion failures in the past were due to physical misconfigurations, not software issues, unless the issue was in the switch itself.

Best regards,

Edward L. Haletky

VMware Communities User Moderator, VMware vExpert 2009-2016


We have an ESXi host in our VMware Cluster which we are not able to migrate VMs off of. All VMs on this host are affected by the issue.

vMotion fails at 21% with the following error message: «Failed waiting for data. Error 195887137. Timeout.»

vCenter Logs

What’s interesting is that we are able to migrate VMs TO the problem host. It is only when the problem host is the source host that we see the error message. All other migrations between all other hosts in the cluster work fine.

Logs:

/var/log/vmkernel.log:

2020-01-31T3:26:33.987Z cpu3:8322451)Migrate: vm 8322452: 3885: Setting VMOTION info: Source ts = 1426483424790667834, src ip = <x.x.x.16> dest ip = <x.x.x.45> Dest wid = 4218927 using SHARED swap, encrypted

2020-01-31T13:26:33.989Z cpu3:8322451)Hbr: 3561: Migration start received (worldID=8322452) (migrateType=1) (event=0) (isSource=1) (sharedConfig=1)

2020-01-31T13:26:33.989Z cpu14:8943031)MigrateNet: 1751: 1426483424790667834 S: Successfully bound connection to vmknic vmk2 - 'x.x.x.16'

2020-01-31T13:26:33.991Z cpu20:8902233)MigrateNet: vm 8902233: 3263: Accepted connection from <::ffff:x.x.x.45>

2020-01-31T13:26:33.991Z cpu20:8902233)MigrateNet: vm 8902233: 3351: dataSocket 0x430efb2fce30 receive buffer size is 563272

2020-01-31T13:26:33.991Z cpu14:8943031)MigrateNet: 1751: 1426483424790667834 S: Successfully bound connection to vmknic vmk2 - 'x.x.x.16'

2020-01-31T13:26:33.991Z cpu14:8943031)VMotionUtil: 5199: 1426483424790667834 S: Stream connection 1 added.

2020-01-31T13:26:53.994Z cpu1:8943028)WARNING: VMotionUtil: 862: 1426483424790667834 S: failed to read stream keepalive: Connection closed by remote host, possibly due to timeout

2020-01-31T13:26:53.994Z cpu1:8943028)WARNING: Migrate: 282: 1426483424790667834 S: Failed: Connection closed by remote host, possibly due to timeout (0xbad003f) @0x41802bf0e273

2020-01-31T13:26:54.012Z cpu35:8322484)WARNING: Migrate: 6189: 1426483424790667834 S: Migration considered a failure by the VMX. It is most likely a timeout, but check the VMX log for the true error.

2020-01-31T13:26:54.013Z cpu35:8322484)Hbr: 3655: Migration end received (worldID=8322452) (migrateType=1) (event=1) (isSource=1) (sharedConfig=1)

2020-01-31T13:26:54.014Z cpu0:8943028)VMotionUtil: 7560: 1426483424790667834 S: Socket 0x430efb2fce30 rcvMigFree pending: 33200/33304 snd 0 rcv
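The migration ID in these warnings appears with `S:` on the source host and `D:` on the destination host, so you can line up the two hosts' logs. The Python sketch below pulls the failure warnings out of vmkernel.log and adds the decimal code that vCenter shows. It assumes the line layout seen in the excerpts on this page. The regular expression is my own and may need adjusting for other ESXi builds.

```python
import re

# Layout assumed from the excerpts on this page; other ESXi builds may differ.
MIGRATE_FAILURE = re.compile(
    r"^(?P<ts>\S+) cpu\d+:\d+\)WARNING: Migrate: \d+: "
    r"(?P<migration_id>\d+) (?P<role>[SD]): Failed: (?P<reason>.+?) "
    r"\((?P<status>0x[0-9a-f]+)\)"
)


def migrate_failures(lines):
    """Yield one dict per 'Migrate: ... Failed:' warning found in vmkernel.log lines."""
    for line in lines:
        match = MIGRATE_FAILURE.match(line.strip())
        if match is None:
            continue
        yield {
            "timestamp": match["ts"],
            "migration_id": match["migration_id"],
            "role": "source" if match["role"] == "S" else "destination",
            "reason": match["reason"],
            "status": match["status"],
            "decimal": int(match["status"], 16),  # the code vCenter reports
        }


if __name__ == "__main__":
    sample = [
        "2016-05-18T22:47:34.959Z cpu15:39089)WARNING: Migrate: 270: 1463611379229538 D: "
        "Failed: Connection closed by remote host, possibly due to timeout (0xbad003f) @0x418000e149ee",
        "2020-01-31T13:26:33.991Z cpu14:8943031)VMotionUtil: 5199: 1426483424790667834 S: "
        "Stream connection 1 added.",
    ]
    for failure in migrate_failures(sample):
        print(failure)
```

In practice you would feed it the lines of `/var/log/vmkernel.log` from both hosts and group the results by `migration_id`.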

VMX log:

2020-01-31T13:26:33.965Z| vmx| I125: MigrateSetState: Transitioning from state 8 to 9.

2020-01-31T13:26:33.995Z| vmx| I125: MigrateRPC_RetrieveMessages: Informed of a new user message, but can't handle messages in state 4. Leaving the message queued.

2020-01-31T13:26:35.131Z| vmx| I125: Vigor_ClientRequestCb: failed to do op=3 on unregistered device 'Tools' (cmd=queryFields)

2020-01-31T13:26:35.131Z| vmx| I125: Vigor_ClientRequestCb: failed to do op=3 on unregistered device 'GuestInfo' (cmd=queryFields)

2020-01-31T13:26:35.131Z| vmx| I125: Vigor_ClientRequestCb: failed to do op=3 on unregistered device 'GuestPeriodic' (cmd=queryFields)

2020-01-31T13:26:35.131Z| vmx| I125: Vigor_ClientRequestCb: failed to do op=3 on unregistered device 'GuestAppMonitor' (cmd=queryFields)

2020-01-31T13:26:35.131Z| vmx| I125: Vigor_ClientRequestCb: failed to do op=3 on unregistered device 'CrashDetector' (cmd=queryFields)

2020-01-31T13:26:38.833Z| vmx| I125: Vigor_ClientRequestCb: failed to do op=3 on unregistered device 'Tools' (cmd=queryFields)

2020-01-31T13:26:38.833Z| vmx| I125: Vigor_ClientRequestCb: failed to do op=3 on unregistered device 'GuestInfo' (cmd=queryFields)

2020-01-31T13:26:38.833Z| vmx| I125: Vigor_ClientRequestCb: failed to do op=3 on unregistered device 'GuestPeriodic' (cmd=queryFields)

2020-01-31T13:26:38.833Z| vmx| I125: Vigor_ClientRequestCb: failed to do op=3 on unregistered device 'GuestAppMonitor' (cmd=queryFields)

2020-01-31T13:26:38.833Z| vmx| I125: Vigor_ClientRequestCb: failed to do op=3 on unregistered device 'CrashDetector' (cmd=queryFields)

2020-01-31T13:26:53.996Z| vmx| I125: MigrateSetStateFinished: type=2 new state=12

2020-01-31T13:26:53.996Z| vmx| I125: MigrateSetState: Transitioning from state 9 to 12.

2020-01-31T13:26:53.996Z| vmx| I125: Migrate: Caching migration error message list:

2020-01-31T13:26:53.996Z| vmx| I125: [msg.migrate.waitdata.platform] Failed waiting for data. Error bad0021. Timeout.

2020-01-31T13:26:53.996Z| vmx| I125: [vob.vmotion.net.send.start.failed] vMotion migration [a011610:1426483424790667834] failed to send init message to the remote host <x.x.x.16>

2020-01-31T13:26:53.996Z| vmx| I125: [vob.vmotion.net.sbwait.timeout] vMotion migration [a011610:1426483424790667834] timed out waiting 20001 ms to transmit data.

2020-01-31T13:26:53.996Z| vmx| I125: Migrate: cleaning up migration state.

2020-01-31T13:26:53.997Z| vmx| I125: Migrate: Final status reported through Vigor.

2020-01-31T13:26:53.997Z| vmx| I125: MigrateSetState: Transitioning from state 12 to 0.

2020-01-31T13:26:53.997Z| vmx| I125: Module 'Migrate' power on failed.
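The VMX log (the VM's vmware.log) lists the underlying reasons as bracketed message IDs after "Caching migration error message list". The timestamps also line up. In the vmkernel.log excerpt, the stream connection is added at 13:26:33.991 and the keepalive read fails at 13:26:53.994, about 20 seconds later. That appears to match the "timed out waiting 20001 ms" entry. The Python sketch below extracts those entries and measures the gap, assuming the line layout shown here.

```python
import re
from datetime import datetime

# Matches cached migration errors in vmware.log, e.g.
# "...| vmx| I125: [vob.vmotion.net.sbwait.timeout] vMotion migration ..."
MESSAGE = re.compile(r"\| vmx\| \w+: \[(?P<msg_id>(?:msg|vob)\.[\w.]+)\] (?P<text>.*)$")


def migration_messages(lines):
    """Return (message id, text) pairs for msg./vob. entries in vmware.log lines."""
    found = []
    for line in lines:
        match = MESSAGE.search(line.rstrip())
        if match:
            found.append((match["msg_id"], match["text"]))
    return found


def seconds_between(start: str, end: str) -> float:
    """Elapsed seconds between two ESXi log timestamps like 2020-01-31T13:26:33.991Z."""
    fmt = "%Y-%m-%dT%H:%M:%S.%fZ"
    return (datetime.strptime(end, fmt) - datetime.strptime(start, fmt)).total_seconds()


if __name__ == "__main__":
    vmx = [
        "2020-01-31T13:26:53.996Z| vmx| I125: [msg.migrate.waitdata.platform] "
        "Failed waiting for data. Error bad0021. Timeout.",
        "2020-01-31T13:26:53.997Z| vmx| I125: Migrate: cleaning up migration state.",
    ]
    print(migration_messages(vmx))
    # Stream connection added -> keepalive failure, from the vmkernel.log above.
    print(seconds_between("2020-01-31T13:26:33.991Z", "2020-01-31T13:26:53.994Z"))
```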

Things I’ve checked:

- EVC is configured at the cluster level, so there are no CPU compatibility issues.

- vmkping is successful between the source and destination hosts using the vMotion TCP/IP stack:

[root@:~] vmkping ++netstack=vmotion -I vmk2 x.x.x.16 -s 1472

1480 bytes from x.x.x.16: icmp_seq=0 ttl=64 time=0.263 ms

1480 bytes from x.x.x.16: icmp_seq=1 ttl=64 time=0.490 ms

1480 bytes from x.x.x.16: icmp_seq=2 ttl=64 time=0.548 ms

[root@:~] vmkping ++netstack=vmotion -I vmk1 -d x.x.x.45 -s 1472

1480 bytes from x.x.x.45: icmp_seq=0 ttl=64 time=0.351 ms

1480 bytes from x.x.x.45: icmp_seq=1 ttl=64 time=0.299 ms

1480 bytes from x.x.x.45: icmp_seq=2 ttl=64 time=0.393 ms

- MTU is set to 1500 on the vMotion vmk interfaces and is higher on the dvSwitch and physical switch, so no issue there.

- VLAN configuration on the physical switches is correct.

- Tried moving the vMotion vmk to a VSS instead of the VDS.

- Verified that we have only a single interface configured for vMotion on the host, even though multiple vmks are supported.

- Restarting the management agents on the ESXi host didn’t help.

- Disabling vMotion encryption for a VM resulted in the same error.

- Reconfigured the host for HA, but this didn’t help.

Unfortunately the server in question is no longer supported on the HCL, so VMware support would not help with the troubleshooting. However, had we been running Broadwell generation CPUs (v4) instead of Haswell (v3), then it would be supported:

SYS-2048U-RTR4 Intel Xeon E5-4600-v3 Series 6.0 U3

SYS-2048U-RTR4 Intel Xeon E5-4600-v4 Series 6.7 U3

I struggle to imagine this error we’re seeing is caused by running a Haswell CPU instead of Broadwell, but I guess anything is possible…

We’ve made no recent changes to the environment, and vMotion was working on this host a few days ago.

Anyway, I just wanted to reach out to see if anyone else is experiencing a similar issue, or if there are any VMware engineers lurking on Reddit who would be interested in troubleshooting this problem to confirm whether it is related to hardware compatibility or not.

Update:

I never found the root cause of the issue. As part of my troubleshooting, I configured the ESXi management vmk for vmotion traffic on two of the hosts in my cluster. I was successfully able to migrate a VM off the host with this configuration. I then rolled back the change (disabled vmotion on the management vmk, and re-enabled it on my vmotion vmk) and voilà, I could now vmotion all the VMs off the host.

filipsmeets (Filip Smeets)

VMs protected by CDP Policy will power off if you try to do a vMotion

Case #05144209

As mentioned in the subject, VMs protected by a CDP policy power off if you try to vMotion them. The vMotion does not occur, and the VMs get restarted by vSphere HA.

I see the following events in vCenter:

Task Name: Migrate virtual machine

Status: Failed waiting for data. Error 195887167. Connection closed by remote host, possibly due to timeout. 2021-11-22T11:00:13.91759Z Remote connection failure Failed to establish transport connection. Disconnected from virtual machine. 2021-11-22T11:00:13.751014Z vMotion migration [184164886:7352562474509221539] the remote host closed the connection unexpectedly and migration has stopped. The closed connection probably results from a migration failure detected on the remote host.

Error stack:

Remote connection failure

Failed to establish transport connection.

Disconnected from virtual machine.

2021-11-22T11:00:13.751014Z

vMotion migration [184164886:7352562474509221539] the remote host closed the connection unexpectedly and migration has stopped. The closed connection probably results from a migration failure detected on the remote host.

Failed waiting for data. Error 195887167. Connection closed by remote host, possibly due to timeout.

The virtual machine was restarted automatically by vSphere HA on this host. This response may be triggered by a failure of the host the virtual machine was originally running on or by an unclean power-off of the virtual machine (eg. if the vmx process was killed).


Re: VMs protected by CDP Policy will power off if you try to do a vMotion


by filipsmeets » Nov 24, 2021 11:14 am

Hi Andreas

I have checked the IO filters on the source and destination clusters, and they are all up to date.

Replication actually is working.

I have 2 test VMs running in Datacenter DCR1 under the Cluster Workloads. This Cluster contains 5 ESXi Hosts and has DRS and HA enabled with the default settings. VMs are running on a shared storage (FC SAN).

I then created a CDP Policy to replicate these two VMs to Datacenter DCR2 under the Cluster Workloads. This Cluster also contains 5 ESXi Hosts with DRS and HA enabled with the default settings.

While these VMs were protected by the CDP policy, I tried to vMotion one of the original VMs in DCR1 to another host in the same cluster. I then noticed the VM got powered off and, after a while, was restarted by vSphere HA.

While looking at the CDP Job in Veeam, I could see it switching over to CBT.

I hope this makes some more sense.


Re: VMs protected by CDP Policy will power off if you try to do a vMotion


by filipsmeets » Nov 24, 2021 11:46 am

Each host has 3 vmkernels. One for management, a second for vMotion and a third for Veeam.

The vmkernels are connected to 3 different port groups with different subnets/VLANs on a distributed switch with two active uplinks. No LACP. The load-balancing policy is set to route based on physical NIC load.


Re: VMs protected by CDP Policy will power off if you try to do a vMotion


by werner.vergauwen » Mar 10, 2022 3:17 pm


Small feedback: the issue is now resolved. There was a bug registered at Veeam (Bug 368345).

A private fix was delivered for 11.0.1.1261, which needed to be installed on the VBR.

We have upgraded our Veeam environment to the proper level, applied the hotfix, and lo and behold, no more VM shutdowns during vMotion.
