«Interleaver» redirects here. For the fiber-optic device, see optical interleaver.

In computing, telecommunication, information theory, and coding theory, forward error correction (FEC) or channel coding[1][2][3] is a technique used for controlling errors in data transmission over unreliable or noisy communication channels.

The central idea is that the sender encodes the message in a redundant way, most often by using an error correction code or error correcting code, (ECC).[4][5] The redundancy allows the receiver not only to detect errors that may occur anywhere in the message, but often to correct a limited number of errors. Therefore a reverse channel to request re-transmission may not be needed. The cost is a fixed, higher forward channel bandwidth.

The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code.[5]

FEC can be applied in situations where re-transmissions are costly or impossible, such as one-way communication links or when transmitting to multiple receivers in multicast.

Long-latency connections also benefit; in the case of a satellite orbiting Uranus, retransmission due to errors can create a delay of five hours. FEC is widely used in modems and in cellular networks, as well.

FEC processing in a receiver may be applied to a digital bit stream or in the demodulation of a digitally modulated carrier. For the latter, FEC is an integral part of the initial analog-to-digital conversion in the receiver. The Viterbi decoder implements a soft-decision algorithm to demodulate digital data from an analog signal corrupted by noise. Many FEC decoders can also generate a bit-error rate (BER) signal which can be used as feedback to fine-tune the analog receiving electronics.

FEC information is added to mass storage (magnetic, optical and solid state/flash based) devices to enable recovery of corrupted data, and is used as ECC computer memory on systems that require special provisions for reliability.

The maximum proportion of errors or missing bits that can be corrected is determined by the design of the ECC, so different forward error correcting codes are suitable for different conditions. In general, a stronger code induces more redundancy that needs to be transmitted using the available bandwidth, which reduces the effective bit-rate while improving the received effective signal-to-noise ratio. The noisy-channel coding theorem of Claude Shannon can be used to compute the maximum achievable communication bandwidth for a given maximum acceptable error probability. This establishes bounds on the theoretical maximum information transfer rate of a channel with some given base noise level. However, the proof is not constructive, and hence gives no insight of how to build a capacity achieving code. After years of research, some advanced FEC systems like polar code[3] come very close to the theoretical maximum given by the Shannon channel capacity under the hypothesis of an infinite length frame.

How it works[edit]

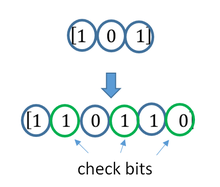

ECC is accomplished by adding redundancy to the transmitted information using an algorithm. A redundant bit may be a complex function of many original information bits. The original information may or may not appear literally in the encoded output; codes that include the unmodified input in the output are systematic, while those that do not are non-systematic.

A simplistic example of ECC is to transmit each data bit 3 times, which is known as a (3,1) repetition code. Through a noisy channel, a receiver might see 8 versions of the output, see table below.

| Triplet received | Interpreted as |

|---|---|

| 000 | 0 (error-free) |

| 001 | 0 |

| 010 | 0 |

| 100 | 0 |

| 111 | 1 (error-free) |

| 110 | 1 |

| 101 | 1 |

| 011 | 1 |

This allows an error in any one of the three samples to be corrected by «majority vote», or «democratic voting». The correcting ability of this ECC is:

- Up to 1 bit of triplet in error, or

- up to 2 bits of triplet omitted (cases not shown in table).

Though simple to implement and widely used, this triple modular redundancy is a relatively inefficient ECC. Better ECC codes typically examine the last several tens or even the last several hundreds of previously received bits to determine how to decode the current small handful of bits (typically in groups of 2 to 8 bits).

Averaging noise to reduce errors[edit]

ECC could be said to work by «averaging noise»; since each data bit affects many transmitted symbols, the corruption of some symbols by noise usually allows the original user data to be extracted from the other, uncorrupted received symbols that also depend on the same user data.

- Because of this «risk-pooling» effect, digital communication systems that use ECC tend to work well above a certain minimum signal-to-noise ratio and not at all below it.

- This all-or-nothing tendency – the cliff effect – becomes more pronounced as stronger codes are used that more closely approach the theoretical Shannon limit.

- Interleaving ECC coded data can reduce the all or nothing properties of transmitted ECC codes when the channel errors tend to occur in bursts. However, this method has limits; it is best used on narrowband data.

Most telecommunication systems use a fixed channel code designed to tolerate the expected worst-case bit error rate, and then fail to work at all if the bit error rate is ever worse.

However, some systems adapt to the given channel error conditions: some instances of hybrid automatic repeat-request use a fixed ECC method as long as the ECC can handle the error rate, then switch to ARQ when the error rate gets too high;

adaptive modulation and coding uses a variety of ECC rates, adding more error-correction bits per packet when there are higher error rates in the channel, or taking them out when they are not needed.

Types of ECC[edit]

A block code (specifically a Hamming code) where redundant bits are added as a block to the end of the initial message

A continuous code convolutional code where redundant bits are added continuously into the structure of the code word

The two main categories of ECC codes are block codes and convolutional codes.

- Block codes work on fixed-size blocks (packets) of bits or symbols of predetermined size. Practical block codes can generally be hard-decoded in polynomial time to their block length.

- Convolutional codes work on bit or symbol streams of arbitrary length. They are most often soft decoded with the Viterbi algorithm, though other algorithms are sometimes used. Viterbi decoding allows asymptotically optimal decoding efficiency with increasing constraint length of the convolutional code, but at the expense of exponentially increasing complexity. A convolutional code that is terminated is also a ‘block code’ in that it encodes a block of input data, but the block size of a convolutional code is generally arbitrary, while block codes have a fixed size dictated by their algebraic characteristics. Types of termination for convolutional codes include «tail-biting» and «bit-flushing».

There are many types of block codes; Reed–Solomon coding is noteworthy for its widespread use in compact discs, DVDs, and hard disk drives. Other examples of classical block codes include Golay, BCH, Multidimensional parity, and Hamming codes.

Hamming ECC is commonly used to correct NAND flash memory errors.[6]

This provides single-bit error correction and 2-bit error detection.

Hamming codes are only suitable for more reliable single-level cell (SLC) NAND.

Denser multi-level cell (MLC) NAND may use multi-bit correcting ECC such as BCH or Reed–Solomon.[7][8] NOR Flash typically does not use any error correction.[7]

Classical block codes are usually decoded using hard-decision algorithms,[9] which means that for every input and output signal a hard decision is made whether it corresponds to a one or a zero bit. In contrast, convolutional codes are typically decoded using soft-decision algorithms like the Viterbi, MAP or BCJR algorithms, which process (discretized) analog signals, and which allow for much higher error-correction performance than hard-decision decoding.

Nearly all classical block codes apply the algebraic properties of finite fields. Hence classical block codes are often referred to as algebraic codes.

In contrast to classical block codes that often specify an error-detecting or error-correcting ability, many modern block codes such as LDPC codes lack such guarantees. Instead, modern codes are evaluated in terms of their bit error rates.

Most forward error correction codes correct only bit-flips, but not bit-insertions or bit-deletions.

In this setting, the Hamming distance is the appropriate way to measure the bit error rate.

A few forward error correction codes are designed to correct bit-insertions and bit-deletions, such as Marker Codes and Watermark Codes.

The Levenshtein distance is a more appropriate way to measure the bit error rate when using such codes.

[10]

Code-rate and the tradeoff between reliability and data rate[edit]

The fundamental principle of ECC is to add redundant bits in order to help the decoder to find out the true message that was encoded by the transmitter. The code-rate of a given ECC system is defined as the ratio between the number of information bits and the total number of bits (i.e., information plus redundancy bits) in a given communication package. The code-rate is hence a real number. A low code-rate close to zero implies a strong code that uses many redundant bits to achieve a good performance, while a large code-rate close to 1 implies a weak code.

The redundant bits that protect the information have to be transferred using the same communication resources that they are trying to protect. This causes a fundamental tradeoff between reliability and data rate.[11] In one extreme, a strong code (with low code-rate) can induce an important increase in the receiver SNR (signal-to-noise-ratio) decreasing the bit error rate, at the cost of reducing the effective data rate. On the other extreme, not using any ECC (i.e., a code-rate equal to 1) uses the full channel for information transfer purposes, at the cost of leaving the bits without any additional protection.

One interesting question is the following: how efficient in terms of information transfer can an ECC be that has a negligible decoding error rate? This question was answered by Claude Shannon with his second theorem, which says that the channel capacity is the maximum bit rate achievable by any ECC whose error rate tends to zero:[12] His proof relies on Gaussian random coding, which is not suitable to real-world applications. The upper bound given by Shannon’s work inspired a long journey in designing ECCs that can come close to the ultimate performance boundary. Various codes today can attain almost the Shannon limit. However, capacity achieving ECCs are usually extremely complex to implement.

The most popular ECCs have a trade-off between performance and computational complexity. Usually, their parameters give a range of possible code rates, which can be optimized depending on the scenario. Usually, this optimization is done in order to achieve a low decoding error probability while minimizing the impact to the data rate. Another criterion for optimizing the code rate is to balance low error rate and retransmissions number in order to the energy cost of the communication.[13]

Concatenated ECC codes for improved performance[edit]

Classical (algebraic) block codes and convolutional codes are frequently combined in concatenated coding schemes in which a short constraint-length Viterbi-decoded convolutional code does most of the work and a block code (usually Reed–Solomon) with larger symbol size and block length «mops up» any errors made by the convolutional decoder. Single pass decoding with this family of error correction codes can yield very low error rates, but for long range transmission conditions (like deep space) iterative decoding is recommended.

Concatenated codes have been standard practice in satellite and deep space communications since Voyager 2 first used the technique in its 1986 encounter with Uranus. The Galileo craft used iterative concatenated codes to compensate for the very high error rate conditions caused by having a failed antenna.

Low-density parity-check (LDPC)[edit]

Low-density parity-check (LDPC) codes are a class of highly efficient linear block

codes made from many single parity check (SPC) codes. They can provide performance very close to the channel capacity (the theoretical maximum) using an iterated soft-decision decoding approach, at linear time complexity in terms of their block length. Practical implementations rely heavily on decoding the constituent SPC codes in parallel.

LDPC codes were first introduced by Robert G. Gallager in his PhD thesis in 1960,

but due to the computational effort in implementing encoder and decoder and the introduction of Reed–Solomon codes,

they were mostly ignored until the 1990s.

LDPC codes are now used in many recent high-speed communication standards, such as DVB-S2 (Digital Video Broadcasting – Satellite – Second Generation), WiMAX (IEEE 802.16e standard for microwave communications), High-Speed Wireless LAN (IEEE 802.11n),[14] 10GBase-T Ethernet (802.3an) and G.hn/G.9960 (ITU-T Standard for networking over power lines, phone lines and coaxial cable). Other LDPC codes are standardized for wireless communication standards within 3GPP MBMS (see fountain codes).

Turbo codes[edit]

Turbo coding is an iterated soft-decoding scheme that combines two or more relatively simple convolutional codes and an interleaver to produce a block code that can perform to within a fraction of a decibel of the Shannon limit. Predating LDPC codes in terms of practical application, they now provide similar performance.

One of the earliest commercial applications of turbo coding was the CDMA2000 1x (TIA IS-2000) digital cellular technology developed by Qualcomm and sold by Verizon Wireless, Sprint, and other carriers. It is also used for the evolution of CDMA2000 1x specifically for Internet access, 1xEV-DO (TIA IS-856). Like 1x, EV-DO was developed by Qualcomm, and is sold by Verizon Wireless, Sprint, and other carriers (Verizon’s marketing name for 1xEV-DO is Broadband Access, Sprint’s consumer and business marketing names for 1xEV-DO are Power Vision and Mobile Broadband, respectively).

Local decoding and testing of codes[edit]

Sometimes it is only necessary to decode single bits of the message, or to check whether a given signal is a codeword, and do so without looking at the entire signal. This can make sense in a streaming setting, where codewords are too large to be classically decoded fast enough and where only a few bits of the message are of interest for now. Also such codes have become an important tool in computational complexity theory, e.g., for the design of probabilistically checkable proofs.

Locally decodable codes are error-correcting codes for which single bits of the message can be probabilistically recovered by only looking at a small (say constant) number of positions of a codeword, even after the codeword has been corrupted at some constant fraction of positions. Locally testable codes are error-correcting codes for which it can be checked probabilistically whether a signal is close to a codeword by only looking at a small number of positions of the signal.

Interleaving[edit]

«Interleaver» redirects here. For the fiber-optic device, see optical interleaver.

A short illustration of interleaving idea

Interleaving is frequently used in digital communication and storage systems to improve the performance of forward error correcting codes. Many communication channels are not memoryless: errors typically occur in bursts rather than independently. If the number of errors within a code word exceeds the error-correcting code’s capability, it fails to recover the original code word. Interleaving alleviates this problem by shuffling source symbols across several code words, thereby creating a more uniform distribution of errors.[15] Therefore, interleaving is widely used for burst error-correction.

The analysis of modern iterated codes, like turbo codes and LDPC codes, typically assumes an independent distribution of errors.[16] Systems using LDPC codes therefore typically employ additional interleaving across the symbols within a code word.[17]

For turbo codes, an interleaver is an integral component and its proper design is crucial for good performance.[15][18] The iterative decoding algorithm works best when there are not short cycles in the factor graph that represents the decoder; the interleaver is chosen to avoid short cycles.

Interleaver designs include:

- rectangular (or uniform) interleavers (similar to the method using skip factors described above)

- convolutional interleavers

- random interleavers (where the interleaver is a known random permutation)

- S-random interleaver (where the interleaver is a known random permutation with the constraint that no input symbols within distance S appear within a distance of S in the output).[19]

- a contention-free quadratic permutation polynomial (QPP).[20] An example of use is in the 3GPP Long Term Evolution mobile telecommunication standard.[21]

In multi-carrier communication systems, interleaving across carriers may be employed to provide frequency diversity, e.g., to mitigate frequency-selective fading or narrowband interference.[22]

Example[edit]

Transmission without interleaving:

Error-free message: aaaabbbbccccddddeeeeffffgggg Transmission with a burst error: aaaabbbbccc____deeeeffffgggg

Here, each group of the same letter represents a 4-bit one-bit error-correcting codeword. The codeword cccc is altered in one bit and can be corrected, but the codeword dddd is altered in three bits, so either it cannot be decoded at all or it might be decoded incorrectly.

With interleaving:

Error-free code words: aaaabbbbccccddddeeeeffffgggg Interleaved: abcdefgabcdefgabcdefgabcdefg Transmission with a burst error: abcdefgabcd____bcdefgabcdefg Received code words after deinterleaving: aa_abbbbccccdddde_eef_ffg_gg

In each of the codewords «aaaa», «eeee», «ffff», and «gggg», only one bit is altered, so one-bit error-correcting code will decode everything correctly.

Transmission without interleaving:

Original transmitted sentence: ThisIsAnExampleOfInterleaving Received sentence with a burst error: ThisIs______pleOfInterleaving

The term «AnExample» ends up mostly unintelligible and difficult to correct.

With interleaving:

Transmitted sentence: ThisIsAnExampleOfInterleaving... Error-free transmission: TIEpfeaghsxlIrv.iAaenli.snmOten. Received sentence with a burst error: TIEpfe______Irv.iAaenli.snmOten. Received sentence after deinterleaving: T_isI_AnE_amp_eOfInterle_vin_...

No word is completely lost and the missing letters can be recovered with minimal guesswork.

Disadvantages of interleaving[edit]

Use of interleaving techniques increases total delay. This is because the entire interleaved block must be received before the packets can be decoded.[23] Also interleavers hide the structure of errors; without an interleaver, more advanced decoding algorithms can take advantage of the error structure and achieve more reliable communication than a simpler decoder combined with an interleaver[citation needed]. An example of such an algorithm is based on neural network[24] structures.

Software for error-correcting codes[edit]

Simulating the behaviour of error-correcting codes (ECCs) in software is a common practice to design, validate and improve ECCs. The upcoming wireless 5G standard raises a new range of applications for the software ECCs: the Cloud Radio Access Networks (C-RAN) in a Software-defined radio (SDR) context. The idea is to directly use software ECCs in the communications. For instance in the 5G, the software ECCs could be located in the cloud and the antennas connected to this computing resources: improving this way the flexibility of the communication network and eventually increasing the energy efficiency of the system.

In this context, there are various available Open-source software listed below (non exhaustive).

- AFF3CT(A Fast Forward Error Correction Toolbox): a full communication chain in C++ (many supported codes like Turbo, LDPC, Polar codes, etc.), very fast and specialized on channel coding (can be used as a program for simulations or as a library for the SDR).

- IT++: a C++ library of classes and functions for linear algebra, numerical optimization, signal processing, communications, and statistics.

- OpenAir: implementation (in C) of the 3GPP specifications concerning the Evolved Packet Core Networks.

List of error-correcting codes[edit]

| Distance | Code |

|---|---|

| 2 (single-error detecting) | Parity |

| 3 (single-error correcting) | Triple modular redundancy |

| 3 (single-error correcting) | perfect Hamming such as Hamming(7,4) |

| 4 (SECDED) | Extended Hamming |

| 5 (double-error correcting) | |

| 6 (double-error correct-/triple error detect) | Nordstrom-Robinson code |

| 7 (three-error correcting) | perfect binary Golay code |

| 8 (TECFED) | extended binary Golay code |

- AN codes

- BCH code, which can be designed to correct any arbitrary number of errors per code block.

- Barker code used for radar, telemetry, ultra sound, Wifi, DSSS mobile phone networks, GPS etc.

- Berger code

- Constant-weight code

- Convolutional code

- Expander codes

- Group codes

- Golay codes, of which the Binary Golay code is of practical interest

- Goppa code, used in the McEliece cryptosystem

- Hadamard code

- Hagelbarger code

- Hamming code

- Latin square based code for non-white noise (prevalent for example in broadband over powerlines)

- Lexicographic code

- Linear Network Coding, a type of erasure correcting code across networks instead of point-to-point links

- Long code

- Low-density parity-check code, also known as Gallager code, as the archetype for sparse graph codes

- LT code, which is a near-optimal rateless erasure correcting code (Fountain code)

- m of n codes

- Nordstrom-Robinson code, used in Geometry and Group Theory[25]

- Online code, a near-optimal rateless erasure correcting code

- Polar code (coding theory)

- Raptor code, a near-optimal rateless erasure correcting code

- Reed–Solomon error correction

- Reed–Muller code

- Repeat-accumulate code

- Repetition codes, such as Triple modular redundancy

- Spinal code, a rateless, nonlinear code based on pseudo-random hash functions[26]

- Tornado code, a near-optimal erasure correcting code, and the precursor to Fountain codes

- Turbo code

- Walsh–Hadamard code

- Cyclic redundancy checks (CRCs) can correct 1-bit errors for messages at most

bits long for optimal generator polynomials of degree

, see Mathematics of cyclic redundancy checks#Bitfilters

See also[edit]

- Code rate

- Erasure codes

- Soft-decision decoder

- Burst error-correcting code

- Error detection and correction

- Error-correcting codes with feedback

References[edit]

- ^ Charles Wang; Dean Sklar; Diana Johnson (Winter 2001–2002). «Forward Error-Correction Coding». Crosslink. The Aerospace Corporation. 3 (1). Archived from the original on 14 March 2012. Retrieved 5 March 2006.

- ^ Charles Wang; Dean Sklar; Diana Johnson (Winter 2001–2002). «Forward Error-Correction Coding». Crosslink. The Aerospace Corporation. 3 (1). Archived from the original on 14 March 2012. Retrieved 5 March 2006.

How Forward Error-Correcting Codes Work]

- ^ a b Maunder, Robert (2016). «Overview of Channel Coding».

- ^ Glover, Neal; Dudley, Trent (1990). Practical Error Correction Design For Engineers (Revision 1.1, 2nd ed.). CO, USA: Cirrus Logic. ISBN 0-927239-00-0.

- ^ a b Hamming, Richard Wesley (April 1950). «Error Detecting and Error Correcting Codes». Bell System Technical Journal. USA: AT&T. 29 (2): 147–160. doi:10.1002/j.1538-7305.1950.tb00463.x. S2CID 61141773.

- ^ «Hamming codes for NAND flash memory devices» Archived 21 August 2016 at the Wayback Machine. EE Times-Asia. Apparently based on «Micron Technical Note TN-29-08: Hamming Codes for NAND Flash Memory Devices». 2005. Both say: «The Hamming algorithm is an industry-accepted method for error detection and correction in many SLC NAND flash-based applications.»

- ^ a b «What Types of ECC Should Be Used on Flash Memory?» (Application note). Spansion. 2011.

Both Reed–Solomon algorithm and BCH algorithm are common ECC choices for MLC NAND flash. … Hamming based block codes are the most commonly used ECC for SLC…. both Reed–Solomon and BCH are able to handle multiple errors and are widely used on MLC flash.

- ^ Jim Cooke (August 2007). «The Inconvenient Truths of NAND Flash Memory» (PDF). p. 28.

For SLC, a code with a correction threshold of 1 is sufficient. t=4 required … for MLC.

- ^ Baldi, M.; Chiaraluce, F. (2008). «A Simple Scheme for Belief Propagation Decoding of BCH and RS Codes in Multimedia Transmissions». International Journal of Digital Multimedia Broadcasting. 2008: 1–12. doi:10.1155/2008/957846.

- ^ Shah, Gaurav; Molina, Andres; Blaze, Matt (2006). «Keyboards and covert channels». USENIX. Retrieved 20 December 2018.

- ^ Tse, David; Viswanath, Pramod (2005), Fundamentals of Wireless Communication, Cambridge University Press, UK

- ^ Shannon, C. E. (1948). «A mathematical theory of communication» (PDF). Bell System Technical Journal. 27 (3–4): 379–423 & 623–656. doi:10.1002/j.1538-7305.1948.tb01338.x. hdl:11858/00-001M-0000-002C-4314-2.

- ^ Rosas, F.; Brante, G.; Souza, R. D.; Oberli, C. (2014). «Optimizing the code rate for achieving energy-efficient wireless communications». Proceedings of the IEEE Wireless Communications and Networking Conference (WCNC). pp. 775–780. doi:10.1109/WCNC.2014.6952166. ISBN 978-1-4799-3083-8.

- ^ IEEE Standard, section 20.3.11.6 «802.11n-2009» Archived 3 February 2013 at the Wayback Machine, IEEE, 29 October 2009, accessed 21 March 2011.

- ^ a b Vucetic, B.; Yuan, J. (2000). Turbo codes: principles and applications. Springer Verlag. ISBN 978-0-7923-7868-6.

- ^ Luby, Michael; Mitzenmacher, M.; Shokrollahi, A.; Spielman, D.; Stemann, V. (1997). «Practical Loss-Resilient Codes». Proc. 29th Annual Association for Computing Machinery (ACM) Symposium on Theory of Computation.

- ^ «Digital Video Broadcast (DVB); Second generation framing structure, channel coding and modulation systems for Broadcasting, Interactive Services, News Gathering and other satellite broadband applications (DVB-S2)». En 302 307. ETSI (V1.2.1). April 2009.

- ^ Andrews, K. S.; Divsalar, D.; Dolinar, S.; Hamkins, J.; Jones, C. R.; Pollara, F. (November 2007). «The Development of Turbo and LDPC Codes for Deep-Space Applications». Proceedings of the IEEE. 95 (11): 2142–2156. doi:10.1109/JPROC.2007.905132. S2CID 9289140.

- ^ Dolinar, S.; Divsalar, D. (15 August 1995). «Weight Distributions for Turbo Codes Using Random and Nonrandom Permutations». TDA Progress Report. 122: 42–122. Bibcode:1995TDAPR.122…56D. CiteSeerX 10.1.1.105.6640.

- ^ Takeshita, Oscar (2006). «Permutation Polynomial Interleavers: An Algebraic-Geometric Perspective». IEEE Transactions on Information Theory. 53 (6): 2116–2132. arXiv:cs/0601048. Bibcode:2006cs……..1048T. doi:10.1109/TIT.2007.896870. S2CID 660.

- ^ 3GPP TS 36.212, version 8.8.0, page 14

- ^ «Digital Video Broadcast (DVB); Frame structure, channel coding and modulation for a second generation digital terrestrial television broadcasting system (DVB-T2)». En 302 755. ETSI (V1.1.1). September 2009.

- ^ Techie (3 June 2010). «Explaining Interleaving». W3 Techie Blog. Retrieved 3 June 2010.

- ^ Krastanov, Stefan; Jiang, Liang (8 September 2017). «Deep Neural Network Probabilistic Decoder for Stabilizer Codes». Scientific Reports. 7 (1): 11003. arXiv:1705.09334. Bibcode:2017NatSR…711003K. doi:10.1038/s41598-017-11266-1. PMC 5591216. PMID 28887480.

- ^ Nordstrom, A.W.; Robinson, J.P. (1967), «An optimum nonlinear code», Information and Control, 11 (5–6): 613–616, doi:10.1016/S0019-9958(67)90835-2

- ^ Perry, Jonathan; Balakrishnan, Hari; Shah, Devavrat (2011). «Rateless Spinal Codes». Proceedings of the 10th ACM Workshop on Hot Topics in Networks. pp. 1–6. doi:10.1145/2070562.2070568. hdl:1721.1/79676. ISBN 9781450310598.

Further reading[edit]

- MacWilliams, Florence Jessiem; Sloane, Neil James Alexander (2007) [1977]. Written at AT&T Shannon Labs, Florham Park, New Jersey, USA. The Theory of Error-Correcting Codes. North-Holland Mathematical Library. Vol. 16 (digital print of 12th impression, 1st ed.). Amsterdam / London / New York / Tokyo: North-Holland / Elsevier BV. ISBN 978-0-444-85193-2. LCCN 76-41296. (xxii+762+6 pages)

- Clark, Jr., George C.; Cain, J. Bibb (1981). Error-Correction Coding for Digital Communications. New York, USA: Plenum Press. ISBN 0-306-40615-2.

- Arazi, Benjamin (1987). Swetman, Herb (ed.). A Commonsense Approach to the Theory of Error Correcting Codes. MIT Press Series in Computer Systems. Vol. 10 (1 ed.). Cambridge, Massachusetts, USA / London, UK: Massachusetts Institute of Technology. ISBN 0-262-01098-4. LCCN 87-21889. (x+2+208+4 pages)

- Wicker, Stephen B. (1995). Error Control Systems for Digital Communication and Storage. Englewood Cliffs, New Jersey, USA: Prentice-Hall. ISBN 0-13-200809-2.

- Wilson, Stephen G. (1996). Digital Modulation and Coding. Englewood Cliffs, New Jersey, USA: Prentice-Hall. ISBN 0-13-210071-1.

- «Error Correction Code in Single Level Cell NAND Flash memories» 2007-02-16

- «Error Correction Code in NAND Flash memories» 2004-11-29

- Observations on Errors, Corrections, & Trust of Dependent Systems, by James Hamilton, 2012-02-26

- Sphere Packings, Lattices and Groups, By J. H. Conway, Neil James Alexander Sloane, Springer Science & Business Media, 2013-03-09 – Mathematics – 682 pages.

External links[edit]

- Morelos-Zaragoza, Robert (2004). «The Correcting Codes (ECC) Page». Retrieved 5 March 2006.

- lpdec: library for LP decoding and related things (Python)

Forward Error Correction (FEC) is a technique used to minimize errors in data transmission over communication channels. In real-time multimedia transmission, re-transmission of corrupted and lost packets is not useful because it creates an unacceptable delay in reproducing : one needs to wait until the lost or corrupted packet is resent. Thus, there must be some technique which could correct the error or reproduce the packet immediately and give the receiver the ability to correct errors without needing a reverse channel to request re-transmission of data. There are various FEC techniques designed for this purpose.

These are as follows :

1. Using Hamming Distance :

For error correction, the minimum hamming distance required to correct t errors is:

For example, if 20 errors are to be corrected then the minimum hamming distance has to be 2*20+1= 41 bits. This means, lots of redundant bits need to be sent with the data. This technique is very rarely used as we have large amount of data to be sent over the networks, and such a high redundancy cannot be afforded most of the time.

2. Using XOR :

The exclusive OR technique is quite useful as the data items can be recreated by this technique. The XOR property is used as follows –

If the XOR property is applied on N data items, we can recreate any of the data items P1 to PN by exclusive-Oring all of the items, replacing the one to be created by the result of the previous operation(R). In this technique, a packet is divided into N chunks, and then the exclusive OR of all the chunks is created and then, N+1 chunks are sent. If any chunk is lost or corrupted, it can be recreated at the receiver side.

Practically, if N=4, it means that 25 percent extra data has to be sent and the data can be corrected if only one out of the four chunks is lost.

3. Chunk Interleaving :

In this technique, each data packet is divided into chunks. The data is then created chunk by chunk(horizontally) but the chunks are combined into packets vertically. This is done because by doing so, each packet sent carries a chunk from several original packets. If the packet is lost, we miss only one chunk in each packet, which is normally acceptable in multimedia communication. Some small chunks are allowed to be missing at the receiver. One chunk can be afforded to be missing in each packet as all the chunks from the same packet cannot be allowed to miss.

4.3. Метод коррекции ошибок FEC (Forward Error Correction)

Транспортировка данных подвержена влиянию шумов и наводок, которые вносят искажения. Если вероятность повреждения данных мала, достаточно зарегистрировать сам факт искажения и повторить передачу поврежденного фрагмента.

Когда вероятность искажения велика, например, в каналах коммуникаций с геостационарными спутниками, используются методы коррекции ошибок. Одним из таких методов является FEC (Forward Error Correction, иногда называемое канальным кодированием ) [4.1]. Технология FEC в последнее время достаточно широко используется в беспроводных локальных сетях (WLAN). Существуют две основные разновидности FEC: блочное кодирование и кодирование по методу свертки.

Блочное кодирование работает с блоками (пакетами) бит или символов фиксированного размера. Метод свертки работает с потоками бит или символов произвольной протяженности. Коды свертки при желании могут быть преобразованы в блочные коды.

Существует большое число блочных кодов, одним из наиболее важных является алгоритм Рида-Соломона, который используется при работе с CD, DVD и жесткими дисками ЭВМ. Блочные коды и коды свертки могут использоваться и совместно.

Для FEC -кодирования иногда используется метод сверки, который впервые был применен в 1955 году. Главной особенностью этого метода является сильная зависимость кодирования от предыдущих информационных битов и высокие требования к объему памяти. FEC -код обычно просматривает при декодировании 2-8 бит десятки или даже сотни бит, полученных ранее.

В 1967 году Эндрю Витерби (Andrew Viterbi) разработал технику декодирования, которая стала стандартной для кодов свертки. Эта методика требовала меньше памяти. Метод свертки более эффективен, когда ошибки распределены случайным образом, а не группируются в кластеры. Работа же с кластерами ошибок более эффективна при использовании алгебраического кодирования.

Одной из широко применяемых разновидностей коррекции ошибок является турбо-кодирование, разработанное американской аэрокосмической корпорацией. В этой схеме комбинируется два или более относительно простых кодов свертки. В FEC, так же как и в других методах коррекции ошибок (коды Хэмминга, алгоритм Рида-Соломона и др.), блоки данных из k бит снабжаются кодами четности, которые пересылаются вместе с данными и обеспечивают не только детектирование, но и исправление ошибок. Каждый дополнительный (избыточный) бит является сложной функцией многих исходных информационных бит. Исходная информация может содержаться в выходном передаваемом коде, тогда такой код называется систематическим, а может и не содержаться.

В результате через канал передается n -битовое кодовое слово ( n>k ). Конкретная реализация алгоритма FEC характеризуется комбинацией ( n, k ). Применение FEC в Интернете регламентируется документом RFC3452. Коды FEC могут исключить необходимость обратной связи при потере или искажении доставленных данных (запросы повторной передачи). Особенно привлекательна технология FEC при работе с мультикастинг-потоками, где ретрансмиссия не предусматривается (см. RFC-3453).

В 1974 году Йозеф Оденвальдер (Joseph odenwalder) объединил возможности алгебраического кодирования и метода свертки. Хорошего результата можно добиться, введя специальную операцию псевдослучайного перемешивания бит (interleaver).

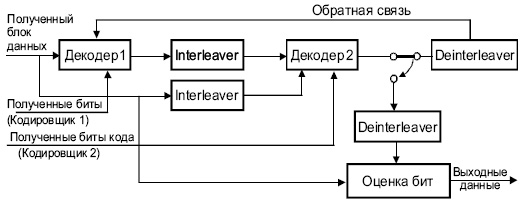

В 1993 году группой Клода Берроу (Claude Berrou) был разработан турбо-код. В кодеке, реализующем этот алгоритм, содержатся кодировщики как минимум двух компонент (реализующие алгебраический метод или свертку). Кодирование осуществляется для блоков данных. Здесь также используется псевдослучайное перемешивание бит перед передачей. Это приводит к тому, что кластеры ошибок, внесенных при транспортировке, оказываются разнесенными случайным образом в пределах блока данных.

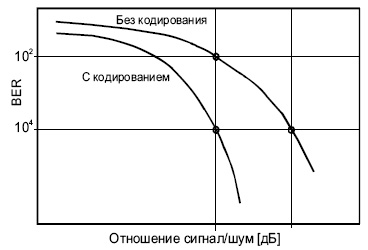

На

рис.

4.8 проводится сравнение вариантов BER (Bit Error Rate) при обычной транспортировке данных через канал и при передаче тех же данных с использованием коррекции ошибок FEC для разных значений отношения сигнал-шум ( S/N ). Из этих данных видно, что при отношении S/N= 8 дБ применение FEC позволяет понизить BER примерно в 100 раз. При этом достигается результат, близкий (в пределах одного децибела) к теоретическому пределу Шеннона.

За последние пять лет были разработаны программы, которые позволяют оптимизировать структуры турбо-кодов. Улучшение BER для турбокодов имеет асимптотический предел, и дальнейшее увеличение S/N уже не дает никакого выигрыша. Но схемы, позволяющие смягчить влияние этого насыщения, продолжают разрабатываться.

Рис.

4.8.

Турбо-кодек должен иметь столько же компонентных декодеров, сколько имеется кодировщиков на стороне передатчика. Декодеры соединяются последовательно.

Рис.

4.9.

Турбо-декодер

Техника FEC находит все большее применение в телекоммуникациях, например, при передачи мультимедиа [2].

Следует помнить, что, как в случае FEC , так и в других известных методах коррекции ошибок ( BCH , Golay, Hamming и др.) скорректированный код является верным лишь с определенной конечной вероятностью.

This is the approved revision of this page, as well as being the most recent.

In telecommunication, information theory, and coding theory, forward error correction (FEC) or channel coding is a technique used for controlling errors in data transmission over unreliable or noisy communication channels.

The central idea is the sender encodes the message in a redundant way by using an error-correcting code (ECC).

The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code.

The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. FEC gives the receiver the ability to correct errors without needing a reverse channel to request retransmission of data, but at the cost of a fixed, higher forward channel bandwidth. FEC is therefore applied in situations where retransmissions are costly or impossible, such as one-way communication links and when transmitting to multiple receivers in multicast. FEC information is usually added to mass storage devices to enable recovery of corrupted data, is widely used in modems, and is used on systems where the primary memory is ECC memory.

FEC processing in a receiver may be applied to a digital bit stream or in the demodulation of a digitally modulated carrier. For the latter, FEC is an integral part of the initial analog-to-digital conversion in the receiver. The Viterbi decoder implements a soft-decision algorithm to demodulate digital data from an analog signal corrupted by noise. Many FEC coders can also generate a bit-error rate (BER) signal which can be used as feedback to fine-tune the analog receiving electronics.

The noisy-channel coding theorem establishes bounds on the theoretical maximum information transfer rate of a channel with some given noise level.

Some advanced FEC systems come very close to the theoretical maximum.

The maximum fractions of errors or of missing bits that can be corrected is determined by the design of the FEC code, so different forward error correcting codes are suitable for different conditions.

Contents

- 1 How it works

- 2 Averaging noise to reduce errors

- 3 Types of FEC

- 4 Concatenated FEC codes for improved performance

- 5 Low-density parity-check (LDPC)

- 6 Turbo codes

- 7 Local decoding and testing of codes

-

8 Interleaving

- 8.1 Example

- 8.2 Disadvantages of interleaving

- 9 Software for error-correcting codes

- 10 List of error-correcting codes

- 11 See also

- 12 Source

How it works[edit]

FEC is accomplished by adding redundancy to the transmitted information using an algorithm. A redundant bit may be a complex function of many original information bits. The original information may or may not appear literally in the encoded output; codes that include the unmodified input in the output are systematic, while those that do not are non-systematic.

A simplistic example of FEC is to transmit each data bit 3 times, which is known as a (3,1) repetition code. Through a noisy channel, a receiver might see 8 versions of the output, see table below.

| Triplet received | Interpreted as |

|---|---|

| 000 | 0 (error free) |

| 001 | 0 |

| 010 | 0 |

| 100 | 0 |

| 111 | 1 (error free) |

| 110 | 1 |

| 101 | 1 |

| 011 | 1 |

This allows an error in any one of the three samples to be corrected by «majority vote» or «democratic voting». The correcting ability of this FEC is:

- Up to 1 bit of triplet in error, or

- up to 2 bits of triplet omitted (cases not shown in table).

Though simple to implement and widely used, this triple modular redundancy is a relatively inefficient FEC. Better FEC codes typically examine the last several dozen, or even the last several hundred, previously received bits to determine how to decode the current small handful of bits (typically in groups of 2 to 8 bits).

Averaging noise to reduce errors[edit]

FEC could be said to work by «averaging noise»; since each data bit affects many transmitted symbols, the corruption of some symbols by noise usually allows the original user data to be extracted from the other, uncorrupted received symbols that also depend on the same user data.

- Because of this «risk-pooling» effect, digital communication systems that use FEC tend to work well above a certain minimum signal-to-noise ratio and not at all below it.

- This all-or-nothing tendency — the cliff effect — becomes more pronounced as stronger codes are used that more closely approach the theoretical Shannon limit.

- Interleaving FEC coded data can reduce the all or nothing properties of transmitted FEC codes when the channel errors tend to occur in bursts. However, this method has limits; it is best used on narrowband data.

Most telecommunication systems use a fixed channel code designed to tolerate the expected worst-case bit error rate, and then fail to work at all if the bit error rate is ever worse.

However, some systems adapt to the given channel error conditions: some instances of hybrid automatic repeat-request use a fixed FEC method as long as the FEC can handle the error rate, then switch to ARQ when the error rate gets too high;

adaptive modulation and coding uses a variety of FEC rates, adding more error-correction bits per packet when there are higher error rates in the channel, or taking them out when they are not needed.

Types of FEC[edit]

The two main categories of FEC codes are block codes and convolutional codes.

- Block codes work on fixed-size blocks (packets) of bits or symbols of predetermined size. Practical block codes can generally be hard-decoded in polynomial time to their block length.

- Convolutional codes work on bit or symbol streams of arbitrary length. They are most often soft decoded with the Viterbi algorithm, though other algorithms are sometimes used. Viterbi decoding allows asymptotically optimal decoding efficiency with increasing constraint length of the convolutional code, but at the expense of exponentially increasing complexity. A convolutional code that is terminated is also a ‘block code’ in that it encodes a block of input data, but the block size of a convolutional code is generally arbitrary, while block codes have a fixed size dictated by their algebraic characteristics. Types of termination for convolutional codes include «tail-biting» and «bit-flushing».

There are many types of block codes, but among the classical ones the most notable is Reed-Solomon coding because of its widespread use in compact discs, DVDs, and hard disk drives. Other examples of classical block codes include Golay, BCH, Multidimensional parity, and Hamming codes.

Hamming ECC is commonly used to correct NAND flash memory errors.

This provides single-bit error correction and 2-bit error detection.

Hamming codes are only suitable for more reliable single level cell (SLC) NAND.

Denser multi level cell (MLC) NAND requires stronger multi-bit correcting ECC such as BCH or Reed–Solomon.

NOR Flash typically does not use any error correction. which means that for every input and output signal a hard decision is made whether it corresponds to a one or a zero bit. In contrast, convolutional codes are typically decoded using soft-decision algorithms like the Viterbi, MAP or BCJR algorithms, which process (discretized) analog signals, and which allow for much higher error-correction performance than hard-decision decoding.

Nearly all classical block codes apply the algebraic properties of finite fields. Hence classical block codes are often referred to as algebraic codes.

In contrast to classical block codes that often specify an error-detecting or error-correcting ability, many modern block codes such as LDPC codes lack such guarantees. Instead, modern codes are evaluated in terms of their bit error rates.

Most forward error correction codes correct only bit-flips, but not bit-insertions or bit-deletions.

In this setting, the Hamming distance is the appropriate way to measure the bit error rate.

A few forward error correction codes are designed to correct bit-insertions and bit-deletions, such as Marker Codes and Watermark Codes.

The Levenshtein distance is a more appropriate way to measure the bit error rate when using such codes.

Concatenated FEC codes for improved performance[edit]

Classical (algebraic) block codes and convolutional codes are frequently combined in concatenated coding schemes in which a short constraint-length Viterbi-decoded convolutional code does most of the work and a block code (usually Reed-Solomon) with larger symbol size and block length «mops up» any errors made by the convolutional decoder. Single pass decoding with this family of error correction codes can yield very low error rates, but for long range transmission conditions (like deep space) iterative decoding is recommended.

Concatenated codes have been standard practice in satellite and deep space communications since Voyager 2 first used the technique in its 1986 encounter with Uranus. The Galileo craft used iterative concatenated codes to compensate for the very high error rate conditions caused by having a failed antenna.

Low-density parity-check (LDPC)[edit]

Low-density parity-check (LDPC) codes are a class of recently re-discovered highly efficient linear block

codes made from many single parity check (SPC) codes. They can provide performance very close to the channel capacity (the theoretical maximum) using an iterated soft-decision decoding approach, at linear time complexity in terms of their block length. Practical implementations rely heavily on decoding the constituent SPC codes in parallel.

LDPC codes were first introduced by Robert G. Gallager in his PhD thesis in 1960,

but due to the computational effort in implementing encoder and decoder and the introduction of Reed–Solomon codes,

they were mostly ignored until recently.

LDPC codes are now used in many recent high-speed communication standards, such as DVB-S2 (Digital video broadcasting), WiMAX (IEEE 802.16e standard for microwave communications), High-Speed Wireless LAN (IEEE 802.11n), 10GBase-T Ethernet (802.3an) and G.hn/G.9960 (ITU-T Standard for networking over power lines, phone lines and coaxial cable). Other LDPC codes are standardized for wireless communication standards within 3GPP MBMS (see fountain codes).

Turbo codes[edit]

Turbo coding is an iterated soft-decoding scheme that combines two or more relatively simple convolutional codes and an interleaver to produce a block code that can perform to within a fraction of a decibel of the Shannon limit. Predating LDPC codes in terms of practical application, they now provide similar performance.

One of the earliest commercial applications of turbo coding was the CDMA2000 1x (TIA IS-2000) digital cellular technology developed by Qualcomm and sold by Verizon Wireless, Sprint, and other carriers. It is also used for the evolution of CDMA2000 1x specifically for Internet access, 1xEV-DO (TIA IS-856). Like 1x, EV-DO was developed by Qualcomm, and is sold by Verizon Wireless, Sprint, and other carriers (Verizon’s marketing name for 1xEV-DO is Broadband Access, Sprint’s consumer and business marketing names for 1xEV-DO are Power Vision and Mobile Broadband, respectively).

Local decoding and testing of codes[edit]

Sometimes it is only necessary to decode single bits of the message, or to check whether a given signal is a codeword, and do so without looking at the entire signal. This can make sense in a streaming setting, where codewords are too large to be classically decoded fast enough and where only a few bits of the message are of interest for now. Also such codes have become an important tool in computational complexity theory, e.g., for the design of probabilistically checkable proofs.

Locally decodable codes are error-correcting codes for which single bits of the message can be probabilistically recovered by only looking at a small (say constant) number of positions of a codeword, even after the codeword has been corrupted at some constant fraction of positions. Locally testable codes are error-correcting codes for which it can be checked probabilistically whether a signal is close to a codeword by only looking at a small number of positions of the signal.

Interleaving[edit]

Interleaving is frequently used in digital communication and storage systems to improve the performance of forward error correcting codes. Many communication channels are not memoryless: errors typically occur in bursts rather than independently. If the number of errors within a code word exceeds the error-correcting code’s capability, it fails to recover the original code word. Interleaving ameliorates this problem by shuffling source symbols across several code words, thereby creating a more uniform distribution of errors. Therefore, interleaving is widely used for burst error-correction.

The analysis of modern iterated codes, like turbo codes and LDPC codes, typically assumes an independent distribution of errors. Systems using LDPC codes therefore typically employ additional interleaving across the symbols within a code word.

For turbo codes, an interleaver is an integral component and its proper design is crucial for good performance. The iterative decoding algorithm works best when there are not short cycles in the factor graph that represents the decoder; the interleaver is chosen to avoid short cycles.

Interleaver designs include:

- rectangular (or uniform) interleavers (similar to the method using skip factors described above)

- convolutional interleavers

- random interleavers (where the interleaver is a known random permutation)

- S-random interleaver (where the interleaver is a known random permutation with the constraint that no input symbols within distance S appear within a distance of S in the output).

- Another possible construction is a contention-free quadratic permutation polynomial (QPP). It is used for example in the 3GPP Long Term Evolution mobile telecommunication standard.

In multi-carrier communication systems, interleaving across carriers may be employed to provide frequency diversity, e.g., to mitigate frequency-selective fading or narrowband interference.

Example[edit]

Transmission without interleaving:

Error-free message: aaaabbbbccccddddeeeeffffgggg Transmission with a burst error: aaaabbbbccc____deeeeffffgggg

Here, each group of the same letter represents a 4-bit one-bit error-correcting codeword. The codeword cccc is altered in one bit and can be corrected, but the codeword dddd is altered in three bits, so either it cannot be decoded at all or it might be decoded incorrectly.

With interleaving:

Error-free code words: aaaabbbbccccddddeeeeffffgggg Interleaved: abcdefgabcdefgabcdefgabcdefg Transmission with a burst error: abcdefgabcd____bcdefgabcdefg Received code words after deinterleaving: aa_abbbbccccdddde_eef_ffg_gg

In each of the codewords aaaa, eeee, ffff, gggg, only one bit is altered, so one-bit error-correcting code will decode everything correctly.

Transmission without interleaving:

Original transmitted sentence: ThisIsAnExampleOfInterleaving Received sentence with a burst error: ThisIs______pleOfInterleaving

The term «AnExample» ends up mostly unintelligible and difficult to correct.

With interleaving:

Transmitted sentence: ThisIsAnExampleOfInterleaving... Error-free transmission: TIEpfeaghsxlIrv.iAaenli.snmOten. Received sentence with a burst error: TIEpfe______Irv.iAaenli.snmOten. Received sentence after deinterleaving: T_isI_AnE_amp_eOfInterle_vin_...

No word is completely lost and the missing letters can be recovered with minimal guesswork.

Disadvantages of interleaving[edit]

Use of interleaving techniques increases total delay. This is because the entire interleaved block must be received before the packets can be decoded. Also interleavers hide the structure of errors; without an interleaver, more advanced decoding algorithms can take advantage of the error structure and achieve more reliable communication than a simpler decoder combined with an interleaver.

Software for error-correcting codes[edit]

Simulating the behaviour of error-correcting codes (ECCs) in software is a common practice to design, validate and improve ECCs. The upcoming wireless 5G standard raises a new range of applications for the software ECCs: the Cloud Radio Access Networks (C-RAN) in a Software-defined radio (SDR) context. The idea is to directly use software ECCs in the communications. For instance in the 5G, the software ECCs could be located in the cloud and the antennas connected to this computing resources: improving this way the flexibility of the communication network and eventually increasing the energy efficiency of the system.

In this context, there are various available Open-source software listed below (non exhaustive).

- AFF3CT(A Fast Forward Error Correction Tool): a full communication chain in C++ (many supported codes like Turbo, LDPC, Polar codes, etc.), very fast and specialized on channel coding (can be used as a program for simulations or as a library for the SDR).

- IT++: a C++ library of classes and functions for linear algebra, numerical optimization, signal processing, communications, and statistics.

- OpenAir: implementation (in C) of the 3GPP specifications concerning the Evolved Packet Core Networks.

List of error-correcting codes[edit]

| Distance | Code |

|---|---|

| 2 (single-error detecting) | Parity |

| 3 (single-error correcting) | Triple modular redundancy |

| 3 (single-error correcting) | perfect Hamming such as Hamming(7,4) |

| 4 (SECDED) | Extended Hamming |

| 5 (double-error correcting) | |

| 6 (double-error correct-/triple error detect) | |

| 7 (three-error correcting) | perfect binary Golay code |

| 8 (TECFED) | extended binary Golay code |

<!—it would be nice to have some categorization of codes, e.g. into linear codes, cyclic codes, etc.—>

- AN codes

- BCH code, which can be designed to correct any arbitrary number of errors per code block.

- Berger code

- Constant-weight code

- Convolutional code

- Expander codes

- Group codes

- Golay codes, of which the Binary Golay code is of practical interest

- Goppa code, used in the McEliece cryptosystem

- Hadamard code

- Hagelbarger code

- Hamming code

- Latin square based code for non-white noise (prevalent for example in broadband over powerlines)

- Lexicographic code

- Long code

- Low-density parity-check code, also known as Gallager code, as the archetype for sparse graph codes

- LT code, which is a near-optimal rateless erasure correcting code (Fountain code)

- m of n codes

- Online code, a near-optimal rateless erasure correcting code

- Polar code (coding theory)

- Raptor code, a near-optimal rateless erasure correcting code

- Reed–Solomon error correction

- Reed–Muller code

- Repeat-accumulate code

- Repetition codes, such as Triple modular redundancy

- Spinal code, a rateless, nonlinear code based on pseudo-random hash functions

- Tornado code, a near-optimal erasure correcting code, and the precursor to Fountain codes

- Turbo code

- Walsh–Hadamard code

- Cyclic redundancy checks (CRCs) can correct 1-bit errors for messages at most <math>2^{n-1}-1</math> bits long for optimal generator polynomials of degree <math>n</math>, see Mathematics of cyclic redundancy checks#Bitfilters

See Also on BitcoinWiki[edit]

- Code rate

- Erasure codes

- Soft-decision decoder

- Error detection and correction

- Error-correcting codes with feedback

- Burst error-correcting code

Source[edit]

http://wikipedia.org/

High-capacity long-haul optical fiber transmission

Xiang Liu, in Optical Communications in the 5G Era, 2022

8.4.4 Capacity-approaching FEC

Forward-error correction is an important technology to enable a communication link to approach the Shannon limit. The use of FEC in optical fiber communication links has gone through three generations [94]

- •

-

The first generation of FEC codes appeared in the 1987–93 period, and the representative FEC code is Reed–Solomon (RS) code (255,239) with a FEC overhead of 6.7% and a net coding gain (NCG) of 5.8 dB at an output BER of 10−15.

- •

-

The second generation of FEC codes in the 2000–04 period, and the representative FEC codes are the concatenated codes, showing an NCG of 9.4 dB at an FEC overhead of ~25%.

- •

-

The third generation of FEC codes started to be adopted in real systems around 2006, and the representative FEC codes are SD decoding enabled low-density parity check (LDPC) codes, turbo codes, etc., showing an NCG of over 10 dB at an FEC overhead of between ~15% and ~25%. The key feature of the third generation of FEC codes is the use of SD decoding [94].

A key performance indicator of FEC is its NCG, which is defined as

(8.27)NCGdB=10log10SNRBERout−10log10SNRBERin+10log10(R)

where BERin is the maximally allowed input BER to the FEC to achieve a reference output BER of BERout, SNR(x) is the SNR needed for a given modulation format to reach a BER of x without coding, and R is the FEC code rate. For BPSK modulation format, we have

(8.28)SNRBPSKBER=erfc−1(2BER)

where erfc−1() is the inverse complementary error function. For QPSK modulation format, we have

(8.29)SNRQPSKBER=2erfc−1(2BER)

For 16-QAM modulation format, we have

(8.30)SNR16QAMBER=10erfc−1(83BER)

For high-speed transmission based on 16-QAM, multiple high-performance FEC codes have been studied. Table 8.1 shows some of the FEC codes and their performances. The first code is the concentrated FEC (CFEC) code adopted by the Optical Internetworking Forum (OIF) for 400ZR [95,96]. Its required BERin for a BERout of 10−15 is 1.22×10−2, and its code rate is 0.871, leading to an NCG of 11.76 dB. The second code is an enhanced version of CFEC, named as CFEC+, which offers an increased NCG of 11.45 dB [97]. The third code is referred to as open FEC (OFEC), which was adopted by the Open ROADM Multisource Agreement (MSA) and was proposed to the ITU project on 200 G/400 G FlexO-LR for 450 km black link applications [98]. The OFEC offers a further increased NCG of 11.6 dB.

Table 8.1. High-performance FEC codes used for 16-QAM based high-speed transmission.

| FEC code | BERin (SNRin) for BERout=10−15* | Code rate | NCG |

|---|---|---|---|

| CFEC [95,96] | 1.22×10−2 (13.6 dB) | 0.871 | 10.76 dB |

| CFEC+ [97] | 1.81×10−2 (12.9 dB) | 0.871 | 11.45 dB |

| OFEC [98] | 1.98×10−2 (12.7 dB) | 0.867 | 11.60 dB |

| A 20%-OH LDPC [57] | ~2.76×10−2 (12.0 dB) | 0.833 | 12.12 dB |

| A 25%-OH LDPC [99] | ~3.45×10−2 (11.5 dB) | ~0.8 | ~12.5 dB |

FEC, forward-error correction; BER, bit error ratio; SNR, signal-to-noise ratio; NCG, net coding gain; LDPC, low-density parity check.

- *

- At the reference BERout of 10−15, the corresponding SNR for 16-QAM is ~24.95 dB.

In a recent 800-Gb/second-per-wavelength demonstration, a 20% overhead (OH) LDPC code was used, and a high NCG of 12.12 dB was achieved [57]. Finally, a 25%-OH FEC was demonstrated in real-time 200-Gb/second coherent transceivers, achieving a remarkably high NCG of 12.5 dB [99]. Similar performance has also been shown in the 800-Gb/second demonstration when the LDPC FEC OH was increased to 25% [57].

It is worthwhile to compare the above FEC performances with the theoretical limit. The ultimate NCG can be derived from the Shannon’s capacity theorem. The channel capacity C of a binary symmetric channel with HD decoding is given as

(8.31)CHD=1+BERin·log2BERin+1−BERin·log21−BERin

where BERin is the input BER threshold and the channel capacity CHD can be set to the FEC code rate R [94]. For a given FEC code rate R, we can calculate BERin. Together with Eqs. (8.27–8.30), we can then calculate the NCG for a given modulation format at a given reference BERout.

For SD decoding, the capacity of a binary symmetric channel, which has two possible inputs X=A and X=−A, can be expressed as [35]

(8.32)CSD=12∫−∞+∞p(y|A)log2p(y|A)p(y)dy+12∫−∞+∞p(y|−A)log2p(y|−A)p(y)dy

where p(y|A) is the conditional probability of getting y at the receiver when the input is A, and p(y) is the probability of receiving y. Similar to the case with HD, for a given FEC code rate R=CSD, we can calculate BERin, from which we can then calculate the NCG for a given modulation format at a given reference BERout. In the idealized case of SD decoding with infinite quantization bits, the NCG obtained by SD decoding is π/2 times as large as (or ~2 dB higher than) that obtained by HD decoding when R approaches zero.

Fig. 8.13 shows the Shannon limits of HD and SD NCGs for BPSK/QPSK, together with some recently demonstrated high-performance FEC codes. For HD decoding, KP4 and staircase FEC [100] are widely used in the optical communication industry. Remarkably, the staircase FEC offers a NCG of 9.41 dB, which is only 0.56 dB away from the HD Shannon limit [100]. For SD decoding, NCGs of 11.6 and 12.25 dB have been achieved with 20% and 33% OHs, respectively [57]. These SD NCGs are only about 1 dB away from the SD Shannon limit.

Figure 8.13. The Shannon limits of HD and SD NCGs for BPSK/QPSK and some recently demonstrated FEC codes [57,96,100]. The reference output BER (BERout) is set at 10−15.

Fig. 8.14 shows the Shannon limits of HD and SD NCGs for 16-QAM, together with some recently demonstrated high-performance FEC codes. The Shannon limits of NCGs for 16-QAM are slightly higher than those for BPSK/QPSK. This can be understood by the slightly flatter BER curve of 16-QAM at high BER values as compared to BPSK/QPSK, as shown in Figure 7.6. For HD decoding, the staircase FEC is again only ~0.6 dB away from the HD Shannon limit. For SD decoding, the CFEC+, OFEC, 20%-OH LDPC, and 25%-OH LDPC described in Table 8.1 are all within 1.4 dB away from the SD Shannon limit. In terms of the absolute NCG, it increases as the OH increases. This offers the flexibility of adjusting the system performance and throughput based on link conditions.

Figure 8.14. The Shannon limits of HD and SD NCGs for 16-QAM and some recently demonstrated FEC codes [57,96–99,100]. The reference output BER (BERout) is set at 10−15.

The above analysis and review have shown the remarkable works done by the optical communication industry in approaching the Shannon limit via advanced FEC coding designs and implementations.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128216279000140

Ultralong-distance undersea transmission systems

Jin-Xing Cai, … Neal S. Bergano, in Optical Fiber Telecommunications VII, 2020

13.2.3.1 Adaptive rate forward error correction

Using multiple FECs with different FEC thresholds in a WDM system can squeeze more capacity than using a single FEC. Ref. [43] designed a family of 52 Spatially-Coupled LDPC codes and studied the gain of using different number of FECs. Capacity increase due to using 8 FECs (with respect to single FEC, both without NLC) is between 15.5% and 21% for transmission distances from 10,200 to 6000 km. Further increase of the number of FECs used does not provide much more gain in capacity, as shown in Fig. 13.24. The shortcoming of this scheme is that the FEC implementation penalty increases for stronger FEC code. For example, the implementation penalty increases from 0.5 to >1 dB when the FEC code rate drops from 0.87 down to 0.52.

Figure 13.24. Net transmitted capacity versus number of forward error corrections (FECs), © [2015] IEEE. Reprinted, with permission, from [43].

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128165027000154

Technique Developments and Market Prospects of Submarine Optical Cable Engineering

In Submarine Optical Cable Engineering, 2018

10.1.2 Development Trends of the Forward Error Correction Technique

FEC facilitates the development of 100 Gbps and super 100 Gbps technology discussed earlier. Soft decision (SD) is the latest evolution used in FEC application. SD FEC is named on the reference of traditional hard decision (HD). FEC decoding from the receiver is the difference between HD and SD. Threshold is the baseline for HD. The input signals will be determined as 0 or 1 arbitrarily. On the other hand, threshold is the reference for SD. The input signals will be speculated, and speculation credibility is provided. SD does not generate decisions but provides inspection and credibility for the further information process and decision-making with an error correction algorithm. The provided credibility will, furthermore, enhance FEC coding gain. Compared with HD, the coding gain of FEC generated from SD will improve 1.5–2.5 dB.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128134757000102

Communicating pictures: delivery across networks

David R. Bull, Fan Zhang, in Intelligent Image and Video Compression (Second Edition), 2021

Cross-packet FEC

If FEC is applied within a packet or appended to individual packets, in cases where packets are not just erroneous but are lost completely during transmission (e.g., over a congested internet connection), the correction capability of the code is lost. Instead, it is beneficial to apply FEC across a number of packets, as shown in Fig. 11.10. One problem with using FEC is that all the k data packets need to be of the same length, which can be an issue if GOB fragmentation is not allowed. The performance of cross-packet FEC for the case of different coding depths (8 and 32) is shown in Fig. 11.11. This clearly demonstrates the compromise between clean channel performance and error resilience for different coding rates.

Figure 11.10. Cross-packet FEC.

Figure 11.11. Performance of cross-packet FEC for a coding depth of 8 (left) and 32 (right) for various coding rates.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128203538000207

Error-Resilience Video Coding Techniques

Mohammed Ebrahim Al-Mualla, … David R. Bull, in Video Coding for Mobile Communications, 2002

9.6.1 Forward Error Correction (FEC)

Forward error correction works by adding redundant bits to a bitstream to help the decoder detect and correct some transmission errors without the need for retransmission. The name forward stems from the fact that the flow of data is always in the forward direction (i.e., from encoder to decoder).

For example, in block codes the transmitted bitstream is divided into blocks of k bits. Each block is then appended with r parity bits to form an n-bit codeword. This is called an (n, k) code.

For example, Annex H of the H.263 standard provides an optional FEC mode. This mode uses a (511,493) BCH (Bose-Chaudhuri-Hocquenghem) code. Blocks of k = 493 bits (consisting of 492 video bits and 1 fill indicator bit) are appended with r = 18 parity bits to form a codeword of n = 511 bits. Use of this mode allows the detection of double-bit errors and the correction of single-bit errors within each block.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780120530793500111

Applications to Communication Systems

Yasuo Hirata, Osamu Yamada, in Essentials of Error-Control Coding Techniques, 1990

6.2.2 Recent Trends in Operational Systems

FEC techniques have been introduced in a variety of satellite communication systems. This section briefly surveys recent trends in FEC application to the operational systems, focusing on the International Telecommunications Satellite Organization (INTELSAT), which has been taking the leading position in the area of commercial satellite communications, and on International Maritime Satellite Organization (INMARSAT), which offers mobile satellite communication service on an international basis.

Table 6.2 summarizes the FEC codes applied to the INTELSAT system. In the INTELSAT system, the double error-correcting self-orthogonal convolutional codes with the code rate of 3/4 and 7/8 are adopted in the single channel per carrier (SCPC) data-transmission system for 48-kbit/sec and 56-kbit/sec user rates. Since the decoder of the self-orthogonal code is simply implemented by using the threshold decoding technique, it has been widely utilized for data transmission in satellite communication systems. In addition to self-orthogonal codes, the (120, 112) modified BCH code with the code rate of 14/15 which is derived from (127, 119) single-error-correcting/double-error-detecting BCH code is also specified in the INTELSAT SCPC system to transmit the voice-band data of higher than 4.8 kbit/sec through the 56-kbit/sec PCM voice channel.

Table 6.2. FEC Codes Applied for INTELSAT System

| Systems | Applied FEC Codes | |

|---|---|---|

| SCPC | 3/4 self-orthogonal code (double-error correction) 7/8 self-orthogonal code (120, 112) modified BCH code | for 48-kbit/sec, 50-kbit/sec data for 56-kbit/sec data for voice-band data transmission above 4.8 kbit/sec |

| TDMA/DSI | (128,112) BCH code | for TDMA data burst (120 Mbit/sec) |

| (24, 12) Golay code | for DSI assignment message | |

| IBS, IDR | 1/2 and 3/4 convolutional coding/soft decision Viterbi decoding (K = 7, punctured) |

In the time division multiple access/digital speech interpolation (TDMA/DSI) system (Pontano et al., 1981), the (128, 112) modified BCH code with the code rate of 7/8, which consists of (127, 112) double-error-correcting/triple-error-detecting BCH code and one dummy bit, is adopted in communication channels. The INTELSAT TDMA system has several tight constraints in selecting the FEC codes to be applied. One of the most significant constraints is that the very high speed data must be transmitted in burst mode. Another requirement is to keep the reduction of channel-utilization efficiency due to applying FEC as low as possible. In view of those requirements, the BCH code mentioned previously has been selected as the standard FEC code (Muratani et al., 1978; Koga et al., 1979, 1980). In addition to that, the (24,12) modified Golay code is applied to the assignment control channel for the DSI system to improve the reliability of assignment message. This modified Golay code is constructed by adding one dummy bit to the (23,12) triple-error-correcting Golay code.

Recently, a new service called INTELSAT Business Services (IBS) has commenced (Lee et al,1983), in which the digital-communication networks can be established among earth stations with small dish antennas. In order to overcome the severe power limitation due to reduction of the antenna size, the soft decision Viterbi decoding for the rate 1/2 or 3/4 convolutional code with the constraint length of 7 is applied, which can offer high coding gain as stated in Section 6.2.1 of Chapter 6. As for the code with a code rate of 3/4, the punctured coding is applied. These FEC codes using Viterbi decoding are also going to be applied to the new data transmission service called intermediate data rate (IDR). Table 6.3, Table 6.4, and Table 6.5 summarize the specifications of FEC codes applied to INTELSAT SCPC, TDMA/DSI, and IBS systems, respectively.

Table 6.3. Specification of FEC Codes Applied to INTELSAT SCPC System

| Data | FEC Code Applied | Generator Polynomial |

|---|---|---|

| 48-kbit/sec | 3/4 self-orthogonal code | g1 = 1 + x3 + x15 + x19 |

| 50-kbit/sec data | (double-error correction) | g2 = 1 + x8 + x17 + x18 |

| (constraint length = 80 bits) | g3 = 1 + x6 + x11 + x13 | |

| 56-kbit/sec data | 7/8 self-orthogonal code | g1 = 1 + x3 + x19 + x42, |

| (constraint length = 384 bits) | g2 = 1 + x21 + x34 + x43 | |

| g3 = 1 + x29 + x33 + x47, g4 = 1 + x25 + x36 + x37, g5 = 1 + x15 + x20 + x46, g6 = 1 + x2 + x8 + x32, g7 = 1 + x7 + x17 + x45 |

||

| Voice-band data transmission above | (120, 112) modified BCH codea | g (x) = (x + 1)(x7 + x3 + 1) = x8 + x7 + x4 + x3 + x + 1 |

| 48 kbit/sec |

- a

- BCH code is applied after 56-kbit/sec PCM encoding. The error-correcting process is inhibited when the double-bit error is detected within a block.

Table 6.4. Specification of FEC Codes Applied to INTELSAT TDMA/DSI System

| Data | FEC Code Applied | Generator Polynomial |

|---|---|---|

| TDMA data burst (120 Mbit/sec) | (128,112) BCH code (d =6, t = 2)a | G(x) = (x + 1)(x14 + x12 + x10 + x6 + x5 + x4 + x3 + x2 + 1) = x15 + x14 + x13 + x12 + x11 + x10 + x7 + x2 + x + 1 |

| DSI assignment message | (24,12) Golay code (d =7, t = 3)b | G(x) = x11 + x9 + x7 + x6 + x5 + x + 1 |

- a

- One dummy bit is added to the (127,112) BCH code

- b

- One dummy bit is added to the (23,12) Golay code.

Table 6.5. Specification of FEC Codes Applied to INTELSAT IBS System

| Data | FEC Code Applieda | Generator Polynomial |

|---|---|---|

| 64 kbit/sec, ∼ 10 Mbit/sec | 1/2, K = 7 convolutional code/Viterbi decoding | g1 = 1 + x2 + x3 + x5 + x6 g2 = 1 + x + x2 + x3 + x6 bit deleting pattern |

| 3/4 punctured code (d = 5)/Viterbi decoding derived from 1/2 code | 110101(1: send0: delete) |

- a

- One dummy bit is added to the (23,12) Golay code.

Furthermore, several new coding schemes are also being studied and partly developed in INTELSAT for future application. A viable coding scheme under development is the coded 8-phase PSK combined with the rate 2/3 convolutional coding-soft decision Viterbi decoding. It is reported that the signal-power requirement can be reduced by around 4 dB compared with the conventional 4-phase PSK applied to TDMA and SCPC systems, while keeping the bandwidth requirement constant (Rhodes et al., 1983; Ungerboeck et al., 1986).

Table 6.6 summarizes the FEC codes applied to the INMARSAT system. In the INMARSAT system, the (63,57) and (63,39) BCH codes are used to detect the bit errors in the access control channels for the Standard-A system. Also, the rate 1/2 convolutional code with constraint length of 7/Viterbi decoding is adopted to the 56-kbit/sec data channel in the ship-to-shore direction. INMARSAT is now planning to introduce a new ship earth station standard called Standard-B system which is based on digital-transmission techniques in the second generation starting in 1991 (Hirata et al, 1984). In order to save transmission power, the Standard-B system is designed based on use of Viterbi decoding for codes similar to the ones employed in the INTELSAT systems. Figures 6.6, 6.7, and 6.8 show the BER performances of self-orthogonal code, BCH code, and soft decision Viterbi decoding, respectively, all of which are already used in INTELSAT and/or INMARSAT systems.

Table 6.6. FEC Codes Applied to INMARSAT System

| Systems | Applied FEC Codes |

|---|---|

| Assignment/request channel | (63,57) BCH code for assignment message |

| (63,39) BCH code for request message | |

| High-speed data transmission (ship-to-shore) | 1/2 conventional coding/soft decision Viterbi decoding (K = 7) for 56-kbit/sec data |

| Digital ship earth station standard (Standard B) | 1/2 and/or 3/4 convolutional coding/soft decision Viterbi decoding (K = 7) for 9.6-kbit/sec data and 16-kbit/sec voice |

Fig. 6.6. BER versus Eb/No performance of self-orthogonal convolutional codes (double error-correcting).

Fig. 6.7. BER versus Eb/No performance of BCH codes applied to INTELSAT TDMA/DSI system.

Fig. 6.8. BER versus Eb/No performance of soft decision Viterbi decoding.