| Error function | |

|---|---|

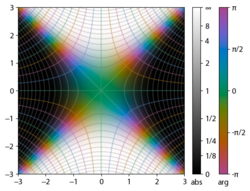

Plot of the error function |

|

| General information | |

| General definition |  |

| Fields of application | Probability, thermodynamics |

| Domain, Codomain and Image | |

| Domain |  |

| Image |  |

| Basic features | |

| Parity | Odd |

| Specific features | |

| Root | 0 |

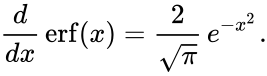

| Derivative |  |

| Antiderivative |  |

| Series definition | |

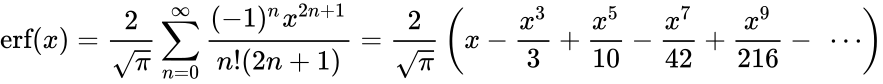

| Taylor series |  |

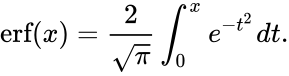

In mathematics, the error function (also called the Gauss error function), often denoted by erf, is a complex function of a complex variable defined as:[1]

- [math]\displaystyle{ \operatorname{erf} z = \frac{2}{\sqrt\pi}\int_0^z e^{-t^2}\,dt. }[/math]

This integral is a special (non-elementary) sigmoid function that occurs often in probability, statistics, and partial differential equations. In many of these applications, the function argument is a real number. If the function argument is real, then the function value is also real.

In statistics, for non-negative values of x, the error function has the following interpretation: for a random variable Y that is normally distributed with mean 0 and standard deviation 1/√2, erf x is the probability that Y falls in the range [−x, x].

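Two closely related functions are the complementary error function (erfc), defined as

- [math]\displaystyle{ \operatorname{erfc} z = 1 - \operatorname{erf} z, }[/math]

and the imaginary error function (erfi), defined as

- [math]\displaystyle{ \operatorname{erfi} z = -i\operatorname{erf} iz, }[/math]

where i is the imaginary unit.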

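Name

The name "error function" and its abbreviation erf were proposed by J. W. L. Glaisher in 1871 on account of its connection with "the theory of Probability, and notably the theory of Errors."[2] The error function complement was also discussed by Glaisher in a separate publication in the same year.[3]

For the "law of facility" of errors whose density is given by

- [math]\displaystyle{ f(x)=\left(\frac{c}{\pi}\right)^\frac12 e^{-cx^2} }[/math]

(the normal distribution), Glaisher calculates the probability of an error lying between p and q as:

- [math]\displaystyle{ \left(\frac{c}{\pi}\right)^\frac12 \int_p^q e^{-cx^2}\,dx = \tfrac12\left(\operatorname{erf} \left(q\sqrt{c}\right) -\operatorname{erf} \left(p\sqrt{c}\right)\right). }[/math]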

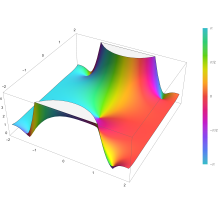

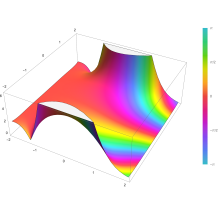

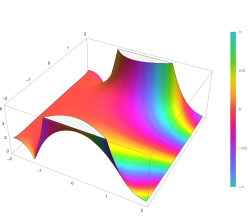

Plot of the error function Erf(z) in the complex plane from -2-2i to 2+2i with colors created with Mathematica 13.1 function ComplexPlot3D

Applications

When the results of a series of measurements are described by a normal distribution with standard deviation σ and expected value 0, then erf(a/(σ√2)) is the probability that the error of a single measurement lies between −a and +a, for positive a. This is useful, for example, in determining the bit error rate of a digital communication system.

The error and complementary error functions occur, for example, in solutions of the heat equation when boundary conditions are given by the Heaviside step function.

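The error function and its approximations can be used to estimate results that hold with high probability or with low probability. Given a random variable X ~ Norm[μ,σ] (a normal distribution with mean μ and standard deviation σ) and a constant L < μ:

- [math]\displaystyle{ \begin{align}
\Pr[X\leq L] &= \frac12 + \frac12\operatorname{erf}\frac{L-\mu}{\sqrt{2}\sigma} \\
&\approx A \exp \left(-B \left(\frac{L-\mu}{\sigma}\right)^2\right) \end{align} }[/math]

where A and B are certain numeric constants. If L is sufficiently far from the mean, specifically μ − L ≥ σ√(ln k), then:

- [math]\displaystyle{ \Pr[X\leq L] \leq A \exp (-B \ln{k}) = \frac{A}{k^B} }[/math]

so the probability goes to 0 as k → ∞.

The probability for X being in the interval [La, Lb] can be derived as

- [math]\displaystyle{ \begin{align}
\Pr[L_a\leq X \leq L_b] &= \int_{L_a}^{L_b} \frac{1}{\sqrt{2\pi}\sigma} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right) \,dx \\
&= \frac12\left(\operatorname{erf}\frac{L_b-\mu}{\sqrt{2}\sigma} - \operatorname{erf}\frac{L_a-\mu}{\sqrt{2}\sigma}\right).\end{align} }[/math]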

Properties[edit]

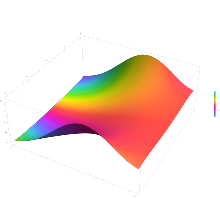

Integrand exp(−z2)

erf z

The property erf (−z) = −erf z means that the error function is an odd function. This directly results from the fact that the integrand e−t2 is an even function (the antiderivative of an even function which is zero at the origin is an odd function and vice versa).

Since the error function is an entire function which takes real numbers to real numbers, for any complex number z:

where z is the complex conjugate of z.

The integrand f = exp(−z2) and f = erf z are shown in the complex z-plane in the figures at right with domain coloring.

The error function at +∞ is exactly 1 (see Gaussian integral). At the real axis, erf z approaches unity at z → +∞ and −1 at z → −∞. At the imaginary axis, it tends to ±i∞.

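Taylor series

The error function is an entire function; it has no singularities (except that at infinity) and its Taylor expansion always converges, but is famously known "[…] for its bad convergence if x > 1."[4]

The defining integral cannot be evaluated in closed form in terms of elementary functions, but by expanding the integrand e^(−z²) into its Maclaurin series and integrating term by term, one obtains the error function's Maclaurin series as:

- [math]\displaystyle{ \begin{align} \operatorname{erf} z
&= \frac{2}{\sqrt\pi}\sum_{n=0}^\infty\frac{(-1)^n z^{2n+1}}{n! (2n+1)} \\[6pt]
&=\frac{2}{\sqrt\pi} \left(z-\frac{z^3}{3}+\frac{z^5}{10}-\frac{z^7}{42}+\frac{z^9}{216}-\cdots\right)
\end{align} }[/math]

which holds for every complex number z. The denominator terms are sequence A007680 in the OEIS.

For iterative calculation of the above series, the following alternative formulation may be useful:

- [math]\displaystyle{ \begin{align} \operatorname{erf} z
&= \frac{2}{\sqrt\pi}\sum_{n=0}^\infty\left(z \prod_{k=1}^n {\frac{-(2k-1) z^2}{k (2k+1)}}\right) \\[6pt]
&= \frac{2}{\sqrt\pi} \sum_{n=0}^\infty \frac{z}{2n+1} \prod_{k=1}^n \frac{-z^2}{k}
\end{align} }[/math]

because −(2k − 1)z²/(k(2k + 1)) expresses the multiplier to turn the kth term into the (k + 1)th term (considering z as the first term).

The imaginary error function has a very similar Maclaurin series, which is:

- [math]\displaystyle{ \begin{align} \operatorname{erfi} z &= \frac{2}{\sqrt\pi}\sum_{n=0}^\infty\frac{z^{2n+1}}{n! (2n+1)} \\[6pt] &=\frac{2}{\sqrt\pi} \left(z+\frac{z^3}{3}+\frac{z^5}{10}+\frac{z^7}{42}+\frac{z^9}{216}+\cdots\right) \end{align} }[/math]

which holds for every complex number z.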

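Derivative and integral

The derivative of the error function follows immediately from its definition:

- [math]\displaystyle{ \frac{d}{dz}\operatorname{erf} z =\frac{2}{\sqrt\pi} e^{-z^2}. }[/math]

From this, the derivative of the imaginary error function is also immediate:

- [math]\displaystyle{ \frac{d}{dz}\operatorname{erfi} z =\frac{2}{\sqrt\pi} e^{z^2}. }[/math]

An antiderivative of the error function, obtainable by integration by parts, is

- [math]\displaystyle{ z\operatorname{erf}z + \frac{e^{-z^2}}{\sqrt\pi}. }[/math]

An antiderivative of the imaginary error function, also obtainable by integration by parts, is

- [math]\displaystyle{ z\operatorname{erfi}z - \frac{e^{z^2}}{\sqrt\pi}. }[/math]

Higher order derivatives are given by

- [math]\displaystyle{ \operatorname{erf}^{(k)}z = \frac{2 (-1)^{k-1}}{\sqrt\pi} \mathit{H}_{k-1}(z) e^{-z^2} = \frac{2}{\sqrt\pi} \frac{d^{k-1}}{dz^{k-1}} \left(e^{-z^2}\right),\qquad k=1, 2, \dots }[/math]

where H are the physicists' Hermite polynomials.[5]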

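Bürmann series

An expansion,[6] which converges more rapidly for all real values of x than a Taylor expansion, is obtained by using Hans Heinrich Bürmann's theorem:[7]

- [math]\displaystyle{ \begin{align}
\operatorname{erf} x &= \frac 2 {\sqrt\pi} \operatorname{sgn} x \cdot \sqrt{1-e^{-x^2}} \left( 1-\frac{1}{12} \left (1-e^{-x^2} \right ) -\frac{7}{480} \left (1-e^{-x^2} \right )^2 -\frac{5}{896} \left (1-e^{-x^2} \right )^3-\frac{787}{276 480} \left (1-e^{-x^2} \right )^4 - \cdots \right) \\[10pt]
&= \frac{2}{\sqrt\pi} \operatorname{sgn} x \cdot \sqrt{1-e^{-x^2}} \left(\frac{\sqrt\pi}{2} + \sum_{k=1}^\infty c_k e^{-kx^2} \right).
\end{align} }[/math]

where sgn is the sign function. By keeping only the first two coefficients and choosing c1 = 31/200 and c2 = −341/8000, the resulting approximation shows its largest relative error at x = ±1.3796, where it is less than 0.0036127:

- [math]\displaystyle{ \operatorname{erf} x \approx \frac{2}{\sqrt\pi}\operatorname{sgn} x \cdot \sqrt{1-e^{-x^2}} \left(\frac{\sqrt{\pi }}{2} + \frac{31}{200}e^{-x^2}-\frac{341}{8000} e^{-2x^2}\right). }[/math]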

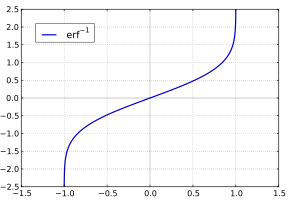

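Inverse functions

Given a complex number z, there is not a unique complex number w satisfying erf w = z, so a true inverse function would be multivalued. However, for −1 < x < 1, there is a unique real number denoted erf⁻¹ x satisfying

- [math]\displaystyle{ \operatorname{erf}\left(\operatorname{erf}^{-1} x\right) = x. }[/math]

The inverse error function is usually defined with domain (−1,1), and it is restricted to this domain in many computer algebra systems. However, it can be extended to the disk |z| < 1 of the complex plane, using the Maclaurin series

- [math]\displaystyle{ \operatorname{erf}^{-1} z=\sum_{k=0}^\infty\frac{c_k}{2k+1}\left (\frac{\sqrt\pi}{2}z\right )^{2k+1}, }[/math]

where c0 = 1 and

- [math]\displaystyle{ \begin{align}
c_k&=\sum_{m=0}^{k-1}\frac{c_m c_{k-1-m}}{(m+1)(2m+1)} \\ &= \left\{1,1,\frac{7}{6},\frac{127}{90},\frac{4369}{2520},\frac{34807}{16200},\ldots\right\}.
\end{align} }[/math]

So we have the series expansion (common factors have been canceled from numerators and denominators):

- [math]\displaystyle{ \operatorname{erf}^{-1} z=\frac{\sqrt{\pi}}{2} \left (z+\frac{\pi}{12}z^3+\frac{7\pi^2}{480}z^5+\frac{127\pi^3}{40320}z^7+\frac{4369\pi^4}{5806080}z^9+\frac{34807\pi^5}{182476800}z^{11}+\cdots\right ). }[/math]

(After cancellation the numerator/denominator fractions are entries A092676/A092677 in the OEIS; without cancellation the numerator terms are given in entry A002067.) The error function's value at ±∞ is equal to ±1.

For |z| < 1, we have erf(erf⁻¹ z) = z.

The inverse complementary error function is defined as

- [math]\displaystyle{ \operatorname{erfc}^{-1}(1-z) = \operatorname{erf}^{-1} z. }[/math]

For real x, there is a unique real number erfi⁻¹ x satisfying erfi(erfi⁻¹ x) = x. The inverse imaginary error function is defined as erfi⁻¹ x.[8]

For any real x, Newton's method can be used to compute erfi⁻¹ x, and for −1 ≤ x ≤ 1, the following Maclaurin series converges:

- [math]\displaystyle{ \operatorname{erfi}^{-1} z =\sum_{k=0}^\infty\frac{(-1)^k c_k}{2k+1}\left (\frac{\sqrt\pi}{2}z\right )^{2k+1}, }[/math]

where ck is defined as above.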

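Asymptotic expansion

A useful asymptotic expansion of the complementary error function (and therefore also of the error function) for large real x is

- [math]\displaystyle{ \operatorname{erfc} x = \frac{e^{-x^2}}{x\sqrt{\pi}}\sum_{n=0}^\infty (-1)^n \frac{(2n - 1)!!}{\left(2x^2\right)^n}, }[/math]

where (2n − 1)!! is the double factorial of (2n − 1), which is the product of all odd numbers up to (2n − 1). This series diverges for every finite x, and its meaning as asymptotic expansion is that for any integer N ≥ 1 one has

- [math]\displaystyle{ \operatorname{erfc} x = \frac{e^{-x^2}}{x\sqrt{\pi}}\sum_{n=0}^{N-1} (-1)^n \frac{(2n - 1)!!}{\left(2x^2\right)^n} + R_N(x) }[/math]

where the remainder, in Landau notation, is

- [math]\displaystyle{ R_N(x) = O\left(x^{-(1 + 2N)} e^{-x^2}\right) }[/math]

as x → ∞.

Indeed, the exact value of the remainder is

- [math]\displaystyle{ R_N(x) := \frac{(-1)^N}{\sqrt\pi} 2^{1-2N}\frac{(2N)!}{N!}\int_x^\infty t^{-2N}e^{-t^2}\,dt, }[/math]

which follows easily by induction, writing

- [math]\displaystyle{ e^{-t^2} = -(2t)^{-1}\left(e^{-t^2}\right)' }[/math]

and integrating by parts.

For large enough values of x, only the first few terms of this asymptotic expansion are needed to obtain a good approximation of erfc x (while for not too large values of x, the above Taylor expansion at 0 provides a very fast convergence).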
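The behaviour described here can be checked numerically. The following is a minimal sketch, assuming the standard form of the expansion, erfc x ≈ e^(−x²)/(x√π) · Σ (−1)ⁿ (2n − 1)!!/(2x²)ⁿ truncated after N terms. The function name `erfc_asymptotic` is illustrative only.

```python
import math

def erfc_asymptotic(x, n_terms):
    """Truncated asymptotic series for erfc(x), real x > 0 (illustrative helper)."""
    total, term = 0.0, 1.0  # term holds (-1)^n (2n-1)!! / (2x^2)^n, starting at n = 0
    for n in range(n_terms):
        total += term
        term *= -(2 * n + 1) / (2.0 * x * x)  # ratio of term n+1 to term n
    return math.exp(-x * x) / (x * math.sqrt(math.pi)) * total

# At x = 5 a handful of terms already agrees closely with the library value.
rel_err = abs(erfc_asymptotic(5.0, 10) - math.erfc(5.0)) / math.erfc(5.0)
print(rel_err)
```

Because the series diverges, adding terms eventually makes the result worse. At x = 1 the terms grow from the start, so the expansion is only useful for sufficiently large x.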

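Continued fraction expansion

A continued fraction expansion of the complementary error function is:[9]

- [math]\displaystyle{ \operatorname{erfc} z = \frac{z}{\sqrt\pi}e^{-z^2} \cfrac{1}{z^2+ \cfrac{a_1}{1+\cfrac{a_2}{z^2+ \cfrac{a_3}{1+\dotsb}}}},\qquad a_m = \frac{m}{2}. }[/math]

Integral of error function with Gaussian density function

- [math]\displaystyle{ \int_{-\infty}^{\infty} \operatorname{erf} \left(ax+b \right) \frac{1}{\sqrt{2\pi \sigma^2}}\exp\left(-\frac{(x-\mu)^2}{2 \sigma^2} \right)\,dx= \operatorname{erf} \frac{a\mu+b}{\sqrt{1+2 a^2 \sigma^2}}, \qquad a,b,\mu,\sigma \in \R }[/math]

which appears related to Ng and Geller, formula 13 in section 4.3[10] with a change of variables.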

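Factorial series

The inverse factorial series:

- [math]\displaystyle{ \begin{align}
\operatorname{erfc} z &= \frac{e^{-z^2}}{\sqrt{\pi}\,z} \sum_{n=0}^\infty \frac{(-1)^n Q_n}{{(z^2+1)}^{\bar{n}}} \\[5pt]
&= \frac{e^{-z^2}}{\sqrt{\pi}\,z} \left[1 -\frac{1}{2}\frac{1}{(z^2+1)} + \frac{1}{4}\frac{1}{(z^2+1)(z^2+2)} - \cdots \right]
\end{align} }[/math]

converges for Re(z²) > 0. Here

- [math]\displaystyle{ Q_n := \frac{1}{\Gamma{\left(\frac{1}{2}\right)}} \int_0^\infty \tau(\tau-1)\cdots(\tau-n+1)\tau^{-\frac{1}{2}} e^{-\tau} \,d\tau = \sum_{k=0}^n \left(\tfrac{1}{2}\right)^{\bar{k}} s(n,k), }[/math]

where z with an overlined exponent n denotes the rising factorial, and s(n,k) denotes a signed Stirling number of the first kind.[11][12]

There also exists a representation by an infinite sum containing the double factorial:

- [math]\displaystyle{ \operatorname{erf} z = \frac{2}{\sqrt\pi} \sum_{n=0}^\infty \frac{(-2)^n(2n-1)!!}{(2n+1)!}z^{2n+1} }[/math]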

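Numerical approximations

Approximation with elementary functions

- Abramowitz and Stegun give several approximations of varying accuracy (equations 7.1.25–28). This allows one to choose the fastest approximation suitable for a given application. In order of increasing accuracy, they are:

  [math]\displaystyle{ \operatorname{erf} x \approx 1 - \frac{1}{\left(1 + a_1x + a_2x^2 + a_3x^3 + a_4x^4\right)^4}, \qquad x \geq 0 }[/math]

  (maximum error: 5×10⁻⁴)

  where a1 = 0.278393, a2 = 0.230389, a3 = 0.000972, a4 = 0.078108

  [math]\displaystyle{ \operatorname{erf} x \approx 1 - \left(a_1t + a_2t^2 + a_3t^3\right)e^{-x^2},\quad t=\frac{1}{1 + px}, \qquad x \geq 0 }[/math]

  (maximum error: 2.5×10⁻⁵)

  where p = 0.47047, a1 = 0.3480242, a2 = −0.0958798, a3 = 0.7478556

  [math]\displaystyle{ \operatorname{erf} x \approx 1 - \frac{1}{\left(1 + a_1x + a_2x^2 + \cdots + a_6x^6\right)^{16}}, \qquad x \geq 0 }[/math]

  (maximum error: 3×10⁻⁷)

  where a1 = 0.0705230784, a2 = 0.0422820123, a3 = 0.0092705272, a4 = 0.0001520143, a5 = 0.0002765672, a6 = 0.0000430638

  [math]\displaystyle{ \operatorname{erf} x \approx 1 - \left(a_1t + a_2t^2 + \cdots + a_5t^5\right)e^{-x^2},\quad t = \frac{1}{1 + px} }[/math]

  (maximum error: 1.5×10⁻⁷)

  where p = 0.3275911, a1 = 0.254829592, a2 = −0.284496736, a3 = 1.421413741, a4 = −1.453152027, a5 = 1.061405429

  All of these approximations are valid for x ≥ 0. To use these approximations for negative x, use the fact that erf x is an odd function, so erf x = −erf(−x).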

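- Exponential bounds and a pure exponential approximation for the complementary error function are given by[13]

  [math]\displaystyle{ \begin{align}
\operatorname{erfc} x &\leq \tfrac12 e^{-2 x^2} + \tfrac12 e^{- x^2} \leq e^{-x^2}, &\quad x &> 0 \\
\operatorname{erfc} x &\approx \tfrac{1}{6} e^{-x^2} + \tfrac{1}{2} e^{-\frac{4}{3} x^2}, &\quad x &> 0 .
\end{align} }[/math]

- The above have been generalized to sums of N exponentials[14] with increasing accuracy in terms of N so that erfc x can be accurately approximated or bounded by 2Q̃(√2x), where

  [math]\displaystyle{ \tilde{Q}(x) = \sum_{n=1}^N a_n e^{-b_n x^2}. }[/math]

  In particular, there is a systematic methodology to solve the numerical coefficients {(a_n, b_n)}, n = 1, …, N, that yield a minimax approximation or bound for the closely related Q-function: Q(x) ≈ Q̃(x), Q(x) ≤ Q̃(x), or Q(x) ≥ Q̃(x) for x ≥ 0. The coefficients {(a_n, b_n)}, n = 1, …, N, for many variations of the exponential approximations and bounds up to N = 25 have been released to open access as a comprehensive dataset.[15]

- A tight approximation of the complementary error function for x ∈ [0,∞) is given by Karagiannidis & Lioumpas (2007)[16] who showed for the appropriate choice of parameters {A,B} that

  [math]\displaystyle{ \operatorname{erfc} x \approx \frac{\left(1 - e^{-Ax}\right)e^{-x^2}}{B\sqrt{\pi} x}. }[/math]

  They determined {A,B} = {1.98,1.135}, which gave a good approximation for all x ≥ 0. Alternative coefficients are also available for tailoring accuracy for a specific application or transforming the expression into a tight bound.[17]

- A single-term lower bound is[18]

  [math]\displaystyle{ \operatorname{erfc} x \geq \sqrt{\frac{2 e}{\pi}} \frac{\sqrt{\beta - 1}}{\beta} e^{- \beta x^2}, \qquad x \ge 0,\quad \beta \gt 1, }[/math]

  where the parameter β can be picked to minimize error on the desired interval of approximation.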

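- Another approximation is given by Sergei Winitzki using his "global Padé approximations":[19][20]: 2–3

  [math]\displaystyle{ \operatorname{erf} x \approx \operatorname{sgn} x \cdot \sqrt{1 - \exp\left(-x^2\frac{\frac{4}{\pi} + ax^2}{1 + ax^2}\right)} }[/math]

  where

  [math]\displaystyle{ a = \frac{8(\pi - 3)}{3\pi(4 - \pi)} \approx 0.140012. }[/math]

  This is designed to be very accurate in a neighborhood of 0 and a neighborhood of infinity, and the relative error is less than 0.00035 for all real x. Using the alternate value a ≈ 0.147 reduces the maximum relative error to about 0.00013.[21]

  This approximation can be inverted to obtain an approximation for the inverse error function:

  [math]\displaystyle{ \operatorname{erf}^{-1}x \approx \operatorname{sgn} x \cdot \sqrt{\sqrt{\left(\frac{2}{\pi a} + \frac{\ln\left(1 - x^2\right)}{2}\right)^2 - \frac{\ln\left(1 - x^2\right)}{a}} -\left(\frac{2}{\pi a} + \frac{\ln\left(1 - x^2\right)}{2}\right)}. }[/math]

- An approximation with a maximal error of 1.2×10⁻⁷ for any real argument is:[22]

  [math]\displaystyle{ \operatorname{erf} x = \begin{cases} 1-\tau & x\ge 0\\ \tau-1 & x \lt 0 \end{cases} }[/math]

  with

  [math]\displaystyle{ \begin{align}
\tau &= t\cdot\exp\left(-x^2-1.26551223+1.00002368 t+0.37409196 t^2+0.09678418 t^3 -0.18628806 t^4\right.\\
&\left. \qquad\qquad\qquad +0.27886807 t^5-1.13520398 t^6+1.48851587 t^7 -0.82215223 t^8+0.17087277 t^9\right)
\end{align} }[/math]

  and

  [math]\displaystyle{ t=\frac{1}{1+\frac{1}{2}|x|}. }[/math]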
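As an illustration of the elementary approximations in this section, here is a minimal sketch of the most accurate Abramowitz and Stegun formula listed above (stated maximum error 1.5×10⁻⁷), assuming the standard form erf x ≈ 1 − (a₁t + … + a₅t⁵)e^(−x²) with t = 1/(1 + px). The coefficients are those quoted in the text. The function name and the use of Horner evaluation are choices made here for illustration.

```python
import math

P = 0.3275911
A = (0.254829592, -0.284496736, 1.421413741, -1.453152027, 1.061405429)

def erf_as_poly5(x):
    """Five-term Abramowitz-Stegun style approximation, extended to x < 0 by oddness."""
    ax = abs(x)
    t = 1.0 / (1.0 + P * ax)
    poly = 0.0
    for a in reversed(A):      # Horner scheme for a1*t + a2*t^2 + ... + a5*t^5
        poly = (poly + a) * t
    value = 1.0 - poly * math.exp(-ax * ax)
    return math.copysign(value, x)  # erf(-x) = -erf(x)

worst = max(abs(erf_as_poly5(0.01 * i) - math.erf(0.01 * i)) for i in range(-400, 401))
print(worst)
```

The absolute error stays at the 10⁻⁷ level, so the relative error of 1 − erf x becomes large in the far tail. A dedicated erfc routine is a better choice there.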

Table of values

| x | erf x | 1 − erf x |
|---|---|---|
| 0 | 0 | 1 |
| 0.02 | 0.022564575 | 0.977435425 |
| 0.04 | 0.045111106 | 0.954888894 |
| 0.06 | 0.067621594 | 0.932378406 |
| 0.08 | 0.090078126 | 0.909921874 |
| 0.1 | 0.112462916 | 0.887537084 |
| 0.2 | 0.222702589 | 0.777297411 |
| 0.3 | 0.328626759 | 0.671373241 |
| 0.4 | 0.428392355 | 0.571607645 |
| 0.5 | 0.520499878 | 0.479500122 |
| 0.6 | 0.603856091 | 0.396143909 |
| 0.7 | 0.677801194 | 0.322198806 |
| 0.8 | 0.742100965 | 0.257899035 |
| 0.9 | 0.796908212 | 0.203091788 |
| 1 | 0.842700793 | 0.157299207 |
| 1.1 | 0.880205070 | 0.119794930 |
| 1.2 | 0.910313978 | 0.089686022 |
| 1.3 | 0.934007945 | 0.065992055 |
| 1.4 | 0.952285120 | 0.047714880 |
| 1.5 | 0.966105146 | 0.033894854 |
| 1.6 | 0.976348383 | 0.023651617 |
| 1.7 | 0.983790459 | 0.016209541 |
| 1.8 | 0.989090502 | 0.010909498 |
| 1.9 | 0.992790429 | 0.007209571 |
| 2 | 0.995322265 | 0.004677735 |
| 2.1 | 0.997020533 | 0.002979467 |
| 2.2 | 0.998137154 | 0.001862846 |
| 2.3 | 0.998856823 | 0.001143177 |
| 2.4 | 0.999311486 | 0.000688514 |
| 2.5 | 0.999593048 | 0.000406952 |
| 3 | 0.999977910 | 0.000022090 |
| 3.5 | 0.999999257 | 0.000000743 |
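Rows of this table can be regenerated with a standard library, for example with Python's `math.erf` and `math.erfc`. In this small sketch the helper name `table_row` is illustrative. The last column is computed with `erfc` rather than as `1 - erf(x)`, which avoids cancellation for larger x.

```python
import math

def table_row(x):
    """Return (x, erf x, 1 - erf x) formatted to nine decimals, like the table."""
    return (str(x), f"{math.erf(x):.9f}", f"{math.erfc(x):.9f}")

for x in (0.5, 1, 2, 3.5):
    print(" | ".join(table_row(x)))
```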

Related functions

Complementary error function[edit]

The complementary error function, denoted erfc, is defined as

-

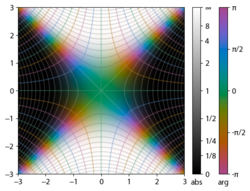

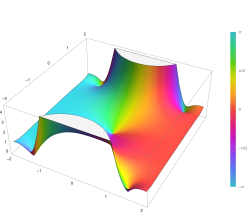

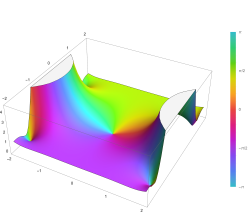

Plot of the complementary error function Erfc(z) in the complex plane from -2-2i to 2+2i with colors created with Mathematica 13.1 function ComplexPlot3D

which also defines erfcx, the scaled complementary error function[23] (which can be used instead of erfc to avoid arithmetic underflow[23][24]). Another form of erfc x for x ≥ 0 is known as Craig’s formula, after its discoverer:[25]

This expression is valid only for positive values of x, but it can be used in conjunction with erfc x = 2 − erfc(−x) to obtain erfc(x) for negative values. This form is advantageous in that the range of integration is fixed and finite. An extension of this expression for the erfc of the sum of two non-negative variables is as follows:[26]
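The fixed, finite integration range of Craig's formula makes it easy to evaluate with a simple quadrature rule. The sketch below assumes the usual statement of the formula, erfc x = (2/π) ∫₀^(π/2) exp(−x²/sin²θ) dθ for x ≥ 0, and uses a midpoint rule. The function name and the number of nodes are arbitrary choices for illustration.

```python
import math

def erfc_craig(x, n=2000):
    """erfc(x) from Craig's formula, using an n-point midpoint rule on [0, pi/2]."""
    if x < 0:
        return 2.0 - erfc_craig(-x, n)  # erfc x = 2 - erfc(-x) for negative arguments
    h = (math.pi / 2.0) / n
    total = 0.0
    for i in range(n):
        theta = (i + 0.5) * h           # midpoints avoid the endpoint theta = 0
        total += math.exp(-x * x / math.sin(theta) ** 2)
    return 2.0 / math.pi * total * h

print(erfc_craig(1.0), math.erfc(1.0))
```

For very small positive x the integrand varies rapidly near θ = 0, so more nodes may be needed there.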

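Imaginary error function

Plot of the imaginary error function Erfi(z) in the complex plane from −2−2i to 2+2i with colors created with Mathematica 13.1 function ComplexPlot3D

The imaginary error function, denoted erfi, is defined as

- [math]\displaystyle{ \begin{align}
\operatorname{erfi} x & = -i\operatorname{erf} ix \\[5pt]
& = \frac{2}{\sqrt\pi} \int_0^x e^{t^2}\,dt \\[5pt]
& = \frac{2}{\sqrt\pi} e^{x^2} D(x),
\end{align} }[/math]

where D(x) is the Dawson function (which can be used instead of erfi to avoid arithmetic overflow[23]).

Despite the name "imaginary error function", erfi x is real when x is real.

When the error function is evaluated for arbitrary complex arguments z, the resulting complex error function is usually discussed in scaled form as the Faddeeva function:

- [math]\displaystyle{ w(z) = e^{-z^2}\operatorname{erfc}(-iz) = \operatorname{erfcx}(-iz). }[/math]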

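Cumulative distribution function

The normal cumulative distribution function plotted in the complex plane

The error function is essentially identical to the standard normal cumulative distribution function, denoted Φ, also named norm(x) by some software languages[citation needed], as they differ only by scaling and translation. Indeed,

- [math]\displaystyle{ \begin{align}
\Phi(x) &= \frac{1}{\sqrt{2\pi}}\int_{-\infty}^x e^\tfrac{-t^2}{2}\,dt\\[6pt]
&= \frac12 \left(1+\operatorname{erf}\frac{x}{\sqrt 2}\right)\\[6pt]
&= \frac12 \operatorname{erfc}\left(-\frac{x}{\sqrt 2}\right)
\end{align} }[/math]

or rearranged for erf and erfc:

- [math]\displaystyle{ \begin{align}
\operatorname{erf}(x) &= 2 \Phi \left ( x \sqrt{2} \right ) - 1 \\[6pt]
\operatorname{erfc}(x) &= 2 \Phi \left ( - x \sqrt{2} \right ) \\ &=2\left(1-\Phi \left ( x \sqrt{2} \right)\right).
\end{align} }[/math]

Consequently, the error function is also closely related to the Q-function, which is the tail probability of the standard normal distribution. The Q-function can be expressed in terms of the error function as

- [math]\displaystyle{ \begin{align}
Q(x) &= \frac12 - \frac12 \operatorname{erf} \frac{x}{\sqrt 2}\\
&= \frac12 \operatorname{erfc}\frac{x}{\sqrt 2}.
\end{align} }[/math]

The inverse of Φ is known as the normal quantile function, or probit function, and may be expressed in terms of the inverse error function as

- [math]\displaystyle{ \operatorname{probit}(p) = \Phi^{-1}(p) = \sqrt{2}\operatorname{erf}^{-1}(2p-1) = -\sqrt{2}\operatorname{erfc}^{-1}(2p). }[/math]

The standard normal cdf is used more often in probability and statistics, and the error function is used more often in other branches of mathematics.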
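The scaling-and-translation relationship can be made concrete with a short sketch. It assumes the standard identities Φ(x) = ½ erfc(−x/√2), Q(x) = ½ erfc(x/√2) and probit(p) = √2 erf⁻¹(2p − 1). The helper names are illustrative, and the inverse error function is obtained here by rearranging the probit identity around Python's `statistics.NormalDist.inv_cdf`.

```python
import math
from statistics import NormalDist

SQRT2 = math.sqrt(2.0)

def phi(x):
    """Standard normal CDF expressed through erfc."""
    return 0.5 * math.erfc(-x / SQRT2)

def q_function(x):
    """Tail probability of the standard normal distribution."""
    return 0.5 * math.erfc(x / SQRT2)

def erfinv_via_probit(y):
    """erf^{-1}(y) for -1 < y < 1, from probit(p) = sqrt(2) * erf^{-1}(2p - 1)."""
    return NormalDist().inv_cdf((y + 1.0) / 2.0) / SQRT2

print(phi(1.3), NormalDist().cdf(1.3))
print(math.erf(erfinv_via_probit(0.3)))
```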

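The error function is a special case of the Mittag-Leffler function, and can also be expressed as a confluent hypergeometric function (Kummer's function):

- [math]\displaystyle{ \operatorname{erf} x = \frac{2x}{\sqrt\pi} M\left(\tfrac12,\tfrac32,-x^2\right). }[/math]

It has a simple expression in terms of the Fresnel integral.[further explanation needed]

In terms of the regularized gamma function P and the incomplete gamma function,

- [math]\displaystyle{ \operatorname{erf} x = \operatorname{sgn} x \cdot P\left(\tfrac12, x^2\right) = \frac{\operatorname{sgn} x}{\sqrt\pi}\gamma\left(\tfrac12, x^2\right). }[/math]

Here sgn x is the sign function.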

Generalized error functions[edit]

Graph of generalised error functions En(x):

grey curve: E1(x) = 1 − e−x/√π

red curve: E2(x) = erf(x)

green curve: E3(x)

blue curve: E4(x)

gold curve: E5(x).

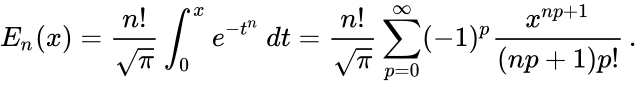

Some authors discuss the more general functions:[citation needed]

Notable cases are:

- E0(x) is a straight line through the origin: E0(x) = x/e√π

- E2(x) is the error function, erf x.

After division by n!, all the En for odd n look similar (but not identical) to each other. Similarly, the En for even n look similar (but not identical) to each other after a simple division by n!. All generalised error functions for n > 0 look similar on the positive x side of the graph.
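The notable cases can be checked numerically. This is a minimal sketch, assuming the definition E_n(x) = (n!/√π) ∫₀ˣ e^(−tⁿ) dt and evaluating the integral with composite Simpson quadrature. The function name and step count are illustrative.

```python
import math

def generalized_erf(n, x, steps=2000):
    """E_n(x) = n!/sqrt(pi) * integral_0^x exp(-t**n) dt, via composite Simpson (steps even)."""
    f = lambda t: math.exp(-(t ** n))
    h = x / steps
    total = f(0.0) + f(x)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(i * h)  # Simpson weights 4, 2, 4, ...
    return math.factorial(n) / math.sqrt(math.pi) * total * h / 3.0

print(generalized_erf(2, 0.8), math.erf(0.8))                               # E_2 is erf
print(generalized_erf(1, 1.5), (1 - math.exp(-1.5)) / math.sqrt(math.pi))  # E_1
print(generalized_erf(0, 2.0), 2.0 / (math.e * math.sqrt(math.pi)))        # E_0 is linear
```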

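These generalised functions can equivalently be expressed for x > 0 using the gamma function and incomplete gamma function:

- [math]\displaystyle{ E_n(x) = \frac{1}{\sqrt\pi}\Gamma(n)\left(\Gamma\left(\frac{1}{n}\right) - \Gamma\left(\frac{1}{n}, x^n\right)\right), \qquad x \gt 0. }[/math]

Therefore, we can define the error function in terms of the incomplete gamma function:

- [math]\displaystyle{ \operatorname{erf} x = 1 - \frac{1}{\sqrt\pi}\Gamma\left(\tfrac12, x^2\right). }[/math]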

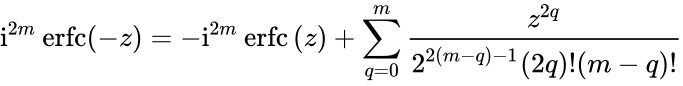

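Iterated integrals of the complementary error function

The iterated integrals of the complementary error function are defined by[27]

- [math]\displaystyle{ \begin{align}
i^n\!\operatorname{erfc} z &= \int_z^\infty i^{n-1}\!\operatorname{erfc} \zeta\,d\zeta \\[6pt]
i^0\!\operatorname{erfc} z &= \operatorname{erfc} z \\
i^1\!\operatorname{erfc} z &= \operatorname{ierfc} z = \frac{1}{\sqrt\pi} e^{-z^2} - z \operatorname{erfc} z \\
i^2\!\operatorname{erfc} z &= \tfrac14 \left( \operatorname{erfc} z -2 z \operatorname{ierfc} z \right) \\
\end{align} }[/math]

The general recurrence formula is

- [math]\displaystyle{ 2 n \cdot i^n\!\operatorname{erfc} z = i^{n-2}\!\operatorname{erfc} z -2 z \cdot i^{n-1}\!\operatorname{erfc} z }[/math]

They have the power series

- [math]\displaystyle{ i^n\!\operatorname{erfc} z =\sum_{j=0}^\infty \frac{(-z)^j}{2^{n-j}j! \,\Gamma \left( 1 + \frac{n-j}{2}\right)}, }[/math]

from which follow the symmetry properties

- [math]\displaystyle{ i^{2m}\!\operatorname{erfc} (-z) =-i^{2m}\!\operatorname{erfc} z +\sum_{q=0}^m \frac{z^{2q}}{2^{2(m-q)-1}(2q)! (m-q)!} }[/math]

and

- [math]\displaystyle{ i^{2m+1}\!\operatorname{erfc}(-z) =i^{2m+1}\!\operatorname{erfc} z +\sum_{q=0}^m \frac{z^{2q+1}}{2^{2(m-q)-1}(2q+1)! (m-q)!}. }[/math]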
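A recurrence of this kind lends itself to direct computation. The sketch below assumes the standard recurrence 2n · iⁿerfc z = iⁿ⁻²erfc z − 2z · iⁿ⁻¹erfc z, started from i⁰erfc z = erfc z and the conventional value i⁻¹erfc z = (2/√π) e^(−z²). The function name `inerfc` is illustrative.

```python
import math

def inerfc(n, z):
    """n-th iterated integral of erfc at real z (n >= -1), by upward recurrence."""
    prev = 2.0 / math.sqrt(math.pi) * math.exp(-z * z)  # i^{-1} erfc z (assumed starting value)
    cur = math.erfc(z)                                  # i^0 erfc z
    if n == -1:
        return prev
    for m in range(1, n + 1):
        # 2m * i^m erfc z = i^{m-2} erfc z - 2z * i^{m-1} erfc z
        prev, cur = cur, (prev - 2.0 * z * cur) / (2.0 * m)
    return cur

z = 0.3
print(inerfc(1, z), math.exp(-z * z) / math.sqrt(math.pi) - z * math.erfc(z))
```

Upward recurrence is adequate for moderate z and small n. For large z the subtraction loses accuracy, so this is only a demonstration of the formula rather than a robust routine.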

Implementations

As real function of a real argument

- In POSIX-compliant operating systems, the header math.h shall declare and the mathematical library libm shall provide the functions erf and erfc (double precision) as well as their single precision and extended precision counterparts erff, erfl and erfcf, erfcl.[28]

- The GNU Scientific Library provides erf, erfc, log(erf), and scaled error functions.[29]

As complex function of a complex argument

- libcerf, a numeric C library for complex error functions, provides the complex functions cerf, cerfc, cerfcx and the real functions erfi, erfcx with approximately 13–14 digits precision, based on the Faddeeva function as implemented in the MIT Faddeeva Package

See also

Related functions

- Gaussian integral, over the whole real line

- Gaussian function, derivative

- Dawson function, renormalized imaginary error function

- Goodwin–Staton integral

In probability

- Normal distribution

- Normal cumulative distribution function, a scaled and shifted form of error function

- Probit, the inverse or quantile function of the normal CDF

- Q-function, the tail probability of the normal distribution

References

- ^ Andrews, Larry C. (1998). Special functions of mathematics for engineers. SPIE Press. p. 110. ISBN 9780819426161.

- ^ Glaisher, James Whitbread Lee (July 1871). «On a class of definite integrals». London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science. 4. 42 (277): 294–302. doi:10.1080/14786447108640568. Retrieved 6 December 2017.

- ^ Glaisher, James Whitbread Lee (September 1871). «On a class of definite integrals. Part II». London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science. 4. 42 (279): 421–436. doi:10.1080/14786447108640600. Retrieved 6 December 2017.

- ^ «A007680 – OEIS». oeis.org. Retrieved 2 April 2020.

- ^ Weisstein, Eric W. «Erf». MathWorld.

- ^ Schöpf, H. M.; Supancic, P. H. (2014). «On Bürmann’s Theorem and Its Application to Problems of Linear and Nonlinear Heat Transfer and Diffusion». The Mathematica Journal. 16. doi:10.3888/tmj.16-11.

- ^ Weisstein, Eric W. «Bürmann’s Theorem». MathWorld.

- ^ Bergsma, Wicher (2006). «On a new correlation coefficient, its orthogonal decomposition and associated tests of independence». arXiv:math/0604627.

- ^ Cuyt, Annie A. M.; Petersen, Vigdis B.; Verdonk, Brigitte; Waadeland, Haakon; Jones, William B. (2008). Handbook of Continued Fractions for Special Functions. Springer-Verlag. ISBN 978-1-4020-6948-2.

- ^ Ng, Edward W.; Geller, Murray (January 1969). «A table of integrals of the Error functions». Journal of Research of the National Bureau of Standards Section B. 73B (1): 1. doi:10.6028/jres.073B.001.

- ^ Schlömilch, Oskar Xavier (1859). «Ueber facultätenreihen». Zeitschrift für Mathematik und Physik (in German). 4: 390–415. Retrieved 4 December 2017.

- ^ Nielson, Niels (1906). Handbuch der Theorie der Gammafunktion (in German). Leipzig: B. G. Teubner. p. 283 Eq. 3. Retrieved 4 December 2017.

- ^ Chiani, M.; Dardari, D.; Simon, M.K. (2003). «New Exponential Bounds and Approximations for the Computation of Error Probability in Fading Channels» (PDF). IEEE Transactions on Wireless Communications. 2 (4): 840–845. CiteSeerX 10.1.1.190.6761. doi:10.1109/TWC.2003.814350.

- ^ Tanash, I.M.; Riihonen, T. (2020). «Global minimax approximations and bounds for the Gaussian Q-function by sums of exponentials». IEEE Transactions on Communications. 68 (10): 6514–6524. arXiv:2007.06939. doi:10.1109/TCOMM.2020.3006902. S2CID 220514754.

- ^ Tanash, I.M.; Riihonen, T. (2020). «Coefficients for Global Minimax Approximations and Bounds for the Gaussian Q-Function by Sums of Exponentials [Data set]». Zenodo. doi:10.5281/zenodo.4112978.

- ^ Karagiannidis, G. K.; Lioumpas, A. S. (2007). «An improved approximation for the Gaussian Q-function» (PDF). IEEE Communications Letters. 11 (8): 644–646. doi:10.1109/LCOMM.2007.070470. S2CID 4043576.

- ^ Tanash, I.M.; Riihonen, T. (2021). «Improved coefficients for the Karagiannidis–Lioumpas approximations and bounds to the Gaussian Q-function». IEEE Communications Letters. 25 (5): 1468–1471. arXiv:2101.07631. doi:10.1109/LCOMM.2021.3052257. S2CID 231639206.

- ^ Chang, Seok-Ho; Cosman, Pamela C.; Milstein, Laurence B. (November 2011). «Chernoff-Type Bounds for the Gaussian Error Function». IEEE Transactions on Communications. 59 (11): 2939–2944. doi:10.1109/TCOMM.2011.072011.100049. S2CID 13636638.

- ^ Winitzki, Sergei (2003). «Uniform approximations for transcendental functions». Computational Science and Its Applications – ICCSA 2003. Lecture Notes in Computer Science. Vol. 2667. Springer, Berlin. pp. 780–789. doi:10.1007/3-540-44839-X_82. ISBN 978-3-540-40155-1.

- ^ Zeng, Caibin; Chen, Yang Cuan (2015). «Global Padé approximations of the generalized Mittag-Leffler function and its inverse». Fractional Calculus and Applied Analysis. 18 (6): 1492–1506. arXiv:1310.5592. doi:10.1515/fca-2015-0086. S2CID 118148950.

Indeed, Winitzki [32] provided the so-called global Padé approximation

- ^ Winitzki, Sergei (6 February 2008). «A handy approximation for the error function and its inverse».

- ^ Numerical Recipes in Fortran 77: The Art of Scientific Computing (ISBN 0-521-43064-X), 1992, page 214, Cambridge University Press.

- ^ a b c Cody, W. J. (March 1993), «Algorithm 715: SPECFUN—A portable FORTRAN package of special function routines and test drivers» (PDF), ACM Trans. Math. Softw., 19 (1): 22–32, CiteSeerX 10.1.1.643.4394, doi:10.1145/151271.151273, S2CID 5621105

- ^ Zaghloul, M. R. (1 March 2007), «On the calculation of the Voigt line profile: a single proper integral with a damped sine integrand», Monthly Notices of the Royal Astronomical Society, 375 (3): 1043–1048, Bibcode:2007MNRAS.375.1043Z, doi:10.1111/j.1365-2966.2006.11377.x

- ^ John W. Craig, A new, simple and exact result for calculating the probability of error for two-dimensional signal constellations Archived 3 April 2012 at the Wayback Machine, Proceedings of the 1991 IEEE Military Communication Conference, vol. 2, pp. 571–575.

- ^ Behnad, Aydin (2020). «A Novel Extension to Craig’s Q-Function Formula and Its Application in Dual-Branch EGC Performance Analysis». IEEE Transactions on Communications. 68 (7): 4117–4125. doi:10.1109/TCOMM.2020.2986209. S2CID 216500014.

- ^ Carslaw, H. S.; Jaeger, J. C. (1959), Conduction of Heat in Solids (2nd ed.), Oxford University Press, ISBN 978-0-19-853368-9, p 484

- ^ https://pubs.opengroup.org/onlinepubs/9699919799/basedefs/math.h.html

- ^ «Special Functions – GSL 2.7 documentation».

Further reading

- Abramowitz, Milton; Stegun, Irene Ann, eds. (1983) [June 1964]. «Chapter 7». Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Applied Mathematics Series. Vol. 55 (Ninth reprint with additional corrections of tenth original printing with corrections (December 1972); first ed.). Washington D.C.; New York: United States Department of Commerce, National Bureau of Standards; Dover Publications. p. 297. ISBN 978-0-486-61272-0. LCCN 64-60036. MR 0167642. LCCN 65-12253.

- Press, William H.; Teukolsky, Saul A.; Vetterling, William T.; Flannery, Brian P. (2007), «Section 6.2. Incomplete Gamma Function and Error Function», Numerical Recipes: The Art of Scientific Computing (3rd ed.), New York: Cambridge University Press, ISBN 978-0-521-88068-8

- Temme, Nico M. (2010), «Error Functions, Dawson’s and Fresnel Integrals», in Olver, Frank W. J.; Lozier, Daniel M.; Boisvert, Ronald F.; Clark, Charles W. (eds.), NIST Handbook of Mathematical Functions, Cambridge University Press, ISBN 978-0-521-19225-5, MR 2723248

External links

- A Table of Integrals of the Error Functions


In mathematics, the error function (also called the Gauss error function), often denoted by erf, is a complex function of a complex variable defined as:[1]

- [math]\displaystyle{ \operatorname{erf} z = \frac{2}{\sqrt\pi}\int_0^z e^{-t^2}\,dt. }[/math]

This integral is a special (non-elementary) sigmoid function that occurs often in probability, statistics, and partial differential equations. In many of these applications, the function argument is a real number. If the function argument is real, then the function value is also real.

In statistics, for non-negative values of x, the error function has the following interpretation: for a random variable Y that is normally distributed with mean 0 and standard deviation 1/√2, erf x is the probability that Y falls in the range [−x, x].

Two closely related functions are the complementary error function (erfc), defined as

- [math]\displaystyle{ \operatorname{erfc} z = 1 - \operatorname{erf} z, }[/math]

and the imaginary error function (erfi), defined as

- [math]\displaystyle{ \operatorname{erfi} z = -i\operatorname{erf} iz, }[/math]

where i is the imaginary unit.

Name

The name "error function" and its abbreviation erf were proposed by J. W. L. Glaisher in 1871 on account of its connection with "the theory of Probability, and notably the theory of Errors."[2] The error function complement was also discussed by Glaisher in a separate publication in the same year.[3]

For the "law of facility" of errors whose density is given by

- [math]\displaystyle{ f(x)=\left(\frac{c}{\pi}\right)^\frac12 e^{-cx^2} }[/math]

(the normal distribution), Glaisher calculates the probability of an error lying between p and q as:

- [math]\displaystyle{ \left(\frac{c}{\pi}\right)^\frac12 \int_p^q e^{-cx^2}\,dx = \tfrac12\left(\operatorname{erf} \left(q\sqrt{c}\right) -\operatorname{erf} \left(p\sqrt{c}\right)\right). }[/math]

Plot of the error function Erf(z) in the complex plane from -2-2i to 2+2i with colors created with Mathematica 13.1 function ComplexPlot3D

Applications

When the results of a series of measurements are described by a normal distribution with standard deviation σ and expected value 0, then erf(a/(σ√2)) is the probability that the error of a single measurement lies between −a and +a, for positive a. This is useful, for example, in determining the bit error rate of a digital communication system.

The error and complementary error functions occur, for example, in solutions of the heat equation when boundary conditions are given by the Heaviside step function.

The error function and its approximations can be used to estimate results that hold with high probability or with low probability. Given a random variable X ~ Norm[μ,σ] (a normal distribution with mean μ and standard deviation σ) and a constant L < μ:

- [math]\displaystyle{ \begin{align}
\Pr[X\leq L] &= \frac12 + \frac12\operatorname{erf}\frac{L-\mu}{\sqrt{2}\sigma} \\
&\approx A \exp \left(-B \left(\frac{L-\mu}{\sigma}\right)^2\right) \end{align} }[/math]

where A and B are certain numeric constants. If L is sufficiently far from the mean, specifically μ − L ≥ σ√(ln k), then:

- [math]\displaystyle{ \Pr[X\leq L] \leq A \exp (-B \ln{k}) = \frac{A}{k^B} }[/math]

so the probability goes to 0 as k → ∞.

The probability for X being in the interval [La, Lb] can be derived as

- [math]\displaystyle{ \begin{align}
\Pr[L_a\leq X \leq L_b] &= \int_{L_a}^{L_b} \frac{1}{\sqrt{2\pi}\sigma} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right) \,dx \\
&= \frac12\left(\operatorname{erf}\frac{L_b-\mu}{\sqrt{2}\sigma} - \operatorname{erf}\frac{L_a-\mu}{\sqrt{2}\sigma}\right).\end{align} }[/math]
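As a quick illustration of the interval formula, the following sketch evaluates it with Python's `math.erf` and compares the result with the normal CDF from the standard library's `statistics.NormalDist`. The function name and the example parameters are chosen here for illustration only.

```python
import math
from statistics import NormalDist

def normal_interval_probability(la, lb, mu, sigma):
    """P[la <= X <= lb] for X ~ Normal(mu, sigma), written with the error function."""
    scale = math.sqrt(2.0) * sigma
    return 0.5 * (math.erf((lb - mu) / scale) - math.erf((la - mu) / scale))

mu, sigma = 1.0, 2.0
p_erf = normal_interval_probability(-1.0, 4.0, mu, sigma)
dist = NormalDist(mu, sigma)
p_cdf = dist.cdf(4.0) - dist.cdf(-1.0)  # same probability from the CDF
print(p_erf, p_cdf)
```

With μ = 0, σ = 1/√2 and the symmetric interval [−x, x], the function reduces to erf x, which is the statistical interpretation given in the introduction.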

Properties

Integrand exp(−z²)

erf z

The property erf(−z) = −erf z means that the error function is an odd function. This directly results from the fact that the integrand e^(−t²) is an even function (the antiderivative of an even function which is zero at the origin is an odd function and vice versa).

Since the error function is an entire function which takes real numbers to real numbers, for any complex number z:

- [math]\displaystyle{ \operatorname{erf} \overline{z} = \overline{\operatorname{erf} z} }[/math]

where z̄ is the complex conjugate of z.

The integrand f = exp(−z²) and f = erf z are shown in the complex z-plane in the figures at right with domain coloring.

The error function at +∞ is exactly 1 (see Gaussian integral). On the real axis, erf z approaches unity as z → +∞ and −1 as z → −∞. On the imaginary axis, it tends to ±i∞.

Taylor series

The error function is an entire function; it has no singularities (except that at infinity) and its Taylor expansion always converges, but is famously known "[…] for its bad convergence if x > 1."[4]

The defining integral cannot be evaluated in closed form in terms of elementary functions, but by expanding the integrand e^(−z²) into its Maclaurin series and integrating term by term, one obtains the error function's Maclaurin series as:

- [math]\displaystyle{ \begin{align} \operatorname{erf} z
&= \frac{2}{\sqrt\pi}\sum_{n=0}^\infty\frac{(-1)^n z^{2n+1}}{n! (2n+1)} \\[6pt]
&=\frac{2}{\sqrt\pi} \left(z-\frac{z^3}{3}+\frac{z^5}{10}-\frac{z^7}{42}+\frac{z^9}{216}-\cdots\right)
\end{align} }[/math]

which holds for every complex number z. The denominator terms are sequence A007680 in the OEIS.

For iterative calculation of the above series, the following alternative formulation may be useful:

- [math]\displaystyle{ \begin{align} \operatorname{erf} z
&= \frac{2}{\sqrt\pi}\sum_{n=0}^\infty\left(z \prod_{k=1}^n {\frac{-(2k-1) z^2}{k (2k+1)}}\right) \\[6pt]
&= \frac{2}{\sqrt\pi} \sum_{n=0}^\infty \frac{z}{2n+1} \prod_{k=1}^n \frac{-z^2}{k}
\end{align} }[/math]

because −(2k − 1)z²/(k(2k + 1)) expresses the multiplier to turn the kth term into the (k + 1)th term (considering z as the first term).
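The iterative formulation translates almost directly into code. The following is a minimal sketch: the function name, tolerance and term limit are illustrative choices, and the routine is meant to show the term-to-term multiplier rather than to replace a library implementation.

```python
import math

def erf_maclaurin(z, tol=1e-16, max_terms=200):
    """Sum the Maclaurin series of erf for a real or complex z, term by term."""
    term = z          # first term of the series (before the 2/sqrt(pi) prefactor)
    total = term
    for k in range(1, max_terms):
        # multiplier that turns the k-th term into the (k+1)-th term
        term *= -(2 * k - 1) * z * z / (k * (2 * k + 1))
        total += term
        if abs(term) <= tol * abs(total):
            break
    return 2.0 / math.sqrt(math.pi) * total

print(erf_maclaurin(0.5), math.erf(0.5))
print(erf_maclaurin(1 + 1j))
```

For larger |z| the alternating terms first grow before they shrink, so cancellation costs accuracy. This is the poor convergence for x > 1 mentioned above.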

The imaginary error function has a very similar Maclaurin series, which is:

- [math]\displaystyle{ \begin{align} \operatorname{erfi} z &= \frac{2}{\sqrt\pi}\sum_{n=0}^\infty\frac{z^{2n+1}}{n! (2n+1)} \\[6pt] &=\frac{2}{\sqrt\pi} \left(z+\frac{z^3}{3}+\frac{z^5}{10}+\frac{z^7}{42}+\frac{z^9}{216}+\cdots\right) \end{align} }[/math]

which holds for every complex number z.

Derivative and integral

The derivative of the error function follows immediately from its definition:

- [math]\displaystyle{ \frac{d}{dz}\operatorname{erf} z =\frac{2}{\sqrt\pi} e^{-z^2}. }[/math]

From this, the derivative of the imaginary error function is also immediate:

- [math]\displaystyle{ \frac{d}{dz}\operatorname{erfi} z =\frac{2}{\sqrt\pi} e^{z^2}. }[/math]

An antiderivative of the error function, obtainable by integration by parts, is

- [math]\displaystyle{ z\operatorname{erf}z + \frac{e^{-z^2}}{\sqrt\pi}. }[/math]

An antiderivative of the imaginary error function, also obtainable by integration by parts, is

- [math]\displaystyle{ z\operatorname{erfi}z - \frac{e^{z^2}}{\sqrt\pi}. }[/math]

Higher order derivatives are given by

- [math]\displaystyle{ \operatorname{erf}^{(k)}z = \frac{2 (-1)^{k-1}}{\sqrt\pi} \mathit{H}_{k-1}(z) e^{-z^2} = \frac{2}{\sqrt\pi} \frac{d^{k-1}}{dz^{k-1}} \left(e^{-z^2}\right),\qquad k=1, 2, \dots }[/math]

where H are the physicists' Hermite polynomials.[5]
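The higher-derivative formula can be evaluated with the usual three-term recurrence for the physicists' Hermite polynomials, H₀ = 1, H₁ = 2x, H_{n+1} = 2x·H_n − 2n·H_{n−1}. The recurrence is a standard fact rather than something stated in the text, and the function names below are illustrative.

```python
import math

def hermite_phys(n, x):
    """Physicists' Hermite polynomial H_n(x) via the three-term recurrence."""
    h_prev, h = 1.0, 2.0 * x
    if n == 0:
        return h_prev
    for m in range(1, n):
        h_prev, h = h, 2.0 * x * h - 2.0 * m * h_prev
    return h

def erf_derivative(k, x):
    """k-th derivative of erf at real x, for k >= 1."""
    sign = (-1) ** (k - 1)
    return 2.0 * sign / math.sqrt(math.pi) * hermite_phys(k - 1, x) * math.exp(-x * x)

x = 0.7
print(erf_derivative(1, x), 2.0 / math.sqrt(math.pi) * math.exp(-x * x))
print(erf_derivative(2, x), -4.0 * x / math.sqrt(math.pi) * math.exp(-x * x))
```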

Bürmann series

An expansion,[6] which converges more rapidly for all real values of x than a Taylor expansion, is obtained by using Hans Heinrich Bürmann’s theorem:[7]

- [math]\displaystyle{ \begin{align}
\operatorname{erf} x &= \frac 2 {\sqrt\pi} \operatorname{sgn} x \cdot \sqrt{1-e^{-x^2}} \left( 1-\frac{1}{12} \left (1-e^{-x^2} \right ) -\frac{7}{480} \left (1-e^{-x^2} \right )^2 -\frac{5}{896} \left (1-e^{-x^2} \right )^3-\frac{787}{276 480} \left (1-e^{-x^2} \right )^4 - \cdots \right) \\[10pt]
&= \frac{2}{\sqrt\pi} \operatorname{sgn} x \cdot \sqrt{1-e^{-x^2}} \left(\frac{\sqrt\pi}{2} + \sum_{k=1}^\infty c_k e^{-kx^2} \right).
\end{align} }[/math]

where sgn is the sign function. By keeping only the first two coefficients and choosing c1 = 31/200 and c2 = −341/8000, the resulting approximation shows its largest relative error at x = ±1.3796, where it is less than 0.0036127:

- [math]\displaystyle{ \operatorname{erf} x \approx \frac{2}{\sqrt\pi}\operatorname{sgn} x \cdot \sqrt{1-e^{-x^2}} \left(\frac{\sqrt{\pi }}{2} + \frac{31}{200}e^{-x^2}-\frac{341}{8000} e^{-2x^2}\right). }[/math]
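The two-coefficient approximation is simple enough to test directly. The sketch below implements it as written and scans a grid of positive x for the largest relative error against `math.erf`. The function name and the grid are illustrative choices.

```python
import math

def erf_burmann(x):
    """Two-coefficient Buermann-type approximation of erf for real x != 0."""
    e = math.exp(-x * x)
    series = math.sqrt(math.pi) / 2.0 + (31.0 / 200.0) * e - (341.0 / 8000.0) * e * e
    return math.copysign(1.0, x) * 2.0 / math.sqrt(math.pi) * math.sqrt(1.0 - e) * series

xs = [0.01 * i for i in range(1, 401)]
worst = max(abs(erf_burmann(x) - math.erf(x)) / math.erf(x) for x in xs)
print(worst)
```

On this grid the worst relative error occurs near x ≈ 1.38, in line with the location quoted above.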

Inverse functions

Given a complex number z, there is not a unique complex number w satisfying erf w = z, so a true inverse function would be multivalued. However, for −1 < x < 1, there is a unique real number denoted erf⁻¹ x satisfying

- [math]\displaystyle{ \operatorname{erf}\left(\operatorname{erf}^{-1} x\right) = x. }[/math]

The inverse error function is usually defined with domain (−1,1), and it is restricted to this domain in many computer algebra systems. However, it can be extended to the disk |z| < 1 of the complex plane, using the Maclaurin series

- [math]\displaystyle{ \operatorname{erf}^{-1} z=\sum_{k=0}^\infty\frac{c_k}{2k+1}\left (\frac{\sqrt\pi}{2}z\right )^{2k+1}, }[/math]

where c₀ = 1 and

- [math]\displaystyle{ \begin{align}
c_k&=\sum_{m=0}^{k-1}\frac{c_m c_{k-1-m}}{(m+1)(2m+1)} \\ &= \left\{1,1,\frac{7}{6},\frac{127}{90},\frac{4369}{2520},\frac{34807}{16200},\ldots\right\}.
\end{align} }[/math]

So we have the series expansion (common factors have been canceled from numerators and denominators):

- [math]\displaystyle{ \operatorname{erf}^{-1} z=\frac{\sqrt{\pi}}{2} \left (z+\frac{\pi}{12}z^3+\frac{7\pi^2}{480}z^5+\frac{127\pi^3}{40320}z^7+\frac{4369\pi^4}{5806080}z^9+\frac{34807\pi^5}{182476800}z^{11}+\cdots\right ). }[/math]

(After cancellation, the numerators and denominators are OEIS entries A092676 and A092677; without cancellation, the numerator terms are given in entry A002067.) The error function’s value at ±∞ is equal to ±1.

For |z| < 1, we have erf(erf⁻¹ z) = z.
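The recurrence for the coefficients can be evaluated exactly with rational arithmetic. This is a minimal sketch under stated assumptions: the names `inverse_erf_coefficients` and `erfinv_series` are hypothetical, and the truncated series is only meant for arguments well inside |z| < 1, since convergence slows sharply near ±1.

```python
import math
from fractions import Fraction

def inverse_erf_coefficients(count: int) -> list:
    """Return [c_0, ..., c_{count-1}] as exact fractions, using the recurrence above."""
    c = [Fraction(1)]
    for k in range(1, count):
        c.append(sum(c[m] * c[k - 1 - m] / ((m + 1) * (2 * m + 1)) for m in range(k)))
    return c

def erfinv_series(z: float, terms: int = 40) -> float:
    """Truncated Maclaurin series for the inverse error function at real z."""
    c = inverse_erf_coefficients(terms)
    w = math.sqrt(math.pi) / 2 * z
    return sum(float(c[k]) / (2 * k + 1) * w ** (2 * k + 1) for k in range(terms))
```

For example, `inverse_erf_coefficients(6)` reproduces the six values listed above, and `math.erf(erfinv_series(0.5))` returns 0.5 to within rounding error.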

The inverse complementary error function is defined as

- [math]\displaystyle{ \operatorname{erfc}^{-1}(1-z) = \operatorname{erf}^{-1} z. }[/math]

For real x, there is a unique real number erfi⁻¹ x satisfying erfi(erfi⁻¹ x) = x. The inverse imaginary error function is defined as erfi⁻¹ x.[8]

For any real x, Newton’s method can be used to compute erfi⁻¹ x, and for −1 ≤ x ≤ 1, the following Maclaurin series converges:

- [math]\displaystyle{ \operatorname{erfi}^{-1} z =\sum_{k=0}^\infty\frac{(-1)^k c_k}{2k+1}\left (\frac{\sqrt\pi}{2}z\right )^{2k+1}, }[/math]

where c_k is defined as above.

Asymptotic expansion

A useful asymptotic expansion of the complementary error function (and therefore also of the error function) for large real x is

- [math]\displaystyle{ \begin{align}
\operatorname{erfc} x &= \frac{e^{-x^2}}{x\sqrt{\pi}}\left(1 + \sum_{n=1}^\infty (-1)^n \frac{1\cdot3\cdot5\cdots(2n - 1)}{\left(2x^2\right)^n}\right) \\[6pt]
&= \frac{e^{-x^2}}{x\sqrt{\pi}}\sum_{n=0}^\infty (-1)^n \frac{(2n - 1)!!}{\left(2x^2\right)^n},
\end{align} }[/math]

where (2n − 1)!! is the double factorial of (2n − 1), which is the product of all odd numbers up to (2n − 1). This series diverges for every finite x, and its meaning as asymptotic expansion is that for any integer N ≥ 1 one has

- [math]\displaystyle{ \operatorname{erfc} x = \frac{e^{-x^2}}{x\sqrt{\pi}}\sum_{n=0}^{N-1} (-1)^n \frac{(2n - 1)!!}{\left(2x^2\right)^n} + R_N(x) }[/math]

where the remainder, in Landau notation, is

- [math]\displaystyle{ R_N(x) = O\left(x^{- (1 + 2N)} e^{-x^2}\right) }[/math]

as x → ∞.

Indeed, the exact value of the remainder is

- [math]\displaystyle{ R_N(x) := \frac{(-1)^N}{\sqrt\pi} 2^{1 - 2N}\frac{(2N)!}{N!}\int_x^\infty t^{-2N}e^{-t^2}\,dt, }[/math]

which follows easily by induction, writing

- [math]\displaystyle{ e^{-t^2} = -(2t)^{-1}\left(e^{-t^2}\right)' }[/math]

and integrating by parts.

For large enough values of x, only the first few terms of this asymptotic expansion are needed to obtain a good approximation of erfc x (while for not too large values of x, the above Taylor expansion at 0 provides a very fast convergence).
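The behaviour of the truncated expansion can be explored with a few lines of code. The sketch below uses an illustrative function name, `erfc_asymptotic`, and relies on one extra observation for real x > 0: bounding t^(−2N−1) by x^(−2N−1) in the exact remainder integral shows that |R_N(x)| does not exceed the magnitude of the first omitted term.

```python
import math

def erfc_asymptotic(x: float, n_terms: int) -> tuple:
    """Return (sum of the first n_terms terms, magnitude of the first omitted term)."""
    prefactor = math.exp(-x * x) / (x * math.sqrt(math.pi))
    term = 1.0   # n = 0 term, with (-1)!! taken as 1
    total = 0.0
    for n in range(n_terms):
        total += term
        # ratio of consecutive terms: -(2n + 1) / (2 x^2)
        term *= -(2 * n + 1) / (2 * x * x)
    return prefactor * total, prefactor * abs(term)
```

For x = 3 and five terms, the estimate agrees with `math.erfc(3.0)` to within the returned bound. For fixed x the bound eventually grows with the number of terms, which reflects the divergence of the series.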

Continued fraction expansion

A continued fraction expansion of the complementary error function is:[9]

- [math]\displaystyle{ \operatorname{erfc} z = \frac{z}{\sqrt\pi}e^{-z^2} \cfrac{1}{z^2+ \cfrac{a_1}{1+\cfrac{a_2}{z^2+ \cfrac{a_3}{1+\dotsb}}}},\qquad a_m = \frac{m}{2}. }[/math]
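A truncated continued fraction is most easily evaluated from the innermost level outwards. The following sketch assumes a real argument z > 0 and a fixed truncation depth; the name `erfc_continued_fraction` and the default `depth=400` are illustrative choices, not part of the cited source. Convergence becomes slow as z approaches 0.

```python
import math

def erfc_continued_fraction(z: float, depth: int = 400) -> float:
    """Evaluate the continued fraction for erfc(z), z > 0, truncated after `depth` levels."""
    tail = 0.0
    for m in range(depth, 0, -1):
        # Partial denominators alternate: 1 at odd levels, z^2 at even levels.
        b = 1.0 if m % 2 else z * z
        tail = (m / 2) / (b + tail)  # a_m = m / 2
    return z / math.sqrt(math.pi) * math.exp(-z * z) / (z * z + tail)
```

Comparing the result with `math.erfc` for moderate z such as 1, 2 or 3 is a quick consistency check on the level ordering.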

Integral of error function with Gaussian density function

- [math]\displaystyle{ \int_{-\infty}^{\infty} \operatorname{erf} \left(ax+b \right) \frac{1}{\sqrt{2\pi \sigma^2}}\exp\left(-\frac{(x-\mu)^2}{2 \sigma^2} \right)\,dx= \operatorname{erf} \frac{a\mu+b}{\sqrt{1+2 a^2 \sigma^2}} , \qquad a,b,\mu,\sigma \in \mathbb{R} }[/math]

which appears related to Ng and Geller, formula 13 in section 4.3[10] with a change of variables.
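The identity can be spot-checked by numerical quadrature. This is a rough sketch only: the function names, the trapezoidal rule, the truncation of the integration range to μ ± 10σ, and the sample parameter values are all assumptions made for illustration.

```python
import math

def expected_erf_numeric(a, b, mu, sigma, n=20001, width=10.0):
    """Trapezoidal estimate of the integral of erf(a x + b) times a normal density."""
    lo, hi = mu - width * sigma, mu + width * sigma
    h = (hi - lo) / (n - 1)
    total = 0.0
    for i in range(n):
        x = lo + i * h
        weight = 0.5 if i in (0, n - 1) else 1.0
        density = math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / math.sqrt(2 * math.pi * sigma ** 2)
        total += weight * math.erf(a * x + b) * density
    return total * h

def expected_erf_closed_form(a, b, mu, sigma):
    """Right-hand side of the identity above."""
    return math.erf((a * mu + b) / math.sqrt(1 + 2 * a ** 2 * sigma ** 2))
```

With, say, a = 0.7, b = 0.3, μ = 0.5 and σ = 1.2, the two functions agree to many digits.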

Factorial series

The inverse factorial series:

- [math]\displaystyle{ \begin{align}
\operatorname{erfc} z &= \frac{e^{-z^2}}{\sqrt{\pi}\,z} \sum_{n=0}^\infty \frac{(-1)^n Q_n}{{(z^2+1)}^{\bar{n}}}\\
&= \frac{e^{-z^2}}{\sqrt{\pi}\,z}\left(1 -\frac12\frac{1}{(z^2+1)} + \frac{1}{4}\frac{1}{(z^2+1)(z^2+2)} - \cdots \right)
\end{align} }[/math]

converges for Re(z²) > 0. Here

- [math]\displaystyle{ \begin{align} Q_n
&\overset{\text{def}}{{}={}} \frac{1}{\Gamma\left(\frac12\right)} \int_0^\infty \tau(\tau-1)\cdots(\tau-n+1)\tau^{-\frac12} e^{-\tau} \,d\tau \\
&= \sum_{k=0}^n \left(\tfrac12\right)^{\bar{k}} s(n,k), \end{align} }[/math]

[math]\displaystyle{ z^{\bar{n}} }[/math] denotes the rising factorial, and s(n,k) denotes a signed Stirling number of the first kind.[11][12]

There also exists a representation by an infinite sum containing the double factorial:

- [math]\displaystyle{ \operatorname{erf} z = \frac{2}{\sqrt\pi} \sum_{n=0}^\infty \frac{(-2)^n(2n-1)!!}{(2n+1)!}z^{2n+1} }[/math]

Numerical approximations

Approximation with elementary functions

- Abramowitz and Stegun give several approximations of varying accuracy (equations 7.1.25–28). This allows one to choose the fastest approximation suitable for a given application. In order of increasing accuracy, they are:

- [math]\displaystyle{ \operatorname{erf} x \approx 1 - \frac{1}{\left(1 + a_1x + a_2x^2 + a_3x^3 + a_4x^4\right)^4}, \qquad x \geq 0 }[/math]

(maximum error: 5×10⁻⁴)

where a₁ = 0.278393, a₂ = 0.230389, a₃ = 0.000972, a₄ = 0.078108

- [math]\displaystyle{ \operatorname{erf} x \approx 1 - \left(a_1t + a_2t^2 + a_3t^3\right)e^{-x^2},\quad t=\frac{1}{1 + px}, \qquad x \geq 0 }[/math]

(maximum error: 2.5×10⁻⁵)

where p = 0.47047, a₁ = 0.3480242, a₂ = −0.0958798, a₃ = 0.7478556

- [math]\displaystyle{ \operatorname{erf} x \approx 1 - \frac{1}{\left(1 + a_1x + a_2x^2 + \cdots + a_6x^6\right)^{16}}, \qquad x \geq 0 }[/math]

(maximum error: 3×10⁻⁷)

where a₁ = 0.0705230784, a₂ = 0.0422820123, a₃ = 0.0092705272, a₄ = 0.0001520143, a₅ = 0.0002765672, a₆ = 0.0000430638

- [math]\displaystyle{ \operatorname{erf} x \approx 1 - \left(a_1t + a_2t^2 + \cdots + a_5t^5\right)e^{-x^2},\quad t = \frac{1}{1 + px} }[/math]

(maximum error: 1.5×10⁻⁷)

where p = 0.3275911, a₁ = 0.254829592, a₂ = −0.284496736, a₃ = 1.421413741, a₄ = −1.453152027, a₅ = 1.061405429

All of these approximations are valid for x ≥ 0. To use these approximations for negative x, use the fact that erf x is an odd function, so erf x = −erf(−x).
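As an illustration, the last of the four formulas (five coefficients, maximum error 1.5×10⁻⁷) can be coded as follows, with the odd symmetry used for negative arguments. The function name is hypothetical and the polynomial is evaluated with Horner's scheme, which is an implementation choice rather than part of the cited formula.

```python
import math

P = 0.3275911
A = (0.254829592, -0.284496736, 1.421413741, -1.453152027, 1.061405429)

def erf_five_term(x: float) -> float:
    """Five-coefficient approximation of erf, extended to x < 0 by oddness."""
    ax = abs(x)
    t = 1.0 / (1.0 + P * ax)
    poly = 0.0
    for coeff in reversed(A):
        poly = (poly + coeff) * t  # builds a1*t + a2*t^2 + ... + a5*t^5
    value = 1.0 - poly * math.exp(-ax * ax)
    return math.copysign(value, x)
```

On a grid of real arguments the result stays within the quoted error of `math.erf`.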

- Exponential bounds and a pure exponential approximation for the complementary error function are given by[13]

- [math]\displaystyle{ \begin{align}
\operatorname{erfc} x &\leq \tfrac12e^{-2 x^2} + \tfrac12e^{- x^2} \leq e^{-x^2}, &\quad x \gt 0 \\
\operatorname{erfc} x &\approx \tfrac16e^{-x^2} + \tfrac12e^{-\frac43 x^2}, &\quad x \gt 0 .
\end{align} }[/math]
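Both expressions are cheap to evaluate, which is their main appeal. The sketch below simply transcribes them (the function names are illustrative) so that the chain of inequalities can be checked on sample points.

```python
import math

def erfc_upper_bound(x: float) -> float:
    """Two-exponential upper bound on erfc(x) for x > 0."""
    return 0.5 * math.exp(-2 * x * x) + 0.5 * math.exp(-x * x)

def erfc_exp_approx(x: float) -> float:
    """Pure exponential approximation of erfc(x) for x > 0."""
    return math.exp(-x * x) / 6 + 0.5 * math.exp(-4 * x * x / 3)
```

For instance, at x = 1 the bound evaluates to about 0.25, compared with erfc 1 ≈ 0.157.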


- The above have been generalized to sums of N exponentials[14] with increasing accuracy in terms of N so that erfc x can be accurately approximated or bounded by 2Q̃(√2x), where

- [math]\displaystyle{ \tilde{Q}(x) = \sum_{n=1}^N a_n e^{-b_n x^2}. }[/math]

In particular, there is a systematic methodology to solve for the numerical coefficients {(aₙ, bₙ)}, n = 1, …, N, that yield a minimax approximation or bound for the closely related Q-function: Q(x) ≈ Q̃(x), Q(x) ≤ Q̃(x), or Q(x) ≥ Q̃(x) for x ≥ 0. The coefficients {(aₙ, bₙ)}, n = 1, …, N, for many variations of the exponential approximations and bounds up to N = 25 have been released to open access as a comprehensive dataset.[15]

- A tight approximation of the complementary error function for x ∈ [0,∞) is given by Karagiannidis & Lioumpas (2007)[16] who showed for the appropriate choice of parameters {A,B} that

- [math]\displaystyle{ \operatorname{erfc} x \approx \frac{\left(1 - e^{-Ax}\right)e^{-x^2}}{B\sqrt{\pi} x}. }[/math]

They determined {A,B} = {1.98,1.135}, which gave a good approximation for all x ≥ 0. Alternative coefficients are also available for tailoring accuracy for a specific application or transforming the expression into a tight bound.[17]

- A single-term lower bound is[18]

- [math]\displaystyle{ \operatorname{erfc} x \geq \sqrt{\frac{2 e}{\pi}} \frac{\sqrt{\beta - 1}}{\beta} e^{- \beta x^2}, \qquad x \ge 0,\quad \beta \gt 1, }[/math]

where the parameter β can be picked to minimize error on the desired interval of approximation.

- Another approximation is given by Sergei Winitzki using his "global Padé approximations":[19][20]:2–3

- [math]\displaystyle{ \operatorname{erf} x \approx \sgn x \cdot \sqrt{1 - \exp\left(-x^2\frac{\frac{4}{\pi} + ax^2}{1 + ax^2}\right)} }[/math]

where

- [math]\displaystyle{ a = \frac{8(\pi - 3)}{3\pi(4 - \pi)} \approx 0.140012. }[/math]

This is designed to be very accurate in a neighborhood of 0 and a neighborhood of infinity, and the relative error is less than 0.00035 for all real x. Using the alternate value a ≈ 0.147 reduces the maximum relative error to about 0.00013.[21]

This approximation can be inverted to obtain an approximation for the inverse error function:

- [math]\displaystyle{ \operatorname{erf}^{-1}x \approx \sgn x \cdot \sqrt{\sqrt{\left(\frac{2}{\pi a} + \frac{\ln\left(1 - x^2\right)}{2}\right)^2 - \frac{\ln\left(1 - x^2\right)}{a}} -\left(\frac{2}{\pi a} + \frac{\ln\left(1 - x^2\right)}{2}\right)}. }[/math]
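Because the inverse formula is an exact algebraic inversion of the forward approximation, the two can be tested against each other. The following is a minimal sketch with hypothetical function names; the use of `expm1` and `log1p` is an implementation choice made here for accuracy near 0, not something prescribed by the cited notes.

```python
import math

A_WINITZKI = 8 * (math.pi - 3) / (3 * math.pi * (4 - math.pi))  # about 0.140012

def erf_winitzki(x: float, a: float = A_WINITZKI) -> float:
    """Winitzki-style approximation of erf for real x."""
    x2 = x * x
    exponent = -x2 * (4 / math.pi + a * x2) / (1 + a * x2)
    return math.copysign(math.sqrt(-math.expm1(exponent)), x)

def erfinv_winitzki(y: float, a: float = A_WINITZKI) -> float:
    """Inverse of erf_winitzki, defined for -1 < y < 1."""
    log_term = math.log1p(-y * y)  # ln(1 - y^2)
    u = 2 / (math.pi * a) + log_term / 2
    return math.copysign(math.sqrt(math.sqrt(u * u - log_term / a) - u), y)
```

Passing `a=0.147` to both functions selects the alternate coefficient mentioned above.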

- An approximation with a maximal error of 1.2×10−7 for any real argument is:[22]

- [math]\displaystyle{ \operatorname{erf} x = \begin{cases}
1-\tau & x\ge 0\\
\tau-1 & x \lt 0
\end{cases} }[/math]

with

- [math]\displaystyle{ \begin{align}
\tau &= t\cdot\exp\left(-x^2-1.26551223+1.00002368 t+0.37409196 t^2+0.09678418 t^3 -0.18628806 t^4\right.\\
&\left. \qquad\qquad\qquad +0.27886807 t^5-1.13520398 t^6+1.48851587 t^7 -0.82215223 t^8+0.17087277 t^9\right)
\end{align} }[/math]

and

- [math]\displaystyle{ t=\frac{1}{1+\frac12|x|}. }[/math]
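This formula translates directly into code. The sketch below uses an illustrative function name and evaluates the degree-9 polynomial in t with Horner's scheme; it is a transcription of the expressions above, not the routine from the cited book.

```python
import math

COEFFS = (-1.26551223, 1.00002368, 0.37409196, 0.09678418, -0.18628806,
          0.27886807, -1.13520398, 1.48851587, -0.82215223, 0.17087277)

def erf_exp_poly(x: float) -> float:
    """Approximate erf(x) for any real x via tau = t * exp(-x^2 + polynomial in t)."""
    t = 1.0 / (1.0 + 0.5 * abs(x))
    poly = 0.0
    for c in reversed(COEFFS):
        poly = poly * t + c  # c0 + c1*t + ... + c9*t^9
    tau = t * math.exp(-x * x + poly)
    return 1.0 - tau if x >= 0 else tau - 1.0
```

Sampling real arguments and comparing with `math.erf` shows deviations on the order of 10⁻⁷.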


Table of values

| x | erf x | 1 − erf x |

|---|---|---|

| 0 | 0 | 1 |

| 0.02 | 0.022564575 | 0.977435425 |

| 0.04 | 0.045111106 | 0.954888894 |

| 0.06 | 0.067621594 | 0.932378406 |

| 0.08 | 0.090078126 | 0.909921874 |

| 0.1 | 0.112462916 | 0.887537084 |

| 0.2 | 0.222702589 | 0.777297411 |

| 0.3 | 0.328626759 | 0.671373241 |

| 0.4 | 0.428392355 | 0.571607645 |

| 0.5 | 0.520499878 | 0.479500122 |

| 0.6 | 0.603856091 | 0.396143909 |

| 0.7 | 0.677801194 | 0.322198806 |

| 0.8 | 0.742100965 | 0.257899035 |

| 0.9 | 0.796908212 | 0.203091788 |

| 1 | 0.842700793 | 0.157299207 |

| 1.1 | 0.880205070 | 0.119794930 |

| 1.2 | 0.910313978 | 0.089686022 |

| 1.3 | 0.934007945 | 0.065992055 |

| 1.4 | 0.952285120 | 0.047714880 |

| 1.5 | 0.966105146 | 0.033894854 |

| 1.6 | 0.976348383 | 0.023651617 |

| 1.7 | 0.983790459 | 0.016209541 |

| 1.8 | 0.989090502 | 0.010909498 |

| 1.9 | 0.992790429 | 0.007209571 |

| 2 | 0.995322265 | 0.004677735 |

| 2.1 | 0.997020533 | 0.002979467 |

| 2.2 | 0.998137154 | 0.001862846 |

| 2.3 | 0.998856823 | 0.001143177 |

| 2.4 | 0.999311486 | 0.000688514 |

| 2.5 | 0.999593048 | 0.000406952 |

| 3 | 0.999977910 | 0.000022090 |

| 3.5 | 0.999999257 | 0.000000743 |

Complementary error function

Plot of the complementary error function Erfc(z) in the complex plane from −2−2i to 2+2i, with colors created with the Mathematica 13.1 function ComplexPlot3D

The complementary error function, denoted erfc, is defined as

- [math]\displaystyle{ \begin{align}
\operatorname{erfc} x
& = 1-\operatorname{erf} x \\[5pt]
& = \frac{2}{\sqrt\pi} \int_x^\infty e^{-t^2}\, dt \\[5pt]
& = e^{-x^2} \operatorname{erfcx} x,
\end{align} }[/math]

which also defines erfcx, the scaled complementary error function[23] (which can be used instead of erfc to avoid arithmetic underflow[23][24]). Another form of erfc x for x ≥ 0 is known as Craig’s formula, after its discoverer:[25]

- [math]\displaystyle{ \operatorname{erfc} (x \mid x\ge 0) = \frac 2 \pi \int_0^{\frac{\pi}{2}} \exp \left( - \frac{x^2}{\sin^2 \theta} \right) \, d\theta. }[/math]

This expression is valid only for positive values of x, but it can be used in conjunction with erfc x = 2 − erfc(−x) to obtain erfc(x) for negative values. This form is advantageous in that the range of integration is fixed and finite. An extension of this expression for the erfc of the sum of two non-negative variables is as follows:[26]

- [math]\displaystyle{ \operatorname{erfc} (x+y \mid x,y\ge 0) = \frac 2 \pi \int_0^{\frac{\pi}{2}} \exp \left( - \frac{x^2}{\sin^2 \theta} - \frac{y^2}{\cos^2 \theta} \right) \, d\theta. }[/math]
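Because the integration range is fixed and finite, both formulas are straightforward to evaluate by quadrature. The sketch below uses a plain midpoint rule, which never samples the endpoints where sin θ or cos θ vanish; the function names and the default of 2000 subintervals are assumptions made for this example.

```python
import math

def erfc_sum_craig(x: float, y: float = 0.0, n: int = 2000) -> float:
    """Midpoint-rule estimate of erfc(x + y) for x, y >= 0 via the two-variable formula."""
    h = (math.pi / 2) / n
    total = 0.0
    for i in range(n):
        theta = (i + 0.5) * h
        total += math.exp(-x * x / math.sin(theta) ** 2 - y * y / math.cos(theta) ** 2)
    return 2 / math.pi * total * h

def erfc_craig(x: float, n: int = 2000) -> float:
    """Craig's formula for erfc(x), extended to x < 0 with erfc(x) = 2 - erfc(-x)."""
    if x < 0:
        return 2.0 - erfc_sum_craig(-x, 0.0, n)
    return erfc_sum_craig(x, 0.0, n)
```

Setting y = 0 in the two-variable form recovers Craig's formula, which is why a single integration routine serves both.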

Imaginary error function

Plot of the imaginary error function Erfi(z) in the complex plane from −2−2i to 2+2i, with colors created with the Mathematica 13.1 function ComplexPlot3D

The imaginary error function, denoted erfi, is defined as

- [math]\displaystyle{ \begin{align}
\operatorname{erfi} x
& = -i\operatorname{erf} ix \\[5pt]
& = \frac{2}{\sqrt\pi} \int_0^x e^{t^2}\,dt \\[5pt]
& = \frac{2}{\sqrt\pi} e^{x^2} D(x),
\end{align} }[/math]

where D(x) is the Dawson function (which can be used instead of erfi to avoid arithmetic overflow[23]).

Despite the name "imaginary error function", erfi x is real when x is real.

When the error function is evaluated for arbitrary complex arguments z, the resulting complex error function is usually discussed in scaled form as the Faddeeva function:

- [math]\displaystyle{ w(z) = e^{-z^2}\operatorname{erfc}(-iz) = \operatorname{erfcx}(-iz). }[/math]

Cumulative distribution function

The error function is essentially identical to the standard normal cumulative distribution function, denoted Φ, also named norm(x) by some software languages, as they differ only by scaling and translation. Indeed,

- [math]\displaystyle{ \begin{align}\Phi(x)
&=\frac{1}{\sqrt{2\pi}}\int_{-\infty}^x e^{-t^2/2}\,dt\\[6pt]
&= \frac12 \left(1+\operatorname{erf}\frac{x}{\sqrt 2}\right)\\[6pt]
&=\frac12\operatorname{erfc}\left(-\frac{x}{\sqrt 2}\right)
\end{align} }[/math]

or rearranged for erf and erfc:

- [math]\displaystyle{ \begin{align}
\operatorname{erf}(x) &= 2 \Phi \left ( x \sqrt{2} \right ) - 1 \\[6pt]
\operatorname{erfc}(x) &= 2 \Phi \left ( - x \sqrt{2} \right ) \\ &=2\left(1-\Phi \left ( x \sqrt{2} \right)\right).
\end{align} }[/math]

Consequently, the error function is also closely related to the Q-function, which is the tail probability of the standard normal distribution. The Q-function can be expressed in terms of the error function as

- [math]\displaystyle{ \begin{align}
Q(x) &=\frac12 - \frac12 \operatorname{erf} \frac{x}{\sqrt 2}\\
&=\frac12\operatorname{erfc}\frac{x}{\sqrt 2}.
\end{align} }[/math]

The inverse of Φ is known as the normal quantile function, or probit function, and may be expressed in terms of the inverse error function as

- [math]\displaystyle{ \operatorname{probit}(p) = \Phi^{-1}(p) = \sqrt{2}\operatorname{erf}^{-1}(2p-1) = -\sqrt{2}\operatorname{erfc}^{-1}(2p). }[/math]
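These relations are convenient in code because many standard libraries expose erf and erfc but not Φ, or the reverse. The sketch below uses Python's `math.erf`, `math.erfc` and `statistics.NormalDist` (available since Python 3.8); the helper names `phi`, `q_function` and `erfinv_via_probit` are illustrative.

```python
import math
from statistics import NormalDist

def phi(x: float) -> float:
    """Standard normal CDF expressed through erf."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def q_function(x: float) -> float:
    """Standard normal tail probability expressed through erfc."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def erfinv_via_probit(y: float) -> float:
    """Inverse error function for -1 < y < 1, from probit(p) = sqrt(2) * erfinv(2p - 1)."""
    return NormalDist().inv_cdf((y + 1.0) / 2.0) / math.sqrt(2.0)
```

Using erfc for the tail, rather than computing 1 − Φ(x), avoids cancellation when x is large.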

The standard normal cdf is used more often in probability and statistics, and the error function is used more often in other branches of mathematics.

The error function is a special case of the Mittag-Leffler function, and can also be expressed as a confluent hypergeometric function (Kummer’s function):

- [math]\displaystyle{ \operatorname{erf} x = \frac{2x}{\sqrt\pi} M\left(\tfrac12,\tfrac32,-x^2\right). }[/math]

It has a simple expression in terms of the Fresnel integral.

In terms of the regularized gamma function P and the incomplete gamma function,

- [math]\displaystyle{ \operatorname{erf} x = \sgn x \cdot P\left(\tfrac12, x^2\right) = \frac{\sgn x}{\sqrt\pi}\gamma\left(\tfrac12, x^2\right), }[/math]

where sgn is the sign function.

Generalized error functions

Graph of generalised error functions En(x):

grey curve: E1(x) = (1 − e⁻ˣ)/√π

red curve: E2(x) = erf(x)

green curve: E3(x)

blue curve: E4(x)

gold curve: E5(x).

Some authors discuss the more general functions:

- [math]\displaystyle{ E_n(x) = \frac{n!}{\sqrt\pi} \int_0^x e^{-t^n}\,dt =\frac{n!}{\sqrt\pi}\sum_{p=0}^\infty(-1)^p\frac{x^{np+1}}{(np+1)p!}. }[/math]

Notable cases are:

- E0(x) is a straight line through the origin: E0(x) = x/(e√π)

- E2(x) is the error function, erf x.

After division by n!, all the En for odd n look similar (but not identical) to each other. Similarly, the En for even n look similar (but not identical) to each other after a simple division by n!. All generalised error functions for n > 0 look similar on the positive x side of the graph.

These generalised functions can equivalently be expressed for x > 0 using the gamma function and incomplete gamma function:

- [math]\displaystyle{ E_n(x) = \frac{1}{\sqrt\pi}\Gamma(n)\left(\Gamma\left(\frac{1}{n}\right) - \Gamma\left(\frac{1}{n}, x^n\right)\right), \qquad x \gt 0. }[/math]

Therefore, we can define the error function in terms of the incomplete gamma function:

- [math]\displaystyle{ \operatorname{erf} x = 1 - \frac{1}{\sqrt\pi}\Gamma\left(\tfrac12, x^2\right). }[/math]
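For moderate arguments the power series for E_n can be summed directly. The sketch below is only an illustration: the name `generalized_erf` and the fixed number of terms are assumptions, and the alternating series loses accuracy to cancellation when x is large.

```python
import math

def generalized_erf(n: int, x: float, terms: int = 80) -> float:
    """Partial sum of the power series for E_n(x), n >= 1, for moderate x >= 0."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    total = 0.0
    for p in range(terms):
        total += (-1) ** p * x ** (n * p + 1) / ((n * p + 1) * math.factorial(p))
    return math.factorial(n) / math.sqrt(math.pi) * total
```

With n = 2 the function reproduces erf x, and with n = 1 it reproduces (1 − e⁻ˣ)/√π, matching the special cases discussed in this section.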

Iterated integrals of the complementary error function

The iterated integrals of the complementary error function are defined by[27]

- [math]\displaystyle{ \begin{align}
\operatorname{i}^n\!\operatorname{erfc} z &= \int_z^\infty \operatorname{i}^{n-1}\!\operatorname{erfc} \zeta\, d\zeta \\[6pt]
\operatorname{i}^0\!\operatorname{erfc} z &= \operatorname{erfc} z \\
\operatorname{i}^1\!\operatorname{erfc} z &= \operatorname{ierfc} z = \frac{1}{\sqrt\pi} e^{-z^2} - z \operatorname{erfc} z \\
\operatorname{i}^2\!\operatorname{erfc} z &= \tfrac14 \left( \operatorname{erfc} z -2 z \operatorname{ierfc} z \right) \\
\end{align} }[/math]

The general recurrence formula is

- [math]\displaystyle{ 2 n \cdot \operatorname{i}^n\!\operatorname{erfc} z = \operatorname{i}^{n-2}\!\operatorname{erfc} z -2 z \cdot \operatorname{i}^{n-1}\!\operatorname{erfc} z }[/math]

They have the power series

- [math]\displaystyle{ \operatorname{i}^n\!\operatorname{erfc} z =\sum_{j=0}^\infty \frac{(-z)^j}{2^{n-j}j! \,\Gamma \left( 1 + \frac{n-j}{2}\right)}, }[/math]

from which follow the symmetry properties

- [math]\displaystyle{ \operatorname{i}^{2m}\!\operatorname{erfc} (-z) =-\operatorname{i}^{2m}\!\operatorname{erfc} z +\sum_{q=0}^m \frac{z^{2q}}{2^{2(m-q)-1}(2q)! (m-q)!} }[/math]

and

- [math]\displaystyle{ \operatorname{i}^{2m+1}\!\operatorname{erfc}(-z) =\operatorname{i}^{2m+1}\!\operatorname{erfc} z +\sum_{q=0}^m \frac{z^{2q+1}}{2^{2(m-q)-1}(2q+1)! (m-q)!}. }[/math]
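For small n and real z the recurrence can be applied directly, starting from the closed forms for n = 0 and n = 1. This is a sketch with an illustrative function name, `inerfc`; forward recurrence of this kind is assumed to be adequate only for small n and moderate z, since the subtraction can lose accuracy otherwise.

```python
import math

def inerfc(n: int, z: float) -> float:
    """n-th iterated integral of erfc at real z, by forward recurrence (small n only)."""
    if n < 0:
        raise ValueError("n must be non-negative")
    prev = math.erfc(z)  # i^0 erfc
    if n == 0:
        return prev
    cur = math.exp(-z * z) / math.sqrt(math.pi) - z * prev  # i^1 erfc = ierfc
    for k in range(2, n + 1):
        # 2k * i^k erfc = i^(k-2) erfc - 2z * i^(k-1) erfc
        prev, cur = cur, (prev - 2 * z * cur) / (2 * k)
    return cur
```

Since each function is defined as an integral of the previous one from z to infinity, the derivative of `inerfc(n, z)` with respect to z should equal `-inerfc(n - 1, z)`, which gives an independent numerical check.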

Implementations

As real function of a real argument

- In POSIX-compliant operating systems, the header math.h shall declare and the mathematical library libm shall provide the functions erf and erfc (double precision) as well as their single precision and extended precision counterparts erff, erfl and erfcf, erfcl.[28]

- The GNU Scientific Library provides erf, erfc, log(erf), and scaled error functions.[29]

As complex function of a complex argument

- libcerf, a numeric C library for complex error functions, provides the complex functions cerf, cerfc, cerfcx and the real functions erfi, erfcx with approximately 13–14 digits of precision, based on the Faddeeva function as implemented in the MIT Faddeeva Package.

See also

- Gaussian integral, over the whole real line

- Gaussian function, derivative

- Dawson function, renormalized imaginary error function

- Goodwin–Staton integral

In probability

- Normal distribution

- Normal cumulative distribution function, a scaled and shifted form of error function

- Probit, the inverse or quantile function of the normal CDF

- Q-function, the tail probability of the normal distribution

References

- ↑ Andrews, Larry C. (1998). Special functions of mathematics for engineers. SPIE Press. p. 110. ISBN 9780819426161. https://books.google.com/books?id=2CAqsF-RebgC&pg=PA110.

- ↑ Glaisher, James Whitbread Lee (July 1871). «On a class of definite integrals». London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science. 4 42 (277): 294–302. doi:10.1080/14786447108640568. https://books.google.com/books?id=8Po7AQAAMAAJ&pg=RA1-PA294. Retrieved 6 December 2017.

- ↑ Glaisher, James Whitbread Lee (September 1871). «On a class of definite integrals. Part II». London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science. 4 42 (279): 421–436. doi:10.1080/14786447108640600. https://books.google.com/books?id=yJ1YAAAAcAAJ&pg=PA421. Retrieved 6 December 2017.

- ↑ «A007680 – OEIS». https://oeis.org/A007680.

- ↑ Weisstein, Eric W.. «Erf». MathWorld. Wolfram. http://mathworld.wolfram.com/Erf.html.

- ↑ Schöpf, H. M.; Supancic, P. H. (2014). «On Bürmann’s Theorem and Its Application to Problems of Linear and Nonlinear Heat Transfer and Diffusion». The Mathematica Journal 16. doi:10.3888/tmj.16-11. http://www.mathematica-journal.com/2014/11/on-burmanns-theorem-and-its-application-to-problems-of-linear-and-nonlinear-heat-transfer-and-diffusion/#more-39602/.

- ↑ Weisstein, E. W.. «Bürmann’s Theorem». http://mathworld.wolfram.com/BuermannsTheorem.html.

- ↑ Bergsma, Wicher (2006). «On a new correlation coefficient, its orthogonal decomposition and associated tests of independence». arXiv:math/0604627.

- ↑ Cuyt; Petersen, Vigdis B.; Verdonk, Brigitte; Waadeland, Haakon; Jones, William B. (2008). Handbook of Continued Fractions for Special Functions. Springer-Verlag. ISBN 978-1-4020-6948-2.

- ↑ Ng, Edward W.; Geller, Murray (January 1969). «A table of integrals of the Error functions». Journal of Research of the National Bureau of Standards Section B 73B (1): 1. doi:10.6028/jres.073B.001.

- ↑ Schlömilch, Oskar Xavier (1859). «Ueber facultätenreihen» (in de). Zeitschrift für Mathematik und Physik 4: 390–415. https://archive.org/details/zeitschriftfrma09runggoog. Retrieved 2017-12-04.

- ↑ Nielsen, Niels (1906) (in German). Handbuch der Theorie der Gammafunktion. Leipzig: B. G. Teubner. p. 283 Eq. 3. https://archive.org/details/handbuchgamma00nielrich. Retrieved 2017-12-04.

- ↑ Chiani, M.; Dardari, D.; Simon, M.K. (2003). «New Exponential Bounds and Approximations for the Computation of Error Probability in Fading Channels». IEEE Transactions on Wireless Communications 2 (4): 840–845. doi:10.1109/TWC.2003.814350. http://campus.unibo.it/85943/1/mcddmsTranWIR2003.pdf.

- ↑ Tanash, I.M.; Riihonen, T. (2020). «Global minimax approximations and bounds for the Gaussian Q-function by sums of exponentials». IEEE Transactions on Communications 68 (10): 6514–6524. doi:10.1109/TCOMM.2020.3006902.

- ↑ Tanash, I.M.; Riihonen, T. (2020). Coefficients for Global Minimax Approximations and Bounds for the Gaussian Q-Function by Sums of Exponentials [Data set]. doi:10.5281/zenodo.4112978. https://zenodo.org/record/4112978.

- ↑ Karagiannidis, G. K.; Lioumpas, A. S. (2007). «An improved approximation for the Gaussian Q-function». IEEE Communications Letters 11 (8): 644–646. doi:10.1109/LCOMM.2007.070470. http://users.auth.gr/users/9/3/028239/public_html/pdf/Q_Approxim.pdf.

- ↑ Tanash, I.M.; Riihonen, T. (2021). «Improved coefficients for the Karagiannidis–Lioumpas approximations and bounds to the Gaussian Q-function». IEEE Communications Letters 25 (5): 1468–1471. doi:10.1109/LCOMM.2021.3052257.

- ↑ Chang, Seok-Ho; Cosman, Pamela C.; Milstein, Laurence B. (November 2011). «Chernoff-Type Bounds for the Gaussian Error Function». IEEE Transactions on Communications 59 (11): 2939–2944. doi:10.1109/TCOMM.2011.072011.100049. http://escholarship.org/uc/item/6hw4v7pg.

- ↑ Winitzki, Sergei (2003). «Uniform approximations for transcendental functions». Computational Science and Its Applications – ICCSA 2003. Lecture Notes in Computer Science. 2667. Springer, Berlin. pp. 780–789. doi:10.1007/3-540-44839-X_82. ISBN 978-3-540-40155-1. https://archive.org/details/computationalsci0000iccs_a2w6.

- ↑ Zeng, Caibin; Chen, Yang Cuan (2015). «Global Padé approximations of the generalized Mittag-Leffler function and its inverse». Fractional Calculus and Applied Analysis 18 (6): 1492–1506. doi:10.1515/fca-2015-0086. «Indeed, Winitzki [32] provided the so-called global Padé approximation».

- ↑ Winitzki, Sergei (6 February 2008). A handy approximation for the error function and its inverse.

- ↑ Numerical Recipes in Fortran 77: The Art of Scientific Computing (ISBN 0-521-43064-X), 1992, page 214, Cambridge University Press.

- ↑ 23.0 23.1 23.2 Cody, W. J. (March 1993), «Algorithm 715: SPECFUN—A portable FORTRAN package of special function routines and test drivers», ACM Trans. Math. Softw. 19 (1): 22–32, doi:10.1145/151271.151273, http://www.stat.wisc.edu/courses/st771-newton/papers/p22-cody.pdf

- ↑ Zaghloul, M. R. (1 March 2007), «On the calculation of the Voigt line profile: a single proper integral with a damped sine integrand», Monthly Notices of the Royal Astronomical Society 375 (3): 1043–1048, doi:10.1111/j.1365-2966.2006.11377.x, Bibcode: 2007MNRAS.375.1043Z

- ↑ John W. Craig, A new, simple and exact result for calculating the probability of error for two-dimensional signal constellations , Proceedings of the 1991 IEEE Military Communication Conference, vol. 2, pp. 571–575.

- ↑ Behnad, Aydin (2020). «A Novel Extension to Craig’s Q-Function Formula and Its Application in Dual-Branch EGC Performance Analysis». IEEE Transactions on Communications 68 (7): 4117–4125. doi:10.1109/TCOMM.2020.2986209.

- ↑ Carslaw, H. S.; Jaeger, J. C. (1959), Conduction of Heat in Solids (2nd ed.), Oxford University Press, ISBN 978-0-19-853368-9, p 484

- ↑ https://pubs.opengroup.org/onlinepubs/9699919799/basedefs/math.h.html

- ↑ «Special Functions – GSL 2.7 documentation». https://www.gnu.org/software/gsl/doc/html/specfunc.html#error-functions.

Further reading

- Abramowitz, Milton; Stegun, Irene Ann, eds (1983). «Chapter 7». Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Applied Mathematics Series. 55 (Ninth reprint with additional corrections of tenth original printing with corrections (December 1972); first ed.). Washington D.C.; New York: United States Department of Commerce, National Bureau of Standards; Dover Publications. pp. 297. LCCN 65-12253. ISBN 978-0-486-61272-0. http://www.math.sfu.ca/~cbm/aands/page_297.htm.

- Press, William H.; Teukolsky, Saul A.; Vetterling, William T.; Flannery, Brian P. (2007), «Section 6.2. Incomplete Gamma Function and Error Function», Numerical Recipes: The Art of Scientific Computing (3rd ed.), New York: Cambridge University Press, ISBN 978-0-521-88068-8, http://apps.nrbook.com/empanel/index.html#pg=259

- Temme, Nico M. (2010), «Error Functions, Dawson’s and Fresnel Integrals», in Olver, Frank W. J.; Lozier, Daniel M.; Boisvert, Ronald F. et al., NIST Handbook of Mathematical Functions, Cambridge University Press, ISBN 978-0-521-19225-5, http://dlmf.nist.gov/7

External links

- MathWorld – Erf

- A Table of Integrals of the Error Functions


Plot of the error function

In mathematics, the error function (also called the Gauss error function) is a special function (non-elementary) of sigmoid shape that occurs in probability, statistics, and partial differential equations describing diffusion. It is defined as:[1][2]

The complementary error function, denoted erfc, is defined as

which also defines erfcx, the scaled complementary error function[3] (which can be used instead of erfc to avoid arithmetic underflow[3][4]).

The imaginary error function, denoted erfi, is defined as

,

where D(x) is the Dawson function (which can be used instead of erfi to avoid arithmetic overflow[3]).

When the error function is evaluated for arbitrary complex arguments z, the resulting complex error function is usually discussed in scaled form as the Faddeeva function:

The name «error function»

The error function is used in measurement theory (using probability and statistics), and although its use in other branches of mathematics has nothing to do with the characterization of measurement errors, the name has stuck.

The error function is related to the cumulative distribution

The error function, evaluated at

Properties

{{#invoke:Multiple image|render}}

The property

For any complex number z:

where

The integrand ƒ = exp(−z2) and ƒ = erf(z) are shown in the complex z-plane in figures 2 and 3. Level of Im(ƒ) = 0 is shown with a thick green line. Negative integer values of Im(ƒ) are shown with thick red lines. Positive integer values of Im(f) are shown with thick blue lines. Intermediate levels of Im(ƒ) = constant are shown with thin green lines. Intermediate levels of Re(ƒ) = constant are shown with thin red lines for negative values and with thin blue lines for positive values.

At the real axis, erf(z) approaches unity at z → +∞ and −1 at z → −∞. At the imaginary axis, it tends to ±i∞.

Taylor series

The error function is an entire function; it has no singularities (except that at infinity) and its Taylor expansion always converges.

The defining integral cannot be evaluated in closed form in terms of elementary functions, but by expanding the integrand e−z2 into its Taylor series and integrating term by term, one obtains the error function’s Taylor series as:

which holds for every complex number z. The denominator terms are sequence A007680 in the OEIS.

For iterative calculation of the above series, the following alternative formulation may be useful:

because

The error function at +∞ is exactly 1 (see Gaussian integral).

The derivative of the error function follows immediately from its definition:

An antiderivative of the error function is

Bürmann series

An expansion,[6] which converges more rapidly for all real values of

By keeping only the first two coefficients and choosing

Inverse functions

The inverse error function can be defined in terms of the Maclaurin series

where c0 = 1 and

So we have the series expansion (note that common factors have been canceled from numerators and denominators):

(After cancellation the numerator/denominator fractions are entries A092676/A132467 in the OEIS; without cancellation the numerator terms are given in entry A002067.) Note that the error function’s value at ±∞ is equal to ±1.

The inverse complementary error function is defined as

Asymptotic expansion

A useful asymptotic expansion of the complementary error function (and therefore also of the error function) for large real x is

where (2n – 1)!! is the double factorial: the product of all odd numbers up to (2n – 1). This series diverges for every finite x, and its meaning as asymptotic expansion is that, for any

where the remainder, in Landau notation, is

as

.

Indeed, the exact value of the remainder is

which follows easily by induction, writing

For large enough values of x, only the first few terms of this asymptotic expansion are needed to obtain a good approximation of erfc(x) (while for not too large values of x note that the above Taylor expansion at 0 provides a very fast convergence).

Continued fraction expansion

A continued fraction expansion of the complementary error function is:[8]

Integral of error function with Gaussian density function

Approximation with elementary functions

Abramowitz and Stegun give several approximations of varying accuracy (equations 7.1.25–28). This allows one to choose the fastest approximation suitable for a given application. In order of increasing accuracy, they are:

(maximum error: 5×10−4)

where a1 = 0.278393, a2 = 0.230389, a3 = 0.000972, a4 = 0.078108

(maximum error: 2.5×10−5)

where p = 0.47047, a1 = 0.3480242, a2 = −0.0958798, a3 = 0.7478556

(maximum error: 3×10−7)

where a1 = 0.0705230784, a2 = 0.0422820123, a3 = 0.0092705272, a4 = 0.0001520143, a5 = 0.0002765672, a6 = 0.0000430638

(maximum error: 1.5×10−7)

where p = 0.3275911, a1 = 0.254829592, a2 = −0.284496736, a3 = 1.421413741, a4 = −1.453152027, a5 = 1.061405429

All of these approximations are valid for x ≥ 0. To use these approximations for negative x, use the fact that erf(x) is an odd function, so erf(x) = −erf(−x).

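Another approximation is given by

$$\operatorname{erf}(x) \approx \operatorname{sgn}(x) \sqrt{1 - \exp\left(-x^2\, \frac{\frac{4}{\pi} + a x^2}{1 + a x^2}\right)}$$

where

$$a = \frac{8(\pi - 3)}{3\pi(4 - \pi)} \approx 0.140012.$$

This is designed to be very accurate in a neighborhood of 0 and a neighborhood of infinity, and the error is less than 0.00035 for all x. Using the alternate value a ≈ 0.147 reduces the maximum error to about 0.00012.[9]

This approximation can also be inverted to calculate the inverse error function:

$$\operatorname{erf}^{-1}(x) \approx \operatorname{sgn}(x) \sqrt{\sqrt{\left(\frac{2}{\pi a} + \frac{\ln(1 - x^2)}{2}\right)^2 - \frac{\ln(1 - x^2)}{a}} - \left(\frac{2}{\pi a} + \frac{\ln(1 - x^2)}{2}\right)}.$$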
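A minimal Python sketch of this approximation and of its algebraic inverse follows. It assumes the form erf(x) ≈ sgn(x)·√(1 − exp(−x²(4/π + ax²)/(1 + ax²))) with a = 8(π − 3)/(3π(4 − π)); the inverse is obtained by solving that expression for x² as a quadratic. The function names are illustrative.

```python
import math

A_DEFAULT = 8 * (math.pi - 3) / (3 * math.pi * (4 - math.pi))  # about 0.140012

def erf_approx(x, a=A_DEFAULT):
    """Elementary-function approximation of erf(x) for real x."""
    x2 = x * x
    value = math.sqrt(1.0 - math.exp(-x2 * (4.0 / math.pi + a * x2) / (1.0 + a * x2)))
    return math.copysign(value, x)

def erfinv_approx(y, a=A_DEFAULT):
    """Exact inverse of erf_approx, hence an approximation of erf^-1(y), -1 < y < 1."""
    log_term = math.log(1.0 - y * y)
    b = 2.0 / (math.pi * a) + log_term / 2.0
    # Clamp at zero to guard against tiny negative rounding errors near y = 0.
    inner = max(math.sqrt(b * b - log_term / a) - b, 0.0)
    return math.copysign(math.sqrt(inner), y)

grid = [k / 100.0 for k in range(-400, 401)]
print(max(abs(erf_approx(x) - math.erf(x)) for x in grid))
print(max(abs(erf_approx(x, 0.147) - math.erf(x)) for x in grid))
print(erfinv_approx(erf_approx(1.25)))
```

Because erfinv_approx inverts the approximation rather than erf itself, the round trip through erf_approx recovers its input up to rounding, while the error relative to the true inverse error function is inherited from the forward approximation.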

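Exponential bounds and a pure exponential approximation for the complementary error function are given by[10]

$$\operatorname{erfc}(x) \le \frac{1}{2} e^{-2x^2} + \frac{1}{2} e^{-x^2} \le e^{-x^2}, \qquad x > 0,$$

$$\operatorname{erfc}(x) \approx \frac{1}{6} e^{-x^2} + \frac{1}{2} e^{-\frac{4}{3} x^2}, \qquad x > 0.$$

A single-term lower bound is[11]

$$\operatorname{erfc}(x) \ge \sqrt{\frac{2e}{\pi}}\, \frac{\sqrt{\beta - 1}}{\beta}\, e^{-\beta x^2}, \qquad x \ge 0,\ \beta > 1,$$

where the parameter β can be picked to minimize error on the desired interval of approximation.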

Numerical approximation

Over the complete range of values, there is an approximation with a maximal error of

with

and

Applications

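When the results of a series of measurements are described by a normal distribution with standard deviation σ and expected value 0, then erf(a/(σ√2)) is the probability that the error of a single measurement lies between −a and +a, for positive a.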

The error and complementary error functions occur, for example, in solutions of the heat equation when boundary conditions are given by the Heaviside step function.

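The error function is essentially identical to the standard normal cumulative distribution function, denoted Φ (also named norm(x) in some software languages), as they differ only by scaling and translation. Indeed,

$$\Phi(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{x} e^{-t^2/2}\,dt = \frac{1}{2}\left[1 + \operatorname{erf}\left(\frac{x}{\sqrt{2}}\right)\right] = \frac{1}{2} \operatorname{erfc}\left(-\frac{x}{\sqrt{2}}\right)$$

or rearranged for erf and erfc:

$$\operatorname{erf}(x) = 2\Phi\left(x\sqrt{2}\right) - 1, \qquad \operatorname{erfc}(x) = 2\Phi\left(-x\sqrt{2}\right) = 2\left(1 - \Phi\left(x\sqrt{2}\right)\right).$$

Consequently, the error function is also closely related to the Q-function, which is the tail probability of the standard normal distribution. The Q-function can be expressed in terms of the error function as

$$Q(x) = \frac{1}{2} - \frac{1}{2} \operatorname{erf}\left(\frac{x}{\sqrt{2}}\right) = \frac{1}{2} \operatorname{erfc}\left(\frac{x}{\sqrt{2}}\right).$$

The inverse of Φ is known as the normal quantile function, or probit function, and may be expressed in terms of the inverse error function as

$$\operatorname{probit}(p) = \Phi^{-1}(p) = \sqrt{2}\, \operatorname{erf}^{-1}(2p - 1) = -\sqrt{2}\, \operatorname{erfc}^{-1}(2p).$$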

The standard normal cdf is used more often in probability and statistics, and the error function is used more often in other branches of mathematics.
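The scaling and translation between erf, Φ and the Q-function can be sketched with Python's standard library. The relations used are Φ(x) = ½[1 + erf(x/√2)] and Q(x) = ½ erfc(x/√2); the function names are illustrative and not part of any library.

```python
import math

def norm_cdf(x):
    """Standard normal CDF, written in terms of erf."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def q_function(x):
    """Tail probability of the standard normal distribution, written in terms of erfc."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def prob_within(a, sigma):
    """Probability that a zero-mean normal error with standard deviation sigma lies in [-a, a]."""
    return math.erf(a / (sigma * math.sqrt(2.0)))

print(norm_cdf(1.96))                       # close to 0.975
print(norm_cdf(1.3) + q_function(1.3))      # close to 1
print(prob_within(1.0, 1.0))                # about 0.6827 (one standard deviation)
```

Using erfc for the tail, instead of computing 1 − Φ(x), avoids loss of precision when x is large and the tail probability is tiny.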

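The error function is a special case of the Mittag-Leffler function, and can also be expressed as a confluent hypergeometric function (Kummer’s function):

$$\operatorname{erf}(x) = \frac{2x}{\sqrt{\pi}}\, M\left(\tfrac{1}{2}, \tfrac{3}{2}, -x^2\right).$$

It has a simple expression in terms of the Fresnel integral.

In terms of the regularized gamma function P and the incomplete gamma function,

$$\operatorname{erf}(x) = \operatorname{sgn}(x)\, P\left(\tfrac{1}{2}, x^2\right) = \frac{\operatorname{sgn}(x)}{\sqrt{\pi}}\, \gamma\left(\tfrac{1}{2}, x^2\right),$$

where sgn(x) is the sign function.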

Generalized error functions

Graph of generalised error functions En(x): grey curve: E1(x) = (1 − e^(−x))/√π; red curve: E2(x) = erf(x); green curve: E3(x); blue curve: E4(x); gold curve: E5(x).

Some authors discuss the more general functions:[citation needed]

$$E_n(x) = \frac{n!}{\sqrt{\pi}} \int_0^x e^{-t^n}\,dt = \frac{n!}{\sqrt{\pi}} \sum_{p=0}^{\infty} (-1)^p \frac{x^{np+1}}{(np+1)\,p!}.$$

Notable cases are:

- E0(x) is a straight line through the origin: E0(x) = x/(e√π)

- E2(x) is the error function, erf(x).

After division by n!, all the En for odd n look similar (but not identical) to each other. Similarly, the En for even n look similar (but not identical) to each other after a simple division by n!. All generalised error functions for n > 0 look similar on the positive x side of the graph.
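The notable cases can be checked numerically with a short Python sketch. It assumes the definition E_n(x) = (n!/√π) ∫₀ˣ e^(−tⁿ) dt, which is consistent with E2(x) = erf(x), and evaluates the integral with composite Simpson's rule; the function name and the step count are illustrative choices.

```python
import math

def generalized_erf(n, x, steps=2000):
    """E_n(x) = n!/sqrt(pi) * integral from 0 to x of exp(-t**n) dt, for x >= 0."""
    if steps % 2:
        steps += 1                      # Simpson's rule needs an even number of intervals
    h = x / steps
    f = lambda t: math.exp(-t ** n)
    total = f(0.0) + f(x)
    for k in range(1, steps):
        total += (4 if k % 2 else 2) * f(k * h)
    return math.factorial(n) / math.sqrt(math.pi) * total * h / 3.0

print(generalized_erf(2, 1.0), math.erf(1.0))                              # E2 is erf
print(generalized_erf(1, 2.0), (1 - math.exp(-2.0)) / math.sqrt(math.pi))  # E1 in closed form
print(generalized_erf(0, 3.0), 3.0 / (math.e * math.sqrt(math.pi)))        # E0 is a straight line
```

Numerical quadrature is used here only because it needs nothing beyond the standard library; for production use, an expression in terms of incomplete gamma functions from a special-function library would be the more usual route.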

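These generalised functions can equivalently be expressed for x > 0 using the Gamma function and incomplete Gamma function:

$$E_n(x) = \frac{1}{\sqrt{\pi}}\, \Gamma(n) \left( \Gamma\left(\frac{1}{n}\right) - \Gamma\left(\frac{1}{n}, x^n\right) \right), \qquad x > 0.$$

Therefore, we can define the error function in terms of the incomplete Gamma function:

$$\operatorname{erf}(x) = 1 - \frac{\Gamma\left(\frac{1}{2}, x^2\right)}{\sqrt{\pi}}.$$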

Iterated integrals of the complementary error function

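The iterated integrals of the complementary error function are defined by

$$\operatorname{i}^n \operatorname{erfc}(z) = \int_z^{\infty} \operatorname{i}^{n-1} \operatorname{erfc}(\zeta)\,d\zeta, \qquad \operatorname{i}^0 \operatorname{erfc}(z) = \operatorname{erfc}(z).$$

They have the power series

$$\operatorname{i}^n \operatorname{erfc}(z) = \sum_{j=0}^{\infty} \frac{(-z)^j}{2^{n-j}\, j!\, \Gamma\left(1 + \frac{n-j}{2}\right)},$$

in which terms where the Gamma function has a pole vanish, and from which follow the symmetry properties

$$\operatorname{i}^{2m} \operatorname{erfc}(-z) = -\operatorname{i}^{2m} \operatorname{erfc}(z) + \sum_{q=0}^{m} \frac{z^{2q}}{2^{2(m-q)-1}\, (2q)!\, (m-q)!}$$

and

$$\operatorname{i}^{2m+1} \operatorname{erfc}(-z) = \operatorname{i}^{2m+1} \operatorname{erfc}(z) + \sum_{q=0}^{m} \frac{z^{2q+1}}{2^{2(m-q)-1}\, (2q+1)!\, (m-q)!}.$$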

Implementations

- C: C99 provides the functions double erf(double x) and double erfc(double x) in the header math.h. The pairs of functions {erff(), erfcf()} and {erfl(), erfcl()} take and return values of type float and long double respectively. For complex double arguments, the function names cerf and cerfc are «reserved for future use»; the missing implementation is provided by the open-source project libcerf, which is based on the Faddeeva package.

- C++: C++11 provides erf() and erfc() in the header cmath. Both functions are overloaded to accept arguments of type float, double, and long double. For complex<double>, the Faddeeva package provides a C++ complex<double> implementation.

- Excel: Microsoft Excel provides the erf and erfc functions, but neither inverse function is in the current library.[13]

- Fortran: The Fortran 2008 standard provides the ERF, ERFC and ERFC_SCALED functions to calculate the error function and its complement for real arguments. Fortran 77 implementations are available in SLATEC.

- Google search: Google’s search also acts as a calculator and will evaluate «erf(…)» and «erfc(…)» for real arguments.

- Haskell: An erf package[14] exists that provides a typeclass for the error function and implementations for the native (real) floating point types.

- IDL: provides both erf and erfc for real and complex arguments.

- Java: Apache commons-math[15] provides implementations of erf and erfc for real arguments.

- Maple: Maple implements both erf and erfc for real and complex arguments.

- MathCAD provides both erf(x) and erfc(x) for real arguments.

- Mathematica: erf is implemented as Erf and Erfc in Mathematica for real and complex arguments, which are also available in Wolfram Alpha.

- Matlab provides both erf and erfc for real arguments, also via W. J. Cody’s algorithm.[16]

- Maxima provides both erf and erfc for real and complex arguments.

- PARI/GP: provides erfc for real and complex arguments, via tanh-sinh quadrature plus special cases.

- Perl: erf (for real arguments, using Cody’s algorithm[16]) is implemented in the Perl module Math::SpecFun.

- Python: Included since version 2.7 as math.erf() for real arguments. For previous versions or for complex arguments, SciPy includes implementations of erf, erfc, erfi, and related functions for complex arguments in scipy.special.[17] A complex-argument erf is also in the arbitrary-precision arithmetic mpmath library as mpmath.erf().

- R: «The so-called ‘error function’»[18] is not provided directly, but is detailed as an example of the normal cumulative distribution function (?pnorm), which is based on W. J. Cody’s rational Chebyshev approximation algorithm.[16]

- Ruby: Provides Math.erf() and Math.erfc() for real arguments.
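Most of the libraries above provide erfc alongside erf rather than leaving users to compute 1 − erf(x). A brief Python sketch, using only the standard-library functions math.erf and math.erfc, suggests why: for large arguments the subtraction loses all significant digits in double precision.

```python
import math

x = 10.0
naive = 1.0 - math.erf(x)   # erf(10) rounds to exactly 1.0 in double precision
direct = math.erfc(x)       # evaluated directly, without the cancellation

print(naive)    # 0.0
print(direct)   # a tiny but nonzero positive number
```

The same consideration applies in the other direction for scaled variants such as ERFC_SCALED, which are intended for arguments where erfc itself would underflow.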

See also

- Gaussian integral, over the whole real line

- Gaussian function, derivative

- Dawson function, renormalized imaginary error function

In probability

- Normal distribution

- Normal cumulative distribution function, a scaled and shifted form of error function

- Probit, the inverse or quantile function of the normal CDF

- Q-function, the tail probability of the normal distribution

References

- [1] Andrews, Larry C.; Special functions of mathematics for engineers

- [2] Greene, William H.; Econometric Analysis (fifth edition), Prentice-Hall, 1993, p. 926, fn. 11

- [3] (citation not recoverable)

- [4] (citation not recoverable)

- [5] Van Zeghbroeck, Bart; Principles of Semiconductor Devices, University of Colorado, 2011. [1]

- [6] Schöpf, H. M.; Supancic, P. H.; «On Bürmann’s Theorem and Its Application to Problems of Linear and Nonlinear Heat Transfer and Diffusion», The Mathematica Journal, 2014. doi:10.3888/tmj.16-11

- [7] Weisstein, E. W.; «Bürmann’s Theorem», from Wolfram MathWorld, A Wolfram Web Resource.

- [8] (book citation not recoverable)

- [9] (web citation not recoverable)

- [10] Chiani, M.; Dardari, D.; Simon, M. K. (2003). New Exponential Bounds and Approximations for the Computation of Error Probability in Fading Channels. IEEE Transactions on Wireless Communications, 2(4), 840–845. doi:10.1109/TWC.2003.814350

- [11] (journal citation not recoverable)

- [12] Numerical Recipes in Fortran 77: The Art of Scientific Computing (ISBN 0-521-43064-X), Cambridge University Press, 1992, page 214.

- [13] These results can however be obtained using the NormSInv function as follows: erf_inverse(p) = -NormSInv((1 - p)/2)/SQRT(2); erfc_inverse(p) = -NormSInv(p/2)/SQRT(2). See [2].

- [14] http://hackage.haskell.org/package/erf

- [15] Commons Math: The Apache Commons Mathematics Library

- [16] Cody, W. J.; «Rational Chebyshev Approximations for the Error Function», Mathematics of Computation, 23 (107), 1969, 631–637.

- [17] Error Function and Fresnel Integrals, SciPy v0.13.0 Reference Guide.

- [18] (citation not recoverable)

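- Abramowitz, Milton; Stegun, Irene A. (eds.); Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables, chapter 7

- NIST Digital Library of Mathematical Functions, chapter 7: Error Functions, Dawson’s and Fresnel Integrals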

External links

- MathWorld – Erf

- Error-function numerical table and calculator

Plot of the error function

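In mathematics, the error function (also called the Gauss error function) is a non-elementary special function of sigmoid shape that occurs in probability, statistics, and partial differential equations describing diffusion. It is defined as:[1][2]

$$\operatorname{erf}(x) = \frac{2}{\sqrt{\pi}} \int_0^x e^{-t^2}\,dt.$$

The complementary error function, denoted erfc, is defined as

$$\operatorname{erfc}(x) = 1 - \operatorname{erf}(x) = \frac{2}{\sqrt{\pi}} \int_x^{\infty} e^{-t^2}\,dt = e^{-x^2} \operatorname{erfcx}(x),$$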

which also defines erfcx, the scaled complementary error function[3] (which can be used instead of erfc to avoid arithmetic underflow[3][4]). Another form of

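The imaginary error function, denoted erfi, is defined as

$$\operatorname{erfi}(x) = -i \operatorname{erf}(ix) = \frac{2}{\sqrt{\pi}} \int_0^x e^{t^2}\,dt = \frac{2}{\sqrt{\pi}}\, e^{x^2} D(x),$$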

where D(x) is the Dawson function (which can be used instead of erfi to avoid arithmetic overflow[3]).

Despite the name «imaginary error function», erfi(x) is real when x is real.

When the error function is evaluated for arbitrary complex arguments z, the resulting complex error function is usually discussed in scaled form as the Faddeeva function:

$$w(z) = e^{-z^2} \operatorname{erfc}(-iz) = \operatorname{erfcx}(-iz).$$

Contents

- 1 The name «error function»

- 2 Properties

- 2.1 Taylor series

- 2.2 Derivative and integral

- 2.3 Bürmann series

- 2.4 Inverse functions

- 2.5 Asymptotic expansion

- 2.6 Continued fraction expansion

- 2.7 Integral of error function with Gaussian density function

- 3 Approximation with elementary functions

- 4 Numerical approximation

- 5 Applications

- 6 Related functions

- 6.1 Generalized error functions

- 6.2 Iterated integrals of the complementary error function

- 7 Implementations

- 8 See also

- 8.1 Related functions

- 8.2 In probability

- 9 References

- 10 External links

The name «error function»

The error function is used in measurement theory (using probability and statistics), and its use in other branches of mathematics is typically unrelated to the characterization of measurement errors.

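The error function is related to the cumulative distribution Φ, the integral of the standard normal distribution, by

$$\Phi(x) = \frac{1}{2} + \frac{1}{2} \operatorname{erf}\left(\frac{x}{\sqrt{2}}\right) = \frac{1}{2} \operatorname{erfc}\left(-\frac{x}{\sqrt{2}}\right).$$

The error function, evaluated at x/(σ√2) for positive x values, gives the probability that a measurement, under the influence of normally distributed errors with standard deviation σ, has a distance less than x from the mean value.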

Properties

Plots in the complex plane: the integrand exp(−z²) and erf(z).

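The property erf(−z) = −erf(z) means that the error function is an odd function. This directly results from the fact that the integrand e^(−t²) is an even function.

For any complex number z:

$$\operatorname{erf}(\bar{z}) = \overline{\operatorname{erf}(z)}$$

where z̄ is the complex conjugate of z.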

The integrand ƒ = exp(−z²) and ƒ = erf(z) are shown in the complex z-plane in figures 2 and 3. Level of Im(ƒ) = 0 is shown with a thick green line. Negative integer values of Im(ƒ) are shown with thick red lines. Positive integer values of Im(ƒ) are shown with thick blue lines. Intermediate levels of Im(ƒ) = constant are shown with thin green lines. Intermediate levels of Re(ƒ) = constant are shown with thin red lines for negative values and with thin blue lines for positive values.

The error function at +∞ is exactly 1 (see Gaussian integral). At the real axis, erf(z) approaches unity at z → +∞ and −1 at z → −∞. At the imaginary axis, it tends to ±i∞.

Taylor series

The error function is an entire function; it has no singularities (except that at infinity) and its Taylor expansion always converges.

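The defining integral cannot be evaluated in closed form in terms of elementary functions, but by expanding the integrand e^(−z²) into its Maclaurin series and integrating term by term, one obtains the error function’s Maclaurin series as:

$$\operatorname{erf}(z) = \frac{2}{\sqrt{\pi}} \sum_{n=0}^{\infty} \frac{(-1)^n z^{2n+1}}{n!\,(2n+1)} = \frac{2}{\sqrt{\pi}} \left( z - \frac{z^3}{3} + \frac{z^5}{10} - \frac{z^7}{42} + \frac{z^9}{216} - \cdots \right)$$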

which holds for every complex number z. The denominator terms are sequence A007680 in the OEIS.

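For iterative calculation of the above series, the following alternative formulation may be useful:

$$\operatorname{erf}(z) = \frac{2}{\sqrt{\pi}} \sum_{n=0}^{\infty} \left( z \prod_{k=1}^{n} \frac{-(2k-1)\, z^2}{k\,(2k+1)} \right) = \frac{2}{\sqrt{\pi}} \sum_{n=0}^{\infty} \frac{z}{2n+1} \prod_{k=1}^{n} \frac{-z^2}{k}$$

because −(2k − 1)z²/(k(2k + 1)) is the multiplier that turns the kth term into the (k + 1)th term (counting z as the first term).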

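The imaginary error function has a very similar Maclaurin series, which is:

$$\operatorname{erfi}(z) = \frac{2}{\sqrt{\pi}} \sum_{n=0}^{\infty} \frac{z^{2n+1}}{n!\,(2n+1)} = \frac{2}{\sqrt{\pi}} \left( z + \frac{z^3}{3} + \frac{z^5}{10} + \frac{z^7}{42} + \frac{z^9}{216} + \cdots \right)$$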

which holds for every complex number z.
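The iterative formulation of the Maclaurin series lends itself to a short Python sketch. It assumes the term-to-term multiplier −(2k − 1)z²/(k(2k + 1)), which follows from the general term (−1)ⁿ z^(2n+1)/(n!(2n + 1)); the function name, tolerance and term limit are illustrative choices.

```python
import math

def erf_series(z, tol=1e-16, max_terms=200):
    """Maclaurin series of erf(z) for real or complex z, built term by term."""
    term = z      # n = 0 term of the sum, without the 2/sqrt(pi) prefactor
    total = z
    for k in range(1, max_terms):
        # Turn the previous term into the next one.
        term *= -(2 * k - 1) * z * z / (k * (2 * k + 1))
        total += term
        if abs(term) <= tol * abs(total):
            break
    return 2.0 / math.sqrt(math.pi) * total

print(erf_series(0.5), math.erf(0.5))
print(erf_series(2.0), math.erf(2.0))
print(erf_series(1j).imag)   # equals erfi(1), since erf(ix) = i erfi(x)
```

The series converges for every z, but for large |z| the alternating terms grow before they shrink, so rounding errors accumulate and other methods become preferable.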

Derivative and integral

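The derivative of the error function follows immediately from its definition:

$$\frac{d}{dz} \operatorname{erf}(z) = \frac{2}{\sqrt{\pi}}\, e^{-z^2}.$$

From this, the derivative of the imaginary error function is also immediate:

$$\frac{d}{dz} \operatorname{erfi}(z) = \frac{2}{\sqrt{\pi}}\, e^{z^2}.$$

An antiderivative of the error function, obtainable by integration by parts, is

$$z \operatorname{erf}(z) + \frac{e^{-z^2}}{\sqrt{\pi}}.$$

An antiderivative of the imaginary error function, also obtainable by integration by parts, is

$$z \operatorname{erfi}(z) - \frac{e^{z^2}}{\sqrt{\pi}}.$$

Higher order derivatives are given by

$$\operatorname{erf}^{(k)}(z) = \frac{2 (-1)^{k-1}}{\sqrt{\pi}}\, H_{k-1}(z)\, e^{-z^2} = \frac{2}{\sqrt{\pi}} \frac{d^{k-1}}{dz^{k-1}} \left( e^{-z^2} \right), \qquad k = 1, 2, \dots$$

where H are the physicists’ Hermite polynomials.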

Bürmann series

An expansion,[8] which converges more rapidly for all real values of

By keeping only the first two coefficients and choosing

Inverse functions

Given complex number z, there is not a unique complex number w satisfying