-

#1

OK, I did something stupid today while trying to set up GPU passthrough, and it locked the machine up.

After a reboot I got the GRUB rescue screen and started following this guide: https://pve.proxmox.com/wiki/Recover_From_Grub_Failure

I got an Ubuntu live CD, removed the other SSDs, which were passed directly to some VMs, and started to follow it.

**** I’ve found the fault. How do I fix this error?

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr’ not found.

Code:

sudo vgscan

Found volume group "pve" using metadata type lvm2

ubuntu@ubuntu:~$ sudo vgchange -ay

26 logical volume(s) in volume group "pve" now active

ubuntu@ubuntu:~$ sudo mkdir /media/rescue

ubuntu@ubuntu:~$ sudo mount /dev/pve/root /media/rescue/

ubuntu@ubuntu:~$ sudo mount /dev/sda2 /media/rescue/boot/efi

ubuntu@ubuntu:~$ sudo mount -t proc proc /media/rescue/proc

ubuntu@ubuntu:~$ sudo mount -t sysfs sys /media/rescue/sys

ubuntu@ubuntu:~$ sudo mount -o bind /dev /media/rescue/dev

ubuntu@ubuntu:~$ sudo mount -o bind /run /media/run

ubuntu@ubuntu:~$ sudo mount -o bind /run /media/rescue/run

ubuntu@ubuntu:~$ chroot /media/rescue

ubuntu@ubuntu:~$ sudo chroot /media/rescue

root@ubuntu:/# update-grub

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-5.13.19-4-pve

Found initrd image: /boot/initrd.img-5.13.19-4-pve

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

Found linux image: /boot/vmlinuz-5.13.19-3-pve

Found initrd image: /boot/initrd.img-5.13.19-3-pve

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

Found linux image: /boot/vmlinuz-5.13.19-2-pve

Found initrd image: /boot/initrd.img-5.13.19-2-pve

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

Found linux image: /boot/vmlinuz-5.13.19-1-pve

Found initrd image: /boot/initrd.img-5.13.19-1-pve

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

Found linux image: /boot/vmlinuz-5.11.22-7-pve

Found initrd image: /boot/initrd.img-5.11.22-7-pve

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

Found linux image: /boot/vmlinuz-5.11.22-5-pve

Found initrd image: /boot/initrd.img-5.11.22-5-pve

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

Found linux image: /boot/vmlinuz-5.11.22-4-pve

Found initrd image: /boot/initrd.img-5.11.22-4-pve

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

Found memtest86+ image: /boot/memtest86+.bin

Found memtest86+ multiboot image: /boot/memtest86+_multiboot.bin

done

root@ubuntu:/# grub-install /dev/sda

Installing for x86_64-efi platform.

File descriptor 4 (/dev/sda2) leaked on vgs invocation. Parent PID 1295811: grub-install.real

File descriptor 4 (/dev/sda2) leaked on vgs invocation. Parent PID 1295811: grub-install.real

grub-install.real: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr' not found.

root@ubuntu:/# proxmox-boot-tool format /dev/sda2

UUID="0AB5-0AB6" SIZE="536870912" FSTYPE="vfat" PARTTYPE="c12a7328-f81f-11d2-ba4b-00a0c93ec93b" PKNAME="sda" MOUNTPOINT="/boot/efi"

E: '/dev/sda2' is mounted on '/boot/efi' - exiting.

root@ubuntu:/# proxmox-boot-tool status

Re-executing '/usr/sbin/proxmox-boot-tool' in new private mount namespace..

E: /etc/kernel/proxmox-boot-uuids does not exist.

root@ubuntu:/# umount /dev/sda2

root@ubuntu:/# proxmox-boot-tool format /dev/sda2

UUID="0AB5-0AB6" SIZE="536870912" FSTYPE="vfat" PARTTYPE="c12a7328-f81f-11d2-ba4b-00a0c93ec93b" PKNAME="sda" MOUNTPOINT=""

E: '/dev/sda2' contains a filesystem ('vfat') - exiting (use --force to override)

root@ubuntu:/#

vgscan … found volume group "pve" using metadata type lvm2 … looking good

vgchange -ay … 26 logical volumes in volume group "pve" now active … so far so good

Mounted /dev/pve/root and can see the contents
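The mount sequence from the session above can be collected into one helper. This is a minimal sketch, not the wiki's procedure: the names `run` and `rescue_chroot_mounts` and the `DRY_RUN` switch are made up for illustration, and the default device paths are simply the ones used in this post. By default it only prints the commands instead of running them.

```shell
#!/bin/sh
# Print a command (DRY_RUN=1, the default) or execute it (DRY_RUN=0).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Prepare a chroot for a Proxmox root file system on LVM.
# $1 = root LV, $2 = EFI system partition, $3 = mount point.
# The defaults are assumptions taken from the session in this post.
rescue_chroot_mounts() {
    root_lv="${1:-/dev/pve/root}"
    esp="${2:-/dev/sda2}"
    target="${3:-/media/rescue}"

    run vgchange -ay                          # activate all volume groups
    run mkdir -p "$target"
    run mount "$root_lv" "$target"            # root file system first
    run mount "$esp" "$target/boot/efi"       # ESP goes to /boot/efi, not /boot
    run mount -t proc proc "$target/proc"
    run mount -t sysfs sys "$target/sys"
    run mount -o bind /dev "$target/dev"
    run mount -o bind /run "$target/run"
}
```

Calling `rescue_chroot_mounts` prints the eight commands for review. Running it as root with `DRY_RUN=0` executes them, after which `chroot /media/rescue` enters the installed system.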

Last edited: Mar 15, 2022

-

#2

The Recover From Grub Failure guide must be wrong?

I’ve installed Proxmox on a second machine to work out how to fix it, and made a couple of VMs on it.

From everything I’ve read, I don’t think you can mount a BIOS boot partition (sda1). I think it should be sda2?

root@pve:~# lsblk -o +FSTYPE,UUID /dev/sda*

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT FSTYPE UUID

sda 8:0 0 447.1G 0 disk

├─sda1 8:1 0 1007K 0 part

├─sda2 8:2 0 512M 0 part /boot/efi vfat 8365-463F

└─sda3 8:3 0 446.6G 0 part LVM2_member YpaNXr-Chct-9n7A-HYP1-oTeo-tCI4-kuYfs2

├─pve-swap 253:0 0 8G 0 lvm [SWAP] swap fb96e8b0-d5a6-4090-ba8e-04387e36371e

├─pve-root 253:1 0 96G 0 lvm / ext4 db97cf6d-8f19-4df8-88ef-bae662e95bf7

├─pve-data_tmeta 253:2 0 3.3G 0 lvm

│ └─pve-data-tpool 253:4 0 320.1G 0 lvm

│ ├─pve-data 253:5 0 320.1G 1 lvm

│ ├─pve-vm--100--disk--0 253:6 0 16G 0 lvm

│ └─pve-vm--101--disk--0 253:7 0 16G 0 lvm

└─pve-data_tdata 253:3 0 320.1G 0 lvm

└─pve-data-tpool 253:4 0 320.1G 0 lvm

├─pve-data 253:5 0 320.1G 1 lvm

├─pve-vm--100--disk--0 253:6 0 16G 0 lvm

└─pve-vm--101--disk--0 253:7 0 16G 0 lvm

sda1 8:1 0 1007K 0 part

sda2 8:2 0 512M 0 part /boot/efi vfat 8365-463F

sda3 8:3 0 446.6G 0 part LVM2_member YpaNXr-Chct-9n7A-HYP1-oTeo-tCI4-kuYfs2

├─pve-swap 253:0 0 8G 0 lvm [SWAP] swap fb96e8b0-d5a6-4090-ba8e-04387e36371e

├─pve-root 253:1 0 96G 0 lvm / ext4 db97cf6d-8f19-4df8-88ef-bae662e95bf7

├─pve-data_tmeta 253:2 0 3.3G 0 lvm

│ └─pve-data-tpool 253:4 0 320.1G 0 lvm

│ ├─pve-data 253:5 0 320.1G 1 lvm

│ ├─pve-vm--100--disk--0 253:6 0 16G 0 lvm

│ └─pve-vm--101--disk--0 253:7 0 16G 0 lvm

└─pve-data_tdata 253:3 0 320.1G 0 lvm

└─pve-data-tpool 253:4 0 320.1G 0 lvm

├─pve-data 253:5 0 320.1G 1 lvm

├─pve-vm--100--disk--0 253:6 0 16G 0 lvm

└─pve-vm--101--disk--0 253:7 0 16G 0 lvm

root@pve:~#

So is the problem with sda1 or sda2, and how do I fix it? I don’t think the guide works any more.

Last edited: Mar 13, 2022

-

#3

I think that guide is too old. sda1 is a BIOS boot partition that is not intended to be mounted. sda2 is an ESP partition, but I don’t think you should mount that as /boot. Nowadays, Proxmox uses proxmox-boot-tool instead of running grub-install manually.

-

#4

I think that guide is too old.

sda1 is a BIOS boot partition that is not intended to be mounted. sda2 is an ESP partition, but I don’t think you should mount that as /boot. Nowadays, Proxmox uses proxmox-boot-tool instead of running grub-install manually.

Thanks. From what they say, that looks correct: the installer makes 3 partitions. I’ve looked at all the guides on what to do about a GRUB fault on Debian and don’t think any would work. I’m also not sure how to use proxmox-boot-tool on a dead system.

The created partitions are:

- a 1 MB BIOS Boot Partition (gdisk type EF02)

- a 512 MB EFI System Partition (ESP, gdisk type EF00)

- a third partition spanning the set hdsize parameter or the remaining space used for the chosen storage type
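To tell these partition types apart on a live system, the `PARTTYPE` GUID that `lsblk` reports (as in the `proxmox-boot-tool format` output earlier in the thread) can be mapped to the gdisk codes listed above. The following is only a sketch: `describe_parttype` is a made-up helper name, and it covers just the three standard GPT type GUIDs relevant here.

```shell
#!/bin/sh
# Map a GPT partition type GUID to a short description.
# describe_parttype is a hypothetical helper, not part of any Proxmox tool.
describe_parttype() {
    # Lower-case the GUID so the comparison is case-insensitive.
    guid="$(printf '%s' "$1" | tr 'A-F' 'a-f')"
    case "$guid" in
        21686148-6449-6e6f-744e-656564454649)
            echo "BIOS boot partition (gdisk EF02)" ;;
        c12a7328-f81f-11d2-ba4b-00a0c93ec93b)
            echo "EFI System Partition (gdisk EF00)" ;;
        e6d6d379-f507-44c2-a23c-238f2a3df928)
            echo "Linux LVM (gdisk 8E00)" ;;
        *)
            echo "unknown" ;;
    esac
}
```

For example, `describe_parttype "$(lsblk -no PARTTYPE /dev/sda2)"` should report the EFI System Partition on the layout shown in this thread. The BIOS boot partition has no file system, which is why it cannot be mounted.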

Last edited: Mar 13, 2022

-

#5

The partitions look fine (for a modern version of Proxmox). I suggest chroot-ing (as done in the old guide) into the pve-root and running proxmox-boot-tool to fix GRUB.

EDIT: Or maybe a grub-install /dev/sda1 is enough (from within the chroot of pve-root)?

Last edited: Mar 13, 2022

-

#6

The partitions look fine (for a modern version of Proxmox). I suggest chroot-ing (as done in the old guide) into the pve-root and running proxmox-boot-tool to fix GRUB.

EDIT: Or maybe a grub-install /dev/sda1 is enough (from within the chroot of pve-root)?

Thank you. I’ve spent a full day going nowhere, so I will try that on my test machine and make sure it doesn’t do anything stupid like deleting the LVM partition.

-

#7

The partitions look fine (for a modern version of Proxmox). I suggest chroot-ing (as done in the old guide) into the pve-root and running proxmox-boot-tool to fix GRUB.

EDIT: Or maybe a grub-install /dev/sda1 is enough (from within the chroot of pve-root)?

Good call on proxmox-boot-tool. On a fully working system I tried following the guide with just a couple of changes, e.g. mounting sda2 at boot/efi, and the GRUB update and install didn’t kill it. Then I tried proxmox-boot-tool, and that didn’t kill my system either.

So now it’s the real system I’m going to run the format on and work on …. It can’t be any worse than the place I’m in now.

-

#8

Does anyone have any idea what the missing disk is and how to fix it?

root@ubuntu:/# update-grub

Generating grub configuration file …

Found linux image: /boot/vmlinuz-5.13.19-4-pve

Found initrd image: /boot/initrd.img-5.13.19-4-pve

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr’ not found.
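One way to see what the "missing disk" refers to: as far as I know, GRUB names LVM volumes as `lvmid/<VG UUID>/<LV UUID>`, so the string in the error can be split and compared against what LVM itself reports. A small sketch follows, where `parse_lvmid` is a made-up helper name.

```shell
#!/bin/sh
# Split "lvmid/<VG UUID>/<LV UUID>" into its two UUIDs.
# parse_lvmid is a hypothetical helper for illustration only.
parse_lvmid() {
    id="${1#lvmid/}"            # drop the "lvmid/" prefix
    echo "vg_uuid=${id%%/*}"    # everything before the slash
    echo "lv_uuid=${id#*/}"     # everything after the slash
}
```

The two values can then be compared with the output of `vgs -o vg_name,vg_uuid` and `lvs -o lv_name,lv_uuid`. If both UUIDs match an existing volume, the volume is present and the problem lies in how grub-probe reads the LVM metadata.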

fiona

Proxmox Staff Member

-

#9

Hi,

you may be hitting Debian bug 987008. According to message 21 there, the workaround is to change something in the LVM, e.g. adding a new volume to the thin pool might work, and then re-run update-grub.
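A sketch of what that workaround might look like from inside the chroot. The helper names, the temporary volume name `grubnudge`, the 1G size, and the thin pool name `data` (taken from the lsblk output earlier in the thread) are all assumptions, and removing the temporary volume afterwards is my addition rather than part of the bug report. It prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Print a command (DRY_RUN=1, the default) or execute it (DRY_RUN=0).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Change the LVM metadata by adding a thin volume, then regenerate grub.cfg.
# $1 = volume group, $2 = thin pool, $3 = temporary volume name (assumptions).
nudge_thin_pool() {
    vg="${1:-pve}"
    pool="${2:-data}"
    tmp="${3:-grubnudge}"

    run lvcreate -V 1G -T "$vg/$pool" -n "$tmp"   # new thin volume rewrites the metadata
    run update-grub
    run lvremove -y "$vg/$tmp"                    # clean up (assumed to be safe afterwards)
}
```

Review the printed commands first, then run `DRY_RUN=0 nudge_thin_pool` as root inside the chroot.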

-

#13

This worked for me. Thanks!

I tried everything for a week, but in the end I just pulled all the VM backups from the disk, which were only a day old at the time, formatted, and started again. One of my VMs was an Emby server with a separate ZFS drive passed directly to it, and I was glad I could still see all that data.

It was not what I wanted to do, but I was getting nowhere fast, or even slow.

Thanks .

-

#14

HI,

I have this exact problem, but..

I have no room to lvextend the root partition.

lvreduce seems to corrupt the install. Good thing I’m testing everything on a clone.

Still, it repairs GRUB anyway, so I was able to confirm, booting off two drives, that my Proxmox is still alive but locked behind the broken GRUB.

I can’t believe this suddenly happened. I don’t even think it was caused by an update. The day before, it booted fine.

Is there any other way to "nudge" the LV so that update-grub works, other than playing with its boundaries?

Or is there ANY other way at all to simply restore GRUB with Proxmox? In the past, I know there were some automated tools to do this, but from the threads I’ve read about Proxmox GRUB repairs I suspect it can’t be that easy.

Any help will be appreciated.

-

#15

HI,

I have this exact problem, but..

I have no room to lvextend the root partition.

lvreduce seems to corrupt the install. Good thing I’m testing everything on a clone. Still, it repairs GRUB anyway, so I was able to confirm, booting off two drives, that my Proxmox is still alive but locked behind the broken GRUB.

I can’t believe this suddenly happened. I don’t even think it was caused by an update. The day before, it booted fine. Is there any other way to "nudge" the LV so that update-grub works, other than playing with its boundaries?

Or is there ANY other way at all to simply restore GRUB with Proxmox? In the past, I know there were some automated tools to do this, but from the threads I’ve read about Proxmox GRUB repairs I suspect it can’t be that easy. Any help will be appreciated.

Instead of playing with lvextend, you can nudge the LVs another way:

I did a vgrename of pve to something else (like pvee),

reverted it back to pve,

ran update-grub, and it worked.

(Note: it took a while, probably because it changes the names for all my 52 LVs.)

*Warning: on another occasion, I tried renaming the LV (root) instead, as I thought it would be gentler (root to roooty and back to root).

update-grub worked and the instance booted, but the screen filled with errors about a read-only file system and so on.

I’m still trying to determine whether I’m dealing with a failed drive in the first place. I just wanted to warn people off trying to be as clever as I was, in case it’s related. The pve vgrename method above worked on the other drive.

* I really can’t confirm that the method above is safe, but it can work.
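The rename round trip described above might look like the sketch below. The helper names and the temporary name `pvee` are illustrative, and the post does not say whether the rename was done inside or outside the chroot, so treat the ordering as an assumption and try it on a clone first. It prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Print a command (DRY_RUN=1, the default) or execute it (DRY_RUN=0).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Rename the volume group away and back so LVM rewrites its metadata,
# then regenerate grub.cfg. $1 = VG name, $2 = temporary name (assumptions).
nudge_vgrename() {
    vg="${1:-pve}"
    tmp="${2:-pvee}"

    run vgrename "$vg" "$tmp"
    run vgrename "$tmp" "$vg"
    run update-grub
}
```

Given the warning about read-only file system errors after renaming the root LV, only the volume group rename is sketched here.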

https://forum.proxmox.com/threads/ext4-fs-error.116822/

That thread shows the errors I got. I suspect the M.2 drive is dying or something is wrong with the motherboard, but at the moment I don’t have a clear idea.

Last edited: Oct 19, 2022

-

#16

Hi,

I also had the GRUB error /usr/sbin/grub-probe: error: disk `lvmid/xxxxxxxxxxxx’ not found.

This is what I did:

lvextend -L +1g /dev/pve/root

resize2fs /dev/pve/root

update-grub

and rebooted.

Now GRUB is OK, but I have another issue during the boot process.

Superblock checksum does not match superblock while trying to open /dev/mapper/pve-root

….

….

…. you might try running e2fsck with an alternate superblock

….

….

mount: mounting /dev/mapper/pve-root on /root failed: Bad message.

Failed to mount /dev/mapper/pve-root as root file system.

After that I only get a BusyBox prompt.

I tried to fix the bad block by booting Proxmox from the CD in advanced install mode (Linux prompt), but without success.

I’m using an NVMe SSD with 3 partitions: /dev/nvme0n1p1, /dev/nvme0n1p2 and /dev/nvme0n1p3.

Any suggestions or recommendations are welcome.

Regards,

Last edited: Jan 7, 2023

-

#17

Hi,

I also had the GRUB error /usr/sbin/grub-probe: error: disk `lvmid/xxxxxxxxxxxx’ not found.

This is what I did:

Now GRUB is OK, but I have another issue during the boot process.

After that I only get a BusyBox prompt.

I tried to fix the bad block by booting Proxmox from the CD in advanced install mode (Linux prompt), but without success.

I’m using an NVMe SSD with 3 partitions: /dev/nvme0n1p1, /dev/nvme0n1p2 and /dev/nvme0n1p3.

View attachment 45328

Any suggestions or recommendations are welcome.

Regards,

I spent a week trying to fix my problem, and in the end I just gave up and started again from the backups. What I learned is: don’t keep the backups on the same disk the system boots from, and do daily backups of any VMs that change.

If you have all your backups somewhere you can get to easily, it is just a matter of installing Proxmox and restoring from the backups, and you are up and running in under an hour.

-

#18

I spent a week trying to fix my problem, and in the end I just gave up and started again from the backups. What I learned is: don’t keep the backups on the same disk the system boots from, and do daily backups of any VMs that change.

If you have all your backups somewhere you can get to easily, it is just a matter of installing Proxmox and restoring from the backups, and you are up and running in under an hour.

Hi peter,

Thanks, I’m also considering this option, but I would like to move the VM and LXC data disks from my previous LVM pve partition to the new partition.

-

#19

Hi peter,

Thanks, I’m also considering this option, but I would like to move the VM and LXC data disks from my previous LVM pve partition to the new partition.

I did that with one of my VMs that I hadn’t backed up. I just created a new VM with the same number and replaced its data disk with the old one.

That looked easier than working out how to write all the config files.

-

#20

Hi,

I also had the GRUB error /usr/sbin/grub-probe: error: disk `lvmid/xxxxxxxxxxxx’ not found.

This is what I did:

Now GRUB is OK, but I have another issue during the boot process.

After that I only get a BusyBox prompt.

I tried to fix the bad block by booting Proxmox from the CD in advanced install mode (Linux prompt), but without success.

I’m using an NVMe SSD with 3 partitions: /dev/nvme0n1p1, /dev/nvme0n1p2 and /dev/nvme0n1p3.

View attachment 45328

Any suggestions or recommendations are welcome.

Regards,

Yes, as Peter suggested.

I’ve personally seen similar issues on two Proxmox nodes that each had a single M.2 drive as the OS/boot drive.

- In the process I cloned the M.2 to an SSD, and the instance ran fine, without this error.

- Pushed fresh backups, reinstalled, rejoined the cluster, imported the backups. Less than 15 minutes.

I have never had an issue with any SATA SSD. Dedicate your M.2 storage to your VMs instead.


Arch Linux


#1 2021-06-17 17:03:49

[SOLVED] LUKS2 with UEFI/GRUB provides "error: disk lvmid not found"

Hi Arch community!

Each year for the past 3 years I’ve tried setting up Arch using UEFI/GRUB2 with LUKS2 disk encryption on different laptops. It’s basically become a tradition at this point.

Every time I’ve been thwarted with errors I seemingly couldn’t solve within a couple of days so every time I’ve decided to use the good old MBR/GRUB and be done with it.

This time I’ve decided would be different but again I reached a wall I couldn’t climb, which is why I would like you smart people’s help.

Unfortunately I cannot remember the errors I got the previous years but this time it’s: `error: disk lvmid/x/y not found` when booting the system as it puts me in the grub rescue shell.

I also went deep into the "GRUB2 is not compatible with LUKS2" issue and found the bug: https://savannah.gnu.org/bugs/?55093 and this gentleman’s post: https://askubuntu.com/a/1259424

I followed it by adding the `--pbkdf pbkdf2` parameter on the `cryptsetup` binary. (cryptsetup --verbose --cipher aes-xts-plain64 --key-size 512 --hash sha512 --iter-time 5000 --use-random --pbkdf pbkdf2 luksFormat /dev/nvme0n1p2) This gives me the same error as above however.

I was going to try using LUKS1 next but the error being the same got me questioning if I’m really encountering the "GRUB2+LUKS2" incompatibility or if I’m doing something completely wrong on the UEFI side of things.

After 3 years of using Arch I have the installation process noted down in a separate file which I use step by step alongside the Arch installation page but it’s quite long so I’m going to try and post the most relevant commands.

The filesystem layout looks something like this (except sdc became nvme0n1) (https://wiki.archlinux.org/title/Dm-cry … VM_on_LUKS):

/dev/sdc (DISK)
  /dev/sdc1 (BOOT) [1GB EFI SYSTEM]
  /dev/sdc2 (ENCRYPTED LVM PARTITION) [1TB LINUX FILESYSTEM]
    cryptroot
      lvm pv
        lvm vg vg0
          lvm lv
            swap [32GB SWAP]
            root [300GB EXT4]
            home [6??GB EXT4]

```
# I mount the volumes
$ mount /dev/vg0/root /mnt
$ mount /dev/nvme0n1p1 /mnt/efi
$ mount /dev/vg0/home /mnt/home

# pacstrap and arch-chroot happens

# I move keyboard to the front, add keymap, encrypt and lvm to the HOOKS param. I do this because I used to use AZERTY and want my keyboard to be loaded when the disk encryption prompt appears. I use QWERTY now though so this may not be necessary.
$ vim /etc/mkinitcpio.conf
HOOKS=(base udev autodetect keyboard keymap modconf block encrypt lvm2 filesystems fsck)
$ mkinitcpio -p linux
$ pacman -S grub efibootmgr

# I temporarily add the volume path, later when it boots I switch it to a /dev/disk/by-uuid path.
$ vim /etc/default/grub
GRUB_CMDLINE_LINUX="cryptdevice=/dev/nvme0n1p2:cryptroot"

# This is the part I’m very unsure about. With MBR I put everything under /boot. Now config is divided between /boot and /efi.
$ grub-install --target=x86_64-efi --efi-directory=/efi --bootloader-id=arch-linux-grub
$ grub-mkconfig -o /boot/grub/grub.cfg
```

That’s basically it. After that I unmount and close cryptsetup.

I can provide additional info of course, either from the grub rescue shell or from the thumb drive.

I eagerly await your valuable insights (even if not related to the error)!!

Last edited by knoebst (2021-06-17 21:25:08)


Arch Linux


#1 2019-09-28 16:46:25

[solved] Grub error ‘lvmid/xxx’ not found

I tried to set up a system following [https://wiki.archlinux.org/index.php/Dm … ion_(GRUB)].

I’m running a UEFI system, so I omitted the BIOS boot partition. Therefore, /dev/sda2 becomes /dev/sda1 and /dev/sda3 becomes /dev/sda2 on my computer. And I named the volume group SysVolGroup. Therefore lsblk shows:

In the mkinitcpio.conf, I added the hooks as shown in the wiki:

to my /etc/default/grub (after chroot’ing into the nascent system).

After installing Grub and creating the config with

I rebooted. Immediately after starting GRUB, I was presented with a screen saying

In the generated /boot/grub/grub.cfg this corresponds to the following line:

ls -l /dev/disk/by-id contains the line

ls -l /dev/disk/by-label contains

So my question is: What can I do to get my system to boot properly?

Besides taking some time to read up on things, it was rather smooth sailing until I tried to reboot after installation.

Last edited by Mox (2019-09-28 18:38:47)

#2 2019-09-28 17:48:59

Re: [solved] Grub error ‘lvmid/xxx’ not found

How should the system read the key for decryption from the encrypted drive?

Last edited by Swiggles (2019-09-28 17:51:12)

#3 2019-09-28 18:38:04

Re: [solved] Grub error ‘lvmid/xxx’ not found

That actually was it. I was so sure I had formatted to Luks1 that I forgot to recheck this when it did not work. So thank you for pointing me in the right direction. It boots now.
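Since the fix here came down to which LUKS version the partition was actually formatted with, a quick check can save time. The sketch below reads the output of `cryptsetup luksDump` and prints the version number. `luks_version` is a made-up helper name, and it assumes the dump contains a `Version:` line, which is the case for the headers I have seen.

```shell
#!/bin/sh
# Read `cryptsetup luksDump` output on stdin and print the LUKS version.
# luks_version is a hypothetical helper for illustration only.
luks_version() {
    # The first line whose first field is "Version:" carries the number.
    awk '$1 == "Version:" { print $2; exit }'
}
```

Usage, as root, with the encrypted partition substituted for the placeholder: `cryptsetup luksDump /dev/sdXN | luks_version`. A result of 2 on a setup that expects LUKS1 points at the same mistake described in this post.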

How should the system read the key for decryption from the encrypted drive?

This actually works. See https://wiki.archlinux.org/index.php/Dm … rase_twice. When the kernel is starting, the keyfile inside the initramfs is available, so it can thus use it to re-unlock the encrypted drive. Kind of a mind-twister, but it seems nobody has found a better way to avoid having to enter the password a second time yet (please correct me, if I am mistaken there).

Anyways, this problem is [solved].

#4 2019-09-28 18:50:46

Re: [solved] Grub error ‘lvmid/xxx’ not found

This actually works. See https://wiki.archlinux.org/index.php/Dm … rase_twice. When the kernel is starting, the keyfile inside the initramfs is available, so it can thus use it to re-unlock the encrypted drive. Kind of a mind-twister, but it seems nobody has found a better way to avoid having to enter the password a second time yet (please correct me, if I am mistaken there).

Oh, I didn’t think about it. Neat.

I am glad your problem is solved


Booting from Hard Disk error, Entering rescue mode

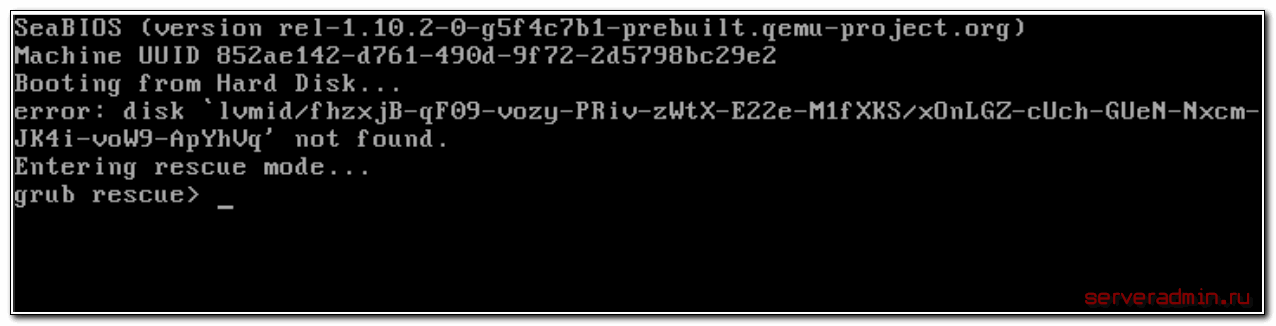

An example of solving a problem that sends a chill down your spine when you see it on a production server. After a planned reboot, a virtual machine failed to boot, showed an error, and dropped into grub rescue. This is not the first time I have run into this, so I have a rough recovery plan in my head. I am sharing it with you.

Introduction

There was a heavily loaded virtual machine that needed more cores and RAM. Its uptime was about half a year. Nothing hinted at trouble. I warned that the downtime would be about 30 seconds and rebooted the machine. As soon as I saw the VM’s console, I understood that the fun was about to start, with an unpredictable outcome. The developers added to the adrenaline by telling me they had no backups 🙂

For those not yet familiar with this, let me explain. The initial boot loader could not find the /boot partition to continue booting. Instead, it reported that it could not see the partition with the given lvmid, where boot lives, and could not boot any further. The machine is in grub rescue mode. There can be many reasons for ending up in this mode. I always run into something new, but the approach to fixing it is roughly the same, and I will describe it below. Then I will explain what happened with this particular VM.

grub rescue

Only four commands are available in grub rescue mode:

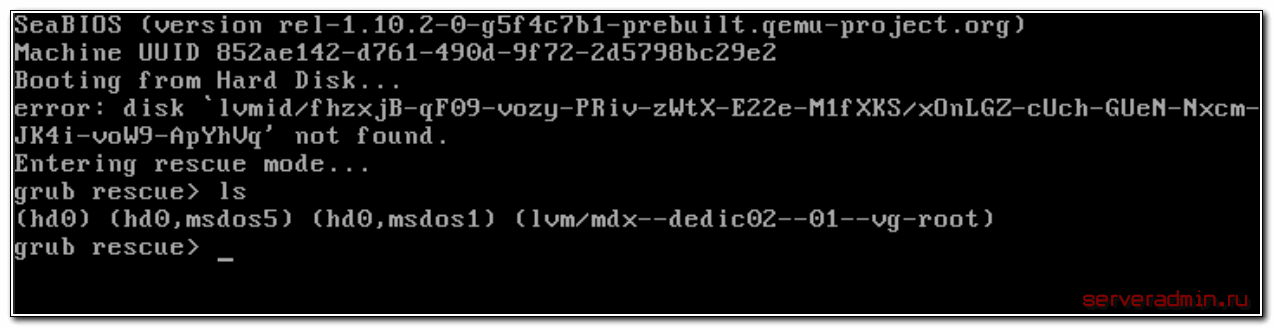

First, let’s use the ls command to see which partitions GRUB can see.

In my case there are several separate disk partitions and an LVM volume. By the way, in my case /boot is located on an LVM volume, but for some reason the boot loader could not boot from it. You may have no LVM at all, and the problem may be something else. For example, if grub.cfg specifies the UUID of the partition to boot from (it could be an mdadm array) and that partition has disappeared for some reason or changed its UUID, you will get exactly this error.

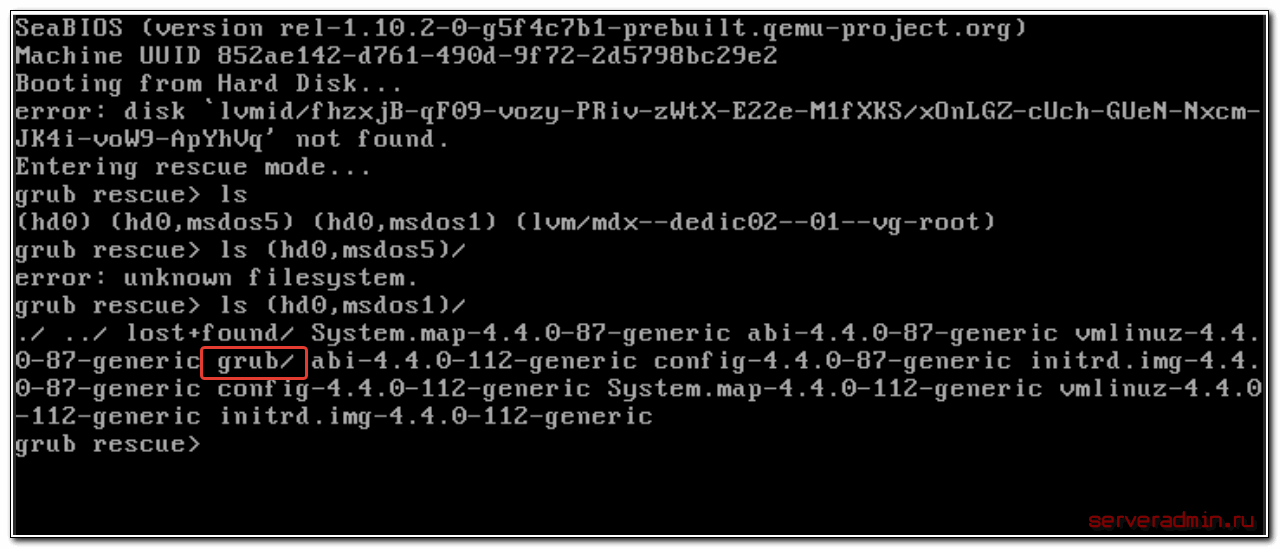

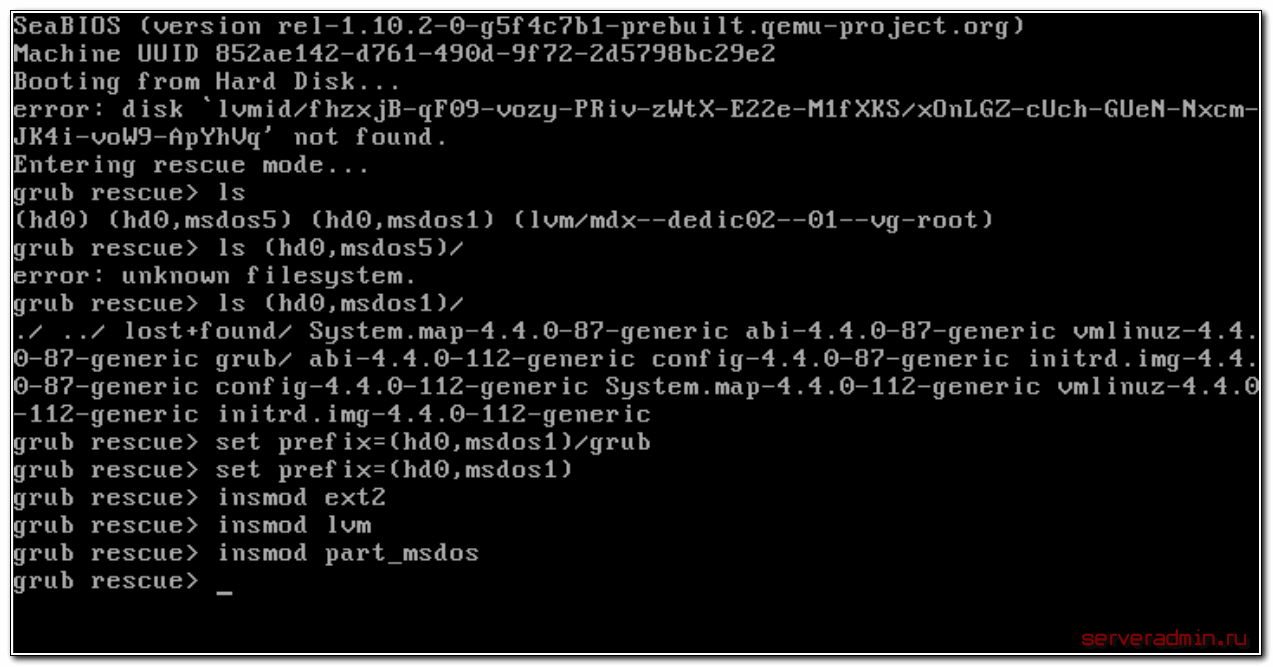

Now we need to find the partition that holds the boot loader. The first part of the boot loader, which is written to the disk’s MBR, is very primitive and can do almost nothing. It did not even identify the disk partitions properly, deciding for some reason that the file system there is msdos, although it is not. We need to check all partitions of disk hd0 and find the real boot loader. We check with these commands:

I found the /boot partition I was looking for on msdos1. I recognised it by its contents: the partition has a /grub directory, which holds the second part of the boot loader. The directory may instead be called /grub2 or /boot/grub. Tell the boot loader to use this partition for the following commands.

Next, load the required modules. Which ones are needed depends on the situation. Just in case, here are the most common ones:

It is worth starting with no modules at all and then adding them as your situation requires. Finally, load the normal module and run the command of the same name:

After that you should see the standard GRUB boot menu. From there you will boot into the operating system.
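The original command listings for these steps did not survive, so the following is only a reconstruction of the usual sequence, not the author's exact commands. To keep it checkable, it is written as a small shell helper that prints the lines you would type at the `grub rescue>` prompt. The name `grub_rescue_commands` and the defaults `(hd0,msdos1)` and `/grub` are assumptions based on the description above.

```shell
#!/bin/sh
# Print the commands to type at the "grub rescue>" prompt.
# $1 = GRUB partition, $2 = directory holding the GRUB files.
# Hypothetical helper; the defaults follow the case described in the text.
grub_rescue_commands() {
    part="$1"
    dir="$2"
    [ -n "$part" ] || part='(hd0,msdos1)'
    [ -n "$dir" ] || dir='/grub'

    cat <<EOF
ls
ls $part/
set prefix=$part$dir
set root=$part
insmod normal
normal
EOF
}
```

If the GRUB files live in /boot/grub on the root partition instead, `grub_rescue_commands '(hd0,msdos2)' /boot/grub` prints the matching variant. Extra modules such as `insmod lvm` would go before `insmod normal` when they are needed.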

Updating the boot loader

The rest of the fix depends on what exactly broke. It may be enough to simply reinstall the boot loader:

This command reinstalls the boot loader code in the MBR, which will pick up the /boot partition you are currently booted from. If that does not help, make the necessary changes to the GRUB config and regenerate it with the command:

Then install it to the disk:

The GRUB config lives in different places on different distributions. I also cannot say in advance which changes to make; it depends on the problem. Most likely you will have to search for the answer yourself if my recommendations do not fix things straight away.

Why the system did not boot

Now for what happened in my case. The system root / was on an LVM volume together with /boot. At some point the root volume was grown by extending the LVM volume with another disk. All of this was done on the fly, without rebooting. I did it a long time ago, and the server had not been rebooted since. I do not know why, but this operation caused GRUB to stop booting from that LVM volume.

The UUIDs of the physical volume and the logical volume did not change. So the information shown at the start of boot, in the lvmid disk error, is correct. The UUIDs are right. I only worked out that the cause was the disk resize from similar reports on the internet. I came across several people with a similar problem who had also changed the root volume beforehand. It looks like some kind of system bug, possibly specific to a particular system.

In my case the disk for some reason had a separate 500 MB partition with an ext2 file system. That was where the boot loader I booted from in rescue mode lived. I do not know where this partition came from. In theory, if it had been created automatically during installation, it should have held the current /boot. But no, it was not in fstab and was not in use. I did not spend long working out why; I simply mounted this partition, updated GRUB on it, and wrote the updated GRUB to the MBR. After that the system booted successfully from this partition.

If anyone knows why my boot loader could not boot from the LVM volume even though the UUID is correct, please tell me. I am very curious, because the situation was unpleasant and I do not understand it at all. I often extend the root LVM volume on the fly, but this is the first time it has broken the boot loader. GRUB has long been able to boot from LVM, and no extra steps are needed for that.

What else to try to fix booting

If none of the above helps, there are these further options:

- There is a problem with the data on the partition itself. The /boot or root partition is unreadable, destroyed, or has a broken file system. Try repairing it with fsck.

- If /boot could not be repaired, it has to be recreated. Boot from a live CD. Find the partition with the boot loader, or create a new one. Format it, install the boot loader on it, and write it to the MBR, pointing at the newly created partition.

If nothing helped and you do not understand what to do, have a look at this GRUB guide. It describes everything well and in detail.

One more tip. If the data itself is intact, it is often easier to set up a new VM, attach the old VM’s disk to it, and move all the data over. That way you can predict the recovery time reliably. Usually an hour is enough for everything. When you start repairing a crashed system, you never know exactly how long recovery will take. In my case I fixed booting in 30 minutes and started the machine. Then I spent another 2 hours on a copy of the VM working out what had happened and trying to find a fix that did not involve reinstalling the VM. I gained some experience, but if I had moved everything to a new VM straight away, I would have spent less time.


/usr/sbin/grub-probe: error: disk `lvmid/********’ not found.

peter247

Member

I’ve been told I made a double post about how to fix my problem, which is not really the same question I asked at first.

O.K something stupid today while trying to use gpu passthrough which locked it up.

after a reboot I got the grub recover screen and started following this : https://pve.proxmox.com/wiki/Recover_From_Grub_Failure

Got a Ubuntu live cd , removed the other SSD drives which was passed to some VMs direct and started to follow it .

**** i’ve found the fault , how do I fix the :—-

/usr/sbin/grub-probe: error: disk `lvmid/vOy1fK-X2mn-83hH-h36r-9S2m-9QfZ-2ZYdTl/YdnS0p-FaHM-D2yz-DMca-5bla-10Hy-VDYIvr’ not found.

peter247

Member

The recover from grub failure most be wrong ?

I’ve loaded proxmox on a second machine to workout how to fix it and made a couple of VMs on it.

I don’t think from all I’ve read you can’t mount a bios boot partition sda1 ? I think it should be sda2 ?

# lsblk -o +FSTYPE,UUID /dev/sda*
NAME                         MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT FSTYPE      UUID
sda                            8:0    0 447.1G  0 disk
├─sda1                         8:1    0  1007K  0 part
├─sda2                         8:2    0   512M  0 part /boot/efi  vfat        8365-463F
└─sda3                         8:3    0 446.6G  0 part            LVM2_member YpaNXr-Chct-9n7A-HYP1-oTeo-tCI4-kuYfs2
  ├─pve-swap                 253:0    0     8G  0 lvm  [SWAP]     swap        fb96e8b0-d5a6-4090-ba8e-04387e36371e
  ├─pve-root                 253:1    0    96G  0 lvm  /          ext4        db97cf6d-8f19-4df8-88ef-bae662e95bf7
  ├─pve-data_tmeta           253:2    0   3.3G  0 lvm
  │ └─pve-data-tpool         253:4    0 320.1G  0 lvm
  │   ├─pve-data             253:5    0 320.1G  1 lvm
  │   ├─pve-vm--100--disk--0 253:6    0    16G  0 lvm
  │   └─pve-vm--101--disk--0 253:7    0    16G  0 lvm
  └─pve-data_tdata           253:3    0 320.1G  0 lvm
    └─pve-data-tpool         253:4    0 320.1G  0 lvm
      ├─pve-data             253:5    0 320.1G  1 lvm
      ├─pve-vm--100--disk--0 253:6    0    16G  0 lvm
      └─pve-vm--101--disk--0 253:7    0    16G  0 lvm
sda1                           8:1    0  1007K  0 part
sda2                           8:2    0   512M  0 part /boot/efi  vfat        8365-463F
sda3                           8:3    0 446.6G  0 part            LVM2_member YpaNXr-Chct-9n7A-HYP1-oTeo-tCI4-kuYfs2
├─pve-swap                   253:0    0     8G  0 lvm  [SWAP]     swap        fb96e8b0-d5a6-4090-ba8e-04387e36371e
├─pve-root                   253:1    0    96G  0 lvm  /          ext4        db97cf6d-8f19-4df8-88ef-bae662e95bf7
├─pve-data_tmeta             253:2    0   3.3G  0 lvm
│ └─pve-data-tpool           253:4    0 320.1G  0 lvm
│   ├─pve-data               253:5    0 320.1G  1 lvm
│   ├─pve-vm--100--disk--0   253:6    0    16G  0 lvm
│   └─pve-vm--101--disk--0   253:7    0    16G  0 lvm
└─pve-data_tdata             253:3    0 320.1G  0 lvm
  └─pve-data-tpool           253:4    0 320.1G  0 lvm
    ├─pve-data               253:5    0 320.1G  1 lvm
    ├─pve-vm--100--disk--0   253:6    0    16G  0 lvm
    └─pve-vm--101--disk--0   253:7    0    16G  0 lvm

root@pve:

So is the problem with sda1 or sda2, and how do I fix it? I don't think the guide works any more.
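One way to approach the sda1-versus-sda2 question is to look at the filesystem types: in the `lsblk` listing above only sda2 carries a `vfat` filesystem, while the 1007K sda1 has no filesystem at all and so cannot be mounted. A minimal sketch, assuming `lsblk` output reduced to NAME and FSTYPE columns; the function name `find_vfat_parts` is made up for illustration:

```shell
#!/bin/sh
# Hypothetical helper: read "NAME FSTYPE" pairs on stdin and print the
# names whose filesystem is vfat (the type an EFI system partition uses).
find_vfat_parts() {
    awk '$2 == "vfat" { print $1 }'
}

# On a live system this could be fed from (assumed invocation):
#   lsblk -rno NAME,FSTYPE /dev/sda | find_vfat_parts
# Here the values from the listing above are used instead.
printf '%s\n' 'sda' 'sda1' 'sda2 vfat' 'sda3 LVM2_member' | find_vfat_parts
```

With the values from the listing this prints only `sda2`, which matches the partition that the second machine mounts at /boot/efi.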

Source

Hi Arch community!

Each year for the past 3 years I’ve tried setting up Arch using UEFI/GRUB2 with LUKS2 disk encryption on different laptops. It’s basically become a tradition at this point.

Every time I’ve been thwarted with errors I seemingly couldn’t solve within a couple of days so every time I’ve decided to use the good old MBR/GRUB and be done with it.

This time I’ve decided would be different but again I reached a wall I couldn’t climb, which is why I would like you smart people’s help.

Unfortunately I cannot remember the errors I got the previous years but this time it’s: `error: disk lvmid/x/y not found` when booting the system as it puts me in the grub rescue shell.

I also went deep into the "GRUB2 is not compatible with LUKS2" issue and found the bug: https://savannah.gnu.org/bugs/?55093 and this gentleman's post: https://askubuntu.com/a/1259424

I followed it by adding the `--pbkdf pbkdf2` parameter on the `cryptsetup` binary. (cryptsetup --verbose --cipher aes-xts-plain64 --key-size 512 --hash sha512 --iter-time 5000 --use-random --pbkdf pbkdf2 luksFormat /dev/nvme0n1p2) This gives me the same error as above however.

I was going to try using LUKS1 next but the error being the same got me questioning if I'm really encountering the "GRUB2+LUKS2" incompatibility or if I'm doing something completely wrong on the UEFI side of things.

After 3 years of using Arch I have the installation process noted down in a separate file which I use step by step alongside the Arch installation page but it’s quite long so I’m going to try and post the most relevant commands.

The filesystem layout looks something like this (except sdc became nvme0n1) (https://wiki.archlinux.org/title/Dm-cry … VM_on_LUKS):

/dev/sdc (DISK)
  /dev/sdc1 (BOOT) [1GB EFI SYSTEM]
  /dev/sdc2 (ENCRYPTED LVM PARTITION) [1TB LINUX FILESYSTEM]
    cryptroot
      lvm pv
        lvm vg vg0
          lvm lv
            swap [32GB SWAP]
            root [300GB EXT4]
            home [6??GB EXT4]

```
# I mount the volumes
$ mount /dev/vg0/root /mnt
$ mount /dev/nvme0n1p1 /mnt/efi
$ mount /dev/vg0/home /mnt/home

# pacstrap and arch-chroot happens

# I move keyboard to the front, add keymap, encrypt and lvm to the HOOKS param. I do this because I used to use AZERTY and want my keyboard to be loaded when the disk encryption prompt appears. I use QWERTY now though so this may not be necessary.
$ vim /etc/mkinitcpio.conf
HOOKS=(base udev autodetect keyboard keymap modconf block encrypt lvm2 filesystems fsck)
$ mkinitcpio -p linux
$ pacman -S grub efibootmgr

# I temporarily add the volume path, later when it boots I switch it to a /dev/disk/by-uuid path.
$ vim /etc/default/grub
GRUB_CMDLINE_LINUX="cryptdevice=/dev/nvme0n1p2:cryptroot"

# This is the part I'm very unsure about. With MBR I put everything under /boot. Now config is divided between /boot and /efi.
$ grub-install --target=x86_64-efi --efi-directory=/efi --bootloader-id=arch-linux-grub
$ grub-mkconfig -o /boot/grub/grub.cfg
```

That’s basically it. After that I unmount and close cryptsetup.
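With this layout /boot lives inside the encrypted root volume, so GRUB itself has to unlock the LUKS container before it can read /boot/grub. One thing that may be worth checking is whether `GRUB_ENABLE_CRYPTODISK=y` is set in /etc/default/grub before `grub-install` is run. A minimal sketch of such a check; the helper name `has_cryptodisk` is hypothetical, and whether this setting is the cause of the error in this thread is an assumption, not something the post confirms:

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given GRUB defaults file contains an
# uncommented GRUB_ENABLE_CRYPTODISK=y line (optionally quoted).
has_cryptodisk() {
    grep -Eq '^[[:space:]]*GRUB_ENABLE_CRYPTODISK="?y"?[[:space:]]*$' "$1"
}

# Demonstration on a throwaway file rather than the real /etc/default/grub.
tmp=$(mktemp)
printf '%s\n' 'GRUB_CMDLINE_LINUX="cryptdevice=/dev/nvme0n1p2:cryptroot"' > "$tmp"
if has_cryptodisk "$tmp"; then
    echo "cryptodisk enabled"
else
    echo "cryptodisk not enabled"
fi
rm -f "$tmp"
```

Run against a file that only contains the `GRUB_CMDLINE_LINUX` line from the post, the check reports that cryptodisk is not enabled.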

I can provide additional info of course, either from the grub rescue shell or from the thumb drive.

I eagerly await your valuable insights (even if not related to the error)!!

Knoebst

Last edited by knoebst (2021-06-17 21:25:08)

p.H wrote: ↑2022-11-12 13:05

What exactly did you do ? Please post the exact and complete commands and output.

Installing the grub-pc-bin package should not do anything but install the package files. "vgs leak" messages may happen only when running grub-install or grub-mkconfig/update-grub and should be harmless.

I tried to install it using Synaptic, but now I wonder whether I ticked "grub-pc" instead of "grub-pc-bin". Never mind, though, as the package is now installed, as shown by:

Code:

dpkg --get-selections "grub*"
grub-common                 install
grub-customizer             install
grub-efi                    install
grub-efi-amd64              install
grub-efi-amd64-bin          install
grub-efi-amd64-signed       install
grub-pc-bin                 install
grub2-common                install
grub2-splashimages          install

p.H wrote: ↑2022-11-12 13:05

The boot drives have GPT partition tables but I do not see "BIOS boot" partitions, which are required to install GRUB for legacy (BIOS) boot. So I wonder how GRUB was installed in the first place.

I don't know either, but the BIOS setting was definitely "UEFI CSM -> UEFI Only" (no legacy option was involved AFAIK, so I guess CSM implies some sort of legacy setup).

p.H wrote: ↑2022-11-12 13:05

I would be interested in the output of "fdisk -l" and the report generated by bootinfoscript (from package boot-info-script). If needed you should be able to create a BIOS boot partition in any empty space of at least 100 kB (yes, kilobytes) such as the one between the primary partition table and the first partition.

Code:

fdisk -l

Disco /dev/sdb: 1,82 TiB, 2000398934016 bytes, 3907029168 sectores

Modelo de disco: ST2000DM008-2FR1

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 4096 bytes

Tamaño de E/S (mínimo/óptimo): 4096 bytes / 4096 bytes

Tipo de etiqueta de disco: gpt

Identificador del disco: 5DE38EA7-338A-43CE-A8BF-599FC28B64AA

Disposit. Comienzo Final Sectores Tamaño Tipo

/dev/sdb1 2048 3906054143 3906052096 1,8T Linux RAID

Disco /dev/sda: 931,52 GiB, 1000207286272 bytes, 1953529856 sectores

Modelo de disco: SanDisk SSD PLUS

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 512 bytes

Tamaño de E/S (mínimo/óptimo): 512 bytes / 512 bytes

Tipo de etiqueta de disco: gpt

Identificador del disco: 90C6CA8E-D556-4DA6-8124-B0096C99DDA4

Disposit. Comienzo Final Sectores Tamaño Tipo

/dev/sda1 2048 1050623 1048576 512M Sistema EFI

/dev/sda2 1050624 1952929791 1951879168 930,7G Sistema de ficheros de Linux

Disco /dev/sdc: 931,52 GiB, 1000207286272 bytes, 1953529856 sectores

Modelo de disco: SanDisk SSD PLUS

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 512 bytes

Tamaño de E/S (mínimo/óptimo): 512 bytes / 512 bytes

Tipo de etiqueta de disco: gpt

Identificador del disco: 0548E76D-E49D-4AD7-A13B-4AD67D34AD05

Disposit. Comienzo Final Sectores Tamaño Tipo

/dev/sdc1 2048 1050623 1048576 512M Sistema EFI

/dev/sdc2 1050624 1952929791 1951879168 930,7G Sistema de ficheros de Linux

Disco /dev/sdd: 1,82 TiB, 2000398934016 bytes, 3907029168 sectores

Modelo de disco: ST2000DM008-2FR1

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 4096 bytes

Tamaño de E/S (mínimo/óptimo): 4096 bytes / 4096 bytes

Tipo de etiqueta de disco: gpt

Identificador del disco: 5DE38EA7-338A-43CE-A8BF-599FC28B64AA

Disposit. Comienzo Final Sectores Tamaño Tipo

/dev/sdd1 2048 3906054143 3906052096 1,8T Linux RAID

Disco /dev/md127: 511 MiB, 535822336 bytes, 1046528 sectores

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 512 bytes

Tamaño de E/S (mínimo/óptimo): 512 bytes / 512 bytes

Tipo de etiqueta de disco: dos

Identificador del disco: 0x00000000

Disco /dev/md126: 930,6 GiB, 999226867712 bytes, 1951614976 sectores

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 512 bytes

Tamaño de E/S (mínimo/óptimo): 512 bytes / 512 bytes

Disco /dev/mapper/vg1-lvroot: 128 GiB, 137438953472 bytes, 268435456 sectores

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 512 bytes

Tamaño de E/S (mínimo/óptimo): 512 bytes / 512 bytes

Disco /dev/mapper/vg1-lvhome: 802,6 GiB, 861786865664 bytes, 1683177472 sectores

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 512 bytes

Tamaño de E/S (mínimo/óptimo): 512 bytes / 512 bytes

Disco /dev/md125: 1,82 TiB, 1999763406848 bytes, 3905787904 sectores

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 4096 bytes

Tamaño de E/S (mínimo/óptimo): 4096 bytes / 4096 bytes

Disco /dev/mapper/vg2-lvdatos: 927,21 GiB, 995585163264 bytes, 1944502272 sectores

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 4096 bytes

Tamaño de E/S (mínimo/óptimo): 4096 bytes / 4096 bytes

Disco /dev/mapper/vg2-lvbackuppc: 931,21 GiB, 999880130560 bytes, 1952890880 sectores

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 4096 bytes

Tamaño de E/S (mínimo/óptimo): 4096 bytes / 4096 bytes

Disco /dev/mapper/vg2-lvswap: 4 GiB, 4294967296 bytes, 8388608 sectores

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 4096 bytes

Tamaño de E/S (mínimo/óptimo): 4096 bytes / 4096 bytes
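The gap p.H mentions can be estimated from the listing above: the first partitions start at sector 2048, and a standard GPT with 128 entries of 128 bytes occupies sectors 0 to 33 on a disk with 512-byte sectors. A rough sketch of the arithmetic; the function name `gap_bytes` is made up, and the value 34 for the first usable sector is an assumption that should be confirmed on the real disk (for example with `sgdisk -p`):

```shell
#!/bin/sh
# Hypothetical helper: size in bytes of the free space between the end of
# the primary GPT and the start of the first partition.
#   $1 = first usable sector (assumed 34 for a default GPT)
#   $2 = start sector of the first partition
#   $3 = logical sector size in bytes
gap_bytes() {
    first_usable=$1
    first_part_start=$2
    sector_size=$3
    echo $(( (first_part_start - first_usable) * sector_size ))
}

gap_bytes 34 2048 512   # prints 1031168
```

That is 2014 sectors, or 1007 KiB, comfortably above the 100 kB minimum quoted above.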

Code:

Boot Info Script 0.78 [09 October 2019]

============================= Boot Info Summary: ===============================

=> Grub2 (v2.00) is installed in the MBR of /dev/sda and looks at sector

1953155072 of the same hard drive for core.img. core.img is at this

location and looks for

(lvmid/jwecKl-QRBL-Yvag-FKhy-Pnl2-dI2x-33gWVQ/TECboT-6Oqv-ox8N-P2NH-3DRZ-23

lq-DCFCt1)/boot/grub. It also embeds following components:

modules

---------------------------------------------------------------------------

fshelp ext2 diskfilter lvm part_gpt mdraid1x biosdisk

---------------------------------------------------------------------------

=> No boot loader is installed in the MBR of /dev/sdb.

=> Grub2 (v2.00) is installed in the MBR of /dev/sdc and looks at sector

1953155072 of the same hard drive for core.img. core.img is at this

location and looks for

(lvmid/jwecKl-QRBL-Yvag-FKhy-Pnl2-dI2x-33gWVQ/TECboT-6Oqv-ox8N-P2NH-3DRZ-23

lq-DCFCt1)/boot/grub. It also embeds following components:

modules

---------------------------------------------------------------------------

fshelp ext2 diskfilter lvm part_gpt mdraid1x biosdisk

---------------------------------------------------------------------------

=> No boot loader is installed in the MBR of /dev/sdd.

sda1: __________________________________________________________________________

File system: linux_raid_member

Boot sector type: FAT32

Boot sector info:

sda2: __________________________________________________________________________

File system: linux_raid_member

Boot sector type: -

Boot sector info:

sdb1: __________________________________________________________________________

File system: linux_raid_member

Boot sector type: -

Boot sector info:

sdc1: __________________________________________________________________________

File system: linux_raid_member

Boot sector type: FAT32

Boot sector info:

sdc2: __________________________________________________________________________

File system: linux_raid_member

Boot sector type: -

Boot sector info:

sdd1: __________________________________________________________________________

File system: linux_raid_member

Boot sector type: -

Boot sector info:

md/uefi: _______________________________________________________________________

File system: vfat

Boot sector type: FAT32

Boot sector info: No errors found in the Boot Parameter Block.

Mounting failed: mount: /tmp/BootInfo-A6vTIRjE/MDRaid/md/uefi: /dev/md127 ya está montado en /boot/efi.

md/roothome: ___________________________________________________________________

File system: LVM2_member

Boot sector type: -

Boot sector info:

md/tamaran:datos: ______________________________________________________________

File system: LVM2_member

Boot sector type: -

Boot sector info:

============================ Drive/Partition Info: =============================

Drive: sda _____________________________________________________________________

Disco /dev/sda: 931,52 GiB, 1000207286272 bytes, 1953529856 sectores

Modelo de disco: SanDisk SSD PLUS

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 512 bytes

Tamaño de E/S (mínimo/óptimo): 512 bytes / 512 bytes

Partition Boot Start Sector End Sector # of Sectors Id System

/dev/sda1 1 1,953,529,855 1,953,529,855 ee GPT

GUID Partition Table detected.

Partition Attrs Start Sector End Sector # of Sectors System

/dev/sda1 2,048 1,050,623 1,048,576 EFI System partition

/dev/sda2 1,050,624 1,952,929,791 1,951,879,168 Data partition (Linux)

Attributes: R=Required, N=No Block IO, B=Legacy BIOS Bootable, +=More bits set

Drive: sdb _____________________________________________________________________

Disco /dev/sdb: 1,82 TiB, 2000398934016 bytes, 3907029168 sectores

Modelo de disco: ST2000DM008-2FR1

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 4096 bytes

Tamaño de E/S (mínimo/óptimo): 4096 bytes / 4096 bytes

Partition Boot Start Sector End Sector # of Sectors Id System

/dev/sdb1 1 3,907,029,167 3,907,029,167 ee GPT

GUID Partition Table detected.

Partition Attrs Start Sector End Sector # of Sectors System

/dev/sdb1 2,048 3,906,054,143 3,906,052,096 RAID partition (Linux)

Attributes: R=Required, N=No Block IO, B=Legacy BIOS Bootable, +=More bits set

Drive: sdc _____________________________________________________________________

Disco /dev/sdc: 931,52 GiB, 1000207286272 bytes, 1953529856 sectores

Modelo de disco: SanDisk SSD PLUS

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 512 bytes

Tamaño de E/S (mínimo/óptimo): 512 bytes / 512 bytes

Partition Boot Start Sector End Sector # of Sectors Id System

/dev/sdc1 1 1,953,529,855 1,953,529,855 ee GPT

GUID Partition Table detected.

Partition Attrs Start Sector End Sector # of Sectors System

/dev/sdc1 2,048 1,050,623 1,048,576 EFI System partition

/dev/sdc2 1,050,624 1,952,929,791 1,951,879,168 Data partition (Linux)

Attributes: R=Required, N=No Block IO, B=Legacy BIOS Bootable, +=More bits set

Drive: sdd _____________________________________________________________________

Disco /dev/sdd: 1,82 TiB, 2000398934016 bytes, 3907029168 sectores

Modelo de disco: ST2000DM008-2FR1

Unidades: sectores de 1 * 512 = 512 bytes

Tamaño de sector (lógico/físico): 512 bytes / 4096 bytes

Tamaño de E/S (mínimo/óptimo): 4096 bytes / 4096 bytes

Partition Boot Start Sector End Sector # of Sectors Id System

/dev/sdd1 1 3,907,029,167 3,907,029,167 ee GPT

GUID Partition Table detected.

Partition Attrs Start Sector End Sector # of Sectors System

/dev/sdd1 2,048 3,906,054,143 3,906,052,096 RAID partition (Linux)

Attributes: R=Required, N=No Block IO, B=Legacy BIOS Bootable, +=More bits set

"blkid" output: ________________________________________________________________

Device UUID TYPE LABEL

/dev/mapper/vg1-lvhome 953eb556-5f00-4b4b-a62e-aff4831796b7 ext4

/dev/mapper/vg1-lvroot a3088bf8-8a02-44aa-a9b2-64aa8d70047f ext4

/dev/mapper/vg2-lvbackuppc 9bc25994-f569-47e5-88cd-2ce4b87d76ae ext4

/dev/mapper/vg2-lvdatos b0c0a5b3-16ea-4607-8c29-c5026cdc54fd ext4

/dev/mapper/vg2-lvswap cced8fe0-e77d-4caa-97d9-c629071e81e6 swap

/dev/md125 bwPTmn-2fCX-EPCD-cQY9-AAEo-mwkW-ZXPtGg LVM2_member

/dev/md126 tULnOX-O3F8-vqIS-pH3X-kbzK-vG1y-yha4wR LVM2_member

/dev/md127 F05D-8337 vfat

/dev/sda1 38dffdad-3784-17a0-a9f0-a3dabbf28c39 linux_raid_member sysresccd:uefi

/dev/sda2 45bdc492-9c8e-a915-f14d-8ce590516430 linux_raid_member sysresccd:roothome

/dev/sdb1 b4695e2c-f5ae-5ed5-b431-172e6608680b linux_raid_member tamaran:datos

/dev/sdc1 38dffdad-3784-17a0-a9f0-a3dabbf28c39 linux_raid_member sysresccd:uefi

/dev/sdc2 45bdc492-9c8e-a915-f14d-8ce590516430 linux_raid_member sysresccd:roothome

/dev/sdd1 b4695e2c-f5ae-5ed5-b431-172e6608680b linux_raid_member tamaran:datos

========================= "ls -l /dev/disk/by-id" output: ======================

total 0

lrwxrwxrwx 1 root root 9 nov 12 13:24 ata-SanDisk_SSD_PLUS_1000GB_1925BD479010 -> ../../sda

lrwxrwxrwx 1 root root 10 nov 12 13:24 ata-SanDisk_SSD_PLUS_1000GB_1925BD479010-part1 -> ../../sda1

lrwxrwxrwx 1 root root 10 nov 12 13:24 ata-SanDisk_SSD_PLUS_1000GB_1925BD479010-part2 -> ../../sda2

lrwxrwxrwx 1 root root 9 nov 12 13:24 ata-SanDisk_SSD_PLUS_1000GB_1925BD479607 -> ../../sdc

lrwxrwxrwx 1 root root 10 nov 12 13:24 ata-SanDisk_SSD_PLUS_1000GB_1925BD479607-part1 -> ../../sdc1

lrwxrwxrwx 1 root root 10 nov 12 13:24 ata-SanDisk_SSD_PLUS_1000GB_1925BD479607-part2 -> ../../sdc2

lrwxrwxrwx 1 root root 9 nov 12 13:24 ata-ST2000DM008-2FR102_WFL5AGCQ -> ../../sdb

lrwxrwxrwx 1 root root 10 nov 12 13:24 ata-ST2000DM008-2FR102_WFL5AGCQ-part1 -> ../../sdb1

lrwxrwxrwx 1 root root 9 nov 12 13:24 ata-ST2000DM008-2FR102_WFL5AGJP -> ../../sdd

lrwxrwxrwx 1 root root 10 nov 12 13:24 ata-ST2000DM008-2FR102_WFL5AGJP-part1 -> ../../sdd1

lrwxrwxrwx 1 root root 10 nov 12 13:24 dm-name-vg1-lvhome -> ../../dm-1

lrwxrwxrwx 1 root root 10 nov 12 13:24 dm-name-vg1-lvroot -> ../../dm-0

lrwxrwxrwx 1 root root 10 nov 12 13:24 dm-name-vg2-lvbackuppc -> ../../dm-3

lrwxrwxrwx 1 root root 10 nov 12 13:24 dm-name-vg2-lvdatos -> ../../dm-2

lrwxrwxrwx 1 root root 10 nov 12 13:24 dm-name-vg2-lvswap -> ../../dm-4

lrwxrwxrwx 1 root root 10 nov 12 13:24 dm-uuid-LVM-5nNlcdEkBER3f59eST9T5ihUOtBZWf1yhu7IAr3ti1mwHWcEWQbE1D3eaAPSCXnX -> ../../dm-0

lrwxrwxrwx 1 root root 10 nov 12 13:24 dm-uuid-LVM-5nNlcdEkBER3f59eST9T5ihUOtBZWf1yP2Eub11iZfVk15k0fujiey323XKu52UA -> ../../dm-1

lrwxrwxrwx 1 root root 10 nov 12 13:24 dm-uuid-LVM-KJfxw1r34zac76GrwEmsY8XSp4Fjgolr3hXSQ3LKSKUaGmSmRMSyIBl8SNFCQAJc -> ../../dm-3

lrwxrwxrwx 1 root root 10 nov 12 13:24 dm-uuid-LVM-KJfxw1r34zac76GrwEmsY8XSp4FjgolrU3Wu0DzIOubOT7rNiaEE4zMkNgB5FL63 -> ../../dm-2

lrwxrwxrwx 1 root root 10 nov 12 13:24 dm-uuid-LVM-KJfxw1r34zac76GrwEmsY8XSp4FjgolrV5JIA7C3MZGxmuFsuYG813nnAI7O2xg8 -> ../../dm-4

lrwxrwxrwx 1 root root 11 nov 12 13:24 lvm-pv-uuid-bwPTmn-2fCX-EPCD-cQY9-AAEo-mwkW-ZXPtGg -> ../../md125

lrwxrwxrwx 1 root root 11 nov 12 13:24 lvm-pv-uuid-tULnOX-O3F8-vqIS-pH3X-kbzK-vG1y-yha4wR -> ../../md126

lrwxrwxrwx 1 root root 11 nov 12 13:24 md-name-sysresccd:roothome -> ../../md126

lrwxrwxrwx 1 root root 11 nov 12 13:24 md-name-sysresccd:uefi -> ../../md127

lrwxrwxrwx 1 root root 11 nov 12 13:24 md-name-tamaran:datos -> ../../md125

lrwxrwxrwx 1 root root 11 nov 12 13:24 md-uuid-38dffdad:378417a0:a9f0a3da:bbf28c39 -> ../../md127

lrwxrwxrwx 1 root root 11 nov 12 13:24 md-uuid-45bdc492:9c8ea915:f14d8ce5:90516430 -> ../../md126

lrwxrwxrwx 1 root root 11 nov 12 13:24 md-uuid-b4695e2c:f5ae5ed5:b431172e:6608680b -> ../../md125

lrwxrwxrwx 1 root root 9 nov 12 13:24 wwn-0x5000c500d64b7c3c -> ../../sdd

lrwxrwxrwx 1 root root 10 nov 12 13:24 wwn-0x5000c500d64b7c3c-part1 -> ../../sdd1

lrwxrwxrwx 1 root root 9 nov 12 13:24 wwn-0x5000c500d64b7ead -> ../../sdb

lrwxrwxrwx 1 root root 10 nov 12 13:24 wwn-0x5000c500d64b7ead-part1 -> ../../sdb1

lrwxrwxrwx 1 root root 9 nov 12 13:24 wwn-0x5001b444a8ec5865 -> ../../sdc

lrwxrwxrwx 1 root root 10 nov 12 13:24 wwn-0x5001b444a8ec5865-part1 -> ../../sdc1

lrwxrwxrwx 1 root root 10 nov 12 13:24 wwn-0x5001b444a8ec5865-part2 -> ../../sdc2

lrwxrwxrwx 1 root root 9 nov 12 13:24 wwn-0x5001b444a8ece23a -> ../../sda

lrwxrwxrwx 1 root root 10 nov 12 13:24 wwn-0x5001b444a8ece23a-part1 -> ../../sda1

lrwxrwxrwx 1 root root 10 nov 12 13:24 wwn-0x5001b444a8ece23a-part2 -> ../../sda2

========================= "ls -R /dev/mapper/" output: =========================

/dev/mapper:

control

vg1-lvhome

vg1-lvroot

vg2-lvbackuppc

vg2-lvdatos

vg2-lvswap

================================ Mount points: =================================

Device Mount_Point Type Options

/dev/mapper/vg1-lvhome /home ext4 (rw,noatime,nodiratime)

/dev/mapper/vg1-lvroot / ext4 (rw,noatime,nodiratime,errors=remount-ro)

/dev/mapper/vg2-lvbackuppc /var/lib/backuppc ext4 (rw,relatime)

/dev/mapper/vg2-lvdatos /usr/local/share/datos ext4 (rw,noatime,nodiratime)

/dev/md127 /boot/efi vfat (rw,relatime,fmask=0077,dmask=0077,codepage=437,iocharset=ascii,shortname=mixed,utf8,errors=remount-ro)

=============================== StdErr Messages: ===============================

/usr/sbin/bootinfoscript: línea 2553: 1683177472S: valor demasiado grande para la base (el elemento de error es "1683177472S")

It looks like bootinfoscript gives some interesting information right at the start: core.img appears to hold the wrong lvmid. Should I search for that file and delete it?
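Before deleting anything, the ID embedded in core.img can be compared with the volumes that currently exist. Device-mapper identifies LVM volumes as `LVM-` followed by the VG UUID and the LV UUID with the dashes removed, which is the form seen in the `dm-uuid-LVM-...` links in the report. A small sketch of the conversion; `lvmid_to_dmuuid` is a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: turn GRUB's "lvmid/<VG UUID>/<LV UUID>" into the
# device-mapper UUID form "LVM-<VG UUID><LV UUID>" (dashes and slash removed).
lvmid_to_dmuuid() {
    id=${1#lvmid/}
    printf 'LVM-%s\n' "$(printf '%s' "$id" | tr -d '/-')"
}

want=$(lvmid_to_dmuuid 'lvmid/jwecKl-QRBL-Yvag-FKhy-Pnl2-dI2x-33gWVQ/TECboT-6Oqv-ox8N-P2NH-3DRZ-23lq-DCFCt1')
echo "$want"

# On the affected machine one could then look for a matching link
# (assumed check, not run here):
#   ls /dev/disk/by-id/ | grep -F "dm-uuid-$want" || echo "no such volume"
```

In the `ls -l /dev/disk/by-id` output above, none of the `dm-uuid-LVM-` links begin with `jwecKl`, which would be consistent with core.img pointing at a volume group that no longer exists.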

p.H wrote: ↑2022-11-12 13:05

According to the output of the commands, /boot/efi is a RAID1 array with metadata 1.2 (at the beginning). Such layout cannot be read by the UEFI firmware. The UEFI firmware can only read a plain (non RAID) EFI partition or a RAID1 array with metadata 1.0 (at the end) — but this is a hack.

UEFI boot redundancy is tricky to achieve, so I recommend sticking with legacy boot if it works.

So if I back up the contents of /boot/efi elsewhere, dismantle /dev/md127, format /dev/sd[ac]1 as FAT32 and restore the backup, I would be able to boot from EFI, wouldn't I? Even if I don't achieve boot redundancy.
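Before dismantling the array, the metadata version p.H refers to can be confirmed from `mdadm --detail`, which prints a `Version :` line. A minimal sketch that reads that output on stdin; the helper name `md_metadata_version` is hypothetical, and the sample text is illustrative rather than copied from this machine:

```shell
#!/bin/sh
# Hypothetical helper: extract the metadata version from "mdadm --detail"
# output supplied on stdin.
md_metadata_version() {
    awk -F' : ' '/^[[:space:]]*Version[[:space:]]*:/ {
        gsub(/[[:space:]]/, "", $2)
        print $2
        exit
    }'
}

# Real use (assumed invocation): mdadm --detail /dev/md127 | md_metadata_version
printf '%s\n' '/dev/md127:' '           Version : 1.2' '        Raid Level : raid1' \
    | md_metadata_version
```

A result of 1.2 would match p.H's description of metadata stored at the beginning of the member partitions.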


This document (7024181) is provided subject to the disclaimer at the end of this document.

Environment

SUSE Linux Enterprise Server 12 for POWER including all Service Packs

SUSE Linux Enterprise Server 15 for POWER including all Service Packs

Situation

After rebooting the system, grub2 can’t find the root volume anymore and is stuck with an error message similar to:

error: disk 'lvmid/eBY8sJ-l5YB-nVGq-dhld-lbDa-hQxT-P8eXl9/zbpPOS-yUU1-pgTr-QLJC-5jeK-d3ts-gNwtzw' not found.

The root volume group (LVM) consisted of one physical volume (PV) but was running out of space, so the VG was extended with a new PV. That PV is located on a disk without partitions, i.e. a raw disk.

Resolution

On Power systems every boot disk must have partitions and its first partition must be PReP type.

An example of two root VG PVs which has PReP partition:

If a disk holding a root VG PV is a raw disk, or does not have a PReP partition as its first partition, a new disk has to be used and partitioned, and the existing LVM physical extents (PEs) have to be moved to the PV located on that correctly partitioned disk.

GRUB2 uses PReP partitions to save boot data, thus raw disks, formatted as physical volumes (LVM), do not have a reserved space for the bootloader and boot may fail.

To correct the boot issue, the correct workflow would be (for demonstration purposes let’s assume the raw disk PV is /dev/mapper/360000000000000000000000000000000):

- assign another LUN to the system (e.g. /dev/mapper/360000000000000000000000000000010)

- boot from ISO

- set up multipath if required (see link in 'Additional Information')

- chroot to the installed system (see link in 'Additional Information')

- update grub2 package to latest version! (old versions of GRUB2 had issues if root VG was extended over multiple PVs); eg: zypper up grub2*

- create a GPT partition table on the newly added disk; eg. via fdisk /dev/mapper/360000000000000000000000000000010

- create a PReP partition of about 8MB size on the newly added disk; eg. /dev/mapper/360000000000000000000000000000010-part1

- create a second partition consuming all free space on the LUN, eg: /dev/mapper/360000000000000000000000000000010-part2

- use `pvcreate` to create a PV on the second partition of the newly added disk; eg: pvcreate /dev/mapper/360000000000000000000000000000010-part2

- extend the root VG with the newly created PV; eg: vgextend system /dev/mapper/360000000000000000000000000000010-part2

- use `pvmove` to move all physical extents (PEs) from the raw disk PV to the newly added PV; eg: pvmove /dev/mapper/360000000000000000000000000000000 /dev/mapper/360000000000000000000000000000010-part2

- modify /etc/default/grub_installdevice to refer to first partition on all disks where root VG is located

- reinstall the bootloader to the first partition on all disks where the root VG is located; eg: grub2-install /dev/mapper/360000000000000000000000000000010-part1 and continue with the first partitions on the remaining root volume group disks

- regenerate bootloader configuration: grub2-mkconfig -o /boot/grub2/grub.cfg
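The LVM and bootloader part of the workflow above can be sketched as a dry run that only prints the commands, so nothing is changed until they have been reviewed. The function name `print_prep_migration` is hypothetical, the interactive partitioning step is left out, the VG name `system` is taken from the article's own example, and using the new disk's `-part2` as the `pvmove` target is an assumption based on where the PV was created:

```shell
#!/bin/sh
# Dry run only: print the LVM and GRUB2 commands from the list above for a
# given raw-disk PV and a newly partitioned LUN, without executing them.
#   $1 = raw disk currently used as PV
#   $2 = newly assigned LUN (already has -part1 PReP and -part2)
#   $3 = name of the root volume group
print_prep_migration() {
    old_pv=$1
    new_lun=$2
    vg=$3
    echo "pvcreate ${new_lun}-part2"
    echo "vgextend ${vg} ${new_lun}-part2"
    echo "pvmove ${old_pv} ${new_lun}-part2"
    echo "grub2-install ${new_lun}-part1"
    echo "grub2-mkconfig -o /boot/grub2/grub.cfg"
}

print_prep_migration \
    /dev/mapper/360000000000000000000000000000000 \
    /dev/mapper/360000000000000000000000000000010 \
    system
```

Printing rather than executing keeps the sketch safe to run anywhere; `grub2-install` would still need to be repeated for the first partition of every other disk in the root VG, as the list states.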

Cause

NOTE: This issue is only seen on POWER, other architectures are not affected as it’s caused by an issue in OpenFirmware.

Whenever there is more than one disk/LUN in the LVM volume group, OpenFirmware cannot find the bootloader, so there must be a PReP partition on each LUN and the bootloader needs to be installed (or refreshed in case of any changes) on all disks/LUNs.

Additional Information

Disclaimer

This Support Knowledgebase provides a valuable tool for SUSE customers and parties interested in our products and solutions to acquire information, ideas and learn from one another. Materials are provided for informational, personal or non-commercial use within your organization and are presented «AS IS» WITHOUT WARRANTY OF ANY KIND.

- Document ID: 7024181

- Creation Date: 15-Oct-2019

- Modified Date: 24-Oct-2022

- SUSE Linux Enterprise Server

- SUSE Linux Enterprise Server for SAP Applications



Qortal1Windozz0

- Level 1

- Posts: 1

- Joined: Mon Dec 20, 2021 7:30 pm

LVM: error: disk ‘lvmid/(uuid)’ not found. Entering grub rescue

Aloha! I keep having boot issues after I add a second disk. I thought maybe I could avoid rebooting, but that's not true. The system completes POST but never reaches GRUB; instead it lands me on a black screen that says:

error: disk ‘lvmid/(uuid)’ not found.

Entering rescue mode …

grub rescue> _

What do I need to learn to command my computer to boot? I'm attempting to run a Qortal data node, if I can ever get past this problem.

Relevant hardware: Gigabyte gbace with a glued-on Celeron N3150. An external TB SSD was /dev/sda and a TB HDD was /dev/sdb before I added it to the VG. I'm still a noob, though my skills at the command line have been increasing as I deal with new and more complex issues. TYVM for any time you have to help.


jwiz

- Level 4

- Posts: 276

- Joined: Tue Dec 20, 2016 6:59 am

Re: LVM: error: disk ‘lvmid/(uuid)’ not found. Entering grub rescue

Post

by jwiz » Thu Dec 23, 2021 8:12 am

You’ve somehow managed to mess up grub so that it now doesn’t find the boot loader installation at startup.

For further info how to remedy this look here: https://www.howtoforge.com/tutorial/rep … ub-rescue/

Basically, you run the ‘set’ command to see the current grub default values, then do an ‘ls’ to figure out where the hdd with the grub installation now is.

Then do a ‘set prefix’ and ‘set root’ to point grub to that correct destination.

After that do an ‘insmod normal’ to load the module for normal start and then run the ‘normal’ command to boot the system.

Once you've succeeded in booting into your system, reinstall GRUB to repair the broken boot installation (typically `sudo grub-install` on the boot disk followed by `sudo update-grub`).
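The rescue-prompt sequence jwiz describes can be sketched as follows. The helper only prints the commands that would be typed at `grub rescue>`; the name `print_rescue_cmds` is hypothetical, the device `hd0,gpt2` is a placeholder for whatever `ls` shows on the affected machine, and the `/boot/grub` path assumes /boot is not a separate partition:

```shell
#!/bin/sh
# Hypothetical helper: print the grub rescue commands for a given GRUB
# device name as reported by 'ls', e.g. "hd0,gpt2" or "lvm/vg-root".
print_rescue_cmds() {
    dev=$1
    echo "set root=(${dev})"
    echo "set prefix=(${dev})/boot/grub"
    echo "insmod normal"
    echo "normal"
}

print_rescue_cmds 'hd0,gpt2'
```

If `insmod normal` fails with the chosen device, the prefix probably points at the wrong place and another entry from `ls` is worth trying.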


Ubuntu 20.04 clean install fails with grub error

I'm trying to install Ubuntu 20.04 on a system with two SSDs and one HDD. I'd like to use RAID0 across the SSDs for the OS, swap, and a cache for the HDD. Thus EFI is native on the first GPT partition, and root, home, etc. are all on LVM. I guess it is likely the RAID that's causing the grub issues, but I can't figure out how to resolve them. I'm not using encryption, and this setup worked fine in an Ubuntu 18.04 VM earlier this week.

Error halting installation:

Code:

Executing 'grub-install /dev/sda' failed. This is a fatal error.

/var/log/syslog (filtered)

ubuntu ubiquity: grub-probe: error: disk `lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n’ not found.

ubuntu ubiquity: grub-probe: error: disk `lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n’ not found.

ubuntu ubiquity: /usr/sbin/grub-probe: error: disk `lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n’ not found.

ubuntu ubiquity: message repeated 2 times: [ /usr/sbin/grub-probe: error: disk `lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n’ not found.]

ubuntu grub-installer: grub-install: error: disk `lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n’ not found.

ubuntu grub-installer: error: Running 'grub-install --force "/dev/sdb"' failed.

Manual disk layout

/dev/sda1 efi (autodetected as efi during install)
/dev/sda2 lvm pv
/dev/sdb1 efi (autodetected as efi during install)
/dev/sdb2 lvm pv
/dev/sdc lvm pv

/vg_ml1/lv_root - raid0 on sda2 sdb2 (set as ext4 / during install)
/vg_ml1/lv_swap - raid0 on sda2 sdb2 (autodetected as swap during install)
/vg_ml1/lv_cache - raid0 on sda2 sdb2 (cache for lv_home)
/vg_ml1/lv_home_ssd - raid1 on sda2 sdb2 (unassigned during install)
/vg_ml1/lv_home - 100% of sdc (set as /home during install)

blkid (removed live cd items)

Code:

root@ubuntu:/# blkid | grep -v loop
/dev/sda1: LABEL_FATBOOT="EFI_0" LABEL="EFI_0" UUID="B2A0-A1AA" TYPE="vfat" PARTLABEL="EFI" PARTUUID="b1aa1788-b3b6-431c-ae89-b3ce50d736ee"
/dev/sdb1: LABEL_FATBOOT="EFI_1" LABEL="EFI_1" UUID="8C4A-CFD3" TYPE="vfat" PARTLABEL="EFI" PARTUUID="9d63359b-1165-4323-b7b4-804a69367d30"
/dev/sda2: UUID="eEfA9v-wQ1a-sBQ3-5t4x-oS33-h0zQ-kECQ0n" TYPE="LVM2_member" PARTUUID="a3f54acd-e8ac-4d06-8dc1-c311fcae2969"
/dev/sdb2: UUID="jvoDwD-grcT-BqIL-YFLY-GBJJ-eRdC-Cr9805" TYPE="LVM2_member" PARTUUID="4973df34-e312-4429-b743-e9aaf4a028c4"
/dev/sdc: UUID="vBNV2M-yA22-dpY4-6hFT-a7rF-CibM-OY66q2" TYPE="LVM2_member"
/dev/mapper/vg_ml1-lv_home: UUID="fb6c2b91-b6d2-4ee1-b59e-5f40428b5a13" TYPE="ext4"
/dev/mapper/vg_ml1-lv_root: UUID="355b3cc1-82e9-4986-88ed-71c450aff185" TYPE="ext4"
/dev/mapper/vg_ml1-lv_root_rimage_0: UUID="355b3cc1-82e9-4986-88ed-71c450aff185" TYPE="ext4"

LVM

Code:

root@ubuntu:/# pvs
  PV         VG     Fmt  Attr PSize    PFree
  /dev/sda2  vg_ml1 lvm2 a--  <465.26g  7.16g
  /dev/sdb2  vg_ml1 lvm2 a--  <476.44g 18.40g
  /dev/sdc   vg_ml1 lvm2 a--   931.51g     0
root@ubuntu:/# vgs
  VG     #PV #LV #SN Attr   VSize  VFree
  vg_ml1   3   4   0 wz--n- <1.83t 25.56g
root@ubuntu:/# lvs
  LV          VG     Attr       LSize   Pool             Origin          Data%  Meta%  Move Log Cpy%Sync Convert
  lv_home     vg_ml1 Cwi-aoC--- 931.51g [lv_cache_cpool] [lv_home_corig] 29.06  9.68            0.00
  lv_home_ssd vg_ml1 Rwi-a-r--- 360.00g                                                         100.00
  lv_root     vg_ml1 rwi-aor--- 100.00g
  lv_swap     vg_ml1 rwi---r---  32.00g

Root VG/LV details

Code:

root@ubuntu:/# vgdisplay

  --- Volume group ---

  VG Name               vg_ml1

  System ID

  Format                lvm2

  Metadata Areas        3

  Metadata Sequence No  11

  VG Access             read/write

  VG Status             resizable

  MAX LV                0

  Cur LV                4

  Open LV               2

  Max PV                0

  Cur PV                3

  Act PV                3

  VG Size               <1.83 TiB

  PE Size               4.00 MiB

  Total PE              479541

  Alloc PE / Size       472997 / 1.80 TiB

  Free PE / Size        6544 / 25.56 GiB

  VG UUID               16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z

root@ubuntu:/# lvdisplay

  --- Logical volume ---

  LV Path                /dev/vg_ml1/lv_swap

  LV Name                lv_swap

  VG Name                vg_ml1

  LV UUID                TkmuqW-aXrR-vFHv-PybY-WO0z-E8JF-jlgBJF

  LV Write Access        read/write

  LV Creation host, time ubuntu, 2020-04-23 21:28:41 +0100

  LV Status              NOT available

  LV Size                32.00 GiB

  Current LE             8192

  Segments               1

  Allocation             inherit

  Read ahead sectors     auto

  --- Logical volume ---

  LV Path                /dev/vg_ml1/lv_root

  LV Name                lv_root

  VG Name                vg_ml1

  LV UUID                xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n

  LV Write Access        read/write

  LV Creation host, time ubuntu, 2020-04-23 21:32:37 +0100

  LV Status              available

  # open                 1

  LV Size                100.00 GiB

  Current LE             25600

  Segments               1

  Allocation             inherit

  Read ahead sectors     auto

  - currently set to     256

  Block device           253:7

update-grub from /target chroot

Code:

root@ubuntu:/# update-grub2

Sourcing file `/etc/default/grub'

Sourcing file `/etc/default/grub.d/init-select.cfg'

Generating grub configuration file ...

grub-probe: error: disk `lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n' not found.

grub-probe: error: disk `lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n' not found.

Found linux image: /boot/vmlinuz-5.4.0-26-generic

Found initrd image: /boot/initrd.img-5.4.0-26-generic

/usr/sbin/grub-probe: error: disk `lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n' not found.

/usr/sbin/grub-probe: error: disk `lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n' not found.

/usr/sbin/grub-probe: error: disk `lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n' not found.

Adding boot menu entry for UEFI Firmware Settings

done

grub-probe doesn’t seem to be raid aware:

Code:

root@ubuntu:/# grub-probe --device /dev/sda1

fat

root@ubuntu:/# grub-probe --device /dev/sda

grub-probe: error: unknown filesystem.

root@ubuntu:/# grub-probe --device /dev/mapper/vg_ml1-lv_home

grub-probe: error: disk `lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/wQ7o4e-cGat-F4xQ-6tyv-jX5H-uyBO-q2XSNX' not found.

root@ubuntu:/# grub-probe --device /dev/mapper/vg_ml1-lv_root

grub-probe: error: disk `lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n' not found.

root@ubuntu:/# grub-probe --device /dev/mapper/vg_ml1-lv_root_rimage_0

ext2

lv_root_rimage_0 is one element (image) of the raid that makes up lv_root.
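To repeat the grub-probe checks above over every device-mapper node in one go, something like the following might help. It is only a sketch: probe_devices is a made-up helper name, and the GRUB_PROBE override is an assumption added so the loop can be tried with a stand-in command. By default it calls the real grub-probe with the same --device option used above.

```shell
#!/bin/sh
# probe_devices: hypothetical helper. Runs grub-probe on each device given
# and prints one line per device: the detected filesystem, or FAILED.
# GRUB_PROBE may name a replacement command (an assumption, for testing);
# it defaults to the real grub-probe.
probe_devices() {
    for dev in "$@"; do
        if fs=$("${GRUB_PROBE:-grub-probe}" --device "$dev" 2>/dev/null); then
            printf '%s: %s\n' "$dev" "$fs"
        else
            printf '%s: FAILED\n' "$dev"
        fi
    done
}

# Usage (as root, inside the chroot):
#   probe_devices /dev/mapper/vg_ml1-*
```

Any LV that prints FAILED while its rimage sub-LV reports a filesystem is showing the same symptom as lv_root here.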

Last edited by djerkg; April 24th, 2020 at 03:51 PM.

Reason: correction

-

Re: Ubuntu 20.04 clean install fails with grub error

I removed lv_root and recreated it as a standard LV, same size using space from both sda2 and sdb2. Installation succeeds without issue. Is grub just incompatible with raid0 on LVM?
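The exact commands for that swap were not posted, so the following is only a guess at what they could look like. It is a dry run: the hypothetical lvcreate_cmd helper just prints an lvcreate line for a given layout and executes nothing. The VG, LV name, size and PVs are the ones from this thread; the raid0 and raid1 variants are included only for comparing layouts.

```shell
#!/bin/sh
# lvcreate_cmd: hypothetical helper that only PRINTS an lvcreate command
# for the requested layout. Nothing is executed, so it is safe to run.
lvcreate_cmd() {
    layout=$1
    case "$layout" in
        linear) opts="--type linear" ;;
        raid0)  opts="--type raid0 -i 2" ;;   # 2 stripes, one per PV
        raid1)  opts="--type raid1 -m 1" ;;   # 1 mirror, i.e. 2 copies
        *) echo "unknown layout: $layout" >&2; return 1 ;;
    esac
    echo "lvcreate $opts -L 100G -n lv_root vg_ml1 /dev/sda2 /dev/sdb2"
}

# The replacement described above: drop the raid0 LV, create a linear one.
echo "lvremove vg_ml1/lv_root"
lvcreate_cmd linear
```

Check the printed lines against lvcreate(8) before running them for real, since lvremove destroys whatever is on the LV.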

-

Re: Ubuntu 20.04 clean install fails with grub error

About the advice to use a bios boot area: I thought this wasn’t needed when using GPT. Should this bios boot area reside outside of LVM, and how is this different from installing grub to /dev/sda? Or why not put grub on the efi partition?

The old link is about mdadm. I’m using dm_raid which is part of (or used by) lvm.

-

Re: Ubuntu 20.04 clean install fails with grub error

Originally Posted by djerkg

About the advice to use a bios boot area: I thought this wasn’t needed when using GPT. Should this bios boot area reside outside of LVM, and how is this different from installing grub to /dev/sda? Or why not put grub on the efi partition?

The old link is about mdadm. I’m using dm_raid which is part of (or used by) lvm.

The "bios_grub" partition is for GPT drives when installing in BIOS/Legacy/CSM mode, something you DON’T want if installing in a modern machine, in UEFI mode, as it should be. All you need is the ESP (EFI System Partition).

There’s a lot of bad advice still floating around and sadly promoted by people who should know better.

-

Re: Ubuntu 20.04 clean install fails with grub error

That’s what I figured. The key seems to be that grub-probe can’t find a raid0 LVM logical volume. The path exists but grub just can’t see it. It throws "grub-probe: error: disk `lvmid/<vg-uuid>/<lv-uuid>' not found."
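To check which volume grub-probe is complaining about, the lvmid path can be split into its two UUIDs and compared with the VG UUID and LV UUID lines from vgdisplay and lvdisplay. A minimal sketch using plain shell parameter expansion; split_lvmid is a made-up helper name.

```shell
#!/bin/sh
# split_lvmid: hypothetical helper. Takes an "lvmid/<vg-uuid>/<lv-uuid>"
# string as printed by grub-probe and prints the two UUIDs on separate lines.
split_lvmid() {
    path=${1#lvmid/}                        # strip the leading "lvmid/"
    printf 'VG UUID: %s\n' "${path%%/*}"    # everything before the first "/"
    printf 'LV UUID: %s\n' "${path#*/}"     # everything after the first "/"
}

# The path from the update-grub2 error in this thread.
split_lvmid 'lvmid/16fyZ7-hrJe-UuEX-F0qj-dO3V-lGXO-T5cA3z/xahMGS-mUuh-nLwp-d0cN-Rdyd-fjlW-AySH7n'
```

For the error in this thread the two values are the VG UUID of vg_ml1 and the LV UUID of lv_root as listed in the vgdisplay and lvdisplay output earlier, so LVM itself knows the volume and only grub fails to resolve it.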

If I can fix this, then everything should start working. I wonder if I need to load a dm_raid module during installation. However, booting from a live disk, chrooting, and editing /etc/initramfs-tools/modules to add those modules doesn’t fix anything. Editing /etc/default/grub to add GRUB_PRELOAD_MODULES with raid0, raid1 and dm_raid doesn’t make any difference either.

-

Re: Ubuntu 20.04 clean install fails with grub error

As a side note, raid0 for swap appears to be overkill, as the kernel already stripes swap across two partitions when they are given the same priority.

-

Re: Ubuntu 20.04 clean install fails with grub error

I’ve simplified things to the point of just sda1 and sdb1 being efi partitions and a single lv for /. If this lv is raid0 then things break, even if I add an lv for /boot as raid1.

So the next thing to try was creating lv_root as raid1 instead of raid0. I noticed that at one point between live USB boots, when I landed at the grub> prompt, ls showed only the raid1 volumes and none of the raid0 ones. With raid1 I get a different error this time, about apt (a warning about potentially broken packages), but the system boots just fine.