I would like to read the tables on the following HTML page:

import pandas as pd

daily_info=pd.read_html('https://www.investing.com/earnings-calendar/',flavor='html5lib')

print(daily_info)

Unfortunately, I get:

urllib.error.HTTPError: HTTP Error 403: Forbidden

Is there any way to fix it?

asked Apr 24, 2017 at 14:00

JamesHudson81


Pretend to be a browser:

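import pandas as pd
import requests
from io import StringIO

url = 'https://www.investing.com/earnings-calendar/'

# Send browser-like headers instead of the default Python User-Agent
header = {
    "User-Agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/50.0.2661.75 Safari/537.36",
    "X-Requested-With": "XMLHttpRequest"
}

r = requests.get(url, headers=header)

# Wrap the HTML in StringIO: recent pandas versions deprecate passing literal HTML strings
dfs = pd.read_html(StringIO(r.text))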

Result:

In [201]: len(dfs)

Out[201]: 7

In [202]: dfs[0]

Out[202]:

0 1 2 3

0 NaN NaN NaN NaN

In [203]: dfs[1]

Out[203]:

Unnamed: 0 Company EPS / Forecast Revenue / Forecast.1 Market Cap Time

0 Monday, April 24, 2017 NaN NaN NaN NaN NaN NaN NaN

1 NaN Acadia (AKR) -- / 0.11 -- / -- 2.63B NaN

2 NaN Agree (ADC) -- / 0.39 -- / -- 1.34B NaN

3 NaN Alcoa (AA) -- / 0.53 -- / -- 5.84B NaN

4 NaN American Campus (ACC) -- / 0.27 -- / -- 6.62B NaN

5 NaN Ameriprise Financial (AMP) -- / 2.52 -- / -- 19.76B NaN

6 NaN Avacta Group (AVTG) -- / -- 1.26M / -- 47.53M NaN

7 NaN Bank of Hawaii (BOH) 1.2 / 1.08 165.8M / -- 3.48B NaN

8 NaN Bank of Marin (BMRC) 0.74 / 0.8 -- / -- 422.29M NaN

9 NaN Banner (BANR) -- / 0.68 -- / -- 1.82B NaN

10 NaN Barrick Gold (ABX) -- / 0.2 -- / -- 22.44B NaN

11 NaN Barrick Gold (ABX) -- / 0.28 -- / -- 30.28B NaN

12 NaN Berkshire Hills Bancorp (BHLB) -- / 0.54 -- / -- 1.25B NaN

13 NaN Brookfield Canada Office Properties (BOXC) -- / -- -- / -- NaN NaN

...

answered Apr 24, 2017 at 14:05


This is what I initially tried:

pd.read_html('https://www.redfin.com/home-trends/city/30749/NY/New-York')

But it throws this at me:

---------------------------------------------------------------------------

HTTPError Traceback (most recent call last)

<ipython-input-20-c20ce10f612c> in <module>()

31 'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36'

32 }

---> 33 pd.read_html('https://www.redfin.com/home-trends/city/30749/NY/New-York')

34

35

12 frames

/usr/lib/python3.7/urllib/request.py in http_error_default(self, req, fp, code, msg, hdrs)

647 class HTTPDefaultErrorHandler(BaseHandler):

648 def http_error_default(self, req, fp, code, msg, hdrs):

--> 649 raise HTTPError(req.full_url, code, msg, hdrs, fp)

650

651 class HTTPRedirectHandler(BaseHandler):

HTTPError: HTTP Error 403: Forbidden

I also tried using request headers to spoof the user agent like this:

req_headers = {

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',

'accept-encoding': 'gzip, deflate, br',

'accept-language': 'en-US,en;q=0.8',

'upgrade-insecure-requests': '1',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36'

}

pd.read_html('https://www.redfin.com/home-trends/city/30749/NY/New-York', header=req_headers)

But I get this (slightly different) exception:

---------------------------------------------------------------------------

HTTPError Traceback (most recent call last)

<ipython-input-19-6528c4149478> in <module>()

31 'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36'

32 }

---> 33 pd.read_html('https://www.redfin.com/home-trends/city/30749/NY/New-York', header=req_headers)

34

35

12 frames

/usr/lib/python3.7/urllib/request.py in http_error_default(self, req, fp, code, msg, hdrs)

647 class HTTPDefaultErrorHandler(BaseHandler):

648 def http_error_default(self, req, fp, code, msg, hdrs):

--> 649 raise HTTPError(req.full_url, code, msg, hdrs, fp)

650

651 class HTTPRedirectHandler(BaseHandler):

HTTPError: HTTP Error 403: Forbidden

I found this on Stack Overflow and tried the following solution:

import pandas as pd
from urllib.request import Request, urlopen

req = Request('https://www.redfin.com/home-trends/city/30749/NY/New-York', headers={'User-Agent': 'Mozilla/5.0'})
webpage = urlopen(req).read()

tables = pd.read_html('https://www.redfin.com/home-trends/city/30749/NY/New-York')
print(tables[0])

But alas, it still gives a 403 error at webpage = urlopen(req).read()

Does anyone know how to get around a 403 HTTP error when trying to read html using pandas?
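Two details in the attempts above explain why the headers never reach the server. The header argument of pd.read_html chooses which table row becomes the column header; it does not send HTTP headers, so pandas still requests the page with urllib's default User-Agent. And in the last attempt the page is downloaded into webpage, but pd.read_html is then given the URL again, so it makes a second request without the custom headers. A minimal sketch of the usual pattern fetches the page once with a browser-like User-Agent and passes the HTML itself to pandas. It assumes the site only checks the User-Agent; sites with stronger bot protection may still answer 403. build_request and read_tables are illustrative names, not part of any library.

```python
from io import StringIO
from urllib.request import Request, urlopen

import pandas as pd

# Browser-like User-Agent (assumption: the site only inspects this header).
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36"
)


def build_request(url):
    """Illustrative helper: a Request that carries a browser-like User-Agent."""
    return Request(url, headers={"User-Agent": BROWSER_UA})


def read_tables(url, timeout=10):
    """Illustrative helper: download the page ourselves, then let pandas parse it."""
    with urlopen(build_request(url), timeout=timeout) as resp:
        # Decode with the charset the server declares, falling back to UTF-8.
        charset = resp.headers.get_content_charset() or "utf-8"
        html = resp.read().decode(charset, errors="replace")
    # Pass the downloaded HTML, not the URL, so pandas does not make a
    # second request with urllib's default User-Agent.
    return pd.read_html(StringIO(html))
```

Usage: tables = read_tables('https://www.redfin.com/home-trends/city/30749/NY/New-York') followed by print(tables[0]). pd.read_html also needs an HTML parser such as lxml (or BeautifulSoup with html5lib) installed.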


The CSV file is downloadable. I can download the file and use read_csv, but I want to read it directly from the URL in Jupyter. I used the following code, but I get an HTTP 403 Forbidden error:

from io import StringIO

import pandas as pd

import requests

url="https://fineli.fi/fineli/en/elintarvikkeet/resultset.csv"

s=requests.get(url).text

c=pd.read_csv(StringIO(s))

c

How do I read the CSV file directly from the URL in Python, using «;» as the delimiter?

asked Apr 23, 2019 at 6:03

KHAN irfan


The problem is that the server at that URL doesn’t accept «non-browser» requests. The default User-Agent header sent by Python requests is

'User-Agent': 'python-requests/2.13.0'

You can pass your own headers as an argument, like this:

from io import StringIO

import pandas as pd

import requests

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/56.0.2924.76 Safari/537.36'}

url="https://fineli.fi/fineli/en/elintarvikkeet/resultset.csv"

s=requests.get(url, headers= headers).text

c=pd.read_csv(StringIO(s), sep=";")

c

answered Apr 23, 2019 at 6:28

Tasos

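If you are on pandas 1.2 or newer, the same header can be given to pd.read_csv directly: for HTTP(S) URLs, the key-value pairs in storage_options are forwarded to urllib.request.Request as request headers, so no separate requests call is needed. A sketch, assuming the User-Agent is the only thing the server checks (read_fineli_csv is an illustrative name):

```python
import pandas as pd

URL = "https://fineli.fi/fineli/en/elintarvikkeet/resultset.csv"

# For HTTP(S) URLs, pandas >= 1.2 sends these pairs as request headers.
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/56.0.2924.76 Safari/537.36"
    )
}


def read_fineli_csv(url=URL):
    # sep=";" because the file is semicolon-delimited, as stated in the question.
    return pd.read_csv(url, sep=";", storage_options=HEADERS)
```

df = read_fineli_csv() downloads and parses the file in one step. The file's text encoding is not stated in the question; if decoding fails, pass the correct encoding= argument as well.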


I read the file using the following code:

from urllib.request import urlopen, Request

headers = {"User-Agent": "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2228.0 Safari/537.3"}

reg_url = "https://fineli.fi/fineli/en/elintarvikkeet/resultset.csv"

req = Request(url=reg_url, headers=headers)

html = urlopen(req).read()

print(html)

answered Apr 23, 2019 at 6:27

KHAN irfan

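urlopen(req).read() returns the raw bytes of the file rather than a table. To get a DataFrame, the bytes can be wrapped in io.BytesIO and given to pd.read_csv with sep=";". The sketch below feeds in a small made-up sample instead of the downloaded bytes so that it runs offline; the column names are invented and the real file's columns will differ. csv_bytes_to_frame is an illustrative name.

```python
from io import BytesIO

import pandas as pd


def csv_bytes_to_frame(raw, sep=";", encoding="utf-8"):
    """Parse raw CSV bytes (e.g. the result of urlopen(req).read()) into a DataFrame."""
    return pd.read_csv(BytesIO(raw), sep=sep, encoding=encoding)


# Made-up stand-in for the downloaded bytes.
sample = b"id;name;energy\n1;apple;200\n2;pear;230\n"
df = csv_bytes_to_frame(sample)
print(df.shape)  # (2, 3)
```

With the answer's code, df = csv_bytes_to_frame(html) turns the downloaded bytes into a DataFrame; pass a different encoding if the file is not UTF-8.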

- The urllib Module in Python
- Check robots.txt to Prevent urllib HTTP Error 403 Forbidden Message
- Adding Cookie to the Request Headers to Solve urllib HTTP Error 403 Forbidden Message
- Use Session Object to Solve urllib HTTP Error 403 Forbidden Message

This article explains how to deal with the exception urllib.error.HTTPError: HTTP Error 403: Forbidden, which urllib.request raises (using the HTTPError class from urllib.error) when the server refuses access to a resource.

The urllib Module in Python

The urllib package handles URLs in Python over different protocols. It is popular with web scrapers who want to obtain data from a particular website.

urllib provides functions and classes for opening and reading URLs, parsing URLs, and parsing robots.txt files. It consists of four modules: urllib.request, urllib.error, urllib.parse, and urllib.robotparser.

Check robots.txt to Prevent urllib HTTP Error 403 Forbidden Message

When using the urllib.request module to talk to web servers, we may run into specific errors. One of them is the HTTP 403 error.

urllib raises urllib.error.HTTPError: HTTP Error 403: Forbidden while reading a URL when the server answers with status code 403. HTTP 403 Forbidden is a status code indicating that the server refuses to grant access to the requested resource.

In other words, when we see urllib.error.HTTPError: HTTP Error 403: Forbidden, the server has understood our request but refuses to process or authorize it.

To understand why a website is not processing our request, one file worth checking is robots.txt. Before scraping or otherwise automating access to a website, it is good practice to review this file to learn which parts of the site automated clients are asked to stay away from.

To check it on any website, we can follow the format below.

https://<website.com>/robots.txt

For example, check YouTube, Amazon, and Google robots.txt files.

https://www.youtube.com/robots.txt

https://www.amazon.com/robots.txt

https://www.google.com/robots.txt

Checking YouTube robots.txt gives the following result.

# robots.txt file for YouTube

# Created in the distant future (the year 2000) after

# the robotic uprising of the mid-'90s wiped out all humans.

User-agent: Mediapartners-Google*

Disallow:

User-agent: *

Disallow: /channel/*/community

Disallow: /comment

Disallow: /get_video

Disallow: /get_video_info

Disallow: /get_midroll_info

Disallow: /live_chat

Disallow: /login

Disallow: /results

Disallow: /signup

Disallow: /t/terms

Disallow: /timedtext_video

Disallow: /user/*/community

Disallow: /verify_age

Disallow: /watch_ajax

Disallow: /watch_fragments_ajax

Disallow: /watch_popup

Disallow: /watch_queue_ajax

Sitemap: https://www.youtube.com/sitemaps/sitemap.xml

Sitemap: https://www.youtube.com/product/sitemap.xml

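We can see many Disallow lines there. Each Disallow line names a path that the listed user agents are asked not to crawl. robots.txt itself is advisory and does not block anything, but a site that publishes these rules may also enforce them on the server, for example by answering with 403.

Other robots.txt files may also contain Allow lines, which reopen specific paths inside a disallowed area. In the YouTube file above, for example, /comment is disallowed for every user agent (User-agent: *), which includes requests made with the urllib module.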
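The standard library's urllib.robotparser can answer the same question in code. The sketch below parses a few of the YouTube rules quoted above from a string (so it runs offline) and asks whether a generic crawler ("*") may fetch two paths:

```python
from urllib.robotparser import RobotFileParser

# A subset of the YouTube robots.txt rules shown above.
ROBOTS_TXT = """\
User-agent: Mediapartners-Google*
Disallow:

User-agent: *
Disallow: /comment
Disallow: /login
Disallow: /watch_ajax
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

# /comment is disallowed for every agent; /watch matches no Disallow prefix.
print(rp.can_fetch("*", "https://www.youtube.com/comment"))        # False
print(rp.can_fetch("*", "https://www.youtube.com/watch?v=abc123"))  # True
```

Python's parser matches Disallow values as plain path prefixes, so wildcard rules such as /channel/*/community are not expanded.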

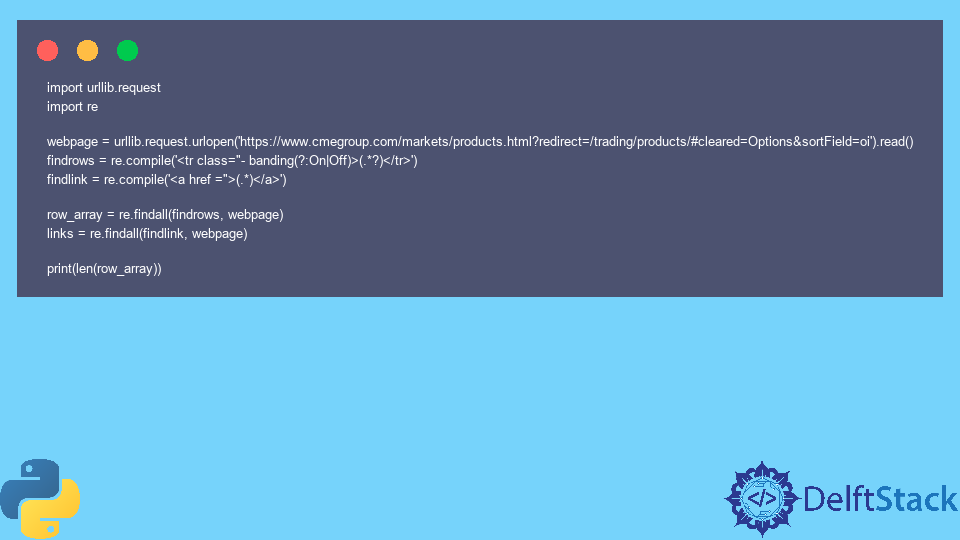

Let’s write code to scrape data from a website that returns an HTTP 403 error when accessed.

Example Code:

import urllib.request
import re

webpage = urllib.request.urlopen('https://www.cmegroup.com/markets/products.html?redirect=/trading/products/#cleared=Options&sortField=oi').read()

# read() returns bytes; decode before matching with str patterns
html = webpage.decode('utf-8', errors='replace')

findrows = re.compile('<tr class="- banding(?:On|Off)>(.*?)</tr>')
findlink = re.compile('<a href =">(.*)</a>')

row_array = re.findall(findrows, html)
links = re.findall(findlink, html)

print(len(row_array))

Output:

Traceback (most recent call last):
  File "c:\Users\akinl\Documents\Python\index.py", line 7, in <module>
    webpage = urllib.request.urlopen('https://www.cmegroup.com/markets/products.html?redirect=/trading/products/#cleared=Options&sortField=oi').read()
  File "C:\Python310\lib\urllib\request.py", line 216, in urlopen
    return opener.open(url, data, timeout)
  File "C:\Python310\lib\urllib\request.py", line 525, in open
    response = meth(req, response)
  File "C:\Python310\lib\urllib\request.py", line 634, in http_response
    response = self.parent.error(
  File "C:\Python310\lib\urllib\request.py", line 563, in error
    return self._call_chain(*args)
  File "C:\Python310\lib\urllib\request.py", line 496, in _call_chain
    result = func(*args)
  File "C:\Python310\lib\urllib\request.py", line 643, in http_error_default
    raise HTTPError(req.full_url, code, msg, hdrs, fp)
urllib.error.HTTPError: HTTP Error 403: Forbidden

The reason is that the server forbids our access. If we check the site's robots.txt file, we notice that https://www.cmegroup.com/markets/ is not covered by a Disallow rule. However, further down the robots.txt file of the site we want to scrape, we find the following:

User-agent: Python-urllib/1.17

Disallow: /

The above rule asks the user agent named Python-urllib not to crawl any URL within the site; in other words, the site does not want to be crawled with Python's urllib module.

Therefore, check or parse robots.txt to learn which resources we may access. The RobotFileParser class in the urllib.robotparser module can parse robots.txt files. Checking the rules first helps our code avoid requests that would end in urllib.error.HTTPError: HTTP Error 403: Forbidden.
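A minimal sketch of checking robots.txt before sending the actual request. fetch_if_allowed and robots_url_for are illustrative names; raising PermissionError when the rules disallow a URL is a design choice for this sketch, not a urllib convention. The optional rp parameter accepts an already-parsed RobotFileParser, which also makes the function testable offline.

```python
from urllib.parse import urljoin
from urllib.request import Request, urlopen
from urllib.robotparser import RobotFileParser

USER_AGENT = "Mozilla/5.0"  # assumption: the agent string you actually send


def robots_url_for(url):
    """Build https://<host>/robots.txt for any page URL on that host."""
    return urljoin(url, "/robots.txt")


def fetch_if_allowed(url, user_agent=USER_AGENT, rp=None, timeout=10):
    """Illustrative helper: consult robots.txt, then request the page.

    Raises PermissionError before sending any page request if the rules
    disallow the URL for this user agent.
    """
    if rp is None:
        rp = RobotFileParser(robots_url_for(url))
        rp.read()  # downloads and parses robots.txt
    if not rp.can_fetch(user_agent, url):
        raise PermissionError(f"robots.txt disallows {url} for {user_agent!r}")
    req = Request(url, headers={"User-Agent": user_agent})
    with urlopen(req, timeout=timeout) as resp:
        return resp.read()
```

Note that rp.read() downloads robots.txt with urllib's default User-Agent. If that request is itself answered with 401 or 403, RobotFileParser treats the whole site as disallowed, so this helper refuses to fetch anything from it.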

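Adding Cookie to the Request Headers to Solve urllib HTTP Error 403 Forbidden Message

Passing a valid user agent as a header parameter often fixes the problem. However, the website may also use cookies as an anti-scraping measure.

Such a website sets cookies and expects them to be echoed back with later requests in order to keep out scrapers (scraping may also be against its policy). In that case, send the cookie along with the other headers: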

from urllib.request import Request, urlopen

def get_page_content(url, head):
    # Attach the browser-like headers (including the cookie) to the request
    req = Request(url, headers=head)
    return urlopen(req)

url = 'https://example.com'

head = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/99.0.4844.84 Safari/537.36',
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    'Accept-Charset': 'ISO-8859-1,utf-8;q=0.7,*;q=0.3',
    # urllib does not decompress gzip or br bodies, so ask for an uncompressed response
    'Accept-Encoding': 'identity',
    'Accept-Language': 'en-US,en;q=0.8',
    # note: urllib.request replaces this with 'Connection: close'
    'Connection': 'keep-alive',
    'Referer': 'https://example.com',
    # placeholder: copy the real cookie value from your browser's developer tools
    'Cookie': 'your cookie value (you can get it from your web page)',
}

data = get_page_content(url, head).read()
print(data)

Output:

b'<!doctype html>\n<html>\n<head>\n <title>Example Domain</title>\n\n <meta
...
<p><a href="https://www.iana.org/domains/example">More information...</a></p>\n</div>\n</body>\n</html>\n'
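A cookie copied from the browser eventually expires. When the site simply sets a cookie on one response and expects it back on the next, an opener built with http.cookiejar.CookieJar can store and resend it automatically. A minimal sketch, assuming one round trip is enough to satisfy the site (make_cookie_opener is an illustrative name):

```python
from http.cookiejar import CookieJar
from urllib.request import HTTPCookieProcessor, build_opener


def make_cookie_opener(user_agent="Mozilla/5.0"):
    """Build an opener that stores cookies from responses and resends them."""
    jar = CookieJar()
    opener = build_opener(HTTPCookieProcessor(jar))
    # Replace urllib's default "Python-urllib/x.y" User-Agent.
    opener.addheaders = [("User-Agent", user_agent)]
    return opener, jar


opener, jar = make_cookie_opener()
# Usage (needs network): the first call collects cookies, the second sends them back.
# opener.open("https://example.com").read()
# opener.open("https://example.com").read()
```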


Use Session Object to Solve urllib HTTP Error 403 Forbidden Message

Sometimes, even a browser User-Agent won’t stop this error. In that case, try a Session object from the requests library, which keeps cookies and connection settings across requests:

import requests

url = "https://stackoverflow.com/search?q=html+error+403"

# A Session keeps cookies and connection settings across requests
session_obj = requests.Session()
response = session_obj.get(url, headers={"User-Agent": "Mozilla/5.0"})

print(response.status_code)
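A Session also lets you set the headers once instead of on every call; they are then sent with each request, together with any cookies the server has set. A sketch with assumed header values (make_session is an illustrative name):

```python
import requests

# Assumed browser-like values; adjust them to what the target site expects.
BROWSER_HEADERS = {
    "User-Agent": "Mozilla/5.0",
    "Accept-Language": "en-US,en;q=0.8",
}


def make_session(headers=BROWSER_HEADERS):
    """Session that sends the same headers on every request and keeps cookies."""
    session = requests.Session()
    session.headers.update(headers)
    return session


session = make_session()
# Usage (needs network):
# response = session.get("https://stackoverflow.com/search?q=html+error+403", timeout=10)
# response.raise_for_status()  # raises requests.HTTPError if the answer is still 403
```

raise_for_status() turns a 403 into an exception, which is harder to overlook than a status code that nobody checks.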


This article covered the causes of urllib.error.HTTPError: HTTP Error 403: Forbidden and ways to handle it. The error is commonly triggered by server-side security modules such as mod_security, which websites use to tell human visitors apart from automated clients (bots).


Python 3.6.9 (default, Apr 18 2020, 01:56:04)
Type "copyright", "credits" or "license" for more information.

IPython 5.5.0 -- An enhanced Interactive Python.
?         -> Introduction and overview of IPython's features.
%quickref -> Quick reference.
help      -> Python's own help system.
object?   -> Details about 'object', use 'object??' for extra details.

In [1]: import datapackage

In [2]: import pandas

In [3]: package = datapackage.Package('https://datahub.io/JohnSnowLabs/populatio
   ...: n-figures-by-country/datapackage.json')

In [4]: pandas.read_csv(package.resources[4].descriptor['path'])
---------------------------------------------------------------------------
HTTPError                                 Traceback (most recent call last)
<ipython-input-4-4a930361f83a> in <module>()
----> 1 pandas.read_csv(package.resources[4].descriptor['path'])

/home/kranz/.local/lib/python3.6/site-packages/pandas/io/parsers.py in parser_f(filepath_or_buffer, sep, delimiter, header, names, index_col, usecols, squeeze, prefix, mangle_dupe_cols, dtype, engine, converters, true_values, false_values, skipinitialspace, skiprows, skipfooter, nrows, na_values, keep_default_na, na_filter, verbose, skip_blank_lines, parse_dates, infer_datetime_format, keep_date_col, date_parser, dayfirst, cache_dates, iterator, chunksize, compression, thousands, decimal, lineterminator, quotechar, quoting, doublequote, escapechar, comment, encoding, dialect, error_bad_lines, warn_bad_lines, delim_whitespace, low_memory, memory_map, float_precision)
    674         )
    675
--> 676         return _read(filepath_or_buffer, kwds)
    677
    678     parser_f.__name__ = name

/home/kranz/.local/lib/python3.6/site-packages/pandas/io/parsers.py in _read(filepath_or_buffer, kwds)
    429     # See https://github.com/python/mypy/issues/1297
    430     fp_or_buf, _, compression, should_close = get_filepath_or_buffer(
--> 431         filepath_or_buffer, encoding, compression
    432     )
    433     kwds["compression"] = compression

/home/kranz/.local/lib/python3.6/site-packages/pandas/io/common.py in get_filepath_or_buffer(filepath_or_buffer, encoding, compression, mode)
    170
    171     if isinstance(filepath_or_buffer, str) and is_url(filepath_or_buffer):
--> 172         req = urlopen(filepath_or_buffer)
    173         content_encoding = req.headers.get("Content-Encoding", None)
    174         if content_encoding == "gzip":

/home/kranz/.local/lib/python3.6/site-packages/pandas/io/common.py in urlopen(*args, **kwargs)
    139     import urllib.request
    140
--> 141     return urllib.request.urlopen(*args, **kwargs)
    142
    143

/usr/lib/python3.6/urllib/request.py in urlopen(url, data, timeout, cafile, capath, cadefault, context)
    221     else:
    222         opener = _opener
--> 223     return opener.open(url, data, timeout)
    224
    225 def install_opener(opener):

/usr/lib/python3.6/urllib/request.py in open(self, fullurl, data, timeout)
    530         for processor in self.process_response.get(protocol, []):
    531             meth = getattr(processor, meth_name)
--> 532             response = meth(req, response)
    533
    534         return response

/usr/lib/python3.6/urllib/request.py in http_response(self, request, response)
    640         if not (200 <= code < 300):
    641             response = self.parent.error(
--> 642                 'http', request, response, code, msg, hdrs)
    643
    644         return response

/usr/lib/python3.6/urllib/request.py in error(self, proto, *args)
    568         if http_err:
    569             args = (dict, 'default', 'http_error_default') + orig_args
--> 570             return self._call_chain(*args)
    571
    572     # XXX probably also want an abstract factory that knows when it makes

/usr/lib/python3.6/urllib/request.py in _call_chain(self, chain, kind, meth_name, *args)
    502         for handler in handlers:
    503             func = getattr(handler, meth_name)
--> 504             result = func(*args)
    505             if result is not None:
    506                 return result

/usr/lib/python3.6/urllib/request.py in http_error_default(self, req, fp, code, msg, hdrs)
    648 class HTTPDefaultErrorHandler(BaseHandler):
    649     def http_error_default(self, req, fp, code, msg, hdrs):
--> 650         raise HTTPError(req.full_url, code, msg, hdrs, fp)
    651
    652 class HTTPRedirectHandler(BaseHandler):

HTTPError: HTTP Error 403: Forbidden

urllib.error.HTTPError: HTTP Error 403: Forbidden occurs when you try to scrape a webpage using the urllib.request module and a server-side security layer such as mod_security blocks the request. There are several reasons why you might get this error; let’s look at each case in detail.

Websites are often protected by application gateways, web application firewall (WAF) rules, and similar layers that check whether requests come from real users or from automated bots. mod_security or the WAF rule blocks the requests it classifies as spider/bot traffic. Such protections are a standard defense against abuse, including DDoS attacks on the server.

Coming back to the error: when you make a request with urllib.request without setting any headers, urllib sends a default User-Agent of the form Python-urllib/3.x (for example, Python-urllib/3.10), which mod_security easily detects.

mod_security is often configured to block any request that lacks a valid, browser-like User-Agent header and to answer with status 403, which urllib reports as urllib.error.HTTPError: HTTP Error 403: Forbidden.
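You can see which User-Agent urllib would send, without making any request, by inspecting a fresh opener:

```python
import sys
import urllib.request

# A fresh OpenerDirector carries the default headers urllib adds to every request.
default_headers = urllib.request.build_opener().addheaders
print(default_headers)  # e.g. [('User-agent', 'Python-urllib/3.12')], depending on your Python

# The version part comes from the running interpreter's major.minor version.
expected = "Python-urllib/%d.%d" % sys.version_info[:2]
print(dict(default_headers)["User-agent"] == expected)  # True
```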

Example of a 403 Forbidden error

from urllib.request import Request, urlopen

req = Request('http://www.cmegroup.com/')
webpage = urlopen(req).read()

Output:

  File "C:\Users\user\AppData\Local\Programs\Python\Python39\lib\urllib\request.py", line 494, in _call_chain
    result = func(*args)
urllib.error.HTTPError: HTTP Error 403: Forbidden

The easy way to resolve the error is to pass a valid User-Agent as a header parameter, as shown below.

from urllib.request import Request, urlopen

req = Request('https://www.yahoo.com', headers={'User-Agent': 'Mozilla/5.0'})

webpage = urlopen(req).read()

It is also a good idea to set a timeout in case the website does not respond at all. If no response arrives within the timeout period, urlopen raises an exception (a socket timeout, possibly wrapped in urllib.error.URLError) instead of waiting indefinitely.

from urllib.request import Request, urlopen

req = Request('http://www.cmegroup.com/', headers={'User-Agent': 'Mozilla/5.0'})

webpage = urlopen(req,timeout=10).read()
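A 403 and a timeout surface as different exceptions: urlopen raises urllib.error.HTTPError for the 403, and a URLError (or a bare socket timeout) when the server does not answer in time. Because HTTPError is a subclass of URLError, it has to be checked first. A sketch; classify_error and fetch are illustrative names, and printing instead of re-raising is just one possible policy:

```python
import socket
from urllib.error import HTTPError, URLError
from urllib.request import Request, urlopen


def classify_error(exc):
    """Illustrative helper: map urllib failures to a short description."""
    # HTTPError subclasses URLError, so it must be checked first.
    if isinstance(exc, HTTPError):
        return f"HTTP {exc.code}"
    if isinstance(exc, (URLError, socket.timeout)):
        return "no usable response (network error or timeout)"
    return "unexpected error"


def fetch(url, timeout=10):
    """Return the page body, or None after printing why the request failed."""
    req = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    try:
        with urlopen(req, timeout=timeout) as resp:
            return resp.read()
    except (URLError, socket.timeout) as exc:  # also catches HTTPError
        print(f"{url}: {classify_error(exc)}")
        return None
```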

In some cases, such as polling a real-time bitcoin price or stock quote every second, the server may block an IP address that sends too many requests and respond with a 403 error.

If you get this error because of too many requests, add a delay between requests.
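A minimal sketch of spacing out requests. fetch_with_delay is an illustrative name; fetch is any function that takes a URL and returns its content (for example, one wrapping urlopen), and the one-second default delay is an arbitrary choice, since the acceptable rate is site-specific:

```python
import time


def fetch_with_delay(urls, fetch, delay=1.0, sleep=time.sleep):
    """Fetch each URL in turn, pausing `delay` seconds between requests.

    `sleep` is injectable so the pacing can be checked without waiting.
    """
    results = []
    for i, url in enumerate(urls):
        if i:  # no pause before the first request
            sleep(delay)
        results.append(fetch(url))
    return results
```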

Srinivas Ramakrishna is a Solution Architect and has 14+ Years of Experience in the Software Industry. He has published many articles on Medium, Hackernoon, dev.to and solved many problems in StackOverflow. He has core expertise in various technologies such as Microsoft .NET Core, Python, Node.JS, JavaScript, Cloud (Azure), RDBMS (MSSQL), React, PowerShell, etc.


I'm trying to get data from the tables on a particular esports website, and I seem to be struggling.

I was told that the pandas library could help me do this in just a few lines.

import pandas as pd
tables = pd.read_html('https://www.hltv.org/stats/teams/matches/5752/Cloud9')

print(tables[0])

I've been trying to modify this to make it work, but without success.

import pandas as pd
from urllib.request import Request, urlopen

req = Request('https://www.hltv.org/stats/teams/matches/5752/Cloud9', headers={'User-Agent': 'Mozilla/5.0'})

webpage = urlopen(req).read()
tables = pd.read_html('https://www.hltv.org/stats/teams/matches/5752/Cloud9')

print(tables[0])

I was fairly sure this was the solution I was looking for, or something close to it, but when I try to solve the problem this way, I still have no success:

Traceback (most recent call last):
  File "C:\Users\antho\OneDrive\Documents\Python\tables clloud9.py", line 6, in <module>
    webpage = urlopen(req).read()
  File "C:\Users\antho\AppData\Local\Programs\Python\Python37-32\lib\urllib\request.py", line 222, in urlopen
    return opener.open(url, data, timeout)
  File "C:\Users\antho\AppData\Local\Programs\Python\Python37-32\lib\urllib\request.py", line 531, in open
    response = meth(req, response)
  File "C:\Users\antho\AppData\Local\Programs\Python\Python37-32\lib\urllib\request.py", line 641, in http_response
    'http', request, response, code, msg, hdrs)
  File "C:\Users\antho\AppData\Local\Programs\Python\Python37-32\lib\urllib\request.py", line 569, in error
    return self._call_chain(*args)
  File "C:\Users\antho\AppData\Local\Programs\Python\Python37-32\lib\urllib\request.py", line 503, in _call_chain
    result = func(*args)
  File "C:\Users\antho\AppData\Local\Programs\Python\Python37-32\lib\urllib\request.py", line 649, in http_error_default
    raise HTTPError(req.full_url, code, msg, hdrs, fp)
urllib.error.HTTPError: HTTP Error 403: Forbidden

All I want at this point is to pull the table from that link.