#1, by smac96, Tuesday, 16. January 2018, 11:00

Hi,

What could be the problem if the game doesn't start at all and the stderr.txt file contains this: "libpng error: IHDR: CRC error"?

Thanks for any help!

(Win 10 64 bit, Visionaire 3.7)

#2, by sebastian, Tuesday, 16. January 2018, 12:16

I know this error from another piece of software…

It's mostly caused by invalid PNG images (color profiles and the like).

In the case of VS I'm not sure, though.

#3, by smac96, Tuesday, 16. January 2018, 12:23

Maybe it's not a Visionaire error but instead a conflict with another software or hardware component?

#4, by SimonS, Tuesday, 16. January 2018, 12:40

Visionaire 3.7 has a very old libpng, so you need to set the generated PNGs to maximum compatibility.

A PNG has a header part with a checksum; if the checksum doesn't match, you get errors. It's also possible that the PNG uses a newer standard that the old libpng doesn't understand.

#5, by smac96, Tuesday, 16. January 2018, 12:47

Thanks SimonS!

#6, by smac96, Tuesday, 16. January 2018, 12:53

Do you know why only one player has had this issue so far and the others haven't?

#7, by SimonS, Tuesday, 16. January 2018, 12:57

Maybe the files were corrupted while downloading. Steam has an option to verify the files.

#8, by smac96, Tuesday, 16. January 2018, 13:22

Thanks!

#9, by Lebostein, Tuesday, 23. January 2018, 12:57

@smac (Stefano Maccarinelli?): Have you considered updating to Visionaire 4? Visionaire 3.x was very sluggish, memory-hungry and error-prone, and had very long load and save times. It dates from 2012, so problems with current operating systems like Windows 10 are possible. Visionaire 4 was rewritten; it runs much faster and is more powerful than Visionaire 3.x.

#10, by smac96, Tuesday, 23. January 2018, 13:11

Hi! Yes, we have considered it and we will probably do it. We have already bought the license. It will of course take a lot of time to re-test everything because the game is huge, but the main problem is that moving to a newer version of Visionaire will make the current savegames incompatible. If that's not a problem for the players, I think it will be OK to do it.

Hundreds of people have played or are playing the game, and so far only very few of them have reported major problems to us directly. Unfortunately, one of the players who had big issues was a reviewer for an important website for point-and-click lovers, and the score she gave us really hurts! Of course she had every right to write about what happened to her and to let other players know that they might have problems too. This is important for us. Luckily the majority of reviews are positive and we're very happy about that! Still, after so many years of hard work and huge sacrifices for a 2-person studio, it's very sad and frustrating to receive criticism that is mostly due to the old, buggy 3.7 game engine.

#11, by smac96, Tuesday, 23. January 2018, 15:04

After some tests with version 4.2.5… I think we could update the game sooner than expected and let the players decide which version to play.


Доброго времени суток!

Вам когда-нибудь хотелось узнать как устроены файлы PNG? Нет? А я все равно расскажу.

Формат PNG(Portable Network Graphics) был изобретен в 1995 году, чтобы стать заменой GIF, а уже в 1996, с выходом версии 1.0, он был рекомендован W3C, в качестве полноправного сетевого формата. На сегодняшний день PNG является одним из основных форматов веб-графики.

Под катом вы найдете общее описание строения PNG-файла, некоторое количество картинок-схем, препарирование

Общее строение

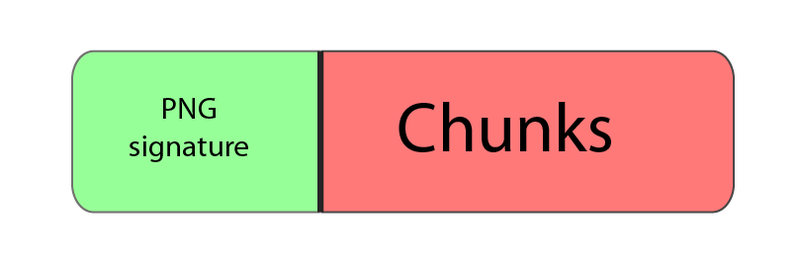

Структура PNG в самом общем виде представлена на следующем рисунке.

То есть файл состоит из подписи и некоторого количества блоков(чанков, chunks), каждый из которых несет в себе некоторую информацию (спасибо КО!). Но почему подпись нельзя считать одним из чанков? Давайте разберемся поподробнее.

Подпись файла

Подпись PNG-файла всегда одинакова, состоит из 8 байт, и представляет собой (в hex-записи)

89 50 4E 47 0D 0A 1A 0A

Что же это означает?

- 89 — non-ASCII символ. Препятствует распознаванию PNG, как текстового файла, и наоборот.

- 50 4E 47 — PNG в ASCII записи.

- 0D 0A — CRLF (Carriage-return, Line-feed), DOS-style перевод строки.

- 1A — останавливает вывод файла в DOS режиме (end-of-file), чтобы вам не вываливалось многокилобайтное изображение в текстовом виде.

- 0A — LF, Unix-style перевод строки.
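The byte-by-byte breakdown above can be checked with a few lines of Python. This is only a minimal sketch: the names `PNG_SIGNATURE` and `has_png_signature` are made up for illustration and do not come from the article or from any library.

```python
# The fixed 8-byte signature described above, written as hex.
PNG_SIGNATURE = bytes.fromhex("89504E470D0A1A0A")


def has_png_signature(data: bytes) -> bool:
    """Return True if the data starts with the 8-byte PNG signature."""
    return data[:8] == PNG_SIGNATURE


# Bytes 1..3 of the signature are simply the ASCII letters "PNG".
print(PNG_SIGNATURE[1:4])                          # b'PNG'
print(has_png_signature(PNG_SIGNATURE + b"..."))   # True
print(has_png_signature(b"GIF89a"))                # False
```

In practice you would read the first 8 bytes of a file opened in binary mode and pass them to the function.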

Chunks

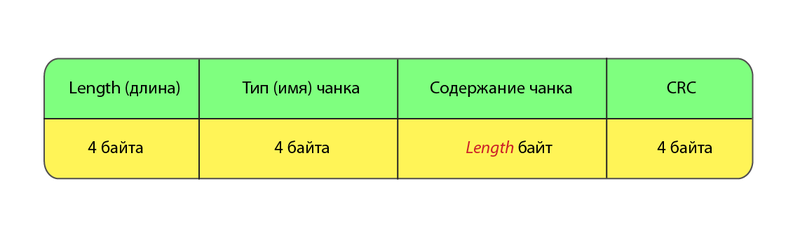

Чанки — это блоки данных, из которых состоит файл. Каждый чанк состоит из 4 секций.

Разберем эти секции по порядку.

Длина

Ну, с длиной вроде все ясно. Просто числовое значение длины блока данных.

Тип (имя)

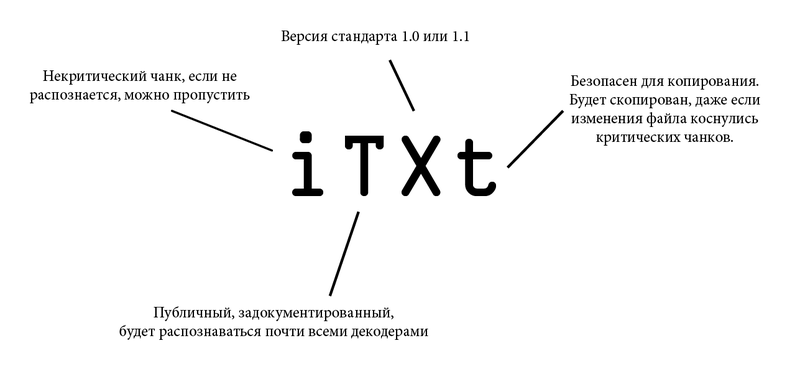

С типом немного поинтересней. Тип представляет собой 4 чувствительных к регистру ASCII-символа. Регистры символов (пятый бит в числовой записи символа) в имени чанка различаются неспроста — это флаги, которые сообщают декодеру некоторую дополнительную информацию.

- Регистр первого символа определяет является ли данный чанк критическим(верхний регистр) или вспомогательным(нижний регистр). Критические чанки должны распознаваться каждым декодером. Если декодер встречает критический чанк, тип которого не может распознать, он обязан завершить выполнение с ошибкой.

- Регистр второго символа задает «публичность»(верхний регистр) или «приватность»(нижний регистр) чанка. «Публичные» чанки — официальные, задокументированные, распознаваемые большинством декодеров. Но если вдруг вам для каких-то своих нужд понадобится кодировать специфическую информацию, то просто в имени чанка сделайте второй символ маленьким.

- Регистр третьего символа оставлен для будущих свершений. Предполагается, что он будет использоваться для дифференциации различных версий стандарта. Для версий 1.0 и 1.1 третий символ должен быть большим. Если он (внезапно!) оказался маленьким, все нынешние декодеры должны поступать с чанком, так же как и с любым другим не распознанным (то есть выходить с ошибкой если чанк критический, или пропускать в противном случае).

- Регистр же четвертого символа означает возможность копирования данного чанка редакторами, которые не могут его распознать. Если регистр нижний, чанк может быть скопирован, вне зависимости от степени модификации файла, иначе (верхний регистр) он копируется только в случае, когда при модификации не были затронуты никакие критические чанки.

Для лучшего понимания, давайте разберем флаги на примере чанка, содержащего текст.
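As a rough illustration of the four flags, here is a sketch that decodes them for the text chunk `tEXt` and, for contrast, for `IHDR`. The function name `chunk_flags` and the dictionary keys are hypothetical and chosen only for this example; the only fact relied on is that bit 5 (value 0x20) of an ASCII letter is set when the letter is lowercase.

```python
def chunk_flags(chunk_type: str) -> dict:
    """Decode the four case flags of a 4-letter PNG chunk type."""
    if len(chunk_type) != 4 or not (chunk_type.isascii() and chunk_type.isalpha()):
        raise ValueError("a chunk type must be exactly 4 ASCII letters")
    # Bit 5 (0x20) of each character is 1 for lowercase, 0 for uppercase.
    lower = [bool(ord(c) & 0x20) for c in chunk_type]
    return {
        "critical": not lower[0],      # uppercase first letter
        "public": not lower[1],        # uppercase second letter
        "reserved_ok": not lower[2],   # third letter must be uppercase
        "safe_to_copy": lower[3],      # lowercase fourth letter
    }


print(chunk_flags("tEXt"))
print(chunk_flags("IHDR"))
```

For `tEXt` this reports an ancillary, public chunk that is safe to copy; for `IHDR` it reports a critical, public chunk that is not safe to copy.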

Below is a list of chunk types with brief explanations.

Critical chunks

- IHDR: the file header; contains basic information about the image. Must be the first chunk.

- PLTE: the palette, a list of colors.

- IDAT: contains the image itself. The image can be split across several IDAT chunks for streaming. Every file must contain at least one IDAT chunk.

- IEND: the terminating chunk; must be the last chunk in the file.

Ancillary chunks

- bKGD: sets the default background color.

- cHRM: specifies the primary chromaticities and white point in CIE 1931 coordinates.

- gAMA: defines the gamma.

- hIST: may store a histogram, that is, the overall amount of each color in the image.

- iCCP: an ICC color profile.

- iTXt: contains UTF-8 text, optionally compressed, with an optional language tag. An iTXt chunk with the keyword 'XML:com.adobe.xmp' may contain Extensible Metadata Platform (XMP) data.

- pHYs: contains the intended pixel size and/or the aspect ratio of the image.

- sBIT (significant bits): records the original number of significant bits per sample (the "color accuracy" of the image), to make decoding simpler.

- sPLT: suggests a palette to use if the full range of colors is unavailable.

- sRGB: indicates that the standard sRGB color space is used.

- sTER: an indicator for stereoscopic images.

- tEXt: may contain text in ISO/IEC 8859-1, with one name=value pair per chunk.

- tIME: stores the date of the last modification of the image.

- tRNS: contains transparency information.

- zTXt: compressed text, with the same restrictions as tEXt.

More detailed information can be found in the specification.

CRC

A CRC-32 checksum. By the way, there was a topic the other day about computing it on Windows.

Minimal PNG

We have sorted out the general structure. Now let's look at the contents of the mandatory chunks. But which chunks are mandatory (as opposed to critical: critical chunks must be recognised by the decoder, not present in every file), and what does a minimal PNG file look like? Like this:

IHDR

The data block of IHDR contains the following fields:

- Width, 4 bytes

- Height, 4 bytes

- Bit depth: the number of bits per sample (not per pixel), 1 byte

- Color type: made up of 3 flags, 1 (a palette is used), 2 (color is used, i.e. the image is not monochrome), and 4 (an alpha channel is present), 1 byte

- Compression method. At present only the value 0 is available, meaning deflate compression. If the value is anything other than 0, the chunk is treated as unrecognised and the decoder reports an error. 1 byte

- Filter method. As with compression, at present it can only be zero. 1 byte

- Interlace method. Determines the order in which the data is transmitted. At present 2 values are available: 0 (no interlace) and 1 (Adam7 interlace). 1 byte
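The field list above maps directly onto a 13-byte big-endian record, so it can be unpacked with Python's `struct` module. The sketch below assumes you already have the 13 data bytes of the IHDR chunk in hand; `parse_ihdr` and the dictionary keys are illustrative names, not part of any library.

```python
import struct


def parse_ihdr(data: bytes) -> dict:
    """Unpack the 13-byte IHDR data block into its seven fields."""
    if len(data) != 13:
        raise ValueError("IHDR data must be exactly 13 bytes")
    # Two 4-byte unsigned integers followed by five single bytes, big-endian.
    (width, height, bit_depth, color_type,
     compression, filter_method, interlace) = struct.unpack(">IIBBBBB", data)
    return {
        "width": width,
        "height": height,
        "bit_depth": bit_depth,
        "color_type": color_type,
        "uses_palette": bool(color_type & 1),
        "uses_color": bool(color_type & 2),
        "has_alpha": bool(color_type & 4),
        "compression": compression,
        "filter_method": filter_method,
        "interlace": interlace,
    }


# Example: a 1000 x 562 image, 8 bits per sample, color type 6 (color + alpha).
example = struct.pack(">IIBBBBB", 1000, 562, 8, 6, 0, 0, 0)
print(parse_ihdr(example))
```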

Adam7 interlacing is nicely illustrated by a picture from Wikipedia (yes, a GIF in an article about PNG):

IEND

Signals the end of the file; the data block of this chunk is empty.

IDAT

Contains the image data, encoded according to the compression method field in the header. The decoding algorithm is beyond the scope of this article (though if there is interest it may appear in a follow-up), but it is described fairly well (and in Russian) here.
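To tie the three mandatory chunks together, here is a sketch that assembles a tiny but complete PNG in memory. The image parameters (1 x 1 pixel, 1-bit greyscale, a single black pixel) and the helper names `make_chunk` and `minimal_png` are assumptions made for this example only.

```python
import struct
import zlib

PNG_SIGNATURE = bytes.fromhex("89504E470D0A1A0A")


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Build one chunk: length, type, data, CRC-32 over type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def minimal_png() -> bytes:
    """Return the bytes of a 1x1, 1-bit greyscale PNG (assumed example image)."""
    # width=1, height=1, bit depth=1, color type=0, compression=0, filter=0, interlace=0
    ihdr = struct.pack(">IIBBBBB", 1, 1, 1, 0, 0, 0, 0)
    # One scanline: filter-type byte 0, then one byte holding the single pixel.
    idat = zlib.compress(b"\x00\x00")
    return (PNG_SIGNATURE
            + make_chunk(b"IHDR", ihdr)
            + make_chunk(b"IDAT", idat)
            + make_chunk(b"IEND", b""))


png = minimal_png()
print(len(png), png[-12:].hex())
```

Writing `png` to a file opened in binary mode should give an image that ordinary viewers can open.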

Thus, the simplest PNG file (taking as an example

Conclusion

In writing this article, my aim was to give the reader a general understanding of the structure of a PNG file; for a deeper understanding, reading the specifications is recommended.

Habr topic on the structure of JPEG: habrahabr.ru/blogs/algorithm/102521

Habr topic on the structure of GIF: habrahabr.ru/blogs/algorithm/127083

Thank you for your attention; I will be glad to hear any criticism!

File Structure

A PNG file always starts with a fixed sequence of header bytes; the rest of the file consists of three or more PNG chunks in a specific order.

File header: 89 50 4E 47 0D 0A 1A 0A + chunk + chunk + chunk…

Chunks

PNG defines two kinds of chunks: critical chunks, which are the standard chunks, and ancillary chunks, which are optional. There are four critical chunk types (IHDR, PLTE, IDAT, and IEND), and every PNG reader must support them. IHDR, IDAT, and IEND must appear in every PNG file.

| Chunk Characters | Chunk Name | Multiple Chunks | Optional | Position Limitation |
|---|---|---|---|---|
| IHDR | Image Header | False | False | First Chunk |
| cHRM | Primary Chromaticities and White Point | False | True | Before PLTE and IDAT |
| gAMA | Image Gamma | False | True | Before PLTE and IDAT |
| sBIT | Significant Bits | False | True | Before PLTE and IDAT |
| PLTE | Palette | False | True | Before IDAT |
| bKGD | Background Color | False | True | After PLTE, before IDAT |
| hIST | Image Histogram | False | True | After PLTE, before IDAT |
| tRNS | Transparency | False | True | After PLTE, before IDAT |
| oFFs | Image Offset | False | True | Before IDAT |
| pHYs | Physical Pixel Dimensions | False | True | Before IDAT |
| sCAL | Physical Scale | False | True | Before IDAT |
| IDAT | Image Data | True | False | Consecutive with Other IDATs |
| tIME | Image Last Modification Time | False | True | No limit |
| tEXt | Textual Data | True | True | No limit |
| zTXt | Compressed Textual Data | True | True | No limit |
| fRAc | Fractal Parameters | True | True | No limit |
| gIFg | GIF Graphic Control Extension | True | True | No limit |
| gIFt | GIF Plain Text Extension | True | True | No limit |
| gIFx | GIF Application Extension | True | True | No limit |
| IEND | Image Trailer | False | False | End of PNG Data Stream |

Every chunk has the same structure, made up of four parts:

| Name | Length in Bytes | Description |
|---|---|---|
| Length | 4 bytes | Length of the chunk's data field, which must not exceed 2^31-1 bytes |
| Chunk Type Code | 4 bytes | Consists of ASCII letters (A-Z and a-z) |
| Chunk Data | Variable | Stores the data specified by the Chunk Type Code |
| CRC | 4 bytes | Stores the Cyclic Redundancy Check value |

The CRC (Cyclic Redundancy Check) value is calculated over the Chunk Type Code and the Chunk Data; the Length field is not included.

For more details, see PNG Chunks
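The four-part layout and the CRC rule above are enough to walk through a PNG byte string chunk by chunk. The following is a sketch under the assumption that the whole file is already in memory; `iter_chunks` is a hypothetical helper name, and the sample input is just a signature followed by a single IEND chunk.

```python
import struct
import zlib

PNG_SIGNATURE = bytes.fromhex("89504E470D0A1A0A")


def iter_chunks(png: bytes):
    """Yield (chunk_type, data, crc_ok) for each chunk after the signature."""
    pos = len(PNG_SIGNATURE)
    while pos + 12 <= len(png):
        (length,) = struct.unpack(">I", png[pos:pos + 4])
        end = pos + 12 + length          # 4 length + 4 type + data + 4 CRC
        if end > len(png):
            break                        # truncated chunk, stop here
        chunk_type = png[pos + 4:pos + 8]
        data = png[pos + 8:pos + 8 + length]
        (stored_crc,) = struct.unpack(">I", png[end - 4:end])
        # The CRC covers the type code and the data, not the length field.
        computed_crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
        yield chunk_type, data, stored_crc == computed_crc
        pos = end


sample = PNG_SIGNATURE + bytes.fromhex("0000000049454E44AE426082")
for chunk_type, data, crc_ok in iter_chunks(sample):
    print(chunk_type, len(data), crc_ok)
```

A chunk whose `crc_ok` flag is false is the situation libpng and similar decoders report as a CRC error for that chunk type.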

IHDR

IHDR (Image Header Chunk): stores basic image information. Its data field is 13 bytes long, it must be the first chunk in a PNG data stream, and a PNG data stream must contain exactly one IHDR chunk.

| Name | Number of Bytes | Description |
|---|---|---|
| Width | 4 bytes | Image width (in pixels) |
| Height | 4 bytes | Image height (in pixels) |

CTF challenges often corrupt an image by changing its height or width in order to hide information.

You can see here that Kali won't open the image and reports an IHDR CRC error. This is a sign that the IHDR chunk was modified.

You might be able to restore the corrupted image by changing the image's width and height, or the file header, back to the correct values.

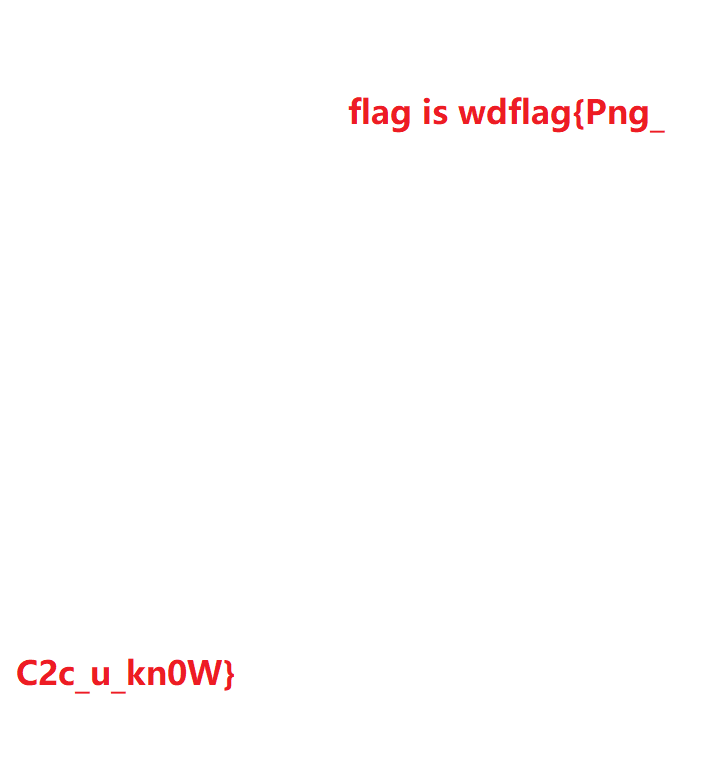

CTF Example

WDCTF-finals-2017

Download the challenge here

If you look at the file, you can see that the header and width of the PNG file are incorrect.

    00000000 80 59 4e 47 0d 0a 1a 0a 00 00 00 0d 49 48 44 52 |.YNG........IHDR|
    00000010 00 00 00 00 00 00 02 f8 08 06 00 00 00 93 2f 8a |............../.|
    00000020 6b 00 00 00 04 67 41 4d 41 00 00 9c 40 20 0d e4 |k....gAMA...@ ..|
    00000030 cb 00 00 00 20 63 48 52 4d 00 00 87 0f 00 00 8c |.... cHRM.......|
    00000040 0f 00 00 fd 52 00 00 81 40 00 00 7d 79 00 00 e9 |....R...@..}y...|
    ...

Note that you can't just pick a width at random; you need to brute-force the width against the IHDR chunk's CRC value (see the Python script below).

    import binascii
    import struct

    # Read the whole challenge file into memory.
    with open("misc4.png", "rb") as f:
        misc = f.read()

    # Try each candidate width: rebuild "IHDR" + width + the remaining 9 IHDR bytes
    # and compare its CRC-32 with the CRC stored in the file (0x932f8a6b).
    for i in range(1024):
        data = misc[12:16] + struct.pack('>i', i) + misc[20:29]
        crc32 = binascii.crc32(data) & 0xffffffff
        if crc32 == 0x932f8a6b:
            print(i)

The script returned 709, so changing the image's width to 709 will restore the image and get you the flag.

PLTE

PLTE (Palette Chunk): contains from 1 to 256 palette entries, each a three-byte series of the form:

- Red: 1 byte (0 = black, 255 = red)

- Green: 1 byte (0 = black, 255 = green)

- Blue: 1 byte (0 = black, 255 = blue)
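Since every palette entry is exactly three bytes, splitting the PLTE data field into colors is a simple slicing exercise. This is a small sketch; `parse_plte` is an illustrative name and the two-entry palette is made-up sample data.

```python
def parse_plte(data: bytes) -> list:
    """Split PLTE chunk data into a list of (red, green, blue) tuples."""
    # 1 to 256 entries of 3 bytes each, so the length must be 3..768 and divisible by 3.
    if len(data) % 3 != 0 or not 3 <= len(data) <= 768:
        raise ValueError("PLTE data must hold 1 to 256 three-byte entries")
    return [tuple(data[i:i + 3]) for i in range(0, len(data), 3)]


palette = parse_plte(bytes([255, 0, 0, 0, 255, 0]))
print(palette)  # [(255, 0, 0), (0, 255, 0)]
```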

IDAT

IDAT (Image Data Chunk): stores the actual image data. A data stream can contain multiple IDAT chunks.

- Stores image data

- Can be split across multiple consecutive IDAT chunks in the data stream

- Is compressed with a derivative of the LZ77 algorithm (deflate)

- Is decompressed with zlib

Note that an encoder normally starts a new IDAT chunk only when the previous chunk is full.

CTF Example

sctf 2014 - misc

Download the challenge here

Use pngcheck to display information about the PNG file:

    .\pngcheck.exe -v sctf.png
    File: sctf.png (1421461 bytes)
      chunk IHDR at offset 0x0000c, length 13
        1000 x 562 image, 32-bit RGB+alpha, non-interlaced
      chunk sRGB at offset 0x00025, length 1
        rendering intent = perceptual
      chunk gAMA at offset 0x00032, length 4: 0.45455
      chunk pHYs at offset 0x00042, length 9: 3780x3780 pixels/meter (96 dpi)
      chunk IDAT at offset 0x00057, length 65445
        zlib: deflated, 32K window, fast compression
      chunk IDAT at offset 0x10008, length 65524
    ...
      chunk IDAT at offset 0x150008, length 45027
      chunk IDAT at offset 0x15aff7, length 138
      chunk IEND at offset 0x15b08d, length 0
    No errors detected in sctf.png (28 chunks, 36.8% compression).

We can see that each IDAT chunk is filled before the next one starts: in this file a full IDAT holds 65524 bytes (the first one, at 65445 bytes, is slightly shorter). Now that we know the length of a full IDAT, compare the last two IDAT chunks (offsets 0x150008 and 0x15aff7). Do you see anything strange?

The second-to-last IDAT has a length of 45027, and the last IDAT has a length of 138.

Clearly, something is wrong with the last IDAT, because the second-to-last IDAT is not full.

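Use Python and zlib to decompress the content of the last IDAT. Note that the length, the chunk type, and the CRC value at the end are excluded.

    import zlib
    import binascii

    # Hex string of the last IDAT chunk's data field (elided here; paste the full value).
    IDAT = bytes.fromhex("789...667")
    # Decompress the zlib stream and print the result as hex.
    result = binascii.hexlify(zlib.decompress(IDAT))
    print(result)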

IEND

IEND (Image Trailer Chunk): marks the end of a PNG data stream or file, and must be the last chunk in the file.

Example

- IEND's chunk length is always 00 00 00 00

- IEND's chunk type is always IEND: 49 45 4E 44

- IEND's CRC value is always AE 42 60 82

That means a PNG file will always end with these bytes:

00 00 00 00 49 45 4E 44 AE 42 60 82
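The constant CRC follows from the chunk layout: IEND has no data, so its CRC-32 is computed over the four type bytes alone. A short check with Python's standard `zlib` module (the variable names are arbitrary):

```python
import zlib

# CRC-32 over the chunk type only, because the IEND data field is empty.
iend_crc = zlib.crc32(b"IEND") & 0xFFFFFFFF

# Length (0) + type + CRC, all big-endian, gives the 12-byte trailer.
trailer = (0).to_bytes(4, "big") + b"IEND" + iend_crc.to_bytes(4, "big")

print(hex(iend_crc))   # 0xae426082
print(trailer.hex())   # 0000000049454e44ae426082
```

Checking whether a file ends with these 12 bytes is a quick way to spot data appended after the image.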

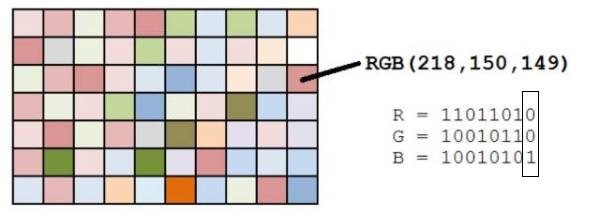

LSB

LSB stands for Least Significant Bit. Each pixel in a PNG image is generally composed of the three primary colors red, green, and blue (RGB). Each color channel occupies 8 bits, with values ranging from 0x00 to 0xFF, so each channel has 256 levels. The total number of colors is therefore 256 to the third power, or 16,777,216.

The human eye can distinguish about 10 million different colors, which means it cannot tell the remaining 6,777,216 colors apart.

LSB steganography modifies the least significant bit of each 8-bit color value in an RGB pixel. Changing that bit alters the value by at most 1, which the eye cannot see, so every color channel of every pixel can carry one hidden bit.
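To make the idea concrete, here is a minimal sketch of LSB embedding and extraction on a plain list of 8-bit channel values. It deliberately avoids any image library; `embed_bits`, `extract_bits`, and the sample values are all made up for illustration, and a real tool would first decode the PNG into pixel samples.

```python
def embed_bits(samples: list, bits: list) -> list:
    """Return a copy of samples with each LSB replaced by the next hidden bit."""
    if len(bits) > len(samples):
        raise ValueError("not enough samples to hold the message")
    out = list(samples)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the hidden bit (0 or 1).
        out[i] = (out[i] & 0xFE) | (bit & 1)
    return out


def extract_bits(samples: list, count: int) -> list:
    """Read back the lowest bit of the first `count` samples."""
    return [s & 1 for s in samples[:count]]


cover = [255, 254, 0, 1, 128, 77, 200, 13]   # made-up channel values
secret = [0, 1, 1, 0, 1, 0, 0, 1]            # the bits of one hidden byte
stego = embed_bits(cover, secret)
print(stego)
print(extract_bits(stego, len(secret)) == secret)
```

No sample changes by more than 1, which is why the modified image looks identical to the original.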

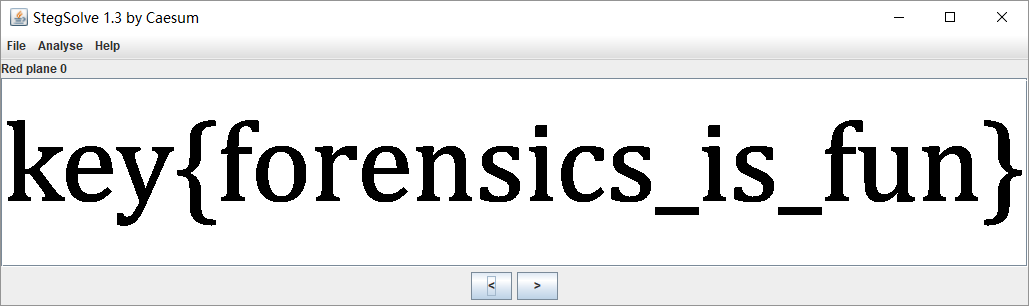

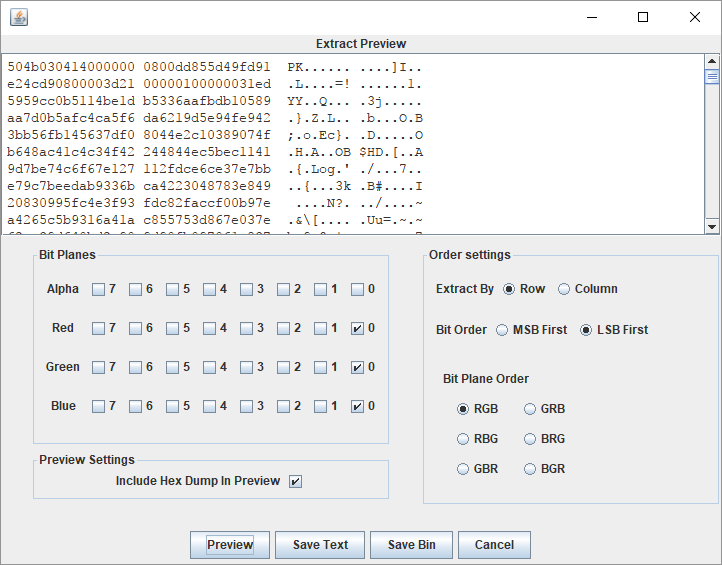

If you want to find information hidden using LSB, you can use the tool Stegsolve to perform the analysis.

Use the buttons at the bottom of Stegsolve to see the information extracted from each color channel.

In the following image, we found the hidden information by checking the LSB of the red channel:

CTF Example

HCTF 2016 - pic again

Download the challenge here

There is information hidden in the LSB of the RGB colors. Use Stegsolve-->Analyse-->Data Extract to extract it.

The extracted data starts with a ZIP file header. Use Save Bin to save it as a ZIP file, then run the ELF executable from it to obtain the flag.

All content on this page is provided under the terms of the CC BY-NC-SA 4.0 license; additional terms may also apply.

Abstract

This document describes PNG (Portable Network Graphics), an extensible file format for the lossless, portable, well-compressed storage of static and animated raster images. PNG provides a patent-free replacement for GIF and can also replace many common uses of TIFF. Indexed-colour, greyscale, and truecolour images are supported, plus an optional alpha channel. Sample depths range from 1 to 16 bits.

PNG is designed to work well in online viewing applications, such as the World Wide Web, so it is fully streamable with a progressive display option. PNG is robust, providing both full file integrity checking and simple detection of common transmission errors. Also, PNG can store colour space data for improved colour matching on heterogeneous platforms.

This specification defines two Internet Media Types, image/png and image/apng.

Status of This Document

This section describes the status of this document at the time of its publication. A list of current W3C publications and the latest revision of this technical report can be found in the W3C technical reports index at https://www.w3.org/TR/.

This specification is intended to become an International Standard, but is not yet one. It is inappropriate to refer to this specification as an International Standard.

This document was published by the Portable Network Graphics (PNG) Working Group as a First Public Working Draft using the Recommendation track.

Publication as a First Public Working Draft does not imply endorsement by W3C and its Members.

This is a draft document and may be updated, replaced or obsoleted by other documents at any time. It is inappropriate to cite this document as other than work in progress.

This document was produced by a group operating under the W3C Patent Policy. W3C maintains a public list of any patent disclosures made in connection with the deliverables of the group; that page also includes instructions for disclosing a patent. An individual who has actual knowledge of a patent which the individual believes contains Essential Claim(s) must disclose the information in accordance with section 6 of the W3C Patent Policy.

This document is governed by the 2 November 2021 W3C Process Document.

The design goals for this specification were:

- Portability: encoding, decoding, and transmission should be software and hardware platform independent.

- Completeness: it should be possible to represent truecolour, indexed-colour, and greyscale images, in each case with the option of transparency, colour space information, and ancillary information such as textual comments.

- Serial encode and decode: it should be possible for datastreams to be generated serially and read serially, allowing the datastream format to be used for on-the-fly generation and display of images across a serial communication channel.

- Progressive presentation: it should be possible to transmit datastreams so that an approximation of the whole image can be presented initially, and progressively enhanced as the datastream is received.

- Robustness to transmission errors: it should be possible to detect datastream transmission errors reliably.

- Losslessness: filtering and compression should preserve all information.

- Performance: any filtering, compression, and progressive image presentation should be aimed at efficient decoding and presentation. Fast encoding is a less important goal than fast decoding. Decoding speed may be achieved at the expense of encoding speed.

- Compression: images should be compressed effectively, consistent with the other design goals.

- Simplicity: developers should be able to implement the standard easily.

- Interchangeability: any standard-conforming PNG decoder shall be capable of reading all conforming PNG datastreams.

- Flexibility: future extensions and private additions should be allowed for without compromising the interchangeability of standard PNG datastreams.

- Freedom from legal restrictions: no algorithms should be used that are not freely available.

This specification specifies a datastream and an associated file format, Portable Network Graphics (PNG, pronounced "ping"), for a lossless, portable, compressed individual computer graphics image or frame-based animation, transmitted across the Internet. Indexed-colour, greyscale, and truecolour images are supported, with optional transparency. Sample depths range from 1 to 16 bits. PNG is fully streamable with a progressive display option. It is robust, providing both full file integrity checking and simple detection of common transmission errors. PNG can store gamma value and chromaticity data as well as a full ICC colour profile or CICP image format signaling metadata, for accurate colour matching on heterogeneous platforms. This Standard defines the Internet Media types "image/png" and "image/apng". The datastream and associated file format have value outside of the main design goal.

For the purposes of this specification the following definitions apply.

- alpha

- a value representing a pixel's degree of opacity. The more opaque a pixel, the more it hides the background against which the image is presented. Zero alpha represents a completely transparent pixel, maximum alpha represents a completely opaque pixel.

- alpha compaction

- an implicit representation of transparent pixels. If every pixel with a specific colour or greyscale value is fully transparent and all other pixels are fully opaque, the alpha channel may be represented implicitly.

- alpha separation

- separating an alpha channel in which every pixel is fully opaque; all alpha values are the maximum value. The fact that all pixels are fully opaque is represented implicitly.

- alpha table

- indexed table of alpha sample values, which in an indexed-colour image defines the alpha sample values of the reference image. The alpha table has the same number of entries as the palette.

- ancillary chunk

- class of chunk that provides additional information. A PNG decoder, without processing an ancillary chunk, can still produce a meaningful image, though not necessarily the best possible image.

- animated image

- Optional animation, consisting of a series of frames. The first frame may be, but need not be, the static image.

- bit depth

- for indexed-colour images, the number of bits per palette index. For other images, the number of bits per sample in the image. This is the value that appears in the IHDR Image header chunk (section 11.2.1).

- byte

- 8 bits; also called an octet. The highest bit (value 128) of a byte is numbered bit 7; the lowest bit (value 1) is numbered bit 0. It represents an unsigned integer limited to the range 0 to 2^8-1.

- byte order

- ordering of bytes for multi-byte data values within a PNG file or PNG datastream. PNG uses network byte order.

- canvas

- the area on the output device on which the frames are to be displayed. The contents of the canvas are not necessarily available to the decoder. If a bKGD chunk exists, it may be used to fill the canvas if there is no preferable background.

- channel

- array of all per-pixel information of a particular kind within a reference image. There are five kinds of information: red, green, blue, greyscale, and alpha. For example the alpha channel is the array of alpha values within a reference image.

- chromaticity

- pair of CIE x,y values [COLORIMETRY] that precisely specify a colour, except for the brightness information.

- chunk

- section of a PNG datastream. Each chunk has a chunk type. Most chunks also include data. The format and meaning of the data within the chunk are determined by the chunk type. Each chunk is either a critical chunk or an ancillary chunk.

- composite (verb)

- to form an image by merging a foreground image and a background image, using transparency information to determine where and to what extent the background should be visible. The foreground image is said to be "composited against" the background.

- critical chunk

- chunk that shall be understood and processed by the decoder in order to produce a meaningful image from a PNG datastream.

- datastream

- sequence of bytes. This term is used rather than "file" to describe a byte sequence that may be only a portion of a file. It is also used to emphasize that the sequence of bytes might be generated and consumed "on the fly", never appearing in a stored file at all.

- deflate

- name of a particular compression algorithm. This algorithm is used, in compression mode 0, in conforming PNG datastreams. Deflate is a member of the LZ77 family of compression methods. It is defined in [RFC1951].

- delivered image

- image constructed from a decoded PNG datastream.

- frame buffer

- the final digital storage area for the image shown by most types of computer display. Software causes an image to appear on screen by loading the image into the frame buffer.

- fully transparent black

- the red, green, blue and alpha components are all set to zero.

- gamma value

- value of the exponent of a gamma transfer function

- gamma

- power-law transfer function

- full-range image

- Image where reference black and white correspond to sample values 0 and 2^(bit depth) - 1, respectively.

- greyscale

- image representation in which each pixel is defined by a single sample of colour information, representing overall luminance (on a scale from black to white), and optionally an alpha sample (in which case it is called greyscale with alpha).

- image data

- 1-dimensional array of scanlines within an image.

- indexed-colour

- image representation in which each pixel of the original image is represented by a single index into a palette. The selected palette entry defines the actual colour of the pixel.

- interlaced PNG image

- sequence of reduced images generated from the PNG image by pass extraction.

- lossless

- method of data compression that permits reconstruction of the original data exactly, bit-for-bit.

- luminance

- formal definition of luminance is in [COLORIMETRY]. Informally it is the perceived brightness, or greyscale level, of a colour. Luminance and chromaticity together fully define a perceived colour.

- LZ77

- data compression algorithm described in [Ziv-Lempel].

- narrow-range image

- Image where reference black and white do not correspond to sample values 0 and 2^(bit depth) - 1, respectively.

- network byte order

- byte order in which the most significant byte comes first, then the less significant bytes in descending order of significance (MSB LSB for two-byte integers, MSB B2 B1 LSB for four-byte integers).

- output buffer

- The output buffer is a pixel array with dimensions specified by the width and height parameters of the PNG IHDR chunk. Conceptually, each frame is constructed in the output buffer before being composited onto the canvas. The contents of the output buffer are available to the decoder. The corners of the output buffer are mapped to the corners of the canvas.

- palette

- indexed table of three 8-bit sample values, red, green, and blue, which with an indexed-colour image defines the red, green, and blue sample values of the reference image. In other cases, the palette may be a suggested palette that viewers may use to present the image on indexed-colour display hardware. Alpha samples may be defined for palette entries via the alpha table and may be used to reconstruct the alpha sample values of the reference image.

- pass extraction

- organizing a PNG image as a sequence of reduced images to change the order of transmission and enable progressive display.

- pixel

- information stored for a single grid point in an image. A pixel consists of (or points to) a sequence of samples from all channels. The complete image is a rectangular array of pixels.

- PNG datastream

- result of encoding a PNG image. A PNG datastream consists of a PNG signature followed by a sequence of chunks.

- PNG decoder

- process or device which reconstructs the reference image from a PNG datastream and generates a corresponding delivered image.

- PNG editor

- process or device which creates a modification of an existing PNG datastream, preserving unmodified ancillary information wherever possible, and obeying the chunk ordering rules, even for unknown chunk types.

- PNG encoder

- process or device which constructs a reference image from a source image, and generates a PNG datastream representing the reference image.

- PNG file

- PNG datastream stored as a file.

- PNG four-byte unsigned integer

- a four-byte unsigned integer limited to the range 0 to $2^{31}-1$.

  Note: The restriction is imposed in order to accommodate languages that have difficulty with unsigned four-byte values.

- PNG image

- result of transformations applied by a PNG encoder to a reference image, in preparation for encoding as a PNG datastream, and the result of decoding a PNG datastream.

- PNG signature

- sequence of bytes appearing at the start of every PNG datastream. It differentiates a PNG datastream from other types of datastream and allows early detection of some transmission errors.

- reduced image

- pass of the interlaced PNG image extracted from the PNG image by pass extraction.

- reference image

- rectangular array of rectangular pixels, each having the same number of samples, either three (red, green, blue) or four (red, green, blue, alpha). Every reference image can be represented exactly by a PNG datastream and every PNG datastream can be converted into a reference image. Each channel has a sample depth in the range 1 to 16. All samples in the same channel have the same sample depth. Different channels may have different sample depths.

- sample

- intersection of a channel and a pixel in an image.

- sample depth

- number of bits used to represent a sample value. In an indexed-colour PNG image, samples are stored in the palette and thus the sample depth is always 8 by definition of the palette. In other types of PNG image it is the same as the bit depth.

- scanline

- row of pixels within an image or interlaced PNG image.

- source image

- image which is presented to a PNG encoder.

- static image

- non-animated image corresponding to the reference image encoded in the IDAT chunk. All PNG and APNG images contain a static image, and non animation-capable displays (such as printers) will display this rather than the animation.

- transfer function

- function relating image luminance with image samples

- white point

- chromaticity of a computer display’s nominal white value.

- zlib

- deflate-style compression method.

  Note: The format is defined in [rfc1950].

  Note: Also refers to the name of a library containing a sample implementation of this method.

- APNG

- Animated PNG, a type of PNG which, in addition to a static image, also contains an animated image.

- Cyclic Redundancy Code

- CRC

- type of check value designed to detect most transmission errors.

  Note: A decoder calculates the CRC for the received data and checks by comparing it to the CRC calculated by the encoder and appended to the data. A mismatch indicates that the data or the CRC were corrupted in transit.

- Cathode Ray Tube

- CRT

- vacuum tube containing one or more electron guns, which emit electron beams that are manipulated to display images on a phosphorescent screen.

- LSB

- Least Significant Byte of a multi-byte value.

- MSB

- Most Significant Byte of a multi-byte value.

All PNG images contain a single

static image.

Some PNG images —

called APNG

for Animated PNG —

also contain a frame-based animation sequence,

the animated image.

The first frame of this may be —

but need not be —

the static image.

The static image,

and each individual frame of an

animated image,

corresponds to a reference image

and is stored as a PNG image.

This specification specifies the PNG datastream, and

places some requirements on PNG encoders, which generate PNG

datastreams, PNG decoders, which interpret PNG datastreams, and

PNG editors, which transform one PNG datastream into another. It

does not specify the interface between an application and either

a PNG encoder, decoder, or editor. The precise form in which an

image is presented to an encoder or delivered by a decoder is not

specified. Four kinds of image are distinguished.

- The source image is the image presented to a PNG encoder.

- The reference image, which only exists conceptually,

is a rectangular array of rectangular pixels, all having the same

width and height, and all containing the same number of unsigned

integer samples, either three (red, green, blue) or four (red,

green, blue, alpha). The array of all samples of a particular

kind (red, green, blue, or alpha) is called a channel. Each

channel has a sample depth in the range 1 to 16, which is the

number of bits used by every sample in the channel. Different

channels may have different sample depths. The red, green, and

blue samples determine the intensities of the red, green, and

blue components of the pixel’s colour; if they are all zero, the

pixel is black, and if they all have their maximum values

($2^{sampledepth}-1$), the pixel is white. The alpha sample

determines a pixel’s degree of opacity, where zero means fully

transparent and the maximum value means fully opaque. In a

three-channel reference image all pixels are fully opaque. (It is

also possible for a four-channel reference image to have all

pixels fully opaque; the difference is that the latter has a

specific alpha sample depth, whereas the former does not.) Each

horizontal row of pixels is called a scanline. Pixels are ordered

from left to right within each scanline, and scanlines are

ordered from top to bottom. A PNG encoder may transform the source

image directly into a PNG image, but conceptually it first

transforms the source image into a reference image, then

transforms the reference image into a PNG image. Depending on the

type of source image, the transformation from the source image to

a reference image may require the loss of information. That

transformation is beyond the scope of this International Standard.

The reference image, however, can always be recovered

exactly from a PNG datastream.

- The PNG image is obtained from the reference image by

a series of transformations: alpha separation, indexing, RGB

merging, alpha compaction, and sample depth scaling. Five types

of PNG image are defined (see 6.1 Colour types and values). (If the PNG encoder actually transforms the

source image directly into the PNG image, and the source image

format is already similar to the PNG image format, the encoder

may be able to avoid doing some of these transformations.)

Although not all sample depths in the range 1 to 16 bits are

explicitly supported in the PNG image, the number of significant

bits in each channel of the reference image may be recorded. All

channels in the PNG image have the same sample depth. A PNG

encoder generates a PNG datastream from the PNG image. A PNG

decoder takes the PNG datastream and recreates the PNG

image.

- The delivered image is constructed from the PNG image

obtained by decoding a PNG datastream. No specific format is

specified for the delivered image. A viewer presents an image to

the user as close to the appearance of the original source image

as it can achieve.

The relationships between the four kinds of image are

illustrated in Figure 1.

The relationships between samples, channels, pixels, and

sample depth are illustrated in Figure 2.

The RGB colour space in which colour samples are situated may

be specified in one of four ways:

- by CICP image format signaling metadata;

- by an ICC profile;

- by specifying explicitly that the colour space is sRGB when

the samples conform to this colour space;

- by specifying a gamma value and the 1931 CIE x,y

chromaticities of the red, green, and blue primaries used in the

image and the reference white point.

For high-end applications the first two methods provide the most

flexibility and control. The third method enables one particular,

but extremely common,

colour space to be indicated. The fourth method,

which was standardized before ICC profiles were widely adopted,

enables the exact

chromaticities of the RGB data to be specified, along with the

gamma correction to be applied (see C. Gamma and chromaticity).

However, colour-aware applications will prefer one of the first three methods,

while colour-unaware applications will typically ignore all four methods.

Gamma correction is not applied to the alpha channel, if

present. Alpha samples always represent a linear fraction of full

opacity.

A number of transformations are applied to the reference image

to create the PNG image to be encoded (see Figure 3). The

transformations are applied in the following sequence, where

square brackets mean the transformation is optional:

[alpha separation]

indexing or ( [RGB merging] [alpha compaction] )

sample depth scaling

When every pixel is either fully transparent or fully opaque,

the alpha separation, alpha compaction, and indexing

transformations can cause the recovered reference image to have

an alpha sample depth different from the original reference

image, or to have no alpha channel. This has no effect on the

degree of opacity of any pixel. The two reference images are

considered equivalent, and the transformations are considered

lossless. Encoders that nevertheless wish to preserve the alpha

sample depth may elect not to perform transformations that would

alter the alpha sample depth.

If all alpha samples in a reference image have the maximum

value, then the alpha channel may be omitted, resulting in an

equivalent image that can be encoded more compactly.

If the number of distinct pixel values is 256 or less, and the

RGB sample depths are not greater than 8, and the alpha channel

is absent or exactly 8 bits deep or every pixel is either fully

transparent or fully opaque, then an alternative representation

called indexed-colour may be more efficient for encoding.

In the indexing transformation, each pixel is replaced by an index into a palette.

The palette is a list of entries each containing

three 8-bit samples (red, green, blue). If an alpha channel is

present, there is also a parallel table of 8-bit alpha

samples.
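The indexing transformation can be sketched in Python as follows. This is only an illustration, assuming pixels arrive as `(r, g, b, a)` tuples of 8-bit samples; the function name `index_pixels` and the return shape are hypothetical and not part of the specification.

```python
def index_pixels(pixels):
    """Replace each RGBA pixel by an index into a palette.

    Returns (palette, alpha_table, indices), or None when the image has
    more than 256 distinct pixel values and so cannot be indexed.
    """
    lookup = {}   # pixel value -> palette index, in order of first appearance
    indices = []
    for px in pixels:
        if px not in lookup:
            if len(lookup) == 256:
                return None
            lookup[px] = len(lookup)
        indices.append(lookup[px])
    ordered = sorted(lookup, key=lookup.get)
    palette = [(r, g, b) for r, g, b, _ in ordered]      # three 8-bit samples per entry
    alpha_table = [a for _, _, _, a in ordered]          # parallel table of alpha samples
    return palette, alpha_table, indices
```

The palette and the alpha table stay parallel: entry `i` of each describes the same indexed pixel value.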

A suggested palette or palettes may be constructed even when

the PNG image is not indexed-colour in order to assist viewers

that are capable of displaying only a limited number of

colours.

For indexed-colour images, encoders can rearrange the palette

so that the table entries with the maximum alpha value are

grouped at the end. In this case the table can be encoded in a

shortened form that does not include these entries.

Encoders creating indexed-colour PNG images must not insert

index values greater than the actual length of the palette table;

to do so is an error, and decoders will vary in their handling of this error.

If the red, green, and blue channels have the same sample

depth, and, for each pixel, the values of the red, green, and blue

samples are equal, then these three channels may be merged into a

single greyscale channel.

For non-indexed images, if there exists an RGB (or greyscale)

value such that all pixels with that value are fully transparent

while all other pixels are fully opaque, then the alpha channel

can be represented more compactly by merely identifying the RGB

(or greyscale) value that is transparent.

In the PNG image, not all sample depths are supported (see

6.1 Colour types and values), and all channels shall have the same

sample depth. All channels of the PNG image use the smallest

allowable sample depth that is not less than any sample depth in

the reference image, and the possible sample values in the

reference image are linearly mapped into the next allowable range

for the PNG image. Figure 5 shows how samples of depth 3 might

be mapped into samples of depth 4.

Allowing only a few sample depths reduces the number of cases

that decoders have to cope with. Sample depth scaling is

reversible with no loss of data, because the reference image

sample depths can be recorded in the PNG datastream. In the

absence of recorded sample depths, the reference image sample

depth equals the PNG image sample depth. See 12.4 Sample depth scaling and 13.12 Sample depth

rescaling.
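As a rough illustration of the linear mapping described above, the sketch below scales one sample from a smaller depth to a larger one using rounded linear scaling. The helper name `scale_sample` is hypothetical, and an encoder is free to use other scaling approaches (see 12.4 Sample depth scaling).

```python
def scale_sample(value, in_depth, out_depth):
    """Linearly map a sample of in_depth bits onto the out_depth-bit range."""
    max_in = (1 << in_depth) - 1
    max_out = (1 << out_depth) - 1
    # Integer form of floor(value * max_out / max_in + 0.5).
    return (2 * value * max_out + max_in) // (2 * max_in)


# Depth 3 mapped into depth 4, as in the case mentioned for Figure 5.
depth3_to_depth4 = [scale_sample(v, 3, 4) for v in range(8)]
```

The endpoints map exactly (0 stays 0, the maximum stays the maximum), which is what keeps black and white unchanged.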

The transformation of the reference image results in one of

five types of PNG image (see Figure 6) :

- Truecolour with alpha: each pixel is defined by samples, representing red, green, and blue intensities, and an alpha sample.

- Greyscale with alpha: each pixel consists of two samples: grey and alpha.

- Truecolour: each pixel is defined by samples, representing red, green, and blue intensities. The alpha channel may be represented by a single RGB pixel value. Matching pixels are fully transparent, and all others are fully opaque. If the alpha channel is not represented in this way, all pixels are fully opaque.

- Greyscale: each pixel consists of a single sample: grey. The alpha channel may be represented by a single greyscale pixel value, similar to the previous case. If the alpha channel is not represented in this way, all pixels are fully opaque.

- Indexed-colour: each pixel consists of an index into a palette (and into an associated table of alpha values, if present).

The format of each pixel depends on the PNG image type and the

bit depth. For PNG image types other than indexed-colour,

the bit depth specifies the number of bits per sample, not the

total number of bits per pixel.

For indexed-colour images, the bit depth specifies the

number of bits in each palette index, not the sample depth of the

colours in the palette or alpha table. Within the pixel the

samples appear in the following order, depending on the PNG image

type.

- Truecolour with alpha: red, green, blue, alpha.

- Greyscale with alpha: grey, alpha.

- Truecolour: red, green, blue.

- Greyscale: grey.

- Indexed-colour: palette index.
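The sample orders listed above, together with the bit depth, determine how many bits one pixel occupies. A small sketch, with the dictionary and function names chosen here purely for illustration:

```python
# Sample order within a pixel for each PNG image type.
SAMPLE_ORDER = {
    "truecolour with alpha": ("red", "green", "blue", "alpha"),
    "greyscale with alpha": ("grey", "alpha"),
    "truecolour": ("red", "green", "blue"),
    "greyscale": ("grey",),
    "indexed-colour": ("palette index",),
}


def bits_per_pixel(image_type, bit_depth):
    """Bit depth counts bits per sample (or per palette index), not per pixel."""
    return len(SAMPLE_ORDER[image_type]) * bit_depth
```

For an indexed-colour image the result is the size of the palette index only; the colours in the palette are always 8 bits per sample regardless.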

A conceptual model of the process of encoding a PNG image is

given in Figure 7.

The steps refer to the operations on the array of

pixels or indices in the PNG image. The palette and alpha table

are not encoded in this way.

- Pass extraction: to allow for progressive display, the PNG image pixels can be rearranged to form several smaller images called reduced images or passes.

- Scanline serialization: the image is serialized a scanline at a time. Pixels are ordered left to right in a scanline and scanlines are ordered top to bottom.

- Filtering: each scanline is transformed into a filtered scanline using one of the defined filter types to prepare the scanline for image compression.

- Compression: occurs on all the filtered scanlines in the image.

- Chunking: the compressed image is divided into conveniently sized chunks. An error detection code is added to each chunk.

- Datastream construction: the chunks are inserted into the datastream.

Pass extraction (see Figure 7) splits a PNG image into a

sequence of reduced images where the first image defines a coarse

view and subsequent images enhance this coarse view until the

last image completes the PNG image. The set of reduced images is

also called an interlaced PNG image. Two interlace methods are

defined in this specification. The first method is a

null method; pixels are stored sequentially from left to right

and scanlines from top to bottom. The second method makes

multiple scans over the image to produce a sequence of seven

reduced images. The seven passes for a sample image are

illustrated in Figure 7. See 8. Interlacing and pass

extraction.
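This overview does not give the pixel pattern of the second interlace method; it is detailed in 8. Interlacing and pass extraction. The sketch below assumes the usual seven-pass (Adam7) starting offsets and steps, and assumes the image is a list of rows, each a list of pixels. The names `PASSES` and `extract_passes` are illustrative.

```python
# (x_start, y_start, x_step, y_step) for each of the seven passes.
PASSES = [
    (0, 0, 8, 8), (4, 0, 8, 8), (0, 4, 4, 8), (2, 0, 4, 4),
    (0, 2, 2, 4), (1, 0, 2, 2), (0, 1, 1, 2),
]


def extract_passes(rows):
    """Split an image into seven reduced images (some may be empty)."""
    reduced = []
    for x0, y0, dx, dy in PASSES:
        scanlines = [row[x0::dx] for row in rows[y0::dy]]
        # Drop zero-width scanlines so an empty pass is an empty list.
        reduced.append([line for line in scanlines if line])
    return reduced
```

Every pixel lands in exactly one pass, so concatenating the passes is a reordering of the image rather than a change to its content.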

Each row of pixels, called a scanline, is represented as a

sequence of bytes.

PNG allows image data to be filtered before it is compressed.

Filtering can improve the compressibility of the data. The filter

operation is deterministic, reversible, and lossless. This allows

the decompressed data to be reverse-filtered in order to obtain

the original data. See 7.3 Filtering.
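To illustrate why filtering is reversible, here is a sketch of one simple filter: each byte is replaced by its difference from the corresponding byte of the pixel to its left, modulo 256 (the "Sub" filter of the specification's filter section). The function names, and the parameter `bpp` for the number of bytes per complete pixel, are assumptions of this example.

```python
def sub_filter(scanline, bpp):
    """Replace each byte by its difference from the byte bpp positions earlier."""
    out = bytearray(len(scanline))
    for i, byte in enumerate(scanline):
        left = scanline[i - bpp] if i >= bpp else 0
        out[i] = (byte - left) & 0xFF
    return bytes(out)


def sub_unfilter(filtered, bpp):
    """Invert sub_filter by adding back the already reconstructed left byte."""
    out = bytearray(len(filtered))
    for i, byte in enumerate(filtered):
        left = out[i - bpp] if i >= bpp else 0
        out[i] = (byte + left) & 0xFF
    return bytes(out)
```

Smooth gradients turn into runs of small, repeated values, which is what makes the filtered data easier to compress.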

The sequence of filtered scanlines in the pass or passes of

the PNG image is compressed (see Figure 9) by one of the defined

compression methods. The concatenated filtered scanlines form the

input to the compression stage. The output from the compression

stage is a single compressed datastream. See 10. Compression.
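A minimal sketch of the compression stage using Python's standard `zlib` module. The helper names are hypothetical, and the example assumes all scanlines have the same length, which holds within a single pass.

```python
import zlib


def compress_scanlines(filtered_scanlines):
    """Concatenate the filtered scanlines and compress them as one stream."""
    return zlib.compress(b"".join(filtered_scanlines))


def decompress_scanlines(stream, scanline_length):
    """Recover the filtered scanlines from the compressed datastream."""
    raw = zlib.decompress(stream)
    return [raw[i:i + scanline_length] for i in range(0, len(raw), scanline_length)]
```

The compressed output is one continuous stream; how it is later split across chunks has no relation to scanline boundaries.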

Chunking provides a convenient breakdown of the compressed

datastream into manageable chunks (see Figure 9). Each chunk has its own

redundancy check. See 11. Chunk specifications.

Ancillary information may be associated with an image.

Decoders may ignore all or some of the ancillary information. The

types of ancillary information provided are described in Table 1.

| Type of information | Description |

|---|---|

| Animation information | An animated image, defined as a series of frames with associated timing, position and handling information, to be displayed if the viewer is capable of doing so. For other cases such as printers, the static image will be displayed instead. |

| Background colour | Solid background colour to be used when presenting the image if no better option is available. |

| Coding-independent code points | Identifies the colour space by enumerating metadata such as the transfer function and colour primaries. Originally for SDR and HDR video, also used for still and animated images. |

| EXIF information | Exchangeable image file format metadata such as shutter speed, aperture, and orientation |

| Gamma and chromaticity | Gamma value of the image with respect to the desired output intensity, and chromaticity characteristics of the RGB values used in the image. |

| ICC profile | Description of the colour space (in the form of an International Color Consortium (ICC) profile) to which the samples in the image conform. |

| Image histogram | Estimates of how frequently the image uses each palette entry. |

| Physical pixel dimensions | Intended pixel size and aspect ratio to be used in presenting the PNG image. |

| Significant bits | The number of bits that are significant in the samples. |

| sRGB colour space | A rendering intent (as defined by the International Color Consortium) and an indication that the image samples conform to this colour space. |

| Suggested palette | A reduced palette that may be used when the display device is not capable of displaying the full range of colours in the image. |

| Textual data | Textual information (which may be compressed) associated with the image. |

| Time | The time when the PNG image was last modified. |

| Transparency | Alpha information that allows the reference image to be reconstructed when the alpha channel is not retained in the PNG image. |

The PNG datastream consists of a PNG signature (see

5.2 PNG signature) followed by a sequence of chunks (see

11. Chunk specifications). Each chunk has a chunk type which

specifies its function.

There are 23 chunk types defined in this International Standard. Chunk types are four-byte sequences chosen so that they correspond to readable labels when interpreted in the ISO 646.IRV:1991 [ISO646] character set. The first four are termed critical chunks, which shall be understood and correctly interpreted according to the provisions of this specification. These are:

- IHDR: image header, which is the first chunk in a PNG datastream.

- PLTE: palette table associated with indexed PNG images.

- IDAT: image data chunks.

- IEND: image trailer, which is the last chunk in a PNG datastream.

The remaining 19 chunk types are termed ancillary chunk types, which encoders may generate and decoders may interpret.

- Transparency information: tRNS (see 11.3.1 Transparency information).

- Colour space information: cHRM, gAMA, iCCP, sBIT, sRGB, cICP (see 11.3.2 Colour space information).

- Textual information: iTXt, tEXt, zTXt (see 11.3.3 Textual information).

- Miscellaneous information: bKGD, hIST, pHYs, sPLT, eXIf (see 11.3.4 Miscellaneous information).

- Time information: tIME (see 11.3.5 Time stamp information).

- Animation information: acTL, fcTL, fdAT (see 11.3.6 Animation information).

Animated PNG (APNG)

is an extension of the PNG format,

adding support for frame-based animated images.

It is intended to be a replacement for simple animated images

that have traditionally used the GIF format [GIF],

while adding support for 24-bit images and 8-bit transparency,

which GIF lacks.

APNG is backwards-compatible with earlier versions of PNG;

a non-animated PNG decoder will ignore the ancillary APNG-specific chunks

and display the static image.

An APNG stream is a normal PNG stream

as defined in previous versions of the PNG Specification,

with three additional chunk types

describing the animation

and providing additional frame data.

To be recognized as an APNG, an acTL chunk

must appear in the stream before any IDAT chunks.

The acTL structure is

described below.

Conceptually, at the beginning of each play

the output buffer

shall be completely initialized to a

fully transparent black rectangle,

with width and height dimensions from the IHDR chunk.

The static image may be included

as the first frame of the animation

by the presence of a single fcTL chunk before IDAT.

Otherwise, the static image is not part of the animation.

Subsequent frames are encoded in fdAT chunks,

which have the same structure as IDAT chunks,

except preceded by a sequence number.

Information for each frame

about placement and rendering

is stored in fcTL chunks.

The full layout of fdAT and fcTL chunks is

described below.

The boundaries of the entire animation

are specified by the width and height parameters

of the IHDR chunk,

regardless of whether the default image is part of the animation.

The default image should be appropriately padded

with fully transparent black pixels

if extra space will be needed for later frames.

Each frame is identical for each play,

therefore it is safe for applications to cache the frames.

The fcTL and fdAT chunks

have a zero-based, 4 byte sequence number.

Both chunk types share the sequence.

The purpose of this number is to detect (and optionally correct)

sequence errors in an Animated PNG,

since the PNG specification does not impose ordering restrictions on ancillary chunks.

The first fcTL chunk shall contain sequence number 0,

and the sequence numbers in the remaining

fcTL and fdAT chunks

shall be in ascending order,

with no gaps or duplicates.

The tables below illustrate the use of sequence numbers

for images with more than one frame,

and more than one fdAT chunk

for the second frame.

(IHDR and

IEND chunks

omitted in these tables, for clarity).

| Sequence number | Chunk |

|---|---|

| (none) | acTL |

| 0 | fcTL first frame |

| (none) | IDAT first frame / static image |

| 1 | fcTL second frame |

| 2 | first fdAT for second frame |

| 3 | second fdAT for second frame |

| Sequence number | Chunk |

|---|---|

| (none) | acTL |

| (none) | IDAT static image |

| 0 | fcTL first frame |

| 1 | first fdAT for first frame |

| 2 | second fdAT for first frame |

| 3 | fcTL second frame |

| 4 | first fdAT for second frame |

| 5 | second fdAT for second frame |
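The sequence-number rule shown in the two tables can be checked with a few lines of Python. This sketch assumes the chunks have already been parsed into `(chunk_type, sequence_number)` pairs in datastream order, with `None` for chunks that carry no sequence number; the function name is illustrative.

```python
def sequence_numbers_valid(chunks):
    """Check that fcTL and fdAT chunks share one sequence 0, 1, 2, ... with no gaps."""
    expected = 0
    for chunk_type, sequence_number in chunks:
        if chunk_type in ("fcTL", "fdAT"):
            if sequence_number != expected:
                return False
            expected += 1
    return True


# Second table above: the static image is not part of the animation.
example = [
    ("acTL", None), ("IDAT", None),
    ("fcTL", 0), ("fdAT", 1), ("fdAT", 2),
    ("fcTL", 3), ("fdAT", 4), ("fdAT", 5),
]
```

A decoder that finds a gap or a duplicate knows that chunks were lost or reordered, even though ancillary chunks otherwise have few ordering constraints.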

Errors in a PNG datastream fall into two general classes:

- transmission errors or damage to a computer file system,

which tend to corrupt much or all of the datastream;

- syntax errors, which appear as invalid values in chunks, or

as missing or misplaced chunks. Syntax errors can be caused not

only by encoding mistakes, but also by the use of registered or

private values, if those values are unknown to the decoder.

PNG decoders should detect errors as early as possible,

recover from errors whenever possible, and fail gracefully

otherwise. The error handling philosophy is described in detail

in 13.1 Error handling.

This section is non-normative.

The PNG format exposes several extension points:

- chunk type;

- text keyword; and

- private field values.

Some of these extension points are reserved by W3C, while others are

available for private use.

The PNG datastream consists of a PNG signature followed by a sequence of chunks.

The first eight bytes of a PNG datastream always contain the

following (decimal) values:

137 80 78 71 13 10 26 10

which are (in hexadecimal):

89 50 4E 47 0D 0A 1A 0A

This signature indicates that the remainder of the datastream

contains a single PNG image, consisting of a series of chunks

beginning with an

IHDR chunk and ending with an IEND chunk.
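Checking the signature is a plain comparison of the first eight bytes. A short sketch, with `has_png_signature` as an illustrative name:

```python
# The eight signature bytes, written with the decimal values given above.
PNG_SIGNATURE = bytes([137, 80, 78, 71, 13, 10, 26, 10])


def has_png_signature(data):
    """Return True when the data starts with the PNG signature."""
    return data[:8] == PNG_SIGNATURE
```

A file that fails this test is either not a PNG or was damaged before the first chunk, for example by a transfer that altered the line-ending bytes.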

Each chunk consists of three or four fields (see Figure 10).

The meaning of the fields is described in Table 4.

The chunk data field may be empty.

| Name | Description |
|---|---|
| Length | A PNG four-byte unsigned integer giving the number of bytes in the chunk’s data field. The length counts only the data field, not itself, the chunk type, or the CRC. Zero is a valid length. Although encoders and decoders should treat the length as unsigned, its value shall not exceed $2^{31}-1$ bytes. |
| Chunk Type | A sequence of four bytes defining the chunk type. Each byte of a chunk type is restricted to the decimal values 65 to 90 and 97 to 122. These correspond to the uppercase and lowercase ISO 646 [ISO646] letters (A-Z and a-z) respectively for convenience in description and examination of PNG datastreams. Encoders and decoders shall treat the chunk types as fixed binary values, not character strings. For example, it would not be correct to represent the chunk type IDAT by the equivalents of those letters in the UCS 2 character set. Additional naming conventions for chunk types are discussed in 5.4 Chunk naming conventions. |
| Chunk Data | The data bytes appropriate to the chunk type, if any. This field can be of zero length. |
| CRC | A four-byte CRC calculated on the preceding bytes in the chunk, including the chunk type field and chunk data fields, but not including the length field. The CRC can be used to check for corruption of the data. The CRC is always present, even for chunks containing no data. See 5.5 CRC algorithm. |

The chunk data length may be any number of bytes up to the

maximum; therefore, implementors cannot assume that chunks are

aligned on any boundaries larger than bytes.
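The chunk layout can be walked with a short parser. The sketch below is an illustration rather than a full decoder: `make_chunk` and `read_chunks` are hypothetical helper names, the input is assumed to be the bytes that follow the eight-byte signature, and the CRC check relies on Python's `zlib.crc32`, which uses the same CRC-32 as the GZIP format.

```python
import struct
import zlib


def make_chunk(chunk_type, data=b""):
    """Build one chunk: length, type, data, CRC (integers stored MSB first)."""
    crc = zlib.crc32(chunk_type + data)
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def read_chunks(body):
    """Yield (chunk_type, data, crc_ok) for each chunk in body."""
    offset = 0
    while offset < len(body):
        if offset + 12 > len(body):
            raise ValueError("truncated chunk")
        (length,) = struct.unpack_from(">I", body, offset)
        end = offset + 12 + length
        if length > 2**31 - 1 or end > len(body):
            raise ValueError("invalid length or truncated chunk")
        chunk_type = body[offset + 4:offset + 8]
        data = body[offset + 8:offset + 8 + length]
        (stored_crc,) = struct.unpack_from(">I", body, end - 4)
        # The CRC covers the chunk type and data, but not the length field.
        crc_ok = zlib.crc32(chunk_type + data) == stored_crc
        yield chunk_type, data, crc_ok
        offset = end
```

A `crc_ok` value of `False` on the first chunk is the situation a decoder would report as a CRC error in IHDR: the header bytes read from the file no longer match the checksum stored with them.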

Chunk types are chosen to be meaningful names when the bytes

of the chunk type are interpreted as ISO 646 letters [ISO646]. Chunk types

are assigned so that a decoder can determine some properties of a

chunk even when the type is not recognized. These rules allow

safe, flexible extension of the PNG format, by allowing a PNG

decoder to decide what to do when it encounters an unknown chunk.

The naming rules are normally of interest only when the decoder does not

recognize the chunk’s type, as specified at 13. PNG decoders and viewers.

Four bits of the chunk type, the property bits, namely bit 5

(value 32) of each byte, are used to convey chunk properties.

This choice means that a human can read off the assigned

properties according to whether the letter corresponding to each

byte of the chunk type is uppercase (bit 5 is 0) or lowercase

(bit 5 is 1).

The property bits are an inherent part of the chunk type, and

hence are fixed for any chunk type. Thus, CHNK and cHNk would be

unrelated chunk types, not the same chunk with different

properties.

The semantics of the property bits are

defined in

Table 5.

| Name & location | Definition | Description |
|---|---|---|
| Ancillary bit: first byte | 0 (uppercase) = critical, 1 (lowercase) = ancillary. | Critical chunks are necessary for successful display of the contents of the datastream, for example the image header chunk (IHDR). A decoder trying to extract the image, upon encountering an unknown chunk type in which the ancillary bit is 0, shall indicate to the user that the image contains information it cannot safely interpret. Ancillary chunks are not strictly necessary in order to meaningfully display the contents of the datastream, for example the time chunk (tIME). A decoder encountering an unknown chunk type in which the ancillary bit is 1 can safely ignore the chunk and proceed to display the image. |
| Private bit: second byte | 0 (uppercase) = public, 1 (lowercase) = private. | Public chunks are reserved for definition by the W3C. The definition of private chunks is specified at 12.10.1 Use of private chunks. The names of private chunks have a lowercase second letter, while the names of public chunks have uppercase second letters. |
| Reserved bit: third byte | 0 (uppercase) in this version of PNG. If the reserved bit is 1, the datastream does not conform to this version of PNG. | The significance of the case of the third letter of the chunk name is reserved for possible future extension. In this International Standard, all chunk names shall have uppercase third letters. |
| Safe-to-copy bit: fourth byte | 0 (uppercase) = unsafe to copy, 1 (lowercase) = safe to copy. | This property bit is not of interest to pure decoders, but it is needed by PNG editors. This bit defines the proper handling of unrecognized chunks in a datastream that is being modified. Rules for PNG editors are discussed further in 14.2 Behaviour of PNG editors. |

EXAMPLE The hypothetical chunk type «cHNk» has the property bits:

cHNk <-- 32 bit chunk type represented in text form

||||

|||+- Safe-to-copy bit is 1 (lower case letter; bit 5 is 1)

||+-- Reserved bit is 0 (upper case letter; bit 5 is 0)

|+--- Private bit is 0 (upper case letter; bit 5 is 0)

+---- Ancillary bit is 1 (lower case letter; bit 5 is 1)

Therefore, this name represents an ancillary, public,

safe-to-copy chunk.
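Reading the property bits programmatically amounts to testing bit 5 (value 32) of each byte. A small sketch, with the function name and the dictionary keys chosen for illustration:

```python
def chunk_properties(chunk_type):
    """Decode the four property bits of a chunk type given as 4 bytes."""
    if len(chunk_type) != 4 or not all(
        65 <= b <= 90 or 97 <= b <= 122 for b in chunk_type
    ):
        raise ValueError("chunk type must be four ISO 646 letters")
    bits = [bool(b & 32) for b in chunk_type]   # bit 5 set means lowercase
    return {
        "ancillary": bits[0],
        "private": bits[1],
        "reserved": bits[2],
        "safe_to_copy": bits[3],
    }
```

For the hypothetical `cHNk` type from the example, this reports an ancillary, public, safe-to-copy chunk with the reserved bit clear.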

CRC fields are calculated using standardized CRC methods with

pre and post conditioning, as defined by [ISO_3309]

and [ITU-T_V.42].

The CRC polynomial employed, which is identical to that used in the GZIP file format specification [RFC1952], is

$$x^{32} + x^{26} + x^{23} + x^{22} + x^{16} + x^{12} + x^{11} + x^{10} + x^{8} + x^{7} + x^{5} + x^{4} + x^{2} + x + 1$$

In PNG, the 32-bit CRC is initialized to all 1’s, and then the

data from each byte is processed from the least significant bit

(1) to the most significant bit (128). After all the data bytes

are processed, the CRC is inverted (its ones complement is

taken). This value is transmitted (stored in the datastream) MSB

first. For the purpose of separating into bytes and ordering, the

least significant bit of the 32-bit CRC is defined to be the

coefficient of the $x^{31}$ term.

Practical calculation of the CRC often employs a precalculated

table to accelerate the computation. See D. Sample CRC

implementation.
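Before turning to the table-driven version, the procedure above can be written bit by bit. This is a sketch, not the specification's sample implementation: the constant `0xEDB88320` is the polynomial above with its bits reversed, which is what processing each byte from least to most significant bit requires, and `png_crc` is an illustrative name.

```python
def png_crc(data):
    """Bitwise CRC-32 over data (chunk type followed by chunk data)."""
    crc = 0xFFFFFFFF                      # initialized to all 1's
    for byte in data:
        crc ^= byte
        for _ in range(8):                # least significant bit first
            crc = (crc >> 1) ^ 0xEDB88320 if crc & 1 else crc >> 1
    return crc ^ 0xFFFFFFFF               # ones complement of the result


# The four bytes stored in the datastream, MSB first.
iend_crc_bytes = png_crc(b"IEND").to_bytes(4, "big")
```

Since an IEND chunk has no data, its CRC is always the same; the value computed here should agree with `zlib.crc32(b"IEND")` from Python's standard library.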

The constraints on the positioning of the individual chunks

are listed in

Table 6 and illustrated diagrammatically

for static images in Figure 11 and Figure 12,

for animated images where the static image forms the first frame in

Figure 13 and

Figure 14, and

for animated images where the static image is not part of the animation in

Figure 15 and

Figure 16.

These lattice diagrams represent the constraints on positioning

imposed by this specification. The lines in the diagrams

define partial ordering relationships. Chunks higher up shall

appear before chunks lower down. Chunks which are horizontally

aligned and appear between two other chunk types (higher and

lower than the horizontally aligned chunks) may appear in any

order between the two higher and lower chunk types to which they

are connected. The superscript associated with the chunk type is

defined in

Table 7. It indicates whether the chunk is mandatory,

optional, or may appear more than once. A vertical bar between

two chunk types indicates alternatives.

| Chunk name | Multiple allowed | Ordering constraints |
|---|---|---|
| Critical chunks (shall appear in this order, except PLTE is optional) | | |
| IHDR | No | Shall be first |
| PLTE | No | Before first IDAT |
| IDAT | Yes | Multiple IDAT chunks shall be consecutive |
| IEND | No | Shall be last |
| Ancillary chunks (need not appear in this order) | | |
| acTL | No | Before PLTE and IDAT |
| cHRM | No | Before PLTE and IDAT |
| cICP | No | Before PLTE and IDAT |
| gAMA | No | Before PLTE and IDAT |
| iCCP | No | Before PLTE and IDAT. If the iCCP chunk is present, the sRGB chunk should not be present. |
| sBIT | No | Before PLTE and IDAT |
| sRGB | No | Before PLTE and IDAT. If the sRGB chunk is present, the iCCP chunk should not be present. |
| bKGD | No | After PLTE; before IDAT |
| hIST | No | After PLTE; before IDAT |
| tRNS | No | After PLTE; before IDAT |
| eXIf | No | Before IDAT |
| fcTL | Yes | One may occur before IDAT; all others shall be after IDAT |
| pHYs | No | Before IDAT |
| sPLT | Yes | Before IDAT |
| fdAT | Yes | After IDAT |
| tIME | No | None |
| iTXt | Yes | None |
| tEXt | Yes | None |
| zTXt | Yes | None |
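The critical-chunk rows of the table can be turned into a simple check. The sketch below is only an illustration: `check_critical_order` is a hypothetical helper, it takes a list of chunk type names already read from a datastream, and it covers only the critical-chunk rules, not the ancillary ones.

```python
def check_critical_order(chunk_types):
    """Return a list of critical-chunk ordering violations (empty if none)."""
    problems = []
    if not chunk_types or chunk_types[0] != "IHDR":
        problems.append("IHDR shall be first")
    if not chunk_types or chunk_types[-1] != "IEND":
        problems.append("IEND shall be last")
    for name in ("IHDR", "PLTE", "IEND"):
        if chunk_types.count(name) > 1:
            problems.append(f"{name} shall not appear more than once")
    idat = [i for i, t in enumerate(chunk_types) if t == "IDAT"]
    if not idat:
        problems.append("at least one IDAT is required")
    else:
        # Consecutive IDATs occupy a contiguous run of positions.
        if idat[-1] - idat[0] + 1 != len(idat):
            problems.append("multiple IDAT chunks shall be consecutive")
        if "PLTE" in chunk_types and chunk_types.index("PLTE") > idat[0]:
            problems.append("PLTE shall precede the first IDAT")
    return problems
```

For example, `["IHDR", "IDAT", "tEXt", "IDAT", "IEND"]` is rejected because a tEXt chunk sits between two IDAT chunks.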

| Symbol | Meaning |
|---|---|
| + | One or more |
| 1 | Only one |
| ? | Zero or one |
| * | Zero or more |
| \| | Alternative |

[Figures 11 to 16: lattice diagrams of chunk ordering, in pairs covering datastreams with and without PLTE, for static images, for animated images where the static image forms the first frame, and for animated images where the static image is not part of the animation.]

All chunks, private and public, SHOULD be listed at [PNG-EXTENSIONS].

Public chunks are reserved for definition by the W3C.

Public chunks are intended for broad use consistent with the philosophy of PNG.

Organizations and applications are encouraged to submit any chunk that meets

the criteria above for definition as a public chunk by the PNG Working Group.

The definition as a public chunk is neither automatic nor immediate. A

proposed public chunk type SHALL NOT be used in publicly available software or

datastreams until defined as such.

The definition of new critical chunk types is discouraged unless

necessary.

Organizations and applications MAY define private chunks for private and

experimental use.

A private chunk SHOULD NOT be defined merely to carry textual information

of interest to a human user. Instead, an iTXt chunk SHOULD be used,

with a corresponding keyword or a suitably defined new keyword.

Listing private chunks at [PNG-EXTENSIONS] reduces, but does not eliminate,

the chance that the same private chunk is used for incompatible purposes by

different applications. If a private chunk type is used, additional

identifying information SHOULD be stored at the beginning of the chunk

data to further reduce the risk of conflicts.

An ancillary chunk type, not a critical chunk type, SHOULD be used for

all private chunks that store information that is not absolutely essential

to view the image.

Private critical chunks SHOULD NOT be defined because PNG datastreams

containing such chunks are not portable, and SHOULD NOT be used in publicly

available software or datastreams. If a private critical chunk is essential

for an application, it SHOULD appear near the start of the datastream, so

that a standard decoder need not read very far before discovering that it

cannot handle the datastream.

See B. Guidelines for private chunk types for additional guidelines on defining

private chunks.
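Whether a chunk is critical or ancillary, and public or private, is encoded in the case of the letters of its four-byte type. This comes from the chunk naming conventions elsewhere in the PNG specification, not from the passage above: bit 5 (value 32) of the first byte is set for ancillary chunks, and bit 5 of the second byte is set for private chunks. A minimal sketch follows, with `chunk_properties` as an illustrative name and `prVt` as a made-up private chunk type.

```python
def chunk_properties(chunk_type: str) -> dict:
    """Classify a four-letter chunk type by its property bits."""
    if len(chunk_type) != 4 or not (chunk_type.isascii() and chunk_type.isalpha()):
        raise ValueError("chunk type must be four ASCII letters")
    raw = chunk_type.encode("ascii")
    return {
        "ancillary": bool(raw[0] & 0x20),  # lowercase first letter
        "private": bool(raw[1] & 0x20),    # lowercase second letter
    }
```

A decoder can use the first property to decide whether an unrecognised chunk may be skipped safely (ancillary) or makes the datastream undecodable (critical).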

Values greater than or equal to 128 in the following fields are

private field values:

- compression method

- interlace method

- filter method

These private field values are neither defined nor reserved by this

specification.

Private field values MAY be used for experimental or private

semantics.

Private field values SHOULD NOT appear in publicly available

software or datastreams since they can result in datastreams that are

unreadable by PNG decoders as detailed at 13. PNG decoders and viewers.

As explained in 4.5 PNG image there are five types of PNG

image. Corresponding to each type is a colour type, which is the

sum of the following values: 1 (palette used), 2 (truecolour

used) and 4 (alpha used). Greyscale and truecolour images may

have an explicit alpha channel. The PNG image types and

corresponding colour types are listed in Table 8.

| PNG image type | Colour type |

|---|---|

| Greyscale | 0 |

| Truecolour | 2 |

| Indexed-colour | 3 |

| Greyscale with alpha | 4 |

| Truecolour with alpha | 6 |
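Because the colour type is a sum of the flag values 1, 2 and 4, it can be decoded with bit tests, although only the five combinations in Table 8 are valid. The following sketch assumes nothing beyond the table. `VALID_COLOUR_TYPES` and `describe_colour_type` are illustrative names.

```python
VALID_COLOUR_TYPES = {
    0: "Greyscale",
    2: "Truecolour",
    3: "Indexed-colour",
    4: "Greyscale with alpha",
    6: "Truecolour with alpha",
}


def describe_colour_type(colour_type: int) -> dict:
    """Split a colour type into its flags; reject sums not in Table 8."""
    if colour_type not in VALID_COLOUR_TYPES:
        raise ValueError(f"invalid colour type: {colour_type}")
    return {
        "name": VALID_COLOUR_TYPES[colour_type],
        "palette": bool(colour_type & 1),
        "truecolour": bool(colour_type & 2),
        "alpha": bool(colour_type & 4),
    }
```

Note that indexed-colour (3) sets both the palette and truecolour flags, while sums such as 1, 5 or 7 are rejected even though they are combinations of the same flags.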

The allowed bit depths and sample depths for each PNG image

type are listed in Image

header.

Greyscale samples represent luminance if the transfer curve is indicated (by gAMA, sRGB, or iCCP) or device-dependent greyscale if not.

RGB samples represent calibrated colour information if the colour space is indicated (by gAMA and cHRM, or sRGB, or iCCP), or uncalibrated device-dependent colour if not.

Sample values are not necessarily proportional to light

intensity; the

gAMA chunk specifies the relationship between

sample values and display output intensity. Viewers are strongly

encouraged to compensate properly. See 4.3 Colour spaces, 13.13 Decoder gamma

handling and C. Gamma and chromaticity.

In a PNG datastream transparency may be represented in one of

four ways, depending on the PNG image type (see alpha separation

and alpha compaction).

- Truecolour with alpha, greyscale with alpha: an alpha channel is part of the image array.

- Truecolour, greyscale: a tRNS chunk contains a single pixel value distinguishing the fully transparent pixels from the fully opaque pixels.

- Indexed-colour: a tRNS chunk contains the alpha table that associates an alpha sample with each palette entry.

- Truecolour, greyscale, indexed-colour: there is no tRNS chunk present and all pixels are fully opaque.

An alpha channel included in the image array has 8-bit or

16-bit samples, the same size as the other samples. The alpha

sample for each pixel is stored immediately following the

greyscale or RGB samples of the pixel. An alpha value of zero

represents full transparency, and a value of

$2^{\text{sampledepth}} - 1$ represents full opacity. Intermediate

values indicate partially transparent pixels that can be

composited against a background image to yield the delivered

image.

The colour values in a pixel are not premultiplied by the

alpha value assigned to the pixel. This rule is sometimes called

«unassociated» or «non-premultiplied» alpha. (Another common

technique is to store sample values premultiplied by the alpha

value; in effect, such an image is already composited against a

black background. PNG does not use premultiplied alpha.

In consequence an image editor can take a PNG image and easily

change its transparency.) See 12.3 Alpha channel creation and 13.16 Alpha channel processing.
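With non-premultiplied alpha, the foreground sample is weighted by alpha only at compositing time. The sketch below shows that arithmetic for a single sample using rounded integer division. It assumes the samples are already linear in light intensity and ignores gamma handling, which a real viewer has to deal with. `composite_sample` is an illustrative name.

```python
def composite_sample(fg: int, alpha: int, bg: int, sample_depth: int = 8) -> int:
    """Blend one non-premultiplied foreground sample over a background sample."""
    max_value = (1 << sample_depth) - 1  # 2**sampledepth - 1 means fully opaque
    if not (0 <= fg <= max_value and 0 <= alpha <= max_value and 0 <= bg <= max_value):
        raise ValueError("sample out of range for this sample depth")
    # Weighted average of foreground and background, rounded to nearest.
    return (fg * alpha + bg * (max_value - alpha) + max_value // 2) // max_value
```

An alpha of 0 returns the background unchanged and an alpha of `max_value` returns the foreground unchanged. Because the stored colour is never scaled by alpha, changing a pixel's transparency only requires rewriting its alpha sample.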

All integers that require more than one byte shall be in

network byte order (as illustrated in Figure 17): the most significant byte

comes first, then the less significant bytes in descending order

of significance (MSB LSB for two-byte integers, MSB B2 B1 LSB for

four-byte integers). The highest bit (value 128) of a byte is

numbered bit 7; the lowest bit (value 1) is numbered bit 0.

Values are unsigned unless otherwise noted. Values explicitly

noted as signed are represented in two’s complement notation.

PNG four-byte unsigned integers are limited to the range 0 to

$2^{31}-1$ to accommodate languages that have difficulty

with unsigned four-byte values.
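In Python, network byte order corresponds to the `>` prefix in `struct` format strings. The following sketch reads a four-byte unsigned integer and enforces the $2^{31}-1$ limit. `read_png_uint32` and `PNG_UINT31_MAX` are illustrative names, not part of any library.

```python
import struct

PNG_UINT31_MAX = 2**31 - 1


def read_png_uint32(buf: bytes, offset: int = 0) -> int:
    """Read a big-endian four-byte unsigned integer and apply the PNG range limit."""
    (value,) = struct.unpack_from(">I", buf, offset)  # '>' = most significant byte first
    if value > PNG_UINT31_MAX:
        raise ValueError(f"value {value} exceeds the PNG limit of 2**31 - 1")
    return value
```

For instance, the bytes `00 00 00 0D` decode to 13, which is the length field of every IHDR chunk.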

A PNG image (or pass, see 8. Interlacing and pass

extraction) is a rectangular pixel array, with pixels

appearing left-to-right within each scanline, and scanlines

appearing top-to-bottom. The size of each pixel is determined by

the number of bits per pixel.

Pixels within a scanline are always packed into a sequence of

bytes with no wasted bits between pixels. Scanlines always begin

on byte boundaries. Permitted bit depths and colour types are

restricted so that in all cases the packing is simple and

efficient.

In PNG images of colour type 0 (greyscale) each pixel is a single sample, which may have precision less than a byte (1, 2, or 4 bits). These samples are packed into bytes with the leftmost sample in the high-order bits of a byte followed by the other samples for the scanline.

In PNG images of colour type 3 (indexed-colour) each pixel is a single palette index. These indices are packed into bytes in the same way as the samples for colour type 0.

When there are multiple pixels per byte, some low-order bits

of the last byte of a scanline may go unused. The contents of

these unused bits are not specified.
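The packing rule for samples narrower than a byte can be sketched as follows. The function walks a single unfiltered scanline and extracts `width` samples, taking the leftmost sample from the high-order bits of each byte and ignoring any unused low-order bits in the last byte. `unpack_samples` is an illustrative name.

```python
def unpack_samples(scanline: bytes, bit_depth: int, width: int) -> list:
    """Extract `width` samples of 1, 2 or 4 bits from one packed scanline."""
    if bit_depth not in (1, 2, 4):
        raise ValueError("this sketch only handles sub-byte bit depths")
    if len(scanline) < (width * bit_depth + 7) // 8:
        raise ValueError("scanline is too short for the given width")
    mask = (1 << bit_depth) - 1
    per_byte = 8 // bit_depth
    samples = []
    for i in range(width):
        byte = scanline[i // per_byte]
        # The first sample in a byte sits in the highest bits.
        shift = 8 - bit_depth * (i % per_byte + 1)
        samples.append((byte >> shift) & mask)
    return samples
```

The same routine applies to palette indices in colour type 3, since they are packed in the same way as greyscale samples.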

PNG images that are not indexed-colour images may have sample

values with a bit depth of 16. Such sample values are in network

byte order (MSB first, LSB second). PNG permits multi-sample

pixels only with 8 and 16-bit samples, so multiple samples of a

single pixel are never packed into one byte.

A filter method is a transformation applied to an

array of scanlines with the aim of improving their compressibility.

PNG standardizes one filter method and several filter types

that may be used to prepare image data for compression. It

transforms the byte sequence into an equal length

sequence of bytes preceded by a filter type byte (see Figure 18 for an

example).

The encoder shall use only a single filter method for

an interlaced PNG image, but may use different filter types for

each scanline in a reduced image. An intelligent encoder can

switch filters from one scanline to the next. The method for

choosing which filter to employ is left to the encoder.

The filter type byte is not considered part of

the image data, but it is included in the datastream sent to the

compression step. See 9. Filtering.
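To make the layout concrete, the sketch below splits decompressed data for a non-interlaced image (or a single reduced image) into pairs of filter type byte and filtered scanline bytes. It does not undo the filtering itself. `split_filtered_scanlines` is an illustrative name, and `bits_per_pixel` is assumed to have been derived from the colour type and bit depth.

```python
def split_filtered_scanlines(data: bytes, width: int, height: int, bits_per_pixel: int) -> list:
    """Return one (filter_type, filtered_bytes) pair per scanline."""
    stride = (width * bits_per_pixel + 7) // 8  # scanlines begin on byte boundaries
    row_length = stride + 1                     # one filter type byte precedes each scanline
    if len(data) != row_length * height:
        raise ValueError("data length does not match the image dimensions")
    rows = []
    for y in range(height):
        start = y * row_length
        rows.append((data[start], data[start + 1 : start + row_length]))
    return rows
```

Each scanline carries its own filter type byte, which is what allows an encoder to switch filter types from one scanline to the next.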

Pass extraction (see

figure 4.8) splits a PNG image into a

sequence of reduced images (the interlaced PNG image) where the

first image defines a coarse view and subsequent images enhance

this coarse view until the last image completes the PNG image.