When ILM executes a lifecycle policy, it’s possible for errors to occur

while performing the necessary index operations for a step.

When this happens, ILM moves the index to an ERROR step.

If ILM cannot resolve the error automatically, execution is halted

until you resolve the underlying issues with the policy, index, or cluster.

For example, you might have a shrink-index policy that shrinks an index to four shards once it

is at least five days old:

PUT _ilm/policy/shrink-index

{

"policy": {

"phases": {

"warm": {

"min_age": "5d",

"actions": {

"shrink": {

"number_of_shards": 4

}

}

}

}

}

}

There is nothing that prevents you from applying the shrink-index policy to a new

index that has only two shards:

PUT /my-index-000001

{

"settings": {

"index.number_of_shards": 2,

"index.lifecycle.name": "shrink-index"

}

}

After five days, ILM attempts to shrink my-index-000001 from two shards to four shards.

Because the shrink action cannot increase the number of shards, this operation fails

and ILM moves my-index-000001 to the ERROR step.

You can use the ILM Explain API to get information about

what went wrong:

GET /my-index-000001/_ilm/explain

Which returns the following information:

{

"indices" : {

"my-index-000001" : {

"index" : "my-index-000001",

"managed" : true,

"index_creation_date_millis" : 1541717265865,

"time_since_index_creation": "5.1d",

"policy" : "shrink-index",

"lifecycle_date_millis" : 1541717265865,

"age": "5.1d",

"phase" : "warm",

"phase_time_millis" : 1541717272601,

"action" : "shrink",

"action_time_millis" : 1541717272601,

"step" : "ERROR",

"step_time_millis" : 1541717272688,

"failed_step" : "shrink",

"step_info" : {

"type" : "illegal_argument_exception",

"reason" : "the number of target shards [4] must be less that the number of source shards [2]"

},

"phase_execution" : {

"policy" : "shrink-index",

"phase_definition" : {

"min_age" : "5d",

"actions" : {

"shrink" : {

"number_of_shards" : 4

}

}

},

"version" : 1,

"modified_date_in_millis" : 1541717264230

}

}

}

}

|

The policy being used to manage the index: |

|

|

The index age: 5.1 days |

|

|

The phase the index is currently in: |

|

|

The current action: |

|

|

The step the index is currently in: |

|

|

The step that failed to execute: |

|

|

The type of error and a description of that error. |

|

|

The definition of the current phase from the |

To resolve this, you could update the policy to shrink the index to a single shard after 5 days:

PUT _ilm/policy/shrink-index

{

"policy": {

"phases": {

"warm": {

"min_age": "5d",

"actions": {

"shrink": {

"number_of_shards": 1

}

}

}

}

}

}

Retrying failed lifecycle policy stepsedit

Once you fix the problem that put an index in the ERROR step,

you might need to explicitly tell ILM to retry the step:

POST /my-index-000001/_ilm/retry

ILM subsequently attempts to re-run the step that failed.

You can use the ILM Explain API to monitor the progress.

Common ILM errorsedit

Here’s how to resolve the most common errors reported in the ERROR step.

Problems with rollover aliases are a common cause of errors.

Consider using data streams instead of managing rollover with aliases.

Rollover alias [x] can point to multiple indices, found duplicated alias [x] in index template [z]edit

The target rollover alias is specified in an index template’s index.lifecycle.rollover_alias setting.

You need to explicitly configure this alias one time when you

bootstrap the initial index.

The rollover action then manages setting and updating the alias to

roll over to each subsequent index.

Do not explicitly configure this same alias in the aliases section of an index template.

index.lifecycle.rollover_alias [x] does not point to index [y]edit

Either the index is using the wrong alias or the alias does not exist.

Check the index.lifecycle.rollover_alias index setting.

To see what aliases are configured, use _cat/aliases.

Setting [index.lifecycle.rollover_alias] for index [y] is empty or not definededit

The index.lifecycle.rollover_alias setting must be configured for the rollover action to work.

Update the index settings to set index.lifecycle.rollover_alias.

Alias [x] has more than one write index [y,z]edit

Only one index can be designated as the write index for a particular alias.

Use the aliases API to set is_write_index:false for all but one index.

index name [x] does not match pattern ^.*-d+edit

The index name must match the regex pattern ^.*-d+ for the rollover action to work.

The most common problem is that the index name does not contain trailing digits.

For example, my-index does not match the pattern requirement.

Append a numeric value to the index name, for example my-index-000001.

CircuitBreakingException: [x] data too large, data for [y]edit

This indicates that the cluster is hitting resource limits.

Before continuing to set up ILM, you’ll need to take steps to alleviate the resource issues.

For more information, see Circuit breaker errors.

High disk watermark [x] exceeded on [y]edit

This indicates that the cluster is running out of disk space.

This can happen when you don’t have index lifecycle management set up to roll over from hot to warm nodes.

Consider adding nodes, upgrading your hardware, or deleting unneeded indices.

security_exception: action [<action-name>] is unauthorized for user [<user-name>] with roles [<role-name>], this action is granted by the index privileges [manage_follow_index,manage,all]edit

This indicates the ILM action cannot be executed because the user used by ILM to perform the action doesn’t have the proper privileges. This can happen when user’s privileges has been dropped after updating the ILM policy.

ILM actions are run as though they were performed by the last user who modify the policy. The account used to create or modify the policy from should have permissions to perform all operations that are part of that policy.

When {ilm-init} executes a lifecycle policy, it’s possible for errors to occur

while performing the necessary index operations for a step.

When this happens, {ilm-init} moves the index to an ERROR step.

If {ilm-init} cannot resolve the error automatically, execution is halted

until you resolve the underlying issues with the policy, index, or cluster.

For example, you might have a shrink-index policy that shrinks an index to four shards once it

is at least five days old:

PUT _ilm/policy/shrink-index { "policy": { "phases": { "warm": { "min_age": "5d", "actions": { "shrink": { "number_of_shards": 4 } } } } } }

There is nothing that prevents you from applying the shrink-index policy to a new

index that has only two shards:

PUT /my-index-000001 { "settings": { "index.number_of_shards": 2, "index.lifecycle.name": "shrink-index" } }

After five days, {ilm-init} attempts to shrink my-index-000001 from two shards to four shards.

Because the shrink action cannot increase the number of shards, this operation fails

and {ilm-init} moves my-index-000001 to the ERROR step.

You can use the {ilm-init} Explain API to get information about

what went wrong:

GET /my-index-000001/_ilm/explain

Which returns the following information:

{

"indices" : {

"my-index-000001" : {

"index" : "my-index-000001",

"managed" : true,

"index_creation_date_millis" : 1541717265865,

"time_since_index_creation": "5.1d",

"policy" : "shrink-index", (1)

"lifecycle_date_millis" : 1541717265865,

"age": "5.1d", (2)

"phase" : "warm", (3)

"phase_time_millis" : 1541717272601,

"action" : "shrink", (4)

"action_time_millis" : 1541717272601,

"step" : "ERROR", (5)

"step_time_millis" : 1541717272688,

"failed_step" : "shrink", (6)

"step_info" : {

"type" : "illegal_argument_exception", (7)

"reason" : "the number of target shards [4] must be less that the number of source shards [2]"

},

"phase_execution" : {

"policy" : "shrink-index",

"phase_definition" : { (8)

"min_age" : "5d",

"actions" : {

"shrink" : {

"number_of_shards" : 4

}

}

},

"version" : 1,

"modified_date_in_millis" : 1541717264230

}

}

}

}-

The policy being used to manage the index:

shrink-index -

The index age: 5.1 days

-

The phase the index is currently in:

warm -

The current action:

shrink -

The step the index is currently in:

ERROR -

The step that failed to execute:

shrink -

The type of error and a description of that error.

-

The definition of the current phase from the

shrink-indexpolicy

To resolve this, you could update the policy to shrink the index to a single shard after 5 days:

PUT _ilm/policy/shrink-index { "policy": { "phases": { "warm": { "min_age": "5d", "actions": { "shrink": { "number_of_shards": 1 } } } } } }

Retrying failed lifecycle policy steps

Once you fix the problem that put an index in the ERROR step,

you might need to explicitly tell {ilm-init} to retry the step:

POST /my-index-000001/_ilm/retry

{ilm-init} subsequently attempts to re-run the step that failed.

You can use the {ilm-init} Explain API to monitor the progress.

Common {ilm-init} errors

Here’s how to resolve the most common errors reported in the ERROR step.

|

Tip |

Problems with rollover aliases are a common cause of errors. Consider using data streams instead of managing rollover with aliases. |

Rollover alias [x] can point to multiple indices, found duplicated alias [x] in index template [z]

The target rollover alias is specified in an index template’s index.lifecycle.rollover_alias setting.

You need to explicitly configure this alias one time when you

bootstrap the initial index.

The rollover action then manages setting and updating the alias to

roll over to each subsequent index.

Do not explicitly configure this same alias in the aliases section of an index template.

index.lifecycle.rollover_alias [x] does not point to index [y]

Either the index is using the wrong alias or the alias does not exist.

Check the index.lifecycle.rollover_alias index setting.

To see what aliases are configured, use _cat/aliases.

Setting [index.lifecycle.rollover_alias] for index [y] is empty or not defined

The index.lifecycle.rollover_alias setting must be configured for the rollover action to work.

Update the index settings to set index.lifecycle.rollover_alias.

Alias [x] has more than one write index [y,z]

Only one index can be designated as the write index for a particular alias.

Use the aliases API to set is_write_index:false for all but one index.

index name [x] does not match pattern ^.*-d+

The index name must match the regex pattern ^.*-d+ for the rollover action to work.

The most common problem is that the index name does not contain trailing digits.

For example, my-index does not match the pattern requirement.

Append a numeric value to the index name, for example my-index-000001.

CircuitBreakingException: [x] data too large, data for [y]

This indicates that the cluster is hitting resource limits.

Before continuing to set up {ilm-init}, you’ll need to take steps to alleviate the resource issues.

For more information, see [circuit-breaker-errors].

High disk watermark [x] exceeded on [y]

This indicates that the cluster is running out of disk space.

This can happen when you don’t have {ilm} set up to roll over from hot to warm nodes.

Consider adding nodes, upgrading your hardware, or deleting unneeded indices.

security_exception: action [<action-name>] is unauthorized for user [<user-name>] with roles [<role-name>], this action is granted by the index privileges [manage_follow_index,manage,all]

This indicates the ILM action cannot be executed because the user used by ILM to perform the action doesn’t have the proper privileges. This can happen when user’s privileges has been dropped after updating the ILM policy.

ILM actions are run as though they were performed by the last user who modify the policy. The account used to create or modify the policy from should have permissions to perform all operations that are part of that policy.

Содержание

- Illegal argument exception elasticsearch

- Retrying failed lifecycle policy stepsedit

- Common ILM errorsedit

- Rollover alias [x] can point to multiple indices, found duplicated alias [x] in index template [z]edit

- index.lifecycle.rollover_alias [x] does not point to index [y]edit

- Setting [index.lifecycle.rollover_alias] for index [y] is empty or not definededit

- Alias [x] has more than one write index [y,z]edit

- index name [x] does not match pattern ^.*-d+edit

- CircuitBreakingException: [x] data too large, data for [y]edit

- High disk watermark [x] exceeded on [y]edit

- security_exception: action [] is unauthorized for user [ ] with roles [ ], this action is granted by the index privileges [manage_follow_index,manage,all]edit

- Fixing IllegalArgumentException in Elasticsearch

- What is Elasticsearch?

- Error found in the logs

- Index with the wrong mapping

- How to fix the error

- Conclusion

- ILM reports error illegal_argument_exception #45453

- Comments

- illegal_argument_exception when using scripted fields. #20885

- Comments

- Hands-on-Exercises: Mapping Exceptions with Elasticsearch

- The Process

- The Result

- The Challenges

- Hands-on Exercises

- Field datatypes – mapper_parsing_exception

- Limits – illegal_argument_exception

Illegal argument exception elasticsearch

When ILM executes a lifecycle policy, it’s possible for errors to occur while performing the necessary index operations for a step. When this happens, ILM moves the index to an ERROR step. If ILM cannot resolve the error automatically, execution is halted until you resolve the underlying issues with the policy, index, or cluster.

For example, you might have a shrink-index policy that shrinks an index to four shards once it is at least five days old:

There is nothing that prevents you from applying the shrink-index policy to a new index that has only two shards:

After five days, ILM attempts to shrink my-index-000001 from two shards to four shards. Because the shrink action cannot increase the number of shards, this operation fails and ILM moves my-index-000001 to the ERROR step.

You can use the ILM Explain API to get information about what went wrong:

Which returns the following information:

The policy being used to manage the index: shrink-index

The index age: 5.1 days

The phase the index is currently in: warm

The current action: shrink

The step the index is currently in: ERROR

The step that failed to execute: shrink

The type of error and a description of that error.

The definition of the current phase from the shrink-index policy

To resolve this, you could update the policy to shrink the index to a single shard after 5 days:

Retrying failed lifecycle policy stepsedit

Once you fix the problem that put an index in the ERROR step, you might need to explicitly tell ILM to retry the step:

ILM subsequently attempts to re-run the step that failed. You can use the ILM Explain API to monitor the progress.

Common ILM errorsedit

Here’s how to resolve the most common errors reported in the ERROR step.

Problems with rollover aliases are a common cause of errors. Consider using data streams instead of managing rollover with aliases.

Rollover alias [x] can point to multiple indices, found duplicated alias [x] in index template [z]edit

The target rollover alias is specified in an index template’s index.lifecycle.rollover_alias setting. You need to explicitly configure this alias one time when you bootstrap the initial index. The rollover action then manages setting and updating the alias to roll over to each subsequent index.

Do not explicitly configure this same alias in the aliases section of an index template.

index.lifecycle.rollover_alias [x] does not point to index [y]edit

Either the index is using the wrong alias or the alias does not exist.

Check the index.lifecycle.rollover_alias index setting. To see what aliases are configured, use _cat/aliases.

Setting [index.lifecycle.rollover_alias] for index [y] is empty or not definededit

The index.lifecycle.rollover_alias setting must be configured for the rollover action to work.

Update the index settings to set index.lifecycle.rollover_alias .

Alias [x] has more than one write index [y,z]edit

Only one index can be designated as the write index for a particular alias.

Use the aliases API to set is_write_index:false for all but one index.

index name [x] does not match pattern ^.*-d+edit

The index name must match the regex pattern ^.*-d+ for the rollover action to work. The most common problem is that the index name does not contain trailing digits. For example, my-index does not match the pattern requirement.

Append a numeric value to the index name, for example my-index-000001 .

CircuitBreakingException: [x] data too large, data for [y]edit

This indicates that the cluster is hitting resource limits.

Before continuing to set up ILM, you’ll need to take steps to alleviate the resource issues. For more information, see Circuit breaker errors.

High disk watermark [x] exceeded on [y]edit

This indicates that the cluster is running out of disk space. This can happen when you don’t have index lifecycle management set up to roll over from hot to warm nodes.

Consider adding nodes, upgrading your hardware, or deleting unneeded indices.

security_exception: action [] is unauthorized for user [ ] with roles [ ], this action is granted by the index privileges [manage_follow_index,manage,all]edit

This indicates the ILM action cannot be executed because the user used by ILM to perform the action doesn’t have the proper privileges. This can happen when user’s privileges has been dropped after updating the ILM policy. ILM actions are run as though they were performed by the last user who modify the policy. The account used to create or modify the policy from should have permissions to perform all operations that are part of that policy.

Источник

Fixing IllegalArgumentException in Elasticsearch

In the following post, we are going to see how to solve an error that I found in an application that is using Elasticsearch as storage.

What is Elasticsearch?

Elasticsearch is a non-relational database that is powerful for doing searches by text. The definition from wikipedia:

Elasticsearch is a search engine based on the Lucene library. It provides a distributed, multitenant-capable full-text search engine with an HTTP web interface and schema-free JSON documents

I have used it to store millions of documents for things like storing prices searched.

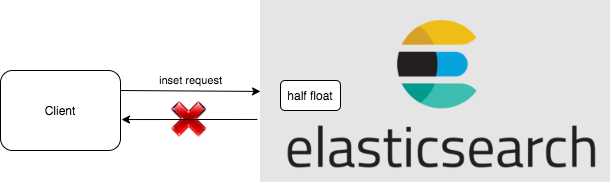

Error found in the logs

Some days ago I found the following error in the logs of the application that I was working on:

Caused by: ElasticsearchException[Elasticsearch exception [type=illegal_argument_exception, reason=[half_float] supports only finite values, but got [94433.46]]]

According to the elasticsearch documentation the maximum value that can have a half_float is 65500 , so if we try to insert a document with a value higher than the maximum allowed we will receive the previous error.

Index with the wrong mapping

Looking at the mappings of the existing index I could see that there were fields with this half_float

How to fix the error

We can update an index template of an existing index. With the execution of the following command, we can update the property amount setting his type to float instead of the half_float that was generating the error.

In our case, we don’t need to reindex the documents into a new index because we are only setting a type that is larger than the previous one. It would be different that we change i.e: from String to date, that would need reindexing. of the data from the old index to the new index.

Conclusion

In this post, we have seen how to fix a half_float problem that we had in our Elasticsearch index where we were storing prices that were higher than the half_float maximum value.

Источник

ILM reports error illegal_argument_exception #45453

Elasticsearch version : 7.2.0

Installed by eck-operator: 0.9.0-rc7

Plugins installed: as installed by eck-operator version 0.9.0-rc7

docker.elastic.co/elasticsearch/elasticsearch:7.2.0

JVM version ( java -version ): as used in elasticsearch docker image

OS version ( uname -a if on a Unix-like system): Linux sworkstation 4.15.0-55-generic

Description of the problem including expected versus actual behavior:

The following options are used for deploying metricbeat helm chart — overwritten values below.

ILM reports the following error:

illegal_argument_exception: index.lifecycle.rollover_alias [metricbeat] does not point to index [metricbeat-nfs-provisioner-2019.08.09]

Steps to reproduce:

install elasticsearch 7.2.0 using eck-operator at elasticsearch namespace.

Default install with 2 nodes.

install metricbeat using helm chart: stable/metricbeat with the following overwrite myvalues.yml:

helm install -f myvalues.yml —name metric —namespace elasticsearch stable/metricbeat

myvalues.yml — > rename txt to yml

myvalues.txt

- Wait 2-5min to see error on kibana. It is not seen immediately.

The text was updated successfully, but these errors were encountered:

This line right here is the problem:

That setting in Beats generally doesn’t play well with ILM — this is something that should probably be addressed in Beats. When Beats configures ILM, it does so based on the ILM configuration (see below). When the output index is manually set to something other than the alias that Beats set up, it will cause errors similar to this.

The proper way to change the Beats output when using ILM can be found in the Configure index lifecycle management page in the Beats documentation.

I’ve answered this here because this is something that looks on the surface like it could be a bug in our products, but for more help on this problem please post to the forums, where we typically provide configuration assistance and troubleshooting, as we try to keep GitHub issues reserved for bugs and feature requests.

Because this is not an Elasticsearch bug (although it is arguably a Beats usability bug), I hope you don’t mind that I close this issue.

Источник

illegal_argument_exception when using scripted fields. #20885

There is a bug in the Kibana Repo that looks like it might be exposing an ES bug. Could someone take a look? elastic/kibana#8404

Here is the relevant request/response that is triggering the error, copied from the above issue:

The text was updated successfully, but these errors were encountered:

@stacey-gammon In painless, params need to be referenced as params.value , not just value

Thanks for the update @clintongormley. To confirm, this is not the case with groovy as the selected language? That one appears to break when I use params.value, but not if I just use value.

That is correct. Groovy dumps all the params into local variables. Painless has them in the params var.

That is correct. Groovy dumps all the params into local variables. Painless has them in the params var.

I think this is a thing we’ll be able to improve on with #20621 but that isn’t ready. This level of clarity is about the best we’ll get with painless for the next while. Painless is marked experimental because we’re really trying to make improve on it constantly. It is the default because groovy security is a nightmare and we want off of groovy as fast as we can.

I think this is a thing we’ll be able to improve on with #20621

Yes and no. Params from the user (passed in through the runtime api) will stay in params. But things that each context provides (eg _source for scripted fields) will be accessible as a local variable again once we have contexts done.

Источник

Hands-on-Exercises: Mapping Exceptions with Elasticsearch

Mapping is an essential foundation of an index that can generally be considered the heart of Elasticsearch. So you can be sure of the importance of a well-managed mapping. But just as it is with many important things, sometimes mappings can go wrong. Let’s take a look at various issues that can arise with mappings and how to deal with them.

Before delving into the possible challenges with mappings, let’s quickly recap some key points about Mappings. A mapping essentially entails two parts:

- The Process: A process of defining how your JSON documents will be stored in an index

- The Result: The actual metadata structure resulting from the definition process

The Process

If we first consider the process aspect of the mapping definition, there are generally two ways this can happen:

- An explicit mapping process where you define what fields and their types you want to store along with any additional parameters.

- A dynamic mapping Elasticsearch automatically attempts to determine the appropropriate datatype and updates the mapping accordingly.

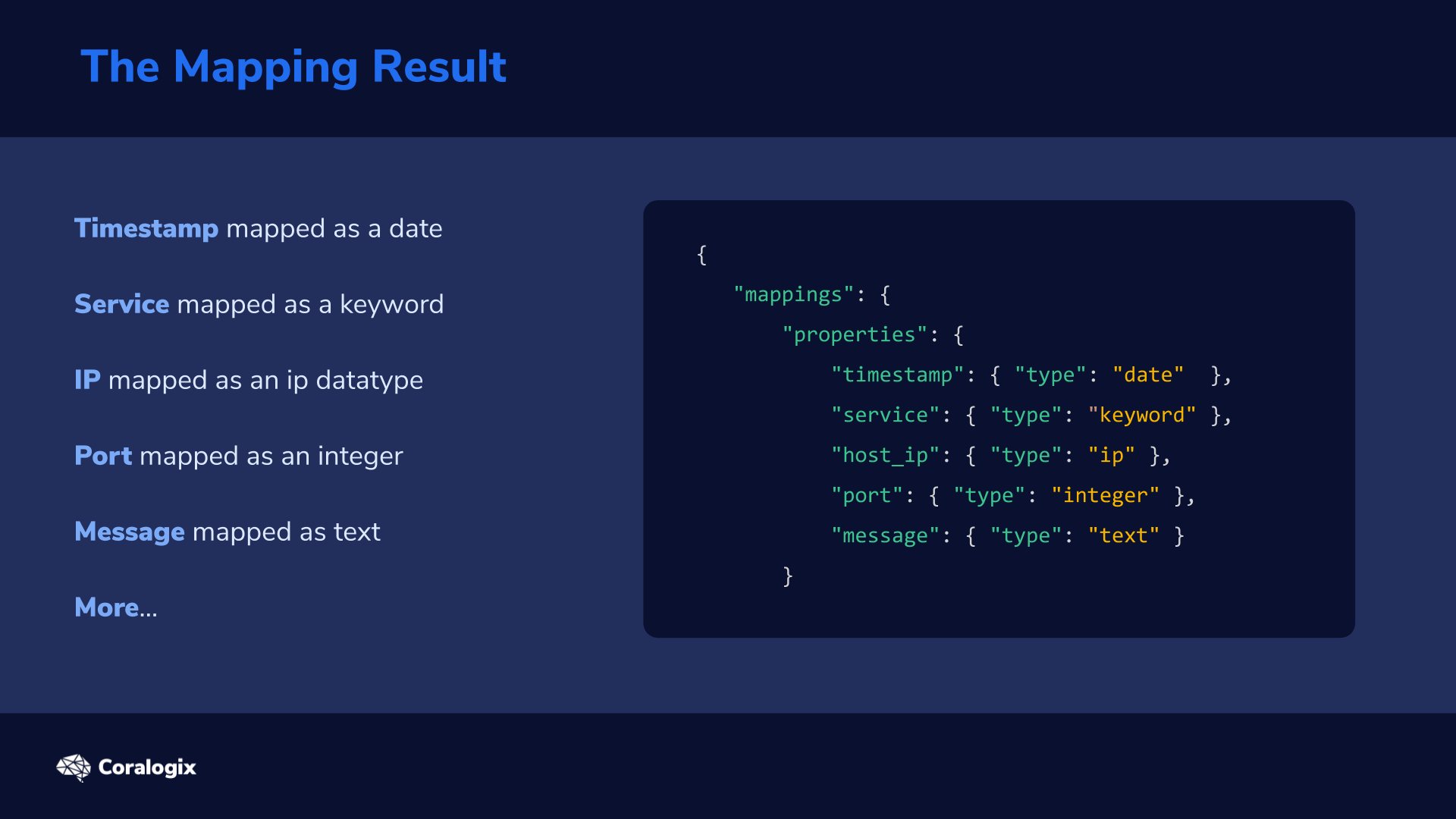

The Result

The result of the mapping process defines what we can “index” via individual fields and their datatypes, and also how the indexing happens via related parameters.

Consider this mapping example:

It’s a very simple mapping example for a basic logs collection microservice. The individual logs consist of the following fields and their associated datatypes:

- Timestamp of the log mapped as a date

- Service name which created the log mapped as a keyword

- IP of the host on which the log was produced mapped as an ip datatype

- Port number mapped as an integer

- The actual log Message mapped as text to enable full-text searching

- More… As we have not disabled the default dynamic mapping process so we’ll be able to see how we can introduce new fields arbitrarily and they will be added to the mapping automatically.

The Challenges

So what could go wrong :)?

There are generally two potential issues that many will end up facing with Mappings:

- If we create an explicit mapping and fields don’t match, we’ll get an exception if the mismatch falls beyond a certain “safety zone”. We’ll explain this in more detail later.

- If we keep the default dynamic mapping and then introduce many more fields, we’re in for a “mapping explosion” which can take our entire cluster down.

Let’s continue with some interesting hands-on examples where we’ll simulate the issues and attempt to resolve them.

Hands-on Exercises

Field datatypes – mapper_parsing_exception

Let’s get back to the “safety zone” we mentioned before when there’s a mapping mismatch.

We’ll create our index and see it in action. We are using the exact same mapping that we saw earlier:

A well-defined JSON log for our mapping would look something like this:

But what if another service tries to log its port as a string and not a numeric value? (notice the double quotation marks). Let’s give that try:

Great! It worked without throwing an exception. This is the “safety zone” I mentioned earlier.

But what if that service logged a string that has no relation to numeric values at all into the Port field, which we earlier defined as an Integer? Let’s see what happens:

We’re now entering the world of Elastisearch mapping exceptions! We received a code 400 and the mapper_parsing_exception that is informing us about our datatype issue. Specifically that it has failed to parse the provided value of “NONE” to the type integer.

So how we solve this kind of issue? Unfortunately, there isn’t a one-size-fits-all solution. In this specific case we can “partially” resolve the issue by defining an ignore_malformed mapping parameter.

Keep in mind that this parameter is non-dynamic so you either need to set it when creating your index or you need to: close the index → change the setting value → reopen the index. Something like this.

Now let’s try to index the same document:

Checking the document by its ID will show us that the port field was omitted for indexing. We can see it in the “ignored” section.

The reason this is only a “partial” solution is because this setting has its limits and they are quite considerable. Let’s reveal one in the next example.

A developer might decide that when a microservice receives some API request it should log the received JSON payload in the message field. We already mapped the message field as text and we still have the ignore_malformed parameter set. So what would happen? Let’s see:

We see our old friend, the mapper_parsing_exception! This is because ignore_malformed can’t handle JSON objects on the input. Which is a significant limitation to be aware of.

Now, when speaking of JSON objects be aware that all the mapping ideas remains valid for their nested parts as well. Continuing our scenario, after losing some logs to mapping exceptions, we decide it’s time to introduce a new payload field of the type object where we can store the JSON at will.

Remember we have dynamic mapping in place so you can index it without first creating its mapping:

All good. Now we can check the mapping and focus on the payload field.

It was mapped as an object with (sub)properties defining the nested fields. So apparently the dynamic mapping works! But there is a trap. The payloads (or generally any JSON object) in the world of many producers and consumers can consist of almost anything. So you know what will happen with different JSON payload which also consists of a payload.data.received field but with a different type of data:

…again we get the mapper_parsing_exception!

So what else can we do?

- Engineers on the team need to be made aware of these mapping mechanics. You can also eastablish shared guidelines for the log fields.

- Secondly you may consider what’s called a Dead Letter Queue pattern that would store the failed documents in a separate queue. This either needs to be handled on an application level or by employing Logstash DLQ which allows us to still process the failed documents.

Limits – illegal_argument_exception

Now the second area of caution in relation to mappings, are limits. Even from the super-simple examples with payloads you can see that the number of nested fields can start accumulating pretty quickly. Where does this road end? At the number 1000. Which is the default limit of the number of fields in a mapping.

Let’s simulate this exception in our safe playground environment before you’ll unwillingly meet it in your production environment.

We’ll start by creating a large dummy JSON document with 1001 fields, POST it and then see what happens.

To create the document, you can either use the example command below with jq tool (apt-get install jq if you don’t already have it) or create the JSON manually if you prefer:

We can now create a new plain index:

And if we then POST our generated JSON, can you guess what’ll happen?

… straight to the illegal_argument_exception exception! This informs us about the limit being exceeded.

So how do we handle that? First, you should definitely think about what you are storing in your indices and for what purpose. Secondly, if you still need to, you can increase this 1,000 limit. But be careful as with bigger complexity might come a much bigger price of potential performance degradations and high memory pressure (see the docs for more info).

Changing this limit can be performed with a simple dynamic setting change:

Now that you’re more aware of the dangers lurking with Mappings, you’re much better prepared for the production battlefield 🙂

Источник

In order to evaluate its potential to help on our daily operations, I have deployed Elastic Search and Kibana (7.7.1 with BASIC license) and created an index template for Ntopng (our monitoring platform).

Since indexes keep growing, I want to delete Ntopng indexes older than 20 days or so, therefore I have created a life cycle policy called ntopng where the time-stamped index should rollover after 1 day (for testing purposes) and then will be deleted after 2 days of the rollover:

Next I picked a time-stamped index created that day and applied the lifecycle policy to it:

Before that, I had to create an alias for that Index, so I did it manually:

POST /_aliases

{

"actions" : [

{ "add" : { "index" : "ntopng-2020.09.09", "alias" : "ntopng_Alias" } }

]

}

All looked good after that ( I guess) as no errors or alarms were displayed:

"indices" : {

"ntopng-2020.09.09" : {

"index" : "ntopng-2020.09.09",

"managed" : true,

"policy" : "ntopng",

"lifecycle_date_millis" : 1599609600433,

"age" : "20.14h",

"phase" : "hot",

"phase_time_millis" : 1599681721821,

"action" : "rollover",

"action_time_millis" : 1599680521920,

"step" : "check-rollover-ready",

"step_time_millis" : 1599681721821,

"is_auto_retryable_error" : true,

"failed_step_retry_count" : 1,

"phase_execution" : {

"policy" : "ntopng",

"phase_definition" : {

"min_age" : "0ms",

"actions" : {

"rollover" : {

"max_age" : "1d"

},

"set_priority" : {

"priority" : 100

}

}

},

"version" : 4,

"modified_date_in_millis" : 1599509572867

}

}

My expectation was that in the next day the policy would be automatically rolled over to the next index (ntopng-2020.10.10) so that the initial index would be eventually deleted the next two days.

Instead, I got the following errors:

GET ntopng-*/_ilm/explain

{

"indices" : {

"ntopng-2020.09.09" : {

"index" : "ntopng-2020.09.09",

"managed" : true,

"policy" : "ntopng",

"lifecycle_date_millis" : 1599609600433,

"age" : "1.94d",

"phase" : "hot",

"phase_time_millis" : 1599776521822,

"action" : "rollover",

"action_time_millis" : 1599680521920,

"step" : "ERROR",

"step_time_millis" : 1599777121822,

"failed_step" : "check-rollover-ready",

"is_auto_retryable_error" : true,

"failed_step_retry_count" : 80,

"step_info" : {

"type" : "illegal_argument_exception",

"reason" : """index name [ntopng-2020.09.09] does not match pattern '^.*-d+$'""",

"stack_trace" : """java.lang.IllegalArgumentException: index name [ntopng-2020.09.09] does not match pattern '^.*-d+$'

at org.elasticsearch.action.admin.indices.rollover.TransportRolloverAction.generateRolloverIndexName(TransportRolloverAction.java:241)

at org.elasticsearch.action.admin.indices.rollover.TransportRolloverAction.masterOperation(TransportRolloverAction.java:133)

at org.elasticsearch.action.admin.indices.rollover.TransportRolloverAction.masterOperation(TransportRolloverAction.java:73)

at org.elasticsearch.action.support.master.TransportMasterNodeAction$AsyncSingleAction.lambda$doStart$3(TransportMasterNodeAction.java:170)

at org.elasticsearch.action.ActionRunnable$2.doRun(ActionRunnable.java:73)

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37)

at org.elasticsearch.common.util.concurrent.EsExecutors$DirectExecutorService.execute(EsExecutors.java:225)

at org.elasticsearch.action.support.master.TransportMasterNodeAction$AsyncSingleAction.doStart(TransportMasterNodeAction.java:170)

at org.elasticsearch.action.support.master.TransportMasterNodeAction$AsyncSingleAction.start(TransportMasterNodeAction.java:133)

at org.elasticsearch.action.support.master.TransportMasterNodeAction.doExecute(TransportMasterNodeAction.java:110)

at org.elasticsearch.action.support.master.TransportMasterNodeAction.doExecute(TransportMasterNodeAction.java:59)

at org.elasticsearch.action.support.TransportAction$RequestFilterChain.proceed(TransportAction.java:153)

at org.elasticsearch.xpack.security.action.filter.SecurityActionFilter.apply(SecurityActionFilter.java:123)

at org.elasticsearch.action.support.TransportAction$RequestFilterChain.proceed(TransportAction.java:151)

at org.elasticsearch.action.support.TransportAction.execute(TransportAction.java:129)

at org.elasticsearch.action.support.TransportAction.execute(TransportAction.java:64)

at org.elasticsearch.client.node.NodeClient.executeLocally(NodeClient.java:83)

at org.elasticsearch.client.node.NodeClient.doExecute(NodeClient.java:72)

at org.elasticsearch.client.support.AbstractClient.execute(AbstractClient.java:399)

at org.elasticsearch.xpack.core.ClientHelper.executeAsyncWithOrigin(ClientHelper.java:92)

at org.elasticsearch.xpack.core.ClientHelper.executeWithHeadersAsync(ClientHelper.java:155)

at org.elasticsearch.xpack.ilm.LifecyclePolicySecurityClient.doExecute(LifecyclePolicySecurityClient.java:51)

at org.elasticsearch.client.support.AbstractClient.execute(AbstractClient.java:399)

at org.elasticsearch.client.support.AbstractClient$IndicesAdmin.execute(AbstractClient.java:1234)

at org.elasticsearch.client.support.AbstractClient$IndicesAdmin.rolloverIndex(AbstractClient.java:1736)

at org.elasticsearch.xpack.core.ilm.WaitForRolloverReadyStep.evaluateCondition(WaitForRolloverReadyStep.java:127)

at org.elasticsearch.xpack.ilm.IndexLifecycleRunner.runPeriodicStep(IndexLifecycleRunner.java:173)

at org.elasticsearch.xpack.ilm.IndexLifecycleService.triggerPolicies(IndexLifecycleService.java:329)

at org.elasticsearch.xpack.ilm.IndexLifecycleService.triggered(IndexLifecycleService.java:267)

at org.elasticsearch.xpack.core.scheduler.SchedulerEngine.notifyListeners(SchedulerEngine.java:183)

at org.elasticsearch.xpack.core.scheduler.SchedulerEngine$ActiveSchedule.run(SchedulerEngine.java:211)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:515)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

at java.base/java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:304)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1130)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:630)

at java.base/java.lang.Thread.run(Thread.java:832)

"""

},

"phase_execution" : {

"policy" : "ntopng",

"phase_definition" : {

"min_age" : "0ms",

"actions" : {

"rollover" : {

"max_age" : "1d"

},

"set_priority" : {

"priority" : 100

}

}

},

"version" : 4,

"modified_date_in_millis" : 1599509572867

}

}

"ntopng-2020.09.10" : {

"index" : "ntopng-2020.09.10",

"managed" : true,

"policy" : "ntopng",

"lifecycle_date_millis" : 1599696000991,

"age" : "22.57h",

"phase" : "hot",

"phase_time_millis" : 1599776521844,

"action" : "rollover",

"action_time_millis" : 1599696122033,

"step" : "ERROR",

"step_time_millis" : 1599777121839,

"failed_step" : "check-rollover-ready",

"is_auto_retryable_error" : true,

"failed_step_retry_count" : 67,

"step_info" : {

"type" : "illegal_argument_exception",

"reason" : "index.lifecycle.rollover_alias [ntopng_Alias] does not point to index [ntopng-2020.09.10]",

"stack_trace" : """java.lang.IllegalArgumentException: index.lifecycle.rollover_alias [ntopng_Alias] does not point to index [ntopng-2020.09.10]

at org.elasticsearch.xpack.core.ilm.WaitForRolloverReadyStep.evaluateCondition(WaitForRolloverReadyStep.java:104)

at org.elasticsearch.xpack.ilm.IndexLifecycleRunner.runPeriodicStep(IndexLifecycleRunner.java:173)

at org.elasticsearch.xpack.ilm.IndexLifecycleService.triggerPolicies(IndexLifecycleService.java:329)

at org.elasticsearch.xpack.ilm.IndexLifecycleService.triggered(IndexLifecycleService.java:267)

at org.elasticsearch.xpack.core.scheduler.SchedulerEngine.notifyListeners(SchedulerEngine.java:183)

at org.elasticsearch.xpack.core.scheduler.SchedulerEngine$ActiveSchedule.run(SchedulerEngine.java:211)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:515)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

at java.base/java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:304)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1130)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:630)

at java.base/java.lang.Thread.run(Thread.java:832)

"""

},

"phase_execution" : {

"policy" : "ntopng",

"phase_definition" : {

"min_age" : "0ms",

"actions" : {

"rollover" : {

"max_age" : "1d"

},

"set_priority" : {

"priority" : 100

}

}

}

The first index error reads «index name [ntopng-2020.09.09] does not match pattern ‘^.*-d+$»

while second one displays: «»index.lifecycle.rollover_alias [ntopng_Alias] does not point to index [ntopng-2020.09.10]»

Please note that I’m learning the basics on ES Index management, so I’d appreciate any clue on what the problem might be.

During Index Lifecycle Management’s execution of the policy for an index, it’s

possible for a step to encounter an error during its execution. When this

happens, ILM will move the management state into an «error» step. This halts

further execution of the policy and gives an administrator the chance to address

any issues with the policy, index, or cluster.

An example will be helpful in illustrating this, imagine the following policy

has been created by a user:

PUT _ilm/policy/shrink-the-index

{

"policy": {

"phases": {

"warm": {

"min_age": "5d",

"actions": {

"shrink": {

"number_of_shards": 4

}

}

}

}

}

}

This policy waits until the index is at least 5 days old, and then shrinks

the index to 4 shards.

Now imagine that a user creates a new index «myindex» with two primary shards,

telling it to use the policy they have created:

PUT /myindex

{

"settings": {

"index.number_of_shards": 2,

"index.lifecycle.name": "shrink-the-index"

}

}

After five days have passed, ILM will attempt to shrink this index from 2

shards to 4, which is invalid since the shrink action cannot increase the

number of shards. When this occurs, ILM will move this

index to the «error» step. Once an index is in this step, information about the

reason for the error can be retrieved from the ILM Explain API:

GET /myindex/_ilm/explain

Which returns the following information:

{

"indices" : {

"myindex" : {

"index" : "myindex",

"managed" : true,  "policy" : "shrink-the-index",

"policy" : "shrink-the-index",  "lifecycle_date_millis" : 1541717265865,

"phase" : "warm",

"lifecycle_date_millis" : 1541717265865,

"phase" : "warm",  "phase_time_millis" : 1541717272601,

"action" : "shrink",

"phase_time_millis" : 1541717272601,

"action" : "shrink",  "action_time_millis" : 1541717272601,

"step" : "ERROR",

"action_time_millis" : 1541717272601,

"step" : "ERROR",  "step_time_millis" : 1541717272688,

"failed_step" : "shrink",

"step_time_millis" : 1541717272688,

"failed_step" : "shrink",  "step_info" : {

"type" : "illegal_argument_exception",

"step_info" : {

"type" : "illegal_argument_exception",  "reason" : "the number of target shards [4] must be less that the number of source shards [2]"

"reason" : "the number of target shards [4] must be less that the number of source shards [2]"  },

"phase_execution" : {

"policy" : "shrink-the-index",

"phase_definition" : {

},

"phase_execution" : {

"policy" : "shrink-the-index",

"phase_definition" : {  "min_age" : "5d",

"actions" : {

"shrink" : {

"number_of_shards" : 4

}

}

},

"version" : 1,

"modified_date_in_millis" : 1541717264230

}

}

}

}

"min_age" : "5d",

"actions" : {

"shrink" : {

"number_of_shards" : 4

}

}

},

"version" : 1,

"modified_date_in_millis" : 1541717264230

}

}

}

}

|

|

this index is managed by ILM |

|

|

the policy in question, in this case, «shrink-the-index» |

|

|

what phase the index is currently in |

|

|

what action the index is currently on |

|

|

what step the index is currently on, in this case, because there is an error, the index is in the «ERROR» step |

|

|

the name of the step that failed to execute, in this case «shrink» |

|

|

the error class that occurred during this step |

|

|

the error message that occurred during the execution failure |

|

|

the definition of the phase (in this case, the «warm» phase) that the index is currently on |

The index here has been moved to the error step because the shrink definition in

the policy is using an incorrect number of shards. So rectifying that in the

policy entails updating the existing policy to use one instead of four for

the targeted number of shards.

PUT _ilm/policy/shrink-the-index

{

"policy": {

"phases": {

"warm": {

"min_age": "5d",

"actions": {

"shrink": {

"number_of_shards": 1

}

}

}

}

}

}

Retrying failed index lifecycle management steps

Once the underlying issue that caused an index to move to the error step has

been corrected, index lifecycle management must be told to retry the step to see

if it can progress further. This is accomplished by invoking the retry API

Once this has been issue, index lifecycle management will asynchronously pick up

on the step that is in a failed state, attempting to re-run it. The

ILM Explain API can again be used to monitor the status of

re-running the step.

>

Overview

In Elasticsearch, an index (plural: indices) contains a schema and can have one or more shards and replicas. An Elasticsearch index is divided into shards and each shard is an instance of a Lucene index.

Indices are used to store the documents in dedicated data structures corresponding to the data type of fields. For example, text fields are stored inside an inverted index whereas numeric and geo fields are stored inside BKD trees.

Examples

Create index

The following example is based on Elasticsearch version 5.x onwards. An index with two shards, each having one replica will be created with the name test_index1

PUT /test_index1?pretty

{

"settings" : {

"number_of_shards" : 2,

"number_of_replicas" : 1

},

"mappings" : {

"properties" : {

"tags" : { "type" : "keyword" },

"updated_at" : { "type" : "date" }

}

}

}

List indices

All the index names and their basic information can be retrieved using the following command:

GET _cat/indices?v

Index a document

Let’s add a document in the index with the command below:

PUT test_index1/_doc/1

{

"tags": [

"opster",

"elasticsearch"

],

"date": "01-01-2020"

}

Query an index

GET test_index1/_search

{

"query": {

"match_all": {}

}

}

Query multiple indices

It is possible to search multiple indices with a single request. If it is a raw HTTP request, index names should be sent in comma-separated format, as shown in the example below, and in the case of a query via a programming language client such as python or Java, index names are to be sent in a list format.

GET test_index1,test_index2/_search

Delete indices

DELETE test_index1

Common problems

- It is good practice to define the settings and mapping of an Index wherever possible because if this is not done, Elasticsearch tries to automatically guess the data type of fields at the time of indexing. This automatic process may have disadvantages, such as mapping conflicts, duplicate data and incorrect data types being set in the index. If the fields are not known in advance, it’s better to use dynamic index templates.

- Elasticsearch supports wildcard patterns in Index names, which sometimes aids with querying multiple indices, but can also be very destructive too. For example, It is possible to delete all the indices in a single command using the following commands:

DELETE /*

To disable this, you can add the following lines in the elasticsearch.yml:

action.destructive_requires_name: true

Elasticsearch Version

8.6.0

Installed Plugins

No response

Java Version

bundled

OS Version

Elasticsearch Service (ESS)

Problem Description

Users may accidentally configure an ILM policy with the downsample action where the ILM policy is attached to a regular index or a data stream (but not a time series data stream (TSDS)).

In this situation, the regular index or backing index will remain stuck in the downsample ILM action (even after updating the ILM policy to remove the downsample action).

Steps to Reproduce

-

Create a hot/warm deployment version 8.6.0 in ESS.

-

Create an ILM policy with a

downsampleaction:

PUT _ilm/policy/my-lifecycle-policy

{

"policy": {

"phases": {

"hot": {

"actions": {

"rollover": {

"max_docs": "2"

},

"downsample": {

"fixed_interval": "1m"

}

}

},

"warm": {

"min_age": "1m",

"actions": {

"allocate": {

"number_of_replicas": 0

}

}

},

"delete": {

"min_age": "2m",

"actions": {

"delete": {}

}

}

}

}

}

- Update the

indices.lifecycle.poll_intervalfor this current issue recreation:

PUT _cluster/settings

{

"persistent": {

"indices.lifecycle.poll_interval": "10s"

}

}

- Create a component template for mappings:

PUT _component_template/my-mappings

{

"template": {

"mappings": {

"properties": {

"@timestamp": {

"type": "date",

"format": "date_optional_time||epoch_millis"

},

"message": {

"type": "wildcard"

}

}

}

},

"_meta": {

"description": "Mappings for @timestamp and message fields",

"my-custom-meta-field": "More arbitrary metadata"

}

}

- Create a component template for index settings:

PUT _component_template/my-settings

{

"template": {

"settings": {

"index.lifecycle.name": "my-lifecycle-policy"

}

},

"_meta": {

"description": "Settings for ILM",

"my-custom-meta-field": "More arbitrary metadata"

}

}

- Create an index template:

PUT _index_template/my-index-template

{

"index_patterns": ["my-data-stream*"],

"data_stream": { },

"composed_of": [ "my-mappings", "my-settings" ],

"priority": 500,

"_meta": {

"description": "Template for my time series data",

"my-custom-meta-field": "More arbitrary metadata"

}

}

- Create the data stream:

PUT _data_stream/my-data-stream

- Add some documents:

POST my-data-stream/_doc

{

"@timestamp": "2023-01-22T00:00:01.000Z",

"message": "document 1"

}

POST my-data-stream/_doc

{

"@timestamp": "2023-01-22T00:00:02.000Z",

"message": "document 2"

}

POST my-data-stream/_doc

{

"@timestamp": "2023-01-22T00:00:03.000Z",

"message": "document 3"

}

- Verify that the documents are stored:

GET my-data-stream/_search

- Verify that a new backing index has been created (i.e

.ds-my-data-stream-2023.01.22-000002) (will be created after the next poll interval)

GET _cat/indices?expand_wildcards=all

- Check the ILM explain results of the first index (i.e

.ds-my-data-stream-2023.01.22-000001) and observe that the index is stuck in thedownsampleaction with the following exception:

Rollup requires setting [index.mode=time_series] for index [.ds-my-data-stream-2023.01.22-000001]

GET .ds-my-data-stream-2023.01.22-000001/_ilm/explain

{

"indices": {

".ds-my-data-stream-2023.01.22-000001": {

"index": ".ds-my-data-stream-2023.01.22-000001",

"managed": true,

"policy": "my-lifecycle-policy",

"index_creation_date_millis": 1674363304040,

"time_since_index_creation": "2.04m",

"lifecycle_date_millis": 1674363319364,

"age": "1.78m",

"phase": "hot",

"phase_time_millis": 1674363419410,

"action": "downsample",

"action_time_millis": 1674363319564,

"step": "ERROR",

"step_time_millis": 1674363419410,

"failed_step": "rollup",

"is_auto_retryable_error": true,

"failed_step_retry_count": 9,

"step_info": {

"type": "exception",

"reason": "Rollup requires setting [index.mode=time_series] for index [.ds-my-data-stream-2023.01.22-000001]"

},

"phase_execution": {

"policy": "my-lifecycle-policy",

"phase_definition": {

"min_age": "0ms",

"actions": {

"downsample": {

"fixed_interval": "1m"

},

"rollover": {

"max_docs": 2

}

}

},

"version": 1,

"modified_date_in_millis": 1674363301001

}

}

}

}

- Update the ILM policy to remove the

downsampleaction:

PUT _ilm/policy/my-lifecycle-policy

{

"policy": {

"phases": {

"hot": {

"actions": {

"rollover": {

"max_docs": "2"

}

}

},

"warm": {

"min_age": "1m",

"actions": {

"allocate": {

"number_of_replicas": 0

}

}

},

"delete": {

"min_age": "2m",

"actions": {

"delete": {}

}

}

}

}

}

- Check the ILM explain results of index

.ds-my-data-stream-2023.01.22-000001and observe that the index remains stuck in thedownsampleaction, despite the ILM policy update:

{

"indices": {

".ds-my-data-stream-2023.01.22-000001": {

"index": ".ds-my-data-stream-2023.01.22-000001",

"managed": true,

"policy": "my-lifecycle-policy",

"index_creation_date_millis": 1674363304040,

"time_since_index_creation": "2.79m",

"lifecycle_date_millis": 1674363319364,

"age": "2.54m",

"phase": "hot",

"phase_time_millis": 1674363439419,

"action": "downsample",

"action_time_millis": 1674363319564,

"step": "ERROR",

"step_time_millis": 1674363469445,

"failed_step": "rollup",

"is_auto_retryable_error": true,

"failed_step_retry_count": 14,

"step_info": {

"type": "exception",

"reason": "Rollup requires setting [index.mode=time_series] for index [.ds-my-data-stream-2023.01.22-000001]"

},

"phase_execution": {

"policy": "my-lifecycle-policy",

"phase_definition": {

"min_age": "0ms",

"actions": {

"downsample": {

"fixed_interval": "1m"

},

"rollover": {

"max_docs": 2

}

}

},

"version": 1,

"modified_date_in_millis": 1674363301001

}

}

}

}

Logs (if relevant)

No response

Workaround

I am not aware of any workarounds for now. To be discussed with @elastic/es-data-management .