| Error function | |

|---|---|

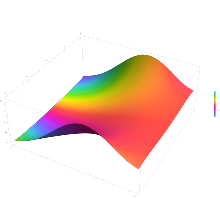

Plot of the error function |

|

| General information | |

| General definition |  |

| Fields of application | Probability, thermodynamics |

| Domain, Codomain and Image | |

| Domain |  |

| Image |  |

| Basic features | |

| Parity | Odd |

| Specific features | |

| Root | 0 |

| Derivative |  |

| Antiderivative |  |

| Series definition | |

| Taylor series |  |

In mathematics, the error function (also called the Gauss error function), often denoted by erf, is a complex function of a complex variable defined as:[1]

$$\operatorname{erf} z = \frac{2}{\sqrt{\pi}}\int_0^z e^{-t^2}\,dt.$$

This integral is a special (non-elementary) sigmoid function that occurs often in probability, statistics, and partial differential equations. In many of these applications, the function argument is a real number. If the function argument is real, then the function value is also real.

In statistics, for non-negative values of x, the error function has the following interpretation: for a random variable Y that is normally distributed with mean 0 and standard deviation 1/√2, erf x is the probability that Y falls in the range [−x, x].
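As a quick numerical check of this interpretation, the sketch below uses only Python's standard library (`math.erf` and `statistics.NormalDist`); the helper name `prob_within` is illustrative, not part of any library. It compares erf x with P(−x ≤ Y ≤ x) for Y with mean 0 and standard deviation 1/√2.

```python
import math
from statistics import NormalDist

# Y is normal with mean 0 and standard deviation 1/sqrt(2)
Y = NormalDist(mu=0.0, sigma=1 / math.sqrt(2))

def prob_within(x: float) -> float:
    """P(-x <= Y <= x), computed from the normal CDF rather than from erf."""
    return Y.cdf(x) - Y.cdf(-x)

for x in (0.25, 0.5, 1.0, 2.0):
    print(f"x = {x}: erf(x) = {math.erf(x):.12f}, P(|Y| <= x) = {prob_within(x):.12f}")
```

The two printed columns should agree to about twelve decimal places.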

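Two closely related functions are the complementary error function (erfc), defined as

$$\operatorname{erfc} z = 1 - \operatorname{erf} z,$$

and the imaginary error function (erfi), defined as

$$\operatorname{erfi} z = -i\operatorname{erf}(iz),$$

where i is the imaginary unit.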

Name

The name "error function" and its abbreviation erf were proposed by J. W. L. Glaisher in 1871 on account of its connection with "the theory of Probability, and notably the theory of Errors."[2] The error function complement was also discussed by Glaisher in a separate publication in the same year.[3]

For the "law of facility" of errors whose density is given by

$$f(x) = \left(\frac{c}{\pi}\right)^{1/2}e^{-cx^2}$$

(the normal distribution), Glaisher calculates the probability of an error lying between p and q as:

$$\left(\frac{c}{\pi}\right)^{1/2}\int_p^q e^{-cx^2}\,dx = \frac{1}{2}\left(\operatorname{erf}\left(q\sqrt{c}\right) - \operatorname{erf}\left(p\sqrt{c}\right)\right).$$

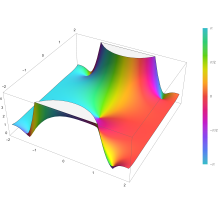

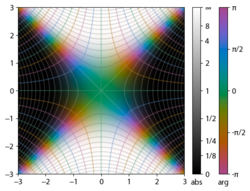

Plot of the error function Erf(z) in the complex plane from -2-2i to 2+2i with colors created with Mathematica 13.1 function ComplexPlot3D

Applications

When the results of a series of measurements are described by a normal distribution with standard deviation σ and expected value 0, then erf(a/(σ√2)) is the probability that the error of a single measurement lies between −a and +a, for positive a. This is useful, for example, in determining the bit error rate of a digital communication system.
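A minimal sketch of this formula in Python, assuming only the standard `math` module; `prob_error_within` is a hypothetical helper name. Evaluating it at a = σ, 2σ and 3σ reproduces the familiar 68–95–99.7 rule.

```python
import math

def prob_error_within(a: float, sigma: float) -> float:
    """P(-a <= error <= a) for a zero-mean normal error with standard deviation sigma (a > 0)."""
    return math.erf(a / (sigma * math.sqrt(2)))

sigma = 0.3  # arbitrary example standard deviation
for k in (1, 2, 3):
    print(f"within ±{k}σ: {prob_error_within(k * sigma, sigma):.4f}")
# within ±1σ: 0.6827
# within ±2σ: 0.9545
# within ±3σ: 0.9973
```

Only the ratio a/σ matters, so the choice of σ does not change the printed probabilities.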

The error and complementary error functions occur, for example, in solutions of the heat equation when boundary conditions are given by the Heaviside step function.
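To make the heat-equation connection concrete, here is a small Python check. It assumes a standard textbook setting that the text does not spell out: an infinite rod with diffusivity `alpha` whose initial temperature is the unit step H(x). In that case u(x, t) = ½(1 + erf(x/(2√(αt)))), and the sketch uses central finite differences to show that u_t − α·u_xx is close to zero. The function names are hypothetical.

```python
import math

def step_solution(x: float, t: float, alpha: float = 1.0) -> float:
    """u(x, t) = (1 + erf(x / (2 sqrt(alpha t)))) / 2: infinite rod, unit-step initial temperature."""
    return 0.5 * (1.0 + math.erf(x / (2.0 * math.sqrt(alpha * t))))

def heat_residual(x: float, t: float, alpha: float = 1.0, h: float = 1e-3) -> float:
    """Central-difference estimate of u_t - alpha * u_xx at (x, t); should be close to 0."""
    u_t = (step_solution(x, t + h, alpha) - step_solution(x, t - h, alpha)) / (2 * h)
    u_xx = (step_solution(x + h, t, alpha) - 2 * step_solution(x, t, alpha)
            + step_solution(x - h, t, alpha)) / h**2
    return u_t - alpha * u_xx

print(heat_residual(0.3, 0.5, alpha=0.8))                    # tiny: only finite-difference error remains
print(step_solution(0.5, 1e-8), step_solution(-0.5, 1e-8))   # close to H(0.5) = 1 and H(-0.5) = 0
```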

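The error function and its approximations can be used to estimate results that hold with high probability or with low probability. Given a random variable X ~ Norm[μ,σ] (a normal distribution with mean μ and standard deviation σ) and a constant L < μ:

$$\Pr[X \le L] = \frac{1}{2} + \frac{1}{2}\operatorname{erf}\frac{L-\mu}{\sqrt{2}\,\sigma} \approx A\exp\left(-B\left(\frac{L-\mu}{\sigma}\right)^2\right),$$

where A and B are certain numeric constants. If L is sufficiently far from the mean, specifically μ − L ≥ σ√(ln k), then:

$$\Pr[X \le L] \le A\exp(-B\ln k) = \frac{A}{k^B},$$

so the probability goes to 0 as k → ∞.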

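The probability for X being in the interval [La, Lb] can be derived as

$$\Pr[L_a \le X \le L_b] = \int_{L_a}^{L_b}\frac{1}{\sqrt{2\pi}\,\sigma}\exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)dx = \frac{1}{2}\left(\operatorname{erf}\frac{L_b-\mu}{\sqrt{2}\,\sigma} - \operatorname{erf}\frac{L_a-\mu}{\sqrt{2}\,\sigma}\right).$$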
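The interval formula translates directly into code. The sketch below (standard library only; `prob_in_interval` is an illustrative name and the parameter values are arbitrary) evaluates it with `math.erf` and cross-checks the result against the normal CDF from `statistics.NormalDist`.

```python
import math
from statistics import NormalDist

def prob_in_interval(la: float, lb: float, mu: float, sigma: float) -> float:
    """P(la <= X <= lb) for X ~ Normal(mu, sigma), via the erf expression above."""
    s = math.sqrt(2) * sigma
    return 0.5 * (math.erf((lb - mu) / s) - math.erf((la - mu) / s))

mu, sigma = 10.0, 2.0   # arbitrary example parameters
dist = NormalDist(mu, sigma)
print(prob_in_interval(9.0, 13.0, mu, sigma))
print(dist.cdf(13.0) - dist.cdf(9.0))   # same probability from the CDF
```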

Properties

(Figures: the integrand exp(−z²) and erf z in the complex z-plane.)

The property erf(−z) = −erf z means that the error function is an odd function. This follows directly from the fact that the integrand $e^{-t^2}$ is an even function (the antiderivative of an even function that is zero at the origin is an odd function, and vice versa).

Since the error function is an entire function which takes real numbers to real numbers, for any complex number z:

$$\operatorname{erf}\bar{z} = \overline{\operatorname{erf} z},$$

where $\bar{z}$ is the complex conjugate of z.

The integrand f = exp(−z²) and f = erf z are shown in the complex z-plane in the figures at right with domain coloring.

The error function at +∞ is exactly 1 (see Gaussian integral). On the real axis, erf z approaches unity as z → +∞ and −1 as z → −∞. On the imaginary axis, it tends to ±i∞.

Taylor series

The error function is an entire function; it has no singularities (except that at infinity) and its Taylor expansion always converges, but is famously known "[…] for its bad convergence if x > 1."[4]

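The defining integral cannot be evaluated in closed form in terms of elementary functions, but by expanding the integrand $e^{-z^2}$ into its Maclaurin series and integrating term by term, one obtains the error function's Maclaurin series as:

$$\operatorname{erf} z = \frac{2}{\sqrt{\pi}}\sum_{n=0}^{\infty}\frac{(-1)^n z^{2n+1}}{n!\,(2n+1)} = \frac{2}{\sqrt{\pi}}\left(z - \frac{z^3}{3} + \frac{z^5}{10} - \frac{z^7}{42} + \frac{z^9}{216} - \cdots\right),$$

which holds for every complex number z. The denominator terms are sequence A007680 in the OEIS.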

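For iterative calculation of the above series, the following alternative formulation may be useful:

$$\operatorname{erf} z = \frac{2}{\sqrt{\pi}}\sum_{n=0}^{\infty}\left(z\prod_{k=1}^{n}\frac{-(2k-1)z^2}{k(2k+1)}\right),$$

because −(2k − 1)z²/(k(2k + 1)) expresses the multiplier to turn the kth term into the (k + 1)th term (considering z as the first term).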
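The term-to-term multiplier gives a short loop. The following Python sketch is an illustration rather than a production implementation (the name `erf_maclaurin` is hypothetical). It sums the Maclaurin series for a complex argument until the newest term is negligible relative to the partial sum, so it inherits the series' slow convergence and cancellation for large |z|. The usage lines compare it with `math.erf` on the real axis and check the oddness and conjugate-symmetry properties stated earlier.

```python
import math

def erf_maclaurin(z: complex, rel_tol: float = 1e-16, max_terms: int = 1000) -> complex:
    """erf(z) from its Maclaurin series, each term built from the previous one.

    The first term is z; the multiplier from the k-th to the (k+1)-th term is
    -(2k - 1) z^2 / (k (2k + 1)) for k = 1, 2, ...  Slow and cancellation-prone for large |z|.
    """
    z = complex(z)
    z2 = z * z
    term = z
    total = term
    for k in range(1, max_terms):
        term *= -(2 * k - 1) * z2 / (k * (2 * k + 1))
        total += term
        if abs(term) <= rel_tol * abs(total):
            break
    return 2 / math.sqrt(math.pi) * total

print(erf_maclaurin(1.0).real, math.erf(1.0))     # real axis: agrees with math.erf
z = 0.7 + 0.4j
print(erf_maclaurin(-z) + erf_maclaurin(z))       # about 0, since erf is odd
print(erf_maclaurin(z.conjugate()) - erf_maclaurin(z).conjugate())   # about 0: conjugate symmetry
```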

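The imaginary error function has a very similar Maclaurin series, which is:

$$\operatorname{erfi} z = \frac{2}{\sqrt{\pi}}\sum_{n=0}^{\infty}\frac{z^{2n+1}}{n!\,(2n+1)} = \frac{2}{\sqrt{\pi}}\left(z + \frac{z^3}{3} + \frac{z^5}{10} + \frac{z^7}{42} + \frac{z^9}{216} + \cdots\right),$$

which holds for every complex number z.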
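The same loop structure works for erfi. For real positive z every term is positive, so there is no cancellation. As a sketch (the name `erfi_maclaurin` is hypothetical), the values printed below can be compared with the 10-digit MATLAB results quoted later in this document: erfi(1/2) ≈ 0.6149520947, erfi(1.41) ≈ 3.738199581 and erfi(√2) ≈ 3.773122512.

```python
import math

def erfi_maclaurin(z: complex, rel_tol: float = 1e-16, max_terms: int = 1000) -> complex:
    """erfi(z) = 2/sqrt(pi) * sum_{n>=0} z^(2n+1) / (n! (2n+1))."""
    z = complex(z)
    z2 = z * z
    power_over_factorial = z        # z^(2n+1) / n!, starting at n = 0
    total = power_over_factorial    # n = 0 term
    for n in range(1, max_terms):
        power_over_factorial *= z2 / n
        term = power_over_factorial / (2 * n + 1)
        total += term
        if abs(term) <= rel_tol * abs(total):
            break
    return 2 / math.sqrt(math.pi) * total

for x in (0.5, 1.41, math.sqrt(2)):
    print(x, erfi_maclaurin(x).real)
```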

Derivative and integral

The derivative of the error function follows immediately from its definition:

$$\frac{d}{dz}\operatorname{erf} z = \frac{2}{\sqrt{\pi}}e^{-z^2}.$$

From this, the derivative of the imaginary error function is also immediate:

$$\frac{d}{dz}\operatorname{erfi} z = \frac{2}{\sqrt{\pi}}e^{z^2}.$$

An antiderivative of the error function, obtainable by integration by parts, is

$$z\operatorname{erf} z + \frac{e^{-z^2}}{\sqrt{\pi}} + C.$$

An antiderivative of the imaginary error function, also obtainable by integration by parts, is

$$z\operatorname{erfi} z - \frac{e^{z^2}}{\sqrt{\pi}} + C.$$
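The derivative and antiderivative of erf can be sanity-checked numerically with central differences. This Python sketch uses only `math.erf` from the standard library; the helper names are illustrative. Both printed differences should be tiny, limited by the finite-difference step.

```python
import math

SQRT_PI = math.sqrt(math.pi)

def erf_prime(x: float) -> float:
    """d/dx erf x = (2/sqrt(pi)) exp(-x^2)."""
    return 2.0 / SQRT_PI * math.exp(-x * x)

def erf_antiderivative(x: float) -> float:
    """x erf x + exp(-x^2)/sqrt(pi), taking the constant of integration as 0."""
    return x * math.erf(x) + math.exp(-x * x) / SQRT_PI

def central_diff(f, x: float, h: float = 1e-5) -> float:
    return (f(x + h) - f(x - h)) / (2 * h)

for x in (-1.2, 0.0, 0.4, 2.0):
    print(x,
          central_diff(math.erf, x) - erf_prime(x),            # derivative check
          central_diff(erf_antiderivative, x) - math.erf(x))   # antiderivative check
```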

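Higher-order derivatives are given by

$$\operatorname{erf}^{(k)} z = \frac{2(-1)^{k-1}}{\sqrt{\pi}}H_{k-1}(z)\,e^{-z^2} = \frac{2}{\sqrt{\pi}}\frac{d^{k-1}}{dz^{k-1}}\left(e^{-z^2}\right), \qquad k = 1, 2, \dots$$

where H are the physicists' Hermite polynomials.[5]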
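A short Python sketch of the Hermite formula, assuming the standard three-term recurrence H_{k+1}(x) = 2x·H_k(x) − 2k·H_{k−1}(x) for the physicists' polynomials (the function names are illustrative). As a consistency check, numerically differentiating the k-th derivative should reproduce the (k+1)-th.

```python
import math

def hermite_phys(n: int, x: float) -> float:
    """Physicists' Hermite polynomial H_n(x) from H_{k+1} = 2x H_k - 2k H_{k-1}."""
    if n == 0:
        return 1.0
    h_prev, h = 1.0, 2.0 * x          # H_0, H_1
    for k in range(1, n):
        h_prev, h = h, 2.0 * x * h - 2.0 * k * h_prev
    return h

def erf_derivative(k: int, x: float) -> float:
    """k-th derivative of erf for k >= 1: 2 (-1)^(k-1) / sqrt(pi) * H_{k-1}(x) * exp(-x^2)."""
    return 2.0 * (-1) ** (k - 1) / math.sqrt(math.pi) * hermite_phys(k - 1, x) * math.exp(-x * x)

# Consistency check: differentiating the k-th derivative numerically should give the (k+1)-th.
h, x = 1e-5, 0.7
for k in range(1, 5):
    numeric = (erf_derivative(k, x + h) - erf_derivative(k, x - h)) / (2 * h)
    print(k + 1, numeric, erf_derivative(k + 1, x))
```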

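Bürmann series

An expansion,[6] which converges more rapidly for all real values of x than a Taylor expansion, is obtained by using Hans Heinrich Bürmann's theorem:[7]

$$\operatorname{erf} x = \frac{2}{\sqrt{\pi}}\operatorname{sgn} x\,\sqrt{1-e^{-x^2}}\left(\frac{\sqrt{\pi}}{2} + \sum_{k=1}^{\infty}c_k e^{-kx^2}\right),$$

where sgn is the sign function. By keeping only the first two coefficients and choosing c1 = 31/200 and c2 = −341/8000, the resulting approximation shows its largest relative error at x = ±1.3796, where it is less than 0.0036127:

$$\operatorname{erf} x \approx \frac{2}{\sqrt{\pi}}\operatorname{sgn} x\,\sqrt{1-e^{-x^2}}\left(\frac{\sqrt{\pi}}{2} + \frac{31}{200}e^{-x^2} - \frac{341}{8000}e^{-2x^2}\right).$$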
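A quick way to check the quoted error figure is to scan a grid of x values. This Python sketch (illustrative name `erf_burmann2`; grid spacing chosen arbitrarily) evaluates the two-coefficient approximation and reports where its relative error against `math.erf` is largest. Because erf is odd and the approximation carries sgn x, scanning x > 0 is enough.

```python
import math

def erf_burmann2(x: float) -> float:
    """Two-coefficient Bürmann-type approximation with c1 = 31/200 and c2 = -341/8000."""
    e = math.exp(-x * x)
    c1, c2 = 31 / 200, -341 / 8000
    bracket = math.sqrt(math.pi) / 2 + c1 * e + c2 * e * e
    return 2 / math.sqrt(math.pi) * math.copysign(1.0, x) * math.sqrt(1 - e) * bracket

xs = [i / 1000 for i in range(1, 5001)]   # (0, 5]; x = 0 skipped because the relative error is undefined there
worst = max(xs, key=lambda x: abs(erf_burmann2(x) / math.erf(x) - 1))
print(worst, abs(erf_burmann2(worst) / math.erf(worst) - 1))
```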

Inverse functions[edit]

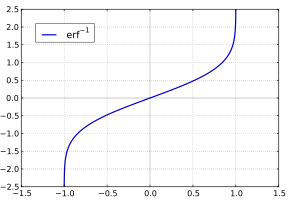

Given a complex number z, there is not a unique complex number w satisfying erf w = z, so a true inverse function would be multivalued. However, for −1 < x < 1, there is a unique real number denoted erf−1 x satisfying

The inverse error function is usually defined with domain (−1,1), and it is restricted to this domain in many computer algebra systems. However, it can be extended to the disk |z| < 1 of the complex plane, using the Maclaurin series

where c0 = 1 and

So we have the series expansion (common factors have been canceled from numerators and denominators):

(After cancellation the numerator/denominator fractions are entries OEIS: A092676/OEIS: A092677 in the OEIS; without cancellation the numerator terms are given in entry OEIS: A002067.) The error function’s value at ±∞ is equal to ±1.

For |z| < 1, we have erf(erf−1 z) = z.
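The recurrence for c_k and the resulting series are easy to evaluate. In the Python sketch below (names are illustrative), passing `Fraction(1)` as the starting value reproduces the exact coefficients 1, 1, 7/6, 127/90, 4369/2520, while the float version drives a truncated series for erf⁻¹. Because the series converges slowly as |z| → 1, this is only practical well inside the unit disk.

```python
import math
from fractions import Fraction

def inverse_erf_coefficients(n: int, one=1.0) -> list:
    """c_0, ..., c_{n-1} with c_0 = 1 and c_k = sum_{m<k} c_m c_{k-1-m} / ((m+1)(2m+1)).

    Pass one=Fraction(1) for exact rational coefficients, or a float for speed.
    """
    c = [one]
    for k in range(1, n):
        c.append(sum(c[m] * c[k - 1 - m] / ((m + 1) * (2 * m + 1)) for m in range(k)))
    return c

def erfinv_series(z: float, terms: int = 200) -> float:
    """Truncated Maclaurin series for erf^-1 z; converges slowly as |z| approaches 1."""
    w = math.sqrt(math.pi) / 2 * z
    c = inverse_erf_coefficients(terms)
    return sum(ck / (2 * k + 1) * w ** (2 * k + 1) for k, ck in enumerate(c))

print(inverse_erf_coefficients(5, Fraction(1)))
x = erfinv_series(0.5)
print(x, math.erf(x))    # math.erf should give back 0.5 up to rounding
```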

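The inverse complementary error function is defined as

$$\operatorname{erfc}^{-1}(1-z) = \operatorname{erf}^{-1} z.$$

For real x, there is a unique real number erfi⁻¹ x satisfying erfi(erfi⁻¹ x) = x. The inverse imaginary error function is defined as erfi⁻¹ x.[8]

For any real x, Newton's method can be used to compute erfi⁻¹ x, and for −1 ≤ x ≤ 1, the following Maclaurin series converges:

$$\operatorname{erfi}^{-1} z = \sum_{k=0}^{\infty}\frac{(-1)^k c_k}{2k+1}\left(\frac{\sqrt{\pi}}{2}z\right)^{2k+1},$$

where c_k is defined as above.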
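A minimal Newton iteration for erfi⁻¹, as suggested above, might look like the Python sketch below. It assumes erfi is evaluated by its Maclaurin series (any accurate erfi would do). The starting point x₀ = 1 + √(ln max(y, 1)) is a choice made here, not taken from the cited source: it guarantees erfi(x₀) > y for y ≥ 0, and since erfi is increasing and convex for x > 0, Newton's iterates then approach the root from above. Negative y is handled by oddness; the function names are hypothetical.

```python
import math

def erfi(x: float, rel_tol: float = 1e-16) -> float:
    """erfi x from its Maclaurin series; for real x all terms share the sign of x."""
    x2 = x * x
    power_over_factorial = x      # x^(2n+1) / n!
    total = x
    n = 0
    while True:
        n += 1
        power_over_factorial *= x2 / n
        term = power_over_factorial / (2 * n + 1)
        total += term
        if abs(term) <= rel_tol * abs(total):
            return 2 / math.sqrt(math.pi) * total

def erfi_inv(y: float, tol: float = 1e-15, max_iter: int = 100) -> float:
    """Solve erfi(x) = y with Newton's method, using erfi'(x) = (2/sqrt(pi)) exp(x^2)."""
    if y < 0:
        return -erfi_inv(-y, tol, max_iter)          # erfi is odd
    # Start to the right of the root: erfi(x0) >= (2/sqrt(pi)) * max(y, 1) > y for this x0.
    x = 1.0 + math.sqrt(math.log(max(y, 1.0)))
    for _ in range(max_iter):
        step = (erfi(x) - y) / (2 / math.sqrt(math.pi) * math.exp(x * x))
        x -= step
        if abs(step) <= tol * max(1.0, abs(x)):
            break
    return x

print(erfi_inv(3.773122512), math.sqrt(2))   # erfi(sqrt 2) is about 3.773122512
```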

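Asymptotic expansion

A useful asymptotic expansion of the complementary error function (and therefore also of the error function) for large real x is

$$\operatorname{erfc} x = \frac{e^{-x^2}}{x\sqrt{\pi}}\sum_{n=0}^{\infty}(-1)^n\frac{(2n-1)!!}{(2x^2)^n} = \frac{e^{-x^2}}{x\sqrt{\pi}}\left(1 - \frac{1}{2x^2} + \frac{3}{4x^4} - \frac{15}{8x^6} + \cdots\right),$$

where (2n − 1)!! is the double factorial of (2n − 1), which is the product of all odd numbers up to (2n − 1). This series diverges for every finite x, and its meaning as an asymptotic expansion is that for any integer N ≥ 1 one has

$$\operatorname{erfc} x = \frac{e^{-x^2}}{x\sqrt{\pi}}\sum_{n=0}^{N-1}(-1)^n\frac{(2n-1)!!}{(2x^2)^n} + R_N(x),$$

where the remainder, in Landau notation, is

$$R_N(x) = O\left(x^{-(1+2N)}e^{-x^2}\right)$$

as x → ∞.

Indeed, the exact value of the remainder is

$$R_N(x) = \frac{(-1)^N}{\sqrt{\pi}}\,2^{1-2N}\frac{(2N)!}{N!}\int_x^{\infty}t^{-2N}e^{-t^2}\,dt,$$

which follows easily by induction, writing

$$e^{-t^2} = -(2t)^{-1}\left(e^{-t^2}\right)'$$

and integrating by parts.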

For large enough values of x, only the first few terms of this asymptotic expansion are needed to obtain a good approximation of erfc x (while for not too large values of x, the above Taylor expansion at 0 provides a very fast convergence).
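The divergence of the asymptotic series, and the usefulness of its first few terms, are easy to see numerically. This Python sketch (standard library only; `erfc_asymptotic` is an illustrative name) truncates the series after N terms and prints the relative error against `math.erfc`. At x = 3 the error first shrinks as N grows and then grows again once the terms start increasing in size.

```python
import math

def erfc_asymptotic(x: float, n_terms: int) -> float:
    """exp(-x^2)/(x sqrt(pi)) * sum_{n=0}^{N-1} (-1)^n (2n-1)!! / (2x^2)^n, with N = n_terms."""
    term = total = 1.0                          # n = 0 term, using (-1)!! = 1
    for n in range(1, n_terms):
        term *= -(2 * n - 1) / (2 * x * x)      # ratio of consecutive terms
        total += term
    return math.exp(-x * x) / (x * math.sqrt(math.pi)) * total

x = 3.0
for n_terms in (1, 2, 4, 8, 16, 32):
    rel_err = abs(erfc_asymptotic(x, n_terms) / math.erfc(x) - 1)
    print(f"N = {n_terms:2d}: relative error {rel_err:.2e}")
```

Because the exact remainder above is bounded in size by the first omitted term, the truncation error is never larger than that term.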

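Continued fraction expansion

A continued fraction expansion of the complementary error function is:[9]

$$\operatorname{erfc} z = \frac{z}{\sqrt{\pi}}e^{-z^2}\cfrac{1}{z^2+\cfrac{a_1}{1+\cfrac{a_2}{z^2+\cfrac{a_3}{1+\dotsb}}}}, \qquad a_m = \frac{m}{2}.$$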
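A truncated continued fraction like this one can be evaluated from the bottom up. The Python sketch below (illustrative name `erfc_contfrac`; the fixed truncation depth is an arbitrary, generous choice) does that for real x > 0 and compares the result with `math.erfc`. A production code would more likely use a forward scheme such as the modified Lentz algorithm with a convergence test; the fixed depth just keeps the example short.

```python
import math

def erfc_contfrac(x: float, depth: int = 2000) -> float:
    """erfc x for real x > 0 from the continued fraction with a_m = m/2, evaluated bottom-up."""
    x2 = x * x
    tail = 0.0
    for m in range(depth, 0, -1):
        base = 1.0 if m % 2 == 1 else x2   # odd a_m sit over (1 + ...), even a_m over (x^2 + ...)
        tail = (m / 2) / (base + tail)
    return x / math.sqrt(math.pi) * math.exp(-x2) / (x2 + tail)

for x in (2.0, 3.0, 5.0):
    print(x, erfc_contfrac(x), math.erfc(x))
```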

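Integral of error function with Gaussian density function

$$\int_{-\infty}^{\infty}\operatorname{erf}(ax+b)\,\frac{1}{\sqrt{2\pi\sigma^2}}\exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)dx = \operatorname{erf}\frac{a\mu+b}{\sqrt{1+2a^2\sigma^2}}, \qquad a, b, \mu \in \mathbb{R},\ \sigma > 0,$$

which appears related to Ng and Geller, formula 13 in section 4.3[10] with a change of variables.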
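This identity is straightforward to confirm numerically. The Python sketch below (standard library only; the parameter values and the helper name are arbitrary choices for illustration) approximates the left-hand side with a midpoint rule over ±12σ, beyond which the Gaussian weight is negligible, and compares it with the closed form.

```python
import math

def gaussian_weighted_erf(a, b, mu, sigma, n=20_000, half_width=12.0):
    """Midpoint-rule estimate of the integral of erf(a x + b) times the N(mu, sigma^2) density."""
    lo = mu - half_width * sigma
    h = 2 * half_width * sigma / n
    total = 0.0
    for i in range(n):
        x = lo + (i + 0.5) * h
        density = math.exp(-((x - mu) ** 2) / (2 * sigma**2)) / (math.sqrt(2 * math.pi) * sigma)
        total += math.erf(a * x + b) * density
    return total * h

a, b, mu, sigma = 1.3, -0.4, 0.5, 0.8    # arbitrary test values
closed_form = math.erf((a * mu + b) / math.sqrt(1 + 2 * a**2 * sigma**2))
print(gaussian_weighted_erf(a, b, mu, sigma), closed_form)
```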

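Factorial series

The inverse factorial series:

$$\operatorname{erfc} z = \frac{e^{-z^2}}{\sqrt{\pi}\,z}\sum_{n=0}^{\infty}\frac{(-1)^n Q_n}{(z^2+1)^{\overline{n}}} = \frac{e^{-z^2}}{\sqrt{\pi}\,z}\left(1 - \frac{1}{2}\frac{1}{z^2+1} + \frac{1}{4}\frac{1}{(z^2+1)(z^2+2)} - \cdots\right)$$

converges for Re(z²) > 0. Here

$$Q_n = \frac{1}{\Gamma\left(\frac{1}{2}\right)}\int_0^{\infty}\tau(\tau-1)\cdots(\tau-n+1)\,\tau^{-1/2}e^{-\tau}\,d\tau = \sum_{k=0}^{n}\left(\tfrac{1}{2}\right)^{\overline{k}}s(n,k),$$

$z^{\overline{n}}$ denotes the rising factorial, and s(n,k) denotes a signed Stirling number of the first kind.[11][12]

There also exists a representation by an infinite sum containing the double factorial:

$$\operatorname{erf} z = \frac{2}{\sqrt{\pi}}\sum_{n=0}^{\infty}\frac{(-2)^n(2n-1)!!}{(2n+1)!}z^{2n+1}.$$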

Numerical approximations

Approximation with elementary functions

- Abramowitz and Stegun give several approximations of varying accuracy (equations 7.1.25–28). This allows one to choose the fastest approximation suitable for a given application. In order of increasing accuracy, they are:

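$$\operatorname{erf} x \approx 1 - \frac{1}{\left(1 + a_1x + a_2x^2 + a_3x^3 + a_4x^4\right)^4}$$

(maximum error: 5×10⁻⁴)

where a1 = 0.278393, a2 = 0.230389, a3 = 0.000972, a4 = 0.078108

$$\operatorname{erf} x \approx 1 - \left(a_1t + a_2t^2 + a_3t^3\right)e^{-x^2}, \qquad t = \frac{1}{1+px}$$

(maximum error: 2.5×10⁻⁵)

where p = 0.47047, a1 = 0.3480242, a2 = −0.0958798, a3 = 0.7478556

$$\operatorname{erf} x \approx 1 - \frac{1}{\left(1 + a_1x + a_2x^2 + \cdots + a_6x^6\right)^{16}}$$

(maximum error: 3×10⁻⁷)

where a1 = 0.0705230784, a2 = 0.0422820123, a3 = 0.0092705272, a4 = 0.0001520143, a5 = 0.0002765672, a6 = 0.0000430638

$$\operatorname{erf} x \approx 1 - \left(a_1t + a_2t^2 + \cdots + a_5t^5\right)e^{-x^2}, \qquad t = \frac{1}{1+px}$$

(maximum error: 1.5×10⁻⁷)

where p = 0.3275911, a1 = 0.254829592, a2 = −0.284496736, a3 = 1.421413741, a4 = −1.453152027, a5 = 1.061405429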

All of these approximations are valid for x ≥ 0. To use these approximations for negative x, use the fact that erf x is an odd function, so erf x = −erf(−x).

- Exponential bounds and a pure exponential approximation for the complementary error function are given by[13]

$$\operatorname{erfc} x \le \frac{1}{2}e^{-2x^2} + \frac{1}{2}e^{-x^2} \le e^{-x^2}, \qquad x > 0,$$

$$\operatorname{erfc} x \approx \frac{1}{6}e^{-x^2} + \frac{1}{2}e^{-\frac{4}{3}x^2}, \qquad x > 0.$$

- The above have been generalized to sums of N exponentials[14] with increasing accuracy in terms of N so that erfc x can be accurately approximated or bounded by 2Q̃(√2x), where

$$\tilde{Q}(x) = \sum_{n=1}^{N}a_n e^{-b_n x^2}.$$

In particular, there is a systematic methodology to solve the numerical coefficients $\{(a_n,b_n)\}_{n=1}^{N}$ that yield a minimax approximation or bound for the closely related Q-function: Q(x) ≈ Q̃(x), Q(x) ≤ Q̃(x), or Q(x) ≥ Q̃(x) for x ≥ 0. The coefficients $\{(a_n,b_n)\}_{n=1}^{N}$ for many variations of the exponential approximations and bounds up to N = 25 have been released to open access as a comprehensive dataset.[15]

- A tight approximation of the complementary error function for x ∈ [0,∞) is given by Karagiannidis & Lioumpas (2007)[16] who showed for the appropriate choice of parameters {A,B} that

$$\operatorname{erfc} x \approx \frac{\left(1-e^{-Ax}\right)e^{-x^2}}{B\sqrt{\pi}\,x}.$$

They determined {A,B} = {1.98,1.135}, which gave a good approximation for all x ≥ 0. Alternative coefficients are also available for tailoring accuracy for a specific application or transforming the expression into a tight bound.[17]

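- A single-term lower bound is[18]

$$\operatorname{erfc} x \ge \sqrt{\frac{2e}{\pi}}\,\frac{\sqrt{\beta-1}}{\beta}\,e^{-\beta x^2}, \qquad x \ge 0,\ \beta > 1,$$

where the parameter β can be picked to minimize error on the desired interval of approximation.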


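- Another approximation is given by Sergei Winitzki using his "global Padé approximations":[19][20]: 2–3

$$\operatorname{erf} x \approx \operatorname{sgn} x\,\sqrt{1 - \exp\left(-x^2\,\frac{\frac{4}{\pi} + ax^2}{1 + ax^2}\right)},$$

where

$$a = \frac{8(\pi-3)}{3\pi(4-\pi)} \approx 0.140012.$$

This is designed to be very accurate in a neighborhood of 0 and a neighborhood of infinity, and the relative error is less than 0.00035 for all real x. Using the alternate value a ≈ 0.147 reduces the maximum relative error to about 0.00013.[21]

This approximation can be inverted to obtain an approximation for the inverse error function:

$$\operatorname{erf}^{-1} x \approx \operatorname{sgn} x\,\sqrt{\sqrt{\left(\frac{2}{\pi a} + \frac{\ln\left(1-x^2\right)}{2}\right)^2 - \frac{\ln\left(1-x^2\right)}{a}} - \left(\frac{2}{\pi a} + \frac{\ln\left(1-x^2\right)}{2}\right)}.$$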

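- An approximation with a maximal error of 1.2×10⁻⁷ for any real argument is:[22]

$$\operatorname{erf} x = \begin{cases} 1-\tau & x \ge 0 \\ \tau-1 & x < 0 \end{cases}$$

with

$$\tau = t\cdot\exp\left(-x^2 - 1.26551223 + 1.00002368t + 0.37409196t^2 + 0.09678418t^3 - 0.18628806t^4 + 0.27886807t^5 - 1.13520398t^6 + 1.48851587t^7 - 0.82215223t^8 + 0.17087277t^9\right)$$

and

$$t = \frac{1}{1 + \frac{1}{2}|x|}.$$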
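The approximations listed above are easy to compare side by side. This Python sketch transcribes several of them (function names are illustrative; the constants are copied from the list) and measures each against `math.erf` or `math.erfc` on an arbitrary grid over (0, 6]. It is a spot check of the quoted error figures, not a proof of them. The last line also exercises the Winitzki inverse, which is the exact algebraic inverse of the Winitzki forward formula for the same a.

```python
import math

A_WINITZKI = 8 * (math.pi - 3) / (3 * math.pi * (4 - math.pi))   # about 0.140012

# Abramowitz and Stegun approximations, valid for x >= 0 (use oddness for x < 0)
def erf_as_1(x):   # max error 5e-4
    a1, a2, a3, a4 = 0.278393, 0.230389, 0.000972, 0.078108
    return 1 - 1 / (1 + a1 * x + a2 * x**2 + a3 * x**3 + a4 * x**4) ** 4

def erf_as_2(x):   # max error 2.5e-5
    p, a1, a2, a3 = 0.47047, 0.3480242, -0.0958798, 0.7478556
    t = 1 / (1 + p * x)
    return 1 - (a1 * t + a2 * t**2 + a3 * t**3) * math.exp(-x * x)

def erf_as_3(x):   # max error 3e-7
    a = (0.0705230784, 0.0422820123, 0.0092705272, 0.0001520143, 0.0002765672, 0.0000430638)
    return 1 - 1 / (1 + sum(ak * x ** (k + 1) for k, ak in enumerate(a))) ** 16

def erf_as_4(x):   # max error 1.5e-7
    p = 0.3275911
    a = (0.254829592, -0.284496736, 1.421413741, -1.453152027, 1.061405429)
    t = 1 / (1 + p * x)
    return 1 - sum(ak * t ** (k + 1) for k, ak in enumerate(a)) * math.exp(-x * x)

def erf_winitzki(x, a=A_WINITZKI):   # reported relative error < 3.5e-4 with the default a
    x2 = x * x
    return math.copysign(math.sqrt(1 - math.exp(-x2 * (4 / math.pi + a * x2) / (1 + a * x2))), x)

def erfinv_winitzki(y, a=A_WINITZKI):   # exact inverse of erf_winitzki for the same a
    ln = math.log(1 - y * y)
    first = 2 / (math.pi * a) + ln / 2
    return math.copysign(math.sqrt(math.sqrt(first**2 - ln / a) - first), y)

def erfc_kl(x, A=1.98, B=1.135):   # Karagiannidis-Lioumpas, x > 0
    return (1 - math.exp(-A * x)) * math.exp(-x * x) / (B * math.sqrt(math.pi) * x)

def erfc_nr(x):   # the tau formula above; erfc = tau for x >= 0 and 2 - tau for x < 0
    z = abs(x)
    t = 1 / (1 + 0.5 * z)
    coeffs = (-1.26551223, 1.00002368, 0.37409196, 0.09678418, -0.18628806,
              0.27886807, -1.13520398, 1.48851587, -0.82215223, 0.17087277)
    tau = t * math.exp(-z * z + sum(c * t**k for k, c in enumerate(coeffs)))
    return tau if x >= 0 else 2 - tau

grid = [i / 500 for i in range(1, 3001)]   # 0.002 ... 6.0
for name, f in [("A&S 1", erf_as_1), ("A&S 2", erf_as_2), ("A&S 3", erf_as_3), ("A&S 4", erf_as_4)]:
    print(name, "max abs error:", max(abs(f(x) - math.erf(x)) for x in grid))
print("Winitzki max rel error:", max(abs(erf_winitzki(x) / math.erf(x) - 1) for x in grid))
print("tau-formula erfc max rel error:", max(abs(erfc_nr(x) / math.erfc(x) - 1) for x in grid))
print("K-L erfc max abs error:", max(abs(erfc_kl(x) - math.erfc(x)) for x in grid))
print("Winitzki round trip at 0.8:", erfinv_winitzki(erf_winitzki(0.8)))
```

Note that the Karagiannidis–Lioumpas form is checked here in absolute terms only: as x → ∞ its ratio to erfc x tends to 1/B, so its relative error does not vanish in the far tail.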

Table of values

| x | erf x | 1 − erf x |
|---|---|---|
| 0 | 0 | 1 |
| 0.02 | 0.022564575 | 0.977435425 |
| 0.04 | 0.045111106 | 0.954888894 |
| 0.06 | 0.067621594 | 0.932378406 |
| 0.08 | 0.090078126 | 0.909921874 |
| 0.1 | 0.112462916 | 0.887537084 |
| 0.2 | 0.222702589 | 0.777297411 |
| 0.3 | 0.328626759 | 0.671373241 |
| 0.4 | 0.428392355 | 0.571607645 |
| 0.5 | 0.520499878 | 0.479500122 |
| 0.6 | 0.603856091 | 0.396143909 |
| 0.7 | 0.677801194 | 0.322198806 |
| 0.8 | 0.742100965 | 0.257899035 |
| 0.9 | 0.796908212 | 0.203091788 |
| 1 | 0.842700793 | 0.157299207 |
| 1.1 | 0.880205070 | 0.119794930 |
| 1.2 | 0.910313978 | 0.089686022 |
| 1.3 | 0.934007945 | 0.065992055 |
| 1.4 | 0.952285120 | 0.047714880 |
| 1.5 | 0.966105146 | 0.033894854 |
| 1.6 | 0.976348383 | 0.023651617 |
| 1.7 | 0.983790459 | 0.016209541 |
| 1.8 | 0.989090502 | 0.010909498 |
| 1.9 | 0.992790429 | 0.007209571 |
| 2 | 0.995322265 | 0.004677735 |
| 2.1 | 0.997020533 | 0.002979467 |
| 2.2 | 0.998137154 | 0.001862846 |
| 2.3 | 0.998856823 | 0.001143177 |
| 2.4 | 0.999311486 | 0.000688514 |
| 2.5 | 0.999593048 | 0.000406952 |
| 3 | 0.999977910 | 0.000022090 |
| 3.5 | 0.999999257 | 0.000000743 |
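The table can be spot-checked with Python's standard library, whose `math.erf` and `math.erfc` return double-precision values. The sketch below compares a few rows copied from the table; the third column, 1 − erf x, is erfc x. Since the table is rounded to nine decimal places, each difference should stay below about 5×10⁻¹⁰.

```python
import math

# A few (x, erf x, 1 - erf x) rows copied from the table above
rows = [
    (0.02, 0.022564575, 0.977435425),
    (0.5, 0.520499878, 0.479500122),
    (1.0, 0.842700793, 0.157299207),
    (2.0, 0.995322265, 0.004677735),
    (3.0, 0.999977910, 0.000022090),
    (3.5, 0.999999257, 0.000000743),
]
for x, erf_tab, erfc_tab in rows:
    print(x, abs(math.erf(x) - erf_tab), abs(math.erfc(x) - erfc_tab))
```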


Complementary error function

Plot of the complementary error function Erfc(z) in the complex plane from -2-2i to 2+2i with colors created with Mathematica 13.1 function ComplexPlot3D

The complementary error function, denoted erfc, is defined as

$$\operatorname{erfc} x = 1 - \operatorname{erf} x = \frac{2}{\sqrt{\pi}}\int_x^{\infty}e^{-t^2}\,dt = e^{-x^2}\operatorname{erfcx} x,$$

which also defines erfcx, the scaled complementary error function[23] (which can be used instead of erfc to avoid arithmetic underflow[23][24]). Another form of erfc x for x ≥ 0 is known as Craig's formula, after its discoverer:[25]

$$\operatorname{erfc}(x \mid x \ge 0) = \frac{2}{\pi}\int_0^{\pi/2}\exp\left(-\frac{x^2}{\sin^2\theta}\right)d\theta.$$

This expression is valid only for positive values of x, but it can be used in conjunction with erfc x = 2 − erfc(−x) to obtain erfc(x) for negative values. This form is advantageous in that the range of integration is fixed and finite. An extension of this expression for the erfc of the sum of two non-negative variables is as follows:[26]

$$\operatorname{erfc}(x+y \mid x,y \ge 0) = \frac{2}{\pi}\int_0^{\pi/2}\exp\left(-\frac{x^2}{\sin^2\theta} - \frac{y^2}{\cos^2\theta}\right)d\theta.$$
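Because the range of integration is fixed and finite, Craig's formula and its two-variable extension can be evaluated with a plain midpoint rule. The Python sketch below (illustrative names; the number of subintervals is an arbitrary choice) compares both with `math.erfc`. For x > 0 the integrand and all its derivatives vanish at θ = 0, which is why such a simple rule is already very accurate here.

```python
import math

def erfc_craig(x: float, n: int = 2000) -> float:
    """Craig's formula for x >= 0, integrated with the midpoint rule."""
    h = (math.pi / 2) / n
    s = sum(math.exp(-x * x / math.sin((i + 0.5) * h) ** 2) for i in range(n))
    return 2 / math.pi * h * s

def erfc_of_sum(x: float, y: float, n: int = 2000) -> float:
    """The two-variable extension for erfc(x + y), x, y >= 0, integrated with the midpoint rule."""
    h = (math.pi / 2) / n
    s = 0.0
    for i in range(n):
        theta = (i + 0.5) * h
        s += math.exp(-x * x / math.sin(theta) ** 2 - y * y / math.cos(theta) ** 2)
    return 2 / math.pi * h * s

print(erfc_craig(1.0), math.erfc(1.0))
print(erfc_of_sum(0.4, 0.9), math.erfc(1.3))
```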

Imaginary error function[edit]

The imaginary error function, denoted erfi, is defined as

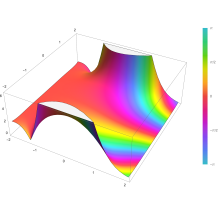

Plot of the imaginary error function Erfi(z) in the complex plane from -2-2i to 2+2i with colors created with Mathematica 13.1 function ComplexPlot3D

where D(x) is the Dawson function (which can be used instead of erfi to avoid arithmetic overflow[23]).

Despite the name «imaginary error function», erfi x is real when x is real.

When the error function is evaluated for arbitrary complex arguments z, the resulting complex error function is usually discussed in scaled form as the Faddeeva function:

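Cumulative distribution function

The error function is essentially identical to the standard normal cumulative distribution function, denoted Φ, also named norm(x) by some software languages[citation needed], as they differ only by scaling and translation. Indeed,

$$\Phi(x) = \frac{1}{\sqrt{2\pi}}\int_{-\infty}^{x}e^{-t^2/2}\,dt = \frac{1}{2}\left(1 + \operatorname{erf}\frac{x}{\sqrt{2}}\right) = \frac{1}{2}\operatorname{erfc}\left(-\frac{x}{\sqrt{2}}\right)$$

Plot of the normal cumulative distribution function in the complex plane

or rearranged for erf and erfc:

$$\operatorname{erf} x = 2\Phi\left(x\sqrt{2}\right) - 1, \qquad \operatorname{erfc} x = 2\Phi\left(-x\sqrt{2}\right) = 2\left(1 - \Phi\left(x\sqrt{2}\right)\right).$$

Consequently, the error function is also closely related to the Q-function, which is the tail probability of the standard normal distribution. The Q-function can be expressed in terms of the error function as

$$Q(x) = \frac{1}{2} - \frac{1}{2}\operatorname{erf}\frac{x}{\sqrt{2}} = \frac{1}{2}\operatorname{erfc}\frac{x}{\sqrt{2}}.$$

The inverse of Φ is known as the normal quantile function, or probit function, and may be expressed in terms of the inverse error function as

$$\operatorname{probit}(p) = \Phi^{-1}(p) = \sqrt{2}\operatorname{erf}^{-1}(2p-1) = -\sqrt{2}\operatorname{erfc}^{-1}(2p).$$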
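These relations are handy in practice because Python's standard library has no erf⁻¹ but does have a normal quantile function. The sketch below (helper names are illustrative) builds Φ and Q from `math.erf`/`math.erfc`, and obtains erf⁻¹ from `statistics.NormalDist().inv_cdf` by inverting the probit relation. Forming (1 + y)/2 loses relative precision as y approaches −1, so this is a convenience rather than a high-accuracy erf⁻¹.

```python
import math
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()   # mean 0, standard deviation 1

def phi(x: float) -> float:
    """Standard normal CDF: (1 + erf(x / sqrt 2)) / 2."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2)))

def q_function(x: float) -> float:
    """Upper-tail probability Q(x) = erfc(x / sqrt 2) / 2 (using erfc avoids cancellation for large x)."""
    return 0.5 * math.erfc(x / math.sqrt(2))

def erfinv(y: float) -> float:
    """erf^-1 y for -1 < y < 1, from the probit relation Phi^-1(p) = sqrt(2) erf^-1(2p - 1)."""
    return STANDARD_NORMAL.inv_cdf((1.0 + y) / 2.0) / math.sqrt(2)

print(phi(1.0), STANDARD_NORMAL.cdf(1.0))
print(q_function(6.0))            # tail probability, about 1e-9
print(math.erf(erfinv(0.95)))     # round trip, about 0.95
```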

The standard normal cdf is used more often in probability and statistics, and the error function is used more often in other branches of mathematics.

The error function is a special case of the Mittag-Leffler function, and can also be expressed as a confluent hypergeometric function (Kummer's function):

$$\operatorname{erf} x = \frac{2x}{\sqrt{\pi}}M\left(\tfrac{1}{2}, \tfrac{3}{2}, -x^2\right).$$
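Kummer's function is defined by the series M(a, b, z) = Σ (a)ₙ/(b)ₙ · zⁿ/n!, so the relation above can be checked by summing that series directly. The Python sketch below (illustrative names) does this, and also uses Kummer's transformation M(a, b, z) = e^z M(b − a, b, −z) to get the equivalent form erf x = (2x/√π) e^(−x²) M(1, 3/2, x²), whose terms are all positive for real x.

```python
import math

def kummer_m(a: float, b: float, z: float, rel_tol: float = 1e-17, max_terms: int = 10_000) -> float:
    """Kummer's function M(a, b, z) = sum_n (a)_n / (b)_n * z^n / n!, summed until terms are negligible."""
    term = total = 1.0
    for n in range(max_terms):
        term *= (a + n) / (b + n) * z / (n + 1)    # ratio of consecutive terms
        total += term
        if abs(term) <= rel_tol * abs(total):
            break
    return total

def erf_via_kummer(x: float) -> float:
    """erf x = (2x / sqrt(pi)) * M(1/2, 3/2, -x^2)."""
    return 2 * x / math.sqrt(math.pi) * kummer_m(0.5, 1.5, -x * x)

def erf_via_kummer_positive(x: float) -> float:
    """Equivalent form (2x / sqrt(pi)) * exp(-x^2) * M(1, 3/2, x^2), whose series terms are all positive."""
    return 2 * x / math.sqrt(math.pi) * math.exp(-x * x) * kummer_m(1.0, 1.5, x * x)

for x in (0.5, 1.0, 2.0):
    print(x, erf_via_kummer(x), erf_via_kummer_positive(x), math.erf(x))
```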

It has a simple expression in terms of the Fresnel integral.[further explanation needed]

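In terms of the regularized gamma function P and the incomplete gamma function,

$$\operatorname{erf} x = \operatorname{sgn} x\cdot P\left(\tfrac{1}{2}, x^2\right) = \frac{\operatorname{sgn} x}{\sqrt{\pi}}\,\gamma\left(\tfrac{1}{2}, x^2\right),$$

where sgn x is the sign function.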

Generalized error functions

Graph of generalised error functions En(x):

grey curve: E1(x) = (1 − e⁻ˣ)/√π

red curve: E2(x) = erf(x)

green curve: E3(x)

blue curve: E4(x)

gold curve: E5(x).

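Some authors discuss the more general functions:[citation needed]

$$E_n(x) = \frac{n!}{\sqrt{\pi}}\int_0^x e^{-t^n}\,dt = \frac{n!}{\sqrt{\pi}}\sum_{p=0}^{\infty}(-1)^p\frac{x^{np+1}}{(np+1)\,p!}.$$

Notable cases are:

- E0(x) is a straight line through the origin: E0(x) = x/(e√π)

- E2(x) is the error function, erf x.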

After division by n!, all the En for odd n look similar (but not identical) to each other. Similarly, the En for even n look similar (but not identical) to each other after a simple division by n!. All generalised error functions for n > 0 look similar on the positive x side of the graph.

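These generalised functions can equivalently be expressed for x > 0 using the gamma function and incomplete gamma function:

$$E_n(x) = \frac{1}{\sqrt{\pi}}\Gamma(n)\left(\Gamma\left(\frac{1}{n}\right) - \Gamma\left(\frac{1}{n}, x^n\right)\right), \qquad x > 0.$$

Therefore, we can define the error function in terms of the incomplete gamma function:

$$\operatorname{erf} x = 1 - \frac{1}{\sqrt{\pi}}\Gamma\left(\frac{1}{2}, x^2\right).$$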
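The integral definition of Eₙ can be evaluated directly with a quadrature rule, which makes the notable cases easy to confirm. This Python sketch (illustrative name `generalized_erf`; composite Simpson's rule with an arbitrary even number of steps) compares E₀, E₁ and E₂ with x/(e√π), (1 − e⁻ˣ)/√π and `math.erf`.

```python
import math

def generalized_erf(n: int, x: float, steps: int = 2000) -> float:
    """E_n(x) = n!/sqrt(pi) * integral_0^x exp(-t^n) dt, via composite Simpson's rule (steps even)."""
    def f(t: float) -> float:
        return math.exp(-(t ** n))

    h = x / steps
    s = f(0.0) + f(x)
    for i in range(1, steps):
        s += (4 if i % 2 else 2) * f(i * h)
    return math.factorial(n) / math.sqrt(math.pi) * s * h / 3

x = 1.0
print(generalized_erf(0, x), x / (math.e * math.sqrt(math.pi)))        # E_0 is a straight line
print(generalized_erf(1, x), (1 - math.exp(-x)) / math.sqrt(math.pi))  # closed form for E_1
print(generalized_erf(2, x), math.erf(x))                              # E_2 is erf
```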

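Iterated integrals of the complementary error function

The iterated integrals of the complementary error function are defined by[27]

$$\operatorname{i}^n\!\operatorname{erfc} z = \int_z^{\infty}\operatorname{i}^{n-1}\!\operatorname{erfc}\zeta\,d\zeta,$$

$$\operatorname{i}^0\!\operatorname{erfc} z = \operatorname{erfc} z, \qquad \operatorname{i}^1\!\operatorname{erfc} z = \operatorname{ierfc} z = \frac{1}{\sqrt{\pi}}e^{-z^2} - z\operatorname{erfc} z, \qquad \operatorname{i}^2\!\operatorname{erfc} z = \frac{1}{4}\left(\operatorname{erfc} z - 2z\operatorname{ierfc} z\right).$$

The general recurrence formula is

$$2n\,\operatorname{i}^n\!\operatorname{erfc} z = \operatorname{i}^{n-2}\!\operatorname{erfc} z - 2z\,\operatorname{i}^{n-1}\!\operatorname{erfc} z.$$

They have the power series

$$\operatorname{i}^n\!\operatorname{erfc} z = \sum_{j=0}^{\infty}\frac{(-z)^j}{2^{n-j}\,j!\,\Gamma\left(1 + \frac{n-j}{2}\right)},$$

from which follow the symmetry properties

$$\operatorname{i}^{2m}\!\operatorname{erfc}(-z) = -\operatorname{i}^{2m}\!\operatorname{erfc} z + \sum_{q=0}^{m}\frac{z^{2q}}{2^{2(m-q)-1}\,(2q)!\,(m-q)!}$$

and

$$\operatorname{i}^{2m+1}\!\operatorname{erfc}(-z) = \operatorname{i}^{2m+1}\!\operatorname{erfc} z + \sum_{q=0}^{m}\frac{z^{2q+1}}{2^{2(m-q)-1}\,(2q+1)!\,(m-q)!}.$$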
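The recurrence gives a compact way to compute the first few iterated integrals. The Python sketch below (illustrative name `iterated_erfc`) starts it from i⁰erfc z = erfc z and the usual convention i⁻¹erfc z = (2/√π)e^(−z²), an assumption not stated above that makes the n = 1 case of the recurrence reproduce ierfc. Forward recursion loses accuracy for large n or large positive z, so this is only a small-n illustration.

```python
import math

def iterated_erfc(n: int, z: float) -> float:
    """i^n erfc z for n >= 0 via 2k i^k erfc = i^(k-2) erfc - 2z i^(k-1) erfc,
    started from i^(-1) erfc z = (2/sqrt(pi)) exp(-z^2) and i^0 erfc z = erfc z."""
    prev, cur = 2 / math.sqrt(math.pi) * math.exp(-z * z), math.erfc(z)
    for k in range(1, n + 1):
        prev, cur = cur, (prev - 2 * z * cur) / (2 * k)
    return cur

z = 0.6
print(iterated_erfc(1, z), math.exp(-z * z) / math.sqrt(math.pi) - z * math.erfc(z))  # ierfc
print(iterated_erfc(2, -z) + iterated_erfc(2, z), 0.5 + z * z)                       # symmetry, m = 1
```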

Implementations

As real function of a real argument

- In POSIX-compliant operating systems, the header math.h shall declare and the mathematical library libm shall provide the functions erf and erfc (double precision) as well as their single-precision and extended-precision counterparts erff, erfl and erfcf, erfcl.[28]

- The GNU Scientific Library provides erf, erfc, log(erf), and scaled error functions.[29]
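Python's standard library exposes the same real-valued pair as `math.erf` and `math.erfc` (double precision). The short sketch below shows why a separate erfc is worth having: for large x, computing 1 − erf x cancels completely, while erfc x keeps full relative precision.

```python
import math

x = 10.0
print(math.erfc(x))          # about 2.09e-45, computed directly
print(1.0 - math.erf(x))     # 0.0: erf(10) rounds to exactly 1.0 in double precision
```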

As complex function of a complex argument

libcerf, a numeric C library for complex error functions, provides the complex functions cerf, cerfc, cerfcx and the real functions erfi, erfcx with approximately 13–14 digits precision, based on the Faddeeva function as implemented in the MIT Faddeeva Package.

See also

- Gaussian integral, over the whole real line

- Gaussian function, derivative

- Dawson function, renormalized imaginary error function

- Goodwin–Staton integral

In probability

- Normal distribution

- Normal cumulative distribution function, a scaled and shifted form of error function

- Probit, the inverse or quantile function of the normal CDF

- Q-function, the tail probability of the normal distribution

References

- ^ Andrews, Larry C. (1998). Special functions of mathematics for engineers. SPIE Press. p. 110. ISBN 9780819426161.

- ^ Glaisher, James Whitbread Lee (July 1871). «On a class of definite integrals». London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science. 4. 42 (277): 294–302. doi:10.1080/14786447108640568. Retrieved 6 December 2017.

- ^ Glaisher, James Whitbread Lee (September 1871). «On a class of definite integrals. Part II». London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science. 4. 42 (279): 421–436. doi:10.1080/14786447108640600. Retrieved 6 December 2017.

- ^ «A007680 – OEIS». oeis.org. Retrieved 2 April 2020.

- ^ Weisstein, Eric W. «Erf». MathWorld.

- ^ Schöpf, H. M.; Supancic, P. H. (2014). «On Bürmann’s Theorem and Its Application to Problems of Linear and Nonlinear Heat Transfer and Diffusion». The Mathematica Journal. 16. doi:10.3888/tmj.16-11.

- ^ Weisstein, Eric W. «Bürmann’s Theorem». MathWorld.

- ^ Bergsma, Wicher (2006). «On a new correlation coefficient, its orthogonal decomposition and associated tests of independence». arXiv:math/0604627.

- ^ Cuyt, Annie A. M.; Petersen, Vigdis B.; Verdonk, Brigitte; Waadeland, Haakon; Jones, William B. (2008). Handbook of Continued Fractions for Special Functions. Springer-Verlag. ISBN 978-1-4020-6948-2.

- ^ Ng, Edward W.; Geller, Murray (January 1969). «A table of integrals of the Error functions». Journal of Research of the National Bureau of Standards Section B. 73B (1): 1. doi:10.6028/jres.073B.001.

- ^ Schlömilch, Oskar Xavier (1859). «Ueber facultätenreihen». Zeitschrift für Mathematik und Physik (in German). 4: 390–415. Retrieved 4 December 2017.

- ^ Nielson, Niels (1906). Handbuch der Theorie der Gammafunktion (in German). Leipzig: B. G. Teubner. p. 283 Eq. 3. Retrieved 4 December 2017.

- ^ Chiani, M.; Dardari, D.; Simon, M.K. (2003). «New Exponential Bounds and Approximations for the Computation of Error Probability in Fading Channels» (PDF). IEEE Transactions on Wireless Communications. 2 (4): 840–845. CiteSeerX 10.1.1.190.6761. doi:10.1109/TWC.2003.814350.

- ^ Tanash, I.M.; Riihonen, T. (2020). «Global minimax approximations and bounds for the Gaussian Q-function by sums of exponentials». IEEE Transactions on Communications. 68 (10): 6514–6524. arXiv:2007.06939. doi:10.1109/TCOMM.2020.3006902. S2CID 220514754.

- ^ Tanash, I.M.; Riihonen, T. (2020). «Coefficients for Global Minimax Approximations and Bounds for the Gaussian Q-Function by Sums of Exponentials [Data set]». Zenodo. doi:10.5281/zenodo.4112978.

- ^ Karagiannidis, G. K.; Lioumpas, A. S. (2007). «An improved approximation for the Gaussian Q-function» (PDF). IEEE Communications Letters. 11 (8): 644–646. doi:10.1109/LCOMM.2007.070470. S2CID 4043576.

- ^ Tanash, I.M.; Riihonen, T. (2021). «Improved coefficients for the Karagiannidis–Lioumpas approximations and bounds to the Gaussian Q-function». IEEE Communications Letters. 25 (5): 1468–1471. arXiv:2101.07631. doi:10.1109/LCOMM.2021.3052257. S2CID 231639206.

- ^ Chang, Seok-Ho; Cosman, Pamela C.; Milstein, Laurence B. (November 2011). «Chernoff-Type Bounds for the Gaussian Error Function». IEEE Transactions on Communications. 59 (11): 2939–2944. doi:10.1109/TCOMM.2011.072011.100049. S2CID 13636638.

- ^ Winitzki, Sergei (2003). «Uniform approximations for transcendental functions». Computational Science and Its Applications – ICCSA 2003. Lecture Notes in Computer Science. Vol. 2667. Springer, Berlin. pp. 780–789. doi:10.1007/3-540-44839-X_82. ISBN 978-3-540-40155-1.

- ^ Zeng, Caibin; Chen, Yang Cuan (2015). «Global Padé approximations of the generalized Mittag-Leffler function and its inverse». Fractional Calculus and Applied Analysis. 18 (6): 1492–1506. arXiv:1310.5592. doi:10.1515/fca-2015-0086. S2CID 118148950. Quote: «Indeed, Winitzki [32] provided the so-called global Padé approximation».

- ^ Winitzki, Sergei (6 February 2008). «A handy approximation for the error function and its inverse».

- ^ Numerical Recipes in Fortran 77: The Art of Scientific Computing (ISBN 0-521-43064-X), 1992, page 214, Cambridge University Press.

- ^ a b c Cody, W. J. (March 1993), «Algorithm 715: SPECFUN—A portable FORTRAN package of special function routines and test drivers» (PDF), ACM Trans. Math. Softw., 19 (1): 22–32, CiteSeerX 10.1.1.643.4394, doi:10.1145/151271.151273, S2CID 5621105

- ^ Zaghloul, M. R. (1 March 2007), «On the calculation of the Voigt line profile: a single proper integral with a damped sine integrand», Monthly Notices of the Royal Astronomical Society, 375 (3): 1043–1048, Bibcode:2007MNRAS.375.1043Z, doi:10.1111/j.1365-2966.2006.11377.x

- ^ John W. Craig, A new, simple and exact result for calculating the probability of error for two-dimensional signal constellations Archived 3 April 2012 at the Wayback Machine, Proceedings of the 1991 IEEE Military Communication Conference, vol. 2, pp. 571–575.

- ^ Behnad, Aydin (2020). «A Novel Extension to Craig’s Q-Function Formula and Its Application in Dual-Branch EGC Performance Analysis». IEEE Transactions on Communications. 68 (7): 4117–4125. doi:10.1109/TCOMM.2020.2986209. S2CID 216500014.

- ^ Carslaw, H. S.; Jaeger, J. C. (1959), Conduction of Heat in Solids (2nd ed.), Oxford University Press, ISBN 978-0-19-853368-9, p 484

- ^ https://pubs.opengroup.org/onlinepubs/9699919799/basedefs/math.h.html

- ^ «Special Functions – GSL 2.7 documentation».

Further reading

- Abramowitz, Milton; Stegun, Irene Ann, eds. (1983) [June 1964]. «Chapter 7». Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Applied Mathematics Series. Vol. 55 (Ninth reprint with additional corrections of tenth original printing with corrections (December 1972); first ed.). Washington D.C.; New York: United States Department of Commerce, National Bureau of Standards; Dover Publications. p. 297. ISBN 978-0-486-61272-0. LCCN 64-60036. MR 0167642. LCCN 65-12253.

- Press, William H.; Teukolsky, Saul A.; Vetterling, William T.; Flannery, Brian P. (2007), «Section 6.2. Incomplete Gamma Function and Error Function», Numerical Recipes: The Art of Scientific Computing (3rd ed.), New York: Cambridge University Press, ISBN 978-0-521-88068-8

- Temme, Nico M. (2010), «Error Functions, Dawson’s and Fresnel Integrals», in Olver, Frank W. J.; Lozier, Daniel M.; Boisvert, Ronald F.; Clark, Charles W. (eds.), NIST Handbook of Mathematical Functions, Cambridge University Press, ISBN 978-0-521-19225-5, MR 2723248

External links

- A Table of Integrals of the Error Functions

Syntax

Description

Examples

Imaginary Error Function for Floating-Point and Symbolic Numbers

Depending on its arguments, erfi can return floating-point or exact symbolic results.

Compute the imaginary error function for these numbers. Because these numbers are not symbolic objects, you get floating-point results.

s = [erfi(1/2), erfi(1.41), erfi(sqrt(2))]

Compute the imaginary error function for the same numbers converted to symbolic objects. For most symbolic (exact) numbers, erfi returns unresolved symbolic calls.

s = [erfi(sym(1/2)), erfi(sym(1.41)), erfi(sqrt(sym(2)))]

s = [ erfi(1/2), erfi(141/100), erfi(2^(1/2))]

Use vpa to approximate this result with 10-digit accuracy:

vpa(s, 10)

ans = [ 0.6149520947, 3.738199581, 3.773122512]

Imaginary Error Function for Variables and Expressions

Compute the imaginary error function for x and sin(x) + x*exp(x). For most symbolic variables and expressions, erfi returns unresolved symbolic calls.

syms x

f = sin(x) + x*exp(x);

erfi(x)

erfi(f)

ans = erfi(x)

ans = erfi(sin(x) + x*exp(x))

Imaginary Error Function for Vectors and Matrices

If the input argument is a vector or a matrix, erfi returns the imaginary error function for each element of that vector or matrix.

Compute the imaginary error function for elements of matrix M and vector V:

M = sym([0 inf; 1/3 -inf]);

V = sym([1; -i*inf]);

erfi(M)

erfi(V)

ans =

[ 0, Inf]

[ erfi(1/3), -Inf]

ans =

erfi(1)

-1i

Special Values of Imaginary Error Function

Compute the imaginary error function for x = 0, x = ∞, and x = −∞. Use sym to convert 0 and infinities to symbolic objects. The imaginary error function has special values for these parameters:

[erfi(sym(0)), erfi(sym(inf)), erfi(sym(-inf))]

Compute the imaginary error function for complex infinities. Use sym to convert complex infinities to symbolic objects:

[erfi(sym(i*inf)), erfi(sym(-i*inf))]

Handling Expressions That Contain Imaginary Error Function

Many functions, such as diff and int, can handle expressions containing erfi.

Compute the first and second derivatives of the imaginary error function:

syms x

diff(erfi(x), x)

diff(erfi(x), x, 2)

ans = (2*exp(x^2))/pi^(1/2)

ans = (4*x*exp(x^2))/pi^(1/2)

Compute the integrals of these expressions:

int(erfi(x), x)

int(erfi(log(x)), x)

ans = x*erfi(x) - exp(x^2)/pi^(1/2)

ans = x*erfi(log(x)) - int((2*exp(log(x)^2))/pi^(1/2), x)

Plot Imaginary Error Function

Plot the imaginary error function on the interval from -2 to 2.

syms x

fplot(erfi(x),[-2,2])

grid on

Input Arguments


x — Input

floating-point number | symbolic number | symbolic variable | symbolic expression | symbolic function | symbolic vector | symbolic matrix

Input, specified as a floating-point or symbolic number, variable, expression, function, vector, or matrix.

More About


Imaginary Error Function

The imaginary error function is defined as:

$$\operatorname{erfi}(x) = -i\operatorname{erf}(ix) = \frac{2}{\sqrt{\pi}}\int_0^x e^{t^2}\,dt$$

Tips

- erfi returns special values for these parameters:

  - erfi(0) = 0
  - erfi(inf) = inf
  - erfi(-inf) = -inf
  - erfi(i*inf) = i
  - erfi(-i*inf) = -i

Version History

Introduced in R2013a

DiracDelta

class sympy.functions.special.delta_functions.DiracDelta(arg, k=0)

The DiracDelta function and its derivatives.

Explanation

DiracDelta is not an ordinary function. It can be rigorously defined either as a distribution or as a measure.

DiracDelta only makes sense in definite integrals, and in particular, integrals of the form Integral(f(x)*DiracDelta(x - x0), (x, a, b)), where it equals f(x0) if a <= x0 <= b and 0 otherwise. Formally, DiracDelta acts in some ways like a function that is 0 everywhere except at 0, but in many ways it also does not. It can often be useful to treat DiracDelta in formal ways, building up and manipulating expressions with delta functions (which may eventually be integrated), but care must be taken to not treat it as a real function. SymPy's oo is similar. It only truly makes sense formally in certain contexts (such as integration limits), but SymPy allows its use everywhere, and it tries to be consistent with operations on it (like 1/oo), but it is easy to get into trouble and get wrong results if oo is treated too much like a number. Similarly, if DiracDelta is treated too much like a function, it is easy to get wrong or nonsensical results.

DiracDelta function has the following properties:

- $\frac{d}{dx}\theta(x) = \delta(x)$

- $\int_{-\infty}^{\infty}\delta(x - a)f(x)\,dx = f(a)$ and $\int_{a-\epsilon}^{a+\epsilon}\delta(x - a)f(x)\,dx = f(a)$

- $\delta(x) = 0$ for all $x \neq 0$

- $\delta(g(x)) = \sum_i \frac{\delta(x - x_i)}{|g'(x_i)|}$ where $x_i$ are the roots of $g$

- $\delta(-x) = \delta(x)$
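The sifting and composition properties can be illustrated without SymPy by replacing δ with a narrow normalized Gaussian δ_ε, a so-called nascent delta. This is an illustrative numerical sketch in plain Python, not how SymPy's DiracDelta works internally; `nascent_delta`, `integrate`, the width `eps` and the test function cos are all arbitrary choices.

```python
import math

def nascent_delta(x: float, eps: float) -> float:
    """Normalized Gaussian of width eps; approaches the Dirac delta as eps -> 0."""
    return math.exp(-0.5 * (x / eps) ** 2) / (eps * math.sqrt(2 * math.pi))

def integrate(f, a: float, b: float, n: int = 200_000) -> float:
    """Midpoint rule on [a, b] with n subintervals."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

eps = 1e-3
f = math.cos

# Sifting: the integral of f(x) * delta(x - 0.4) over [-2, 2] should approach f(0.4).
sift = integrate(lambda x: f(x) * nascent_delta(x - 0.4, eps), -2.0, 2.0)

# Composition: g(x) = x^2 - 1 has roots +-1 with |g'(+-1)| = 2,
# so the integral of f(x) * delta(x^2 - 1) should approach (f(1) + f(-1)) / 2.
comp = integrate(lambda x: f(x) * nascent_delta(x * x - 1, eps), -2.0, 2.0)

print(sift, f(0.4))
print(comp, (f(1.0) + f(-1.0)) / 2)
```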

Derivatives of k-th order of DiracDelta have the following properties:

- $\delta(x, k) = 0$ for all $x \neq 0$

- $\delta(-x, k) = -\delta(x, k)$ for odd $k$

- $\delta(-x, k) = \delta(x, k)$ for even $k$

Examples

>>> from sympy import DiracDelta, diff, pi
>>> from sympy.abc import x, y

>>> DiracDelta(x)
DiracDelta(x)
>>> DiracDelta(1)
0
>>> DiracDelta(-1)
0
>>> DiracDelta(pi)
0
>>> DiracDelta(x - 4).subs(x, 4)
DiracDelta(0)
>>> diff(DiracDelta(x))
DiracDelta(x, 1)
>>> diff(DiracDelta(x - 1), x, 2)
DiracDelta(x - 1, 2)
>>> diff(DiracDelta(x**2 - 1), x, 2)
2*(2*x**2*DiracDelta(x**2 - 1, 2) + DiracDelta(x**2 - 1, 1))
>>> DiracDelta(3*x).is_simple(x)
True
>>> DiracDelta(x**2).is_simple(x)
False
>>> DiracDelta((x**2 - 1)*y).expand(diracdelta=True, wrt=x)
DiracDelta(x - 1)/(2*Abs(y)) + DiracDelta(x + 1)/(2*Abs(y))

References

classmethod eval(arg, k=0)

Returns a simplified form or a value of DiracDelta depending on the argument passed by the DiracDelta object.

Parameters:

k : integer

order of derivative

arg : argument passed to DiracDelta

Explanation

The eval() method is automatically called when the DiracDelta class is about to be instantiated, and it returns either some simplified instance or the unevaluated instance depending on the argument passed. In other words, eval() does not need to be called explicitly; it is called automatically when the object is created.

Examples

>>> from sympy import DiracDelta, S
>>> from sympy.abc import x

>>> DiracDelta(x)
DiracDelta(x)

>>> DiracDelta(-x, 1)
-DiracDelta(x, 1)

>>> DiracDelta(0)
DiracDelta(0)

>>> DiracDelta(S.NaN)
nan

>>> DiracDelta(x - 100).subs(x, 5)
0

>>> DiracDelta(x - 100).subs(x, 100)
DiracDelta(0)

fdiff(argindex=1)

Returns the first derivative of a DiracDelta Function.

Parameters:

argindex : integer

degree of derivative

Explanation

The difference between diff() and fdiff() is: diff() is the user-level function and fdiff() is an object method. fdiff() is a convenience method available in the Function class. It returns the derivative of the function without considering the chain rule. diff(function, x) calls Function._eval_derivative, which in turn calls fdiff() internally to compute the derivative of the function.

Examples

>>> from sympy import DiracDelta, diff
>>> from sympy.abc import x

>>> DiracDelta(x).fdiff()
DiracDelta(x, 1)

>>> DiracDelta(x, 1).fdiff()
DiracDelta(x, 2)

>>> DiracDelta(x**2 - 1).fdiff()
DiracDelta(x**2 - 1, 1)

>>> diff(DiracDelta(x, 1)).fdiff()
DiracDelta(x, 3)

is_simple(x)

Tells whether the argument (args[0]) of DiracDelta is a linear expression in x.

Parameters:

x : can be a symbol

Examples

>>> from sympy import DiracDelta, cos
>>> from sympy.abc import x, y

>>> DiracDelta(x*y).is_simple(x)
True
>>> DiracDelta(x*y).is_simple(y)
True

>>> DiracDelta(x**2 + x - 2).is_simple(x)
False

>>> DiracDelta(cos(x)).is_simple(x)
False

Heaviside

class sympy.functions.special.delta_functions.Heaviside(arg, H0=1 / 2)

Heaviside step function.

Explanation

The Heaviside step function has the following properties:

- $\frac{d}{dx}\theta(x) = \delta(x)$

- $\theta(x) = \begin{cases} 0 & \text{for}\: x < 0 \\ \frac{1}{2} & \text{for}\: x = 0 \\ 1 & \text{for}\: x > 0 \end{cases}$

- $\frac{d}{dx}\max(x, 0) = \theta(x)$

Heaviside(x) is printed as $\theta(x)$ with the SymPy LaTeX printer.

The value at 0 is set differently in different fields. SymPy uses 1/2, which is a convention from electronics and signal processing, and is consistent with solving improper integrals by Fourier transform and convolution.

To specify a different value of Heaviside at x=0, a second argument can be given. Using Heaviside(x, nan) gives an expression that will evaluate to nan for x=0.

Changed in version 1.9: Heaviside(0) now returns 1/2 (before: undefined).

Examples

>>> from sympy import Heaviside, nan
>>> from sympy.abc import x
>>> Heaviside(9)
1
>>> Heaviside(-9)
0
>>> Heaviside(0)
1/2
>>> Heaviside(0, nan)
nan
>>> (Heaviside(x) + 1).replace(Heaviside(x), Heaviside(x, 1))
Heaviside(x, 1) + 1
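The effect of the optional H0 argument can be checked directly. This is a small sketch assuming SymPy 1.9 or later (where Heaviside(0) is 1/2); the "left/right-continuous" labels in the comments are the usual mathematical terms, not SymPy terminology.

```python
from sympy import Heaviside, S, nan
from sympy.abc import x

# SymPy's default convention: theta(0) = 1/2
print(Heaviside(0))                  # 1/2

# A second argument H0 fixes the value at x = 0 instead
print(Heaviside(x, 1).subs(x, 0))    # 1   (right-continuous step)
print(Heaviside(x, 0).subs(x, 0))    # 0   (left-continuous step)
print(Heaviside(x, nan).subs(x, 0))  # nan

# Away from the origin H0 has no effect
print(Heaviside(x, 1).subs(x, 3), Heaviside(x, 0).subs(x, -3))  # 1 0
```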


- classmethod eval(arg, H0=1/2)

Returns a simplified form or a value of Heaviside depending on the
argument passed by the Heaviside object.

- Parameters:

arg : argument passed by Heaviside object

H0 : value of Heaviside(0)

Explanation

The eval() method is automatically called when the Heaviside
class is about to be instantiated, and it returns either a simplified
instance or the unevaluated instance depending on the argument passed.
In other words, eval() does not need to be called explicitly; it is
called automatically whenever the object is instantiated.

Examples

>>> from sympy import Heaviside, S
>>> from sympy.abc import x

>>> Heaviside(x)
Heaviside(x)

>>> Heaviside(x - 100).subs(x, 5)
0

>>> Heaviside(x - 100).subs(x, 105)
1

- fdiff(argindex=1)

Returns the first derivative of a Heaviside Function.

- Parameters:

argindex : integer

order of derivative

Examples

>>> from sympy import Heaviside, diff
>>> from sympy.abc import x

>>> Heaviside(x).fdiff()
DiracDelta(x)

>>> Heaviside(x**2 - 1).fdiff()
DiracDelta(x**2 - 1)

>>> diff(Heaviside(x)).fdiff()
DiracDelta(x, 1)

- property pargs

Args without default S.Half

Singularity Function

- class sympy.functions.special.singularity_functions.SingularityFunction(variable, offset, exponent)

Singularity functions are a class of discontinuous functions.

Explanation

Singularity functions take a variable, an offset, and an exponent as
arguments. These functions are represented using Macaulay brackets as:

SingularityFunction(x, a, n) := <x - a>^n

The singularity function is equivalent to
Derivative(DiracDelta(x - a), x, -n - 1) if n < 0
and to (x - a)**n*Heaviside(x - a) if n >= 0; these forms can be
obtained with rewrite(DiracDelta) or rewrite(Heaviside).

Examples

>>> from sympy import SingularityFunction, diff, Piecewise, DiracDelta, Heaviside, Symbol
>>> from sympy.abc import x, a, n
>>> SingularityFunction(x, a, n)
SingularityFunction(x, a, n)
>>> y = Symbol('y', positive=True)
>>> n = Symbol('n', nonnegative=True)
>>> SingularityFunction(y, -10, n)
(y + 10)**n
>>> y = Symbol('y', negative=True)
>>> SingularityFunction(y, 10, n)
0
>>> SingularityFunction(x, 4, -1).subs(x, 4)
oo
>>> SingularityFunction(x, 10, -2).subs(x, 10)
oo
>>> SingularityFunction(4, 1, 5)
243
>>> diff(SingularityFunction(x, 1, 5) + SingularityFunction(x, 1, 4), x)
4*SingularityFunction(x, 1, 3) + 5*SingularityFunction(x, 1, 4)
>>> diff(SingularityFunction(x, 4, 0), x, 2)
SingularityFunction(x, 4, -2)
>>> SingularityFunction(x, 4, 5).rewrite(Piecewise)
Piecewise(((x - 4)**5, x > 4), (0, True))
>>> expr = SingularityFunction(x, a, n)
>>> y = Symbol('y', positive=True)
>>> n = Symbol('n', nonnegative=True)
>>> expr.subs({x: y, a: -10, n: n})
(y + 10)**n

The methods rewrite(DiracDelta), rewrite(Heaviside), and
rewrite('HeavisideDiracDelta') return the same output, so any of them
can be used.

>>> expr = SingularityFunction(x, 4, 5) + SingularityFunction(x, -3, -1) - SingularityFunction(x, 0, -2)
>>> expr.rewrite(Heaviside)
(x - 4)**5*Heaviside(x - 4) + DiracDelta(x + 3) - DiracDelta(x, 1)
>>> expr.rewrite(DiracDelta)
(x - 4)**5*Heaviside(x - 4) + DiracDelta(x + 3) - DiracDelta(x, 1)
>>> expr.rewrite('HeavisideDiracDelta')
(x - 4)**5*Heaviside(x - 4) + DiracDelta(x + 3) - DiracDelta(x, 1)
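As a sketch of how the Macaulay-bracket behaviour plays out for one concrete exponent, direct evaluation, differentiation and the Piecewise rewrite can be compared. The ramp <x - 2>^1 is an arbitrary choice, and `ramp`, `step` and `pw` are illustrative names.

```python
from sympy import SingularityFunction, Piecewise, diff
from sympy.abc import x

# Macaulay bracket <x - 2>^1: 0 for x < 2 and (x - 2) for x > 2
ramp = SingularityFunction(x, 2, 1)

print(ramp.subs(x, 1))   # 0
print(ramp.subs(x, 5))   # 3

# Differentiating lowers the exponent: d/dx <x - 2>^1 = <x - 2>^0 (a unit step)
step = diff(ramp, x)
print(step)              # SingularityFunction(x, 2, 0)

# The Piecewise form agrees with direct evaluation at sample points
pw = ramp.rewrite(Piecewise)
for v in (0, 1, 3, 5):
    assert pw.subs(x, v) == ramp.subs(x, v)
```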

See also

DiracDelta, Heaviside

- classmethod eval(variable, offset, exponent)

Returns a simplified form or a value of Singularity Function depending
on the argument passed by the object.

Explanation

The eval() method is automatically called when the
SingularityFunction class is about to be instantiated, and it
returns either a simplified instance or the unevaluated instance
depending on the argument passed. In other words, eval() does not
need to be called explicitly; it is called automatically whenever
the object is instantiated.

Examples

>>> from sympy import SingularityFunction, Symbol, nan
>>> from sympy.abc import x, a, n
>>> SingularityFunction(x, a, n)
SingularityFunction(x, a, n)
>>> SingularityFunction(5, 3, 2)
4
>>> SingularityFunction(x, a, nan)
nan
>>> SingularityFunction(x, 3, 0).subs(x, 3)
1
>>> SingularityFunction(4, 1, 5)
243
>>> x = Symbol('x', positive=True)
>>> a = Symbol('a', negative=True)
>>> n = Symbol('n', nonnegative=True)
>>> SingularityFunction(x, a, n)
(-a + x)**n
>>> x = Symbol('x', negative=True)
>>> a = Symbol('a', positive=True)
>>> SingularityFunction(x, a, n)
0

- fdiff(argindex=1)

Returns the first derivative of a SingularityFunction.

Explanation

The difference between diff() and fdiff() is: diff() is the
user-level function and fdiff() is an object method. fdiff() is
a convenience method available in the Function class. It returns
the derivative of the function without considering the chain rule.
diff(function, x) calls Function._eval_derivative, which in turn
calls fdiff() internally to compute the derivative of the function.

Gamma, Beta and related Functions

- class sympy.functions.special.gamma_functions.gamma(arg)

The gamma function

\[\Gamma(x) := \int^{\infty}_{0} t^{x-1} e^{-t} \mathrm{d}t.\]

Explanation

The gamma function implements the function which passes through the
values of the factorial function (i.e., \(\Gamma(n) = (n - 1)!\) when n is
a positive integer). More generally, \(\Gamma(z)\) is defined in the whole
complex plane except at the non-positive integers, where there are simple poles.

Examples

>>> from sympy import S, I, pi, gamma
>>> from sympy.abc import x

Several special values are known:

>>> gamma(1)
1
>>> gamma(4)
6
>>> gamma(S(3)/2)
sqrt(pi)/2

The gamma function obeys the mirror symmetry:

>>> from sympy import conjugate
>>> conjugate(gamma(x))
gamma(conjugate(x))

Differentiation with respect to \(x\) is supported:

>>> from sympy import diff
>>> diff(gamma(x), x)
gamma(x)*polygamma(0, x)

Series expansion is also supported:

>>> from sympy import series
>>> series(gamma(x), x, 0, 3)
1/x - EulerGamma + x*(EulerGamma**2/2 + pi**2/12) + x**2*(-EulerGamma*pi**2/12 + polygamma(2, 1)/6 - EulerGamma**3/6) + O(x**3)

We can numerically evaluate the gamma function to arbitrary precision
on the whole complex plane:

>>> gamma(pi).evalf(40)
2.288037795340032417959588909060233922890
>>> gamma(1+I).evalf(20)
0.49801566811835604271 - 0.15494982830181068512*I
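The factorial relation and the functional equation \(\Gamma(z + 1) = z\Gamma(z)\) can be spot-checked with exact arithmetic. A minimal sketch using only the public gamma and factorial functions; the test point z = 3/2 is arbitrary.

```python
from sympy import gamma, factorial, S

# Gamma(n) = (n - 1)! at the positive integers
for n in range(1, 10):
    assert gamma(n) == factorial(n - 1)

# The functional equation Gamma(z + 1) = z*Gamma(z), checked at z = 3/2
z = S(3) / 2
print(gamma(z + 1))      # 3*sqrt(pi)/4
print(z * gamma(z))      # 3*sqrt(pi)/4
```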

See also

lowergamma-

Lower incomplete gamma function.

uppergamma-

Upper incomplete gamma function.

polygamma-

Polygamma function.

loggamma-

Log Gamma function.

digamma-

Digamma function.

trigamma-

Trigamma function.

beta-

Euler Beta function.


- class sympy.functions.special.gamma_functions.loggamma(z)

The loggamma function implements the logarithm of the
gamma function (i.e., \(\log\Gamma(x)\)).

Examples

Several special values are known. For integer arguments we have:

>>> from sympy import loggamma
>>> loggamma(-2)
oo
>>> loggamma(0)
oo
>>> loggamma(1)
0
>>> loggamma(2)
0
>>> loggamma(3)
log(2)

And for symbolic values:

>>> from sympy import Symbol
>>> n = Symbol("n", integer=True, positive=True)
>>> loggamma(n)
log(gamma(n))
>>> loggamma(-n)
oo

For half-integral values:

>>> from sympy import S
>>> loggamma(S(5)/2)
log(3*sqrt(pi)/4)
>>> loggamma(n/2)
log(2**(1 - n)*sqrt(pi)*gamma(n)/gamma(n/2 + 1/2))

And general rational arguments:

>>> from sympy import expand_func
>>> L = loggamma(S(16)/3)
>>> expand_func(L).doit()
-5*log(3) + loggamma(1/3) + log(4) + log(7) + log(10) + log(13)
>>> L = loggamma(S(19)/4)
>>> expand_func(L).doit()
-4*log(4) + loggamma(3/4) + log(3) + log(7) + log(11) + log(15)
>>> L = loggamma(S(23)/7)
>>> expand_func(L).doit()
-3*log(7) + log(2) + loggamma(2/7) + log(9) + log(16)

The loggamma function has the following limits towards infinity:

>>> from sympy import oo
>>> loggamma(oo)
oo
>>> loggamma(-oo)
zoo

The loggamma function obeys the mirror symmetry
if \(x \in \mathbb{C} \setminus \{-\infty, 0\}\):

>>> from sympy.abc import x
>>> from sympy import conjugate
>>> conjugate(loggamma(x))
loggamma(conjugate(x))

Differentiation with respect to \(x\) is supported:

>>> from sympy import diff
>>> diff(loggamma(x), x)
polygamma(0, x)

Series expansion is also supported:

>>> from sympy import series
>>> series(loggamma(x), x, 0, 4).cancel()
-log(x) - EulerGamma*x + pi**2*x**2/12 + x**3*polygamma(2, 1)/6 + O(x**4)

We can numerically evaluate the loggamma function to arbitrary precision
on the whole complex plane:

>>> from sympy import I
>>> loggamma(5).evalf(30)
3.17805383034794561964694160130
>>> loggamma(I).evalf(20)
-0.65092319930185633889 - 1.8724366472624298171*I
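One practical reason to prefer loggamma over a floating-point gamma is range. The sketch below uses Python's standard math module as the float baseline (an assumption of this example, not part of SymPy); the argument 200 is an arbitrary choice large enough to overflow a double, since 199! is far above the largest double.

```python
import math
from sympy import loggamma

# Float gamma overflows long before its logarithm does
try:
    math.gamma(200)
    overflowed = False
except OverflowError:
    overflowed = True
print(overflowed)  # True

# loggamma(200) evaluates exactly to log(199!), which is a modest float
value = float(loggamma(200))
print(value)
assert math.isclose(value, math.lgamma(200), rel_tol=1e-12)
```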

See also

gamma-

Gamma function.

lowergamma-

Lower incomplete gamma function.

uppergamma-

Upper incomplete gamma function.

polygamma-

Polygamma function.

digamma-

Digamma function.

trigamma-

Trigamma function.

beta-

Euler Beta function.


- class sympy.functions.special.gamma_functions.polygamma(n, z)

The function polygamma(n, z) returns log(gamma(z)).diff(n + 1).

Explanation

It is a meromorphic function on \(\mathbb{C}\) and defined as the \((n+1)\)-th
derivative of the logarithm of the gamma function:

\[\psi^{(n)} (z) := \frac{\mathrm{d}^{n+1}}{\mathrm{d} z^{n+1}} \log\Gamma(z).\]

Examples

Several special values are known:

>>> from sympy import S, polygamma
>>> polygamma(0, 1)
-EulerGamma
>>> polygamma(0, 1/S(2))
-2*log(2) - EulerGamma
>>> polygamma(0, 1/S(3))
-log(3) - sqrt(3)*pi/6 - EulerGamma - log(sqrt(3))
>>> polygamma(0, 1/S(4))
-pi/2 - log(4) - log(2) - EulerGamma
>>> polygamma(0, 2)
1 - EulerGamma
>>> polygamma(0, 23)
19093197/5173168 - EulerGamma

>>> from sympy import oo, I
>>> polygamma(0, oo)
oo
>>> polygamma(0, -oo)
oo
>>> polygamma(0, I*oo)
oo
>>> polygamma(0, -I*oo)
oo

Differentiation with respect to \(x\) is supported:

>>> from sympy import Symbol, diff
>>> x = Symbol("x")
>>> diff(polygamma(0, x), x)
polygamma(1, x)
>>> diff(polygamma(0, x), x, 2)
polygamma(2, x)
>>> diff(polygamma(0, x), x, 3)
polygamma(3, x)
>>> diff(polygamma(1, x), x)
polygamma(2, x)
>>> diff(polygamma(1, x), x, 2)
polygamma(3, x)
>>> diff(polygamma(2, x), x)
polygamma(3, x)
>>> diff(polygamma(2, x), x, 2)
polygamma(4, x)

>>> n = Symbol("n")
>>> diff(polygamma(n, x), x)
polygamma(n + 1, x)
>>> diff(polygamma(n, x), x, 2)
polygamma(n + 2, x)

We can rewrite polygamma functions in terms of harmonic numbers:

>>> from sympy import harmonic
>>> polygamma(0, x).rewrite(harmonic)
harmonic(x - 1) - EulerGamma
>>> polygamma(2, x).rewrite(harmonic)
2*harmonic(x - 1, 3) - 2*zeta(3)
>>> ni = Symbol("n", integer=True)
>>> polygamma(ni, x).rewrite(harmonic)
(-1)**(n + 1)*(-harmonic(x - 1, n + 1) + zeta(n + 1))*factorial(n)
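The harmonic-number rewrites can be spot-checked at positive integers. This sketch assumes polygamma evaluates at positive integer arguments (as the special values above show) and uses \(\zeta(2) = \pi^2/6\) for the trigamma case; the range of test points is arbitrary.

```python
from sympy import polygamma, harmonic, EulerGamma, pi

# digamma at positive integers: psi(n) = H_{n-1} - EulerGamma (exact comparison)
for n in range(1, 12):
    assert polygamma(0, n) == harmonic(n - 1) - EulerGamma

# trigamma at positive integers: psi'(n) = pi**2/6 - H_{n-1}^{(2)} (numeric comparison)
for n in range(1, 12):
    lhs = polygamma(1, n).evalf(30)
    rhs = (pi**2 / 6 - harmonic(n - 1, 2)).evalf(30)
    assert abs(float(lhs - rhs)) < 1e-12
```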

See also

gamma-

Gamma function.

lowergamma-

Lower incomplete gamma function.

uppergamma-

Upper incomplete gamma function.

loggamma-

Log Gamma function.

digamma-

Digamma function.

trigamma-

Trigamma function.

beta-

Euler Beta function.


- class sympy.functions.special.gamma_functions.digamma(z)

The digamma function is the first derivative of the loggamma
function

\[\psi(z) := \frac{\mathrm{d}}{\mathrm{d} z} \log\Gamma(z)
= \frac{\Gamma'(z)}{\Gamma(z)}.\]

In this case, digamma(z) = polygamma(0, z).

Examples

>>> from sympy import digamma
>>> digamma(0)
zoo
>>> from sympy import Symbol
>>> z = Symbol('z')
>>> digamma(z)
polygamma(0, z)

To retain digamma as it is:

>>> digamma(0, evaluate=False)
digamma(0)
>>> digamma(z, evaluate=False)
digamma(z)

See also

gamma-

Gamma function.

lowergamma-

Lower incomplete gamma function.

uppergamma-

Upper incomplete gamma function.

polygamma-

Polygamma function.

loggamma-

Log Gamma function.

trigamma-

Trigamma function.

beta-

Euler Beta function.


- class sympy.functions.special.gamma_functions.trigamma(z)

The trigamma function is the second derivative of the loggamma
function

\[\psi^{(1)}(z) := \frac{\mathrm{d}^{2}}{\mathrm{d} z^{2}} \log\Gamma(z).\]

In this case, trigamma(z) = polygamma(1, z).

Examples

>>> from sympy import trigamma
>>> trigamma(0)
zoo
>>> from sympy import Symbol
>>> z = Symbol('z')
>>> trigamma(z)
polygamma(1, z)

To retain trigamma as it is:

>>> trigamma(0, evaluate=False)
trigamma(0)
>>> trigamma(z, evaluate=False)
trigamma(z)

See also

gamma-

Gamma function.

lowergamma-

Lower incomplete gamma function.

uppergamma-

Upper incomplete gamma function.

polygamma-

Polygamma function.

loggamma-

Log Gamma function.

digamma-

Digamma function.

beta-

Euler Beta function.


- class sympy.functions.special.gamma_functions.uppergamma(a, z)

The upper incomplete gamma function.

Explanation

It can be defined as the meromorphic continuation of

\[\Gamma(s, x) := \int_x^\infty t^{s-1} e^{-t} \mathrm{d}t = \Gamma(s) - \gamma(s, x),\]

where \(\gamma(s, x)\) is the lower incomplete gamma function,
lowergamma. This can be shown to be the same as

\[\Gamma(s, x) = \Gamma(s) - \frac{x^s}{s} {}_1F_1\left({s \atop s+1} \middle| -x\right),\]

where \({}_1F_1\) is the (confluent) hypergeometric function.

The upper incomplete gamma function is also essentially equivalent to the
generalized exponential integral:

\[\operatorname{E}_{n}(x) = \int_{1}^{\infty}{\frac{e^{-xt}}{t^n} \, dt} = x^{n-1}\Gamma(1-n,x).\]

Examples

>>> from sympy import uppergamma, S
>>> from sympy.abc import s, x
>>> uppergamma(s, x)
uppergamma(s, x)
>>> uppergamma(3, x)
2*(x**2/2 + x + 1)*exp(-x)
>>> uppergamma(-S(1)/2, x)
-2*sqrt(pi)*erfc(sqrt(x)) + 2*exp(-x)/sqrt(x)
>>> uppergamma(-2, x)
expint(3, x)/x**2

See also

gamma-

Gamma function.

lowergamma-

Lower incomplete gamma function.

polygamma-

Polygamma function.

loggamma-

Log Gamma function.

digamma-

Digamma function.

trigamma-

Trigamma function.

beta-

Euler Beta function.


- class sympy.functions.special.gamma_functions.lowergamma(a, x)

The lower incomplete gamma function.

Explanation

It can be defined as the meromorphic continuation of

\[\gamma(s, x) := \int_0^x t^{s-1} e^{-t} \mathrm{d}t = \Gamma(s) - \Gamma(s, x).\]

This can be shown to be the same as

\[\gamma(s, x) = \frac{x^s}{s} {}_1F_1\left({s \atop s+1} \middle| -x\right),\]

where \({}_1F_1\) is the (confluent) hypergeometric function.

Examples

>>> from sympy import lowergamma, S
>>> from sympy.abc import s, x
>>> lowergamma(s, x)
lowergamma(s, x)
>>> lowergamma(3, x)
-2*(x**2/2 + x + 1)*exp(-x) + 2
>>> lowergamma(-S(1)/2, x)
-2*sqrt(pi)*erf(sqrt(x)) - 2*exp(-x)/sqrt(x)
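The defining relation \(\gamma(s, x) + \Gamma(s, x) = \Gamma(s)\) can be checked both symbolically and numerically. A small sketch; the integer order 3, the non-integer order s = 5/2 and the point x = 3/2 are arbitrary choices, and `total` is an illustrative name.

```python
import math
from sympy import lowergamma, uppergamma, gamma, simplify, Rational
from sympy.abc import x

# Symbolically for s = 3 (both pieces have closed forms, shown above)
assert simplify(lowergamma(3, x) + uppergamma(3, x) - gamma(3)) == 0

# Numerically for a non-integer order s = 5/2 at x = 3/2
s, xv = Rational(5, 2), Rational(3, 2)
total = float(lowergamma(s, xv) + uppergamma(s, xv))
print(total, float(gamma(s)))
assert math.isclose(total, float(gamma(s)), rel_tol=1e-12)
```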

See also

gamma-

Gamma function.

uppergamma-

Upper incomplete gamma function.

polygamma-

Polygamma function.

loggamma-

Log Gamma function.

digamma-

Digamma function.

trigamma-

Trigamma function.

beta-

Euler Beta function.


- class sympy.functions.special.gamma_functions.multigamma(x, p)

The multivariate gamma function is a generalization of the gamma function

\[\Gamma_p(z) = \pi^{p(p-1)/4}\prod_{k=1}^p \Gamma[z + (1 - k)/2].\]

In a special case, multigamma(x, 1) = gamma(x).

- Parameters:

p : order or dimension of the multivariate gamma function

Examples

>>> from sympy import S, multigamma
>>> from sympy import Symbol
>>> x = Symbol('x')
>>> p = Symbol('p', positive=True, integer=True)

>>> multigamma(x, p)
pi**(p*(p - 1)/4)*Product(gamma(-_k/2 + x + 1/2), (_k, 1, p))

Several special values are known:

>>> multigamma(1, 1)
1
>>> multigamma(4, 1)
6
>>> multigamma(S(3)/2, 1)
sqrt(pi)/2

Writing multigamma in terms of the gamma function:

>>> multigamma(x, 1)
gamma(x)

>>> multigamma(x, 2)
sqrt(pi)*gamma(x)*gamma(x - 1/2)

>>> multigamma(x, 3)
pi**(3/2)*gamma(x)*gamma(x - 1)*gamma(x - 1/2)
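To see that multigamma matches the product formula, the formula can be coded directly. The helper `multigamma_by_definition` is hypothetical (it is not part of SymPy) and only evaluates the finite product for a concrete positive integer p; the test points are arbitrary.

```python
from sympy import multigamma, gamma, pi, Rational, simplify


def multigamma_by_definition(z, p):
    """Hypothetical helper: pi**(p(p-1)/4) * prod_{k=1..p} Gamma(z + (1 - k)/2)."""
    result = pi**Rational(p * (p - 1), 4)
    for k in range(1, p + 1):
        result *= gamma(z + Rational(1 - k, 2))
    return result


for z, p in [(3, 2), (4, 3), (Rational(7, 2), 2)]:
    assert simplify(multigamma(z, p) - multigamma_by_definition(z, p)) == 0

print(multigamma(3, 2))  # 3*pi/2
```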


- class sympy.functions.special.beta_functions.beta(x, y=None)

The beta integral is called the Eulerian integral of the first kind by
Legendre:

\[\mathrm{B}(x,y) := \int^{1}_{0} t^{x-1} (1-t)^{y-1} \mathrm{d}t.\]

Explanation

The Beta function or Euler's first integral is closely associated
with the gamma function. The Beta function is often used in probability
theory and mathematical statistics. It satisfies properties like:

\[\begin{split}\mathrm{B}(a,1) = \frac{1}{a} \\
\mathrm{B}(a,b) = \mathrm{B}(b,a) \\
\mathrm{B}(a,b) = \frac{\Gamma(a) \Gamma(b)}{\Gamma(a+b)}\end{split}\]

Therefore for positive integer values of \(a\) and \(b\):

\[\mathrm{B}(a,b) = \frac{(a-1)! (b-1)!}{(a+b-1)!}\]

A special case of the Beta function when \(x = y\) is the
Central Beta function. It satisfies properties like:

\[\begin{split}\mathrm{B}(x) = 2^{1 - 2x}\mathrm{B}(x, \frac{1}{2}) \\
\mathrm{B}(x) = 2^{1 - 2x} \cos(\pi x) \mathrm{B}(\frac{1}{2} - x, x) \\
\mathrm{B}(x) = 2\int_{0}^{1} \frac{t^{x-1}}{(1 + t)^{2x}} \mathrm{d}t \\
\mathrm{B}(x) = \frac{2}{x} \prod_{n = 1}^{\infty} \frac{n(n + 2x)}{(n + x)^2}\end{split}\]

Examples

>>> from sympy import I, pi
>>> from sympy.abc import x, y

The Beta function obeys the mirror symmetry:

>>> from sympy import beta, conjugate
>>> conjugate(beta(x, y))
beta(conjugate(x), conjugate(y))

Differentiation with respect to both \(x\) and \(y\) is supported:

>>> from sympy import beta, diff
>>> diff(beta(x, y), x)
(polygamma(0, x) - polygamma(0, x + y))*beta(x, y)

>>> diff(beta(x, y), y)
(polygamma(0, y) - polygamma(0, x + y))*beta(x, y)

>>> diff(beta(x), x)
2*(polygamma(0, x) - polygamma(0, 2*x))*beta(x, x)

We can numerically evaluate the Beta function to
arbitrary precision for any complex numbers x and y:

>>> from sympy import beta
>>> beta(pi).evalf(40)
0.02671848900111377452242355235388489324562

>>> beta(1 + I).evalf(20)
-0.2112723729365330143 - 0.7655283165378005676*I
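The integer closed form, the symmetry and \(\mathrm{B}(a, 1) = 1/a\) listed above can be spot-checked with expand_func, which expresses beta through gamma. A minimal sketch over a small, arbitrary grid of integer arguments.

```python
from sympy import beta, expand_func, factorial, Rational

# For positive integers a, b: B(a, b) = (a-1)! (b-1)! / (a+b-1)!
for a in range(1, 7):
    for b in range(1, 7):
        value = expand_func(beta(a, b))   # closed form via Gamma(a)Gamma(b)/Gamma(a+b)
        assert value == factorial(a - 1) * factorial(b - 1) / factorial(a + b - 1)
        assert value == expand_func(beta(b, a))   # symmetry B(a, b) = B(b, a)
    assert expand_func(beta(a, 1)) == Rational(1, a)  # B(a, 1) = 1/a

print(expand_func(beta(2, 3)))  # 1/12
```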

See also

gamma-

Gamma function.

uppergamma-

Upper incomplete gamma function.

lowergamma-

Lower incomplete gamma function.

polygamma-

Polygamma function.

loggamma-

Log Gamma function.

digamma-

Digamma function.

trigamma-

Trigamma function.


Error Functions and Fresnel Integrals

- class sympy.functions.special.error_functions.erf(arg)

The Gauss error function.

Explanation

This function is defined as:

\[\mathrm{erf}(x) = \frac{2}{\sqrt{\pi}} \int_0^x e^{-t^2} \mathrm{d}t.\]

Examples

>>> from sympy import I, oo, erf
>>> from sympy.abc import z

Several special values are known:

>>> erf(0)
0
>>> erf(oo)
1
>>> erf(-oo)
-1
>>> erf(I*oo)
oo*I
>>> erf(-I*oo)
-oo*I

In general one can pull out factors of -1 and \(I\) from the argument:

>>> erf(-z)
-erf(z)

The error function obeys the mirror symmetry:

>>> from sympy import conjugate
>>> conjugate(erf(z))
erf(conjugate(z))

Differentiation with respect to \(z\) is supported:

>>> from sympy import diff
>>> diff(erf(z), z)
2*exp(-z**2)/sqrt(pi)

We can numerically evaluate the error function to arbitrary precision
on the whole complex plane:

>>> erf(4).evalf(30)
0.999999984582742099719981147840

>>> erf(-4*I).evalf(30)
-1296959.73071763923152794095062*I
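Relating this back to probability: for \(X \sim N(0, \sigma^2)\), \(P(-a \le X \le a) = \mathrm{erf}(a/(\sigma\sqrt{2}))\). The sketch below evaluates the familiar one-, two- and three-sigma probabilities (about 68.3%, 95.4% and 99.7%) and cross-checks them against Python's float math.erf; the loop variable `k` (the interval half-width in units of sigma) is illustrative.

```python
import math
from sympy import erf, sqrt

# If X ~ Normal(0, sigma), then P(-a <= X <= a) = erf(a / (sigma*sqrt(2))).
# With a = k*sigma the sigma cancels, leaving erf(k / sqrt(2)).
for k in (1, 2, 3):
    p = erf(k / sqrt(2)).evalf(15)
    print(k, p)
    # cross-check against the double-precision erf in Python's standard library
    assert abs(float(p) - math.erf(k / math.sqrt(2))) < 1e-14
```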

See also

erfc-

Complementary error function.

erfi-

Imaginary error function.

erf2-

Two-argument error function.

erfinv-

Inverse error function.

erfcinv-

Inverse Complementary error function.

erf2inv-

Inverse two-argument error function.


- inverse(argindex=1)

Returns the inverse of this function.

- class sympy.functions.special.error_functions.erfc(arg)

Complementary Error Function.

Explanation

The function is defined as:

\[\mathrm{erfc}(x) = \frac{2}{\sqrt{\pi}} \int_x^\infty e^{-t^2} \mathrm{d}t\]

Examples

>>> from sympy import I, oo, erfc
>>> from sympy.abc import z

Several special values are known:

>>> erfc(0)
1
>>> erfc(oo)
0
>>> erfc(-oo)
2
>>> erfc(I*oo)
-oo*I
>>> erfc(-I*oo)
oo*I

The complementary error function obeys the mirror symmetry:

>>> from sympy import conjugate
>>> conjugate(erfc(z))
erfc(conjugate(z))

Differentiation with respect to \(z\) is supported:

>>> from sympy import diff
>>> diff(erfc(z), z)
-2*exp(-z**2)/sqrt(pi)

It also follows that \(\mathrm{erfc}(-z) = 2 - \mathrm{erfc}(z)\):

>>> erfc(-z)
2 - erfc(z)

We can numerically evaluate the complementary error function to arbitrary
precision on the whole complex plane:

>>> erfc(4).evalf(30)
0.0000000154172579002800188521596734869

>>> erfc(4*I).evalf(30)
1.0 - 1296959.73071763923152794095062*I
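Why keep a separate erfc at all? The identity erfc(z) = 1 - erf(z) is exact, but in double precision the subtraction loses essentially all significant digits once erf(x) rounds to (nearly) 1. A sketch using Python's math module as the float baseline; x0 = 6 is an arbitrary large argument and `naive`, `direct`, `exact` are illustrative names.

```python
import math
from sympy import erf, erfc
from sympy.abc import z

# erfc(z) = 1 - erf(z) as an exact identity
assert erfc(z).rewrite(erf) == 1 - erf(z)

# In floating point, 1 - erf(x) for large x cancels catastrophically,
# while erfc(x) keeps full relative precision.
x0 = 6.0
naive = 1.0 - math.erf(x0)   # most or all significant digits lost
direct = math.erfc(x0)       # about 2.15e-17
exact = erfc(6).evalf(20)    # high-precision reference from SymPy
print(naive, direct, exact)
assert math.isclose(direct, float(exact), rel_tol=1e-10)
```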

See also

erf-

Gaussian error function.

erfi-

Imaginary error function.

erf2-

Two-argument error function.

erfinv-

Inverse error function.

erfcinv-

Inverse Complementary error function.

erf2inv-

Inverse two-argument error function.


- inverse(argindex=1)

Returns the inverse of this function.

- class sympy.functions.special.error_functions.erfi(z)

Imaginary error function.

Explanation

The function erfi is defined as:

\[\mathrm{erfi}(x) = \frac{2}{\sqrt{\pi}} \int_0^x e^{t^2} \mathrm{d}t\]

Examples

>>> from sympy import I, oo, erfi
>>> from sympy.abc import z

Several special values are known:

>>> erfi(0)
0
>>> erfi(oo)
oo
>>> erfi(-oo)
-oo
>>> erfi(I*oo)
I
>>> erfi(-I*oo)
-I

In general one can pull out factors of -1 and \(I\) from the argument:

>>> erfi(-z)
-erfi(z)

The imaginary error function obeys the mirror symmetry:

>>> from sympy import conjugate
>>> conjugate(erfi(z))
erfi(conjugate(z))

Differentiation with respect to \(z\) is supported:

>>> from sympy import diff
>>> diff(erfi(z), z)
2*exp(z**2)/sqrt(pi)

We can numerically evaluate the imaginary error function to arbitrary
precision on the whole complex plane:

>>> erfi(2).evalf(30)
18.5648024145755525987042919132

>>> erfi(-2*I).evalf(30)
-0.995322265018952734162069256367*I

See also

erf-

Gaussian error function.

erfc-

Complementary error function.

erf2-

Two-argument error function.

erfinv-

Inverse error function.

erfcinv-

Inverse Complementary error function.

erf2inv-

Inverse two-argument error function.


- class sympy.functions.special.error_functions.erf2(x, y)

Two-argument error function.

Explanation

This function is defined as:

\[\mathrm{erf2}(x, y) = \frac{2}{\sqrt{\pi}} \int_x^y e^{-t^2} \mathrm{d}t\]

Examples

>>> from sympy import oo, erf2
>>> from sympy.abc import x, y

Several special values are known:

>>> erf2(0, 0)
0
>>> erf2(x, x)
0
>>> erf2(x, oo)
1 - erf(x)
>>> erf2(x, -oo)
-erf(x) - 1
>>> erf2(oo, y)
erf(y) - 1
>>> erf2(-oo, y)
erf(y) + 1

In general one can pull out factors of -1:

>>> erf2(-x, -y)
-erf2(x, y)

The error function obeys the mirror symmetry:

>>> from sympy import conjugate
>>> conjugate(erf2(x, y))
erf2(conjugate(x), conjugate(y))

Differentiation with respect to \(x\), \(y\) is supported:

>>> from sympy import diff
>>> diff(erf2(x, y), x)
-2*exp(-x**2)/sqrt(pi)
>>> diff(erf2(x, y), y)
2*exp(-y**2)/sqrt(pi)

See also

erf-

Gaussian error function.

erfc-

Complementary error function.

erfi-

Imaginary error function.

erfinv-

Inverse error function.

erfcinv-

Inverse Complementary error function.

erf2inv-

Inverse two-argument error function.


- class sympy.functions.special.error_functions.erfinv(z)

Inverse Error Function. The erfinv function is defined as:

\[\mathrm{erf}(x) = y \quad \Rightarrow \quad \mathrm{erfinv}(y) = x\]

Examples

>>> from sympy import erfinv
>>> from sympy.abc import x

Several special values are known:

>>> erfinv(0)
0
>>> erfinv(1)
oo

Differentiation with respect to \(x\) is supported:

>>> from sympy import diff
>>> diff(erfinv(x), x)
sqrt(pi)*exp(erfinv(x)**2)/2

We can numerically evaluate the inverse error function to arbitrary
precision on [-1, 1]:

>>> erfinv(0.2).evalf(30)
0.179143454621291692285822705344
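A round-trip check of the inverse: symbolically, erf(erfinv(x)) collapses to x, and numerically a 30-digit erfinv value maps back to the input. This sketch assumes SymPy's automatic numerical evaluation of erf at Float arguments; y = 1/5 is arbitrary and `w`, `back` are illustrative names.

```python
from sympy import erf, erfinv, Rational
from sympy.abc import x

# Symbolically, erf undoes erfinv
assert erf(erfinv(x)) == x

# Numerically: erfinv at 30 digits, then map back through erf
y = Rational(1, 5)
w = erfinv(y).evalf(30)
back = erf(w)            # w is a 30-digit Float, so erf is evaluated numerically
print(w)
assert abs(float(back) - 0.2) < 1e-15
```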

See also

erf-

Gaussian error function.

erfc-

Complementary error function.

erfi-

Imaginary error function.

erf2-

Two-argument error function.

erfcinv-

Inverse Complementary error function.

erf2inv-

Inverse two-argument error function.


- inverse(argindex=1)

Returns the inverse of this function.

- class sympy.functions.special.error_functions.erfcinv(z)

Inverse Complementary Error Function. The erfcinv function is defined as:

\[\mathrm{erfc}(x) = y \quad \Rightarrow \quad \mathrm{erfcinv}(y) = x\]

Examples

>>> from sympy import erfcinv
>>> from sympy.abc import x

Several special values are known:

>>> erfcinv(1)
0
>>> erfcinv(0)
oo

Differentiation with respect to \(x\) is supported:

>>> from sympy import diff
>>> diff(erfcinv(x), x)
-sqrt(pi)*exp(erfcinv(x)**2)/2

See also

erf-

Gaussian error function.

erfc-

Complementary error function.

erfi-

Imaginary error function.

erf2-

Two-argument error function.

erfinv-

Inverse error function.

erf2inv-

Inverse two-argument error function.


- inverse(argindex=1)

Returns the inverse of this function.

- class sympy.functions.special.error_functions.erf2inv(x, y)

Two-argument Inverse error function. The erf2inv function is defined as:

\[\mathrm{erf2}(x, w) = y \quad \Rightarrow \quad \mathrm{erf2inv}(x, y) = w\]

Examples

>>> from sympy import erf2inv, oo
>>> from sympy.abc import x, y

Several special values are known:

>>> erf2inv(0, 0)
0
>>> erf2inv(1, 0)
1
>>> erf2inv(0, 1)
oo
>>> erf2inv(0, y)
erfinv(y)
>>> erf2inv(oo, y)
erfcinv(-y)

Differentiation with respect to \(x\) and \(y\) is supported:

>>> from sympy import diff
>>> diff(erf2inv(x, y), x)
exp(-x**2 + erf2inv(x, y)**2)
>>> diff(erf2inv(x, y), y)
sqrt(pi)*exp(erf2inv(x, y)**2)/2

See also

erf-

Gaussian error function.

erfc-

Complementary error function.

erfi-

Imaginary error function.

erf2-

Two-argument error function.

erfinv-

Inverse error function.

erfcinv-

Inverse complementary error function.


- class sympy.functions.special.error_functions.FresnelIntegral(z)

Base class for the Fresnel integrals.

- class sympy.functions.special.error_functions.fresnels(z)

Fresnel integral S.

Explanation

This function is defined by

\[\operatorname{S}(z) = \int_0^z \sin{\frac{\pi}{2} t^2} \mathrm{d}t.\]

It is an entire function.

Examples

>>> from sympy import I, oo, fresnels
>>> from sympy.abc import z

Several special values are known:

>>> fresnels(0)
0
>>> fresnels(oo)
1/2
>>> fresnels(-oo)
-1/2
>>> fresnels(I*oo)
-I/2
>>> fresnels(-I*oo)
I/2

In general one can pull out factors of -1 and \(i\) from the argument:

>>> fresnels(-z)
-fresnels(z)
>>> fresnels(I*z)
-I*fresnels(z)

The Fresnel S integral obeys the mirror symmetry
\(\overline{S(z)} = S(\bar{z})\):

>>> from sympy import conjugate
>>> conjugate(fresnels(z))
fresnels(conjugate(z))

Differentiation with respect to \(z\) is supported:

>>> from sympy import diff
>>> diff(fresnels(z), z)
sin(pi*z**2/2)

Defining the Fresnel functions via an integral:

>>> from sympy import integrate, pi, sin, expand_func
>>> integrate(sin(pi*z**2/2), z)
3*fresnels(z)*gamma(3/4)/(4*gamma(7/4))
>>> expand_func(integrate(sin(pi*z**2/2), z))
fresnels(z)

We can numerically evaluate the Fresnel integral to arbitrary precision
on the whole complex plane:

>>> fresnels(2).evalf(30)
0.343415678363698242195300815958

>>> fresnels(-2*I).evalf(30)
0.343415678363698242195300815958*I
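The defining integrals can be checked by direct numerical quadrature. This sketch uses mpmath (a SymPy dependency) and its quad routine; the upper limit 2 matches the evalf example above, the breakpoint at 1 only helps the quadrature, and `S2`, `C2` are illustrative names.

```python
import mpmath
from sympy import fresnels, fresnelc

# Quadrature of the defining integrals for S(2) and C(2)
S2 = mpmath.quad(lambda t: mpmath.sin(mpmath.pi * t**2 / 2), [0, 1, 2])
C2 = mpmath.quad(lambda t: mpmath.cos(mpmath.pi * t**2 / 2), [0, 1, 2])

print(S2, fresnels(2).evalf(15))
print(C2, fresnelc(2).evalf(15))
assert abs(float(S2) - float(fresnels(2).evalf(20))) < 1e-12
assert abs(float(C2) - float(fresnelc(2).evalf(20))) < 1e-12
```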

See also

fresnelc-

Fresnel cosine integral.


- class sympy.functions.special.error_functions.fresnelc(z)

Fresnel integral C.

Explanation

This function is defined by

\[\operatorname{C}(z) = \int_0^z \cos{\frac{\pi}{2} t^2} \mathrm{d}t.\]

It is an entire function.

Examples

>>> from sympy import I, oo, fresnelc
>>> from sympy.abc import z

Several special values are known:

>>> fresnelc(0)
0
>>> fresnelc(oo)
1/2
>>> fresnelc(-oo)
-1/2
>>> fresnelc(I*oo)
I/2
>>> fresnelc(-I*oo)
-I/2

In general one can pull out factors of -1 and \(i\) from the argument:

>>> fresnelc(-z)
-fresnelc(z)
>>> fresnelc(I*z)
I*fresnelc(z)

The Fresnel C integral obeys the mirror symmetry
\(\overline{C(z)} = C(\bar{z})\):

>>> from sympy import conjugate
>>> conjugate(fresnelc(z))
fresnelc(conjugate(z))

Differentiation with respect to \(z\) is supported:

>>> from sympy import diff
>>> diff(fresnelc(z), z)
cos(pi*z**2/2)

Defining the Fresnel functions via an integral:

>>> from sympy import integrate, pi, cos, expand_func
>>> integrate(cos(pi*z**2/2), z)
fresnelc(z)*gamma(1/4)/(4*gamma(5/4))
>>> expand_func(integrate(cos(pi*z**2/2), z))
fresnelc(z)

We can numerically evaluate the Fresnel integral to arbitrary precision
on the whole complex plane:

>>> fresnelc(2).evalf(30)
0.488253406075340754500223503357

>>> fresnelc(-2*I).evalf(30)
-0.488253406075340754500223503357*I

See also

fresnels-

Fresnel sine integral.


Exponential, Logarithmic and Trigonometric Integrals

- class sympy.functions.special.error_functions.Ei(z)

The classical exponential integral.

Explanation

For use in SymPy, this function is defined as

\[\operatorname{Ei}(x) = \sum_{n=1}^\infty \frac{x^n}{n\, n!}
+ \log(x) + \gamma,\]

where \(\gamma\) is the Euler-Mascheroni constant.

If \(x\) is a polar number, this defines an analytic function on the
Riemann surface of the logarithm. Otherwise this defines an analytic
function in the cut plane \(\mathbb{C} \setminus (-\infty, 0]\).

Background

The name exponential integral comes from the following statement:

\[\operatorname{Ei}(x) = \int_{-\infty}^x \frac{e^t}{t} \mathrm{d}t\]

If the integral is interpreted as a Cauchy principal value, this statement
holds for \(x > 0\) and \(\operatorname{Ei}(x)\) as defined above.

Examples

>>> from sympy import Ei, polar_lift, exp_polar, I, pi
>>> from sympy.abc import x

This yields a real value:

>>> Ei(-1).n(chop=True)
-0.219383934395520

On the other hand the analytic continuation is not real:

>>> Ei(polar_lift(-1)).n(chop=True)
-0.21938393439552 + 3.14159265358979*I

The exponential integral has a logarithmic branch point at the origin:

>>> Ei(x*exp_polar(2*I*pi))
Ei(x) + 2*I*pi

Differentiation is supported:

>>> Ei(x).diff(x)
exp(x)/x

The exponential integral is related to many other special functions.
For example:

>>> from sympy import expint, Shi
>>> Ei(x).rewrite(expint)
-expint(1, x*exp_polar(I*pi)) - I*pi
>>> Ei(x).rewrite(Shi)
Chi(x) + Shi(x)
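The series definition above converges quickly for moderate positive x, so a truncated sum gives an independent check. The helper `ei_series` is hypothetical (not part of SymPy), uses plain floats, and is only meant for real x > 0; the term count 80 and the test points are arbitrary safe choices.

```python
import math
from sympy import Ei, Chi, Shi, EulerGamma


def ei_series(x, terms=80):
    """Hypothetical helper: truncated defining series of Ei, for real x > 0."""
    s = sum(x**n / (n * math.factorial(n)) for n in range(1, terms + 1))
    return s + math.log(x) + float(EulerGamma)


for x0 in (0.5, 1.0, 3.0):
    reference = float(Ei(x0))
    assert math.isclose(ei_series(x0), reference, rel_tol=1e-12)

# Ei(x) = Chi(x) + Shi(x), checked numerically at x = 1
assert math.isclose(float(Ei(1)), float(Chi(1) + Shi(1)), rel_tol=1e-12)
```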

See also

expint-

Generalised exponential integral.

E1-

Special case of the generalised exponential integral.

li-

Logarithmic integral.

Li-

Offset logarithmic integral.

Si-

Sine integral.

Ci-

Cosine integral.

Shi-

Hyperbolic sine integral.

Chi-

Hyperbolic cosine integral.

uppergamma-

Upper incomplete gamma function.


- class sympy.functions.special.error_functions.expint(nu, z)

Generalized exponential integral.

Explanation

This function is defined as

\[\operatorname{E}_\nu(z) = z^{\nu - 1} \Gamma(1 - \nu, z),\]

where \(\Gamma(1 - \nu, z)\) is the upper incomplete gamma function
(uppergamma).

Hence for \(z\) with positive real part we have

\[\operatorname{E}_\nu(z)
= \int_1^\infty \frac{e^{-zt}}{t^\nu} \mathrm{d}t,\]

which explains the name.

The representation as an incomplete gamma function provides an analytic
continuation for \(\operatorname{E}_\nu(z)\). If \(\nu\) is a
non-positive integer, the exponential integral is thus an unbranched
function of \(z\), otherwise there is a branch point at the origin.
Refer to the incomplete gamma function documentation for details of the
branching behavior.

Examples

>>> from sympy import expint, S >>> from sympy.abc import nu, z

Differentiation is supported. Differentiation with respect to (z) further

explains the name: for integral orders, the exponential integral is an

iterated integral of the exponential function.>>> expint(nu, z).diff(z) -expint(nu - 1, z)

Differentiation with respect to \(\nu\) has no classical expression:

>>> expint(nu, z).diff(nu)
-z**(nu - 1)*meijerg(((), (1, 1)), ((0, 0, 1 - nu), ()), z)

At non-positive integer orders, the exponential integral reduces to the exponential function:

>>> expint(0, z)
exp(-z)/z
>>> expint(-1, z)
exp(-z)/z + exp(-z)/z**2

At half-integers it reduces to error functions:

>>> expint(S(1)/2, z)
sqrt(pi)*erfc(sqrt(z))/sqrt(z)
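For real \(z > 0\) this reduction can be checked by hand. The sketch below substitutes \(t = u^2\) in the integral representation and then \(s = \sqrt{z}\,u\). It assumes the usual definition \(\operatorname{erfc}(x) = \frac{2}{\sqrt{\pi}} \int_x^\infty e^{-s^2}\,\mathrm{d}s\):

\[
\operatorname{E}_{1/2}(z) = \int_1^\infty \frac{e^{-zt}}{\sqrt{t}}\,\mathrm{d}t
= 2\int_1^\infty e^{-zu^2}\,\mathrm{d}u
= \frac{2}{\sqrt{z}} \int_{\sqrt{z}}^\infty e^{-s^2}\,\mathrm{d}s
= \frac{\sqrt{\pi}\,\operatorname{erfc}(\sqrt{z})}{\sqrt{z}}.
\]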

At positive integer orders it can be rewritten in terms of exponentials and expint(1, z). Use expand_func() to do this:

>>> from sympy import expand_func
>>> expand_func(expint(5, z))
z**4*expint(1, z)/24 + (-z**3 + z**2 - 2*z + 6)*exp(-z)/24
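The closed form above is what the standard recurrence n·E_{n+1}(z) = exp(-z) - z·E_n(z) produces when it is unrolled from E_1. Below is a minimal standard-library sketch (not SymPy's code path) that checks this numerically for real z > 0. The helper names `e1_series`, `expint_by_recurrence` and `expint5_closed_form` are hypothetical. E_1 comes from its power series E_1(z) = -γ - log z - Σ_{k≥1} (-z)^k/(k·k!).

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant (double precision)


def e1_series(z, terms=60):
    """Hypothetical helper: E_1(z) = -gamma - log(z) - sum_{k>=1} (-z)**k / (k * k!), real z > 0."""
    total, term = 0.0, 1.0
    for k in range(1, terms + 1):
        term *= -z / k         # term is now (-z)**k / k!
        total += term / k
    return -EULER_GAMMA - math.log(z) - total


def expint_by_recurrence(n, z):
    """Climb from E_1 to E_n using n * E_{n+1}(z) = exp(-z) - z * E_n(z)."""
    value = e1_series(z)       # E_1(z)
    for k in range(1, n):
        value = (math.exp(-z) - z * value) / k   # E_{k+1}(z)
    return value


def expint5_closed_form(z):
    """The expand_func(expint(5, z)) result above, evaluated numerically."""
    return (z**4 * e1_series(z) / 24
            + (-z**3 + z**2 - 2*z + 6) * math.exp(-z) / 24)


for z in (0.5, 1.0, 2.5):
    print(z, expint_by_recurrence(5, z), expint5_closed_form(z))
```

The forward recurrence is used only because it is short. For large orders at small z it amplifies rounding errors, so it is not a robust evaluation strategy.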

The generalised exponential integral is essentially equivalent to the incomplete gamma function:

>>> from sympy import uppergamma
>>> expint(nu, z).rewrite(uppergamma)
z**(nu - 1)*uppergamma(1 - nu, z)

As such it is branched at the origin:

>>> from sympy import exp_polar, pi, I
>>> expint(4, z*exp_polar(2*pi*I))
I*pi*z**3/3 + expint(4, z)
>>> expint(nu, z*exp_polar(2*pi*I))
z**(nu - 1)*(exp(2*I*pi*nu) - 1)*gamma(1 - nu) + expint(nu, z)
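The two outputs are consistent: the integer-order result is the \(\nu \to 4\) limit of the general one. Write \(\delta = \nu - 4\). The factor \(e^{2i\pi\nu} - 1 = e^{2i\pi\delta} - 1 \approx 2i\pi\delta\) vanishes. Meanwhile \(\Gamma(1 - \nu) = \Gamma(-3 - \delta) \approx 1/(6\delta)\) has a pole, using the standard residue \(\operatorname{Res}_{s=-3}\Gamma(s) = -1/3!\). Sketch of the limit:

\[
\lim_{\nu \to 4} z^{\nu - 1}\left(e^{2i\pi\nu} - 1\right)\Gamma(1 - \nu)
= z^{3} \lim_{\delta \to 0} \frac{2i\pi\delta}{6\delta}
= \frac{i\pi z^{3}}{3},
\]

which is the extra term in the expint(4, z*exp_polar(2*pi*I)) output.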

See also

Ei-

Another related function called exponential integral.

E1-

The classical case, returns expint(1, z).

li-

Logarithmic integral.

Li-

Offset logarithmic integral.

Si-

Sine integral.

Ci-

Cosine integral.

Shi-

Hyperbolic sine integral.

Chi-

Hyperbolic cosine integral.

uppergamma-

Upper incomplete gamma function.

sympy.functions.special.error_functions.E1(z)

Classical case of the generalized exponential integral.

Explanation

This is equivalent to expint(1, z).

Examples

>>> from sympy import E1
>>> E1(0)
expint(1, 0)

See also

Ei-

Exponential integral.

expint-

Generalised exponential integral.

li-

Logarithmic integral.

Li-

Offset logarithmic integral.

Si-

Sine integral.

Ci-

Cosine integral.

Shi-

Hyperbolic sine integral.

Chi-

Hyperbolic cosine integral.

class sympy.functions.special.error_functions.li(z)

The classical logarithmic integral.

Explanation

For use in SymPy, this function is defined as

\[\operatorname{li}(x) = \int_0^x \frac{1}{\log(t)} \, \mathrm{d}t \,.\]

Examples

>>> from sympy import I, oo, li
>>> from sympy.abc import z

Several special values are known:

>>> li(0)
0
>>> li(1)
-oo
>>> li(oo)
oo

Differentiation with respect to (z) is supported:

>>> from sympy import diff
>>> diff(li(z), z)
1/log(z)

Integration of li is also supported:

>>> from sympy import integrate
>>> integrate(li(z))
z*li(z) - Ei(2*log(z))
>>> integrate(li(z),z)
z*li(z) - Ei(2*log(z))

The logarithmic integral can also be defined in terms of Ei:

>>> from sympy import Ei
>>> li(z).rewrite(Ei)
Ei(log(z))
>>> diff(li(z).rewrite(Ei), z)
1/log(z)

We can numerically evaluate the logarithmic integral to arbitrary precision on the whole complex plane (except the singular points):

>>> li(2).evalf(30)
1.04516378011749278484458888919

>>> li(2*I).evalf(30)
1.0652795784357498247001125598 + 3.08346052231061726610939702133*I

We can even compute Soldner’s constant with the help of mpmath:

>>> from mpmath import findroot
>>> findroot(li, 2)
1.45136923488338
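Both numbers can be reproduced with a short standard-library sketch. It is not SymPy's or mpmath's algorithm. It combines the rewrite li(x) = Ei(log x) with the Ei power series, and replaces `findroot` with a plain Newton iteration that uses li'(x) = 1/log(x). The helper names `li_series` and `soldner_newton`, the term count and the tolerance are illustrative assumptions. The sketch is restricted to real x > 1.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant (double precision)


def li_series(x, terms=60):
    """Hypothetical helper for real x > 1:
    li(x) = Ei(log x) = gamma + log(log x) + sum_{k>=1} (log x)**k / (k * k!).
    """
    u = math.log(x)
    total, term = 0.0, 1.0
    for k in range(1, terms + 1):
        term *= u / k          # term is now u**k / k!
        total += term / k
    return EULER_GAMMA + math.log(u) + total


def soldner_newton(x0=2.0, tol=1e-15, max_iter=50):
    """Newton's method for li(x) = 0, with li'(x) = 1/log(x)."""
    x = x0
    for _ in range(max_iter):
        step = li_series(x) * math.log(x)   # li(x) / li'(x)
        x -= step
        if abs(step) < tol:
            break
    return x


print(li_series(2.0))     # about 1.0451637801174928
print(soldner_newton())   # about 1.45136923488338
```

Because li is increasing and concave on (1, ∞), the Newton iterates started at 2 land just left of the root and then climb towards it monotonically. They therefore stay in the region x > 1 where this series form is valid.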

Further transformations include rewriting li in terms of the trigonometric and hyperbolic integrals Si, Ci, Shi and Chi:

>>> from sympy import Si, Ci, Shi, Chi
>>> li(z).rewrite(Si)
-log(I*log(z)) - log(1/log(z))/2 + log(log(z))/2 + Ci(I*log(z)) + Shi(log(z))
>>> li(z).rewrite(Ci)
-log(I*log(z)) - log(1/log(z))/2 + log(log(z))/2 + Ci(I*log(z)) + Shi(log(z))
>>> li(z).rewrite(Shi)
-log(1/log(z))/2 + log(log(z))/2 + Chi(log(z)) - Shi(log(z))
>>> li(z).rewrite(Chi)
-log(1/log(z))/2 + log(log(z))/2 + Chi(log(z)) - Shi(log(z))

See also

Li-

Offset logarithmic integral.

Ei-

Exponential integral.

expint-

Generalised exponential integral.

E1-

Special case of the generalised exponential integral.

Si-

Sine integral.

Ci-

Cosine integral.

Shi-

Hyperbolic sine integral.

Chi-

Hyperbolic cosine integral.


class sympy.functions.special.error_functions.Li(z)

The offset logarithmic integral.

Explanation

For use in SymPy, this function is defined as

\[\operatorname{Li}(x) = \operatorname{li}(x) - \operatorname{li}(2)\]

Examples

>>> from sympy import Li
>>> from sympy.abc import z

The following special value is known:

>>> Li(2)
0

Differentiation with respect to (z) is supported:

>>> from sympy import diff
>>> diff(Li(z), z)
1/log(z)

The shifted logarithmic integral can be written in terms of \(\operatorname{li}(z)\):

>>> from sympy import li
>>> Li(z).rewrite(li)
li(z) - li(2)

We can numerically evaluate the logarithmic integral to arbitrary precision on the whole complex plane (except the singular points):

>>> Li(4).evalf(30)
1.92242131492155809316615998938
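The definition Li(x) = li(x) - li(2) can be reproduced in double precision with a small standard-library sketch. This is not how SymPy evaluates Li. It assumes real x > 1 and computes li through li(x) = Ei(log x) and the Ei power series. The helper names `li_series` and `offset_li` are hypothetical.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant (double precision)


def li_series(x, terms=60):
    """Hypothetical helper for real x > 1: li(x) = gamma + log(log x) + sum (log x)**k / (k * k!)."""
    u = math.log(x)
    total, term = 0.0, 1.0
    for k in range(1, terms + 1):
        term *= u / k          # term is now u**k / k!
        total += term / k
    return EULER_GAMMA + math.log(u) + total


def offset_li(x):
    """Li(x) = li(x) - li(2), the offset logarithmic integral (real x > 1 only)."""
    return li_series(x) - li_series(2.0)


print(offset_li(2.0))   # 0.0, matching Li(2) = 0
print(offset_li(4.0))   # about 1.9224213149215581
```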

See also

li-

Logarithmic integral.

Ei-

Exponential integral.

expint-

Generalised exponential integral.

E1-

Special case of the generalised exponential integral.

Si-

Sine integral.

Ci-

Cosine integral.

Shi-

Hyperbolic sine integral.

Chi-

Hyperbolic cosine integral.


class sympy.functions.special.error_functions.Si(z)

Sine integral.

Explanation

This function is defined by

\[\operatorname{Si}(z) = \int_0^z \frac{\sin{t}}{t} \, \mathrm{d}t.\]

It is an entire function.

Examples

>>> from sympy import Si
>>> from sympy.abc import z

The sine integral is an antiderivative of \(\sin(z)/z\):

>>> Si(z).diff(z)
sin(z)/z
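Integrating the Taylor series of sin(t)/t term by term gives a power series for Si that converges everywhere, consistent with Si being entire. Below is a minimal standard-library sketch, not SymPy's implementation, for real arguments of moderate size. The alternating series loses accuracy for large |x|. The helper name `si_series` and the term count are illustrative assumptions. A central difference confirms the derivative shown above.

```python
import math


def si_series(x, terms=40):
    """Hypothetical helper: Si(x) = sum_{k>=0} (-1)**k x**(2k+1) / ((2k+1) * (2k+1)!).

    Suitable for moderate real |x| only.
    """
    total, term = 0.0, x       # term is (-1)**k x**(2k+1) / (2k+1)!
    for k in range(terms):
        total += term / (2 * k + 1)
        term *= -x * x / ((2 * k + 2) * (2 * k + 3))
    return total


h = 1e-5
for x in (0.5, 1.0, 2.0):
    numeric_derivative = (si_series(x + h) - si_series(x - h)) / (2 * h)
    print(x, numeric_derivative, math.sin(x) / x)
```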

It is unbranched:

>>> from sympy import exp_polar, I, pi
>>> Si(z*exp_polar(2*I*pi))
Si(z)

The sine integral behaves much like the ordinary sine under multiplication by \(i\):

>>> Si(I*z)
I*Shi(z)
>>> Si(-z)
-Si(z)
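Both identities can be read off the power series obtained by integrating \(\sin(t)/t\) term by term. The short derivation below uses \(i^{2k} = (-1)^k\) and the series for Shi obtained the same way from \(\sinh(t)/t\):

\[
\operatorname{Si}(iz) = \sum_{k \ge 0} \frac{(-1)^k (iz)^{2k+1}}{(2k+1)\,(2k+1)!}
= i \sum_{k \ge 0} \frac{(-1)^k (-1)^k z^{2k+1}}{(2k+1)\,(2k+1)!}
= i \sum_{k \ge 0} \frac{z^{2k+1}}{(2k+1)\,(2k+1)!}
= i\operatorname{Shi}(z).
\]

Since only odd powers of \(z\) occur, \(\operatorname{Si}(-z) = -\operatorname{Si}(z)\) follows as well.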

It can also be expressed in terms of exponential integrals, but beware that the latter is branched:

>>> from sympy import expint
>>> Si(z).rewrite(expint)
-I*(-expint(1, z*exp_polar(-I*pi/2))/2 + expint(1, z*exp_polar(I*pi/2))/2) + pi/2

It can be rewritten in terms of the sinc function (by definition):

>>> from sympy import sinc
>>> Si(z).rewrite(sinc)
Integral(sinc(t), (t, 0, z))

See also

Ci-

Cosine integral.

Shi-

Hyperbolic sine integral.

Chi-

Hyperbolic cosine integral.

Ei-

Exponential integral.

expint-

Generalised exponential integral.

sinc-

Unnormalized sinc function.

E1-

Special case of the generalised exponential integral.

li-

Logarithmic integral.

Li-

Offset logarithmic integral.


class sympy.functions.special.error_functions.Ci(z)

Cosine integral.

Explanation

This function is defined for positive \(x\) by

\[\operatorname{Ci}(x) = \gamma + \log{x} + \int_0^x \frac{\cos{t} - 1}{t} \, \mathrm{d}t = -\int_x^\infty \frac{\cos{t}}{t} \, \mathrm{d}t,\]

where \(\gamma\) is the Euler-Mascheroni constant.
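Expanding (cos t - 1)/t in its Taylor series and integrating term by term turns the first form of the definition into a power series. Below is a minimal standard-library sketch for real x > 0, not SymPy's implementation. The helper name `ci_series`, the term count and the rounded value of γ are illustrative assumptions. A central difference reproduces the derivative cos(x)/x shown in the examples.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant (double precision)


def ci_series(x, terms=40):
    """Hypothetical helper for real x > 0:
    Ci(x) = gamma + log(x) + sum_{k>=1} (-1)**k x**(2k) / (2k * (2k)!),
    i.e. the integral of (cos t - 1)/t from 0 to x, expanded term by term.
    """
    total, term = 0.0, 1.0     # term is (-1)**k x**(2k) / (2k)!
    for k in range(1, terms + 1):
        term *= -x * x / ((2 * k - 1) * (2 * k))
        total += term / (2 * k)
    return EULER_GAMMA + math.log(x) + total


print(ci_series(1.0))   # about 0.3374039229009681
h = 1e-5
for x in (0.5, 1.0, 3.0):
    print(x, (ci_series(x + h) - ci_series(x - h)) / (2 * h), math.cos(x) / x)
```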

We have

\[\operatorname{Ci}(z) = -\frac{\operatorname{E}_1\left(e^{i\pi/2} z\right) + \operatorname{E}_1\left(e^{-i\pi/2} z\right)}{2},\]

which holds for all polar \(z\) and thus provides an analytic continuation to the Riemann surface of the logarithm. The formula also holds as stated for \(z \in \mathbb{C}\) with \(\Re(z) > 0\).

By lifting to the principal branch, we obtain an analytic function on the cut complex plane.

Examples

>>> from sympy import Ci
>>> from sympy.abc import z

The cosine integral is a primitive of \(\cos(z)/z\):

>>> Ci(z).diff(z)
cos(z)/z

It has a logarithmic branch point at the origin:

>>> from sympy import exp_polar, I, pi
>>> Ci(z*exp_polar(2*I*pi))
Ci(z) + 2*I*pi

The cosine integral behaves somewhat like the ordinary \(\cos\) under multiplication by \(i\):

>>> from sympy import polar_lift
>>> Ci(polar_lift(I)*z)
Chi(z) + I*pi/2
>>> Ci(polar_lift(-1)*z)
Ci(z) + I*pi
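These identities follow from the series \(\operatorname{Ci}(z) = \gamma + \log z + \sum_{k \ge 1} (-1)^k z^{2k}/(2k\,(2k)!)\), obtained from the definition. They also use the standard series \(\operatorname{Chi}(z) = \gamma + \log z + \sum_{k \ge 1} z^{2k}/(2k\,(2k)!)\), which is assumed here rather than stated on this page. On the polar lift, \(\log(e^{i\pi/2} z) = \log z + i\pi/2\) and \((-1)^k i^{2k} = 1\), so

\[
\operatorname{Ci}\left(e^{i\pi/2} z\right)
= \gamma + \log z + \frac{i\pi}{2} + \sum_{k \ge 1} \frac{z^{2k}}{2k\,(2k)!}
= \operatorname{Chi}(z) + \frac{i\pi}{2}.
\]

Likewise, \(\log(e^{i\pi} z) = \log z + i\pi\), while \((-z)^{2k} = z^{2k}\) leaves the sum unchanged. This gives \(\operatorname{Ci}(z) + i\pi\) for polar_lift(-1)*z.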

It can also be expressed in terms of exponential integrals:

>>> from sympy import expint
>>> Ci(z).rewrite(expint)
-expint(1, z*exp_polar(-I*pi/2))/2 - expint(1, z*exp_polar(I*pi/2))/2

See also

Si-

Sine integral.

Shi-

Hyperbolic sine integral.

Chi-

Hyperbolic cosine integral.

Ei-

Exponential integral.

expint-

Generalised exponential integral.

E1-

Special case of the generalised exponential integral.

li-

Logarithmic integral.

Li-

Offset logarithmic integral.


class sympy.functions.special.error_functions.Shi(z)

Sinh integral.

Explanation

This function is defined by

\[\operatorname{Shi}(z) = \int_0^z \frac{\sinh{t}}{t} \, \mathrm{d}t.\]

It is an entire function.

Examples

>>> from sympy import Shi
>>> from sympy.abc import z

The sinh integral is a primitive of \(\sinh(z)/z\):

>>> Shi(z).diff(z)
sinh(z)/z
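Integrating the Taylor series of sinh(t)/t term by term gives an everywhere-convergent power series, consistent with Shi being entire. Below is a minimal standard-library sketch for real arguments, not SymPy's implementation. The helper name `shi_series` and the term count are illustrative assumptions. It checks the derivative shown above with a central difference and confirms that Shi is odd.

```python
import math


def shi_series(x, terms=40):
    """Hypothetical helper: Shi(x) = sum_{k>=0} x**(2k+1) / ((2k+1) * (2k+1)!).

    Obtained by integrating sinh(t)/t term by term; intended for moderate real x.
    """
    total, term = 0.0, x       # term is x**(2k+1) / (2k+1)!
    for k in range(terms):
        total += term / (2 * k + 1)
        term *= x * x / ((2 * k + 2) * (2 * k + 3))
    return total


print(shi_series(1.0))  # about 1.0572508753757
h = 1e-5
for x in (0.5, 1.0, 2.0):
    print(x, (shi_series(x + h) - shi_series(x - h)) / (2 * h), math.sinh(x) / x)
```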

It is unbranched:

>>> from sympy import exp_polar, I, pi
>>> Shi(z*exp_polar(2*I*pi))
Shi(z)

The \(\sinh\) integral behaves much like the ordinary \(\sinh\) under multiplication by \(i\):

>>> Shi(I*z)
I*Si(z)
>>> Shi(-z)
-Shi(z)

It can also be expressed in terms of exponential integrals, but beware that the latter is branched:

>>> from sympy import expint
>>> Shi(z).rewrite(expint)
expint(1, z)/2 - expint(1, z*exp_polar(I*pi))/2 - I*pi/2

See also

Si-

Sine integral.

Ci-

Cosine integral.

Chi-

Hyperbolic cosine integral.

Ei-