Nizami91 opened this issue

Oct 25, 2019

· 11 comments

Open

Error! (file) is not UTF-8 encoded

#5003


I cannot open the saved .npy file. As I click on the file to open it, I get the following text:

Error! C:\Users\Ozgun\workspace\saved_test.npy is not UTF-8 encoded

Saving disabled.

See Console for more details.

Please help.

It seems like this problem already has a solution here at an audio-classification repository.

I was facing a similar problem because I was trying to open a compressed(zipped) folder that had an extension ‘.ipynb’. So I moved the actual IPYNB File in it to ‘Desktop’ and was then able to access it using jupyter.


I have exactly the same problem: a .gz archive broke the .ipynb file. Could you describe in more detail what you did?

Just double check the format of the file you are trying to open. Is it ‘ipynb’?

It seems like this problem already has a solution here at an audio-classification repository.

No it isn’t. This is a circular reference that refers me back to this thread. Sounds like this is a Stack Overflow forum.

I had the same problem. Have you solved it? I’m on the verge of collapse.

Hi @xiaodong-Ren, if you could provide a traceback and/or contextual information regarding the error, others may be able to assist. Thanks.

f = open("CORONA.csv", "w", encoding='utf-8'). Open the file this way when you write it and it will resolve the problem.

This just means "Jupyter cannot read this file; you need another viewer to see it". It is just a misleading error message. You just need to open the file from where you saved it,

i.e. C:\Users\Ozgun\workspace\saved_test.npy, through your File Explorer.

Or you could encode it as 'utf-8' when you first open the file for writing, i.e. file = open(filename, 'w', encoding='utf-8')

Hope this helps!

Yes, the Jupyter notebook can't read that file; you need to open it from where you saved it.

Thanks, Vallabh1822, saved my day.
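For readers who land here with the same message: it usually means the file is binary (a NumPy `.npy` array, or a zip/gzip archive with an `.ipynb` extension) rather than text. Below is a minimal sketch of a check using only the standard library. The helper name `sniff_file` is made up for illustration; the `\x93NUMPY` prefix is the magic string of the NumPy `.npy` format, and `\x1f\x8b` is the gzip magic number.

```python
import zipfile


def sniff_file(path):
    """Return a rough guess at what kind of file `path` is."""
    with open(path, "rb") as f:
        data = f.read()
    if data.startswith(b"\x93NUMPY"):
        return "npy"      # NumPy binary array, not a text file
    if data.startswith(b"\x1f\x8b"):
        return "gzip"     # gzip archive: decompress it first
    if zipfile.is_zipfile(path):
        return "zip"      # zip archive: extract the real file first
    try:
        data.decode("utf-8")
    except UnicodeDecodeError:
        return "binary"   # some other non-text format
    return "utf-8 text"
```

If this reports `npy`, the array can be loaded in a notebook cell with `numpy.load(path)` instead of clicking on the file in the file browser.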

So we’ve all gotten that error: you download a CSV from the web, or get emailed one by your manager, who wants analysis done ASAP, and you find a card in your Kanban labelled URGENT AFF, so you open up VSCode, import Pandas and then type the following: pd.read_csv('some_important_file.csv').

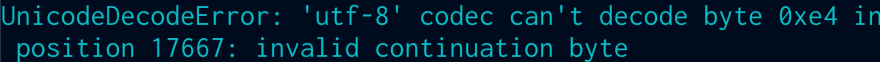

Now, instead of the actual import happening, you get the following near-uninterpretable stack trace:

What does that even mean?! And what the heck is utf-8? As a brief primer/crash course, your computer (like all computers) stores everything as bits (series of ones and zeros). Now, in order to represent human-readable things (think letters) from ones and zeros, the American Standards Association (now ANSI) came up with the ASCII mappings. These basically map bytes (groups of binary bits) to codes (in base-10, so numbers) which represent various characters. For example, 00111111 is the binary for 063, which is the code for ?.

These letters then come together to form words, which form sentences. The number of unique characters that ASCII can handle is limited by the number of unique bit patterns (combinations of 1 and 0) available. To summarize: ASCII uses 7 bits, which allows for 128 characters, and even a full 8 bits allows for only 256, which is nowhere close to handling every single character from every single language. This is where Unicode comes in; Unicode assigns a "code point", written in hexadecimal, to each character. For example, U+1F602 maps to 😂. This way, there is room for over a million code points (U+0000 through U+10FFFF), far more than the original ASCII.
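The mapping between characters and numbers can be checked directly from Python. This is a small sketch using only the built-ins `ord`, `chr` and `format`; the variable names are illustrative.

```python
# ASCII: the character "?" has code 63, which is 00111111 in binary.
code = ord("?")
print(code, format(code, "08b"))      # 63 00111111

# Unicode: code point U+1F602 is the emoji mentioned above.
emoji = chr(0x1F602)
print(f"U+{ord(emoji):X}")            # U+1F602

# Code points run from U+0000 to U+10FFFF inclusive.
total_code_points = 0x10FFFF + 1
print(total_code_points)              # 1114112
```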

UTF-8

UTF-8 translates Unicode characters to a unique binary string, and vice versa. However, UTF-8, as its name suggests, uses 8-bit units (similar to ASCII) to save memory: each character takes between one and four bytes, and the ASCII characters take just one. This is loosely similar to a technique known as Huffman coding, which represents the most-used characters or tokens with the shortest codes. This is intuitive in the sense that we can afford to assign the least-used characters longer byte sequences, as they appear less often. If every character were sent in 4 bytes instead, a mostly-ASCII text file would take up four times the space.
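To make the space argument concrete, here is a short sketch that compares how many bytes a few characters take in UTF-8 and in the fixed-width UTF-32. The sample characters are chosen only for illustration, and are written as escapes so the snippet does not depend on the encoding of the source file.

```python
# "A", e-acute, the euro sign and the emoji U+1F602
samples = ["A", "\u00e9", "\u20ac", "\U0001F602"]

for ch in samples:
    utf8 = ch.encode("utf-8")
    utf32 = ch.encode("utf-32-le")   # fixed width, no byte-order mark
    print(f"U+{ord(ch):04X}: {len(utf8)} byte(s) in UTF-8, {len(utf32)} in UTF-32")

utf8_lengths = [len(ch.encode("utf-8")) for ch in samples]
utf32_lengths = [len(ch.encode("utf-32-le")) for ch in samples]
```

The UTF-8 lengths grow from one to four bytes as the code points get larger, while UTF-32 always spends four.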

Caveat

However, this also means that not every sequence of bytes is valid UTF-8, and plenty of files are saved in some other encoding. There are other UTFs (such as UTF-16) as well as legacy encodings. This raises a key limitation, especially in the field of data science: sometimes we either don’t need the non-UTF-8 characters or can’t process them, or we need to save on space. Therefore, here are three ways I handle non-UTF-8 characters when reading into a Pandas dataframe:

Find the correct Encoding Using Python

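Pandas, by default, assumes utf-8 encoding every time you call pandas.read_csv, and it can feel like staring into a crystal ball trying to figure out the correct encoding. Your first bet is to use vanilla Python. Bear in mind that this shows the encoding Python will use for the file object (the platform default unless you pass one), not one detected from the file's contents, so treat it as a first hint:

    with open('file_name.csv') as f:
        print(f)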


Most of the time, the output resembles the following:

<_io.TextIOWrapper name='file_name.csv' mode='r' encoding='utf16'>
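One way to go a step further with plain Python is trial decoding: read the raw bytes and keep the first candidate encoding that decodes them without error. This is only a sketch; the function name `first_working_encoding` and the default candidate list are assumptions made for illustration. A successful decode does not prove the guess is right, because permissive encodings such as latin-1 accept any byte sequence.

```python
def first_working_encoding(path, candidates=("utf-8", "cp1252")):
    """Return the first candidate encoding that decodes the whole file, or None."""
    with open(path, "rb") as f:
        raw = f.read()
    for encoding in candidates:
        try:
            raw.decode(encoding)
        except UnicodeError:
            continue          # this candidate cannot decode the file
        return encoding
    return None
```

Order the candidates from strictest to most permissive, so that a strict encoding such as utf-8 gets the chance to reject the file first.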


If that fails, we can move on to the second option.

Find Using Python Chardet

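chardet is a library for detecting character encodings. Once installed, you can use the following to determine the encoding (the file has to be opened in binary mode, because chardet.detect expects bytes):

    import chardet

    # chardet works on raw bytes, so open the file in binary mode
    with open('file_name.csv', 'rb') as f:
        print(chardet.detect(f.read()))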


The output should resemble the following:

{'encoding': 'EUC-JP', 'confidence': 0.99}


Finally

The last option is using the Linux CLI (fine, I lied when I said three methods using Pandas)

iconv -f utf-8 -t utf-8 -c filepath -o CLEAN_FILE


- The first utf-8, after -f, defines what we think the original file format is

- -t is the target file format we wish to convert to (in this case utf-8)

- -c skips invalid sequences

- -o outputs the fixed file to an actual filepath (instead of the terminal)
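For machines without iconv, roughly the same clean-up can be sketched in Python by decoding with the `ignore` error handler, which drops invalid byte sequences much as `-c` does. The function name `strip_invalid_utf8` is hypothetical, and this is an approximation of the command above, not a drop-in replacement for it.

```python
def strip_invalid_utf8(src_path, dst_path):
    """Copy src_path to dst_path, dropping bytes that are not valid UTF-8.

    Returns the number of bytes that were dropped.
    """
    with open(src_path, "rb") as src:
        raw = src.read()
    cleaned = raw.decode("utf-8", errors="ignore")
    # newline="" keeps line endings exactly as they were in the source file
    with open(dst_path, "w", encoding="utf-8", newline="") as dst:
        dst.write(cleaned)
    return len(raw) - len(cleaned.encode("utf-8"))
```

As with `iconv -c`, data is discarded silently, so it is worth checking the returned count before trusting the cleaned file.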

Now that you have your encoding, you can go on to read your CSV file successfully by specifying it in your read_csv command such as here:

pd.read_csv("some_csv.txt", encoding="not utf-8")


PEP: Python3 and UnicodeDecodeError

This is a PEP describing the behaviour of Python3 on UnicodeDecodeError. It’s a draft, so don’t hesitate to comment on it. This document supposes that my patch to allow bytes filenames is accepted, which is not the case today.

While I was writing this document I found potential problems in Python3. So here is a TODO list (things to be checked):

- FIXME: When is bytearray accepted, and when is it not?

- FIXME: Allow bytes/str mix for shutil.copy*()? Will the ignore callback get bytes or unicode?

Can anyone write a section about bytes encoding in Unicode using escape sequence?

What is the best tool to work on a PEP? I hate email threads, and I would prefer SVN / Mercurial / anything else.

Python3 and UnicodeDecodeError for the command line, environment variables and filenames

Introduction

Python3 does its best to give you texts encoded as valid unicode character strings. When it hits an invalid bytes sequence (according to the charset in use), it has two choices: drop the value or raise a UnicodeDecodeError. This document presents the behaviour of Python3 for the command line, environment variables and filenames.

Example of an invalid bytes sequence: ::

    >>> str(b'\xff', 'utf8')
    UnicodeDecodeError: 'utf8' codec can't decode byte 0xff (...)

whereas the same byte sequence is valid in another charset like ISO-8859-1: ::

    >>> str(b'\xff', 'iso-8859-1')
    'ÿ'
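The two outcomes described in the introduction (raise, or lose the value) correspond to the error handlers that `bytes.decode()` accepts. A minimal sketch, with illustrative variable names:

```python
raw = b"\xff"

try:
    raw.decode("utf-8")                     # 'strict' is the default handler
    strict_ok = True
except UnicodeDecodeError:
    strict_ok = False                       # 0xff is never valid in UTF-8

latin1 = raw.decode("iso-8859-1")           # every byte is valid ISO-8859-1
replaced = raw.decode("utf-8", "replace")   # the invalid byte becomes U+FFFD
ignored = raw.decode("utf-8", "ignore")     # the invalid byte is dropped

print(strict_ok, ascii(latin1), ascii(replaced), ascii(ignored))
```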

Default encoding

Python uses "UTF-8" as the default Unicode encoding. You can read the default charset using sys.getdefaultencoding(). The "default encoding" is used by PyUnicode_FromStringAndSize().

A function sys.setdefaultencoding() exists, but it raises a ValueError for any charset other than UTF-8, since the charset is hardcoded in PyUnicode_FromStringAndSize().

Command line

Python creates a nice unicode list for sys.argv using mbstowcs(): ::

    $ ./python -c 'import sys; print(sys.argv)' 'Ho hé !'
    ['-c', 'Ho hé !']

On Linux, mbstowcs() uses the LC_CTYPE environment variable to choose the encoding. On an invalid bytes sequence, Python quits directly with an exit code 1. Example with UTF-8 locale: ::

    $ python3.0 $(echo -e 'invalid:\xff')
    Could not convert argument 1 to string

Environment variables

Python uses "_wenviron" on Windows, which contains unicode (UTF-16-LE) strings. On other OSes, it uses the "environ" variable and the UTF-8 charset. It drops a variable if its key or value is not convertible to unicode. Example: ::

    $ env -i HOME=/home/my PATH=$(echo -e "\xff") python
    >>> import os; list(os.environ.items())
    [('HOME', '/home/my')]

Both keys and values are unicode strings. Empty keys and/or values are allowed.

Python ignores invalid variables, but their values still exist in memory. If you run a child process (e.g. using os.system()), the "invalid" variables will also be copied.
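The "drop what cannot be decoded" policy can be modelled in a few lines. This is only a sketch of the policy as the draft states it, not of how os.environ is implemented; the helper `decode_environ` and the sample dictionary are hypothetical.

```python
def decode_environ(raw_env, encoding="utf-8"):
    """Decode a bytes environment, skipping entries that fail to decode."""
    decoded = {}
    for key, value in raw_env.items():
        try:
            decoded[key.decode(encoding)] = value.decode(encoding)
        except UnicodeDecodeError:
            continue   # policy from the draft: leave the variable out of the unicode view
    return decoded


raw_env = {b"HOME": b"/home/my", b"PATH": b"\xff"}
visible = decode_environ(raw_env)
print(visible)   # only HOME survives; raw_env itself is left untouched
```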

Filenames

Introduction

Python2 uses byte filenames everywhere, but it was also possible to use unicode filenames. Examples:

- os.getcwd() gives bytes whereas os.getcwdu() always returns unicode

- os.listdir(unicode) creates bytes or unicode filenames (fallback to bytes on UnicodeDecodeError); os.readlink() has the same behaviour

- glob.glob() converts the unicode pattern to bytes, and so creates bytes filenames

- open() supports bytes and unicode

Since listdir() mixes bytes and unicode, you cannot easily manipulate filenames:

    >>> path=u'.'
    >>> for name in os.listdir(path):
    ...     print repr(name)
    ...     print repr(os.path.join(path, name))
    ...
    u'valid'
    u'./valid'
    'invalid\xff'
    Traceback (most recent call last):
    ...
    File "/usr/lib/python2.5/posixpath.py", line 65, in join
        path += '/' + b
    UnicodeDecodeError: 'ascii' codec can't decode byte 0xff (...)

Python3 supports both types, bytes and unicode, but disallows mixing them. If you ask for unicode, you will always get unicode or an exception is raised.

You should only use unicode filenames, unless you are writing a program that fixes file system encodings or a backup tool, or your users are unable to fix their broken system.

Windows

Microsoft Windows since Windows 95 only uses Unicode (UTF-16-LE) filenames. So you should only use unicode filenames.

Non Windows (POSIX)

POSIX OSes like Linux use bytes for historical reasons. In the best case, all filenames will be encoded as valid UTF-8 strings and Python creates valid unicode strings. But since system calls use bytes, the file system may return an invalid filename, or a program can create a file with an invalid filename.

An invalid filename is a string which cannot be decoded to unicode using the default file system encoding (which is UTF-8 most of the time).

A robust program will have to use only the bytes type to make sure that it can open / copy / remove any file or directory.

Filename encoding

Python uses:

- "mbcs" on Windows

- or "utf-8" on Mac OS X

- or nl_langinfo(CODESET) on OSes supporting this function

- or UTF-8 by default

"mbcs" is not a valid charset name; it’s an internal charset name telling Python to use the function MultiByteToWideChar() to decode bytes to unicode. This function uses the current code page to decode byte strings.

You can read the charset using sys.getfilesystemencoding(). The function may return None if Python is unable to determine the default encoding.

PyUnicode_DecodeFSDefaultAndSize() uses the default file system encoding, or UTF-8 if it is not set.

On UNIX (and other operating systems), it’s possible to mount different file systems using different charsets. sys.getfilesystemencoding() will be the same for the different file systems, since this encoding is only used between Python and the Linux kernel, not between the kernel and the file system, which may use a different charset.
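Both settings can be inspected from a running interpreter. A minimal sketch; the values printed depend on the platform and locale, so none are shown here.

```python
import sys

default_encoding = sys.getdefaultencoding()
filesystem_encoding = sys.getfilesystemencoding()

print("default encoding:    ", default_encoding)
print("file system encoding:", filesystem_encoding)
```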

Display a filename

Example of a function formatting a filename to display it to human eyes: ::

    from sys import getfilesystemencoding

    def format_filename(filename):
        # filename is a bytes object; undecodable bytes become U+FFFD
        return str(filename, getfilesystemencoding(), 'replace')

Example: format_filename(b'r\xffport.doc') gives 'r�port.doc' with the UTF-8 encoding.

Functions producing filenames

Policy: for unicode arguments: drop invalid bytes filenames; for bytes arguments: return bytes

- os.listdir()

- glob.glob()

This behaviour (silently dropping invalid filenames) is motivated by the fact that otherwise, if a directory of 1000 files contains only one invalid filename, listdir() fails for the whole directory. Likewise, if your directory contains 1000 Python scripts (.py) and just one other document with an invalid filename (e.g. r�port.doc), glob.glob('*.py') fails even though all the .py scripts have valid filenames.
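The "type in, type out" half of this policy is easy to observe: a str argument yields str names and a bytes argument yields bytes names. The sketch below uses a temporary directory and a made-up file name; it does not exercise the invalid-filename case, which depends on the file system and on the Python version.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    # Create one file with a valid name.
    open(os.path.join(tmp, "valid.py"), "w").close()

    str_names = os.listdir(tmp)                  # str argument
    bytes_names = os.listdir(os.fsencode(tmp))   # bytes argument

print(str_names, bytes_names)
```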

Policy: for a unicode argument: raise a UnicodeDecodeError on an invalid filename; for a bytes argument: return bytes

- os.readlink()

Policy: create unicode directory or raise an UnicodeDecodeError

- os.getcwd()

Policy: always returns bytes

- os.getcwdb()

Functions for filename manipulation

Policy: raise TypeError on bytes/str mix

- os.path.*(), eg. os.path.join()

- fnmatch.*()
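A short check of the TypeError policy with os.path.join(): all-str and all-bytes calls work, while mixing the two raises. The path components are made up for illustration.

```python
import os.path

try:
    os.path.join(".", b"invalid\xff")   # str and bytes mixed
    mixed_ok = True
except TypeError:
    mixed_ok = False

str_path = os.path.join(".", "valid")               # all str: returns str
bytes_path = os.path.join(b".", b"invalid\xff")     # all bytes: returns bytes

print(mixed_ok, type(str_path).__name__, type(bytes_path).__name__)
```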

Functions accessing files

Policy: accept both bytes and str

- io.open()

- os.open()

- os.chdir()

- os.stat(), os.lstat()

- os.rename()

- os.unlink()

- shutil.*()

os.rename(), shutil.copy*() and shutil.move() allow bytes for one argument and unicode for the other

bytearray

In most cases, bytearray() can be used as bytes for a filename.

Unicode normalisation

Unicode characters can be normalized in 4 forms: NFC, NFD, NFKC or NFKD. Python never normalizes strings (nor filenames). No operating system normalizes filenames. So users using different normalization forms would be unable to retrieve their files. Don’t panic! All users use the same form.

Use unicodedata.normalize() to normalize a unicode string.
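A small sketch of why the normalization form matters when comparing names: the same visible character can be stored as one code point or as two. The strings are written as escapes so the example does not depend on how the source file itself is normalized.

```python
import unicodedata

composed = "\u00e9"       # e-acute as a single code point (NFC form)
decomposed = "e\u0301"    # "e" followed by a combining acute accent (NFD form)

same_raw = composed == decomposed
same_nfc = unicodedata.normalize("NFC", decomposed) == composed
same_nfd = unicodedata.normalize("NFD", composed) == decomposed

print(same_raw, same_nfc, same_nfd)   # False True True
```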

Python 2 has two objects that work with text: unicode and str. The unicode object stores characters in the Unicode format (encoding); the str object is a sequence of bytes/characters in which Python stores all the other encodings (utf8, cp1251, cp866, koi8-r and so on).

Unicode can be considered Python's working encoding, since it is meant to be used inside the script itself, for all kinds of string operations.

An external encoding (a str object) is meant for storing and transferring text outside the script, for example saving it to a file or sending it over the network. That is why this article calls it external. The most widely used encoding in the world is utf8, and the number of applications switching to it grows every day, which is turning it into a de facto "standard".

This encoding is good because it stores text compactly and can encode almost all the world's languages (unlike cp1251 and similar single-byte encodings). It is therefore recommended to use utf8 everywhere, including when writing scripts.

Usage

In a Python script, declare the file's encoding at the very top of the script and save the file in that encoding

    # coding: utf8

or

    # -*- coding: utf-8 -*-

so that the Python interpreter knows which encoding the file is in.

Strings in a script

Strings in a script are stored as bytes, from quote to quote:

    print 'Привет'

= 6 bytes in cp1251

= 12 bytes in utf8
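The byte counts can be verified with a short Python 3 sketch. Note the assumption: in Python 3, unlike the Python 2 code in this article, a plain string literal is already Unicode, so the bytes only appear after an explicit encode().

```python
word = "Привет"   # six Cyrillic letters

cp1251_bytes = word.encode("cp1251")   # single-byte encoding: 1 byte per letter
utf8_bytes = word.encode("utf-8")      # Cyrillic letters take 2 bytes each in UTF-8

print(len(word), len(cp1251_bytes), len(utf8_bytes))   # 6 6 12
```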

If you prefix the string with the character u, then when the script runs this byte string is decoded to unicode from the encoding declared at the top:

    # coding:utf8
    print u'Привет'

If the encoding of the file's contents differs from the declared one, the string may contain "broken characters".

Loading and saving a file

    # coding: utf8
    # Load a file encoded as utf8
    text = open('file.txt','r').read()
    # Decode from utf8 to unicode: from the external encoding to the working one
    text = text.decode('utf8')
    # Work with the text
    text += text
    # Encode the text from unicode to utf8: from the working encoding to the external one
    text = text.encode('utf8')
    # Save to a file encoded as utf8
    open('file.txt','w').write(text)
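For comparison, here is a sketch of the same round trip in Python 3, where open() takes an encoding argument and does the decode and encode steps itself. The temporary path and the sample text are assumptions added so that the example is self-contained.

```python
import os
import tempfile

path = os.path.join(tempfile.mkdtemp(), "file.txt")

# Write some utf8 text first so there is something to load.
with open(path, "w", encoding="utf-8") as f:
    f.write("Привет\n")

# Reading decodes from utf8 to str (the "working" form) in one step.
with open(path, "r", encoding="utf-8") as f:
    text = f.read()

text += text   # work with the text

# Writing encodes from str back to utf8 (the "external" form).
with open(path, "w", encoding="utf-8") as f:
    f.write(text)
```

Passing the encoding explicitly avoids depending on the platform default, which is what goes wrong in the cp866 console example later in this article.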

Text in a script

    # coding: utf8
    a = 'Текст в utf8'        # "Text in utf8"
    b = u'Текст в unicode'    # "Text in unicode"
    # Equivalent to: b = 'Текст в unicode'.decode('utf8')
    # since the script itself is stored in utf8
    print 'a =',type(a),a
    # decode from utf-8 to unicode, then encode unicode to cp866 (the console encoding of Russian Windows XP)
    print 'a2 =',type(a),a.decode('utf8').encode('cp866')
    print 'b =',type(b),b

It is best to pass text to print in the working encoding, or to encode it into the OS encoding.

Output of the script when run from the Windows XP console:

    a = <type 'str'> ╨в╨╡╨║╤Б╤В ╨▓ utf8
    a2 = <type 'str'> Текст в utf8
    b = <type 'unicode'> Текст в unicode

In the last line, print converted unicode to cp866 automatically; see the next section.

Automatic encoding conversion

In some cases Python converts encodings for you to simplify development; the print example can be seen in the previous section.

In the example below, Python itself converts utf8 to unicode, bringing both strings to one encoding so that they can be concatenated.

    # coding: utf8
    # Set the default external encoding to utf8
    import sys
    reload(sys)
    sys.setdefaultencoding('utf8')
    a = 'Текст в utf8'
    b = u'Текст в unicode'
    c = a + b
    print 'a =',type(a),a
    print 'b =',type(b),b
    print 'c =',type(c),c

Output

    a = <type 'str'> Текст в utf8
    b = <type 'unicode'> Текст в unicode
    c = <type 'unicode'> Текст в utf8Текст в unicode

As we can see, the resulting string "c" is unicode. If the encodings of the two strings had matched, no automatic conversion would have taken place and the result would have kept the encoding of the operands.

Automatic conversion usually kicks in when different encodings interact.

Example of automatic encoding conversion in comparisons

    # coding: utf8
    # Set the default external encoding to utf8
    import sys
    reload(sys)
    sys.setdefaultencoding('utf8')
    print '1. utf8 and unicode', 'true' if u'Слово'.encode('utf8') == u'Слово' else 'false'
    print '2. utf8 and cp1251', 'true' if u'Слово'.encode('utf8') == u'Слово'.encode('cp1251') else 'false'
    print '3. cp1251 and unicode', 'true' if u'Слово'.encode('cp1251') == u'Слово' else 'false'

Output

    1. utf8 and unicode true
    2. utf8 and cp1251 false
    script.py:10: UnicodeWarning: Unicode equal comparison failed to convert both arguments to Unicode - interpreting them as being unequal
    print '3. cp1251 and unicode', 'true' if u'Слово'.encode('cp1251') == u'Слово' else 'false'
    3. cp1251 and unicode false

In comparison 1, the utf8 string was converted to unicode and the comparison worked correctly.

In comparison 2, two encodings of the same kind are compared (both external); since the strings are encoded differently, the condition reports them as not equal.

In comparison 3, a warning is emitted because encodings of different kinds are compared (working and external) and automatic decoding failed: the default external encoding is utf8, and Python could not decode a cp1251 string as utf8.

Printing lists

    # coding: utf8
    d = ['Тест','списка']
    print '1',d
    print '2',d.__repr__()
    print '3',','.join(d)

Output:

    1 ['\xd0\xa2\xd0\xb5\xd1\x81\xd1\x82', '\xd1\x81\xd0\xbf\xd0\xb8\xd1\x81\xd0\xba\xd0\xb0']
    2 ['\xd0\xa2\xd0\xb5\xd1\x81\xd1\x82', '\xd1\x81\xd0\xbf\xd0\xb8\xd1\x81\xd0\xba\xd0\xb0']
    3 Тест,списка

When a list is printed, __repr__() is called, which returns the internal representation of the list, so prints 1 and 2 are equivalent. To print the list correctly, it has to be converted to a string, as in print 3.

Setting the external encoding at startup

    PYTHONIOENCODING=utf8 python 1.py
