I am trying to set up an iSCSI target:

[root@localhost /]# targetcli

targetcli shell version 2.1.fb37

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> cd backstores/fileio

/backstores/fileio> create disk01 /iscsi_disks/disk01.img 5G

Created fileio disk01 with size 5368709120

/backstores/fileio> cd /iscsi

/iscsi> create iqn.2015-06.world.server:storage.target01

Created target iqn.2015-06.world.server:storage.target01.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

/iscsi> cd iqn.2015-06.world.server:storage.target01/tpg1/luns

/iscsi/iqn.20...t01/tpg1/luns> create /backstores/fileio/disk01

Created LUN 0.

/iscsi/iqn.20...t01/tpg1/luns> cd ../acls

/iscsi/iqn.20...t01/tpg1/acls> create iqn.2015-06.world.server:www.server.world

Created Node ACL for iqn.2015-06.world.server:www.server.world

Created mapped LUN 0.

/iscsi/iqn.20...t01/tpg1/acls> cd iqn.2015-06.world.server:www.server.world/

/iscsi/iqn.20....server.world> set auth userid=foo

Parameter userid is now 'foo'.

/iscsi/iqn.20....server.world> set auth password=bar

Parameter password is now 'bar'.

/iscsi/iqn.20....server.world> exit

Global pref auto_save_on_exit=true

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json

[root@localhost /]# service iscsid restart

Redirecting to /bin/systemctl restart iscsid.service

[root@localhost /]# service iscsi restart

Redirecting to /bin/systemctl restart iscsi.service


Discovery seems to work fine:

[root@linuxbox ~]# iscsiadm -m discovery -t sendtargets -p 10.0.0.60

10.0.0.60:3260,1 iqn.2015-06.world.server:storage.target01

However, when I try to connect, I get this authorization failure message:

[root@linuxbox ~]# iscsiadm -m node --targetname "iqn.2015-06.world.server:storage.target01" --portal "10.0.0.60:3260" --login

Logging in to [iface: default, target: iqn.2015-06.world.server:storage.target01, portal: 10.0.0.60,3260] (multiple)

iscsiadm: Could not login to [iface: default, target: iqn.2015-06.world.server:storage.target01, portal: 10.0.0.60,3260].

iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)

iscsiadm: Could not log into all portals
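One thing worth ruling out first. This is a hedged reading of the transcript, not a confirmed diagnosis. LIO looks up the node ACL by the initiator's IQN, and the CHAP userid/password above are stored on that ACL. The initiator on linuxbox must therefore present exactly iqn.2015-06.world.server:www.server.world and also send foo/bar. The helper names below are hypothetical. The script reads the InitiatorName line from a file such as /etc/iscsi/initiatorname.iscsi and compares it with the ACL name:

```shell
#!/bin/sh
# Sketch: does the initiator's IQN match the ACL created on the target?
# The file path and the expected IQN are arguments; nothing is hardcoded.

# Print the value of the first uncommented InitiatorName= line in FILE.
initiator_iqn() {
    sed -n 's/^[[:space:]]*InitiatorName=//p' "$1" | head -n 1
}

# check_initiator_iqn FILE EXPECTED_IQN -> exit status 0 on match, 1 otherwise.
check_initiator_iqn() {
    actual=$(initiator_iqn "$1")
    if [ "$actual" = "$2" ]; then
        echo "OK: initiator IQN matches the ACL ($actual)"
        return 0
    fi
    echo "MISMATCH: initiator sends '$actual', target ACL is '$2'" >&2
    return 1
}
```

On the client you would run `check_initiator_iqn /etc/iscsi/initiatorname.iscsi iqn.2015-06.world.server:www.server.world`. If the names match, the remaining suspect is CHAP. The default node.session.auth.authmethod in iscsid.conf is None, so the initiator will not send foo/bar unless it is configured to.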


Ben.T. George

Sr. Technical Engineer


Feb.07

Ubuntu: iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)

I was trying to export an iSCSI share from a CentOS server, and my client is an Ubuntu server.

I know this works on CentOS/Red Hat without any changes, and I was under the impression that Ubuntu would behave the same way.

On CentOS, these are the commands to create the iSCSI target:

Server

Install and enable services:

yum -y install target*

systemctl start target && systemctl enable target

# targetcli /backstores/block create name=ubuntu-iscsi dev=/dev/cl_centos/lv_scsi

# targetcli /iscsi create iqn.2017-02.com.example.kw:centos

# targetcli /iscsi/iqn.2017-02.com.example.kw:centos/tpg1/acls create iqn.2017-02.com.example.kw:ubuntu-archive

# targetcli /iscsi/iqn.2017-02.com.example.kw:centos/tpg1/luns create /backstores/block/ubuntu-iscsi

# targetcli /iscsi/iqn.2017-02.com.example.kw:centos/tpg1/portals delete 0.0.0.0 3260

# targetcli /iscsi/iqn.2017-02.com.example.kw:centos/tpg1/portals create 192.168.1.66 3260

# targetcli saveconfig

If firewalld is enabled, open TCP port 3260 and reload the rules so the permanent change takes effect:

# firewall-cmd --permanent --add-port=3260/tcp

# firewall-cmd --reload

That's all on the target (server) side.

Client

On the client side, install the iSCSI packages:

# apt-get -y install open-iscsi

Edit /etc/iscsi/iscsid.conf and change it as below:

# To request that the iscsi initd scripts startup a session set to “automatic”.

# node.startup = automatic

node.startup = automatic
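If several clients need the same change, you can script it. The helper below is a hypothetical sketch, not an open-iscsi tool. It sets KEY = VALUE in an iscsid.conf-style file and leaves commented-out lines alone. Keep in mind that iscsid.conf only provides defaults for node records created by later discoveries.

```shell
#!/bin/sh
# Sketch: set "KEY = VALUE" in an iscsid.conf-style file, replacing an
# existing uncommented line for KEY or appending one if there is none.
# Commented-out lines such as "# node.startup = automatic" are left alone.
# VALUE must not contain '|', '&' or backslashes (they are special to sed).
set_conf_value() {
    file=$1
    key=$2
    value=$3
    # Escape the dots in the key so they match literally in the regex.
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -q "^[[:space:]]*$key_re[[:space:]]*=" "$file"; then
        sed -i.bak "s|^[[:space:]]*$key_re[[:space:]]*=.*|$key = $value|" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}
```

For example: `set_conf_value /etc/iscsi/iscsid.conf node.startup automatic`.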

I also manually edited /etc/iscsi/initiatorname.iscsi and added the IQN taken from the server. Then restart iscsid:

# service iscsid restart
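Here is a sketch of the initiatorname.iscsi edit. It assumes the IQN "taken from the server" is the ACL name created with targetcli (iqn.2017-02.com.example.kw:ubuntu-archive in the commands above); the post does not state the value. The helper name is hypothetical. iscsid reads the initiator name when it starts, which is why it is restarted afterwards.

```shell
#!/bin/sh
# Sketch: make the initiator present the IQN that the target's ACL expects.
# set_initiator_iqn FILE IQN  (FILE is normally /etc/iscsi/initiatorname.iscsi)
set_initiator_iqn() {
    file=$1
    iqn=$2
    # Keep a copy of the old file so the previous name can be restored.
    if [ -f "$file" ]; then
        cp -p "$file" "$file.bak"
    fi
    printf 'InitiatorName=%s\n' "$iqn" > "$file"
}
```

For example: `set_initiator_iqn /etc/iscsi/initiatorname.iscsi iqn.2017-02.com.example.kw:ubuntu-archive`, followed by `service iscsid restart`.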

Run the discovery:

# iscsiadm -m discovery --type sendtargets --portal 192.168.1.66

And finally, log in to the target:

# iscsiadm -m node --targetname iqn.2017-02.com.example.kw:centos --portal 192.168.1.66 --login

After this, the newly added disk shows up in fdisk.


iSCSI Setup with FlashArray

This blog post covers the steps required to connect FlashArray volumes over iSCSI on RHEL variant Linux systems (RHEL, Oracle Linux, CentOS).

Required package on RHEL for iSCSI: iscsi-initiator-utils

Multipathing package: device-mapper-multipath

High level process with iSCSI

- Set up iSCSI at the initiator

- Set up the host at the FlashArray level

- Discover the iSCSI targets

- Log in to the iSCSI targets

- Create volumes, attach them to the hosts, scan them and create the filesystems

1. To set up iSCSI at the host level, install the package iscsi-initiator-utils and start the iscsi service. Make sure to include the multipathing package as well.

# systemctl enable iscsid

Created symlink from /etc/systemd/system/multi-user.target.wants/iscsid.service to /usr/lib/systemd/system/iscsid.service.

# systemctl start iscsid

# systemctl status iscsid

● iscsid.service - Open-iSCSI

2. Set up the host at the FlashArray level, starting with the initiator's IQN, which can be found in /etc/iscsi/initiatorname.iscsi:

# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1988-12.com.oracle:abd254f8bba0

Go to the Pure FlashArray GUI, click Storage in the left frame and select the Hosts tab. Click the + sign on the right side to create a new host, and provide the IQN you got from the initiator.

Configure IQN for a host in FlashArray

3. On the host, discover the iSCSI targets

$ iscsiadm -m discovery -t st -p

# iscsiadm -m discovery -t st -p 192.168.228.24

4. Create a volume on the Pure FlashArray and attach it to the host. Without a volume/LUN attached to the host, trying to log in to the target results in errors, for example with:

iscsiadm -m node --targetname iqn.2010-06.com.purestorage:flasharray.7b87f11aad2d06c8 -p 192.168.154.254 -l

5. Using the target's IQN, log in to the iSCSI target with the iscsiadm command.

iscsiadm -m node --targetname -p --login

# iscsiadm -m node --targetname iqn.2010-06.com.purestorage:flasharray.6fb3576b8af88fd5 -p 192.168.228.24 --login

Log in on all the iSCSI ports of the target. So far we have connected only to the first iSCSI target IP, so the Purity GUI shows Uneven paths. Run the login command above against every available iSCSI path.

To login automatically on the host startup, run the following command to update the config at the host level. Do it on all the available iSCSI target IP addresses.

iscsiadm -m node -T -p --op update -n node.startup -v automatic

# iscsiadm -m node --targetname iqn.2010-06.com.purestorage:flasharray.6fb3576b8af88fd5 -p 192.168.228.27 --op update -n node.startup -v automatic

# iscsiadm -m node --targetname iqn.2010-06.com.purestorage:flasharray.6fb3576b8af88fd5 -p 192.168.228.26 --op update -n node.startup -v automatic

# iscsiadm -m node --targetname iqn.2010-06.com.purestorage:flasharray.6fb3576b8af88fd5 -p 192.168.228.25 --op update -n node.startup -v automatic

# iscsiadm -m node --targetname iqn.2010-06.com.purestorage:flasharray.6fb3576b8af88fd5 -p 192.168.228.24 --op update -n node.startup -v automatic
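The per-portal login and update commands follow one pattern, so you can loop over them. This is a minimal sketch, assuming all portal IPs belong to the same target and each was already discovered, so that a node record exists. The function name and the DRY_RUN switch are hypothetical helpers; the iscsiadm options are the ones used in this post.

```shell
#!/bin/sh
# Sketch: log in to one target through several portals and make each login
# persistent across reboots. With DRY_RUN set, commands are only printed.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# login_all_portals TARGET_IQN PORTAL...
login_all_portals() {
    target=$1
    shift
    for portal in "$@"; do
        run iscsiadm -m node -T "$target" -p "$portal" --login
        run iscsiadm -m node -T "$target" -p "$portal" --op update -n node.startup -v automatic
    done
}
```

For example: `login_all_portals iqn.2010-06.com.purestorage:flasharray.6fb3576b8af88fd5 192.168.228.24 192.168.228.25 192.168.228.26 192.168.228.27`.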

You can verify that the iSCSI setup succeeded in the FlashArray GUI: click Health in the left frame, go to the Connections tab and look at the Paths for the host you just added. If it shows "Unused Port" and there are no entries for the host initiator, the host has not logged in to the target.

Clicking on the "Unused Port" shows the connection map of the host ports.

After discovering and logging in to the target from all the initiators, the Paths column shows Redundant for all of them.

6. Create the volumes on the Pure FlashArray and attach them to the host.


Seneca-CD Research Project

To see a working iSCSI model in action, I need a remote server set up as an iSCSI Initiator Node. Although we do have a second machine, we decided that I should work in a virtual environment instead. So I'm going to create a virtual machine by following the instructions in this post, and it will be my remote server for this experiment.

My main resources for these next few steps are:

Step 1: Set-up a Virtual Machine and Install “iscsi-initiator-utils”

Funny thing, I’ve set up my virtual machine, but my host can’t interact with it! No pings, no ssh, nothing. Some research later, I discovered that it was because I didn’t properly connect the VM to my host network adapter. An excellent tutorial on the subject can be found here (thanks to Haydn Solomon).

Now for some reason my virtual machine can’t communicate with its host machine. *sigh*

For now, I’m going to try to use my localhost as both a Target and an Initiator.

Installing the utilities to manage an iSCSI initiator is pretty easy with YUM:

Although, as it turns out, my machine already has these preloaded.

Step 2: Setting the Initiator Authentication

Similarly to tgt-admin, there is a tool called iscsiadm which allows us to do general administration for open-iscsi Initiator Nodes.

I’ll edit the open-iscsi daemon configuration file to contain the necessary target authentication details (as root):

First, enable CHAP authentication by uncommenting the line:

Then, add the CHAP Initiator and Target authentication details created on the Target node:

It is worth noting that the discovery of a Target itself by an Initiator node can be authenticated through CHAP in a similar way, in the same config file.

Step 3: Discovering the Target(s)

Now we get to play with iscsiadm:

- -m discovery is setting the operation mode to search for Targets

- -t sendtargets is telling the Targets to return their names

- -p 10.0.0.21 is explicitly defining which IP or host to scan for Target nodes.

And now I’m receiving an error message:

To save time, I specified localhost instead of an IP address and here’s what happened:

Good! Now to make sure the iSCSI daemon is set to start on boot:

I’ll check to make sure the target node is still registering with:

So far so good! Target acquired.

Step 4: Login to the Target, and Check Partitions

All that’s left is to log in to the Target node. If this is successful, I should be able to see the iSCSI partition I created for the Target Node as one of my local drives.

Logging in is simple after editing the .conf file:

or SHOULD be, except I’m getting this error:

After spending some time with the settings, I’ve discovered that Target Authentication (the credentials the Initiator provides to get access to the Target) is working properly, but Initiator Authentication (the credentials the Target provides to the Initiator) is causing some problems.

Rather than waste too much time here, I'm going to disable Initiator (also known as outgoing) authentication by removing the outgoinguser details from /etc/tgt/targets.conf:

And then disable outgoing authentication in /etc/iscsi/iscsid.conf:
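Here is a sketch of the initiator-side half of this step. In tgt, outgoinguser holds the credentials the target presents to the initiator (mutual CHAP). On the open-iscsi side those correspond to node.session.auth.username_in and node.session.auth.password_in. The helper name is hypothetical. iscsid.conf only seeds node records at discovery time, so the Target has to be rediscovered afterwards.

```shell
#!/bin/sh
# Sketch: turn off mutual (target-to-initiator) CHAP on the initiator side by
# commenting out the *_in credentials in an iscsid.conf-style file.
# A backup of the original file is kept as FILE.bak.
disable_mutual_chap() {
    sed -i.bak \
        -e 's/^[[:space:]]*node\.session\.auth\.username_in[[:space:]]*=/#&/' \
        -e 's/^[[:space:]]*node\.session\.auth\.password_in[[:space:]]*=/#&/' \
        "$1"
}
```

For example: `disable_mutual_chap /etc/iscsi/iscsid.conf`. The one-way username/password lines are left untouched.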

Now, I must rediscover the Target Node, and then try to log in:

This time, it seems to have worked properly.

To confirm, I'll log out of the session, check my partitions, then log in and redo the check:

It works!! Line 29 shows a new partition that only exists when the iSCSI session is active!

Conclusion

This was tough. There are some serious unknowns here, mainly because of the different tools involved. As a next step, it would be best to delve into the documentation of these tools and their projects so that I can create more substantial test conditions. In the meantime, however, it is working!


login iqn.2003-01.com.redhat.iscsi-gw:ceph-igw failed

I don't understand how gwcli sets CHAP under the client name.

1. Does iscsiadm need to specify the client name when it logs in to the target?

2. Does the client name have to follow some rule, such as target-name:client-name?

Login result:

iscsiadm -m node -T iqn.2003-01.com.redhat.iscsi-gw:ceph-igw -l

Logging in to [iface: default, target: iqn.2003-01.com.redhat.iscsi-gw:ceph-igw, portal: 172.16.121.131,3260] (multiple)

Logging in to [iface: default, target: iqn.2003-01.com.redhat.iscsi-gw:ceph-igw, portal: 172.16.121.130,3260] (multiple)

iscsiadm: Could not login to [iface: default, target: iqn.2003-01.com.redhat.iscsi-gw:ceph-igw, portal: 172.16.121.131,3260].

iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)

iscsiadm: Could not login to [iface: default, target: iqn.2003-01.com.redhat.iscsi-gw:ceph-igw, portal: 172.16.121.130,3260].

iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)

iscsiadm: Could not log into all portals

Prepare for login:

vi /etc/iscsi/iscsid.conf

node.session.auth.authmethod = CHAP

node.session.auth.username = consumer

node.session.auth.password = 012345678912

restart iscsid

/> ls
o- / ............................................................ [...]
  o- clusters ........................................... [Clusters: 1]
  | o- c1 .............................................. [HEALTH_WARN]
  |   o- pools ........................................... [Pools: 1]
  |   | o- rbd ............... [(x3), Commit: 384M/6G (6%), Used: 3K]
  |   o- topology ................................. [OSDs: 1,MONs: 1]
  o- disks .......................................... [384M, Disks: 3]
  | o- rbd.disk_1 .................................. [disk_1 (128M)]
  | o- rbd.disk_2 .................................. [disk_2 (128M)]
  | o- rbd.disk_3 .................................. [disk_3 (128M)]
  o- iscsi-target ...................................... [Targets: 1]
    o- iqn.2003-01.com.redhat.iscsi-gw:ceph-igw ......... [Gateways: 2]
      o- gateways ............................. [Up: 2/2, Portals: 2]
      | o- node0 ............................. [172.16.121.130 (UP)]
      | o- node1 ............................. [172.16.121.131 (UP)]
      o- host-groups ................................... [Groups : 0]
      o- hosts ........................................... [Hosts: 3]
        o- iqn.1994-05.com.redhat:rh7-client .. [Auth: None, Disks: 0(0b)]
        o- iqn.1994-05.com.redhat:xx ......... [Auth: CHAP, Disks: 1(128M)]
        | o- lun 0 .................... [rbd.disk_1(128M), Owner: node1]
        o- iqn.2003-01.com.redhat.iscsi-gw:nike [Auth: CHAP, Disks: 1(128M)]
          o- lun 0 .................... [rbd.disk_2(128M), Owner: node0]

rbd-target-api.log:

(_get_rbd_config) config object contains '{
  "clients": {
    "iqn.1994-05.com.redhat:rh7-client": {
      "auth": {
        "chap": ""
      },
      "created": "2017/08/28 06:08:53",
      "group_name": "",
      "luns": {},
      "updated": "2017/08/28 06:08:53"
    },
    "iqn.1994-05.com.redhat:xx": {
      "auth": {
        "chap": "consumer/012345678912"
      },
      "created": "2017/08/29 03:05:00",
      "group_name": "",
      "luns": {
        "rbd.disk_1": {
          "lun_id": 0
        }
      },
      "updated": "2017/08/29 06:20:45"
    },
    "iqn.2003-01.com.redhat.iscsi-gw:nike": {
      "auth": {
        "chap": "consumer/012345678912"
      },
      "created": "2017/08/29 07:07:28",
      "group_name": "",
      "luns": {
        "rbd.disk_2": {
          "lun_id": 0
        }
      },
      "updated": "2017/08/29 07:11:57"
    }
  },
  "created": "2017/08/28 03:26:42",
  "disks": {
    "rbd.disk_1": {
      "created": "2017/08/29 02:41:52",
      "image": "disk_1",
      "owner": "node1",
      "pool": "rbd",
      "pool_id": 1,
      "updated": "2017/08/29 02:41:52",
      "wwn": "95c0d3a9-96cf-4158-9341-88020e2cc8d7"
    },
    "rbd.disk_2": {
      "created": "2017/08/29 03:02:49",
      "image": "disk_2",
      "owner": "node0",
      "pool": "rbd",
      "pool_id": 1,
      "updated": "2017/08/29 03:02:49",
      "wwn": "8383bdfa-1e94-4a94-ae59-c33a0dd7a737"
    },
    "rbd.disk_3": {
      "created": "2017/08/29 03:03:51",
      "image": "disk_3",
      "owner": "node1",
      "pool": "rbd",
      "pool_id": 1,
      "updated": "2017/08/29 03:03:51",
      "wwn": "d80cc287-4b16-408b-932a-b5ede4e5bc96"
    }
  },
  "epoch": 20,
  "gateways": {
    "created": "2017/08/28 03:31:58",
    "ip_list": [
      "172.16.121.131",
      "172.16.121.130"
    ],
    "iqn": "iqn.2003-01.com.redhat.iscsi-gw:ceph-igw",
    "node0": {
      "active_luns": 1,
      "created": "2017/08/28 04:00:44",
      "gateway_ip_list": [
        "172.16.121.131",
        "172.16.121.130"
      ],
      "inactive_portal_ips": [
        "172.16.121.131"
      ],
      "iqn": "iqn.2003-01.com.redhat.iscsi-gw:ceph-igw",
      "portal_ip_address": "172.16.121.130",
      "tpgs": 2,
      "updated": "2017/08/29 03:02:49"
    },
    "node1": {
      "active_luns": 2,
      "created": "2017/08/28 03:33:26",
      "gateway_ip_list": [
        "172.16.121.131",
        "172.16.121.130"
      ],
      "inactive_portal_ips": [
        "172.16.121.130"
      ],
      "iqn": "iqn.2003-01.com.redhat.iscsi-gw:ceph-igw",
      "portal_ip_address": "172.16.121.131",
      "tpgs": 2,
      "updated": "2017/08/29 03:03:51"
    }
  },
  "groups": {},
  "updated": "2017/08/29 07:11:57",
  "version": 3
}'
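Two things seem worth checking here. They are hedged, since the issue itself records no answer.

First, iscsiadm never takes a client name on the command line. The initiator always presents the InitiatorName from /etc/iscsi/initiatorname.iscsi, so that value has to equal one of the gwcli hosts entries, for example iqn.1994-05.com.redhat:xx, the host that has CHAP and a LUN. Nothing in the output above suggests a target-name:client-name convention.

Second, iscsid.conf only supplies defaults for node records created by a later discovery. Records that already exist keep their old auth settings.

The sketch below writes CHAP into an existing node record directly. The run wrapper and DRY_RUN variable are illustrative additions, not iscsiadm features.

```shell
#!/bin/sh
# Sketch: write CHAP settings into an existing open-iscsi node record.
# With DRY_RUN set, the iscsiadm commands are printed instead of executed.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# set_node_chap TARGET_IQN PORTAL USERNAME PASSWORD
set_node_chap() {
    for kv in "node.session.auth.authmethod=CHAP" \
              "node.session.auth.username=$3" \
              "node.session.auth.password=$4"; do
        # ${kv%%=*} is the setting name, ${kv#*=} is everything after the first "=".
        run iscsiadm -m node -T "$1" -p "$2" -o update -n "${kv%%=*}" -v "${kv#*=}"
    done
}
```

For example, run `set_node_chap iqn.2003-01.com.redhat.iscsi-gw:ceph-igw 172.16.121.130 consumer 012345678912`. Repeat it for 172.16.121.131 and then log in again.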


#1

Hello everyone!

I have a problem setting up iSCSI with CHAP on my Proxmox server.

I have an HPE MSA1060 disk array on which I created a CHAP authentication record:

initiator name: the IQN found on the Proxmox server in /etc/iscsi/initiatorname.iscsi

password: test1234test

On the Proxmox server, in /etc/iscsi/iscsid.conf, I set:

# To enable CHAP authentication set node.session.auth.authmethod

# to CHAP. The default is None.

node.session.auth.authmethod = CHAP

# To configure which CHAP algorithms to enable set

# node.session.auth.chap_algs to a comma seperated list.

# The algorithms should be listen with most prefered first.

# Valid values are MD5, SHA1, SHA256, and SHA3-256.

# The default is MD5.

#node.session.auth.chap_algs = SHA3-256,SHA256,SHA1,MD5

# To set a CHAP username and password for initiator

# authentication by the target(s), uncomment the following lines:

node.session.auth.username = username

node.session.auth.password = test1234test

# To set a CHAP username and password for target(s)

# authentication by the initiator, uncomment the following lines:

#node.session.auth.username_in = username_in

#node.session.auth.password_in = password_in

# To enable CHAP authentication for a discovery session to the target

# set discovery.sendtargets.auth.authmethod to CHAP. The default is None.

discovery.sendtargets.auth.authmethod = CHAP

# To set a discovery session CHAP username and password for the initiator

# authentication by the target(s), uncomment the following lines:

discovery.sendtargets.auth.username = username

discovery.sendtargets.auth.password = test1234test

When I run lsscsi, it does not find my HPE MSA.

When I run iscsiadm -m node --portal "192.168.0.1" --login, it returns:

Logging in to [iface: default, target: iqn.xxx.hpe:storage.msa1060.xxxxxxx, portal: 192.168.0.1,3260]

iscsiadm: Could not login to [iface: default, target: iqn.xxxxx.hpe:storage.msa1060.xxxxxxxx, portal: 192.168.0.1,3260].

iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)

iscsiadm: Could not log into all portals

(I changed the IP and the IQN for this post.)

So I don't know how to make it work…

Can someone help me?

When I disable CHAP on the HPE MSA and comment out all the CHAP lines in /etc/iscsi/iscsid.conf, lsscsi finds the volumes from the HPE MSA and the iscsiadm command returns no error.

So there is something I missed for CHAP, but I don't know what…

#2

The error message is likely correct, and your authentication material is wrong. iscsiadm is part of the standard Linux packages and is not modified by Proxmox in any way, so the issue you are having is strictly between HPE and Linux.

Are you sure that the username is "username"? Have you properly permissioned the HPE side to allow the PVE IQN to log in? What does the HPE log say?

Have you consulted the HPE document that guides you through connecting a Linux host to their storage?


#4

I have already read this document:

https://www.hpe.com/psnow/doc/a00105312en_us

I know it is not specifically related to Proxmox, but I posted here thinking I could find help, because there are more system administrators here than on a general Linux forum…

As for the username, I can't find any information on it. When I looked for more, I found people saying that the username is not restricted and can be anything; what matters is that the password is correct and that the CHAP record on the HPE storage uses the correct IQN of the initiator (the Proxmox server).

I can't find the HPE logs, but I'll keep trying. I posted in the HPE forum too, but got only one response, from a bot asking if I need help…

Has anyone connected HPE storage to a Linux host or to a Proxmox server?

In any case, thanks for your future answers, and thanks @bbgeek17 for your answers!


#5

I finally got there; all that's missing is the multipath test.

For those who have the same problem: to get the logs, go to the array's web administration interface, then Support, enter the information, click "collect logs" and wait several minutes. It then downloads a .zip file with lots of logs.

I searched the extracted files for "chap failed" entries:

Code:

# Print every "chap failed" line (case-insensitive), prefixed with its file name
grep -ri "chap failed" .

This returned the errors along with the affected files.

I could see that each of my username attempts produced an entry like: [0]##########Chap failed - No DB Entry for username

I also noticed that changing the username in iscsid.conf did not change the username used in the tests; it always stayed the same in the logs…

After searching, I found that you have to manually modify the files in nodes/iqn...../@ip_port_iscsi/default and in send_targets/@ip_portal/iqn.. .@ip_port/st_config, because those records keep the values they had at discovery time.

It turns out the username needed is the initiator's IQN (the one in /etc/iscsi/initiatorname.iscsi).

After that, either systemctl restart open-iscsi.service or iscsiadm -m node --portal "@ip_portail" --login works!

You can check with lsscsi that the disks shared by the array are visible (lsscsi has to be installed first).
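Instead of editing the files under nodes/ and send_targets/ by hand, you can script the same change with iscsiadm's update operation. This is a minimal sketch, assuming the array expects the initiator IQN as the CHAP username, as found above for the MSA1060. TARGET_IQN, the portal and the secret are placeholders you supply. The function name and DRY_RUN are hypothetical helpers.

```shell
#!/bin/sh
# Sketch: use the initiator's own IQN as the CHAP username, which is what the
# MSA expected in this thread. With DRY_RUN set, commands are only printed.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# chap_user_is_iqn NAMEFILE TARGET_IQN PORTAL SECRET
chap_user_is_iqn() {
    iqn=$(sed -n 's/^[[:space:]]*InitiatorName=//p' "$1" | head -n 1)
    if [ -z "$iqn" ]; then
        echo "no InitiatorName found in $1" >&2
        return 1
    fi
    # Discovery record (send_targets/...): relevant because discovery uses CHAP here.
    for kv in "authmethod=CHAP" "username=$iqn" "password=$4"; do
        run iscsiadm -m discoverydb -t sendtargets -p "$3" -o update \
            -n "discovery.sendtargets.auth.${kv%%=*}" -v "${kv#*=}"
    done
    # Node record (nodes/...): used by the actual login.
    for kv in "authmethod=CHAP" "username=$iqn" "password=$4"; do
        run iscsiadm -m node -T "$2" -p "$3" -o update \
            -n "node.session.auth.${kv%%=*}" -v "${kv#*=}"
    done
}
```

For example: `chap_user_is_iqn /etc/iscsi/initiatorname.iscsi TARGET_IQN 192.168.0.1 test1234test`, then `iscsiadm -m node --portal 192.168.0.1 --login`.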

Last edited: Nov 24, 2022


#6

Hello everyone.

I managed to enable CHAP authentication between the array and the Proxmox servers, and I can clearly see the volumes mapped on the servers.

However, I can't create an LVM volume group on it…

As soon as I run vgcreate vg_name /dev/mapper/volume_hpe

and then pvs, I get errors:

Code:

WARNING: Metadata location on /dev/mapper/volume_hpe at 4608 begins with invalid VG name.

WARNING: bad metadata text on /dev/mapper/volume_hpe in mda1

WARNING: scanning /dev/mapper/volume_hpe mda1 failed to read metadata summary.

WARNING: repair VG metadata on /dev/mapper/volume_hpe with vgck --updatemetadata.

WARNING: scan failed to get metadata summary from /dev/mapper/volume_hpe PVID oWtXNViRKrjasEzsvD1RQJ94fhLV65CD

WARNING: PV /dev/mapper/volume_hpe is marked in use but no VG was found using it.

WARNING: PV /dev/mapper/volume_hpe might need repairing.

Also, by default vgcreate does not offer me the path /dev/mapper/volume_hpe…, only /dev/sda (the servers' local disk, which already holds the Proxmox OS data).

What's weird is that after running pvcreate, when I type vgcreate vg-name, tab completion doesn't even offer the volume initialized by pvcreate…

One of my former colleagues told me: "When you have set up open-iscsi on Proxmox, connect the LUN through the web interface." But I read on some forums that this had to be done through the web interface on older versions of Proxmox, and no longer with version 7.x. Starting with 7.x (or even 6.x), we need to set it up in the shell, but I don't understand what I'm doing wrong…

If you have some tips for me, that would be great ^^

Thanks in advance

-

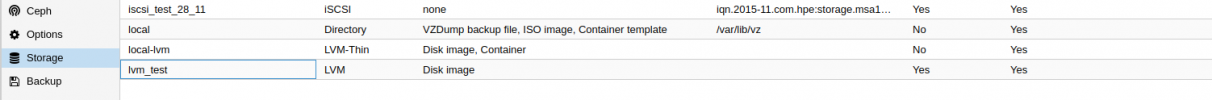

#7

So I tried a few things.

I deleted the volume from the HPE array and created a new one.

In the web GUI, under Datacenter > Storage, I added an iSCSI storage named iscsi_test, with portal @ip_portal and target set to the target (the IQN of the HPE volume). I checked Enabled and unchecked Use LUNs directly.

After that, I tried to create an LVM storage from the web GUI, with the iSCSI storage above as base storage, the LUN as base volume, and Enable and Shared checked. An error occurred saying that the volume could not be created.

So I then tried in the shell: pvesm add lvm lvm_test --vgname vg_lvm_test --base iscsi_test_28_11:0.0.1.scsi-3600c0ff000668360f3f3846301000000 --shared yes --content images. It works, but I can't use the storage…


I am attempting to set up an iSCSI target with one block LUN, which is a ZFS zvol, on a CentOS 7 machine. I've only ever used iscsitarget and the /etc/iet/ietd.conf file on Ubuntu systems.

I have this (from targetcli):

/iscsi> ls
o- iscsi ................................................ [Targets: 1]
  o- iqn.2019-09.com.nas:main .............................. [TPGs: 1]
    o- tpg1 ..................................... [no-gen-acls, no-auth]
      o- acls .............................................. [ACLs: 1]
      | o- iqn.2019-09.com.nas:main:client ........... [Mapped LUNs: 1]
      |   o- mapped_lun0 ..................... [lun0 block/block1 (rw)]
      o- luns .............................................. [LUNs: 1]
      | o- lun0 ......... [block/block1 (/dev/zd0) (default_tg_pt_gp)]
      o- portals ........................................ [Portals: 1]
        o- 0.0.0.0:3260 ........................................ [OK]
/iscsi>

And I ran this:

/iscsi/iqn.20...nas:main/tpg1> set attribute authentication=0

Parameter authentication is now '0'.

/iscsi/iqn.20...nas:main/tpg1>

However, a Windows client refuses to connect, and from an Ubuntu machine I receive this authorization error:

iscsiadm -m node --targetname "iqn.2019-09.com.nas:main" --portal "192.168.0.146" --login

Logging in to [iface: default, target: iqn.2019-09.com.nas:main, portal: 192.168.0.146,3260] (multiple)

iscsiadm: Could not login to [iface: default, target: iqn.2019-09.com.nas:main, portal: 192.168.0.146,3260].

iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)

iscsiadm: Could not log into all portals

I was not looking to use CHAP authentication, but I guess I can if I have to. targetcli is entirely new to me and I'm not sure what is missing; from looking around, that set attribute command within tpg1 should have done the trick.

From https://www.systutorials.com/docs/linux/man/8-targetcli/

No Authentication: Authentication is disabled by clearing the TPG «authentication» attribute: set attribute authentication=0. Although initiator names are trivially forgeable, generate_node_acls still works here to either ignore user-defined ACLs and allow all, or check that an ACL exists for the connecting initiator.
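As the excerpt says, generate_node_acls is a separate switch from authentication. The tree above shows tpg1 as [no-gen-acls, no-auth], so CHAP is already off, but the TPG still only admits initiators that have a matching ACL. If you really want any initiator to connect without CHAP (LIO's "demo mode"; anyone who can reach port 3260 can then log in), a minimal sketch follows. It assumes the target IQN from this thread; the function name enable_demo_mode and the TARGETCLI override variable are hypothetical, added only so the command can be dry-run with echo.

```shell
#!/bin/sh
# Hypothetical helper: put tpg1 of a target into "demo mode"
# (no CHAP, no per-initiator ACLs). Set TARGETCLI=echo to print instead of run.
enable_demo_mode() {
  target_iqn=$1
  # demo_mode_write_protect=0: otherwise auto-generated ACLs see the LUNs read-only.
  # cache_dynamic_acls=1: keep the generated ACLs after the initiator logs out.
  "${TARGETCLI:-targetcli}" "/iscsi/$target_iqn/tpg1" set attribute \
    authentication=0 generate_node_acls=1 \
    demo_mode_write_protect=0 cache_dynamic_acls=1
}
```

For example, enable_demo_mode iqn.2019-09.com.nas:main. If your targetcli version does not save automatically after a one-shot command, run targetcli saveconfig afterwards so the change survives a reboot.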

Perhaps I named something incorrectly? Under «acls» I have iqn.2019-09.com.nas:main:client, but discovery from the clients only returns iqn.2019-09.com.nas:main.
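With ACLs in use, each entry under acls has to be named exactly after the connecting client's own initiator IQN (the InitiatorName= value in /etc/iscsi/initiatorname.iscsi on the Ubuntu machine, or the Initiator Name on the Configuration tab of the Windows iSCSI Initiator), not after the target IQN that discovery returns. An entry called iqn.2019-09.com.nas:main:client only matches a client that was explicitly configured with that name. A minimal sketch that reads the Linux file on the client and prints the matching targetcli command; acl_cmd_for is a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: run on the *client*. Prints the targetcli command that
# creates an ACL named after this machine's initiator IQN.
acl_cmd_for() {
  target_iqn=$1
  initiator_file=${2:-/etc/iscsi/initiatorname.iscsi}
  # First InitiatorName= line; commented lines start with "#" and are skipped.
  initiator=$(sed -n 's/^InitiatorName=//p' "$initiator_file" | head -n 1)
  if [ -z "$initiator" ]; then
    echo "no InitiatorName= line in $initiator_file" >&2
    return 1
  fi
  echo "targetcli /iscsi/$target_iqn/tpg1/acls create $initiator"
}
```

Run the printed command on the target host. The old entry can then be removed with targetcli /iscsi/iqn.2019-09.com.nas:main/tpg1/acls delete iqn.2019-09.com.nas:main:client if no client uses that name.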

This is the guide I used to set it up:

Hi,

I am sharing storage between two machines. I followed the steps but got stuck at the last step of sharing the storage. I am using Oracle Linux 7.9 on both machines (storage and node) and sharing the storage with targetcli; everything else ran smoothly. I discovered the storage from the node, but when I try to log in, it shows this error.

Please advise.

[root@racnode1 iscsi]# iscsiadm -m discovery -t sendtargets -p 192.168.1.15

192.168.1.15:3260,1 iqn.2003-01.org.linux-iscsi.storage.x8664:sn.cf46131fa4fe

Error:

————

[root@racnode1 iscsi]# iscsiadm -m node -T iqn.2003-01.org.linux-iscsi.storage.x8664:sn.cf46131fa4fe 192.168.1.15 -l

Logging in to [iface: default, target: iqn.2003-01.org.linux-iscsi.storage.x8664:sn.cf46131fa4fe, portal: 192.168.1.15,3260] (multiple)

iscsiadm: Could not login to [iface: default, target: iqn.2003-01.org.linux-iscsi.storage.x8664:sn.cf46131fa4fe, portal: 192.168.1.15,3260].

iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)

iscsiadm: Could not log into all portals

==============================================================

Other info:

========

SSH is configured, the firewall is stopped, and ping succeeds.

Node IQN:

—————

[root@racnode1 iscsi]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2017-02.tn.wadhahdaouehi.node:racnode1

Storage IQN:

———————

[root@storage ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2003-01.org.linux-iscsi.storage.x8664:sn.cf46131fa4fe

Storage:

———————

[root@storage ~]# targetcli

targetcli shell version 2.1.53

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> ls

o- / ........................................................................ [...]

o- backstores ............................................................. [...]

| o- block ................................................. [Storage Objects: 1]

| | o- newlun_sdb ...

-


version: TrueNAS-SCALE-21.08-BETA.2.iso

environment: VMware Workstation 16 Pro

iSCSI login with CHAP authentication failed

Code:

[root@localhost ~]# iscsiadm --mode node --targetname iqn.2021-10.org.freenas.ctl:asm-t1 --portal 192.168.93.53 --login
Logging in to [iface: default, target: iqn.2021-10.org.freenas.ctl:asm-t1, portal: 192.168.93.53,3260] (multiple)
iscsiadm: Could not login to [iface: default, target: iqn.2021-10.org.freenas.ctl:asm-t1, portal: 192.168.93.53,3260].
iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)
iscsiadm: Could not log into all portals


my config

Code:

cat >>/etc/iscsi/iscsid.conf<<EOF
discovery.sendtargets.auth.authmethod = CHAP
discovery.sendtargets.auth.username = user
discovery.sendtargets.auth.password = pass12345678
EOF

some error logs

Code:

[root@localhost ~]# tail -50f /var/log/messages
Oct 21 22:06:04 localhost kernel: sd 4:0:0:1: [sdc] Write Protect is off
Oct 21 22:06:04 localhost kernel: sd 4:0:0:1: [sdc] Write cache: enabled, read cache: enabled, supports DPO and FUA
Oct 21 22:06:04 localhost kernel: sd 4:0:0:1: [sdc] Optimal transfer size 16384 bytes
Oct 21 22:06:04 localhost kernel: sd 4:0:0:1: [sdc] Attached SCSI disk
Oct 21 22:06:11 localhost kernel: scsi host5: iSCSI Initiator over TCP/IP
Oct 21 22:06:11 localhost iscsid: iscsid: Connection3:0 to [target: iqn.2021-10.org.freenas.ctl:asm-t3, portal: 192.168.93.53,3260] through [iface: default] is operational now
Oct 21 22:14:48 localhost systemd: Starting Cleanup of Temporary Directories...
Oct 21 22:14:48 localhost systemd: Started Cleanup of Temporary Directories.
Oct 21 22:35:40 localhost systemd-logind: Removed session 1.
Oct 21 22:44:09 localhost systemd-logind: New session 3 of user root.
Oct 21 22:44:09 localhost systemd: Started Session 3 of user root.
Oct 21 22:51:38 localhost kernel: sd 3:0:0:0: [sdb] Synchronizing SCSI cache
Oct 21 22:51:38 localhost iscsid: iscsid: Connection1:0 to [target: iqn.2021-10.org.freenas.ctl:asm-t1, portal: 192.168.93.53,3260] through [iface: default] is shutdown.
Oct 21 22:51:42 localhost kernel: sd 4:0:0:1: [sdc] Synchronizing SCSI cache
Oct 21 22:51:42 localhost iscsid: iscsid: Connection2:0 to [target: iqn.2021-10.org.freenas.ctl:asm-t2, portal: 192.168.93.53,3260] through [iface: default] is shutdown.
Oct 21 22:51:45 localhost iscsid: iscsid: Connection3:0 to [target: iqn.2021-10.org.freenas.ctl:asm-t3, portal: 192.168.93.53,3260] through [iface: default] is shutdown.
Oct 21 22:51:54 localhost kernel: scsi host6: iSCSI Initiator over TCP/IP
Oct 21 22:51:54 localhost iscsid: iscsid: Connection4:0 to [target: iqn.2021-10.org.freenas.ctl:asm-t1, portal: 192.168.93.53,3260] through [iface: default] is operational now
Oct 21 22:51:54 localhost kernel: scsi 6:0:0:0: Direct-Access TrueNAS iSCSI Disk 360 PQ: 0 ANSI: 6
Oct 21 22:51:54 localhost kernel: sd 6:0:0:0: Attached scsi generic sg2 type 0
Oct 21 22:51:54 localhost kernel: sd 6:0:0:0: Power-on or device reset occurred
Oct 21 22:51:54 localhost kernel: sd 6:0:0:0: [sdb] 2621444 4096-byte logical blocks: (10.4 GB/10.0 GiB)
Oct 21 22:51:54 localhost kernel: sd 6:0:0:0: [sdb] 16384-byte physical blocks
Oct 21 22:51:54 localhost kernel: sd 6:0:0:0: [sdb] Write Protect is off
Oct 21 22:51:54 localhost kernel: sd 6:0:0:0: [sdb] Write cache: enabled, read cache: enabled, supports DPO and FUA
Oct 21 22:51:54 localhost kernel: sd 6:0:0:0: [sdb] Optimal transfer size 16384 bytes
Oct 21 22:51:54 localhost kernel: sd 6:0:0:0: [sdb] Attached SCSI disk
Oct 21 22:51:58 localhost kernel: sd 6:0:0:0: [sdb] Synchronizing SCSI cache
Oct 21 22:51:58 localhost iscsid: iscsid: Connection4:0 to [target: iqn.2021-10.org.freenas.ctl:asm-t1, portal: 192.168.93.53,3260] through [iface: default] is shutdown.
Oct 21 22:52:18 localhost kernel: scsi host7: iSCSI Initiator over TCP/IP
Oct 21 22:52:18 localhost iscsid: iscsid: Login failed to authenticate with target iqn.2021-10.org.freenas.ctl:asm-t1
Oct 21 22:52:18 localhost iscsid: iscsid: session 5 login rejected: Initiator failed authentication with target
Oct 21 22:52:18 localhost kernel: connection5:0: detected conn error (1020)
Oct 21 22:52:18 localhost iscsid: iscsid: Connection5:0 to [target: iqn.2021-10.org.freenas.ctl:asm-t1, portal: 192.168.93.53,3260] through [iface: default] is shutdown.
Oct 21 22:58:04 localhost kernel: scsi host8: iSCSI Initiator over TCP/IP
Oct 21 22:58:04 localhost iscsid: iscsid: Login failed to authenticate with target iqn.2021-10.org.freenas.ctl:asm-t1
Oct 21 22:58:04 localhost iscsid: iscsid: session 6 login rejected: Initiator failed authentication with target
Oct 21 22:58:04 localhost kernel: connection6:0: detected conn error (1020)
Oct 21 22:58:04 localhost iscsid: iscsid: Connection6:0 to [target: iqn.2021-10.org.freenas.ctl:asm-t1, portal: 192.168.93.53,3260] through [iface: default] is shutdown.
Oct 21 22:58:09 localhost systemd: Stopping Open-iSCSI...
Oct 21 22:58:09 localhost iscsid: iscsid: iscsid shutting down.
Oct 21 22:58:09 localhost systemd: Stopped Open-iSCSI.
Oct 21 22:58:09 localhost systemd: Starting Open-iSCSI...
Oct 21 22:58:09 localhost systemd: Started Open-iSCSI.
Oct 21 22:58:12 localhost kernel: scsi host9: iSCSI Initiator over TCP/IP
Oct 21 22:58:12 localhost iscsid: iscsid: Login failed to authenticate with target iqn.2021-10.org.freenas.ctl:asm-t1
Oct 21 22:58:12 localhost iscsid: iscsid: session 7 login rejected: Initiator failed authentication with target
Oct 21 22:58:12 localhost kernel: connection7:0: detected conn error (1020)
Oct 21 22:58:12 localhost iscsid: iscsid: Connection7:0 to [target: iqn.2021-10.org.freenas.ctl:asm-t1, portal: 192.168.93.53,3260] through [iface: default] is shutdown.
Oct 21 23:01:01 localhost systemd: Started Session 4 of user root.
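In this log the earlier sessions (for example Connection4:0 at 22:51:54) come up fine. From 22:52:18 on, every login to asm-t1 ends with "Login failed to authenticate with target", so the TCP connection to the portal works and only the authentication step fails. A small sketch to pull just those lines out of a log and count them per target; auth_failures is a hypothetical name, and /var/log/messages is only the default because that is where this system logs (Debian/Ubuntu typically use /var/log/syslog or the journal):

```shell
#!/bin/sh
# Hypothetical helper: count iscsid "Login failed to authenticate" lines per target IQN.
auth_failures() {
  log=${1:-/var/log/messages}
  grep -o 'Login failed to authenticate with target [^ ]*' "$log" |
    awk '{ print $NF }' |   # last field is the target IQN
    sort | uniq -c
}
```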

The guide here is wrong: https://www.truenas.com/docs/core/sharing/iscsi/iscsishare/

It should be:

Code:

cat >>/etc/iscsi/iscsid.conf<<EOF
node.session.auth.authmethod = CHAP
node.session.auth.username = user
node.session.auth.password = pass12345678
EOF

and the login succeeds:

Code:

[root@localhost ~]# iscsiadm --mode node --targetname iqn.2021-10.org.freenas.ctl:asm-t1 --portal 192.168.93.53 --login
Logging in to [iface: default, target: iqn.2021-10.org.freenas.ctl:asm-t1, portal: 192.168.93.53,3260] (multiple)
Login to [iface: default, target: iqn.2021-10.org.freenas.ctl:asm-t1, portal: 192.168.93.53,3260] successful.
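The fix works because discovery.sendtargets.auth.* is only used for the SendTargets discovery session, while node.session.auth.* is what the initiator presents when it logs in to a target. Note also that iscsid.conf only supplies defaults for node records created by a later discovery; a record discovered before the file was edited keeps its old settings. A minimal sketch that writes the CHAP settings straight into an existing node record with iscsiadm --op update, using the credentials from this post; set_node_chap and the ISCSIADM override variable are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: store CHAP credentials in an already-discovered node record.
# Set ISCSIADM=echo to print the iscsiadm arguments instead of running them.
set_node_chap() {
  target=$1 portal=$2 user=$3 pass=$4
  for kv in "node.session.auth.authmethod=CHAP" \
            "node.session.auth.username=$user" \
            "node.session.auth.password=$pass"; do
    # ${kv%%=*} is the setting name, ${kv#*=} its value (which may itself contain "=")
    "${ISCSIADM:-iscsiadm}" -m node -T "$target" -p "$portal" \
      --op update -n "${kv%%=*}" -v "${kv#*=}" || return 1
  done
}
```

For example, set_node_chap iqn.2021-10.org.freenas.ctl:asm-t1 192.168.93.53 user pass12345678, followed by the same iscsiadm --login command as above.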

-


Could not login to via iscsiadm

Created:

2012-05-30

Updated:

2013-04-13

-

Hi Matt,

openQRM distro: CentOS 6.1 x86_64

issues:

The error below appears in the VNC console during boot, while populating the NFS server template to LVM iSCSI storage:

sd: 0:0:0:0: Attached scsi generic sg0 type 0
scsi 1:0:0:0 Attached scsi generic sg1 type 5
sd 1:0:1:0 Attached scsi generic sg2 type 0
iscsi: registered transport (iser)
lvm-iscsi-storage: Discovering Iscsi-target 198.168.0.1:3260
198.168.0.1:3260,1 iscsiVol0
198.168.122.1,1 iscsiVol0
Logging in to [iface: default, target: iscsiVol0, portal 198.168.0.1,3260]
iscsiadm: Could not login to [iface: default, target: iscsiVol0, portal 198.168.0.1,3260].
iscsiadm: initiator reported (19 - encountered non-retryable iSCSI login failure)
iscsiadm: Could not log into all portals
find: /sys/class/iscsi_session/session*/device/target*/*/: No such file or directory
find: /sys/class/iscsi_session/session*/device/target*/*/: No such file or directory
ERROR: Udev did not detect the new device
ERROR: Could not look-up the Iscsi device in the sys-fs dir
bash-3.2#
bash-3.2#

What am I missing here? Your help is very much appreciated.

Thanks

Junix

-

Hope this info helps:

# ifconfig
br0       Link encap:Ethernet  HWaddr 78:2B:CB:33:19:A2
          inet addr:198.168.0.1  Bcast:198.168.0.255  Mask:255.255.255.0
          inet6 addr: fe80::7a3b:cbfe:fe33:19a2/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:4805823211 errors:0 dropped:0 overruns:0 frame:0
          TX packets:2105416761 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:4827190461723 (4.3 TiB)  TX bytes:794613565768 (740.0 GiB)

eth0      Link encap:Ethernet  HWaddr 78:2B:CB:63:29:A2
          UP BROADCAST RUNNING PROMISC MULTICAST  MTU:1500  Metric:1
          RX packets:4807763506 errors:0 dropped:205 overruns:0 frame:0
          TX packets:2115498198 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:4913837853719 (4.4 TiB)  TX bytes:803742870532 (748.5 GiB)
          Interrupt:36 Memory:d8000000-d8012800

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING  MTU:16436  Metric:1
          RX packets:52367354 errors:0 dropped:0 overruns:0 frame:0
          TX packets:52367354 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:117620670959 (109.5 GiB)  TX bytes:117620670959 (109.5 GiB)

virbr0    Link encap:Ethernet  HWaddr 52:54:00:66:F1:9F
          inet addr:198.168.122.1  Bcast:198.168.122.255  Mask:255.255.255.0
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:111989 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:0 (0.0 b)  TX bytes:5153395 (4.9 MiB)

Thanks.

junix

-

Additional info / outputs:

Tried via the command line:

[root@thesisProjectQRMserver ~]# iscsiadm --mode node -T iscsiVol0 -p 198.168.0.1:3260 --login
Logging in to [iface: default, target: iscsiVol0, portal: 198.168.0.1,3260] (multiple)
iscsiadm: Could not login to [iface: default, target: iscsiVol0, portal: 198.168.0.1,3260].
iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)
iscsiadm: Could not log into all portals

The /etc/ietd.conf file:

[root@thesisProjectQRMserver ~]# cat /etc/ietd.conf
Target iscsiVol0
    IncomingUser iscsiVol0 0ph6cwk5xtbr
    Lun 1 Path=/dev/mapper/cloudVG-iscsiVol0,Type=fileio
    MaxConnections 1 # iscsiVol0
    MaxSessions 1 # iscsiVol0

iscsi-target and iscsid are up and running:

[root@thesisProjectQRMserver~]# service iscsi-target status
iSCSI Target (pid 23907) is running...
[root@thesisProjectQRMserver~]# service iscsid status
iscsid (pid 24783) is running...

The iscsi service is stopped:

[root@thesisProjectQRMserver~]# service iscsi status
iscsi is stopped

The output when I started the iscsi service:

[root@thesisProjectQRMserver~]# service iscsi start
Starting iscsi: iscsiadm: Could not login to [iface: default, target: iscsiVol0, portal: 198.168.0.1,3260].
iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)
iscsiadm: Could not login to [iface: default, target: iscsiVol0, portal: 198.168.122.1,3260].
iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)
iscsiadm: Could not log into all portals
[  OK  ]
[root@thesisProjectQRMserver~]# service iscsi status
No active sessions
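The IncomingUser line in the ietd.conf shown above makes IET require CHAP from the initiator, so a manual iscsiadm login gets error 24 unless the node record carries the same username and secret (the openQRM script in this post appears to supply them as $IMAGE_TARGET and $IMAGE_ISCSI_AUTH). A minimal sketch that extracts those credentials for one target from ietd.conf; ietd_incoming_user is a hypothetical helper name and it assumes IET's one-directive-per-line layout:

```shell
#!/bin/sh
# Hypothetical helper: print "user secret" for each IncomingUser line inside
# the Target block whose name matches $2 (assumes one directive per line).
ietd_incoming_user() {
  conf=$1 want=$2
  awk -v want="$want" '
    $1 == "Target"                    { in_target = ($2 == want); next }
    in_target && $1 == "IncomingUser" { print $2, $3 }
  ' "$conf"
}
```

The two values can then be stored in the node record with iscsiadm -m node -T iscsiVol0 -p 198.168.0.1:3260 --op update -n node.session.auth.username -v <user> (and likewise for node.session.auth.password, with node.session.auth.authmethod set to CHAP) before retrying the login.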

The /etc/iscsi/iscsid.conf file

[root@thesisProjectQRMserver ~]# tail -n30 /etc/iscsi/iscsid.conf
#
# To disable digest checking for the header and/or data part of
# iSCSI PDUs, uncomment the following line:
#node.conn[0].iscsi.HeaderDigest = None
#
# The default is to never use DataDigests or HeaderDigests.
#
node.conn[0].iscsi.HeaderDigest = None
# For multipath configurations, you may want more than one session to be
# created on each iface record. If node.session.nr_sessions is greater
# than 1, performing a 'login' for that node will ensure that the
# appropriate number of sessions is created.
node.session.nr_sessions = 1
#************
# Workarounds
#************
# Some targets like IET prefer after an initiator has sent a task
# management function like an ABORT TASK or LOGICAL UNIT RESET, that
# it does not respond to PDUs like R2Ts. To enable this behavior uncomment
# the following line (The default behavior is Yes):
node.session.iscsi.FastAbort = Yes
# Some targets like Equalogic prefer that after an initiator has sent
# a task management function like an ABORT TASK or LOGICAL UNIT RESET, that
# it continue to respond to R2Ts. To enable this uncomment this line
# node.session.iscsi.FastAbort = No
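None of the lines in this tail set node.session.auth.*, so unless such lines appear earlier in the file, node records created from it carry no CHAP credentials. A quick sketch that lists only the active (uncommented) authentication settings in an iscsid.conf; active_auth_settings is a hypothetical name:

```shell
#!/bin/sh
# Hypothetical helper: list uncommented node.session.auth.* and
# discovery.sendtargets.auth.* lines (grep exits 1 if there are none).
active_auth_settings() {
  grep -E '^[[:space:]]*(node\.session|discovery\.sendtargets)\.auth\.' \
    "${1:-/etc/iscsi/iscsid.conf}"
}
```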

I'm also confused by the script below: the iSCSI configuration parameters that it supposedly appends to /etc/iscsi/iscsid.conf were not added. What am I missing here?

[root@thesisProjectQRMserver ~]# cat /usr/share/openqrm/plugins/lvm-storage/web/root-mount.lvm-iscsi-deployment | sed -n '92,150p'
function mount_rootfs() {
:
:
chmod +x /sbin/iscsi*
mkdir -p /tmp
# load iscsi related modules
modprobe iscsi_tcp
modprobe libiscsi
modprobe scsi_transport_iscsi
modprobe scsi_mod
modprobe sg
modprobe sd_mod
modprobe ib_iser
# create config /etc/iscsi/iscsid.conf
mkdir -p /etc/iscsi/
cat >> /etc/iscsi/iscsid.conf << EOF
node.startup = manual
node.session.timeo.replacement_timeout = 120
node.conn[0].timeo.login_timeout = 15
node.conn[0].timeo.logout_timeout = 15
node.conn[0].timeo.noop_out_interval = 10
node.conn[0].timeo.noop_out_timeout = 15
node.session.iscsi.InitialR2T = No
node.session.iscsi.ImmediateData = Yes
node.session.iscsi.FirstBurstLength = 262144
node.session.iscsi.MaxBurstLength = 16776192
node.conn[0].iscsi.MaxRecvDataSegmentLength = 65536
node.session.auth.authmethod = CHAP
node.session.auth.username = $IMAGE_TARGET
node.session.auth.password = $IMAGE_ISCSI_AUTH
EOF
# create /etc/iscsi/initiatorname.iscsi
cat >> /etc/iscsi/initiatorname.iscsi << EOF
InitiatorName=iqn.1993-08.org.debian:01:31721e7e6b8f
EOF
# also create /etc/initiatorname.iscsi, some open-iscsi version looking for that
cp /etc/iscsi/initiatorname.iscsi /etc/initiatorname.iscsi

«InitiatorName=iqn.1993-08.org.debian:01:31721e7e6b8f» was not appended to /etc/iscsi/initiatorname.iscsi either, as the script above should have done:

[root@thesisProjectQRMserver ~]# cat /etc/iscsi/initiatorname.iscsi
InitiatorName=iqn.1994-05.com.redhat:db77ee114224
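Separately from why the lines do not show up on this server, note that the openQRM script writes both files with cat >>, which appends: running it more than once against the same files leaves duplicate keys in iscsid.conf and a second InitiatorName= line. Where that matters, a sketch of an idempotent alternative for iscsid.conf-style files that replaces an existing key = value line or appends it once; set_conf_key is hypothetical and not part of openQRM:

```shell
#!/bin/sh
# Hypothetical helper (not part of openQRM): set "key = value" in an
# iscsid.conf-style file. The first uncommented line for the key is rewritten,
# later duplicates are dropped, and the key is appended only if missing.
set_conf_key() {
  file=$1
  tmp=$(mktemp) || return 1
  # Key and value go through the environment so awk does not interpret backslashes.
  if K=$2 V=$3 awk '
    BEGIN { k = ENVIRON["K"]; v = ENVIRON["V"] }
    {
      line = $0
      sub(/^[ \t]+/, "", line)              # ignore leading indentation
    }
    index(line, k) == 1 && substr(line, length(k) + 1) ~ /^[ \t]*=/ {
      if (!done) print k " = " v            # first match: rewrite in place
      done = 1
      next                                  # later matches: drop
    }
    { print }
    END { if (!done) print k " = " v }      # key absent: append once
  ' "$file" > "$tmp"; then
    cat "$tmp" > "$file"    # overwrite in place, keeping the file's owner and mode
    rc=$?
  else
    rc=1
  fi
  rm -f "$tmp"
  return "$rc"
}
```

Commented-out lines such as "# node.session.auth.username = ..." are left untouched, and a longer key that merely starts with the same text (for example node.session.auth.username_in) is not mistaken for a match.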

-

Hello Matt,

I can now boot the KVM VM whose image resides on the lvm-storage server (iSCSI). The only problem: when my instructor rebooted the KVM VM and checked it via VNC viewer, the VM was not able to boot again, and the error below appeared:

sd: 0:0:0:0: Attached scsi generic sg0 type 0
scsi 1:0:0:0 Attached scsi generic sg1 type 5
sd 1:0:1:0 Attached scsi generic sg2 type 0
iscsi: registered transport (iser)
lvm-iscsi-storage: Discovering Iscsi-target 198.168.0.1:3260
198.168.0.1:3260,1 iscsiVol0
198.168.122.1,1 iscsiVol0
Logging in to [iface: default, target: iscsiVol0, portal 198.168.0.1,3260]
iscsiadm: Could not login to [iface: default, target: iscsiVol0, portal 198.168.0.1,3260].
iscsiadm: initiator reported (19 - encountered non-retryable iSCSI login failure)
iscsiadm: Could not log into all portals
find: /sys/class/iscsi_session/session*/device/target*/*/: No such file or directory
find: /sys/class/iscsi_session/session*/device/target*/*/: No such file or directory
ERROR: Udev did not detect the new device
ERROR: Could not look-up the Iscsi device in the sys-fs dir
bash-3.2#
bash-3.2#

I observed that when I create a new LUN, everything works, from populating the image from NFS to the iSCSI LUN until the VM boots. The problem is that when I reboot the VM, the error above shows up again.

I hope you can guide me on how to fix this, Matt.

Thanks a lot.

Junix

-

Hi Junix,

please try stopping and starting the appliance again.

This will re-authenticate the iSCSI LUN.

Many thanks and have a nice day,

Matt Rechenburg

Project Manager openQRM

-

Hello Matt,

Is there a permanent fix, or a more efficient approach you would recommend, that avoids stopping and restarting the appliance just to re-authenticate the iSCSI LUN? Over several weeks I have observed that every time I reboot the KVM VM, it fails to log in; sometimes it only logs in after an hour or even several hours.

Your brilliant solution / recommendation on how-to is very much appreciated.

Thanks.

Junix

-

Hi Junix,

the re-authentication problems you describe are normally caused by problems in iscsid itself, not by openQRM, which just «uses» iSCSI as is. In the situation you describe, can you please check whether restarting the iscsitarget service helps?

I would also like you to check whether, e.g., the password in ietd.conf somehow «changes automatically» when this happens. You can also try using ietadm (the IET target config tool) to re-create the target that is giving you trouble.

btw: I have seen this once or twice on RH/CentOS, never on a Debian/Ubuntu iscsitarget storage server.

Many thanks, have a nice day and enjoy openQRM 5.0,

Matt Rechenburg

Project Manager openQRM
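Matt's check of whether the password in ietd.conf «changes automatically» is easy to automate, for example from cron or just before rebooting the VM. A minimal sketch that records a checksum of the file and reports when it differs from the last recorded one; ietd_changed and the default state-file path are hypothetical, and it only tells you that the file changed, not why:

```shell
#!/bin/sh
# Hypothetical helper: returns 0 and prints a message if the file changed since
# the previous call, 1 if it did not, 2 if it cannot be read.
# The first call only records a baseline (and returns 1).
ietd_changed() {
  conf=${1:-/etc/ietd.conf}
  state=${2:-/var/tmp/ietd.conf.cksum}
  new=$(cksum < "$conf") || return 2
  if [ -f "$state" ] && [ "$(cat "$state")" != "$new" ]; then
    echo "$conf changed since last check"
    printf '%s\n' "$new" > "$state"
    return 0
  fi
  printf '%s\n' "$new" > "$state"
  return 1
}
```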
