Содержание

- Processing large JSON files in Python without running out of memory

- The problem: Python’s memory-inefficient JSON loading

- A brief digression: Python’s string memory representation

- A streaming solution

- Other approaches

- Learn even more techniques for reducing memory usage—read the rest of the Larger-than-memory datasets guide for Python.

- Find performance and memory bottlenecks in your data processing code with the Sciagraph profiler

- Learn practical Python software engineering skills you can use at your job

- MemoryError in Python while combining multiple JSON files and outputting as single CSV

- 2 Answers 2

- Load large .jsons file into Pandas dataframe

- 1 Answer 1

- JSON module, then into Pandas

- Directly using Pandas

- Another memory-saving trick — using Generators

- Python: Json.load большой файл JSON MemoryError

- 2 ответа

- Json load python memory error

- Что такое MemoryError в Python?

- Почему возникает MemoryError?

- Как исправить MemoryError?

- Ошибка связана с 32-битной версией

- Как посмотреть версию Python?

- Как установить 64-битную версию Python?

- Оптимизация кода

- Явно освобождаем память с помощью сборщика мусора

Processing large JSON files in Python without running out of memory

by Itamar Turner-Trauring

Last updated 06 Jan 2023, originally created 14 Mar 2022

If you need to process a large JSON file in Python, it’s very easy to run out of memory. Even if the raw data fits in memory, the Python representation can increase memory usage even more.

And that means either slow processing, as your program swaps to disk, or crashing when you run out of memory.

One common solution is streaming parsing, aka lazy parsing, iterative parsing, or chunked processing. Let’s see how you can apply this technique to JSON processing.

The problem: Python’s memory-inefficient JSON loading

For illustrative purposes, we’ll be using this JSON file, large enough at 24MB that it has a noticeable memory impact when loaded. It encodes a list of JSON objects (i.e. dictionaries), which look to be GitHub events, users doing things to repositories:

Our goal is to figure out which repositories a given user interacted with. Here’s a simple Python program that does so:

The result is a dictionary mapping usernames to sets of repository names.

When we run this with the Fil memory profiler, here’s what we get:

Looking at peak memory usage, we see two main sources of allocation:

- Reading the file.

- Decoding the resulting bytes into Unicode strings.

And if we look at the implementation of the json module in Python, we can see that the json.load() just loads the whole file into memory before parsing!

So that’s one problem: just loading the file will take a lot of memory. In addition, there should be some usage from creating the Python objects. However, in this case they don’t show up at all, probably because peak memory is dominated by loading the file and decoding it from bytes to Unicode. That’s why actual profiling is so helpful in reducing memory usage and speeding up your software: the real bottlenecks might not be obvious.

Even if loading the file is the bottleneck, that still raises some questions. The original file we loaded is 24MB. Once we load it into memory and decode it into a text (Unicode) Python string, it takes far more than 24MB. Why is that?

A brief digression: Python’s string memory representation

Python’s string representation is optimized to use less memory, depending on what the string contents are. First, every string has a fixed overhead. Then, if the string can be represented as ASCII, only one byte of memory is used per character. If the string uses more extended characters, it might end up using as many as 4 bytes per character. We can see how much memory an object needs using sys.getsizeof() :

Notice how all 3 strings are 1000 characters long, but they use different amounts of memory depending on which characters they contain.

If you look at our large JSON file, it contains characters that don’t fit in ASCII. Because it’s loaded as one giant string, that whole giant string uses a less efficient memory representation.

A streaming solution

It’s clear that loading the whole JSON file into memory is a waste of memory. With a larger file, it would be impossible to load at all.

Given a JSON file that’s structured as a list of objects, we could in theory parse it one chunk at a time instead of all at once. The resulting API would probably allow processing the objects one at a time. And if we look at the algorithm we want to run, that’s just fine; the algorithm does not require all the data be loaded into memory at once. We can process the records one at a time.

Whatever term you want to describe this approach—streaming, iterative parsing, chunking, or reading on-demand—it means we can reduce memory usage to:

- The in-progress data, which should typically be fixed.

- The result data structure, which in our case shouldn’t be too large.

There are a number of Python libraries that support this style of JSON parsing; in the following example, I used the ijson library.

In the previous version, using the standard library, once the data is loaded we no longer to keep the file open. With this API the file has to stay open because the JSON parser is reading from the file on demand, as we iterate over the records.

The items() API takes a query string that tells you which part of the record to return. In this case, «item» just means “each item in the top-level list we’re iterating over”; see the ijson documentation for more details.

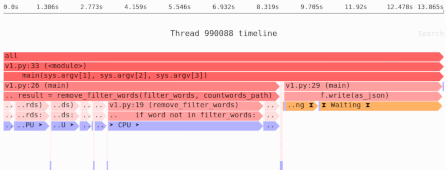

Here’s what memory usage looks like with this approach:

When it comes to memory usage, problem solved! And as far as runtime performance goes, the streaming/chunked solution with ijson actually runs slightly faster, though this won’t necessarily be the case for other datasets or algorithms.

Note: Whether or not any particular tool or technique will help depends on where the actual memory bottlenecks are in your software.

Need to identify the memory and performance bottlenecks in your own Python data processing code? Try the Sciagraph profiler, with support for profiling both in development and production.

Other approaches

As always, there are other solutions you can try:

- Pandas: Pandas has the ability to read JSON, and, in theory, it could do it in a more memory-efficient way for certain JSON layouts. In practice, for this example at least peak memory was much worse at 287MB, not including the overhead of importing Pandas.

- SQLite: The SQLite database can parse JSON, store JSON in columns, and query JSON (see the documentation). One could therefore load the JSON into a disk-backed database file, and run queries against it to extract only the relevant subset of the data. I haven’t measured this approach, but if you need to run multiple queries against the same JSON file, this might be a good path going forward; you can add indexes, too.

Finally, if you have control over the output format, there are ways to reduce the memory usage of JSON processing by switching to a more efficient representation. For example, switching from a single giant JSON list of objects to a JSON record per line, which means every decoded JSON record will only use a small amount of memory.

Learn even more techniques for reducing memory usage—read the rest of the Larger-than-memory datasets guide for Python.

Find performance and memory bottlenecks in your data processing code with the Sciagraph profiler

Slow-running jobs waste your time during development, impede your users, and increase your compute costs. Speed up your code and you’ll iterate faster, have happier users, and stick to your budget—but first you need to identify the cause of the problem.

Find performance bottlenecks and memory hogs in your data science Python jobs with the Sciagraph profiler. Profile in development and production, with multiprocessing support and more.

Learn practical Python software engineering skills you can use at your job

Sign up for my newsletter, and join over 6700 Python developers and data scientists learning practical tools and techniques, from Python performance to Docker packaging, with a free new article in your inbox every week.

Источник

MemoryError in Python while combining multiple JSON files and outputting as single CSV

I have a number of JSON files to combine and output as a single CSV (to load into R), with each JSON file at about 1.5GB. While doing a trial on 4-5 JSON files at 250MB each, the code works when I only use 2-3 files but chokes when the total file sizes get larger.

I’m running Python version 2.7.6 (default, Nov 10 2013, 19:24:24) [MSC v.1500 64 bit (AMD64)] on 8GB RAM and Windows 7 Professional 64 bit.

I’m a Python novice and have little experience with writing optimized code. I would appreciate guidance on optimizing my script below.

Python MemoryError

JSON to CSV conversion script

2 Answers 2

You’re storing lots of results in lists, which could be streamed instead. Fortunately, using generators, you can make this ‘streaming’ relatively easy without changing too much of the structure of your code. Essentially, put each ‘step’ into a function, and then rather than append ing to a list, yield each value. Then you’d have something that might look like this:

Then you could replace

But this will still store the IDs into ids , texts into texts , etc. You could use a bunch of generators and zip them together with itertools.izip , but the better solution would be to extract the columns from the tweet when writing each line. Then (omitting the UTF-8 encoding piece, which you’d want to rewrite to work on dictionaries) you’d have

Lastly, since this is Code Review, you might consider putting all the keys you want to pull out into a list:

Then your row-writing code can be simplified to

Rewriting it this way, you can then UTF-8 encode rather easily (using a helper function):

Источник

Load large .jsons file into Pandas dataframe

I’m trying to load a large jsons-file (2.5 GB) into a Pandas dataframe. Due to the large size of the file pandas.read_json() will result in a memory error.

Therefore I’m trying to read it in like this:

However, this just keeps running, slowing/crashing my pc.

What would be the most efficient way to do this?

The final aim is to have a subset (sample) of this large file.jsons dataset.

1 Answer 1

There are a few ways to do this a little more efficiently:

JSON module, then into Pandas

You could try reading the JSON file directly as a JSON object (i.e. into a Python dictionary) using the json module:

Using orient=»index» might be necessary, depending on the shape/mappings of your JSON file.

check out this in depth tutorial on JSON files with Python.

Directly using Pandas

You said this option gives you a memory error, but there is an option that should help with it. Passing lines=True and then specify how many lines to read in one chunk by using the chunksize argument. The following will return an object that you can iterate over, and each iteration will read only 5 lines of the file:

Note here that the JSON file must be in the records format, meaning each line is list like. This allows Pandas to know that is can reliably read chunksize=5 lines at a time. Here is the relevant documentation on line-delimited JSON files. In short, the file should have be written using something like: df.to_json(. orient=»records», line=True) .

Not only does Pandas abstract some manual parts away for you, it offers a lot more options, such as converting dates correctly, specifying data type of each column and so on. Check out the relevant documentation.

Check out a little code example in the Pandas user guide documentation.

Another memory-saving trick — using Generators

There is a nice way to only have one file’s contents in memory at any given time, using Python generators, which have lazy evaluation. Here is a starting place to learn about them.

Источник

Python: Json.load большой файл JSON MemoryError

Я пытаюсь загрузить большой файл JSON (300 МБ), чтобы использовать его для анализа в Excel. Я только начал работать с MemoryError, когда я делаю json.load(файл). Вопросы, подобные этому, были опубликованы, но не смогли ответить на мой конкретный вопрос. Я хочу иметь возможность вернуть все данные из файла JSON в одном блоке, как я сделал в коде. Каков наилучший способ сделать это? Код и структура JSON ниже:

Код выглядит так.

Структура выглядит следующим образом.

Внутри «контента» информация не согласована, но имеет 3 различных, но разных возможных шаблона, которые можно предсказать на основе analysis_type.

2 ответа

Мне так понравилось, надеюсь, это поможет вам. и, возможно, вам нужно пропустить 1-ю строку «[«. и удалите «,» в конце строки, если существует «>,».

Если бы все протестированные библиотеки вызывали проблемы с памятью, мой подход заключался бы в разбиении файла на один для каждого объекта в массиве.

Если в файле есть символы новой строки и отступы, как вы сказали в OP, я должен прочитать строку, отбрасывая, если это [ или же ] записывать строки в новые файлы каждый раз, когда вы найдете >, где вам также нужно убрать запятые. Затем попробуйте загрузить каждый файл и распечатать сообщение, когда вы закончите читать каждый файл, чтобы увидеть, где он терпит неудачу, если это так.

Если в файле нет символов новой строки или он не заполнен должным образом, вам нужно начать читать символ с помощью символа, сохраняя слишком много счетчиков, увеличивая каждый из них, когда вы найдете [ или же < и уменьшая их, когда вы найдете ] или же >соответственно. Также учтите, что вам может понадобиться отбросить любую фигурную или квадратную скобку, которая находится внутри строки, хотя это может и не понадобиться.

Источник

Json load python memory error

Впервые я столкнулся с Memory Error, когда работал с огромным массивом ключевых слов. Там было около 40 млн. строк, воодушевленный своим гениальным скриптом я нажал Shift + F10 и спустя 20 секунд получил Memory Error.

Что такое MemoryError в Python?

Memory Error — исключение вызываемое в случае переполнения выделенной ОС памяти, при условии, что ситуация может быть исправлена путем удаления объектов. Оставим ссылку на доку, кому интересно подробнее разобраться с этим исключением и с формулировкой. Ссылка на документацию по Memory Error.

Если вам интересно как вызывать это исключение, то попробуйте исполнить приведенный ниже код.

Почему возникает MemoryError?

В целом существует всего лишь несколько основных причин, среди которых:

- 32-битная версия Python, так как для 32-битных приложений Windows выделяет лишь 4 гб, то серьезные операции приводят к MemoryError

- Неоптимизированный код

- Чрезмерно большие датасеты и иные инпут файлы

- Ошибки в установке пакетов

Как исправить MemoryError?

Ошибка связана с 32-битной версией

Тут все просто, следуйте данному гайдлайну и уже через 10 минут вы запустите свой код.

Как посмотреть версию Python?

Идем в cmd (Кнопка Windows + R -> cmd) и пишем python. В итоге получим что-то похожее на

Нас интересует эта часть [MSC v.1928 64 bit (AMD64)], так как вы ловите MemoryError, то скорее всего у вас будет 32 bit.

Как установить 64-битную версию Python?

Идем на официальный сайт Python и качаем установщик 64-битной версии. Ссылка на сайт с официальными релизами. В скобках нужной нам версии видим 64-bit. Удалять или не удалять 32-битную версию — это ваш выбор, я обычно удаляю, чтобы не путаться в IDE. Все что останется сделать, просто поменять интерпретатор.

Идем в PyCharm в File -> Settings -> Project -> Python Interpreter -> Шестеренка -> Add -> New environment -> Base Interpreter и выбираем python.exe из только что установленной директории. У меня это

Все, запускаем скрипт и видим, что все выполняется как следует.

Оптимизация кода

Пару раз я встречался с ситуацией когда мои костыли приводили к MemoryError. К этому приводили избыточные условия, циклы и буферные переменные, которые не удаляются после потери необходимости в них. Если вы понимаете, что проблема может быть в этом, вероятно стоит закостылить пару del, мануально удаляя ссылки на объекты. Но помните о том, что проблема в архитектуре вашего проекта, и по настоящему решить эту проблему можно лишь правильно проработав структуру проекта.

Явно освобождаем память с помощью сборщика мусора

В целом в 90% случаев проблема решается переустановкой питона, однако, я просто обязан рассказать вам про библиотеку gc. В целом почитать про Garbage Collector стоит отдельно на авторитетных ресурсах в статьях профессиональных программистов. Вы просто обязаны знать, что происходит под капотом управления памятью. GC — это не только про Python, управление памятью в Java и других языках базируется на технологии сборки мусора. Ну а вот так мы можем мануально освободить память в Python:

Источник

Answer by Kinley Tanner

If you are using the json built-in library to load that file, it’ll have to build larger and larger objects from the contents as they are parsed, and at some point your OS will refuse to provide more memory. That won’t be at 32GB, because there is a limit per process how much memory can be used, so more likely to be at 4GB. At that point all those objects already created are freed again, so in the end the actual memory use doesn’t have to have changed that much. ,That’s because Python objects require some overhead; instances track the number of references to them, what type they are, and their attributes (if the type supports attributes) or their contents (in the case of containers).,The solution is to either break up that large JSON file into smaller subsets, or to use an event-driven JSON parser like ijson.,So, I will explain how I finally solved this problem. The first answer will work. But you have to know that loading elements one per one with ijson will be very long… and by the end, you do not have the loaded file.

For example, the following simple JSON string of 14 characters (bytes on disk) results in a Python object is almost 25 times larger (Python 3.6b3):

>>> import json

>>> from sys import getsizeof

>>> j = '{"foo": "bar"}'

>>> len(j)

14

>>> p = json.loads(j)

>>> getsizeof(p) + sum(getsizeof(k) + getsizeof(v) for k, v in p.items())

344

>>> 344 / 14

24.571428571428573

Answer by Bowen Sheppard

Thanks for contributing an answer to Data Science Stack Exchange!,

Data Science Meta

,

Data Science

help

chat

,Science Fiction & Fantasy

You could try reading the JSON file directly as a JSON object (i.e. into a Python dictionary) using the json module:

import json

import pandas as pd

data = json.load(open("your_file.json", "r"))

df = pd.DataFrame.from_dict(data, orient="index")

You said this option gives you a memory error, but there is an option that should help with it. Passing lines=True and then specify how many lines to read in one chunk by using the chunksize argument. The following will return an object that you can iterate over, and each iteration will read only 5 lines of the file:

df = pd.read_json("test.json", orient="records", lines=True, chunksize=5)

In your example, it could look like this:

import os

# Get a list of files

files = sorted(os.listdir("your_folder"))

# Load each file individually in a generator expression

df = pd.concat(pd.read_json(file, orient="index") for f in files, ...)

Answer by Lacey Gonzales

Questions

,

How can you do «impedance matching» on USB or other serial communication lines?

,Asking for help, clarification, or responding to other answers.,Then your row-writing code can be simplified to

You’re storing lots of results in lists, which could be streamed instead. Fortunately, using generators, you can make this ‘streaming’ relatively easy without changing too much of the structure of your code. Essentially, put each ‘step’ into a function, and then rather than appending to a list, yield each value. Then you’d have something that might look like this:

def load_json():

for file in open_files:

for line in file:

# only keep tweets and not the empty lines

if line.rstrip():

try:

datum = json.loads(line)

except:

pass

else:

yield datum

Then you could replace

for tweet in tweets:

with

for tweet in load_json():

But this will still store the IDs into ids, texts into texts, etc. You could use a bunch of generators and zip them together with itertools.izip, but the better solution would be to extract the columns from the tweet when writing each line. Then (omitting the UTF-8 encoding piece, which you’d want to rewrite to work on dictionaries) you’d have

for tweet in load_json():

csv.writerow((tweet['id'], tweet['text'], ...))

Lastly, since this is Code Review, you might consider putting all the keys you want to pull out into a list:

columns = ['id', 'text', ...]

Then your row-writing code can be simplified to

csv.writerow([tweet[key] for key in columns])

Rewriting it this way, you can then UTF-8 encode rather easily (using a helper function):

def encode_if_possible(value, codec):

if hasattr(value, 'encode'):

return value.encode(codec)

else:

return value

csv.writerow([encode_if_possible(tweet[key], 'utf-8') for key in columns])

Answer by Kaisley Leblanc

Hi,

For handling large JSON files using read_json() is not an efficient way. You will always get an memory error. Because this will take complete data in a memory and process it further.,I have a large dataset in a json file. The file is 1.2GB in size. I’m not able to read it using pandas.read_json(..) . Do we have a way of handling large datasets like this?,Also worth a look — Python & JSON: Working with large datasets using Pandas,You can try ijson module that will work with JSON as a stream, rather than as a block file.

Hey,

I have a large dataset in a json file. The file is 1.2GB in size. I’m not able to read it using pandas.read_json(..) . Do we have a way of handling large datasets like this?

pandas.read_json(..)Answer by Mariam Maddox

Alright think I figured it out. Apparently the pp module that this is using to do the requests in parallel won’t release the memory until you read the result, and reading the result significantly slows down the process.,Reading the result appears to resolve the memory issue, but it slows the individuals requests by a ton. I’ll leave it running overnight and see where it’s at in the AM.,I replaced this line with parallelJobs = pp.Server(‘autodetect’, (), None, True), and it seemed to do the trick (the fourth param is whether to restart worker process after each task completion and defaults to false).,Closing as there are posted workarounds above, feel free to re-open if the bug is being worked on!

started at 1469480152.98

Traceback (most recent call last):

File "import.py", line 90, in <module>

main(argParser.parse_args())

File "import.py", line 20, in main

for prefix, event, value in parser:

File "R:Python27libsite-packagesijsoncommon.py", line 65, in parse

for event, value in basic_events:

File "R:Python27libsite-packagesijsonbackendspython.py", line 185, in basic_parse

for value in parse_value(lexer):

File "R:Python27libsite-packagesijsonbackendspython.py", line 116, in parse_value

for event in parse_array(lexer):

File "R:Python27libsite-packagesijsonbackendspython.py", line 138, in parse_array

for event in parse_value(lexer, symbol, pos):

File "R:Python27libsite-packagesijsonbackendspython.py", line 119, in parse_value

for event in parse_object(lexer):

File "R:Python27libsite-packagesijsonbackendspython.py", line 160, in parse_object

pos, symbol = next(lexer)

File "R:Python27libsite-packagesijsonbackendspython.py", line 59, in Lexer

buf += data

MemoryError

Answer by Cameron Gibson

Not too surprisingly, Python gives me a MemoryError. It appears that json.load() calls json.loads(f.read()), which is trying to dump the entire file into memory first, which clearly isn’t going to work.,The best option appears to be using something like ijson — a module that will work with JSON as a stream, rather than as a block file.,Possible Duplicate:

Is there a memory efficient and fast way to load big json files in python? ,Reading rather large json files in Python

So I seem to run out of memory when trying to load the file with Python. I’m currently just running some tests to see roughly how long dealing with this stuff is going to take to see where to go from here. Here is the code I’m using to test:

from datetime import datetime

import json

print datetime.now()

f = open('file.json', 'r')

json.load(f)

f.close()

print datetime.now()

Впервые я столкнулся с Memory Error, когда работал с огромным массивом ключевых слов. Там было около 40 млн. строк, воодушевленный своим гениальным скриптом я нажал Shift + F10 и спустя 20 секунд получил Memory Error.

Memory Error — исключение вызываемое в случае переполнения выделенной ОС памяти, при условии, что ситуация может быть исправлена путем удаления объектов. Оставим ссылку на доку, кому интересно подробнее разобраться с этим исключением и с формулировкой. Ссылка на документацию по Memory Error.

Если вам интересно как вызывать это исключение, то попробуйте исполнить приведенный ниже код.

print('a' * 1000000000000)

Почему возникает MemoryError?

В целом существует всего лишь несколько основных причин, среди которых:

- 32-битная версия Python, так как для 32-битных приложений Windows выделяет лишь 4 гб, то серьезные операции приводят к MemoryError

- Неоптимизированный код

- Чрезмерно большие датасеты и иные инпут файлы

- Ошибки в установке пакетов

Как исправить MemoryError?

Ошибка связана с 32-битной версией

Тут все просто, следуйте данному гайдлайну и уже через 10 минут вы запустите свой код.

Как посмотреть версию Python?

Идем в cmd (Кнопка Windows + R -> cmd) и пишем python. В итоге получим что-то похожее на

Python 3.8.8 (tags/v3.8.8:024d805, Feb 19 2021, 13:18:16) [MSC v.1928 64 bit (AMD64)]

Нас интересует эта часть [MSC v.1928 64 bit (AMD64)], так как вы ловите MemoryError, то скорее всего у вас будет 32 bit.

Как установить 64-битную версию Python?

Идем на официальный сайт Python и качаем установщик 64-битной версии. Ссылка на сайт с официальными релизами. В скобках нужной нам версии видим 64-bit. Удалять или не удалять 32-битную версию — это ваш выбор, я обычно удаляю, чтобы не путаться в IDE. Все что останется сделать, просто поменять интерпретатор.

Идем в PyCharm в File -> Settings -> Project -> Python Interpreter -> Шестеренка -> Add -> New environment -> Base Interpreter и выбираем python.exe из только что установленной директории. У меня это

C:/Users/Core/AppData/LocalPrograms/Python/Python38

Все, запускаем скрипт и видим, что все выполняется как следует.

Оптимизация кода

Пару раз я встречался с ситуацией когда мои костыли приводили к MemoryError. К этому приводили избыточные условия, циклы и буферные переменные, которые не удаляются после потери необходимости в них. Если вы понимаете, что проблема может быть в этом, вероятно стоит закостылить пару del, мануально удаляя ссылки на объекты. Но помните о том, что проблема в архитектуре вашего проекта, и по настоящему решить эту проблему можно лишь правильно проработав структуру проекта.

Явно освобождаем память с помощью сборщика мусора

В целом в 90% случаев проблема решается переустановкой питона, однако, я просто обязан рассказать вам про библиотеку gc. В целом почитать про Garbage Collector стоит отдельно на авторитетных ресурсах в статьях профессиональных программистов. Вы просто обязаны знать, что происходит под капотом управления памятью. GC — это не только про Python, управление памятью в Java и других языках базируется на технологии сборки мусора. Ну а вот так мы можем мануально освободить память в Python:

What is Memory Error?

Python Memory Error or in layman language is exactly what it means, you have run out of memory in your RAM for your code to execute.

When this error occurs it is likely because you have loaded the entire data into memory. For large datasets, you will want to use batch processing. Instead of loading your entire dataset into memory you should keep your data in your hard drive and access it in batches.

A memory error means that your program has run out of memory. This means that your program somehow creates too many objects. In your example, you have to look for parts of your algorithm that could be consuming a lot of memory.

If an operation runs out of memory it is known as memory error.

Types of Python Memory Error

Unexpected Memory Error in Python

If you get an unexpected Python Memory Error and you think you should have plenty of rams available, it might be because you are using a 32-bit python installation.

The easy solution for Unexpected Python Memory Error

Your program is running out of virtual address space. Most probably because you’re using a 32-bit version of Python. As Windows (and most other OSes as well) limits 32-bit applications to 2 GB of user-mode address space.

We Python Pooler’s recommend you to install a 64-bit version of Python (if you can, I’d recommend upgrading to Python 3 for other reasons); it will use more memory, but then, it will have access to a lot more memory space (and more physical RAM as well).

The issue is that 32-bit python only has access to ~4GB of RAM. This can shrink even further if your operating system is 32-bit, because of the operating system overhead.

For example, in Python 2 zip function takes in multiple iterables and returns a single iterator of tuples. Anyhow, we need each item from the iterator once for looping. So we don’t need to store all items in memory throughout looping. So it’d be better to use izip which retrieves each item only on next iterations. Python 3’s zip functions as izip by default.

Must Read: Python Print Without Newline

Python Memory Error Due to Dataset

Like the point, about 32 bit and 64-bit versions have already been covered, another possibility could be dataset size, if you’re working with a large dataset. Loading a large dataset directly into memory and performing computations on it and saving intermediate results of those computations can quickly fill up your memory. Generator functions come in very handy if this is your problem. Many popular python libraries like Keras and TensorFlow have specific functions and classes for generators.

Python Memory Error Due to Improper Installation of Python

Improper installation of Python packages may also lead to Memory Error. As a matter of fact, before solving the problem, We had installed on windows manually python 2.7 and the packages that I needed, after messing almost two days trying to figure out what was the problem, We reinstalled everything with Conda and the problem was solved.

We guess Conda is installing better memory management packages and that was the main reason. So you can try installing Python Packages using Conda, it may solve the Memory Error issue.

Most platforms return an “Out of Memory error” if an attempt to allocate a block of memory fails, but the root cause of that problem very rarely has anything to do with truly being “out of memory.” That’s because, on almost every modern operating system, the memory manager will happily use your available hard disk space as place to store pages of memory that don’t fit in RAM; your computer can usually allocate memory until the disk fills up and it may lead to Python Out of Memory Error(or a swap limit is hit; in Windows, see System Properties > Performance Options > Advanced > Virtual memory).

Making matters much worse, every active allocation in the program’s address space can cause “fragmentation” that can prevent future allocations by splitting available memory into chunks that are individually too small to satisfy a new allocation with one contiguous block.

1 If a 32bit application has the LARGEADDRESSAWARE flag set, it has access to s full 4gb of address space when running on a 64bit version of Windows.

2 So far, four readers have written to explain that the gcAllowVeryLargeObjects flag removes this .NET limitation. It does not. This flag allows objects which occupy more than 2gb of memory, but it does not permit a single-dimensional array to contain more than 2^31 entries.

How can I explicitly free memory in Python?

If you wrote a Python program that acts on a large input file to create a few million objects representing and it’s taking tons of memory and you need the best way to tell Python that you no longer need some of the data, and it can be freed?

The Simple answer to this problem is:

Force the garbage collector for releasing an unreferenced memory with gc.collect().

Like shown below:

import gc

gc.collect()

Memory error in Python when 50+GB is free and using 64bit python?

On some operating systems, there are limits to how much RAM a single CPU can handle. So even if there is enough RAM free, your single thread (=running on one core) cannot take more. But I don’t know if this is valid for your Windows version, though.

How do you set the memory usage for python programs?

Python uses garbage collection and built-in memory management to ensure the program only uses as much RAM as required. So unless you expressly write your program in such a way to bloat the memory usage, e.g. making a database in RAM, Python only uses what it needs.

Which begs the question, why would you want to use more RAM? The idea for most programmers is to minimize resource usage.

if you wanna limit the python vm memory usage, you can try this:

1、Linux, ulimit command to limit the memory usage on python

2、you can use resource module to limit the program memory usage;

if u wanna speed up ur program though giving more memory to ur application, you could try this:

1threading, multiprocessing

2pypy

3pysco on only python 2.5

How to put limits on Memory and CPU Usage

To put limits on the memory or CPU use of a program running. So that we will not face any memory error. Well to do so, Resource module can be used and thus both the task can be performed very well as shown in the code given below:

Code #1: Restrict CPU time

# importing libraries

import signal

import resource

import os

# checking time limit exceed

def time_exceeded(signo, frame):

print("Time's up !")

raise SystemExit(1)

def set_max_runtime(seconds):

# setting up the resource limit

soft, hard = resource.getrlimit(resource.RLIMIT_CPU)

resource.setrlimit(resource.RLIMIT_CPU, (seconds, hard))

signal.signal(signal.SIGXCPU, time_exceeded)

# max run time of 15 millisecond

if __name__ == '__main__':

set_max_runtime(15)

while True:

pass

Code #2: In order to restrict memory use, the code puts a limit on the total address space

# using resource import resource def limit_memory(maxsize): soft, hard = resource.getrlimit(resource.RLIMIT_AS) resource.setrlimit(resource.RLIMIT_AS, (maxsize, hard))

Ways to Handle Python Memory Error and Large Data Files

1. Allocate More Memory

Some Python tools or libraries may be limited by a default memory configuration.

Check if you can re-configure your tool or library to allocate more memory.

That is, a platform designed for handling very large datasets, that allows you to use data transforms and machine learning algorithms on top of it.

A good example is Weka, where you can increase the memory as a parameter when starting the application.

2. Work with a Smaller Sample

Are you sure you need to work with all of the data?

Take a random sample of your data, such as the first 1,000 or 100,000 rows. Use this smaller sample to work through your problem before fitting a final model on all of your data (using progressive data loading techniques).

I think this is a good practice in general for machine learning to give you quick spot-checks of algorithms and turnaround of results.

You may also consider performing a sensitivity analysis of the amount of data used to fit one algorithm compared to the model skill. Perhaps there is a natural point of diminishing returns that you can use as a heuristic size of your smaller sample.

3. Use a Computer with More Memory

Do you have to work on your computer?

Perhaps you can get access to a much larger computer with an order of magnitude more memory.

For example, a good option is to rent compute time on a cloud service like Amazon Web Services that offers machines with tens of gigabytes of RAM for less than a US dollar per hour.

4. Use a Relational Database

Relational databases provide a standard way of storing and accessing very large datasets.

Internally, the data is stored on disk can be progressively loaded in batches and can be queried using a standard query language (SQL).

Free open-source database tools like MySQL or Postgres can be used and most (all?) programming languages and many machine learning tools can connect directly to relational databases. You can also use a lightweight approach, such as SQLite.

5. Use a Big Data Platform

In some cases, you may need to resort to a big data platform.

Summary

In this post, you discovered a number of tactics and ways that you can use when dealing with Python Memory Error.

Are there other methods that you know about or have tried?

Share them in the comments below.

Have you tried any of these methods?

Let me know in the comments.

If your problem is still not solved and you need help regarding Python Memory Error. Comment Down below, We will try to solve your issue asap.