We assume you are somewhat familiar with Docker and know the basics, such as running Docker containers.

What exactly does modifying a Docker image mean?

A container image is built in layers (it is a collection of layers), and each Dockerfile instruction creates a layer of the image. For example, consider the following Dockerfile:

FROM alpine:latest

RUN apk add --no-cache python3

ENTRYPOINT ["python3", "-c", "print('Hello World')"]

Since there are three Dockerfile instructions in total, the image built from this Dockerfile will contain a total of three layers.

You can confirm this by building the image:

docker image build -t dummy:0.1 .

And then running docker image history on the built image.

articles/Modify a Docker Image on modify-docker-images [?] took 12s

❯ docker image history dummy:0.1

IMAGE CREATED CREATED BY SIZE COMMENT

b997f897c2db 10 seconds ago /bin/sh -c #(nop) ENTRYPOINT ["python3" "-c… 0B

ee217b9fe4f7 10 seconds ago /bin/sh -c apk add --no-cache python3 43.6MB

28f6e2705743 35 hours ago /bin/sh -c #(nop) CMD ["/bin/sh"] 0B

<missing> 35 hours ago /bin/sh -c #(nop) ADD file:80bf8bd014071345b… 5.61MB

Ignore the last <missing> layer.

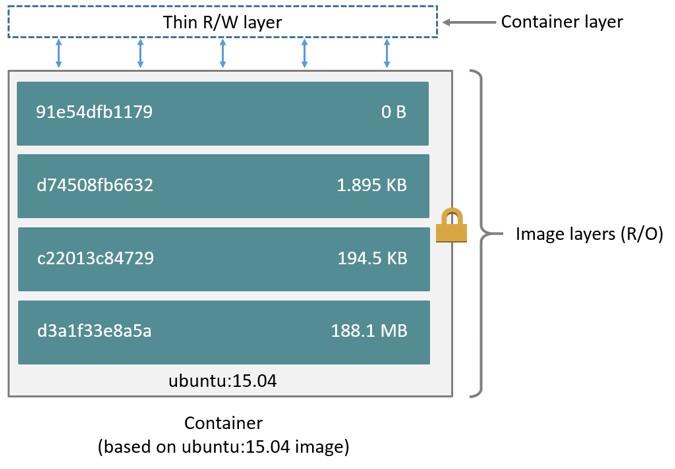

Each of these layers is read-only. This is useful: because the layers are read-only, no process associated with a running instance of this image can modify the image's contents, so the layers can be shared by many containers without keeping a copy for each instance. For a container's processes to be able to read and write, one more layer is added on top of the existing read-only layers when the container is created. This layer is writable and is not shared with other containers.

The downside of this r/w layer is that changes made in it are not persistent. You can use volumes to persist some data, but sometimes you may need or want to add a layer before some existing layer, delete a layer from an image, or simply replace a layer. These are the reasons one might want to modify an existing Docker image.

In this article, we are going to cover all the cases mentioned above, using different methods.

Ways to modify a Docker image

There are two ways to modify a Docker image.

- Through Dockerfiles.

- Using the docker container commit command.

We will explain both methods and, at the end, note which use cases each method suits better.

Modifying a Docker image through the Dockerfile

Modifying a Docker image essentially means modifying the layers of the image. Since each Dockerfile instruction represents one layer of the image, changing a line of the Dockerfile changes the resulting image as well.

So to add a layer to the image, you simply add another instruction to the Dockerfile; to remove a layer, you remove the line; and to change a layer, you change the line accordingly.

There are two ways to use a Dockerfile to modify an image.

- Using the image you want to modify as a base image and building a child image.

- Modifying the actual Dockerfile of the image you want to change.

Let us explain which method to use, when, and how.

1. Using an image as a base image

This is when you take the image you want to modify and add layers to it to build a new child image. Unless an image is built from scratch, every image is a modification of some parent base image.

Consider the previous Dockerfile. Say the image built from it is named dummy:0.1. If we now wanted to use Perl instead of Python3 to print "Hello World" but did not want to remove Python3, we could simply use the dummy:0.1 image as the base image (since Python3 is already there) and build from it, as shown below:

FROM dummy:0.1

RUN apk add --no-cache perl

ENTRYPOINT ["perl", "-e", "print \"Hello World\\n\""]

Here we build on top of dummy:0.1, adding more layers to it as we see fit.

This method is not very helpful if you intend to change or delete an existing layer. For that, you need the next method.

2. Modifying the image's Dockerfile

Since the existing layers of an image are read-only, you cannot modify them directly through a new Dockerfile. With the FROM instruction in a Dockerfile, you take some image as a base and build on it, that is, add layers to it.

Some tasks may require altering an existing layer. You can do that with the previous method and a series of RUN instructions that undo earlier ones (deleting files, or removing or replacing packages added in some previous layer), but it is not an ideal solution and not what we would recommend, because it adds extra layers and increases the image size considerably.

A better approach is not to use the image as a base image but to change that image's actual Dockerfile. Consider the previous Dockerfile again: what if we did not need to keep Python3 in the image and wanted to replace the Python3 package and command with their Perl equivalents?

Following the previous method, we would have to create a new Dockerfile like this:

FROM dummy:0.1

RUN apk del python3 && apk add --no-cache perl

ENTRYPOINT ["perl", "-e", "print \"Hello World\\n\""]

Once built, this image has a total of five layers.

articles/Modify a Docker Image on modify-docker-images [?] took 3s

❯ docker image history dummy:0.2

IMAGE CREATED CREATED BY SIZE COMMENT

2792036ddc91 10 seconds ago /bin/sh -c #(nop) ENTRYPOINT ["perl" "-e" "… 0B

b1b2ec1cf869 11 seconds ago /bin/sh -c apk del python3 && apk add --no-c… 34.6MB

ecb8694b5294 3 hours ago /bin/sh -c #(nop) ENTRYPOINT ["python3" "-c… 0B

8017025d71f9 3 hours ago /bin/sh -c apk add --no-cache python3 && … 43.6MB

28f6e2705743 38 hours ago /bin/sh -c #(nop) CMD ["/bin/sh"] 0B

<missing> 38 hours ago /bin/sh -c #(nop) ADD file:80bf8bd014071345b… 5.61MB

Also, the size of the image is 83.8 MB.

articles/Modify a Docker Image on modify-docker-images [?]

❯ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

dummy 0.2 2792036ddc91 19 seconds ago 83.8MB

Now, instead of doing that, take the initial Dockerfile and change the Python3 parts to Perl, like so:

FROM alpine:latest

RUN apk add --no-cache perl

ENTRYPOINT ["perl", "-e", "print \"Hello World\\n\""]

The number of layers has dropped to 3, and the size is now 40.2 MB.

articles/Modify a Docker Image on modify-docker-images [?] took 3s

❯ docker image history dummy:0.3

IMAGE CREATED CREATED BY SIZE COMMENT

f35cd94c92bd 9 seconds ago /bin/sh -c #(nop) ENTRYPOINT ["perl" "-e" "… 0B

053a6a6ba221 9 seconds ago /bin/sh -c apk add --no-cache perl 34.6MB

28f6e2705743 38 hours ago /bin/sh -c #(nop) CMD ["/bin/sh"] 0B

<missing> 38 hours ago /bin/sh -c #(nop) ADD file:80bf8bd014071345b… 5.61MB

articles/Modify a Docker Image on modify-docker-images [?]

❯ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

dummy 0.3 f35cd94c92bd 29 seconds ago 40.2MB

Image successfully changed.

The previous method is more useful when you only want to add layers on top of the existing ones, but it is not much help when you need to modify existing layers: deleting one, replacing one, reordering them and so on. That is where this method shines.

Method 2: Modifying an image using docker commit

There is another method, in which you take a snapshot of a running container and turn it into an image of its own.

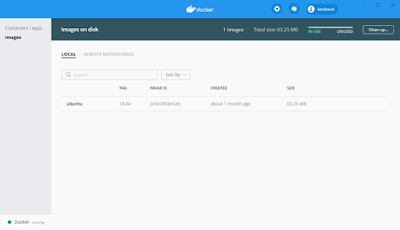

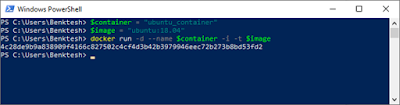

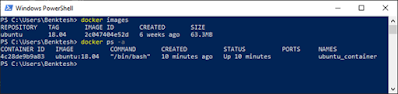

Let's build an image identical to dummy:0.1, but this time without using a Dockerfile. Since alpine:latest was the base of dummy:0.1, spin up a container from that image.

docker run --rm --name alpine -ti alpine ash

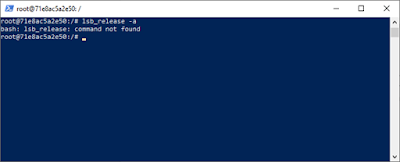

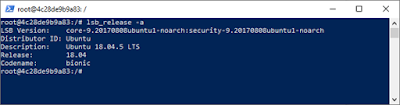

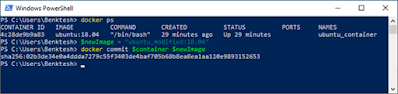

Now, inside the container, add the Python3 package with apk add --no-cache python3. Once done, open a new terminal window and run the following command (or something similar):

docker container commit --change='ENTRYPOINT ["python3", "-c", "print(\"Hello World\")"]' alpine dummy:0.4

With the --change flag we add a Dockerfile instruction to the new dummy:0.4 image (in this case, the ENTRYPOINT instruction).

With the docker container commit command, you essentially convert the outermost r/w layer into an r/o layer, append it to the existing image's layers, and create a new image. This method is more intuitive and interactive, so you may prefer it to Dockerfiles, but keep in mind that it is not very reproducible. The same rules also apply to removing or altering existing layers: adding a layer just to remove or alter something done in a previous layer is not the best idea, at least in most cases.

That concludes this article. We hope it was helpful; if you have any questions, leave a comment below.


I presume you are somewhat familiar with Docker and know the basics, such as running Docker containers.

In previous articles we discussed updating Docker containers and writing Dockerfiles.

What exactly is modifying a Docker image?

A container image is built in layers (or it is a collection of layers), each Dockerfile instruction creates a layer of the image. For example, consider the following Dockerfile:

FROM alpine:latest

RUN apk add --no-cache python3

ENTRYPOINT ["python3", "-c", "print('Hello World')"]

Since there are three Dockerfile instructions in total, the image built from this Dockerfile will contain a total of three layers.

You can confirm that by building the image:

docker image build -t dummy:0.1 .

And then running docker image history on the built image.

articles/Modify a Docker Image on modify-docker-images [?] took 12s

❯ docker image history dummy:0.1

IMAGE CREATED CREATED BY SIZE COMMENT

b997f897c2db 10 seconds ago /bin/sh -c #(nop) ENTRYPOINT ["python3" "-c… 0B

ee217b9fe4f7 10 seconds ago /bin/sh -c apk add --no-cache python3 43.6MB

28f6e2705743 35 hours ago /bin/sh -c #(nop) CMD ["/bin/sh"] 0B

<missing> 35 hours ago /bin/sh -c #(nop) ADD file:80bf8bd014071345b… 5.61MB

Ignore the last ‘<missing>’ layer.

Each of these layers is read-only. This is beneficial: because the layers are read-only, no process associated with a running instance of this image can modify the image's contents, so the layers can be shared by many containers without keeping a copy for each instance. For a container's processes to be able to read and write, another layer is added on top of the existing read-only layers when the container is created. This layer is writable and is not shared with other containers.

The downside of this r/w layer is that changes made in it are not persistent. You can use volumes to persist some data, but sometimes you may need or want to add a layer before some existing layer, delete a layer from an image, or simply replace a layer. These are the reasons one might want to modify an existing Docker image.
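To make the per-container writable layer concrete, here is a minimal sketch. It assumes the alpine:latest image can be pulled, and the container name rw-demo is invented for this example. The docker container diff step should list /hello.txt as a change in that container's own layer, and the file is no longer there in a fresh container from the same image.

```shell
#!/bin/sh
# Sketch: the writable layer belongs to one container and is discarded with it.
# "rw-demo" is a made-up container name used only for this illustration.
rw_layer_demo() {
    # Create a file in a fresh container; it lands in that container's r/w layer.
    docker run --name rw-demo alpine:latest touch /hello.txt
    # Show what the container changed relative to its read-only image layers.
    docker container diff rw-demo
    # Removing the container throws its writable layer away.
    docker container rm rw-demo
    # A new container from the same image starts clean, so ls fails here.
    docker run --rm alpine:latest ls /hello.txt || echo "file is gone"
}

# Only run the demo when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
    rw_layer_demo
else
    echo "Docker not available; skipping demo"
fi
```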

In this article, I’m going to cover all the cases I mentioned above, using different methods.

Methods of modifying a docker image

There are two ways you can modify a docker image.

- Through Dockerfiles.

- Using the command docker container commit.

I'll explain both methods and, at the end, note which use cases each method suits better.

Method 1: Modifying docker image through the Dockerfile

Modifying a Docker image essentially means modifying the layers of the image. Since each Dockerfile instruction represents one layer of the image, changing a line of the Dockerfile changes the resulting image as well.

So to add a layer to the image, you simply add another instruction to the Dockerfile; to remove a layer, you remove the line; and to change a layer, you change the line accordingly.

There are two ways you can use a Dockerfile to modify an image.

- Using the image that you want to modify as a base image and building a child image.

- Modifying the actual Dockerfile of the image you want to change.

Let me explain which method should be used when, and how.

1. Using an image as a base image

This is when you take the image you want to modify and add layers to it to build a new child image. Unless an image is built from scratch, every image is a modification of some parent base image.

Consider the previous Dockerfile. Say the image built from that Dockerfile is named dummy:0.1. Now suppose I want to use Perl instead of Python3 to print "Hello World", but I don't want to remove Python3. I could just use the dummy:0.1 image as the base image (since Python3 is already there) and build from that, like the following:

FROM dummy:0.1

RUN apk add --no-cache perl

ENTRYPOINT ["perl", "-e", "print \"Hello World\\n\""]

Here I’m building on top of dummy:0.1, adding more layers to it as I see fit.

This method is not very helpful if your intention is to change or delete some existing layer. For that, you need to follow the next method.

2. Modifying the image’s Dockerfile

Since the existing layers of an image are read-only, you cannot directly modify them through a new Dockerfile. With the FROM command in a Dockerfile, you take some image as a base and build on it, or add layers to it.

Some tasks may require us to alter an existing layer. You can do that using the previous method with a series of RUN instructions that undo earlier ones (deleting files, or removing or replacing packages added in some previous layer), but it isn't an ideal solution or what I'd recommend, because it adds extra layers and increases the image size considerably.

A better method is to not use the image as a base image, but to change that image's actual Dockerfile. Consider the previous Dockerfile again: what if I did not need to keep Python3 in that image and wanted to replace the Python3 package and command with the Perl ones?

Following the previous method, I'd have to create a new Dockerfile like so:

FROM dummy:0.1

RUN apk del python3 && apk add --no-cache perl

ENTRYPOINT ["perl", "-e", "print \"Hello World\\n\""]

If built, there is going to be a total of five layers in this image.

articles/Modify a Docker Image on modify-docker-images [?] took 3s

❯ docker image history dummy:0.2

IMAGE CREATED CREATED BY SIZE COMMENT

2792036ddc91 10 seconds ago /bin/sh -c #(nop) ENTRYPOINT ["perl" "-e" "… 0B

b1b2ec1cf869 11 seconds ago /bin/sh -c apk del python3 && apk add --no-c… 34.6MB

ecb8694b5294 3 hours ago /bin/sh -c #(nop) ENTRYPOINT ["python3" "-c… 0B

8017025d71f9 3 hours ago /bin/sh -c apk add --no-cache python3 && … 43.6MB

28f6e2705743 38 hours ago /bin/sh -c #(nop) CMD ["/bin/sh"] 0B

<missing> 38 hours ago /bin/sh -c #(nop) ADD file:80bf8bd014071345b… 5.61MB

Also, the size of the image is 83.8 MB.

articles/Modify a Docker Image on modify-docker-images [?]

❯ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

dummy 0.2 2792036ddc91 19 seconds ago 83.8MB

Now, instead of doing that, take the initial Dockerfile and change the Python3 parts to Perl, like so:

FROM alpine:latest

RUN apk add --no-cache perl

ENTRYPOINT ["perl", "-e", "print \"Hello World\\n\""]

The number of layers has reduced to 3, and the size now is 40.2 MB.

articles/Modify a Docker Image on modify-docker-images [?] took 3s

❯ docker image history dummy:0.3

IMAGE CREATED CREATED BY SIZE COMMENT

f35cd94c92bd 9 seconds ago /bin/sh -c #(nop) ENTRYPOINT ["perl" "-e" "… 0B

053a6a6ba221 9 seconds ago /bin/sh -c apk add --no-cache perl 34.6MB

28f6e2705743 38 hours ago /bin/sh -c #(nop) CMD ["/bin/sh"] 0B

<missing> 38 hours ago /bin/sh -c #(nop) ADD file:80bf8bd014071345b… 5.61MB

articles/Modify a Docker Image on modify-docker-images [?]

❯ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

dummy 0.3 f35cd94c92bd 29 seconds ago 40.2MB
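The two image sizes follow directly from adding up the layer sizes in the history listings. The short sketch below only redoes that arithmetic with the figures printed above (no Docker is needed to run it); the variable names are mine. It illustrates that the python3 layer still counts toward dummy:0.2 even though a later layer deletes the package.

```shell
#!/bin/sh
# Layer sizes in MB, copied from the docker image history listings above.
base=5.61     # alpine base layer (ADD file:...)
python=43.6   # apk add --no-cache python3
perl=34.6     # layer that installs perl

# dummy:0.2 is built FROM dummy:0.1, so the python3 layer stays underneath.
size_child=$(awk -v a="$base" -v b="$python" -v c="$perl" 'BEGIN { printf "%.1f", a + b + c }')

# dummy:0.3 is rebuilt from the edited Dockerfile, so the python3 layer never exists.
size_rebuilt=$(awk -v a="$base" -v c="$perl" 'BEGIN { printf "%.1f", a + c }')

echo "dummy:0.2 = ${size_child}MB, dummy:0.3 = ${size_rebuilt}MB"
```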

Image successfully changed.

The previous method is more useful when you only want to add layers on top of the existing ones, but it isn't much help when you need to modify existing layers: deleting one, replacing one, reordering them and so on. That is where this method shines.

Method 2: Modifying image using docker commit

There is another method, where you take a snapshot of a running container and turn that into an image of its own.

Let's build an image identical to dummy:0.1, but this time without using a Dockerfile. Since I used alpine:latest as the base of dummy:0.1, spin up a container of that image.

docker run --rm --name alpine -ti alpine ash

Now inside the container, add the Python3 package, apk add --no-cache python3. Once done, open a new terminal window and run the following command (or something similar)

docker container commit --change='ENTRYPOINT ["python3", "-c", "print(\"Hello World\")"]' alpine dummy:0.4

With the --change flag I'm adding a Dockerfile instruction to the new dummy:0.4 image (in this case, the ENTRYPOINT instruction).

With the docker container commit command, you basically convert the outermost r/w layer into an r/o layer, append it to the existing image's layers and create a new image. This method is more intuitive and interactive, so you may want to use it instead of Dockerfiles, but do understand that it isn't very reproducible. The same rules also apply to removing or altering existing layers: adding a layer just to remove or alter something done in a previous layer is not the best idea, at least in most cases.
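One way to check what the commit produced is to inspect the new image. This is a small sketch that assumes dummy:0.4 already exists locally; the helper name inspect_committed_image is invented here. It prints the ENTRYPOINT stored in the image metadata and then the layer history, where the committed layer appears as the newest entry.

```shell
#!/bin/sh
# Sketch: look at an image created with docker container commit.
# inspect_committed_image is a hypothetical helper, not a Docker command.
inspect_committed_image() {
    image="$1"
    # Print the ENTRYPOINT recorded in the image metadata via --change.
    docker image inspect --format '{{json .Config.Entrypoint}}' "$image"
    # List the layers; the committed r/w layer is the most recent entry.
    docker image history "$image"
}

# Only inspect when the image from the walkthrough is present locally.
if docker image inspect dummy:0.4 >/dev/null 2>&1; then
    inspect_committed_image dummy:0.4
else
    echo "dummy:0.4 not found; create it with docker container commit first"
fi
```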

That concludes this article. I hope it was helpful to you. If you have any questions, leave a comment below.

Spin up and run applications in split-seconds – That’s what makes Docker containers so popular among developers and web hosting providers.

In our role as Technical Support Providers for web hosting companies and infrastructure providers, we provision and manage Docker systems that best suit their purposes.

One of the key tasks involved in these Docker management services is editing Docker images. Today, we'll see how we edit a Docker image to meet customer needs.

What is a docker image

Docker helps to easily create and run container instances with our desired applications. These containers are created using images.

A docker image is a package of code, libraries, configuration files, etc. for an application. The images are stored in repositories (storage locations).

Images can be downloaded from a repository and executed to create docker containers. So, in effect, a container is just a run-time instance of a particular image.

To create a Docker image, a Dockerfile is used. A Dockerfile is a plain-text document written in Docker's own instruction syntax (it is not YAML; YAML is the format used by Docker Compose files). It contains the list of commands to be executed to create an image.

How to edit docker image

The images provided by repositories are each built for one particular setup. In many scenarios, users need to edit these images to suit their needs.

To customize or tweak a Docker image for specific requirements, we edit it. The drawback is that Docker does not allow an existing image to be edited or modified in place.

To edit Docker images, there are two ways:

1. Edit the Dockerfile

The most commonly used method is to edit the Dockerfile that is used to create the Docker image. This is a convenient and fool-proof method to edit docker image.

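A sample definition for a Zabbix monitoring container would look like the following. Strictly speaking, this is a Docker Compose file written in YAML rather than a Dockerfile, but it is edited in the same way:

zabbix-agent:
  container_name: zabbix-agent
  image: zabbix/zabbix-agent:latest
  net: "host"
  privileged: true
  restart: always
  environment:
    ZBX_SERVER_HOST: zabbix.mysite.com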

To modify the image used by an existing container, we delete that container, edit the file with the changes needed and recreate the container from the new file.

In this sample file, the image used to create the container is ‘zabbix/zabbix-agent:latest’. It can be changed to another image or version by editing this file.

The Docker Compose tool helps to manage multiple Docker containers easily using a single YAML file. When changes are needed for only one container, only its entry in the file is edited.

After the change, only the corresponding container needs to be recreated and the others can be left intact. Because the modified container was deleted beforehand, running the command with the --no-recreate option creates it again and leaves the containers that already exist untouched.

docker-compose -f dockerfile.yml up -d --no-recreate

As Docker containers are meant to be restarted and recreated, they cannot be used to store persistent data. The data in Docker infrastructure are usually stored in Docker volumes.

Storing the data in volumes outside the containers ensures that even when the containers are recreated, the data related to it won’t get affected.

This enables us to easily edit a docker image without losing the underlying data. Modifying Dockerfiles also helps to keep track of the changes made in the images.

Utmost care has to be exercised while modifying a Dockerfile, especially in the production environment, as a single mistake can mess up a normally functioning container.

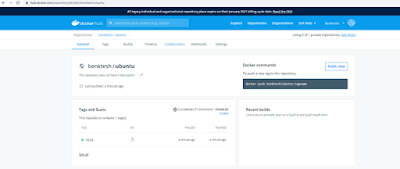

2. Create a modified image

Another option to edit docker image is to run an existing image as a container, make the required modifications in it and then create a new image from the modified container.

a. First, create a Docker container from a parent image that is available in repository.

b. Then connect to the container via bash shell

docker exec -it container-name bash

c. From the shell, make the changes in the container as required. This can include installing new packages, modifying configuration files, downloading or removing files, compiling modules, and so on.

d. Once the changes are done, exit the container. Then commit the container as a new image. This will save the modified container state as a new image.

docker commit container-ID image-name

e. This modified image is then uploaded to a repository, which is then used for creating more such containers for future use.
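Steps a to e can be sketched as a small script. Everything named here is a placeholder chosen for illustration: the parent image alpine:latest, the container name edit-demo, the registry host registry.example.com, and the change itself (installing curl). The change is scripted with docker exec rather than typed into an interactive shell, and the function is only defined, not run, since pushing needs a real registry and a prior docker login.

```shell
#!/bin/sh
# Placeholder names for illustration only; none of them come from the article.
PARENT_IMAGE="alpine:latest"
CONTAINER="edit-demo"
NEW_IMAGE="registry.example.com/team/alpine-curl:1.0"

# The body runs in a subshell with set -e so the first failing step stops it.
edit_and_commit() (
    set -e
    # a. Create a long-running container from the parent image.
    docker run -d --name "$CONTAINER" "$PARENT_IMAGE" sleep 3600
    # b/c. Make a change inside the container (scripted instead of interactive).
    docker exec "$CONTAINER" apk add --no-cache curl
    # d. Stop the container and save its state as a new image.
    docker stop "$CONTAINER"
    docker commit "$CONTAINER" "$NEW_IMAGE"
    # e. Upload the image so it can be reused for future containers.
    docker push "$NEW_IMAGE"
)

echo "Run edit_and_commit on a host with Docker and push access to ${NEW_IMAGE%%/*}"
```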

Conclusion

Docker containers are widely used in DevOps and niche web hosting. Today we’ve discussed how our Docker Support Engineers easily edit docker images in our Docker infrastructure management.


I have recently started working on docker. I have downloaded a docker image and I want to change it in a way so that I can copy a folder with its contents from my local into that image or may be edit any file in the image.

I thought if I can extract the image somehow, do the changes and then create one image. Not sure if it will work like that. I tried looking for options but couldn’t find a promising solution to it.

The current Dockerfile for the image is somewhat like this:

FROM abc/def

MAINTAINER Humpty Dumpty <@hd>

RUN sudo apt-get install -y vim

ADD . /home/humpty-dumpty

WORKDIR /home/humpty-dumpty

RUN cd lib && make

CMD ["bash"]

Note:- I am looking for an easy and clean way to change the existing image only and not to create a new image with the changes.

asked Apr 14, 2017 at 16:27

qwerty

Since an existing Docker image cannot be changed, I created a Dockerfile for a new image based on my original image and modified it to include the test folder from my local machine in the new image.

This link was helpful Build your own image — Docker Documentation

FROM abc/def:latest

The above line in the Dockerfile tells Docker which image your image is based on. The new image therefore starts from the contents of the parent image.

Finally, for including the test folder on local drive I added below command in my docker file

COPY test /home/humpty-dumpty/test

and the test folder was added in that new image.

Below is the dockerfile used to create the new image from the existing one.

FROM abc/def:latest

# Extras

RUN sudo apt-get install -y vim

# copies local folder into the image

COPY test /home/humpty-dumpty/test

Update: to edit a file in a running container started from this image, we can open that file with the vim editor installed through the above Dockerfile

vim <filename>

Now, the vim commands can be used to edit and save the file.

answered Apr 14, 2017 at 18:05

qwerty

You don't change existing images; images are marked with a checksum and are considered read-only. Containers that use an image point to the same files on the filesystem, adding their own RW layer for the container, and therefore depend on the image being unchanged. Layer caching also adds to this dependency.

Because of the layered filesystem and caching, creating a new image with just your one folder addition will only add a layer with that addition, and not a full copy of a new image. Therefore, the easy/clean/correct way is to create a new image using a Dockerfile.

answered Apr 14, 2017 at 16:40

BMitch

First of all, I would not recommend messing with someone else's image; it would be better to create your own. That said, you can use the COPY instruction to add a folder from the host machine to the Docker image.

COPY <src> <dest>

The only caveat is <src> path must be inside the context of the build; you cannot COPY ../something /something, because the first step of a docker build is to send the context directory (and subdirectories) to the docker daemon.
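To see the build-context rule in practice, here is a minimal sketch. It builds a throwaway context in a temporary directory; the base image alpine:latest and the tag copy-demo:0.1 are stand-ins I chose, not names from the question. The test folder has to sit inside the directory passed to docker build, otherwise COPY cannot see it.

```shell
#!/bin/sh
set -e
# Sketch: a temporary build context with the folder that COPY will pick up.
# alpine:latest and copy-demo:0.1 are stand-in names for this example.
ctx=$(mktemp -d)
mkdir -p "$ctx/test"
echo "sample" > "$ctx/test/data.txt"

cat > "$ctx/Dockerfile" <<'EOF'
FROM alpine:latest
# "test" is resolved relative to the build context, not the host filesystem.
COPY test /home/humpty-dumpty/test
EOF

# Everything under $ctx is what docker build sends to the daemon.
find "$ctx" -type f | sort

# Build and check the copied file only when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
    docker build -t copy-demo:0.1 "$ctx"
    docker run --rm copy-demo:0.1 cat /home/humpty-dumpty/test/data.txt
fi
```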

FROM abc/def

MAINTAINER Humpty Dumpty <@hd>

RUN sudo apt-get install -y vim

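# Make sure the /home/humpty-dumpty directory already exists;

# if not, create it

RUN mkdir -p /home/humpty-dumpty

# This adds the test directory under /home/humpty-dumpty. COPY copies only a

# directory's contents, so the destination must name the test directory itself.

COPY test /home/humpty-dumpty/test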

WORKDIR /home/humpty-dumpty

RUN cd lib && make

CMD ["bash"]

answered Apr 14, 2017 at 16:44

Rahul

I think you can use the docker cp command to make changes to a container that is built from your Docker image and then commit the changes.

Here is a reference,

Guide for docker cp:

https://docs.docker.com/engine/reference/commandline/cp/

Guide for docker commit: https://docs.docker.com/engine/reference/commandline/container_commit/

Remember, a Docker image is read-only, so you cannot make any changes to it. The only way is to modify your Dockerfile and recreate the image, but in that case you lose the data (if it is not mounted on a Docker volume). But you can make changes to a container, which is not read-only.
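The docker cp plus commit route could look roughly like this. It is a sketch under assumptions: abc/def is the image name from the question, while the container name def-edit and the tag abc/def:with-test are made up, and /home/humpty-dumpty is assumed to exist in the image already. The function is only defined here because abc/def is not a real, pullable image.

```shell
#!/bin/sh
# Sketch: copy a local folder into a container, then commit it as a new image.
# "def-edit" and "abc/def:with-test" are hypothetical names for illustration.
copy_and_commit() (
    set -e
    src_dir="$1"
    # Create (but do not start) a container from the existing image.
    docker container create --name def-edit abc/def
    # Copy the local folder into the container's filesystem.
    docker cp "$src_dir" def-edit:/home/humpty-dumpty/test
    # Save the modified container as a new image, then remove the helper container.
    docker container commit def-edit abc/def:with-test
    docker container rm def-edit
)

echo "usage: copy_and_commit ./test"
```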

answered Apr 14, 2017 at 17:32

In this translation of the third part of our Docker series, we keep drawing inspiration from baked goods, this time bagels. Today's main topic is working with Dockerfiles. We will go through the instructions used in these files.

→ Part 1: the basics

→ Part 2: terms and concepts

→ Part 3: Dockerfiles

→ Part 4: reducing image size and speeding up builds

→ Part 5: commands

→ Part 6: working with data

Bagels are the instructions in a Dockerfile

Docker images

Recall that a Docker container is a Docker image brought to life. It is a self-contained operating system that holds only the bare essentials and the application code.

Docker images are the result of a build process, and Docker containers are running images. Dockerfiles sit at the very heart of Docker. They tell Docker how to build the images from which containers are created.

Every Docker image has a corresponding file called Dockerfile. Its name is written exactly like that, with no extension. When you run docker build to create a new image, the Dockerfile is assumed to be in the current working directory. If the file is somewhere else, you can give its location with the -f flag.
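As a rough illustration of the -f flag, the sketch below keeps the Dockerfile in a docker/ subdirectory and still uses the project root as the build context. The directory layout, the file run.sh and the tag bagel:0.1 are invented for this example.

```shell
#!/bin/sh
set -e
# Sketch: a project whose Dockerfile is not in the context root.
# The layout and the tag bagel:0.1 are made up for illustration.
proj=$(mktemp -d)
mkdir -p "$proj/docker" "$proj/app"
echo 'echo hello' > "$proj/app/run.sh"

cat > "$proj/docker/Dockerfile" <<'EOF'
FROM ubuntu:18.04
COPY app /app
EOF

echo "Dockerfile: $proj/docker/Dockerfile, context: $proj"

# -f names the Dockerfile; the last argument is still the build context.
if docker info >/dev/null 2>&1; then
    docker build -f "$proj/docker/Dockerfile" -t bagel:0.1 "$proj"
fi
```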

Containers, as we saw in the first part of this series, are made of layers. Every layer except the last one, which sits on top of all the others, is read-only. The Dockerfile tells Docker which layers to add to the image and in what order.

Each layer is really just a file describing how the image changed compared with its state after the previous layer was added. In Unix, by the way, practically everything is a file.

The base image is what forms the initial layer (or layers) of the image being built. The base image is also called the parent image.

The base image is where a Docker image starts

When an image is pulled from a remote repository to the local machine, only the layers missing on that machine are actually downloaded. Docker tries to save space and time by reusing existing layers.

Dockerfiles

A Dockerfile contains the instructions for building an image. Each line of the file starts with an instruction written in capital letters, followed by its arguments. During the build, instructions are processed from top to bottom. Here is what it looks like:

FROM ubuntu:18.04

COPY . /app

Only the FROM, RUN, COPY, and ADD instructions create layers in the final image. The other instructions configure something, describe metadata, or tell Docker to do something when the container runs, such as opening a port or executing a command.
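Applying this rule to a concrete file can be sketched with a simple line count. The sample Dockerfile below is made up for the example, and the grep is deliberately naive: it only looks at the first word of each line and ignores line continuations and lowercase instructions.

```shell
#!/bin/sh
# Sketch: count the instructions that, per the rule above, add layers.
# The Dockerfile content is a hypothetical example.
dockerfile=$(mktemp)
cat > "$dockerfile" <<'EOF'
FROM ubuntu:18.04
LABEL maintainer="someone@example.com"
COPY . /app
CMD ["ls", "/app"]
EOF

# FROM and COPY match; LABEL and CMD only set metadata.
layer_count=$(grep -cE '^(FROM|RUN|COPY|ADD)[[:space:]]' "$dockerfile")
echo "layer-creating instructions: $layer_count"
```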

Here we assume a Docker image based on a Unix-like OS. You can of course use a Windows-based image, but that is less common, and such images are harder to work with. So if you have the choice, use Unix.

To begin, here is the list of Dockerfile instructions with brief comments.

A dozen Dockerfile instructions

- FROM: sets the base (parent) image.

- LABEL: describes metadata, for example who created and maintains the image.

- ENV: sets persistent environment variables.

- RUN: runs a command and creates an image layer. Used to install packages into the container.

- COPY: copies files and directories into the container.

- ADD: copies files and directories into the container; can unpack local .tar files.

- CMD: describes the command with arguments to run when the container starts. The arguments can be overridden when the container is started. There can be only one CMD instruction in a file.

- WORKDIR: sets the working directory for the instructions that follow.

- ARG: defines variables to pass to Docker at image build time.

- ENTRYPOINT: provides the command with arguments to invoke when the container runs. The arguments are not overridden.

- EXPOSE: indicates that a port needs to be opened.

- VOLUME: creates a mount point for working with persistent storage.

Now let's talk about these instructions.

Instructions and examples of their use

▍A simple Dockerfile

A Dockerfile can be extremely simple and short. For example:

FROM ubuntu:18.04

▍The FROM instruction

A Dockerfile must begin with a FROM instruction, or with an ARG instruction followed by FROM.

The FROM keyword tells Docker to build the image on top of the base image that matches the given name and tag. The base image is also called the parent image.

In this example the base image is stored in the ubuntu repository. ubuntu is the name of the official Docker repository that provides a base version of the popular Linux distribution Ubuntu.

Note that this Dockerfile includes the tag 18.04, which specifies exactly which base image we need. This is the image that will be pulled when our image is built. If no tag is given, Docker assumes the latest tag. To make the intent clear, the author of a Dockerfile should state exactly which image is needed.
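The two points above (ARG may precede FROM, and the tag should be explicit) can be combined in a short sketch. The argument name UBUNTU_TAG is an assumption made for illustration and does not come from the original article.

```dockerfile
# Hypothetical build argument; the name UBUNTU_TAG is illustrative only.
ARG UBUNTU_TAG=18.04

# Pin the base image to an explicit tag instead of relying on the implicit "latest".
FROM ubuntu:${UBUNTU_TAG}
```

With this layout a different tag could be chosen at build time with docker build --build-arg UBUNTU_TAG=20.04 . while the default stays pinned in the file.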

When this Dockerfile is used to build an image on the local machine for the first time, Docker downloads the layers defined by the ubuntu image. You can think of them as stacked on top of one another. Each layer describes the differences from the state of the image after the previous layer was added.

When a container is created, a writable layer is added on top of all the others. The data in the remaining layers is read-only.

Container structure (taken from the documentation)

For efficiency, Docker uses a copy-on-write strategy. If a layer already exists at a lower level of the image and another layer needs to read from it, Docker uses the existing file. Nothing has to be downloaded again.

While a container is running, if a file from a lower layer needs to be modified, that file is first copied into the topmost, writable layer. For details on the copy-on-write strategy, see the Docker documentation.

Let's continue with the instructions used in a Dockerfile by looking at an example with a more complex structure.

▍A more complex Dockerfile

Although the Dockerfile we just looked at is neat and clear, it is too simple: it uses a single instruction. It also has no instructions that are invoked when the container runs. Let's look at another file that builds a small image and defines commands that are invoked at container run time.

FROM python:3.7.2-alpine3.8

LABEL maintainer="jeffmshale@gmail.com"

ENV ADMIN="jeff"

RUN apk update && apk upgrade && apk add bash

COPY . ./app

ADD https://raw.githubusercontent.com/discdiver/pachy-vid/master/sample_vids/vid1.mp4 \
    /my_app_directory

RUN ["mkdir", "/a_directory"]

CMD ["python", "./my_script.py"]

At first glance this file may seem rather complicated, so let's go through it.

The base of this image is the official Python image with the tag 3.7.2-alpine3.8. Its source shows that this base image contains Linux, Python and, by and large, nothing else. Alpine-based images are very popular in the Docker world because they are small, fast and secure. However, Alpine images lack many of the features found in ordinary operating systems. So, to build something useful on top of such an image, you have to install the packages you need.

▍The LABEL instruction

The LABEL instruction adds metadata to an image. In the file we are looking at, it holds the contact details of the image's creator. Declaring labels does not slow down the build or increase the image size in any meaningful way. Labels simply carry useful information about the Docker image, so it is a good idea to include them. See the Docker documentation for more about working with metadata in a Dockerfile.

▍The ENV instruction

The ENV instruction sets persistent environment variables that are available in the container at run time. In the previous example, the ADMIN variable can be used once the container has been created.

ENV works well for defining constants. If you use a value several times in a Dockerfile, for example in commands run in the container, and suspect you may one day need to change it, it makes sense to store it in such a constant.
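A minimal sketch of ENV used as a constant might look like this. The variable name APP_HOME and its path are assumptions for illustration, not part of the original example.

```dockerfile
FROM python:3.7.2-alpine3.8

# Hypothetical constant: the variable name and path are illustrative only.
ENV APP_HOME=/usr/src/app

# The same value is reused by several instructions; changing it in one
# place updates every use.
WORKDIR ${APP_HOME}
COPY . ${APP_HOME}

# ENV values persist into the running container, so the shell started
# here can expand $APP_HOME at run time.
CMD ["sh", "-c", "echo Running from $APP_HOME"]
```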

Note that a Dockerfile often offers several ways to solve the same problem. Which to use depends on the conventions accepted in the Docker community, on how transparent the solution is, and on its performance. For example, RUN, CMD and ENTRYPOINT serve different purposes, but all of them are used to run commands.

▍The RUN instruction

The RUN instruction creates a layer at image build time. After it runs, a new layer is added to the image and its state is committed. RUN is often used to install additional packages into an image. In the previous example, RUN apk update && apk upgrade tells Docker to update the packages from the base image. These two commands are followed by && apk add bash, which installs bash into the image.

apk stands for Alpine Linux package manager. If your base image uses a different Linux distribution, such as Ubuntu, you may need a command like RUN apt-get to install packages. We will discuss other ways of installing packages later.

RUN and similar instructions such as CMD and ENTRYPOINT can be written in either exec form or shell form. Exec form uses a syntax that resembles a JSON array, for example: RUN ["my_executable", "my_first_param1", "my_second_param2"].

In the previous example we used the shell form of RUN: RUN apk update && apk upgrade && apk add bash.

Later in the same Dockerfile, the exec form of RUN is used to create a directory: RUN ["mkdir", "/a_directory"]. When you use this form, remember to wrap strings in double quotes, as JSON requires.
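To put the two forms side by side, here is a small sketch. The directory names are made up for illustration.

```dockerfile
FROM alpine:3.8

# Shell form: the whole line is handed to /bin/sh -c, so shell operators
# such as && work as usual.
RUN mkdir -p /data/in && touch /data/in/.keep

# Exec form: a JSON array that is executed directly, without a shell.
# Every element must be a double-quoted string.
RUN ["mkdir", "-p", "/data/out"]
```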

▍The COPY instruction

In our file the COPY instruction looks like this: COPY . ./app. It tells Docker to take the files and directories from the local build context and add them to the app directory inside the image's current working directory. If the target directory does not exist, the instruction creates it.

▍The ADD instruction

ADD can do everything COPY does, plus two more things: it can add files downloaded from remote sources to the image, and it can unpack local .tar files.

In this example ADD copies a file available at a URL into the image's my_app_directory directory. Note, however, that the Docker documentation discourages fetching files by URL this way, because they cannot be removed afterwards and they increase the image size.

The documentation also suggests using COPY instead of ADD wherever possible, to make Dockerfiles easier to understand. I think the Docker developers would have done well to merge ADD and COPY into a single instruction so that image authors have fewer instructions to remember.

Note that the ADD instruction contains a line continuation character, \. It is used to improve the readability of long commands by splitting them across several lines.
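The difference between the two instructions can be sketched as follows. The archive name vendor.tar.gz is hypothetical and would have to exist in the build context.

```dockerfile
FROM alpine:3.8

# COPY only copies files and directories from the build context.
COPY . /app

# ADD also unpacks a local tar archive into the destination directory.
# vendor.tar.gz is a hypothetical file in the build context.
ADD vendor.tar.gz /opt/vendor/
```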

▍The CMD instruction

CMD gives Docker the command to run when the container starts. The results of this command are not added to the image at build time. In our example it runs the my_script.py script when the container runs.

Here are a few more things to know about CMD:

- Only one CMD instruction takes effect in a Dockerfile. If the file contains several, all but the last are ignored.
- CMD can be written in exec form. If it does not name an executable, the file must also contain an ENTRYPOINT instruction. In that case both instructions should be written in JSON format.
- Command-line arguments passed to docker run override the arguments given to CMD in the Dockerfile.
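The second and third points can be illustrated with a small sketch in which CMD carries only default arguments for ENTRYPOINT. The image tag my_image mentioned below is a hypothetical name.

```dockerfile
FROM alpine:3.8

# ENTRYPOINT names the executable; CMD supplies its default arguments.
# Both are written as JSON arrays (exec form).
ENTRYPOINT ["echo"]
CMD ["hello from the default CMD"]
```

Built as my_image, docker run my_image would run echo with the default argument, while docker run my_image something else would replace the CMD arguments and keep the ENTRYPOINT.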

▍An even more complex Dockerfile

Let's look at another Dockerfile that uses some new instructions.

FROM python:3.7.2-alpine3.8

LABEL maintainer="jeffmshale@gmail.com"

# Install dependencies
RUN apk add --update git

# Set the current working directory
WORKDIR /usr/src/my_app_directory

# Copy the code from the local context into the image's working directory
COPY . .

# Set a default value for the variable
ARG my_var=my_default_value

# Configure the command to run when the container starts
ENTRYPOINT ["python", "./app/my_script.py", "my_var"]

# Expose ports
EXPOSE 8000

# Create a volume for storing data
VOLUME /my_volume

In this example you can also see comments, which start with the # character.

One of the main things a Dockerfile does is install packages. As mentioned above, there are several ways to install packages with RUN.

Packages can be installed into an Alpine-based image with apk, using a command such as RUN apk update && apk upgrade && apk add bash, as we have already seen.

Python packages can also be installed with pip, wheel and conda. For other programming languages, other package managers may be used when preparing the corresponding images.

For an installation to work, the underlying layer must provide a suitable package manager to the layer in which the packages are installed. So if you run into problems installing packages, make sure the package manager is installed before you try to use it.

For example, RUN can be used in a Dockerfile to install a list of packages with pip. If you do this, combine all the commands into a single instruction and split it across lines with the \ character. This keeps the file tidy and adds fewer layers to the image than several RUN instructions would.

Alternatively, you can list the packages in a file and pass that file to the package manager with RUN. Such files are usually named requirements.txt.
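Both approaches might look roughly like this. The package names and the working directory are placeholders, and requirements.txt is assumed to exist in the build context.

```dockerfile
FROM python:3.7.2-alpine3.8

WORKDIR /usr/src/app

# Option 1: a single RUN instruction split across lines with backslashes.
# The package names are examples only.
RUN pip install --no-cache-dir \
        requests \
        flask

# Option 2: list the packages in requirements.txt and hand the file to pip.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
```

In practice you would pick one of the two options. They are shown together here only for comparison.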

▍The WORKDIR instruction

WORKDIR changes the working directory of the container. The COPY, ADD, RUN, CMD and ENTRYPOINT instructions that follow WORKDIR operate relative to this directory. A few points about this instruction:

- It is better to set absolute paths with WORKDIR than to move around the file system with cd commands in the Dockerfile.
- WORKDIR automatically creates the directory if it does not exist.
- You can use several WORKDIR instructions. If they are given relative paths, each one changes the working directory relative to the previous one.
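The behavior of relative paths in consecutive WORKDIR instructions can be seen in a short sketch:

```dockerfile
FROM alpine:3.8

# The directory is created automatically if it does not exist.
WORKDIR /usr/src

# A relative path is resolved against the previous WORKDIR,
# so the working directory is now /usr/src/my_app_directory.
WORKDIR my_app_directory

# Prints /usr/src/my_app_directory during the build.
RUN pwd
```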

▍The ARG instruction

ARG defines a variable whose value can be passed from the command line to the image at build time. A default value can be given in the Dockerfile, for example: ARG my_var=my_default_value.

Unlike ENV variables, ARG variables are not available while the container is running. However, ARG variables can be used to set default values for ENV variables from the command line during the build, and those ENV variables will then be available in the running container. See the Docker documentation for more on this technique.
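A minimal sketch of handing a build-time ARG over to a run-time ENV variable could look like this. The ENV name MY_VAR is an assumption for illustration.

```dockerfile
FROM alpine:3.8

# Build-time variable with a default. It can be overridden with
#   docker build --build-arg my_var=some_value .
ARG my_var=my_default_value

# Copy the build-time value into an ENV variable so that it is still
# available when the container runs. MY_VAR is a hypothetical name.
ENV MY_VAR=${my_var}

# The shell inside the running container expands $MY_VAR.
CMD ["sh", "-c", "echo MY_VAR is $MY_VAR"]
```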

▍The ENTRYPOINT instruction

ENTRYPOINT sets the command with arguments that runs when the container starts. It is similar to CMD, but the parameters set in ENTRYPOINT are not overwritten when the container is started with command-line arguments.

Instead, command-line arguments passed in a command like docker run my_image_name are appended to the arguments set by ENTRYPOINT. For example, after docker run my_image bash, the argument bash is added to the end of the argument list defined by ENTRYPOINT. When writing a Dockerfile, don't forget to include a CMD or ENTRYPOINT instruction.

The Docker documentation offers a few recommendations on whether to choose CMD or ENTRYPOINT for running commands when the container starts:

- If the same command must run every time the container starts, use ENTRYPOINT.
- If the container will be used as an executable application, use ENTRYPOINT.
- If you know that you will need to pass arguments at container start that may override the ones in the Dockerfile, use CMD.

In our example, ENTRYPOINT ["python", "./app/my_script.py", "my_var"] makes the container run the Python script my_script.py with the argument my_var when it starts. The script can then read this argument, for example with argparse. Note that in exec form the argument is the literal string my_var: the value assigned with ARG is not substituted, because exec form involves no shell processing and ARG variables are not available at run time. To pass a configurable value to the script, copy the ARG into an ENV variable or supply the argument on the docker run command line.

The Docker documentation recommends the exec form of ENTRYPOINT: ENTRYPOINT ["executable", "param1", "param2"].
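The way docker run arguments are appended to an exec-form ENTRYPOINT can be sketched as follows. The inline Python one-liner stands in for a real script and is an assumption for illustration, as is the tag my_image used below.

```dockerfile
FROM python:3.7.2-alpine3.8

# Exec form, as the documentation recommends. Arguments given to
# docker run are appended after "-c" instead of replacing the command.
ENTRYPOINT ["python", "-c"]

# Default argument, used only when docker run supplies none.
CMD ["print('hello from the default argument')"]
```

Built as my_image, docker run my_image "print(2 + 2)" would execute python -c "print(2 + 2)", while a plain docker run my_image would fall back to the CMD default.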

▍The EXPOSE instruction

EXPOSE indicates which ports are intended to be published so that the running container can be reached through them. The instruction does not publish the ports itself. Rather, it serves as documentation for the image, a way for whoever builds the image to communicate with whoever runs the container.

To publish a port (or ports) and set up port forwarding, run docker run with the -p flag. If you use -P (with a capital P), all ports listed in EXPOSE are published.
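As a rough sketch of how EXPOSE and the publishing flags fit together (the image tag my_image and the choice of Python's built-in HTTP server are assumptions for illustration):

```dockerfile
FROM python:3.7.2-alpine3.8

# Documents that the process in the container listens on port 8000.
# On its own this does not publish the port on the host.
EXPOSE 8000

# Serve the current directory over HTTP on port 8000.
CMD ["python", "-m", "http.server", "8000"]

# Publishing happens at run time:
#   docker run -p 8080:8000 my_image   (host port 8080 to container port 8000)
#   docker run -P my_image             (publish every EXPOSEd port on a host port chosen by Docker)
```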

▍The VOLUME instruction

VOLUME specifies a location that the container will use for persistent file storage and for working with such files. We will return to this later.

Summary

You now know a dozen instructions used to create images with a Dockerfile. The list does not end there. In particular, we did not cover instructions such as USER, ONBUILD, STOPSIGNAL, SHELL and HEALTHCHECK. For a brief overview, see the Dockerfile reference.

Dockerfiles are arguably the key component of the Docker ecosystem, and anyone who wants to feel confident in this environment needs to learn to work with them. We will return to them next time, when we discuss ways of reducing image size.

Dear readers! If you use Docker in practice, please tell us how you write your Dockerfiles.

Dockerfile reference

Docker can build images automatically by reading the instructions from a

Dockerfile. A Dockerfile is a text document that contains all the commands a

user could call on the command line to assemble an image. Using docker build

users can create an automated build that executes several command-line

instructions in succession.

This page describes the commands you can use in a Dockerfile. When you are

done reading this page, refer to the Dockerfile Best

Practices for a tip-oriented guide.

Usage

The docker build command builds an image from

a Dockerfile and a context. The build’s context is the files at a specified

location PATH or URL. The PATH is a directory on your local filesystem.

The URL is the location of a Git repository.

A context is processed recursively. So, a PATH includes any subdirectories and

the URL includes the repository and its submodules. A simple build command

that uses the current directory as context:

$ docker build .

Sending build context to Docker daemon 6.51 MB

...

The build is run by the Docker daemon, not by the CLI. The first thing a build

process does is send the entire context (recursively) to the daemon. In most

cases, it’s best to start with an empty directory as context and keep your

Dockerfile in that directory. Add only the files needed for building the

Dockerfile.

Warning: Do not use your root directory, /, as the PATH, as it causes the build to transfer the entire contents of your hard drive to the Docker daemon.

To use a file in the build context, the Dockerfile refers to the file specified

in an instruction, for example, a COPY instruction. To increase the build’s

performance, exclude files and directories by adding a .dockerignore file to

the context directory. For information about how to create a .dockerignore

file see the documentation on this page.

Traditionally, the Dockerfile is called Dockerfile and located in the root

of the context. You use the -f flag with docker build to point to a Dockerfile

anywhere in your file system.

$ docker build -f /path/to/a/Dockerfile .

You can specify a repository and tag at which to save the new image if

the build succeeds:

$ docker build -t shykes/myapp .

To tag the image into multiple repositories after the build,

add multiple -t parameters when you run the build command:

$ docker build -t shykes/myapp:1.0.2 -t shykes/myapp:latest .

The Docker daemon runs the instructions in the Dockerfile one-by-one,

committing the result of each instruction

to a new image if necessary, before finally outputting the ID of your

new image. The Docker daemon will automatically clean up the context you

sent.

Note that each instruction is run independently, and causes a new image

to be created — so RUN cd /tmp will not have any effect on the next

instructions.
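A small sketch of this behavior, assuming a base image whose default working directory is /:

```dockerfile
FROM alpine:3.2

# The cd only affects the shell started for this one instruction.
RUN cd /tmp

# A new shell starts in the image's working directory, so this prints /
RUN pwd

# WORKDIR is recorded in the image and applies to later instructions.
WORKDIR /tmp

# Now this prints /tmp
RUN pwd
```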

Whenever possible, Docker will re-use the intermediate images (cache) to accelerate the docker build process significantly. This is indicated by the Using cache message in the console output, as in the example below. For more information, see the Build cache section in the Dockerfile best practices guide.

$ docker build -t svendowideit/ambassador .

Sending build context to Docker daemon 15.36 kB

Step 0 : FROM alpine:3.2

---> 31f630c65071

Step 1 : MAINTAINER SvenDowideit@home.org.au

---> Using cache

---> 2a1c91448f5f

Step 2 : RUN apk update && apk add socat && rm -r /var/cache/

---> Using cache

---> 21ed6e7fbb73

Step 3 : CMD env | grep _TCP= | (sed 's/.*_PORT_\([0-9]*\)_TCP=tcp:\/\/\(.*\):\(.*\)/socat -t 100000000 TCP4-LISTEN:\1,fork,reuseaddr TCP4:\2:\3 \&/' && echo wait) | sh

---> Using cache

---> 7ea8aef582cc

Successfully built 7ea8aef582cc

When you’re done with your build, you’re ready to look into Pushing a

repository to its registry.

Format

Here is the format of the Dockerfile:

# Comment

INSTRUCTION arguments

The instruction is not case-sensitive, however convention is for them to

be UPPERCASE in order to distinguish them from arguments more easily.

Docker runs the instructions in a Dockerfile in order. The

first instruction must be `FROM` in order to specify the Base

Image from which you are building.

Docker will treat lines that begin with # as a

comment. A # marker anywhere else in the line will

be treated as an argument. This allows statements like:

# Comment

RUN echo 'we are running some # of cool things'

Here is the set of instructions you can use in a Dockerfile for building

images.

Environment replacement

Environment variables (declared with the ENV statement) can also be

used in certain instructions as variables to be interpreted by the

Dockerfile. Escapes are also handled for including variable-like syntax

into a statement literally.

Environment variables are notated in the Dockerfile either with

$variable_name or ${variable_name}. They are treated equivalently and the

brace syntax is typically used to address issues with variable names with no

whitespace, like ${foo}_bar.

The ${variable_name} syntax also supports a few of the standard bash

modifiers as specified below:

- ${variable:-word} indicates that if variable is set then the result will be that value. If variable is not set then word will be the result.
- ${variable:+word} indicates that if variable is set then word will be the result, otherwise the result is the empty string.

In all cases, word can be any string, including additional environment

variables.
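The two modifiers can be tried out in a sketch like the following. The variable unset_var and the paths are hypothetical and chosen only to show each case.

```dockerfile
FROM busybox

ENV foo=/bar

# foo is set, so ${foo:-/default} expands to /bar.
WORKDIR ${foo:-/default}

# unset_var is not set, so ${unset_var:-/fallback} expands to /fallback.
COPY . ${unset_var:-/fallback}

# foo is set, so ${foo:+/alt} expands to /alt.
ADD . ${foo:+/alt}
```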

Escaping is possible by adding a \ before the variable: \$foo or \${foo}, for example, will translate to $foo and ${foo} literals respectively.

Example (parsed representation is displayed after the #):

FROM busybox

ENV foo /bar

WORKDIR ${foo} # WORKDIR /bar

ADD . $foo # ADD . /bar

COPY \$foo /quux # COPY $foo /quux

Environment variables are supported by the following list of instructions in

the Dockerfile:

- ADD
- COPY
- ENV
- EXPOSE
- LABEL
- USER
- WORKDIR
- VOLUME
- STOPSIGNAL

as well as:

- ONBUILD (when combined with one of the supported instructions above)

Note: prior to 1.4, ONBUILD instructions did NOT support environment variables, even when combined with any of the instructions listed above.

Environment variable substitution will use the same value for each variable

throughout the entire command. In other words, in this example:

ENV abc=hello

ENV abc=bye def=$abc

ENV ghi=$abc

will result in def having a value of hello, not bye. However,

ghi will have a value of bye because it is not part of the same command

that set abc to bye.

.dockerignore file

Before the docker CLI sends the context to the docker daemon, it looks

for a file named .dockerignore in the root directory of the context.

If this file exists, the CLI modifies the context to exclude files and

directories that match patterns in it. This helps to avoid

unnecessarily sending large or sensitive files and directories to the

daemon and potentially adding them to images using ADD or COPY.

The CLI interprets the .dockerignore file as a newline-separated

list of patterns similar to the file globs of Unix shells. For the

purposes of matching, the root of the context is considered to be both

the working and the root directory. For example, the patterns

/foo/bar and foo/bar both exclude a file or directory named bar

in the foo subdirectory of PATH or in the root of the git

repository located at URL. Neither excludes anything else.

Here is an example .dockerignore file:

*/temp*
*/*/temp*
temp?

This file causes the following build behavior:

| Rule | Behavior |
|---|---|
| */temp* | Exclude files and directories whose names start with temp in any immediate subdirectory of the root. For example, the plain file /somedir/temporary.txt is excluded, as is the directory /somedir/temp. |
| */*/temp* | Exclude files and directories starting with temp from any subdirectory that is two levels below the root. For example, /somedir/subdir/temporary.txt is excluded. |
| temp? | Exclude files and directories in the root directory whose names are a one-character extension of temp. For example, /tempa and /tempb are excluded. |

Matching is done using Go’s

filepath.Match rules. A

preprocessing step removes leading and trailing whitespace and

eliminates . and .. elements using Go’s

filepath.Clean. Lines

that are blank after preprocessing are ignored.

Beyond Go’s filepath.Match rules, Docker also supports a special

wildcard string ** that matches any number of directories (including

zero). For example, **/*.go will exclude all files that end with .go

that are found in all directories, including the root of the build context.

Lines starting with ! (exclamation mark) can be used to make exceptions to exclusions. The following is an example .dockerignore file that uses this mechanism:

*.md
!README.md

All markdown files except README.md are excluded from the context.

The placement of ! exception rules influences the behavior: the last

line of the .dockerignore that matches a particular file determines

whether it is included or excluded. Consider the following example:

*.md

!README*.md

README-secret.md

No markdown files are included in the context except README files other than

README-secret.md.

Now consider this example:

*.md

README-secret.md

!README*.md

All of the README files are included. The middle line has no effect because

!README*.md matches README-secret.md and comes last.

You can even use the .dockerignore file to exclude the Dockerfile

and .dockerignore files. These files are still sent to the daemon

because it needs them to do its job. But the ADD and COPY commands

do not copy them to the image.

Finally, you may want to specify which files to include in the

context, rather than which to exclude. To achieve this, specify * as

the first pattern, followed by one or more ! exception patterns.

Note: For historical reasons, the pattern . is ignored.

FROM

FROM <image>

Or

FROM <image>:<tag>

Or

FROM <image>@<digest>

The FROM instruction sets the Base Image

for subsequent instructions. As such, a valid Dockerfile must have FROM as

its first instruction. The image can be any valid image – it is especially easy

to start by pulling an image from the Public Repositories.

- FROM must be the first non-comment instruction in the Dockerfile.
- FROM can appear multiple times within a single Dockerfile in order to create multiple images. Simply make a note of the last image ID output by the commit before each new FROM command.
- The tag or digest values are optional. If you omit either of them, the builder assumes latest by default. The builder returns an error if it cannot match the tag value.

MAINTAINER

The MAINTAINER instruction allows you to set the Author field of the

generated images.

RUN

RUN has 2 forms:

- RUN <command> (shell form, the command is run in a shell, /bin/sh -c)
- RUN ["executable", "param1", "param2"] (exec form)

The RUN instruction will execute any commands in a new layer on top of the

current image and commit the results. The resulting committed image will be

used for the next step in the Dockerfile.

Layering RUN instructions and generating commits conforms to the core

concepts of Docker where commits are cheap and containers can be created from

any point in an image’s history, much like source control.

The exec form makes it possible to avoid shell string munging, and to RUN

commands using a base image that does not contain /bin/sh.

In the shell form you can use a \ (backslash) to continue a single RUN instruction onto the next line. For example, consider these two lines:

RUN /bin/bash -c 'source $HOME/.bashrc ; \
echo $HOME'

Together they are equivalent to this single line:

RUN /bin/bash -c 'source $HOME/.bashrc ; echo $HOME'

Note:

To use a different shell, other than ‘/bin/sh’, use the exec form

passing in the desired shell. For example,

RUN ["/bin/bash", "-c", "echo hello"]

Note: The exec form is parsed as a JSON array, which means that you must use double-quotes (") around words not single-quotes (').

Note: Unlike the shell form, the exec form does not invoke a command shell. This means that normal shell processing does not happen. For example, RUN [ "echo", "$HOME" ] will not do variable substitution on $HOME. If you want shell processing then either use the shell form or execute a shell directly, for example: RUN [ "sh", "-c", "echo $HOME" ].
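The three variants discussed in these notes can be compared in one short sketch. The busybox base image is chosen only because it is small.

```dockerfile
FROM busybox

# Exec form: no shell is involved, so the literal text $HOME is printed.
RUN ["echo", "$HOME"]

# Shell form: /bin/sh -c expands $HOME before echo runs.
RUN echo $HOME

# Exec form that starts a shell explicitly. The command line is a single
# string passed to sh -c, which then expands $HOME.
RUN ["sh", "-c", "echo $HOME"]
```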

The cache for RUN instructions isn’t invalidated automatically during

the next build. The cache for an instruction like

RUN apt-get dist-upgrade -y will be reused during the next build. The

cache for RUN instructions can be invalidated by using the --no-cache

flag, for example docker build --no-cache.

See the Dockerfile Best Practices

guide for more information.

The cache for RUN instructions can be invalidated by ADD instructions. See

below for details.

Known issues (RUN)

- Issue 783 is about file permissions problems that can occur when using the AUFS file system. You might notice it during an attempt to rm a file, for example.

For systems that have a recent aufs version (i.e., the dirperm1 mount option can be set), docker will attempt to fix the issue automatically by mounting the layers with the dirperm1 option. More details on the dirperm1 option can be found in the aufs man page.

If your system doesn’t have support for dirperm1, the issue describes a workaround.

CMD

The CMD instruction has three forms:

- CMD ["executable","param1","param2"] (exec form, this is the preferred form)
- CMD ["param1","param2"] (as default parameters to ENTRYPOINT)
- CMD command param1 param2 (shell form)

There can only be one CMD instruction in a Dockerfile. If you list more than one CMD

then only the last CMD will take effect.

The main purpose of a CMD is to provide defaults for an executing

container. These defaults can include an executable, or they can omit

the executable, in which case you must specify an ENTRYPOINT

instruction as well.

Note: If CMD is used to provide default arguments for the ENTRYPOINT instruction, both the CMD and ENTRYPOINT instructions should be specified with the JSON array format.

Note: The exec form is parsed as a JSON array, which means that you must use double-quotes (") around words not single-quotes (').

Note: Unlike the shell form, the exec form does not invoke a command shell. This means that normal shell processing does not happen. For example, CMD [ "echo", "$HOME" ] will not do variable substitution on $HOME. If you want shell processing then either use the shell form or execute a shell directly, for example: CMD [ "sh", "-c", "echo $HOME" ].

When used in the shell or exec formats, the CMD instruction sets the command

to be executed when running the image.

If you use the shell form of the CMD, then the <command> will execute in

/bin/sh -c:

FROM ubuntu

CMD echo "This is a test." | wc -

If you want to run your <command> without a shell then you must

express the command as a JSON array and give the full path to the executable.

This array form is the preferred format of CMD. Any additional parameters

must be individually expressed as strings in the array:

FROM ubuntu

CMD ["/usr/bin/wc","--help"]

If you would like your container to run the same executable every time, then

you should consider using ENTRYPOINT in combination with CMD. See

ENTRYPOINT.

If the user specifies arguments to docker run then they will override the

default specified in CMD.

Note: Don’t confuse RUN with CMD. RUN actually runs a command and commits the result; CMD does not execute anything at build time, but specifies the intended command for the image.

LABEL

LABEL <key>=<value> <key>=<value> <key>=<value> ...

The LABEL instruction adds metadata to an image. A LABEL is a

key-value pair. To include spaces within a LABEL value, use quotes and

backslashes as you would in command-line parsing. A few usage examples:

LABEL "com.example.vendor"="ACME Incorporated"

LABEL com.example.label-with-value="foo"

LABEL version="1.0"

LABEL description="This text illustrates \
that label-values can span multiple lines."

An image can have more than one label. To specify multiple labels,

Docker recommends combining labels into a single LABEL instruction where

possible. Each LABEL instruction produces a new layer which can result in an

inefficient image if you use many labels. This example results in a single image

layer.

LABEL multi.label1="value1" multi.label2="value2" other="value3"

The above can also be written as:

LABEL multi.label1="value1" \
      multi.label2="value2" \
      other="value3"

Labels are additive including LABELs in FROM images. If Docker

encounters a label/key that already exists, the new value overrides any previous

labels with identical keys.
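The override rule can be illustrated with a small sketch. The label values are examples only.

```dockerfile
FROM busybox

LABEL version="1.0" com.example.vendor="ACME Incorporated"

# A later LABEL with the same key replaces the earlier value, so the
# final image carries version="1.1" and keeps the vendor label unchanged.
LABEL version="1.1"
```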

To view an image’s labels, use the docker inspect command.

"Labels": {

"com.example.vendor": "ACME Incorporated",

"com.example.label-with-value": "foo",

"version": "1.0",

"description": "This text illustrates that label-values can span multiple lines.",

"multi.label1": "value1",

"multi.label2": "value2",

"other": "value3"

},

EXPOSE

EXPOSE <port> [<port>...]

The EXPOSE instruction informs Docker that the container listens on the

specified network ports at runtime. EXPOSE does not make the ports of the

container accessible to the host. To do that, you must use either the -p flag

to publish a range of ports or the -P flag to publish all of the exposed

ports. You can expose one port number and publish it externally under another

number.

To set up port redirection on the host system, see the documentation on using the -P flag. The Docker network feature supports creating networks without the need to expose ports within the network; for detailed information, see the overview of this feature.

ENV

ENV <key> <value>

ENV <key>=<value> ...

The ENV instruction sets the environment variable <key> to the value <value>. This value will be in the environment of all "descendant" Dockerfile commands and can also be substituted inline in many of them.

The ENV instruction has two forms. The first form, ENV <key> <value>,

will set a single variable to a value. The entire string after the first

space will be treated as the <value> — including characters such as

spaces and quotes.

The second form, ENV <key>=<value> ..., allows for multiple variables to

be set at one time. Notice that the second form uses the equals sign (=)

in the syntax, while the first form does not. Like command line parsing,

quotes and backslashes can be used to include spaces within values.

For example:

ENV myName="John Doe" myDog=Rex\ The\ Dog \
    myCat=fluffy

and

ENV myName John Doe

ENV myDog Rex The Dog

ENV myCat fluffy

will yield the same net results in the final container, but the first form

is preferred because it produces a single cache layer.
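To make the equivalence of the two forms concrete, here is a rough Python approximation. It is not Docker's parser: the helper parse_env is hypothetical, and Python's shlex module stands in for the command-line-style handling of quotes and backslashes described above.

```python
import shlex


def parse_env(args):
    """Approximate how the arguments of one ENV instruction map to variables."""
    # Join backslash-newline continuations into one logical line.
    line = args.replace("\\\n", " ").strip()
    first_token = line.split(None, 1)[0]
    if "=" in first_token:
        # Second form: key=value pairs with shell-like quoting rules.
        pairs = (token.split("=", 1) for token in shlex.split(line))
        return {key: value for key, value in pairs}
    # First form: everything after the first space is the value.
    key, value = line.split(None, 1)
    return {key: value}


multi = parse_env('myName="John Doe" myDog=Rex\\ The\\ Dog \\\n    myCat=fluffy')

single = {}
for env_line in ["myName John Doe", "myDog Rex The Dog", "myCat fluffy"]:
    single.update(parse_env(env_line))
```

Under these assumptions, multi and single hold the same three variables; the difference in a real build is only the number of layers produced.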

The environment variables set using ENV will persist when a container is run

from the resulting image. You can view the values using docker inspect, and

change them using docker run --env <key>=<value>.

Note:

Environment persistence can cause unexpected side effects. For example, setting ENV DEBIAN_FRONTEND noninteractive may confuse apt-get users on a Debian-based image. To set a value for a single command, use RUN <key>=<value> <command>.

ADD

ADD has two forms:

ADD <src>... <dest>

ADD ["<src>",... "<dest>"] (this form is required for paths containing whitespace)

The ADD instruction copies new files, directories or remote file URLs from <src>

and adds them to the filesystem of the container at the path <dest>.

Multiple <src> resources may be specified, but if they are files or directories, their paths must be relative to the source directory that is being built (the context of the build).

Each <src> may contain wildcards and matching will be done using Go’s

filepath.Match rules. For example:

ADD hom* /mydir/ # adds all files starting with "hom"

ADD hom?.txt /mydir/ # ? is replaced with any single character, e.g., "home.txt"
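The two patterns above can be tried out with a small Python sketch. Python's fnmatch is used here as a stand-in for Go's filepath.Match: the two agree on * and ? for flat file names like these, but differ in other cases (for example, Go's * does not cross a path separator). The file names and the helper match_sources are hypothetical.

```python
from fnmatch import fnmatchcase


def match_sources(pattern, context_files):
    """Return the files in the build context whose names match the pattern."""
    return [name for name in context_files if fnmatchcase(name, pattern)]


files = ["home.txt", "homework.md", "hom.txt", "readme.txt"]
starts_with_hom = match_sources("hom*", files)
one_char = match_sources("hom?.txt", files)
```

With this list, hom* selects the three names beginning with "hom", while hom?.txt selects only home.txt, because ? must consume exactly one character.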

The <dest> is an absolute path, or a path relative to WORKDIR, into which

the source will be copied inside the destination container.

ADD test relativeDir/ # adds "test" to `WORKDIR`/relativeDir/

ADD test /absoluteDir/ # adds "test" to /absoluteDir/
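How a relative <dest> is anchored at WORKDIR can be sketched as follows. This is a minimal model, assuming a hypothetical WORKDIR of /app and a hypothetical helper name resolve_dest; it only shows the path arithmetic, not what Docker does on disk.

```python
import posixpath


def resolve_dest(dest, workdir="/"):
    """Turn a <dest> argument into an absolute path inside the image."""
    keeps_slash = dest.endswith("/")
    # Absolute destinations are used as-is; relative ones hang off WORKDIR.
    path = dest if posixpath.isabs(dest) else posixpath.join(workdir, dest)
    path = posixpath.normpath(path)
    # normpath drops the trailing slash, which matters for ADD and COPY.
    if keeps_slash and path != "/":
        path += "/"
    return path


relative = resolve_dest("relativeDir/", workdir="/app")
absolute = resolve_dest("/absoluteDir/", workdir="/app")
```

The trailing slash is preserved deliberately, since it decides whether <dest> is treated as a directory.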

All new files and directories are created with a UID and GID of 0.

In the case where <src> is a remote file URL, the destination will

have permissions of 600. If the remote file being retrieved has an HTTP

Last-Modified header, the timestamp from that header will be used

to set the mtime on the destination file. However, like any other file

processed during an ADD, mtime will not be included in the determination

of whether or not the file has changed and the cache should be updated.

Note:

If you build by passing a Dockerfile through STDIN (docker build - < somefile), there is no build context, so the Dockerfile can only contain a URL-based ADD instruction. You can also pass a compressed archive through STDIN (docker build - < archive.tar.gz); the Dockerfile at the root of the archive and the rest of the archive will be used as the context of the build.

Note:

If your URL files are protected using authentication, you will need to use RUN wget, RUN curl or use another tool from within the container as the ADD instruction does not support authentication.

Note:

The first encountered ADD instruction will invalidate the cache for all following instructions from the Dockerfile if the contents of <src> have changed. This includes invalidating the cache for RUN instructions. See the Dockerfile Best Practices guide for more information.

ADD obeys the following rules:

- The <src> path must be inside the context of the build; you cannot ADD ../something /something, because the first step of a docker build is to send the context directory (and subdirectories) to the docker daemon.

- If <src> is a URL and <dest> does not end with a trailing slash, then a file is downloaded from the URL and copied to <dest>.

- If <src> is a URL and <dest> does end with a trailing slash, then the filename is inferred from the URL and the file is downloaded to <dest>/<filename>. For instance, ADD http://example.com/foobar / would create the file /foobar. The URL must have a nontrivial path so that an appropriate filename can be discovered in this case (http://example.com will not work).

- If <src> is a directory, the entire contents of the directory are copied, including filesystem metadata.

  Note: The directory itself is not copied, just its contents.

- If <src> is a local tar archive in a recognized compression format (identity, gzip, bzip2 or xz) then it is unpacked as a directory. Resources from remote URLs are not decompressed. When a directory is copied or unpacked, it has the same behavior as tar -x: the result is the union of:

  1. Whatever existed at the destination path and
  2. The contents of the source tree, with conflicts resolved in favor of "2." on a file-by-file basis.

  Note: Whether a file is identified as a recognized compression format or not is done solely based on the contents of the file, not the name of the file. For example, if an empty file happens to end with .tar.gz this will not be recognized as a compressed file and will not generate any kind of decompression error message, rather the file will simply be copied to the destination.

- If <src> is any other kind of file, it is copied individually along with its metadata. In this case, if <dest> ends with a trailing slash /, it will be considered a directory and the contents of <src> will be written at <dest>/base(<src>).

- If multiple <src> resources are specified, either directly or due to the use of a wildcard, then <dest> must be a directory, and it must end with a slash /.

- If <dest> does not end with a trailing slash, it will be considered a regular file and the contents of <src> will be written at <dest>.

- If <dest> doesn’t exist, it is created along with all missing directories in its path.
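Two of the rules above lend themselves to a short sketch: inferring the file name for a URL source, and recognizing compression from file contents rather than from the name. The Python below is an approximation under stated assumptions, not Docker's code. The helper names are hypothetical, the magic-byte prefixes are the standard signatures of gzip, bzip2 and xz, and detection of an uncompressed ("identity") tar archive is left out.

```python
import posixpath
from urllib.parse import urlparse

# Leading bytes that identify each compressed format.
MAGIC_PREFIXES = {
    "gzip": b"\x1f\x8b",
    "bzip2": b"BZh",
    "xz": b"\xfd7zXZ\x00",
}


def url_destination(url, dest):
    """Return the path a URL <src> would be written to for a given <dest>."""
    if not dest.endswith("/"):
        return dest  # dest names the file itself
    filename = posixpath.basename(urlparse(url).path)
    if not filename:
        raise ValueError("URL has no path to infer a filename from")
    return posixpath.join(dest, filename)


def detect_compression(data):
    """Identify a compression format from the first bytes, ignoring the file name."""
    for name, prefix in MAGIC_PREFIXES.items():
        if data.startswith(prefix):
            return name
    return None
```

For example, url_destination("http://example.com/foobar", "/") gives /foobar, while an empty file yields None from detect_compression regardless of a .tar.gz suffix, which matches the note about such a file simply being copied.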

COPY

COPY has two forms:

COPY <src>... <dest>

COPY ["<src>",... "<dest>"] (this form is required for paths containing whitespace)

The COPY instruction copies new files or directories from <src>

and adds them to the filesystem of the container at the path <dest>.

Multiple <src> resources may be specified, but their paths must be relative to the source directory that is being built (the context of the build).

Each <src> may contain wildcards and matching will be done using Go’s

filepath.Match rules. For example:

COPY hom* /mydir/ # adds all files starting with "hom"

COPY hom?.txt /mydir/ # ? is replaced with any single character, e.g., "home.txt"

The <dest> is an absolute path, or a path relative to WORKDIR, into which

the source will be copied inside the destination container.

COPY test relativeDir/ # adds "test" to `WORKDIR`/relativeDir/

COPY test /absoluteDir/ # adds "test" to /absoluteDir/

All new files and directories are created with a UID and GID of 0.

Note:

If you build using STDIN (docker build - < somefile), there is no build context, so COPY can’t be used.

COPY obeys the following rules:

- The <src> path must be inside the context of the build; you cannot COPY ../something /something, because the first step of a docker build is to send the context directory (and subdirectories) to the docker daemon.

- If <src> is a directory, the entire contents of the directory are copied, including filesystem metadata.

  Note: The directory itself is not copied, just its contents.

- If <src> is any other kind of file, it is copied individually along with its metadata. In this case, if <dest> ends with a trailing slash /, it will be considered a directory and the contents of <src> will be written at <dest>/base(<src>).

- If multiple <src> resources are specified, either directly or due to the use of a wildcard, then <dest> must be a directory, and it must end with a slash /.

- If <dest> does not end with a trailing slash, it will be considered a regular file and the contents of <src> will be written at <dest>.

- If <dest> doesn’t exist, it is created along with all missing directories in its path.
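The trailing-slash rules for regular files can be summarized in a short Python sketch. It is a simplified model covering plain files only (no directories or wildcards); the function name plan_copy and the file names are hypothetical.

```python
import posixpath


def plan_copy(sources, dest):
    """Map each source file to the path it would be written to."""
    if len(sources) > 1 and not dest.endswith("/"):
        raise ValueError("with multiple sources, <dest> must end with a slash")
    if dest.endswith("/"):
        # dest is a directory: each file lands at <dest>/base(<src>).
        return {src: posixpath.join(dest, posixpath.basename(src)) for src in sources}
    # dest is a regular file: the single source is written at <dest>.
    return {sources[0]: dest}


into_dir = plan_copy(["conf/app.ini"], "/etc/myapp/")
as_file = plan_copy(["conf/app.ini"], "/etc/app.conf")
```

The same source therefore ends up at /etc/myapp/app.ini or at /etc/app.conf depending only on whether <dest> ends with a slash.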

ENTRYPOINT

ENTRYPOINT has two forms:

ENTRYPOINT ["executable", "param1", "param2"] (exec form, preferred)

ENTRYPOINT command param1 param2 (shell form)

An ENTRYPOINT allows you to configure a container that will run as an executable.

For example, the following will start nginx with its default content, listening

on port 80:

docker run -i -t --rm -p 80:80 nginx

Command line arguments to docker run <image> will be appended after all

elements in an exec form ENTRYPOINT, and will override all elements specified

using CMD.

This allows arguments to be passed to the entry point, i.e., docker run <image> -d

will pass the -d argument to the entry point.

You can override the ENTRYPOINT instruction using the docker run --entrypoint

flag.

The shell form prevents any CMD or run command line arguments from being

used, but has the disadvantage that your ENTRYPOINT will be started as a

subcommand of /bin/sh -c, which does not pass signals.

This means that the executable will not be the container’s PID 1 — and

will not receive Unix signals — so your executable will not receive a

SIGTERM from docker stop <container>.

Only the last ENTRYPOINT instruction in the Dockerfile will have an effect.
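The interaction between ENTRYPOINT, CMD and docker run arguments described above can be modeled roughly as below. This is a sketch under simplifying assumptions, not Docker's logic: the helper container_argv is hypothetical, an exec form ENTRYPOINT is represented as a list, a shell form as a plain string, and CMD as a list of default arguments.

```python
def container_argv(entrypoint, cmd=None, run_args=None):
    """Return the command line a container would start with (simplified model)."""
    if isinstance(entrypoint, str):
        # Shell form: wrapped in /bin/sh -c, so the shell is the process
        # that gets started; CMD and run arguments are not used.
        return ["/bin/sh", "-c", entrypoint]
    # Exec form: run arguments replace CMD, then are appended to ENTRYPOINT.
    extra = run_args if run_args else (cmd or [])
    return list(entrypoint) + list(extra)


defaults = container_argv(["top", "-b"], cmd=["-c"])
overridden = container_argv(["top", "-b"], cmd=["-c"], run_args=["-H"])
shell_form = container_argv("top -b", cmd=["-c"], run_args=["-H"])
```

In the model, defaults is top -b -c, overridden is top -b -H, and the shell form ignores both CMD and the extra argument, which also shows why the executable is started by /bin/sh rather than directly.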

Exec form ENTRYPOINT example

You can use the exec form of ENTRYPOINT to set fairly stable default commands

and arguments and then use either form of CMD to set additional defaults that

are more likely to be changed.

FROM ubuntu

ENTRYPOINT ["top", "-b"]

CMD ["-c"]

When you run the container, you can see that top is the only process:

$ docker run -it --rm --name test top -H

top - 08:25:00 up 7:27, 0 users, load average: 0.00, 0.01, 0.05

Threads: 1 total, 1 running, 0 sleeping, 0 stopped, 0 zombie

%Cpu(s): 0.1 us, 0.1 sy, 0.0 ni, 99.7 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem: 2056668 total, 1616832 used, 439836 free, 99352 buffers

KiB Swap: 1441840 total, 0 used, 1441840 free. 1324440 cached Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

1 root 20 0 19744 2336 2080 R 0.0 0.1 0:00.04 top

To examine the result further, you can use docker exec:

$ docker exec -it test ps aux