In statistics, the mean squared error (MSE)[1] or mean squared deviation (MSD) of an estimator (of a procedure for estimating an unobserved quantity) measures the average of the squares of the errors—that is, the average squared difference between the estimated values and the actual value. MSE is a risk function, corresponding to the expected value of the squared error loss.[2] The fact that MSE is almost always strictly positive (and not zero) is because of randomness or because the estimator does not account for information that could produce a more accurate estimate.[3] In machine learning, specifically empirical risk minimization, MSE may refer to the empirical risk (the average loss on an observed data set), as an estimate of the true MSE (the true risk: the average loss on the actual population distribution).

The MSE is a measure of the quality of an estimator. As it is derived from the square of Euclidean distance, it is always a positive value that decreases as the error approaches zero.

The MSE is the second moment (about the origin) of the error, and thus incorporates both the variance of the estimator (how widely spread the estimates are from one data sample to another) and its bias (how far off the average estimated value is from the true value).[citation needed] For an unbiased estimator, the MSE is the variance of the estimator. Like the variance, MSE has the same units of measurement as the square of the quantity being estimated. In an analogy to standard deviation, taking the square root of MSE yields the root-mean-square error or root-mean-square deviation (RMSE or RMSD), which has the same units as the quantity being estimated; for an unbiased estimator, the RMSE is the square root of the variance, known as the standard error.

Definition and basic propertiesEdit

The MSE either assesses the quality of a predictor (i.e., a function mapping arbitrary inputs to a sample of values of some random variable), or of an estimator (i.e., a mathematical function mapping a sample of data to an estimate of a parameter of the population from which the data is sampled). The definition of an MSE differs according to whether one is describing a predictor or an estimator.

PredictorEdit

If a vector of predictions is generated from a sample of data points on all variables, and is the vector of observed values of the variable being predicted, with being the predicted values (e.g. as from a least-squares fit), then the within-sample MSE of the predictor is computed as

In other words, the MSE is the mean of the squares of the errors . This is an easily computable quantity for a particular sample (and hence is sample-dependent).

In matrix notation,

where is and is the column vector.

The MSE can also be computed on q data points that were not used in estimating the model, either because they were held back for this purpose, or because these data have been newly obtained. Within this process, known as statistical learning, the MSE is often called the test MSE,[4] and is computed as

EstimatorEdit

The MSE of an estimator with respect to an unknown parameter is defined as[1]

This definition depends on the unknown parameter, but the MSE is a priori a property of an estimator. The MSE could be a function of unknown parameters, in which case any estimator of the MSE based on estimates of these parameters would be a function of the data (and thus a random variable). If the estimator is derived as a sample statistic and is used to estimate some population parameter, then the expectation is with respect to the sampling distribution of the sample statistic.

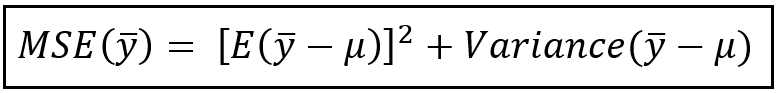

The MSE can be written as the sum of the variance of the estimator and the squared bias of the estimator, providing a useful way to calculate the MSE and implying that in the case of unbiased estimators, the MSE and variance are equivalent.[5]

Proof of variance and bias relationshipEdit

An even shorter proof can be achieved using the well-known formula that for a random variable , . By substituting with, , we have

But in real modeling case, MSE could be described as the addition of model variance, model bias, and irreducible uncertainty (see Bias–variance tradeoff). According to the relationship, the MSE of the estimators could be simply used for the efficiency comparison, which includes the information of estimator variance and bias. This is called MSE criterion.

In regressionEdit

In regression analysis, plotting is a more natural way to view the overall trend of the whole data. The mean of the distance from each point to the predicted regression model can be calculated, and shown as the mean squared error. The squaring is critical to reduce the complexity with negative signs. To minimize MSE, the model could be more accurate, which would mean the model is closer to actual data. One example of a linear regression using this method is the least squares method—which evaluates appropriateness of linear regression model to model bivariate dataset,[6] but whose limitation is related to known distribution of the data.

The term mean squared error is sometimes used to refer to the unbiased estimate of error variance: the residual sum of squares divided by the number of degrees of freedom. This definition for a known, computed quantity differs from the above definition for the computed MSE of a predictor, in that a different denominator is used. The denominator is the sample size reduced by the number of model parameters estimated from the same data, (n−p) for p regressors or (n−p−1) if an intercept is used (see errors and residuals in statistics for more details).[7] Although the MSE (as defined in this article) is not an unbiased estimator of the error variance, it is consistent, given the consistency of the predictor.

In regression analysis, «mean squared error», often referred to as mean squared prediction error or «out-of-sample mean squared error», can also refer to the mean value of the squared deviations of the predictions from the true values, over an out-of-sample test space, generated by a model estimated over a particular sample space. This also is a known, computed quantity, and it varies by sample and by out-of-sample test space.

ExamplesEdit

MeanEdit

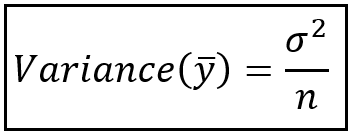

Suppose we have a random sample of size from a population, . Suppose the sample units were chosen with replacement. That is, the units are selected one at a time, and previously selected units are still eligible for selection for all draws. The usual estimator for the is the sample average

which has an expected value equal to the true mean (so it is unbiased) and a mean squared error of

where is the population variance.

For a Gaussian distribution, this is the best unbiased estimator (i.e., one with the lowest MSE among all unbiased estimators), but not, say, for a uniform distribution.

VarianceEdit

The usual estimator for the variance is the corrected sample variance:

This is unbiased (its expected value is ), hence also called the unbiased sample variance, and its MSE is[8]

where is the fourth central moment of the distribution or population, and is the excess kurtosis.

However, one can use other estimators for which are proportional to , and an appropriate choice can always give a lower mean squared error. If we define

then we calculate:

This is minimized when

For a Gaussian distribution, where , this means that the MSE is minimized when dividing the sum by . The minimum excess kurtosis is ,[a] which is achieved by a Bernoulli distribution with p = 1/2 (a coin flip), and the MSE is minimized for Hence regardless of the kurtosis, we get a «better» estimate (in the sense of having a lower MSE) by scaling down the unbiased estimator a little bit; this is a simple example of a shrinkage estimator: one «shrinks» the estimator towards zero (scales down the unbiased estimator).

Further, while the corrected sample variance is the best unbiased estimator (minimum mean squared error among unbiased estimators) of variance for Gaussian distributions, if the distribution is not Gaussian, then even among unbiased estimators, the best unbiased estimator of the variance may not be

Gaussian distributionEdit

The following table gives several estimators of the true parameters of the population, μ and σ2, for the Gaussian case.[9]

| True value | Estimator | Mean squared error |

|---|---|---|

| = the unbiased estimator of the population mean, | ||

| = the unbiased estimator of the population variance, | ||

| = the biased estimator of the population variance, | ||

| = the biased estimator of the population variance, |

InterpretationEdit

An MSE of zero, meaning that the estimator predicts observations of the parameter with perfect accuracy, is ideal (but typically not possible).

Values of MSE may be used for comparative purposes. Two or more statistical models may be compared using their MSEs—as a measure of how well they explain a given set of observations: An unbiased estimator (estimated from a statistical model) with the smallest variance among all unbiased estimators is the best unbiased estimator or MVUE (Minimum-Variance Unbiased Estimator).

Both analysis of variance and linear regression techniques estimate the MSE as part of the analysis and use the estimated MSE to determine the statistical significance of the factors or predictors under study. The goal of experimental design is to construct experiments in such a way that when the observations are analyzed, the MSE is close to zero relative to the magnitude of at least one of the estimated treatment effects.

In one-way analysis of variance, MSE can be calculated by the division of the sum of squared errors and the degree of freedom. Also, the f-value is the ratio of the mean squared treatment and the MSE.

MSE is also used in several stepwise regression techniques as part of the determination as to how many predictors from a candidate set to include in a model for a given set of observations.

ApplicationsEdit

- Minimizing MSE is a key criterion in selecting estimators: see minimum mean-square error. Among unbiased estimators, minimizing the MSE is equivalent to minimizing the variance, and the estimator that does this is the minimum variance unbiased estimator. However, a biased estimator may have lower MSE; see estimator bias.

- In statistical modelling the MSE can represent the difference between the actual observations and the observation values predicted by the model. In this context, it is used to determine the extent to which the model fits the data as well as whether removing some explanatory variables is possible without significantly harming the model’s predictive ability.

- In forecasting and prediction, the Brier score is a measure of forecast skill based on MSE.

Loss functionEdit

Squared error loss is one of the most widely used loss functions in statistics[citation needed], though its widespread use stems more from mathematical convenience than considerations of actual loss in applications. Carl Friedrich Gauss, who introduced the use of mean squared error, was aware of its arbitrariness and was in agreement with objections to it on these grounds.[3] The mathematical benefits of mean squared error are particularly evident in its use at analyzing the performance of linear regression, as it allows one to partition the variation in a dataset into variation explained by the model and variation explained by randomness.

CriticismEdit

The use of mean squared error without question has been criticized by the decision theorist James Berger. Mean squared error is the negative of the expected value of one specific utility function, the quadratic utility function, which may not be the appropriate utility function to use under a given set of circumstances. There are, however, some scenarios where mean squared error can serve as a good approximation to a loss function occurring naturally in an application.[10]

Like variance, mean squared error has the disadvantage of heavily weighting outliers.[11] This is a result of the squaring of each term, which effectively weights large errors more heavily than small ones. This property, undesirable in many applications, has led researchers to use alternatives such as the mean absolute error, or those based on the median.

See alsoEdit

- Bias–variance tradeoff

- Hodges’ estimator

- James–Stein estimator

- Mean percentage error

- Mean square quantization error

- Mean square weighted deviation

- Mean squared displacement

- Mean squared prediction error

- Minimum mean square error

- Minimum mean squared error estimator

- Overfitting

- Peak signal-to-noise ratio

NotesEdit

- ^ This can be proved by Jensen’s inequality as follows. The fourth central moment is an upper bound for the square of variance, so that the least value for their ratio is one, therefore, the least value for the excess kurtosis is −2, achieved, for instance, by a Bernoulli with p=1/2.

ReferencesEdit

- ^ a b «Mean Squared Error (MSE)». www.probabilitycourse.com. Retrieved 2020-09-12.

- ^ Bickel, Peter J.; Doksum, Kjell A. (2015). Mathematical Statistics: Basic Ideas and Selected Topics. Vol. I (Second ed.). p. 20.

If we use quadratic loss, our risk function is called the mean squared error (MSE) …

- ^ a b Lehmann, E. L.; Casella, George (1998). Theory of Point Estimation (2nd ed.). New York: Springer. ISBN 978-0-387-98502-2. MR 1639875.

- ^ Gareth, James; Witten, Daniela; Hastie, Trevor; Tibshirani, Rob (2021). An Introduction to Statistical Learning: with Applications in R. Springer. ISBN 978-1071614174.

- ^ Wackerly, Dennis; Mendenhall, William; Scheaffer, Richard L. (2008). Mathematical Statistics with Applications (7 ed.). Belmont, CA, USA: Thomson Higher Education. ISBN 978-0-495-38508-0.

- ^ A modern introduction to probability and statistics : understanding why and how. Dekking, Michel, 1946-. London: Springer. 2005. ISBN 978-1-85233-896-1. OCLC 262680588.

{{cite book}}: CS1 maint: others (link) - ^ Steel, R.G.D, and Torrie, J. H., Principles and Procedures of Statistics with Special Reference to the Biological Sciences., McGraw Hill, 1960, page 288.

- ^ Mood, A.; Graybill, F.; Boes, D. (1974). Introduction to the Theory of Statistics (3rd ed.). McGraw-Hill. p. 229.

- ^ DeGroot, Morris H. (1980). Probability and Statistics (2nd ed.). Addison-Wesley.

- ^ Berger, James O. (1985). «2.4.2 Certain Standard Loss Functions». Statistical Decision Theory and Bayesian Analysis (2nd ed.). New York: Springer-Verlag. p. 60. ISBN 978-0-387-96098-2. MR 0804611.

- ^ Bermejo, Sergio; Cabestany, Joan (2001). «Oriented principal component analysis for large margin classifiers». Neural Networks. 14 (10): 1447–1461. doi:10.1016/S0893-6080(01)00106-X. PMID 11771723.

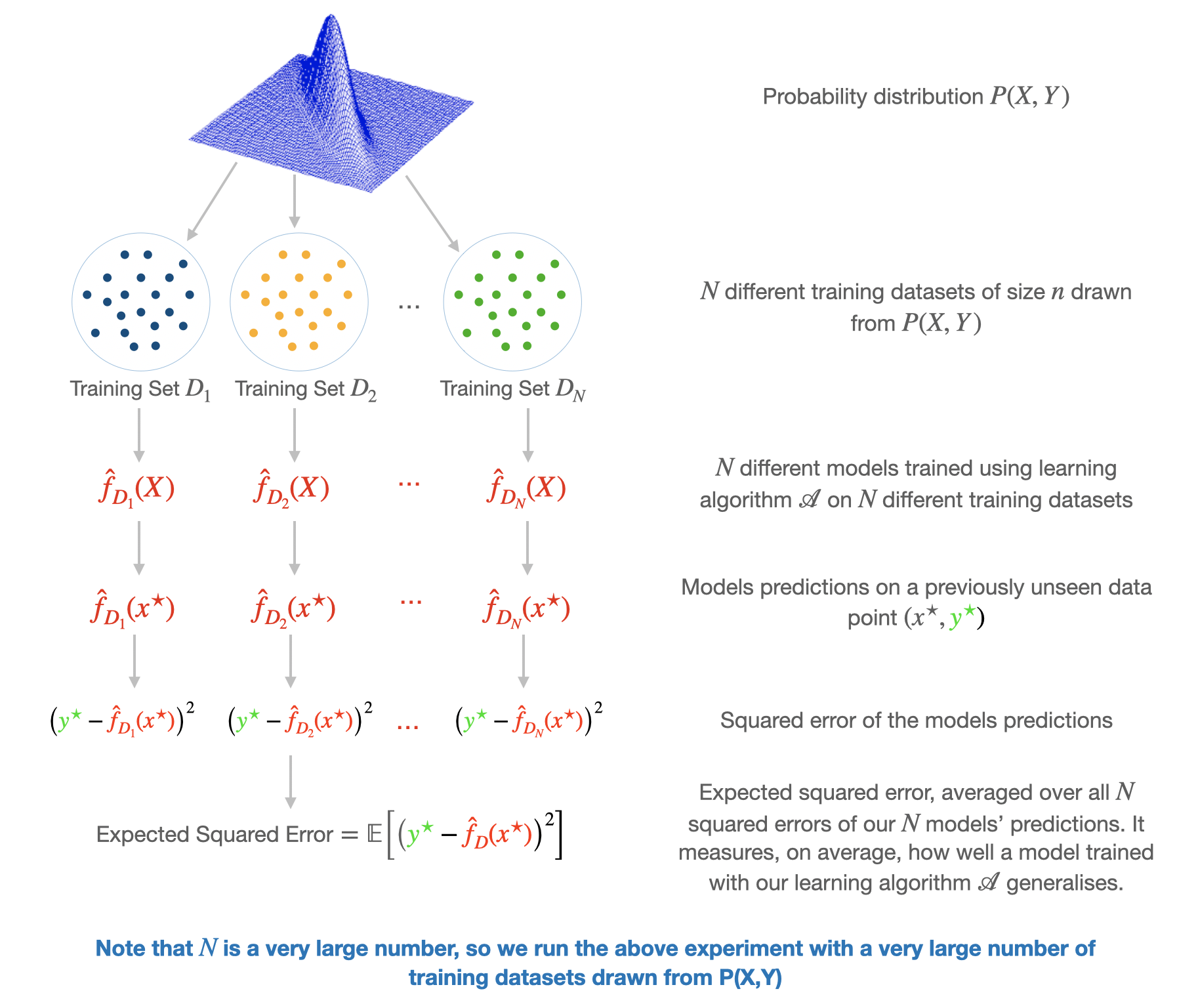

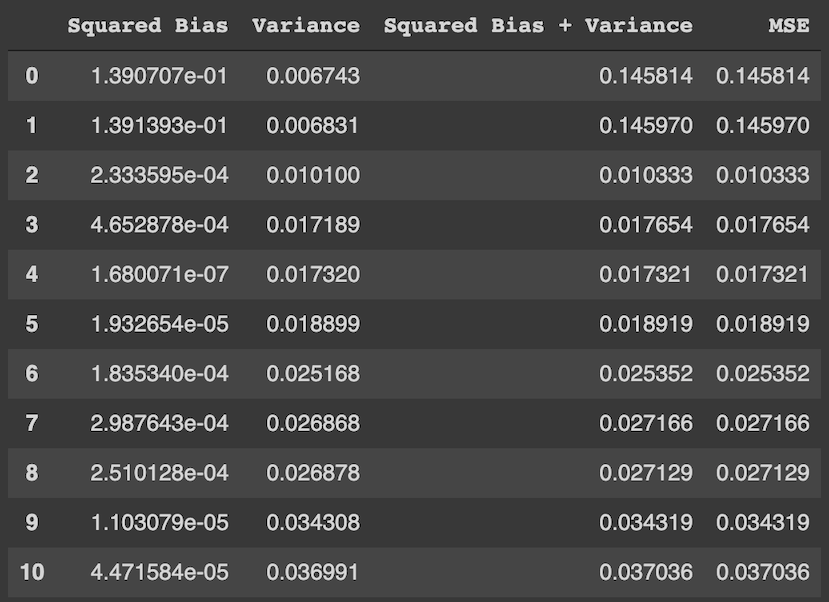

В данной главе мы изучим инструмент, который позволяет анализировать ошибку алгоритма в зависимости от некоторого набора факторов, влияющих на итоговое качество его работы. Этот инструмент в литературе называется bias-variance decomposition — разложение ошибки на смещение и разброс. В разложении, на самом деле, есть и третья компонента — случайный шум в данных, но ему не посчастливилось оказаться в названии. Данное разложение оказывается полезным в некоторых теоретических исследованиях работы моделей машинного обучения, в частности, при анализе свойств ансамблевых моделей.

Некоторые картинки в тексте кликабельны. Это означает, что они были заимствованы из какого-то источника и при клике вы сможете перейти к этому источнику.

Вывод разложения bias-variance для MSE

Рассмотрим задачу регрессии с квадратичной функцией потерь. Представим также для простоты, что целевая переменная $y$ — одномерная и выражается через переменную $x$ как:

$$

y = f(x) + varepsilon,

$$

где $f$ — некоторая детерминированная функция, а $varepsilon$ — случайный шум со следующими свойствами:

$$

mathbb{E} varepsilon = 0, , mathbb{V}text{ar} varepsilon = mathbb{E} varepsilon^2 = sigma^2.

$$

В зависимости от природы данных, которые описывает эта зависимость, её представление в виде точной $f(x)$ и случайной $varepsilon$ может быть продиктовано тем, что:

-

данные на самом деле имеют случайный характер;

-

измерительный прибор не может зафиксировать целевую переменную абсолютно точно;

-

имеющихся признаков недостаточно, чтобы исчерпывающим образом описать объект, пользователя или событие.

Функция потерь на одном объекте $x$ равна

$$

MSE = (y(x) — a(x))^2

$$

Однако знание значения MSE только на одном объекте не может дать нам общего понимания того, насколько хорошо работает наш алгоритм. Какие факторы мы бы хотели учесть при оценке качества алгоритма? Например, то, что выход алгоритма на объекте $x$ зависит не только от самого этого объекта, но и от выборки $X$, на которой алгоритм обучался:

$$

X = ((x_1, y_1), ldots, (x_ell, y_ell))

$$

$$

a(x) = a(x, X)

$$

Кроме того, значение $y$ на объекте $x$ зависит не только от $x$, но и от реализации шума в этой точке:

$$

y(x) = y(x, varepsilon)

$$

Наконец, измерять качество мы бы хотели на тестовых объектах $x$ — тех, которые не встречались в обучающей выборке, а тестовых объектов у нас в большинстве случаев более одного. При включении всех вышеперечисленных источников случайности в рассмотрение логичной оценкой качества алгоритма $a$ кажется следующая величина:

$$

Q(a) = mathbb{E}_x mathbb{E}_{X, varepsilon} [y(x, varepsilon) — a(x, X)]^2

$$

Внутреннее матожидание позволяет оценить качество работы алгоритма в одной тестовой точке $x$ в зависимости от всевозможных реализаций $X$ и $varepsilon$, а внешнее матожидание усредняет это качество по всем тестовым точкам.

Замечание. Запись $mathbb{E}_{X, varepsilon}$ в общем случае обозначает взятие матожидания по совместному распределению $X$ и $varepsilon$. Однако, поскольку $X$ и $varepsilon$ независимы, она равносильна последовательному взятию матожиданий по каждой из переменных: $mathbb{E}_{X, varepsilon} = mathbb{E}_{X} mathbb{E}_{varepsilon}$, но последний вариант выглядит несколько более громоздко.

Попробуем представить выражение для $Q(a)$ в более удобном для анализа виде. Начнём с внутреннего матожидания:

$$

mathbb{E}_{X, varepsilon} [y(x, varepsilon) — a(x, X)]^2 = mathbb{E}_{X, varepsilon}[f(x) + varepsilon — a(x, X)]^2 =

$$

$$

= mathbb{E}_{X, varepsilon} [ underbrace{(f(x) — a(x, X))^2}_{text{не зависит от $varepsilon$}} +

underbrace{2 varepsilon cdot (f(x) — a(x, X))}_{text{множители независимы}} + varepsilon^2 ] =

$$

$$

= mathbb{E}_X left[

(f(x) — a(x, X))^2

right] + 2 underbrace{mathbb{E}_varepsilon[varepsilon]}_{=0} cdot mathbb{E}_X (f(x) — a(x, X)) + mathbb{E}_varepsilon varepsilon^2 =

$$

$$

= mathbb{E}_X left[ (f(x) — a(x, X))^2 right] + sigma^2

$$

Из общего выражения для $Q(a)$ выделилась шумовая компонента $sigma^2$. Продолжим преобразования:

$$

mathbb{E}_X left[ (f(x) — a(x, X))^2 right] = mathbb{E}_X left[

(f(x) — mathbb{E}_X[a(x, X)] + mathbb{E}_X[a(x, X)] — a(x, X))^2

right] =

$$

$$

= mathbb{E}_Xunderbrace{left[

(f(x) — mathbb{E}_X[a(x, X)])^2

right]}_{text{не зависит от $X$}} + underbrace{mathbb{E}_X left[ (a(x, X) — mathbb{E}_X[a(x, X)])^2 right]}_{text{$=mathbb{V}text{ar}_X[a(x, X)]$}} +

$$

$$

+ 2 mathbb{E}_X[underbrace{(f(x) — mathbb{E}_X[a(x, X)])}_{text{не зависит от $X$}} cdot (mathbb{E}_X[a(x, X)] — a(x, X))] =

$$

$$

= (underbrace{f(x) — mathbb{E}_X[a(x, X)]}_{text{bias}_X a(x, X)})^2 + mathbb{V}text{ar}_X[a(x, X)] + 2 (f(x) — mathbb{E}_X[a(x, X)]) cdot underbrace{(mathbb{E}_X[a(x, X)] — mathbb{E}_X [a(x, X)])}_{=0} =

$$

$$

= text{bias}_X^2 a(x, X)+ mathbb{V}text{ar}_X[a(x, X)]

$$

Таким образом, итоговое выражение для $Q(a)$ примет вид

$$

Q(a) = mathbb{E}_x mathbb{E}_{X, varepsilon} [y(x, varepsilon) — a(x, X)]^2 = mathbb{E}_x text{bias}_X^2 a(x, X) + mathbb{E}_x mathbb{V}text{ar}_X[a(x, X)] + sigma^2,

$$

где

$$

text{bias}_X a(x, X) = f(x) — mathbb{E}_X[a(x, X)]

$$

— смещение предсказания алгоритма в точке $x$, усреднённого по всем возможным обучающим выборкам, относительно истинной зависимости $f$;

$$

mathbb{V}text{ar}_X[a(x, X)] = mathbb{E}_X left[ a(x, X) — mathbb{E}_X[a(x, X)] right]^2

$$

— дисперсия (разброс) предсказаний алгоритма в зависимости от обучающей выборки $X$;

$$

sigma^2 = mathbb{E}_x mathbb{E}_varepsilon[y(x, varepsilon) — f(x)]^2

$$

— неустранимый шум в данных.

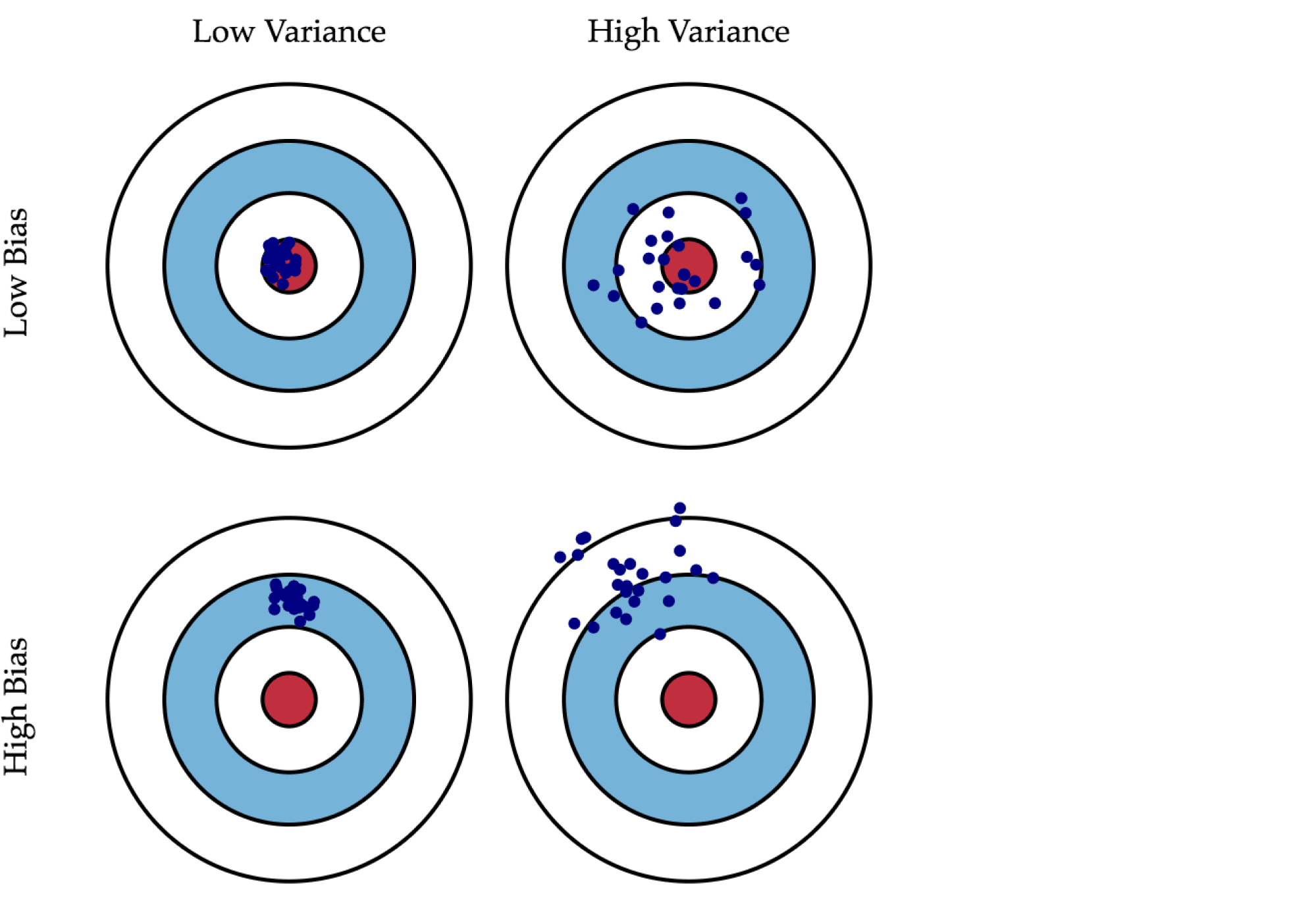

Смещение показывает, насколько хорошо с помощью данного алгоритма можно приблизить истинную зависимость $f$, а разброс характеризует чувствительность алгоритма к изменениям в обучающей выборке. Например, деревья маленькой глубины будут в большинстве случаев иметь высокое смещение и низкий разброс предсказаний, так как они не могут слишком хорошо запомнить обучающую выборку. А глубокие деревья, наоборот, могут безошибочно выучить обучающую выборку и потому будут иметь высокий разброс в зависимости от выборки, однако их предсказания в среднем будут точнее. На рисунке ниже приведены возможные случаи сочетания смещения и разброса для разных моделей:

Синяя точка соответствует модели, обученной на некоторой обучающей выборке, а всего синих точек столько, сколько было обучающих выборок. Красный круг в центре области представляет ближайшую окрестность целевого значения. Большое смещение соответствует тому, что модели в среднем не попадают в цель, а при большом разбросе модели могут как делать точные предсказания, так и довольно сильно ошибаться.

Полученное нами разложение ошибки на три компоненты верно только для квадратичной функции потерь. Для других функций потерь существуют более общие формы этого разложения (Domigos, 2000, James, 2003) с похожими по смыслу компонентами. Это позволяет предполагать, что для большинства основных функций потерь имеется некоторое представление в виде смещения, разброса и шума (хоть и, возможно, не в столь простой аддитивной форме).

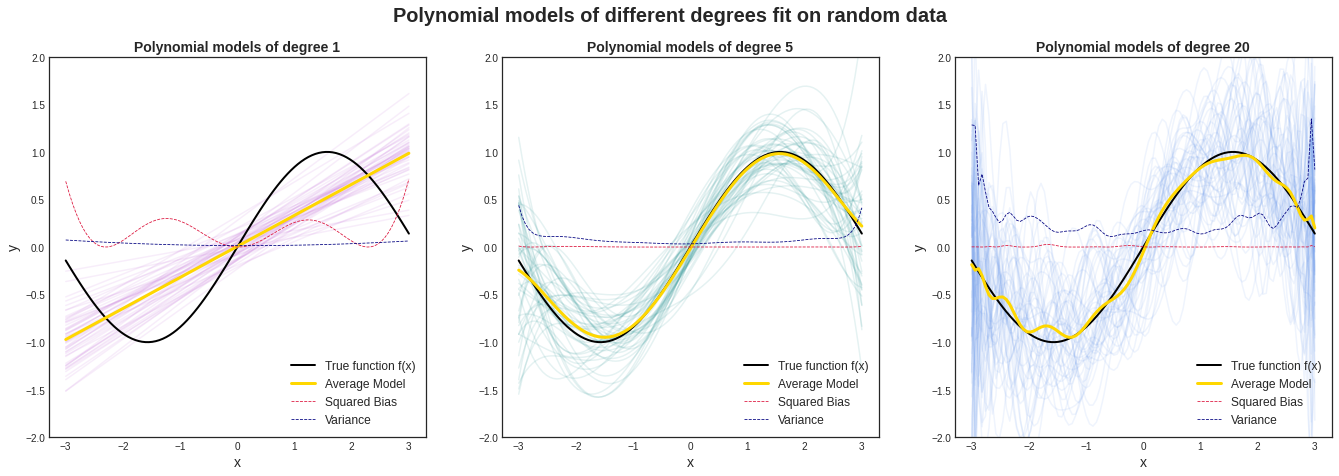

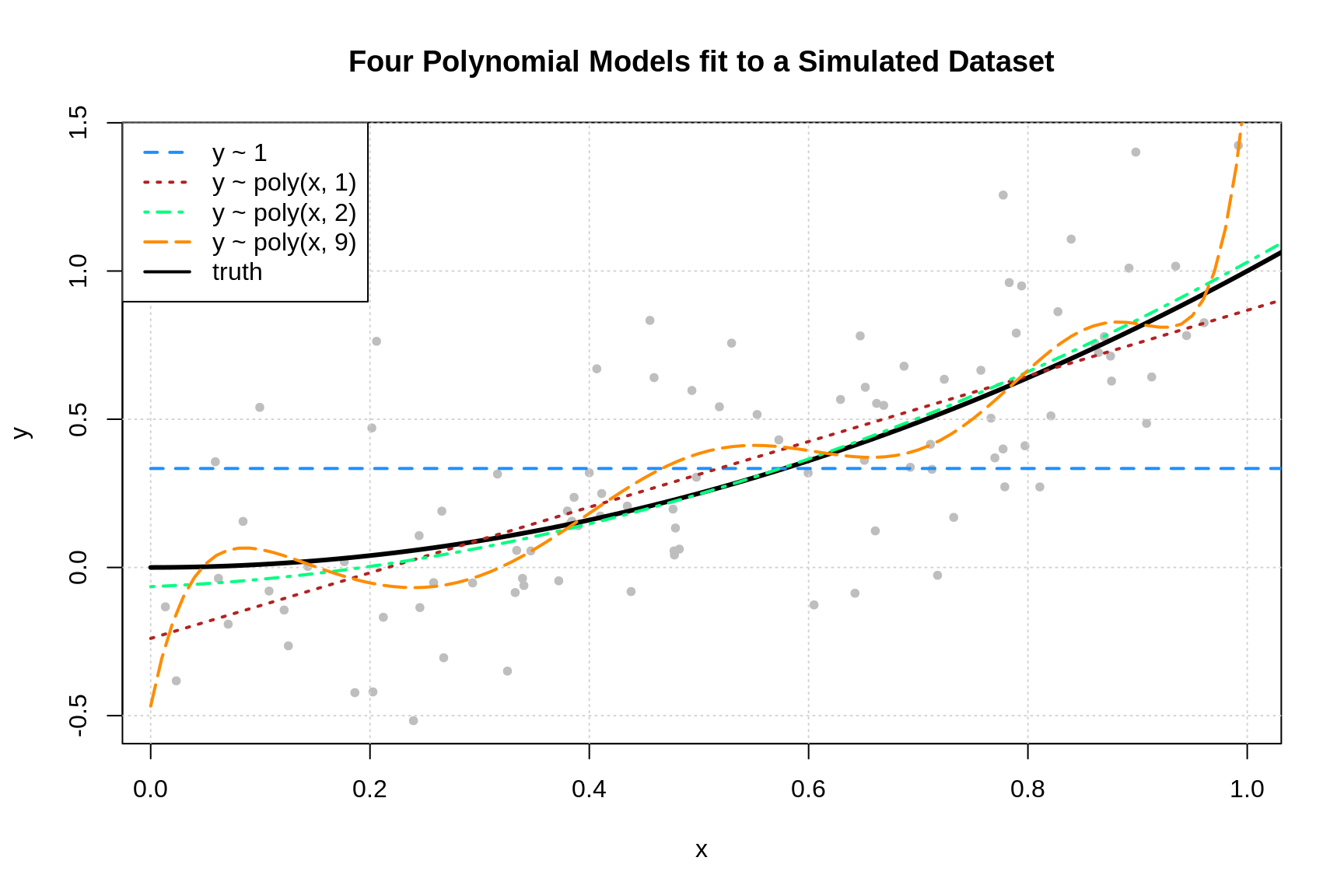

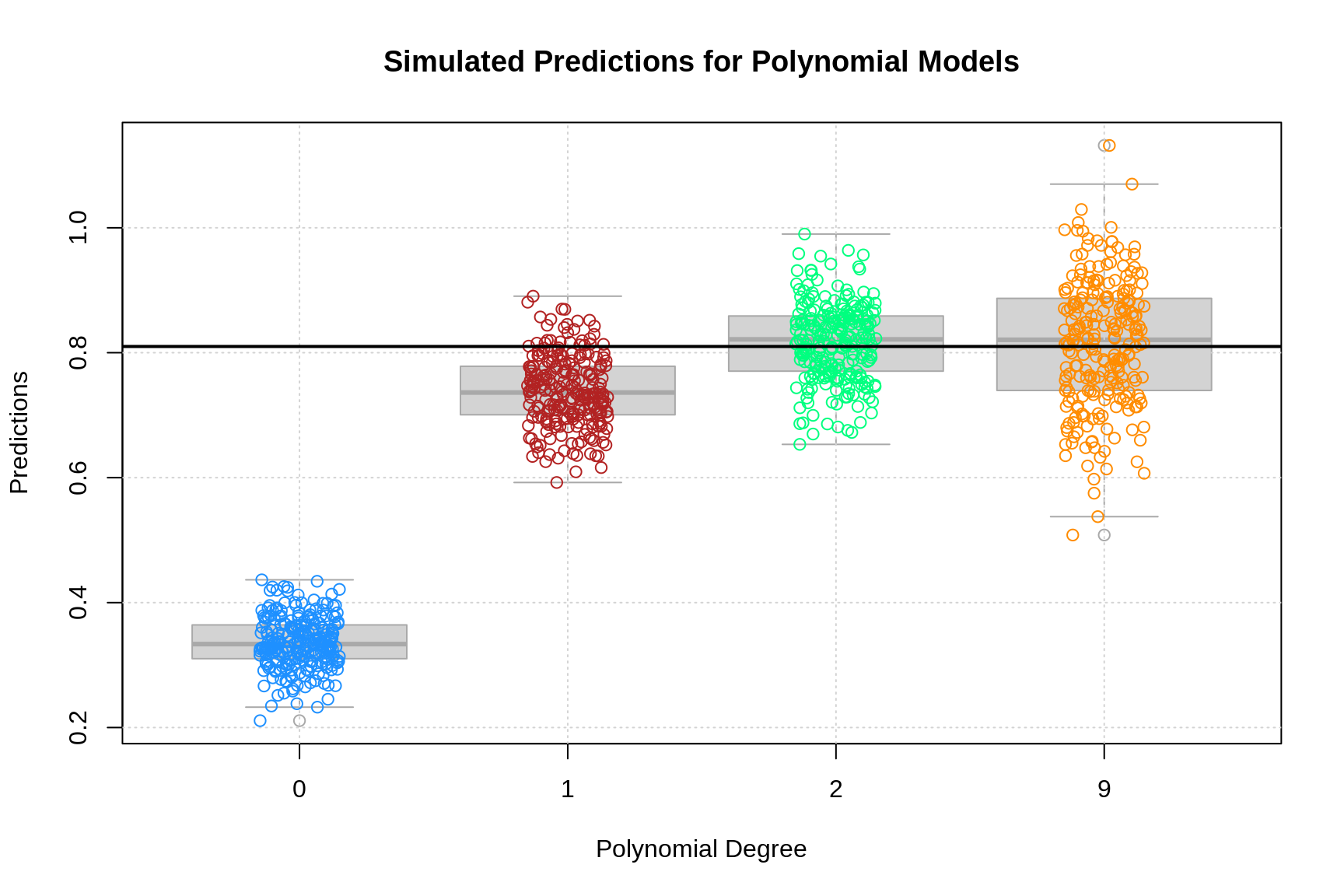

Пример расчёта оценок bias и variance

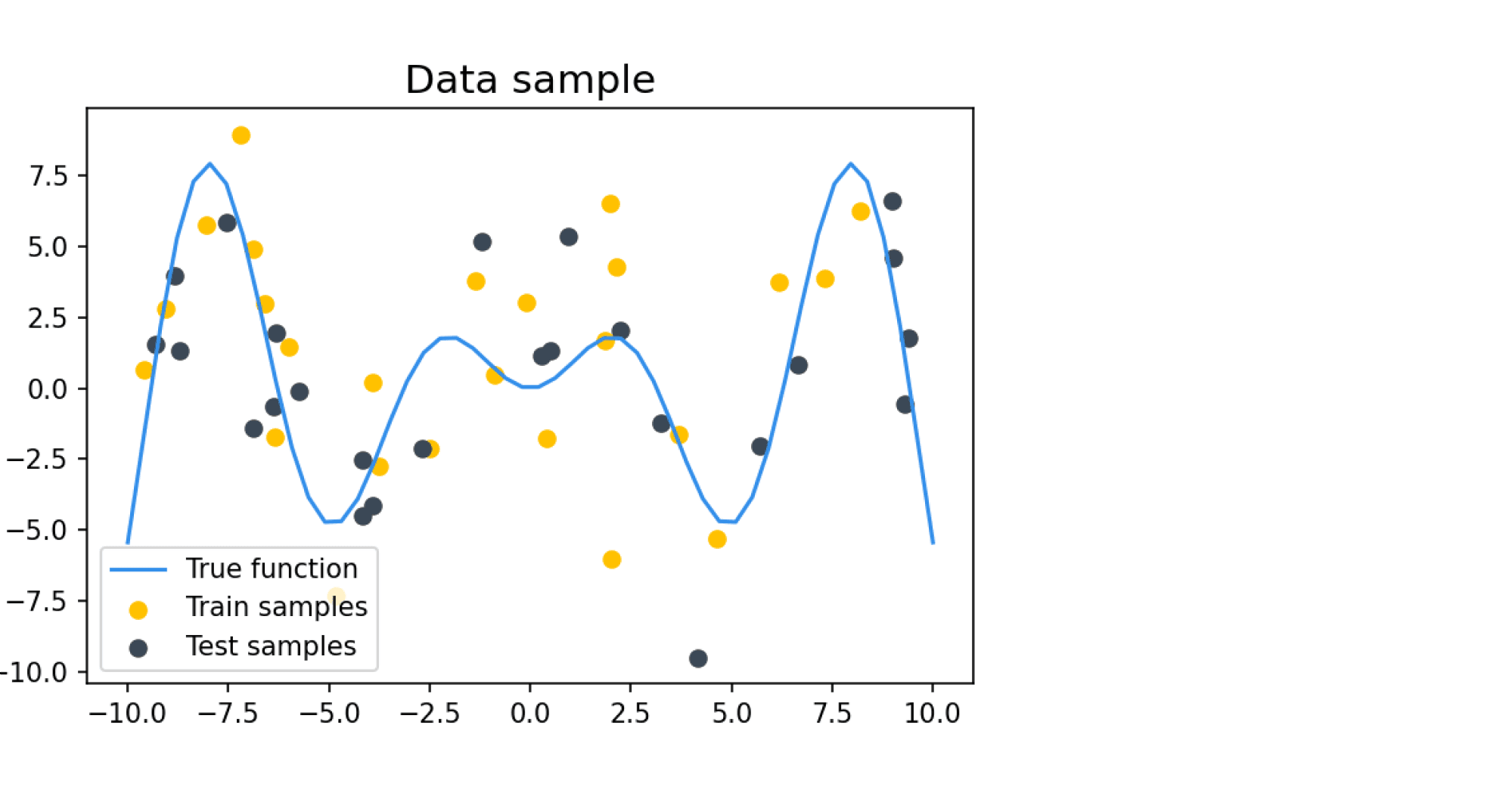

Попробуем вычислить разложение на смещение и разброс на каком-нибудь практическом примере. Наши обучающие и тестовые примеры будут состоять из зашумлённых значений целевой функции $f(x)$, где $f(x)$ определяется как

$$

f(x) = x sin x

$$

В качестве шума добавляется нормальный шум с нулевым средним и дисперсией $sigma^2$, равной во всех дальнейших примерах 9. Такое большое значение шума задано для того, чтобы задача была достаточно сложной для классификатора, который будет на этих данных учиться и тестироваться. Пример семпла из таких данных:

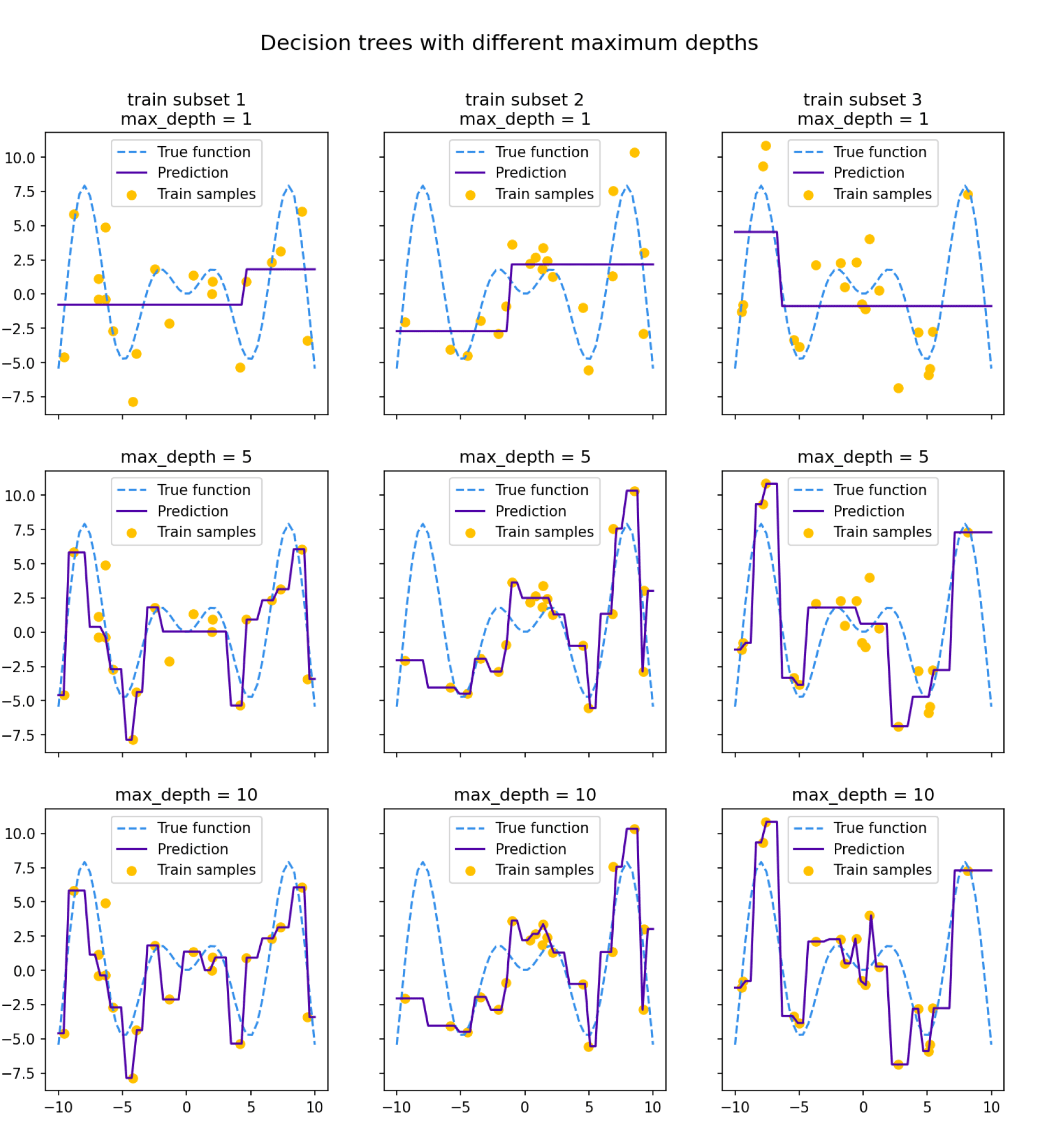

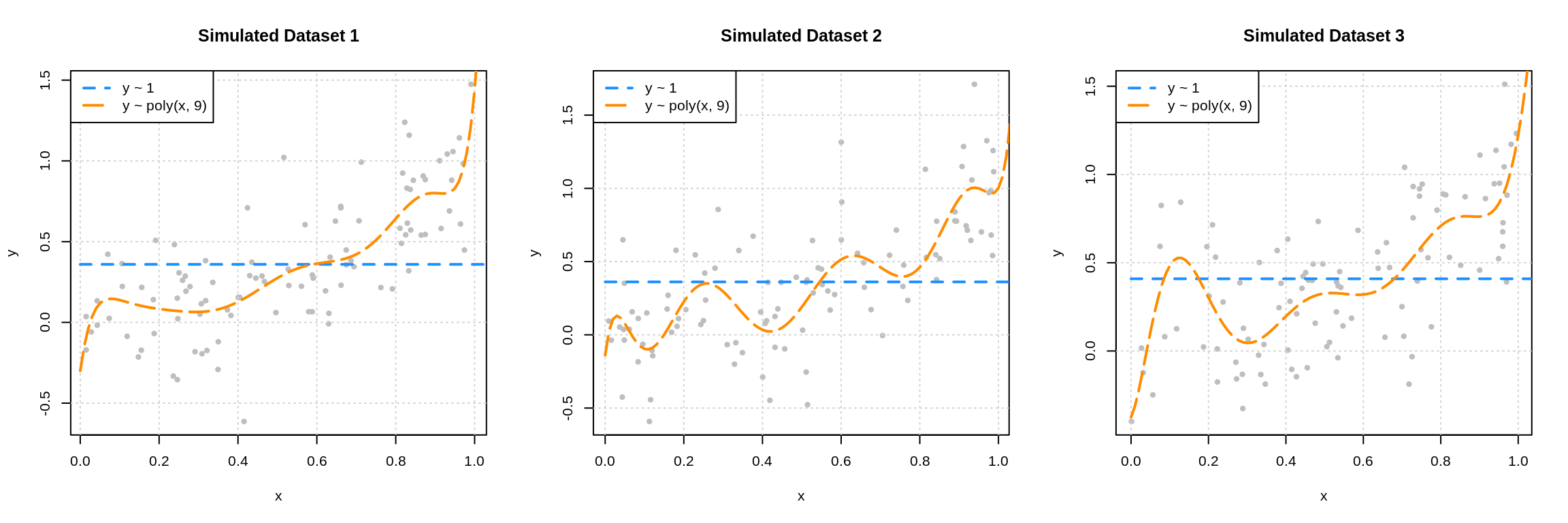

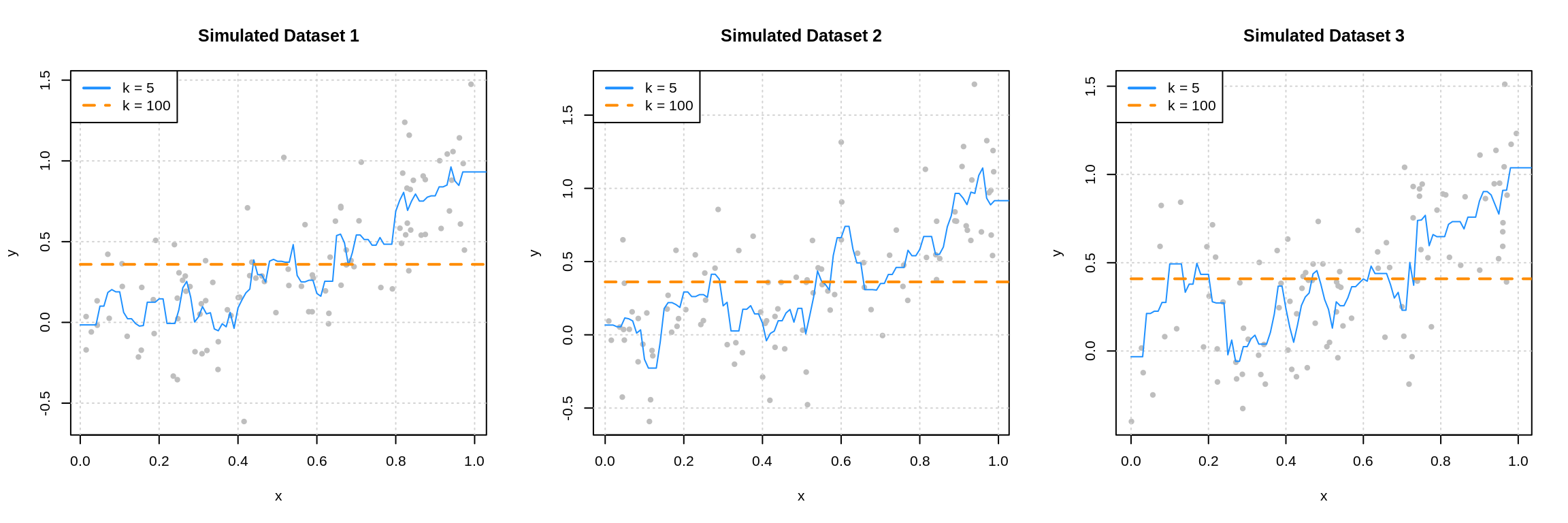

Посмотрим на то, как предсказания деревьев зависят от обучающих подмножеств и максимальной глубины дерева. На рисунке ниже изображены предсказания деревьев разной глубины, обученных на трёх независимых подвыборках размера 20 (каждая колонка соответствует одному подмножеству):

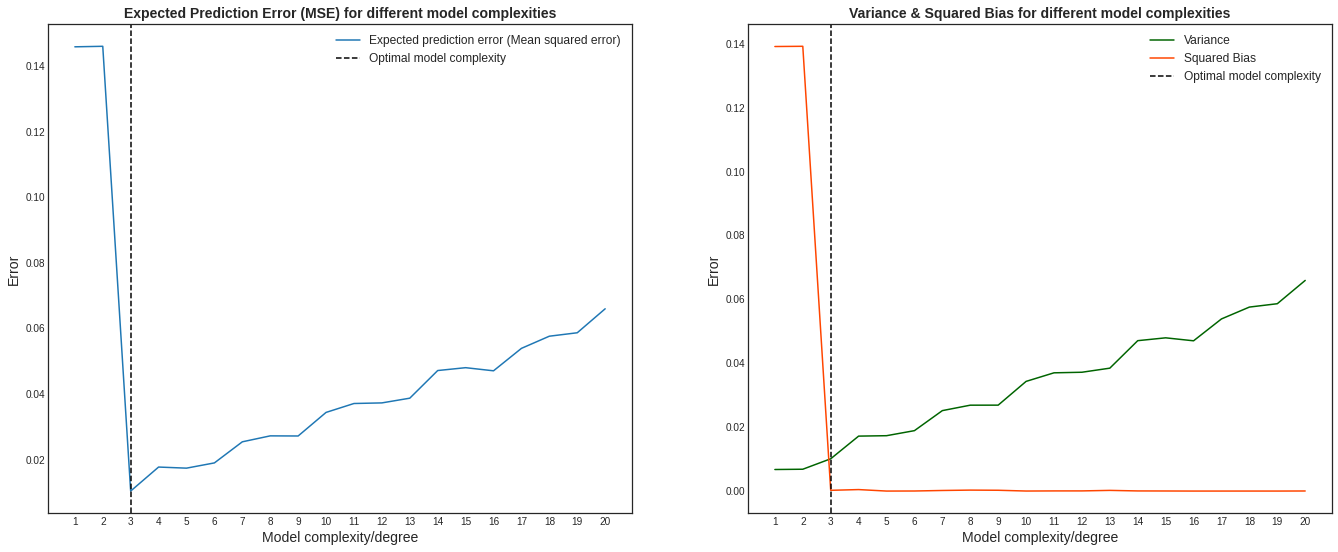

Глядя на эти рисунки, можно выдвинуть гипотезу о том, что с увеличением глубины дерева смещение алгоритма падает, а разброс в зависимости от выборки растёт. Проверим, так ли это, вычислив компоненты разложения для деревьев со значениями глубины от 1 до 15.

Для обучения деревьев насемплируем 1000 случайных подмножеств $X_{train} = (x_{train}, y_{train})$ размера 500, а для тестирования зафиксируем случайное тестовое подмножество точек $x_{test}$ также размера 500. Чтобы вычислить матожидание по $varepsilon$, нам нужно несколько экземпляров шума $varepsilon$ для тестовых лейблов:

$$

y_{test} = y(x_{test}, hat varepsilon) = f(x_{test}) + hat varepsilon

$$

Положим количество семплов случайного шума равным 300. Для фиксированных $X_{train} = (x_{train}, y_{train})$ и $X_{test} = (x_{test}, y_{test})$ квадратичная ошибка вычисляется как

$$

MSE = (y_{test} — a(x_{test}, X_{train}))^2

$$

Взяв среднее от $MSE$ по $X_{train}$, $x_{test}$ и $varepsilon$, мы получим оценку для $Q(a)$, а оценки для компонент ошибки мы можем вычислить по ранее выведенным формулам.

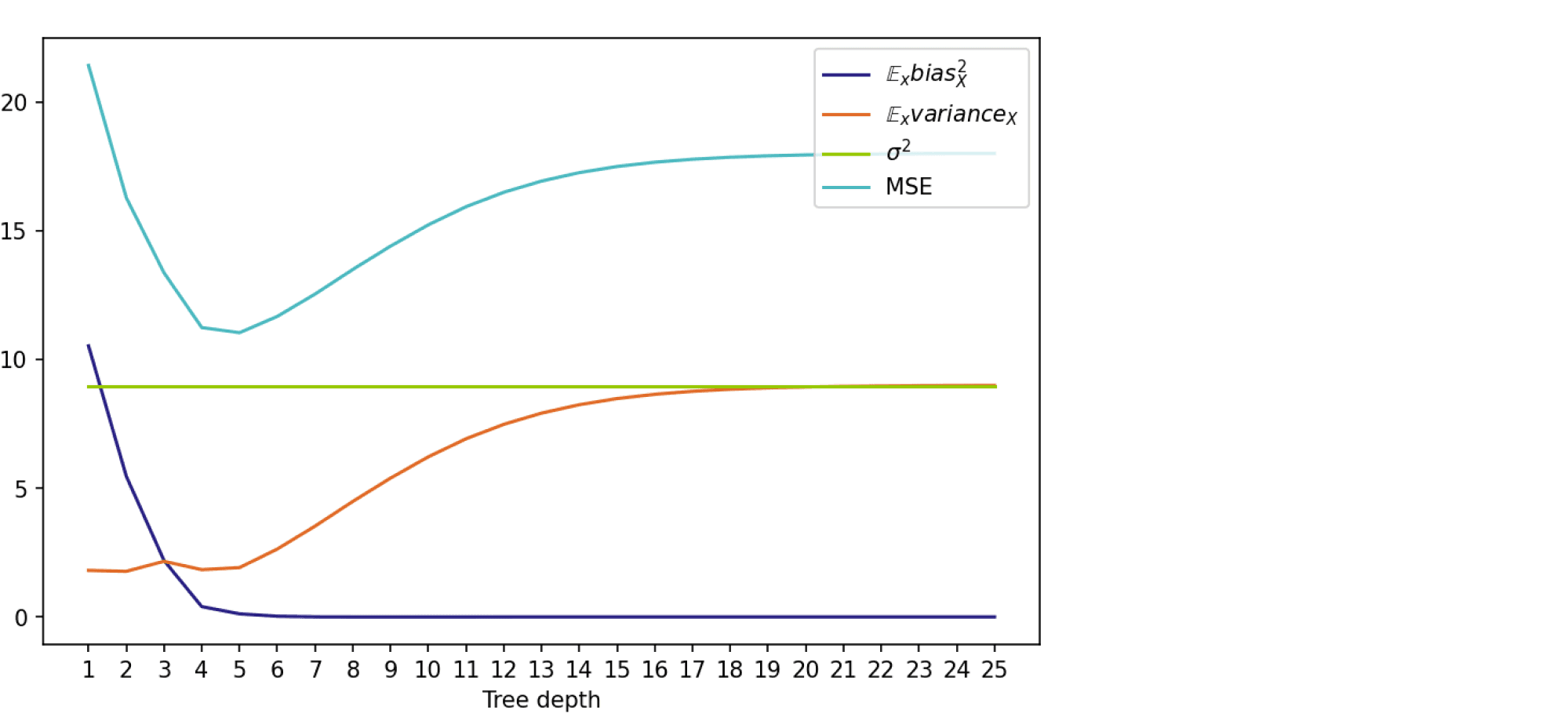

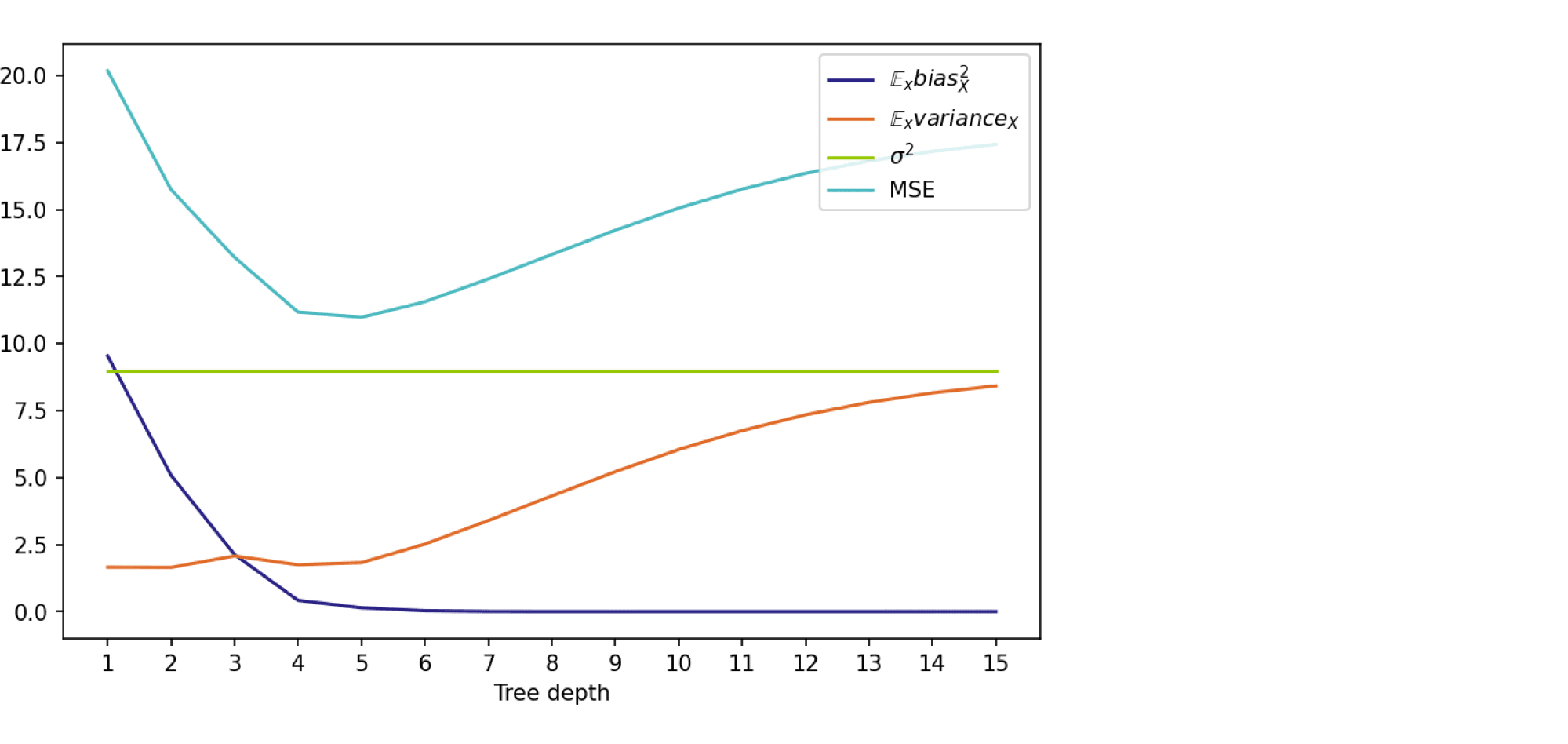

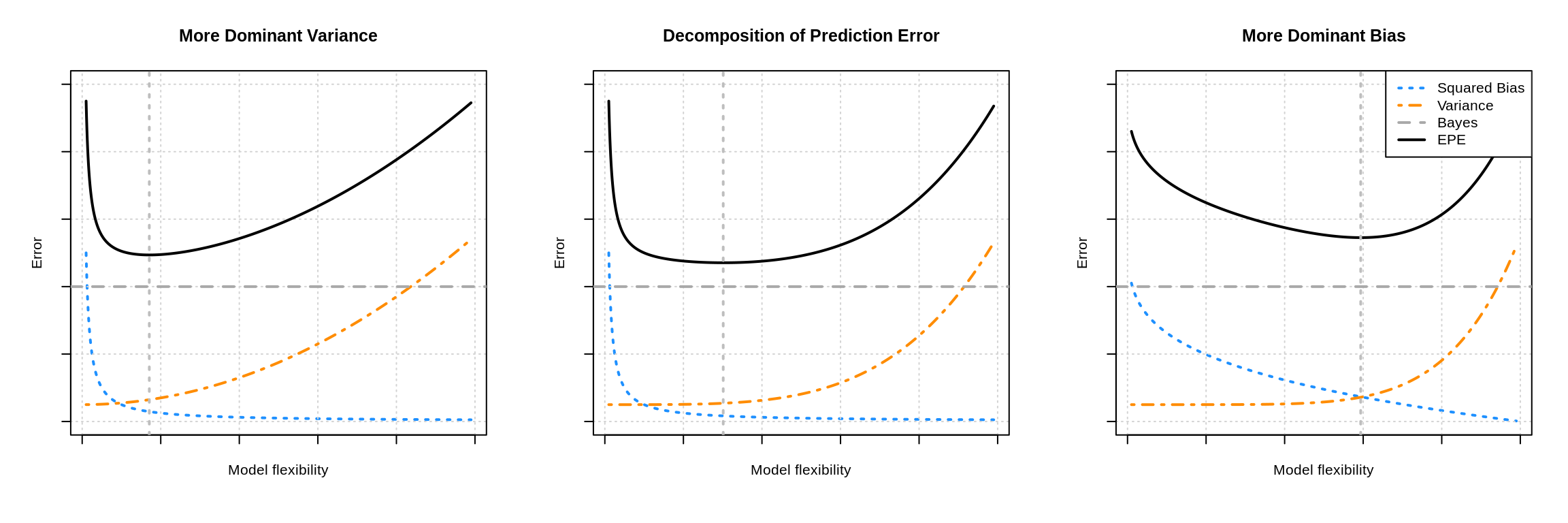

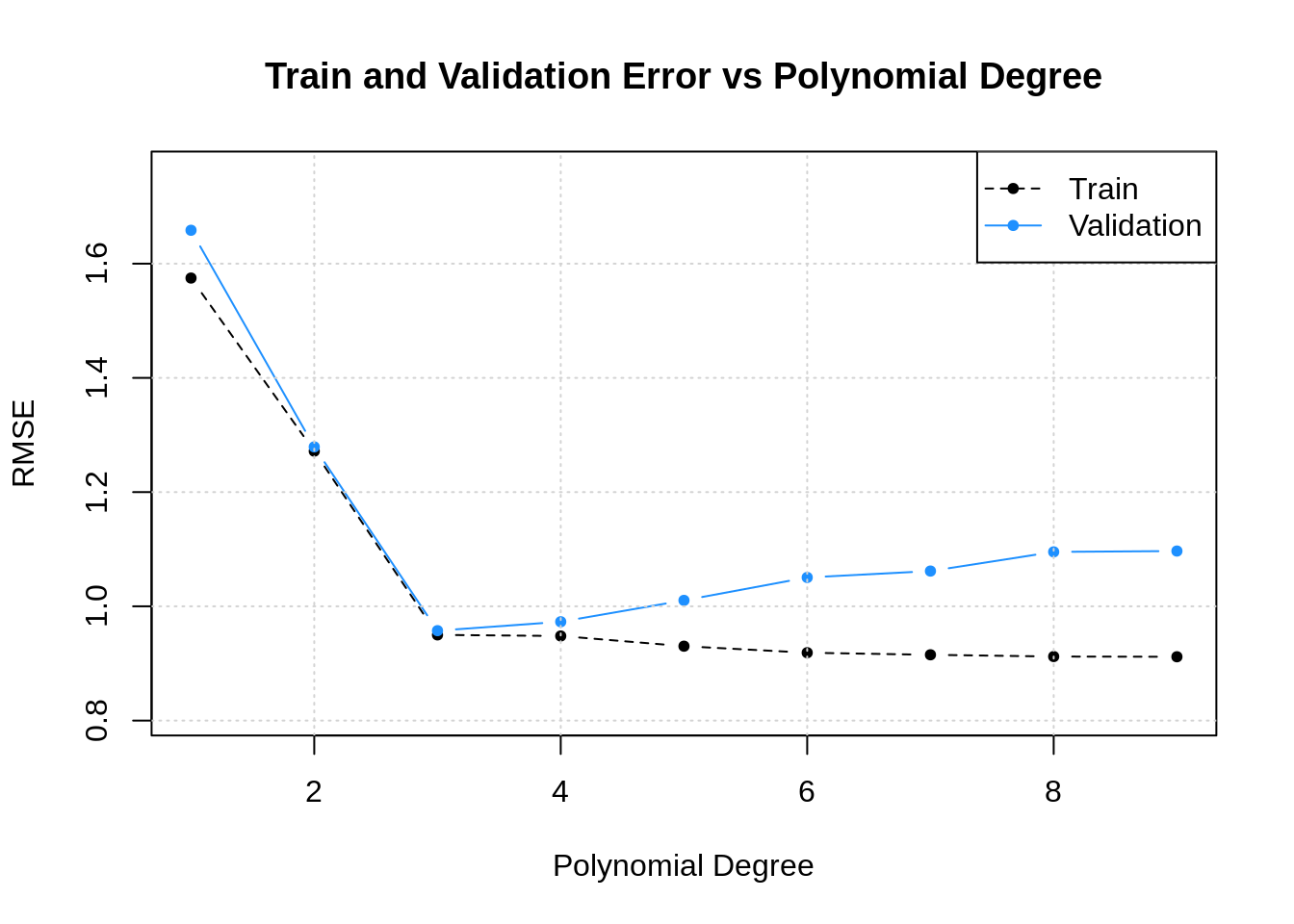

На графике ниже изображены компоненты ошибки и она сама в зависимости от глубины дерева:

По графику видно, что гипотеза о падении смещения и росте разброса при увеличении глубины подтверждается для рассматриваемого отрезка возможных значений глубины дерева. Правда, если нарисовать график до глубины 25, можно увидеть, что разброс становится равен дисперсии случайного шума. То есть деревья слишком большой глубины начинают идеально подстраиваться под зашумлённую обучающую выборку и теряют способность к обобщению:

Код для подсчёта разложения на смещение и разброс, а также код отрисовки картинок можно найти в данном ноутбуке.

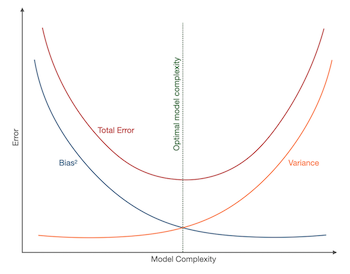

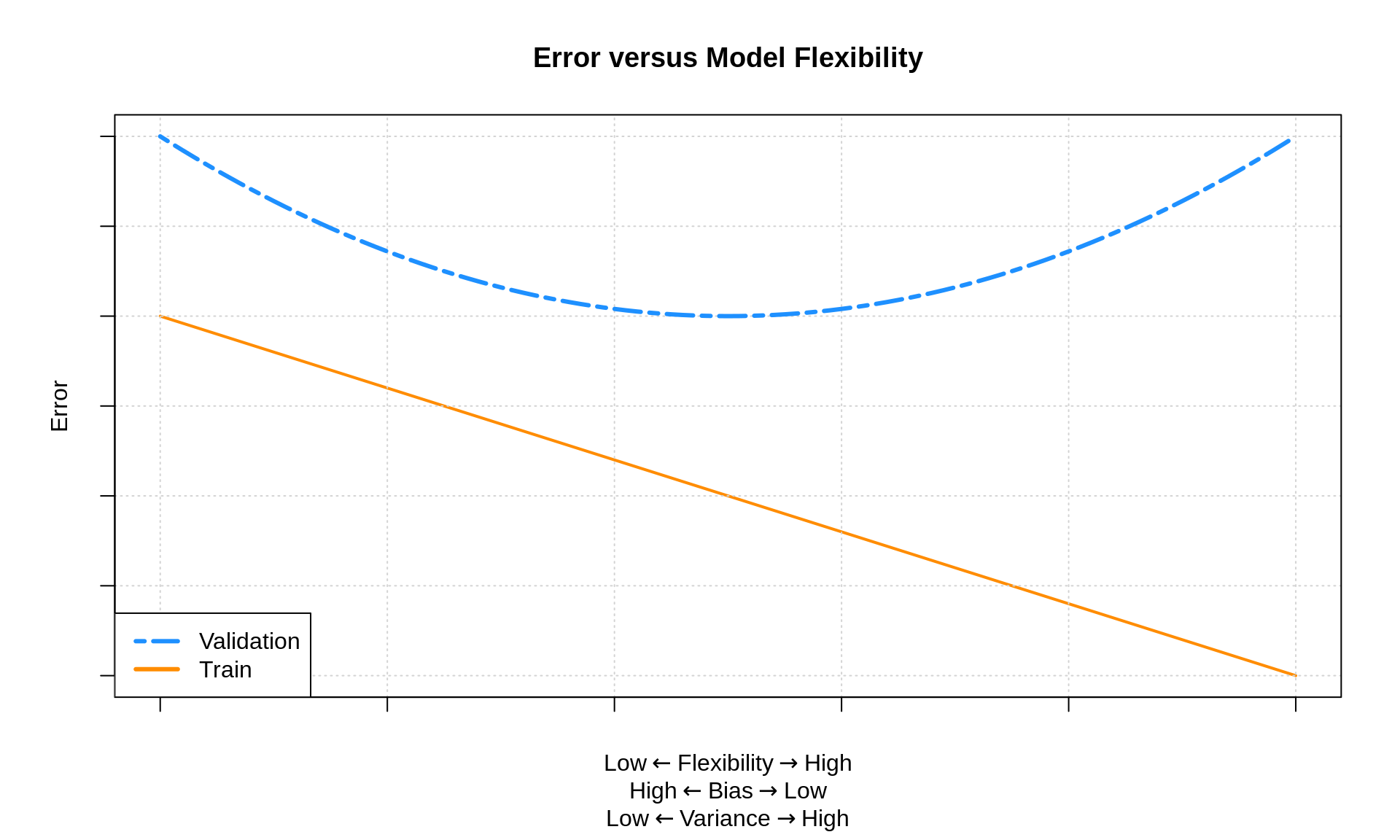

Bias-variance trade-off: в каких ситуациях он применим

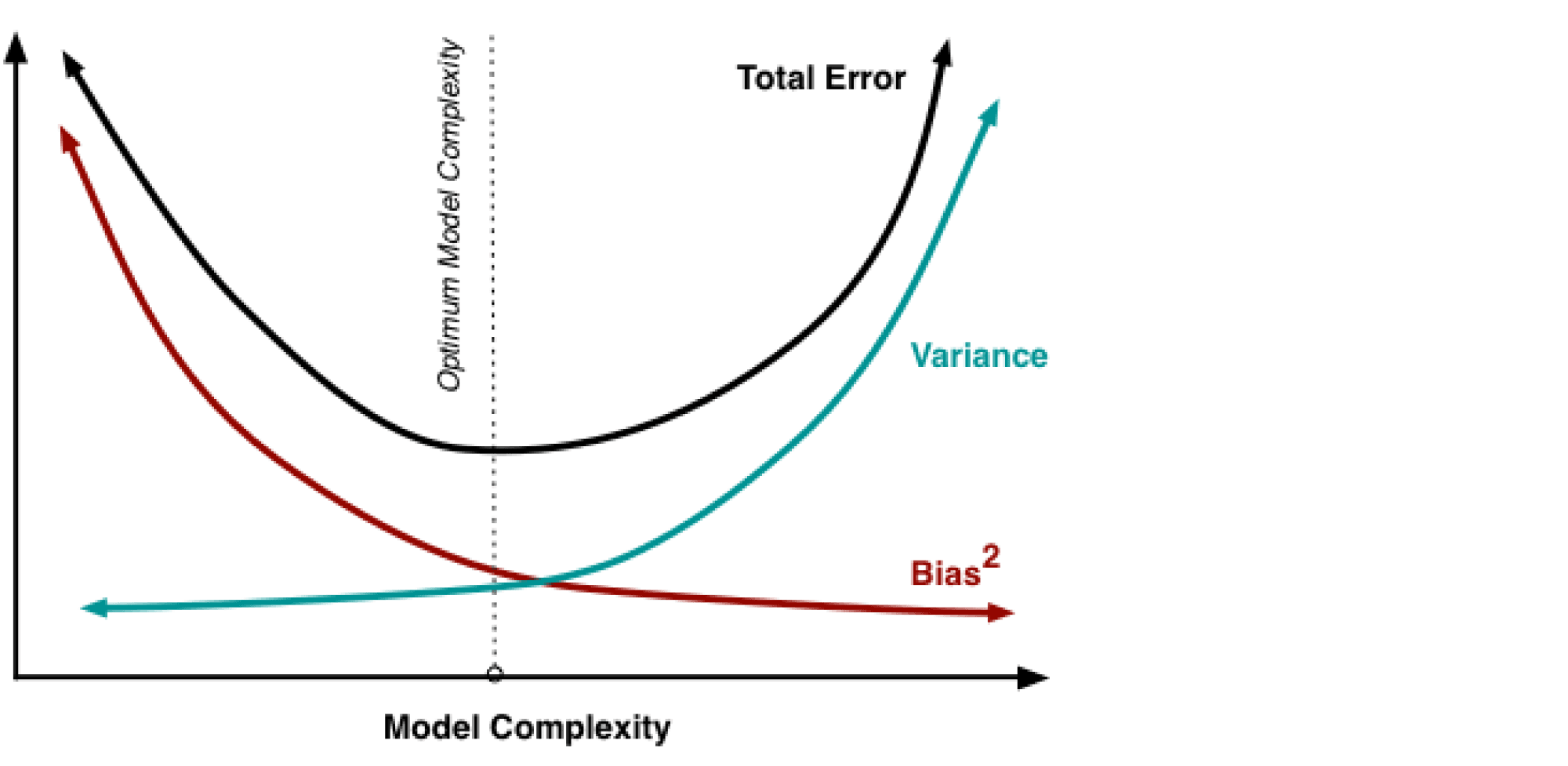

В книжках и различных интернет-ресурсах часто можно увидеть следующую картинку:

Она иллюстрирует утверждение, которое в литературе называется bias-variance trade-off: чем выше сложность обучаемой модели, тем меньше её смещение и тем больше разброс, и поэтому общая ошибка на тестовой выборке имеет вид $U$-образной кривой. С падением смещения модель всё лучше запоминает обучающую выборку, поэтому слишком сложная модель будет иметь нулевую ошибку на тренировочных данных и большую ошибку на тесте. Этот график призван показать, что существует оптимальная сложность модели, при которой соблюдается баланс между переобучением и недообучением и ошибка при этом минимальна.

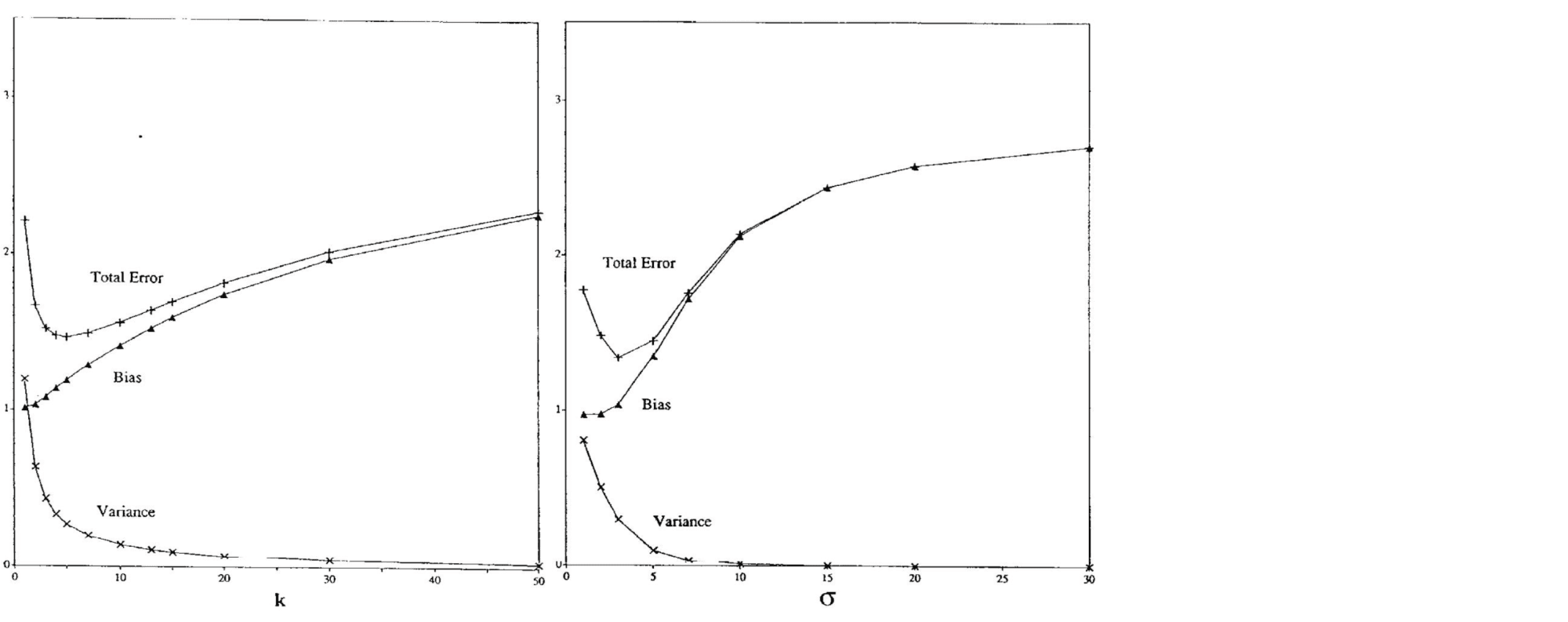

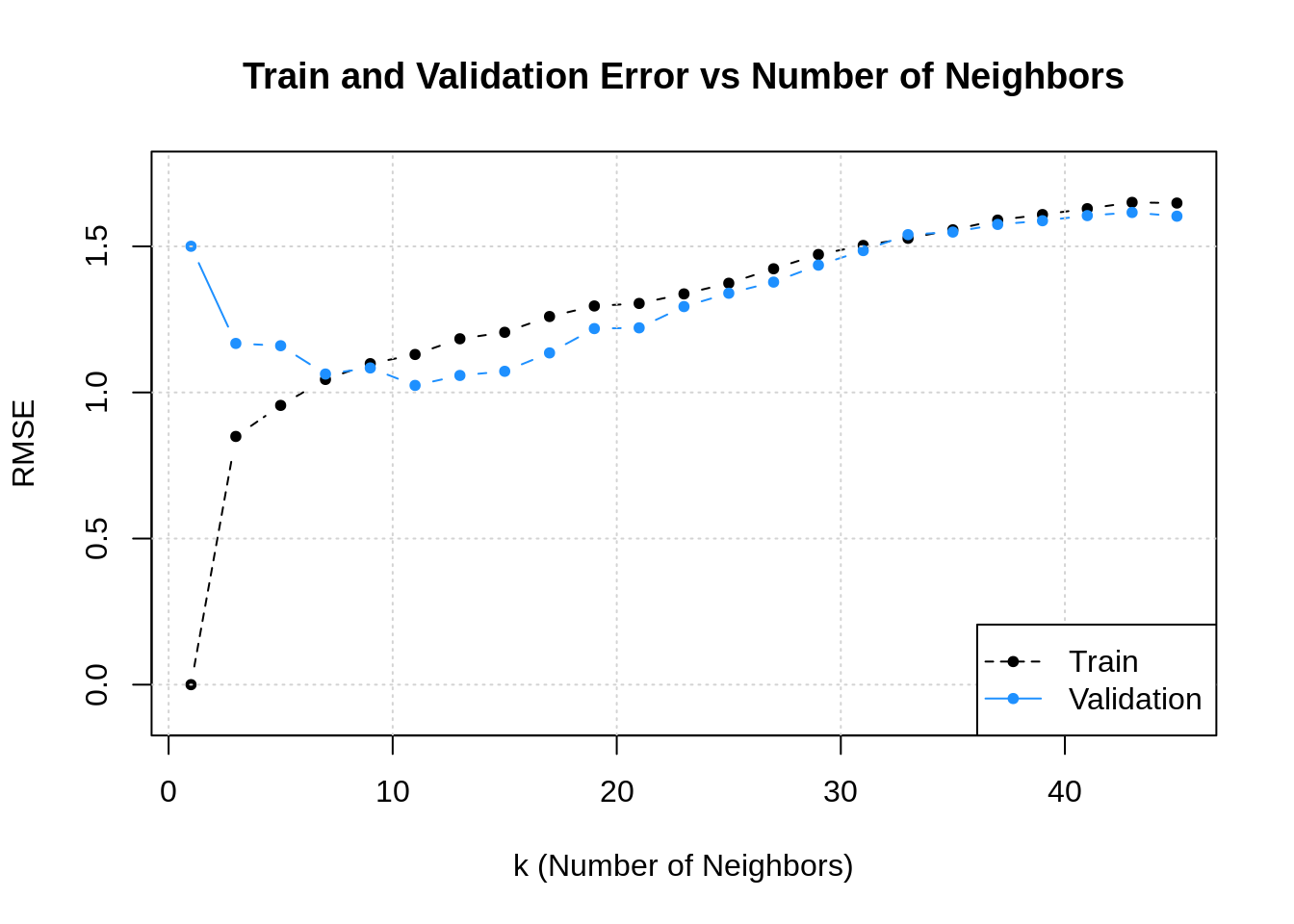

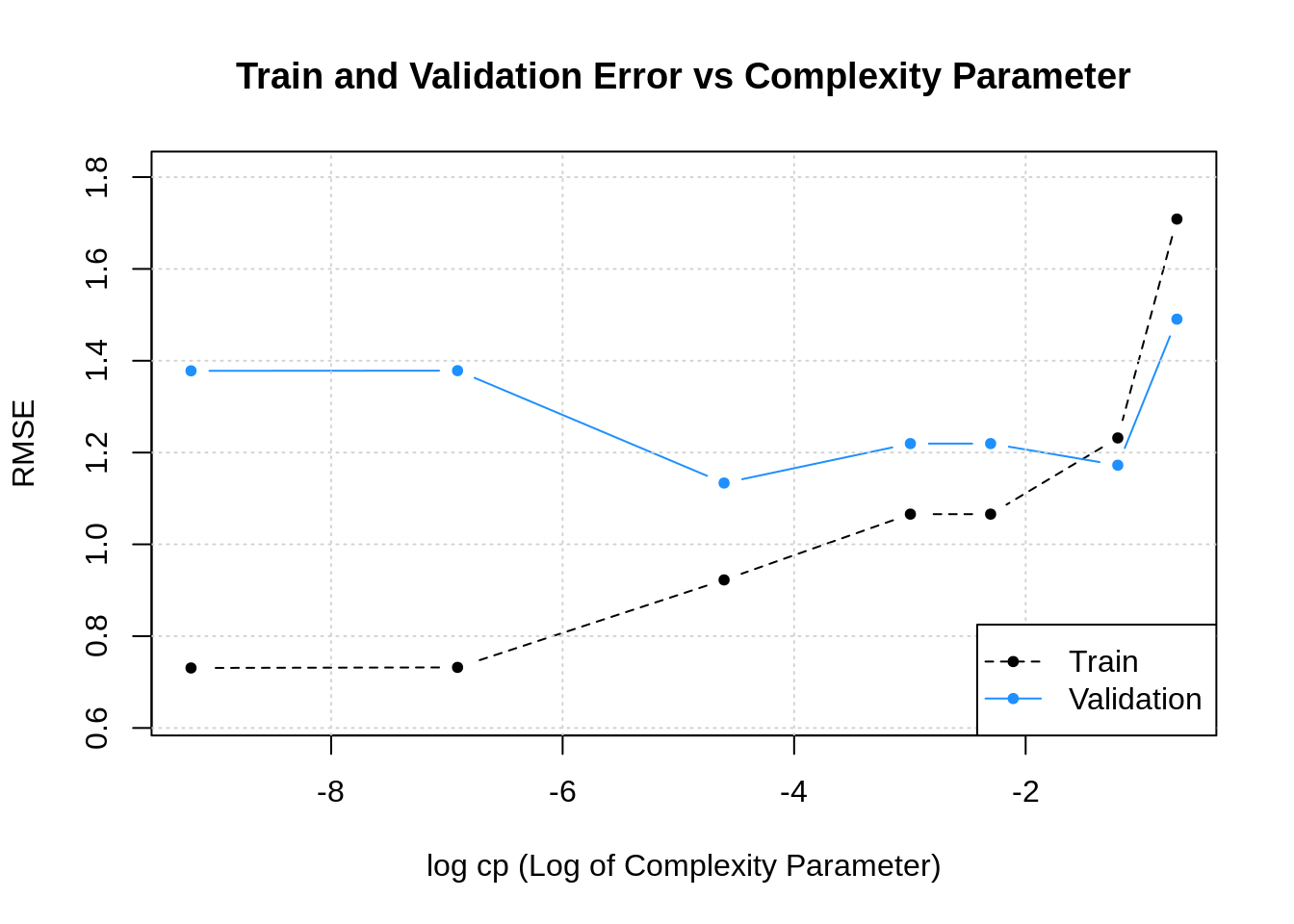

Существует достаточное количество подтверждений bias-variance trade-off для непараметрических моделей. Например, его можно наблюдать для метода $k$ ближайших соседей при росте $k$ и для ядерной регрессии при увеличении ширины окна $sigma$ (Geman et al., 1992):

Чем больше соседей учитывает $k$-NN, тем менее изменчивым становится его предсказание, и аналогично для ядерной регрессии, из-за чего сложность этих моделей в некотором смысле убывает с ростом $k$ и $sigma$. Поэтому традиционный график bias-variance trade-off здесь симметрично отражён по оси $x$.

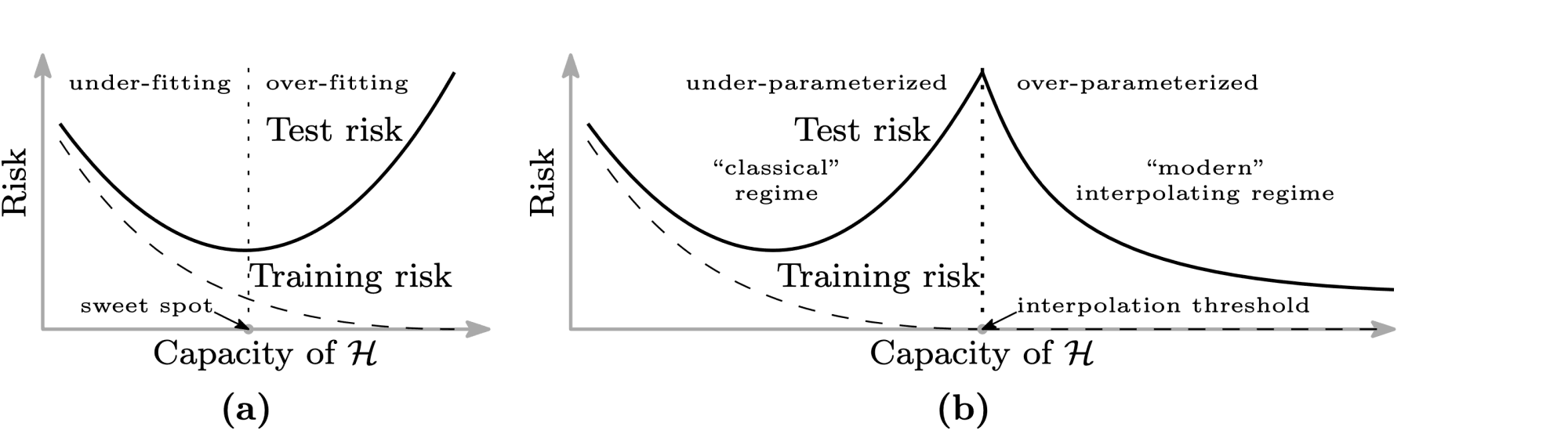

Однако, как показывают последние исследования, непременное возрастание разброса при убывании смещения не является абсолютно истинным предположением. Например, для нейронных сетей с ростом их сложности может происходить снижение и разброса, и смещения. Одна из наиболее известных статей на эту тему — статья Белкина и др. (Belkin et al., 2019), в которой, в частности, была предложена следующая иллюстрация:

Слева — классический bias-variance trade-off: убывающая часть кривой соответствует недообученной модели, а возрастающая — переобученной. А на правой картинке — график, называемый в статье double descent risk curve. На нём изображена эмпирически наблюдаемая авторами зависимость тестовой ошибки нейросетей от мощности множества входящих в них параметров ($mathcal H$). Этот график разделён на две части пунктирной линией, которую авторы называют interpolation threshold. Эта линия соответствует точке, в которой в нейросети стало достаточно параметров, чтобы без особых усилий почти идеально запомнить всю обучающую выборку. Часть до достижения interpolation threshold соответствует «классическому» режиму обучения моделей: когда у модели недостаточно параметров, чтобы сохранить обобщающую способность при почти полном запоминании обучающей выборки. А часть после достижения interpolation threshold соответствует «современным» возможностям обучения моделей с огромным числом параметров. На этой части графика ошибка монотонно убывает с ростом количества параметров у нейросети. Авторы также наблюдают похожее поведение и для «древесных» моделей: Random Forest и бустинга над решающими деревьями. Для них эффект проявляется при одновременном росте глубины и числа входящих в ансамбль деревьев.

В качестве вывода к этому разделу хочется сформулировать два основных тезиса:

- Bias-variance trade-off нельзя считать непреложной истиной, выполняющейся для всех моделей и обучающих данных.

- Разложение на смещение и разброс не влечёт немедленного выполнения bias-variance trade-off и остаётся верным и для случая, когда все компоненты ошибки (кроме неустранимого шума) убывают одновременно. Этот факт может оказаться незамеченным из-за того, что в учебных пособиях часто разговор о разложении дополняется иллюстрацией с $U$-образной кривой, благодаря чему в сознании эти два факта могут слиться в один.

Список литературы

- Блог-пост про bias-variance от Йоргоса Папахристудиса

- Блог-пост про bias-variance от Скотта Фортмана-Роу

- Статьи от Домингоса (2000) и Джеймса (2003) про обобщённые формы bias-variance decomposition

- Блог-пост от Брейди Нила про необходимость пересмотра традиционного взгляда на bias-variance trade-off

- Статья Гемана и др. (1992), в которой была впервые предложена концепция bias-variance trade-off

- Статья Белкина и др. (2019), в которой был предложен double-descent curve

In this post we’re going to take a deeper look at Mean Squared Error. Despite the relatively simple nature of this metric, it contains a surprising amount of insight into modeling. By breaking down Mean Squared Error into bias and variance, we’ll get a better sense of how models work and ways in which they can fail to perform. We’ll also find that baked into the definition of this metric is the idea that there is always some irreducible uncertainty that we can never quite get rid of.

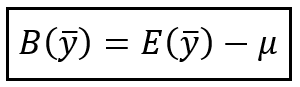

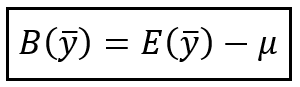

A quick explanation of bias

The primary mathematical tools we’ll be using are expectation, variance and bias. We’ve talked about expectation and variance quite a bit on this blog, but we need to introduce the idea of bias. Bias is used when we have an estimator, (hat{y}), that is used to predict another value (y). For example you might want to predict the rainfall, in inches, for your city in a given year. The bias is the average difference between that estimator and true value. Mathematically we just write bias as:

$$E[hat{y}-y]$$

Note that unlike variance, bias can be either positive or negative. If your rainfall predictions for your city had a bias of 0, it means that you’re just as likely to predict too much rain as to predict too little. If your bias was positive it means that you tend to predict more rain than actually occurs. Likewise, negative bias means that you underpredict. However, bias alone says little about how correct you are. Your forecasts could always be wildly wrong, but if you predict a drought during the rainiest year and predict floods during the driest then your bias can still be 0.

What is Modeling?

Before we dive too deeply into our problem, we want to be very clear about what we’re even trying to do whenever we model data. Even if you’re an expert in machine learning or scientific modeling, it’s always worth it to take a moment to make sure everything is clear. Whether we’re just making a random guess, building a deep neural network, or formulating a mathematical model of the physical universe, anytime we’re modeling we are attempting to understand some process in nature. Mathematically we describe this process as an unknown (and perhaps unknowable) function (f). This could be the process of curing meat, the complexity of a game of basketball, the rotation of the planets, etc.

Describing the world

To understand this process we have some information about how the world works and we know what outcome we expect from the process. The information about the world is our data and we use the variable (x) to denote this. It is easy to imagine (x) as just a single value, but it could be a vector of values, a matrix a values or something even more complex. And we know that this information about the world produces a result (y). Again we can think of (y) as a single value, but it can also be more complex values. This gives us our basic understanding of things, and we have the following equation:

$$y = f(x)$$

So (x) could the height a object is dropped, (f) could be the effects of gravity and the atmosphere on the object and (y) the time it takes to hit the ground. However, one very important thing is missing from this description of how things work. Clearly if you drop an object and time it, you will get slightly different observations, especially if you drop different objects. We consider this the «noise» in this process and we note that with a variable (epsilon) (lower case «epsilon» for those unfamiliar). Now we have this equation:

$$y = f(x) + epsilon$$

The (epsilon ) value is considered to be normally distributed with some standard deviation (sigma) and a mean of 0. We’ll note this as (mathcal{N}(mu=0,sigma)). This means that a negative and a positive impact from this noise are considered equally likely, and that small errors are much more likely than extreme ones.

Our model

This equation is just for how the world actually works given (x) that we know and (y) that we observe. However, we don’t really know how the world works so we have to make models. A model, in it’s most general form, is simply some other function (hat{f}) that takes (x) and should approximate (y). Mathematically we might say:

$$hat{f}(x) approx y$$

However it’s useful to expand out (y) into (f(x) + epsilon). This helps us see that (hat{f}) is not approximating (f), that is our natural process, directly.

$$hat{f}(x_i) approx f(x_i) + epsilon_i $$

Instead we have to remember that (hat{f}) models (y) which includes our noise. In practical modeling and machine learning this is an important thing to remember. If our noise term is very large, it can be difficult or even impossible to meaningfully capture the behavior of (f) itself.

Measuring the success of our model with Mean Squared Error.

Once we have implemented a model we need to check how well it preforms. In machine learning we typically split of our data into training and testing data, but you can also imagine a scientist building a model on past experiments or an economist making a model based on economic theories. In whichever case, we want to test our the model and measure it’s success. When we do this we have a new set of data, (x) with each values in that set being indexed by value so we label them all (x_i). To evaluate the model we need to compare this to some (y_i). The most common way to do this for real valued data is to use Mean Squared Error (MSE). MSE is exactly what it sounds like:

— We take the error (i.e. difference from (hat{f}(x_i)) and (y))

— Square this value (making negative in positive the same, and greater error gets more severe penalty)

— Then we take the average (mean) of these results.

Mathematically we express this as:

$$MSE = frac{1}{n}Sigma_{i=1}^n (y -hat{f}(x_i))^2 $$

Of course the mean of our squared error is also the same thing as the expectation of our squared error so we can go ahead and simplify this a bit:

$$MSE=E[(y-hat{f}(x))^2]$$

It’s worth noting that if our model simply predicted the mean of (y), (mu_y) for every answer, then our MSE is the same as the variance of (y), since one way we can define variance is as (E[(y-mu)^2])

Unpacking Mean Squared Error

Already MSE has some useful properties that are similar to the general properties we explored when we discussed why we square values for variance. However, even more interesting is that inside this relatively simple equation are hidden the calculations of bias and variance for our model! To find these we need to start by expanding our MSE a bit more. We’ll start by replacing (y) with (f(x) + epsilon):

$$MSE = E[(f(x)+ epsilon — hat{f}(x))^2]$$

Before moving on there is one rule of expectation we need to know so that we can manipulate this a bit better:

$$E[X+Y] = E[X] + E[Y]$$

That is, adding two random variables and computing their expectation is the same as computing the expectation of each random variable and then computing that. Of course, we can’t use this on our equation yet because all of the added terms are squared. To do anything more interesting we need to expand this out fully:

$$MSE = E[(f(x)+ epsilon — hat{f}(x))^2] =E[(f(x)+ epsilon — hat{f}(x))cdot (f(x)+ epsilon — hat{f}(x))] = ldots $$

$$E[-2f(x)hat{f}(x) + f(x)^2 + 2epsilon f(x) + hat{f}(x)^2 — 2epsilon hat{f}(x) + epsilon^2]$$

Now this looks like a mess, but let’s start pulling this apart in terms of expectations of each section were adding or subtracting.

At the end we have (epsilon^2). We know that this our noise which we already stated is normally distributed with a mean, (mu=0), and an unknown standard deviation of (sigma). Recall that (sigma^2 = text{Variance}). The definition of Variance is:

$$E[X^2] — E[X]^2$$

So the variance for our (epsilon) is:

$$Var(epsilon) = sigma^2 = E[epsilon^2] — E[epsilon]^2$$

But we can do a neat trick here! We just said that (epsilon ) is sampled from a Normal distribution with mean of 0, and the mean is the exact same thing as the expectation so:

$$E[epsilon]^2 = mu^2 = 0^2 = 0$$

And because of this we know that:

$$E[epsilon^2] = sigma^2 $$

We can use this to pull out the (epsilon^2) term from our MSE definition:

$$MSE = sigma^2 + E[-2f(x)hat{f}(x) + f(x)^2 + 2epsilon f(x) + hat{f}(x)^2 — 2epsilon hat{f}(x)]$$

Which still looks pretty gross! However, if we pull out all the remaining terms with (epsilon ) in them we can clean this up very easily:

$$MSE = sigma^2 + E[2epsilon f(x)- 2epsilon hat{f}(x)] + E[-2f(x)hat{f}(x) + f(x)^2 + hat{f}(x)^2]$$

Again, we know that the expected value of (epsilon ) is 0, so all the terms in (E[2epsilon f(x)- 2epsilon hat{f}(x)]) will end up a 0 in the long run! Now we’ve cleaned up quite a bit:

$$MSE = sigma^2 + E[-2f(x)hat{f}(x)+f(x)^2 + hat{f}(x)^2]$$

And here we see that one of the terms in our MSE reduced to (sigma^2), or the variance in the noise in our process.

A note on noise variance

It is important to stress here how important this insight is. The variance of the noise in our data is an irreducible part of our MSE. No matter how clever our model is, we can never reduce our MSE to being less than the variance related to the noise. It’s also worthwhile thinking about what «noise» is. In orthodox statistics we often think of noise as just some randomness added to the data, but as a Bayesian I can’t quite swallow this view. Randomness isn’t a statement about data, it’s a statement about our state of knowledge. A better interpretation is that noise represents the unknown in our view of the world. Part of it may be simply measurement error. Any equipment measuring data has limitations. But, and this is very important, it can also account for limitations in our (x) to fully capture all the information needed to adequately explain (y). If you are trying to predict the temperature in NYC from just the atmospheric CO2 levels, there’s still far to much unknown in your model.

Model variance and bias

So now that we’ve extracted out the noise variance, we still have a bit of work to do. Luckily we can simplify the inner term of the remaining expectation quite a bit:

$$-2f(x)hat{f}(x)+f(x)^2 + hat{f}(x)^2 = (f(x)-hat{f}(x))^2 $$

With that simplification we’re now left with:

$$MSE = sigma^2 + E[(f(x)-hat{f}(x))^2]$$

Now we need to do one more transformation that is really exciting! We just defined

$$Var(X) = E[X^2] — E[X]^2$$

Which means that:

$$Var(f(x)-hat{f}(x)) = E[(f(x)-hat{f}(x))^2] — E[f(x)-hat{f}(x)]^2$$

A simple reordering of these terms solves this for our (E[(f(x)-hat{f}(x))^2])):

$$E[(f(x)-hat{f}(x))^2]=Var(f(x)-hat{f}(x)) + E[f(x)-hat{f}(x)]^2$$

And now we get a much more interesting formulation of MSE:

$$MSE = sigma^2 + Var(f(x)-hat{f}(x)) + E[f(x) — hat{f}(x)]^2$$

And here we see two remarkable terms just pop out! The first, (Var(f(x)-hat{f}(x))) is, quite literally, the variance in our predictions from the true output of our process. Notice that even though we can’t differentiate (f(x)) and (epsilon), and our model cannot either, baked in to MSE is the true variance between the non-noisy process (f) and (hat{f}). The second term that pops out is (E[f(x) — hat{f}(x)]^2), this is just the bias squared. Remember that unlike variance, bias can be positive or negative (depending on how the model is biased), so we would need to square this value in order to make sure it’s always positive.

With MSE unpacked we can see that Mean Squared Error is quite literally:

$$text{Mean Squared Error}=text{Model Variance} + text{Model Bias}^2 + text{Irreducible Uncertainty}$$

Simulating Bias and Variance

It turns out that we actually need MSE is able to capture the details of bias, variance and the uncertainty in our model because *we cannot directly observe these properties ourselves*. In practice there is no way to know explicitly what (sigma^2) is for our (epsilon). This means that we cannot directly determine what the bias and variance are exactly for our model. Since (sigma^2) is constant, we do know that if we lower our MSE we must have lowered at least one of these other properties of our model.

However to understand these concepts better we can simulate a function (f) and apply our own noises sampled from a distribution with a known variance. Here is a bit of R code that will create our a (y) value from a function (f(x) = 3cdot x + 6) with (epsilon in mathcal{N}(mu=0,sigma=3) ):

We can visualize our data and see that we get what we would expect, points scattered around a straight line:

Because we know the true (f) and (sigma) we can experiment with different (hat{f}) models and see their bias and variance. Let’s start with a model that is the same as (f) except that it has a different y-intercept:

We can plot this out and see how it looks compared to our data.

As we can see, the blue line representing our model follows our data pretty closely but it systematically underestimates our values. We can go ahead and calculate the MSE for this function and, because we know what these values are, we can also calculate the bias and variance:

-

MSE: 13

-

bias: -2

-

variance: 0

So (hat{f}_1) has a negative bias because it under estimates the points more often than it over estimates them. But, compared to the true (f),it does so consistently, so therefore has 0 variance with (f). Given that we have a MSE of 13, a bias of -2 and 0 variance, we can also calculate that our (sigma^2) for our uncertainty is

$$sigma^2 = text{MSE} — (text{bias}^2 + text{variance})= 13 — (-2^2 + 0) = 9$$

Which is exactly what we would expect given that we set our (sigma) parameter to 3 when sampling our ‘e’ values.

We can come up with another model, (hat{f}_2), which simply guesses the mean of our data, which is 21.

With this simple model we get just a straight line cutting our data in half:

When we look at the MSE, bias and variance for this model we get very different results from last time:

-

MSE: 84

-

bias: 0

-

variance: 75

Given that our new model predicts the mean it shouldn’t be surprising to see that this model is has no bias since it underestimates (f(x)) just as much as it overestimates. The tradeoff here is that the variance in how the predictions differ between (hat{f_2}) and (f) is very high. And of course when we subtract out the variance from our MSE we still have the same (sigma^2=9).

For our final model,(hat{f}_3), we’ll just use R’s built-in `lm` function to build a linear model:

lm(y ~ x)When we plot this out we can see that, by minimizing the MSE, the linear model was able to recover our original (f):

Since you true function (f) is a linear model it’s not surprising that (hat{f_3}) is able to learn it. By this point you should be able to guess the results for this model:

-

MSE: 9

-

bias: 0

-

variance: 0

The MSE remains 9 because, even when we’re cheating and know the true model, we can’t learn better than the uncertainty in our data. So (sigma^2) stands as the limit for how good we can get our model to perform.

The Bias-Variance trade off

As we can see, if we want to improve our model we have to decrease either bias or variance. In practice bias and variance come up when we see how our models perform on a separate set of data than what they were trained on. In the case of testing your model out on different data than it was trained on, bias and variance can be used to diagnosis different symptoms of issues that could be wrong with your model. High bias on test data is typically caused because your model failed to find the (f) in the data and so it systematically under or over predicts. This is called «underfitting» in machine learning because you have failed to fit your model to the data.

High variance when working with test data indicates a different problem. High variance means that the predictions your model makes very greatly with what the known results should be in the test data. This high variance indicates your model has learned the training data so well that it is started to mistake the (f(x) + epsilon) for just the true (f(x)) you wanted to learn. This problem is called «overfitting», because you are fitting your model to closely to the data.

Conclusion

When we unpack and really understand Mean Squared Error we see that it is much more than just a useful error metric, but also a guide for reasoning about models. MSE allows us, in a single measurement, to capture the ideas of bias and variance in our models, as well as showing that there is some uncertainty in our models that we cannot get rid. The real magic of MSE is that it provides indirect mathematical insight about the behavior of the true process, (f), which we are never able to truly observe.

If you enjoyed this post please subscribe to keep up to date and follow @willkurt!

If you enjoyed this writing and also like programming languages, you might enjoy my book Get Programming With Haskell from Manning. Expected in July 2019 from No Starch Press is my next book Bayesian Statistics the Fun Way

Contents

- Bias and Variance of an Estimator

- Maximum Likelihood Estimation

- Example: Variance Estimator of Gaussian Data

- Example: Linear Regression

- Main Results

- Comparing linear regression and ridge regression estimators

- Bias and Variance of a Predictor (or Model)

- Setup

- Decomposition

- Discussion

- Example: Linear Regression and Ridge Regression

- Main Results

- Decomposing Bias for Linear Models

- Further areas of investigation

- Appendix

[newcommand{Noise}{mathcal{E}}

newcommand{fh}{hat{f}}

newcommand{fhR}{hat{f}^text{Ridge}}

newcommand{bff}{mathbf{f}}

newcommand{bfh}{mathbf{h}}

newcommand{bfX}{mathbf{X}}

newcommand{bfy}{mathbf{y}}

newcommand{bfZ}{mathbf{Z}}

newcommand{wh}{hat{w}}

newcommand{whR}{hat{w}^text{Ridge}}

newcommand{Th}{hat{T}}

DeclareMathOperator*{argmax}{arg,max}

DeclareMathOperator*{argmin}{arg,min}

DeclareMathOperator{Bias}{Bias}

DeclareMathOperator{var}{Var}

DeclareMathOperator{Cov}{Cov}

DeclareMathOperator{tr}{tr}]

Mean squared error (MSE) is defined in two different contexts.

- The MSE of an estimator quantifies the error of a sample statistic relative to the true population statistic.

- The MSE of a regression predictor (or model) quantifies the generalization error of that model trained on a sample of the true data distribution.

This post discusses the bias-variance decomposition for MSE in both of these contexts. To start, we prove a generic identity.

Theorem 1: For any random vector (X in R^p) and any constant vector (c in R^p),

[E[|X-c|_2^2] = trCov[X] + |E[X]-c|_2^2.]

Proof

All of the expectations and the variance are taken with respect to (P(X)). Let (mu := E[X]).

[begin{aligned}

tr Cov(X) + |mu-c|_2^2

&= sum_{i=1}^p var(X_i) + |mu-c|_2^2 \

&= sum_{i=1}^p Eleft[ (X_i — mu_i)^2 right] + |mu-c|_2^2 \

&= Eleft[sum_{i=1}^p (X_i — mu_i)^2 right] + |mu-c|_2^2 \

&= E[(X-mu)^T (X-mu)] + (mu-c)^T (mu-c) \

&= E[X^T X] underbrace{- 2 E[X]^T mu + mu^T mu + mu^T mu}_{=0} — 2 mu^T c + c^T c \

&= E[X^T X — 2 X^T c + c^T c] \

&= E[|X-c|_2^2]

end{aligned}]

We write the special case where (X) and (c) are scalars instead of vectors as a corollary.

Corollary 1: For any random variable (X in R) and any constant (c in R),

[E[(X-c)^2] = var[X] + (E[X]-c)^2.]

Bias and Variance of an Estimator

Consider a probability distribution (P_T(X)) parameterized by (T), and let (D = { x^{(i)} }_{i=1}^N) be a set of samples drawn i.i.d. from (P_T(X)). Let (Th = Th(D)) be an estimator of (T) that has variability due to the randomness of the data from which it is computed.

For example, (T) could be the mean of (P_T(X)). The sample mean (Th = frac{1}{N} sum_{i=1}^N x^{(i)}) is an estimator of (T).

For the rest of this section, we will use the abbreviation (E_T equiv E_{D sim P_T(X)}) and similarly for variance.

The mean squared error of (Th) decomposes nicely into the squared-bias and variance of (Th) by a straightforward application of Theorem 1 where (T) is constant.

[begin{aligned}

MSE(Th)

&= E_Tleft[ |Th(D) — T|_2^2 right] \

&= | E_T[Th] — T |_2^2 + trCov_T[Th] \

&= |Bias[Th]|_2^2 + trCov[Th]

end{aligned}]

Terminology for an estimator (Th):

- The standard error of a scalar estimator (Th) is (SE(Th) = sqrt{var[Th]}).

- If (Th) is a vector, then the standard error of its (i)-th entry is (SE(Th_i) = sqrt{var[Th_i]}).

- (Th) is unbiased if (Bias[Th] = 0).

- (Th) is efficient if (Cov[Th]) equals the Cramer-Rao lower bound (I(T)^{-1} / N) where (I(T)) is the Fisher Information matrix of (T).

- (Th) is asymptotically efficient if it achieves this bound asymptotically as the number of samples (N to infty).

- (Th) is consistent if (Th to T) in probability as (N to infty).

Sources

- Fan, Zhou. Lecture Notes from STATS 200 course, Stanford University. Autumn 2016. link.

- “What is the difference between a consistent estimator and an unbiased estimator?” StackExchange. link.

Maximum Likelihood Estimation

It can be shown that given data (D) sampled i.i.d. from (P_T(X)), the maximum likelihood estimator

[Th_{MLE} = argmax_Th P_Th(D) = argmax_Th prod_{i=1}^N P_Th(x^{(i)})]

is consistent and asymptotically efficient. See Rice 8.5.2 and 8.7.

Sources

- Rice, John A. Mathematical statistics and data analysis. 3rd ed., Cengage Learning, 2006.

- General statistics reference. Good discussion on maximum likelihood estimation.

Example: Variance Estimator of Gaussian Data

Consider data (D) sampled i.i.d. from a univariate Gaussian distribution (mathcal{N}(mu, sigma^2)). Letting (bar{x} = frac{1}{N} sum_{i=1}^N x^{(i)}) be the sample mean, the variance of the sampled data is

[S_N^2 = frac{1}{N} sum_{i=1}^N (x^{(i)} — bar{x})^2.]

The estimator (S_N^2) is both the method of moments estimator (Fan, Lecture 12) and maximum likelihood estimator (Tobago) of the population variance (sigma^2). Nonetheless, even though it is consistent and asymptotically efficient, it is biased (proof on Wikipedia).

[E[S_N^2] = frac{N-1}{N} sigma^2 neq sigma^2]

Correcting for the bias yields the usual unbiased sample variance estimator.

[S_{N-1}^2

= frac{N}{N-1} S_N^2

= frac{1}{N-1} sum_{i=1}^N (x^{(i)} — bar{x})^2]

Interestingly, although (S_{N-1}^2) is an unbiased estimator of the population variance (sigma^2), its square-root (S_{N-1}) is a biased estimator of the population standard deviation (sigma). This is because the square root is a strictly concave function, so by Jensen’s inequality,

[E[S_{N-1}] = Eleft[sqrt{S_{N-1}^2}right]

< sqrt{E[S_{N-1}^2]} = sqrt{sigma^2} = sigma.]

The variances of the two estimators (S_N^2) and (S_{N-1}^2) are also different. The distribution of (frac{N-1}{sigma^2} S_{N-1}^2) is (chi_{N-1}^2) (chi-square) with (N-1) degrees of freedom (Rice 6.3), so

[var[S_{N-1}^2]

= varleft[ frac{sigma^2}{N-1} chi_{N-1}^2 right]

= frac{sigma^4}{(N-1)^2} var[ chi_{N-1}^2 ]

= frac{2 sigma^4}{N-1}.]

Instead of directly calculating the variance of (S_N^2), let’s calculate the bias and variance of the family of estimators parameterized by (k).

[begin{aligned}

S_k^2

&= frac{1}{k} sum_{i=1}^N (x^{(i)} — bar{x})^2

= frac{N-1}{k} S_{N-1}^2 \

E[S_k^2]

&= frac{N-1}{k} E[S_{N-1}^2]

= frac{N-1}{k} sigma^2 \

Bias[S_k^2]

&= E[S_k^2] — sigma^2

= frac{N-1-k}{k} sigma^2 \

var[S_k^2]

&= left(frac{N-1}{k}right)^2 var[S_{N-1}^2]

= frac{2 sigma^4 (N-1)}{k^2} \

MSE[S_k^2]

&= Bias[S_k^2]^2 + var[S_k^2]

= frac{(N-1-k)^2 + 2(N-1)}{k^2} sigma^4

end{aligned}]

Although (S_N^2) is biased whereas (S_{N-1}^2) is not, (S_N^2) actually has lower mean squared error for any sample size (N > 2), as shown by the ratio of their MSEs.

[frac{MSE(S_N^2)}{MSE(S_{N-1}^2)}

= frac{sigma^4(2N — 1) / N^2}{2 sigma^4 / (N-1)}

= frac{(2N-1)(N-1)}{2N^2}]

In fact, within the family of estimators of the form (S_k^2), the estimator with the lowest mean squared error is actually (k = N+1). In most real-world scenarios, though, any of (S_{N-1}), (S_N), and (S_{N+1}) works well enough for large (N).

Sources

- Giles, David. “Variance Estimators That Minimize MSE.” Econometrics Beat: Dave Giles’ Blog, 21 May 2013. link.

- Taboga, Marco. “Normal Distribution — Maximum Likelihood Estimation.” StatLect. link.

- “Variance.” Wikipedia, 18 July 2019. link.

Example: Linear Regression

In the setting of parameter estimation for linear regression, the task is to estimate the coefficients (w in R^p) that relate a scalar output (Y) to a vector of regressors (X in R^p). It is typically assumed that (Y) and (X) are random variables related by (Y = w^T X + epsilon) for some noise (epsilon in R). However, we will take the unusual step of not necessarily assuming that the relationship between (X) and (Y) is truly linear, but instead that their relationship is given by (Y = f(X) + epsilon) for some arbitrary function (f: R^p to R).

Suppose that the noise (epsilon sim Noise) is independent of (X) and that (Noise) is some arbitrary distribution with mean 0 and constant variance (sigma^2). One example of such a noise distribution is (epsilon sim mathcal{N}(0, sigma^2)), although our following analysis does not require a Gaussian distribution.

Thus, for a given (x),

[begin{aligned}

E_{y sim P(Y|X=x)}[y] &= f(x) \

var_{y sim P(Y|X=x)}[y] &= var_{epsilon sim Noise}[epsilon] = sigma^2

end{aligned}]

Note that if (sigma^2 = 0), then (Y) is deterministically related to (X), i.e. (Y = f(X)).

We aim to estimate a linear regression function (fh) that approximates the true (f) over some given training set of (N) labeled examples (D = left{ left(x^{(i)}, y^{(i)} right) right}_{i=1}^N) sampled from an underlying joint distribution (P(X,Y)). In matrix notation, we can write (D = (bfX, bfy)) where (bfX in R^{N times p}) and (bfy in R^N) have the training examples arranged in rows.

We can factor (P(X,Y) = P(Y mid X) P(X)). We have assumed that (P(Y mid X)) has mean (f(X)) and variance (sigma^2). However, we do not assume anything about the marginal distribution (P(X)) of the inputs, which is arbitrary depending on the dataset.

For the rest of this post, we use the following abbreviations for the subscripts of expectations and variances:

[begin{aligned}

epsilon &equiv epsilon sim Noise \

y mid x &equiv y sim p(Y mid X=x) \

bfy mid bfX &equiv bfy sim p(Y mid X=bfX) \

x &equiv x sim P(X) \

D &equiv D sim P(X, Y)

end{aligned}]

and the following shorthand notations:

[begin{aligned}

bfZ_bfX &= (bfX^T bfX)^{-1} bfX^T in R^{p times N} \

bfZ_{bfX, alpha} &= (bfX^T bfX + alpha I_d)^{-1} bfX^T in R^{p times N} \

wh_{bfX, f} &= bfZ_bfX f(bfX) = (bfX^T bfX)^{-1} bfX^T f(bfX) in R^p \

wh_{bfX, f, alpha} &= bfZ_{bfX, alpha} f(bfX) = (bfX^T bfX + alpha I_d)^{-1} bfX^T f(bfX) in R^p \

bfh_bfX(x) &= bfZ_bfX^T x = bfX (bfX^T bfX)^{-1} x in R^N \

bfh_{bfX, alpha}(x) &= bfZ_{bfX, alpha}^T x = bfX (bfX^T bfX + alpha I_d)^{-1} x in R^N.

end{aligned}]

Main Results

The ordinary least-squares (OLS) and ridge regression estimators for (w) are

[begin{aligned}

wh

&= argmin_w | f(bfX) — bfX w |^2

= (bfX^T bfX)^{-1} bfX^T bfy \

whR

&= argmin_w | f(bfX) — bfX w |^2 + alpha |w|_2^2

= (bfX^T bfX + alpha I_d)^{-1} bfX^T bfy.

end{aligned}]

Their bias and variance properties are summarized in the table below. Note that in the case of arbitrary (f), the bias of an estimator is technically undefined, since there is no “true” value to compare it to. (See highlighted cells in the table.) Instead, we compare our estimators to the parameters (w_star) of the best-fitting linear approximation to the true (f). When (f) is truly linear, i.e. (f(x) = w^T x), then (w_star = w). The derivation for (w_star) can be found here.

[begin{aligned}

w_star

&= argmin_w E_xleft[ (f(x) — w^T x)^2 right] \

&= E_x[x x^T]^{-1} E_x[x f(x)]. \

end{aligned}]

We separately consider 2 cases:

- The training inputs (bfX) are fixed, and the training targets are sampled from the conditional distribution (bfy sim P(Y mid X=bfX)).

- Both the training inputs and targets are sampled jointly ((bfX, bfy) sim P(X, Y)).

We also show that the variance of the ridge regression estimator is strictly less than the variance of the linear regression estimator when (bfX) are considered fixed. Furthermore, there always exists some choice of (alpha) such that the mean squared error of (whR) is less than the mean squared error of (wh).

| arbitrary (f(X)) | linear (f(X)) | ||||

|---|---|---|---|---|---|

| fixed (bfX) | (E_D) | fixed (bfX) | (E_D) | ||

| OLS | Bias | 0 | (E_bfX[ wh_{bfX, f} ] — w_star) | 0 | 0 |

| Variance | (sigma^2 (bfX^T bfX)^{-1}) | (Cov_bfX[wh_{bfX, f}] + sigma^2 E_bfXleft[(bfX^T bfX)^{-1}right]) | (sigma^2 (bfX^T bfX)^{-1}) | (sigma^2 E_bfXleft[(bfX^T bfX)^{-1}right]) | |

| Ridge Regression | Bias | (bfZ_{bfX, alpha} bfX w_star — w_star) | (E_bfXleft[ bfZ_{bfX, alpha} bfX right] w_star — w_star) | (wh_{bfX, f, alpha} — w) | (E_bfXleft[ wh_{bfX, f, alpha} right] — w) |

| Variance | (sigma^2 bfZ_{bfX, alpha} bfZ_{bfX, alpha}^T) | (Cov_bfX[ wh_{bfX, f, alpha} ] + sigma^2 E_{bfX, epsilon}[bfZ_{bfX, alpha} bfZ_{bfX, alpha}^T]) | (sigma^2 bfZ_{bfX, alpha} bfZ_{bfX, alpha}^T) | (Cov_bfX[ wh_{bfX, f, alpha} ] + sigma^2 E_{bfX, epsilon}[bfZ_{bfX, alpha} bfZ_{bfX, alpha}^T]) |

Linear Regression Estimator for arbitrary (f)

Details

First, we consider the case where the training inputs (bfX) are fixed. In this case, the estimator (wh) is unbiased relative to (w_star).

[begin{aligned}

E_{bfy|bfX}[wh]

&= E_{bfy|bfX}left[ (bfX^T bfX)^{-1} bfX^T bfy right] \

&= (bfX^T bfX)^{-1} bfX^T E_{bfy|bfX}[bfy] \

&= (bfX^T bfX)^{-1} bfX^T f(bfX)

= wh_{bfX, f} \

&= n (bfX^T bfX)^{-1} cdot frac{1}{n} bfX^T f(bfX) \

&= left( frac{1}{n} sum_{i=1}^N x^{(i)} x^{(i)T} right)^{-1} frac{1}{n} sum_{i=1}^N x^{(i)} f(x^{(i)}) \

&= E_x[x x^T]^{-1} E_x[x f(x)]

= w_star

\

Cov[wh mid bfX]

&= Cov_{bfy | bfX} [ wh ] \

&= Cov_{bfy | bfX} left[ (bfX^T bfX)^{-1} bfX^T bfy right] \

&= bfZ Cov_{bfy | bfX}[bfy] bfZ^T \

&= bfZ (sigma^2 I_n) bfZ^T \

&= sigma^2 bfZ bfZ^T \

&= sigma^2 (bfX^T bfX)^{-1}

end{aligned}]

However, if the training inputs (bfX) are sampled randomly, then the estimator is no longer unbiased, and the variance term also becomes dependent on (f).

[begin{aligned}

E_D[wh]

&= E_bfX left[ E_{bfy|bfX}[ wh ] right]

= E_bfX[ wh_{bfX, f} ]

\

Bias[wh]

&= E_bfX[ wh_{bfX, f} ] — w_star

end{aligned}]

We prove by counterexample that (E_bfX[ wh_{bfX, f} ] neq w_star). Suppose (X sim mathsf{Uniform}[0, 1]) is a scalar random variable, let (f(x) = x^2), and consider a training set of size 2: (D = {a, b} sim P(X)). We evaluate the integral by WolframAlpha. Otherwise, we can also compute the integral manually by splitting the fraction and doing a (u)-substitution with (u = a^2).

[begin{aligned}

w_star

&= E_x[x x^T]^{-1} E_x[x f(x)] \

&= E_x[x^2]^{-1} E_x[x^3] \

&= (1/3)^{-1} (1/4) = 3/4

\

E_D[wh]

&= E_bfXleft[ (bfX^T bfX)^{-1} bfX^T f(bfX) right] \

&= E_{a, b sim P(X)} left[ (a^2 + b^2)^{-1} (a^3 + b^3) right] \

&= int_0^1 int_0^1 frac{a^3 + b^3}{a^2 + b^2} da db \

&= frac{1}{6} left( 2 + pi — ln 4 right)

approx 0.63

end{aligned}]

The derivation for the variance of (wh) relies heavily on the linearity of expectation for matrices (see Appendix).

[begin{aligned}

Cov[wh]

&= Cov_D[ wh ] \

&= Cov_{bfX, epsilon} [ (bfX^T bfX)^{-1} bfX^T (f(bfX) + epsilon) ] \

&= Cov_{bfX, epsilon} [ wh_{bfX, f} + bfZ_bfX epsilon ] \

&= E_{bfX, epsilon} [ (wh_{bfX, f} + bfZ_bfX epsilon) (wh_{bfX, f} + bfZ_bfX epsilon)^T ] — E_{bfX, epsilon}[ wh_{bfX, f} + bfZ_bfX epsilon ] E_{bfX, epsilon}[ wh_{bfX, f} + bfZ_bfX epsilon ]^T \

&= E_{bfX, epsilon} left[ wh_{bfX, f} wh_{bfX, f}^T + wh_{bfX, f} (bfZ_bfX epsilon)^T + bfZ_bfX epsilon wh_{bfX, f}^T + bfZ_bfX epsilon (bfZ_bfX epsilon)^T right] — E_bfX[wh_{bfX, f}] E_bfX[wh_{bfX, f}]^T \

&= E_bfX[ wh_{bfX, f} wh_{bfX, f}^T ] + 0 + 0 + E_{bfX, epsilon}[bfZ_bfX epsilon epsilon^T bfZ_bfX^T] — E_bfX[wh_{bfX, f}] E_bfX[wh_{bfX, f}]^T \

&= Cov_bfX[wh_{bfX, f}] + E_bfXleft[bfZ_bfX underbrace{E_epsilon[epsilon epsilon^T]}_{=sigma^2 I_N} bfZ_bfX^Tright] \

&= Cov_bfX[wh_{bfX, f}] + sigma^2 E_bfXleft[(bfX^T bfX)^{-1}right]

end{aligned}]

Linear Regression Estimator for linear (f)

In this setting, we assume that (f(x) = w^T x) for some true (w). As a special case, if the noise is Gaussian distributed (epsilon sim mathcal{N}(0, sigma^2)), then (wh) is the maximum likelihood estimator (MLE) for (w), so it is consistent and asymptotically efficient.

Details

If (bfX) is fixed, then the least-squares estimate is unbiased.

[begin{aligned}

Bias[wh mid bfX]

&= E_{bfy mid bfX}[wh] — w \

&= wh_{bfX, f} — w \

&= (bfX^T bfX)^{-1} bfX^T bfX w — w \

&= 0.

end{aligned}]

Therefore, the expectation of the bias over the distribution of (bfX) is also 0.

If (bfX) is fixed, then variance of the least-squares estimate is the same for linear or nonlinear (f), since it does not depend on (f).

However, when the training inputs (bfX) are sampled randomly, the variance does depend on (f). Subsitituting (f(bfX) = bfX w) into the variance expression derived for arbitrary (f) yields

[begin{aligned}

Cov_bfX[wh_{bfX, f}]

&= Cov_bfX[(bfX^T bfX)^{-1} bfX^T f(bfX)] \

&= Cov_bfX[(bfX^T bfX)^{-1} bfX^T (bfX w)] \

&= Cov_bfX[w] = 0

end{aligned}]

so (Cov[wh] = sigma^2 E_bfXleft[ (bfX^T bfX)^{-1} right]).

Ridge Regression Estimator

The ridge regression estimator (whR) is a linear function of the least-squares estimator (wh).

[begin{aligned}

whR

&= (bfX^T bfX + alpha I_d)^{-1} bfX^T bfy \

&= (bfX^T bfX + alpha I_d)^{-1} underbrace{(bfX^T bfX) (bfX^T bfX)^{-1}}_{=I_d} bfX^T bfy \

&= (bfX^T bfX + alpha I_d)^{-1} (bfX^T bfX) wh \

&= bfZ_{bfX, alpha} bfX wh

end{aligned}]

Details

If (f) is arbitrary and (bfX) is fixed, then the expectation of the ridge regression estimator is not equal to (w_star), so it is biased. The inequality on the first line comes from the fact that (bfZ_{bfX, alpha} bfX = (bfX^T bfX + alpha I_d)^{-1} (bfX^T bfX) neq I_d).

[begin{aligned}

E_{bfy|bfX}[whR]

&= E_{bfy|bfX}[bfZ_{bfX, alpha} bfX wh]

= bfZ_{bfX, alpha} bfX E_{bfy|bfX}[wh]

= bfZ_{bfX, alpha} bfX w_star

neq w_star \

Bias[whR mid bfX]

&= E_{bfy mid bfX}[whR] — w_star

= bfZ_{bfX, alpha} bfX w_star — w_star \

Cov_{bfy|bfX}[whR mid bfX]

&= Cov_{bfy|bfX}left[ bfZ_{bfX, alpha} bfX wh right] \

&= bfZ_{bfX, alpha} bfX Cov_{bfy|bfX}left[ wh right] bfX^T bfZ_{bfX, alpha}^T \

&= sigma^2 bfZ_{bfX, alpha} bfX (bfX^T bfX)^{-1} bfX^T bfZ_{bfX, alpha}^T \

&= sigma^2 bfZ_{bfX, alpha} bfZ_{bfX, alpha}^T

end{aligned}]

If (f) was truly linear so (w_star = w) and (f(bfX) = bfX w), then we can simplify the bias. However, the variance expression does not depend on (f), so it is the same regardless of whether (f) is linear or not.

[Bias[whR mid bfX]

= bfZ_{bfX, alpha} bfX w — w

= bfZ_{bfX, alpha} f(bfX) — w

= wh_{bfX, f, alpha} — w.]

If the training inputs (bfX) are sampled randomly with arbitrary (f), then the bias and variance are as follows. The variance derivation follows a similar proof to the ordinary linear regression.

[begin{aligned}

E_D[whR]

&= E_bfXleft[ E_{bfy mid bfX}[whR] right]

= E_bfXleft[ bfZ_{bfX, alpha} bfX right] w_star \

Bias[whR]

&= E_bfXleft[ bfZ_{bfX, alpha} bfX right] w_star — w_star \

Cov[whR]

&= Cov_{bfX, epsilon}left[ wh_{bfX, f, alpha} + bfZ_{bfX, alpha} epsilon right] \

&= E_bfX[ wh_{bfX, f, alpha} wh_{bfX, f, alpha}^T ] + E_{bfX, epsilon}[bfZ_{bfX, alpha} epsilon epsilon^T bfZ_{bfX, alpha}^T] — E_bfX[wh_{bfX, f, alpha}] E_bfX[wh_{bfX, f, alpha}]^T \

&= Cov_bfX[ wh_{bfX, f, alpha} ] + sigma^2 E_{bfX, epsilon}[bfZ_{bfX, alpha} bfZ_{bfX, alpha}^T].

end{aligned}]

If (f) is truly linear, then (Bias[whR] = E_bfXleft[ wh_{bfX, f, alpha} right] — w).

Comparing linear regression and ridge regression estimators

For any (alpha > 0) and assuming the training inputs (bfX) are fixed and full-rank, the ridge regression estimator has lower variance than the standard linear regression estimator without regularization. This result holds regardless of whether (f) is linear or not.

Because the estimators (wh) and (whR) are vectors, their variances are really covariance matrices. Thus, when we compare their variances, we actually compare the definiteness of their covariance matrices. One way to see this is that the MSE formula only depends on the trace of the covariance matrix. For any two vectors (a) and (b),

[Cov[a] — Cov[b] succ 0

quadimpliesquad tr(Cov[a] — Cov[b]) > 0

quadiffquad tr(Cov[a]) > tr(Cov[b]).]

The first implication relies on the fact that if a matrix is positive definite, its trace is positive. Thus, showing that (Cov[wh mid bfX] succ Cov[whR mid bfX]) establishes that the (wh) has a larger variance term in its MSE decomposition.

For linear models, comparing the definiteness of the covariance matrices is also directly related to the variance of the predicted outputs. This makes more sense when we discuss the variance of the ridge regression predictor later in this post.

Theorem: If we take the training inputs (bfX in R^{n times d}) with (n geq d) to be fixed and full-rank while the training labels (bfy in R^N) have variance (sigma^2), then the variance of any ridge regression estimator with (alpha > 0) has lower variance than the standard linear regression estimator without regularization. In other words, (forall alpha > 0., Cov[whR mid bfX] prec Cov[wh mid bfX]).

Proof

Let (S = bfX^T bfX) and (W = (bfX^T bfX + alpha I)^{-1}). Both (S) and (W) are symmetric and invertible matrices. Note that (S succ 0) because (z^T S z = | bfX z |_2^2 > 0) for all non-zero (z) (since (bfX) has linearly independent columns). Then, (W^{-1} = (S + alpha I) succ 0) because (I succ 0) and (alpha > 0). Since the inverse of any positive definite matrix is also positive definite, (S^{-1} succ 0) and (W succ 0) as well.

[begin{aligned}

Cov[whR mid bfX]

&= sigma^2 bfZ_{bfX, alpha} bfZ_{bfX, alpha}^T

= sigma^2 W bfX^T bfX^T W

= sigma^2 WSW \

Cov[wh mid bfX]

&= sigma^2 (bfX^T bfX)^{-1}

= sigma^2 S^{-1} \

Cov[wh mid bfX] — Cov[whR mid bfX]

&= sigma^2 (S^{-1} — WSW)

end{aligned}]

We will show that (S^{-1} — WSW succ 0) (positive definite), which implies that (Cov[whR mid bfX] prec Cov[wh mid bfX]).

We first show

[begin{aligned}

W^{-1} S^{-1} W^{-1} — S

&= (S + alpha I) S^{-1} (S + alpha I) — S \

&= (I + alpha S^{-1}) (S + alpha I) — S \

&= 2 alpha I + alpha^2 S^{-1}

end{aligned}]

which is clearly positive definite since (I succ 0), (S^{-1} succ 0), and (alpha > 0).

We can then expand

[begin{aligned}

S^{-1} — WSW

&= W W^{-1} S^{-1} W^{-1} W — WSW \

&= W (W^{-1} S^{-1} W^{-1} — S) W \

&= alpha W (2I + alpha S^{-1}) W

end{aligned}]

which is positive definite. This is because (z^T W (2I + alpha S^{-1}) W z > 0) for all (Wz neq 0) (since the matrix inside the parentheses is positive definite), and (W) is invertible so (Wz neq 0 iff z neq 0).

Having shown that the ridge regression estimator is biased but has lower variance than the unbiased least-squares estimator, the obvious next question is whether the decrease in variance is greater than the bias. Indeed, the following theorem shows that the ridge regression estimator is always able to achieve lower mean squared error.

Theorem: Assume that the training inputs (bfX) are fixed and that (f(x) = w^T x) is truly linear. Then (MSE[whR] < MSE[wh]) if and only if (0 < alpha < 2 frac{sigma^2}{|w|_2^2}).

As the proof for this is quite involved, we refer readers to Theorem 1.2 of Wieringen, 2015 or Theorem 4.3 of Hoerl and Kennard, 1970 for different proofs of this theorem.

Sources

- Hoerl, Arthur E., and Robert W. Kennard. “Ridge regression: Biased estimation for nonorthogonal problems.” Technometrics 12.1 (1970): 55-67. link.

- Proves that the MSE of ridge regression estimator is less than the MSE of the least-squares estimator for certain values of (alpha).

- “Prove that the variance of the ridge regression estimator is less than the variance of the OLS estimator.” StackExchange. link.

- Taboga, Marco. “Ridge Regression.” StatLect. link.

- Provides alternative proof for why the ridge regression estimator has lower variance than the ordinary linear regression estimator.

- van Wieringen, Wessel N. “Lecture notes on ridge regression.” arXiv preprint arXiv:1509.09169 (2018). link.

- Reference for bias and variance of linear and ridge regression estimators.

- Only discusses the case where training inputs are fixed.

Bias and Variance of a Predictor (or Model)

Setup

We consider the same setup discussed in the Linear Regression section above. To recap, we have 3 random variables (X in R^p), (Y in R), and (epsilon in R), related by (Y = f(X) + epsilon) for some function (f: R^p to R). The noise (epsilon sim Noise) is independent of (X) and is distributed with mean 0 and variance (sigma^2).

The bias-variance decomposition of mean squared error is a method for analyzing a deterministic model’s behavior when trained on different training sets drawn from the same underlying distribution. To do this, we fix some test point (x) and then iterate the following procedure many times:

- Sample (y sim p(Y mid X=x)). Equivalently, sample (epsilon sim Noise), then set (y = f(x) + epsilon).

- Sample a training set (D) from (P(X,Y)).

- Fit (fh_D) to the training set.

- Predict (fh_D(x)) as our estimate of (y).

The mean squared error of our model (fh_D) on a particular test point (x) is then

[begin{aligned}

MSE(x)

&= E_{y|x} left[ E_D left[ (y — fh_D(x))^2 right] right] \

&= (Bias[fh(x)])^2 + var[fh(x)] + text{Noise}

end{aligned}]

where

[begin{aligned}

Bias[fh(x)] &= E_D[fh_D(x)] — f(x) \

var[fh(x)] &= var_D[fh_D(x)] \

text{Noise} &= sigma^2.

end{aligned}]

Proof

[begin{aligned}

MSE(x)

&= E_{y|x} left[ E_D [ (y — fh_D(x))^2 ] right] \

&= E_{y|x} left[ var_D[fh_D(x)] + left(E_D[fh_D(x)] — yright)^2 right] \

&= var_D[fh_D(x)] + E_{y|x} left[ left(E_D[fh_D(x)] — yright)^2 right] \

&= var_D[fh_D(x)] + var_{p(y|x)}[y] + left( E_D[fh_D(x)] — E_{y|x}[y] right)^2 \

&= var_D[fh_D(x)] + sigma^2 + left( E_D[fh_D(x)] — f(x) right)^2 \

&= var[fh(x)] + text{Noise} + (Bias[fh(x)])^2

end{aligned}]

The 2nd equality comes from applying Corollary 1 where (y) is constant w.r.t. (D). The 4th equality comes again from applying Corollary 1, but this time (E_D[fh_D(x)]) is constant w.r.t. (y).

Thus we have decomposed the mean squared error into 3 terms: bias, variance, and noise. Notice that if there is no noise ((sigma^2 = 0)), then the mean squared error decomposes strictly into bias and variance. The mean squared error at (x) is also known as expected prediction error at (x), commonly written as (EPE(x)).

Discussion

The noise term (sigma^2), also known as irreducible error or aleatoric uncertainty, is the variance of the target (Y) around its true mean (f(x)). It is inherent in the problem and it does not depend on the model or training data. If the data generation process is known, then we may know (sigma^2). Otherwise, we may estimate (sigma^2) with the sample variance of (y) at duplicated (or nearby) inputs (x).

However, the bias and variance components do depend on the model. A misspecified model, i.e. a model that does not match the true distribution of the data, will generally have bias. Thus, a model with high bias may underfit the data. On the other hand, more complex models have lower bias but higher variance. Such models have a tendency to overfit the data. In many circumstances it is possible to achieve large reductions in the variance term (var_D [ fh_D(x) ]) with only a small increase in bias, thus reducing overfitting. We show this explicitly in the setting of linear models by comparing linear regression with ridge regression.

In general, we are unable to exactly calculate the bias and variance of a learned model without knowing the true (f). However, we can estimate the bias, variance, and MSE at a test point (x) by taking bootstrap samples of the dataset to approximate drawing different datasets (D).

Example: Linear Regression and Ridge Regression

Consider a linear model (fh(x) = wh^T x) over (p)-dimensional inputs (x in R^p), where the intercept is included in (wh). The relationship between the bias/variance of an estimator and the bias/variance of the model is straightforward for a linear model. Thus, we can readily use the results derived for the bias and variance of linear and ridge regression estimators.

[begin{aligned}

Bias[fh(x)]

&= E_D[fh_D(x)] — f(x) \

&= E_D[wh_D^T x] — w^T x \

&= left(E_D[wh_D] — w right)^T x \

&= Bias[wh]^T x

\

var[fh(x)]

&= var_D[ fh_D(x) ] \

&= var_D[ x^T wh_D ] \

&= x^T Cov_D[ wh_D ] x

end{aligned}]

However, when (f) is arbitrary, we cannot use the estimator bias results directly because they were derived relative to (w_star). Here, we are interested in the bias of (wh^T x) vs. (f(x)), as opposed to (w_star^T x).

As before, we separately consider the cases where the true (f) is an arbitrary function and when (f) is perfectly linear in (x). We also consider whether or not the training inputs (bfX) are fixed. The training targets (bfy) are always sampled from (P(Y mid X)).

Main Results

| arbitrary (f(X)) | linear (f(X)) | ||||

|---|---|---|---|---|---|

| fixed (bfX) | (E_D) | fixed (bfX) | (E_D) | ||

| OLS | Bias | (x^T wh_{bfX, f} — f(x)) | (x^T E_bfX[wh_{bfX, f}] — f(x)) | 0 | 0 |

| Variance | (sigma^2 |bfh_bfX(x)|_2^2) | (x^T Cov_bfX[wh_{bfX, f}] x + sigma^2 E_bfXleft[ |bfh_bfX(x)|_2^2 right]) | (sigma^2 |bfh_bfX(x)|_2^2) | (sigma^2 E_bfXleft[ |bfh_bfX(x)|_2^2 right]) | |

| Ridge Regression | Bias | (bfZ_{bfX, alpha} bfX w_star^T x — w_star^T x) | (E_bfXleft[ bfZ_{bfX, alpha} bfX right] w_star^T x — w_star^T x) | (wh_{bfX, f, alpha}^T x — w^T x) | (E_bfXleft[ wh_{bfX, f, alpha} right]^T x — w^T x) |

| Variance | (sigma^2 |bfh_{bfX, alpha}(x)|_2^2) | (x^T Cov_bfX[wh_{bfX, alpha, f}] x + sigma^2 E_bfXleft[ |bfh_{bfX, alpha}(x)|_2^2 right]) | (sigma^2 |bfh_{bfX, alpha}(x)|_2^2) | (x^T Cov_bfX[wh_{bfX, alpha, f}] x + sigma^2 E_bfXleft[ |bfh_{bfX, alpha}(x)|_2^2 right]) |

Decomposing Bias for Linear Models

Before discussing the bias and variance of the linear and ridge regression models, we take a brief digression to show a further decomposition of bias for linear models. While there may exist similar decompositions for other model families, the following decomposition explicitly assumes that our model (fh(x)) is linear.

Let (w_star = argmin_w E_{x sim P(X)}left[ (f(x) — w^T x)^2 right]) be the parameters of the best-fitting linear approximation to the true (f), which may or may not be linear. Then, the expected squared bias term decomposes into model bias and estimation bias.

[begin{aligned}

E_xleft[ (Bias[fh(x)])^2 right]

&= E_xleft[ left( E_D[fh_D(x)] — f(x) right)^2 right] \

&= E_xleft[ left( w_star^T x — f(x) right)^2 right] + E_xleft[ left( E_D[wh_D^T x] — w_star^T x right)^2 right] \

&= mathrm{Average}[(text{Model Bias})^2] + mathrm{Average}[(text{Estimation Bias})^2]

end{aligned}]

The model bias is the error between the best-fitting linear approximation (w_star^T x) and the true function (f(x)). Note that (w_star) is exactly defined as the parameters of a linear model that minimizes the average squared model bias. If (f) is not perfectly linear, then the squared model bias is clearly positive. The estimation bias is the error between the average estimate (E_D[wh_D^T x]) and the best-fitting linear approximation (w_star^T x).

For example, if the true function was quadratic, then there would be a large model bias. However, if (f) is linear, then the model bias is 0; in fact, both the model bias and the estimation bias are 0 at all test points (x), as shown in the next section. On the other hand, ridge regression has positive estimation bias, but reduced variance.

Proof

For any arbitrary (wh),

[begin{aligned}

left( f(x) — wh^T x right)^2