I’m trying to debug a file descriptor leak in a Java webapp running in Jetty 7.0.1 on Linux.

The app had been happily running for a month or so when requests started to fail due to too many open files, and Jetty had to be restarted.

java.io.IOException: Cannot run program [external program]: java.io.IOException: error=24, Too many open files

at java.lang.ProcessBuilder.start(ProcessBuilder.java:459)

at java.lang.Runtime.exec(Runtime.java:593)

at org.apache.commons.exec.launcher.Java13CommandLauncher.exec(Java13CommandLauncher.java:58)

at org.apache.commons.exec.DefaultExecutor.launch(DefaultExecutor.java:246)

At first I thought the issue was with the code that launches the external program, but it’s using commons-exec and I don’t see anything wrong with it:

CommandLine command = new CommandLine("/path/to/command")

.addArgument("...");

ByteArrayOutputStream errorBuffer = new ByteArrayOutputStream();

Executor executor = new DefaultExecutor();

executor.setWatchdog(new ExecuteWatchdog(PROCESS_TIMEOUT));

executor.setStreamHandler(new PumpStreamHandler(null, errorBuffer));

try {

executor.execute(command);

} catch (ExecuteException executeException) {

if (executeException.getExitValue() == EXIT_CODE_TIMEOUT) {

throw new MyCommandException("timeout");

} else {

throw new MyCommandException(errorBuffer.toString("UTF-8"));

}

}

Listing open files on the server I can see a high number of FIFOs:

# lsof -u jetty

...

java 524 jetty 218w FIFO 0,6 0t0 19404236 pipe

java 524 jetty 219r FIFO 0,6 0t0 19404008 pipe

java 524 jetty 220r FIFO 0,6 0t0 19404237 pipe

java 524 jetty 222r FIFO 0,6 0t0 19404238 pipe

When Jetty starts there are just 10 FIFOs; after a few days there are hundreds of them.

I know it’s a bit vague at this stage, but do you have any suggestions on where to look next, or how to get more detailed info about those file descriptors?
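For comparison with the commons-exec snippet above: by default a JVM holds one pipe each for a child process's stdin, stdout and stderr, which is one place FIFOs like those in the lsof listing can come from. The following is a minimal sketch, using plain java.lang.ProcessBuilder, of launching a command and closing all three pipes on every path. The class name ClosedStreamsExec and the `sh -c "echo hello"` command are placeholders for illustration and are not taken from the application.

```java
import java.io.ByteArrayOutputStream;
import java.io.Closeable;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of the original application.
final class ClosedStreamsExec {

    /** Runs a command, returns its combined output, and closes every pipe to the child. */
    static String run(String... command) throws IOException, InterruptedException {
        Process process = new ProcessBuilder(command)
                .redirectErrorStream(true) // merge stderr into stdout so one loop drains both
                .start();
        try {
            process.getOutputStream().close(); // the child's stdin: nothing to send
            ByteArrayOutputStream captured = new ByteArrayOutputStream();
            try (InputStream in = process.getInputStream()) {
                byte[] buffer = new byte[4096];
                int read;
                while ((read = in.read(buffer)) != -1) {
                    captured.write(buffer, 0, read);
                }
            }
            process.waitFor();
            return new String(captured.toByteArray(), StandardCharsets.UTF_8);
        } finally {
            // Runs on every path, so a failure above cannot strand an open pipe.
            closeQuietly(process.getOutputStream());
            closeQuietly(process.getInputStream());
            closeQuietly(process.getErrorStream());
            process.destroy();
        }
    }

    private static void closeQuietly(Closeable stream) {
        try {
            stream.close();
        } catch (IOException ignored) {
            // Nothing useful can be done if close itself fails.
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.print(run("sh", "-c", "echo hello"));
    }
}
```

If the FIFO count stays flat with a launcher like this but grows with the real one, that points at the launching code; if it grows either way, the pipes are probably coming from somewhere else.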

Scenario: Your application log displays the error message “Too many open files”. As a result, application requests fail and you need to restart the application. In some cases, you also need to reboot your machine.

Facts: The JVM console (or log file) contains the following error:

java.io.FileNotFoundException: filename (Too many open files)

at java.io.FileInputStream.open(Native Method)

at java.io.FileInputStream.<init>(FileInputStream.java:106)

at java.io.FileInputStream.<init>(FileInputStream.java:66)

at java.io.FileReader.<init>(FileReader.java:41)

Or:

java.net.SocketException: Too many open files

at java.net.PlainSocketImpl.socketAccept(Native Method)

at java.net.AbstractPlainSocketImpl.accept(AbstractPlainSocketImpl.java:398)

What is the solution?

The Java IOException “Too many open files” can occur on high-load servers. It means that a process has opened too many files (file descriptors) and cannot open new ones. On Linux, maximum open-file limits are set by default for each process and user, and the default values are quite small.

Also note that socket connections are treated like files and use file descriptors, which are a limited resource.

You can approach this issue with the following check-list:

- Check the JVM File handles

- Check other processes’ File Handles

- Inspect the limits of your OS

- Inspect user limits

Check the JVM File Handles

Firstly, we need to determine if the Root cause of “Too many open Files” is the JVM process itself.

On a Linux machine, everything is a file: this includes, for example, regular files or sockets. To check the number of open files per process you can use the Linux command lsof . For example, if your JVM process has the pid 1234:

lsof -p 1234

The last column of the output shows the name of each open file:

java 1234 user mem REG 253,0 395156 785358 /home/application/fileleak1.txt

java 1234 user 48r REG 253,0 395156 785358 /home/application/fileleak2.txt

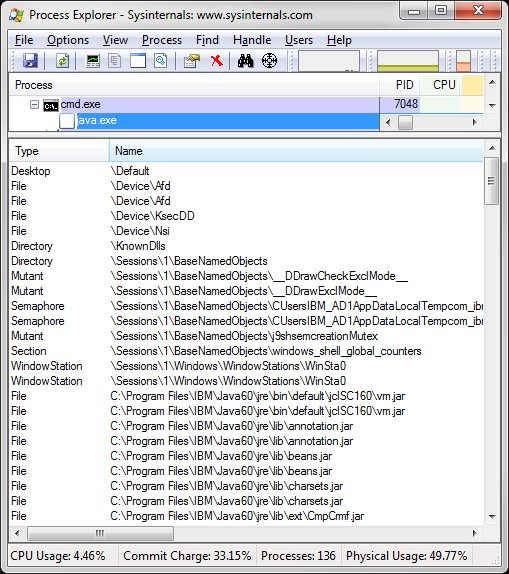

On a Windows machine, you can check the open files through the Resource Monitor Tool which contains this information in the Disk section:

If you are seeing too many application file handles, it is likely that you have a leak in your application code. To avoid resource leaks in your code, we recommend using the try-with-resources statement.

The try-with-resources statement is a try statement that declares one or more resources. A resource is an object that must be closed after the program is finished with it. The statement closes each resource automatically when the block exits, whether normally or through an exception. You can use this construct with any object that implements java.lang.AutoCloseable, which includes every java.io.Closeable.

The following example shows a safe way to read the first line from a file using an instance of BufferedReader:

static String readFromFile(String path) throws IOException {

try (BufferedReader br =

new BufferedReader(new FileReader(path))) {

return br.readLine();

}

}

To learn more about try-with-resources, see the article “Using try-with-resources to close database connections”.

Finally, keep in mind that a handle you close explicitly is released immediately, whereas a handle you forget to close is released, at best, only when the garbage collector reclaims the owning object. If you suspect unclosed handles, it is worth checking, with a tool such as Eclipse MAT, which objects dominate the heap when you spot the error “Too many open files”.
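To watch descriptor usage from inside the JVM rather than through lsof, one option is the platform MXBean. The sketch below assumes a HotSpot/OpenJDK-style JVM on a Unix-like system, where the operating system bean implements com.sun.management.UnixOperatingSystemMXBean; on other JVMs the method simply returns null. The class name FdUsage is made up for this example.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.OperatingSystemMXBean;
import com.sun.management.UnixOperatingSystemMXBean;

// Hypothetical helper: reports how many descriptors this JVM has open.
final class FdUsage {

    /** Returns {open, max} descriptor counts, or null if this JVM does not expose them. */
    static long[] snapshot() {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        if (os instanceof UnixOperatingSystemMXBean) {
            UnixOperatingSystemMXBean unix = (UnixOperatingSystemMXBean) os;
            return new long[] {
                unix.getOpenFileDescriptorCount(),
                unix.getMaxFileDescriptorCount()
            };
        }
        return null; // for example on Windows, or on a JVM without this extension
    }

    public static void main(String[] args) {
        long[] counts = snapshot();
        if (counts == null) {
            System.out.println("descriptor counts are not available on this JVM");
        } else {
            System.out.printf("open=%d max=%d%n", counts[0], counts[1]);
        }
    }
}
```

Logging this pair periodically makes a slow leak visible long before the limit is hit: a healthy process tends to plateau, while a leaking one keeps climbing.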

Check other processes’ File Handles

At this point, we have determined that your application does not have File Leaks. We need to extend the search to other processes running on your Machine.

The following command returns the number of open file descriptors for each process ID, sorted in ascending order:

$ lsof | awk '{print $2}' | sort | uniq -c | sort -n
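The same per-process tally can be done in Java when the lsof output has already been captured to a file or a log. The sketch below takes lsof lines, counts them by the PID column, and returns the counts in ascending order, like the shell pipeline. The class name LsofCount is hypothetical. Like the awk version, it assumes the PID is the second whitespace-separated column.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper: counts lsof output lines per PID.
final class LsofCount {

    /** Returns PID -> number of lsof lines, ordered from fewest to most. */
    static Map<String, Integer> countByPid(List<String> lsofLines) {
        Map<String, Integer> counts = new HashMap<>();
        for (String line : lsofLines) {
            String[] columns = line.trim().split("\\s+");
            if (columns.length < 2 || columns[1].equals("PID")) {
                continue; // skip blank lines and the header row
            }
            counts.merge(columns[1], 1, Integer::sum);
        }
        List<Map.Entry<String, Integer>> entries = new ArrayList<>(counts.entrySet());
        entries.sort(Map.Entry.comparingByValue());
        Map<String, Integer> sorted = new LinkedHashMap<>();
        for (Map.Entry<String, Integer> entry : entries) {
            sorted.put(entry.getKey(), entry.getValue());
        }
        return sorted;
    }

    public static void main(String[] args) throws IOException {
        // Usage: lsof | java LsofCount.java
        List<String> lines = new ArrayList<>();
        try (BufferedReader in = new BufferedReader(new InputStreamReader(System.in))) {
            String line;
            while ((line = in.readLine()) != null) {
                lines.add(line);
            }
        }
        countByPid(lines).forEach((pid, count) -> System.out.println(count + " " + pid));
    }
}
```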

On the other hand, if you want to have the list of handles per user, you can run lsof with the -u parameter:

$ lsof -u jboss | wc -l

Next, check which process is holding most file handles. For example:

$ ps -ef | grep 2873

frances+ 2873 2333 14 10:15 tty2 00:16:14 /usr/lib64/firefox/firefox

Besides that, you should also check the status of these file handles. You can verify it with the netstat command. For example, to verify the status of the sockets opened by process 7790, execute the following command:

$ netstat -tulpn | grep 7790

(Not all processes could be identified, non-owned process info will not be shown, you would have to be root to see it all.)

tcp 0 0 127.0.0.1:54200 0.0.0.0:* LISTEN 7790/java

tcp 0 0 127.0.0.1:8443 0.0.0.0:* LISTEN 7790/java

tcp 0 0 127.0.0.1:9990 0.0.0.0:* LISTEN 7790/java

tcp 0 0 127.0.0.1:8009 0.0.0.0:* LISTEN 7790/java

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 7790/java

udp 0 0 224.0.1.105:23364 0.0.0.0:* 7790/java

udp 0 0 230.0.0.4:45688 0.0.0.0:* 7790/java

udp 0 0 127.0.0.1:55200 0.0.0.0:* 7790/java

udp 0 0 230.0.0.4:45688 0.0.0.0:* TIME_WAIT 7790/java

udp 0 0 230.0.0.4:45689 0.0.0.0:* TIME_WAIT 7790/java

From the netstat output, we can see a small number of TIME_WAIT sockets, which is absolutely normal. You should worry if you detect thousands of active TIME_WAIT sockets. If that happens, consider actions such as the following:

- Make sure you close the TCP connection on the client before closing it on the server.

- Consider reducing the timeout of TIME_WAIT sockets. On most Linux machines, you can do it by adding the following contents to the sysctl.conf file (for example, to reduce it to 30 seconds). Note that net.ipv4.tcp_tw_recycle was removed in Linux 4.12, so omit that line on newer kernels:

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_timestamps = 1

net.ipv4.tcp_fin_timeout = 30

net.nf_conntrack_max = 655360

net.netfilter.nf_conntrack_tcp_timeout_established = 1200

- Use more client ports by setting net.ipv4.ip_local_port_range to a wider range.

- Have the application listen on more server ports. (For example, httpd defaults to port 80; you can add extra ports.)

- Add more client IPs by configuring additional IP on the load balancer and use them in a round-robin fashion.

Check the OS limits for your machine

At this point, we know that we have a high number of file handles but there are no leaks in the JVM or in other processes. Therefore, we have to check whether the machine allows that many file handles.

On a Linux box, use the sysctl command to check the current system-wide maximum number of open files:

$ sysctl fs.file-max

fs.file-max = 8192

This is the maximum number of files that all processes on your machine can have open at once. The default value of fs.file-max varies with your OS version and the amount of physical RAM at boot.

If you want to change this limit, edit the /etc/sysctl.conf file as root and set a value for the fs.file-max property. For example:

fs.file-max = 9223372036854775807

Next, to reload the sysctl settings from the default location execute:

sysctl -p

Finally, it is worth mentioning that you can also set a value for the fs.file-max property without editing manually the file. For example:

$ sudo /sbin/sysctl -w fs.file-max=<NEWVALUE>

Check the User limits

At this point, we know that the kernel-wide limit is not the bottleneck. It may be that the per-user limit for the account running the application is too low.

To verify the current limit for your user, run the ulimit command:

$ ulimit -n

1024

To change this value to 8192 for the user jboss, who is running the Java application, edit the /etc/security/limits.conf file as follows:

jboss soft nofile 8192

jboss hard nofile 9182

Please be aware that changes in the file /etc/security/limits.conf can be overwritten if you have any file matching /etc/security/limits.d/*.conf on your machine. Please review all these files to make sure they don’t override your settings.

Finally, if you don’t want to set a limit only for a specific user, you can enforce the limit for all users with this entry in /etc/security/limits.conf :

* - nofile 8192

The above line sets both the soft and the hard limit to 8192 for all users. Reboot the machine for changes to take effect.

Finally, please note that these user limits are ignored when you start your process from a cron job, because cron does not use a login shell. One potential workaround is to edit crond.service and raise the limits there. The example below raises TasksMax, which caps the number of tasks; the corresponding systemd setting for open files is LimitNOFILE:

Type=simple

Restart=no

...

TasksMax=8192

Conclusion

In conclusion, “Too many open files” is a common issue in Java applications, and it can significantly affect the performance and stability of your system. By understanding the root cause of the problem and applying the right fix, such as closing unused files or increasing the file descriptor limit, you can resolve this issue and keep your Java applications running smoothly.


Not many Java programmers know that socket connections are treated like files and use file descriptors, which are a limited resource. Different operating systems have different limits on the number of file handles they can manage. One common cause of java.net.SocketException: Too many open files in Tomcat, WebLogic, or any other Java application server is too many clients connecting and disconnecting within a very short span of time. Socket connections use TCP, which allows a socket to remain in the TIME_WAIT state for some time even after it has been closed. One reason for keeping a closed socket in TIME_WAIT is to ensure that delayed packets still reach the corresponding socket.

Different operating systems keep sockets in the TIME_WAIT state for different default periods: on Linux it is 60 seconds, while on Windows it is 4 minutes. Remember that the longer the timeout, the longer a closed socket keeps its file handle, which increases the chance of java.net.SocketException: Too many open files.

This also means that if you run Tomcat, WebLogic, WebSphere, or any other web server on a Windows machine, you are more prone to this error than on UNIX-like systems such as Solaris or Ubuntu.

By the way, this error has the same cause as java.io.IOException: Too many open files, which is thrown by code from the IO package if you try to open a new FileInputStream or any other stream pointing to a file resource.

How to solve java.net.SocketException: Too many open files

1) Increase the number of open file handles or file descriptors per process.

2) Reduce timeout for TIME_WAIT state in your operating system

On UNIX-based operating systems such as Ubuntu or Solaris, you can use the command ulimit -a to find out how many open file handles are allowed per process.

$ ulimit -a

core file size (blocks, -c) unlimited

data seg size (kbytes, -d) unlimited

file size (blocks, -f) unlimited

open files (-n) 256

pipe size (512 bytes, -p) 10

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 2048

virtual memory (kbytes, -v) unlimited

You can see open files (-n) 256, which means only 256 open file handles are allowed per process. If your Java program exceeds this limit (remember that Tomcat, WebLogic, and other application servers are Java programs running on a JVM), it will throw java.net.SocketException: Too many open files.

You can raise this limit with ulimit -n to a larger number such as 4096, but do it on the advice of your UNIX system administrator; if you have a separate UNIX support team, it is better to escalate to them.

Another important thing to verify is that your process is not leaking file descriptors or handles. That is tedious to find out, but you can use the lsof command to check how many open file handles a particular process owns on UNIX or Linux. Run lsof with the PID of your process, which you can get from the ps command.

Similarly, you can change the TIME_WAIT timeout, but do so in consultation with UNIX support, because a very low value means you might miss delayed packets. On UNIX-based systems, you can see the current configuration in the /proc/sys/net/ipv4/tcp_fin_timeout file. On Windows, this setting lives in the Windows registry. You can change the TCP TIME_WAIT timeout on Windows with the following steps:

1) Open Windows Registry Editor, by typing regedit in run command window

2) Find the key HKEY_LOCAL_MACHINE\System\CurrentControlSet\Services\tcpip\Parameters

3) Add a new key-value pair TcpTimedWaitDelay as a decimal and set the desired timeout in seconds (60-240)

4) Restart your windows machine.

Remember, you might not have permission to edit the Windows registry, and if you are not comfortable doing so, it is better not to. Instead, ask your Windows network support team, if you have one, to do it for you. The bottom line for fixing java.net.SocketException: Too many open files is either to increase the number of open file handles or to reduce the TCP TIME_WAIT timeout. The java.net.SocketException: Too many open files issue is also common among FIX engines, where clients use TCP/IP to connect to brokers' FIX servers.

A FIX engine needs the correct incoming and outgoing sequence numbers to establish a FIX session; if a client tries to connect with a smaller sequence number than the broker expects, the broker disconnects the session immediately.

If the client is well behind and keeps retrying, increasing the sequence number by 1 each time, it can cause java.net.SocketException: Too many open files at the broker's end. To avoid this, let the FIX engine keep track of its sequence number when it restarts. In short, "java.net.SocketException: Too many open files" can be seen in any Java server application, such as Tomcat, WebLogic, or WebSphere, when clients connect and disconnect frequently.

This is easily demonstrated using the provided Http Snoop example implementation of a Netty Http Server.

When this server is set up and stressed with multiple requests, after about 3000 to 4000 requests, the server fails with a java.io.IOException:

java.io.IOException: Too many open files

at sun.nio.ch.ServerSocketChannelImpl.accept0(Native Method)

at sun.nio.ch.ServerSocketChannelImpl.accept(ServerSocketChannelImpl.java:152)

at io.netty.channel.socket.nio.NioServerSocketChannel.doReadMessages(NioServerSocketChannel.java:111)

at io.netty.channel.nio.AbstractNioMessageChannel$NioMessageUnsafe.read(AbstractNioMessageChannel.java:69)

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:497)

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:465)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:359)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:101)

at java.lang.Thread.run(Thread.java:662)

This is reproducible by running the following integration test:

import org.apache.http.HttpResponse;

import org.apache.http.client.HttpClient;

import org.apache.http.client.methods.HttpGet;

import org.apache.http.client.methods.HttpRequestBase;

import org.apache.http.impl.client.DefaultHttpClient;

import org.junit.Before;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.junit.runners.JUnit4;

import java.util.concurrent.ArrayBlockingQueue;

import java.util.concurrent.BlockingQueue;

import java.util.concurrent.ThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

import static org.hamcrest.MatcherAssert.assertThat;

import static org.hamcrest.Matchers.is;

public class HttpSnoopServerStressTests {

private static final int NUMBER_OF_THREADS = 100;

private static final int NUMBER_OF_REQUESTS = 1000;

private SnoopServerTests snoopServerTests;

private ThreadPoolExecutor executor;

private static int executionCounter;

@Before

public void setUp() throws Exception {

BlockingQueue<Runnable> blockingQueue = new ArrayBlockingQueue<Runnable>(NUMBER_OF_THREADS);

executor = new ThreadPoolExecutor(NUMBER_OF_THREADS, NUMBER_OF_THREADS, 1, TimeUnit.SECONDS, blockingQueue);

snoopServerTests = new SnoopServerTests();

}

@Test

public void multiThreaded_getRequest_shouldNotFail() throws Exception {

for (int i = 0; i < NUMBER_OF_THREADS; i++) {

SnoopServerTestsRunnable runnable = new SnoopServerTestsRunnable();

executor.execute(runnable);

}

waitForExecutionToFinish();

}

private void waitForExecutionToFinish() throws InterruptedException {

executor.shutdown();

while (!executor.awaitTermination(1, TimeUnit.SECONDS)) {

Thread.sleep(100L);

}

}

private class SnoopServerTestsRunnable implements Runnable {

@Override

public void run() {

try {

for (int i = 0; i < NUMBER_OF_REQUESTS; i++) {

snoopServerTests.get_request_returns200OK();

}

printToConsole();

} catch (Exception e) {

e.printStackTrace();

}

}

private synchronized void printToConsole() {

executionCounter++;

System.out.printf("Execution number %d. Executed %d number of requests.%n", executionCounter, NUMBER_OF_REQUESTS);

}

}

@RunWith(JUnit4.class)

private class SnoopServerTests {

@Test

public void get_request_returns200OK() throws Exception {

HttpClient client = new DefaultHttpClient();

HttpRequestBase request = new HttpGet("http://HOSTNAME:8080/");

HttpResponse response = client.execute(request);

assertThat(response.getStatusLine().getStatusCode(), is(200));

}

}

}

When the test has run for a couple of minutes (depending, of course, on your environment) and reached approximately 3000 to 4000 requests, the server stops responding. The IOException shown above is logged on the server.
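One variable worth ruling out is the test client itself: each call in the test above creates a new DefaultHttpClient and never consumes the response, so the client side also holds on to sockets. The report does not establish that this is the cause. The sketch below is only a control experiment, assuming nothing beyond the JDK: a client built on java.net.HttpURLConnection that drains the body and disconnects after every request. The class name DrainingClient is made up for this example.

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URL;

// Hypothetical control client: releases its connection after every request.
final class DrainingClient {

    /** Performs a GET, reads the whole body, and returns the HTTP status code. */
    static int get(String url) throws IOException {
        HttpURLConnection connection = (HttpURLConnection) new URL(url).openConnection();
        try {
            int status = connection.getResponseCode();
            // Error responses expose their body through getErrorStream() instead.
            InputStream body = status >= 400
                    ? connection.getErrorStream()
                    : connection.getInputStream();
            if (body != null) {
                try (InputStream in = body) {
                    byte[] buffer = new byte[4096];
                    while (in.read(buffer) != -1) {
                        // Discard the content; the goal is only to drain the stream.
                    }
                }
            }
            return status;
        } finally {
            connection.disconnect(); // give the socket back even on failure
        }
    }

    public static void main(String[] args) throws IOException {
        // Usage: java DrainingClient.java http://HOSTNAME:8080/
        System.out.println(get(args[0]));
    }
}
```

If the server still runs out of descriptors when driven by a client like this, the client is unlikely to be the culprit and attention can move back to the server.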

This has been reproduced on 4.0.0.CR1, 4.0.0.CR9, as well as the latest snapshot 4.0.0.CR10-SNAPSHOT.

Help with this issue would be much appreciated.

Troubleshooting

Problem

This technote explains how to debug the "Too many open files" error message on Microsoft Windows, AIX, Linux and Solaris operating systems.

Symptom

The following messages could be displayed when the process has exhausted the file handle limit:

java.io.IOException: Too many open files

[3/14/15 9:26:53:589 EDT] 14142136 prefs W Could not lock User prefs. Unix error code 24.

New sockets/file descriptors can not be opened after the limit has been reached.

Cause

System configuration limitation.

When the "Too many open files" error message is written to the logs, it indicates that all available file handles for the process have been used (this includes sockets as well). In a majority of cases, this is the result of file handles being leaked by some part of the application. This technote explains how to collect output that identifies what file handles are in use at the time of the error condition.

Resolving The Problem

Determine Ulimits

On UNIX and Linux operating systems, the ulimit for the number of file handles can be configured, and it is usually set too low by default. Increasing this ulimit to 8000 is usually sufficient for normal runtime, but this depends on your applications and your file/socket usage. Additionally, file descriptor leaks can still occur even with a high value.

Display the current soft limit:

ulimit -Sn

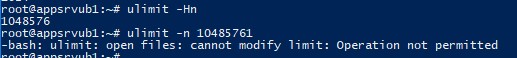

Display the current hard limit:

ulimit -Hn

Or capture a javacore; the limit is listed in that file under the name NOFILE:

kill -3 PID

Please see the following document if you would like more information on where you can edit ulimits:

Guidelines for setting ulimits (WebSphere Application Server)

http://www.IBM.com/support/docview.wss?rs=180&uid=swg21469413

Operating Systems

By default, Windows does not ship with a tool to debug this type of problem. Instead, Microsoft provides a downloadable tool called Process Explorer. This tool identifies the open handles/files associated with the Java™ process (but usually not sockets opened by the Winsock component) and shows which handles are still open. These handles result in the "Too many open files" error message.

To display the handles, click the gear icon in the toolbar (or press Ctrl+H to toggle the handles view). The icon changes to the icon for DLL files (so you can toggle it back to the DLL view).

It is important that you change the Refresh Rate. Select View > Update Speed, and change it to 5 seconds.

There is also another Microsoft utility called Handle that you can download from the following URL:

https://technet.microsoft.com/en-us/sysinternals/bb896655.aspx

This tool is a command line version of Process Explorer. The URL above contains the usage instructions.

On AIX, the commands lsof and procfiles are usually the best commands to determine which files and sockets are open.

lsof

To determine if the number of open files is growing over a period of time, issue lsof to report the open files against a PID on a periodic basis. For example:

lsof -p [PID] -r [interval in seconds, 1800 for 30 minutes] > lsof.out

This output does not give the actual file names to which the handles are open. It provides only the name of the file system (directory) in which they are contained. The lsof command indicates if the open file is associated with an open socket or a file. When it references a file, it identifies the file system and the inode, not the file name.

It is best to capture lsof several times to see the rate of growth in the file descriptors.

procfiles

The procfiles command does provide similar information, and also displays the full filenames loaded. It may not show sockets in use.

procfiles -n [PID] > procfiles.out

Other commands (to display filenames that are opened)

INODES and DF

df -kP filesystem_from_lsof | awk '{print $6}' | tail -1

>> Note the filesystem name

find filesystem_name -inum inode_from_lsof -print > filelist.out

>> Shows the actual file name

svmon

svmon -P PID -m | grep pers

(for JFS)

svmon -P PID -m | grep clnt

(for JFS2, NFS)

(this lists open files in the format filesystem_device:inode)

Use the same procedure as above for finding the actual file name.

On Linux, to determine whether the number of open files is growing over a period of time, issue lsof to report the open files against a PID on a periodic basis. For example:

lsof -p [PID] -r [interval in seconds, 1800 for 30 minutes] > lsof.out

The output will provide you with all of the open files for the specified PID. You will be able to determine which files are opened and which files are growing over time.

It is best to capture lsof several times to see the rate of growth in the file descriptors.

Alternately you can list the contents of the file descriptors as a list of symbolic links in the following directory, where you replace PID with the process ID. This is especially useful if you don’t have access to the lsof command:

ls -al /proc/PID/fd
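The same directory can be read from Java, which is handy when the question is not just how many descriptors are open but what kind they are (for example, pipes versus sockets). The sketch below assumes Linux, where each entry in /proc/PID/fd is a symbolic link whose target looks like `pipe:[inode]`, `socket:[inode]`, `anon_inode:...`, or a file path. The class name FdKinds and the four category names are choices made for this example.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: groups a process's open descriptors by kind (Linux only).
final class FdKinds {

    /** Maps a /proc/PID/fd link target to a coarse category. */
    static String kindOf(String target) {
        if (target.startsWith("pipe:")) return "pipe";
        if (target.startsWith("socket:")) return "socket";
        if (target.startsWith("anon_inode:")) return "anon_inode";
        return "file";
    }

    /** Counts the descriptors in the given fd directory per category. */
    static Map<String, Integer> summarize(Path fdDirectory) throws IOException {
        Map<String, Integer> counts = new TreeMap<>();
        try (DirectoryStream<Path> descriptors = Files.newDirectoryStream(fdDirectory)) {
            for (Path descriptor : descriptors) {
                String target;
                try {
                    target = Files.readSymbolicLink(descriptor).toString();
                } catch (IOException e) {
                    continue; // the descriptor was closed while we were listing
                }
                counts.merge(kindOf(target), 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java FdKinds.java [PID]   (defaults to the current process)
        String pid = args.length > 0 ? args[0] : "self";
        System.out.println(summarize(Paths.get("/proc", pid, "fd")));
    }
}
```

Running it twice a few hours apart and comparing the two maps shows which category is growing, which narrows the search considerably.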

Run the following commands to monitor open file (socket) descriptors on Solaris:

pfiles

/usr/proc/bin/pfiles [PID] > pfiles.out

lsof

lsof -p [PID] > lsof.out

This will get one round of lsof output. If you want to determine if the number of open files is growing over time, you can issue the command with the -r option to capture multiple intervals:

lsof -p [PID] -r [interval in seconds, 1800 for 30 minutes] > lsof.out

It is best to capture lsof several times to see the rate of growth in the file descriptors.



Very often, on heavily loaded Linux servers, you can run into “too many open files” errors. This means that a process has opened too many files (read: file descriptors) and cannot open new ones. In Linux, “max open file limit” restrictions are set by default for each process and user, and they are not very high.

In this article we will look at how to check the current limits on the number of open files in Linux, and how to change this setting for the whole server, for individual services, and for a session. The article applies to most modern Linux distributions (Debian, Ubuntu, CentOS, RHEL, Oracle Linux, Rocky, etc.).

Contents:

- Error: Too many open files and limits on the number of open files in Linux

- Global limits on the number of open files in Linux

- Increase the open file descriptor limit for an individual service

- Increase the number of open files for Nginx and Apache

- file-max limits for the current session

Error: Too many open files and limits on the number of open files in Linux

The “too many open files” error most often occurs on servers running the NGINX/httpd web server or a database server (MySQL/MariaDB/PostgreSQL), or when reading a large number of logs. For example, when the Nginx web server runs out of its open-file limit, you will get the error:

socket() failed (24: Too many open files) while connecting to upstream

Or:

HTTP: Accept error: accept tcp [::]:<port_number>: accept4: too many open files.

In Python:

OSError: [Errno 24] Too many open files.

The maximum number of file descriptors that can be opened on your system by all processes can be found like this:

# cat /proc/sys/fs/file-max

To find out how many files are open right now, run:

$ cat /proc/sys/fs/file-nr

7744 521 92233720

- 7744: the total number of allocated (open) file handles

- 521: the number of allocated file handles that are currently unused

- 92233720: the maximum number of file handles that may be open
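The three fields are easy to read programmatically, for example to log system-wide usage alongside application metrics. The sketch below parses one line in the file-nr format and derives the number of handles actually in use (allocated minus unused). The class name FileNr and the inUse() helper are made up for this example; the sample numbers in the usage note are the ones shown above.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;

// Hypothetical value class for the three fields of /proc/sys/fs/file-nr.
final class FileNr {
    final long allocated; // total allocated file handles
    final long unused;    // allocated but currently unused
    final long max;       // system-wide maximum (same as fs.file-max)

    private FileNr(long allocated, long unused, long max) {
        this.allocated = allocated;
        this.unused = unused;
        this.max = max;
    }

    /** Parses a line such as "7744 521 92233720" (fields separated by whitespace). */
    static FileNr parse(String line) {
        String[] fields = line.trim().split("\\s+");
        if (fields.length != 3) {
            throw new IllegalArgumentException("expected three fields: " + line);
        }
        return new FileNr(
                Long.parseLong(fields[0]),
                Long.parseLong(fields[1]),
                Long.parseLong(fields[2]));
    }

    /** Handles that are allocated and actually in use. */
    long inUse() {
        return allocated - unused;
    }

    public static void main(String[] args) throws IOException {
        String line = Files.readAllLines(Paths.get("/proc/sys/fs/file-nr")).get(0);
        FileNr nr = parse(line);
        System.out.printf("in use=%d max=%d%n", nr.inUse(), nr.max);
    }
}
```

With the sample values above, 7744 allocated and 521 unused give 7223 handles in use.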

In Linux, the limit on the maximum number of open files can be configured at several levels:

- The OS kernel

- The service

- The user

To display the current kernel-level limit on the number of open files, run:

# sysctl fs.file-max

fs.file-max = 92233720

Display the open-file limit for a single process of the current user:

# ulimit -n

By default, the number of open files for a single process is limited to 1024.

Display the maximum number of processes for a single user (max user processes):

# ulimit -u

5041

Multiplying 1024 * 5041 gives 5161984: this is the maximum number of files that can be opened by all of the user's processes.

Linux has two kinds of limits on the number of open files: hard and soft. The soft limit is the value that is actually enforced: once a process reaches it, further attempts to open files fail with “too many open files” until previously opened files are closed. The hard limit is the ceiling up to which an unprivileged process may raise its own soft limit; only root can raise the hard limit.

To view the current limits, use the ulimit command with the -S (soft) or -H (hard) option and the -n parameter (the maximum number of open file descriptors).

To display the soft limit:

# ulimit -Sn

To display the hard limit:

# ulimit -Hn

Global limits on the number of open files in Linux

To allow all services to open more files, you can change the limits at the level of the whole Linux OS. For the new settings to be permanent and not reset when the server or session restarts, edit the /etc/security/limits.conf file. The limit lines look like this:

user_name limit_type limit_name value

For example:

apache hard nofile 978160

apache soft nofile 978160

Instead of a user name you can specify *. This means the limit applies to all Linux users:

* hard nofile 97816

* soft nofile 97816

For example, suppose you got a too many open files error for nginx. Check how many files a process of this user is allowed to open:

$ sudo -u nginx bash -c 'ulimit -n'

1024

For a heavily loaded server this is not enough. Add the following lines to /etc/security/limits.conf:

nginx hard nofile 50000

nginx soft nofile 50000

On older Linux kernels, fs.file-max may be equal to 10000. So check this value and increase it so that it is larger than the limit in limits.conf:

# sysctl -w fs.file-max=500000

This increases the limit temporarily. To make the new setting permanent, add the following line to /etc/sysctl.conf:

fs.file-max = 500000

Check that the /etc/pam.d/common-session file (on Debian/Ubuntu; /etc/pam.d/login on CentOS/RedHat/Fedora) contains the line:

session required pam_limits.so

If it does not, add it at the end. It is needed so that the limits are loaded when the user logs in.

After the changes, restart the terminal and check the max open files limit:

# ulimit -n

97816

Increase the open file descriptor limit for an individual service

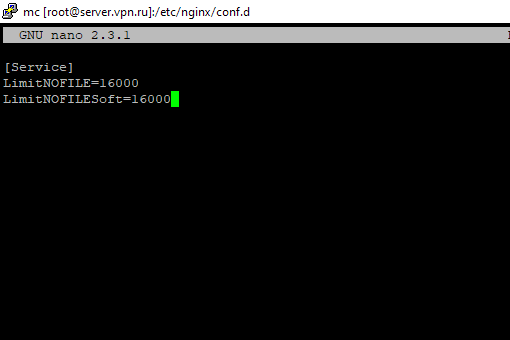

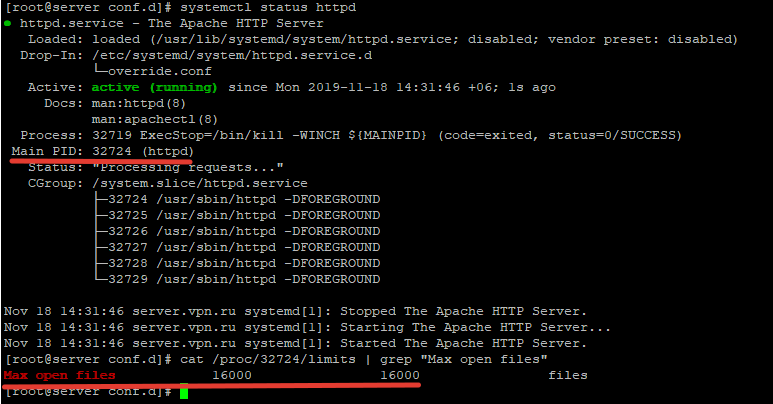

You can change the limit on open file descriptors for a specific service rather than for the whole system. Let's take apache as an example. To change the values, open the service settings with systemctl:

# systemctl edit httpd.service

Add the required limits, for example:

[Service]

LimitNOFILE=16000

LimitNOFILESoft=16000

After the change, reload the service configuration and restart the service:

# systemctl daemon-reload

# systemctl restart httpd.service

To check whether the values have changed, get the PID of the service:

# systemctl status httpd.service

For example, suppose you found that the PID of the service is 32724:

# cat /proc/32724/limits | grep "Max open files"

The value should be 16000.

This is how you change the Max open files value for a specific service.
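The same check can be automated, for instance in a startup health check that warns when a service is running with a lower limit than intended. The sketch below extracts the soft and hard values from the "Max open files" row of a /proc/PID/limits listing. The class name OpenFilesLimit is hypothetical, and mapping "unlimited" to Long.MAX_VALUE is a choice made for this example.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.List;

// Hypothetical helper: reads the "Max open files" row of /proc/PID/limits (Linux only).
final class OpenFilesLimit {
    private static final String ROW_NAME = "Max open files";

    /** Returns {soft, hard}, or null if the row is missing. */
    static long[] parse(List<String> limitsLines) {
        for (String line : limitsLines) {
            if (line.startsWith(ROW_NAME)) {
                // The rest of the row is: soft limit, hard limit, units.
                String[] fields = line.substring(ROW_NAME.length()).trim().split("\\s+");
                if (fields.length < 2) {
                    return null; // row is present but malformed
                }
                return new long[] { toLong(fields[0]), toLong(fields[1]) };
            }
        }
        return null;
    }

    private static long toLong(String value) {
        return value.equals("unlimited") ? Long.MAX_VALUE : Long.parseLong(value);
    }

    public static void main(String[] args) throws IOException {
        // Usage: java OpenFilesLimit.java [PID]   (defaults to the current process)
        String pid = args.length > 0 ? args[0] : "self";
        long[] limits = parse(Files.readAllLines(Paths.get("/proc", pid, "limits")));
        if (limits == null) {
            System.out.println("Max open files row not found");
        } else {
            System.out.printf("soft=%d hard=%d%n", limits[0], limits[1]);
        }
    }
}
```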

Increasing the number of open files for Nginx and Apache

After you have raised the open files limit for the server, you also need to adjust the service's own configuration file. For example, for the Nginx web server, set the following directive in /etc/nginx/nginx.conf:

worker_rlimit_nofile 16000;

The worker_rlimit_nofile directive sets the limit on the number of files open in a worker process (RLIMIT_NOFILE). Nginx needs file descriptors to serve static files from the cache for each client connection. The more users your server has and the more static files nginx serves, the more descriptors are used. The upper bound on the number of descriptors is set at the OS and/or service level. When nginx exceeds the allowed number of open files, the following error appears:

socket() failed (24: Too many open files) while connecting to upstream

When configuring Nginx on a heavily loaded 8-core server with worker_connections 8192, set worker_rlimit_nofile to 8192*2*8 (vCPU) = 131072.
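The arithmetic in this rule of thumb can be written out as a small function. This is only a sketch of the formula as stated in the text (worker_connections × 2 × number of cores). The function name is hypothetical, and the factor of 2 is taken from the article as-is; presumably it allows for two descriptors per connection.

```shell
#!/usr/bin/env bash
# Hypothetical helper implementing the article's rule of thumb:
#   worker_rlimit_nofile = worker_connections * 2 * cores
worker_rlimit_nofile() {
    local worker_connections="$1" cores="$2"
    echo $(( worker_connections * 2 * cores ))
}

worker_rlimit_nofile 8192 8   # prints 131072
```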

Then test the configuration and reload Nginx:

# nginx -t && nginx -s reload

To see the number of open files for the processes of the nginx user:

# su nginx

# ulimit -Hn

# for pid in `pidof nginx`; do echo "$(< /proc/$pid/cmdline)"; egrep 'files|Limit' /proc/$pid/limits; echo "Currently open files: $(ls -1 /proc/$pid/fd | wc -l)"; echo; done

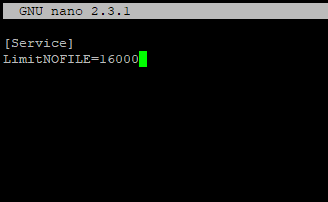

For apache, create the directory:

# mkdir /lib/systemd/system/httpd.service.d/

Then create the file limit_nofile.conf:

# nano /lib/systemd/system/httpd.service.d/limit_nofile.conf

And add the following to it:

[Service]

LimitNOFILE=16000

Don't forget to restart the httpd service.

Open file limits for the current session

To change the open files limit within the current user session, run:

# ulimit -n 3000

If you specify a value greater than the hard limit, you get an error:

-bash: ulimit: open files: cannot modify limit: Operation not permitted

When you end the current terminal session and open a new one, the limits return to the initial values specified in /etc/security/limits.conf.
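That ulimit changes are scoped to the shell that makes them can be seen with a subshell. The sketch below is illustrative only: the function name is invented, and it assumes the requested value does not exceed the current hard limit.

```shell
#!/usr/bin/env bash
# Hypothetical demo: set the soft open-files limit inside a subshell and
# print the value seen there. The calling shell keeps its own limit.
soft_limit_in_subshell() {
    ( ulimit -Sn "$1" && ulimit -Sn )
}
```

Running `soft_limit_in_subshell 256` prints the limit as seen inside the subshell, while a following `ulimit -Sn` in the calling shell still reports the original value.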

In this article we looked at how to solve the problem of an insufficient limit on open file descriptors in Linux and covered several ways of changing the limits on a server.

Symptoms

A JIRA application experiences a general loss of functionality in several areas.

The following error appears in the atlassian-jira.log:

java.io.IOException: java.io.IOException: Too many open files

To identify the current open file handle count limit, please run:

And then look at the row which looks like:

Diagnosis

Option 1

lsof +L1 > open_files.txt

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NLINK NODE NAME

java 2565 dos 534r REG 8,17 11219 0 57809485 /home/dos/deploy/applinks-jira/temp/jar_cache3983695525155383469.tmp (deleted)

java 2565 dos 536r REG 8,17 29732 0 57809486 /home/dos/deploy/applinks-jira/temp/jar_cache5041452221772032513.tmp (deleted)

java 2565 dos 537r REG 8,17 197860 0 57809487 /home/dos/deploy/applinks-jira/temp/jar_cache6047396568660382237.tmp (deleted)

Option 2

The Linux kernel exports information about all running processes via the proc pseudo filesystem, usually mounted at /proc.

In the /proc pseudo filesystem, we can find the open file descriptors under /proc/<pid>/fd/ where <pid> is the JAVA PID.

To count the open files opened by the JAVA process, we can use the command below to get a count:

ls -U /proc/{JAVA-PID}/fd | wc -l

or to show files:

ls -lU /proc/{JAVA-PID}/fd | tail -n+2

Atlassian Support can investigate this further if the resolution does not work. To do so, please provide the following to Atlassian Support:

- The output of Option 1 and/or Option 2

The commands must be executed by the JIRA user, or a user who can view the files. For example, if JIRA is running as root (which is not at all recommended), executing this command as jira will not show the open files.

- A heap dump taken at the time of the exception being thrown, as per Generating a Heap Dump.

- A JIRA application Support ZIP.
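When the Option 2 listing is long, grouping descriptors by kind makes a leak easier to spot (for example, a steadily growing number of pipes). The following is a minimal sketch, assuming a Linux /proc layout in which each entry under /proc/<pid>/fd is a symlink whose target looks like `pipe:[inode]`, `socket:[inode]`, `anon_inode:...`, or a file path. The function name `fd_kinds` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count open descriptors by kind for one process.
# Pass the fd directory, e.g. /proc/<pid>/fd (defaults to the current shell).
fd_kinds() {
    local fd_dir="${1:-/proc/self/fd}"
    local fd target
    for fd in "$fd_dir"/*; do
        # A descriptor may be closed while we iterate; skip it if so.
        target=$(readlink "$fd") || continue
        case "$target" in
            pipe:*)       echo pipe ;;
            socket:*)     echo socket ;;
            anon_inode:*) echo anon_inode ;;
            *)            echo file ;;
        esac
    done | sort | uniq -c | sort -rn
}
```

For example, `fd_kinds /proc/{JAVA-PID}/fd` prints one line per kind with its count, largest first. As with the commands above, it must be run as a user who can read that directory.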

Cause

UNIX systems have a limit on the number of files that can be concurrently open by any one process. The default for most distributions is only 1024 files, and for certain configurations of JIRA applications this is too small. When that limit is hit, the above exception is generated and JIRA applications can fail to function because they cannot open the files required to complete the current operation.

There are certain bugs in the application that are known to cause this behaviour:

- JRA-29587

- JRA-35726

- JRA-39114 (affects JIRA 6.2 and higher)

- JRASERVER-68653

We have the following improvement requests to better handle this in JIRA applications:

- JRA-33887

- JRA-33463

Resolution

These changes will only work on installations that use the built-in initd script for starting Jira. For installations that use a custom-built systemd service (recent Linux distributions), the change needs to be applied directly in that systemd service configuration, in the form of:

[Service]

LimitNOFILE=20000

In most cases, setting the ulimit in JIRA’s setenv.sh should work:

- If the $JIRA_HOME/caches/indexes folder is mounted over NFS, move it to a local mount (i.e. storage on the same server as the JIRA instance). NFS is not supported as per our JIRA application Supported Platforms and will cause this problem to occur at a much higher frequency.

- Stop the JIRA application.

- Edit $JIRA_INSTALL/bin/setenv.sh to include the following at the top of the file:

This will set that value each time JIRA applications are started; however, it will need to be manually migrated when upgrading JIRA applications.

- Start your JIRA application.

- The changes can be verified by inspecting /proc/<pid>/limits, where <pid> is the application process ID.

If you are using a JIRA application version with the bug in JRA-29587, upgrade it to the latest version. If using NFS, migrate to a local storage mount.

Note that some operating systems may require additional configuration for setting the limits.

For most Linux systems you would modify the limits.conf file:

- To modify the limits.conf file, use the following command:

sudo vim /etc/security/limits.conf

- Add/edit the following for the user that runs the Jira application. If you have used the bundled installer, this will be jira.

limits.conf

#<domain> <type> <item> <value>
#
#* soft core 0
#root hard core 100000
#* hard rss 10000
#@student hard nproc 20
#@faculty soft nproc 20
#@faculty hard nproc 50
#ftp hard nproc 0
#ftp - chroot /ftp
#@student - maxlogins 4
jira soft nofile 16384
jira hard nofile 32768

- Modify the common-session file with the following:

sudo vim /etc/pam.d/common-session

common-session is a file only available in Debian/Ubuntu.

- Add the following line:

common-session

# The following changes were made from the JIRA KB (https://confluence.atlassian.com/display/JIRAKB/Loss+of+Functionality+due+to+Too+Many+Open+Files+Error):
session required pam_limits.so
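Whether the pam_limits.so line is already active in a PAM file can be checked before editing it. This is a sketch under the assumption that an active entry is an uncommented line starting with `session` and mentioning `pam_limits.so`. The function name `has_pam_limits` is invented for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given PAM file contains an
# uncommented "session ... pam_limits.so" line.
has_pam_limits() {
    grep -Eq '^[[:space:]]*session[[:space:]]+.*pam_limits\.so' "$1"
}
```

It could be used as `has_pam_limits /etc/pam.d/common-session && echo "already enabled"`. Lines that begin with `#` are not matched.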

In some circumstances it is necessary to check and configure the changes globally. This is done in two different places; check which of them is in effect on your system and configure accordingly:

- The first place to check is the sysctl.conf file.

- Modify the sysctl.conf file with the following command:

sudo vim /etc/sysctl.conf

- Add the following to the bottom of the file or modify the existing value if present:

sysctl.conf

fs.file-max=16384

- Users will need to log out and log in again for the changes to take effect. If you want to apply the limit immediately, you can use the following command:

- The other place to configure this is within the sysctl.d directory. The filename can be anything as long as it ends with ".conf", e.g. "30-jira.conf"; the number helps to give an order of precedence (see the previous link for more info).

- To create/edit the file use the following:

sudo vim /etc/sysctl.d/30-jira.conf

- Add the following to the bottom of the file or modify the existing value if present:

30-jira.conf

fs.file-max=16384

- N.B., for RHEL/CentOS/Fedora/Scientific Linux, you’ll need to modify the login file with the following:

sudo vim /etc/pam.d/login

- Add the following line to the bottom of the file:

login

# The following changes were made from the JIRA KB (https://confluence.atlassian.com/display/JIRAKB/Loss+of+Functionality+due+to+Too+Many+Open+Files+Error):
session required pam_limits.so

- See this external blog post for a more detailed write-up on configuring the open file limits in Linux: Linux Increase The Maximum Number Of Open Files / File Descriptors (FD)

N.B. For any other operating system, please see your operating system’s manual for details.

Lastly, restart the JIRA server application for the changes to take effect.


For exceedingly large instances, we recommend consulting with our partners for scaling JIRA applications. See Is Clustering or Load Balancing JIRA Possible.