Модуль ngx_http_auth_request_module (1.5.4+) предоставляет

возможность авторизации клиента, основанной на результате подзапроса.

Если подзапрос возвращает код ответа 2xx, доступ разрешается.

Если 401 или 403 — доступ запрещается с соответствующим кодом ошибки.

Любой другой код ответа, возвращаемый подзапросом, считается ошибкой.

При ошибке 401 клиенту также передаётся заголовок

“WWW-Authenticate” из ответа подзапроса.

По умолчанию этот модуль не собирается, его сборку необходимо

разрешить с помощью конфигурационного параметра

--with-http_auth_request_module.

Модуль может быть

скомбинирован с другими модулями доступа, такими как

ngx_http_access_module,

ngx_http_auth_basic_module

и

ngx_http_auth_jwt_module,

с помощью директивы satisfy.

До версии 1.7.3 ответы на авторизационные подзапросы не могли быть закэшированы

(с использованием директив

proxy_cache,

proxy_store и т.п.).

Пример конфигурации

location /private/ {

auth_request /auth;

...

}

location = /auth {

proxy_pass ...

proxy_pass_request_body off;

proxy_set_header Content-Length "";

proxy_set_header X-Original-URI $request_uri;

}

Директивы

| Синтаксис: |

auth_request

|

|---|---|

| Умолчание: |

auth_request off; |

| Контекст: |

http, server, location

|

Включает авторизацию, основанную на результате выполнения подзапроса,

и задаёт URI, на который будет отправлен подзапрос.

| Синтаксис: |

auth_request_set

|

|---|---|

| Умолчание: |

— |

| Контекст: |

http, server, location

|

Устанавливает переменную в запросе в заданное

значение после завершения запроса авторизации.

Значение может содержать переменные из запроса авторизации,

например, $upstream_http_*.

The ngx_http_auth_request_module module (1.5.4+) implements

client authorization based on the result of a subrequest.

If the subrequest returns a 2xx response code, the access is allowed.

If it returns 401 or 403,

the access is denied with the corresponding error code.

Any other response code returned by the subrequest is considered an error.

For the 401 error, the client also receives the

“WWW-Authenticate” header from the subrequest response.

This module is not built by default, it should be enabled with the

--with-http_auth_request_module

configuration parameter.

The module may be combined with

other access modules, such as

ngx_http_access_module,

ngx_http_auth_basic_module,

and

ngx_http_auth_jwt_module,

via the satisfy directive.

Before version 1.7.3, responses to authorization subrequests could not be cached

(using proxy_cache,

proxy_store, etc.).

Example Configuration

location /private/ {

auth_request /auth;

...

}

location = /auth {

proxy_pass ...

proxy_pass_request_body off;

proxy_set_header Content-Length "";

proxy_set_header X-Original-URI $request_uri;

}

Directives

| Syntax: |

auth_request

|

|---|---|

| Default: |

auth_request off; |

| Context: |

http, server, location

|

Enables authorization based on the result of a subrequest and sets

the URI to which the subrequest will be sent.

| Syntax: |

auth_request_set

|

|---|---|

| Default: |

— |

| Context: |

http, server, location

|

Sets the request variable to the given

value after the authorization request completes.

The value may contain variables from the authorization request,

such as $upstream_http_*.

I’ve a really strange issue mostly with images and sometimes with URLs, too. (on my NGINX setup)

Sometimes I find following message in the console of Firebug the message:

"NetworkError: 401 Unauthorized - http://myproject.mydomain.com/images/20150812/sample1.png"

or

"NetworkError: 401 Unauthorized - http://myproject.mydomain.com/appliaction/item/18/#"

But the strange thing is, these things are loaded! There aren’t anything missing. So I’m a little bit confused about this error message.

Configuration:

myproject-file in «sites-available» and «sites-enabled»

server {

listen 80;

#listen 443 ssl;

server_name myproject.mydomain.com;

root /srv/www/myproject;

access_log /var/log/nginx/myproject-access.log;

error_log /var/log/nginx/myproject-error.log;

include global/dev.conf;

}

dev.conf

## Disable Access

auth_basic "Restricted";

auth_basic_user_file /etc/nginx/.htpasswd;

## Open instead listing (start)

index index.php index.html index.htm;

## Redirect Default Pages

# error_page 404 /404.html;

## favicon.ico should not be logged

location = /favicon.ico {

log_not_found off;

access_log off;

}

## Deny all attempts to access hidden files such as .htaccess, .htpasswd, .DS_Store (Mac).

location ~ /. {

deny all;

access_log off;

log_not_found off;

}

## Deny all attems to access possible configuration files

location ~ .(tpl|yml|ini|log)$ {

deny all;

}

## XML Sitemap support.

location = /sitemap.xml {

log_not_found off;

access_log off;

}

## robots.txt support.

location = /robots.txt {

log_not_found off;

access_log off;

}

location ~ .php$ {

# try_files $uri $uri/ =404;

## NOTE: You should have "cgi.fix_pathinfo = 0;" in php.ini

fastcgi_split_path_info ^(.+.php)(/.+)$;

## required for upstream keepalive

# disabled due to failed connections

#fastcgi_keep_conn on;

include fastcgi_params;

fastcgi_buffers 8 16k;

fastcgi_buffer_size 32k;

client_max_body_size 24M;

client_body_buffer_size 128k;

## Timeout for Nginx to 5 min

fastcgi_read_timeout 300;

## upstream "php-fpm" must be configured in http context

fastcgi_pass php-fpm;

}

#URL Rewrite

location / {

try_files $uri $uri/ /index.php?$args;

}

Any idea?

Ever found yourself wanting to put an application behind a login form, but dreading writing all that code to deal with OAuth 2.0 or passwords? In this tutorial, I’ll show you how to use the nginx auth_request module to protect any application running behind your nginx server with OAuth 2.0, without writing any code! Vouch, a microservice written in Go, handles the OAuth dance to any number of different auth providers so you don’t have to.

Tip: If you want to add login (and URL based authorization) to more apps via a UI, integrate with more complex apps like Oracle or SAP, or replace legacy Single Sign-On on-prem, check the Okta Access Gateway.

Why Authenticate at the Web Server?

Imagine you use nginx to run a small private wiki for your team. At first, you probably start out with adding a wiki user account for each person. It’s not too bad, adding new accounts for new hires, and removing them when they leave.

A few months later, as your team and company start growing, you add some server monitoring software, and you want to put that behind a login so only your company can view it. Since it’s not very sophisticated software, the easiest way to do that is to create a single password for everyone in an .htpasswd file, and share that user with the office.

Another month goes by, and you add a continuous integration system, and that comes with GitHub authentication as an option, which seems reasonable since most of your team has GitHub accounts already.

At this point, when someone new joins, you have to create a wiki account for them, add them to the GitHub organization, and give them the shared password for the other system. When someone leaves, you can delete their wiki account and remove them from GitHub, but let’s face it, you probably won’t change the shared password for a while since it’s annoying having to distribute that to everyone again.

Surely there must be a better way to integrate all these systems to use a common shared login system! The problem is the wiki is written in PHP, the server monitoring system just ends up publishing a folder of static HTML, and the CI system is written in Ruby which only one person on your team feels comfortable writing.

If the web server could handle authenticating users, then each backend system wouldn’t need to worry about it, since the only requests that could make it through would already be authenticated!

Using the nginx auth_request Module

Enter the nginx auth_request module.

This module is shipped with nginx, but requires enabling when you compile nginx. When you download the nginx source and compile, just include the --with-http_auth_request_module flag along with any others that you use.

The auth_request module sits between the internet and your backend server that nginx passes requests onto, and any time a request comes in, it first forwards the request to a separate server to check whether the user is authenticated, and uses the HTTP response to decide whether to allow the request to continue to the backend.

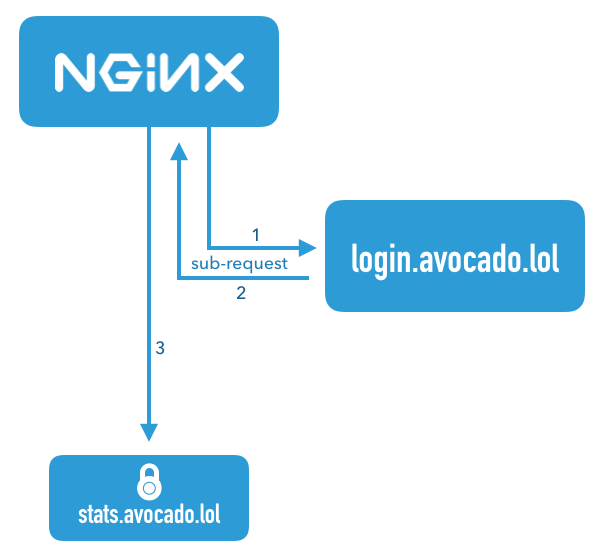

This diagram illustrates a request that comes in for the server name stats.avocado.lol. First, nginx fires off a sub-request to login.avocado.lol (1), and if the response (2) to that request returns HTTP 200, it then continues forwarding the request on to the backend stats.avocado.lol.

Choosing an Auth Proxy

Since the nginx auth_request module has no concept of users or how to authenticate anyone, we need something else in the mix that can actually handle logging users in. In the diagram above, this is illustrated by the server name login.avocado.lol.

This server needs to handle an HTTP request and return HTTP 200 or 401 depending on whether the user is logged in. If the user is not logged in, it needs to know how to get them to log in and set a session cookie.

To accomplish this, we’ll use the open source project “Vouch”. Vouch is written in Go, so it’s super easy to deploy. Everything can be configured via a single YAML file. Vouch can be configured to authenticate users via a variety of OAuth and OpenID Connect backends such as GitHub, Google, Okta or any other custom servers.

We’ll come back to configuring Vouch in a few minutes, but for now, let’s continue on to set up your protected server in nginx.

Configure Your Protected nginx Host

Starting with a typical nginx server block, you just need to add a couple lines to enable the auth_request module. Here is an example server block that should look similar to your own config. This example just serves a folder of static HTML files, but the same idea applies whether you’re passing the request on to a fastcgi backend or using proxy_pass.

server {

listen 443 ssl http2;

server_name stats.avocado.lol;

ssl_certificate /etc/letsencrypt/live/avocado.lol/fullchain.pem;

ssl_certificate_key /etc/letsencrypt/live/avocado.lol/privkey.pem;

root /web/sites/stats.avocado.lol;

index index.html;

}

Add the following to your existing server block:

# Any request to this server will first be sent to this URL

auth_request /vouch-validate;

location = /vouch-validate {

# This address is where Vouch will be listening on

proxy_pass http://127.0.0.1:9090/validate;

proxy_pass_request_body off; # no need to send the POST body

proxy_set_header Content-Length "";

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

# these return values are passed to the @error401 call

auth_request_set $auth_resp_jwt $upstream_http_x_vouch_jwt;

auth_request_set $auth_resp_err $upstream_http_x_vouch_err;

auth_request_set $auth_resp_failcount $upstream_http_x_vouch_failcount;

}

error_page 401 = @error401;

# If the user is not logged in, redirect them to Vouch's login URL

location @error401 {

return 302 https://login.avocado.lol/login?url=https://$http_host$request_uri&vouch-failcount=$auth_resp_failcount&X-Vouch-Token=$auth_resp_jwt&error=$auth_resp_err;

}

Let’s look at what’s going on here. The first line, auth_request /vouch-validate; is what enables this flow. This tells the auth_request module to first send any request to this URL before deciding whether it’s allowed to continue to the backend server.

The block location = /vouch-validate captures that URL, and proxies it to the Vouch server that will be listening on port 9090. We don’t need to send the POST body to Vouch, since all we really care about is the cookie.

The line error_page 401 = @error401; tells nginx what to do if Vouch returns an HTTP 401 response, which is to pass it to the block defined by location @error401. That block will redirect the user’s browser to Vouch’s login URL which will kick off the flow to the real authentication backend.

Configure a Server Block for Vouch

Next, configure a new server block for Vouch so that it has a publicly accessible URL like https://login.avocado.lol. All this needs to do is proxy the request to the backend Vouch server.

server {

listen 443 ssl;

server_name login.avocado.lol;

ssl_certificate /etc/letsencrypt/live/login.avocado.lol/fullchain.pem;

ssl_certificate_key /etc/letsencrypt/live/login.avocado.lol/privkey.pem;

# Proxy to your Vouch instance

location / {

proxy_set_header Host login.avocado.lol;

proxy_set_header X-Forwarded-Proto https;

proxy_pass http://127.0.0.1:9090;

}

}

Configure and Deploy Vouch

You’ll need to download Vouch and compile the Go binary for your platform. You can follow the instructions in the project’s README file.

Once you’ve got a binary, you’ll need to create the config file to define the way you want Vouch to authenticate users.

Copy config/config.yml_example to config/config.yml and read through the settings there. Most of the defaults will be fine, but you’ll want to create your own JWT secret string and replace the placeholder value of your_random_string.

The easiest way to configure Vouch is to have it allow any user that can authenticate at the OAuth server be allowed to access the backend. This works great if you’re using a private OAuth server like Okta to manage your users. Go ahead and set allowAllUsers: true to enable this behavior, and comment out the domains: chunk.

You’ll need to choose an OAuth 2.0 provider to use to actually authenticate users. In this example we’ll use Okta, since that’s the easiest way to have a full OAuth/OpenID Connect server and be able to manage all your user accounts from a single dashboard.

Before you begin, you’ll need a free Okta developer account. Install the Okta CLI and run okta register to sign up for a new account. If you already have an account, run okta login.

Then, run okta apps create. Select the default app name, or change it as you see fit.

Choose Web and press Enter.

Select Other.

Then, change the Redirect URI to https://login.avocado.lol/auth and use https://login.avocado.lol for the Logout Redirect URI.

What does the Okta CLI do?

The Okta CLI will create an OIDC Web App in your Okta Org. It will add the redirect URIs you specified and grant access to the Everyone group. You will see output like the following when it’s finished:

Okta application configuration has been written to: /path/to/app/.okta.env

Run cat .okta.env (or type .okta.env on Windows) to see the issuer and credentials for your app.

export OKTA_OAUTH2_ISSUER="https://dev-133337.okta.com/oauth2/default"

export OKTA_OAUTH2_CLIENT_ID="0oab8eb55Kb9jdMIr5d6"

export OKTA_OAUTH2_CLIENT_SECRET="NEVER-SHOW-SECRETS"

Your Okta domain is the first part of your issuer, before /oauth2/default.

NOTE: You can also use the Okta Admin Console to create your app. See Create a Web App for more information.

Now that you’ve registered the application in Okta, you’ll have a client ID and secret which you’ll need to include in the config file. You’ll also need to set the URLs for your authorization endpoint, token endpoint and userinfo endpoint. These will most likely look like the below using your Okta domain.

config.yml

oauth:

provider: oidc

client_id: {yourClientID}

client_secret: {yourClientSecret}

auth_url: https://{yourOktaDomain}/oauth2/default/v1/authorize

token_url: https://{yourOktaDomain}/oauth2/default/v1/token

user_info_url: https://{yourOktaDomain}/oauth2/default/v1/userinfo

scopes:

- openid

- email

# Set the callback URL to the domain that Vouch is running on

callback_url: https://login.avocado.lol/auth

Now you can run Vouch! It will listen on port 9090, which is where you’ve configured nginx to send the auth_request verifications as well as serve traffic from login.avocado.lol.

When you reload the nginx config, all requests to stats.avocado.lol will require that you log in via Okta first!

Bonus: Who Logged In?

If you’re putting a dynamic web app behind nginx and you care not only about whether someone was able to log in, but also who they are, there is one more trick we can use.

By default, Vouch will extract a user ID via OpenID Connect (or GitHub or Google if you’ve configured those as your auth providers), and will include that user ID in an HTTP header that gets passed back up to the main server.

In your main server block, just below the line auth_request /vouch-validate; which enables the auth_request module, add the following:

auth_request_set $auth_user $upstream_http_x_vouch_user;

This will take the HTTP header that Vouch sets, X-Vouch-User, and assign it to the nginx variable $auth_user. Then, depending on whether you use fastcgi or proxy_pass, include one of the two lines below in your server block:

fastcgi_param REMOTE_USER $auth_user;

proxy_set_header Remote-User $auth_user;

These will set an HTTP header with the value of $auth_user that your backend server can read in order to know who logged in. For example, in PHP you can access this data using:

<?php

echo 'Hello, ' . $_SERVER['REMOTE_USER'] . '!';

Now you can be sure that your internal app can only be accessed by authenticated users!

Learn More About OAuth 2.0 and Secure User Management with Okta

For more information and tutorials about OAuth 2.0, check out some of our other blog posts!

- Add Authentication to your PHP App in 5 Minutes

- What is the OAuth 2.0 Authorization Code Grant Type?

- What is the OAuth 2.0 Implicit Grant Type?

As always, we’d love to hear from you about this post, or really anything else! Hit us up in the comments, or on Twitter @oktadev!

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and

privacy statement. We’ll occasionally send you account related emails.

Already on GitHub?

Sign in

to your account

Closed

sepulrator opened this issue

Apr 4, 2018

· 13 comments

Comments

NGINX Ingress controller version: 0.12.0

What happened:

Nginx returns custom error page instead of external http auth service response body when auth service return 401 status code with json response body. Is it possible to return auth service response body in the case of auth errors except 2xx status codes?

Nginx response when got 401 error:

<html>

<head>

<title>401 Authorization Required</title>

</head>

<body bgcolor="white">

<center>

<h1>401 Authorization Required</h1>

</center>

<hr>

<center>nginx/1.13.9</center>

</body>

</html>

Nginx conf:

ingress.kubernetes.io/ssl-redirect: "false"

kubernetes.io/ingress.class: nginx

nginx.ingress.kubernetes.io/auth-method: "POST"

nginx.ingress.kubernetes.io/service-upstream: "true"

nginx.ingress.kubernetes.io/rewrite-target: /

nginx.ingress.kubernetes.io/auth-url: "http://auth-svc.default.svc.cluster.local/api/auth"

nginx.ingress.kubernetes.io/auth-response-headers: UserRole

What you expected to happen:

I expect to be able to return auth service response body to client when auth service gives error unless the status code is 2xx.

same issue here, I need to get whatever response body is generated by auth service but cannot get dynamically. It would be very helpful if there is any workaround for solving the problem

Fom Nginx auth_request documentation:

If the subrequest returns a 2xx response code, the access is allowed. If it returns 401 or 403, the access is denied with the corresponding error code. Any other response code returned by the subrequest is considered an error.

However I was able to create custom errors with auth_request using the following configuration:

location = /error/401 {

internal;

proxy_method GET;

proxy_set_header x-code 401;

proxy_pass http://custom-default-backend;

}

location = /error/403 {

internal;

proxy_method GET;

proxy_set_header x-code 403;

proxy_pass http://custom-default-backend;

}

location = /error/500 {

internal;

proxy_method GET;

proxy_set_header x-code 500;

proxy_pass http://custom-default-backend;

}

# Your authenticated location, just an example to be simple

location ~ ^/(.*)$ {

auth_request /auth;

error_page 401 =401 /error/401;

error_page 403 =403 /error/403;

error_page 500 =500 /error/500;

# proxy_pass and etc...

}

But with this your errors (401, 403, 500) will ALWAYS come from custom locations. And still it is not possible to have the auth_request response body.

It seems to be a limitation of Nginx. Has anyone got around to this?

Issues go stale after 90d of inactivity.

Mark the issue as fresh with /remove-lifecycle stale.

Stale issues rot after an additional 30d of inactivity and eventually close.

If this issue is safe to close now please do so with /close.

Send feedback to sig-testing, kubernetes/test-infra and/or fejta.

/lifecycle stale

Stale issues rot after 30d of inactivity.

Mark the issue as fresh with /remove-lifecycle rotten.

Rotten issues close after an additional 30d of inactivity.

If this issue is safe to close now please do so with /close.

Send feedback to sig-testing, kubernetes/test-infra and/or fejta.

/lifecycle rotten

k8s-ci-robot

added

lifecycle/rotten

Denotes an issue or PR that has aged beyond stale and will be auto-closed.

and removed

lifecycle/stale

Denotes an issue or PR has remained open with no activity and has become stale.

labels

Sep 5, 2018

Rotten issues close after 30d of inactivity.

Reopen the issue with /reopen.

Mark the issue as fresh with /remove-lifecycle rotten.

Send feedback to sig-testing, kubernetes/test-infra and/or fejta.

/close

@fejta-bot: Closing this issue.

In response to this:

Rotten issues close after 30d of inactivity.

Reopen the issue with/reopen.

Mark the issue as fresh with/remove-lifecycle rotten.Send feedback to sig-testing, kubernetes/test-infra and/or fejta.

/close

Instructions for interacting with me using PR comments are available here. If you have questions or suggestions related to my behavior, please file an issue against the kubernetes/test-infra repository.

I expect to be able to return auth service response body to client when auth service gives error unless the status code is 2xx.

Exactly what we need as well…

has anyone found an alternative solution?

I needed this as well, but I found a workaround by using the nginx.ingress.kubernetes.io/auth-signin to redirect to a page with the error response body. For example, my authenticate endpoint is /auth/authenticate, my ingress annotations are:

nginx.ingress.kubernetes.io/auth-url: <internal host>/auth/authenticate

nginx.ingress.kubernetes.io/auth-signin: /auth/authenticate

It seems that it converts all requests into GET requests but preserves the headers, which means that you just have to support GET requests (and reuse the auth-url endpoint).

/reopen

/remove-lifecycle rotten

This is still an issue with v0.25.0. It would be great if we could return the auth service’s body as the result instead of requiring custom error pages or redirects.

@bekriebel: You can’t reopen an issue/PR unless you authored it or you are a collaborator.

In response to this:

/reopen

/remove-lifecycle rottenThis is still an issue with v0.25.0. It would be great if we could return the auth service’s body as the result instead of requiring custom error pages or redirects.

Instructions for interacting with me using PR comments are available here. If you have questions or suggestions related to my behavior, please file an issue against the kubernetes/test-infra repository.

I’m using Nginx 1.17 and found this limitation as well. If I access the upstream auth server directly I could see the 401 status with response content. But the custom response body served by the upstream server gets overwritten by the default Nginx 401 page through the auth_request proxy.

For anyone else running into this, here’s my workaround. It’s a big of a config kludge but it solves the problem of returning the auth backend response to the client and relies almost entirely on annotations in ingress configuration. The one exception is the proxy_pass directive in the error location; it uses the $target variable set in the generated auth location block to point to the auth backend. That’s very much an implementation detail internal to the ingress machinery so be aware that this could break in the future if someone renames that variable.

apiVersion: networking.k8s.io/v1beta1 kind: Ingress metadata: name: my-ingress namespace: default annotations: nginx.ingress.kubernetes.io/auth-url: http://auth-service.namespace.svc.cluster.local/authorize nginx.ingress.kubernetes.io/auth-response-headers: | X-My-Header, X-My-Other-Header, X-Yet-Another-Header nginx.ingress.kubernetes.io/server-snippet: | location /error/401 { internal; proxy_method GET; proxy_pass $target; } nginx.ingress.kubernetes.io/configuration-snippet: | error_page 401 =401 /error/401; spec: rules: ...

nginx.ingress.kubernetes.io/server-snippet: |

location /authz {

internal;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Request-Id $req_id;

proxy_set_header X-Request-Origin-Host $host;

proxy_set_header X-Request-Origin-Path $request_uri;

proxy_set_header X-Request-Origin-Method $request_method;

#proxy_set_header Connection "Upgrade";

#proxy_set_header Upgrade $http_upgrade;

#proxy_http_version 1.1;

proxy_method GET;

proxy_pass http://vsc-leo2go-0.vsc-me-dev.ws03.svc.cluster.local;

}

nginx.ingress.kubernetes.io/configuration-snippet: |

access_by_lua '

local res = ngx.location.capture("/authz")

if res.status >= ngx.HTTP_OK and res.status < ngx.HTTP_SPECIAL_RESPONSE then

ngx.req.set_header("X-Request-User-KID", res.header["X-Request-User-KID"])

ngx.req.set_header("X-Request-Role-KID", res.header["X-Request-Role-KID"])

return

--elseif res.status == ngx.HTTP_UNAUTHORIZED then

-- ngx.redirect("http://sso.sims-cn.com?redirect=")

-- return

end

ngx.header.content_type = res.header["Content-Type"]

ngx.status = res.status

ngx.say(res.body)

ngx.exit(res.status)

';

If you’re about to deploy an NGINX server, you might want to take a few steps to make sure it is secure out of the gate. Jack Wallen offers up five easy tips that can give your security a boost.

NGINX continues to rise in popularity. According to the October, 2017 Netcraft stats, it has nearly caught up with Apache–meaning more and more people are making use of this lightweight, lightning fast web server. That also means more and more NGINX deployments need to be secured. To that end, there are so many possibilities.

If you’re new to NGINX, you will want to make sure to deploy the server in such a way that your foundation is safe. I will walk through five ways to gain better security over NGINX that won’t put your skills or resolve to too much of a test. These are tips every NGINX admin should know and employ:

1. Prevent information disclosure

Open up a terminal window and issue the command:

curl -I http://SERVER_ADDRESS

Chances are you’ll see something similar to that shown in Figure A.

Figure A

That information could invite attack. How? Because attackers can make use of that information to forge a hack for your system. To prevent that, we’re going to configure NGINX to not display either the NGINX release or the hosting platform information. Open up the configuration file with the command sudo nano /etc/nginx/nginx.conf. Scroll down until you see the line:

# server_tokens off;

Uncomment that line by removing the # character, like so:

server_tokens off;

Save and exit the file. Once NGINX is reloaded with the command sudo systemctl reload nginx, the curl -I command will no longer show the NGINX version or the host platform (Figure B).

Figure B

2. Hide php settings

If you make use of NGINX with PHP, you cannot hide the PHP information via the NGINX configuration file. Instead, you have to edit the php.ini file. Issue the command sudo nano /etc/php/7.0/fpm/php.ini and make sure expose_php is set to off. The configuration option falls around line 359. Once you’ve changed that configuration, save and close the file. Reload NGINX and your PHP information will be hidden from prying eyes.

3. Redirect server errors

Next we need to configure the error pages in the default sites-enabled configuration, such that error 401 (Unauthorized) and 403 (Forbidden) will automatically redirect to 404 (Not Found) error page. Many believe handing out errors 401 and 403 is akin to exposing secure information–so rerouting them to error 404 offers a bit of security-by-obfuscation, in similar fashion to hiding server information.

To do this, issue the command sudo nano /etc/nginx/sites-enabled/default. In the resulting configuration file, scroll down to the server { section and add the line:

error_page 401 403 404 /404.html;

Save and close the file. Reload NGINX with the command sudo systemctl reload nginx and your server will now redirect 401 and 403 errors to 404 errors.

4. Secure sensitive directories

Say you want to block certain directories from all but specific addresses. Let’s say you have a WordPress site and you want to block everyone but your local LAN addresses from accessing the wp-admin folder. And let’s say your local LAN IP address scheme is 192.168.1.0/24. To do this, issue the command sudo nano /etc/nginx/sites-enabled/default. Scroll down to the server { location and add the following under the location / directive:

location /wp-admin {

allow 192.168.1.0/24;

deny all;

}

Once you reload NGINX, if anyone attempts to access the wp-admin folder, they will be redirected to the error 403 page, unless you’ve configured NGINX to redirect to error 404–at which point it will redirect to the error 404 page.

5. Limit the rate of requests

It is possible to limit the rate NGINX will accept incoming requests. For example, say you want to limit the acceptance of incoming requests to the /wp-admin section. To achieve this, we are going to use the limit_req_zone directory and configure a shared memory zone named one (which will store the requests for a specified key) and limit it to 30 requests per minute. For our specified key, we’ll use the binary_remote_addr (client IP address). To do this, issue the command sudo nano /etc/nginx/sites-enabled/default. Above the server { section, add the following line:

limit_req_zone $binary_remote_addr zone=one:10m rate=30r/m;

Scroll down to the location directive, where we added the wp-admin section. Within that directive, add the following line:

limit_req zone=one;

So our wp-admin section might look like:

location /wp-admin {

allow 10.10.1.0/24;

deny all;

limit_req zone=one;

}

Save and close the default file. Reload NGINX with the command sudo systemctl reload nginx. Your wp-admin section will now only allow 30 requests per minute. After that 30th request, the user will see the following error ( Figure B).

Figure B

You can set that rate limit on any directory that needs protected by such a mechanism.

NGINX a bit more secure

Congratulations, your NGINX installation is now a bit more secure. Yes, there are plenty more ways in which you can achieve even more security, but starting here will be serve as a great foundation.

Появление сообщения об ошибке 401 Unauthorized Error («отказ в доступе») при открытии страницы сайта означает неверную авторизацию или аутентификацию пользователя на стороне сервера при обращении к определенному url-адресу. Чаще всего она возникает при ошибочном вводе имени и/или пароля посетителем ресурса при входе в свой аккаунт. Другой причиной являются неправильные настройки, допущенные при администрировании web-ресурса. Данная ошибка отображается в браузере в виде отдельной страницы с соответствующим описанием. Некоторые разработчики интернет-ресурсов, в особенности крупных порталов, вводят собственную дополнительную кодировку данного сбоя:

- 401 Unauthorized;

- Authorization Required;

- HTTP Error 401 – Ошибка авторизации.

Попробуем разобраться с наиболее распространенными причинами возникновения данной ошибки кода HTTP-соединения и обсудим способы их решения.

Причины появления ошибки сервера 401 и способы ее устранения на стороне пользователя

При доступе к некоторым сайтам (или отдельным страницам этих сайтов), посетитель должен пройти определенные этапы получения прав:

- Идентификация – получение вашей учетной записи («identity») по username/login или email.

- Аутентификация («authentic») – проверка того, что вы знаете пароль от этой учетной записи.

- Авторизация – проверка вашей роли (статуса) в системе и решение о предоставлении доступа к запрошенной странице или ресурсу на определенных условиях.

Большинство пользователей сохраняют свои данные по умолчанию в истории браузеров, что позволяет быстро идентифицироваться на наиболее часто посещаемых страницах и синхронизировать настройки между устройствами. Данный способ удобен для серфинга в интернете, но может привести к проблемам с безопасностью доступа к конфиденциальной информации. При наличии большого количества авторизованных регистрационных данных к различным сайтам используйте надежный мастер-пароль, который закрывает доступ к сохраненной в браузере информации.

Наиболее распространенной причиной появления ошибки с кодом 401 для рядового пользователя является ввод неверных данных при посещении определенного ресурса. В этом и других случаях нужно попробовать сделать следующее:

- Проверьте в адресной строке правильность написания URL. Особенно это касается перехода на подстраницы сайта, требующие авторизации. Введите правильный адрес. Если переход на страницу осуществлялся после входа в аккаунт, разлогинитесь, вернитесь на главную страницу и произведите повторный вход с правильными учетными данными.

- При осуществлении входа с сохраненными данными пользователя и появлении ошибки сервера 401 проверьте их корректность в соответствующих настройках данного браузера. Возможно, авторизационные данные были вами изменены в другом браузере. Также можно очистить кэш, удалить cookies и повторить попытку входа. При удалении истории браузера или очистке кэша потребуется ручное введение логина и пароля для получения доступа. Если вы не помните пароль, пройдите процедуру восстановления, следуя инструкциям.

- Если вы считаете, что вводите правильные регистрационные данные, но не можете получить доступ к сайту, обратитесь к администратору ресурса. В этом случае лучше всего сделать скриншот проблемной страницы.

- Иногда блокировка происходит на стороне провайдера, что тоже приводит к отказу в доступе и появлению сообщения с кодировкой 401. Для проверки можно попробовать авторизоваться на том же ресурсе с альтернативного ip-адреса (например, используя VPN). При подтверждении блокировки трафика свяжитесь с провайдером и следуйте его инструкциям.

Некоторые крупные интернет-ресурсы с большим количеством подписчиков используют дополнительные настройки для обеспечения безопасности доступа. К примеру, ваш аккаунт может быть заблокирован при многократных попытках неудачной авторизации. Слишком частые попытки законнектиться могут быть восприняты как действия бота. В этом случае вы увидите соответствующее сообщение, но можете быть просто переадресованы на страницу с кодом 401. Свяжитесь с администратором сайта и решите проблему.

Иногда простая перезагрузка проблемной страницы, выход из текущей сессии или использование другого веб-браузера полностью решают проблему с 401 ошибкой авторизации.

Устранение ошибки 401 администратором веб-ресурса

Для владельцев сайтов, столкнувшихся с появлением ошибки отказа доступа 401, решить ее порою намного сложнее, чем обычному посетителю ресурса. Есть несколько рекомендаций, которые помогут в этом:

- Обращение в службу поддержки хостинга сайта. Как и в случае возникновения проблем с провайдером, лучше всего подробно описать последовательность действий, приведших к появлению ошибки 401, приложить скриншот.

- При отсутствии проблем на стороне хостинг-провайдера можно внести следующие изменения в настройки сайта с помощью строки Disallow:/адрес проблемной страницы. Запретить индексацию страницам с ошибкой в «rоbоts.txt», после чего добавить в файл «.htассеss» строку такого типа:

Redirect 301 /oldpage.html http://site.com/newpage.html.

Где в поле /oldpage.html прописывается адрес проблемной страницы, а в http://site.com/newpage.html адрес страницы авторизации.

Таким образом вы перенаправите пользователей со всех страниц, которые выдают ошибку 401, на страницу начальной авторизации.

- Если после выполнения предыдущих рекомендаций пользователи при попытках авторизации все равно видят ошибку 401, то найдите на сервере файл «php.ini» и увеличьте время жизни сессии, изменив значения следующих параметров: «session.gc_maxlifetime» и «session.cookie_lifetime» на 1440 и 0 соответственно.

- Разработчики веб-ресурсов могут использовать более сложные методы авторизации и аутентификации доступа для создания дополнительной защиты по протоколу HTTP. Если устранить сбой простыми методами администрирования не удается, следует обратиться к специалистам, создававшим сайт, для внесения соответствующих изменений в код.

Хотя ошибка 401 и является проблемой на стороне клиента, ошибка пользователя на стороне сервера может привести к ложному требованию входа в систему. К примеру, сетевой администратор разрешит аутентификацию входа в систему всем пользователям, даже если это не требуется. В таком случае сообщение о несанкционированном доступе будет отображаться для всех, кто посещает сайт. Баг устраняется внесением соответствующих изменений в настройки.

Дополнительная информация об ошибке с кодом 401

Веб-серверы под управлением Microsoft IIS могут предоставить дополнительные данные об ошибке 401 Unauthorized в виде второго ряда цифр:

- 401, 1 – войти не удалось;

- 401, 2 – ошибка входа в систему из-за конфигурации сервера;

- 401, 3 – несанкционированный доступ из-за ACL на ресурс;

- 401, 501 – доступ запрещен: слишком много запросов с одного и того же клиентского IP; ограничение динамического IP-адреса – достигнут предел одновременных запросов и т.д.

Более подробную информацию об ошибке сервера 401 при использовании обычной проверки подлинности для подключения к веб-узлу, который размещен в службе MS IIS, смотрите здесь.

Следующие сообщения также являются ошибками на стороне клиента и относятся к 401 ошибке:

- 400 Bad Request;

- 403 Forbidden;

- 404 Not Found;

- 408 Request Timeout.

Как видим, появление ошибки авторизации 401 Unauthorized не является критичным для рядового посетителя сайта и чаще всего устраняется самыми простыми способами. В более сложной ситуации оказываются администраторы и владельцы интернет-ресурсов, но и они в 100% случаев разберутся с данным багом путем изменения настроек или корректировки html-кода с привлечением разработчика сайта.