Первая задача, с которой чаще всего сталкиваются разработчики, начинающие программировать на JavaScript, – как регистрировать события в журнале консоли с помощью метода console.log. В поисках информации по отладке кода на JavaScript вы найдёте сотни статей в блогах, а также инструкций на StackOverflow, советующих «просто» вывести данные в консоль через метод console.log. Это настолько распространённая практика, что пришлось ввести правила для контроля качества кода, подобные no-console, чтобы не оставлять случайные записи из журнала в коде для продакшена. Но что делать, если нужно специально зарегистрировать какое-нибудь событие, чтобы предоставить дополнительную информацию?

В этой статье рассматриваются различные ситуации, в которых требуется вести логи; показывается разница между методами console.log и console.error в Node.js и демонстрируется, как передать функцию логирования библиотекам, не перегружая пользовательскую консоль.

Теоретические основы работы с Node.js

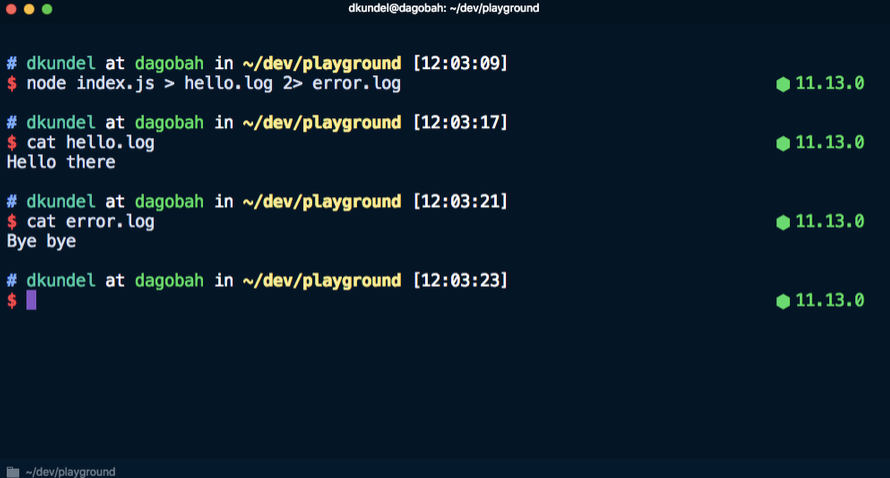

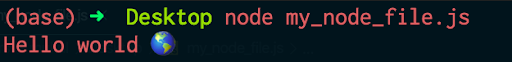

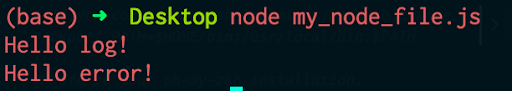

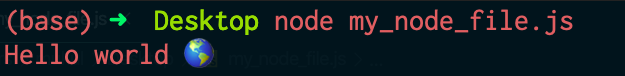

Методы console.log и console.error можно использовать как в браузере, так и в Node.js. Тем не менее, при использовании Node.js нужно помнить одну важную вещь. Если создать следующий код в Node.js, используя файл под названием index.js,

а затем выполнить его в терминале с помощью node index.js, то результаты выполнения команд будут располагаться один над другим:

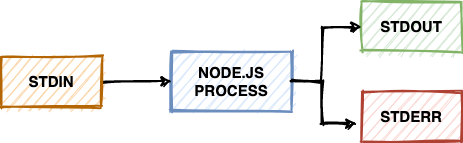

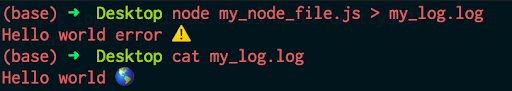

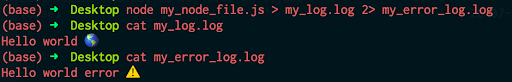

Несмотря на то, что они кажутся похожими, система обрабатывает их по-разному. Если посмотреть раздел о работе console в документации Node.js, то окажется, что console.log выводит результат через stdout, а console.error – через stderr.

Каждый процесс может работать с тремя потоками (stream) по умолчанию: stdin, stdout и stderr. Поток stdin обрабатывает ввод для процесса, например нажатия кнопок или перенаправленный вывод (об этом чуть ниже). Стандартный поток вывода stdout предназначен для вывода данных приложения. Наконец, стандартный поток ошибок stderr предназначен для вывода сообщений об ошибках. Если нужно разобраться, для чего предназначен stderr и в каких случаях его использовать, прочитайте эту статью.

Если вкратце, то с его помощью можно использовать операторы перенаправления (>) и конвейера (|) для работы с ошибками и диагностической информацией отдельно от фактических результатов работы приложения. Если оператор > позволяет перенаправить вывод результата выполнения команды в файл, то с помощью оператора 2> можно перенаправить вывод потока ошибок stderr в файл. Например, эта команда отправит Hello there в файл hello.log, а Bye bye в файл error.log.

Когда необходимо записывать события в журнал?

Теперь, рассмотрев технические аспекты, лежащие в основе записи в журнал, перейдём к различным сценариям, в которых необходимо регистрировать события. Обычно эти сценарии относятся к одной из нескольких категорий:

- быстрая отладка при неожиданном поведении во время разработки;

- ведение журнала на базе браузера для анализа и диагностики;

- ведение журналов для серверных приложений для регистрации входящих запросов и возможные ошибки;

- ведение дополнительных журналов отладки для библиотек, чтобы помогать пользователям в решении проблем;

- ведение журнала для выходных данных интерфейса командной строки, чтобы выводить сообщения в консоли о ходе выполнения операции, подтверждениях и ошибках.

В этой статье рассматриваются только три последних сценария на базе Node.js.

Ведение журналов для серверных приложений

Существует несколько причин для логирования событий, происходящих на сервере. Например, логирование входящих запросов позволяет получить статистические данные о том, как часто пользователи сталкиваются с ошибкой 404, что может быть этому причиной или какое клиентское приложение User-Agent используется. Также можно узнать время возникновения ошибки и её причину.

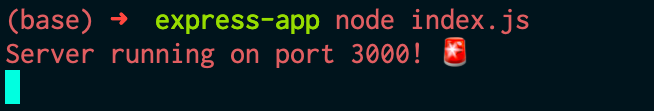

Для того чтобы поэкспериментировать с материалом, приведённым в этой части статьи, нужно создать новый каталог для проекта. В каталоге проекта создаём index.js для кода, который будет использоваться, и выполняем следующие команды для запуска проекта и установки express:

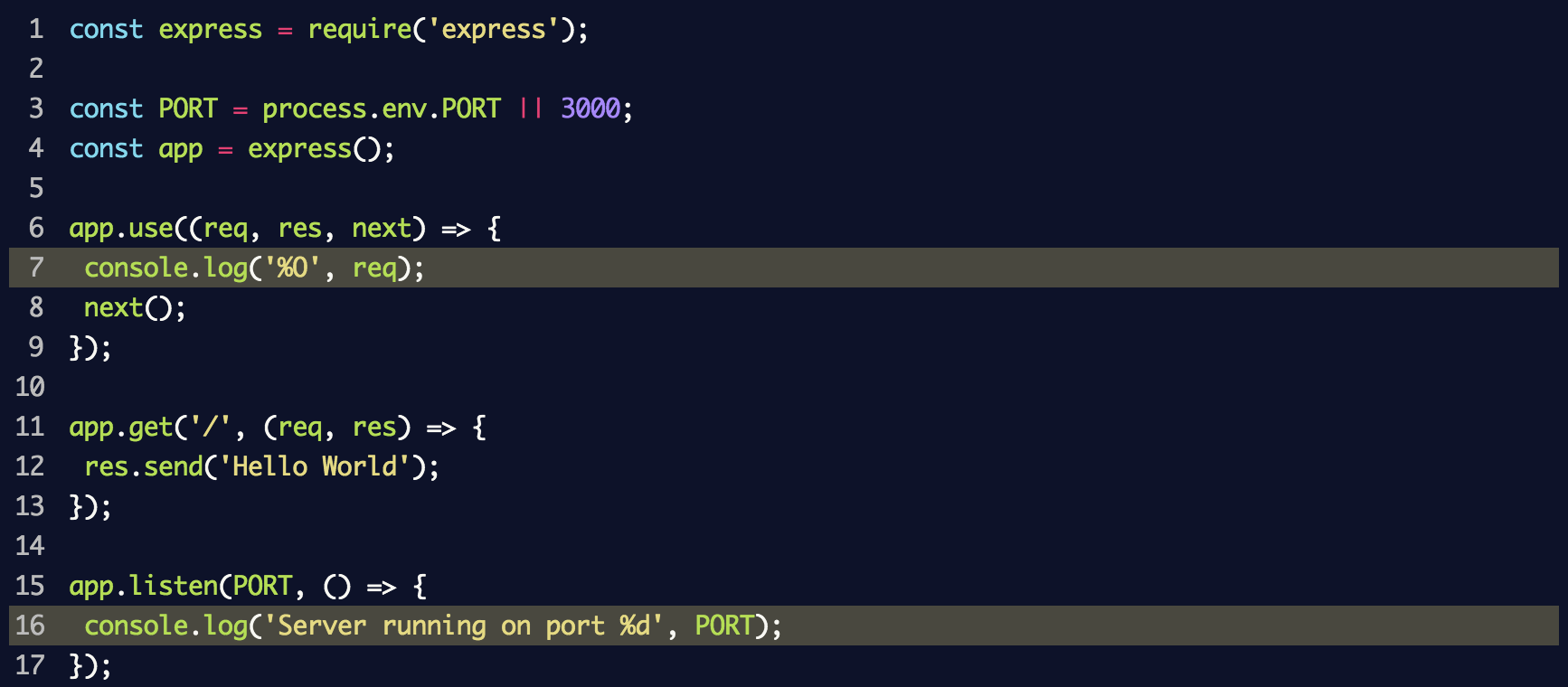

Настраиваем сервер с межплатформенным ПО, который будет регистрировать каждый запрос в консоли с помощью метода console.log. Помещаем следующие строки в файл index.js:

Здесь используется console.log('%O', req) для регистрации целого объекта в журнале. С точки зрения внутренней структуры метод console.log применяет util.format, который кроме %O поддерживает и другие метки-заполнители. Информацию о них можно найти в документации Node.js.

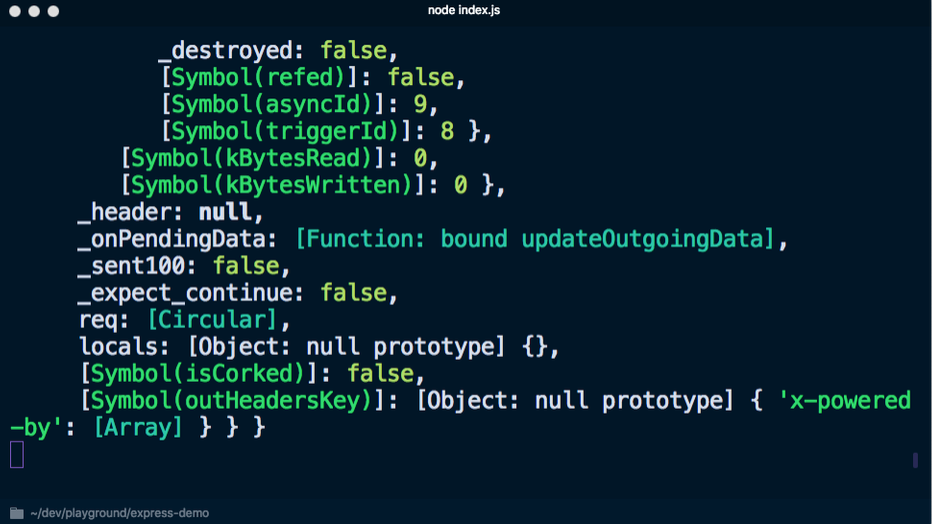

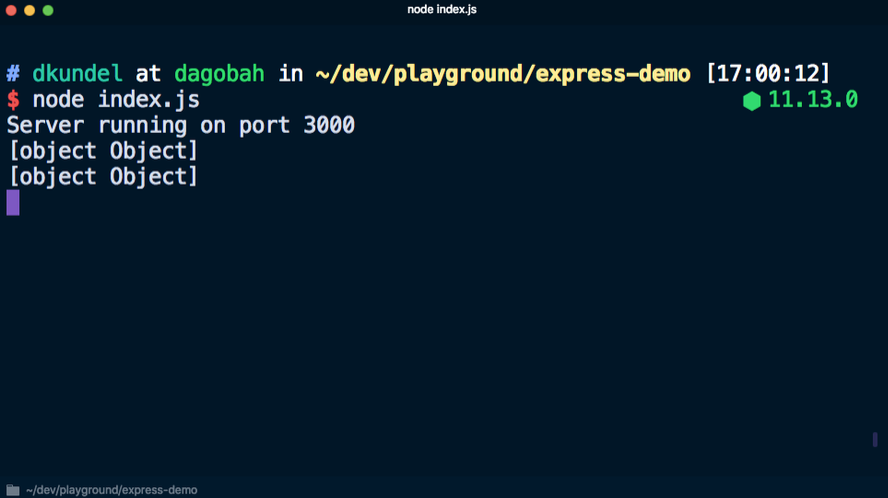

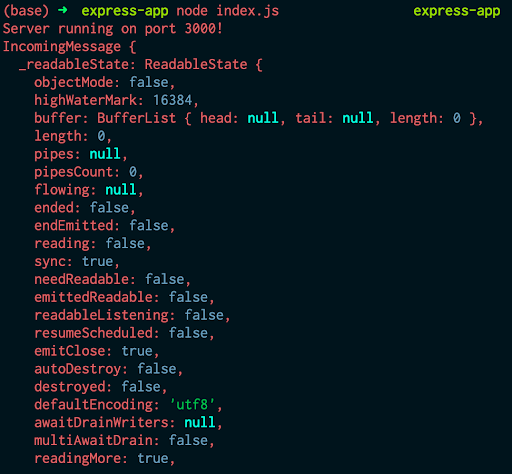

При выполнении node index.js для запуска сервера и переходе на localhost:3000 в консоли отображается много ненужной информации:

Если вместо этого использовать console.log('%s', req), чтобы не выводить объект полностью, много информации получить не удастся:

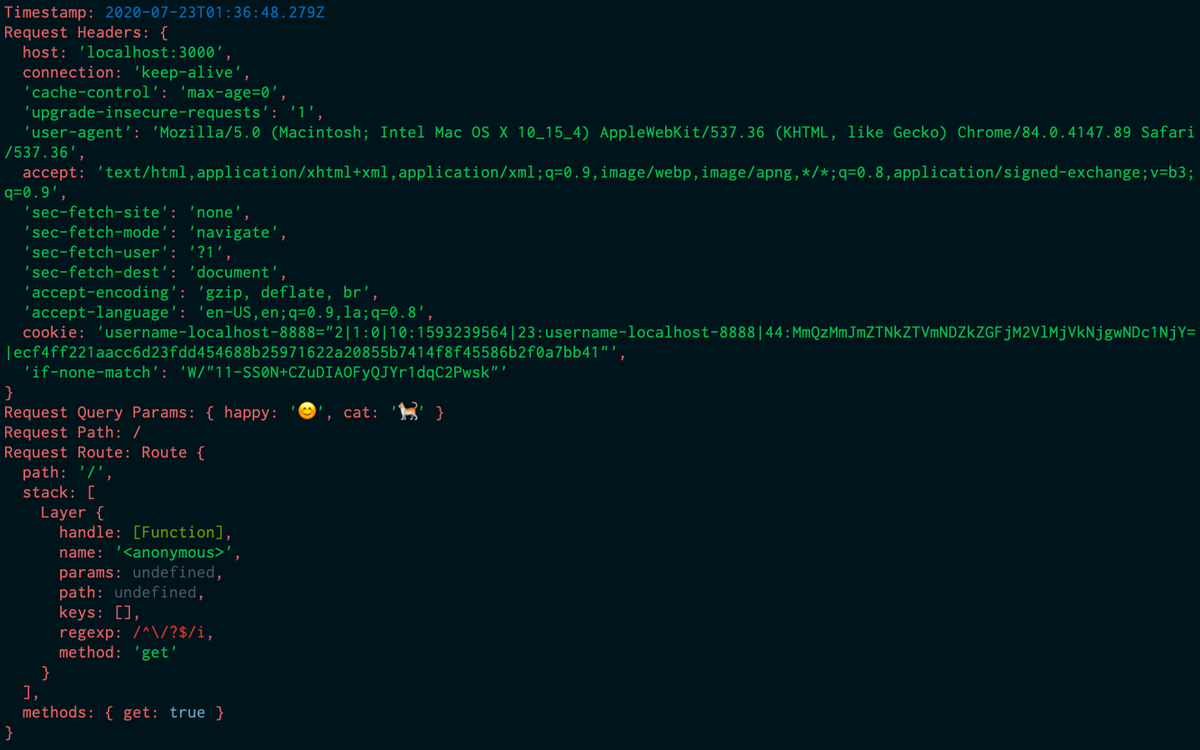

Можно написать собственную функцию логирования, которая будет выводить только нужные данные, однако сначала следует определиться, какая именно информация необходима. Несмотря на то, что в центре внимания обычно оказывается содержание сообщения, в реальности часто необходимо получить дополнительную информацию, которая включает:

- метку времени – чтобы знать, когда произошли события;

- имя компьютера/сервера – если запущена распределённая система;

- идентификатор процесса – если запущено несколько процессов Node с помощью, например,

pm2; - сообщение – фактическое сообщение с неким содержимым;

- трассировку стека – если ошибка регистрируется;

- дополнительные переменные/информацию.

Кроме того, учитывая, что в любом случае всё выводится в потоки stdout и stderr, возникает необходимость вести журнал на разных уровнях, а также конфигурировать и фильтровать записи в журнале в зависимости от уровня.

Этого можно добиться, получив доступ к разным частям процесса process и написав несколько строчек кода на JavaScript. Однако Node.js замечателен тем, что в нём уже есть экосистема npm и несколько библиотек, которые можно использовать для этих целей. К ним относятся:

pino;winston;- roarr;

- bunyan (эта библиотека не обновлялась в течение двух лет).

Часто предпочтение отдаётся pino, потому что она быстрая и обладает собственной экосистемой. Посмотрим, как pino может помочь с ведением журнала. Ещё одно преимущество этой библиотеки – пакет express-pino-logger, который позволяет регистрировать запросы.

Установим pino и express-pino-logger:

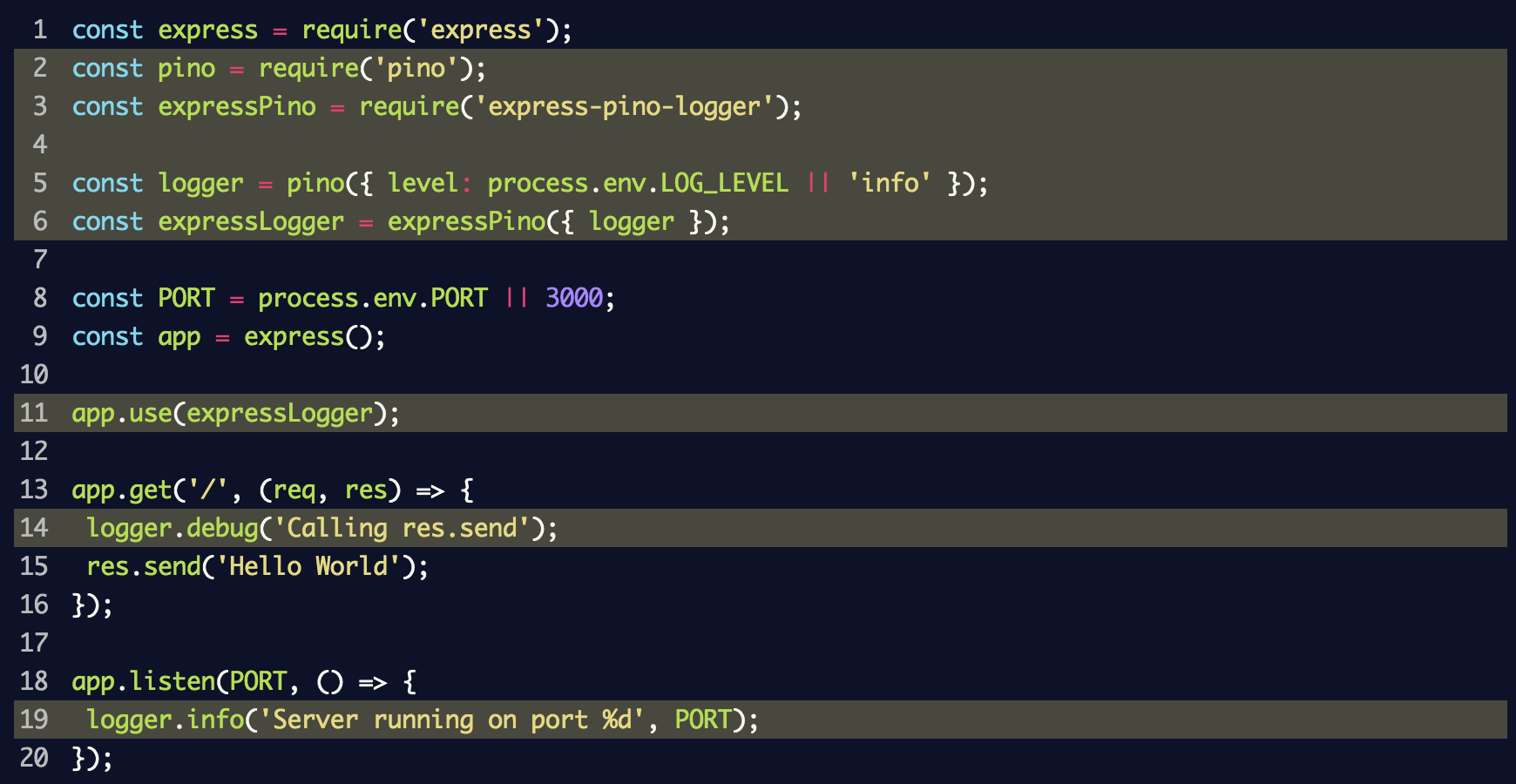

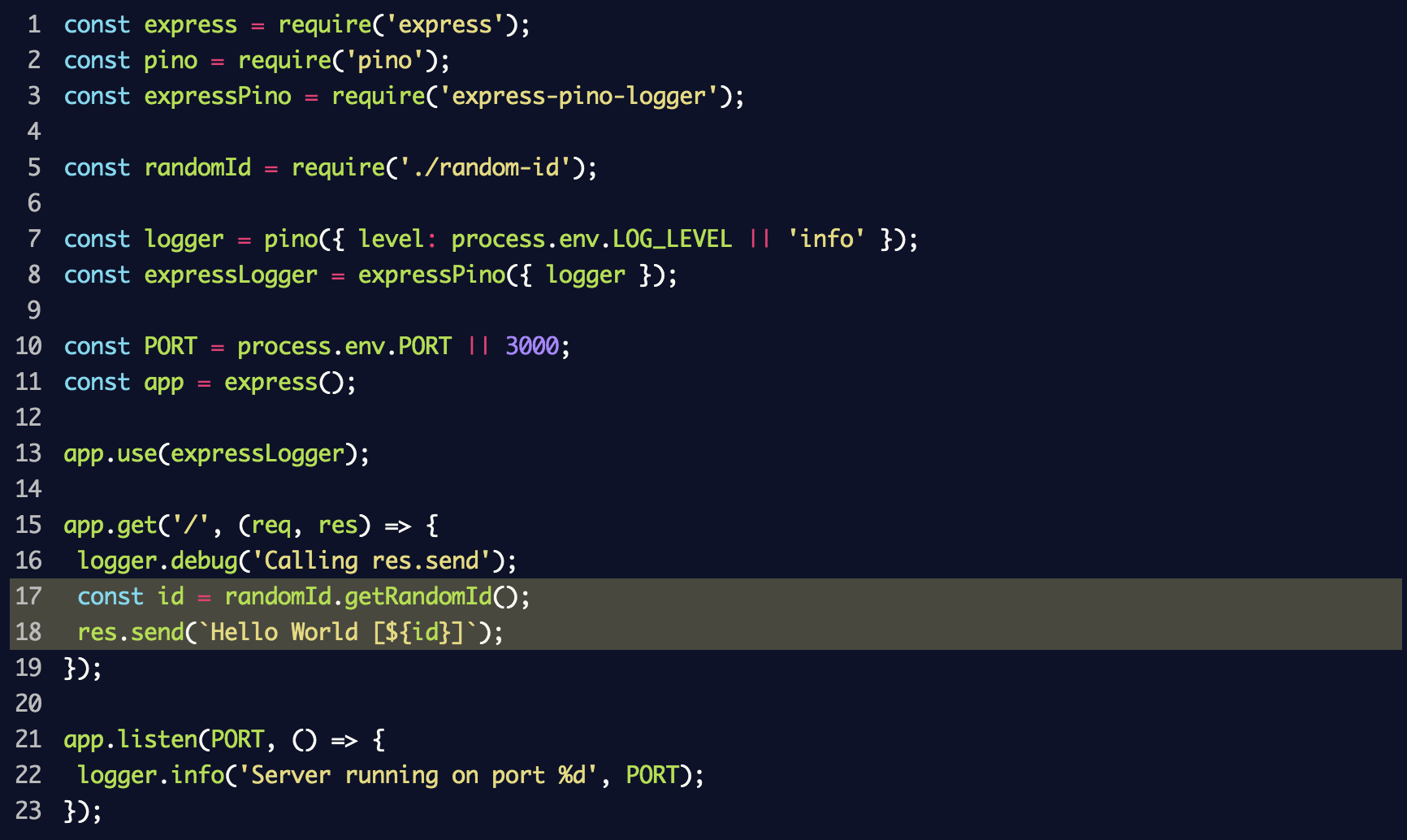

После этого обновляем файл index.js, чтобы использовать регистратор событий и межплатформенное ПО:

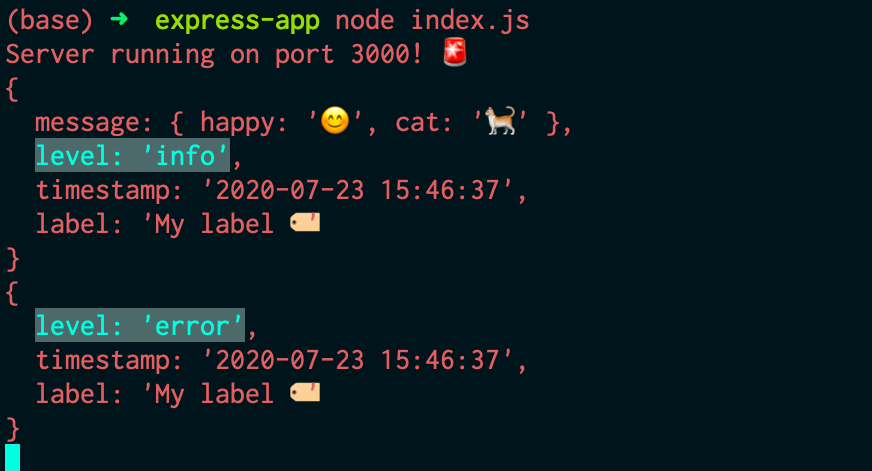

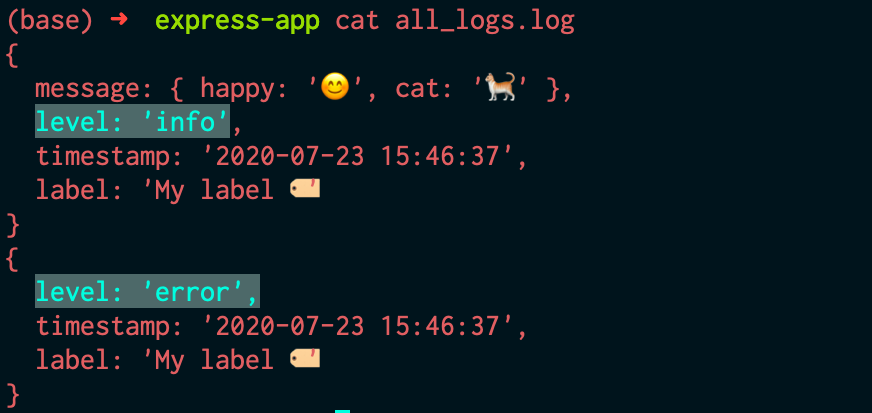

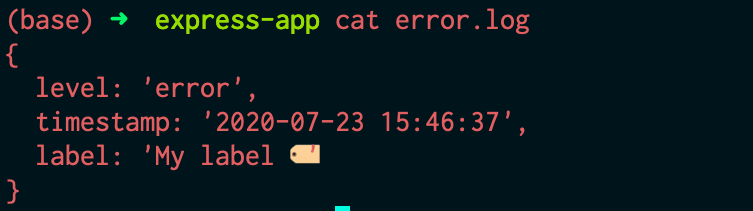

В этом фрагменте создали экземпляр регистратора событий logger для pino и передали его в express-pino-logger, чтобы создать новое межплатформенное ПО для регистрации событий, с которым можно вызвать app.use. Кроме того, console.log заменили при запуске сервера на logger.info и добавили logger.debug к маршруту, чтобы отображать разные уровни журнала.

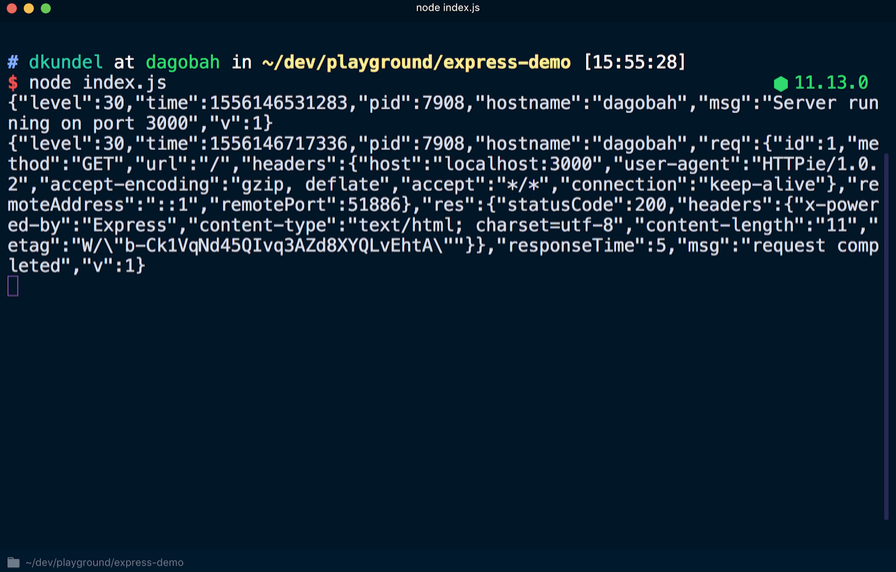

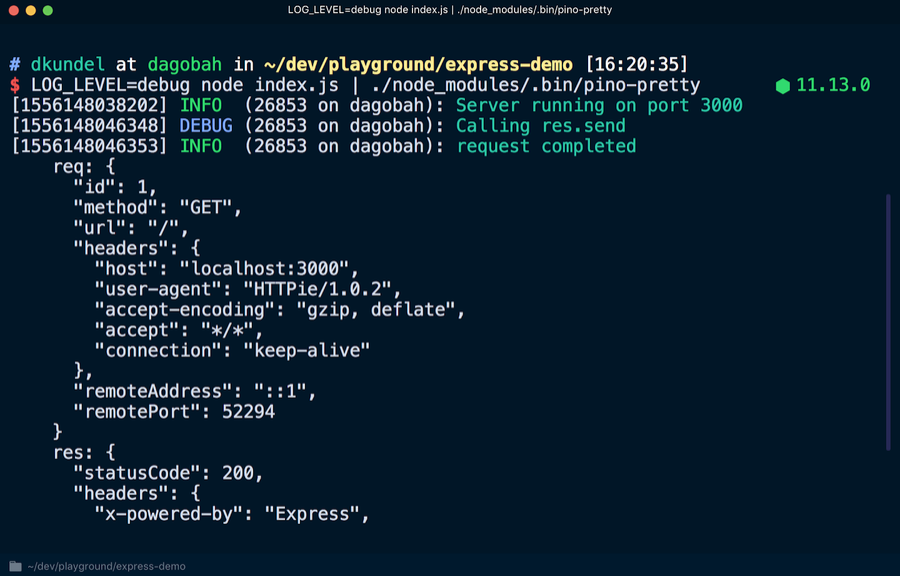

Если вы перезапустите сервер, повторно выполнив node index.js, то получите на выходе другой результат, при котором каждая строка/линия будет выводиться в формате JSON. Снова переходим на localhost:3000, чтобы увидеть ещё одну новую строку в формате JSON.

Среди данных в формате JSON можно обнаружить упомянутую ранее информацию, например метку времени. Также отмечаем, что сообщение logger.debug не было выведено. Для того чтобы сделать его видимым, необходимо изменить установленный по умолчанию уровень журнала. После создания экземпляра регистрации событий logger было установлено значение process.env.LOG_LEVEL. Это означает, что можно изменить значение или принять значение info по умолчанию. Запуская LOG_LEVEL=debug node index.js, изменяем уровень журнала.

Прежде чем это сделать, необходимо решить проблему выходного формата, который не слишком удобен для восприятия в настоящий момент. Этот шаг делается намеренно. Согласно философии pino, в целях производительности необходимо перенести обработку записей в журнале в отдельный процесс, передав выходные данные (с помощью оператора |). Процесс включает перевод выходных данных в формат, более удобный для восприятия человеком, или их загрузку в облако. Эту задачу выполняют инструменты передачи под названием transports. Ознакомьтесь с документацией по инструментам transports и вы узнаете, почему ошибки в pino не выводятся через stderr.

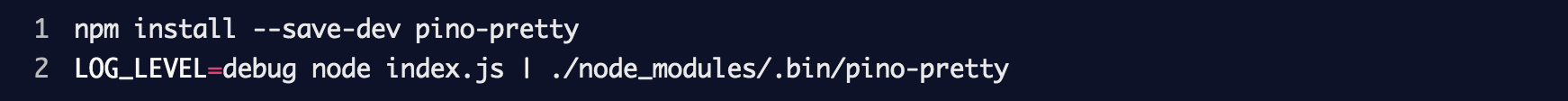

Чтобы просмотреть более удобную для чтения версию журнала, воспользуемся инструментом pino-pretty. Запускаем в терминале:

Все записи в журнале передаются с помощью оператора | в распоряжение pino-pretty, благодаря чему «очищаются» выходные данные, которые будут содержать только важную информацию, отображённую разными цветами. Если снова запросить localhost:3000, должно появиться сообщение об отладке debug.

Для того чтобы сделать записи в журнале более читабельными или преобразовать их, существует множество инструментов передачи. Их даже можно отобразить с помощью эмодзи, используя pino-colada. Эти инструменты будут полезны для разработки в локальных масштабах. При работе сервера в продакшене может появиться необходимость передавать данные журнала с помощью другого инструмента, записывать их на диск с помощью > для последующей обработки или делать две операции одновременно, используя определённую команду, например tee.

В документе также говорится о ротации файлов журнала, фильтрации и записи данных журнала в другие файлы.

Ведение журналов для библиотек

Изучив способы эффективной организации ведения журналов для серверных приложений, можно использовать ту же технологию для собственных библиотек.

Проблема в том, что в случае с библиотекой может понадобиться вести журнал в целях отладки, не нагружая при этом приложения клиента. Наоборот, клиент должен иметь возможность активировать журнал, если необходимо произвести отладку. По умолчанию библиотека не должна производить записи выходных данных, предоставив это право пользователю.

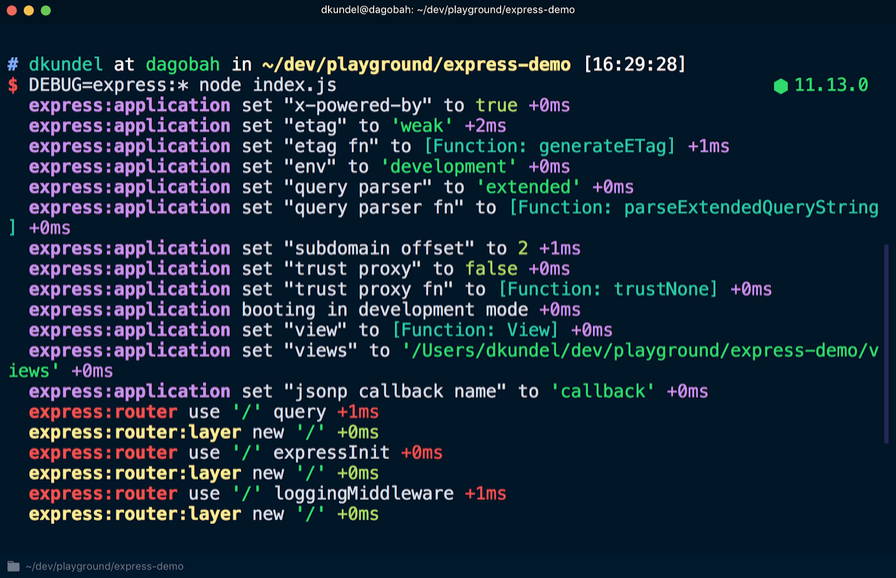

Хорошим примером этого является фреймворк express. Во внутренней структуре фреймворка express происходит много процессов, что может вызвать интерес к его более глубокому изучению во время отладки приложения. В документации для фреймворка express сказано, что к началу команды можно добавить DEBUG=express:* следующим образом:

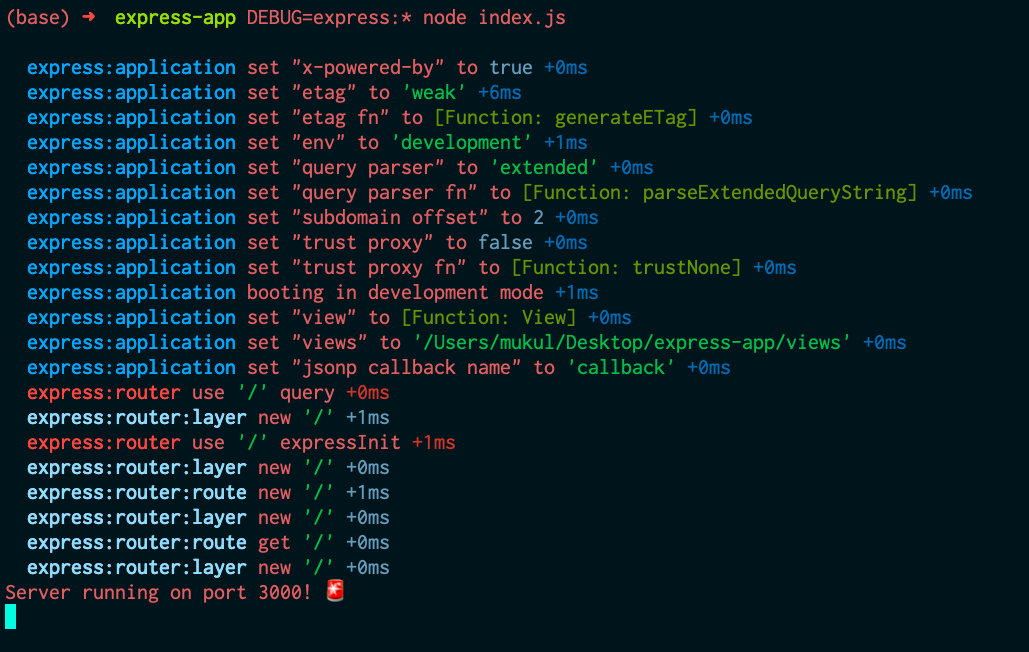

Если применить эту команду к существующему приложению, можно увидеть множество дополнительных выходных данных, которые помогут при отладке:

Эту информацию нельзя увидеть, если не активирован журнал отладки. Для этого существует пакет debug. С его помощью можно писать сообщения в «пространстве имён», и если пользователь библиотеки включит это пространство имён или подстановочный знак, который с ним совпадает, в их переменную среды DEBUG, то сообщения будут отображаться. Сначала нужно установить библиотеку debug:

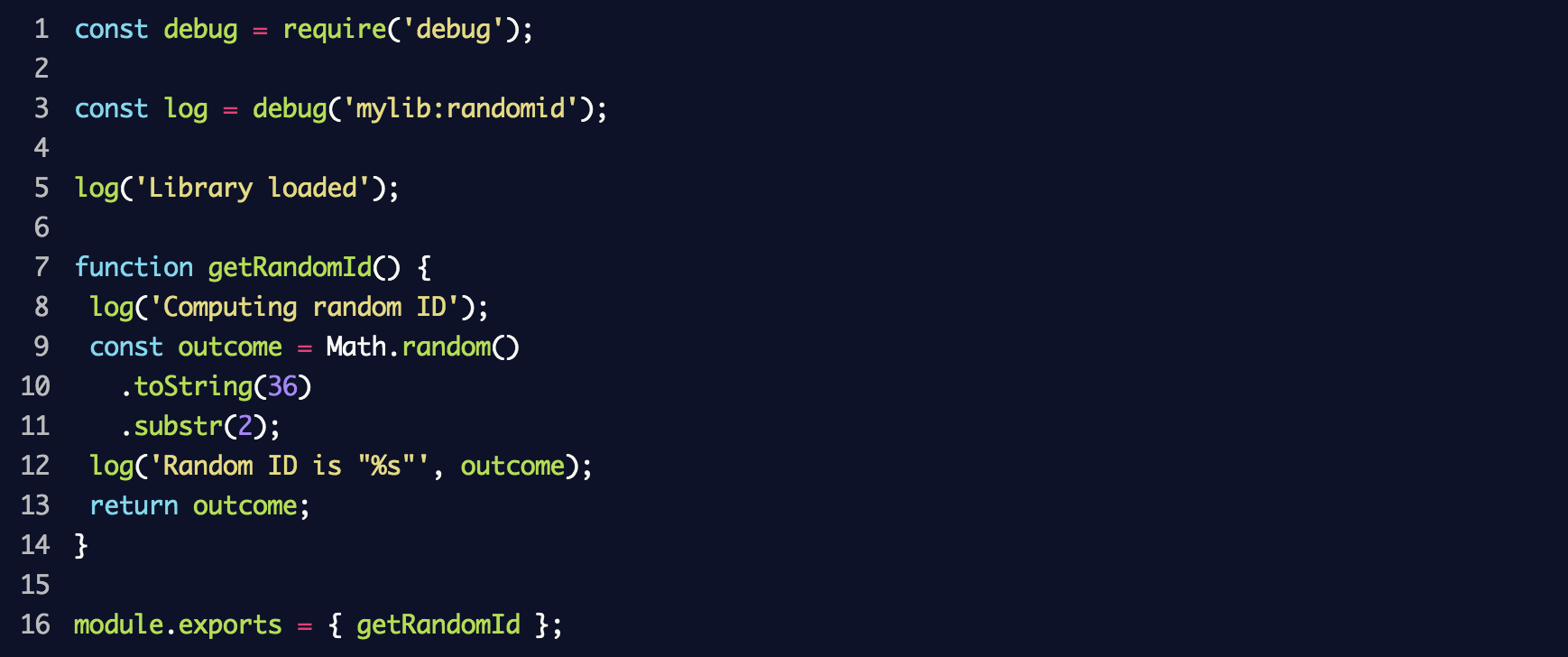

Создайте новый файл под названием random-id.js, который будет моделировать работу библиотеки, и поместите в него следующий код:

В результате будет создан новый регистратор событий debug с пространством имён mylib:randomid, в котором затем будут зарегистрированы два сообщения. Используем его в index.js из предыдущего раздела:

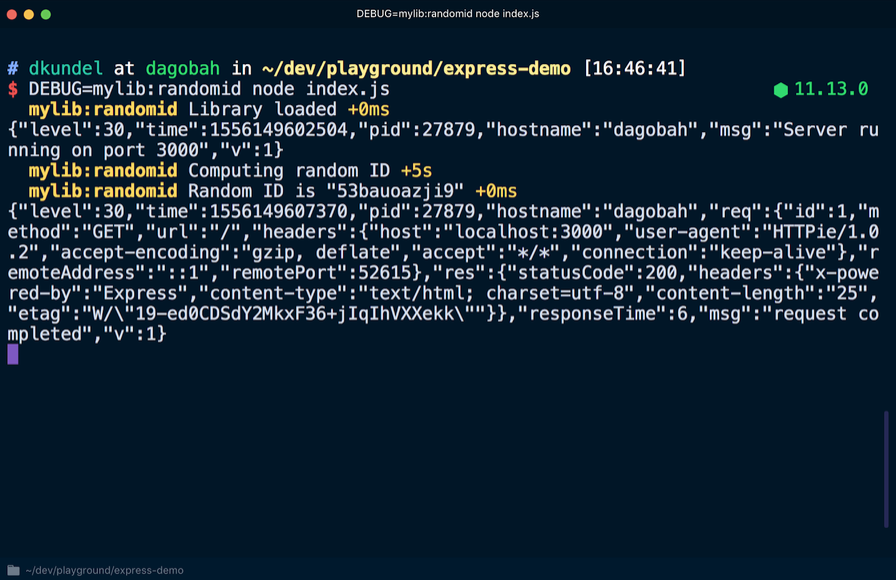

Если вновь запустить сервер, добавив в этот раз DEBUG=mylib:randomid node index.js, то отобразятся записи в журнале отладки для нашей «библиотеки»:

Если пользователи библиотеки захотят поместить информацию об отладке в записи журнала pino, они могут использовать библиотеку под названием pino-debug, созданную командой pino для корректного форматирования этих записей.

Устанавливаем библиотеку:

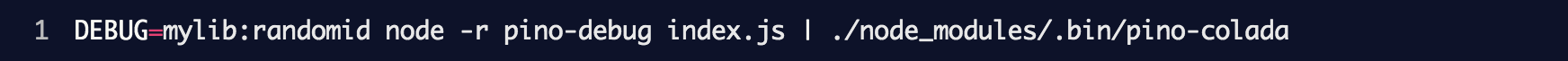

Перед первым использованием debug необходимо инициализировать pino-debug. Самый простой способ сделать это – использовать флаги -r или --require, чтобы запросить модуль перед запуском скрипта. Перезапускаем сервер, используя команду (при условии, что установлена pino-colada):

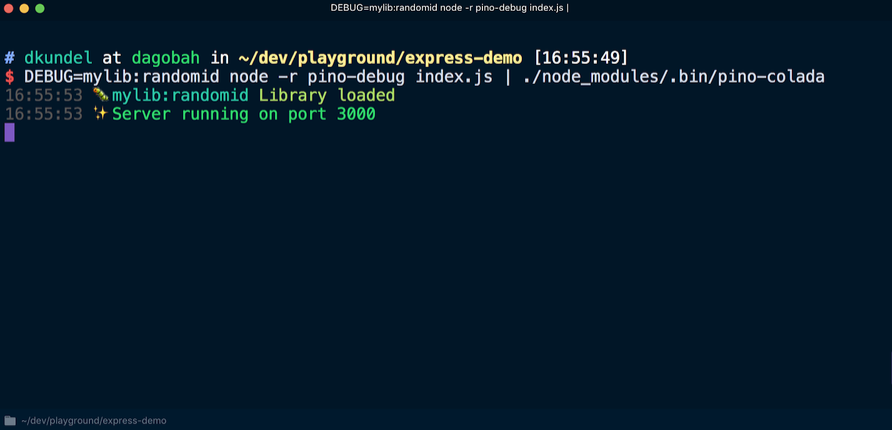

В результате записи журнала отладки библиотеки отобразятся так же, как и в журнале приложения:

Выходные данные интерфейса командной строки (CLI)

Последний случай, который рассматривается в этой статье, касается ведения журнала для интерфейса командной строки. Предпочтительно, чтобы журнал, регистрирующий события, связанные с логикой программы, вёлся отдельно от журнала для регистрации данных интерфейса командной строки. Для записи любых событий, связанных с логикой программы, нужно использовать определённую библиотеку, например debug. В этом случае можно повторно использовать логику программы, не будучи ограниченным одним сценарием использования интерфейса командной строки.

Создавая интерфейс командной строки с помощью Node.js, можно добавить различные цвета, блоки с изменяемым значением или инструменты форматирования, чтобы придать интерфейсу визуально привлекательный вид. Однако при этом нужно держать в уме несколько сценариев.

По одному из них интерфейс может использоваться в контексте системы непрерывной интеграции (CI), и в этом случае лучше отказаться от цветового форматирования и визуально перегруженного представления результатов. В некоторых системах непрерывной интеграции установлен флаг CI. Удостовериться, что вы находитесь в системе непрерывной интеграции, можно с помощью пакета is-ci, который поддерживает несколько таких систем.

Некоторые библиотеки, например chalk, определяют системы непрерывной интеграции и отменяют вывод в консоль цветного текста. Давайте посмотрим, как это работает.

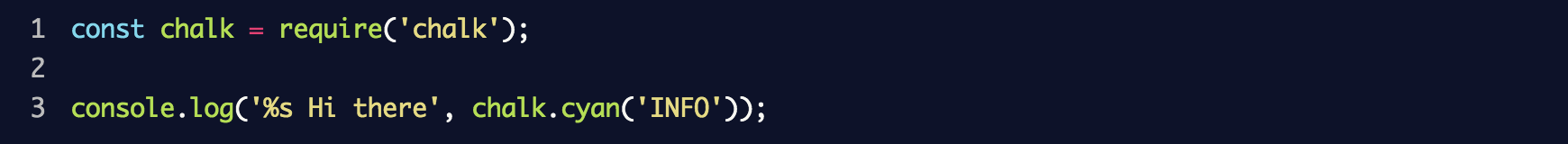

Установите chalk с помощью команды npm install chalk и создайте файл под названием cli.js. Поместите в файл следующие строки:

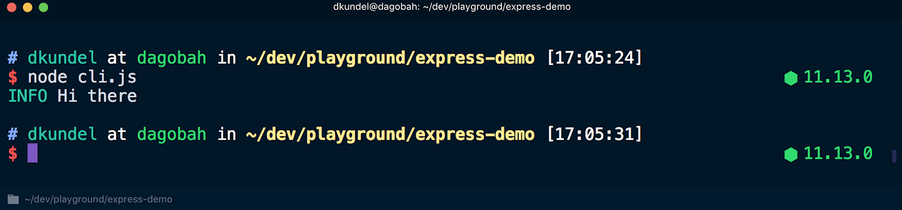

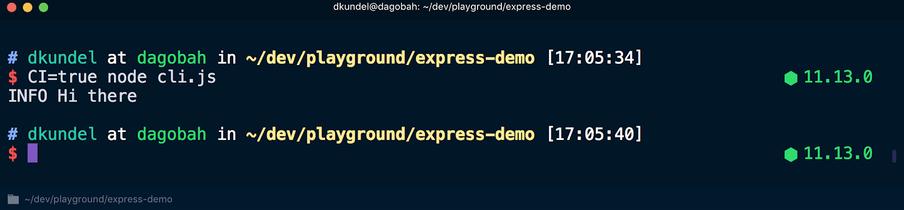

Теперь, если выполнить этот скрипт с помощью node cli.js, результаты будут представлены с использованием разных цветов:

Но если выполнить скрипт с помощью CI=true node cli.js, цветовое форматирование текстов будет отменено:

В другом сценарии, о котором стоит помнить, stdout запущен в режиме терминала, т.е. данные выводятся на терминал. В этом случае результаты можно красиво отобразить с помощью boxen. В противном случае выходные данные, скорее всего, будут перенаправлены в файл или куда-то ещё.

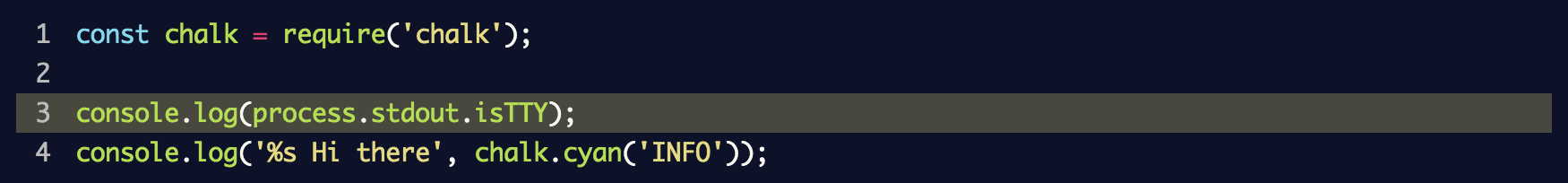

Проверить работу потоков stdin, stdout или stderr в режиме терминала можно, посмотрев на атрибут isTTY соответствующего потока. Например, process.stdout.isTTY. TTY означает «телетайп» (teletypewriter) и в данном случае предназначен специально для терминала.

Значения могут различаться для каждого из трёх потоков в зависимости от того, как были запущены процессы Node.js. Подробную информацию об этом можно найти в документации Node.js, в разделе «Ввод/вывод процессов».

Посмотрим, как значение process.stdout.isTTY различается в разных ситуациях. Обновляем файл cli.js, чтобы проверить его:

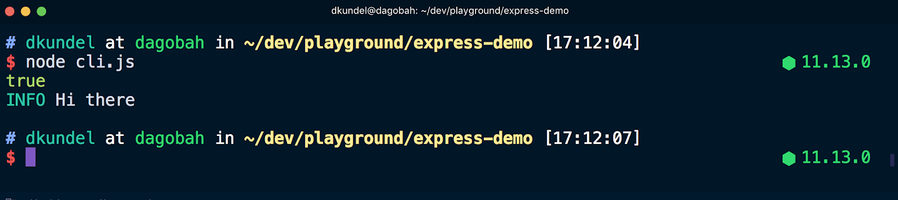

Теперь запускаем node cli.js в терминале и видим слово true, после которого цветным шрифтом отображается сообщение:

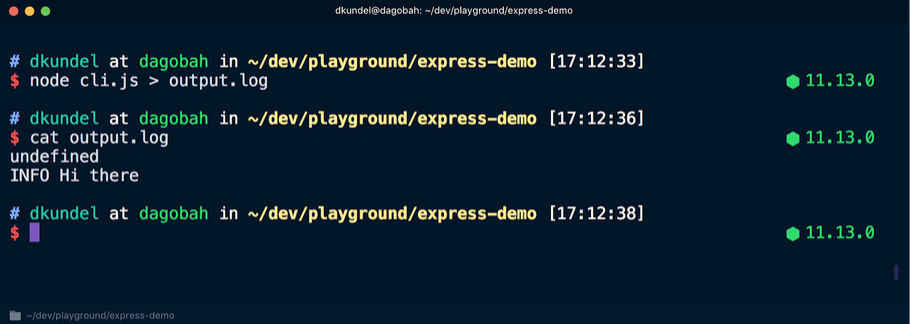

После этого повторно выполняем команду, но перенаправляем выходные данные в файл, а затем просматриваем содержимое:

В этот раз в терминале появилось слово undefined, за которым следует сообщение, отображённое бесцветным шрифтом, поскольку перенаправление потока stdout вывело его из режима терминала. Здесь chalk использует инструмент supports-color, который с точки зрения внутренней структуры проверяет isTTY соответствующего потока.

Такие инструменты, как chalk, выполняют эти действия самостоятельно. Однако, разрабатывая интерфейс командной строки, нужно всегда помнить о ситуациях, когда интерфейс работает в системе непрерывной интеграции или осуществляется перенаправление выходных данных. Эти инструменты помогают использовать интерфейс командной строки на более высоком уровне. Например, данные в терминале можно организовать более структурировано и, если isTTY имеет значение undefined, переключиться на более простой способ анализа.

Заключение

Начать использовать JavaScript и внести первую строчку в журнал консоли с помощью console.log довольно просто. Но перед тем как развернуть код в продакшене, следует учесть несколько аспектов использования журнала. Данная статья является лишь введением в различные способы и решения, применяемые при организации журнала событий. Она не содержит всего, что вам необходимо знать. Поэтому рекомендуется обращать внимание на удачные проекты с открытым кодом и отслеживать, каким образом в них решена проблема логирования и какие инструменты используются при этом. А теперь попробуйте сами вести логи без вывода данных в консоль.

Если вы знаете другие инструменты, о которых стоит упомянуть, напишите о них в комментариях.

Для начала повторю то, что каждый разработчик должен знать об этом — надо предохраняться.

Зачем надо логгировать

Вы запустили серверное приложение у которого нету GUI для отображения своего состояния, а знать очень хочется. Самое простое и очевидное решение, выводить какие то сообщения куда то — stdout/stderr, файлики, syslog, что-то более извращенное. Это и есть логирование (спасибо кэп).

Перечислю основные задачи, которые могут решаться при помощи логгирования:

- Анализ поведения и производительности. Здесь надо заметить, что для анализа производительности, лучше использовать проббирование (н-р twitter zipkin получает данные через пробы в коде). К поведению относится все, что сейчас происходит.

- Анализ и диагностика проблем. Тут очевидно — в приложении критическая ситуация 1/0 (причем не важно когда она произошла, приложение вот вот издохнет), что делать? Правильно — залоггировать это. О об этом подробнее чуть ниже.

- Всякие левые сценарии использования.

Как логгировать

Писать в логи надо и много и мало. Настолько мало, чтобы понять в каком состоянии приложение сейчас, и настолько много, чтобы если приложение рухнуло понять почему.

То есть пишем, что вы сами считаете нужным, для того, чтобы вам было понятно, что происходит. Но надо подчеркнуть, что логгирование ест ресурсы приложения — так что не надо увлекаться, я видел приложение однажды, где логгирование жрало примерно 50% производительности (это конечно же ппц не надо так делать).

Если происходит ошибка/исключение и тд, вам нужно понять почему. В этот самый момент, пишем в логи все (да, абсолютно все), что необходимо для понимания, почему это случилось (здесь как раз надо писать много — не надо скромничать).

Уровни логов

Очевидно, что не всякое сообщение имеет одинаковую важность. Обычно большинству приложении достаточно 5 уровней логгов — я использую название уровней к которым привык с slf4j:

- ERROR — приложение в критическом положении, требуется внимание человека для продолжения. Появляется довольно редко, но метко. Я использую его для очень низкоуровневых вещей или для необработанных исключений

- WARN — произошло что-то необычное, выбивающееся из обычного сценария, но приложение умное и восстановило свою работу само. Я использую этот уровень в обрабочиках ошибок.

- INFO — что сейчас происходит

- DEBUG — что сейчас происходит, более подробно

- TRACE — пишем как в твиттер, все что не попадя.

Далее будут небольшое сравнение логгеров, которые я опробовал в node.js и что в них не так, с учетом написанного выше.

log4js

Это первое на что я посмотрел, в надежде что это хоть сколько нибудь будет похоже на log4j.

Маленький пример:

var log4js = require('log4js');

var logger = log4js.getLogger();

logger.debug("Some debug messages");

В консоли появится цветное сообщение:

[2014-01-13 20:41:22.604] [DEBUG] [default] - Some debug messages

В этом логгере есть необходимый минимум. Есть фильтрация сообщений по уровню и категории (имени логгера), время сообщения и возможность его изменить. Для вывода сообщений использутся util.format — поэтому поддерживаются те же опции форматирования, что и в console.log.

winston

Его часто рекомендуют на SO. Я лично ни кому бы его не рекомендовал.

Пример:

var winston = require('winston');

winston.info('Hello distributed log files!');

Что будет в консоли:

info: Hello distributed log files!

В этом месте был небольшой опус о том, что winston не позволяет выдавать время записей, благодаря Keenn, оказалось, что все таки можно, но эти опции (timestamp: true у транспортов) выключены по умолчанию, что очень странно.

bunyan

В отличии от двух предыдущих библиотек этот логгер вместо текста выдает JSON. Создатель решил, что логи все равно разбираются машиной (коллекторами логгов), то пусть это будет машиночитаемый формат. Это хорошо подходит под первый сценарий логирования (очевидно, что собирать пробы руками — это как минимум глупо), но не всегда используются коллекторы. Что лично мне показалось странным, или лучше сказать неудачным решением:

- JSON для второго сценария, который на мой взгляд наиболее разумное применение логирования, это как искать в ассемблерном коде ошибку в С++ коде. Логи читают люди. Если приложение небольшое, коллектор логов избыточен, а если появился, то нужно просто добавить для него обработчик.

- Если говорить об организации кода, то что обработчик логгера выплевывает и куда, это полностью ответственность обработчика — то есть по идее вывод JSON можно прикрутить к любой библиотеке которая позволяет писать свои обработчики/форматтеры.

- У bunyan есть одна фича — сериализаторы для пользовательских типов. Вместе с сообщением логгеру скармливается пачка объектов и он их сериализует. Если посмотреть на это под другим углом:

сериализация — это просто JSON представление объекта; каждый объект может определить у себя в прототипе метод toJSON и он будет вызван при JSON.stringify — вот и сериализатор; теперь как его вывести — абсолютно все логгеры позволяют выводить json представление объектов специальным флагом (для util.format это %j). То есть фича вроде как достаточно очевидная.

Я честно признаюсь, опыта использования этого логгера у меня почти нет (я пробовал его прикрутить самым первым — все таки joyent использует его), если мне покажут/укажут/ткнут носом, что в нем крутого, я с радостью послушаю и приму к сведению. Хотя я заметил, что есть простейшая организация иерархий логгеров, но ее использовать мне не показалось удобным.

intel

Это самое лучшая библиотека для логгирования, которая мне попалась. В плане конфигурации у меня к ней не было ни каких нареканий. Почему она мне понравилась:

- Как уже сказал, у нее очень удобная конфигурация, настроить можно все в одном месте. У логгеров есть обработчики, у обработчиков есть форматтеры сообщений, фильтры по сообщениям и уровням. Почти сказка.

- Иерархии логгеров, кто пришел н-р с slf4j считает это само собой разумеющимся, однако же только в этой либе это было нормально реализовано. Что такое иерархия логгов:

у каждого логгера есть родитель (полное имя логгера задается перечеслением через точку всех родителей), задавая конфигурацию родителя можно выставить опцию, чтобы все дети автоматически получали ее же (log propagation). То есть я могу например создать несколько родительских логеров, задать им обработчики (н-р на уровни ERROR и WARN слать email) и все дети будут использовать их же, но более того если мне нужно заглушить какое то дерево логгов, я просто специализирую конфигурацию для его полного пути. И все это в одном месте и один раз. - Очень разумная подмена стандартной console. Разрешаются пути относительно текущей рабочей директории, что позволяет без проблем конфигурировать вывод console.log и получить плюшки логгера.

После непродолжительно использования (я заметил, что в этом проекте форматированию уделяется столько же мало внимания как и в других), я сделал несколько пулл реквестов, чтобы добавить возможность вывода миллисикунд, поддержку форматированного вывода ошибок, но после непродолжительного общения с автором и 4-5 пулл реквестов, стало очевидно, что автор хочет идти своей дорогой и я форкнул проект добавив, то о чем мечтал.

Поковырявшись в коде я заметил, что автор оптимизировал код для бенчмарка. Свое мнение о таких вещах я лучше оставлю при себе.

Что я изменил в самой либе, оставив не тронутыми обработчики:

- Выкинул все такие оптимизации для бенчмарка — писать такое себя не уважать.

- Формат сообщений в intel: нужно у полей объекта-записи указывать их тип (н-р ‘[%(date)s] %(name)s:: %(message)s’), но ведь типы этих полей известны — так зачем нужно их указывать. Вместо этого я взял формат сообщений из logback.

- Для форматирования аргументов при логгировании используется свой собственный аналог util.format причем со своими ключами и он же используется при подмене консоли, то есть если сторонняя либа напишет, что то в консоль мы получим не то что ожидаем. Естественно это было заменено на util.format

- После некоторого профайлинга стало очевидно, что все время уходит на форматирование сообщений. Так как формат обычно задается один раз разумно его оптимизировать, что и было сделано, с помощью new Function формат собирается один раз, а не при каждом вызове.

Есть еще некоторые мелочи, но это будет уже какой то пиар, а не сравнение.

Чтобы показать некоторую пузомерку отличий — маленький замер скорости (код бенчмарка, все версии последние). Просто выведем logger.info(с сообщением):

$ node benchmark/logging.js

console.info x 1,471,685 ops/sec ±0.79% (95 runs sampled)

rufus.info x 200,641 ops/sec ±1.04% (84 runs sampled)

winston.info x 65,567 ops/sec ±0.80% (96 runs sampled)

intel.info x 56,117 ops/sec ±1.51% (92 runs sampled)

bunyan.info x 86,970 ops/sec ±1.71% (81 runs sampled)

log4js.info x 45,351 ops/sec ±3.25% (79 runs sampled)

Fastest is console.info

При вот таком формате сообщений ‘[%date] %logger:: %message’, который разумно ожидать всегда. Попробуем заменить на стандартное сообщение в intel, чтобы ощутить всю мощь оптимизаций:

$ node benchmark/logging.js

console.info x 1,569,375 ops/sec ±0.66% (95 runs sampled)

rufus.info x 199,138 ops/sec ±0.81% (97 runs sampled)

winston.info x 66,864 ops/sec ±0.84% (91 runs sampled)

intel.info x 173,483 ops/sec ±5.64% (59 runs sampled)

bunyan.info x 86,357 ops/sec ±1.02% (94 runs sampled)

log4js.info x 49,978 ops/sec ±2.29% (81 runs sampled)

Fastest is console.info

Интересное изменение.

Вообщем, то все. Если кому интересно — форк (я скорее всего не буду принимать feature запросы, так как писал для себя в свободное время, с багами и пулл реквестами добро пожаловать).

Как всегда, надеюсь в комментариях найти что-то новое для себя. Ошибки пожалуйста в личку.

Logging refers to the process of recording some detail about application

behavior and storing it in a persistent medium. Having a good logging system is

a key feature that helps developers, sysadmins, and support teams to monitor the

behavior of an application in production, and solve problems as they appear.

Troubleshooting is not the only valid reason to log. Product teams and designers

also use logs to track user behavior (such as A/B testing), and the marketing

department can measure the impact of a specific marketing campaign through the

logs.

In short, logging can provide value for every department in an organization

provided that the correct things are logged in the right format, and analyzed

using a specialized tool.

This tutorial will explain the basics of logging in Node.js starting from the

built-in console module, then proceed to topics like choosing a logging

framework, using the right log format, structuring your messages and sending

them to a log management system for long-term storage and further analysis.

🔭 Want to centralize and monitor your Node.js logs?

Head over to Logtail and start ingesting your logs in 5 minutes.

Prerequisites

Before you proceed with this article, ensure that you have a recent version of

Node.js and npm installed locally on your

machine. Also, you can sign up for a free

Logtail account if you’d like to centralize

your application logs in one place but this is not required to follow through

with this tutorial.

What should you log?

Before we discuss the mechanics of Node.js logging, let’s discuss the general

things that you should be logging in a Node.js application. It’s possible to log

too much or too little so these general guidelines are helpful when determining

what to log:

- Think about the critical aspects of your program and identify which

information you will want to debug an issue in production. - Log as much as possible in development at the appropriate level and turn off

the superfluous details in production through an environmental variable. You

can always turn them back on if you need to trace a problem more closely. - Log data that can help you profile your code in the absence of specialized

tools. - Log your Node.js errors, whether they are operational or not.

- Log uncaught exceptions and unhandled promise rejections at the highest log

level so that it can be fixed promptly.

It might also be helpful to think about what not to log:

- Don’t log sensitive user information such as passwords, credit card details.

- Avoid logging anything that can cause you to fall afoul of any relevant

regulations in places where your business operates.

Following these simple rules will help if you’re just getting started with

logging. As your application evolves, you’ll figure out how valuable your logs

are and update your logging strategy accordingly.

Logging using the console module

The most common way to log in Node.js is by using methods on the console

module (such as log()). It’s adequate for basic debugging, and it’s already

present in the global scope of any Node.js program. All the methods provided on

the console module log to the console, but there are ways to redirect the

output to a file as you’ll see shortly. These are the console methods commonly

used for logging in Node.js:

console.error(): used for serious problems that occurred during the

execution of the program.console.warn(): used for reporting non-critical unusual behavior.console.trace(): used for debugging messages with extended information (a

stack trace) about application processing.console.info(),console.log(): used for printing informative messages

about the application.

Let’s look at a quick example of using the logging methods on the console

object. Create a main.js file in the root of your project, and populate it

with the following code:

const fruits = [

'apple',

'banana',

'grapefruit',

'mango',

'orange',

'melon',

'pear',

];

const basket = [];

function addToBasket(item) {

if (basket.length < 5) {

// log the action

console.info(`Putting "${item}" in the basket!`);

basket.push(item);

} else {

// log an error if the basket is full

console.error(`Trying to put "${item}" in the full basket!`);

}

}

for (const fruit of fruits) {

addToBasket(fruit);

}

// log the current basket state

console.log('Current basket state:', basket);

Save the file, then run the program using the command below:

You should observe the following output:

Output

Putting "apple" in the basket!

Putting "banana" in the basket!

Putting "grapefruit" in the basket!

Putting "mango" in the basket!

Putting "orange" in the basket!

Trying to put "melon" in the full basket!

Trying to put "pear" in the full basket!

Current basket state: [ 'apple', 'banana', 'grapefruit', 'mango', 'orange' ]

Now that we can log to the console, let’s look at a way to store our log output

in a log file for further processing. You can do this by redirecting the output

of the program to a file as shown below:

node main.js > combined.log

You’ll notice that the following error logs were printed to the console:

Output

Trying to put "melon" in the full basket!

Trying to put "pear" in the full basket!

Meanwhile, you’ll also notice that a new combined.log file is present in the

current working directory. If you inspect the file in your editor or with cat,

you’ll see the following contents:

Output

Putting "apple" in the basket!

Putting "banana" in the basket!

Putting "grapefruit" in the basket!

Putting "mango" in the basket!

Putting "orange" in the basket!

Current basket state: [ 'apple', 'banana', 'grapefruit', 'mango', 'orange' ]

The reason why the error logs were printed to the console instead of being sent

to the combined.log file is that the error() method prints its messages to

the standard error (stderr) and the > operator works for messages printed to

the standard output (stdout) alone (both info() and log() print to

stdout).

To ensure that error logs are also placed in a file, you need to use the 2>

operator as shown below:

node main.js > main.log 2> error.log

Using > main.log lets you redirect the stdout contents to the main.log

file while 2> error.log redirects the contents of stderr to the error.log

file. You can inspect the contents of both files using cat as shown below:

This outputs the following:

Output

Putting "apple" in the basket!

Putting "banana" in the basket!

Putting "grapefruit" in the basket!

Putting "mango" in the basket!

Putting "orange" in the basket!

Current basket state: [ 'apple', 'banana', 'grapefruit', 'mango', 'orange' ]

Next, display the contents of the error.log file:

Which should yield the following output:

Output

Trying to put "melon" in the full basket!

Trying to put "pear" in the full basket!

If you want to log both types of messages to a single file, you can do the

following:

node main.js > app.log 2>&1

This would redirect the stdout file descriptor to the app.log and redirect

stderr to stdout.

Output

Putting "apple" in the basket!

Putting "banana" in the basket!

Putting "grapefruit" in the basket!

Putting "mango" in the basket!

Putting "orange" in the basket!

Trying to put "melon" in the full basket!

Trying to put "pear" in the full basket!

Current basket state: [ 'apple', 'banana', 'grapefruit', 'mango', 'orange' ]

To learn more about input or output redirection, you can read more about file

descriptors on the wooledge pages.

Don’t forget to check out the

Node.js Console documentation to learn

more about the other features of the console module.

Why you need a logging framework

Using the methods on the console module is a good way to get started with

Node.js logging, but it’s not adequate when designing a logging strategy for

production applications due to its lack of convenience features like log levels,

structured JSON logging, timestamps, logging to multiple destinations, and more.

These are all features that a good logging framework takes care

of so that you can focus on the problem you’re trying to

solve instead of logging details.

There are a lot of options out there when it comes to logging frameworks for

Node.js. They mostly offer similar features so choosing between them often boils

down to the one whose API you love the most. Here’s a brief overview of the most

popular logging packages on NPM that you can check out:

- Winston: the most popular and

comprehensive logging framework for Node.js - Pino: offers an extensive feature-set and claims to be

faster than competing libraries. - Bunyan: provides structured JSON

logging out of the box. - Roarr: use this if you need a single

library for logging in Node.js and the browser.

In this tutorial, we’ll be demonstrating some basic features of a logging

framework through Winston since it remains the most popular logging framework

for Node.js at the time of writing.

Getting started with Winston

Winston is a multi-transport async

logging library for Node.js with rich configuration abilities. It’s designed to

be a simple and universal logging library with maximal flexibility in log

formatting and transports (storage). You can install Winston in your project

through npm:

After installing Winston, you can start logging right away by using the default

logger which is accessible on the winston module. Clear your main.js file

before populating it once more with the following code:

const winston = require('winston');

const consoleTransport = new winston.transports.Console();

winston.add(consoleTransport);

winston.info('Getting started with Winston');

winston.error('Here is an error message');

Before you can use the default logger, you need to set at least one transport

(storage location) on it because none are set by default. In the snippet above,

we’ve set the

Console transport

which means that subsequent log messages will be outputted to the Node.js

console. Run the program to see this in action:

You should observe the following output:

{"level":"info","message":"Getting started with Winston"}

{"level":"error","message":"Here is an error message"}

Notice that the default logger has been configured to format each log message as

JSON instead of plain text. This is done to ensure that log entries are

structured in a consistent manner that allows them to be easily searched,

filtered and organised by a log management system.

Without structured logging, finding and extracting the useful data that is

needed from your logs will be a tedious experience because you’ll likely need to

write a custom parsing algorithm for extracting relevant data attributes from

plain text messages, and this task can become quite complicated if the

formatting of each message varies from entry to entry.

Winston uses JSON by default, but it provides some other predefined options like

simple, cli, and logstash which you can investigate further. You can also

create a completely custom format by using winston.format. Under the hood,

this uses the logform module to format

the messages.

Since JSON is both human and machine-readable, it remains the go-to format for

structured logging in most Node.js applications. We recommend that you stick

with it unless you strongly prefer some other structured format (such as

logfmt for example).

Understanding Log levels

In the previous code block, you’ll notice the presence of the level property

in each log entry. The value of this property indicates how important the

message is to the application. Notably, this is absent in the native Console

module, and it’s one of the major reasons why its methods are unsuitable for

serious production-ready applications.

In general, log levels indicate the severity of the logging message. For

example, an info message is just informative, while a warn message indicates

an unusual but not critical situation. An error message indicates that

something failed but the application can keep working, while a fatal or

emergency message indicates that a non-recoverable error occurred and

immediate attention is needed to resolve the issue.

The exact log levels available to you will depend on your framework of choice,

although this is usually configurable. Winston provides six log levels on its

default logger and six corresponding methods which are ordered from the most

severe to the least severe below:

const levels = {

error: 0,

warn: 1,

info: 2,

http: 3,

verbose: 4,

debug: 5,

silly: 6

};

Severity ordering in Winston conforms to the order specified by the

RFC5424 document in which the most severe

level is numbered 0, and each subsequent level ascends numerically ensuring

that the least severe level has the highest number.

winston.error('error');

winston.warn('warn');

winston.info('info');

winston.verbose('verbose');

winston.debug('debug');

winston.silly('silly');

The log level for an entry has an important consequence when logging. It

determines if the entry will be emitted by the logger during program execution.

You can test this out by placing each of the six logging methods above in your

main.js file and executing it. You’ll notice that only the first three appear

in the Node.js console:

Output

{"level":"error","message":"error"}

{"level":"warn","message":"warn"}

{"level":"info","message":"info"}

That’s because the default logger is set to log at the info level by default.

This means that only messages with a minimum severity of info (or a maximum

number of 2) will be logged to the configured transport (the console in this

case). This behavior can be changed by customizing the level property on the

transport as shown below:

. . .

winston.add(consoleTransport);

consoleTransport.level = 'silly';

winston.error('error');

winston.warn('warn');

winston.info('info');

winston.verbose('verbose');

winston.debug('debug');

winston.silly('silly');

With the minimum severity level now set to silly, all the logging methods

above will now produce some output:

Output

{"level":"error","message":"error"}

{"level":"warn","message":"warn"}

{"level":"info","message":"info"}

{"level":"verbose","message":"verbose"}

{"level":"debug","message":"debug"}

{"level":"silly","message":"silly"}

It’s important to log at the appropriate level so that it’s easy to distinguish

between purely informative events and potentially critical problems that need to

be addressed immediately. Log levels also help to reduce the verbosity of

logging so that some messages are essentially turned off where they are not

needed. Usually, production environments will run the application at the info

level by default while testing or debugging environments typically run at the

debug or the lowest level in the hierarchy.

This setting is usually controlled through an environmental variable to avoid

modifying the application code each time the log level needs to be changed.

consoleTransport.level = process.env.LOG_LEVEL;

A starting point for your log entries

A good log entry should consist of at least the following three fields:

timestamp: the time at which the entry was created so that we can filter

entries by time.level: the log level, so that we can filter by severity.message: the log message that contains the details of the entry.

Using the default Winston logger gives us only two of the three properties, but

we can easily add the third by creating a custom logger. Update your main.js

file as shown below:

const winston = require('winston');

const logger = winston.createLogger({

level: 'info',

format: winston.format.json(),

transports: [new winston.transports.Console()],

});

logger.info('Info message');

Three basic things to configure on a custom logger are the minimum log level,

the format of the log messages, and where the logs should be outputted. This

logger above does not behave too differently from the default logger at the

moment, but we can easily customize it further.

For example, let’s add the missing timestamp field on all log entries. The way

to do this is by creating a custom format that combines the timestamp() and

json() formats as shown below:

const logger = winston.createLogger({

level: 'info',

format: winston.format.combine(

winston.format.timestamp(),

winston.format.json()

),

transports: [new winston.transports.Console()],

});

logger.info('Info message');

After configuring the logger as shown above, a timestamp field will be

included in each entry:

Output

{"level":"info","message":"Info message","timestamp":"2022-01-22T08:24:44.305Z"}

You can also configure the format of the datetime value in the timestamp filed

by passing an object to the timestamp() method as shown below. The string

accepted by the format property must be one that can be parsed by the

fecha module.

winston.format.timestamp({

format: 'YYYY-MM-DD HH:mm:ss',

})

This yields the following output:

{"level":"info","message":"Info message","timestamp":"2022-01-23 13:46:35"}

Writing good log messages

The way messages are crafted is essential to good logging practices. The whole

point of logging is to help you understand what is happening in your

application, so it’s necessary to adequately describe the details of each entry

using detailed and concise language so that your logs don’t turn out to be

useless when you need them the most. Some examples of bad log messages include

the following:

Output

Something happened

Transaction failed

Couldn't open file

Failed to load resource

Task failed successfully

Here are examples of better log messages:

Output

Failed to open file 'abc.pdf': no such file or directory

Cache hit for image '59AIGo0TMgo'

Transaction 3628122 failed: cc number is invalid

Adding context to your log entries

Another important way to furnish your log entries with useful details is by

adding extra fields to each JSON object aside from the three already discussed.

A good starting point for the data points that you can add to your logs include

the following:

- HTTP request data such as the route path or verb.

- IP addresses.

- Session identifiers.

- Order or transaction IDs.

- Exception details.

You can do so by passing an object as the second argument to each logging

method:

logger.info('Starting all recurring tasks', {

tag: 'starting_recurring_tasks',

id: 'TaskManager-1234729',

module: 'RecurringTaskManager',

});

This yields the following output:

Output

{"id":"TaskManager-1234729","level":"info","message":"Starting all recurring tasks","module":"RecurringTaskManager","tag":"starting_recurring_tasks","timestamp":"2022-01-23 14:51:17"}

If you add context to your log entries in this manner, you won’t need to repeat

the information in the message itself. This also makes it easy to filter your

logs, or to find a specific entry based on some criteria.

Storing logs in files

Logging to the console may be good enough in development, but it’s important to

record the entries into a more permanent location when deploying to production.

Winston provides a

File transport

to help you direct entries to a file. You can use it via the transports

property as shown below

const logger = winston.createLogger({

levels: logLevels,

level: 'trace',

format: winston.format.combine(

winston.format.timestamp(),

winston.format.json()

),

transports: [new winston.transports.File({ filename: 'combined.log' })],

});

This replaces the Console transport with the File transport so all emitted

entries will now be placed in the combined.log file. You can log to more than

one transport at once so you can log to both the console and a file using the

snippet below:

transports: [

new winston.transports.Console(),

new winston.transports.File({ filename: 'combined.log' }),

]

To prevent a log file from getting too big, you should rotate them through a

transport like the

winston-daily-rotate-file.

You can also use an external tool like

logrotate if you’re deploying to a

Linux-based operating system.

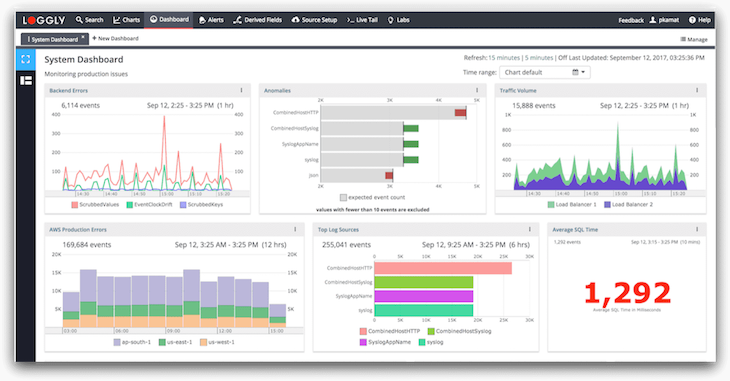

Aggregating your logs

Once you’re used to writing and reading logs, you’ll want to aggregate them in a

specialized log management tool. This helps you centralize your logs in one

place, and filter them to debug an issue or gather insights from them in various

ways. You can even discover usage patterns that could come in handy when

debugging specific issues, or create alerts to get notified when a specific

event occurs.

Logtail is a specialized log management tool

that integrates perfectly with several Node.js logging frameworks. To use it

with Winston, you’ll need to install the

@logtail/node and

@logtail/winston packages:

npm install @logtail/node @logtail/winston

Afterward, you can set Logtail as one of the transport options on your Winston

logger and log as normal. Note that you’ll need to

sign up for Logtail to retrieve your source

token. Ensure to replace the <your_source_token> placeholder below with this

token string.

const winston = require('winston');

const { Logtail } = require('@logtail/node');

const { LogtailTransport } = require('@logtail/winston');

// Create a Logtail client

const logtail = new Logtail('<your_source_token>');

const logger = winston.createLogger({

level: 'info',

format: winston.format.combine(

winston.format.timestamp(),

winston.format.json()

),

transports: [new winston.transports.Console(), new LogtailTransport(logtail)],

});

logger.info('Info message');

After executing the above snippet, you should see the following output in the

Live tail section on the Logtail dashboard.

For more information on how Logtail integrates with Node.js applications, please

see the full documentation.

Summary

- Logging is a necessary task in application development and it should be taken

seriously. - Due to the deficiencies of the Node.js

consolemodule, it is recommended

that a suitable logging framework is employed for this task. - Structured logging is key for automated processing (such as for alerting or

auditing). - Use JSON format for log entries to maintain human and machine readability.

- Always log at the appropriate level and turn off superfluous levels in

production. - Ensure all log entries have a timestamp, log level, and message.

- Improve your log entries with contextual information.

- Use a log management solution, such as Logtail, to aggregate and monitor your

logs as this can help you drastically improve the speed at which issues are

resolved.

Conclusion and next steps

We hope this article has helped you learn enough to get started with logging in

your Node.js applications. As the title suggests, this is only the starting

point of your logging journey, so feel free to do some more research on this

topic as needed. We also have specialized guides that provide more detail on

everything you can do with logging frameworks like

Winston

and Pino, and also some Node.js logging best practices to follow so

ensure to check those out as well.

Thanks for reading, and happy coding!

Centralize all your logs into one place.

Analyze, correlate and filter logs with SQL.

Create actionable

dashboards.

Share and comment with built-in collaboration.

Got an article suggestion?

Let us know

Next article

A Complete Guide to Pino Logging in Node.js

Learn how to start logging with Pino in Node.js and go from basics to best practices in no time.

→

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

Building SaaS products is hard. Making customers happy is even harder. I should know, I’ve built a start-up that failed!

But, not everything is that bad. I learned a lot. Now I maintain a few open-source Node.js projects at Sematext. It’s a monitoring SaaS. I joined to help make the log management features as good as they can be. If you’ve never heard that term before, my co-worker wrote this great introductory explanation of what is log management.

Let me tell you, the peeps here at Sematext take logs seriously! But, back to the topic at hand.

I want to take you on a trip to show what I’ve learned so far about logging in Node.js while working on open-source projects. Hopefully, it’ll help you improve your code, make it more robust, and ultimately help you solve bugs and errors more quickly.

There are a few things I want to explain before we dive into some code examples and configurations. Not knowing anything about logging is okay, don’t feel pressured into having to google for explanations. I’ll go over as much as I can without making this article boring. 😉

After the introductory explanations, I’ll jump into code examples and explain what tools to use and what not to use to make your logging setup solid.

Ready to go down the rabbit hole? Let’s go! PS. I really love this song. 😄

Why Is Logging Important?

Reading and analyzing logs and making sense of errors, why they happen, and what they break, is your first line of defense when battling production downtime. These logs tell you what’s happening in your app, what’s misbehaving, and what’s the root cause of the issue you want to fix. Logs help you solve problems and maintain maximum uptime.

Logging in Different Environments

First of all, I want you to know you can gather and monitor logs from several different environments.

- Application

- Containers (Docker / Kubernetes)

- Infrastructure

Based on what you need, and how you configure your infrastructure, you can gather and send logs directly from your Node.js app which is the first option – Application.

Or, you can output logs to stdout/stderr and use a log collector and shipper tool running as a DaemonSet (K8s) / persistent Docker container (Docker) to gather and send these logs to a central location which would work for option 2 – Containers.

Finally, you can write Node.js logs to a file that’s stored on your server, then use a log shipping tool that would natively run on the server to tail this file and send the content to your preferred location for safekeeping if you pick option 3 – Infrastructure.

What Data Should You Log?

Log as much as you can while staying within reason and not breaking the budget.

It makes sense to log as much as you can. With more data, you can visualize issues easier. The better structure you create for the logs, the easier you can solve issues that pop up. But, here’s the kicker. This will make it easier for you to create alerts based on the richness of data and the proper log structure.

I can’t give you the ultimate correct answer for what to log. Every use case is different. But I can give you pointers on how to start.

What NOT to Log?

This is pretty straightforward. Would you like to expose credit card info, passwords, secret keys, your users’ personal data? I’d rather not. I’d also suggest you be careful here. Logs are considered data by law, and laws like GDPR require you to not expose or misuse user data. Here’s an article explaining logging GDPR best practices in simple terms.

To make sure you don’t expose sensitive data, you can drop fields like these or mask them by replacing the values with asterisk symbols (*).

Where to Send Log Data?

Most likely you’ll end up sending and storing logs in Elasticsearch. You can host it yourself, or use a hosted setup, or a SaaS tool. It doesn’t really matter. But I’m warning you, rolling your own will take time and require active maintenance. If you can’t afford that, I’d suggest using a SaaS or Elastic Stack alternative.

You want a tool that can integrate errors and logs so you have all the context you need at your disposal right away.

Some of the most common log management solutions that pop into my mind:

- Sematext

- Logz.io

- LogDNA

- Elasticsearch

If you’re on the market for a logging solution, have a look at this pros & cons comparison of the best log management tools, my co-worker, Radu, wrote. These include both free and paid solutions as well as some open-source tools as well.

We take logging seriously at Sematext. Having logs, metrics, events and alerts in one place lets you pivot and correlate all the data to get to the root cause of issues much faster. You don’t really have to believe me, try for yourself or check these screenshots to see what I mean.

Or, you can check out this short video on Sematext Logs.

How To Log Node.js Applications: Best Practices You Should Follow

I’ll run you through a set of best practices to follow, but more importantly, I’ll show you how to configure it all with code examples, so you can set up your own applications too!

Let’s go!

Use a Structured Logging Format

Please promise me you’ll never use the console.log every again in a production app. Please? Every time you do that a baby penguin dies. I mean, not really, but imagine that happens, and please never do it again. 😄

Structured logging has saved my skin so many times. What is it? It’s pretty self-explanatory. ALWAYS log out JSON objects with a certain predefined set of fields.

This makes it simple to filter logs based on severity levels. Meaning, if they are errors, warnings, debug logs or whatever else.

Structured logging allows you to add metadata to your logs that make your life easier. That’s the goal. Getting alerted when 💩 breaks, and making the 💩-fixing easy. At least that’s my mantra!

Fields in my JSON structured logs I tend to always have are:

- message

- timestamp

- log level

- source

- destination

Yours may vary based on your app and your needs, but try always having the top three from the list above.

Use Node.js Logging Frameworks

Now you’re asking yourself: “Dude WTF, you said no-console, what should I do then!?”

Yeah, you’re right. Use frameworks instead. The ones I’d suggest are:

- Winston

- Morgan

- Pino

In the section below you’ll see how to configure Winston and Morgan. With these tools, you can choose which format your logs will have, where to forward the logs, and much more.

I’ll also show you how to configure a middleware to get API-level logs and metrics for every single one of your API routes! 🤯 I actually wrote a separate blog post about that if you have the time to check it out.

Lastly, I want to walk you through how I configured logging in this open-source tool I maintain. This will show you a practical example of how I do it at work.

Enough talk, let’s write some code.

Set Up an Express.js Application

Let’s start by creating a simple Express.js app. Create a directory called logging-made-easy. Open it up and run this command in your terminal:

npm init -y

npm i express winston morgan morgan-json

Now, open the project with your favorite code editor. I use VS Code, but any will do.

Create a file called app.js and paste in this boilerplate code.

const express = require('express')

const app = express()

app.get('/', (req, res, next) => {

res.status(200).send('Hello World!')

})

app.listen(3000, () =>

console.log('Express.js listening on port 3000.'))

You can add in console.log or console.error statements to write logs to the stdout or stderr streams. Like this:

const express = require('express')

const app = express()

app.get('/', (req, res, next) => {

console.log('This is the "/" route.')

res.status(200).send('Hello World!')

})

app.get('/boom', (req, res, next) => {

try {

throw new Error('Wowza!')

} catch (error) {

console.error('Whooops! This broke with error: ', error)

res.status(500).send('Error!')

}

})

app.listen(3000, () =>

console.log('Express.js listening on port 3000.'))

When you hit the “/” route you’ll see the string “This is the “/” route.” be output to the stdout. If you hit the “/boom” route, you’ll see an error instead.

Now, what’s the difference? It has to do with Linux streams. Not to bore you with details, this article explains it to you much better than I ever could.

What’s important however is that you grasp the notion of log levels! If you want to learn more about log levels, my buddy Rafal, an Solr/Elasticsearch pro, he wrote a short article about what log levels are and how they work.

In the life of a Node.js dev, you can get away with having a rough understanding of the article above and then move on to using npm log levels. Let’s go into that a bit more.

Log Levels Based on Priority

Using proper log level is one of the best practices you should follow for efficient application logging. Log levels indicate message priority. Every level is shown as an integer. I’ll be using and explaining the npm logging levels in this tutorial. They’re prioritized from 0 to 5. Where 0 is the highest, and 5 is the lowest.

- 0: error

- 1: warn

- 2: info

- 3: verbose

- 4: debug

- 5: silly

Using these log levels is a breeze with Winston. And, you guessed it, that’s what I’ll be showing now.

Use Winston for Logging

You already installed Winston above. What needs to be done now, is to add it to the code.

Start by creating another file called logger.js. Paste this snippet into the file.

const winston = require('winston')

const options = {

file: {

level: 'info',

filename: './logs/app.log',

handleExceptions: true,

json: true,

maxsize: 5242880, // 5MB

maxFiles: 5,

colorize: false,

},

console: {

level: 'debug',

handleExceptions: true,

json: false,

colorize: true,

},

};

const logger = winston.createLogger({

levels: winston.config.npm.levels,

transports: [

new winston.transports.File(options.file),

new winston.transports.Console(options.console)

],

exitOnError: false

})

module.exports = logger

This config will add file and console transports in the Winston configuration. Remember when I mentioned logging in different environments above? Here’s why. I wanted you to grasp the notion of being able to both output logs to the console and write them to a file, based on what tool you’re using to forward them to a central location.

Transports are a concept introduced by Winston that refer to the storage/output mechanisms used for the logs.

Winston comes with three core transports – console, file, and HTTP. I’ll explain the console and file transports in this article.

The console transport will log information to the console, and the file transport will log information to a file. Each transport definition can contain its own configuration settings such as file size, log levels, and log format.

When specifying a logging level in Winston for a particular transport, anything at that level or higher will be logged. For example, by specifying a level of info, anything at level error, warn, or info will be logged.

Log levels are specified when calling the logger, meaning we can do the following to record an error: logger.error('test error message').

Let’s move on and require the logger in the app.js, then replace all console statements with logger. Here’s what your app.js should look like now.

const express = require('express')

const app = express()

const logger = require('./logger')

app.get('/', (req, res, next) => {

logger.debug('This is the "/" route.')

res.status(200).send('Hello World!')

})

app.get('/boom', (req, res, next) => {

try {

throw new Error('Wowza!')

} catch (error) {

logger.error('Whooops! This broke with error: ', error)

res.status(500).send('Error!')

}

})

app.listen(3000, () =>

logger.info('Express.js listening on port 3000.'))

When hitting the routes now, you’ll see logs being written in JSON format. They’re structured exactly like you want them! Error logs will have fields called error making debugging much easier.

Set Up Error Handling

Having a solid configuration for error handling is crucial in every production environment. With Express.js you can configure a middleware to catch any errors that happen so you don’t need to handle errors for every API route separately. If you want to find out more about that, read my blog post about Express.js performance best practices for running Express.js in production.

The concept of a middleware in Express.js is rather simple. They are functions with access to the req and res objects, but also a next function that passes control to the next function in the middleware chain. The official Express.js docs explain it in more detail.

Let me break it down to simpler terms though. This means you can call the next() function with the error as the parameter and handle errors down the middleware chain.

Here’s an example. Above the app.listen section of your app.js file add this piece of code.

app.get('/errorhandler', (req, res, next) => {

try {

throw new Error('Wowza!')

} catch (error) {

next(error)

}

})

app.use(logErrors)

app.use(errorHandler)

function logErrors (err, req, res, next) {

console.error(err.stack)

next(err)

}

function errorHandler (err, req, res, next) {

res.status(500).send('Error!')

}

app.listen(3000, () =>

logger.info('Express.js listening on port 3000.'))

By calling app.use() with a function as a parameter you’ll add error handling middleware functions to the middleware chain.

Look at the new “/errorhandler” route. I’m calling the next(error) function which passes the error as a parameter to the logErrors and errorHandler functions respectively. This loosens the pressure of you having to write error handlers in every API route separately. Very convenient in my opinion.

If error handling sounds like an alien to you right now, I recommend you also check out my blog post about Node.js error handling best practices where I’m sharing tips & tricks that I’ve learned over the years while working with Node.js logs.

Use Morgan for Logging HTTP Requests

Morgan is a HTTP logger middleware for Express.js. It’s built to output HTTP logs to the console. I’ll show you how to define a stream function that will be able to get morgan-generated output into the Winston log files.

But, I won’t settle with only logging the HTTP requests. I want to have actionable metrics as well. So, I’ll show you how to configure a nice format with response times, status codes, and much more.

Start by creating a file called httpLogger.js and paste this code in it.

const morgan = require('morgan')

const json = require('morgan-json')

const format = json({

method: ':method',

url: ':url',

status: ':status',

contentLength: ':res[content-length]',

responseTime: ':response-time'

})

const logger = require('./logger')

const httpLogger = morgan(format, {

stream: {

write: (message) => {

const {

method,

url,

status,

contentLength,

responseTime

} = JSON.parse(message)

logger.info('HTTP Access Log', {

timestamp: new Date().toString(),

method,

url,

status: Number(status),

contentLength,

responseTime: Number(responseTime)

})

}

}

})

module.exports = httpLogger

Next, add the httpLogger to the app.js.

const express = require('express')

const app = express()

const logger = require('./logger')

const httpLogger = require('./httpLogger')

app.use(httpLogger)

app.get('/', (req, res, next) => {

res.status(200).send('Hello World!')

})

app.get('/boom', (req, res, next) => {

try {

throw new Error('Wowza!')

} catch (error) {

logger.error('Whooops! This broke with error: ', error)

res.status(500).send('Error!')

}

})

app.get('/errorhandler', (req, res, next) => {

try {

throw new Error('Wowza!')

} catch (error) {

next(error)

}

})

app.use(logErrors)

app.use(errorHandler)

function logErrors (err, req, res, next) {

console.error(err.stack)

next(err)

}

function errorHandler (err, req, res, next) {

res.status(500).send('Error!')

}

app.listen(3000, () =>

logger.info('Express.js listening on port 3000.'))

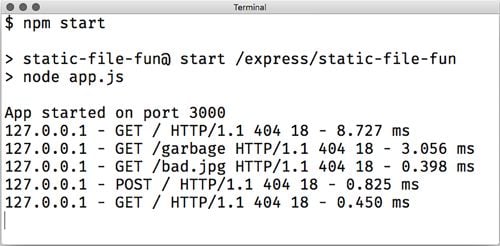

There we go. Hitting the API routes now will generate HTTP access logs with actionable metrics you can use when debugging issues in your production Node.js app.

{"timestamp":"Tue Nov 24 2020 15:58:01 GMT+0100 (Central European Standard Time)","method":"GET","url":"/","status":200,"contentLength":"12","responseTime":7.544,"level":"info","message":"HTTP Access Log"}

We’re getting somewhere with this logging magic, aren’t we!

This format above is what I use in one of the open-source tools I maintain. Having insight into response times and status codes is crucial when trying to find errors and debug issues. You can read this blog post to find out more about how I suggest hooking up monitoring and logging for a Node.js app in production.

I mentioned briefly the notion of logging in different environments. Whether you’re using a plain server, or running containers, the approach to logging can be different.

Logging Node.js Apps that Run on Servers

There are two ways to go about creating a nice logging setup for your Node.js app that’s running natively on a plain server.

You can either log everything to a file, that you then tail and forward to a central location, or forward the logs from the app directly.

Both of these options work with Winston. But, Morgan in this sense, is just a logging library, as it helps you format and structure your logs. While Winston is a fully-fledged log shipper. Read this article about the differences between logging libraries and log shippers, if you want to learn more.

Winston has tons of transports you can choose from.

You can pick from syslog, HTTP, or any vendor-specific transport. Here’s an example of using some common transports.

First, AWS Cloudwatch.

const winston = require('winston')

const CloudWatchTransport = require('winston-aws-cloudwatch')

const logger = winston.createLogger({

transports: [

new CloudWatchTransport({

logGroupName: '...', // REQUIRED

logStreamName: '...', // REQUIRED

createLogGroup: true,

createLogStream: true,

submissionInterval: 2000,

submissionRetryCount: 1,

batchSize: 20,

awsConfig: {

accessKeyId: '...',

secretAccessKey: '...',

region: '...'

},

formatLog: item =>

`${item.level}: ${item.message} ${JSON.stringify(item.meta)}`

})

]

})

Here’s one I maintain for Sematext Logs.

const Logsene = require('winston-logsene')

const { createLogger, config } = require('winston')

const logger = createLogger({

levels: config.npm.levels,

transports: [

new Logsene({

token: process.env.LOGS_TOKEN,

level: 'debug',

type: 'test_logs',

url: '<https://logsene-receiver.sematext.com/_bulk>'

})

]

})

Finally, you can always rely on using the file transport.

const winston = require('winston')

const options = {

file: {

level: 'info',

filename: `./logs/app.log`,

handleExceptions: true,

json: true,

maxsize: 5242880, // 5MB

maxFiles: 5,

colorize: false,

}

}

const logger = winston.createLogger({

levels: winston.config.npm.levels,

transports: [

new winston.transports.File(options.file)

],

exitOnError: false

})

In this case, you need to pick a log shipper that’ll run on the server. This shipper will collect, parse, and send the logs to whichever location you choose. It can be Sematext, Elasticsearch, CloudWatch, whatever.

From the available log shippers, I’d suggest you use:

- Vector

- Fluentbit

- Logagent

- Logstash

But, any will do, as long as you are comfortable with it. You’ll configure it to tail your log file and send its content to your location of choice.

Logging Node.js Apps that Run in Containers

If you’re running your Node.js app in a Docker container, then you should use the Console transport to output all logs to the Docker socket. What’s a Docker socket!? I explained it in more detail in this article about Docker logs location.

When logging in Docker, all logs should be written to the console. These logs are then piped through one socket. You should use a cluster level agent to listen to this socket, gather all Docker logs, and forward them to a central location.

const winston = require('winston')

const options = {

console: {

level: 'info',

handleExceptions: true,

json: true

}

}

const logger = winston.createLogger({

levels: winston.config.npm.levels,

transports: [

new winston.transports.Console(options.console)

],

exitOnError: false

})

module.exports = logger

As with plain servers, choose a log shipper and run it in your cluster as a DaemonSet. This will ensure every host has a log shipper listening to the Docker socket.

Phew, that was a lot of info to digest. Let’s have a quick rest. Now’s the perfect time to go over some of the available tools you can use to store the logs.

Final Thoughts About Node.js Logging

There’s one last piece of advice I want to share. Logs are not living up to their full potential if you’re not getting alerted when shit breaks. Use a centralized logging SaaS like Sematext Logs, or whichever tool you like. We rounded up some of the best log management tools, log analysis tools, and cloud logging services if you want to check them out and see how Sematext stacks against them.

You want to see all logs across your entire infrastructure, cluster, servers, apps in one place. This will help you pinpoint issues and pivot between logs and metrics to find spikes and drill down to issues faster. This is what we’re building at Sematext. I want you to pivot between Node.js logs and metrics with two clicks.

You can pinpoint the errors with filters and fields to exclude redundant info and isolate the issues you’re looking for. Then, taking this a bit further, enable alerting when errors like these happen again. You need to know when 💩 breaks before your customers do!

If you need a central location for your logs, check out Sematext Logs. We’re pushing to open source our products and make an impact. If you’d like to try us out and monitor your Node.js applications, sign up to get a 14-day pro trial, or choose the free tier right away.

on

August 07, 2020

When visiting a new website, it is quite normal to get carried away by the bells and whistles of the fancy UI and UX and not be able to appreciate all the lower level, back-end code that runs tirelessly to ensure a smooth and fast website experience. This is because your front-end HTML code has a visually rich browser page interface as a platform to showcase its output. Whereas your back-end, server-side code usually only has a console at its disposal. The console is the only window to the execution of your back-end code. And the only way we can effectively utilize this console is by extensively, yet intelligently logging information in our application.

Logging is the practice of keeping track of events that take place over the course of your application’s life. It allows you to record important information about its execution to keep track of its correctness, accuracy, quality, and overall performance.

Logging plays an integral role throughout the whole pipeline of any software application: from development to testing to releasing for production, a well-implemented logging system allows you to understand the various aspects of your application’s performance.

In this post, we will first talk about the importance of logging in web applications and about the most common, practical use cases where it would be a good idea to implement logging. Then we will discuss how we can implement logging in Node.js: about the different levels and functions of logging, about how they work under the hood, and the different places in which we can output our logs. To understand from a real-life example, we will also create a basic Express.js based server application and set up a basic logging system in it. Since implementing an extensive, fully functional logging system can be a daunting task if you are just starting out, we will also learn to use one of the most popular third-party open-source logging frameworks for Node.js — Winston, that can ease off your burden and provide a great deal of flexibility in logging.

If you haven’t already been logging in your code, by the end of this post, you should be convinced about its importance and be able to easily implement it in your Node.js application.

Here’s an outline of what we’ll be covering in this post so you can navigate the guide or skip ahead in the tutorial:

- Why Is Node.js Logging Important?

- What and When to Log: Use Cases for Logging

- How to Log: Various Logging Methods in Node.js

- How Node.js Logging Works Under The Hood ⚙️

- Where to Log 📝

- Logging in an Express.js Server App

- Third-Party Logging Frameworks — Winston 👨🏻🔧

- Recap and Summary 🔁

Let’s get started!

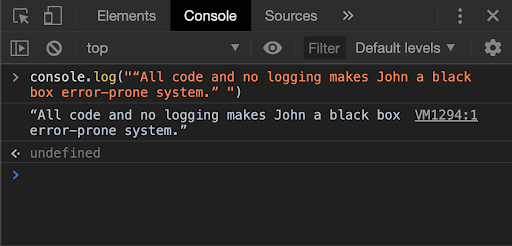

Why Is Node.js Logging Important? 🖨

Logging in Programming Languages]

Before we talk about logging in Node.js based web applications, let us look into how we directly benefit from well-implemented logging systems on a daily basis. Each time you write basic code in any programming language and make an error (for example, forgetting to close a parenthesis), you are encountered by a straightforward error message. It provides information about what the error is, where it happened, and (quite often) even about how it can be fixed.

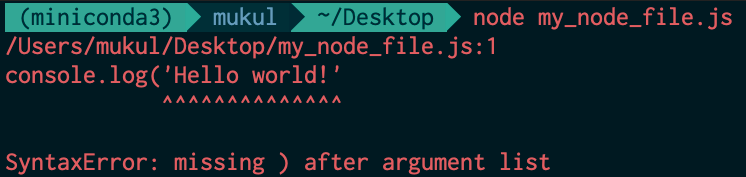

Example syntax error in Node.js

Example syntax error in Node.js

Thanks to excellent in-built logging systems in these programming languages, developers can be alerted about the weak links in their code through errors and warnings. If this wasn’t the case, it would take you ages of proof-reading your code to figure out why it didn’t output anything; you wouldn’t know about error occurrences right away, let alone where those errors happened and how they can be fixed.