From Wikipedia, the free encyclopedia

Out-of-bag (OOB) error, also called out-of-bag estimate, is a method of measuring the prediction error of random forests, boosted decision trees, and other machine learning models utilizing bootstrap aggregating (bagging). Bagging uses subsampling with replacement to create training samples for the model to learn from. OOB error is the mean prediction error on each training sample xi, using only the trees that did not have xi in their bootstrap sample.[1]

Bootstrap aggregating allows one to define an out-of-bag estimate of the prediction performance improvement by evaluating predictions on those observations that were not used in the building of the next base learner.

Out-of-bag dataset[edit]

When bootstrap aggregating is performed, two independent sets are created. One set, the bootstrap sample, is the data chosen to be «in-the-bag» by sampling with replacement. The out-of-bag set is all data not chosen in the sampling process.

When this process is repeated, such as when building a random forest, many bootstrap samples and OOB sets are created. The OOB sets can be aggregated into one dataset, but each sample is only considered out-of-bag for the trees that do not include it in their bootstrap sample. The picture below shows that for each bag sampled, the data is separated into two groups.

Visualizing the bagging process. Sampling 4 patients from the original set with replacement and showing the out-of-bag sets. Only patients in the bootstrap sample would be used to train the model for that bag.

This example shows how bagging could be used in the context of diagnosing disease. A set of patients are the original dataset, but each model is trained only by the patients in its bag. The patients in each out-of-bag set can be used to test their respective models. The test would consider whether the model can accurately determine if the patient has the disease.

Calculating out-of-bag error[edit]

Since each out-of-bag set is not used to train the model, it is a good test for the performance of the model. The specific calculation of OOB error depends on the implementation of the model, but a general calculation is as follows.

- Find all models (or trees, in the case of a random forest) that are not trained by the OOB instance.

- Take the majority vote of these models’ result for the OOB instance, compared to the true value of the OOB instance.

- Compile the OOB error for all instances in the OOB dataset.

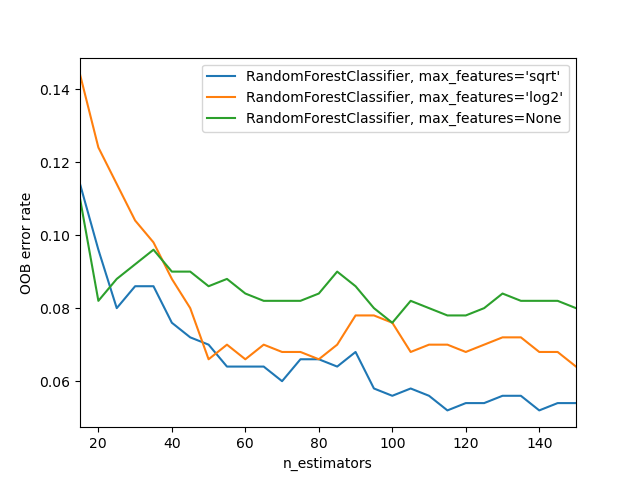

An illustration of OOB error

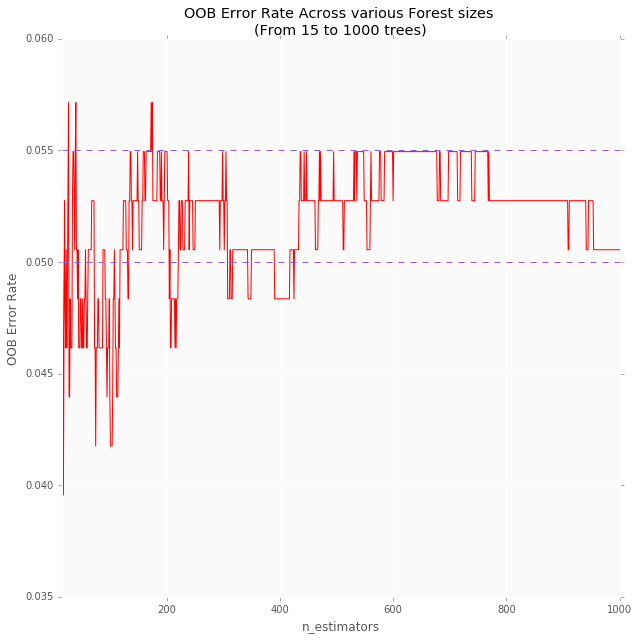

The bagging process can be customized to fit the needs of a model. To ensure an accurate model, the bootstrap training sample size should be close to that of the original set.[2] Also, the number of iterations (trees) of the model (forest) should be considered to find the true OOB error. The OOB error will stabilize over many iterations so starting with a high number of iterations is a good idea.[3]

Shown in the example to the right, the OOB error can be found using the method above once the forest is set up.

Comparison to cross-validation[edit]

Out-of-bag error and cross-validation (CV) are different methods of measuring the error estimate of a machine learning model. Over many iterations, the two methods should produce a very similar error estimate. That is, once the OOB error stabilizes, it will converge to the cross-validation (specifically leave-one-out cross-validation) error.[3] The advantage of the OOB method is that it requires less computation and allows one to test the model as it is being trained.

Accuracy and Consistency[edit]

Out-of-bag error is used frequently for error estimation within random forests but with the conclusion of a study done by Silke Janitza and Roman Hornung, out-of-bag error has shown to overestimate in settings that include an equal number of observations from all response classes (balanced samples), small sample sizes, a large number of predictor variables, small correlation between predictors, and weak effects.[4]

See also[edit]

- Boosting (meta-algorithm)

- Bootstrap aggregating

- Bootstrapping (statistics)

- Cross-validation (statistics)

- Random forest

- Random subspace method (attribute bagging)

References[edit]

- ^ James, Gareth; Witten, Daniela; Hastie, Trevor; Tibshirani, Robert (2013). An Introduction to Statistical Learning. Springer. pp. 316–321.

- ^ Ong, Desmond (2014). A primer to bootstrapping; and an overview of doBootstrap (PDF). pp. 2–4.

- ^ a b Hastie, Trevor; Tibshirani, Robert; Friedman, Jerome (2008). The Elements of Statistical Learning (PDF). Springer. pp. 592–593.

- ^ Janitza, Silke; Hornung, Roman (2018-08-06). «On the overestimation of random forest’s out-of-bag error». PLOS ONE. 13 (8): e0201904. doi:10.1371/journal.pone.0201904. ISSN 1932-6203. PMC 6078316. PMID 30080866.

Get this book -> Problems on Array: For Interviews and Competitive Programming

In this article, we have explored Out-of-Bag Error in Random Forest with an numeric example and sample implementation to plot Out-of-Bag Error.

Table of contents:

- Introduction to Random Forest

- Out-of-Bag Error in Random Forest

- Example of Out-of-Bag Error

- Code for Out-of-Bag Error

Introduction to Random Forest

Random Forest is one of the machine learning algorithms that use bootstrap aggregation. Random Forest aggregates the result of several decision trees. Decision Trees are known to work well when they have small depth otherwise they overfit. When they are used ensemble in Random Forests, this weakness of decision trees is mitigated.

Random Forest works by taking a random sample of small subsets of the data and applies a decision tree classification to them. The prediction of the Random Forest is then a combination of the individual prediction of the decision trees either by summing or taking a majority vote or any other suitable means of combining the results.

The sampling of random subsets (with replacement) of the training data is what is referred to as bagging. The idea is that the randomness in choosing the data fed to each decision tree will reduce the variance in the predictions from the random forest model.

The out-of-bag error is the average error for each predicted outcome calculated using predictions from the trees that do not contain that data point in their respective bootstrap sample. This way, the Random Forest model is constantly being validated while being trained. Let us consider the jth decision tree (DT_j) that has been fitted on a subset of the sample data. For every training observation or sample (z_i = (x_i , y_i)) not in the sample subset of (DT_j) where (x_i) is the set of features and (y_i) is the target, we use (DT_j) to predict the outcome (o_i) for (x_i). The error can easily be computed as (|o_i — y_i|).

The out-of-bag error is thus the average value of this error across all decision trees.

Example of Out-of-Bag Error

The following is a simple example of how this works in practice. Consider this toy dataset which records if it rains given the temperature and humidity:

| S/N | Temperature | Humidity | Rained? |

|---|---|---|---|

| 1 | 33 | High | No |

| 2 | 18 | Low | No |

| 3 | 27 | Low | Yes |

| 4 | 20 | High | Yes |

| 5 | 21 | Low | No |

| 6 | 29 | Low | Yes |

| 7 | 19 | High | Yes |

Assume that a random forest ensemble consisting of 5 decision trees (DT_1 … DT_5) is to be trained on the the dataset. Each tree will be trained on a random subset of the dataset. Assuming for (DT_1) that the randomly selected subset contains the first five samples of the dataset. Therefore, the last two samples 6 and 7 will be the out-of-bag samples on which (DT_1) will be validated. Continuing with the assumption, let the following table represent the prediction of each decision tree on each of its out-of-bag samples:

| Tree | Sample S/N | Prediction | Actual | Error (abs) |

|---|---|---|---|---|

| DT1 | 6 | No | Yes | 1 |

| DT1 | 7 | No | Yes | 1 |

| DT2 | 2 | No | No | 0 |

| DT3 | 1 | No | No | 0 |

| DT3 | 2 | Yes | No | 1 |

| DT3 | 4 | Yes | Yes | 0 |

| DT4 | 2 | Yes | No | 1 |

| DT4 | 7 | Yes | Yes | 1 |

| DT5 | 3 | Yes | Yes | 0 |

| DT5 | 5 | No | No | 0 |

From the above, the out-of-bag error is the average error which is 0.5.

We thus see that because only a subset of the decision trees in the ensemble is used in determining each error that is used to compute the out-of-bag score, it cannot be considered as accurate as a validation score on validation data. However, in cases such as this where the dataset is quite small and it is impossible to set aside a validation set, the out-of-bag error can prove to be a useful metric.

Code for Out-of-Bag Error

Lastly, we demonstrate the use of out-of-bag error in the estimation of a suitable value for the choice of n_estimators for a random forest model in scikit-learn library. This example is adapted from the documentation. The out-of-bag error is measured at the addition of each new tree during training. The resulting plot shows that the choice of 115 for n_estimators is optimal for the classifier (with ‘sqrt’ max_features) in this example.

import matplotlib.pyplot as plt

from collections import OrderedDict

from sklearn.datasets import make_classification

from sklearn.ensemble import RandomForestClassifier

RANDOM_STATE = 123

# Generate a binary classification dataset.

X, y = make_classification(

n_samples=500,

n_features=25,

n_clusters_per_class=1,

n_informative=15,

random_state=RANDOM_STATE,

)

# NOTE: Setting the `warm_start` construction parameter to `True` disables

# support for parallelized ensembles but is necessary for tracking the OOB

# error trajectory during training.

ensemble_clfs = [

(

"RandomForestClassifier, max_features='sqrt'",

RandomForestClassifier(

warm_start=True,

oob_score=True,

max_features="sqrt",

random_state=RANDOM_STATE,

),

),

(

"RandomForestClassifier, max_features='log2'",

RandomForestClassifier(

warm_start=True,

max_features="log2",

oob_score=True,

random_state=RANDOM_STATE,

),

),

(

"RandomForestClassifier, max_features=None",

RandomForestClassifier(

warm_start=True,

max_features=None,

oob_score=True,

random_state=RANDOM_STATE,

),

),

]

# Map a classifier name to a list of (<n_estimators>, <error rate>) pairs.

error_rate = OrderedDict((label, []) for label, _ in ensemble_clfs)

# Range of `n_estimators` values to explore.

min_estimators = 15

max_estimators = 150

for label, clf in ensemble_clfs:

for i in range(min_estimators, max_estimators + 1, 5):

clf.set_params(n_estimators=i)

clf.fit(X, y)

# Record the OOB error for each `n_estimators=i` setting.

oob_error = 1 - clf.oob_score_

error_rate[label].append((i, oob_error))

# Generate the "OOB error rate" vs. "n_estimators" plot.

for label, clf_err in error_rate.items():

xs, ys = zip(*clf_err)

plt.plot(xs, ys, label=label)

plt.xlim(min_estimators, max_estimators)

plt.xlabel("n_estimators")

plt.ylabel("OOB error rate")

plt.legend(loc="upper right")

plt.show()

Пятую статью курса мы посвятим простым методам композиции: бэггингу и случайному лесу. Вы узнаете, как можно получить распределение среднего по генеральной совокупности, если у нас есть информация только о небольшой ее части; посмотрим, как с помощью композиции алгоритмов уменьшить дисперсию и таким образом улучшить точность модели; разберём, что такое случайный лес, какие его параметры нужно «подкручивать» и как найти самый важный признак. Сконцентрируемся на практике, добавив «щепотку» математики.

UPD 01.2022: С февраля 2022 г. ML-курс ODS на русском возрождается под руководством Петра Ермакова couatl. Для русскоязычной аудитории это предпочтительный вариант (c этими статьями на Хабре – в подкрепление), англоговорящим рекомендуется mlcourse.ai в режиме самостоятельного прохождения.

Видеозапись лекции по мотивам этой статьи в рамках второго запуска открытого курса (сентябрь-ноябрь 2017).

План этой статьи

- Бэггинг

- Ансамбли

- Бутстрэп

- Бэггинг

- Out-of-bag ошибка

- Случайный лес

- Алгоритм

- Сравнение с деревом решений и бэггингом

- Параметры

- Вариация и декорреляционный эффект

- Смещение

- Сверхслучайные деревья

- Схожесть с алгоритмом k-ближайших соседей

- Преобразование признаков в многомерное пространство

- Оценка важности признаков

- Плюсы и минусы случайного леса

- Домашнее задание №5

- Полезные источники

1. Бэггинг

Из прошлых лекций вы уже узнали про разные алгоритмы классификации, а также научились правильно валидироваться и оценивать качество модели. Но что делать, если вы уже нашли лучшую модель и повысить точность модели больше не можете? В таком случае нужно применить более продвинутые техники машинного обучения, которые можно объединить словом «ансамбли». Ансамбль — это некая совокупность, части которой образуют единое целое. Из повседневной жизни вы знаете музыкальные ансамбли, где объединены несколько музыкальных инструментов, архитектурные ансамбли с разными зданиями и т.д.

Ансамбли

Хорошим примером ансамблей считается теорема Кондорсе «о жюри присяжных» (1784). Если каждый член жюри присяжных имеет независимое мнение, и если вероятность правильного решения члена жюри больше 0.5, то тогда вероятность правильного решения присяжных в целом возрастает с увеличением количества членов жюри и стремится к единице. Если же вероятность быть правым у каждого из членов жюри меньше 0.5, то вероятность принятия правильного решения присяжными в целом монотонно уменьшается и стремится к нулю с увеличением количества присяжных.

— количество присяжных

— вероятность правильного решения присяжного

— вероятность правильного решения всего жюри

— минимальное большинство членов жюри,

— число сочетаний из

по

Если , то

Если , то

Давайте рассмотрим ещё один пример ансамблей — «Мудрость толпы». Фрэнсис Гальтон в 1906 году посетил рынок, где проводилась некая лотерея для крестьян.

Их собралось около 800 человек, и они пытались угадать вес быка, который стоял перед ними. Бык весил 1198 фунтов. Ни один крестьянин не угадал точный вес быка, но если посчитать среднее от их предсказаний, то получим 1197 фунтов.

Эту идею уменьшения ошибки применили и в машинном обучении.

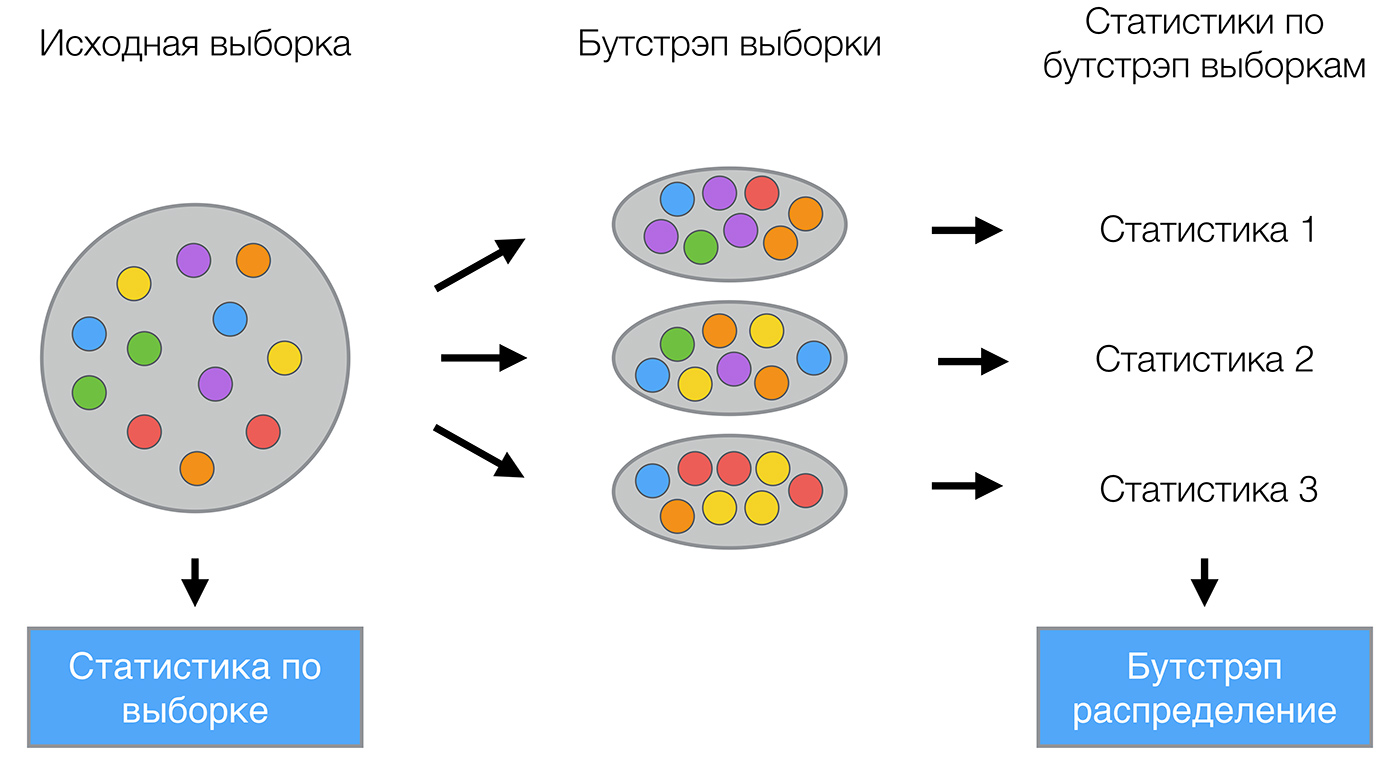

Бутстрэп

Bagging (от Bootstrap aggregation) — это один из первых и самых простых видов ансамблей. Он был придуман Ле́о Бре́йманом в 1994 году. Бэггинг основан на статистическом методе бутстрэпа, который позволяет оценивать многие статистики сложных распределений.

Метод бутстрэпа заключается в следующем. Пусть имеется выборка размера

. Равномерно возьмем из выборки

объектов с возвращением. Это означает, что мы будем

раз выбирать произвольный объект выборки (считаем, что каждый объект «достается» с одинаковой вероятностью

), причем каждый раз мы выбираем из всех исходных

объектов. Можно представить себе мешок, из которого достают шарики: выбранный на каком-то шаге шарик возвращается обратно в мешок, и следующий выбор опять делается равновероятно из того же числа шариков. Отметим, что из-за возвращения среди них окажутся повторы. Обозначим новую выборку через

. Повторяя процедуру

раз, сгенерируем

подвыборок

. Теперь мы имеем достаточно большое число выборок и можем оценивать различные статистики исходного распределения.

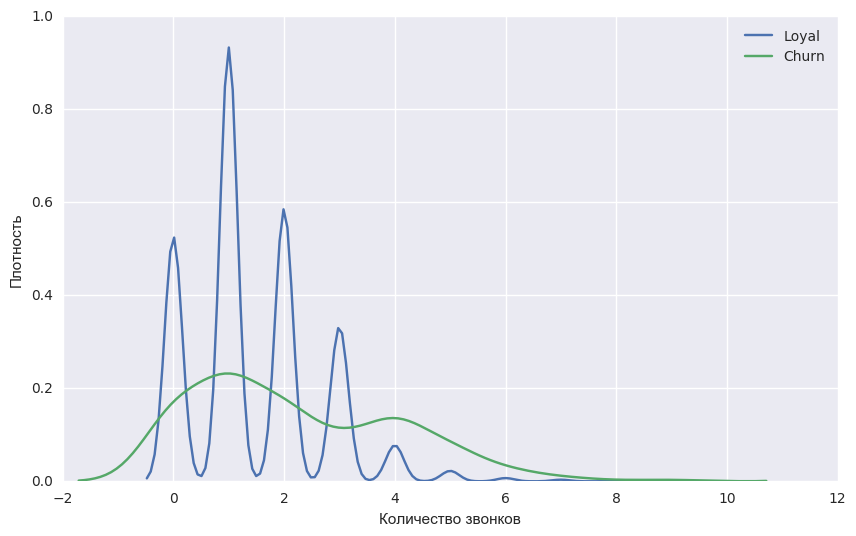

Давайте для примера возьмем уже известный вам набор данных telecom_churn из прошлых уроков нашего курса. Напомним, что это задача бинарной классификации оттока клиентов. Одним из самых важных признаков в этом датасете является количество звонков в сервисный центр, которые были сделаны клиентом. Давайте попробуем визулизировать данные и посмотреть на распределение данного признака.

Код для загрузки данных и построения графика

import pandas as pd

from matplotlib import pyplot as plt

plt.style.use('ggplot')

plt.rcParams['figure.figsize'] = 10, 6

import seaborn as sns

%matplotlib inline

telecom_data = pd.read_csv('data/telecom_churn.csv')

fig = sns.kdeplot(telecom_data[telecom_data['Churn'] == False]['Customer service calls'], label = 'Loyal')

fig = sns.kdeplot(telecom_data[telecom_data['Churn'] == True]['Customer service calls'], label = 'Churn')

fig.set(xlabel='Количество звонков', ylabel='Плотность')

plt.show()Как вы уже могли заметить, количество звонков в сервисный центр у лояльных клиентов меньше, чем у наших бывших клиентов. Теперь было бы хорошо оценить, сколько в среднем делает звонков каждая из групп. Так как данных в нашем датасете мало, то искать среднее не совсем правильно, лучше применить наши новые знания бутстрэпа. Давайте сгенерируем 1000 новых подвыборок из нашей генеральной совокупности и сделаем интервальную оценку среднего.

Код для построения доверительного интервала с помощью бутстрэпа

import numpy as np

def get_bootstrap_samples(data, n_samples):

# функция для генерации подвыборок с помощью бутстрэпа

indices = np.random.randint(0, len(data), (n_samples, len(data)))

samples = data[indices]

return samples

def stat_intervals(stat, alpha):

# функция для интервальной оценки

boundaries = np.percentile(stat, [100 * alpha / 2., 100 * (1 - alpha / 2.)])

return boundaries

# сохранение в отдельные numpy массивы данных по лояльным и уже бывшим клиентам

loyal_calls = telecom_data[telecom_data['Churn'] == False]['Customer service calls'].values

churn_calls= telecom_data[telecom_data['Churn'] == True]['Customer service calls'].values

# ставим seed для воспроизводимости результатов

np.random.seed(0)

# генерируем выборки с помощью бутстрэра и сразу считаем по каждой из них среднее

loyal_mean_scores = [np.mean(sample)

for sample in get_bootstrap_samples(loyal_calls, 1000)]

churn_mean_scores = [np.mean(sample)

for sample in get_bootstrap_samples(churn_calls, 1000)]

# выводим интервальную оценку среднего

print("Service calls from loyal: mean interval", stat_intervals(loyal_mean_scores, 0.05))

print("Service calls from churn: mean interval", stat_intervals(churn_mean_scores, 0.05))В итоге мы получили, что с 95% вероятностью среднее число звонков от лояльных клиентов будет лежать в промежутке между 1.40 и 1.50, в то время как наши бывшие клиенты звонили в среднем от 2.06 до 2.40 раз. Также ещё можно обратить внимание, что интервал для лояльных клиентов уже, что довольно логично, так как они звонят редко (в основном 0, 1 или 2 раза), а недовольные клиенты будут звонить намного чаще, но со временем их терпение закончится, и они поменяют оператора.

Бэггинг

Теперь вы имеете представление о бустрэпе, и мы можем перейти непосредственно к бэггингу. Пусть имеется обучающая выборка . С помощью бутстрэпа сгенерируем из неё выборки

. Теперь на каждой выборке обучим свой классификатор

. Итоговый классификатор будет усреднять ответы всех этих алгоритмов (в случае классификации это соответствует голосованию):

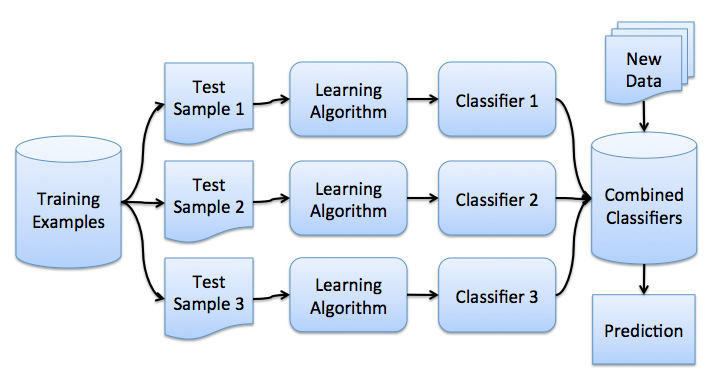

. Эту схему можно представить картинкой ниже.

Рассмотрим задачу регрессии с базовыми алгоритмами . Предположим, что существует истинная функция ответа для всех объектов

, а также задано распределение на объектах

. В этом случае мы можем записать ошибку каждой функции регрессии

и записать матожидание среднеквадратичной ошибки

Средняя ошибка построенных функций регрессии имеет вид

Предположим, что ошибки несмещены и некоррелированы:

Построим теперь новую функцию регрессии, которая будет усреднять ответы построенных нами функций:

Найдем ее среднеквадратичную ошибку:

Таким образом, усреднение ответов позволило уменьшить средний квадрат ошибки в n раз!

Напомним вам из нашего предыдущего урока, как раскладывается общая ошибка:

Бэггинг позволяет снизить дисперсию (variance) обучаемого классификатора, уменьшая величину, на сколько ошибка будет отличаться, если обучать модель на разных наборах данных, или другими словами, предотвращает переобучение. Эффективность бэггинга достигается благодаря тому, что базовые алгоритмы, обученные по различным подвыборкам, получаются достаточно различными, и их ошибки взаимно компенсируются при голосовании, а также за счёт того, что объекты-выбросы могут не попадать в некоторые обучающие подвыборки.

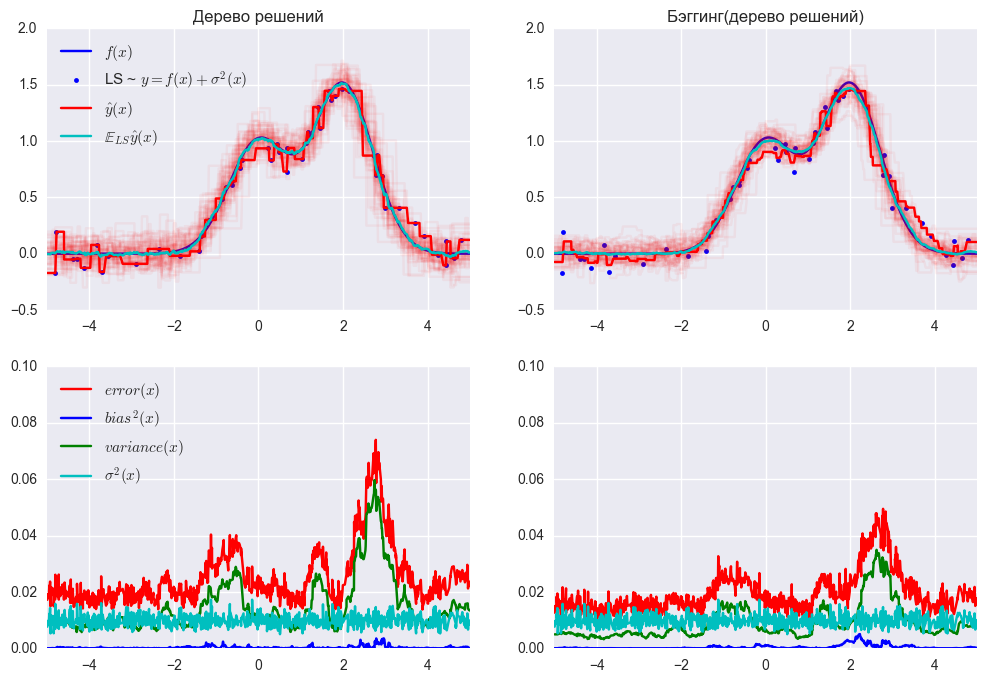

В библиотеке scikit-learn есть реализации BaggingRegressor и BaggingClassifier, которые позволяют использовать большинство других алгоритмов «внутри». Рассмотрим на практике, как работает бэггинг, и сравним его с деревом решений, пользуясь примером из документации.

Ошибка дерева решений

Ошибка бэггинга

По графику и результатам выше видно, что ошибка дисперсии намного меньше при бэггинге, как мы и доказали теоретически выше.

Бэггинг эффективен на малых выборках, когда исключение даже малой части обучающих объектов приводит к построению существенно различных базовых классификаторов. В случае больших выборок обычно генерируют подвыборки существенно меньшей длины.

Следует отметить, что рассмотренный нами пример не очень применим на практике, поскольку мы сделали предположение о некоррелированности ошибок, что редко выполняется. Если это предположение неверно, то уменьшение ошибки оказывается не таким значительным. В следующих лекциях мы рассмотрим более сложные методы объединения алгоритмов в композицию, которые позволяют добиться высокого качества в реальных задачах.

Out-of-bag error

Забегая вперед, отметим, что при использовании случайных лесов нет необходимости в кросс-валидации или в отдельном тестовом наборе, чтобы получить несмещенную оценку ошибки набора тестов. Посмотрим, как получается «внутренняя» оценка модели во время ее обучения.

Каждое дерево строится с использованием разных образцов бутстрэпа из исходных данных. Примерно 37% примеров остаются вне выборки бутстрэпа и не используются при построении k-го дерева.

Это можно легко доказать: пусть в выборке объектов. На каждом шаге все объекты попадают в подвыборку с возвращением равновероятно, т.е отдельный объект — с вероятностью

Вероятность того, что объект НЕ попадет в подвыборку (т.е. его не взяли

раз):

. При

получаем один из «замечательных» пределов

. Тогда вероятность попадания конкретного объекта в подвыборку

.

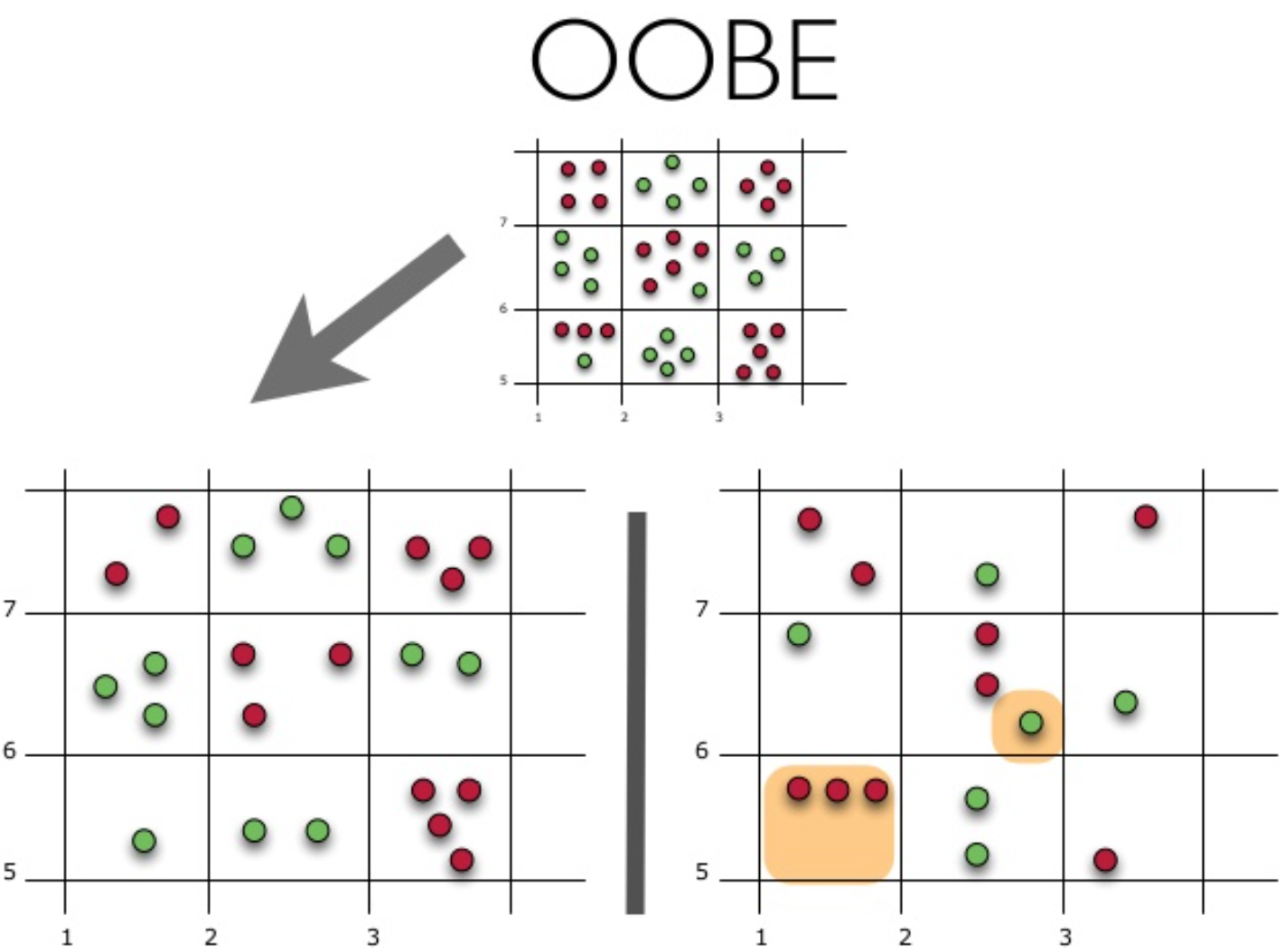

Давайте рассмотрим, как это работает на практике:

На рисунке изображена оценка oob-ошибки. Верхний рисунок – это наша исходная выборка, ее мы делим на обучающую(слева) и тестовую(справа). На рисунке слева у нас есть сетка из квадратиков, которая идеально разбивает нашу выборку. Теперь нужно оценить долю верных ответов на нашей тестовой выборке. На рисунке видно, что наш классификатор ошибся в 4 наблюдениях, которые мы не использовали для обучения. Значит, доля верных ответов нашего классификатора:

Получается, что каждый базовый алгоритм обучается на ~63% исходных объектов. Значит, на оставшихся ~37% его можно сразу проверять. Out-of-Bag оценка — это усредненная оценка базовых алгоритмов на тех ~37% данных, на которых они не обучались.

2. Случайный лес

Лео Брейман нашел применение бутстрэпу не только в статистике, но и в машинном обучении. Он вместе с Адель Катлер усовершенстовал алгоритм случайного леса, предложенный Хо, добавив к первоначальному варианту построение некоррелируемых деревьев на основе CART, в сочетании с методом случайных подпространств и бэггинга.

Решающие деревья являются хорошим семейством базовых классификаторов для бэггинга, поскольку они достаточно сложны и могут достигать нулевой ошибки на любой выборке. Метод случайных подпространств позволяет снизить коррелированность между деревьями и избежать переобучения. Базовые алгоритмы обучаются на различных подмножествах признакового описания, которые также выделяются случайным образом.

Ансамбль моделей, использующих метод случайного подпространства, можно построить, используя следующий алгоритм:

- Пусть количество объектов для обучения равно

, а количество признаков

.

- Выберите

как число отдельных моделей в ансамбле.

- Для каждой отдельной модели

выберите

как число признаков для

. Обычно для всех моделей используется только одно значение

.

- Для каждой отдельной модели

создайте обучающую выборку, выбрав

признаков из

, и обучите модель.

- Теперь, чтобы применить модель ансамбля к новому объекту, объедините результаты отдельных

моделей мажоритарным голосованием или путем комбинирования апостериорных вероятностей.

Алгоритм

Алгоритм построения случайного леса, состоящего из деревьев, выглядит следующим образом:

- Для каждого

:

Итоговый классификатор , простыми словами — для задачи кассификации мы выбираем решение голосованием по большинству, а в задаче регрессии — средним.

Рекомендуется в задачах классификации брать , а в задачах регрессии —

, где

— число признаков. Также рекомендуется в задачах классификации строить каждое дерево до тех пор, пока в каждом листе не окажется по одному объекту, а в задачах регрессии — пока в каждом листе не окажется по пять объектов.

Таким образом, случайный лес — это бэггинг над решающими деревьями, при обучении которых для каждого разбиения признаки выбираются из некоторого случайного подмножества признаков.

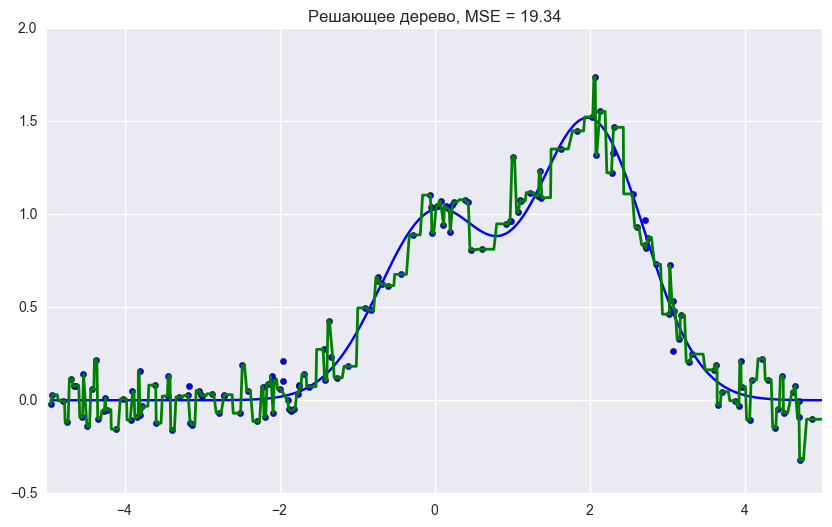

Сравнение с деревом решений и бэггингом

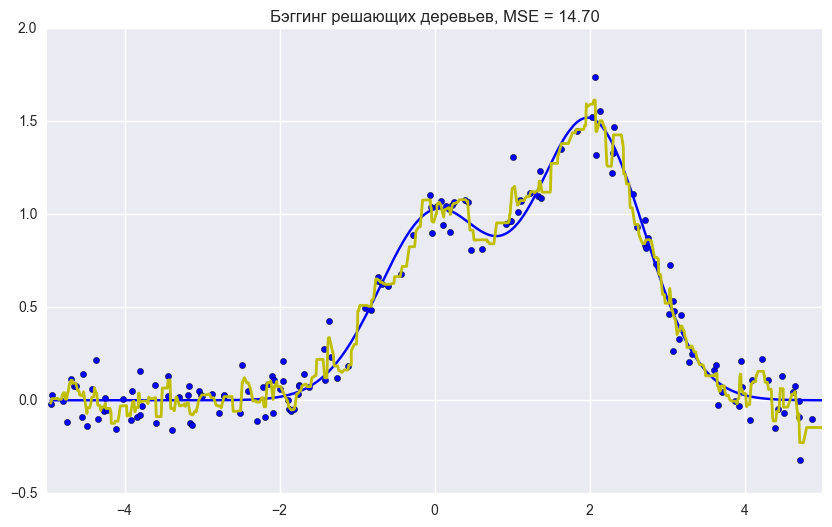

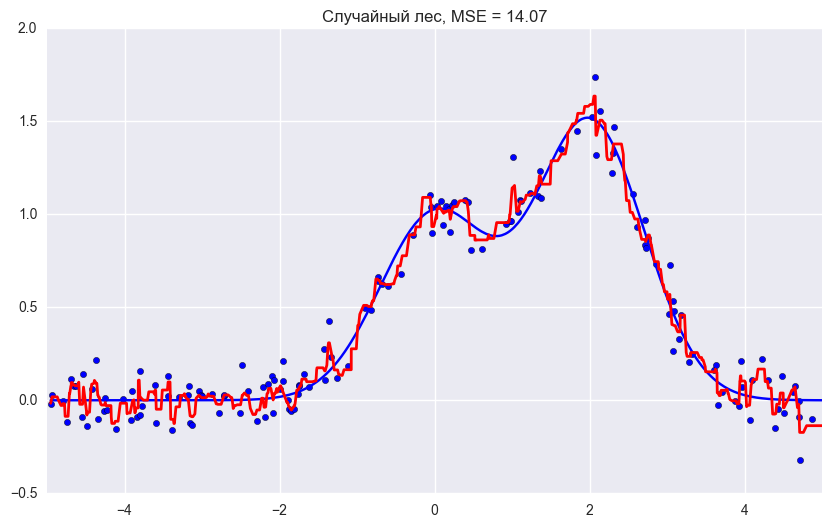

Код для сравнения решающего дерева, бэггинга и случайного леса для задачи регрессии

from __future__ import division, print_function

# отключим всякие предупреждения Anaconda

import warnings

warnings.filterwarnings('ignore')

%pylab inline

np.random.seed(42)

figsize(8, 6)

import seaborn as sns

from sklearn.ensemble import RandomForestRegressor, RandomForestClassifier, BaggingRegressor

from sklearn.tree import DecisionTreeRegressor, DecisionTreeClassifier

n_train = 150

n_test = 1000

noise = 0.1

# Generate data

def f(x):

x = x.ravel()

return np.exp(-x ** 2) + 1.5 * np.exp(-(x - 2) ** 2)

def generate(n_samples, noise):

X = np.random.rand(n_samples) * 10 - 5

X = np.sort(X).ravel()

y = np.exp(-X ** 2) + 1.5 * np.exp(-(X - 2) ** 2)

+ np.random.normal(0.0, noise, n_samples)

X = X.reshape((n_samples, 1))

return X, y

X_train, y_train = generate(n_samples=n_train, noise=noise)

X_test, y_test = generate(n_samples=n_test, noise=noise)

# One decision tree regressor

dtree = DecisionTreeRegressor().fit(X_train, y_train)

d_predict = dtree.predict(X_test)

plt.figure(figsize=(10, 6))

plt.plot(X_test, f(X_test), "b")

plt.scatter(X_train, y_train, c="b", s=20)

plt.plot(X_test, d_predict, "g", lw=2)

plt.xlim([-5, 5])

plt.title("Решающее дерево, MSE = %.2f"

% np.sum((y_test - d_predict) ** 2))

# Bagging decision tree regressor

bdt = BaggingRegressor(DecisionTreeRegressor()).fit(X_train, y_train)

bdt_predict = bdt.predict(X_test)

plt.figure(figsize=(10, 6))

plt.plot(X_test, f(X_test), "b")

plt.scatter(X_train, y_train, c="b", s=20)

plt.plot(X_test, bdt_predict, "y", lw=2)

plt.xlim([-5, 5])

plt.title("Бэггинг решающих деревьев, MSE = %.2f" % np.sum((y_test - bdt_predict) ** 2));

# Random Forest

rf = RandomForestRegressor(n_estimators=10).fit(X_train, y_train)

rf_predict = rf.predict(X_test)

plt.figure(figsize=(10, 6))

plt.plot(X_test, f(X_test), "b")

plt.scatter(X_train, y_train, c="b", s=20)

plt.plot(X_test, rf_predict, "r", lw=2)

plt.xlim([-5, 5])

plt.title("Случайный лес, MSE = %.2f" % np.sum((y_test - rf_predict) ** 2));Как мы видим из графиков и значений ошибки MSE, случайный лес из 10 деревьев дает лучший результат, чем одно дерево или бэггинг из 10 деревьев решений. Основное различие случайного леса и бэггинга на деревьях решений заключается в том, что в случайном лесе выбирается случайное подмножество признаков, и лучший признак для разделения узла определяется из подвыборки признаков, в отличие от бэггинга, где все функции рассматриваются для разделения в узле.

Также можно увидеть преимущество случайного леса и бэггинга в задачах классификации.

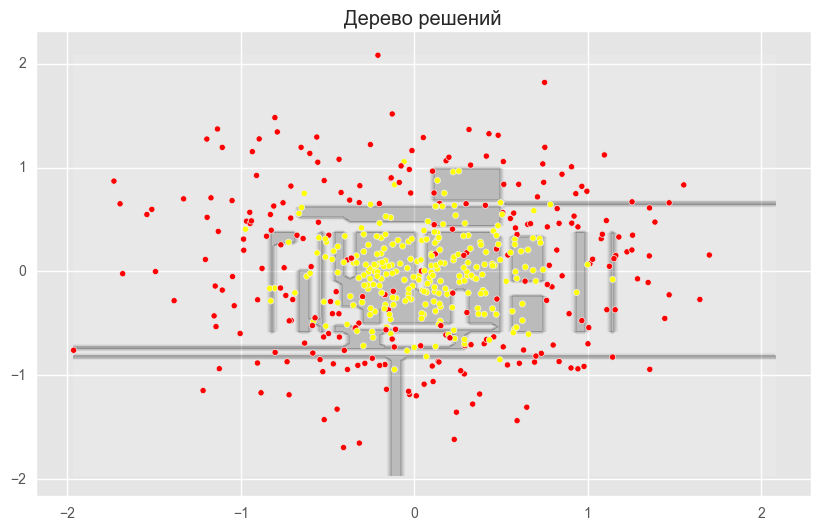

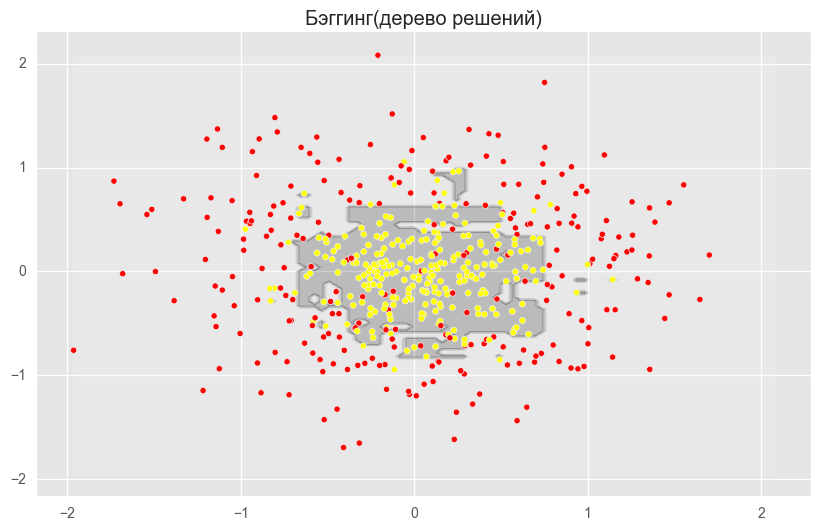

Код для сравнения решающего дерева, бэггинга и случайного леса для задачи классификации

from sklearn.ensemble import RandomForestClassifier, BaggingClassifier

from sklearn.tree import DecisionTreeClassifier

from sklearn.datasets import make_circles

from sklearn.cross_validation import train_test_split

import numpy as np

from matplotlib import pyplot as plt

plt.style.use('ggplot')

plt.rcParams['figure.figsize'] = 10, 6

%matplotlib inline

np.random.seed(42)

X, y = make_circles(n_samples=500, factor=0.1, noise=0.35, random_state=42)

X_train_circles, X_test_circles, y_train_circles, y_test_circles = train_test_split(X, y, test_size=0.2)

dtree = DecisionTreeClassifier(random_state=42)

dtree.fit(X_train_circles, y_train_circles)

x_range = np.linspace(X.min(), X.max(), 100)

xx1, xx2 = np.meshgrid(x_range, x_range)

y_hat = dtree.predict(np.c_[xx1.ravel(), xx2.ravel()])

y_hat = y_hat.reshape(xx1.shape)

plt.contourf(xx1, xx2, y_hat, alpha=0.2)

plt.scatter(X[:,0], X[:,1], c=y, cmap='autumn')

plt.title("Дерево решений")

plt.show()

b_dtree = BaggingClassifier(DecisionTreeClassifier(),n_estimators=300, random_state=42)

b_dtree.fit(X_train_circles, y_train_circles)

x_range = np.linspace(X.min(), X.max(), 100)

xx1, xx2 = np.meshgrid(x_range, x_range)

y_hat = b_dtree.predict(np.c_[xx1.ravel(), xx2.ravel()])

y_hat = y_hat.reshape(xx1.shape)

plt.contourf(xx1, xx2, y_hat, alpha=0.2)

plt.scatter(X[:,0], X[:,1], c=y, cmap='autumn')

plt.title("Бэггинг(дерево решений)")

plt.show()

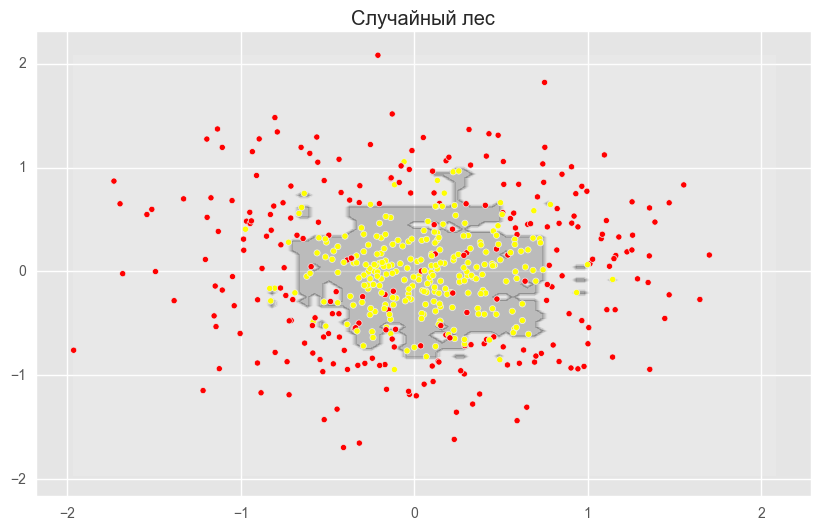

rf = RandomForestClassifier(n_estimators=300, random_state=42)

rf.fit(X_train_circles, y_train_circles)

x_range = np.linspace(X.min(), X.max(), 100)

xx1, xx2 = np.meshgrid(x_range, x_range)

y_hat = rf.predict(np.c_[xx1.ravel(), xx2.ravel()])

y_hat = y_hat.reshape(xx1.shape)

plt.contourf(xx1, xx2, y_hat, alpha=0.2)

plt.scatter(X[:,0], X[:,1], c=y, cmap='autumn')

plt.title("Случайный лес")

plt.show()На рисунках выше видно, что разделяющая граница дерева решений очень «рваная» и на ней много острых углов, что говорит о переобучении и слабой обобщающей способности. В то время как у бэггинга и случайного леса граница достаточно сглаженная и практически нет признаков переобучения.

Давайте теперь попробуем разобраться с параметрами, с помощью подбора которых мы cможем увеличить долю правильных ответов.

Параметры

Метод случайного леса реализован в библиотеке машинного обучения scikit-learn двумя классами RandomForestClassifier и RandomForestRegressor.

Полный список параметров случайного леса для задачи регрессии:

class sklearn.ensemble.RandomForestRegressor(

n_estimators — число деревьев в "лесу" (по дефолту – 10)

criterion — функция, которая измеряет качество разбиения ветки дерева (по дефолту — "mse" , так же можно выбрать "mae")

max_features — число признаков, по которым ищется разбиение. Вы можете указать конкретное число или процент признаков, либо выбрать из доступных значений: "auto" (все признаки), "sqrt", "log2". По дефолту стоит "auto".

max_depth — максимальная глубина дерева (по дефолту глубина не ограничена)

min_samples_split — минимальное количество объектов, необходимое для разделения внутреннего узла. Можно задать числом или процентом от общего числа объектов (по дефолту — 2)

min_samples_leaf — минимальное число объектов в листе. Можно задать числом или процентом от общего числа объектов (по дефолту — 1)

min_weight_fraction_leaf — минимальная взвешенная доля от общей суммы весов (всех входных объектов) должна быть в листе (по дефолту имеют одинаковый вес)

max_leaf_nodes — максимальное количество листьев (по дефолту нет ограничения)

min_impurity_split — порог для остановки наращивания дерева (по дефолту 1е-7)

bootstrap — применять ли бустрэп для построения дерева (по дефолту True)

oob_score — использовать ли out-of-bag объекты для оценки R^2 (по дефолту False)

n_jobs — количество ядер для построения модели и предсказаний (по дефолту 1, если поставить -1, то будут использоваться все ядра)

random_state — начальное значение для генерации случайных чисел (по дефолту его нет, если хотите воспроизводимые результаты, то нужно указать любое число типа int

verbose — вывод логов по построению деревьев (по дефолту 0)

warm_start — использует уже натренированую модель и добавляет деревьев в ансамбль (по дефолту False)

)Для задачи классификации все почти то же самое, мы приведем только те параметры, которыми RandomForestClassifier отличается от RandomForestRegressor

class sklearn.ensemble.RandomForestClassifier(

criterion — поскольку у нас теперь задача классификации, то по дефолту выбран критерий "gini" (можно выбрать "entropy")

class_weight — вес каждого класса (по дефолту все веса равны 1, но можно передать словарь с весами, либо явно указать "balanced", тогда веса классов будут равны их исходным частям в генеральной совокупности; также можно указать "balanced_subsample", тогда веса на каждой подвыборке будут меняться в зависимости от распределения классов на этой подвыборке.

)Далее рассмотрим несколько параметров, на которые в первую очередь стоит обратить внимание при построении модели:

- n_estimators — число деревьев в «лесу»

- criterion — критерий для разбиения выборки в вершине

- max_features — число признаков, по которым ищется разбиение

- min_samples_leaf — минимальное число объектов в листе

- max_depth — максимальная глубина дерева

Рассмотрим применение случайного леса в реальной задаче

Для этого будем использовать пример с задачей оттока клиентов. Это задача классификации, поэтому будем использовать метрику accuracy для оценки качества модели. Для начала построим самый простой классификатор, который будет нашим бейслайном. Возьмем только числовые признаки для упрощения.

Код для построения бейслайна для случайного леса

import pandas as pd

from sklearn.model_selection import cross_val_score, StratifiedKFold, GridSearchCV

from sklearn.metrics import accuracy_score

# Загружаем данные

df = pd.read_csv("../../data/telecom_churn.csv")

# Выбираем сначала только колонки с числовым типом данных

cols = []

for i in df.columns:

if (df[i].dtype == "float64") or (df[i].dtype == 'int64'):

cols.append(i)

# Разделяем на признаки и объекты

X, y = df[cols].copy(), np.asarray(df["Churn"],dtype='int8')

# Инициализируем страифицированную разбивку нашего датасета для валидации

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)

# Инициализируем наш классификатор с дефолтными параметрами

rfc = RandomForestClassifier(random_state=42, n_jobs=-1, oob_score=True)

# Обучаем на тренировочном датасете

results = cross_val_score(rfc, X, y, cv=skf)

# Оцениваем долю верных ответов на тестовом датасете

print("CV accuracy score: {:.2f}%".format(results.mean()*100))Получили долю верных ответов 91.21%, теперь попробуем улучшить этот результат и посмотреть, как ведут себя кривые валидации при изменении основных параметров.

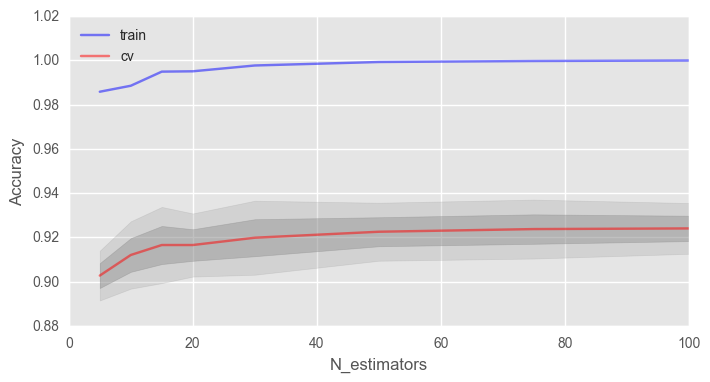

Начнем с количества деревьев:

Код для построения кривых валидации по подбору количества деревьев

# Инициализируем валидацию

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)

# Создаем списки для сохранения точности на тренировочном и тестовом датасете

train_acc = []

test_acc = []

temp_train_acc = []

temp_test_acc = []

trees_grid = [5, 10, 15, 20, 30, 50, 75, 100]

# Обучаем на тренировочном датасете

for ntrees in trees_grid:

rfc = RandomForestClassifier(n_estimators=ntrees, random_state=42, n_jobs=-1, oob_score=True)

temp_train_acc = []

temp_test_acc = []

for train_index, test_index in skf.split(X, y):

X_train, X_test = X.iloc[train_index], X.iloc[test_index]

y_train, y_test = y[train_index], y[test_index]

rfc.fit(X_train, y_train)

temp_train_acc.append(rfc.score(X_train, y_train))

temp_test_acc.append(rfc.score(X_test, y_test))

train_acc.append(temp_train_acc)

test_acc.append(temp_test_acc)

train_acc, test_acc = np.asarray(train_acc), np.asarray(test_acc)

print("Best accuracy on CV is {:.2f}% with {} trees".format(max(test_acc.mean(axis=1))*100,

trees_grid[np.argmax(test_acc.mean(axis=1))]))Код для построения графика кривых валидации

import matplotlib.pyplot as plt

plt.style.use('ggplot')

%matplotlib inline

fig, ax = plt.subplots(figsize=(8, 4))

ax.plot(trees_grid, train_acc.mean(axis=1), alpha=0.5, color='blue', label='train')

ax.plot(trees_grid, test_acc.mean(axis=1), alpha=0.5, color='red', label='cv')

ax.fill_between(trees_grid, test_acc.mean(axis=1) - test_acc.std(axis=1), test_acc.mean(axis=1) + test_acc.std(axis=1), color='#888888', alpha=0.4)

ax.fill_between(trees_grid, test_acc.mean(axis=1) - 2*test_acc.std(axis=1), test_acc.mean(axis=1) + 2*test_acc.std(axis=1), color='#888888', alpha=0.2)

ax.legend(loc='best')

ax.set_ylim([0.88,1.02])

ax.set_ylabel("Accuracy")

ax.set_xlabel("N_estimators")Как видно, при достижении определенного числа деревьев наша доля верных ответов на тесте выходит на асимптоту, и вы можете сами решить, сколько деревьев оптимально для вашей задачи.

На рисунке также видно, что на тренировочной выборке мы смогли достичь 100% точности, это говорит нам о переобучении нашей модели. Чтобы избежать переобучения, мы должны добавить параметры регуляризации в модель.

Начнем с параметра максимальной глубины – max_depth. (зафиксируем к-во деревьев 100)

Код для построения кривых обучения по подбору максимальной глубины дерева

# Создаем списки для сохранения точности на тренировочном и тестовом датасете

train_acc = []

test_acc = []

temp_train_acc = []

temp_test_acc = []

max_depth_grid = [3, 5, 7, 9, 11, 13, 15, 17, 20, 22, 24]

# Обучаем на тренировочном датасете

for max_depth in max_depth_grid:

rfc = RandomForestClassifier(n_estimators=100, random_state=42, n_jobs=-1, oob_score=True, max_depth=max_depth)

temp_train_acc = []

temp_test_acc = []

for train_index, test_index in skf.split(X, y):

X_train, X_test = X.iloc[train_index], X.iloc[test_index]

y_train, y_test = y[train_index], y[test_index]

rfc.fit(X_train, y_train)

temp_train_acc.append(rfc.score(X_train, y_train))

temp_test_acc.append(rfc.score(X_test, y_test))

train_acc.append(temp_train_acc)

test_acc.append(temp_test_acc)

train_acc, test_acc = np.asarray(train_acc), np.asarray(test_acc)

print("Best accuracy on CV is {:.2f}% with {} max_depth".format(max(test_acc.mean(axis=1))*100,

max_depth_grid[np.argmax(test_acc.mean(axis=1))]))Код для построения графика кривых обучения

fig, ax = plt.subplots(figsize=(8, 4))

ax.plot(max_depth_grid, train_acc.mean(axis=1), alpha=0.5, color='blue', label='train')

ax.plot(max_depth_grid, test_acc.mean(axis=1), alpha=0.5, color='red', label='cv')

ax.fill_between(max_depth_grid, test_acc.mean(axis=1) - test_acc.std(axis=1), test_acc.mean(axis=1) + test_acc.std(axis=1), color='#888888', alpha=0.4)

ax.fill_between(max_depth_grid, test_acc.mean(axis=1) - 2*test_acc.std(axis=1), test_acc.mean(axis=1) + 2*test_acc.std(axis=1), color='#888888', alpha=0.2)

ax.legend(loc='best')

ax.set_ylim([0.88,1.02])

ax.set_ylabel("Accuracy")

ax.set_xlabel("Max_depth")Параметр max_depth хорошо справляется с регуляризацией модели, и мы уже не так сильно переобучаемся. Доля верных ответов нашей модели немного возросла.

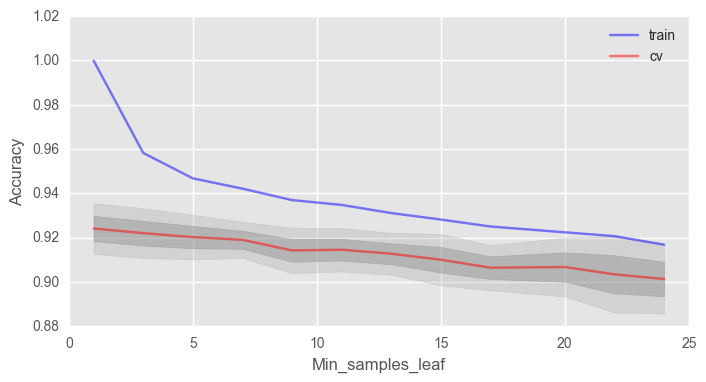

Еще важный параметр min_samples_leaf, он также выполняет функцию регуляризатора.

Код для построения кривых валидации по подбору минимального числа объектов в одном листе дерева

# Создаем списки для сохранения точности на тренировочном и тестовом датасете

train_acc = []

test_acc = []

temp_train_acc = []

temp_test_acc = []

min_samples_leaf_grid = [1, 3, 5, 7, 9, 11, 13, 15, 17, 20, 22, 24]

# Обучаем на тренировочном датасете

for min_samples_leaf in min_samples_leaf_grid:

rfc = RandomForestClassifier(n_estimators=100, random_state=42, n_jobs=-1,

oob_score=True, min_samples_leaf=min_samples_leaf)

temp_train_acc = []

temp_test_acc = []

for train_index, test_index in skf.split(X, y):

X_train, X_test = X.iloc[train_index], X.iloc[test_index]

y_train, y_test = y[train_index], y[test_index]

rfc.fit(X_train, y_train)

temp_train_acc.append(rfc.score(X_train, y_train))

temp_test_acc.append(rfc.score(X_test, y_test))

train_acc.append(temp_train_acc)

test_acc.append(temp_test_acc)

train_acc, test_acc = np.asarray(train_acc), np.asarray(test_acc)

print("Best accuracy on CV is {:.2f}% with {} min_samples_leaf".format(max(test_acc.mean(axis=1))*100,

min_samples_leaf_grid[np.argmax(test_acc.mean(axis=1))]))Код для построения графика кривых валидации

fig, ax = plt.subplots(figsize=(8, 4))

ax.plot(min_samples_leaf_grid, train_acc.mean(axis=1), alpha=0.5, color='blue', label='train')

ax.plot(min_samples_leaf_grid, test_acc.mean(axis=1), alpha=0.5, color='red', label='cv')

ax.fill_between(min_samples_leaf_grid, test_acc.mean(axis=1) - test_acc.std(axis=1), test_acc.mean(axis=1) + test_acc.std(axis=1), color='#888888', alpha=0.4)

ax.fill_between(min_samples_leaf_grid, test_acc.mean(axis=1) - 2*test_acc.std(axis=1), test_acc.mean(axis=1) + 2*test_acc.std(axis=1), color='#888888', alpha=0.2)

ax.legend(loc='best')

ax.set_ylim([0.88,1.02])

ax.set_ylabel("Accuracy")

ax.set_xlabel("Min_samples_leaf")В данном случае мы не выигрываем в точности на валидации, но зато можем сильно уменьшить переобучение до 2% при сохранении точности около 92%.

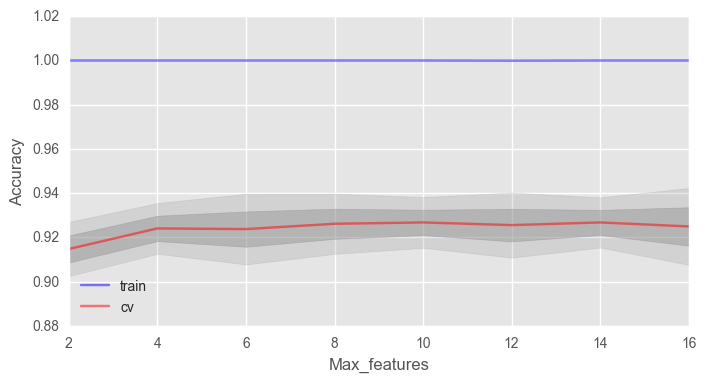

Рассмотрим такой параметр как max_features. Для задач классификации по умолчанию используется , где n — число признаков. Давайте проверим, оптимально ли в нашем случае использовать 4 признака или нет.

Код для построения кривых валидации по подбору максимального количества признаков для одного дерева

# Создаем списки для сохранения точности на тренировочном и тестовом датасете

train_acc = []

test_acc = []

temp_train_acc = []

temp_test_acc = []

max_features_grid = [2, 4, 6, 8, 10, 12, 14, 16]

# Обучаем на тренировочном датасете

for max_features in max_features_grid:

rfc = RandomForestClassifier(n_estimators=100, random_state=42, n_jobs=-1,

oob_score=True, max_features=max_features)

temp_train_acc = []

temp_test_acc = []

for train_index, test_index in skf.split(X, y):

X_train, X_test = X.iloc[train_index], X.iloc[test_index]

y_train, y_test = y[train_index], y[test_index]

rfc.fit(X_train, y_train)

temp_train_acc.append(rfc.score(X_train, y_train))

temp_test_acc.append(rfc.score(X_test, y_test))

train_acc.append(temp_train_acc)

test_acc.append(temp_test_acc)

train_acc, test_acc = np.asarray(train_acc), np.asarray(test_acc)

print("Best accuracy on CV is {:.2f}% with {} max_features".format(max(test_acc.mean(axis=1))*100,

max_features_grid[np.argmax(test_acc.mean(axis=1))]))Код для построения графика кривых валидации

fig, ax = plt.subplots(figsize=(8, 4))

ax.plot(max_features_grid, train_acc.mean(axis=1), alpha=0.5, color='blue', label='train')

ax.plot(max_features_grid, test_acc.mean(axis=1), alpha=0.5, color='red', label='cv')

ax.fill_between(max_features_grid, test_acc.mean(axis=1) - test_acc.std(axis=1), test_acc.mean(axis=1) + test_acc.std(axis=1), color='#888888', alpha=0.4)

ax.fill_between(max_features_grid, test_acc.mean(axis=1) - 2*test_acc.std(axis=1), test_acc.mean(axis=1) + 2*test_acc.std(axis=1), color='#888888', alpha=0.2)

ax.legend(loc='best')

ax.set_ylim([0.88,1.02])

ax.set_ylabel("Accuracy")

ax.set_xlabel("Max_features")В нашем случае оптимальное число признаков — 10, именно с таким значением достигается наилучший результат.

Мы рассмотрели, как ведут себя кривые валидации в зависимости от изменения основных параметров. Давайте теперь с помощью GridSearchCV найдем оптимальные параметры для нашего примера.

Код для подбора оптимальных параметров модели

# Сделаем инициализацию параметров, по которым хотим сделать полный перебор

parameters = {'max_features': [4, 7, 10, 13], 'min_samples_leaf': [1, 3, 5, 7], 'max_depth': [5,10,15,20]}

rfc = RandomForestClassifier(n_estimators=100, random_state=42,

n_jobs=-1, oob_score=True)

gcv = GridSearchCV(rfc, parameters, n_jobs=-1, cv=skf, verbose=1)

gcv.fit(X, y)Лучшая доля верных ответов, который мы смогли достичь с помощью перебора параметров — 92.83% при 'max_depth': 15, 'max_features': 7, 'min_samples_leaf': 3.

Вариация и декорреляционный эффект

Давайте запишем дисперсию для случайного леса как

Тут

– корреляция выборки между любыми двумя деревьями, используемыми при усреднении

где

и

– случайно выбранная пара деревьев на случайно выбранных объектах выборки

— это выборочная дисперсия любого произвольно выбранного дерева:

Легко спутать

со средней корреляцией между обученными деревьями в данном случайном лесе, рассматривая деревья как N-векторы и вычисляя среднюю парную корреляцию между ними. Это не тот случай. Эта условная корреляция не имеет прямого отношения к процессу усреднения, а зависимость от

в

предупреждает нас об этом различии. Скорее

является теоретической корреляцией между парой случайных деревьев, оцененных в объекте

, которая была вызвана многократным сэмплированием обучающей выборки из генеральной совокупности

, и после этого выбрана данная пара случайных деревьев. На статистическом жаргоне это корреляция, вызванная выборочным распределением

и

.

По факту, условная ковариация пары деревьев равна 0, потому что бустрэп и отбор признаков — независимы и одинаково распределены.

Если рассмотреть дисперсию по одному дереву, то она практически не меняется от переменных для разделения (

), а вот для ансамбля это играет большую роль, и дисперсия для дерева намного выше, чем для ансамбля.

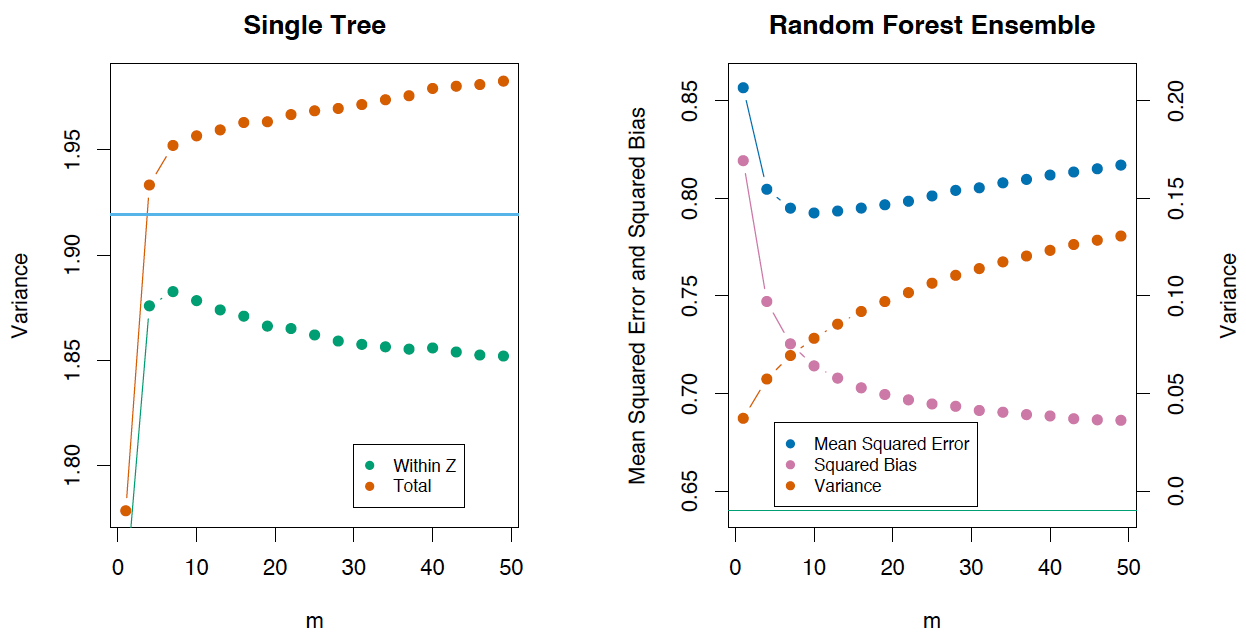

В книге The Elements of Statistical Learning (Trevor Hastie, Robert Tibshirani и Jerome Friedman) есть отличный пример, который это демонстрирует.

Смещение

Как и в бэггинге, смещение в случайном лесе такое же, как и смещение в отдельно взятом дереве

:

Это также обычно больше (в абсолютных величинах), чем смещение «неусеченного» (unprunned) дерева, поскольку рандомизация и сокращение пространства выборки налагают ограничения. Следовательно, улучшения в прогнозировании, полученные с помощью бэггинга или случайных лесов, являются исключительно результатом уменьшения дисперсии.

Сверхслучайные деревья

В сверхслучайных деревьях (Extremely Randomized Trees) больше случайности в том, как вычисляются разделения в узлах. Как и в случайных лесах, используется случайное подмножество возможных признаков, но вместо поиска наиболее оптимальных порогов, пороговые значения произвольно выбираются для каждого возможного признака, и наилучший из этих случайно генерируемых порогов выбирается как лучшее правило для разделения узла. Это обычно позволяет немного уменьшить дисперсию модели за счет несколько большего увеличения смещения.

В библиотеке scikit-learn есть реализация ExtraTreesClassifier и ExtraTreesRegressor. Данный метод стоит использовать, когда вы сильно переобучаетесь на случайном лесе или градиентном бустинге.

Схожесть случайного леса с алгоритмом k-ближайших соседей

Метод случайного леса схож с методом ближайших соседей. Случайные леса, по сути, осуществляют предсказания для объектов на основе меток похожих объектов из обучения. Схожесть объектов при этом тем выше, чем чаще эти объекты оказываются в одном и том же листе дерева. Покажем это формально.

Рассмотрим задачу регрессии с квадратичной функцией потерь. Пусть

— номер листа

-го дерева из случайного леса, в который попадает объект

. Ответ объекта

равен среднему ответу по всем объектам обучающей выборки, которые попали в этот лист

. Это можно записать так

где

Тогда ответ композиции равен

Видно, что ответ случайного леса представляет собой сумму ответов всех объектов обучения с некоторыми весами. Отметим, что номер листа

, в который попал объект, сам по себе является ценным признаком. Достаточно неплохо работает подход, в котором по выборке обучается композиция из небольшого числа деревьев с помощью случайного леса или градиентного бустинга, а потом к ней добавляются категориальные признаки

. Новые признаки являются результатом нелинейного разбиения пространства и несут в себе информацию о сходстве объектов.

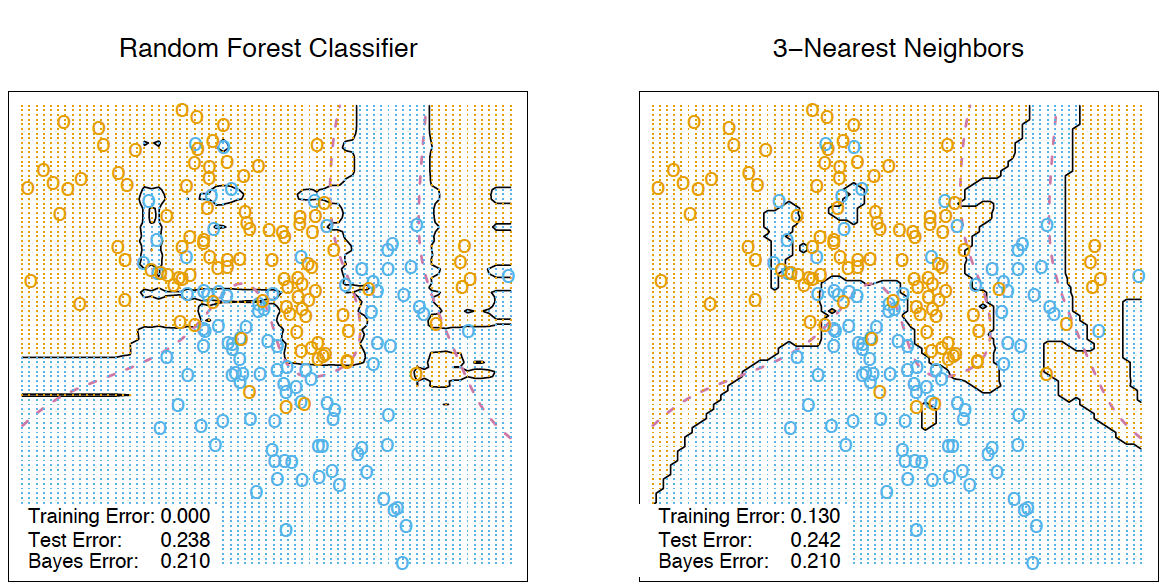

Все в той же книге The Elements of Statistical Learning есть хороший наглядный пример сходства случайного леса и k-ближайших соседей.

Преобразование признаков в многомерное пространство

Все привыкли использовать случайный лес для задач обучения с учителем, но также есть возможность проводить обучение и без учителя. С помощью метода RandomTreesEmbedding мы можем сделать трансформацию нашего датасета в многомерное разреженное его представление. Его суть в том, что мы строим абсолютно случайные деревья, и индекс листа, в котором оказалось наблюдение, мы считаем за новый признак. Если в первый лист попал объект, то мы ставим 1, а если не попал, то 0. Так называемое бинарное кодирование. Контролировать количество переменных и также степень разреженности нашего нового представления датасета мы можем увеличивая/уменьшая количество деревьев и их глубины. Поскольку соседние точки данных скорее всего лежат в одном и том же листе дерева, преобразование выполняет неявную, непараметрическую оценку плотности.

3. Оценка важности признаков

Очень часто вы хотите понять свой алгоритм, почему он именно так, а не иначе дал определенный ответ. Или если не понять его полностью, то хотя бы какие переменные больше всего влияют на результат. Из случайного леса можно довольно просто получить данную информацию.

Суть метода

По данной картинке интуитивно понятно, что важность признака «Возраст» в задаче кредитного скоринга выше, чем важность признака «Доход». Формализуется это с помощью понятия прироста информации.

Если построить много деревьев решений (случайный лес), то чем выше в среднем признак в дереве решений, тем он важнее в данной задаче классификации/регрессии. При каждом разбиении в каждом дереве улучшение критерия разделения (в нашем случае неопределенность Джини(Gini impurity)) — это показатель важности, связанный с переменной разделения, и накапливается он по всем деревьям леса отдельно для каждой переменной.

Давайте немного углубимся в детали. Среднее снижение точности, вызываемое переменной, определяется во время фазы вычисления out-of-bag ошибки. Чем больше уменьшается точность предсказаний из-за исключения (или перестановки) одной переменной, тем важнее эта переменная, и поэтому переменные с бо́льшим средним уменьшением точности более важны для классификации данных. Среднее уменьшение неопределенности Джини (или ошибки mse в задачах регрессии) является мерой того, как каждая переменная способствует однородности узлов и листьев в окончательной модели случайного леса. Каждый раз, когда отдельная переменная используется для разбиения узла, неопределенность Джини для дочерних узлов рассчитывается и сравнивается с коэффициентом исходного узла. Неопределенность Джини является мерой однородности от 0 (однородной) до 1 (гетерогенной). Изменения в значении критерия разделения суммируются для каждой переменной и нормируются в конце вычисления. Переменные, которые приводят к узлам с более высокой чистотой, имеют более высокое снижение коэффициента Джини.

А теперь представим все вышеописанное в виде формул.

— предсказание класса перед перестановкой/удалением признака

— предсказание класса после перестановки/удаления признака

Заметим, что, если

не находится в дереве

Расчет важности признаков в ансамбле:

— ненормированные— нормированные

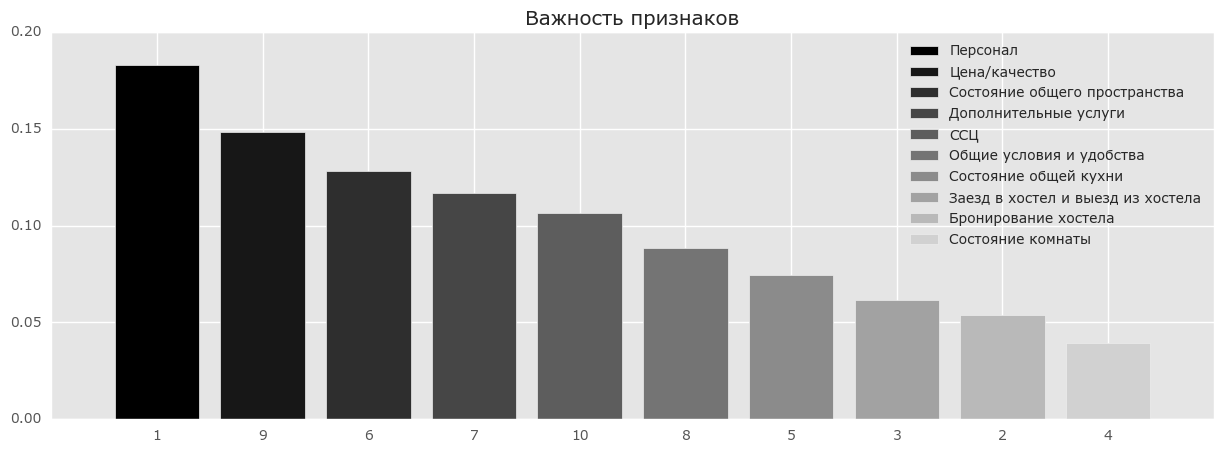

Пример

Рассмотрим результаты анкетирования посетителей хостелов с сайтов Booking.com и TripAdvisor.com. Признаки — средние оценки по разным факторам (перечислены ниже) — персонал, состояние комнат и т.д. Целевой признак — рейтинг хостела на сайте.

Код для оценки важности признаков

from __future__ import division, print_function # отключим всякие предупреждения Anaconda import warnings warnings.filterwarnings('ignore') %pylab inline import seaborn as sns # russian headres from matplotlib import rc font = {'family': 'Verdana', 'weight': 'normal'} rc('font', **font) import pandas as pd import numpy as np from sklearn.ensemble.forest import RandomForestRegressor hostel_data = pd.read_csv("../../data/hostel_factors.csv") features = {"f1":u"Персонал", "f2":u"Бронирование хостела ", "f3":u"Заезд в хостел и выезд из хостела", "f4":u"Состояние комнаты", "f5":u"Состояние общей кухни", "f6":u"Состояние общего пространства", "f7":u"Дополнительные услуги", "f8":u"Общие условия и удобства", "f9":u"Цена/качество", "f10":u"ССЦ"} forest = RandomForestRegressor(n_estimators=1000, max_features=10, random_state=0) forest.fit(hostel_data.drop(['hostel', 'rating'], axis=1), hostel_data['rating']) importances = forest.feature_importances_ indices = np.argsort(importances)[::-1] # Plot the feature importancies of the forest num_to_plot = 10 feature_indices = [ind+1 for ind in indices[:num_to_plot]] # Print the feature ranking print("Feature ranking:") for f in range(num_to_plot): print("%d. %s %f " % (f + 1, features["f"+str(feature_indices[f])], importances[indices[f]])) plt.figure(figsize=(15,5)) plt.title(u"Важность конструктов") bars = plt.bar(range(num_to_plot), importances[indices[:num_to_plot]], color=([str(i/float(num_to_plot+1)) for i in range(num_to_plot)]), align="center") ticks = plt.xticks(range(num_to_plot), feature_indices) plt.xlim([-1, num_to_plot]) plt.legend(bars, [u''.join(features["f"+str(i)]) for i in feature_indices]);

На рисунке выше видно, что люди больше всего обращают внимание на персонал и соотношение цена/качество и на основе впечатления от данных вещей пишут свои отзывы. Но разница между этими признаками и менее влиятельными признаками не очень значительная, и выкидывание какого-то признака приведет к уменьшению точности нашей модели. Но даже на основе нашего анализа мы можем дать рекомендации отелям в первую очередь лучше готовить персонал и/или улучшить качество до заявленной цены.4. Плюсы и минусы случайного леса

Плюсы:

— имеет высокую точность предсказания, на большинстве задач будет лучше линейных алгоритмов; точность сравнима с точностью бустинга

— практически не чувствителен к выбросам в данных из-за случайного сэмлирования

— не чувствителен к масштабированию (и вообще к любым монотонным преобразованиям) значений признаков, связано с выбором случайных подпространств

— не требует тщательной настройки параметров, хорошо работает «из коробки». С помощью «тюнинга» параметров можно достичь прироста от 0.5 до 3% точности в зависимости от задачи и данных

— способен эффективно обрабатывать данные с большим числом признаков и классов

— одинаково хорошо обрабатывет как непрерывные, так и дискретные признаки

— редко переобучается, на практике добавление деревьев почти всегда только улучшает композицию, но на валидации, после достижения определенного количества деревьев, кривая обучения выходит на асимптоту

— для случайного леса существуют методы оценивания значимости отдельных признаков в модели

— хорошо работает с пропущенными данными; сохраняет хорошую точность, если большая часть данных пропущенна

— предполагает возможность сбалансировать вес каждого класса на всей выборке, либо на подвыборке каждого дерева

— вычисляет близость между парами объектов, которые могут использоваться при кластеризации, обнаружении выбросов или (путем масштабирования) дают интересные представления данных

— возможности, описанные выше, могут быть расширены до неразмеченных данных, что приводит к возможности делать кластеризацию и визуализацию данных, обнаруживать выбросы

— высокая параллелизуемость и масштабируемость.Минусы:

— в отличие от одного дерева, результаты случайного леса сложнее интерпретировать

— нет формальных выводов (p-values), доступных для оценки важности переменных

— алгоритм работает хуже многих линейных методов, когда в выборке очень много разреженных признаков (тексты, Bag of words)

— случайный лес не умеет экстраполировать, в отличие от той же линейной регрессии (но это можно считать и плюсом, так как не будет экстремальных значений в случае попадания выброса)

— алгоритм склонен к переобучению на некоторых задачах, особенно на зашумленных данных

— для данных, включающих категориальные переменные с различным количеством уровней, случайные леса предвзяты в пользу признаков с большим количеством уровней: когда у признака много уровней, дерево будет сильнее подстраиваться именно под эти признаки, так как на них можно получить более высокое значение оптимизируемого функционала (типа прироста информации)

— если данные содержат группы коррелированных признаков, имеющих схожую значимость для меток, то предпочтение отдается небольшим группам перед большими

— больший размер получающихся моделей. Требуетсяпамяти для хранения модели, где

— число деревьев.

5. Домашнее задание

В качестве закрепления материала предлагаем выполнить это задание – разобраться с бэггингом и обучить модели случайного леса и логистической регрессии для решения задачи кредитного скоринга. Проверить себя можно отправив ответы в веб-форме (там же найдете и решение).

Актуальные и обновляемые версии демо-заданий – на английском на сайте курса, вот первое задание. Также по подписке на Patreon («Bonus Assignments» tier) доступны расширенные домашние задания по каждой теме (только на англ.).

6. Полезные источники

– Open Machine Learning Course. Topic 5. Bagging and Random Forest (перевод этой статьи на английский)

– Видеозапись лекции по мотивам этой статьи

– 15 раздел книги “Elements of Statistical Learning” Jerome H. Friedman, Robert Tibshirani, and Trevor Hastie

– Блог Александра Дьяконова

– Больше про практические применение случайного леса и других алгоритмов-композиций в официальной документации scikit-learn

– Курс Евгения Соколова по машинному обучению (материалы на GitHub). Есть дополнительные практические задания для углубления ваших знаний

– Обзорная статья «История развития ансамблевых методов классификации в машинном обучении» (Ю. Кашницкий)Статья написана в соавторстве с yorko (Юрием Кашницким). Автор домашнего задания – vradchenko (Виталий Радченко). Благодарю bauchgefuehl (Анастасию Манохину) за редактирование.

A random forest is an ensemble machine-learning model that is composed of multiple decision trees. A decision tree is a model that makes predictions by learning a series of simple decision rules based on the features of the data. A random forest combines the predictions of multiple decision trees to make more accurate and robust predictions.

Random Forests are often used for classification and regression tasks. In classification, the goal is to predict the class label (e.g., “cat” or “dog”) of each sample in the dataset. In regression, the goal is to predict a continuous target variable (e.g., the price of a house) based on the features of the data.

Random forests are popular because they are easy to train, can handle high-dimensional data, and are highly accurate. They also have the ability to handle missing values and can handle imbalanced datasets, where some classes are more prevalent than others.

To train a random forest, you need to specify the number of decision trees to use (the n_estimators parameter) and the maximum depth of each tree (the max_depth parameter). Other hyperparameters, such as the minimum number of samples required to split a node and the minimum number of samples required at a leaf node, can also be specified.

Once the random forest is trained, you can use it to make predictions on new data. To make a prediction, the random forest uses the predictions of the individual decision trees and combines them using a majority vote or an averaging technique.

What is the difference between the OOB Score and the Validation score?

OOB (out-of-bag) score is a performance metric for a machine learning model, specifically for ensemble models such as random forests. It is calculated using the samples that are not used in the training of the model, which is called out-of-bag samples. These samples are used to provide an unbiased estimate of the model’s performance, which is known as the OOB score.

The validation score, on the other hand, is the performance of the model on a validation dataset. This dataset is different from the training dataset and is used to evaluate the model’s performance after it has been trained on the training dataset.

In summary, the OOB score is calculated using out-of-bag samples and is a measure of the model’s performance on unseen data. The validation score, on the other hand, is a measure of the model’s performance on a validation dataset, which is a set of samples that the model has not seen during training.

OOB (out-of-bag) Errors

OOB (out-of-bag) errors are an estimate of the performance of a random forest classifier or regressor on unseen data. In scikit-learn, the OOB error can be obtained using the oob_score_ attribute of the random forest classifier or regressor.

The OOB error is computed using the samples that were not included in the training of the individual trees. This is different from the error computed using the usual training and validation sets, which are used to tune the hyperparameters of the random forest.

The OOB error can be useful for evaluating the performance of the random forest on unseen data. It is not always a reliable estimate of the generalization error of the model, but it can provide a useful indication of how well the model is performing.

Implementation of OOB Errors for Random Forests

To compute the OOB error, the samples that are not used in the training of an individual tree are known as “out-of-bag” samples. These samples are not used in the training of the tree, but they are used to compute the OOB error for that tree. The OOB error for the entire random forest is computed by averaging the OOB errors of the individual trees.

To install NumPy and scikit-learn, you can use the following commands:

pip install numpy pip install scikit-learn

These commands will install the latest versions of NumPy and scikit-learn from the Python Package Index (PyPI). To use the oob_score_ attribute, you must set the oob_score parameter to True when creating the random forest classifier or regressor.

Python3

clf = RandomForestClassifier(n_estimators=100,

oob_score=True,

random_state=0)

Once the random forest classifier or regressor is trained on the data, the oob_score_ attribute will contain the OOB error. For example:

Python3

clf.fit(X, y)

oob_error = 1 - clf.oob_score_

Here is the complete code of how to obtain the OOB error of a random forest classifier in scikit-learn:

Python3

import numpy as np

from sklearn.ensemble import RandomForestClassifier

from sklearn.datasets import make_classification

X, y = make_classification(n_samples=1000,

n_features=10,

n_informative=5,

n_classes=2,

random_state=0)

clf = RandomForestClassifier(n_estimators=100,

oob_score=True,

random_state=0)

clf.fit(X, y)

oob_error = 1 - clf.oob_score_

print(f'OOB error: {oob_error:.3f}')

Output:

OOB error: 0.044

This code will create a random forest classifier, fit it to the data, and obtain the OOB error using the oob_score_ attribute. The oob_score_ attribute will only be available if the oob_score parameter was set to True when creating the classifier.

What are some of the use cases of the OOB error?

One of the main use cases of the OOB error is to evaluate the performance of an ensemble model, such as a random forest. Because the OOB error is calculated using out-of-bag samples, which are samples that are not used in the training of the model, it provides an unbiased estimate of the model’s performance.

Another use case of the OOB error is to tune the hyperparameters of a model. By using the OOB error as a performance metric, the hyperparameters of the model can be adjusted to improve its performance on unseen data.

Additionally, the OOB error can be used to diagnose whether a model is overfitting or underfitting. If the OOB error is significantly higher than the validation score, it may indicate that the model is overfitting and not generalizing well to unseen data. On the other hand, if the OOB error is significantly lower than the validation score, it may indicate that the model is underfitting and not learning the underlying patterns in the data.

Overall, the OOB error is a useful tool for evaluating the performance of an ensemble model and for diagnosing issues such as overfitting and underfitting.

-

Loading metrics

Open Access

Peer-reviewed

Research Article

-

Roman Hornung

On the overestimation of random forest’s out-of-bag error

- Silke Janitza,

- Roman Hornung

x

- Published: August 6, 2018

- https://doi.org/10.1371/journal.pone.0201904

Figures

Abstract

The ensemble method random forests has become a popular classification tool in bioinformatics and related fields. The out-of-bag error is an error estimation technique often used to evaluate the accuracy of a random forest and to select appropriate values for tuning parameters, such as the number of candidate predictors that are randomly drawn for a split, referred to as mtry. However, for binary classification problems with metric predictors it has been shown that the out-of-bag error can overestimate the true prediction error depending on the choices of random forests parameters. Based on simulated and real data this paper aims to identify settings for which this overestimation is likely. It is, moreover, questionable whether the out-of-bag error can be used in classification tasks for selecting tuning parameters like mtry, because the overestimation is seen to depend on the parameter mtry. The simulation-based and real-data based studies with metric predictor variables performed in this paper show that the overestimation is largest in balanced settings and in settings with few observations, a large number of predictor variables, small correlations between predictors and weak effects. There was hardly any impact of the overestimation on tuning parameter selection. However, although the prediction performance of random forests was not substantially affected when using the out-of-bag error for tuning parameter selection in the present studies, one cannot be sure that this applies to all future data. For settings with metric predictor variables it is therefore strongly recommended to use stratified subsampling with sampling fractions that are proportional to the class sizes for both tuning parameter selection and error estimation in random forests. This yielded less biased estimates of the true prediction error. In unbalanced settings, in which there is a strong interest in predicting observations from the smaller classes well, sampling the same number of observations from each class is a promising alternative.

Citation: Janitza S, Hornung R (2018) On the overestimation of random forest’s out-of-bag error. PLoS ONE 13(8):

e0201904.

https://doi.org/10.1371/journal.pone.0201904

Editor: Y-h. Taguchi,

Chuo University, JAPAN

Received: January 15, 2018; Accepted: July 24, 2018; Published: August 6, 2018

Copyright: © 2018 Janitza, Hornung. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: All used data are third party data not owned or collected by us. However, all data sets can be accessed by readers using the links provided below in the same manner as we accessed these data. The Colon Cancer data, the Prostate Cancer data and both Breast Cancer data sets were obtained from the website http://ligarto.org/rdiaz/Papers/rfVS/randomForestVarSel.html. The Embryonal Tumor data is available at http://portals.broadinstitute.org/cgi-bin/cancer/datasets.cgi, and the Leukemia data was retrieved from the Bioconductor package golubEsets (URL http://bioconductor.org/packages/release/data/experiment/html/golubEsets.html).

Funding: SJ and RH were both funded by grant BO3139/6-1 from the German Science Foundation (URL http://www.dfg.de). SJ was in addition supported by grant BO3139/2-2 from the German Science Foundation. The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

Introduction

Random forests (RF) [1] have become a popular classification tool in bioinformatics and related fields. They have also shown excellent performance in very complex data settings. Each tree in a RF is constructed based on a random sample of the observations, usually a bootstrap sample or a subsample of the original data. The observations that are not part of the bootstrap sample or subsample, respectively, are referred to as out-of-bag (OOB) observations. The OOB observations can be used for example for estimating the prediction error of RF, yielding the so-called OOB error. The OOB error is often used for assessing the prediction performance of RF. An advantage of the OOB error is that the complete original sample is used both for constructing the RF classifier and for error estimation. By contrast, with cross-validation and related data splitting procedures for error estimation a subset of the samples are left out for RF construction, which is why the resulting RF classifiers are less performant. Another advantage of using the OOB error is its computational speed. In contrast to cross-validation or other data splitting approaches, only one RF has to be constructed, while for k-fold cross-validation k RF have to be constructed [2, 3]. The use of the OOB error saves memory and computation time, especially when dealing with large data dimensions, where constructing a single RF might last several days or even weeks. These reasons might explain the frequent use of the OOB error for error estimation and tuning parameter selection in RF.

The OOB error is often claimed to be an unbiased estimator for the true error rate [1, 3, 4]. However, for two-class classification problems it was reported that the OOB error can overestimate the true prediction error depending on the choices of RF parameters [2, 5]. The bias can be very substantial, as shown in the latter papers, and is also present when using classical cross-validation procedures for error estimation. It was thus recommended by Mitchell [5] to use the OOB error only as an upper bound for the true prediction error. However, Mitchell [5] considered only settings with completely balanced samples, sample sizes below 60 and two response classes, limiting the generality of his results.

Besides the fact that trees in RF are constructed on a random sample of the data, there is a second component which differs between standard classification and regression trees and the trees in RF. In the trees of a RF, not all variables but only a subset of the variables are considered for each split. This subset is randomly drawn from all candidate predictors at each split. The size of this subset is usually referred to as mtry. The purpose of considering random subsets of the predictors instead of all predictors is to reduce the correlation between the trees, that is, to make them more dissimilar. This reduces the variance of the predictions obtained using RF. In practical applications, the most common approach for choosing appropriate values for mtry is to select the value over a grid of plausible values that yields the smallest OOB error [6–8]. In literature on RF methodology, the OOB error has also frequently been used to choose an appropriate value for mtry [9, 10]. In principle, other procedures like (repeated) cross-validation may be applied for selecting an optimal value for mtry, but the OOB error is usually the first choice for parameter tuning. This is due to the fact that, unlike many other approaches such as cross-validation, the whole data can be used to construct the RF and much computational effort is saved since only one RF has to be built for each candidate mtry value. Implementations exist that use the OOB error to select an appropriate value for mtry. In the statistical software R [11], for example, the function tuneRF from the package randomForest [12] automatically searches over a grid of mtry values and selects the value for mtry for which the OOB error is smallest. The latter approach is only valid, if the bias of the OOB error does not depend on the parameter mtry. If, by contrast, the bias of the OOB error does depend on this parameter, the mtry value minimizing the OOB error cannot be expected to minimize the true prediction error and will thus be in general suboptimal, making the OOB error based tuning approaches questionable. To date there are no studies investigating the reliability of the OOB error for tuning parameters like mtry in RF.

The main contribution of this paper is three-fold: (i) the bias and its dependence on mtry in settings with metric predictor variables are quantitatively assessed through studies with different numbers of observations, predictors and response classes which helps to identify so-called “high-risk settings”, (ii) the reasons for this bias and its dependence on mtry are studied in detail, and based on these findings, the use of alternatives, such as stratified sampling (which preserves the response class distribution of the original data in each subsample), is investigated, and (iii) the consequences of the bias for tuning parameter selection are explored.

This paper is structured as follows: In the section “Methods”, simulation-based and real-data based studies are described after briefly introducing the RF method. The description includes an outline of the simulated and real data, the considered settings and several different error estimation techniques that will be used. The results of the studies are subsequently shown in the section “Results”, where also some additional studies are presented, and finally recommendations are given. The results are discussed in the section “Discussion” and the main points are condensed in the section “Conclusions”.

Methods

In this section, the RF method and the simulation-based and real-data based studies are described. Simulated data is used to study the behavior of the OOB error in simple settings, in which all predictors are uncorrelated. This provides insight to the mechanisms which lead to the bias in the OOB error. Based on these results, settings are identified, in which a bias in the OOB error is likely. Subsequently, to assess the extent of the bias in these settings in practice, complex real world data is used.

Random forests and its out-of-bag error

RF is an ensemble of classification or regression trees that was introduced by Breiman [1]. One of the two random components in RF concerns the choice of variables used for splitting. For each split in a tree, the best splitting variable from a random sample of mtry predictors is selected. If the chosen mtry value is too small, it might be that none of the variables contained in the subset is relevant and that irrelevant variables are often selected for a split. The resulting trees have poor predictive ability. If the subset contains a large number of predictors, in contrast, it is likely that the same variables, namely those with the largest effect, are often selected for a split and that variables with smaller effects have hardly any chance of being selected. Therefore, mtry should be considered a tuning parameter.

The other random component in RF concerns the choice of training observations for a tree. Each tree in RF is built from a random sample of the data. This is usually a bootstrap sample or a subsample of size 0.632n. Therefore not all observations are used to construct a specific tree. The observations that are not used to construct a tree are denoted by out-of-bag (OOB) observations. In a RF, each tree is built from a different sample of the original data, so each observation is “out-of-bag” for some of the trees. The prediction for an observation can then be obtained by using only those trees for which the observation was not used for the construction. A classification for each observation is obtained in this way and the error rate can be estimated from these predictions. The resulting error rate is referred to as OOB error. This procedure was originally introduced by Breiman [13] and it has become an established method for error estimation in RF.

Simulation-based studies

The upward bias of the OOB error in different data settings with metric predictor variables was systematically investigated by means of simulation studies.

Settings were considered with

- different associations between the predictors and the response. Either none of the predictors were associated with the response (the corresponding studies termed null case) or some of them were associated (power case);

- different numbers of predictors, p ∈ {10, 100, 1000};