-

#1

We have been getting the error "TASK ERROR: timeout waiting on systemd" on shutdowns/startups for backups/snapshots. This is occurring on multiple PVE servers and multiple VMs (both Ubuntu and Windows VMs), and it has caused backups to fail. This issue has been discussed on the user forums for a while, but I didn't find a solution.

This began after our upgrade from 5.x to 6.x. It is affecting Linux systems which are NOT running the NTFS file system, as well as Windows 10 systems. Because you have announced end-of-life for 5.x, downgrading to 5.x is apparently no longer an option. We have (as a temporary measure) written and cron'ed babysitting scripts, but this really should not be necessary.

Servers are HP ProLiant Gen9, with either single or dual Xeon processors, and plenty of RAM, well above the total usage of all running VMs. One location is running Proxmox on an HP workstation PC. Most VMs are Ubuntu 18.04 or Windows 10 1909.

-

#2

@wolfgang recently managed to reproduce this issue: if the load on the DBus system bus is high, we can't talk to systemd and can't start VMs. There are some settings we can tweak to improve the situation; Wolfgang is working on finalizing proper patches.

Do you by chance have a service running that communicates a lot over DBus? Known causes we have found so far are udisks2 or prometheus running on the nodes. You can check with 'dbus-monitor --system'; if you are not currently starting/stopping VMs, it should be (near) silent.
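A minimal sketch for turning "(near) silent" into a number: capture the host's system bus for a fixed window and count the messages. It assumes dbus-monitor's default output format, where each message begins with an unindented header line starting with signal, method call, method return or error; the function names and the 10-second default window are illustrative, not part of PVE.

```shell
#!/bin/sh
# Count D-Bus messages in dbus-monitor output read from stdin.
# In the default output format every message starts with an unindented
# header line naming its type; argument lines below it are indented.
count_dbus_messages() {
    # grep -c prints 0 but exits 1 when nothing matches; keep exit status 0.
    grep -cE '^(signal|method call|method return|error) ' || true
}

# Sample the system bus on the host for N seconds (default 10) and print
# the message count. Needs root; timeout(1) ends dbus-monitor after N seconds.
sample_system_bus() {
    timeout "${1:-10}" dbus-monitor --system 2>/dev/null | count_dbus_messages
}
```

Run "sample_system_bus 10" on the PVE host while no VM is being started or stopped; a count that stays high run after run would point at a chatty service on the bus rather than at PVE itself.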

-

#3

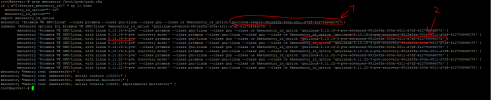

Here is the result of running 'dbus-monitor --system' on one of the affected VMs.


-

#4

No, not in the VM. On the host, PVE talks to systemd via DBus when starting a VM; if DBus does not work because of an overload situation, we can't start the VM.

-

#5

We also appear to be experiencing the same problem. We have some Windows VMs (it appears to primarily affect Active Directory role-related hosts, such as DCs and a dedicated Azure Connect instance, although we primarily host Linux VMs on the affected cluster). We are most probably not observing this problem as much on our other production clusters because the VMs there very rarely get stopped and started as a KVM guest as a whole (Windows Update restarts don't cause the whole VM container to get restarted).

I initially found troubleshooting steps in the following thread:

https://forum.proxmox.com/threads/vm-doesnt-start-proxmox-6-timeout-waiting-on-systemd.56218/

Code:

[admin@kvm1a ~]# systemctl status qemu.slice

● qemu.slice

Loaded: loaded

Active: active since Tue 2020-12-22 12:29:36 SAST; 1 weeks 4 days ago

Tasks: 189

Memory: 230.9G

CGroup: /qemu.slice

<snip>

└─144.scope

└─9941 [kvm]

Dec 22 12:31:57 kvm1a QEMU[4935]: kvm: warning: TSC frequency mismatch between VM (2499998 kHz) and host (2499999 kHz), and TSC scaling unavailable

Dec 22 12:31:57 kvm1a QEMU[4935]: kvm: warning: TSC frequency mismatch between VM (2499998 kHz) and host (2499999 kHz), and TSC scaling unavailable

Dec 22 12:31:57 kvm1a QEMU[4935]: kvm: warning: TSC frequency mismatch between VM (2499998 kHz) and host (2499999 kHz), and TSC scaling unavailable

Dec 22 12:31:57 kvm1a QEMU[4935]: kvm: warning: TSC frequency mismatch between VM (2499998 kHz) and host (2499999 kHz), and TSC scaling unavailable

Dec 22 12:31:57 kvm1a QEMU[4935]: kvm: warning: TSC frequency mismatch between VM (2499998 kHz) and host (2499999 kHz), and TSC scaling unavailable

Dec 22 12:36:30 kvm1a ovs-vsctl[9976]: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl del-port tap144i0

Dec 22 12:36:30 kvm1a ovs-vsctl[9976]: ovs|00002|db_ctl_base|ERR|no port named tap144i0

Dec 22 12:36:30 kvm1a ovs-vsctl[9977]: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl del-port fwln144i0

Dec 22 12:36:30 kvm1a ovs-vsctl[9977]: ovs|00002|db_ctl_base|ERR|no port named fwln144i0

Dec 22 12:36:30 kvm1a ovs-vsctl[9978]: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl -- add-port vmbr0 tap144i0 tag=1 vlan_mode=dot1q-tunnel other-config:qi

All VMs running normally typically have a long line of options following the scope's KVM instance. They also typically start as:

<PID> /usr/bin/kvm -id xxx -name yyy…

Here, '[kvm]' instead identifies the process as defunct, which the process information confirms:

Code:

[admin@kvm1a ~]# ps -Flww -p 9941

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

7 Z admin 9941 1 4 80 0 - 0 - 0 2 2020 ? 14:13:38 [kvm] <defunct>

One can't kill the process, so one essentially has to move all other VMs off the host and then restart it:

Code:

[admin@kvm1a ~]# systemctl stop 144.scope;

[admin@kvm1a ~]# telinit u; # Restart init just in case it's causing the problem (highly unlikely)

[admin@kvm1a ~]# ps -Flww -p 9941;

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

7 Z admin 9941 1 4 80 0 - 0 - 0 2 2020 ? 14:13:38 [kvm] <defunct>

[admin@kvm1a ~]# kill -9 9941;

[admin@kvm1a ~]# ps -Flww -p 9941;

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

7 Z admin 9941 1 4 80 0 - 0 - 0 2 2020 ? 14:13:38 [kvm] <defunct>

[admin@kvm1a ~]# cd /proc/9941/task;

[admin@kvm1a task]# kill *;

[admin@kvm1a task]# for f in *; do ps -Flww -p $f; done;

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

F S UID PID PPID C PRI NI ADDR SZ WCHAN RSS PSR STIME TTY TIME CMD

7 Z admin 9941 1 4 80 0 - 0 - 0 2 2020 ? 14:13:38 [kvm] <defunct>

Code:

[admin@kvm1a log]# cat /etc/pve/qemu-server/144.conf

agent: 1

bios: ovmf

boot: cdn

bootdisk: scsi0

cores: 1

cpu: SandyBridge,flags=+pcid

efidisk0: rbd_hdd:vm-144-disk-1,size=1M

ide2: none,media=cdrom

localtime: 1

memory: 4096

name: redacted

net0: virtio=DE:AD:BEE:EEF:DE:AD,bridge=vmbr0,tag=1

numa: 1

onboot: 1

ostype: win10

protection: 1

scsi0: rbd_hdd:base-116-disk-0/vm-144-disk-0,cache=writeback,discard=on,size=80G,ssd=1

scsihw: virtio-scsi-pci

smbios1: uuid=deadbeef-dead-beef-dead-beefdeadbeef

sockets: 2

The system is running OvS with LACP-bonded Ethernet ports.

Code:

[admin@kvm1a ~]# pveversion -v

proxmox-ve: 6.3-1 (running kernel: 5.4.78-2-pve)

pve-manager: 6.3-3 (running version: 6.3-3/eee5f901)

pve-kernel-5.4: 6.3-3

pve-kernel-helper: 6.3-3

pve-kernel-5.4.78-2-pve: 5.4.78-2

pve-kernel-5.4.73-1-pve: 5.4.73-1

ceph: 15.2.8-pve2

ceph-fuse: 15.2.8-pve2

corosync: 3.0.4-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.16-pve1

libproxmox-acme-perl: 1.0.5

libproxmox-backup-qemu0: 1.0.2-1

libpve-access-control: 6.1-3

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.3-2

libpve-guest-common-perl: 3.1-3

libpve-http-server-perl: 3.0-6

libpve-storage-perl: 6.3-3

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.3-1

lxcfs: 4.0.3-pve3

novnc-pve: 1.1.0-1

openvswitch-switch: 2.12.0-1

proxmox-backup-client: 1.0.5-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.4-3

pve-cluster: 6.2-1

pve-container: 3.3-1

pve-docs: 6.3-1

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-3

pve-firmware: 3.1-3

pve-ha-manager: 3.1-1

pve-i18n: 2.2-2

pve-qemu-kvm: 5.1.0-7

pve-xtermjs: 4.7.0-3

qemu-server: 6.3-2

smartmontools: 7.1-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 0.8.5-pve1

gbr

Active Member

-

#81

This also stops backups from running when the backup reaches the failed VM. Any chance there's some forward motion on this? Anything I can do on my end to help?

gbr

Active Member

-

#82

So, the same VM is down this morning. No backups, no stress, just stopped responding at 8:40 AM. It no longer listens to any commands from the web interface, and shows as running, but it clearly isn’t.

Code:

root@pve-4:~# qm status 150

status: running

root@pve-4:~# qm terminal 150

unable to find a serial interface

root@pve-4:~# qm reset 150

VM 150 qmp command 'system_reset' failed - unable to connect to VM 150 qmp socket - timeout after 31 retries

root@pve-4:~# qm stop 150

VM quit/powerdown failed - terminating now with SIGTERM

VM still running - terminating now with SIGKILL

root@pve-4:~# ps ax | grep 150

10097 pts/0 S+ 0:00 grep 150

root@pve-4:~# qm start 150

timeout waiting on systemd

root@pve-4:~#

root@pve-4:~# qm migrate 150 pve-3

2020-05-29 09:41:12 starting migration of VM 150 to node 'pve-3' (192.168.100.112)

^Ccommand '/usr/bin/qemu-img info '--output=json' /mnt/pve/Slow-NAS/images/150/vm-150-disk-0.qcow2' failed: interrupted by signal

could not parse qemu-img info command output for '/mnt/pve/Slow-NAS/images/150/vm-150-disk-0.qcow2'

2020-05-29 09:46:55 migration finished successfully (duration 00:05:43)

root@pve-4:~#

Migrating the VM to a server where it previously failed results in the same error. If I migrate it to a server that has never run this VM (to failure), I can start it.

Last edited: May 29, 2020

-

#83

Hi all,

I have (nearly?) the same issue here:

Some VMs got stuck, and I have no way to restart them, or kill and start them again, on the same PVE node (let's call it '1st').

After killing the VM, I can (live) migrate it to another PVE host (2nd) and start it there.

Only a reboot of the 1st node recovers the issue.

After the reboot of the 1st node I also can (live) migrate the VM back to the 1st node.

Bash:

root@hv01:~# pvecm status

Cluster information

-------------------

Name: pvecluchaosinc

Config Version: 3

Transport: knet

Secure auth: on

Quorum information

------------------

Date: Thu Jun 4 15:24:01 2020

Quorum provider: corosync_votequorum

Nodes: 3

Node ID: 0x00000001

Ring ID: 1.289

Quorate: Yes

Votequorum information

----------------------

Expected votes: 3

Highest expected: 3

Total votes: 3

Quorum: 2

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

0x00000001 1 10.99.0.99

0x00000002 1 10.99.0.1 (local)

0x00000003 1 10.99.0.9

Bash:

root@hv01:~# pveversion -v

proxmox-ve: 6.2-1 (running kernel: 5.4.41-1-pve)

pve-manager: 6.2-4 (running version: 6.2-4/9824574a)

pve-kernel-5.4: 6.2-2

pve-kernel-helper: 6.2-2

pve-kernel-5.4.41-1-pve: 5.4.41-1

ceph-fuse: 12.2.13-pve1

corosync: 3.0.3-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.15-pve1

libproxmox-acme-perl: 1.0.4

libpve-access-control: 6.1-1

libpve-apiclient-perl: 3.0-3

libpve-common-perl: 6.1-2

libpve-guest-common-perl: 3.0-10

libpve-http-server-perl: 3.0-5

libpve-storage-perl: 6.1-8

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.2-1

lxcfs: 4.0.3-pve2

novnc-pve: 1.1.0-1

openvswitch-switch: 2.12.0-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.2-1

pve-cluster: 6.1-8

pve-container: 3.1-6

pve-docs: 6.2-4

pve-edk2-firmware: 2.20200229-1

pve-firewall: 4.1-2

pve-firmware: 3.1-1

pve-ha-manager: 3.0-9

pve-i18n: 2.1-2

pve-qemu-kvm: 5.0.0-2

pve-xtermjs: 4.3.0-1

qemu-server: 6.2-2

smartmontools: 7.1-pve2

spiceterm: 3.1-1

vncterm: 1.6-1

zfsutils-linux: 0.8.4-pve1

All qcow2 disks are located on NFS storage.

-

#84

Good afternoon.

I usually encounter similar problems on old hardware (for example, Intel Xeon X3400, LGA1156), most recently with kernel 5.4.

I have never encountered such problems on more modern equipment.

Last edited: Jun 5, 2020

gbr

Active Member

-

#85

Hey Proxmox folk… What can I do to help you solve this issue?

Gerald

-

#86

Replying to #85:

We cannot reproduce this, and cannot understand how it could still happen with the current Proxmox VE 6.2.

We check the VM systemd scopes really closely, and the timeouts are set so that running into them normally indicates that something is really, really slow (close to hanging).

While examining this problem closely during the 5.x release, we came to the conclusion that there can be a race/timing issue due to how systemd behaves if we only trust the «systemctl» command. This led to developing a solution that talks to systemd directly over DBus to poll the current status in a safe way:

https://git.proxmox.com/?p=pve-comm…d7e877a9fd1910daf4e7cd937aa4bca8;hb=HEAD#l142

With this we could not reproduce this at all anymore, and we’re talking hundreds of machines, production and testing, doing various amounts of tasks which go through this code path.
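For readers who want to look at the same state from the command line, here is a rough equivalent using busctl. It calls the systemd Manager's GetUnit method, which returns the unit's object path or fails if the unit is not loaded, and then reads that unit's ActiveState property. This is only an illustration assuming a '<vmid>.scope' unit name; it is not the PVE code behind the link, and the helper names are hypothetical.

```shell
#!/bin/sh
# Strip busctl's type prefix and quotes, e.g. 'o "/x/y"' -> '/x/y'.
busctl_value() {
    sed -e 's/^[a-z]\{1,\} "//' -e 's/"$//'
}

# Print the ActiveState of <vmid>.scope as systemd reports it over D-Bus,
# or "absent" if systemd has no such unit loaded (GetUnit then fails).
scope_active_state() {
    unit="$1.scope"
    path=$(busctl call org.freedesktop.systemd1 /org/freedesktop/systemd1 \
        org.freedesktop.systemd1.Manager GetUnit s "$unit" 2>/dev/null \
        | busctl_value)
    if [ -z "$path" ]; then
        echo absent
        return 0
    fi
    busctl get-property org.freedesktop.systemd1 "$path" \
        org.freedesktop.systemd1.Unit ActiveState | busctl_value
}

# Example (as root on the PVE host):
#   scope_active_state 150
```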

For now, I can only recommend ensuring your setups are updated to the latest 6.x release, that nothing weird is in the logs, and that nothing really hangs, which would make this message just a side effect. NFS especially can be prone to getting into the infamous «D» (uninterruptible IO) state if the network or the share is down.
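One quick way to check for that "D" state on the host is to filter ps output by its STAT column. A sketch assuming procps ps (as shipped on Debian-based PVE); the helper name is made up for illustration.

```shell
#!/bin/sh
# Print processes in uninterruptible sleep (STAT starting with "D") from
# ps output on stdin whose second column is STAT, skipping the header line.
list_d_state() {
    awk 'NR > 1 && $2 ~ /^D/'
}

# Example on a live host; WCHAN shows the kernel function each task waits in:
#   ps -eo pid,stat,wchan:32,comm | list_d_state
```

A task that shows up once is usually just normal I/O; the same PIDs showing up run after run (for example a kvm or qemu-img process) suggest I/O that is not completing, such as a dead NFS share.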

Hints on things done out of the ordinary on your setup(s) could help to reproduce this, and we would be happy to hear them.

-

#87

I have the same issue with one of my VMs.

My setup is:

HP DL380G10:

2 x Intel(R) Xeon(R) Gold 6130

768 GB of RAM

Proxmox 6.2 with latest updates from apt

Single Node

Disks are LVM-Thin

Do you want access to my host to check the error?

Code:

TASK ERROR: timeout waiting on systemd

-

#88

For those who have these problems: do you use hardware RAID?

-

#89

Replying to #87:

What is your VM OS, and what happened on it before the issue?

-

#90

Replying to #88:

At first I only used LVM-Thin, and now RAIDZ1.

-

#91

Replying to #86:

It happens when a guest OS fails/hangs and stops responding to the host, caused by bugs like the zerocopy issue, vswitch driver kernel panics, etc.

It seems that the host can't correctly stop all the processes of the frozen VM, and it keeps the guest's previous running status until the node is rebooted.

So is it only the systemd scopes acting on this?

To reproduce, can you totally freeze a VM?

Last edited: Jun 6, 2020

-

#92

Replying to #91:

Yeah, I mean, in that case this error is totally expected and only a side effect of the real error: your VM freezing.

Note that if the VM process freezes in such a way that it cannot be stopped, this timeout error will always be shown because, well, the VM scope can never exit while a process in it isn't responding.

A freezing guest normally means a dead NFS or the like, so check there first.

To all, if you’re on up to date PVE 5.4 or 6.2 and see this error you’re 99.999% not affected by the issues of the thread starters.

They had the issue that the VM could be stopped fine, but the scope was still around even if no process was.

If your issue is that the VM is also still around, please just open a new thread, as then this error is expected and your real problem is the VM refusing to stop.

-

#93

FWIW, we just started to see this problem in our two clusters in the past few days. We upgraded from 5.4 to 6.2 a couple of weeks ago: pve-manager/6.2-4/9824574a (running kernel: 5.4.41-1-pve). No openvswitch; one cluster with PVE (hyperconverged) Ceph, one cluster with its own dedicated non-PVE-managed Ceph cluster.

I have a few VMs that I can’t start right now and I see defunct kvm processes on the host. I see the same systemd timeout that others have seen. These VMs aren’t critical so I haven’t tried rebooting the host yet, but the defunct kvm process is unkillable so I think I might be looking at a reboot. It’s happening on multiple hosts across clusters.
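kill -9 cannot remove a process that is already in state Z: it has exited, and it disappears only once it is reaped. If some of its threads are still blocked in the kernel, that may not happen, so it can help to look at what the remaining threads are doing. A sketch assuming Linux /proc: the hypothetical helper below prints each thread under /proc/<pid>/task with the state letter from its stat file, so threads stuck in "D" (uninterruptible) state stand out.

```shell
#!/bin/sh
# Print "TID STATE" for each thread of a process, read from /proc/<pid>/task.
# In /proc/<pid>/task/<tid>/stat the state letter is the first field after
# the ")" that closes the command name (which may itself contain spaces).
thread_states() {
    for stat in /proc/"$1"/task/*/stat; do
        [ -r "$stat" ] || continue       # no such process, or it just vanished
        tid=${stat%/stat}
        tid=${tid##*/}
        state=$(sed 's/.*) //' "$stat" | cut -d ' ' -f 1)
        echo "$tid $state"
    done
}

# Example for a defunct kvm PID found with ps:
#   thread_states 9941
```

As root, /proc/<pid>/task/<tid>/stack can then show where a thread reported as D is waiting.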

-

#94

it happened again to one guest here.

what i saw in the monitoring (nagios based checkmk) was, that the guest-os was at a high cpu utilization (97% io-wait).

all other guests on this and the other hosts are running fine (atm.)

trying to stop the guest from the web gui does not work correctly; the guest only stops if i cancel the stop command. (!?)

i know, sounds a little bit strange, but:

if i migrate a guest stopped this way ('stop canceled'), the migration process finishes immediately after i cancel the migration. (!?)

that is really strange, isn't it?

the stopped guest can be migrated offline back and forth normally from and to other hosts, but once the guest is migrated back to 'hv01', where the issue began, migration is only possible by canceling the migration process.

the vm can only be started on hosts other than 'hv01'.

once the guest has been started on another host, it cannot be migrated online back to hv01.

offline migration works, though.

a reboot of hv01 reverts to normal state.

the issue did occur in the past to different guests on different hosts in a random manner.

which logfile/information may i post to analyze this behavior?

-

#95

Same problem here. We just ran an update on all nodes last night, and the first servers to go down seem to be the Windows KVM ones. First they lost their NFS connections, not sure why. Then the VMs which use LVM started freezing. I stopped the VMs but then could not start them, as I got:

TASK ERROR: timeout waiting on systemd

I checked qemu.slice and it was not running, so it's weird that I could not start them. I even tried the fix posted earlier in the thread, but that didn't help.

Had to reboot two nodes now to get them stable and stop our clients killing us.

-

#96

Interesting, it was actually Windows guests that I first noticed it on, too.

gbr

Active Member

-

#97

I get roughly one VM a day, across multiple Linux OSs. No Windows yet. I’m going to start monitoring IOWait to see if that is an issue.
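IOWait monitoring like this needs nothing beyond /proc/stat. A minimal sketch, usable on the host or inside a Linux guest: sample the aggregate "cpu" line twice and compute the share of elapsed jiffies spent in iowait. It assumes the standard field order (user nice system idle iowait irq softirq steal guest guest_nice); steal is included in the total, while guest and guest_nice are left out because the kernel already counts them in user and nice. The function names are illustrative.

```shell
#!/bin/sh
# Percentage of CPU time spent in iowait between two "cpu" lines of /proc/stat.
# Fields after "cpu": user nice system idle iowait irq softirq steal guest guest_nice
iowait_pct() {
    printf '%s\n%s\n' "$1" "$2" | awk '
        { total[NR] = $2 + $3 + $4 + $5 + $6 + $7 + $8 + $9; io[NR] = $6 }
        END {
            dt = total[2] - total[1]
            if (dt <= 0) { print "0.0"; exit }
            printf "%.1f\n", 100 * (io[2] - io[1]) / dt
        }'
}

# Sample this machine for N seconds (default 5) and print the iowait share.
sample_iowait() {
    a=$(grep '^cpu ' /proc/stat)
    sleep "${1:-5}"
    b=$(grep '^cpu ' /proc/stat)
    iowait_pct "$a" "$b"
}
```

Logging "sample_iowait 60" from cron every few minutes gives a history to compare against the time a VM stops responding.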

I’ve been running NFS for storage for years, and have not seen this issue. Plus, none of my other VMs are down, so I can’t see it being an NFS issue.

-

#98

Hi,

I, too, am seeing this error.

My Thinkstation P700 is on PVE 6.2-6.

Last night I shut down the host, and the host shut down my Windows 10 VM. Earlier tonight I started the host again and it booted up without any issues. My Windows VM was set to autostart, but it did not come up. I accessed the PVE web GUI and found the OP's error. In addition, the overall summary page wasn't functioning, and the hardware choices (like USB passthrough) for the affected VM only brought up empty lists.

Rebooted the host but the issue is persisting so far.

gbr

Active Member

-

#99

Up to date, and still having issues. PVE has gone from a remarkably stable product to ultra flaky. Thanks, systemd.

I’m either going to have to roll back to PVE 5.x or leave Proxmox entirely. This instability is seriously hurting my production servers.

tom

Proxmox Staff Member

-

#100

Replying to #99:

My experience is exactly the opposite. As this thread is huge, please open a new thread describing your issue.


VM doesn’t start Proxmox 6 — timeout waiting on systemd

homozavrus

Member

Hi all,

I have updated Proxmox from 5.4 to 6.0 (without any issues). Community edition.

All containers and VMs are on a ZFS pool (10 disks in ZFS RAID10).

I tried to reset one stuck VM. I stopped it, and when I try to start it,

I get a strange error:

# qm start 6008

timeout waiting on systemd

The web interface looks strange too: all VMs and CTs are grey.

How can i debug that problem?

prx-06:

# uname -a

Linux hst-cl-prx-06 5.0.15-1-pve #1 SMP PVE 5.0.15-1 (Wed, 03 Jul 2019 10:51:57 +0200) x86_64 GNU/Linux

prx-06:

# pveversion

pve-manager/6.0-4/2a719255 (running kernel: 5.0.15-1-pve)

root@hst-cl-prx-06:

# zpool status

pool: rpool

state: ONLINE

scan: scrub repaired 0B in 0 days 00:05:54 with 0 errors on Sun Jul 14 06:29:56 2019

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

sdz3 ONLINE 0 0 0

sdy3 ONLINE 0 0 0

errors: No known data errors

pool: storagepool

state: ONLINE

scan: scrub repaired 0B in 0 days 05:05:06 with 0 errors on Sun Jul 14 11:29:09 2019

config:

NAME STATE READ WRITE CKSUM

storagepool ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

wwn-0x5000cca2326d67ac ONLINE 0 0 0

wwn-0x5000cca23271b308 ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

wwn-0x5000cca23271ae98 ONLINE 0 0 0

wwn-0x5000cca2322788a0 ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

wwn-0x5000cca23227ae20 ONLINE 0 0 0

wwn-0x5000cca23227b510 ONLINE 0 0 0

mirror-3 ONLINE 0 0 0

wwn-0x5000cca23227abf0 ONLINE 0 0 0

wwn-0x5000cca232276af0 ONLINE 0 0 0

mirror-4 ONLINE 0 0 0

wwn-0x5000cca23227b354 ONLINE 0 0 0

wwn-0x5000cca2327168a8 ONLINE 0 0 0

errors: No known data errors


fabian

Proxmox Staff Member

Best regards,

Fabian

Do you already have a Commercial Support Subscription? — If not, Buy now and read the documentation

homozavrus

Member

I have rebooted the host; I had no other options.

Everything works for now, but I think the issue will come again.

# pveversion -v

proxmox-ve: 6.0-2 (running kernel: 5.0.15-1-pve)

pve-manager: 6.0-4 (running version: 6.0-4/2a719255)

pve-kernel-5.0: 6.0-5

pve-kernel-helper: 6.0-5

pve-kernel-4.15: 5.4-6

pve-kernel-5.0.15-1-pve: 5.0.15-1

pve-kernel-4.15.18-18-pve: 4.15.18-44

pve-kernel-4.15.18-17-pve: 4.15.18-43

ceph: 14.2.1-pve2

ceph-fuse: 14.2.1-pve2

corosync: 3.0.2-pve2

criu: 3.11-3

glusterfs-client: 5.5-3

ksmtuned: 4.20150325+b1

libjs-extjs: 6.0.1-10

libknet1: 1.10-pve1

libpve-access-control: 6.0-2

libpve-apiclient-perl: 3.0-2

libpve-common-perl: 6.0-2

libpve-guest-common-perl: 3.0-1

libpve-http-server-perl: 3.0-2

libpve-storage-perl: 6.0-5

libqb0: 1.0.5-1

lvm2: 2.03.02-pve3

lxc-pve: 3.1.0-61

lxcfs: 3.0.3-pve60

novnc-pve: 1.0.0-60

openvswitch-switch: 2.10.0+2018.08.28+git.8ca7c82b7d+ds1-12

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.0-5

pve-cluster: 6.0-4

pve-container: 3.0-4

pve-docs: 6.0-4

pve-edk2-firmware: 2.20190614-1

pve-firewall: 4.0-5

pve-firmware: 3.0-2

pve-ha-manager: 3.0-2

pve-i18n: 2.0-2

pve-qemu-kvm: 4.0.0-3

pve-xtermjs: 3.13.2-1

pve-zsync: 2.0-1

qemu-server: 6.0-5

smartmontools: 7.0-pve2

spiceterm: 3.1-1

vncterm: 1.6-1

zfsutils-linux: 0.8.1-pve1

# systemctl status pvestatd

● pvestatd.service — PVE Status Daemon

Loaded: loaded (/lib/systemd/system/pvestatd.service; enabled; vendor preset: enabled)

Active: active (running) since Mon 2019-07-22 19:34:53 +06; 25min ago

Process: 8683 ExecStart=/usr/bin/pvestatd start (code=exited, status=0/SUCCESS)

Main PID: 8903 (pvestatd)

Tasks: 1 (limit: 4915)

Memory: 94.7M

CGroup: /system.slice/pvestatd.service

└─8903 pvestatd

Jul 22 19:35:33 hst-cl-prx-06 pvestatd[8903]: modified cpu set for lxc/609: 0

Jul 22 19:35:33 hst-cl-prx-06 pvestatd[8903]: modified cpu set for lxc/610: 1

Jul 22 19:35:33 hst-cl-prx-06 pvestatd[8903]: modified cpu set for lxc/611: 4

Jul 22 19:35:44 hst-cl-prx-06 pvestatd[8903]: modified cpu set for lxc/603: 5

Jul 22 19:35:44 hst-cl-prx-06 pvestatd[8903]: modified cpu set for lxc/607: 6

Jul 22 19:35:44 hst-cl-prx-06 pvestatd[8903]: modified cpu set for lxc/612: 0

Jul 22 19:35:44 hst-cl-prx-06 pvestatd[8903]: modified cpu set for lxc/613: 7

Jul 22 19:35:53 hst-cl-prx-06 pvestatd[8903]: modified cpu set for lxc/609: 8

Jul 22 19:46:53 hst-cl-prx-06 pvestatd[8903]: unable to get PID for CT 603 (not running?)

Jul 22 19:46:54 hst-cl-prx-06 pvestatd[8903]: unable to get PID for CT 603 (not running?)

t.lamprecht

Proxmox Staff Member

It lists all scopes. We now wait for a possible old $VMID.scope to be gone before we start the VM, as we had problems with scopes still being around when we restarted a VM (e.g., for stop-mode backup).
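A similar wait can be approximated from the shell, for example to check by hand whether an old scope is still around before retrying 'qm start'. This is a sketch, not the qemu-server code: it assumes the scope is named '<vmid>.scope' (as in the 'systemctl status qemu.slice' output in this thread) and uses 'systemctl show' instead of talking to systemd over DBus directly; the helper names, the timeout and the interval are arbitrary.

```shell
#!/bin/sh
# Run a command repeatedly until it succeeds or the timeout expires.
# Usage: poll_until TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Returns 0 as soon as COMMAND succeeds, 1 if the timeout is reached first.
poll_until() {
    timeout_s=$1
    interval=$2
    shift 2
    deadline=$(( $(date +%s) + timeout_s ))
    while ! "$@"; do
        [ "$(date +%s)" -ge "$deadline" ] && return 1
        sleep "$interval"
    done
    return 0
}

# True once systemd reports <vmid>.scope as inactive; systemctl show also
# reports "inactive" for a unit that is not loaded at all.
scope_gone() {
    [ "$(systemctl show -p ActiveState --value "$1.scope")" = inactive ]
}

# Example: wait up to 5 seconds for VM 150's old scope to go away.
#   poll_until 5 0.5 scope_gone 150 || echo "150.scope is still around"
```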

If you still see the $vmid.scope around, you could try a manual:

Best regards,

Thomas


Paddy972

New Member

Hello,

I have the same problem after upgrading to Proxmox 6.

I have 2 VMs that freeze after 2 days of activity, with "systemd[1]: failed to start journalservice." repeated line by line on the console.

After a 'qm stop VMID', I get this:

root@srvpve1:

# systemctl status qemu.slice

● qemu.slice

Loaded: loaded

Active: active since Wed 2019-07-24 09:35:36 AST; 4 days ago

Tasks: 13

Memory: 54.8G

CGroup: /qemu.slice

└─200.scope

└─4744 /usr/bin/kvm -id 200 -name srvalcasar -chardev socket,id=qmp,path=/var/run/qemu-server/200.qmp,server,nowait -mon chardev=qmp,mode=control -chard

Jul 24 09:35:47 srvpve1 ovs-vsctl[5223]: ovs|00002|db_ctl_base|ERR|no port named fwln208i0

Jul 24 09:35:47 srvpve1 ovs-vsctl[5237]: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl add-port vmbr254 fwln208o0 -- set Interface fwln208o0 type=internal

Jul 26 09:59:49 srvpve1 ovs-vsctl[3036011]: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl del-port tap402i0

Jul 26 09:59:49 srvpve1 ovs-vsctl[3036011]: ovs|00002|db_ctl_base|ERR|no port named tap402i0

Jul 26 09:59:49 srvpve1 ovs-vsctl[3036013]: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl del-port fwln402i0

Jul 26 09:59:49 srvpve1 ovs-vsctl[3036013]: ovs|00002|db_ctl_base|ERR|no port named fwln402i0

Jul 26 09:59:49 srvpve1 ovs-vsctl[3036014]: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl add-port vmbr254 tap402i0

Jul 26 11:32:23 srvpve1 ovs-vsctl[3238665]: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl del-port fwln402i0

Jul 26 11:32:23 srvpve1 ovs-vsctl[3238665]: ovs|00002|db_ctl_base|ERR|no port named fwln402i0

Jul 26 11:32:23 srvpve1 ovs-vsctl[3238666]: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl del-port tap402i0

root@srvpve1:

# qm start 208

timeout waiting on systemd

root@srvpve1:

CT is ok

VM on mageia 7 ok

VMs on Ubuntu Bionic freeze and can't restart with the qm command

all restart if i reboot the server hardware

thanks for your help

copcopcopcop

Member

Same problem here. Restarting qemu.slice and stopping 4000.scope did not help.

When I try to start the VM after it has crashed.


copcopcopcop

Member

Exactly the problem i’ve been having..

EDIT: 20 hours later, qm 4000 is still hung and won’t start.


copcopcopcop

Member

Just had this happen again. Freshly installed VM running for about 2 days.

HDD was not set to write-back.

EDIT: The volume is still stuck and the VM won’t start, but I can clone the VM and start it up again fine. pretty annoying. Also worth mentioning this causes shutdown to hang since the volume won’t unmount.

copcopcopcop

Member

Still happening fairly frequently over here. It seems to happen most when trying to shut down a VM: the VM hangs while shutting down and has to be force-stopped. At that point, the virtual disk refuses to unmount until the host is shut down, which takes at least 5 minutes.

I may just revert back to PVE 5.

Addy90

Member

I have the same problem. One VM keeps freezing the entire system. It seems I can log in via noVNC, but when I shutdown, it stops before killing the process. Stopping the process on Proxmox does not work either.

This is only on my home server: My CPU is an Intel i7-7700T on a GIGABYTE GA-Z270N-WIFI Mainboard with 16 GB DDR4-2400 RAM and Samsung Pro 256 GB SSD. Did not happen on PVE 5.4 before the upgrade. I already had no-cache active, not writeback. Did not help.

I could log the following from the PVE 6 host when trying to shut down the hung VM:

Aug 17 11:45:26 server kernel: INFO: task kvm:1732 blocked for more than 120 seconds.

Aug 17 11:45:26 server kernel: Tainted: P O 5.0.18-1-pve #1

Aug 17 11:45:26 server kernel: «echo 0 > /proc/sys/kernel/hung_task_timeout_secs» disables this message.

Aug 17 11:45:26 server kernel: kvm D 0 1732 1 0x00000000

Aug 17 11:45:26 server kernel: Call Trace:

Aug 17 11:45:26 server kernel: __schedule+0x2d4/0x870

Aug 17 11:45:26 server kernel: ? wait_for_completion+0xc2/0x140

Aug 17 11:45:26 server kernel: ? wake_up_q+0x80/0x80

Aug 17 11:45:26 server kernel: schedule+0x2c/0x70

Aug 17 11:45:26 server kernel: vhost_net_ubuf_put_and_wait+0x60/0x90 [vhost_net]

Aug 17 11:45:26 server kernel: ? wait_woken+0x80/0x80

Aug 17 11:45:26 server kernel: vhost_net_ioctl+0x5fe/0xa50 [vhost_net]

Aug 17 11:45:26 server kernel: ? send_signal+0x3e/0x80

Aug 17 11:45:26 server kernel: do_vfs_ioctl+0xa9/0x640

Aug 17 11:45:26 server kernel: ksys_ioctl+0x67/0x90

Aug 17 11:45:26 server kernel: __x64_sys_ioctl+0x1a/0x20

Aug 17 11:45:26 server kernel: do_syscall_64+0x5a/0x110

Aug 17 11:45:26 server kernel: entry_SYSCALL_64_after_hwframe+0x44/0xa9

Aug 17 11:45:26 server kernel: RIP: 0033:0x7f03ce3bc427

Aug 17 11:45:26 server kernel: Code: Bad RIP value.

Aug 17 11:45:26 server kernel: RSP: 002b:00007ffd2eb8bd98 EFLAGS: 00000246 ORIG_RAX: 0000000000000010

Aug 17 11:45:26 server kernel: RAX: ffffffffffffffda RBX: 00007f03c16bb000 RCX: 00007f03ce3bc427

Aug 17 11:45:26 server kernel: RDX: 00007ffd2eb8bda0 RSI: 000000004008af30 RDI: 0000000000000017

Aug 17 11:45:26 server kernel: RBP: 00007ffd2eb8bda0 R08: 00007f03c1657760 R09: 00007f03c10e8420

Aug 17 11:45:26 server kernel: R10: 0000000000000000 R11: 0000000000000246 R12: 00007f02bbf9a6b0

Aug 17 11:45:26 server kernel: R13: 0000000000000001 R14: 00007f02bbf9a638 R15: 00007f03c16880c0


chris.u

New Member

I took the solution from the Red Hat bug tracker. I am not allowed to post the link, but it is Bug 1494974.

copcopcopcop

Member


Thanks. Just disabled zcopytx. Will report back.

EDIT:

For anyone else trying this, don’t forget to run the following command after adding the config file to /etc/modprobe.d

Mrt12

Member

spirit

Famous Member

I have checked on my Proxmox 5 node:

vhost_net experimental_zcopytx was already set to 1.

Not sure if it's a regression in the kernel or in OVS,

but a recent patch is going to set it back to 0 by default:

https://lkml.org/lkml/2019/6/17/202

Edit:

This patch has been applied in kernel 5.1.


Antoine Ignimotion

New Member

I have updated Proxmox from 5.4 to 6.0 (without any issues) and Debian 9 (updated beforehand with a normal reboot) to Debian 10

(same on my 2 server nodes, at the same time, with the same result).

I followed the procedure at https://pve.proxmox.com/wiki/Upgrade_from_5.x_to_6.0

When I reboot the server, the default kernel 5.0 stays frozen (picture):

But if I manually select the GRUB option for kernel 4.15.18-20-pve (the last one before the upgrade), the boot completes and I can use the web interface and shell on my server.

But if I reboot this server again without changing the GRUB option (auto boot), the server freezes again (kernel 5.0).

What can I do?

(Sorry for my poor English)


Active Member


So, the same VM is down this morning. No backups, no stress, just stopped responding at 8:40 AM. It no longer listens to any commands from the web interface, and shows as running, but it clearly isn’t.

kohly

Active Member

I have (nearly?) the same issue here:

Some VMs got stuck, and I have no way to restart them, or to kill and start them again, on the same PVE node (let's call it '1st').

After killing the VM, I can (live) migrate it to another PVE host (2nd) and start it there.

Only a reboot of the 1st node resolves the issue.

After the reboot of the 1st node I also can (live) migrate the VM back to the 1st node.

Catwoolfii

Active Member


Hey Proxmox folk. What can I do to help you solve this issue?

t.lamprecht

Proxmox Staff Member

We cannot reproduce this, and we cannot see how it could still happen with the current Proxmox VE 6.2.

We check the VM systemd scopes very closely, and the timeouts are set so that running into them would normally indicate that something is really, really slow (close to hanging).

While investigating this problem closely during the 5.x releases, we concluded that there can be a race/timing issue due to how systemd behaves if we only trust the "systemctl" command. This led to a solution which talks to systemd directly over DBus to poll the current status in a safe way:

https://git.proxmox.com/?p=pve-comm. d7e877a9fd1910daf4e7cd937aa4bca8;hb=HEAD#l142

With this we could not reproduce this at all anymore, and we’re talking hundreds of machines, production and testing, doing various amounts of tasks which go through this code path.
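Thomas describes PVE polling the state of the VM's systemd scope over DBus instead of trusting systemctl. The linked code is not reproduced here; as a rough illustration only, the same state can be inspected by hand with busctl, which ships with systemd. This sketch assumes the scope is named <vmid>.scope, as in the "Unit 200.scope already exists" error quoted further down the page. unit_object_path is a hypothetical helper that reproduces systemd's object-path escaping: every byte that is not an ASCII letter, plus a leading digit, becomes "_" followed by two hex digits.

```shell
#!/bin/sh
# Hypothetical helper: print the D-Bus object path systemd uses for a unit.
# systemd escapes every byte that is not an ASCII letter (and a digit that is
# the first character) as "_" plus two lowercase hex digits; the empty name
# becomes "_". Assumes an ASCII unit name.
unit_object_path() {
    name=$1
    out=""
    pos=0
    while [ -n "$name" ]; do
        rest=${name#?}          # drop the first character
        c=${name%"$rest"}       # keep only the first character
        case $c in
            [A-Za-z]) keep=1 ;;
            [0-9]) if [ "$pos" -gt 0 ]; then keep=1; else keep=0; fi ;;
            *) keep=0 ;;
        esac
        if [ "$keep" -eq 1 ]; then
            out="$out$c"
        else
            # printf "'X" yields the character code of X.
            out="${out}_$(printf '%02x' "'$c")"
        fi
        name=$rest
        pos=$((pos + 1))
    done
    [ -n "$out" ] || out="_"
    printf '/org/freedesktop/systemd1/unit/%s\n' "$out"
}
```

On a host, something like `busctl get-property org.freedesktop.systemd1 "$(unit_object_path 100.scope)" org.freedesktop.systemd1.Unit ActiveState` would then print the scope's state (VMID 100 is only an example). Calling the manager's GetUnit method instead (`busctl call org.freedesktop.systemd1 /org/freedesktop/systemd1 org.freedesktop.systemd1.Manager GetUnit s 100.scope`) avoids the escaping step and returns an error if no such unit is loaded.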

For now, I can only recommend making sure your setups are updated to the latest 6.x release, that nothing weird is in the logs, and that nothing really hangs, which would make this message just a side effect. NFS in particular can be prone to getting into the infamous "D" (uninterruptible IO) state if the network or the share is down.
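Both the NFS remark and the kernel log at the top of this page point at tasks stuck in the "D" (uninterruptible sleep) state; the hung task there is listed as "kvm D 0 1732". A quick way to look for such processes on the host is to filter ps output on its STAT column. list_d_state is a hypothetical helper; it reads `ps -eo pid,stat,comm` output on stdin, so it can also be tried on saved output.

```shell
#!/bin/sh
# Hypothetical helper: print the PID and command name of every process whose
# state (first letter of the STAT column) is D, i.e. uninterruptible sleep.
# Expects the output of: ps -eo pid,stat,comm   (header line is skipped)
# Only the first word of the command name is printed.
list_d_state() {
    awk 'NR > 1 && $2 ~ /^D/ { print $1, $3 }'
}
```

Usage on the host: `ps -eo pid,stat,comm | list_d_state`. A kvm process that shows up here repeatedly is waiting inside the kernel and will not react to kill -9 until that wait ends.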

Hints on things done out of the ordinary on your setup(s) could help to reproduce this, and we would be happy to hear them.

Best regards,

Thomas


dominiaz

Active Member

I have the same issue with one of my VMs.

2 x Intel(R) Xeon(R) Gold 6130

768 GB of RAM

Proxmox 6.2 with latest updates from apt

Single Node

Disks are LVM-Thin

Do you want access to my host and check the error?

Catwoolfii

Active Member


New Member


It happens when a guest OS fails or hangs and does not respond to the host, caused by bugs like the zerocopy issue, vswitch driver kernel panics, etc.

It seems that the host can't correctly stop all the frozen VM processes and keeps the previous running guest status until the node is rebooted.

So are only the systemd scopes acting on this?


evg32

Active Member

Just to be clear: Did you make this change on the PVE host or the guest VMs?

Edit: Changed that cstate setting on my PVE host, updated grub, and rebooted. I’ve got three VMs that won’t start up already. No dice.

Active Member

wolfgang

Proxmox Retired Staff

It's not that we don't accept that there is an issue.

But it is hard to fix something that we can’t reproduce.

Best regards,

Wolfgang


Active Member


My apologies, then. There’s been no Proxmox employee interaction on here for quite a while, so I thought you’d dropped it.

Catwoolfii

Active Member


Does the release of 6.3 solve this issue? Anyone here that had the issue and upgraded?


shruxx88

Member

I had the same problem on 6.3-2 just tonight. The web GUI is reachable, but two VMs (out of 6) are not. Only the error "TASK ERROR: timeout waiting on systemd" comes up.

I cannot tell what is causing this, because there is no information there and no log. For now I have added the /etc/modprobe.d/vhost-net.conf workaround from the PVE 5 problem and hope that this will help.

Any news on this?
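The thread never shows the contents of /etc/modprobe.d/vhost-net.conf. Based on the parameter spirit names above (vhost_net experimental_zcopytx), and on the hung kvm task at the top of the page being blocked inside vhost_net, a minimal sketch of that workaround would look like this. Treat it as an assumption, not an official Proxmox recommendation. Options in /etc/modprobe.d only apply the next time the module is loaded, for example after a reboot.

```shell
# Run as root on the PVE host (not inside the guest).
# Disable zero-copy transmit in vhost_net for the next time the module loads.
echo 'options vhost_net experimental_zcopytx=0' > /etc/modprobe.d/vhost-net.conf

# Once vhost_net has been loaded again (e.g. after a reboot), read back the
# active value; 0 means zero-copy TX is disabled. This file only exists while
# the vhost_net module is loaded.
cat /sys/module/vhost_net/parameters/experimental_zcopytx
```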

Mohave County Library

New Member

brdctr

Member

I can reproduce this pretty easily. Sorry for the lack of detail; I'm a home lab user and my troubleshooting is a little limited.

Fresh install of Proxmox, single node.

Server Specs:

SuperMicro Server

Dual E5-2630 (6 core)

32GB RAM

Seagate Constellation ST91000640NS 1TB 7200 RPM 64MB Cache SATA drives (guest storage 6 drives in ZFS RAID 10)

Kensington 120GB SATA SSD drives (Proxmox system, ZFS RAID 1)

I set up two Ubuntu 20.04 VMs, each with 8GB RAM and a 200GB drive, and ran the stress command on both.

Eventually I start to see "timeout waiting on systemd" errors on the guest console. Sometimes it takes as little as 10 minutes; other times it can be an hour before it happens.

I couldn't reboot or shut down the guests that had the "timeout waiting on systemd" error; rebooting the Proxmox server was the only way I could get them to power up again.

My system is Intel, so I added "intel_idle.max_cstate=1" to my GRUB config, and it did help: it took longer for a guest to show "waiting on systemd" on the console, but the guest still eventually froze and I had to reboot the system before I could do anything with the guest again.
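brdctr doesn't show the exact change. On a GRUB-booted host, a minimal sketch is to append the option to the existing default kernel command line (on a stock install often just "quiet"), regenerate the GRUB config and reboot:

```shell
# /etc/default/grub on the PVE host: keep whatever options are already there
# and append the new one, for example:
GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_idle.max_cstate=1"

# Regenerate the GRUB configuration, then reboot the host:
update-grub

# After the reboot, confirm the option is on the running kernel's command line:
cat /proc/cmdline
```

On ZFS installs booted via systemd-boot, /etc/default/grub is not used; the option goes on the single line in /etc/kernel/cmdline instead, followed by proxmox-boot-tool refresh.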

I then installed qemu-guest-agent on the Ubuntu guests and enabled the QEMU Guest Agent option in the Proxmox guest config. I also disabled memory ballooning. After these two changes I still get the "waiting on systemd" error in the guest console, but I can cancel the stress command, reboot, etc. The system stays operational with those guests.
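The two changes can be sketched from the command line as follows, assuming an Ubuntu guest and using VMID 100 purely as a placeholder. qm set with --agent and --balloon corresponds to the GUI options mentioned above; a balloon value of 0 disables the balloon device.

```shell
# Inside the Ubuntu guest (as root): install and start the agent.
apt install qemu-guest-agent
systemctl start qemu-guest-agent

# On the PVE host: enable the guest agent option and disable ballooning.
# VMID 100 is only an example.
qm set 100 --agent 1 --balloon 0
```

The agent option adds a virtio-serial device to the VM, so it likely needs a full stop and start from the host (not only a reboot inside the guest) before it takes effect.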

Is anyone from Proxmox interested in getting access to the system to test with? I read somewhere that Proxmox can't reproduce this, so maybe there's something specific about my system that can help.


paulprox

New Member

Same story here. Are you booting your VM off a USB device? That’s my scenario; VM will no longer boot after most recent update. (Running 5.13.19-4-pve where it now fails; came from 5.13.19-3 where it worked.)

Update: Reverting back to 5.13.19-3 for now which fixed the issue. USB device passthrough working again. Something appears to be very wrong with 5.13.19-4.

Jef Heselmans

Member

TorqueWrench

Member

Thanks for the feedback, everyone. For those of you also running across issues with the 5.13.19-4 kernel update and you’ve never reverted a Linux kernel before, I’ve written up a quick guide:

Note that this will not work if you’re using ZFS, as I don’t believe Proxmox ZFS installs use grub.

I hope this helps someone else!

depen

New Member

Adding a little bit of context.

I'm running PVE 7.1-10 with three VMs. The Home Assistant and Windows 11 VMs start just fine, but my Fedora35 VM won't start. HA starts first, Win11 with a 300s startup delay. Normally the Fedora VM starts in between, with a 30s delay. After I upgraded to 5.13.19-4 and rebooted PVE, Fedora won't start. I have rebooted multiple times with the same result. Changing autostart to a manual start does not help.

At the first start on my Fedora35 VM I get the following error:

TASK ERROR: start failed: command '/usr/bin/kvm -id 135 -name F35Prod -no-shutdown -chardev 'socket,id=qmp,path=/var/run/qemu-server/135.qmp,server=on,wait=off' -mon 'chardev=qmp,mode=control' -chardev 'socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5' -mon 'chardev=qmp-event,mode=control' -pidfile /var/run/qemu-server/135.pid -daemonize -smbios 'type=1,uuid=--ABC123--' -drive 'if=pflash,unit=0,format=raw,readonly=on,file=/usr/share/pve-edk2-firmware//OVMF_CODE.fd' -drive 'if=pflash,unit=1,format=raw,id=drive-efidisk0,size=131072,file=/dev/zvol/zfs1/vm-135-disk-0' -global 'ICH9-LPC.acpi-pci-hotplug-with-bridge-support=off' -smp '2,sockets=1,cores=2,maxcpus=2' -nodefaults -boot 'menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg' -vnc 'unix:/var/run/qemu-server/135.vnc,password=on' -cpu kvm64,enforce,+kvm_pv_eoi,+kvm_pv_unhalt,+lahf_lm,+sep -m 6144 -readconfig /usr/share/qemu-server/pve-q35-4.0.cfg -device 'vmgenid,guid=--ABC123--' -device 'usb-tablet,id=tablet,bus=ehci.0,port=1' -device 'usb-host,vendorid=0x0e8d,productid=0x1887,id=usb0' -device 'VGA,id=vga,bus=pcie.0,addr=0x1' -chardev 'socket,path=/var/run/qemu-server/135.qga,server=on,wait=off,id=qga0' -device 'virtio-serial,id=qga0,bus=pci.0,addr=0x8' -device 'virtserialport,chardev=qga0,name=org.qemu.guest_agent.0' -device 'virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3' -iscsi 'initiator-name=iqn.1993-08.org.debian:01:a15be791c279' -device 'virtio-scsi-pci,id=scsihw0,bus=pci.0,addr=0x5' -drive 'file=/dev/zvol/zfs1/vm-135-disk-1,if=none,id=drive-scsi0,format=raw,cache=none,aio=io_uring,detect-zeroes=on' -device 'scsi-hd,bus=scsihw0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,bootindex=100' -netdev 'type=tap,id=net0,ifname=tap135i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on' -device 'virtio-net-pci,mac=--ABC123--,netdev=net0,bus=pci.0,addr=0x12,id=net0,bootindex=101' -machine 'type=q35+pve0'' failed: got timeout

If I try to start the VM a second time I get the following error:

TASK ERROR: timeout waiting on systemd


[SOLVED] VM not starting after upgrading kernel and reboot — timeout waiting on systemd

vale.maio2

New Member

Hi all, quite a noob here and running Proxmox on my own home server so please bear with me.

After upgrading the Proxmox kernel this morning (via a simple apt upgrade) and rebooting, one of my 2 VM is refusing to start. From this post I’ve tried running a systemctl stop 100.slice command (100 is the ID of the offending VM), but it’s still showing up as

# qm start 100
timeout waiting on systemd

For what it's worth, I'm running pve-manager/7.1-10/6ddebafe (running kernel: 5.13.19-4-pve) on a Dell T710 server with a hardware RAID5 configuration.

If you need any more details, I'll be happy to oblige.

EDIT: just in case you need pveversion --verbose:

vale.maio2

New Member

hACKhIDE

New Member

vale.maio2

New Member

hACKhIDE

New Member

kuchar

New Member

vale.maio2

New Member

Open a terminal to your Proxmox server. Launch the command

grep menuentry /boot/grub/grub.cfg

and in there you’ll need two things:

The first one is the id_option from the "Advanced options for Proxmox VE GNU/Linux" line (circled in red, number 1); the second one is the id_option for the last known working kernel (circled in red, number 2, which for me was 5.13.19-3-pve). Make sure not to grab the ID for the recovery mode kernel.

Modify the /etc/default/grub file with

nano /etc/default/grub

(or whatever your favourite text editor is). From there, delete the line that says

GRUB_DEFAULT=0

and replace it with

GRUB_DEFAULT="menu entry ID>kernel ID"

and use the two IDs you grabbed above. Don't forget to separate the IDs with the > sign.

In my case, the line looks like this:

GRUB_DEFAULT="gnulinux-advanced-9912e5fa-300a-4311-a7df-612754946075>gnulinux-5.13.19-3-pve-advanced-9912e5fa-300a-4311-a7df-612754946075"

Save and close the file, update the GRUB with

update-grub

and reboot.
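The manual steps above can be sketched as a small script. grub_default_line is a hypothetical helper (not a Proxmox or GRUB tool): it reads the same menuentry/submenu lines the grep shows, takes the ID of the first submenu (the "Advanced options for Proxmox VE GNU/Linux" one on a stock install) and the ID of the non-recovery entry for the requested kernel, and prints the GRUB_DEFAULT line to paste into /etc/default/grub. It assumes the ID layout shown in the post (gnulinux-advanced-<UUID> and gnulinux-<kernel>-advanced-<UUID>) and, like the manual procedure, only helps on GRUB-booted hosts.

```shell
#!/bin/sh
# Hypothetical helper: build the GRUB_DEFAULT="submenu ID>kernel ID" line
# for a given kernel version (e.g. 5.13.19-3-pve).
# Usage: grub_default_line KERNEL_VERSION [GRUB_CFG]
grub_default_line() {
    kernel=$1
    cfg=${2:-/boot/grub/grub.cfg}

    # ID of the first submenu ("Advanced options for Proxmox VE GNU/Linux").
    submenu_id=$(sed -n "s/^submenu .*menuentry_id_option '\([^']*\)'.*/\1/p" "$cfg" | head -n 1)

    # ID of the normal (non-recovery) entry for the requested kernel.
    # Dots in the version act as regex wildcards, which is harmless here.
    kernel_id=$(sed -n "s/.*menuentry_id_option '\(gnulinux-$kernel-advanced-[^']*\)'.*/\1/p" "$cfg" | head -n 1)

    if [ -z "$submenu_id" ] || [ -z "$kernel_id" ]; then
        echo "no matching menu entries for $kernel in $cfg" >&2
        return 1
    fi
    printf 'GRUB_DEFAULT="%s>%s"\n' "$submenu_id" "$kernel_id"
}
```

For example, `grub_default_line 5.13.19-3-pve` on a host with the grub.cfg shown in the post should print the same GRUB_DEFAULT line as above. update-grub and a reboot are still needed afterwards.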


kuchar

New Member


Ralf MUC

New Member

jpeppard

New Member

tristank

Member

vale.maio2

New Member

Woopsie you’re right, I’ve corrected the image, thanks for spotting that.

ScottK

New Member

paulprox

New Member

peteb

Member

I also have the same issue after upgrading to kernel 5.13.19-4

My Linux VMs boot fine, but a Windows 10 and a Windows 11 VM do not boot. Strangely, a Windows Server 2019 VM boots fine.

I had to revert back to kernel 5.13.19-3 to get everything working again.

It would be really nice if Proxmox could release a simple kernel removal tool to remove recently installed buggy kernels, so that previous working kernels can be booted by default. At present there is no simple way to do this when booting ZFS with systemd-boot.

proxmox-boot-tool also does not actually remove a buggy kernel; maybe Proxmox should update this tool to do this.

When trying to remove the buggy kernel with:

I get a message that it also wants to remove the following:

coffeedragonfly

New Member


Unfortunately, vale.maio2's GRUB_DEFAULT procedure did not work for me. I no longer get the "timeout waiting on systemd" error, but it throws this instead:

TASK ERROR: start failed: command '/usr/bin/kvm -id 123 -name emby -no-shutdown -chardev 'socket,id=qmp,path=/var/run/qemu-server/123.qmp,server=on,wait=off' -mon 'chardev=qmp,mode=control' -chardev 'socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5' -mon 'chardev=qmp-event,mode=control' -pidfile /var/run/qemu-server/123.pid -daemonize -smbios 'type=1,uuid=eda27b91-dee9-40e3-a080-d0724a987a80' -smp '4,sockets=1,cores=4,maxcpus=4' -nodefaults -boot 'menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg' -vnc 'unix:/var/run/qemu-server/123.vnc,password=on' -cpu kvm64,enforce,+kvm_pv_eoi,+kvm_pv_unhalt,+lahf_lm,+sep -m 4096 -device 'pci-bridge,id=pci.1,chassis_nr=1,bus=pci.0,addr=0x1e' -device 'pci-bridge,id=pci.2,chassis_nr=2,bus=pci.0,addr=0x1f' -device 'vmgenid,guid=4678ff30-3a41-4fc0-8481-679a6a6107a7' -device 'piix3-usb-uhci,id=uhci,bus=pci.0,addr=0x1.0x2' -device 'nec-usb-xhci,id=xhci,bus=pci.1,addr=0x1b' -device 'usb-tablet,id=tablet,bus=uhci.0,port=1' -device 'usb-host,bus=xhci.0,hostbus=3,hostport=4,id=usb0' -device 'VGA,id=vga,bus=pci.0,addr=0x2' -device 'virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3' -iscsi 'initiator-name=iqn.1993-08.org.debian:01:ec04b86f0bb' -drive 'if=none,id=drive-ide2,media=cdrom,aio=io_uring' -device 'ide-cd,bus=ide.1,unit=0,drive=drive-ide2,id=ide2,bootindex=101' -device 'virtio-scsi-pci,id=scsihw0,bus=pci.0,addr=0x5' -drive 'file=/dev/zvol/rpool/data/vm-123-disk-0,if=none,id=drive-scsi0,format=raw,cache=none,aio=io_uring,detect-zeroes=on' -device 'scsi-hd,bus=scsihw0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,bootindex=100' -netdev 'type=tap,id=net0,ifname=tap123i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on' -device 'virtio-net-pci,mac=56:2A:4B:46:14:31,netdev=net0,bus=pci.0,addr=0x12,id=net0,bootindex=102' -machine 'type=pc+pve0'' failed: got timeout

I'm using ZFS, like a previous user who still had the issue.

EDIT: For anyone else with this issue who is using ZFS, all you have to do is what the user above mentioned (Proxmox also said I should run "proxmox-boot-tool refresh" to refresh the boot options, so I did that, too), then reboot and choose the correct entry from the boot options. I can now run the VMs normally. Fun times.


Proxmox VE 5.3-8 running on a Ryzen 1700X with 32GB RAM. This VM with GPU passthrough works fine initially, but if I shut it down or try to restart it, it fails pretty often. Sometimes I get

TASK ERROR: start failed: org.freedesktop.systemd1.UnitExists: Unit 200.scope already exists.

And other times it just times out. I’ve tried removing the lockfile and restarting, but that doesn’t seem to help. Any ideas??

Full error below:

TASK ERROR: start failed: command '/usr/bin/kvm -id 200 -name win10 -chardev 'socket,id=qmp,path=/var/run/qemu-server/200.qmp,server,nowait' -mon 'chardev=qmp,mode=control' -chardev 'socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5' -mon 'chardev=qmp-event,mode=control' -pidfile /var/run/qemu-server/200.pid -daemonize -smbios 'type=1,uuid=e1bb5721-db07-4f37-970c-5460ea044937' -drive 'if=pflash,unit=0,format=raw,readonly,file=/usr/share/pve-edk2-firmware//OVMF_CODE.fd' -drive 'if=pflash,unit=1,format=raw,id=drive-efidisk0,file=/dev/zvol/rpool/data/vm-200-disk-0' -smp '8,sockets=1,cores=8,maxcpus=8' -nodefaults -boot 'menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg' -vga none -nographic -no-hpet -cpu 'host,+kvm_pv_unhalt,+kvm_pv_eoi,hv_vendor_id=proxmox,hv_spinlocks=0x1fff,hv_vapic,hv_time,hv_reset,hv_vpindex,hv_runtime,hv_relaxed,hv_synic,hv_stimer,kvm=off' -m 8192 -device 'vmgenid,guid=d89a30b5-af17-4a4e-9f09-a5f39fd5fd7b' -readconfig /usr/share/qemu-server/pve-q35.cfg -device 'vfio-pci,host=0a:00.0,id=hostpci0.0,bus=ich9-pcie-port-1,addr=0x0.0,multifunction=on' -device 'vfio-pci,host=0a:00.1,id=hostpci0.1,bus=ich9-pcie-port-1,addr=0x0.1' -device 'usb-host,hostbus=1,hostport=3,id=usb0' -device 'usb-host,hostbus=5,hostport=3,id=usb1' -device 'usb-host,hostbus=5,hostport=4.5,id=usb2' -device 'usb-host,hostbus=1,hostport=4,id=usb3' -device 'usb-host,hostbus=5,hostport=2,id=usb4' -chardev 'socket,path=/var/run/qemu-server/200.qga,server,nowait,id=qga0' -device 'virtio-serial,id=qga0,bus=pci.0,addr=0x8' -device 'virtserialport,chardev=qga0,name=org.qemu.guest_agent.0' -iscsi 'initiator-name=iqn.1993-08.org.debian:01:6cd14dd0b082' -drive 'file=/var/lib/vz/template/iso/virtio-win-0.1.160.iso,if=none,id=drive-ide2,media=cdrom,aio=threads' -device 'ide-cd,bus=ide.1,unit=0,drive=drive-ide2,id=ide2' -drive 'file=/dev/zvol/ssd256/vmdata/vm-200-disk-0,if=none,id=drive-virtio0,format=raw,cache=none,aio=native,detect-zeroes=on' 
-device 'virtio-blk-pci,drive=drive-virtio0,id=virtio0,bus=pci.0,addr=0xa,bootindex=100' -netdev 'type=tap,id=net0,ifname=tap200i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on' -device 'virtio-net-pci,mac=72:71:81:DA:FF:AE,netdev=net0,bus=pci.0,addr=0x12,id=net0' -rtc 'driftfix=slew,base=localtime' -machine 'type=q35' -global 'kvm-pit.lost_tick_policy=discard' -cpu 'host,hv_time,kvm=off,hv_vendor_id=Custom'' failed: got timeout

Sheykhnur

The shell is the standard /bin/bash, the disks are partitioned in the standard way, and the whole system is on a single hard drive. The unit's contents are identical to yours. As far as I remember, you can get an even more detailed log for an individual unit; I'll check the man pages now for how to do that…

Sheykhnur

OK, I'm getting closer to the answer. Can anyone tell me why systemd cannot open a private D-Bus connection for the unit [email protected]? Here is the command output:

$ journalctl -u [email protected] --since=00:00 --until=9:30
Jan 03 00:04:23 bigboyuser systemd[919]: Failed to open private bus connection: Failed to connect to socket /run/user/1000/dbus/user_bus_socket
Jan 03 00:04:23 bigboyuser systemd[919]: Mounted /sys/kernel/config.
Jan 03 00:04:23 bigboyuser systemd[919]: Mounted /sys/fs/fuse/connections.
Jan 03 00:04:23 bigboyuser systemd[919]: Stopped target Sound Card.
Jan 03 00:04:23 bigboyuser systemd[919]: Starting Default.
Jan 03 00:04:23 bigboyuser systemd[919]: Reached target Default.
Jan 03 00:04:23 bigboyuser systemd[919]: Startup finished in 59ms.
Jan 03 00:05:32 bigboyuser systemd[919]: Stopping Default.
Jan 03 00:05:32 bigboyuser systemd[919]: Stopped target Default.
Jan 03 00:05:32 bigboyuser systemd[919]: Starting Shutdown.
Jan 03 00:05:32 bigboyuser systemd[919]: Reached target Shutdown.
Jan 03 00:05:32 bigboyuser systemd[919]: Starting Exit the Session...
Jan 03 00:05:32 bigboyuser systemd[919]: systemd-exit.service: main process exited, code=exited, status=200/CHDIR
Jan 03 00:05:32 bigboyuser systemd[919]: Failed to start Exit the Session.
Jan 03 00:05:32 bigboyuser systemd[919]: Dependency failed for Exit the Session.
Jan 03 00:05:32 bigboyuser systemd[919]: Unit systemd-exit.service entered failed state.

vadik

Can anyone explain what is happening here?

What is taking so long to start and stop there?

indeviral

Sheykhnur:

systemctl --global enable dbus.socket

https://wiki.archlinux.org/index.php/Systemd/User

Sheykhnur

@vadik, here is the result of the command:

# systemctl --failed
0 loaded units listed. Pass --all to see loaded but inactive units, too.
To show all installed unit files use 'systemctl list-unit-files'.

@ind.indeviral, thanks, your advice helped me get rid of errors like "Failed to open private bus connection: Failed to connect to socket /run/user/1000/dbus/user_bus_socket", but unfortunately it didn't solve the problem :-((

[ 190.351537] systemd[1]: Got D-Bus request: org.freedesktop.DBus.Local.Disconnected() on /org/freedesktop/DBus/Local

and

[ 197.919468] systemd[1]: [email protected] stopping timed out. Killing.

Then everything would have become clear!

Thanks again, everyone. I'm not closing the thread, but it looks like I'll have to dig into this myself; I'll report back with the results.

vasek

I've been away for a long time; creating a shutdown log might help.

1. Create a script, for example debug.sh, in /lib/systemd/system-shutdown/:

#!/bin/sh
# The root file system is read-only at this point of the shutdown; make it writable,
mount -o remount,rw /
# save the kernel ring buffer (which receives the systemd debug output),
dmesg > /var/log/shutdown.log
# and switch root back to read-only.
mount -o remount,ro /

2. Make it executable.

3. When rebooting, add the following to the boot command line:

systemd.log_level=debug systemd.log_target=kmsg log_buf_len=1M

If you don't do this, the shutdown log will not be informative: only about 10 lines.

4. Analyze the file /var/log/shutdown.log. The shutdown starts at a line like "systemd[1]: Stopping Session 1 of user …such-and-such…" (each line starts with the date and time, so if something is slow, look at the timestamps).

vasek

For that you need to use debug-shell, plus add systemd.log_level=debug systemd.log_target=kmsg log_buf_len=1M to the boot parameters.

vasek

I tried to make the logs more informative. Here is what I got.

Sheykhnur

Vasek, thanks. Honestly, I haven't had time these past few days to dig into the problem properly, but I did figure out a few things. To begin with, as you remember, I enabled journal debugging with the kmsg target long ago, in my very first post. Over these few days I've started to understand systemd a little, brushed up my English, and realized one thing: how informative the logs are depends directly on how talkative the services are :)

Jan 07 17:29:36 arch systemd[1]: Got D-Bus request: org.freedesktop.systemd1.Manager.StartUnit() on /org/freedesktop/systemd1

This is of course better than nothing, but it's still too little. Which unit was started via D-Bus? What messages did it receive? When? In general, logging is still a dark area for me. And user-session is still at the testing stage (thanks to ind.indeviral for the pointer).

vasek

I read the beginning carelessly before, so no hard feelings: I wrote a lot of unnecessary stuff. I've now looked at the logs.

You're writing everything correctly: [email protected] cannot shut down properly.

Here I disagree with you. The system sent the [email protected] process a SIGTERM signal to terminate it (this is exactly the signal intended for terminating a process, and normally a process that receives it exits), but the process ignored it and did not exit. That's why it timed out, and after that came the forced stop-sigkill.
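vasek's explanation matches systemd's usual stop sequence: SIGTERM first, then SIGKILL if the process is still running when the stop timeout (TimeoutStopSec=) runs out, which is what the "stopping timed out. Killing." line quoted earlier shows. As an illustration only, stop_with_timeout below is a hypothetical plain-sh helper that imitates that sequence for a single PID; it is not how systemd is implemented. kill -0 only checks that the PID still exists, so an exited but unreaped child (a zombie) would still count as running.

```shell
#!/bin/sh
# Hypothetical helper that mimics the stop sequence for one PID:
# send SIGTERM, wait up to TIMEOUT seconds, then fall back to SIGKILL.
# Usage: stop_with_timeout PID TIMEOUT_SECONDS
stop_with_timeout() {
    pid=$1
    timeout=$2

    # Nothing to do if the process is already gone.
    kill -TERM "$pid" 2>/dev/null || { echo "no such process"; return 0; }

    waited=0
    while [ "$waited" -lt "$timeout" ]; do
        if ! kill -0 "$pid" 2>/dev/null; then
            echo "exited after SIGTERM"
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done

    # Still there after the timeout: SIGTERM was ignored or not handled in time.
    kill -KILL "$pid" 2>/dev/null
    echo "timed out, sent SIGKILL"
}
```

For a process that ignores SIGTERM, for example one started with `sh -c 'trap "" TERM; exec sleep 30'`, the helper waits out the timeout and then reports that it sent SIGKILL, which is the same pattern as the "stopping timed out. Killing." message.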