Contents

- How I Fought with Encodings in the Console

- Python print encoding error

- Windows

- Various UNIX consoles

- print, write and Unicode in pre-3.0 Python

- read and Unicode in pre-3.0 Python

- PyMOTW

- Examples

- codecs – String encoding and decoding

- Unicode Primer

- Encodings

- Working with Files

- Byte Order

- Error Handling

- Encoding Errors

- Decoding Errors

- Standard Input and Output Streams

- Network Communication

- Encoding Translation

- Non-Unicode Encodings

- Incremental Encoding

- Defining Your Own Encoding

How I Fought with Encodings in the Console

Having once again launched my informer script for SamIzdat on Windows and seen "mysterious characters" in the console, I told myself: "Just build yourself proper cross-platform logging already!"

Below the cut I will try to describe how I did that, and how to colorize log output Django-style on Win32. (Everything written below applies to the Python 2.x branch.)

Task one. Printing text to the console correctly

Symptoms

As long as we make no "adjustments" to the initialized I/O system and use only the print statement with unicode strings, everything works more or less fine regardless of the OS.

The "miracles" begin later, once we change any encodings (see a little further down) or use the logging module to write to the screen. Having apparently configured the expected behavior on Linux, on Windows you get utf-8 "garbage". You start fixing it for Windows, and cp1251 shows up in the console…

A theoretical digression

Looking for a solution

Obviously, to get rid of all these problems, the encodings have to be made uniform somehow.

And this is where the most interesting part begins:
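The listing that originally followed is not preserved in this copy. A minimal sketch of that kind of check is given here, written so that it also runs on Python 3 (where the default encoding is utf-8 rather than ascii). The helper name `report_encodings` is hypothetical.

```python
import locale
import sys


def report_encodings():
    """Collect the encodings Python reports for this process."""
    return {
        # Codec used for implicit str/unicode conversion ('ascii' on Python 2).
        'default': sys.getdefaultencoding(),
        # Locale encoding (the "ANSI" code page on Windows).
        'preferred': locale.getpreferredencoding(),
        # Console encodings; may be None or missing when output is redirected.
        'stdout': getattr(sys.stdout, 'encoding', None),
        'stderr': getattr(sys.stderr, 'encoding', None),
    }


if __name__ == '__main__':
    for name, value in sorted(report_encodings().items()):
        print('%-9s %s' % (name, value))
```

Running it once in a terminal and once with the output redirected to a file is a quick way to see whether the stream encodings differ between the two cases.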

Aha! It turns out the "system" itself lives in ASCII. As a result, any attempt to do input/output the simple way ends with the "beloved" UnicodeEncodeError/UnicodeDecodeError exception.

Moreover, as the example clearly shows, while on Linux everything is utf-8, Windows has two different encodings: the so-called ANSI one (cp1251 on a Russian-locale system), used for the graphical part, and the OEM one (cp866), used for text output in the console. The OEM encoding dates back to the DOS era and can, in theory, also be reconfigured with special commands, but in practice nobody has done that for a long time.

Until recently I used a widespread way to fix this nuisance:

And, by and large, it worked. It worked as long as I used print. Once I switched to printing to the screen through logging, everything broke.

OK, I thought, since "it" uses the default encoding, I will set that to the same encoding as the console:

Somewhat better, but:

- On Win32 the text is printed as gibberish that clearly resembles cp1251

- When run with redirected output, we again get something other than what was expected

- Every now and then, when trying to print text containing a character such as ①, "kindly" added by an author to some title, we get UnicodeEncodeError again!

Looking closely at the first example, it is easy to notice that the much-desired "cp866" encoding can only be obtained by checking the attribute of the corresponding stream. And that attribute is far from always available.

The second part of the task is to leave the system encoding as utf-8 while configuring console output correctly.

For per-stream configuration, the handling of the output streams has to be overridden roughly like this:

This code kills two birds with one stone: it sets the required encoding and protects against exceptions when printing all sorts of umlauts and other typography missing from the 255 characters of cp866.

What remains is to make this code universal: how am I supposed to know the OEM encoding on some arbitrary "spherical" computer? Googling for ready-made ANSI/OEM encoding support in Python turned up nothing sensible, so I had to recall a bit of WinAPI

… and put it all together:
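Neither listing survives in this copy. As a rough sketch of the idea, assuming the Win32 function `GetOEMCP` reached through `ctypes` and a locale-based fallback on other systems, the pieces might be combined as follows. The helper names `console_encoding` and `wrap_stream` are hypothetical, and the code is written to run on Python 3 as well.

```python
import codecs
import io
import locale
import sys


def console_encoding():
    """Guess the console encoding: OEM code page on Windows, locale elsewhere."""
    if sys.platform == 'win32':
        import ctypes
        # GetOEMCP returns the numeric OEM code page, e.g. 866 -> 'cp866'.
        # Assumption: Python ships a codec named after that code page.
        return 'cp%d' % ctypes.windll.kernel32.GetOEMCP()
    return locale.getpreferredencoding()


def wrap_stream(byte_stream, encoding, errors='replace'):
    """Wrap a byte stream so unicode text is encoded on write.

    With errors='replace', characters missing from the encoding
    are written as '?' instead of raising UnicodeEncodeError.
    """
    return codecs.getwriter(encoding)(byte_stream, errors)


if __name__ == '__main__':
    buf = io.BytesIO()
    out = wrap_stream(buf, 'cp866')
    # Russian "Privet" followed by the circled digit one, which cp866 lacks.
    out.write(u'\u041f\u0440\u0438\u0432\u0435\u0442 \u2460')
    print(repr(buf.getvalue()))
    print(console_encoding())
```

On Python 2 the wrapped object would be assigned to sys.stdout and sys.stderr directly; on Python 3 the byte stream to wrap is sys.stdout.buffer.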

Task two. Colorizing the output

Having seen plenty of Django's debug output in combination with werkzeug, I wanted something similar for myself. Googling turns up several projects of varying maturity and convenience, from a very simple subclass of logging.StreamHandler to a package that automatically replaces the standard StreamHandler on import.

After trying several of them, I ended up using a very simple StreamHandler subclass posted in one of the comments on Stack Overflow, and so far I am quite happy with it:
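The handler from that Stack Overflow comment is not reproduced in this copy. The following is only a sketch of what such a subclass could look like, written for Python 3. The class name `ColoredHandler` is taken from the text further on, while the color mapping and the `demo` helper are illustrative assumptions.

```python
import io
import logging

# ANSI SGR color codes per log level; this particular mapping is an assumption.
LEVEL_COLORS = {
    logging.DEBUG: '\x1b[36m',     # cyan
    logging.INFO: '\x1b[32m',      # green
    logging.WARNING: '\x1b[33m',   # yellow
    logging.ERROR: '\x1b[31m',     # red
    logging.CRITICAL: '\x1b[35m',  # magenta
}
RESET = '\x1b[0m'


class ColoredHandler(logging.StreamHandler):
    """StreamHandler that wraps each formatted record in an ANSI color."""

    def format(self, record):
        text = logging.StreamHandler.format(self, record)
        color = LEVEL_COLORS.get(record.levelno)
        return color + text + RESET if color else text


def demo():
    """Log one error into an in-memory stream and return what was written."""
    stream = io.StringIO()
    handler = ColoredHandler(stream)
    handler.setFormatter(logging.Formatter('%(levelname)s %(message)s'))
    logger = logging.getLogger('colored_demo')
    logger.propagate = False
    logger.setLevel(logging.DEBUG)
    logger.addHandler(handler)
    logger.error('boom')
    logger.removeHandler(handler)
    return stream.getvalue()


if __name__ == '__main__':
    print(repr(demo()))
```

Overriding format() rather than emit() keeps the stream handling of the base class untouched, so the same handler works with any stream object.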

However, on Windows all of this, of course, refused to work. Whereas it used to be possible to "enable" ANSI code support in the console by dropping the "magic" ansi.dll from the symfony project somewhere deep into the Windows system folders, starting (it seems) with Windows 7 this possibility has been removed from the system for good. And making the user copy some dll into a system folder is not exactly proper either.

We turn to Google again and, again, get several candidate solutions. One way or another, all of them boil down to replacing the output of ANSI escape sequences with WinAPI calls that control the console attributes.

After wandering through the links for a while, I came across the colorama project. I somehow liked it more than the rest. One advantage of this particular project is that it wraps all console output: you can print colored text with a plain print u"\x1b[31;40mSomething red on black\x1b[0m" if you suddenly feel like being perverse.

I should note right away that the current version, 0.1.18, contains an annoying bug that breaks the output of unicode strings. I posted a very simple fix there when creating the issue.

All that remains is to combine both wishes and start using the result instead of the traditional "crutches":

Then, in the project's launch file, we use:

That is all. Potential improvements still to do: check that it works under win64 Python and, possibly, extend ColoredHandler so that it checks itself with isatty, as in the more elaborate examples on the same Stack Overflow.

Python print encoding error

If you try to print a unicode string to console and get a message like this one:

This means that the python console app can’t write the given character to the console’s encoding.

More specifically, the python console app created an _io.TextIOWrapper instance with an encoding that cannot represent the given character.

sys.stdout -> _io.TextIOWrapper -> (your console)

To understand it more clearly, look at:

- sys.stdout

- sys.stdout.encoding
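The chain described above can be imitated without a real console. This sketch, which assumes Python 3 and uses the hypothetical helper `try_write`, pushes text through an `io.TextIOWrapper` the way sys.stdout does and shows where the exception comes from.

```python
import io


def try_write(text, encoding, errors='strict'):
    """Write text through a TextIOWrapper and return the bytes it produced."""
    raw = io.BytesIO()  # stands in for the console
    wrapper = io.TextIOWrapper(raw, encoding=encoding, errors=errors)
    wrapper.write(text)
    wrapper.flush()
    return raw.getvalue()


if __name__ == '__main__':
    print(repr(try_write(u'pi: \u03c0', 'utf-8')))
    try:
        try_write(u'pi: \u03c0', 'ascii')
    except UnicodeEncodeError as err:
        # The wrapper's encoding cannot represent the character.
        print('failed: %s' % err)
```

The same text succeeds or fails depending only on the encoding the wrapper was created with, which is why inspecting sys.stdout.encoding is the first diagnostic step.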

Windows

By default, the console in Microsoft Windows only displays 256 characters (cp437, or "Code page 437", the original IBM-PC 1981 extended ASCII character set).

If you try to print an unprintable character you will get UnicodeEncodeError.

Setting the PYTHONIOENCODING environment variable as described above can be used to suppress the error messages. Setting it to "utf-8" is not recommended, as this produces an inaccurate, garbled representation of the output on the console. For best results, use your console's correct default code page and a suitable error handler other than "strict".
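As an illustration of that advice, the sketch below starts a child interpreter with PYTHONIOENCODING set to a code page plus an error handler, using the `encoding:errors` form of the variable. It assumes Python 3 and that a child process can be started; the helper name `run_child` is hypothetical.

```python
import os
import subprocess
import sys


def run_child(code, ioencoding):
    """Run a Python snippet in a child process with PYTHONIOENCODING set."""
    env = dict(os.environ, PYTHONIOENCODING=ioencoding)
    return subprocess.check_output([sys.executable, '-c', code], env=env)


if __name__ == '__main__':
    # U+2460 (circled digit one) is not part of cp437, so with the
    # 'replace' handler the child prints a substitute instead of failing.
    output = run_child("print(u'\\u2460')", 'cp437:replace')
    print(repr(output.strip()))
```

With the default "strict" handler the same child process would exit with a UnicodeEncodeError traceback instead of printing anything.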

Various UNIX consoles

There is no standard way to query a UNIX console to find out what characters it supports, but fortunately there is a way to find out what characters are considered printable. The locale category LC_CTYPE defines which characters are printable. To find out its value, type at the Python prompt:
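The original prompt session is not preserved here. One possible way to make the same check, assuming `locale.getpreferredencoding()` is an acceptable stand-in for it, is sketched below. The helper name `locale_encoding` is hypothetical.

```python
import codecs
import locale


def locale_encoding():
    """Return the normalized codec name for the current LC_CTYPE locale."""
    # getpreferredencoding() consults the user's locale settings
    # (LC_ALL, LC_CTYPE, LANG) to decide on an encoding.
    return codecs.lookup(locale.getpreferredencoding()).name


if __name__ == '__main__':
    name = locale_encoding()
    print(name)
    print('UTF-8 locale' if name == 'utf-8' else 'not a UTF-8 locale')
```

Passing the name through `codecs.lookup` normalizes spellings such as "UTF-8" and "utf8" to a single form before comparing.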

If you got any value other than a UTF-8 encoding, you won't be able to print all unicode characters. As soon as you try to print an unprintable character you will get UnicodeEncodeError. To fix this, set the environment variable LANG to one of the Unicode locales supported by your system. To get the full list of locales, use the command "locale -a" and look for locales that end with the string ".utf-8". If you have set the LANG variable but now see garbage on your screen instead of UnicodeEncodeError, you need to set up your terminal to use a Unicode font. Consult your terminal's manual on how to do it.

print, write and Unicode in pre-3.0 Python

Because file operations are 8-bit clean, reading data from the original stdin will return str objects containing data in the input character set. Writing these str objects to stdout without any codecs will result in output identical to the input.

Since programmers need to display unicode strings, the designers of the print statement built the required transformation into it.

When Python finds its output attached to a terminal, it sets the sys.stdout.encoding attribute to the terminal’s encoding. The print statement’s handler will automatically encode unicode arguments into str output.

When Python does not detect the desired character set of the output, it sets sys.stdout.encoding to None, and print will invoke the "ascii" codec.

I (IL) understand the implementation of Python2.5’s print statement as follows.

At startup, Python will detect the encoding of the standard output and, probably, store the respective StreamWriter class definition. The print statement stringifies all its arguments to narrow str and wide unicode strings based on the width of the original arguments. Then print passes narrow strings to sys.stdout directly and wide strings to the instance of StreamWriter wrapped around sys.stdout.

If the user does not replace sys.stdout as shown below and Python does not detect an output encoding, the write method will coerce unicode values to str by invoking the ASCII codec (DefaultEncoding).

Python file’s write and read methods do not invoke codecs internally. Python2.5’s file open built-in sets the .encoding attribute of the resulting instance to None.

Wrapping sys.stdout into an instance of StreamWriter will allow writing unicode data with sys.stdout.write() and print.

The write call executes StreamWriter.write, which in turn invokes the codec-specific encode and passes the result to the underlying file. It appears that the print statement will not fail due to argument type coercion when sys.stdout is wrapped. My (IL's) understanding of print's implementation above agrees with that.

read and Unicode in pre-3.0 Python

I (IL) believe reading from stdin does not involve coercion at all, because the existing ways to read from stdin, such as "for line in sys.stdin", do not convey the expected type of the returned value to the stdin handler. A function that would complement the print statement might look like this:

The print statement encodes unicode strings to str strings. One can complement this by decoding str input data into unicode strings in sys.stdin.read/readline. For this, we will wrap sys.stdin into a StreamReader instance:
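The wrapping code itself is missing from this copy. A minimal sketch of the technique, using `codecs.getreader` on an in-memory byte stream that stands in for sys.stdin, could look like this. The helper name `wrap_input` is hypothetical.

```python
import codecs
import io


def wrap_input(byte_stream, encoding):
    """Wrap a byte stream in a StreamReader that decodes while reading."""
    return codecs.getreader(encoding)(byte_stream)


if __name__ == '__main__':
    # Stand-in for sys.stdin: UTF-8 bytes for "pi: " followed by U+03C0.
    fake_stdin = io.BytesIO(u'pi: \u03c0\n'.encode('utf-8'))
    reader = wrap_input(fake_stdin, 'utf-8')
    line = reader.readline()
    print(repr(line))
```

On Python 2 the real stream would be wrapped as `wrap_input(sys.stdin, encoding)`; on Python 3 sys.stdin already decodes, and the underlying bytes are available as sys.stdin.buffer.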

PrintFails (last edited 2012-11-25 11:32:18 by techtonik )

PyMOTW

Examples

The output from all the example programs from PyMOTW has been generated with Python 2.7.8, unless otherwise noted. Some of the features described here may not be available in earlier versions of Python.

If you are looking for examples that work under Python 3, please refer to the PyMOTW-3 section of the site.

codecs – String encoding and decoding

| Purpose: | Encoders and decoders for converting text between different representations. |
|---|---|
| Available In: | 2.1 and later |

The codecs module provides stream and file interfaces for transcoding data in your program. It is most commonly used to work with Unicode text, but other encodings are also available for other purposes.

Unicode Primer

CPython 2.x supports two types of strings for working with text data. Old-style str instances use a single 8-bit byte to represent each character of the string using its ASCII code. In contrast, unicode strings are managed internally as a sequence of Unicode code points. The code point values are saved as a sequence of 2 or 4 bytes each, depending on the options given when Python was compiled. Both unicode and str are derived from a common base class, and support a similar API.

When unicode strings are output, they are encoded using one of several standard schemes so that the sequence of bytes can be reconstructed as the same string later. The bytes of the encoded value are not necessarily the same as the code point values, and the encoding defines a way to translate between the two sets of values. Reading Unicode data also requires knowing the encoding so that the incoming bytes can be converted to the internal representation used by the unicode class.

The most common encodings for Western languages are UTF-8 and UTF-16, which use sequences of one and two byte values respectively to represent each character. Other encodings can be more efficient for storing languages where most of the characters are represented by code points that do not fit into two bytes.

For more introductory information about Unicode, refer to the list of references at the end of this section. The Python Unicode HOWTO is especially helpful.

Encodings

The best way to understand encodings is to look at the different series of bytes produced by encoding the same string in different ways. The examples below use this function to format the byte string to make it easier to read.

The function uses binascii to get a hexadecimal representation of the input byte string, then inserts a space between every nbytes bytes before returning the value.

The first encoding example begins by printing the text 'pi: π' using the raw representation of the unicode class. The π character is replaced with the expression for the Unicode code point, \u03c0. The next two lines encode the string as UTF-8 and UTF-16 respectively, and show the hexadecimal values resulting from the encoding.

The result of encoding a unicode string is a str object.

Given a sequence of encoded bytes as a str instance, the decode() method translates them to code points and returns the sequence as a unicode instance.

The choice of encoding used does not change the output type.
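The example programs are not included in this copy. As a small stand-in, the sketch below encodes the same 'pi: π' text and decodes it again. It deliberately uses the explicit big-endian `utf-16-be` codec instead of plain `utf-16`, so the bytes do not depend on the machine and carry no byte-order marker.

```python
import binascii

text = u'pi: \u03c0'

# Encoding turns code points into bytes; the result differs per encoding.
utf8 = text.encode('utf-8')
utf16 = text.encode('utf-16-be')

print(binascii.hexlify(utf8))
print(binascii.hexlify(utf16))

# Decoding with the matching codec restores the same text in both cases.
print(utf8.decode('utf-8') == text)
print(utf16.decode('utf-16-be') == text)
```

The π character takes two bytes in both encodings here, while the ASCII characters take one byte in UTF-8 and two in UTF-16.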

The default encoding is set during the interpreter start-up process, when site is loaded. Refer to Unicode Defaults for a description of the default encoding settings accessible via sys.

Working with Files

Encoding and decoding strings is especially important when dealing with I/O operations. Whether you are writing to a file, socket, or other stream, you will want to ensure that the data is using the proper encoding. In general, all text data needs to be decoded from its byte representation as it is read, and encoded from the internal values to a specific representation as it is written. Your program can explicitly encode and decode data, but depending on the encoding used it can be non-trivial to determine whether you have read enough bytes in order to fully decode the data. codecs provides classes that manage the data encoding and decoding for you, so you don’t have to create your own.

The simplest interface provided by codecs is a replacement for the built-in open() function. The new version works just like the built-in, but adds two new arguments to specify the encoding and desired error handling technique.

Starting with a unicode string with the code point for π, this example saves the text to a file using an encoding specified on the command line.

Reading the data with open() is straightforward, with one catch: you must know the encoding in advance, in order to set up the decoder correctly. Some data formats, such as XML, let you specify the encoding as part of the file, but usually it is up to the application to manage. codecs simply takes the encoding as an argument and assumes it is correct.

This example reads the files created by the previous program, and prints the representation of the resulting unicode object to the console.
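Neither program is preserved here. The following sketch combines both steps under the assumption that a temporary file is an acceptable substitute for the files named on the command line. The helper name `roundtrip` is hypothetical, and on recent Python 3 releases the built-in open() with an encoding argument is the more usual choice than `codecs.open`.

```python
import codecs
import os
import tempfile


def roundtrip(text, encoding):
    """Write text in the given encoding, then return (raw bytes, decoded text)."""
    fd, path = tempfile.mkstemp(suffix='.txt')
    os.close(fd)
    try:
        # codecs.open encodes on write ...
        with codecs.open(path, mode='w', encoding=encoding) as f:
            f.write(text)
        # ... so the file on disk holds encoded bytes ...
        with open(path, 'rb') as f:
            raw = f.read()
        # ... and decodes on read, given the same encoding.
        with codecs.open(path, mode='r', encoding=encoding) as f:
            decoded = f.read()
    finally:
        os.remove(path)
    return raw, decoded


if __name__ == '__main__':
    raw, decoded = roundtrip(u'pi: \u03c0', 'utf-8')
    print(repr(raw))
    print(repr(decoded))
```

Reading the file back with a different encoding than the one used for writing is the quickest way to reproduce the decoding problems discussed in the error handling section.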

Byte Order

Multi-byte encodings such as UTF-16 and UTF-32 pose a problem when transferring the data between different computer systems, either by copying the file directly or with network communication. Different systems use different ordering of the high and low order bytes. This characteristic of the data, known as its endianness, depends on factors such as the hardware architecture and choices made by the operating system and application developer. There isn’t always a way to know in advance what byte order to use for a given set of data, so the multi-byte encodings include a byte-order marker (BOM) as the first few bytes of encoded output. For example, UTF-16 is defined in such a way that 0xFFFE and 0xFEFF are not valid characters, and can be used to indicate the byte order. codecs defines constants for the byte order markers used by UTF-16 and UTF-32.

BOM, BOM_UTF16, and BOM_UTF32 are automatically set to the appropriate big-endian or little-endian values depending on the current system's native byte order.

Byte ordering is detected and handled automatically by the decoders in codecs, but you can also choose an explicit ordering for the encoding.

codecs_bom_create_file.py figures out the native byte ordering, then uses the alternate form explicitly so the next example can demonstrate auto-detection while reading.

codecs_bom_detection.py does not specify a byte order when opening the file, so the decoder uses the BOM value in the first two bytes of the file to determine it.

Since the first two bytes of the file are used for byte order detection, they are not included in the data returned by read().
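The two scripts named above are not included in this copy. A compact sketch of the same behavior, working on byte strings in memory rather than files, is shown here. The helper name `encode_utf16` is hypothetical.

```python
import codecs
import sys

# BOM_UTF16 follows the native byte order of the current machine.
native_bom = (codecs.BOM_UTF16_LE if sys.byteorder == 'little'
              else codecs.BOM_UTF16_BE)


def encode_utf16(text, big_endian):
    """Encode text as UTF-16 with an explicit byte order and a matching BOM."""
    if big_endian:
        return codecs.BOM_UTF16_BE + text.encode('utf-16-be')
    return codecs.BOM_UTF16_LE + text.encode('utf-16-le')


if __name__ == '__main__':
    text = u'pi: \u03c0'
    for big_endian in (True, False):
        data = encode_utf16(text, big_endian)
        # The generic 'utf-16' decoder reads the BOM, picks the byte
        # order from it, and leaves the BOM out of the decoded text.
        print(repr(data), data.decode('utf-16') == text)
    print(codecs.BOM_UTF16 == native_bom)
```

Both byte orders decode to the same text because the decoder relies on the marker rather than on the byte order of the machine doing the reading.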

Error Handling

The previous sections pointed out the need to know the encoding being used when reading and writing Unicode files. Setting the encoding correctly is important for two reasons. If the encoding is configured incorrectly while reading from a file, the data will be misinterpreted and may be corrupted or simply fail to decode. Not all Unicode characters can be represented in all encodings, so if the wrong encoding is used while writing, an error will be generated and data may be lost.

codecs uses the same five error handling options that are provided by the encode() method of unicode and the decode() method of str.

| Error Mode | Description |
|---|---|
| strict | Raises an exception if the data cannot be converted. |
| replace | Substitutes a special marker character for data that cannot be encoded. |
| ignore | Skips the data. |
| xmlcharrefreplace | Substitutes an XML character reference (encoding only) |
| backslashreplace | Substitutes a backslash escape sequence (encoding only) |

Encoding Errors

The most common error condition is receiving a UnicodeEncodeError when writing Unicode data to an ASCII output stream, such as a regular file or sys.stdout. This sample program can be used to experiment with the different error handling modes.

While strict mode is safest for ensuring your application explicitly sets the correct encoding for all I/O operations, it can lead to program crashes when an exception is raised.

Some of the other error modes are more flexible. For example, replace ensures that no error is raised, at the expense of possibly losing data that cannot be converted to the requested encoding. The Unicode character for pi still cannot be encoded in ASCII, but instead of raising an exception the character is replaced with ? in the output.

To skip over problem data entirely, use ignore. Any data that cannot be encoded is simply discarded.

There are two lossless error handling options, both of which replace the character with an alternate representation defined by a standard separate from the encoding. xmlcharrefreplace uses an XML character reference as a substitute (the list of character references is specified in the W3C XML Entity Definitions for Characters).

The other lossless error handling scheme is backslashreplace, which produces an output format like the value you get when you print the repr() of a unicode object. Unicode characters are replaced with \u followed by the hexadecimal value of the code point.
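The sample program is not reproduced in this copy. As a short substitute, the sketch below encodes the same 'pi: π' text to ASCII with each of the five error modes, calling the encode() method directly instead of writing to a file. The helper name `encode_ascii` is hypothetical.

```python
def encode_ascii(text, errors):
    """Encode text as ASCII with the given error mode; None means it raised."""
    try:
        return text.encode('ascii', errors)
    except UnicodeEncodeError:
        return None


if __name__ == '__main__':
    text = u'pi: \u03c0'
    for mode in ('strict', 'replace', 'ignore',
                 'xmlcharrefreplace', 'backslashreplace'):
        print('%-18s %r' % (mode, encode_ascii(text, mode)))
```

Only the last two modes keep enough information to recover the original character from the output.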

Decoding Errors

It is also possible to see errors when decoding data, especially if the wrong encoding is used.

As with encoding, strict error handling mode raises an exception if the byte stream cannot be properly decoded. In this case, a UnicodeDecodeError results from trying to convert part of the UTF-16 BOM to a character using the UTF-8 decoder.

Switching to ignore causes the decoder to skip over the invalid bytes. The result is still not quite what is expected, though, since it includes embedded null bytes.

In replace mode invalid bytes are replaced with \uFFFD, the official Unicode replacement character, which looks like a diamond with a black background containing a white question mark (�).
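A reduced version of that experiment, working on an in-memory byte string instead of a file, is sketched below. The data is UTF-16 with a little-endian byte-order marker, chosen explicitly so the result does not depend on the machine, and it is decoded with the wrong codec on purpose. The helper name `decode_as_utf8` is hypothetical.

```python
import codecs

# UTF-16 data (little-endian, with BOM) that will be misread as UTF-8.
utf16_bytes = codecs.BOM_UTF16_LE + u'pi: \u03c0'.encode('utf-16-le')


def decode_as_utf8(data, errors):
    """Decode bytes as UTF-8 using the given error mode."""
    return data.decode('utf-8', errors)


if __name__ == '__main__':
    try:
        decode_as_utf8(utf16_bytes, 'strict')
    except UnicodeDecodeError as err:
        print('strict: %s' % err)
    # 'ignore' drops the invalid bytes but keeps the embedded nulls.
    print('ignore: %r' % decode_as_utf8(utf16_bytes, 'ignore'))
    # 'replace' marks each invalid byte with U+FFFD.
    print('replace: %r' % decode_as_utf8(utf16_bytes, 'replace'))
```

None of the lenient modes recovers the original text, which is why they help with damaged data but not with a wrongly chosen encoding.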

Standard Input and Output Streams

The most common cause of UnicodeEncodeError exceptions is code that tries to print unicode data to the console or a Unix pipeline when sys.stdout is not configured with an encoding.

Problems with the default encoding of the standard I/O channels can be difficult to debug because the program works as expected when the output goes to the console, but causes encoding errors when it is used as part of a pipeline and the output includes Unicode characters above the ASCII range. This difference in behavior is caused by Python's initialization code, which sets the default encoding for each standard I/O channel only if the channel is connected to a terminal (isatty() returns True). If there is no terminal, Python assumes the program will configure the encoding explicitly, and leaves the I/O channel alone.
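A program can guard against the missing encoding by checking the stream before writing. The sketch below shows one way to do that, with a locale-based fallback as an assumption. The helper name `effective_encoding` and the `FakePipe` class are hypothetical, and Python 3 behaves differently from the Python 2 behavior described above in that it assigns an encoding to redirected streams as well.

```python
import locale
import sys


def effective_encoding(stream):
    """Pick an output encoding for a stream, even if Python has not set one."""
    encoding = getattr(stream, 'encoding', None)
    if encoding:
        return encoding
    # Python 2 leaves .encoding as None for pipes and files,
    # so fall back to the encoding suggested by the locale.
    return locale.getpreferredencoding()


class FakePipe(object):
    """Stand-in for a redirected Python 2 stdout with no encoding set."""
    encoding = None


if __name__ == '__main__':
    print(effective_encoding(sys.stdout))
    print(effective_encoding(FakePipe()))
```

The returned name can be handed to a stream writer so that output to a pipe is encoded the same way as output to the terminal.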

To explicitly set the encoding on the standard output channel, use getwriter() to get a stream encoder class for a specific encoding. Instantiate the class, passing sys.stdout as the only argument.

Writing to the wrapped version of sys.stdout passes the Unicode text through an encoder before sending the encoded bytes to stdout. Replacing sys.stdout means that any code used by your application that prints to standard output will be able to take advantage of the encoding writer.

The next problem to solve is how to know which encoding should be used. The proper encoding varies based on location, language, and user or system configuration, so hard-coding a fixed value is not a good idea. It would also be annoying for a user to need to pass explicit arguments to every program setting the input and output encodings. Fortunately, there is a global way to get a reasonable default encoding, using locale.

getdefaultlocale() returns the language and preferred encoding based on the system and user configuration settings in a form that can be used with getwriter() .

The encoding also needs to be set up when working with sys.stdin. Use getreader() to get a reader capable of decoding the input bytes.

Reading from the wrapped handle returns unicode objects instead of str instances.

Network Communication

Network sockets are also byte-streams, and so Unicode data must be encoded into bytes before it is written to a socket.

You could encode the data explicitly, before sending it, but miss one call to send() and your program would fail with an encoding error.

By using makefile() to get a file-like handle for the socket, and then wrapping that with a stream-based reader or writer, you will be able to pass Unicode strings and know they are encoded on the way in to and out of the socket.
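The original echo server example is not included in this copy. The sketch below shows only the wrapping technique, using `socket.socketpair()` as a stand-in for a real client and server connection. The helper name `send_text_over_socket` is hypothetical.

```python
import codecs
import socket


def send_text_over_socket(text, encoding='utf-8'):
    """Send unicode text through a socket pair and return what arrives."""
    left, right = socket.socketpair()
    try:
        # Writer side: file-like handle wrapped in a stream encoder.
        out_file = left.makefile('wb')
        writer = codecs.getwriter(encoding)(out_file)
        writer.write(text)
        out_file.close()  # flushes the buffered bytes into the socket
        left.close()      # signals end of data to the reader

        # Reader side: file-like handle wrapped in a stream decoder.
        in_file = right.makefile('rb')
        reader = codecs.getreader(encoding)(in_file)
        received = reader.read()
        in_file.close()
        return received
    finally:
        left.close()
        right.close()


if __name__ == '__main__':
    print(repr(send_text_over_socket(u'pi: \u03c0\n')))
```

The writing side is closed before reading so that read() sees the end of the stream instead of waiting for more data.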

This example uses PassThrough to show that the data is encoded before being sent, and the response is decoded after it is received in the client.

Encoding Translation

Although most applications will work with unicode data internally, decoding or encoding it as part of an I/O operation, there are times when changing a file’s encoding without holding on to that intermediate data format is useful. EncodedFile() takes an open file handle using one encoding and wraps it with a class that translates the data to another encoding as the I/O occurs.

This example shows reading from and writing to separate handles returned by EncodedFile(). No matter whether the handle is used for reading or writing, the file_encoding always refers to the encoding in use by the open file handle passed as the first argument, and the data_encoding value refers to the encoding in use by the data passing through the read() and write() calls.
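The example itself is missing from this copy. A minimal sketch of both directions, using in-memory byte buffers and the explicit `utf-16-be` codec as assumptions, might look like this. The helper names `write_recoded` and `read_recoded` are hypothetical.

```python
import codecs
import io


def write_recoded(data, data_encoding, file_encoding):
    """Write data_encoding bytes; return what lands in the file."""
    buf = io.BytesIO()
    wrapped = codecs.EncodedFile(buf, data_encoding=data_encoding,
                                 file_encoding=file_encoding)
    wrapped.write(data)
    return buf.getvalue()


def read_recoded(file_bytes, data_encoding, file_encoding):
    """Read a file_encoding file; return its content as data_encoding bytes."""
    wrapped = codecs.EncodedFile(io.BytesIO(file_bytes),
                                 data_encoding=data_encoding,
                                 file_encoding=file_encoding)
    return wrapped.read()


if __name__ == '__main__':
    utf8 = u'pi: \u03c0'.encode('utf-8')
    utf16 = write_recoded(utf8, 'utf-8', 'utf-16-be')
    print(repr(utf16))
    print(repr(read_recoded(utf16, 'utf-8', 'utf-16-be')))
```

In both helpers the caller only ever sees UTF-8 bytes, while the wrapped buffer only ever holds UTF-16 bytes.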

Non-Unicode Encodings

Although most of the earlier examples use Unicode encodings, codecs can be used for many other data translations. For example, Python includes codecs for working with base-64, bzip2, ROT-13, ZIP, and other data formats.

Any transformation that can be expressed as a function taking a single input argument and returning a byte or Unicode string can be registered as a codec.

Using codecs to wrap a data stream provides a simpler interface than working directly with zlib.

Not all of the compression or encoding systems support reading a portion of the data through the stream interface using readline() or read() because they need to find the end of a compressed segment to expand it. If your program cannot hold the entire uncompressed data set in memory, use the incremental access features of the compression library instead of codecs.
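A few of these non-Unicode codecs can be tried with the module-level `codecs.encode()` and `codecs.decode()` functions, which accept the codec by name. The sketch below uses them instead of the stream wrappers from the original examples, so it shows the codecs themselves rather than the wrapping.

```python
import codecs

# ROT-13 maps text to text and is its own inverse.
scrambled = codecs.encode(u'abcdefghijklmnopqrstuvwxyz', 'rot13')
print(scrambled)

# base64 and zlib map bytes to bytes.
b64 = codecs.encode(b'hello', 'base64')
print(repr(b64))

payload = b'The same line again and again. ' * 20
compressed = codecs.encode(payload, 'zlib')
print(len(payload), len(compressed))
print(codecs.decode(compressed, 'zlib') == payload)
```

Each call here processes the whole input at once, which is the limitation the paragraph above warns about for large data sets.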

Incremental Encoding

Some of the encodings provided, especially bz2 and zlib, may dramatically change the length of the data stream as they work on it. For large data sets, these encodings operate better incrementally, working on one small chunk of data at a time. The IncrementalEncoder and IncrementalDecoder API is designed for this purpose.

Each time data is passed to the encoder or decoder its internal state is updated. When the state is consistent (as defined by the codec), data is returned and the state resets. Until that point, calls to encode() or decode() will not return any data. When the last bit of data is passed in, the argument final should be set to True so the codec knows to flush any remaining buffered data.
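The incremental interface can be sketched with the zlib codec as follows. The chunk size and the helper names `compress_in_chunks` and `decompress_in_chunks` are illustrative assumptions.

```python
import codecs


def compress_in_chunks(data, chunk_size=64):
    """Compress bytes piece by piece with the incremental zlib encoder."""
    encoder = codecs.getincrementalencoder('zlib')()
    pieces = []
    for start in range(0, len(data), chunk_size):
        # May return an empty string while the codec is still buffering.
        pieces.append(encoder.encode(data[start:start + chunk_size]))
    # final=True tells the codec to flush whatever it still holds.
    pieces.append(encoder.encode(b'', final=True))
    return b''.join(pieces)


def decompress_in_chunks(data, chunk_size=64):
    """Expand bytes piece by piece with the incremental zlib decoder."""
    decoder = codecs.getincrementaldecoder('zlib')()
    pieces = []
    for start in range(0, len(data), chunk_size):
        pieces.append(decoder.decode(data[start:start + chunk_size]))
    pieces.append(decoder.decode(b'', final=True))
    return b''.join(pieces)


if __name__ == '__main__':
    original = b'The quick brown fox jumps over the lazy dog. ' * 50
    packed = compress_in_chunks(original)
    print(len(original), len(packed))
    print(decompress_in_chunks(packed) == original)
```

Passing an empty final chunk keeps the loop simple and also covers the case of empty input.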

Defining Your Own Encoding

Since Python comes with a large number of standard codecs already, it is unlikely that you will need to define your own. If you do, there are several base classes in codecs to make the process easier.

The first step is to understand the nature of the transformation described by the encoding. For example, an “invertcaps” encoding converts uppercase letters to lowercase, and lowercase letters to uppercase. Here is a simple definition of an encoding function that performs this transformation on an input string:

In this case, the encoder and decoder are the same function (as with ROT-13).
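The function definition is not preserved in this copy. A straightforward version of such a transformation, limited to ASCII letters as an assumption, could be written like this.

```python
import string


def invertcaps(text):
    """Return text with ASCII uppercase and lowercase letters swapped."""
    swapped = []
    for char in text:
        if char in string.ascii_lowercase:
            swapped.append(char.upper())
        elif char in string.ascii_uppercase:
            swapped.append(char.lower())
        else:
            swapped.append(char)  # digits, punctuation, etc. pass through
    return ''.join(swapped)


if __name__ == '__main__':
    print(invertcaps('ABC.def'))
    print(invertcaps(invertcaps('ABC.def')))
```

Applying the function twice returns the original text, which is what makes it usable as both encoder and decoder.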

Although it is easy to understand, this implementation is not efficient, especially for very large text strings. Fortunately, codecs includes some helper functions for creating character map based codecs such as invertcaps. A character map encoding is made up of two dictionaries. The encoding map converts character values from the input string to byte values in the output and the decoding map goes the other way. Create your decoding map first, and then use make_encoding_map() to convert it to an encoding map. The C functions charmap_encode() and charmap_decode() use the maps to convert their input data efficiently.

Although the encoding and decoding maps for invertcaps are the same, that may not always be the case. make_encoding_map() detects situations where more than one input character is encoded to the same output byte and replaces the encoding value with None to mark the encoding as undefined.

The character map encoder and decoder support all of the standard error handling methods described earlier, so you do not need to do any extra work to comply with that part of the API.

Because the Unicode code point for π is not in the encoding map, the strict error handling mode raises an exception.
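The character map approach described above can be sketched as follows. The map is built from `codecs.make_identity_dict()` and then adjusted for the ASCII letters; the variable names are illustrative.

```python
import codecs
import string

# Start from an identity map for all byte values, then swap letter cases.
decoding_map = codecs.make_identity_dict(range(256))
for lower, upper in zip(string.ascii_lowercase, string.ascii_uppercase):
    decoding_map[ord(lower)] = ord(upper)
    decoding_map[ord(upper)] = ord(lower)

# Derive the reverse direction from the decoding map.
encoding_map = codecs.make_encoding_map(decoding_map)

if __name__ == '__main__':
    # Both helpers return (converted data, number of input items consumed).
    print(codecs.charmap_encode(u'abc.DEF', 'strict', encoding_map))
    print(codecs.charmap_decode(b'abc.DEF', 'strict', decoding_map))
    try:
        codecs.charmap_encode(u'pi: \u03c0', 'strict', encoding_map)
    except UnicodeEncodeError as err:
        # U+03C0 has no entry in the encoding map.
        print('strict: %s' % err)
    print(codecs.charmap_encode(u'pi: \u03c0', 'replace', encoding_map))
```

The error mode is just an argument to the helper functions, so switching from strict to replace needs no extra code in the codec.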

After the encoding and decoding maps are defined, you need to set up a few additional classes and register the encoding. register() adds a search function to the registry so that when a user wants to use your encoding, codecs can locate it. The search function must take a single string argument with the name of the encoding, and return a CodecInfo object if it knows the encoding, or None if it does not.

You can register multiple search functions, and each will be called in turn until one returns a CodecInfo or the list is exhausted. The internal search function registered by codecs knows how to load the standard codecs such as UTF-8 from encodings, so those names will never be passed to your search function.

The CodecInfo instance returned by the search function tells codecs how to encode and decode using all of the different mechanisms supported: stateless, incremental, and stream. codecs includes base classes that make setting up a character map encoding easy. This example puts all of the pieces together to register a search function that returns a CodecInfo instance configured for the invertcaps codec.

The stateless encoder/decoder base class is Codec. Override encode() and decode() with your implementation (in this case, calling charmap_encode() and charmap_decode() respectively). Each method must return a tuple containing the transformed data and the number of the input bytes or characters consumed. Conveniently, charmap_encode() and charmap_decode() already return that information.

IncrementalEncoder and IncrementalDecoder serve as base classes for the incremental interfaces. The encode() and decode() methods of the incremental classes are defined in such a way that they only return the actual transformed data. Any information about buffering is maintained as internal state. The invertcaps encoding does not need to buffer data (it uses a one-to-one mapping). For encodings that produce a different amount of output depending on the data being processed, such as compression algorithms, BufferedIncrementalEncoder and BufferedIncrementalDecoder are more appropriate base classes, since they manage the unprocessed portion of the input for you.

StreamReader and StreamWriter need encode() and decode() methods, too, and since they are expected to return the same value as the version from Codec you can use multiple inheritance for the implementation.
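The complete registration example is not included in this copy. The sketch below puts the pieces described above together in one self-contained block. All class names and the search function name `find_invertcaps` are hypothetical.

```python
import codecs
import string

# Character maps: identity for all bytes, with ASCII letter cases swapped.
decoding_map = codecs.make_identity_dict(range(256))
for lower, upper in zip(string.ascii_lowercase, string.ascii_uppercase):
    decoding_map[ord(lower)] = ord(upper)
    decoding_map[ord(upper)] = ord(lower)
encoding_map = codecs.make_encoding_map(decoding_map)


class InvertCapsCodec(codecs.Codec):
    """Stateless encoder/decoder; returns (data, items consumed)."""

    def encode(self, input, errors='strict'):
        return codecs.charmap_encode(input, errors, encoding_map)

    def decode(self, input, errors='strict'):
        return codecs.charmap_decode(input, errors, decoding_map)


class InvertCapsIncrementalEncoder(codecs.IncrementalEncoder):
    def encode(self, input, final=False):
        # Incremental interface returns only the transformed data.
        return codecs.charmap_encode(input, self.errors, encoding_map)[0]


class InvertCapsIncrementalDecoder(codecs.IncrementalDecoder):
    def decode(self, input, final=False):
        return codecs.charmap_decode(input, self.errors, decoding_map)[0]


class InvertCapsStreamReader(InvertCapsCodec, codecs.StreamReader):
    pass


class InvertCapsStreamWriter(InvertCapsCodec, codecs.StreamWriter):
    pass


def find_invertcaps(encoding):
    """Search function: return a CodecInfo for 'invertcaps', else None."""
    if encoding == 'invertcaps':
        return codecs.CodecInfo(
            name='invertcaps',
            encode=InvertCapsCodec().encode,
            decode=InvertCapsCodec().decode,
            incrementalencoder=InvertCapsIncrementalEncoder,
            incrementaldecoder=InvertCapsIncrementalDecoder,
            streamreader=InvertCapsStreamReader,
            streamwriter=InvertCapsStreamWriter,
        )
    return None


codecs.register(find_invertcaps)

if __name__ == '__main__':
    print(codecs.encode(u'abc.DEF', 'invertcaps'))
    print(codecs.decode(b'abc.DEF', 'invertcaps'))
```

Once the search function is registered, the codec name works anywhere an encoding name is accepted, including the stream reader and writer factories.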

See also

- codecs: The standard library documentation for this module.

- locale: Accessing and managing the localization-based configuration settings and behaviors.

- io: The io module includes file and stream wrappers that handle encoding and decoding, too.

- SocketServer: For a more detailed example of an echo server, see the SocketServer module.

- encodings: Package in the standard library containing the encoder/decoder implementations provided by Python.

- Unicode HOWTO: The official guide for using Unicode with Python 2.x.

- Python Unicode Objects: Fredrik Lundh's article about using non-ASCII character sets in Python 2.0.

- How to Use UTF-8 with Python: Evan Jones' quick guide to working with Unicode, including XML data and the Byte-Order Marker.

- On the Goodness of Unicode: Introduction to internationalization and Unicode by Tim Bray.

- On Character Strings: A look at the history of string processing in programming languages, by Tim Bray.

- Characters vs. Bytes: Part one of Tim Bray's "essay on modern character string processing for computer programmers." This installment covers in-memory representation of text in formats other than ASCII bytes.

- The Absolute Minimum Every Software Developer Absolutely, Positively Must Know About Unicode and Character Sets (No Excuses!): An introduction to Unicode by Joel Spolsky.

- Endianness: Explanation of endianness in Wikipedia.

© Copyright Doug Hellmann.

| Purpose: | Encoders and decoders for converting text between different representations. |
|---|---|
| Available In: | 2.1 and later |

The codecs module provides stream and file interfaces for

transcoding data in your program. It is most commonly used to work

with Unicode text, but other encodings are also available for other

purposes.

Unicode Primer¶

CPython 2.x supports two types of strings for working with text data.

Old-style str instances use a single 8-bit byte to represent

each character of the string using its ASCII code. In contrast,

unicode strings are managed internally as a sequence of

Unicode code points. The code point values are saved as a sequence

of 2 or 4 bytes each, depending on the options given when Python was

compiled. Both unicode and str are derived from a

common base class, and support a similar API.

When unicode strings are output, they are encoded using one

of several standard schemes so that the sequence of bytes can be

reconstructed as the same string later. The bytes of the encoded

value are not necessarily the same as the code point values, and the

encoding defines a way to translate between the two sets of values.

Reading Unicode data also requires knowing the encoding so that the

incoming bytes can be converted to the internal representation used by

the unicode class.

The most common encodings for Western languages are UTF-8 and UTF-16, which represent each character as a sequence of one-byte and two-byte values respectively (one to four bytes per code point in UTF-8, two or four bytes in UTF-16). Other encodings can be more efficient for storing languages where most of the characters are represented by code points that do not fit into two bytes.
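
To make the size difference concrete, here is a minimal sketch that counts the encoded bytes for a few sample characters. The helper name `encoded_sizes` and the sample characters are illustrative choices, and the snippet is written to run on both Python 2.7 and Python 3, which goes beyond the Python 2 listings used in the rest of this article.

```python
from __future__ import print_function

# Sample characters chosen for illustration: ASCII, Greek, and CJK.
samples = [u'a', u'\u03c0', u'\u4e2d']


def encoded_sizes(ch):
    """Return the byte length of ch in UTF-8, UTF-16, and UTF-32.

    The endian-specific codec names are used so that no byte-order
    marker is added to the count.
    """
    return tuple(len(ch.encode(enc))
                 for enc in ('utf-8', 'utf-16-le', 'utf-32-le'))


for ch in samples:
    print(repr(ch), encoded_sizes(ch))
```

UTF-8 grows with the code point value, while UTF-16 stays at two bytes for all three samples and UTF-32 always uses four.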

See also

For more introductory information about Unicode, refer to the list

of references at the end of this section. The Python Unicode

HOWTO is especially helpful.

Encodings

The best way to understand encodings is to look at the different

series of bytes produced by encoding the same string in different

ways. The examples below use this function to format the byte string

to make it easier to read.

    import binascii

    def to_hex(t, nbytes):
        "Format text t as a sequence of nbyte long values separated by spaces."
        chars_per_item = nbytes * 2
        hex_version = binascii.hexlify(t)
        num_chunks = len(hex_version) / chars_per_item
        def chunkify():
            for start in xrange(0, len(hex_version), chars_per_item):
                yield hex_version[start:start + chars_per_item]
        return ' '.join(chunkify())

    if __name__ == '__main__':
        print to_hex('abcdef', 1)
        print to_hex('abcdef', 2)

The function uses binascii to get a hexadecimal representation

of the input byte string, then inserts a space between every nbytes

bytes before returning the value.

    $ python codecs_to_hex.py

    61 62 63 64 65 66
    6162 6364 6566

The first encoding example begins by printing the text ‘pi: π’

using the raw representation of the unicode class. The π

character is replaced with the expression for the Unicode code point,

\u03c0. The next two lines encode the string as UTF-8 and UTF-16

respectively, and show the hexadecimal values resulting from the

encoding.

    # -*- coding: utf-8 -*-
    from codecs_to_hex import to_hex

    text = u'pi: π'

    print 'Raw :', repr(text)
    print 'UTF-8 :', to_hex(text.encode('utf-8'), 1)
    print 'UTF-16:', to_hex(text.encode('utf-16'), 2)

The result of encoding a unicode string is a str

object.

    $ python codecs_encodings.py

    Raw : u'pi: \u03c0'
    UTF-8 : 70 69 3a 20 cf 80
    UTF-16: fffe 7000 6900 3a00 2000 c003

Given a sequence of encoded bytes as a str instance, the

decode() method translates them to code points and returns the

sequence as a unicode instance.

    # -*- coding: utf-8 -*-
    from codecs_to_hex import to_hex

    text = u'pi: π'
    encoded = text.encode('utf-8')
    decoded = encoded.decode('utf-8')

    print 'Original :', repr(text)
    print 'Encoded :', to_hex(encoded, 1), type(encoded)
    print 'Decoded :', repr(decoded), type(decoded)

The choice of encoding used does not change the output type.

    $ python codecs_decode.py

    Original : u'pi: \u03c0'
    Encoded : 70 69 3a 20 cf 80 <type 'str'>
    Decoded : u'pi: \u03c0' <type 'unicode'>

Note

The default encoding is set during the interpreter start-up process,

when site is loaded. Refer to Unicode Defaults

for a description of the default encoding settings accessible via

sys.

Working with Files

Encoding and decoding strings is especially important when dealing

with I/O operations. Whether you are writing to a file, socket, or

other stream, you will want to ensure that the data is using the

proper encoding. In general, all text data needs to be decoded from

its byte representation as it is read, and encoded from the internal

values to a specific representation as it is written. Your program

can explicitly encode and decode data, but depending on the encoding

used it can be non-trivial to determine whether you have read enough

bytes in order to fully decode the data. codecs provides

classes that manage the data encoding and decoding for you, so you

don’t have to create your own.

The simplest interface provided by codecs is a replacement for

the built-in open() function. The new version works just like

the built-in, but adds two new arguments to specify the encoding and

desired error handling technique.

    from codecs_to_hex import to_hex

    import codecs
    import sys

    encoding = sys.argv[1]
    filename = encoding + '.txt'

    print 'Writing to', filename
    with codecs.open(filename, mode='wt', encoding=encoding) as f:
        f.write(u'pi: \u03c0')

    # Determine the byte grouping to use for to_hex()
    nbytes = { 'utf-8':1,
               'utf-16':2,
               'utf-32':4,
               }.get(encoding, 1)

    # Show the raw bytes in the file
    print 'File contents:'
    with open(filename, mode='rt') as f:
        print to_hex(f.read(), nbytes)

Starting with a unicode string with the code point for π,

this example saves the text to a file using an encoding specified on

the command line.

    $ python codecs_open_write.py utf-8
    Writing to utf-8.txt
    File contents:
    70 69 3a 20 cf 80

    $ python codecs_open_write.py utf-16
    Writing to utf-16.txt
    File contents:
    fffe 7000 6900 3a00 2000 c003

    $ python codecs_open_write.py utf-32
    Writing to utf-32.txt
    File contents:
    fffe0000 70000000 69000000 3a000000 20000000 c0030000

Reading the data with open() is straightforward, with one catch:

you must know the encoding in advance, in order to set up the decoder

correctly. Some data formats, such as XML, let you specify the

encoding as part of the file, but usually it is up to the application

to manage. codecs simply takes the encoding as an argument and

assumes it is correct.

    import codecs
    import sys

    encoding = sys.argv[1]
    filename = encoding + '.txt'

    print 'Reading from', filename
    with codecs.open(filename, mode='rt', encoding=encoding) as f:
        print repr(f.read())

This example reads the files created by the previous program, and

prints the representation of the resulting unicode object to

the console.

    $ python codecs_open_read.py utf-8
    Reading from utf-8.txt
    u'pi: \u03c0'

    $ python codecs_open_read.py utf-16
    Reading from utf-16.txt
    u'pi: \u03c0'

    $ python codecs_open_read.py utf-32
    Reading from utf-32.txt
    u'pi: \u03c0'

Byte Order

Multi-byte encodings such as UTF-16 and UTF-32 pose a problem when

transferring the data between different computer systems, either by

copying the file directly or with network communication. Different

systems use different ordering of the high and low order bytes. This

characteristic of the data, known as its endianness, depends on

factors such as the hardware architecture and choices made by the

operating system and application developer. There isn’t always a way

to know in advance what byte order to use for a given set of data, so

the multi-byte encodings include a byte-order marker (BOM) as the

first few bytes of encoded output. For example, UTF-16 reserves the code point U+FEFF as the byte-order mark, and its byte-swapped form, U+FFFE, is never a valid character, so the order of those two bytes indicates the byte order. codecs defines constants for the byte order markers used by UTF-8, UTF-16, and UTF-32.

    import codecs
    from codecs_to_hex import to_hex

    for name in [ 'BOM', 'BOM_BE', 'BOM_LE',
                  'BOM_UTF8',
                  'BOM_UTF16', 'BOM_UTF16_BE', 'BOM_UTF16_LE',
                  'BOM_UTF32', 'BOM_UTF32_BE', 'BOM_UTF32_LE',
                  ]:
        print '{:12} : {}'.format(name, to_hex(getattr(codecs, name), 2))

BOM, BOM_UTF16, and BOM_UTF32 are automatically set to the

appropriate big-endian or little-endian values depending on the

current system’s native byte order.

    $ python codecs_bom.py

    BOM          : fffe
    BOM_BE       : feff
    BOM_LE       : fffe
    BOM_UTF8     : efbb bf
    BOM_UTF16    : fffe
    BOM_UTF16_BE : feff
    BOM_UTF16_LE : fffe
    BOM_UTF32    : fffe 0000
    BOM_UTF32_BE : 0000 feff
    BOM_UTF32_LE : fffe 0000

Byte ordering is detected and handled automatically by the decoders in

codecs, but you can also choose an explicit ordering for the

encoding.

    import codecs
    from codecs_to_hex import to_hex

    # Pick the non-native version of UTF-16 encoding
    if codecs.BOM_UTF16 == codecs.BOM_UTF16_BE:
        bom = codecs.BOM_UTF16_LE
        encoding = 'utf_16_le'
    else:
        bom = codecs.BOM_UTF16_BE
        encoding = 'utf_16_be'

    print 'Native order :', to_hex(codecs.BOM_UTF16, 2)
    print 'Selected order:', to_hex(bom, 2)

    # Encode the text.
    encoded_text = u'pi: \u03c0'.encode(encoding)
    print '{:14}: {}'.format(encoding, to_hex(encoded_text, 2))

    with open('non-native-encoded.txt', mode='wb') as f:
        # Write the selected byte-order marker.  It is not included in the
        # encoded text because we were explicit about the byte order when
        # selecting the encoding.
        f.write(bom)
        # Write the byte string for the encoded text.
        f.write(encoded_text)

codecs_bom_create_file.py figures out the native byte ordering,

then uses the alternate form explicitly so the next example can

demonstrate auto-detection while reading.

    $ python codecs_bom_create_file.py

    Native order : fffe
    Selected order: feff
    utf_16_be : 0070 0069 003a 0020 03c0

codecs_bom_detection.py does not specify a byte order when opening

the file, so the decoder uses the BOM value in the first two bytes of

the file to determine it.

    import codecs
    from codecs_to_hex import to_hex

    # Look at the raw data
    with open('non-native-encoded.txt', mode='rb') as f:
        raw_bytes = f.read()

    print 'Raw :', to_hex(raw_bytes, 2)

    # Re-open the file and let codecs detect the BOM
    with codecs.open('non-native-encoded.txt', mode='rt', encoding='utf-16') as f:
        decoded_text = f.read()

    print 'Decoded:', repr(decoded_text)

Since the first two bytes of the file are used for byte order

detection, they are not included in the data returned by read().

    $ python codecs_bom_detection.py

    Raw : feff 0070 0069 003a 0020 03c0
    Decoded: u'pi: \u03c0'
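
The same BOM handling can be observed without touching the file system. The following sketch, which assumes nothing beyond the standard codecs constants and is written to run on both Python 2.7 and Python 3, decodes the same bytes once with the generic `utf-16` codec and once with the endian-specific `utf-16-be` codec.

```python
from __future__ import print_function
import codecs

text = u'pi: \u03c0'

# Build big-endian UTF-16 data by hand: BOM first, then the payload.
payload = text.encode('utf-16-be')
with_bom = codecs.BOM_UTF16_BE + payload

# The generic codec reads the BOM, picks the byte order from it,
# and drops the marker from the decoded result.
detected = with_bom.decode('utf-16')

# The endian-specific codec does not interpret the BOM, so it comes
# back as the character U+FEFF at the start of the text.
explicit = with_bom.decode('utf-16-be')

print(repr(detected))
print(repr(explicit))
```

When a byte order is named explicitly, stripping a leading BOM is left to the caller.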

Error Handling

The previous sections pointed out the need to know the encoding being

used when reading and writing Unicode files. Setting the encoding

correctly is important for two reasons. If the encoding is configured

incorrectly while reading from a file, the data will be interpreted

incorrectly and may be corrupted or simply fail to decode. Not all Unicode

characters can be represented in all encodings, so if the wrong

encoding is used while writing an error will be generated and data may

be lost.

codecs uses the same five error handling options that are

provided by the encode() method of unicode and the

decode() method of str.

| Error Mode | Description |
|---|---|
| strict | Raises an exception if the data cannot be converted. |
| replace | Substitutes a special marker character for data that cannot be encoded. |
| ignore | Skips the data. |
| xmlcharrefreplace | Substitutes an XML character reference (encoding only). |
| backslashreplace | Substitutes a backslash escape sequence (encoding only). |
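
As a quick in-memory illustration of the five modes, the sketch below passes each one to `encode()` with the ASCII codec. The helper `encode_ascii` is a hypothetical name introduced only for this example, and the code is written to run on both Python 2.7 and Python 3.

```python
from __future__ import print_function

text = u'pi: \u03c0'


def encode_ascii(mode):
    """Encode text as ASCII with the given error mode.

    Returns the encoded bytes, or None when the mode raises.
    """
    try:
        return text.encode('ascii', mode)
    except UnicodeEncodeError:
        return None


for mode in ('strict', 'replace', 'ignore',
             'xmlcharrefreplace', 'backslashreplace'):
    print('{:18}: {!r}'.format(mode, encode_ascii(mode)))
```

Only strict fails here; the other four modes each return a byte string, with the last two keeping enough information to recover the original character.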

Encoding Errors

The most common error condition is receiving a

UnicodeEncodeError when writing

Unicode data to an ASCII output stream, such as a regular file or

sys.stdout. This sample program can be used

to experiment with the different error handling modes.

    import codecs
    import sys

    error_handling = sys.argv[1]

    text = u'pi: \u03c0'

    try:
        # Save the data, encoded as ASCII, using the error
        # handling mode specified on the command line.
        with codecs.open('encode_error.txt', 'w',
                         encoding='ascii',
                         errors=error_handling) as f:
            f.write(text)

    except UnicodeEncodeError, err:
        print 'ERROR:', err

    else:
        # If there was no error writing to the file,
        # show what it contains.
        with open('encode_error.txt', 'rb') as f:
            print 'File contents:', repr(f.read())

While strict mode is safest for ensuring your application

explicitly sets the correct encoding for all I/O operations, it can

lead to program crashes when an exception is raised.

    $ python codecs_encode_error.py strict

    ERROR: 'ascii' codec can't encode character u'\u03c0' in position 4: ordinal not in range(128)

Some of the other error modes are more flexible. For example,

replace ensures that no error is raised, at the expense of

possibly losing data that cannot be converted to the requested

encoding. The Unicode character for pi still cannot be encoded in

ASCII, but instead of raising an exception the character is replaced

with ? in the output.

    $ python codecs_encode_error.py replace

    File contents: 'pi: ?'

To skip over problem data entirely, use ignore. Any data that

cannot be encoded is simply discarded.

    $ python codecs_encode_error.py ignore

    File contents: 'pi: '

There are two lossless error handling options, both of which replace

the character with an alternate representation defined by a standard

separate from the encoding. xmlcharrefreplace uses an XML

character reference as a substitute (the list of character references

is specified in the W3C XML Entity Definitions for Characters).

    $ python codecs_encode_error.py xmlcharrefreplace

    File contents: 'pi: &#960;'

The other lossless error handling scheme is backslashreplace which

produces an output format like the value you get when you print the

repr() of a unicode object. Unicode characters are

replaced with \u followed by the hexadecimal value of the code

point.

    $ python codecs_encode_error.py backslashreplace

    File contents: 'pi: \\u03c0'

Decoding Errors

It is also possible to see errors when decoding data, especially if

the wrong encoding is used.

    import codecs
    import sys

    from codecs_to_hex import to_hex

    error_handling = sys.argv[1]

    text = u'pi: \u03c0'
    print 'Original :', repr(text)

    # Save the data with one encoding
    with codecs.open('decode_error.txt', 'w', encoding='utf-16') as f:
        f.write(text)

    # Dump the bytes from the file
    with open('decode_error.txt', 'rb') as f:
        print 'File contents:', to_hex(f.read(), 1)

    # Try to read the data with the wrong encoding
    with codecs.open('decode_error.txt', 'r',
                     encoding='utf-8',
                     errors=error_handling) as f:
        try:
            data = f.read()
        except UnicodeDecodeError, err:
            print 'ERROR:', err
        else:
            print 'Read :', repr(data)

As with encoding, strict error handling mode raises an exception

if the byte stream cannot be properly decoded. In this case, a

UnicodeDecodeError results from

trying to convert part of the UTF-16 BOM to a character using the

UTF-8 decoder.

    $ python codecs_decode_error.py strict

    Original : u'pi: \u03c0'
    File contents: ff fe 70 00 69 00 3a 00 20 00 c0 03
    ERROR: 'utf8' codec can't decode byte 0xff in position 0: invalid start byte

Switching to ignore causes the decoder to skip over the invalid

bytes. The result is still not quite what is expected, though, since

it includes embedded null bytes.

    $ python codecs_decode_error.py ignore

    Original : u'pi: \u03c0'
    File contents: ff fe 70 00 69 00 3a 00 20 00 c0 03
    Read : u'p\x00i\x00:\x00 \x00\x03'

In replace mode invalid bytes are replaced with \uFFFD, the

official Unicode replacement character, which looks like a diamond

with a black background containing a white question mark (�).

    $ python codecs_decode_error.py replace

    Original : u'pi: \u03c0'
    File contents: ff fe 70 00 69 00 3a 00 20 00 c0 03
    Read : u'\ufffd\ufffdp\x00i\x00:\x00 \x00\ufffd\x03'

Standard Input and Output Streams

The most common cause of UnicodeEncodeError exceptions is code that tries to print

unicode data to the console or a Unix pipeline when

sys.stdout is not configured with an

encoding.

    # -*- coding: utf-8 -*-
    import codecs
    import sys

    text = u'pi: π'

    # Printing to stdout may cause an encoding error
    print 'Default encoding:', sys.stdout.encoding
    print 'TTY:', sys.stdout.isatty()
    print text

Problems with the default encoding of the standard I/O channels can be

difficult to debug because the program works as expected when the

output goes to the console, but causes encoding errors when it is used

as part of a pipeline and the output includes Unicode characters above

the ASCII range. This difference in behavior is caused by Python’s

initialization code, which sets the default encoding for each standard

I/O channel only if the channel is connected to a terminal

(isatty() returns True). If there is no terminal, Python

assumes the program will configure the encoding explicitly, and leaves

the I/O channel alone.

    $ python codecs_stdout.py
    Default encoding: utf-8
    TTY: True
    pi: π

    $ python codecs_stdout.py | cat -
    Default encoding: None
    TTY: False
    Traceback (most recent call last):
      File "codecs_stdout.py", line 18, in <module>
        print text
    UnicodeEncodeError: 'ascii' codec can't encode character u'\u03c0' in
     position 4: ordinal not in range(128)

To explicitly set the encoding on the standard output channel, use

getwriter() to get a stream encoder class for a specific

encoding. Instantiate the class, passing sys.stdout as the only

argument.

    # -*- coding: utf-8 -*-
    import codecs
    import sys

    text = u'pi: π'

    # Wrap sys.stdout with a writer that knows how to handle encoding
    # Unicode data.
    wrapped_stdout = codecs.getwriter('UTF-8')(sys.stdout)
    wrapped_stdout.write(u'Via write: ' + text + '\n')

    # Replace sys.stdout with a writer
    sys.stdout = wrapped_stdout

    print u'Via print:', text

Writing to the wrapped version of sys.stdout passes the Unicode

text through an encoder before sending the encoded bytes to stdout.

Replacing sys.stdout means that any code used by your application

that prints to standard output will be able to take advantage of the

encoding writer.

    $ python codecs_stdout_wrapped.py

    Via write: pi: π
    Via print: pi: π
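
To see exactly which bytes such a writer produces, the wrapper can be pointed at an in-memory buffer instead of the real standard output. This is only a sketch: `io.BytesIO` stands in for a byte stream such as a pipe, and the code is written to run on both Python 2.7 and Python 3.

```python
from __future__ import print_function
import codecs
import io

# io.BytesIO stands in for a byte-oriented stream such as a pipe.
raw = io.BytesIO()
writer = codecs.getwriter('utf-8')(raw)

# The writer accepts Unicode text and hands encoded bytes to the stream.
writer.write(u'pi: \u03c0\n')

encoded = raw.getvalue()
print(repr(encoded))
```

The two bytes `\xcf\x80` at the end are the UTF-8 form of π, the same values shown in the earlier hexadecimal dumps.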

The next problem to solve is how to know which encoding should be

used. The proper encoding varies based on location, language, and

user or system configuration, so hard-coding a fixed value is not a

good idea. It would also be annoying for a user to need to pass

explicit arguments to every program setting the input and output

encodings. Fortunately, there is a global way to get a reasonable

default encoding, using locale.

    # -*- coding: utf-8 -*-
    import codecs
    import locale
    import sys

    text = u'pi: π'

    # Configure locale from the user's environment settings.
    locale.setlocale(locale.LC_ALL, '')

    # Wrap stdout with an encoding-aware writer.
    lang, encoding = locale.getdefaultlocale()
    print 'Locale encoding :', encoding
    sys.stdout = codecs.getwriter(encoding)(sys.stdout)

    print 'With wrapped stdout:', text

getdefaultlocale() returns the language and preferred encoding

based on the system and user configuration settings in a form that can

be used with getwriter().

    $ python codecs_stdout_locale.py

    Locale encoding : UTF-8
    With wrapped stdout: pi: π
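
One way to combine the checks discussed so far is a small fallback chain: use the encoding the stream reports, otherwise ask locale, otherwise fall back to UTF-8. The function name `pick_output_encoding` and the final UTF-8 fallback are assumptions made for this sketch rather than anything the standard library prescribes, and `locale.getpreferredencoding()` is used here in place of `getdefaultlocale()`. The code is written to run on both Python 2.7 and Python 3.

```python
from __future__ import print_function
import codecs
import locale
import sys


def pick_output_encoding(stream=None):
    """Return a codec name suitable for wrapping the given stream.

    Hypothetical helper: prefers the stream's own encoding, then the
    locale's preferred encoding, and finally UTF-8.
    """
    stream = sys.stdout if stream is None else stream
    encoding = getattr(stream, 'encoding', None)
    if not encoding:
        encoding = locale.getpreferredencoding()
    try:
        # Normalize the name and confirm that a codec exists for it.
        return codecs.lookup(encoding).name
    except (LookupError, TypeError):
        return 'utf-8'


print(pick_output_encoding())
```

The returned name can be passed to `codecs.getwriter()` in the same way as the locale value in the listing above.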

The encoding also needs to be set up when working with sys.stdin. Use getreader() to get a reader capable of

decoding the input bytes.

    import codecs
    import locale
    import sys

    # Configure locale from the user's environment settings.
    locale.setlocale(locale.LC_ALL, '')

    # Wrap stdin with an encoding-aware reader.
    lang, encoding = locale.getdefaultlocale()
    sys.stdin = codecs.getreader(encoding)(sys.stdin)

    print 'From stdin:', repr(sys.stdin.read())

Reading from the wrapped handle returns unicode objects

instead of str instances.

    $ python codecs_stdout_locale.py | python codecs_stdin.py

    From stdin: u'Locale encoding : UTF-8\nWith wrapped stdout: pi: \u03c0\n'

Network Communication

Network sockets are also byte-streams, and so Unicode data must be

encoded into bytes before it is written to a socket.

    # -*- coding: utf-8 -*-
    import sys
    import SocketServer


    class Echo(SocketServer.BaseRequestHandler):

        def handle(self):
            # Get some bytes and echo them back to the client.
            data = self.request.recv(1024)
            self.request.send(data)
            return


    if __name__ == '__main__':
        import codecs
        import socket
        import threading

        address = ('localhost', 0) # let the kernel give us a port
        server = SocketServer.TCPServer(address, Echo)
        ip, port = server.server_address # find out what port we were given

        t = threading.Thread(target=server.serve_forever)
        t.setDaemon(True) # don't hang on exit
        t.start()

        # Connect to the server
        s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        s.connect((ip, port))

        # Send the data
        text = u'pi: π'
        len_sent = s.send(text)

        # Receive a response
        response = s.recv(len_sent)
        print repr(response)

        # Clean up
        s.close()
        server.socket.close()

You could encode the data explicitly, before sending it, but miss one

call to send() and your program would fail with an encoding

error.

    $ python codecs_socket_fail.py
    Traceback (most recent call last):
      File "codecs_socket_fail.py", line 43, in <module>
        len_sent = s.send(text)
    UnicodeEncodeError: 'ascii' codec can't encode character u'\u03c0' in
     position 4: ordinal not in range(128)

By using makefile() to get a file-like handle for the socket,

and then wrapping that with a stream-based reader or writer, you will

be able to pass Unicode strings and know they are encoded on the way

in to and out of the socket.

    # -*- coding: utf-8 -*-
    import sys
    import SocketServer


    class Echo(SocketServer.BaseRequestHandler):

        def handle(self):
            # Get some bytes and echo them back to the client.  There is
            # no need to decode them, since they are not used.
            data = self.request.recv(1024)
            self.request.send(data)
            return


    class PassThrough(object):

        def __init__(self, other):
            self.other = other

        def write(self, data):
            print 'Writing :', repr(data)
            return self.other.write(data)

        def read(self, size=-1):
            print 'Reading :',
            data = self.other.read(size)
            print repr(data)
            return data

        def flush(self):
            return self.other.flush()

        def close(self):
            return self.other.close()


    if __name__ == '__main__':
        import codecs
        import socket
        import threading

        address = ('localhost', 0) # let the kernel give us a port
        server = SocketServer.TCPServer(address, Echo)
        ip, port = server.server_address # find out what port we were given

        t = threading.Thread(target=server.serve_forever)
        t.setDaemon(True) # don't hang on exit
        t.start()

        # Connect to the server
        s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        s.connect((ip, port))

        # Wrap the socket with a reader and writer.
        incoming = codecs.getreader('utf-8')(PassThrough(s.makefile('r')))
        outgoing = codecs.getwriter('utf-8')(PassThrough(s.makefile('w')))

        # Send the data
        text = u'pi: π'
        print 'Sending :', repr(text)
        outgoing.write(text)
        outgoing.flush()

        # Receive a response
        response = incoming.read()
        print 'Received:', repr(response)

        # Clean up
        s.close()
        server.socket.close()

This example uses PassThrough to show that the data is

encoded before being sent, and the response is decoded after it is

received in the client.

    $ python codecs_socket.py

    Sending : u'pi: \u03c0'
    Writing : 'pi: \xcf\x80'
    Reading : 'pi: \xcf\x80'
    Received: u'pi: \u03c0'

Encoding Translation

Although most applications will work with unicode data

internally, decoding or encoding it as part of an I/O operation, there

are times when changing a file’s encoding without holding on to that

intermediate data format is useful. EncodedFile() takes an open

file handle using one encoding and wraps it with a class that

translates the data to another encoding as the I/O occurs.

    from codecs_to_hex import to_hex

    import codecs
    from cStringIO import StringIO

    # Raw version of the original data.
    data = u'pi: \u03c0'

    # Manually encode it as UTF-8.
    utf8 = data.encode('utf-8')
    print 'Start as UTF-8 :', to_hex(utf8, 1)

    # Set up an output buffer, then wrap it as an EncodedFile.
    output = StringIO()
    encoded_file = codecs.EncodedFile(output, data_encoding='utf-8',
                                      file_encoding='utf-16')
    encoded_file.write(utf8)

    # Fetch the buffer contents as a UTF-16 encoded byte string
    utf16 = output.getvalue()
    print 'Encoded to UTF-16:', to_hex(utf16, 2)

    # Set up another buffer with the UTF-16 data for reading,
    # and wrap it with another EncodedFile.
    buffer = StringIO(utf16)
    encoded_file = codecs.EncodedFile(buffer, data_encoding='utf-8',
                                      file_encoding='utf-16')

    # Read the UTF-8 encoded version of the data.
    recoded = encoded_file.read()
    print 'Back to UTF-8 :', to_hex(recoded, 1)

This example shows reading from and writing to separate handles

returned by EncodedFile(). No matter whether the handle is used

for reading or writing, the file_encoding always refers to the

encoding in use by the open file handle passed as the first argument,

and data_encoding value refers to the encoding in use by the data

passing through the read() and write() calls.

    $ python codecs_encodedfile.py

    Start as UTF-8 : 70 69 3a 20 cf 80
    Encoded to UTF-16: fffe 7000 6900 3a00 2000 c003
    Back to UTF-8 : 70 69 3a 20 cf 80

Non-Unicode Encodings

Although most of the earlier examples use Unicode encodings,

codecs can be used for many other data translations. For

example, Python includes codecs for working with base-64, bzip2,

ROT-13, ZIP, and other data formats.

    import codecs
    from cStringIO import StringIO

    buffer = StringIO()
    stream = codecs.getwriter('rot_13')(buffer)

    text = 'abcdefghijklmnopqrstuvwxyz'

    stream.write(text)
    stream.flush()

    print 'Original:', text
    print 'ROT-13 :', buffer.getvalue()

Any transformation that can be expressed as a function taking a single

input argument and returning a byte or Unicode string can be

registered as a codec.

    $ python codecs_rot13.py

    Original: abcdefghijklmnopqrstuvwxyz
    ROT-13 : nopqrstuvwxyzabcdefghijklm

Using codecs to wrap a data stream provides a simpler interface

than working directly with zlib.

    import codecs
    from cStringIO import StringIO

    from codecs_to_hex import to_hex

    buffer = StringIO()
    stream = codecs.getwriter('zlib')(buffer)

    text = 'abcdefghijklmnopqrstuvwxyz\n' * 50

    stream.write(text)
    stream.flush()

    print 'Original length :', len(text)
    compressed_data = buffer.getvalue()
    print 'ZIP compressed :', len(compressed_data)

    buffer = StringIO(compressed_data)
    stream = codecs.getreader('zlib')(buffer)

    first_line = stream.readline()
    print 'Read first line :', repr(first_line)

    uncompressed_data = first_line + stream.read()
    print 'Uncompressed :', len(uncompressed_data)
    print 'Same :', text == uncompressed_data

Not all of the compression or encoding systems support reading a

portion of the data through the stream interface using

readline() or read() because they need to find the end of

a compressed segment to expand it. If your program cannot hold the

entire uncompressed data set in memory, use the incremental access

features of the compression library instead of codecs.

    $ python codecs_zlib.py

    Original length : 1350
    ZIP compressed : 48
    Read first line : 'abcdefghijklmnopqrstuvwxyz\n'
    Uncompressed : 1350
    Same : True
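
For comparison, this is roughly what the incremental access features of the compression library look like when zlib is used directly. The 16-byte slice size is an arbitrary value picked for the sketch, and the code is written to run on both Python 2.7 and Python 3.

```python
from __future__ import print_function
import zlib

line = b'abcdefghijklmnopqrstuvwxyz\n'

# Compress 50 copies of the line, feeding one line at a time.
compressor = zlib.compressobj()
chunks = [compressor.compress(line) for _ in range(50)]
chunks.append(compressor.flush())
compressed = b''.join(chunks)

# Decompress in small slices, counting the output instead of keeping it,
# so only one piece of uncompressed data exists at a time.
decompressor = zlib.decompressobj()
total = 0
for start in range(0, len(compressed), 16):
    piece = decompressor.decompress(compressed[start:start + 16])
    total += len(piece)
total += len(decompressor.flush())

print('compressed bytes  :', len(compressed))
print('uncompressed bytes:', total)
```

The compressor and decompressor objects keep their own state between calls, so neither side needs the complete data set at once.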

Incremental Encoding

Some of the encodings provided, especially bz2 and zlib, may

dramatically change the length of the data stream as they work on it.

For large data sets, these encodings operate better incrementally,

working on one small chunk of data at a time. The

IncrementalEncoder and IncrementalDecoder API is

designed for this purpose.

    import codecs
    import sys

    from codecs_to_hex import to_hex

    text = 'abcdefghijklmnopqrstuvwxyz\n'
    repetitions = 50

    print 'Text length :', len(text)
    print 'Repetitions :', repetitions
    print 'Expected len:', len(text) * repetitions

    # Encode the text several times to build up a large amount of data
    encoder = codecs.getincrementalencoder('bz2')()
    encoded = []

    print
    print 'Encoding:',
    for i in range(repetitions):
        en_c = encoder.encode(text, final = (i==repetitions-1))
        if en_c:
            print '\nEncoded : {} bytes'.format(len(en_c))
            encoded.append(en_c)
        else:
            sys.stdout.write('.')

    bytes = ''.join(encoded)
    print
    print 'Total encoded length:', len(bytes)
    print

    # Decode the byte string one byte at a time
    decoder = codecs.getincrementaldecoder('bz2')()
    decoded = []

    print 'Decoding:',
    for i, b in enumerate(bytes):
        # final is True only for the last byte of the encoded data
        final = (i+1) == len(bytes)
        c = decoder.decode(b, final)
        if c:
            print '\nDecoded : {} characters'.format(len(c))
            print 'Decoding:',
            decoded.append(c)
        else:
            sys.stdout.write('.')
    print

    restored = u''.join(decoded)

    print
    print 'Total uncompressed length:', len(restored)

Each time data is passed to the encoder or decoder its internal state

is updated. When the state is consistent (as defined by the codec),

data is returned and the state resets. Until that point, calls to

encode() or decode() will not return any data. When the

last bit of data is passed in, the argument final should be set to

True so the codec knows to flush any remaining buffered data.

    $ python codecs_incremental_bz2.py

    Text length : 27
    Repetitions : 50
    Expected len: 1350

    Encoding:.................................................
    Encoded : 99 bytes

    Total encoded length: 99

    Decoding:............................................................
    ............................
    Decoded : 1350 characters
    Decoding:..........

    Total uncompressed length: 1350
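
The same buffering behavior is easy to see with a text codec. In this sketch, which is written to run on both Python 2.7 and Python 3, a UTF-8 incremental decoder is fed one byte at a time; it returns an empty string for the first byte of the two-byte π sequence and the complete character once the second byte arrives.

```python
from __future__ import print_function
import codecs

# The final character, U+03C0, takes two bytes in UTF-8.
encoded = u'pi: \u03c0'.encode('utf-8')

decoder = codecs.getincrementaldecoder('utf-8')()
pieces = []
for i in range(len(encoded)):
    # Slicing yields a one-byte string on both Python 2 and Python 3.
    chunk = encoded[i:i + 1]
    final = (i == len(encoded) - 1)
    pieces.append(decoder.decode(chunk, final))

restored = u''.join(pieces)
print([repr(p) for p in pieces])
print(repr(restored))
```

Passing `final=True` on the last call lets the decoder report an error if the input stops in the middle of a multi-byte sequence.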

Defining Your Own Encoding

Since Python comes with a large number of standard codecs already, it

is unlikely that you will need to define your own. If you do, there

are several base classes in codecs to make the process easier.

The first step is to understand the nature of the transformation

described by the encoding. For example, an “invertcaps” encoding

converts uppercase letters to lowercase, and lowercase letters to

uppercase. Here is a simple definition of an encoding function that

performs this transformation on an input string:

    import string

    def invertcaps(text):
        """Return new string with the case of all letters switched.
        """
        return ''.join( c.upper() if c in string.ascii_lowercase
                        else c.lower() if c in string.ascii_uppercase
                        else c
                        for c in text
                        )

    if __name__ == '__main__':
        print invertcaps('ABC.def')
        print invertcaps('abc.DEF')

In this case, the encoder and decoder are the same function (as with

ROT-13).

    $ python codecs_invertcaps.py

    abc.DEF
    ABC.def

Although it is easy to understand, this implementation is not

efficient, especially for very large text strings. Fortunately,

codecs includes some helper functions for creating character

map based codecs such as invertcaps. A character map encoding is

made up of two dictionaries. The encoding map converts character

values from the input string to byte values in the output and the

decoding map goes the other way. Create your decoding map first,

and then use make_encoding_map() to convert it to an encoding

map. The C functions charmap_encode() and

charmap_decode() use the maps to convert their input data

efficiently.

    import codecs
    import string

    # Map every character to itself
    decoding_map = codecs.make_identity_dict(range(256))

    # Make a list of pairs of ordinal values for the lower and upper case
    # letters
    pairs = zip([ ord(c) for c in string.ascii_lowercase],
                [ ord(c) for c in string.ascii_uppercase])

    # Modify the mapping to convert upper to lower and lower to upper.
    decoding_map.update( dict( (upper, lower) for (lower, upper) in pairs) )
    decoding_map.update( dict( (lower, upper) for (lower, upper) in pairs) )

    # Create a separate encoding map.
    encoding_map = codecs.make_encoding_map(decoding_map)

    if __name__ == '__main__':
        print codecs.charmap_encode('abc.DEF', 'strict', encoding_map)
        print codecs.charmap_decode('abc.DEF', 'strict', decoding_map)
        print encoding_map == decoding_map

Although the encoding and decoding maps for invertcaps are the same,

that may not always be the case. make_encoding_map() detects

situations where more than one input character is encoded to the same

output byte and replaces the encoding value with None to mark the

encoding as undefined.

    $ python codecs_invertcaps_charmap.py

    ('ABC.def', 7)
    (u'ABC.def', 7)
    True
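
The ambiguous case is easier to picture with a tiny map. The decoding map below is made up for this sketch: two different bytes decode to the same character, so `make_encoding_map()` cannot choose between them and marks that character as undefined. The code is written to run on both Python 2.7 and Python 3.

```python
from __future__ import print_function
import codecs

# Hypothetical decoding map: bytes 0x41 and 0x42 both decode to 'a' (0x61),
# while byte 0x43 decodes to 'c' (0x63).
decoding_map = {0x41: 0x61, 0x42: 0x61, 0x43: 0x63}

encoding_map = codecs.make_encoding_map(decoding_map)

# 0x61 has two possible source bytes, so its encoding is undefined (None).
# 0x63 maps back to 0x43 unambiguously.
print(encoding_map)
```

A codec built on such a map can still decode every byte, but trying to encode 'a' is handled by whichever error mode is in effect.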

The character map encoder and decoder support all of the standard

error handling methods described earlier, so you do not need to do any

extra work to comply with that part of the API.

    # -*- coding: utf-8 -*-
    import codecs
    from codecs_invertcaps_charmap import encoding_map

    text = u'pi: π'

    for error in [ 'ignore', 'replace', 'strict' ]:
        try:
            encoded = codecs.charmap_encode(text, error, encoding_map)
        except UnicodeEncodeError, err:
            encoded = str(err)
        print '{:7}: {}'.format(error, encoded)

Because the Unicode code point for π is not in the encoding map,

the strict error handling mode raises an exception.

    $ python codecs_invertcaps_error.py

    ignore : ('PI: ', 5)
    replace: ('PI: ?', 5)
    strict : 'charmap' codec can't encode character u'\u03c0' in position
    4: character maps to <undefined>

After the encoding and decoding maps are defined, you need to set

up a few additional classes and register the encoding.

register() adds a search function to the registry so that when a

user wants to use your encoding codecs can locate it. The

search function must take a single string argument with the name of

the encoding, and return a CodecInfo object if it knows the

encoding, or None if it does not.

    import codecs
    import encodings

    def search1(encoding):
        print 'search1: Searching for:', encoding
        return None

    def search2(encoding):
        print 'search2: Searching for:', encoding
        return None

    codecs.register(search1)
    codecs.register(search2)

    utf8 = codecs.lookup('utf-8')
    print 'UTF-8:', utf8

    try:
        unknown = codecs.lookup('no-such-encoding')
    except LookupError, err:
        print 'ERROR:', err

You can register multiple search functions, and each will be called in turn until one returns a CodecInfo or the list is exhausted. The internal search function registered by codecs knows how to load the standard codecs such as UTF-8 from encodings, so those names will never be passed to your search function.

$ python codecs_register.py

UTF-8: <codecs.CodecInfo object for encoding utf-8 at 0x100452ae0>

search1: Searching for: no-such-encoding

search2: Searching for: no-such-encoding

ERROR: unknown encoding: no-such-encoding
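A Python 3 sketch of the same lookup behavior follows. The search function and the list that records its calls are hypothetical names. Python 3 normalizes the encoding name before calling a search function, so the sketch looks up a name without hyphens to keep the recorded value predictable.

```python
import codecs

seen = []  # names that reach our search function

def search(encoding):
    """Hypothetical search function: record the name, then give up."""
    seen.append(encoding)
    return None

codecs.register(search)

# A standard codec is resolved before our function is consulted.
utf8 = codecs.lookup('utf-8')
print('UTF-8:', utf8.name)

try:
    codecs.lookup('nosuchencoding')
except LookupError as err:
    print('ERROR:', err)

print('seen:', seen)
```

Only the unknown name should appear in the recorded list, which matches the statement that standard names never reach a user-supplied search function.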

The CodecInfo instance returned by the search function tells codecs how to encode and decode using all of the different mechanisms supported: stateless, incremental, and stream.

codecs includes base classes that make setting up a character map encoding easy. This example puts all of the pieces together to register a search function that returns a CodecInfo instance configured for the invertcaps codec.

    import codecs

    from codecs_invertcaps_charmap import encoding_map, decoding_map

    # Stateless encoder/decoder
    class InvertCapsCodec(codecs.Codec):
        def encode(self, input, errors='strict'):
            return codecs.charmap_encode(input, errors, encoding_map)

        def decode(self, input, errors='strict'):
            return codecs.charmap_decode(input, errors, decoding_map)

    # Incremental forms
    class InvertCapsIncrementalEncoder(codecs.IncrementalEncoder):
        def encode(self, input, final=False):
            return codecs.charmap_encode(input, self.errors, encoding_map)[0]

    class InvertCapsIncrementalDecoder(codecs.IncrementalDecoder):
        def decode(self, input, final=False):
            return codecs.charmap_decode(input, self.errors, decoding_map)[0]

    # Stream reader and writer
    class InvertCapsStreamReader(InvertCapsCodec, codecs.StreamReader):
        pass

    class InvertCapsStreamWriter(InvertCapsCodec, codecs.StreamWriter):
        pass

    # Register the codec search function
    def find_invertcaps(encoding):
        """Return the codec for 'invertcaps'.
        """
        if encoding == 'invertcaps':
            return codecs.CodecInfo(
                name='invertcaps',
                encode=InvertCapsCodec().encode,
                decode=InvertCapsCodec().decode,
                incrementalencoder=InvertCapsIncrementalEncoder,
                incrementaldecoder=InvertCapsIncrementalDecoder,
                streamreader=InvertCapsStreamReader,
                streamwriter=InvertCapsStreamWriter,
                )
        return None

    codecs.register(find_invertcaps)

    if __name__ == '__main__':

        # Stateless encoder/decoder
        encoder = codecs.getencoder('invertcaps')
        text = 'abc.DEF'
        encoded_text, consumed = encoder(text)
        print 'Encoder converted "{}" to "{}", consuming {} characters'.format(
            text, encoded_text, consumed)

        # Stream writer
        import sys
        writer = codecs.getwriter('invertcaps')(sys.stdout)
        print 'StreamWriter for stdout: ',
        writer.write('abc.DEF')
        print

        # Incremental decoder
        decoder_factory = codecs.getincrementaldecoder('invertcaps')
        decoder = decoder_factory()
        decoded_text_parts = []
        for c in encoded_text:
            decoded_text_parts.append(decoder.decode(c, final=False))
        decoded_text_parts.append(decoder.decode('', final=True))
        decoded_text = ''.join(decoded_text_parts)
        print 'IncrementalDecoder converted "{}" to "{}"'.format(
            encoded_text, decoded_text)

The stateless encoder/decoder base class is Codec. Override encode() and decode() with your implementation (in this case, calling charmap_encode() and charmap_decode() respectively). Each method must return a tuple containing the transformed data and the number of the input bytes or characters consumed. Conveniently, charmap_encode() and charmap_decode() already return that information.

IncrementalEncoder and IncrementalDecoder serve as base classes for the incremental interfaces. The encode() and decode() methods of the incremental classes are defined in such a way that they only return the actual transformed data. Any information about buffering is maintained as internal state. The invertcaps encoding does not need to buffer data (it uses a one-to-one mapping). For encodings that produce a different amount of output depending on the data being processed, such as compression algorithms, BufferedIncrementalEncoder and BufferedIncrementalDecoder are more appropriate base classes, since they manage the unprocessed portion of the input for you.
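To illustrate how the buffered base classes keep unprocessed input, here is a minimal Python 3 sketch. The HexPairDecoder class is hypothetical and not part of invertcaps: it decodes pairs of ASCII hex digits and leaves a trailing odd digit in the buffer until more data arrives. The only method a subclass of BufferedIncrementalDecoder has to supply is _buffer_decode().

```python
import codecs

class HexPairDecoder(codecs.BufferedIncrementalDecoder):
    """Hypothetical decoder: turns pairs of ASCII hex digits into characters."""

    def _buffer_decode(self, input, errors, final):
        # Only complete pairs can be decoded. Report how many bytes were
        # used; the base class keeps the remainder for the next call.
        usable = len(input) - (len(input) % 2)
        raw = bytes.fromhex(input[:usable].decode('ascii'))
        return raw.decode('latin-1'), usable

decoder = HexPairDecoder()
# The first chunk ends in the middle of a pair, so '6' waits in the buffer.
parts = [decoder.decode(chunk) for chunk in (b'486', b'9')]
print(parts)
```

The caller never sees the leftover digit; it is joined with the next chunk inside the base class.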

StreamReader and StreamWriter need encode() and decode() methods, too, and since they are expected to return the same value as the version from Codec you can use multiple inheritance for the implementation.

$ python codecs_invertcaps_register.py

Encoder converted "abc.DEF" to "ABC.def", consuming 7 characters

StreamWriter for stdout: ABC.def

IncrementalDecoder converted "ABC.def" to "abc.DEF"
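The listing above targets Python 2. A condensed Python 3 sketch of the same registration is shown here, under a few assumptions: the maps are rebuilt inline, an in-memory io.BytesIO stands in for sys.stdout, and str.encode() and bytes.decode() are used to exercise the codec instead of getencoder().

```python
import codecs
import io
import string

decoding_map = codecs.make_identity_dict(range(256))
for lower, upper in zip(string.ascii_lowercase, string.ascii_uppercase):
    decoding_map[ord(lower)] = ord(upper)
    decoding_map[ord(upper)] = ord(lower)
encoding_map = codecs.make_encoding_map(decoding_map)

class InvertCapsCodec(codecs.Codec):
    def encode(self, input, errors='strict'):
        return codecs.charmap_encode(input, errors, encoding_map)

    def decode(self, input, errors='strict'):
        return codecs.charmap_decode(input, errors, decoding_map)

class InvertCapsIncrementalEncoder(codecs.IncrementalEncoder):
    def encode(self, input, final=False):
        return codecs.charmap_encode(input, self.errors, encoding_map)[0]

class InvertCapsIncrementalDecoder(codecs.IncrementalDecoder):
    def decode(self, input, final=False):
        return codecs.charmap_decode(input, self.errors, decoding_map)[0]

class InvertCapsStreamReader(InvertCapsCodec, codecs.StreamReader):
    pass

class InvertCapsStreamWriter(InvertCapsCodec, codecs.StreamWriter):
    pass

def find_invertcaps(encoding):
    """Return the CodecInfo for 'invertcaps', or None for other names."""
    if encoding == 'invertcaps':
        return codecs.CodecInfo(
            name='invertcaps',
            encode=InvertCapsCodec().encode,
            decode=InvertCapsCodec().decode,
            incrementalencoder=InvertCapsIncrementalEncoder,
            incrementaldecoder=InvertCapsIncrementalDecoder,
            streamreader=InvertCapsStreamReader,
            streamwriter=InvertCapsStreamWriter,
        )
    return None

codecs.register(find_invertcaps)

# Stateless: text -> bytes -> text
encoded = 'abc.DEF'.encode('invertcaps')
decoded = encoded.decode('invertcaps')

# Stream writer over an in-memory byte stream
buffer = io.BytesIO()
codecs.getwriter('invertcaps')(buffer).write('abc.DEF')
written = buffer.getvalue()

# Incremental decoder fed one byte at a time
decoder = codecs.getincrementaldecoder('invertcaps')()
incremental = ''.join(decoder.decode(encoded[i:i + 1])
                      for i in range(len(encoded)))

print(encoded, decoded, written, incremental)
```

The main difference from the Python 2 version is the split between text and bytes: encoding produces bytes, and the incremental decoder is fed one-byte slices rather than single characters.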

Introduction to Python Unicode Error

In Python, a Unicode string represents text as a sequence of code points, which lets a program work with characters from any writing system. Strings such as directory paths or link addresses are often passed as arguments to functions, and some of them trigger what is commonly called a Unicode error. A typical case on Windows is a path such as "C:\Users\...": in a Python 3 string literal (or a Python 2 Unicode literal) a backslash followed by "U" or "u" starts a Unicode escape sequence, so the characters after it must be hex digits, and Python reports an error when they are not.
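The Windows path problem can be reproduced safely in Python 3 by compiling the offending line from a string, as in this sketch. The path is a made-up example, and compile() is used only so that the syntax error can be caught at run time.

```python
# Source text of a one-line program. Inside this string the backslashes are
# doubled, so the program text itself contains single backslashes.
bad_source = 'path = "C:\\Users\\name\\data.txt"'

try:
    compile(bad_source, '<example>', 'exec')
    error = None
except SyntaxError as err:
    error = err
print('error:', error)

# Two common fixes: a raw string literal, or doubled backslashes.
raw_path = r"C:\Users\name\data.txt"
escaped_path = "C:\\Users\\name\\data.txt"
print(raw_path == escaped_path)
```

Forward slashes are another option for many Windows APIs, though that is outside what this article covers.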

Working of Unicode Error in Python with Examples

The Unicode standard represents every character as a code point. This avoids ambiguity between characters that could otherwise be confused and lead to errors. For example, "I" can be the Roman numeral one (U+2160) or the capital letter "i" (U+0049); they look the same, but they are two different characters with different meanings, and Unicode gives each its own code point.

Python has two main kinds of Unicode error: UnicodeEncodeError and UnicodeDecodeError. It also has the concept of Unicode error handlers, which are invoked whenever a problem occurs while encoding or decoding a string or other text. To write a Unicode string in a Python 2 program, put the prefix u before the string literal, which makes the value a Unicode-type object. In Python 3 every string literal is already Unicode and the prefix is optional.

Syntax:

Unicode strings in a Python program can be written as follows:

u"dfskgfkdsg"

Or

U"sakjhdxhj"

Or

u"\u1232hgdsa"

The syntax above shows three ways of writing Unicode strings. The first two use the literal prefix "u" or "U" followed by a quoted string of letters and digits. The third uses the "\u" escape sequence, which takes exactly four hex digits and inserts the character with that code point. A prefixed literal can contain any letters or digits directly. To give a character by its hex value, use the "\x" escape sequence, which takes two hex digits; an octal escape takes up to three octal digits, with "\777" as the largest value.
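As a quick check of the escape forms, the Python 3 sketch below writes the same character in several ways. The "\N{...}" named escape is an addition not mentioned in the article, and the variable names are chosen only for illustration.

```python
# Four spellings of the same one-character string (code point U+00E9).
by_hex = '\xe9'                                   # two hex digits
by_u = '\u00e9'                                   # four hex digits
by_name = '\N{LATIN SMALL LETTER E WITH ACUTE}'   # Unicode character name
by_octal = '\351'                                 # up to three octal digits

print(by_hex == by_u == by_name == by_octal)

# In Python 3 the u/U prefixes are accepted but change nothing.
print(u'abc' == U'abc' == 'abc')

# The escape from the third syntax example yields code point 0x1232.
print(hex(ord('\u1232hgdsa'[0])))
```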

Example #1

Now let us see an example below for declaring Unicode characters in the program.

Code:

#!/usr/bin/env python

# -*- coding: latin-1 -*-

a = u'dfsf\xac\u1234'

print("The value of the above unicode literal is as follows:")

print(ord(a[-1]))

Output:

The value of the above unicode literal is as follows:

4660

The program above shows a Unicode literal. Its first two lines are the interpreter line and the source-encoding declaration, which tells Python how to decode the source file. The declaration matters because the default source encoding differs between versions: ASCII in Python 2 and UTF-8 in Python 3.

Next come the two Unicode errors, UnicodeEncodeError and UnicodeDecodeError. What happens when encoding or decoding fails is decided by a Unicode error handler, which is invoked automatically whenever such a failure is encountered. Three error handlers are used most often: strict, ignore, and replace.

The strict handler, which is the default, raises UnicodeEncodeError for encoding errors and UnicodeDecodeError for decoding errors. The ignore handler skips the data that cannot be converted, and the replace handler substitutes a replacement marker for it.
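The three handlers can be compared side by side. This is a minimal Python 3 sketch using the ASCII codec on a string that contains one non-ASCII character; the variable names are illustrative only.

```python
text = 'caf\u00e9'            # the word "café"
data = text.encode('utf-8')   # two bytes are used for the last character

for handler in ('strict', 'ignore', 'replace'):
    try:
        encoded = text.encode('ascii', errors=handler)
    except UnicodeEncodeError as err:
        encoded = 'UnicodeEncodeError: ' + err.reason
    try:
        decoded = data.decode('ascii', errors=handler)
    except UnicodeDecodeError as err:
        decoded = 'UnicodeDecodeError: ' + err.reason
    print(handler, repr(encoded), repr(decoded))
```

With replace, encoding substitutes "?" while decoding substitutes U+FFFD, the Unicode replacement character, once per undecodable byte.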

Example #2

A demonstration of UnicodeEncodeError.

In Python 2, str() converts a Unicode string with the default ASCII codec. That codec cannot represent non-ASCII characters, so the call raises an encoding error.

Code:

str(u'éducba')

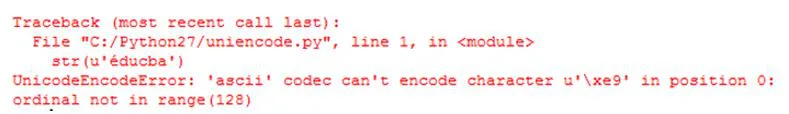

Output:

In the program above we passed a Unicode string to str(). Since no encoding was specified, str() falls back to the default encoding, ASCII. ASCII is a 7-bit encoding and cannot represent characters outside the range 0 to 127, so the call fails with the error shown in the screenshot above.

The program can be fixed by encoding the Unicode string explicitly, for example with .encode('utf8'), before passing it to str().

Example #3

In this program the Unicode string is encoded explicitly before str() is called, so str() receives a byte string and no longer raises UnicodeEncodeError.

Code:

a = u'café'

b = a.encode('utf8')

r = str(b)

print("The unicode string after fixing the UnicodeEncodeError is as follows:")

print(r)

Output:

The example above shows how to avoid UnicodeEncodeError by calling .encode('utf8') on the Unicode string explicitly.

Example #4

Now let us look at UnicodeDecodeError and how to avoid it.

Code:

a = u'éducba'

b = a.encode('utf8')

unicode(b)

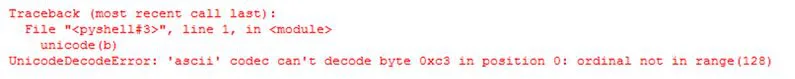

Output:

In the program above we first encode the Unicode string to UTF-8 bytes and then try to convert those bytes back to Unicode with unicode(). Because unicode() is called without an encoding, it decodes with the default ASCII codec, which fails on the non-ASCII bytes and raises UnicodeDecodeError. To avoid this error, decode the byte string b explicitly.

The fix is the statement below, which the screenshot above also shows.

b.decode('utf8')
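Python 3 has no unicode() function, but the same mistake can be made by decoding with the wrong codec. The sketch below is a hedged Python 3 analogue of the example, not the article's original code.

```python
a = '\u00e9ducba'        # the string "éducba"
b = a.encode('utf8')     # explicit encoding: text -> bytes

try:
    b.decode('ascii')    # wrong codec for these bytes
    failure = None
except UnicodeDecodeError as err:
    failure = err
print('failure:', failure)

restored = b.decode('utf8')  # same codec as the encoding step
print(restored == a)
```

The general rule is to decode with the same encoding that produced the bytes.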

Conclusion

In this article we saw that Unicode literals are a string type that can represent text in any writing system. We looked at UnicodeEncodeError and UnicodeDecodeError, which are raised when a string cannot be encoded or decoded with the chosen codec, along with examples. We also saw how to fix these errors by calling encode() or decode() with an explicit encoding.


Python's encode and decode methods are used to encode and decode an input string using a given encoding. Let's look at these two functions in detail.

We call the encode() method on the input string; every string object has it.

Format:

input_string.encode(encoding, errors)

This encodes input_string using encoding, where errors determines the behavior to follow if encoding the string fails for any reason.

encode() returns a sequence of bytes.

inp_string = 'Hello'

bytes_encoded = inp_string.encode()

print(type(bytes_encoded))

As expected, the result is an object of <class 'bytes'>:

<class 'bytes'>

The encoding scheme to use is given by the encoding parameter. There are many character encoding schemes; Python uses UTF-8 by default.

Let's look at the encoding parameter with an example.

a = 'This is a simple sentence.'

print('Original string:', a)

# Encodes to UTF-8 by default

a_utf = a.encode()

print('Encoded string:', a_utf)

Output

Original string: This is a simple sentence.

Encoded string: b'This is a simple sentence.'

As you can see, we encoded the input string as UTF-8. Although there is little visible difference, note that the string now has the prefix b. This means the string has been converted to a sequence of bytes.
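With plain ASCII text the encoded bytes look almost the same as the original string. The difference becomes visible with non-ASCII text, as in this small sketch. The sample word and the cp1251 code page are choices made here for illustration.

```python
text = 'Привет'  # six Cyrillic letters

utf8_bytes = text.encode()            # UTF-8 is the default
cp1251_bytes = text.encode('cp1251')  # a single-byte Cyrillic code page

# Character count versus byte counts in the two encodings.
print(len(text), len(utf8_bytes), len(cp1251_bytes))

# Decoding with the matching encoding restores the original string.
print(utf8_bytes.decode() == text)
print(cp1251_bytes.decode('cp1251') == text)
```

Each Cyrillic letter takes two bytes in UTF-8 but one byte in cp1251, so the same text produces byte sequences of different lengths.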