- The urllib Module in Python
- Check robots.txt to Prevent urllib HTTP Error 403 Forbidden Message
- Adding Cookie to the Request Headers to Solve urllib HTTP Error 403 Forbidden Message
- Use Session Object to Solve urllib HTTP Error 403 Forbidden Message

Today’s article explains how to deal with the exception urllib.error.HTTPError: HTTP Error 403: Forbidden. The urllib.error module defines this exception, and urllib.request raises it when a request hits a forbidden resource.

The urllib Module in Python

The Python urllib package handles URLs over different protocols. It is popular with web scrapers who want to obtain data from a particular website.

The urllib package contains classes and functions for tasks such as opening and reading URLs, parsing URLs, and parsing robots.txt files. It consists of four modules: urllib.request, urllib.error, urllib.parse, and urllib.robotparser.
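The following offline sketch touches each of the four modules once to make them concrete. The URLs and the `MyBot` user-agent name are only illustrative, and no request is sent to any server.

```python
import urllib.error
import urllib.parse
import urllib.request
import urllib.robotparser

# urllib.parse: split a URL into its components (no network access)
parts = urllib.parse.urlparse("https://www.youtube.com/results?search_query=python")
print(parts.netloc, parts.path, parts.query)  # www.youtube.com /results search_query=python

# urllib.request: build a request object; nothing is sent until urlopen() is called
req = urllib.request.Request("https://example.com", headers={"User-Agent": "Mozilla/5.0"})
print(req.get_method(), req.full_url)  # GET https://example.com

# urllib.error: HTTPError (raised for 4xx/5xx responses) is a subclass of URLError
print(issubclass(urllib.error.HTTPError, urllib.error.URLError))  # True

# urllib.robotparser: parse robots.txt rules supplied as a list of lines
rp = urllib.robotparser.RobotFileParser()
rp.parse(["User-agent: *", "Disallow: /private"])
print(rp.can_fetch("MyBot", "https://example.com/private/page"))  # False
```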

Check robots.txt to Prevent urllib HTTP Error 403 Forbidden Message

When we use urllib.request to communicate with a server, we might run into specific errors. One of those errors is the HTTP 403 error.

urllib raises urllib.error.HTTPError: HTTP Error 403: Forbidden while reading a URL when the server answers with status 403. HTTP 403 Forbidden is a status code indicating that the server refuses access to the requested resource.

Therefore, this error message means that the server understood the request but decided not to process or authorize it.
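In code, this error is an exception that we can catch. The sketch below wraps urlopen in a hypothetical `fetch_or_report` helper and reads `HTTPError.code` and `HTTPError.reason` in a hypothetical `explain` function. To show those attributes without contacting a real server, the demo at the end constructs the same exception by hand.

```python
import urllib.error
import urllib.request


def explain(err):
    """Turn an HTTPError into a short message; 403 gets a specific hint."""
    if err.code == 403:
        return f"{err.code} {err.reason}: the server understood the request but refuses it"
    return f"{err.code} {err.reason}"


def fetch_or_report(url, headers=None, timeout=10):
    """Hypothetical helper: return the body, or None if the server answers with an HTTP error."""
    req = urllib.request.Request(url, headers=headers or {})
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.read()
    except urllib.error.HTTPError as err:
        # err.code is the numeric status, err.reason the status text
        print(explain(err))
        return None


# Offline demo: build the same exception urlopen() raises for a 403 response
err = urllib.error.HTTPError("https://example.com", 403, "Forbidden", None, None)
print(err)           # HTTP Error 403: Forbidden
print(explain(err))  # 403 Forbidden: the server understood the request but refuses it
```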

To understand why the website is not processing our request, we should check an important file: robots.txt. Before scraping or otherwise interacting with a website, it is advisable to review this file so that we know what to expect and can avoid further trouble.

To check it on any website, we can follow the format below.

https://<website.com>/robots.txt

For example, check YouTube, Amazon, and Google robots.txt files.

https://www.youtube.com/robots.txt

https://www.amazon.com/robots.txt

https://www.google.com/robots.txt
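robots.txt always sits at the root of a host, so we can derive its address from any page URL. Here is a minimal sketch using urllib.parse. The name `robots_url` is a hypothetical helper, not a urllib function.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url):
    """Return the robots.txt URL for the host that serves page_url.

    Path, query string and fragment of page_url are discarded, because
    robots.txt is always looked up at the root of the host.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.cmegroup.com/markets/products.html?redirect=/trading/products/"))
# https://www.cmegroup.com/robots.txt
```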

Checking YouTube's robots.txt gives the following result.

# robots.txt file for YouTube

# Created in the distant future (the year 2000) after

# the robotic uprising of the mid-'90s wiped out all humans.

User-agent: Mediapartners-Google*

Disallow:

User-agent: *

Disallow: /channel/*/community

Disallow: /comment

Disallow: /get_video

Disallow: /get_video_info

Disallow: /get_midroll_info

Disallow: /live_chat

Disallow: /login

Disallow: /results

Disallow: /signup

Disallow: /t/terms

Disallow: /timedtext_video

Disallow: /user/*/community

Disallow: /verify_age

Disallow: /watch_ajax

Disallow: /watch_fragments_ajax

Disallow: /watch_popup

Disallow: /watch_queue_ajax

Sitemap: https://www.youtube.com/sitemaps/sitemap.xml

Sitemap: https://www.youtube.com/product/sitemap.xml

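The file contains many Disallow rules. Each Disallow rule names a path that the listed user agents are asked not to crawl. robots.txt itself is a convention that well-behaved crawlers follow. Servers may also enforce it, in which case requests to those areas are refused, for example with 403 Forbidden.

Other robots.txt files may also contain Allow rules, which explicitly permit paths. In the file above, for example, https://www.youtube.com/comment is disallowed for every user agent (User-agent: *), including requests made with the urllib module.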
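We can check such rules programmatically with urllib.robotparser. The sketch below parses a subset of the YouTube file shown above from a string, so it runs offline. It leaves out the rules that contain `*` inside a path. Python's RobotFileParser matches rule paths as plain prefixes and does not expand wildcards, so those rules would not behave as intended. `MyScraper/1.0` is a made-up user agent.

```python
from urllib.robotparser import RobotFileParser

# A subset of the YouTube rules above (wildcard paths omitted on purpose)
ROBOTS_TXT = """\
User-agent: Mediapartners-Google*
Disallow:

User-agent: *
Disallow: /comment
Disallow: /login
Disallow: /results
Disallow: /watch_popup
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

for url in ("https://www.youtube.com/comment",
            "https://www.youtube.com/results?search_query=urllib",
            "https://www.youtube.com/watch?v=abc"):
    verdict = "allowed" if rp.can_fetch("MyScraper/1.0", url) else "disallowed"
    print(url, "->", verdict)
```

A user agent that matches no named group falls back to the `User-agent: *` group. That is why `/comment` and `/results` are disallowed for `MyScraper/1.0`, while `/watch` is not covered by any rule.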

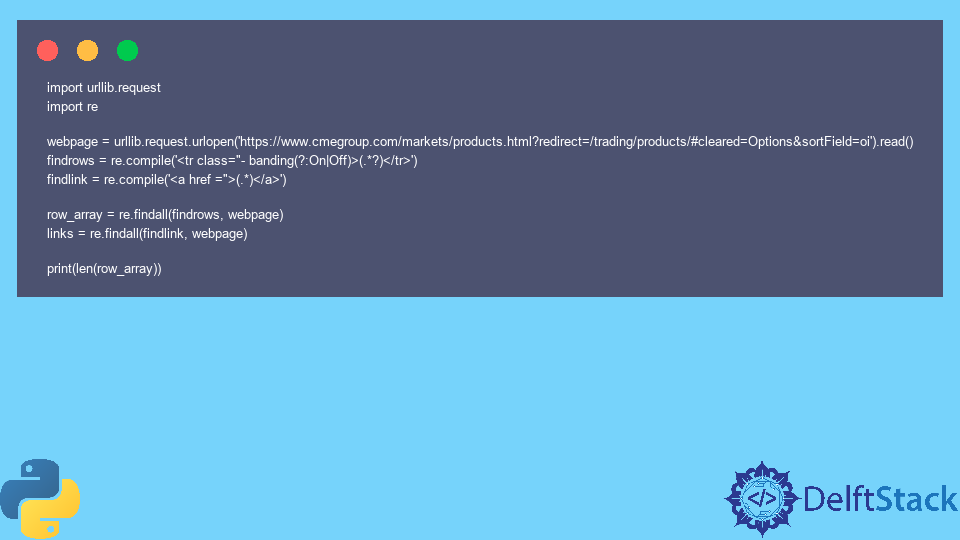

Let’s write code to scrape data from a website that returns an HTTP 403 error when accessed.

Example Code:

import re
import urllib.request

webpage = urllib.request.urlopen('https://www.cmegroup.com/markets/products.html?redirect=/trading/products/#cleared=Options&sortField=oi').read()

# read() returns bytes, so decode before matching str patterns
html = webpage.decode('utf-8', errors='replace')

findrows = re.compile(r'<tr class="- banding(?:On|Off)">(.*?)</tr>')
findlink = re.compile(r'<a href="(.*?)">(.*?)</a>')

row_array = re.findall(findrows, html)
links = re.findall(findlink, html)

print(len(row_array))

Output:

Traceback (most recent call last):
  File "c:\Users\akinl\Documents\Python\index.py", line 7, in <module>
    webpage = urllib.request.urlopen('https://www.cmegroup.com/markets/products.html?redirect=/trading/products/#cleared=Options&sortField=oi').read()
  File "C:\Python310\lib\urllib\request.py", line 216, in urlopen
    return opener.open(url, data, timeout)
  File "C:\Python310\lib\urllib\request.py", line 525, in open
    response = meth(req, response)
  File "C:\Python310\lib\urllib\request.py", line 634, in http_response
    response = self.parent.error(
  File "C:\Python310\lib\urllib\request.py", line 563, in error
    return self._call_chain(*args)
  File "C:\Python310\lib\urllib\request.py", line 496, in _call_chain
    result = func(*args)
  File "C:\Python310\lib\urllib\request.py", line 643, in http_error_default
    raise HTTPError(req.full_url, code, msg, hdrs, fp)
urllib.error.HTTPError: HTTP Error 403: Forbidden

We get this error because the server forbids us from accessing the website. The site's robots.txt file has no Disallow rule covering https://www.cmegroup.com/markets/. Further down the same file, however, we find the following:

User-agent: Python-urllib/1.17

Disallow: /

This rule means that the user agent named Python-urllib may not crawl any URL on the site. urllib identifies itself as Python-urllib by default, so crawling the site with the Python urllib module is not allowed.

Therefore, we should check or parse robots.txt to know which resources we may access. The RobotFileParser class in the urllib.robotparser module can parse a robots.txt file. Doing so can keep our code from running into the urllib.error.HTTPError: HTTP Error 403: Forbidden error.
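Here is a minimal sketch of checking robots.txt before each request. It assumes a made-up crawler name, `MyScraper/1.0`. `load_robots` and `polite_get` are hypothetical helpers, not part of urllib. `load_robots` needs network access, so the demo at the end parses a rule set from a list of strings instead.

```python
import urllib.request
from urllib.robotparser import RobotFileParser

USER_AGENT = "MyScraper/1.0"  # hypothetical crawler name


def load_robots(site_root):
    """Download and parse <site_root>/robots.txt (needs network access)."""
    rp = RobotFileParser()
    rp.set_url(site_root.rstrip("/") + "/robots.txt")
    rp.read()
    return rp


def polite_get(url, rp, user_agent=USER_AGENT, timeout=10):
    """Fetch url only if the parsed robots.txt allows it for user_agent."""
    if not rp.can_fetch(user_agent, url):
        raise PermissionError(f"robots.txt disallows {url} for {user_agent}")
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read()


# Offline demo: a rule set that blocks our crawler everywhere, parsed from strings
demo_rp = RobotFileParser()
demo_rp.parse(["User-agent: MyScraper", "Disallow: /"])
print(demo_rp.can_fetch(USER_AGENT, "https://www.cmegroup.com/markets/"))  # False
```

Against a live site, we would call `rp = load_robots("https://www.cmegroup.com")` and then `polite_get(url, rp)`. Note that `RobotFileParser.read()` fetches robots.txt with urllib itself. If that fetch gets a 401 or 403 answer, the parser treats the whole site as disallowed.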

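Adding Cookie to the Request Headers to Solve urllib HTTP Error 403 Forbidden Message

Passing a valid user agent as a header parameter often fixes the problem quickly. However, the website may also use cookies as an anti-scraping measure. It may set cookies, expect them to be echoed back on later requests, and reject requests that do not return them. This helps it block scraping that may be against its policy.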

from urllib.request import Request, urlopen


def get_page_content(url, head):
    # Attach the browser-like headers (including the cookie) to the request
    req = Request(url, headers=head)
    return urlopen(req)


url = 'https://example.com'

head = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/99.0.4844.84 Safari/537.36',
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    'Accept-Charset': 'ISO-8859-1,utf-8;q=0.7,*;q=0.3',
    # urllib does not decompress responses, so ask for an uncompressed body
    'Accept-Encoding': 'identity',
    'Accept-Language': 'en-US,en;q=0.8',
    'Connection': 'keep-alive',
    'Referer': 'https://example.com',
    # Replace with the cookie string your browser sends to this site
    'Cookie': 'your cookie value (you can get it from your browser)',
}

data = get_page_content(url, head).read()
print(data)

Output:

b'<!doctype html>\n<html>\n<head>\n <title>Example Domain</title>\n\n <meta
...
<p><a href="https://www.iana.org/domains/example">More information...</a></p>\n</div>\n</body>\n</html>\n'
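Instead of copying the cookie from the browser by hand, urllib can store and echo cookies itself through `http.cookiejar.CookieJar` and `urllib.request.HTTPCookieProcessor`. This sketch runs against a toy local server, an assumption standing in for a real site. The server sets a cookie on `/start` and answers 403 to any other request that does not send that cookie back. Real sites may need more than this. For example, urllib cannot obtain cookies that are set by JavaScript.

```python
import http.cookiejar
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class CookieGate(BaseHTTPRequestHandler):
    """Toy server: /start sets a cookie; other paths return 403 unless it is echoed back."""

    def do_GET(self):
        if self.path == "/start":
            self.send_response(200)
            self.send_header("Set-Cookie", "session=abc123; Path=/")
            self.end_headers()
            self.wfile.write(b"cookie set")
        elif "session=abc123" in self.headers.get("Cookie", ""):
            self.send_response(200)
            self.end_headers()
            self.wfile.write(b"welcome back")
        else:
            self.send_error(403)

    def log_message(self, *args):
        pass  # silence the default request logging


server = HTTPServer(("127.0.0.1", 0), CookieGate)
threading.Thread(target=server.serve_forever, daemon=True).start()
base = f"http://127.0.0.1:{server.server_port}"

# CookieJar stores cookies from Set-Cookie headers; HTTPCookieProcessor adds
# the matching ones to every later request made through this opener.
jar = http.cookiejar.CookieJar()
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))

first = opener.open(base + "/start", timeout=5).read()
second = opener.open(base + "/data", timeout=5).read()
print(first, second)

server.shutdown()
server.server_close()
```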


Use Session Object to Solve urllib HTTP Error 403 Forbidden Message

Sometimes, even a browser-like user agent won't stop this error. In that case, we can use the Session object of the third-party requests library, which persists cookies across all requests made through the same session.

import requests

url = "https://stackoverflow.com/search?q=html+error+403"

# A Session keeps cookies between requests and reuses the underlying connection
session_obj = requests.Session()
response = session_obj.get(url, headers={"User-Agent": "Mozilla/5.0"})

print(response.status_code)

Output:

This article explained the cause of urllib.error.HTTPError: HTTP Error 403: Forbidden and several ways to handle it. The error is usually caused by mod_security or similar server-side security mechanisms, which websites use to tell human visitors apart from automated clients (bots).

Summary

TL;DR: requests gets a 403 when requesting an authenticated GitHub API route, while the same request succeeds with curl or another Python library such as httpx.

This was initially discovered in the ‘ghexport’ project. I did a reasonable amount of debugging and created this repo before submitting this issue to PyGithub. That's a lot to look through, so I'm just leaving it here as context.

It has been hard to reproduce. The creator of ghexport (where this was initially discovered) didn't have the same issue, so I'm unsure of the exact reason.

Expected Result

requests succeeds for the authenticated request

Actual Result

Request fails, with:

{'message': 'Must have push access to repository', 'documentation_url': 'https://docs.github.com/rest/reference/repos#get-repository-clones'}

failed

Traceback (most recent call last):

File "/home/sean/Repos/pygithub_requests_error/minimal.py", line 47, in <module>

main()

File "/home/sean/Repos/pygithub_requests_error/minimal.py", line 44, in main

make_request(requests.get, url, headers)

File "/home/sean/Repos/pygithub_requests_error/minimal.py", line 31, in make_request

resp.raise_for_status()

File "/home/sean/.local/lib/python3.9/site-packages/requests/models.py", line 941, in raise_for_status

raise HTTPError(http_error_msg, response=self)

requests.exceptions.HTTPError: 403 Client Error: Forbidden for url: https://api.github.com/repos/seanbreckenridge/albums/traffic/clones

Reproduction Steps

Apologies if this is a bit too specific. Otherwise requests works great on my system, and I can't find any other way to reproduce this. The reproduction is a bit long, as it requires an auth token.

Go here and create a token with scopes like:

I’ve compared this to httpx, where it doesn’t fail:

#!/usr/bin/env python3

from typing import Callable, Any

import requests
import httpx


# extract status/status_code from the requests/httpx item
def extract_status(obj: Any) -> int:
    if hasattr(obj, "status"):
        return obj.status
    if hasattr(obj, "status_code"):
        return obj.status_code
    raise TypeError("unsupported request object")


def make_request(using_verb: Callable[..., Any], url: str, headers: Any) -> None:
    print("using", using_verb.__module__, using_verb.__qualname__, url)
    resp = using_verb(url, headers=headers)
    status = extract_status(resp)
    print(str(resp.json()))
    if status == 200:
        print("succeeded")
    else:
        print("failed")
        resp.raise_for_status()


def main():
    # see https://github.com/seanbreckenridge/pygithub_requests_error for token scopes
    auth_token = "put your auth token here"
    headers = {
        "Authorization": "token {}".format(auth_token),
        "User-Agent": "requests_error",
        "Accept": "application/vnd.github.v3+json",
    }
    # replace this with a URL you have access to
    url = "https://api.github.com/repos/seanbreckenridge/albums/traffic/clones"
    make_request(httpx.get, url, headers)
    make_request(requests.get, url, headers)


if __name__ == "__main__":
    main()

That outputs:

using httpx get https://api.github.com/repos/seanbreckenridge/albums/traffic/clones

{'count': 15, 'uniques': 10, 'clones': [{'timestamp': '2021-04-12T00:00:00Z', 'count': 1, 'uniques': 1}, {'timestamp': '2021-04-14T00:00:00Z', 'count': 1, 'uniques': 1}, {'timestamp': '2021-04-17T00:00:00Z', 'count': 1, 'uniques': 1}, {'timestamp': '2021-04-18T00:00:00Z', 'count': 2, 'uniques': 2}, {'timestamp': '2021-04-23T00:00:00Z', 'count': 9, 'uniques': 5}, {'timestamp': '2021-04-25T00:00:00Z', 'count': 1, 'uniques': 1}]}

succeeded

using requests.api get https://api.github.com/repos/seanbreckenridge/albums/traffic/clones

{'message': 'Must have push access to repository', 'documentation_url': 'https://docs.github.com/rest/reference/repos#get-repository-clones'}

failed

Traceback (most recent call last):

File "/home/sean/Repos/pygithub_requests_error/minimal.py", line 48, in <module>

main()

File "/home/sean/Repos/pygithub_requests_error/minimal.py", line 45, in main

make_request(requests.get, url, headers)

File "/home/sean/Repos/pygithub_requests_error/minimal.py", line 32, in make_request

resp.raise_for_status()

File "/home/sean/.local/lib/python3.9/site-packages/requests/models.py", line 943, in raise_for_status

raise HTTPError(http_error_msg, response=self)

requests.exceptions.HTTPError: 403 Client Error: Forbidden for url: https://api.github.com/repos/seanbreckenridge/albums/traffic/clones

Another thing that may be useful as context is the pdb trace below. In it, I stepped into where PyGithub makes the request and then made the requests manually using the computed URL and headers. It fails when I use requests.get, but httpx.get works fine:

> /home/sean/.local/lib/python3.8/site-packages/github/Requester.py(484)__requestEncode()

-> self.NEW_DEBUG_FRAME(requestHeaders)

(Pdb) n

> /home/sean/.local/lib/python3.8/site-packages/github/Requester.py(486)__requestEncode()

-> status, responseHeaders, output = self.__requestRaw(

(Pdb) w

/home/sean/Repos/ghexport/export.py(109)<module>()

-> main()

/home/sean/Repos/ghexport/export.py(84)main()

-> j = get_json(**params)

/home/sean/Repos/ghexport/export.py(74)get_json()

-> return Exporter(**params).export_json()

/home/sean/Repos/ghexport/export.py(60)export_json()

-> repo._requester.requestJsonAndCheck('GET', repo.url + '/traffic/' + f)

/home/sean/.local/lib/python3.8/site-packages/github/Requester.py(318)requestJsonAndCheck()

-> *self.requestJson(

/home/sean/.local/lib/python3.8/site-packages/github/Requester.py(410)requestJson()

-> return self.__requestEncode(cnx, verb, url, parameters, headers, input, encode)

> /home/sean/.local/lib/python3.8/site-packages/github/Requester.py(486)__requestEncode()

-> status, responseHeaders, output = self.__requestRaw(

(Pdb) url

'/repos/seanbreckenridge/advent-of-code-2019/traffic/views'

(Pdb) requestHeaders

{'Authorization': 'token <MY TOKEN HERE>', 'User-Agent': 'PyGithub/Python'}

(Pdb) import requests

(Pdb) requests.get("https://api.github.com" + url, headers=requestHeaders).json()

{'message': 'Must have push access to repository', 'documentation_url': 'https://docs.github.com/rest/reference/repos#get-page-views'}

(Pdb) httpx.get("https://api.github.com" + url, headers=requestHeaders).json()

{'count': 0, 'uniques': 0, 'views': []}

(Pdb) httpx succeeded??

System Information

$ python -m requests.help

{
  "chardet": {
    "version": "3.0.4"
  },
  "cryptography": {
    "version": "3.4.7"
  },
  "idna": {
    "version": "2.10"
  },
  "implementation": {
    "name": "CPython",
    "version": "3.9.3"
  },
  "platform": {
    "release": "5.11.16-arch1-1",
    "system": "Linux"
  },
  "pyOpenSSL": {
    "openssl_version": "101010bf",
    "version": "20.0.1"
  },
  "requests": {
    "version": "2.25.1"
  },
  "system_ssl": {
    "version": "101010bf"
  },
  "urllib3": {
    "version": "1.25.9"
  },
  "using_pyopenssl": true
}

$ pip freeze | grep -E 'requests|httpx'

httpx==0.16.1

requests==2.25.1

The urllib.error.HTTPError: HTTP Error 403: Forbidden error occurs when you try to scrape a webpage using the urllib.request module and mod_security blocks the request. There are several reasons why you might get this error. Let’s look at each case in detail.

Websites are usually protected by application gateways, WAF (web application firewall) rules, and similar tools. These tools monitor whether requests come from real users or are triggered by automated bots. mod_security or the WAF rules block requests they identify as spider/bot requests. These are standard security features for protecting servers, for example against DDoS attacks.

Coming back to the error: when you make a request with urllib.request without setting any headers, urllib sends a default User-Agent of the form Python-urllib/<Python version> (for example, Python-urllib/3.9). mod_security easily detects this header.
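We can confirm which User-Agent urllib sends by default. In CPython, `build_opener()` returns an `OpenerDirector` whose `addheaders` list holds that header. urlopen uses such an opener unless another one has been installed with `install_opener()`. A quick offline check:

```python
import sys
import urllib.request

opener = urllib.request.build_opener()
default_headers = dict(opener.addheaders)
print(default_headers)  # something like {'User-agent': 'Python-urllib/3.12'}

# The version part is the running Python's major.minor version
expected = "Python-urllib/%d.%d" % sys.version_info[:2]
print(default_headers["User-agent"] == expected)  # True
```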

mod_security is usually configured to block any request that lacks a valid (browser-like) User-Agent header. The server then responds with 403, which urllib raises as urllib.error.HTTPError: HTTP Error 403: Forbidden.
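The sketch below shows the mechanism end to end without hitting a real site. It starts a toy local HTTP server that rejects any request whose User-Agent is missing or starts with `Python-urllib`. This filter is an assumption for demonstration only. Real mod_security rule sets are far more elaborate.

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class UserAgentFilter(BaseHTTPRequestHandler):
    """Toy stand-in for a WAF rule: reject urllib's default User-Agent."""

    def do_GET(self):
        agent = self.headers.get("User-Agent", "")
        if not agent or agent.startswith("Python-urllib"):
            self.send_error(403)
            return
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.end_headers()
        self.wfile.write(b"hello, browser")

    def log_message(self, *args):
        pass  # silence the default request logging


server = HTTPServer(("127.0.0.1", 0), UserAgentFilter)
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_port}/"

blocked_status = None
try:
    urllib.request.urlopen(url, timeout=5)  # sends the default Python-urllib User-Agent
except urllib.error.HTTPError as err:
    blocked_status = err.code
    print("default User-Agent ->", err)  # default User-Agent -> HTTP Error 403: Forbidden

req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
with urllib.request.urlopen(req, timeout=5) as resp:
    body = resp.read()
print("browser-like User-Agent ->", body)  # browser-like User-Agent -> b'hello, browser'

server.shutdown()
server.server_close()
```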

Example of 403 forbidden error

from urllib.request import Request, urlopen

req = Request('http://www.cmegroup.com/')

webpage = urlopen(req).read()

Output:

File "C:\Users\user\AppData\Local\Programs\Python\Python39\lib\urllib\request.py", line 494, in _call_chain
    result = func(*args)
urllib.error.HTTPError: HTTP Error 403: Forbidden

The easy way to resolve the error is by passing a valid User-Agent as a header parameter, as shown below.

from urllib.request import Request, urlopen

req = Request('https://www.yahoo.com', headers={'User-Agent': 'Mozilla/5.0'})

webpage = urlopen(req).read()

You can also set a timeout in case the website does not respond. If the website doesn’t respond within the timeout period, urlopen raises an exception. This is typically socket.timeout (an alias of TimeoutError since Python 3.10), or a urllib.error.URLError that wraps it.

from urllib.request import Request, urlopen

req = Request('http://www.cmegroup.com/', headers={'User-Agent': 'Mozilla/5.0'})

webpage = urlopen(req,timeout=10).read()
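Here is a sketch of handling that timeout, assuming a hypothetical `fetch_with_timeout` helper that returns None when the server is too slow. For an offline demo, it points urlopen at a local socket that accepts connections but never replies.

```python
import socket
import urllib.error
import urllib.request


def fetch_with_timeout(url, timeout=10):
    """Hypothetical helper: return the body, or None if the server is too slow."""
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.read()
    except socket.timeout:
        # Raised while waiting for the response
        return None
    except urllib.error.URLError as err:
        # A timeout while connecting or sending arrives wrapped in URLError;
        # anything else (including HTTPError) is re-raised
        if isinstance(err.reason, socket.timeout):
            return None
        raise


# Offline demo: a listening socket that never answers
silent = socket.socket()
silent.bind(("127.0.0.1", 0))
silent.listen()
port = silent.getsockname()[1]
slow_result = fetch_with_timeout(f"http://127.0.0.1:{port}/", timeout=0.5)
print(slow_result)  # None
silent.close()
```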

In some cases, such as fetching real-time bitcoin or stock market prices, you may send requests every second. When too many requests come from the same IP address, the server can block them and respond with a 403 error.

If you get this error because of too many requests, consider adding a delay between requests.
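Here is a minimal sketch of spacing out requests with `time.sleep`. `fetch_slowly` is a hypothetical helper, and its 2-second default delay is an arbitrary assumption. The right delay depends on the site's limits. The demo uses `data:` URLs so it runs without network access.

```python
import time
import urllib.request


def fetch_slowly(urls, delay=2.0, user_agent="Mozilla/5.0"):
    """Fetch each URL in turn, sleeping `delay` seconds between requests."""
    bodies = []
    for i, url in enumerate(urls):
        if i:
            time.sleep(delay)  # pause before every request except the first
        req = urllib.request.Request(url, headers={"User-Agent": user_agent})
        with urllib.request.urlopen(req, timeout=10) as resp:
            bodies.append(resp.read())
    return bodies


start = time.monotonic()
bodies = fetch_slowly(["data:,a", "data:,b", "data:,c"], delay=0.1)
elapsed = time.monotonic() - start
print(bodies)  # [b'a', b'b', b'c']
```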

Srinivas Ramakrishna is a Solution Architect and has 14+ Years of Experience in the Software Industry. He has published many articles on Medium, Hackernoon, dev.to and solved many problems in StackOverflow. He has core expertise in various technologies such as Microsoft .NET Core, Python, Node.JS, JavaScript, Cloud (Azure), RDBMS (MSSQL), React, Powershell, etc.
