SSL certificate_verify_failed errors typically occur as a result of outdated Python default certificates or invalid root certificates. We will cover how to fix this issue in 4 ways in this article.

Why does certificate_verify_failed happen?

An SSL connection is established through the steps listed below. An error is raised if any of these steps fails.

The certificate_verify_failed error usually arises in steps 2 and 3, when the client receives the server’s certificate and tries to authenticate it.

- The client sends a request to the server for a secure session. The server responds by sending its X.509 digital certificate to the client.

- The client receives the server’s X.509 digital certificate.

- The client authenticates the server, using a list of known certificate authorities.

- The client generates a random symmetric key and encrypts it using the server’s public key.

- The client and server now both know the symmetric key and can use the SSL encryption process to encrypt and decrypt the information contained in the client request and the server response.

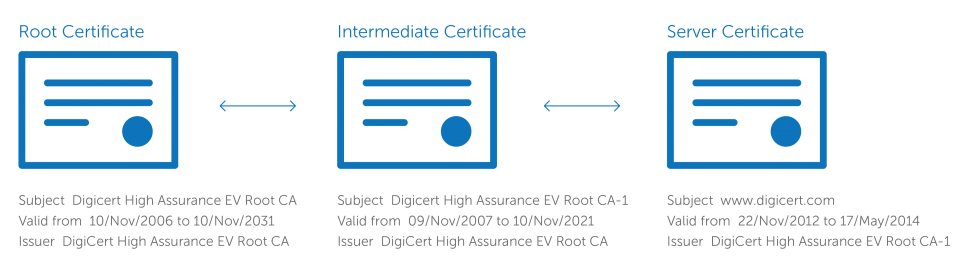

When the client receives the server’s certificate, it begins chaining that certificate back to its root. It will begin by following the chain to the intermediate that has been installed, from there it continues tracing backwards until it arrives at a trusted root certificate.

If the certificate is valid and can be chained back to a trusted root, it will be trusted. If it can’t be chained back to a trusted root, the browser will issue a warning about the certificate.
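
In Python, this chain check is what the standard-library `ssl` module performs when a default context is used. The following is a minimal sketch, assuming only the standard library; it inspects the context settings and needs no network access.

```python
import ssl

# A default context loads the system's trusted root certificates and
# requires the server's certificate chain to verify against them.
context = ssl.create_default_context()

# The certificate must chain back to a trusted root.
print(context.verify_mode == ssl.CERT_REQUIRED)
# The certificate must also match the hostname being requested.
print(context.check_hostname)
```

When either check fails during a real connection, Python raises the CERTIFICATE_VERIFY_FAILED error discussed in this article.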


Error info about certificate_verify_failed

We will see the following error.

<urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:777)>

What is an SSL certificate?

Server certificates are the most popular type of X.509 certificate. SSL/TLS certificates are issued to hostnames (machine names like ‘ABC-SERVER-02’ or domain names like google.com).

A server certificate is a file installed on a website’s origin server. It’s simply a data file containing the public key and the identity of the website owner, along with other information. Without a server certificate, a website’s traffic can’t be encrypted with TLS.

Technically, any website owner can create their own server certificate, and such certificates are called self-signed certificates. However, browsers do not consider self-signed certificates to be as trustworthy as SSL certificates issued by a certificate authority.


How to fix certificate_verify_failed?

If you receive the “certificate_verify_failed” error when trying to connect to a website, it means that the certificate on the website is not trusted. There are a few different ways to fix this error.

We will skip the SSL certificate check in the first three solutions. For the fourth solution, we are going to install the latest CA certificate from certifi.

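Create unverified context in SSL

import ssl
import urllib.request

# Warning: this context skips certificate verification entirely.
context = ssl._create_unverified_context()

# req is a URL string or a urllib.request.Request object
urllib.request.urlopen(req, context=context)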

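Create unverified https context in SSL

import ssl
import urllib.request

# Make every HTTPS request in this process skip certificate verification.
ssl._create_default_https_context = ssl._create_unverified_context

urllib.request.urlopen("https://google.com").read()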

Use requests module and set ssl verify to false

import requests

# url and Hostreferer (a dict of request headers) are defined elsewhere
requests.get(url, headers=Hostreferer, verify=False)

Update SSL certificate with PIP

We can also update the CA certificates with pip. All we have to do is upgrade the certifi package with the following command: pip install --upgrade certifi

This command updates the CA certificate bundle provided by the certifi package, which is the bundle the requests library uses by default.
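
Once certifi is up to date, its bundle can also be used with urllib instead of disabling verification. This is a minimal sketch, assuming the certifi package is installed; the `fetch` helper is a hypothetical name introduced here for illustration.

```python
import ssl
import urllib.request

import certifi

# certifi.where() returns the path of the CA bundle file shipped with certifi.
ca_bundle = certifi.where()

# Build a verifying context that trusts the certifi bundle.
context = ssl.create_default_context(cafile=ca_bundle)


def fetch(url):
    """Open url over HTTPS, verifying the server against the certifi bundle."""
    with urllib.request.urlopen(url, context=context) as response:
        return response.read()
```

Unlike the first three solutions, this keeps certificate verification switched on.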



Advanced Usage

This document covers some of Requests’ more advanced features.

Session Objects

The Session object allows you to persist certain parameters across requests. It also persists cookies across all requests made from the Session instance, and will use urllib3’s connection pooling. So if you’re making several requests to the same host, the underlying TCP connection will be reused, which can result in a significant performance increase (see HTTP persistent connection).

A Session object has all the methods of the main Requests API.

Let’s persist some cookies across requests:

Sessions can also be used to provide default data to the request methods. This is done by providing data to the properties on a Session object:

Any dictionaries that you pass to a request method will be merged with the session-level values that are set. The method-level parameters override session parameters.
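
The session-level defaults and the merge with method-level values can be sketched offline by preparing a request without sending it. The URL, credentials, and header names below are illustrative assumptions.

```python
import requests

s = requests.Session()
s.auth = ("user", "pass")             # hypothetical credentials
s.headers.update({"x-test": "true"})  # session-level default header

# Build the request without sending it, so the merge can be inspected.
req = requests.Request(
    "GET", "https://example.org/headers", headers={"x-test2": "true"}
)
prepped = s.prepare_request(req)

# Both the session-level and the method-level header are present.
print(prepped.headers["x-test"], prepped.headers["x-test2"])
# The session-level auth was turned into an Authorization header.
print("Authorization" in prepped.headers)
```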

Note, however, that method-level parameters will not be persisted across requests, even if using a session. This example will only send the cookies with the first request, but not the second:

If you want to manually add cookies to your session, use the Cookie utility functions to manipulate Session.cookies.

Sessions can also be used as context managers:

This will make sure the session is closed as soon as the with block is exited, even if unhandled exceptions occurred.

Remove a Value From a Dict Parameter

Sometimes you’ll want to omit session-level keys from a dict parameter. To do this, you simply set that key’s value to None in the method-level parameter. It will automatically be omitted.
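
A short sketch of this behaviour, again preparing the request without sending it. The header names are hypothetical.

```python
import requests

s = requests.Session()
s.headers.update({"x-token": "secret", "x-keep": "yes"})  # hypothetical headers

# Setting the key to None at the method level omits it for this request only.
req = requests.Request("GET", "https://example.org/", headers={"x-token": None})
prepped = s.prepare_request(req)

print("x-token" in prepped.headers)  # omitted from this request
print(s.headers["x-token"])          # still set on the session
```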

All values that are contained within a session are directly available to you. See the Session API Docs to learn more.

Request and Response Objects

Whenever a call is made to requests.get() and friends, you are doing two major things. First, you are constructing a Request object which will be sent off to a server to request or query some resource. Second, a Response object is generated once Requests gets a response back from the server. The Response object contains all of the information returned by the server and also contains the Request object you created originally. Here is a simple request to get some very important information from Wikipedia’s servers:

If we want to access the headers the server sent back to us, we do this:

However, if we want to get the headers we sent the server, we simply access the request, and then the request’s headers:

Prepared Requests

Whenever you receive a Response object from an API call or a Session call, the request attribute is actually the PreparedRequest that was used. In some cases you may wish to do some extra work to the body or headers (or anything else really) before sending a request. The simple recipe for this is the following:
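
The recipe can be sketched as follows. The URL and form data are placeholders, and the `send` helper is a hypothetical wrapper so that nothing is sent when the snippet is loaded.

```python
from requests import Request, Session

s = Session()
req = Request("POST", "https://example.org/post", data={"key": "value"})
prepped = req.prepare()

# Adjust the prepared request before it is sent.
prepped.body = "No, I want exactly this as the body."
del prepped.headers["Content-Type"]
# Keep Content-Length consistent with the replaced body.
prepped.headers["Content-Length"] = str(len(prepped.body))


def send(prepared, timeout=10):
    """Send the prepared request with the keyword arguments you would
    otherwise pass to requests.* or Session.*."""
    return s.send(prepared, timeout=timeout)
```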

Since you are not doing anything special with the Request object, you prepare it immediately and modify the PreparedRequest object. You then send that with the other parameters you would have sent to requests.* or Session.*.

However, the above code will lose some of the advantages of having a Requests Session object. In particular, Session-level state such as cookies will not get applied to your request. To get a PreparedRequest with that state applied, replace the call to Request.prepare() with a call to Session.prepare_request(), like this:

When you are using the prepared request flow, keep in mind that it does not take into account the environment. This can cause problems if you are using environment variables to change the behaviour of requests. For example: Self-signed SSL certificates specified in REQUESTS_CA_BUNDLE will not be taken into account. As a result an SSL: CERTIFICATE_VERIFY_FAILED is thrown. You can get around this behaviour by explicitly merging the environment settings into your session:
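
A minimal sketch of that merge, using `Session.merge_environment_settings`. The URL is a placeholder, and `env_settings` and `send_with_env` are hypothetical helper names.

```python
from requests import Request, Session

s = Session()
prepped = s.prepare_request(Request("GET", "https://example.org/"))


def env_settings(session, prepared):
    """Merge environment settings (proxies, CA bundle) for this URL."""
    return session.merge_environment_settings(prepared.url, {}, None, None, None)


def send_with_env(session, prepared):
    """Send the prepared request with the environment settings applied."""
    return session.send(prepared, **env_settings(session, prepared))
```

With REQUESTS_CA_BUNDLE set, the returned settings carry that path as `verify`, so the custom bundle is used when the request is sent.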

SSL Cert Verification

Requests verifies SSL certificates for HTTPS requests, just like a web browser. By default, SSL verification is enabled, and Requests will throw a SSLError if it’s unable to verify the certificate:

I don’t have SSL setup on this domain, so it throws an exception. Excellent. GitHub does though:

You can pass verify the path to a CA_BUNDLE file or directory with certificates of trusted CAs:

If verify is set to a path to a directory, the directory must have been processed using the c_rehash utility supplied with OpenSSL.

This list of trusted CAs can also be specified through the REQUESTS_CA_BUNDLE environment variable. If REQUESTS_CA_BUNDLE is not set, CURL_CA_BUNDLE will be used as fallback.

Requests can also ignore verifying the SSL certificate if you set verify to False:

Note that when verify is set to False, requests will accept any TLS certificate presented by the server, and will ignore hostname mismatches and/or expired certificates, which will make your application vulnerable to man-in-the-middle (MitM) attacks. Setting verify to False may be useful during local development or testing.

By default, verify is set to True. Option verify only applies to host certs.

Client Side Certificates

You can also specify a local cert to use as client side certificate, as a single file (containing the private key and the certificate) or as a tuple of both files’ paths:

If you specify a wrong path or an invalid cert, you’ll get a SSLError:

The private key to your local certificate must be unencrypted. Currently, Requests does not support using encrypted keys.

CA Certificates

Requests uses certificates from the package certifi. This allows for users to update their trusted certificates without changing the version of Requests.

Before version 2.16, Requests bundled a set of root CAs that it trusted, sourced from the Mozilla trust store. The certificates were only updated once for each Requests version. When certifi was not installed, this led to extremely out-of-date certificate bundles when using significantly older versions of Requests.

For the sake of security we recommend upgrading certifi frequently!

Body Content Workflow

By default, when you make a request, the body of the response is downloaded immediately. You can override this behaviour and defer downloading the response body until you access the Response.content attribute with the stream parameter:

At this point only the response headers have been downloaded and the connection remains open, hence allowing us to make content retrieval conditional:

You can further control the workflow by use of the Response.iter_content() and Response.iter_lines() methods. Alternatively, you can read the undecoded body from the underlying urllib3.HTTPResponse at Response.raw.

If you set stream to True when making a request, Requests cannot release the connection back to the pool unless you consume all the data or call Response.close. This can lead to inefficiency with connections. If you find yourself partially reading request bodies (or not reading them at all) while using stream=True, you should make the request within a with statement to ensure it’s always closed:

Keep-Alive

Excellent news — thanks to urllib3, keep-alive is 100% automatic within a session! Any requests that you make within a session will automatically reuse the appropriate connection!

Note that connections are only released back to the pool for reuse once all body data has been read; be sure to either set stream to False or read the content property of the Response object.

Streaming Uploads

Requests supports streaming uploads, which allow you to send large streams or files without reading them into memory. To stream and upload, simply provide a file-like object for your body:

It is strongly recommended that you open files in binary mode. This is because Requests may attempt to provide the Content-Length header for you, and if it does this value will be set to the number of bytes in the file. Errors may occur if you open the file in text mode.

Chunk-Encoded Requests

Requests also supports Chunked transfer encoding for outgoing and incoming requests. To send a chunk-encoded request, simply provide a generator (or any iterator without a length) for your body:
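
A small offline sketch: preparing a request whose body is a generator shows that Requests selects chunked transfer encoding. The URL and the `gen` function are placeholders.

```python
import requests


def gen():
    """Yield the body piece by piece; the total length is unknown up front."""
    yield b"hi"
    yield b"there"


prepped = requests.Request("POST", "https://example.org/post", data=gen()).prepare()

# No length is available, so the request is marked as chunked.
print(prepped.headers.get("Transfer-Encoding"))
```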

For chunked encoded responses, it’s best to iterate over the data using Response.iter_content(). In an ideal situation you’ll have set stream=True on the request, in which case you can iterate chunk-by-chunk by calling iter_content with a chunk_size parameter of None. If you want to set a maximum size of the chunk, you can set a chunk_size parameter to any integer.

POST Multiple Multipart-Encoded Files

You can send multiple files in one request. For example, suppose you want to upload image files to an HTML form with a multiple file field ‘images’:

To do that, just set files to a list of tuples of (form_field_name, file_info) :
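
As a sketch, the list of tuples can be built and inspected without sending anything. In-memory bytes stand in for files opened in binary mode, and the file names and URL are hypothetical.

```python
import requests

# Each entry is (form_field_name, (filename, content, content_type)).
multiple_files = [
    ("images", ("foo.png", b"fake png bytes", "image/png")),
    ("images", ("bar.png", b"more fake bytes", "image/png")),
]

prepped = requests.Request(
    "POST", "https://example.org/post", files=multiple_files
).prepare()

# The body is encoded as multipart/form-data with one part per file.
print(prepped.headers["Content-Type"].split(";")[0])
```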

It is strongly recommended that you open files in binary mode. This is because Requests may attempt to provide the Content-Length header for you, and if it does this value will be set to the number of bytes in the file. Errors may occur if you open the file in text mode.

Event Hooks

Requests has a hook system that you can use to manipulate portions of the request process, or signal event handling.

Available hooks: response, the response generated from a Request.

You can assign a hook function on a per-request basis by passing a dictionary to the hooks request parameter:

That callback_function will receive a chunk of data as its first argument.

Your callback function must handle its own exceptions. Any unhandled exception won’t be passed silently and thus should be handled by the code calling Requests.

If the callback function returns a value, it is assumed that it is to replace the data that was passed in. If the function doesn’t return anything, nothing else is affected.

Let’s print some request method arguments at runtime:

You can add multiple hooks to a single request. Let’s call two hooks at once:

You can also add hooks to a Session instance. Any hooks you add will then be called on every request made to the session. For example:
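
A minimal sketch of registering a session-level hook. The `print_url` function is an illustrative name.

```python
import requests


def print_url(response, *args, **kwargs):
    """Response hook: print the URL of every response received."""
    print(response.url)


s = requests.Session()
# Hooks are stored per event name; 'response' is the available event.
s.hooks["response"].append(print_url)
```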

A Session can have multiple hooks, which will be called in the order they are added.

Custom Authentication

Requests allows you to specify your own authentication mechanism.

Any callable which is passed as the auth argument to a request method will have the opportunity to modify the request before it is dispatched.

Authentication implementations are subclasses of AuthBase, and are easy to define. Requests provides two common authentication scheme implementations in requests.auth: HTTPBasicAuth and HTTPDigestAuth.

Let’s pretend that we have a web service that will only respond if the X-Pizza header is set to a password value. Unlikely, but just go with it.
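
Such an authentication class could look like the sketch below. The class name, URL, and username are assumptions for illustration, and the request is only prepared, not sent.

```python
import requests
from requests.auth import AuthBase


class PizzaAuth(AuthBase):
    """Attaches HTTP Pizza Authentication to the given Request object."""

    def __init__(self, username):
        self.username = username

    def __call__(self, r):
        # Modify the outgoing request and return it.
        r.headers["X-Pizza"] = self.username
        return r


prepped = requests.Request(
    "GET", "https://example.org/admin", auth=PizzaAuth("kenneth")
).prepare()
print(prepped.headers["X-Pizza"])
```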

Then, we can make a request using our Pizza Auth:

Streaming Requests

With Response.iter_lines() you can easily iterate over streaming APIs such as the Twitter Streaming API. Simply set stream to True and iterate over the response with iter_lines:

When using decode_unicode=True with Response.iter_lines() or Response.iter_content(), you’ll want to provide a fallback encoding in the event the server doesn’t provide one:

iter_lines is not reentrant safe. Calling this method multiple times can cause some of the received data to be lost. In case you need to call it from multiple places, use the resulting iterator object instead:

Proxies

If you need to use a proxy, you can configure individual requests with the proxies argument to any request method:

Alternatively you can configure it once for an entire Session:

Setting session.proxies may behave differently than expected. Values provided will be overwritten by environmental proxies (those returned by urllib.request.getproxies). To ensure the use of proxies in the presence of environmental proxies, explicitly specify the proxies argument on all individual requests as initially explained above.

See #2018 for details.

When the proxies configuration is not overridden per request as shown above, Requests relies on the proxy configuration defined by standard environment variables http_proxy, https_proxy, no_proxy, and all_proxy. Uppercase variants of these variables are also supported. You can therefore set them to configure Requests (only set the ones relevant to your needs):

To use HTTP Basic Auth with your proxy, use the http://user:password@host/ syntax in any of the above configuration entries:

Storing sensitive username and password information in an environment variable or a version-controlled file is a security risk and is highly discouraged.

To give a proxy for a specific scheme and host, use the scheme://hostname form for the key. This will match for any request to the given scheme and exact hostname.
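
The matching rule can be illustrated offline with `select_proxy` from `requests.utils`, the helper Requests uses to pick a proxy for a URL. The addresses below are made up for illustration.

```python
from requests.utils import select_proxy

proxies = {
    "http": "http://10.10.1.10:3128",                # default for all http URLs
    "http://10.20.1.128": "http://10.10.1.10:5323",  # only for this exact host
}

# The scheme://hostname key wins over the scheme-only key.
print(select_proxy("http://10.20.1.128/path", proxies))
# Any other http host falls back to the scheme-only entry.
print(select_proxy("http://example.org/", proxies))
```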

Note that proxy URLs must include the scheme.

Finally, note that using a proxy for https connections typically requires your local machine to trust the proxy’s root certificate. By default the list of certificates trusted by Requests can be found with:
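
A one-line sketch, assuming a standard Requests installation where the default bundle comes from certifi:

```python
from requests.utils import DEFAULT_CA_BUNDLE_PATH

# Path to the CA bundle file that Requests trusts by default.
print(DEFAULT_CA_BUNDLE_PATH)
```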

You override this default certificate bundle by setting the REQUESTS_CA_BUNDLE (or CURL_CA_BUNDLE) environment variable to another file path:

SOCKS

New in version 2.10.0.

In addition to basic HTTP proxies, Requests also supports proxies using the SOCKS protocol. This is an optional feature that requires that additional third-party libraries be installed before use.

You can get the dependencies for this feature from pip:

Once you’ve installed those dependencies, using a SOCKS proxy is just as easy as using a HTTP one:

Using the scheme socks5 causes the DNS resolution to happen on the client, rather than on the proxy server. This is in line with curl, which uses the scheme to decide whether to do the DNS resolution on the client or proxy. If you want to resolve the domains on the proxy server, use socks5h as the scheme.

Compliance

Requests is intended to be compliant with all relevant specifications and RFCs where that compliance will not cause difficulties for users. This attention to the specification can lead to some behaviour that may seem unusual to those not familiar with the relevant specification.

Encodings

When you receive a response, Requests makes a guess at the encoding to use for decoding the response when you access the Response.text attribute. Requests will first check for an encoding in the HTTP header, and if none is present, will use charset_normalizer or chardet to attempt to guess the encoding.

If chardet is installed, requests uses it; however, for Python 3, chardet is no longer a mandatory dependency. The chardet library is an LGPL-licensed dependency, and some users of requests cannot depend on mandatory LGPL-licensed dependencies.

When you install requests without specifying [use_chardet_on_py3] extra, and chardet is not already installed, requests uses charset-normalizer (MIT-licensed) to guess the encoding.

The only time Requests will not guess the encoding is if no explicit charset is present in the HTTP headers and the Content-Type header contains text. In this situation, RFC 2616 specifies that the default charset must be ISO-8859-1. Requests follows the specification in this case. If you require a different encoding, you can manually set the Response.encoding property, or use the raw Response.content.

HTTP Verbs

Requests provides access to almost the full range of HTTP verbs: GET, OPTIONS, HEAD, POST, PUT, PATCH and DELETE. The following provides detailed examples of using these various verbs in Requests, using the GitHub API.

We will begin with the verb most commonly used: GET. HTTP GET is an idempotent method that returns a resource from a given URL. As a result, it is the verb you ought to use when attempting to retrieve data from a web location. An example usage would be attempting to get information about a specific commit from GitHub. Suppose we wanted commit a050faf on Requests. We would get it like so:

We should confirm that GitHub responded correctly. If it has, we want to work out what type of content it is. Do this like so:

So, GitHub returns JSON. That’s great, we can use the r.json method to parse it into Python objects.

So far, so simple. Well, let’s investigate the GitHub API a little bit. Now, we could look at the documentation, but we might have a little more fun if we use Requests instead. We can take advantage of the Requests OPTIONS verb to see what kinds of HTTP methods are supported on the url we just used.

Uh, what? That’s unhelpful! Turns out GitHub, like many API providers, doesn’t actually implement the OPTIONS method. This is an annoying oversight, but it’s OK, we can just use the boring documentation. If GitHub had correctly implemented OPTIONS, however, they should return the allowed methods in the headers, e.g.

Turning to the documentation, we see that the only other method allowed for commits is POST, which creates a new commit. As we’re using the Requests repo, we should probably avoid making ham-handed POSTS to it. Instead, let’s play with the Issues feature of GitHub.

This documentation was added in response to Issue #482. Given that this issue already exists, we will use it as an example. Let’s start by getting it.

Cool, we have three comments. Let’s take a look at the last of them.

Well, that seems like a silly place. Let’s post a comment telling the poster that he’s silly. Who is the poster, anyway?

OK, so let’s tell this Kenneth guy that we think this example should go in the quickstart guide instead. According to the GitHub API doc, the way to do this is to POST to the thread. Let’s do it.

Huh, that’s weird. We probably need to authenticate. That’ll be a pain, right? Wrong. Requests makes it easy to use many forms of authentication, including the very common Basic Auth.

Brilliant. Oh, wait, no! I meant to add that it would take me a while, because I had to go feed my cat. If only I could edit this comment! Happily, GitHub allows us to use another HTTP verb, PATCH, to edit this comment. Let’s do that.

Excellent. Now, just to torture this Kenneth guy, I’ve decided to let him sweat and not tell him that I’m working on this. That means I want to delete this comment. GitHub lets us delete comments using the incredibly aptly named DELETE method. Let’s get rid of it.

Excellent. All gone. The last thing I want to know is how much of my ratelimit I’ve used. Let’s find out. GitHub sends that information in the headers, so rather than download the whole page I’ll send a HEAD request to get the headers.

Excellent. Time to write a Python program that abuses the GitHub API in all kinds of exciting ways, 4995 more times.

Custom Verbs

From time to time you may be working with a server that, for whatever reason, allows use or even requires use of HTTP verbs not covered above. One example of this would be the MKCOL method some WEBDAV servers use. Do not fret, these can still be used with Requests. These make use of the built-in .request method. For example:
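
A brief sketch with a placeholder WebDAV URL. The request is prepared offline, and the `make_collection` helper (a hypothetical name) shows the equivalent call that would actually send it.

```python
import requests

# Any verb string is accepted; it is passed through to the server unchanged.
prepped = requests.Request("MKCOL", "https://example.org/new-collection/").prepare()
print(prepped.method)


def make_collection(url):
    """Send a MKCOL request using the generic requests.request() entry point."""
    return requests.request("MKCOL", url, timeout=10)
```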

Utilising this, you can make use of any method verb that your server allows.

Link Headers

Many HTTP APIs feature Link headers. They make APIs more self describing and discoverable.

GitHub uses these for pagination in their API, for example:

Requests will automatically parse these link headers and make them easily consumable:
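
The parsing can be tried offline by setting a Link header on an empty Response object. The header value below is an illustrative example in GitHub's pagination style, not captured output.

```python
import requests

r = requests.Response()
r.headers["link"] = (
    '<https://api.github.com/users/kennethreitz/repos?page=2&per_page=10>; rel="next", '
    '<https://api.github.com/users/kennethreitz/repos?page=6&per_page=10>; rel="last"'
)

# Response.links maps each rel value to a dict with 'url' and 'rel' keys.
next_url = r.links["next"]["url"]
print(next_url)
```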

Transport Adapters

As of v1.0.0, Requests has moved to a modular internal design. Part of the reason this was done was to implement Transport Adapters, originally described here. Transport Adapters provide a mechanism to define interaction methods for an HTTP service. In particular, they allow you to apply per-service configuration.

Requests ships with a single Transport Adapter, the HTTPAdapter. This adapter provides the default Requests interaction with HTTP and HTTPS using the powerful urllib3 library. Whenever a Requests Session is initialized, one of these is attached to the Session object for HTTP, and one for HTTPS.

Requests enables users to create and use their own Transport Adapters that provide specific functionality. Once created, a Transport Adapter can be mounted to a Session object, along with an indication of which web services it should apply to.

The mount call registers a specific instance of a Transport Adapter to a prefix. Once mounted, any HTTP request made using that session whose URL starts with the given prefix will use the given Transport Adapter.
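
A minimal sketch of mounting an adapter to a prefix. The retry count is an arbitrary illustrative setting.

```python
import requests
from requests.adapters import HTTPAdapter

s = requests.Session()
adapter = HTTPAdapter(max_retries=3)  # per-service configuration

# Every request whose URL starts with this prefix uses the adapter.
s.mount("https://github.com/", adapter)

print(s.get_adapter("https://github.com/psf/requests") is adapter)
print(s.get_adapter("https://example.org/") is adapter)
```

The second lookup falls through to the default HTTPS adapter attached when the Session was created.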

Many of the details of implementing a Transport Adapter are beyond the scope of this documentation, but take a look at the next example for a simple SSL use case. For more than that, you might look at subclassing the BaseAdapter.

Example: Specific SSL Version

The Requests team has made a specific choice to use whatever SSL version is default in the underlying library (urllib3). Normally this is fine, but from time to time, you might find yourself needing to connect to a service-endpoint that uses a version that isn’t compatible with the default.

You can use Transport Adapters for this by taking most of the existing implementation of HTTPAdapter, and adding a parameter ssl_version that gets passed-through to urllib3. We’ll make a Transport Adapter that instructs the library to use SSLv3:

Blocking Or Non-Blocking?

With the default Transport Adapter in place, Requests does not provide any kind of non-blocking IO. The Response.content property will block until the entire response has been downloaded. If you require more granularity, the streaming features of the library (see Streaming Requests) allow you to retrieve smaller quantities of the response at a time. However, these calls will still block.

If you are concerned about the use of blocking IO, there are lots of projects out there that combine Requests with one of Python’s asynchronicity frameworks. Some excellent examples are requests-threads, grequests, requests-futures, and httpx.

Header Ordering

In unusual circumstances you may want to provide headers in an ordered manner. If you pass an OrderedDict to the headers keyword argument, that will provide the headers with an ordering. However, the ordering of the default headers used by Requests will be preferred, which means that if you override default headers in the headers keyword argument, they may appear out of order compared to other headers in that keyword argument.

If this is problematic, users should consider setting the default headers on a Session object, by setting Session.headers to a custom OrderedDict. That ordering will always be preferred.
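
A sketch of that approach, inspected offline by preparing a request. The header names and URL are placeholders.

```python
from collections import OrderedDict

import requests

s = requests.Session()
# Replace the default headers with an explicitly ordered set.
s.headers = OrderedDict([("X-First", "1"), ("X-Second", "2"), ("Accept", "*/*")])

prepped = s.prepare_request(requests.Request("GET", "https://example.org/"))

# The prepared headers keep the session-level ordering.
print(list(prepped.headers.keys()))
```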

Timeouts

Most requests to external servers should have a timeout attached, in case the server is not responding in a timely manner. By default, requests do not time out unless a timeout value is set explicitly. Without a timeout, your code may hang for minutes or more.

The connect timeout is the number of seconds Requests will wait for your client to establish a connection to a remote machine (corresponding to the connect() call on the socket). It’s a good practice to set connect timeouts to slightly larger than a multiple of 3, which is the default TCP packet retransmission window.

Once your client has connected to the server and sent the HTTP request, the read timeout is the number of seconds the client will wait for the server to send a response. (Specifically, it’s the number of seconds that the client will wait between bytes sent from the server. In 99.9% of cases, this is the time before the server sends the first byte).

If you specify a single value for the timeout, like this:

The timeout value will be applied to both the connect and the read timeouts. Specify a tuple if you would like to set the values separately:

If the remote server is very slow, you can tell Requests to wait forever for a response, by passing None as a timeout value and then retrieving a cup of coffee.



Verifying SSL certificates and client-side SSL.

Contents:

- SSL certificate verification;

- Client-side certificates;

- Trusted CA certificates.

SSL certificate verification.

>>> import requests
>>> requests.get('https://requestb.in')
# requests.exceptions.SSLError: hostname 'requestb.in' doesn't match ...

This domain has no SSL set up, so the request raises an exception. A request to GitHub succeeds without any errors:

>>> requests.get('https://github.com')
# <Response [200]>

You can pass the verify argument the path to a CA_BUNDLE file or to a directory with trusted CA certificates:

# specify trusted certificates for a single request
>>> requests.get('https://github.com', verify='/path/to/certfile')
# specify trusted certificates for a session
>>> sess = requests.Session()
>>> sess.verify = '/path/to/certfile'

Note. If verify is set to a directory path, that directory must have been processed with the c_rehash utility supplied with OpenSSL.

The list of trusted CAs can also be specified through the REQUESTS_CA_BUNDLE environment variable. If REQUESTS_CA_BUNDLE is not set, CURL_CA_BUNDLE is used as a fallback.

Requests can also skip SSL certificate verification if verify is set to False:

>>> requests.get('https://kennethreitz.org', verify=False)
# <Response [200]>

Note that with verify=False, requests accepts any TLS certificate presented by the server and ignores hostname mismatches and/or expired certificates, which makes the application vulnerable to man-in-the-middle (MitM) attacks. Setting verify to False may be useful during local development or testing.

By default, verify is set to True. The verify option applies only to host certificates.

Client-side certificates.

You can also specify a local certificate to use as the client-side certificate, either as a single file (containing the private key and the certificate) or as a tuple of the paths to both files:

>>> requests.get('https://kennethreitz.org',
...              cert=('/path/client.cert', '/path/client.key'))
# <Response [200]>
# for a session
>>> s = requests.Session()
>>> s.cert = '/path/client.cert'

If you specify a wrong path or an invalid certificate, you get an SSLError:

>>> requests.get('https://kennethreitz.org', cert='/wrong_path/client.pem')
# SSLError: [Errno 336265225] _ssl.c:347: error:140B0009:SSL routines:SSL...

Warning. The private key to your local certificate must be unencrypted. Currently, the requests library does not support encrypted keys.

Trusted CA certificates

The requests library uses certificates from the certifi package. This lets users update their trusted certificates without changing the version of requests.

Before requests 2.16, the library bundled a set of trusted root CAs sourced from the Mozilla trust store. The certificates were updated only once per requests release. When certifi was not installed, this led to extremely out-of-date certificate bundles when using significantly older versions of requests.

For the sake of security, the requests development team recommends upgrading the certificates frequently!

SSLError: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:661)

You may get an SSL verification error when making an HTTP GET request to a URL with the Python requests module.

This can be surprising when the same site shows a valid certificate in the browser.

So you end up confused about why Python requests fails while the browser reports no SSL error for the same URL.

Here is my workaround and how I fixed it.

Before jumping to the solution, I would like everyone to understand the root cause in terms of SSL concepts, and then move on to the fix.

What is an SSL Certificate?

SSL – Secure Sockets Layer

SSL certificates, sometimes called digital certificates, are used to establish an encrypted connection between a browser or user's computer and a server or website.

The SSL connection protects sensitive data, such as financial information and credentials, while it is exchanged between client and server during what is called a session.

Secure Connection:

Creating a secure connection is a staged process, comparable to establishing a plain TCP connection, where the equivalent stage is known as the TCP handshake.

The analogous term here is the 'SSL handshake'. It uses three different keys to make sure data is encrypted in transit.

- Public key – The server responds to the client's initial request with a copy of its asymmetric public key.

- Symmetric key – The browser or client creates a symmetric session key, encrypts it with the server's asymmetric public key, and sends it to the server.

- Private key – The server decrypts the encrypted session key using its asymmetric private key to obtain the symmetric session key.

Finally, the server and browser encrypt and decrypt all transmitted data with the symmetric session key. This allows for a secure channel because only the browser and the server know the symmetric session key, and the session key is only used for that specific session. If the browser were to connect to the same server the next day, a new session key would be created.
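The handshake described above can be observed from Python with the standard ssl module. This is a minimal sketch rather than what requests does internally; the function names default_client_context and handshake_info are made up for illustration, and any host you pass in is your own choice.

```python
import socket
import ssl


def default_client_context():
    """Create a client-side context with certificate verification enabled."""
    return ssl.create_default_context()


def handshake_info(host, port=443, timeout=10):
    """Perform the TLS handshake and return (protocol version, cipher name)."""
    context = default_client_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        # wrap_socket runs the handshake, including certificate verification
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version(), tls.cipher()[0]
```

Calling handshake_info with a hostname performs the handshake against that server. If the server certificate cannot be verified, wrap_socket raises an error instead of returning, which is the same class of failure discussed in this article.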

How to Get an SSL Certificate and Install It?

1. A CSR (Certificate Signing Request) is generated on the server that needs the SSL certificate. This process creates a private key and a public key on your server.

A CSR is an encoded file that provides you with a standardized way to send your public key as well as some information that identifies your company and domain name. When you generate a CSR, most server software asks for the following information: common name (e.g., www.example.com), organization name and location (country, state/province, city/town), key type (typically RSA), and key size (2048-bit minimum).

The OpenSSL tool can be used to generate a CSR as follows:

openssl req -new -newkey rsa:2048 -nodes -keyout server.key -out server.csr

This command generates two files:

- Private key (server.key)

- CSR file (server.csr)

2. Get the certificate and the intermediate CA or root CA certificate from a Certificate Authority (e.g. godaddy.com, digicert.com).

Upload your CSR to any vendor established as a Certificate Authority for the Internet.

The CSR data file that you send to the SSL Certificate issuer (called a Certificate Authority or CA) contains the public key. The CA uses the CSR data file to create a data structure to match your private key without compromising the key itself. The CA never sees the private key.

The CA then returns the files you need:

1) Primary certificate file

2) Intermediate CA certificate bundle

The intermediate certificate establishes the trust of your SSL certificate by tying it to your Certificate Authority's root certificate.

The most important part of an SSL certificate is that it is digitally signed by a trusted CA, like DigiCert. Anyone can create a certificate, but browsers only trust certificates that come from an organization on their list of trusted CAs. Browsers come with a pre-installed list of trusted CAs, known as the Trusted Root CA store. In order to be added to the Trusted Root CA store and thus become a Certificate Authority, a company must comply with and be audited against security and authentication standards established by the browsers.

An SSL Certificate issued by a CA to an organization and its domain/website verifies that a trusted third party has authenticated that organization’s identity. Since the browser trusts the CA, the browser now trusts that organization’s identity too. The browser lets the user know that the website is secure, and the user can feel safe browsing the site and even entering their confidential information.

This is why the trusted CA and the intermediate CA matter so much.

Troubleshoot:

SSLError: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:661)

Let's come to the error. The obvious reason is that the client could not verify the certificate as trusted because the certificate's issuer (CA) is not included as a trusted CA in the client's store.

In some cases an intermediate CA issued the certificate, and the client does not necessarily have that intermediate CA as trusted in its store.

Although the certificate is valid, verifying it usually requires the intermediate CA certificate, which acts as the link in the chain between your primary certificate and the root CA certificate.
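A certificate bundle is just several PEM blocks concatenated in one file, so a quick way to see whether an intermediate is present is to count the blocks. The sketch below assumes that layout; the function name split_pem_bundle is hypothetical and it does not validate the certificates themselves.

```python
import re

# One PEM certificate block, including its BEGIN/END marker lines
PEM_BLOCK = re.compile(
    r"-----BEGIN CERTIFICATE-----.*?-----END CERTIFICATE-----",
    re.DOTALL,
)


def split_pem_bundle(text):
    """Return every PEM certificate block found in the text, in order."""
    return PEM_BLOCK.findall(text)
```

A file holding only the primary certificate yields one block. A file that also carries the intermediate CA certificate yields two or more.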

How to Fix in python step by step:

1. Find the issuer of the certificate for the URL.

from OpenSSL import crypto
import ssl

host = 'burghquayregistrationoffice.inis.gov.ie'

# Fetch the server certificate in PEM form and let ssl pick the protocol version
url_ssl_cert = ssl.get_server_certificate((host, 443))

with open('burghquayregistrationoffice.pem', 'w') as certificate:
    certificate.write(url_ssl_cert)

# Parse the saved certificate and read its issuer
with open('burghquayregistrationoffice.pem') as f:
    cert_obj = crypto.load_certificate(crypto.FILETYPE_PEM, f.read())

issuer = cert_obj.get_issuer()
print('Certificate is issued by CA : {0}'.format(issuer.CN))

Certificate is issued by CA : Symantec Class 3 Secure Server CA - G4

2. The requests CA bundle has no information about this issuer, so the request terminated with an error. I chose to use certifi, a Python module that provides a CA bundle. Once you install it with pip install certifi, requests also uses it as its default certificate store.

import certifi

print ('Trusted CA certificate bundle : {0}'.format(certifi.where()))

Trusted CA certificate bundle : C:\python3.6.5\lib\site-packages\certifi\cacert.pem

3. Next, download the intermediate CA certificate from the internet and add it to your CA certificate store.

For Symantec Class 3 Secure Server CA – G4, refer to the link below and save the file as SymantecClass3SecureServerCA-G4.pem

https://knowledge.digicert.com/solution/SO26896.html

Now append it to the CA bundle:

import certifi

with open('SymantecClass3SecureServerCA-G4.pem') as intermedCAf:
    intermedCA = intermedCAf.read()

# certifi.where() returns the path of the CA bundle that requests uses
with open(certifi.where(), 'a') as cabundle:
    cabundle.write('\n' + intermedCA)
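Appending to the installed certifi file works, but the change is lost whenever certifi is upgraded. An alternative is to leave that file alone and build a separate bundle. The following is a minimal sketch under that assumption; the function name build_custom_bundle and the output file name in the note below are hypothetical.

```python
from pathlib import Path


def build_custom_bundle(base_bundle, extra_pem, output):
    """Write a new bundle: the base CA bundle followed by one extra PEM file."""
    base = Path(base_bundle).read_text(encoding="utf-8")
    extra = Path(extra_pem).read_text(encoding="utf-8")
    # Keep exactly one newline between the existing bundle and the new block
    combined = base.rstrip("\n") + "\n" + extra.strip() + "\n"
    Path(output).write_text(combined, encoding="utf-8")
    return str(output)
```

The resulting file, for example my-bundle.pem, can then be passed per call as verify='my-bundle.pem' or set through the REQUESTS_CA_BUNDLE environment variable, with certifi.where() as the base bundle.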

4. Finally, test it with requests. It should return an HTTP status of 200.

import requests

x = requests.get('https://burghquayregistrationoffice.inis.gov.ie')
print(x.status_code)

200

Python requests provides multiple mechanisms for authenticating to web service endpoints, including basic auth, X.509 certificate authentication, and authentication with a bearer token (JWT or OAuth2 token). This article will cover basic examples of authenticating with each of these and using python requests to log in to your web service.

python requests basic auth

To authenticate with basic auth using the python requests module, start with the following example python script:

import requests

basicAuthCredentials = ('user', 'pass')

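response = requests.get('https://example.com/endpoint', auth=basicAuthCredentials)

An alternative to this approach is to set the python requests authorization header for basic auth yourself. Note that the user:pass pair must be base64-encoded (here with the standard base64 module):

'Authorization': 'Basic ' + base64.b64encode(b'user:pass').decode()

python requests ignore ssl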

To ignore SSL verification of the installed X.509 SSL certificate, set verify=False. For example, in a python requests GET request to ignore ssl:

requests.get('https://example.com', verify=False)

The verify=False parameter in the get method tells python requests to skip SSL verification and proceed with the API call. This may be necessary when the server being connected to presents a self-signed certificate in its certificate chain. The cause may also be an expired certificate or some other certificate not trusted by your python application by default.

python requests certificate verify failed

If you get the python requests ssl certificate_verify_failed error, the cause is that the certificate may be expired, revoked, or not trusted by you as the caller. If you are in a test environment then it may be safe to set verify=False on your call, as explained above. If you are in a production environment then you should determine whether or not you should trust the root certificate of the trust chain being sent by the server. You may explicitly set this in your call, for example:

requests.get('https://example.com', verify='truststore.pem')

The ssl certificate_verify_failed error is not an error you should simply ignore without thoroughly thinking through the implications.
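To decide whether the certificate is expired, untrusted, or failing for another reason, it helps to read the reason reported by the verifier. The sketch below uses the standard ssl module instead of requests; the function names describe_verify_error and check_certificate are hypothetical, and the cafile argument stands for a trust store such as truststore.pem.

```python
import socket
import ssl


def describe_verify_error(exc):
    """Turn a certificate verification error into a short readable string."""
    code = getattr(exc, "verify_code", None)
    message = getattr(exc, "verify_message", None) or str(exc)
    return f"verification failed (code {code}): {message}"


def check_certificate(host, port=443, cafile=None, timeout=10):
    """Try a verified TLS handshake and report why verification failed, if it did."""
    context = ssl.create_default_context(cafile=cafile)
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host):
                return "certificate verified"
    except ssl.SSLCertVerificationError as exc:
        return describe_verify_error(exc)
```

Calling check_certificate with the hostname from the failing request returns either a success message or the verifier's own explanation, which is usually enough to tell an expired certificate from a missing issuer.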

python requests certificate

python requests authentication with an X.509 certificate and private key can be performed by specifying the path to the cert and key in your request. An example using python requests client certificate:

requests.get('https://example.com', cert=('/path/client.cert', '/path/client.key'))

The certificate and key may also be combined into the same file. If you receive an SSL error on your python requests call, you have likely given an invalid path, key, or certificate in your request.

python requests authorization bearer

The python requests authorization header for authenticating with a bearer token is the following:

'Authorization': 'Bearer ' + token

For example:

import requests

headers = {'Authorization': 'Bearer ' + token}

response = requests.get('https://example.com', headers=headers)

The bearer token is often either a JWT (JSON Web Token) or an OAuth2 token for python requests using oauth2.

To generate and sign a JWT with python and a private key, here is an example. The JWT token generated from this python code snippet may then be passed as the bearer token.

import jwt
import time

# Open private key to sign token with
with open('key.pem', 'r') as f:
    key = f.read()

# Create token that is valid for a given amount of time.
now = time.time()
claims = {
    'iss': '',
    'sub': '',
    'iat': int(now),
    'exp': int(now) + 10 * 60
}

token = jwt.encode(claims, key, algorithm='RS256')
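Before sending a token it can be useful to check which claims it actually carries, for example the exp timestamp. The following is a minimal sketch using only the standard library; the function name decode_jwt_payload is hypothetical, and it deliberately does not verify the signature, so it is for inspection only.

```python
import base64
import json


def decode_jwt_payload(token):
    """Return the claims of a JWT without verifying its signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    payload = parts[1]
    # base64url data in a JWT has its '=' padding stripped; restore it
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))
```

Passing the generated token to decode_jwt_payload returns the claims dictionary, so the iat and exp values can be compared against the current time.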

It is also possible to load the private key directly from a Keystore using the pyOpenSSL dependency. Here is a code sample using python requests jwt authentication with a Keystore. Similar to the example above, you can pass in the generated JWT as the bearer token in your python requests REST call.

#!/usr/bin/env python3
import time

from OpenSSL import crypto
# The JWT class and jwt.jwk come from the 'jwt' package, not PyJWT
from jwt import JWT
from jwt.jwk import RSAJWK

# Load the private key from the PKCS#12 keystore
# (crypto.load_pkcs12 is deprecated in newer pyOpenSSL releases)
with open('keystore.p12', 'rb') as f:
    p12 = crypto.load_pkcs12(f.read(), bytes('changeit', 'utf-8'))

key = RSAJWK(p12.get_privatekey().to_cryptography_key())

# Create authentication token
now = int(time.time())
claims = {
    'iss': '',
    'sub': '',
    'iat': now,
    'exp': now + 10 * 60
}

jwt = JWT()
token = jwt.encode(claims, key, alg='RS256')
print(token)

If you would like to see more examples of how to authenticate to REST web services with basic auth, bearer tokens (JWTs or OAuth2), or a private key and certificate, leave us a comment.

Conclusion

This article has demonstrated how to use python requests with an x509 client certificate, python requests with a cert and key, and general authentication methods. Please leave us a comment if you have any questions on how to authenticate with a cert and key using python requests.
