From Wikipedia, the free encyclopedia

«Systematic bias» redirects here. For the sociological and organizational phenomenon, see Systemic bias.

Observational error (or measurement error) is the difference between a measured value of a quantity and its true value.[1] In statistics, an error is not necessarily a «mistake». Variability is an inherent part of the results of measurements and of the measurement process.

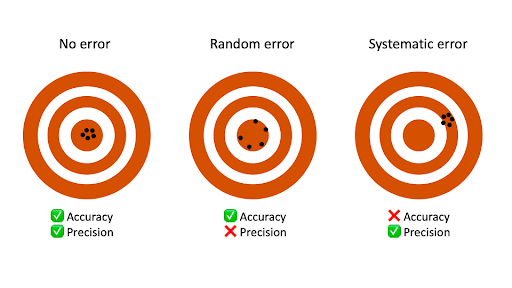

Measurement errors can be divided into two components: random and systematic.[2]

Random errors are errors in measurement that lead to measurable values being inconsistent when repeated measurements of a constant attribute or quantity are taken. Systematic errors are errors that are not determined by chance but are introduced by repeatable processes inherent to the system.[3] Systematic error may also refer to an error with a non-zero mean, the effect of which is not reduced when observations are averaged.[citation needed]

Measurement errors can be summarized in terms of accuracy and precision.

Measurement error should not be confused with measurement uncertainty.

Science and experiments[edit]

When either randomness or uncertainty modeled by probability theory is attributed to such errors, they are «errors» in the sense in which that term is used in statistics; see errors and residuals in statistics.

Every time we repeat a measurement with a sensitive instrument, we obtain slightly different results. The common statistical model used is that the error has two additive parts:

- Systematic error which always occurs, with the same value, when we use the instrument in the same way and in the same case.

- Random error which may vary from observation to another.

Systematic error is sometimes called statistical bias. It may often be reduced with standardized procedures. Part of the learning process in the various sciences is learning how to use standard instruments and protocols so as to minimize systematic error.

Random error (or random variation) is due to factors that cannot or will not be controlled. One possible reason to forgo controlling for these random errors is that it may be too expensive to control them each time the experiment is conducted or the measurements are made. Other reasons may be that whatever we are trying to measure is changing in time (see dynamic models), or is fundamentally probabilistic (as is the case in quantum mechanics — see Measurement in quantum mechanics). Random error often occurs when instruments are pushed to the extremes of their operating limits. For example, it is common for digital balances to exhibit random error in their least significant digit. Three measurements of a single object might read something like 0.9111g, 0.9110g, and 0.9112g.

Characterization[edit]

Measurement errors can be divided into two components: random error and systematic error.[2]

Random error is always present in a measurement. It is caused by inherently unpredictable fluctuations in the readings of a measurement apparatus or in the experimenter’s interpretation of the instrumental reading. Random errors show up as different results for ostensibly the same repeated measurement. They can be estimated by comparing multiple measurements and reduced by averaging multiple measurements.

Systematic error is predictable and typically constant or proportional to the true value. If the cause of the systematic error can be identified, then it usually can be eliminated. Systematic errors are caused by imperfect calibration of measurement instruments or imperfect methods of observation, or interference of the environment with the measurement process, and always affect the results of an experiment in a predictable direction. Incorrect zeroing of an instrument leading to a zero error is an example of systematic error in instrumentation.

The Performance Test Standard PTC 19.1-2005 “Test Uncertainty”, published by the American Society of Mechanical Engineers (ASME), discusses systematic and random errors in considerable detail. In fact, it conceptualizes its basic uncertainty categories in these terms.

Random error can be caused by unpredictable fluctuations in the readings of a measurement apparatus, or in the experimenter’s interpretation of the instrumental reading; these fluctuations may be in part due to interference of the environment with the measurement process. The concept of random error is closely related to the concept of precision. The higher the precision of a measurement instrument, the smaller the variability (standard deviation) of the fluctuations in its readings.

Sources[edit]

Sources of systematic error[edit]

Imperfect calibration[edit]

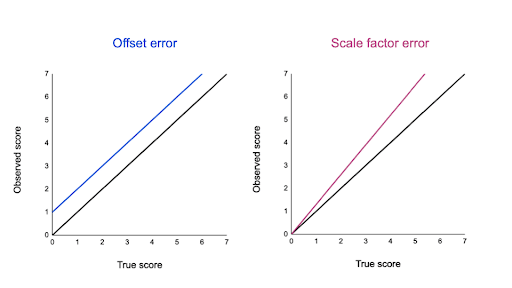

Sources of systematic error may be imperfect calibration of measurement instruments (zero error), changes in the environment which interfere with the measurement process and sometimes imperfect methods of observation can be either zero error or percentage error. If you consider an experimenter taking a reading of the time period of a pendulum swinging past a fiducial marker: If their stop-watch or timer starts with 1 second on the clock then all of their results will be off by 1 second (zero error). If the experimenter repeats this experiment twenty times (starting at 1 second each time), then there will be a percentage error in the calculated average of their results; the final result will be slightly larger than the true period.

Distance measured by radar will be systematically overestimated if the slight slowing down of the waves in air is not accounted for. Incorrect zeroing of an instrument leading to a zero error is an example of systematic error in instrumentation.

Systematic errors may also be present in the result of an estimate based upon a mathematical model or physical law. For instance, the estimated oscillation frequency of a pendulum will be systematically in error if slight movement of the support is not accounted for.

Quantity[edit]

Systematic errors can be either constant, or related (e.g. proportional or a percentage) to the actual value of the measured quantity, or even to the value of a different quantity (the reading of a ruler can be affected by environmental temperature). When it is constant, it is simply due to incorrect zeroing of the instrument. When it is not constant, it can change its sign. For instance, if a thermometer is affected by a proportional systematic error equal to 2% of the actual temperature, and the actual temperature is 200°, 0°, or −100°, the measured temperature will be 204° (systematic error = +4°), 0° (null systematic error) or −102° (systematic error = −2°), respectively. Thus the temperature will be overestimated when it will be above zero and underestimated when it will be below zero.

Drift[edit]

Systematic errors which change during an experiment (drift) are easier to detect. Measurements indicate trends with time rather than varying randomly about a mean. Drift is evident if a measurement of a constant quantity is repeated several times and the measurements drift one way during the experiment. If the next measurement is higher than the previous measurement as may occur if an instrument becomes warmer during the experiment then the measured quantity is variable and it is possible to detect a drift by checking the zero reading during the experiment as well as at the start of the experiment (indeed, the zero reading is a measurement of a constant quantity). If the zero reading is consistently above or below zero, a systematic error is present. If this cannot be eliminated, potentially by resetting the instrument immediately before the experiment then it needs to be allowed by subtracting its (possibly time-varying) value from the readings, and by taking it into account while assessing the accuracy of the measurement.

If no pattern in a series of repeated measurements is evident, the presence of fixed systematic errors can only be found if the measurements are checked, either by measuring a known quantity or by comparing the readings with readings made using a different apparatus, known to be more accurate. For example, if you think of the timing of a pendulum using an accurate stopwatch several times you are given readings randomly distributed about the mean. Hopings systematic error is present if the stopwatch is checked against the ‘speaking clock’ of the telephone system and found to be running slow or fast. Clearly, the pendulum timings need to be corrected according to how fast or slow the stopwatch was found to be running.

Measuring instruments such as ammeters and voltmeters need to be checked periodically against known standards.

Systematic errors can also be detected by measuring already known quantities. For example, a spectrometer fitted with a diffraction grating may be checked by using it to measure the wavelength of the D-lines of the sodium electromagnetic spectrum which are at 600 nm and 589.6 nm. The measurements may be used to determine the number of lines per millimetre of the diffraction grating, which can then be used to measure the wavelength of any other spectral line.

Constant systematic errors are very difficult to deal with as their effects are only observable if they can be removed. Such errors cannot be removed by repeating measurements or averaging large numbers of results. A common method to remove systematic error is through calibration of the measurement instrument.

Sources of random error[edit]

The random or stochastic error in a measurement is the error that is random from one measurement to the next. Stochastic errors tend to be normally distributed when the stochastic error is the sum of many independent random errors because of the central limit theorem. Stochastic errors added to a regression equation account for the variation in Y that cannot be explained by the included Xs.

Surveys[edit]

The term «observational error» is also sometimes used to refer to response errors and some other types of non-sampling error.[1] In survey-type situations, these errors can be mistakes in the collection of data, including both the incorrect recording of a response and the correct recording of a respondent’s inaccurate response. These sources of non-sampling error are discussed in Salant and Dillman (1994) and Bland and Altman (1996).[4][5]

These errors can be random or systematic. Random errors are caused by unintended mistakes by respondents, interviewers and/or coders. Systematic error can occur if there is a systematic reaction of the respondents to the method used to formulate the survey question. Thus, the exact formulation of a survey question is crucial, since it affects the level of measurement error.[6] Different tools are available for the researchers to help them decide about this exact formulation of their questions, for instance estimating the quality of a question using MTMM experiments. This information about the quality can also be used in order to correct for measurement error.[7][8]

Effect on regression analysis[edit]

If the dependent variable in a regression is measured with error, regression analysis and associated hypothesis testing are unaffected, except that the R2 will be lower than it would be with perfect measurement.

However, if one or more independent variables is measured with error, then the regression coefficients and standard hypothesis tests are invalid.[9]: p. 187 This is known as attenuation bias.[10]

See also[edit]

- Bias (statistics)

- Cognitive bias

- Correction for measurement error (for Pearson correlations)

- Errors and residuals in statistics

- Error

- Replication (statistics)

- Statistical theory

- Metrology

- Regression dilution

- Test method

- Propagation of uncertainty

- Instrument error

- Measurement uncertainty

- Errors-in-variables models

- Systemic bias

References[edit]

- ^ a b Dodge, Y. (2003) The Oxford Dictionary of Statistical Terms, OUP. ISBN 978-0-19-920613-1

- ^ a b John Robert Taylor (1999). An Introduction to Error Analysis: The Study of Uncertainties in Physical Measurements. University Science Books. p. 94, §4.1. ISBN 978-0-935702-75-0.

- ^ «Systematic error». Merriam-webster.com. Retrieved 2016-09-10.

- ^ Salant, P.; Dillman, D. A. (1994). How to conduct your survey. New York: John Wiley & Sons. ISBN 0-471-01273-4.

- ^ Bland, J. Martin; Altman, Douglas G. (1996). «Statistics Notes: Measurement Error». BMJ. 313 (7059): 744. doi:10.1136/bmj.313.7059.744. PMC 2352101. PMID 8819450.

- ^ Saris, W. E.; Gallhofer, I. N. (2014). Design, Evaluation and Analysis of Questionnaires for Survey Research (Second ed.). Hoboken: Wiley. ISBN 978-1-118-63461-5.

- ^ DeCastellarnau, A. and Saris, W. E. (2014). A simple procedure to correct for measurement errors in survey research. European Social Survey Education Net (ESS EduNet). Available at: http://essedunet.nsd.uib.no/cms/topics/measurement Archived 2019-09-15 at the Wayback Machine

- ^ Saris, W. E.; Revilla, M. (2015). «Correction for measurement errors in survey research: necessary and possible» (PDF). Social Indicators Research. 127 (3): 1005–1020. doi:10.1007/s11205-015-1002-x. hdl:10230/28341. S2CID 146550566.

- ^ Hayashi, Fumio (2000). Econometrics. Princeton University Press. ISBN 978-0-691-01018-2.

- ^ Angrist, Joshua David; Pischke, Jörn-Steffen (2015). Mastering ‘metrics : the path from cause to effect. Princeton, New Jersey. p. 221. ISBN 978-0-691-15283-7. OCLC 877846199.

The bias generated by this sort of measurement error in regressors is called attenuation bias.

Further reading[edit]

- Cochran, W. G. (1968). «Errors of Measurement in Statistics». Technometrics. 10 (4): 637–666. doi:10.2307/1267450. JSTOR 1267450.

- Research

- Random measurement…

- Random measurement error and regression dilution bias

Research Methods & Reporting

BMJ

2010;

340

doi: https://doi.org/10.1136/bmj.c2289

(Published 23 June 2010)

Cite this as: BMJ 2010;340:c2289

- Jennifer A Hutcheon, postdoctoral fellow1,

- Arnaud Chiolero, doctoral candidate, fellow in public health23,

- James A Hanley, professor of biostatistics2

- 1Department of Obstetrics & Gynaecology, University of British Columbia, Vancouver, Canada

- 2Department of Epidemiology, Biostatistics, and Occupational Health, McGill University, Purvis Hall, 1020 Avenue des Pins Ouest, Montreal QC, Canada H3A 1A2

- 3Institute of Social and Preventive Medicine (IUMSP), University Hospital Centre and University of Lausanne, Lausanne, Switzerland

- Correspondence to: J A Hanley james.hanley{at}mcgill.ca

- Accepted 2 February 2010

Random measurement error is a pervasive problem in medical research, which can introduce bias to an estimate of the association between a risk factor and a disease or make a true association statistically non-significant. Hutcheon and colleagues explain when, why, and how random measurement error introduces bias and provides strategies for researchers to minimise the problem

Summary points

-

The bias introduced by random measurement error will be different depending on whether the error is in an exposure variable (risk factor) or outcome variable (disease)

-

Random measurement error in an exposure variable will bias the estimates of regression slope coefficients towards the null

-

Random measurement error in an outcome variable will instead increase the standard error of the estimates and widen the corresponding confidence intervals, making results less likely to be statistically significant

-

Increasing sample size will help minimise the impact of measurement error in an outcome variable but will only make estimates more precisely wrong when the error is in an exposure variable

Introduction

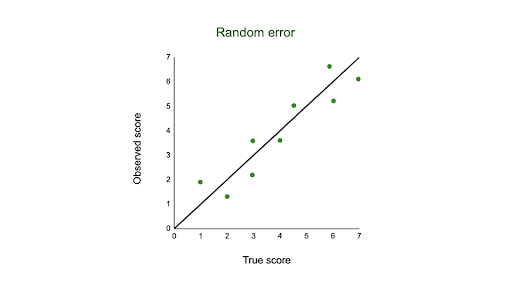

Random measurement error is a pervasive problem in medical research and clinical practice.1 It occurs when measurements fluctuate unpredictably around their true values and is caused by imprecise measurement tools or true biological variability, or both. For instance, when blood pressure is assessed with a sphygmomanometer, random error may arise from imprecise measurement due to rounding error or from true diurnal or day to day variation in pressure.2 3 Hence, a blood pressure reading obtained at a single occasion may differ by an unpredictable (random) amount from an individual’s usual blood pressure.3

Random measurement error differs from systematic measurement error.4 Systematic error occurs when the measurement error, after multiple measurements, does not average out to zero. The measurements are consistently wrong in a particular direction—for example, they tend to be higher than the true values. In the case of blood pressure measurement, systematic error may be due to improper calibration of the sphygmomanometer or improper arm cuff size, and averaging multiple blood pressure measurements will not help estimate true blood pressure.

While the impact of systematic error is generally well appreciated by researchers and addressed in epidemiological and clinical studies, the impact of random measurement error is often less well appreciated. Since the total error in a variable with random measurement error averages out to zero, many people assume that the effects of random measurement error on the estimate of the association between an exposure (risk factor) and an outcome (disease) obtained from a regression model will also cancel out (that is, have no effect on the estimate). Others have observed that random measurement error can bias the regression slope coefficient downwards towards the null, a phenomenon known as attenuation or regression dilution bias.5 6 7

In reality, the estimate of the association between an exposure and an outcome is attenuated by random measurement error in some situations but remain unchanged in others. In this article we use a simple example to show when, to what extent, and why random measurement error affects the estimates produced by regression models to assess the association between two variables. In particular, we describe how the effect of random measurement differs depending on whether the measurement error is in the exposure or outcome variable. We also make recommendations for dealing with random measurement error in the design and analysis of studies.

Glossary of terms

-

Random measurement error—This occurs when the recorded values of a study variable fluctuate randomly around the true values, such that some recorded values will be higher than the true values and other recorded values will be lower

-

Linear regression model—Statistical model used to evaluate the relation between one or more exposure variables and an outcome that is measured on a continuous scale (such as weight, blood glucose concentration, or bone mineral density). The linear relation between an exposure (X) and outcome (Y) is described by the regression equation E(Y) = β0 + β1X, where E(Y) is the expected (average) value of the variable Y, β0 is the intercept (the average value of the outcome Y when the exposure X has a value of zero), and β1 is the slope of the line

-

Regression slope—The slope of the line between an exposure and outcome variable in a linear regression model. It provides an estimate of the association between an exposure and outcome variable. For instance, a slope estimate of 2 would mean that for every 1 unit difference in the exposure (X) variable, the outcome (Y) variable would be, on average, higher by 2 units. The estimate of the regression slope is also referred to as the “beta coefficient estimate” or “slope coefficient estimate”

-

Regression dilution bias—A statistical phenomenon whereby random measurement error in the values of an exposure variable (X) causes an attenuation or “flattening” of the slope of the line describing the relation between the exposure (X) and an outcome (Y) of interest

Example

For illustrative purposes, we consider the simplistic case of a study conducted in four hypothetical individuals. The aim of this study is to assess the association between the exposure variable systolic blood pressure and the outcome variable left ventricular mass index (LVMI).8 It is well known that elevated blood pressure is associated with a large LVMI.8 Imagine that both variables are measured without measurement error and are perfectly correlated, so that all four observations fall along the regression line. The regression slope, or coefficient (β), is 1.00 g/m2/mm Hg (see appendix on bmj.com for the detailed calculation). In other words, for every 1 mm Hg difference in systolic blood pressure, LVMI is an average of 1 g/m2 higher. The table⇓ shows the systolic blood pressure and LVMI values measured for each individual, with no errors (section a) and with random errors in the exposure and outcome variables (sections b and c). Figure 1⇓ shows the relation between exposure and outcome variable in diagrammatic form.

Values of exposure variable systolic blood pressure and outcome variable left ventricular mass index (LVMI) with different degrees of random measurement error

Fig 1 Effect of random measurement error on relation between systolic blood pressure (exposure) and left ventricular mass index (LVMI) (outcome). With no random measurement error (panel a), the slope (β) of the line describes the error-free association between blood pressure (X) and LVMI (Y); when blood pressure is measured with a random error of ±10 or ±20 mm Hg (panel b), there is attenuation of the slope; when LVMI is measured with a random error of ±10 or ±20 g/m2 (panel c), there is increase in variability but no change in slope

- Download figure

- Open in new tab

- Download powerpoint

Random measurement error in the exposure (X) variable

Suppose that systolic blood pressure was measured with random errors of ±10 or ±20 mm Hg (see values in section b of table⇑). The regression slopes estimating the association between systolic blood pressure and LVMI flatten with increasing measurement error (fig 1, panel b⇑). As measurement error in systolic blood pressure increases, the observations become spread further apart on the X axis. While the systolic blood pressure values without measurement error range from 120 to 160 mm Hg, the horizontal range (along the X axis) increases to 100-170 mmHg with ±20 mm Hg error. The vertical range of the observations (along the Y axis), however, remains constant. Since the regression line is fitted by minimising the vertical distance between observations and their predicted values, the best fit line becomes increasingly flattened (“stretched out”) in order to accommodate the increased horizontal spread of the observations. The slope β decreases from 1.00 to 0.71 g/m2/mm Hg with ±10 mm Hg random error, and to 0.38 g/m2/mm Hg with ±20 mm Hg random error.

In an extreme case, the spread of observations along the X axis could become so large that the estimate of the best-fit regression line would be virtually flat, resulting in a complete attenuation of the association between systolic blood pressure and LVMI.

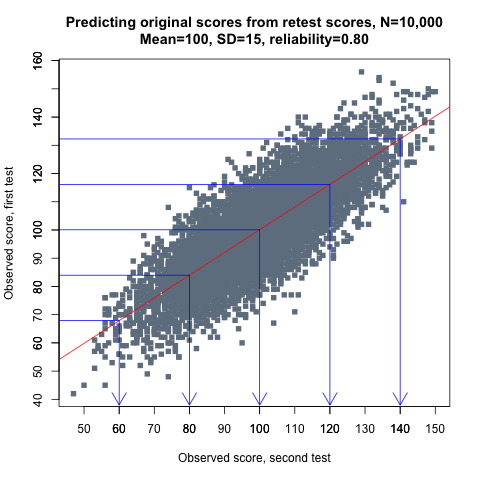

The extent of the bias in the estimate of the error-prone regression slope (β*) for a variable measured with random error (X*) is quantified in fig 2⇓.

The ratio of variation in error-free (true) X values to the variation in the observed error-prone (observed) values is known as the reliability coefficient, attenuation factor, or intra-class correlation. Because the variation in observed values is greater than the variation in error-free values due to random error, the ratio variation(X)/variation(X*) will be lower than 1, and the new estimate of the coefficient β* will be reduced in proportion, a typical case of regression dilution bias.

In practice, the use of an exposure variable (X) measured with random error results in underestimating (or even missing altogether) an association. A well known example is the underestimation of the association between usual blood pressure and the risk of cardiovascular disease.6 Blood pressure is most often estimated based on a limited number of readings (for example, office measurements), which leads to an imperfect approximation of usual blood pressure. The presence of random measurement error in estimates of usual blood pressure may underestimate the relative risk of cardiovascular disease due to elevated blood pressure by up to 60%.6 It explains, at least in part, why risk of cardiovascular disease is more strongly associated with blood pressure estimates using 24 hour, ambulatory blood pressure measurements (based on numerous readings, hence with less random error) than office blood pressure (based on fewer readings).3

Measurement error in the outcome (Y) variable

What if the exposure variable, systolic blood pressure, was measured without error, but the outcome variable, LVMI, had random measurement error? Would a similar attenuation of the estimated regression coefficient be seen?

Suppose that LVMI (Y) was measured with a random error of ±10 g/m2 or ±20 g/m2 (values in section c of the table⇑). When these error-prone LVMI values are regressed on systolic blood pressure, we see that the vertical distance (along the Y axis) between each observation and the regression line increases (panel c of fig 1⇑). However, although the total vertical distance between each observation and the regression line is increased, the slope of the line that is able to minimise these distances is identical. As a result, no attenuation of the estimate of the regression coefficient occurs, and it remains constant at β=1.00 g/m2/mm Hg. The increased vertical distance between observed and predicted values is reflected instead in the increased standard errors around the estimate for β, which increase from 0 with no measurement error to 0.45 with ±10 g/m2 error and to 0.89 with ±20 g/m2 error.

Why does the slope not flatten in this situation?

The equation for a regression model with no error can be expressed as Y = β0 + βX + ε (equation 1), where the error term ε represents the variability in Y that is not explained by the model’s exposure variable (X).

When Y is measured with error, Y is replaced in equation 1 with the observed (error-prone) variable Y*, which is equal to Y + random error. It can be shown that rearranging terms yields Y* = β0 + βX + ε + random error (equation 2). The random measurement error is simply added to the existing error term (ε) and, as a result, increases the total amount of unexplained variance in the regression model. The standard error for the estimate of β is therefore increased, with a correspondingly wider confidence interval. If a confidence interval is widened enough to include zero (for example, an estimate of the slope of 0.4, but with a 95% confidence interval from −0.1 to 0.9), the exposure would no longer be considered a statistically significant risk factor for the outcome of interest. The estimate of the regression coefficient β, however, is not affected.

In practice, although the regression coefficient itself will be unbiased when there is random measurement error in the outcome variable, the increased standard error could result in an association being overlooked because of lack of statistical significance. In essence, random measurement error in the outcome variable (Y) makes a study underpowered to detect a true effect of an exposure.

For example, ultrasound estimates of fetal weight are prone to a large degree of random measurement error (±10-15%).9 This error reduces the value of the estimated fetal weight in making appropriate clinical decisions, such as the timing of delivery for macrosomia. It could also influence conclusions of studies aimed at understanding determinants of fetal growth. If a researcher assesses the effects of maternal stress on fetal growth by estimating the relation between maternal cortisol levels (X) and fetal weight (Y),10 the 95% confidence intervals associated with the estimate of the slope β of the relation between the two variables will be widened due to the measurement error in estimated fetal weight. If the confidence interval is widened enough to include zero, the researcher would conclude that the association between maternal cortisol and fetal weight is not statistically significant, irrespective of the value of the slope itself.

Spirometry readings are another type of measurement prone to substantial random error, which is introduced by imprecise equipment, variability in technician skill, and participant behaviour.11 Consequently, confidence intervals around the estimated slope would also be widened in studies assessing determinants of respiratory status if the outcome is measured using spirometry.

In summary, the impact of random measurement error will be different depending on whether the error is in the exposure (X) or the outcome (Y) variable:

-

Random measurement error in the exposure variable (X) will bias the regression coefficient (slope) towards the null (regression dilution bias, attenuation)

-

Random measurement error in the outcome variable (Y) will have minimal effect on the regression coefficient, but will decrease the precision of the estimate (that is, increase the standard error).

The impact of random measurement error on measures of association is not restricted to cases where the outcome of interest is a continuous variable; it also occurs when the outcome of interest is a binary variable (such as disease versus no disease) or a survival time. For example, using home blood pressure measurements as the exposure (X), the hazard ratio for cardiovascular diseases (the outcome Y) was 1.020/unit of mm Hg based one measurement versus 1.035/unit of mm Hg based on the average of eight measurements.12 Of note, if correlation is used to assess an association between two variables, the correlation coefficient will be reduced if random error occurs either in X or in Y.

Additional bias beyond the effects of random measurement error can be introduced if the degree of random error differs according to case or control status (or exposed v unexposed status). The impact of this “differential” measurement error, and strategies to minimise it, are described elsewhere.13 For a comprehensive treatment of measurement error, including what to do if there is measurement error in confounder variables, we recommend the textbook of Carroll et al.14

Recommendations for researchers

The best strategy for dealing with random measurement error is to minimise it in the first place at the study design stage, either by investing in instruments capable of more precise measurements or obtaining repeated measurements from an individual to better estimate the true values.

With random measurement error in the exposure (X) variable, increasing the sample size will not minimise the bias from random error. Increasing the sample size will only make the estimates more precisely wrong.

If estimates of the extent of measurement error can be obtained from internal validation studies or the literature15 (using the reliability coefficient R), the regression coefficients can be corrected for the expected downward bias. Several authors have reviewed different statistical approaches to correct biased regression coefficients.16 17 18 However, these approaches rely on assumptions that may often not be met and are difficult to verify.19 The heated debate over the validity of “de-attenuated” estimates of the association between 24 hour sodium excretion in urine and blood pressure in the Intersalt study in the BMJ,20 21 22 23 24 for example, serves to underline the limitations of addressing measurement error in the analysis stage of a study. Correction for regression dilution bias requires a clear understanding of not only the extent of the random error but also the degree to which the error may be correlated with error in other variables. Any correlation in the errors, as was argued might occur between 24 hour sodium excretion and blood pressure, would produce highly inflated estimates of the association between sodium and blood pressure. These corrections for regression dilution bias may be better used for exploratory or sensitivity analyses.

If the outcome (Y) variable is prone to random measurement error, researchers should increase either the sample size or the number of measurements taken per subject to account for the increased standard error of the coefficient estimate. This increase will compensate for the precision lost as a result of random error.

The increase in number of subjects required can be estimated by the formula n/R, where n is the sample size required if no measurement error exists and R is the reliability coefficient. For example, if a sample size of 100 patients is required with error-free measurements, the use of error-prone measurements with a reliability coefficient of R = 0.6 would increase the number of patients required to detect the same effect to n/R = 100/0.6 = 167 patients.25 For cases where increasing the number of measurements per patient is preferable to increasing the number of patients, the Spearman-Brown formula for stepped up reliability can be used to estimate the number of repeated measurements per subject required to achieve a desired level of precision.26 27 28

Notes

Cite this as: BMJ 2010;340:c2289

Footnotes

-

Contributors: All authors contributed to the conception and drafting of the manuscript and approved the final version of the manuscript for publication. Table and figures were produced by AC. JAHutcheon is guarantor for the article.

-

Details of funding: JAHutcheon was supported by a doctoral research award from the Canadian Institutes of Health Research. AC was supported by a grant from the Swiss National Science Foundation (PASMA-115691/1) and by a grant from the Canadian Institutes of Health Research. JAHanley was supported by the Natural Sciences and Engineering Research Council of Canada and the Fonds québécois de la recherche sur la nature et les technologies. The work in this study was independent of funders.

-

Competing interests: All authors have completed the Unified Competing Interest form at www.icmeje.org/coi_disclosure.pdf (available on request from the corresponding author) and declare that (1) none of the authors has support from any companies for the submitted work; (2) none of the authors has relationships with any company that might have an interest in the submitted work in the previous 3 years; (3) their spouses, partners, or children have no financial relationships that may be relevant to the submitted work; and (4) none of the authors has any non-financial interests that may be relevant to the submitted work.

References

- ↵

Bland JM, Altman DG. Measurement error. BMJ1996;313:744.

- ↵

Rose G. Standardisation of observers in blood pressure measurement. Lancet1965;285:673-4.

- ↵

Pickering TG, Shimbo D, Haas D. Ambulatory blood-pressure monitoring. N Engl J Med2006;354:2368-74.

- ↵

Last JM, ed. A dictionary of epidemiology. 4th ed. Oxford University Press, 2001.

- ↵

Spearman C. The proof and measurement of association between two things. Am J Psychol1904;15:72-101.

- ↵

MacMahon S, Peto R, Cutler J, Collins R, Sorlie P, Neaton J, et al. Blood pressure, stroke, and coronary heart disease. Part 1, prolonged differences in blood pressure: prospective observational studies corrected for the regression dilution bias. Lancet1990;335:765-74.

- ↵

Liu K. Measurement error and its impact on partial correlation and multiple linear regression analyses. Am J Epidemiol1988;127:864-74.

- ↵

Den Hond E, Staessen JA, on behalf of the APTH THOP investigators. Relation between left ventricular mass and systolic blood pressure at baseline in the APTH and THOP trials. Blood Press Monit2003;8:173-5.

- ↵

Dudley NJ. A systematic review of the ultrasound estimation of fetal weight. Ultrasound Obstet Gynecol2005;25:80-9.

- ↵

Diego MA, Jones NA, Field T, Hernandez-Reif M, Schanberg S, Kuhn C, et al. Maternal psychological distress, prenatal cortisol, and fetal weight. Psychosom Med2006;68:747-53.

- ↵

Miller MR, Hankinson J, Brusasco V, Burgos F, Casaburi R, Coates A, et al. Standardisation of spirometry. Eur Respir J2005;26:319-38.

- ↵

Stergiou GS, Parati G. How to best monitor blood pressure at home? Assessing numbers and individual patients. J Hypertens2010;28:226-8.

- ↵

Greenland S, Lash TL. Bias analysis. In: Rothman KJ, Greenland S, Lash TL, eds. Modern epidemiology. 3rd ed. Lippincott, Williams & Wilkins, 2008:345-80.

- ↵

Carroll RJ, Ruppert D, Stefanski LA. Measurement error in nonlinear models. Chapman and Hall/CRC Press, 1995.

- ↵

Whitlock G, Clarke T, Vander Hoorn S, Rodgers A, Jackson R, Norton R, et al. Random errors in the measurement of 10 cardiovascular risk factors. Eur J Epidemiol2001;17:907-9.

- ↵

Knuiman MW, Divitini ML, Buzas JS, Fitzgerald PEB. Adjustment for regression dilution in epidemiological regression analyses. Ann Epidemiol1998;8:56-63.

- ↵

Rosner B, Speigelman D, Willett WC. Correction of logistic regression relative risk estimates and confidence intervals for random within-person measurement error. Am J Epidemiol1992;136:1400-13.

- ↵

Andersen PK, Liestol K. Attenuation caused by infrequently updated covariates in survival analysis. Biostatistics2003;4:633-49.

- ↵

Frost C, White IR. The effect of measurement error in risk factors that change over time in cohort studies: do simple methods overcorrect for “regression dilution”? Int J Epidemiol2005;34:1359-68.

- ↵

Elliott P, Stamler J, Nichols R, Dyer AR, Stamler R, Kesteloot H, et al, for the Intersalt Cooperative Research Group. Intersalt revisited: further analyses of 24 hour sodium excretion and blood pressure within and across populations. BMJ1996;312:1249-53.

- ↵

Dyer AR, Elliott P, Marmot M, Kesteloot H, Stamler R, Stamler J, for the Intersalt Steering and Editorial Committee. Strength and importance of the relation of dietary salt to blood pressure. BMJ1996;312:1661-4.

- ↵

Davey Smith G, Phillips AN. Inflation in epidemiology: “The proof and measurement of association between two things” revisited. BMJ1996;312:1659-64.

- ↵

Day NE. Epidemiological studies should be designed to reduce correction needed for measurement error to a minimum. BMJ1997;315:484.

- ↵

Davey Smith G, Phillips AN. Correction for regression dilution bias in Intersalt study was misleading. BMJ1997;315:484.

- ↵

Fitzmaurice G. Measurement error and reliability. Nutrition2002;18:112-4.

- ↵

Perkins DO, Wyatt RJ, Bartko JJ. Penny-wise and pound-foolish: the impact of measurement error on sample size requirements in clinical trials. Biol Psychiatry2000;47:762-6.

- ↵

Spearman C. Correlation calculated from faulty data. Br J Psychol1910;3:271-95.

- ↵

Brown W. Some experimental results in the correlation of mental abilities. Br J Psychol1910;3:296-322.

A random measurement error is one that stems from fluctuation in the conditions within a system being measured which has nothing to do with the true signal being measured.

From: Clinical Engineering, 2014

Calibration of measuring sensors and instruments

Alan S. Morris, Reza Langari, in Measurement and Instrumentation (Third Edition), 2021

5.1 Introduction

We have just examined the various systematic and random measurement error sources in the last chapter. As far as systematic errors are concerned, we observed that recalibration at a suitable frequency was an important weapon in the quest to minimize errors due to drift in instrument characteristics. The use of proper and rigorous calibration procedures is essential in order to ensure that recalibration achieves its intended purpose, and, to reflect the importance of getting these procedures right, this whole chapter is dedicated to explaining the various facets of calibration.

We start in Section 5.2 by formally defining what calibration means, explaining how it is performed and considering how to calculate the frequency with which the calibration exercise should be repeated. We then go on to look at the calibration environment in Section 5.3, where we learn that proper control of the environment in which instruments are calibrated is an essential component in good calibration procedures. Section 5.4 then continues with a review of how the calibration of working instruments against reference instruments is linked by the calibration chain to national and international reference standards relating to the quantity that the instrument being calibrated is designed to measure. Finally, Section 5.5 emphasizes the importance of maintaining records of instrument calibrations and suggests appropriate formats for such records.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128171417000050

Measurement Uncertainty

Alan S. Morris, Reza Langari, in Measurement and Instrumentation, 2012

3.6.3 Graphical Data Analysis Techniques—Frequency Distributions

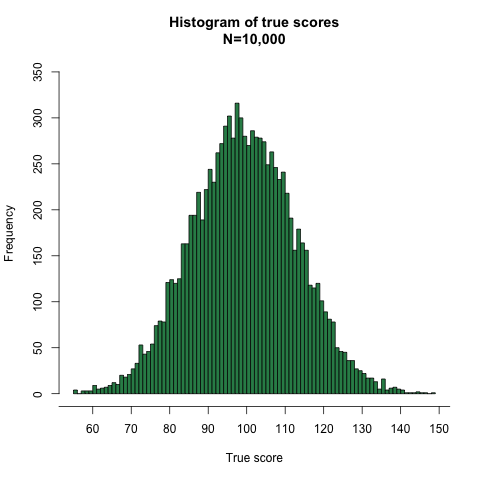

Graphical techniques are a very useful way of analyzing the way in which random measurement errors are distributed. The simplest way of doing this is to draw a histogram, in which bands of equal width across the range of measurement values are defined and the number of measurements within each band is counted. The bands are often given the name data bins. A useful rule for defining the number of bands (bins) is known as the Sturgis rule, which calculates the number of bands as

Number of bands=1+3.3log10n,

where n is the number of measurement values.

Example 3.5

Draw a histogram for the 23 measurements in set C of length measurement data given in Section 3.5.1.

Solution

For 23 measurements, the recommended number of bands calculated according to the Sturgis rule is 1 + 3.3 log10(23) = 5.49. This rounds to five, as the number of bands must be an integer number.

To cover the span of measurements in data set C with five bands, data bands need to be 2 mm wide. The boundaries of these bands must be chosen carefully so that no measurements fall on the boundary between different bands and cause ambiguity about which band to put them in. Because the measurements are integer numbers, this can be accomplished easily by defining the range of the first band as 401.5 to 403.5 and so on. A histogram can now be drawn as in Figure 3.6 by counting the number of measurements in each band.

Figure 3.6. Histogram of measurements and deviations.

In the first band from 401.5 to 403.5, there is just one measurement, so the height of the histogram in this band is 1 unit.

In the next band from 403.5 to 405.5, there are five measurements, so the height of the histogram in this band is 1 = 5 units.

The rest of the histogram is completed in a similar fashion.

When a histogram is drawn using a sufficiently large number of measurements, it will have the characteristic shape shown by truly random data, with symmetry about the mean value of the measurements. However, for a relatively small number of measurements, only approximate symmetry in the histogram can be expected about the mean value. It is a matter of judgment as to whether the shape of a histogram is close enough to symmetry to justify a conclusion that data on which it is based are truly random. It should be noted that the 23 measurements used to draw the histogram in Figure 3.6 were chosen carefully to produce a symmetrical histogram but exact symmetry would not normally be expected for a measurement data set as small as 23.

As it is the actual value of measurement error that is usually of most concern, it is often more useful to draw a histogram of deviations of measurements from the mean value rather than to draw a histogram of the measurements themselves. The starting point for this is to calculate the deviation of each measurement away from the calculated mean value. Then a histogram of deviations can be drawn by defining deviation bands of equal width and counting the number of deviation values in each band. This histogram has exactly the same shape as the histogram of raw measurements except that scaling of the horizontal axis has to be redefined in terms of the deviation values (these units are shown in parentheses in Figure 3.6).

Let us now explore what happens to the histogram of deviations as the number of measurements increases. As the number of measurements increases, smaller bands can be defined for the histogram, which retains its basic shape but then consists of a larger number of smaller steps on each side of the peak. In the limit, as the number of measurements approaches infinity, the histogram becomes a smooth curve known as a frequency distribution curve, as shown in Figure 3.7. The ordinate of this curve is the frequency of occurrence of each deviation value, F(D), and the abscissa is the magnitude of deviation, D.

Figure 3.7. Frequency distribution curve of deviations.

The symmetry of Figures 3.6 and 3.7 about the zero deviation value is very useful for showing graphically that measurement data only have random errors. Although these figures cannot be used to quantify the magnitude and distribution of the errors easily, very similar graphical techniques do achieve this. If the height of the frequency distribution curve is normalized such that the area under it is unity, then the curve in this form is known as a probability curve, and the height F(D) at any particular deviation magnitude D is known as the probability density function (p.d.f.). The condition that the area under the curve is unity can be expressed mathematically as

∫−∞∞F(D)dD=1.

The probability that the error in any one particular measurement lies between two levels D1 and D2 can be calculated by measuring the area under the curve contained between two vertical lines drawn through D1 and D2, as shown by the right-hand hatched area in Figure 3.7. This can be expressed mathematically as

(3.11)PD1≤D≤D2=∫D1D2F(D)dD.

Of particular importance for assessing the maximum error likely in any one measurement is the cumulative distribution function (c.d.f.). This is defined as the probability of observing a value less than or equal to Do and is expressed mathematically as

(3.12)P(D≤D0)=∫−∞D0F(D)dD.

Thus, the c.d.f. is the area under the curve to the left of a vertical line drawn through Do, as shown by the left-hand hatched area in Figure 3.7.

The deviation magnitude Dp corresponding with the peak of the frequency distribution curve (Figure 3.7) is the value of deviation that has the greatest probability. If the errors are entirely random in nature, then the value of Dp will equal zero. Any nonzero value of Dp indicates systematic errors in data in the form of a bias that is often removable by recalibration.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780123819604000036

Active Geophysical Monitoring

Vyacheslav I. Yushin, Nikolay I. Geza, in Handbook of Geophysical Exploration: Seismic Exploration, 2010

4.3 Revealing latent relationships using the correlation method

A correlation analysis reveals the relationship between two series or between a series and a known function obscured by random measurement errors. It is better suited for a discontinuous time series than for spectral analysis. To estimate the hypothetical tidal component in the variations of some parameters, we must consider the following statistical problem. Let X and Y be two functions of two other independent random variables Φ and N:

(14)X=GsinΦ

(15)Y=BsinΦ+N,

where G and B are constants. G is assumed known (amplitude of gravity variations), N (random error in measurements of some parameter Y of a seismic wave) has a normal distribution with standard deviation σN, and Φ is distributed uniformly over the 0-2π interval. We wish to estimate a constant B in a selection of n independent measurements (number of measurements taken during the monitoring period, or the length of the series). Physically, value Φ models a tidal phase, which is certainly not a random component. However, if we consider that measurements are taken at arbitrary moments of time, such an approach is valid, because this assumption does not improve the final estimate with respect to the real situation. The solution of this problem leads to the following result. The correlation coefficient of arbitrary values X and Y is determined by the following expression:

(16)rXY=γ1+γ2,

where γ is the amplitude signal-to-noise ratio in an observation series

(17)γ=B2⋅σN.

The relationship (16) enables us to evaluate the original signal-to-noise ratio (17) using the correlation coefficient between series X and Y:

(18)γ=rXY1−rXY2,

where the true value rXY can be replaced by its empirical estimate. Thus, the algorithm of statistical evaluation for the tidal component B includes the following steps:

- 1.

-

calculation of the standard deviation of series Y;

- 2.

-

calculation of rXY;

- 3.

-

calculation of the initial signal-to-noise ratio γ by formula (18);

- 4.

-

evaluation of B by formula obtained from (17), which leads to

(19)B=2⋅γ⋅σN,

where, since γ≪1, measurement errors σN can be replaced with the standard deviation σY of series Y. The accuracy and stability of this estimate can be verified via the standard deviation σr of the empirical correlation coefficient, which is known (Livshits and Pugachev, 1963) to depend on the volume of selection n as

(20)σr=1−rXY2n.

The value of the constant G is in this case of no significance.

Let us now estimate the tidal component in travel-time change for the reference wavelet W4 using these equations and real data. The highest correlation between tidal effect and variation t4 (excluding the trend) was at r∗=0.17, associated with a 4 h shift in the travel-time series {t4} relative to the tide curve. The rms error in this estimate was σr=0.12, according to Eq. (20)—i.e., of low statistical significance. Nevertheless, assuming the empirical value r∗=0.17 to be the most reliable, the respective signal-to-noise ratio γ≈r=0.17 and the amplitude of the tidal component by Eqs (18) and (19) is:

(21)Bmax<2⋅0.17⋅0.5=0.12ms .

Therefore, using an additional correlation analysis, we arrived at a detectable limit of the tidal component more than five times lower than its visible magnitude (Eq. (10)), with a travel-time relative value of 0.5×10−5.

The obtained result should be considered in terms of probability. It indicates that if a correlation between travel time and tide does exist, it can be estimated by the above value. This is in agreement with observations, but the experimental data and its volume are insufficient for a more detailed conclusion. Moreover, the rough statistical estimates of the relationship between the two series appear too optimistic, since they ignore the true probability of data distribution remaining unattainable.

To estimate the probable natural scatter of the empirical correlation coefficient in the case when a series lacks any invariable component, we performed a numerical experiment on the correlation of independent arbitrary-number selections at n=60, as in nature with the real tidal function. Some selections contained a shifted series with a correlation coefficient of up to 0.15-0.2. Consequently, the detectable limit of tidal-velocity variation is overestimated, in spite of the above formal value, and in reality, it must be much lower than 0.5×10−5.

Estimates of the correlation between the travel-time differences {ti-tj} of the other six wavelets and the tidal component did not show absolute values above that for {t4}, and hence do not contradict the obtained upper boundary value.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/S0950140110040334

Software Architectures and Tools for Computer Aided Process Engineering

L. Puigjaner, … L. Puigjaner, in Computer Aided Chemical Engineering, 2002

3.5.5.3 Gross Error Detection

The adjustment of process data leading to better estimates of the process variable true value is normally performed in two steps. Non-random measurement errors, such as persistent gross errors, must first be detected, then removed or corrected. Next, the measurement(s) and/or constraint(s) that contain the gross error must be identified Indeed, meaningful data reconciliation can be achieved if and only if there is no gross error present in the data.

Thus the functionality of gross error detection encompasses the detection of the gross error, the identification of the variable subject to error and if possible the correction of the error encountered. On the other hand GED could return information about the type of gross error that has been identified, namely, a process-related error or measurement-related error. GED must be performed prior to DR step since a key assumption during DR is that errors are normally distributed. The residual vector (e) in Eq. (5) affect the violation of the constraints by the measurement and is the fundamental vector used in gross error detection with its covariance matrix. In order to maintain the degree of redundancy and observability, it is preferable, if possible, to compensate the measurement rather than eliminating the variable in error.

(5)fxm=e

The first step in the development of data reconciliation, parameter estimation, Gross error detection or variable classification is the preparation of a process model. This model is generally based on balance equation (energy, mass, components). This use case allows the introduction of the entire model constraints that represents the whole plant and the model parameters.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/S1570794602802127

Statistical analysis of measurements subject to random errors

Alan S. Morris, Reza Langari, in Measurement and Instrumentation (Third Edition), 2021

4.9 Distribution of manufacturing tolerances

Many aspects of manufacturing processes are subject to random variations caused by factors similar to those that cause random errors in measurements. In most cases, these random variations in manufacturing, which are known as tolerances, fit a Gaussian distribution, and the previous analysis of random measurement errors can be applied to analyze the distribution of these variations in manufacturing parameters.

Example 4.6

An integrated circuit chip contains 105 transistors. The transistors have a mean current gain of 20 and a standard deviation of 2. Calculate the following:

- (a)

-

the number of transistors with a current gain between 19.8 and 20.2

- (b)

-

the number of transistors with a current gain greater than 17

Solution

- (a)

-

The proportion of transistors in which 19.8 < gain < 20.2 is:

P[X<20.2]−P[X<19.8]=P[z<0.2]−P[z<−0.2](forz=(X−μ)/σ)

For X = 20.2; z = 0.1 and for X = 19.8; z = −0.1

From the tables, P[z < 0.1] = 0.5398 and thus P[z < −0.1] = 1 − P[z < 0.1] = 1–0.5398 = 0.4602

Hence, P[z < 0.1] − P[z < −0.1] = 0.5398 – 0.4602 = 0.0796

Thus 0.0796 × 105 = 7960 transistors have a current gain in the range 19.8–20.2.

- (b)

-

The number of transistors with gain > 17 is given by:

P[x > 17] = 1 − P[x < 17] = 1 − P[z < −1.5] = P[z < +1.5] = 0.9332

Thus, 93.32% (i.e., 93,320 transistors) has a gain > 17.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128171417000049

Volume 3

Herschel Caytak, … Miodrag Bolic, in Encyclopedia of Biomedical Engineering, 2019

Conclusion

The objective of this article was to present an overview of the major processing steps of BIS data including modeling, denoising, and classification. In order to provide context to the challenges inherent in denoising and classifying methods, we first described different types of systemic and random measurement errors as well as common artifacts.

As shown in Fig. 1, sources of noise originate from nonideal instrumentation and experimental conditions. Measurement setup also significantly affects error levels; for instance noise can be substantially reduced by appropriate measurement configuration such as using a tetrapolar setup, modification of electrode properties to reduce the EPI effect, enhancing electrode contact, and so on. Denoising strategy (e.g., choise of denoising algorithm) is dependent on type of data artifact; characterizing noise type is thus an integral part of data processing.

Denoising is typically implemented after raw data acquisition—before modeling and feature extraction. Different types of denoising methods described in this article include averaging, SVD decomposition, as well as removal of known artifacts (e.g., Hook artifact). Denoising can also be applied as a postprocessing step after feature extraction and model fitting; for instance classifiers have been used to distinguish between spectral features that are characteristic of noise and those of clean data. The classifier approach represents a novel “smart method” of noise removal algorithms based on learned parameters; we believe this will be an increasingly important focus of future research.

Data reduction from the impedance spectra to several representative parameters is accomplished using explanatory or descriptive models. The most popular explanatory model is the Cole model where impedance data are fitted to a semicircular arc in the complex plane. Both gradient-based and stochastic optimization methods are used for fitting. The gradient method is more appropriate for applications that require fast processing such as on-line monitoring, and the stochastic approach is better suited for applications requiring a high degree of accuracy. Typically PCA is used as descriptive method of modeling, whereby data dimensionality is reduced to a set of core eigenvectors/values; this is a compact way of representing the complex multivariate data without losing essential information. This method also allows the removal of noise and other sources of variability.

The use of classifiers in labeling features extracted from both explanatory and descriptive models has been demonstrated in a number of studies. No consensus however exists yet concerning a universally acceptable classification method for the BIS applications being explored. Larger studies will allow for applying more advanced learning techniques due to the increase of data available for analysis and classifier training. We expect to see more of ANN, deep learning approaches, and novel classifier combinations in the future to deal with highly nonlinear BIS data. Other important research directions include integration of BIS classification into larger diagnostic models, for example, based on Bayesian networks, better characterization of nonlinear tissue properties, and use of quantification of uncertainty and sensitivity analysis for analyzing the sensitivity of various BIS models to different model parameters and inputs.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128012383108840

Flux Analysis of Metabolic Networks

Gregory N. Stephanopoulos, … Jens Nielsen, in Metabolic Engineering, 1998

13.6.3. EFFECTS OF MEASUREMENT ERROR

Accurate measurement of fluxes and flux changes is necessary for the successful implementation of these methods. In fact, random errors in measurements have an effect upon the gFCC calculations which mimic those of nonlinearities. Hence, it is crucial to repeat and validate measurements, in order to reduce measurement error and ascertain whether nonidealities are indeed present.

The effects of random measurement error are shown in Fig. 13.5. Each calculation depicted in this figure was carried out following the methods of Section 13.2.1, using perturbations mirroring the ideal characteristic angles of the gFCCs at the branch point, with a random statistical error introduced into each flux measurement. It is important to realize that even a 5–10% error level can result in significantly skewed results. It also should be noted that, because the characteristic angles of perturbations in branches B and C are similar (but opposite), the gFCCs corresponding to these perturbations tend to be under- or overestimated more often than those corresponding to the A branch. This is a result of the near-singularity of the matrix inverted in Eq. (13.17). A comparison of the results of different levels of measurement error reveals that a 10% error allows good qualitative estimation of the gFCCs, but that an error level under 5% is necessary for good quantitative assessments. Because the accuracy of flux measurements may be beyond the control of the experimenter, however, the preferred method of improving the accuracy of control coefficient estimates is through regression analysis of the results of multiple perturbation experiments.

FIGURE 13.5. Effects of measurement error on gFCC calculations around the prephenate branch point. The lines represent the true group flux control coefficients. The scattered data points represent the endpoints of these lines calculated by assuming random statistical errors of 1, 5, 10, or 50% in the measurement of each flux change.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780126662603500145

Space object detection technology

Zhang Rongzhi, Yang Kaizhong, in Spacecraft Collision Avoidance Technology, 2020

3.2.2.3 Velocity measurement error

Doppler velocity measurement of pulse radars uses the Doppler effect of relative motion of object to obtain the change rate of range; hence, the accuracy of velocity measurement depends on the measurement accuracy of Doppler frequency. In the process of measuring the Doppler frequency shift, random and systematic errors will also be generated.

- •

-

Velocity measurement random error

Random errors of the velocity measurement system in pulse radars are generated mainly by thermal noise, multipath, object modulation, and quantization data processing.

- •

-

Thermal noise error

Assuming the velocity measurement subsystem of the monopulse radar has an equivalent noise bandwidth of 10 Hz, a filter bandwidth of 40 Hz, the error slope of the loop discriminator is taken as 1.2. If the S/N ratio=12 dB, the thermal noise error is about 0.3 m/s. If the S/N is 20 dB, the thermal noise error is reduced to 0.01 m/s.

- •

-

Quantization error

If 20-bit codes of binary system are used to record Doppler frequency, the quantization error of the speed is less than 0.01 m/s.

- •

-

Speed measurement systematic error

The system error of velocity measurement system of the pulse radar mainly includes equipment zero error, zero variation error of frequency discriminator, radio wave refraction error, and dynamic lag errors. Wherein the equipment zero error and wave refraction error are identical with Section 3.1.2.

- •

-

Zero value error of discriminator

Discriminator’s zero value can be calibrated, but the change in temperature will cause the change of zero value. This error should be controlled within 0.01 m/s.

- •

-

Dynamic lag error

This velocity measurement error caused by the dynamic lag is

(3.6)ΔV=Ka−1R¨

where Ka is the acceleration error coefficient of the velocity measurement system.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128180112000039

Data and Models

Sverre Grimnes, Ørjan G Martinsen, in Bioimpedance and Bioelectricity Basics (Third Edition), 2015

Concepts of Performance

There are several terms that are important in validating a new measurement method. A list of the most relevant aspects of validation is given in Table 9.5, along with a definition of each term and how it is usually reported. The definitions of the terms vary among different fields and standards, sometimes giving an inconsistent meaning. The table is an attempt at giving an unambiguous overview of the terms based on the most common uses.

Table 9.5. List of Important Terms in the Validation of New Measurement Technology and the Most Usual and Recommended Ways of Reporting

| Term | Definitiona | Reported as |

|---|---|---|

| Measurement error | Measured quantity value minus a reference quantity value (JCGM 200:2012) Systematic measurement error: component of measurement error that in replicate measurements remains constant or varies in a predictable manner (JCGM 200:2012) Random measurement error: component of measurement error that in replicate measurements varies in an unpredictable manner (BIPM, 2012) |

Quantity on the same scale as the measurement scale, relative error, percentwise error, mean square error, root-mean square error. Systematic measurement error: bias Random measurement error: standard deviation, variance, coefficient of variation |

| Sensitivity | The sensitivity of a clinical test refers to the ability of the test to correctly identify those patients with the disease (Lalkhen and McCluskey, 2008) | Equation 1 A part of the ROC curve which shows the relation between sensitivity, specificity and the detection threshold |

| Specificity | The specificity of a clinical test refers to the ability of the test to correctly identify those patients without the disease (Lalkhen and McCluskey, 2008) | Equation 2 A part of the ROC curve, which shows the relation between sensitivity, specificity, and the detection threshold |

| Agreement | The degree to which scores or ratings are identical (Kottner et al., 2011) | Continuous: Bland-Altman plot Discrete: percentage agreement |

| Trueness | Closeness of agreement between the average value obtained from a large series of results of measurement and a true value (ISO 5725-1) | Bias (i.e., the difference between the mean of the measurements and the true value) |

| Precision | Closeness of agreement between independent results of measurements obtained under stipulated conditions (ISO 5725-1) | Standard deviation, coefficient of variation |

| Repeatability | Precision determined under conditions in which independent test results are obtained with the same method on identical test items in the same laboratory by the same operator using the same equipment within short intervals of time (ISO 5725-1) | Within-subject standard deviation (Bland and Altman, 1999) Repeatability coefficient (Bland and Altman, 1999) |

| Reproducibility | Precision determined under conditions where test results are obtained with the same method on identical test items in different laboratories with different operators using different equipment (ISO 5725-1) | Standard deviation, coefficient of variation |

| Accuracyb | Closeness of agreement between the result of a measurement and a true value (both trueness and precision) (ISO 5725-1) Measurement accuracy: closeness of agreement between a measured quantity and a true quantity value of a measurand (JCGM 200:2012) |

Bias (trueness) and standard deviation/coefficient of variation (precision) Diagnostic accuracy: sensitivity and specificity Sensitivity and specificity corrected for prevalence as: (sensitivity) (prevalence) + (specificity) (1 − prevalence) (Metz, 1978) |

| Reliability | Ratio of variability between subjects or objects to the total variability of all measurements in the sample (Kottner et al., 2011) | Intraclass correlation coefficient Kappa statistics (categorical data) |

- a

- There are numerous different definitions in the literature, which can be inconsistent and confusing. These definitions provide one version with the aim of reducing ambiguity.

- b

- Accuracy has previously been defined as the same as trueness only, but with ISO 5725-1 (1994), and reflected in the JCGM 200:2012, the definition of accuracy has for the most changed to include both trueness and precision as given here. The old definition is still in use in some areas.

The concept of error in a measurement is quite straightforward and is the difference between the measured value and a reference value. If the error in replicate measurements remains constant or varies in a predictable manner, the error is referred to as a systematic measurement error. If the error varies in an unpredictable manner, it is referred to as random measurement error. The measurement error can be a combination of the two.

The term agreement can be regarded as a general term for the degree to which the measurements are identical (either in nominal, ordinal, or continuous variables) and it is of main interest in method comparison studies. Accuracy is the closeness of agreement between the result of a measurement and a true value, and depends on both trueness and precision. The difference between trueness and precision is easiest explained through the example of throwing darts. Trueness is high if the darts are centered around the middle, but low if they are all on one side of the board (bias), regardless of how much they are spread. Precision is high if they are close and low if they are spread far apart, regardless of the center they are spread around. Precision is further divided into repeatability and reproducibility according to the measurement condition. When new measurements are taken with the same setup by the same operator on the same items/subjects (i.e., replicated), the repeatability of the method is tested. When new measurements are taken with the same method on the same items/subjects but with different devices and operators, the reproducibility is tested. Repeatability can be thought of as the minimum variability between results, and reproducibility the maximum variability between the results. With measurement of thoracic bioimpedance as an example, the repeatability of the method can be assessed by replicating the measurement by the same operator using the same equipment (i.e., device and electrodes) on the same subjects, with measurements taken in quick succession such as on the same day. When clinical implementation is considered, it is also important to know how large this variation becomes under realistic conditions. Factors such as electrode positioning (operator-related), calibration (device-related), and ambient humidity (laboratory-related) may cause variations in the measurement. The reproducibility of the method can then be assessed by performing measurements on the same subjects at two or more different laboratories having different operators and equipment (but of the same type), providing a realistic estimate of the precision. Specific reproducibility, such as interelectrode reproducibility, can be assessed for the factors which influence the measurement, telling us how these factors influence the measurement precision.

Agreement and reliability are two distinct concepts in the medical literature (De vet et al., 2006; Kottner et al., 2011, Barlett and Frost, 2008). Although agreement is the degree to which scores or ratings are identical, reliability is the ability of a measuring device to differentiate among subjects, or objects (Kottner et al., 2011). Agreement concerns the measurement error whereas reliability relates the measurement error to the variability between the subjects or items that are tested (De Vet et al., 2006). Reliability is assessed during certain conditions such as different equipment or users (interrater reliability) or with the same equipment and users (intrarater or test-retest reliability). As an example, if we test our impedance measurement system against a set of calibration resistors once each month and each time measure a 10% positive offset, the system has a low agreement (and accuracy), but a high test-retest reliability. Given these definitions, the test-retest reliability may seem to be the same as the repeatability of a measurement, but we make a distinction here. Repeatability is assessed through repeated measurements on identical subjects/items within a short time relative to any changes in the property being measured, whereas test-retest reliability is assessed from measurements taken at different occasions with the same conditions and allowing changes in the property being measured. The same goes for reproducibility versus interrater reliability in that reproducibility is assessed using identical test items under different conditions (which is the source of variation) while interrater reliability also involves testing under different conditions, but in addition allows changes in the property being measured. This also implies that precision and reliability are two different concepts.

An advantage of using reliability to compare measurement methods is that it can be used to compare methods when their measurements are given on different scales or metrics (Barlett and Frost, 2008). For continuous variables, reliability is usually determined by the ICC. The ICC is a ratio of variances derived from ANOVA, with a maximum value of 1.0, indicating perfect reliability. There are different types of the ICC, including one- or two-way model, fixed- or random-effect model, and single or average measures (see Weir, 2005, for more on selection), and the type should be reported in a reliability study (Zaki et al., 2013). For assessing reliability in categorical data, kappa statistics such as Cohen’s kappa provide useful information (Kottner, 2011). Instead of simply taking the percentage of equal decisions relative to the total number of cases, Cohen’s kappa provides a measure of association that is corrected for equal decisions due to chance.

Which of these measures to report should be chosen based on the how the measurements are to be used in the future? The same goes for the importance of the measurement performance. A certain degree of measurement error may be acceptable if measurements are to be used as an outcome in a comparative study such as a clinical trial, but the same errors may be unacceptably large in individual patient management such as screening or risk prediction (Barlett and Frost, 2008). For some applications, there are specific ways of reporting performance which have become standard, such as the Clarke-Error Grid together with mean absolute relative deviation for blood glucose measurement. At last, it is important to also mention the concept of validity, originating from psychometrics and addresses the inference of truth of a set of statements (Nunnally and Bernstein, 1994). A study may provide perfect test results on accuracy, but if the experiments are not testing what they are supposed to, the results are not valid. For instance, testing the agreement between a new method and an existing method with barely acceptable clinical accuracy may provide a good agreement between the two, but the results are not valid with respect to the accuracy of the new method. Validity is also used to describe the same concept as trueness within psychometrics.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B978012411470800009X

Two Types of Experimental Error

No matter how careful you are, there is always error in a measurement. Error is not a «mistake»—it’s part of the measuring process. In science, measurement error is called experimental error or observational error.

There are two broad classes of observational errors: random error and systematic error. Random error varies unpredictably from one measurement to another, while systematic error has the same value or proportion for every measurement. Random errors are unavoidable, but cluster around the true value. Systematic error can often be avoided by calibrating equipment, but if left uncorrected, can lead to measurements far from the true value.

Key Takeaways

- Random error causes one measurement to differ slightly from the next. It comes from unpredictable changes during an experiment.

- Systematic error always affects measurements the same amount or by the same proportion, provided that a reading is taken the same way each time. It is predictable.

- Random errors cannot be eliminated from an experiment, but most systematic errors can be reduced.

Random Error Example and Causes

If you take multiple measurements, the values cluster around the true value. Thus, random error primarily affects precision. Typically, random error affects the last significant digit of a measurement.

The main reasons for random error are limitations of instruments, environmental factors, and slight variations in procedure. For example:

- When weighing yourself on a scale, you position yourself slightly differently each time.

- When taking a volume reading in a flask, you may read the value from a different angle each time.

- Measuring the mass of a sample on an analytical balance may produce different values as air currents affect the balance or as water enters and leaves the specimen.

- Measuring your height is affected by minor posture changes.

- Measuring wind velocity depends on the height and time at which a measurement is taken. Multiple readings must be taken and averaged because gusts and changes in direction affect the value.

- Readings must be estimated when they fall between marks on a scale or when the thickness of a measurement marking is taken into account.

Because random error always occurs and cannot be predicted, it’s important to take multiple data points and average them to get a sense of the amount of variation and estimate the true value.

Systematic Error Example and Causes

Systematic error is predictable and either constant or else proportional to the measurement. Systematic errors primarily influence a measurement’s accuracy.

Typical causes of systematic error include observational error, imperfect instrument calibration, and environmental interference. For example:

- Forgetting to tare or zero a balance produces mass measurements that are always «off» by the same amount. An error caused by not setting an instrument to zero prior to its use is called an offset error.

- Not reading the meniscus at eye level for a volume measurement will always result in an inaccurate reading. The value will be consistently low or high, depending on whether the reading is taken from above or below the mark.