Contents

- New fragment overlaps old data (retransmission?) — false alarm?

- TCP Retransmissions: what are they and how do you analyze them with Wireshark?

- Quickly identifying TCP retransmissions with Wireshark

- TCP: sending lots of data deadlocks with slow peer #14571

- Comments

- HTTP request ends too early #6143

- Comments

New fragment overlaps old data (retransmission?) — false alarm?

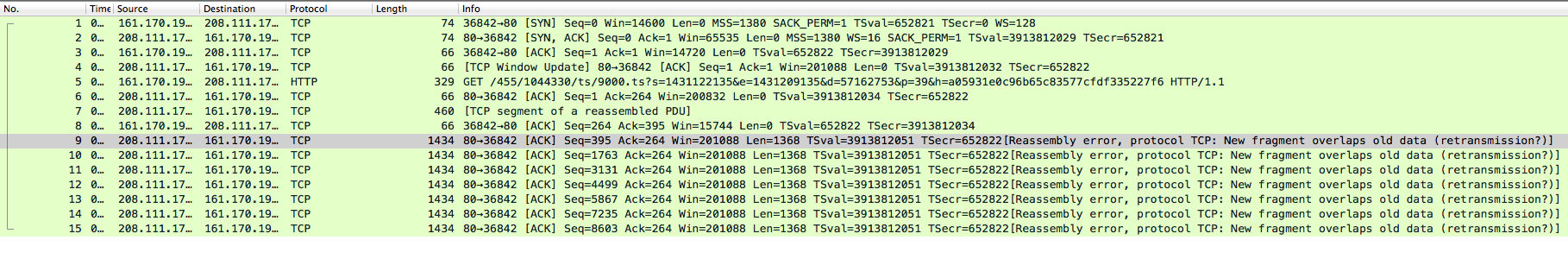

Hello all, I have a packet capture with an expert analysis that doesn’t appear to be valid. The packet capture is located at https://www.cloudshark.org/captures/67d2b4aed1d4 Here is what my own window looks like:

What leads me to believe that this is a bug in Wireshark is that there have been no actual retransmissions. I’ve opened up the pcap on multiple platforms (win7, mac, ubuntu) and all are reporting the same thing, so it’s not a platform-specific issue. Another curious thing is that Cloudshark’s expert analysis doesn’t show the fragment overlap error. To see it yourself you’ll need to download the pcap and view it in a desktop client.

What I’m trying to do is verify that this is indeed not a network issue but instead anomalous behavior on the part of Wireshark.

Thanks in advance, -Geoff

asked 23 Jun ’15, 08:59

glisk


I tried with 1.12.6 on Windows 7 64-bit and it seems like a bug to me. If you turn on TCP’s «Validate the TCP checksum if possible» option, those «New fragment overlaps old data (retransmission?)» Expert Infos go away, although you get 11 TCP «Bad checksum» Expert Infos instead.

By the way, I also tried with git-master and Wireshark crashed when I loaded the capture file. That might be a separate bug though, perhaps bug 10365.

I have played around with the options and found the following combination of Protocol Preferences that causes the strange output.

If one of these two options or both are deactivated, the error does not occur.


TCP Retransmissions: what are they and how do you analyze them with Wireshark?

The most common error any IT professional sees after installing Wireshark and capturing traffic is a TCP retransmission. Even in the fastest, correctly configured network, packets get lost, and as a result delivery acknowledgments from the receiver fail to reach the sender, or vice versa.

This is normal, and TCP's algorithms are designed to recover from such errors. It is therefore important to understand that a TCP retransmission is a symptom, not the cause of the disease. The causes can include interface errors, CPU overload on the server or the user's PC, insufficient link bandwidth, or packet fragmentation and the way it is handled along the path. Pay attention to how many retransmissions there are and how often they occur, not to the mere fact that they exist.

Depending on the observed behavior, the Wireshark protocol analyzer distinguishes several types of retransmission:

- TCP Retransmission: the classic type of retransmission. While analyzing traffic, Wireshark sees two packets with the same sequence number and data, separated in time. A sender that has not received an acknowledgment from the destination before its retransmission timer expires resends the packet automatically, assuming it was lost in transit. The timer value adapts dynamically and depends on the round-trip time of the particular path. How it is computed is described in RFC 6298, Computing TCP's Retransmission Timer.

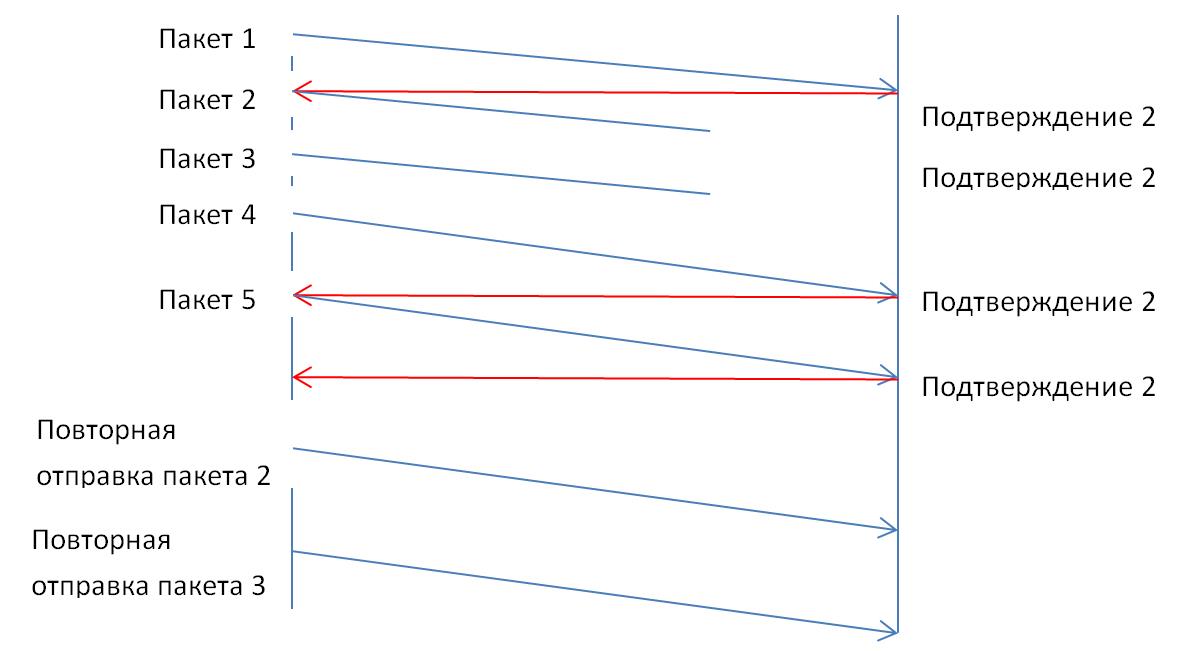

- TCP Fast Retransmission: the sender resends data as soon as it infers that packets were lost, without waiting for the retransmission timer to expire. The usual trigger is receiving several (typically three) duplicate acknowledgments in a row that carry the same acknowledgment number. For example (numbering packets rather than bytes for simplicity), the sender transmits the packet with sequence number 1 and receives an acknowledgment carrying that number plus 1, i.e. 2. The sender understands that the receiver expects the next packet, number 2. Suppose the next two packets are lost and the receiver gets data with sequence number 4. The receiver resends the acknowledgment with number 2. On receiving packet number 5, the receiver again sends an acknowledgment with number 2, and it does the same for every further packet that arrives out of order. Once the sender has seen three duplicate acknowledgments, it assumes that packets 2 and 3 were lost and sends them again without waiting for the timer.

- TCP Spurious Retransmission: this type was introduced in Wireshark 1.12 and means that the sender retransmits packets that the receiver has already acknowledged.
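The timer calculation mentioned in the first bullet can be sketched as follows. This is a minimal illustration of the RFC 6298 update rules (smoothed RTT, RTT variance, and doubling of the timer after a timeout), not the code of any particular TCP stack. The class name `RtoEstimator` and its parameters are made up for this example.

```python
class RtoEstimator:
    """Toy retransmission-timeout (RTO) estimator using the RFC 6298 formulas."""

    ALPHA = 1 / 8  # gain for the smoothed RTT (SRTT)
    BETA = 1 / 4   # gain for the RTT variance (RTTVAR)
    K = 4          # variance multiplier

    def __init__(self, granularity=0.001, min_rto=1.0, max_rto=60.0):
        # All values are in seconds; the defaults are assumptions for the sketch.
        self.granularity = granularity
        self.min_rto = min_rto
        self.max_rto = max_rto
        self.srtt = None
        self.rttvar = None
        self.rto = 1.0  # initial RTO before any RTT measurement exists

    def on_rtt_sample(self, rtt):
        """Feed one round-trip-time measurement and return the new RTO."""
        if self.srtt is None:
            # First measurement
            self.srtt = rtt
            self.rttvar = rtt / 2
        else:
            # RTTVAR is updated first, using the previous SRTT
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - rtt)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * rtt
        rto = self.srtt + max(self.granularity, self.K * self.rttvar)
        self.rto = min(max(rto, self.min_rto), self.max_rto)
        return self.rto

    def on_timeout(self):
        """Back off: double the RTO after the retransmission timer expires."""
        self.rto = min(self.rto * 2, self.max_rto)
        return self.rto
```

With the default 1-second floor, a path with a 100 ms round-trip time still yields an RTO of 1 second; passing a smaller `min_rto` shows how the estimate tracks the measured RTT.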

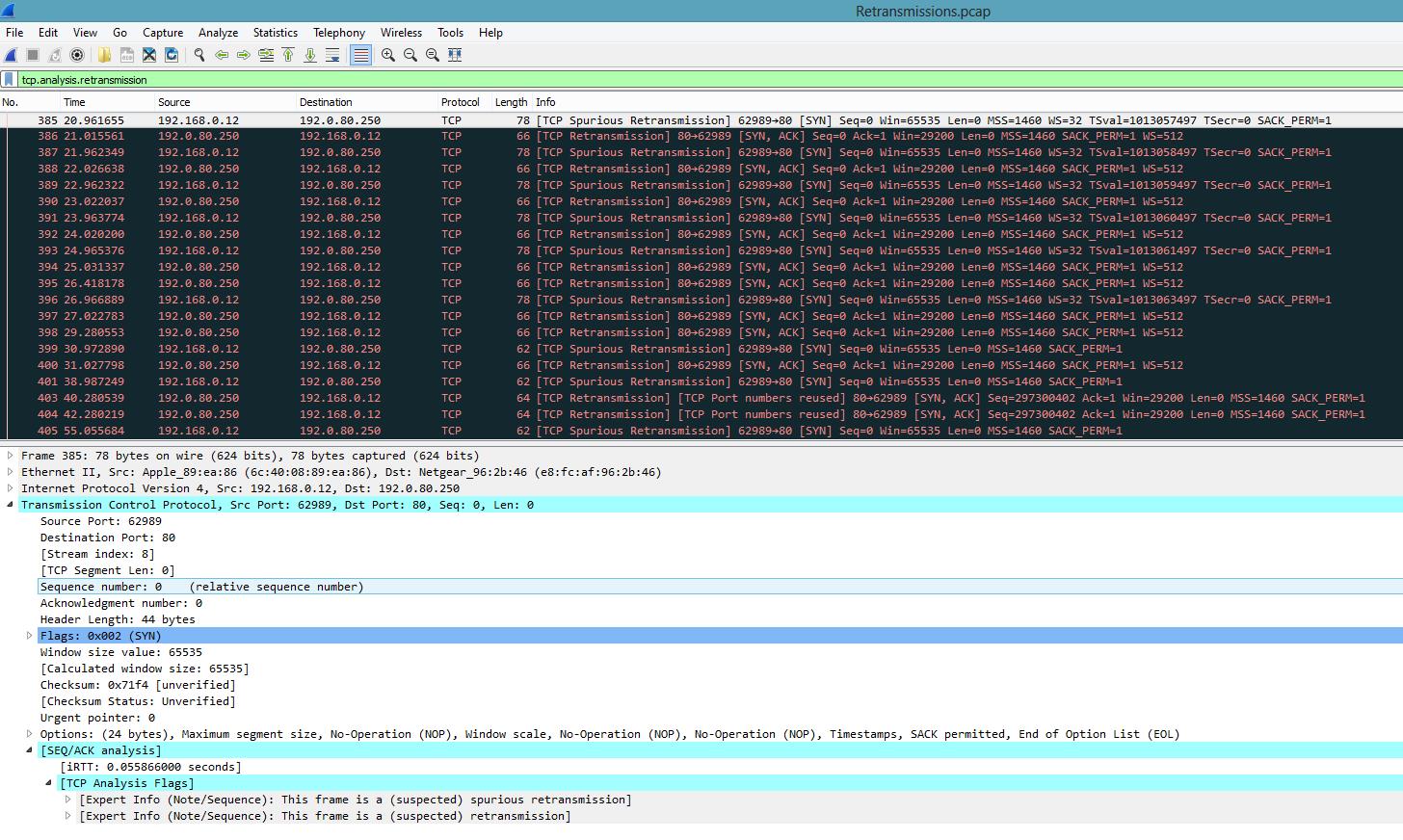
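The duplicate-acknowledgment trigger described for fast retransmission can be sketched like this. It is a simplified illustration that only counts identical acknowledgment numbers; real TCP stacks apply additional conditions before treating an ACK as a duplicate. The function name and the threshold constant are assumptions made for the example.

```python
DUP_ACK_THRESHOLD = 3  # typical value; an assumption for this sketch


def fast_retransmit_points(ack_numbers):
    """Return the ACK numbers at which a sender would start a fast retransmission.

    ack_numbers is the sequence of acknowledgment numbers the sender receives.
    """
    triggers = []
    last_ack = None
    duplicates = 0
    for ack in ack_numbers:
        if ack == last_ack:
            duplicates += 1
            # Fire exactly once, when the third duplicate arrives
            if duplicates == DUP_ACK_THRESHOLD:
                triggers.append(ack)
        else:
            # A new acknowledgment number resets the counter
            last_ack = ack
            duplicates = 0
    return triggers


# One original ACK for 2921 followed by three duplicates triggers a resend
print(fast_retransmit_points([1461, 2921, 2921, 2921, 2921, 4381]))
```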

Quickly identifying TCP retransmissions with Wireshark

The first option is to apply the display filter tcp.analysis.retransmission:

All retransmissions are then displayed, each labeled with its type.

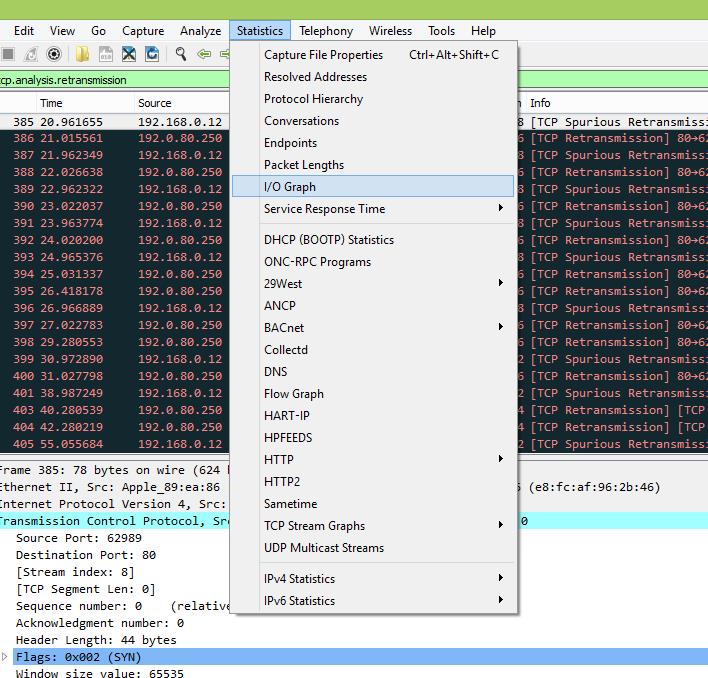

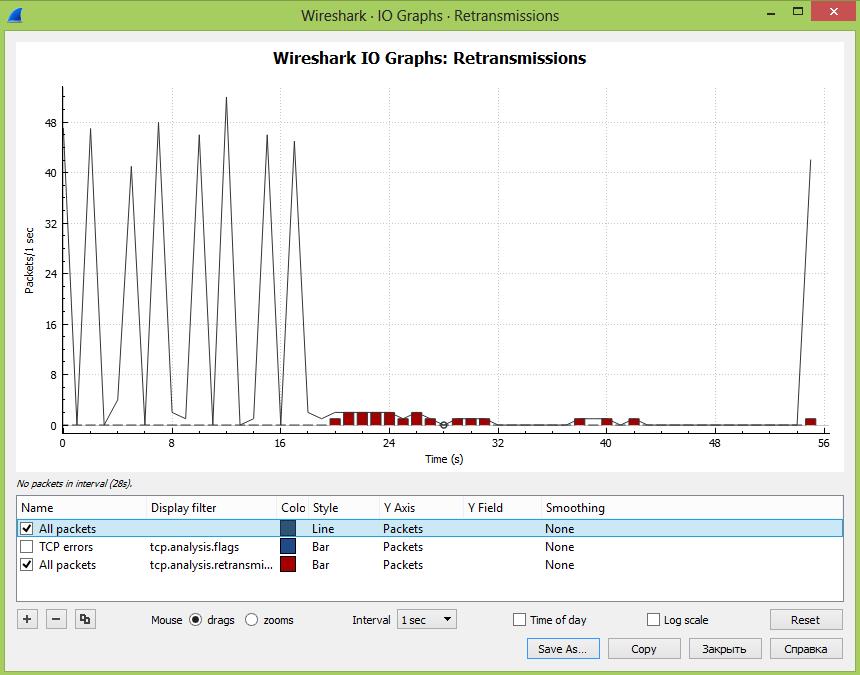

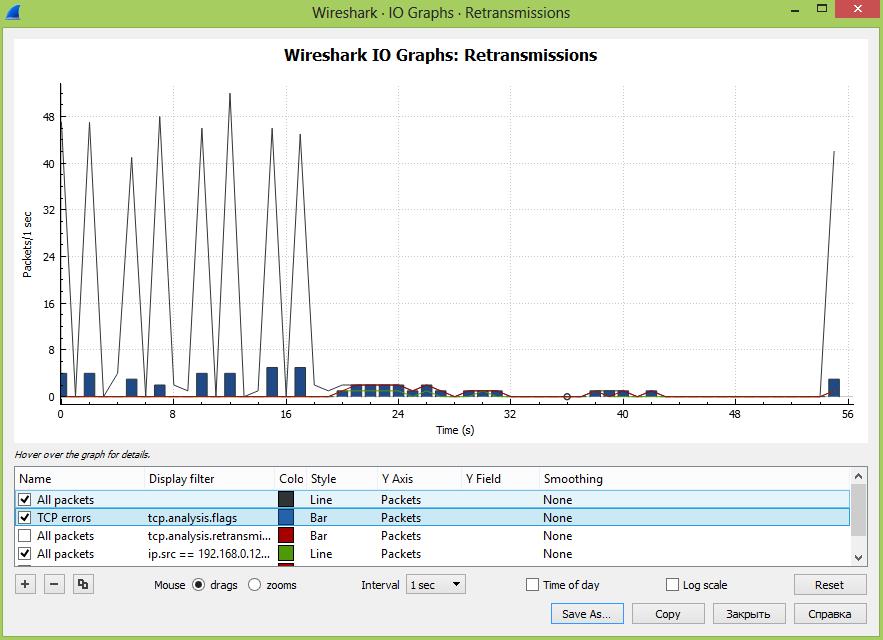

The second option is graphical analysis of retransmissions: several graphs can be drawn on one chart and compared over time. You can also compare two different traffic receivers and conclude in which network segment most retransmissions occur as a result of network or equipment overload.

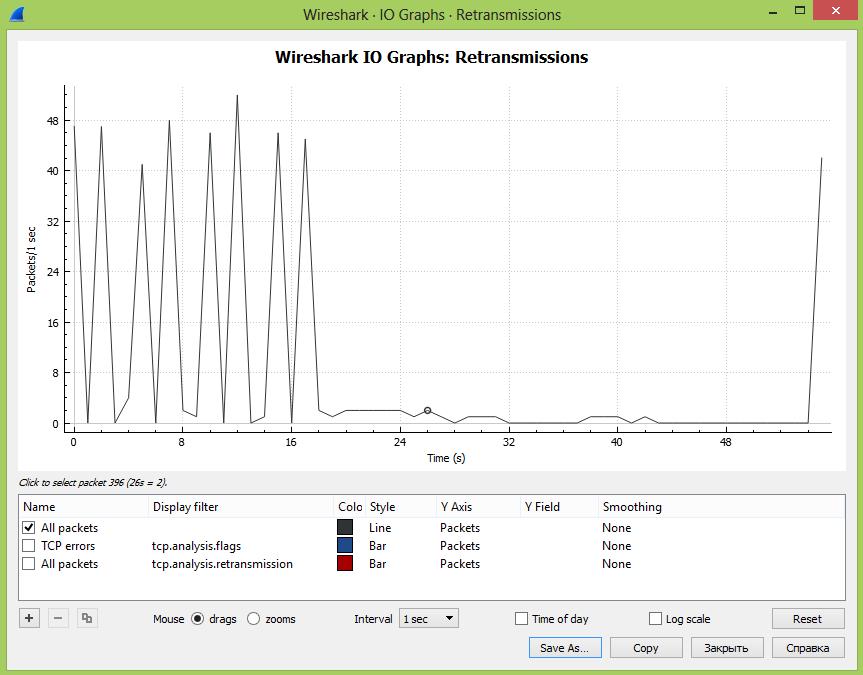

Go to Statistics – I/O Graph:

A window opens with a graph showing the total number of packets over time since the start of the capture. The unit is PPS, packets per second.

In the panel below the graph you can add further graphs based on a display filter and change how each one is drawn (line, histogram, and so on). Here the now-familiar filter has been added: tcp.analysis.retransmission

You can then compare retransmission problems across the network as a whole and between individual users by specifying a filter such as: ip.src == xxx.xxx.xxx.xxx && tcp.analysis.retransmission
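The per-second comparison that the I/O Graph draws can also be approximated outside the GUI. The sketch below assumes you already have two lists of relative timestamps in seconds: one for all packets and one for packets matching tcp.analysis.retransmission (for instance exported with `tshark -r capture.pcap -Y tcp.analysis.retransmission -T fields -e frame.time_relative`, where `capture.pcap` is a placeholder file name). The function names are made up for the example.

```python
from collections import Counter


def packets_per_second(timestamps):
    """Count packets in 1-second buckets, similar to an I/O Graph with a 1 s interval."""
    return Counter(int(t) for t in timestamps)


def retransmission_share(all_timestamps, retransmission_timestamps):
    """Return {second: fraction of packets in that second that were retransmissions}."""
    total = packets_per_second(all_timestamps)
    retrans = packets_per_second(retransmission_timestamps)
    return {sec: retrans.get(sec, 0) / total[sec] for sec in sorted(total)}


# Hypothetical sample data: six packets, two of them flagged as retransmissions
all_ts = [0.1, 0.5, 0.9, 1.2, 1.7, 2.3]
retrans_ts = [0.9, 1.7]
for second, share in retransmission_share(all_ts, retrans_ts).items():
    print(f"second {second}: {share:.0%} retransmissions")
```

Looking at the share rather than the raw count keeps the focus on how frequent retransmissions are relative to the traffic volume.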

In our view, retransmissions are best analyzed graphically, where different parts of the network can be compared or, as in this example, one can hypothesize that traffic bursts lead to a rise in retransmissions, which in turn causes errors. The graphs are interactive: clicking on different regions jumps to the corresponding point in time, which considerably speeds up the search.

Finally, a reminder: retransmissions are normal as long as their number does not get out of hand!


TCP: sending lots of data deadlocks with slow peer #14571

Describe the bug

When sending data on a TCP socket, net_pkt_alloc_with_buffer might stall while holding context->lock, which is locked in net_context_send_new. This prevents tcp_established from processing ACK packets. But when ACK packets can’t be processed, net_pkt_alloc_with_buffer will time out, leading to an ENOMEM error.

To Reproduce

Steps to reproduce the behavior:

- Send lots of data to a TCP peer, e.g. with send

- Wait until the peer becomes distracted and does not immediately send an ACK packet

- Zephyr will use this opportunity to send data packets until its tx pool is exhausted

- The peer will catch up and send an ACK packet that acknowledges all data packets

- The rx_workq is now stuck in k_mutex_lock and can’t process the ACK packet

- The TCP retransmit timer will expire and Zephyr repeats an old packet although it was acknowledged by the unprocessed ACK packet

- The peer will send more ACK packets that remain unprocessed

- net_pkt_alloc_with_buffer will time out, send fails, and the application will most likely drop the connection

Expected behavior

Received ACK packets should be processed while the TX path is waiting for free packets/buffers.
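The locking pattern behind the steps above can be imitated with a small thread simulation. This is an analogy in Python, not Zephyr code: `context_lock` stands in for context->lock, the semaphore for the exhausted TX pool, and `rx_worker` for the RX work queue. All names and the 0.2 s timeout are assumptions made for the sketch.

```python
import threading


def send_with_exhausted_pool(hold_lock_while_waiting, timeout=0.2):
    """Return True if the sender obtained a buffer, False if the wait timed out."""
    context_lock = threading.Lock()    # stands in for context->lock
    tx_pool = threading.Semaphore(0)   # TX pool is already exhausted
    ack_received = threading.Event()

    def rx_worker():
        ack_received.wait()
        with context_lock:             # ACK processing needs the same lock
            tx_pool.release()          # a processed ACK frees one buffer

    rx = threading.Thread(target=rx_worker)
    rx.start()

    if hold_lock_while_waiting:
        # Reported behavior: wait for a buffer while still holding the lock
        with context_lock:
            ack_received.set()
            got_buffer = tx_pool.acquire(timeout=timeout)
    else:
        # Expected behavior: the lock is free while waiting, so the ACK gets processed
        ack_received.set()
        got_buffer = tx_pool.acquire(timeout=timeout)

    rx.join()
    return got_buffer


print(send_with_exhausted_pool(hold_lock_while_waiting=True))   # False: times out
print(send_with_exhausted_pool(hold_lock_while_waiting=False))  # True: ACK frees a buffer
```

In the first call the receiver cannot take the lock until the sender has already given up, which mirrors the allocation timeout described in the report.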

Impact

We aim for sending thousands of packets per second, so this is a showstopper.

Screenshots or console output

Environment (please complete the following information):

- OS: Linux

- Toolchain: Zephyr SDK

- Commit: d910aa6


HTTP request ends too early #6143

It looks like the request is firing the end event too early, even before the whole download ends. This happens only on unstable connections. I’ve detected this issue while investigating the causes of bower/bower#824.

This simple script, without 3rd party code, is able to replicate the issue with the following NLC (network link conditioner) settings:

Output of the program with those NLC settings:

So basically, end event gets fired too early. If I switch off NLC everything is fine:

I was expecting the end event to be fired only when the download completes, or at the very least, an error event.


How is the connection terminated at the TCP level? (tcpdump or wireshark will tell you.) As you’re not getting an error, I suspect that it’s a regular FIN/ACK sequence?

@bnoordhuis I don’t have the time to debug it as of now. If really necessary, I will do it in the weekend.

‘If really necessary’ depends on how important you think it is that this gets looked into.

I have no easy way to replicate your set-up. I can set up a netem qdisc but that probably doesn’t work quite like Apple’s NLC so then we won’t be testing the same thing.

On the subject of FIN/ACK: if that’s indeed what gets sent over the wire (be it a virtual or a physical wire), then there is no bug, just business as usual.

Indeed there’s a FIN sent over the wire, with a fatal error:

Wireshark detected that the FIN packet occurred because of an error, could node do the same and fire an error event?

> Indeed there’s a FIN sent over the wire, with a fatal error

It’s a protocol error but not necessarily a fatal one. Corrupted packets and retransmits happen all the time.

Apart from the PSH flag, it looks like regular connection termination. Not that the PSH flag is harmful, it’s just mildly uncommon.

> Wireshark detected that the FIN packet occurred because of an error, could node do the same and fire an error event?

Not if the operating system doesn’t report it. Which it probably won’t because, as mentioned, it’s not a fatal error.

Sorry, but I don’t see anything here that suggests that it’s a node.js issue. Thanks for following up though.

@bnoordhuis I’ve tried to download with curl under the same NLC settings and I get the same behavior, but curl compares against the content-length to detect such issues:

I know that some servers might not send the Content-Length header, but for those that do, could we add the same check and fire an error event? Or do you see it implemented in a higher-level module, like mikeal/request?

> Or do you see it implemented in a higher-level module, like mikeal/request?

I think so. The core http library is deliberately low-level. Not as low-level as I would like but that’s another story.

Throwing errors on bad Content-Length headers would also be quite disruptive because it’s not that uncommon. I think most browsers regard it as a size hint, nothing more.

@bnoordhuis after talking with an engineer at GitHub, I think that nodejs should be smarter in the way it handles the transfer:

Another thing worth mentioning is that chunked encoding should help you detect if the download finished abruptly. That is, if the connection closes in the middle of a chunk, that should be a real error. I’ve seen curl detect it, but perhaps not all http libraries are as smart.

Their servers are indeed sending chunked responses. Could node detect if the connection was closed in the middle of a chunk and fire an error in that situation?

> Could node detect if the connection was closed in the middle of a chunk and fire an error in that situation?

It does, it emits an ‘aborted’ event on the response object.

@bnoordhuis I’ve searched the documentation for the abort / aborted event and couldn’t find any. Is that for .abort() or a general event that can be used in the case I mentioned above?

You’re right, sorry. ‘aborted’ is an internal-ish event, you should also get the documented ‘close’ event.

If the aborted event is not a public API, then how is it possible to distinguish a truncated chunked download from a successful one? Won’t both emit a close event?

@jfirebaugh @satazor @bnoordhuis I know it’s been a while, but to answer your questions and anyone else who finds this ticket via Google:

- HTTP has only one proper mechanism to detect truncated downloads: The «Content-Length» header.

- If you use chunked HTTP downloads, it is possible to detect if a download ends in the middle of a chunk, but your downloading library needs to be coded to take advantage of that method, and not all servers support chunked transfers.

- If the file transmission ends prematurely (and isn’t chunked), the HTTP client will simply interpret that as a closed connection and think that the transmission is complete. Because HTTP is a terrible, old protocol where a closed connection is synonymous with the «end of the data».

- As an alternative to the «chunking» trick, someone could theoretically write a program which requests a «keep-alive» HTTP connection, performs a download, and then tries doing a read (such as a HEAD request on the same file) after the file is «complete». If the read fails, the connection has been dropped incorrectly. But nobody does this check, because it’d be an ugly hack.

- The only protocol that fully protects you against dropped connections during file transfers is HTTPS (SSL/TLS): As a SECURITY feature, they REQUIRE that a close_notify message is sent when the connection is about to be closed naturally. This is to prevent connection truncation attacks. But it also inadvertently protects us against truncated downloads. Because if we don’t get a «close_notify», and we try to read from the socket and fail to read, then we know that the download is broken. Libraries like cURL implement this check properly, and make it trivial to retry the download when the «SSL read error» happens.

- Furthermore, and most importantly of all, SSL/TLS mostly uses AES which has a 16-byte «bulk encryption» block size, so if we get a partial AES chunk it means we can’t decrypt it, and our download library would detect that as a truncated download too, regardless of whether «close_notify» is used or not. 🙂 That’s safe against 15 in every 16 closed connections (it wouldn’t work if the truncation happens exactly after the proper end of an AES block).

So no, there’s no reliable way to protect against truncation of plain-old HTTP. But on the other hand, HTTPS is on the rise and has two mechanisms that protect very well against truncation. And things like chunked transfers can be used on top of HTTPS to get even more security.
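The two plain-HTTP checks discussed in this thread (comparing against Content-Length and noticing a connection that closes in the middle of a chunk) can be sketched as follows. This is a simplified illustration working on bytes already read from a socket, not the code of curl or node; the function names are made up, and trailer fields after the last chunk are ignored.

```python
def is_short_of_content_length(content_length, received):
    """True if fewer body bytes arrived than the Content-Length header promised."""
    return len(received) < content_length


def decode_chunked(raw):
    """Decode a chunked HTTP/1.1 body; raise ValueError if it ends prematurely."""
    body = bytearray()
    pos = 0
    while True:
        eol = raw.find(b"\r\n", pos)
        if eol == -1:
            raise ValueError("truncated: incomplete chunk-size line")
        # The size is hexadecimal; anything after ';' is a chunk extension
        size_field = raw[pos:eol].split(b";", 1)[0].strip()
        size = int(size_field.decode("ascii"), 16)
        pos = eol + 2
        if size == 0:
            return bytes(body)  # the zero-length chunk marks a complete body
        chunk = raw[pos:pos + size]
        if len(chunk) < size or raw[pos + size:pos + size + 2] != b"\r\n":
            raise ValueError("truncated: connection closed in the middle of a chunk")
        body += chunk
        pos += size + 2


complete = b"5\r\nhello\r\n6\r\n world\r\n0\r\n\r\n"
print(decode_chunked(complete))      # b'hello world'
try:
    decode_chunked(complete[:16])    # cut off inside the second chunk
except ValueError as err:
    print(err)
```

A body that stops before the zero-length chunk is reported as truncated, whereas a plain close without either check would look like a normal end of data.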


I wrote a proxy server that supports HTTP and HTTPS connections. With HTTP everything works fine, but with HTTPS Wireshark reports this error: ‘Reassembly error, protocol TCP: New fragment overlaps old data (retransmission?)’. Browsing still works, but performance suffers because afterwards I see a TCP RST, which forces the SSL negotiation to happen again.

Any clue as to what could be wrong?


I have a problem with Docker applications in some environments.

There are timeouts between containers. After analyzing the traffic with Wireshark, I see that about 0.5% of the packets are marked «Malformed» with the description:

Reassembly error, protocol TCP — new fragment overlaps old data


On a customer server running Apache 2.2 on Windows server 2012 we’re noticing that from time to time, some requests to the server never finish. Using wireshark I’ve found a bunch of duplicate ACKs get sent to the server as soon as it starts answering the request and after a few seconds a couple of retransmissions are received from the server.

The network setup is really basic with a server and some clients connected to a switch using UTP cables.

I’m not really sure what to make of this. I’m considering asking them to try different cabling, switch and/or NIC’s, but would like a second opinion on that.

1 0.000000000 192.168.1.103 -> 192.168.2.100 TCP 66 52011→81 [SYN] Seq=0 Win=8192 Len=0 MSS=1460 WS=256 SACK_PERM=1

2 0.000742000 192.168.2.100 -> 192.168.1.103 TCP 66 81→52011 [SYN, ACK] Seq=0 Ack=1 Win=8192 Len=0 MSS=1460 WS=256 SACK_PERM=1

3 0.000782000 192.168.1.103 -> 192.168.2.100 TCP 54 52011→81 [ACK] Seq=1 Ack=1 Win=65536 Len=0

4 0.001646000 192.168.1.103 -> 192.168.2.100 HTTP 606 GET /symfony/web/app.php/legacy/mutaties/afroepen.php?afroepid=16250 HTTP/1.1

5 0.002353000 192.168.2.100 -> 192.168.1.103 TCP 60 81→52011 [ACK] Seq=1 Ack=553 Win=65536 Len=0

6 0.747171000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

7 0.747172000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

8 0.747246000 192.168.1.103 -> 192.168.2.100 TCP 54 52011→81 [ACK] Seq=553 Ack=2921 Win=65536 Len=0

9 0.747504000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

10 0.747507000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

11 0.747562000 192.168.1.103 -> 192.168.2.100 TCP 54 52011→81 [ACK] Seq=553 Ack=5841 Win=65536 Len=0

12 0.748241000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

13 0.748242000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

14 0.748243000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

15 0.748244000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

16 0.748319000 192.168.1.103 -> 192.168.2.100 TCP 66 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=8761 SRE=10221

17 0.748338000 192.168.1.103 -> 192.168.2.100 TCP 66 [TCP Dup ACK 16#1] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=8761 SRE=11681

18 0.748593000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

19 0.748594000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

20 0.748595000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

21 0.748624000 192.168.1.103 -> 192.168.2.100 TCP 66 [TCP Dup ACK 16#2] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=8761 SRE=13141

22 0.748643000 192.168.1.103 -> 192.168.2.100 TCP 66 [TCP Dup ACK 16#3] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=8761 SRE=14601

23 0.748654000 192.168.1.103 -> 192.168.2.100 TCP 66 [TCP Dup ACK 16#4] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=8761 SRE=16061

24 0.748965000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

25 0.748966000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

26 0.748967000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

27 0.748999000 192.168.1.103 -> 192.168.2.100 TCP 74 [TCP Dup ACK 16#5] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=17521 SRE=18981 SLE=8761 SRE=16061

28 0.749014000 192.168.1.103 -> 192.168.2.100 TCP 74 [TCP Dup ACK 16#6] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=17521 SRE=20441 SLE=8761 SRE=16061

29 0.749249000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

30 0.749250000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

31 0.749275000 192.168.1.103 -> 192.168.2.100 TCP 74 [TCP Dup ACK 16#7] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=17521 SRE=21901 SLE=8761 SRE=16061

32 0.749289000 192.168.1.103 -> 192.168.2.100 TCP 74 [TCP Dup ACK 16#8] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=17521 SRE=23361 SLE=8761 SRE=16061

33 0.749578000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP Fast Retransmission] 81→52011 [PSH, ACK] Seq=7301 Ack=553 Win=65536 Len=1460[Reassembly error, protocol TCP: New fragment overlaps old data (retransmission?)]

34 0.749581000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

35 0.749907000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

36 0.749909000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

37 0.749910000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP Out-Of-Order] 81→52011 [PSH, ACK] Seq=16061 Ack=553 Win=65536 Len=1460[Reassembly error, protocol TCP: New fragment overlaps old data (retransmission?)]

38 0.749939000 192.168.1.103 -> 192.168.2.100 TCP 82 [TCP Dup ACK 16#9] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=24821 SRE=26281 SLE=17521 SRE=23361 SLE=8761 SRE=16061

39 0.749958000 192.168.1.103 -> 192.168.2.100 TCP 82 [TCP Dup ACK 16#10] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=24821 SRE=27741 SLE=17521 SRE=23361 SLE=8761 SRE=16061

40 0.750240000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

41 0.750241000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

42 0.750269000 192.168.1.103 -> 192.168.2.100 TCP 82 [TCP Dup ACK 16#11] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=24821 SRE=29201 SLE=17521 SRE=23361 SLE=8761 SRE=16061

43 0.750289000 192.168.1.103 -> 192.168.2.100 TCP 82 [TCP Dup ACK 16#12] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=24821 SRE=30661 SLE=17521 SRE=23361 SLE=8761 SRE=16061

44 0.750622000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

45 0.750624000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

46 0.750649000 192.168.1.103 -> 192.168.2.100 TCP 82 [TCP Dup ACK 16#13] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=24821 SRE=32121 SLE=17521 SRE=23361 SLE=8761 SRE=16061

47 0.751016000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP Out-Of-Order] 81→52011 [PSH, ACK] Seq=23361 Ack=553 Win=65536 Len=1460[Reassembly error, protocol TCP: New fragment overlaps old data (retransmission?)]

48 0.751017000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

49 0.751059000 192.168.1.103 -> 192.168.2.100 TCP 90 [TCP Dup ACK 16#14] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=33581 SRE=35041 SLE=24821 SRE=32121 SLE=17521 SRE=23361 SLE=8761 SRE=16061

50 0.751549000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

51 0.751551000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

52 0.751567000 192.168.1.103 -> 192.168.2.100 TCP 90 [TCP Dup ACK 16#15] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=33581 SRE=36501 SLE=24821 SRE=32121 SLE=17521 SRE=23361 SLE=8761 SRE=16061

53 0.751582000 192.168.1.103 -> 192.168.2.100 TCP 90 [TCP Dup ACK 16#16] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=33581 SRE=37961 SLE=24821 SRE=32121 SLE=17521 SRE=23361 SLE=8761 SRE=16061

54 0.752392000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

55 0.752393000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

56 0.752394000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP Out-Of-Order] 81→52011 [PSH, ACK] Seq=32121 Ack=553 Win=65536 Len=1460[Reassembly error, protocol TCP: New fragment overlaps old data (retransmission?)]

57 0.752396000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

58 0.752406000 192.168.1.103 -> 192.168.2.100 TCP 90 [TCP Dup ACK 16#17] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=33581 SRE=39421 SLE=24821 SRE=32121 SLE=17521 SRE=23361 SLE=8761 SRE=16061

59 0.752433000 192.168.1.103 -> 192.168.2.100 TCP 90 [TCP Dup ACK 16#18] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=40881 SRE=42341 SLE=33581 SRE=39421 SLE=24821 SRE=32121 SLE=17521 SRE=23361

60 0.753551000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

61 0.753553000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

62 0.753571000 192.168.1.103 -> 192.168.2.100 TCP 90 [TCP Dup ACK 16#19] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=40881 SRE=43801 SLE=33581 SRE=39421 SLE=24821 SRE=32121 SLE=17521 SRE=23361

63 0.753588000 192.168.1.103 -> 192.168.2.100 TCP 90 [TCP Dup ACK 16#20] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=40881 SRE=45261 SLE=33581 SRE=39421 SLE=24821 SRE=32121 SLE=17521 SRE=23361

64 0.754550000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

65 0.754553000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP Out-Of-Order] 81→52011 [PSH, ACK] Seq=39421 Ack=553 Win=65536 Len=1460[Reassembly error, protocol TCP: New fragment overlaps old data (retransmission?)]

66 0.754553000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP segment of a reassembled PDU]

67 0.754572000 192.168.1.103 -> 192.168.2.100 TCP 90 [TCP Dup ACK 16#21] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=40881 SRE=46721 SLE=33581 SRE=39421 SLE=24821 SRE=32121 SLE=17521 SRE=23361

68 0.754610000 192.168.1.103 -> 192.168.2.100 TCP 90 [TCP Dup ACK 16#22] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=40881 SRE=48181 SLE=33581 SRE=39421 SLE=24821 SRE=32121 SLE=17521 SRE=23361

69 1.055597000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP Retransmission] 81→52011 [PSH, ACK] Seq=7301 Ack=553 Win=65536 Len=1460[Reassembly error, protocol TCP: New fragment overlaps old data (retransmission?)]

70 1.664583000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP Retransmission] 81→52011 [PSH, ACK] Seq=7301 Ack=553 Win=65536 Len=1460[Reassembly error, protocol TCP: New fragment overlaps old data (retransmission?)]

71 2.867842000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP Retransmission] 81→52011 [PSH, ACK] Seq=7301 Ack=553 Win=65536 Len=1460[Reassembly error, protocol TCP: New fragment overlaps old data (retransmission?)]

72 5.274379000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP Retransmission] 81→52011 [PSH, ACK] Seq=7301 Ack=553 Win=65536 Len=1460[Reassembly error, protocol TCP: New fragment overlaps old data (retransmission?)]

73 10.071711000 192.168.2.100 -> 192.168.1.103 TCP 1514 [TCP Retransmission] 81→52011 [PSH, ACK] Seq=7301 Ack=553 Win=65536 Len=1460[Reassembly error, protocol TCP: New fragment overlaps old data (retransmission?)]

74 19.685995000 192.168.2.100 -> 192.168.1.103 TCP 60 81→52011 [RST, ACK] Seq=8761 Ack=553 Win=0 Len=0

75 19.686030000 192.168.1.103 -> 192.168.2.100 TCP 90 [TCP Dup ACK 16#23] 52011→81 [ACK] Seq=553 Ack=7301 Win=65536 Len=0 SLE=40881 SRE=48181 SLE=33581 SRE=39421 SLE=24821 SRE=32121 SLE=17521 SRE=23361

76 45.752325000 192.168.1.103 -> 192.168.2.100 TCP 55 [TCP Keep-Alive] [TCP Window Full] 52011→81 [ACK] Seq=552 Ack=7301 Win=65536 Len=1

77 58.686133000 192.168.1.103 -> 192.168.2.100 TCP 54 52011→81 [FIN, ACK] Seq=553 Ack=7301 Win=65536 Len=0

78 58.985830000 192.168.1.103 -> 192.168.2.100 TCP 54 [TCP Retransmission] 52011→81 [FIN, ACK] Seq=553 Ack=7301 Win=65536 Len=0

79 59.585853000 192.168.1.103 -> 192.168.2.100 TCP 54 [TCP Retransmission] 52011→81 [FIN, ACK] Seq=553 Ack=7301 Win=65536 Len=0

80 60.785894000 192.168.1.103 -> 192.168.2.100 TCP 54 [TCP Retransmission] 52011→81 [FIN, ACK] Seq=553 Ack=7301 Win=65536 Len=0

81 63.185944000 192.168.1.103 -> 192.168.2.100 TCP 54 [TCP Retransmission] 52011→81 [FIN, ACK] Seq=553 Ack=7301 Win=65536 Len=0

82 67.988211000 192.168.1.103 -> 192.168.2.100 TCP 54 [TCP Retransmission] 52011→81 [FIN, ACK] Seq=553 Ack=7301 Win=65536 Len=0

83 77.582500000 192.168.1.103 -> 192.168.2.100 TCP 54 52011→81 [RST, ACK] Seq=554 Ack=7301 Win=0 Len=0
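One pattern in this trace is easy to check by hand: the spacing of the server's repeated attempts to deliver Seq=7301. The sketch below takes the timestamps of frame 33 (fast retransmission), frames 69 to 73 (timer-driven retransmissions) and frame 74 (the server's RST) directly from the listing and computes the gaps between them. It only illustrates the arithmetic and does not explain why the segment is never acknowledged.

```python
# Timestamps in seconds, copied from frames 33, 69, 70, 71, 72, 73 and 74
events = [0.749578, 1.055597, 1.664583, 2.867842, 5.274379, 10.071711, 19.685995]

# Gap between each attempt and the next one
intervals = [later - earlier for earlier, later in zip(events, events[1:])]

# Ratio of each gap to the previous gap
ratios = [later / earlier for earlier, later in zip(intervals, intervals[1:])]

for gap in intervals:
    print(f"{gap:.3f} s")
for ratio in ratios:
    print(f"x{ratio:.2f}")
```

The gaps come out at roughly 0.3, 0.6, 1.2, 2.4, 4.8 and 9.6 seconds, so each wait is about twice the previous one. That is consistent with a retransmission timer that doubles after every expiry until the sender gives up and resets the connection.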