| Reed–Solomon codes | |
|---|---|
| Named after | Irving S. Reed and Gustave Solomon |
| Classification | |
| Hierarchy | Linear block code → Polynomial code → Reed–Solomon code |
| Block length | n |
| Message length | k |
| Distance | n − k + 1 |
| Alphabet size | q = p^m ≥ n (p prime); often n = q − 1 |
| Notation | [n, k, n − k + 1]_q-code |
| Algorithms | Berlekamp–Massey, Euclidean, et al. |
| Properties | Maximum-distance separable code |

Reed–Solomon codes are a group of error-correcting codes that were introduced by Irving S. Reed and Gustave Solomon in 1960.[1]

They have many applications, including consumer technologies such as MiniDiscs, CDs, DVDs, Blu-ray discs, and QR codes; data transmission technologies such as DSL and WiMAX; broadcast systems such as satellite communications, DVB, and ATSC; and storage systems such as RAID 6.

Reed–Solomon codes operate on a block of data treated as a set of finite-field elements called symbols. Reed–Solomon codes are able to detect and correct multiple symbol errors. By adding t = n − k check symbols to the data, a Reed–Solomon code can detect (but not correct) any combination of up to t erroneous symbols, or locate and correct up to ⌊t/2⌋ erroneous symbols at unknown locations. As an erasure code, it can correct up to t erasures at locations that are known and provided to the algorithm, or it can detect and correct combinations of errors and erasures. Reed–Solomon codes are also suitable as multiple-burst bit-error correcting codes, since a sequence of b + 1 consecutive bit errors can affect at most two symbols of size b. The choice of t is up to the designer of the code and may be selected within wide limits.
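The arithmetic in the paragraph above can be sketched in a few lines of Python. The function names are hypothetical, and the combined rule used in `can_decode` (each error costs two check symbols, each erasure costs one) is the standard condition for maximum-distance separable codes rather than something spelled out in the paragraph itself.

```python
def rs_capabilities(n: int, k: int) -> dict:
    """Summarize what an (n, k) Reed-Solomon code can handle per block."""
    t = n - k  # number of check symbols
    return {
        "check_symbols": t,
        "max_detectable_errors": t,       # detection only, no correction
        "max_correctable_errors": t // 2,  # errors at unknown locations
        "max_correctable_erasures": t,     # errors at known locations
    }


def can_decode(n: int, k: int, errors: int, erasures: int) -> bool:
    """Assumed combined rule: 2 * errors + erasures <= n - k."""
    return 2 * errors + erasures <= n - k


print(rs_capabilities(255, 223))
print(can_decode(255, 223, errors=10, erasures=12))  # 2*10 + 12 = 32 <= 32
```

For the widely used (255, 223) code this gives 32 check symbols and up to 16 correctable symbol errors per block.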

There are two basic types of Reed–Solomon codes – original view and BCH view – with BCH view being the most common, as BCH view decoders are faster and require less working storage than original view decoders.

History

Reed–Solomon codes were developed in 1960 by Irving S. Reed and Gustave Solomon, who were then staff members of MIT Lincoln Laboratory. Their seminal article was titled "Polynomial Codes over Certain Finite Fields" (Reed & Solomon 1960). The original encoding scheme described in the article used a variable polynomial based on the message to be encoded, where only a fixed set of values (evaluation points) is known to both encoder and decoder. The original theoretical decoder generated potential polynomials based on subsets of k (unencoded message length) out of n (encoded message length) values of a received message, choosing the most popular polynomial as the correct one, which was impractical for all but the simplest of cases. This was initially resolved by changing the original scheme to a BCH-code-like scheme based on a fixed polynomial known to both encoder and decoder. Practical decoders based on the original scheme were developed later, although they are slower than the BCH schemes. As a result, there are two main types of Reed–Solomon codes: those that use the original encoding scheme and those that use the BCH encoding scheme.

In January 1960, a practical fixed-polynomial decoder for BCH codes developed by Daniel Gorenstein and Neal Zierler was described in an MIT Lincoln Laboratory report by Zierler, and later in a paper in June 1961.[2] The Gorenstein–Zierler decoder and the related work on BCH codes are described in the book Error Correcting Codes by W. Wesley Peterson (1961).[3] By 1963 (or possibly earlier), J. J. Stone (and others) recognized that Reed–Solomon codes could use the BCH scheme of a fixed generator polynomial, making such codes a special class of BCH codes.[4] Reed–Solomon codes based on the original encoding scheme, however, are not a class of BCH codes and, depending on the set of evaluation points, are not even cyclic codes.

In 1969, an improved BCH scheme decoder was developed by Elwyn Berlekamp and James Massey, and has since been known as the Berlekamp–Massey decoding algorithm.

In 1975, another improved BCH scheme decoder was developed by Yasuo Sugiyama, based on the extended Euclidean algorithm.[5]

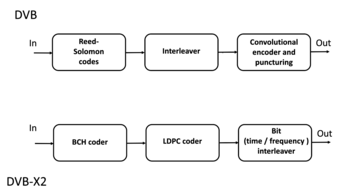

In 1977, Reed–Solomon codes were implemented in the Voyager program in the form of concatenated error correction codes. The first commercial application in mass-produced consumer products appeared in 1982 with the compact disc, where two interleaved Reed–Solomon codes are used. Today, Reed–Solomon codes are widely implemented in digital storage devices and digital communication standards, though they are being slowly replaced by Bose–Chaudhuri–Hocquenghem (BCH) codes. For example, Reed–Solomon codes are used in the Digital Video Broadcasting (DVB) standard DVB-S, in conjunction with a convolutional inner code, but BCH codes are used with LDPC in its successor, DVB-S2.

In 1986, an original scheme decoder known as the Berlekamp–Welch algorithm was developed.

In 1996, variations of original scheme decoders called list decoders or soft decoders were developed by Madhu Sudan and others, and work continues on these types of decoders – see Guruswami–Sudan list decoding algorithm.

In 2002, another original scheme decoder was developed by Shuhong Gao, based on the extended Euclidean algorithm.[6]

Applications

Data storage

Reed–Solomon coding is very widely used in mass storage systems to correct the burst errors associated with media defects.

Reed–Solomon coding is a key component of the compact disc. It was the first use of strong error correction coding in a mass-produced consumer product, and DAT and DVD use similar schemes. In the CD, two layers of Reed–Solomon coding separated by a 28-way convolutional interleaver yield a scheme called Cross-Interleaved Reed–Solomon Coding (CIRC). The first element of a CIRC decoder is a relatively weak inner (32,28) Reed–Solomon code, shortened from a (255,251) code with 8-bit symbols. This code can correct up to 2 byte errors per 32-byte block. More importantly, it flags as erasures any uncorrectable blocks, i.e., blocks with more than 2 byte errors. The decoded 28-byte blocks, with erasure indications, are then spread by the deinterleaver to different blocks of the (28,24) outer code. Thanks to the deinterleaving, an erased 28-byte block from the inner code becomes a single erased byte in each of 28 outer code blocks. The outer code easily corrects this, since it can handle up to 4 such erasures per block.

The result is a CIRC that can completely correct error bursts up to 4000 bits, or about 2.5 mm on the disc surface. This code is so strong that most CD playback errors are almost certainly caused by tracking errors that cause the laser to jump track, not by uncorrectable error bursts.[7]

DVDs use a similar scheme, but with much larger blocks, a (208,192) inner code, and a (182,172) outer code.

Reed–Solomon error correction is also used in parchive files which are commonly posted accompanying multimedia files on USENET. The distributed online storage service Wuala (discontinued in 2015) also used Reed–Solomon when breaking up files.

Bar code

Almost all two-dimensional bar codes such as PDF-417, MaxiCode, Datamatrix, QR Code, and Aztec Code use Reed–Solomon error correction to allow correct reading even if a portion of the bar code is damaged. When the bar code scanner cannot recognize a bar code symbol, it will treat it as an erasure.

Reed–Solomon coding is less common in one-dimensional bar codes, but is used by the PostBar symbology.

Data transmission

Specialized forms of Reed–Solomon codes, specifically Cauchy-RS and Vandermonde-RS, can be used to overcome the unreliable nature of data transmission over erasure channels. The encoding process assumes a code of RS(N, K), which generates N codewords of length N symbols, each storing K symbols of data, that are then sent over an erasure channel.

Any combination of K codewords received at the other end is enough to reconstruct all of the N codewords. The code rate is generally set to 1/2 unless the channel's erasure likelihood can be adequately modelled and is seen to be less. Consequently, N is usually 2K, meaning that at least half of all the codewords sent must be received in order to reconstruct all of the codewords sent.

Reed–Solomon codes are also used in xDSL systems and CCSDS’s Space Communications Protocol Specifications as a form of forward error correction.

Space transmission

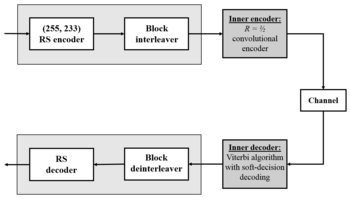

Deep-space concatenated coding system.[8] Notation: RS(255, 223) + CC ("constraint length" = 7, code rate = 1/2).

One significant application of Reed–Solomon coding was to encode the digital pictures sent back by the Voyager program.

Voyager introduced Reed–Solomon coding concatenated with convolutional codes, a practice that has since become very widespread in deep space and satellite (e.g., direct digital broadcasting) communications.

Viterbi decoders tend to produce errors in short bursts. Correcting these burst errors is a job best done by short or simplified Reed–Solomon codes.

Modern versions of concatenated Reed–Solomon/Viterbi-decoded convolutional coding were and are used on the Mars Pathfinder, Galileo, Mars Exploration Rover and Cassini missions, where they perform within about 1–1.5 dB of the ultimate limit, the Shannon capacity.

These concatenated codes are now being replaced by more powerful turbo codes:

| Years | Code | Mission(s) |
|---|---|---|
| 1958–present | Uncoded | Explorer, Mariner, many others |
| 1968–1978 | Convolutional codes (CC) (25, 1/2) | Pioneer, Venus |
| 1969–1975 | Reed–Muller code (32, 6) | Mariner, Viking |
| 1977–present | Binary Golay code | Voyager |
| 1977–present | RS(255, 223) + CC(7, 1/2) | Voyager, Galileo, many others |
| 1989–2003 | RS(255, 223) + CC(7, 1/3) | Voyager |
| 1989–2003 | RS(255, 223) + CC(14, 1/4) | Galileo |
| 1996–present | RS + CC (15, 1/6) | Cassini, Mars Pathfinder, others |
| 2004–present | Turbo codes[nb 1] | Messenger, Stereo, MRO, others |
| est. 2009 | LDPC codes | Constellation, MSL |

Constructions (encoding)

The Reed–Solomon code is actually a family of codes, where every code is characterised by three parameters: an alphabet size q, a block length n, and a message length k, with k < n ≤ q. The set of alphabet symbols is interpreted as the finite field of order q, and thus, q must be a prime power. In the most useful parameterizations of the Reed–Solomon code, the block length is usually some constant multiple of the message length, that is, the rate R = k/n is some constant, and furthermore, the block length is equal to or one less than the alphabet size, that is, n = q or n = q − 1.[citation needed]

Reed & Solomon's original view: The codeword as a sequence of values

There are different encoding procedures for the Reed–Solomon code, and thus, there are different ways to describe the set of all codewords.

In the original view of Reed & Solomon (1960), every codeword of the Reed–Solomon code is a sequence of function values of a polynomial of degree less than k. In order to obtain a codeword of the Reed–Solomon code, the message symbols (each within the q-sized alphabet) are treated as the coefficients of a polynomial p of degree less than k, over the finite field F with q elements.

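In turn, the polynomial p is evaluated at n ≤ q distinct points $a_1, \dots, a_n$ of the field F, and the sequence of values is the corresponding codeword. Common choices for the set of evaluation points include {0, 1, 2, ..., n − 1}, {0, 1, α, α², ..., α^(n−2)}, or, for n < q, {1, α, α², ..., α^(n−1)}, where α is a primitive element of F.

Formally, the set $\mathbf{C}$ of codewords of the Reed–Solomon code is defined as follows:

$$\mathbf{C} = \{\, (p(a_1), p(a_2), \dots, p(a_n)) \mid p \text{ is a polynomial over } F \text{ of degree } < k \,\}$$

Since any two distinct polynomials of degree less than $k$ agree in at most $k-1$ points, any two codewords of the Reed–Solomon code disagree in at least $n-(k-1) = n-k+1$ positions. Furthermore, there are two polynomials that agree in $k-1$ points but are not equal, so the distance of the Reed–Solomon code is exactly $d = n-k+1$. The relative distance is then $\delta = d/n = 1 - k/n + 1/n = 1 - R + 1/n \sim 1 - R$, where $R = k/n$ is the rate. This trade-off between the relative distance and the rate is asymptotically optimal since, by the Singleton bound, every code satisfies $\delta + R \le 1 + 1/n$.

Being a code that achieves this optimal trade-off, the Reed–Solomon code belongs to the class of maximum distance separable codes.

While the number of different polynomials of degree less than k and the number of different messages are both equal to $q^k$, and thus every message can be uniquely mapped to such a polynomial, there are different ways of doing this encoding. The original construction of Reed & Solomon (1960) interprets the message as the coefficients of the polynomial p, whereas subsequent constructions interpret the message as the values of the polynomial at the first k points $a_1, \dots, a_k$ and obtain the polynomial p by interpolating these values with a polynomial of degree less than k. The latter encoding procedure, while slightly less efficient, gives rise to a systematic code, that is, the original message is always contained as a subsequence of the codeword.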

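Simple encoding procedure: The message as a sequence of coefficients

In the original construction of Reed & Solomon (1960), the message $x = (x_1, \dots, x_k) \in F^k$ is mapped to the polynomial $p_x$ with

$$p_x(a) = \sum_{i=1}^{k} x_i a^{i-1}$$

The codeword of $x$ is obtained by evaluating $p_x$ at $n$ different points $a_1, \dots, a_n$ of the field $F$. Thus the classical encoding function $C : F^k \to F^n$ for the Reed–Solomon code is defined as follows:

$$C(x) = (p_x(a_1), \dots, p_x(a_n))$$

This function $C$ is a linear mapping, that is, it satisfies $C(x) = x \cdot A$ for the following $(k \times n)$-matrix $A$ with elements from $F$:

$$A = \begin{bmatrix} 1 & \dots & 1 & \dots & 1 \\ a_1 & \dots & a_k & \dots & a_n \\ a_1^2 & \dots & a_k^2 & \dots & a_n^2 \\ \vdots & & \vdots & & \vdots \\ a_1^{k-1} & \dots & a_k^{k-1} & \dots & a_n^{k-1} \end{bmatrix}$$

This matrix is the transpose of a Vandermonde matrix over $F$. In other words, the Reed–Solomon code is a linear code, and in the classical encoding procedure, its generator matrix is $A$.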
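As a rough illustration of this encoding procedure, the sketch below evaluates the message polynomial at each point and also builds the generator matrix. It assumes the prime field GF(929), where field arithmetic is ordinary arithmetic modulo 929, and the evaluation points 0..6; the function names are hypothetical.

```python
P = 929  # prime modulus, so integers mod P form a field (illustrative choice)


def poly_eval(coeffs, x, p=P):
    """Evaluate a polynomial (lowest-degree coefficient first) by Horner's rule."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % p
    return acc


def rs_encode_eval(message, points, p=P):
    """Original-view encoding: message symbols are the polynomial coefficients."""
    return [poly_eval(message, a, p) for a in points]


def generator_matrix(k, points, p=P):
    """k x n matrix with entry a_i**j in row j, column i."""
    return [[pow(a, j, p) for a in points] for j in range(k)]


message = [1, 2, 3]          # p(x) = 1 + 2x + 3x^2
points = list(range(7))      # a_i = 0, 1, ..., 6
codeword = rs_encode_eval(message, points)
print(codeword)              # [1, 6, 17, 34, 57, 86, 121]
```

Multiplying the message row vector by `generator_matrix(3, points)` modulo 929 gives the same codeword, which is the linearity property stated above.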

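Systematic encoding procedure: The message as an initial sequence of values

There is an alternative encoding procedure that also produces the Reed–Solomon code, but that does so in a systematic way. Here, the mapping from the message $x$ to the polynomial $p_x$ works differently: the polynomial $p_x$ is now defined as the unique polynomial of degree less than $k$ such that $p_x(a_i) = x_i$ holds for all $i \in \{1, \dots, k\}$.

To compute this polynomial $p_x$ from $x$, one can use Lagrange interpolation.

Once it has been found, it is evaluated at the other points $a_{k+1}, \dots, a_n$ of the field.

The alternative encoding function $C : F^k \to F^n$ for the Reed–Solomon code is then again just the sequence of values:

$$C(x) = (p_x(a_1), \dots, p_x(a_n))$$

Since the first $k$ entries of each codeword $C(x)$ coincide with $x$, this encoding procedure is indeed systematic.

Since Lagrange interpolation is a linear transformation, $C$ is a linear mapping. The encoding can therefore also be written as $C(x) = x \cdot G$ for a $(k \times n)$ generator matrix $G$ whose first $k$ columns form the identity matrix.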
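A minimal sketch of this systematic procedure, again assuming the prime field GF(929) and evaluation points 0..6 for illustration (the function names are hypothetical). The message symbols are treated as the polynomial's values at the first k points, and the remaining codeword symbols are obtained by Lagrange interpolation.

```python
P = 929  # prime modulus (illustrative choice)


def lagrange_eval(xs, ys, x, p=P):
    """Value at x of the unique polynomial of degree < len(xs) through (xs[i], ys[i])."""
    total = 0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        num, den = 1, 1
        for j, xj in enumerate(xs):
            if j != i:
                num = num * (x - xj) % p
                den = den * (xi - xj) % p
        # pow(den, -1, p) is the modular inverse (Python 3.8+)
        total = (total + yi * num * pow(den, -1, p)) % p
    return total


def rs_encode_systematic(message, points, p=P):
    """Codeword = message followed by the interpolated values at the other points."""
    k = len(message)
    xs = points[:k]
    return list(message) + [lagrange_eval(xs, message, a, p) for a in points[k:]]


print(rs_encode_systematic([1, 6, 17], list(range(7))))
# [1, 6, 17, 34, 57, 86, 121]
```

The message (1, 6, 17) appears unchanged at the start of the codeword. The interpolated polynomial here is 3x² + 2x + 1, so the result is also a codeword of the coefficient-based encoding, just assigned to a different message.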

Discrete Fourier transform and its inverse

A discrete Fourier transform is essentially the same as the encoding procedure; it uses the generator polynomial p(x) to map a set of evaluation points into the message values as shown above:

$$(p(a_1), p(a_2), \dots, p(a_n))$$

The inverse Fourier transform could be used to convert an error-free set of n < q message values back into the encoding polynomial of k coefficients, with the constraint that in order for this to work, the set of evaluation points used to encode the message must be a set of increasing powers of α:

$$a_i = \alpha^{i-1}, \qquad \{a_1, \dots, a_n\} = \{1, \alpha, \alpha^2, \dots, \alpha^{n-1}\}$$

However, Lagrange interpolation performs the same conversion without the constraint on the set of evaluation points or the requirement of an error-free set of message values. It is used for systematic encoding and in one of the steps of the Gao decoder.

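The BCH view: The codeword as a sequence of coefficients

In this view, the message is interpreted as the coefficients of a polynomial $p(x)$. The sender computes a related polynomial $s(x)$ of degree $n-1$, where $n \le q-1$, and sends the polynomial $s(x)$. The polynomial $s(x)$ is constructed by multiplying the message polynomial $p(x)$, which has degree $k-1$, with a generator polynomial $g(x)$ of degree $n-k$ that is known to both the sender and the receiver. The generator polynomial $g(x)$ is defined as the polynomial whose roots are sequential powers of the Galois field primitive $\alpha$:

$$g(x) = (x - \alpha^{i})(x - \alpha^{i+1}) \cdots (x - \alpha^{i+n-k-1}) = g_0 + g_1 x + \cdots + g_{n-k-1} x^{n-k-1} + x^{n-k}$$

For a "narrow sense code", $i = 1$.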

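Systematic encoding procedure

The encoding procedure for the BCH view of Reed–Solomon codes can be modified to yield a systematic encoding procedure, in which each codeword contains the message as a prefix, and simply appends error correcting symbols as a suffix. Here, instead of sending $s(x) = p(x) g(x)$, the encoder constructs the transmitted polynomial $s(x)$ such that the coefficients of the $k$ largest monomials are equal to the corresponding coefficients of $p(x)$, and the lower-order coefficients of $s(x)$ are chosen exactly in such a way that $s(x)$ becomes divisible by $g(x)$.

Formally, the construction is done by multiplying $p(x)$ by $x^t$ to make room for the $t = n-k$ check symbols, dividing that product by $g(x)$ to find the remainder, and then compensating for that remainder by subtracting it. The $t$ check symbols are created by computing the remainder $s_r(x)$:

$$s_r(x) = p(x) \cdot x^t \bmod g(x)$$

The remainder has degree at most $t-1$, whereas the coefficients of $x^{t-1}, x^{t-2}, \dots, x^1, x^0$ in the polynomial $p(x) \cdot x^t$ are zero. Therefore, the following definition of the codeword $s(x)$ has the property that the first $k$ coefficients are identical to the coefficients of $p(x)$:

$$s(x) = p(x) \cdot x^t - s_r(x)$$

As a result, the codewords $s(x)$ are divisible by the generator polynomial $g(x)$:

$$s(x) \equiv p(x) \cdot x^t - s_r(x) \equiv s_r(x) - s_r(x) \equiv 0 \pmod{g(x)}$$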
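The multiply, divide, and subtract steps can be sketched as follows. This is an illustration under stated assumptions: the prime field GF(929) with primitive element 3 and t = 4 check symbols (the parameters of the PDF417-style example used later in the article), polynomials stored highest-degree first, and hypothetical function names.

```python
P, ALPHA = 929, 3  # prime field and primitive element (illustrative parameters)


def poly_mul(a, b, p=P):
    """Multiply two polynomials (highest-degree coefficient first) mod p."""
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] = (out[i + j] + x * y) % p
    return out


def generator_poly(t, alpha=ALPHA, p=P):
    """g(x) = (x - alpha)(x - alpha^2)...(x - alpha^t), a narrow-sense generator."""
    g = [1]
    for j in range(1, t + 1):
        g = poly_mul(g, [1, -pow(alpha, j, p) % p], p)
    return g


def poly_rem(num, den, p=P):
    """Remainder of num / den by long division; den is assumed monic."""
    rem = list(num)
    for i in range(len(num) - len(den) + 1):
        q = rem[i]
        for j, d in enumerate(den):
            rem[i + j] = (rem[i + j] - q * d) % p
    return rem[-(len(den) - 1):]


def rs_encode_bch(message, t, alpha=ALPHA, p=P):
    """Systematic codeword: message coefficients followed by t check symbols."""
    g = generator_poly(t, alpha, p)
    shifted = list(message) + [0] * t          # p(x) * x^t
    remainder = poly_rem(shifted, g, p)        # s_r(x)
    return list(message) + [(-r) % p for r in remainder]


print(generator_poly(4))           # [1, 809, 723, 568, 522]
print(rs_encode_bch([3, 2, 1], 4)) # [3, 2, 1, 382, 191, 487, 474]
```

The message 3x² + 2x + 1 appears unchanged as the three highest coefficients of the codeword, and the four appended symbols make the whole polynomial divisible by g(x).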

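Properties

The Reed–Solomon code is a [n, k, n − k + 1] code; in other words, it is a linear block code of length n (over F) with dimension k and minimum Hamming distance $d_{\min} = n - k + 1$. The Reed–Solomon code is optimal in the sense that the minimum distance has the maximum value possible for a linear code of size (n, k); this is known as the Singleton bound. Such a code is also called a maximum distance separable (MDS) code.

The error-correcting ability of a Reed–Solomon code is determined by its minimum distance, or equivalently, by $n - k$, the measure of redundancy in the block. If the locations of the error symbols are not known in advance, then a Reed–Solomon code can correct up to $\lfloor (n-k)/2 \rfloor$ erroneous symbols, i.e., it can correct half as many errors as there are redundant symbols added to the block. Sometimes error locations are known in advance (e.g., side information in demodulator signal-to-noise ratios); these are called erasures. A Reed–Solomon code (like any MDS code) is able to correct twice as many erasures as errors, and any combination of errors and erasures can be corrected as long as the relation $2E + S \le n - k$ is satisfied, where $E$ is the number of errors and $S$ is the number of erasures in the block.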

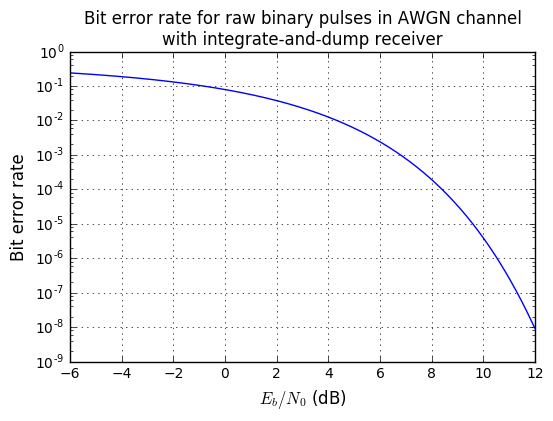

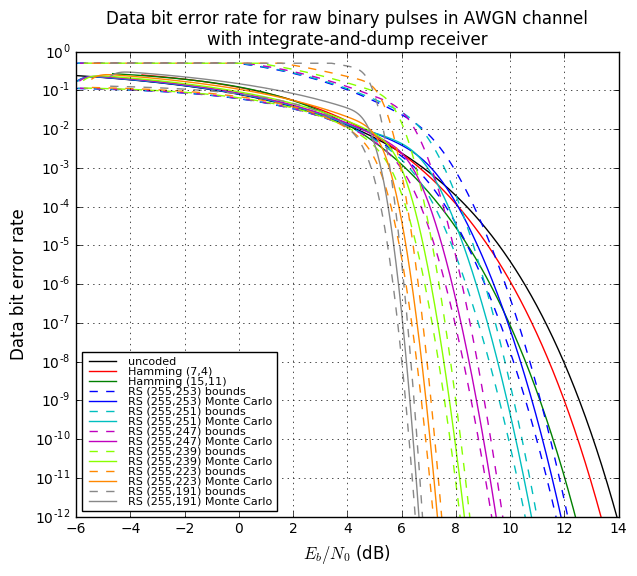

Theoretical BER performance of the Reed-Solomon code (N=255, K=233, QPSK, AWGN). Step-like characteristic.

The theoretical error bound can be described via the following formula for the AWGN channel for FSK:[11]

and for other modulation schemes:

where

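For practical uses of Reed–Solomon codes, it is common to use a finite field $F$ with $2^m$ elements. In this case, each symbol can be represented as an $m$-bit value.

The sender sends the data points as encoded blocks, and the number of symbols in the encoded block is $n = 2^m - 1$. Thus a Reed–Solomon code operating on 8-bit symbols has $n = 2^8 - 1 = 255$ symbols per block. The number $k$, with $k < n$, of data symbols in the block is a design parameter. A commonly used code encodes $k = 223$ eight-bit data symbols plus 32 eight-bit parity symbols in an $n = 255$-symbol block; this is denoted as a $(n, k) = (255, 223)$ code, and is capable of correcting up to 16 symbol errors per block.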

The Reed–Solomon code properties discussed above make them especially well-suited to applications where errors occur in bursts. This is because it does not matter to the code how many bits in a symbol are in error — if multiple bits in a symbol are corrupted it only counts as a single error. Conversely, if a data stream is not characterized by error bursts or drop-outs but by random single bit errors, a Reed–Solomon code is usually a poor choice compared to a binary code.

The Reed–Solomon code, like the convolutional code, is a transparent code. This means that if the channel symbols have been inverted somewhere along the line, the decoders will still operate. The result will be the inversion of the original data. However, the Reed–Solomon code loses its transparency when the code is shortened. The "missing" bits in a shortened code need to be filled by either zeros or ones, depending on whether the data is complemented or not. (To put it another way, if the symbols are inverted, then the zero-fill needs to be inverted to a one-fill.) For this reason it is mandatory that the sense of the data (i.e., true or complemented) be resolved before Reed–Solomon decoding.

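Whether the Reed–Solomon code is cyclic or not depends on subtle details of the construction. In the original view of Reed and Solomon, where the codewords are the values of a polynomial, one can choose the sequence of evaluation points in such a way as to make the code cyclic. In particular, if $\alpha$ is a primitive root of the field $F$, then by definition all non-zero elements of $F$ take the form $\alpha^i$ for $i \in \{1, \dots, q-1\}$, where $q = |F|$. Each polynomial $p$ over $F$ gives rise to a codeword $(p(\alpha^1), \dots, p(\alpha^{q-1}))$. Since the function $a \mapsto p(\alpha a)$ is also a polynomial of the same degree, this function gives rise to a codeword $(p(\alpha^2), \dots, p(\alpha^q))$; since $\alpha^q = \alpha^1$ holds, this codeword is the cyclic left-shift of the original codeword derived from $p$. So choosing a sequence of primitive root powers as the evaluation points makes the original view Reed–Solomon code cyclic. Reed–Solomon codes in the BCH view are always cyclic because BCH codes are cyclic.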


Designers are not required to use the "natural" sizes of Reed–Solomon code blocks. A technique known as "shortening" can produce a smaller code of any desired size from a larger code. For example, the widely used (255,223) code can be converted to a (160,128) code by padding the unused portion of the source block with 95 zero symbols and not transmitting them. At the decoder, the same portion of the block is loaded locally with zeroes. The Delsarte–Goethals–Seidel[12] theorem illustrates an example of an application of shortened Reed–Solomon codes. In parallel to shortening, a technique known as puncturing allows omitting some of the encoded parity symbols.

BCH view decoders

The decoders described in this section use the BCH view of a codeword as a sequence of coefficients. They use a fixed generator polynomial known to both encoder and decoder.

Peterson–Gorenstein–Zierler decoder

Daniel Gorenstein and Neal Zierler developed a decoder that was described in an MIT Lincoln Laboratory report by Zierler in January 1960 and later in a paper in June 1961.[13] The Gorenstein–Zierler decoder and the related work on BCH codes are described in the book Error Correcting Codes by W. Wesley Peterson (1961).[14]

Formulation

The transmitted message, $(c_0, \dots, c_i, \dots, c_{n-1})$, is viewed as the coefficients of a polynomial $s(x)$:

$$s(x) = \sum_{i=0}^{n-1} c_i x^i$$

As a result of the Reed–Solomon encoding procedure, s(x) is divisible by the generator polynomial g(x):

$$g(x) = \prod_{j=1}^{n-k} (x - \alpha^j)$$

where α is a primitive element.

Since s(x) is a multiple of the generator g(x), it follows that it "inherits" all its roots.

Therefore,

$$s(\alpha^j) = 0 \quad \text{for } j = 1, 2, \dots, n-k$$

The transmitted polynomial is corrupted in transit by an error polynomial e(x) to produce the received polynomial r(x):

$$r(x) = s(x) + e(x), \qquad e(x) = \sum_{i=0}^{n-1} e_i x^i$$

Coefficient $e_i$ will be zero if there is no error at that power of x and nonzero if there is an error. If there are ν errors at distinct powers $i_k$ of x, then

$$e(x) = \sum_{k=1}^{\nu} e_{i_k} x^{i_k}$$

The goal of the decoder is to find the number of errors (ν), the positions of the errors ($i_k$), and the error values at those positions ($e_{i_k}$). From those, e(x) can be calculated and subtracted from r(x) to get the originally sent message s(x).

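Syndrome decoding

The decoder starts by evaluating the polynomial as received at points $\alpha^1, \dots, \alpha^{n-k}$. The results of that evaluation are called the "syndromes", $S_j$. They are defined as:

$$S_j = r(\alpha^j) = s(\alpha^j) + e(\alpha^j) = 0 + e(\alpha^j) = e(\alpha^j) = \sum_{k=1}^{\nu} e_{i_k} (\alpha^j)^{i_k}, \quad j = 1, 2, \dots, n-k$$

Note that $s(\alpha^j) = 0$ because $s(x)$ has roots at $\alpha^j$, as shown in the previous section.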

The advantage of looking at the syndromes is that the message polynomial drops out. In other words, the syndromes only relate to the error, and are unaffected by the actual contents of the message being transmitted. If the syndromes are all zero, the algorithm stops here and reports that the message was not corrupted in transit.
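The syndrome computation can be sketched as below. The parameters are assumptions chosen for illustration: the prime field GF(929) with α = 3 and four check symbols, a sample codeword of that code, and a corrupted copy with two symbol errors. Polynomials are stored highest-degree first, and the function names are hypothetical.

```python
P, ALPHA = 929, 3  # prime field and primitive element (illustrative parameters)


def poly_eval(coeffs, x, p=P):
    """Horner evaluation; coefficients are highest-degree first."""
    acc = 0
    for c in coeffs:
        acc = (acc * x + c) % p
    return acc


def syndromes(received, t, alpha=ALPHA, p=P):
    """S_j = r(alpha^j) for j = 1..t."""
    return [poly_eval(received, pow(alpha, j, p), p) for j in range(1, t + 1)]


sent = [3, 2, 1, 382, 191, 487, 474]        # a valid codeword (sample data)
received = [3, 2, 123, 456, 191, 487, 474]  # two symbols corrupted

print(syndromes(sent, 4))      # [0, 0, 0, 0]
print(syndromes(received, 4))  # [732, 637, 762, 925]
```

All-zero syndromes indicate a valid codeword, and the non-zero values depend only on the error pattern, not on the message content.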

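Error locators and error values

For convenience, define the error locators $X_k$ and error values $Y_k$ as:

$$X_k = \alpha^{i_k}, \qquad Y_k = e_{i_k}$$

Then the syndromes can be written in terms of these error locators and error values as

$$S_j = \sum_{k=1}^{\nu} Y_k X_k^j$$

This definition of the syndrome values is equivalent to the previous since $(\alpha^j)^{i_k} = \alpha^{j \cdot i_k} = (\alpha^{i_k})^j = X_k^j$.

The syndromes give a system of n − k ≥ 2ν equations in 2ν unknowns, but that system of equations is nonlinear in the $X_k$ and does not have an obvious solution. However, if the $X_k$ were known (see below), then the syndrome equations provide a linear system of equations that can easily be solved for the $Y_k$ error values:

$$\begin{bmatrix} X_1^1 & X_2^1 & \cdots & X_\nu^1 \\ X_1^2 & X_2^2 & \cdots & X_\nu^2 \\ \vdots & \vdots & \ddots & \vdots \\ X_1^{n-k} & X_2^{n-k} & \cdots & X_\nu^{n-k} \end{bmatrix} \begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_\nu \end{bmatrix} = \begin{bmatrix} S_1 \\ S_2 \\ \vdots \\ S_{n-k} \end{bmatrix}$$

Consequently, the problem is finding the $X_k$, because then the leftmost matrix would be known, and both sides of the equation could be multiplied by its inverse, yielding $Y_k$.

In the variant of this algorithm where the locations of the errors are already known (when it is being used as an erasure code), this is the end. The error locations ($X_k$) are already known by some other method (for example, in an FM transmission, the sections where the bitstream was unclear or overcome with interference are probabilistically determinable from frequency analysis). In this scenario, up to $n - k$ errors can be corrected.

The rest of the algorithm serves to locate the errors, and will require syndrome values up to $2\nu$, instead of just the $\nu$ used thus far. This is why twice as many error-correcting symbols need to be added as can be corrected without knowing their locations.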

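Error locator polynomial

There is a linear recurrence relation that gives rise to a system of linear equations. Solving those equations identifies the error locations $X_k$.

Define the error locator polynomial Λ(x) as

$$\Lambda(x) = \prod_{k=1}^{\nu} (1 - x X_k) = 1 + \Lambda_1 x + \Lambda_2 x^2 + \cdots + \Lambda_\nu x^\nu$$

The zeros of Λ(x) are the reciprocals $X_k^{-1}$. This follows from the product form: if $x = X_k^{-1}$, then one of the factors is $(1 - X_k^{-1} X_k) = 0$, so the whole polynomial evaluates to zero:

$$\Lambda(X_k^{-1}) = 0$$

Let $j$ be any integer such that $1 \le j \le \nu$. Multiply both sides by $Y_k X_k^{j+\nu}$ and it will still be zero:

$$Y_k X_k^{j+\nu} \Lambda(X_k^{-1}) = 0$$

$$Y_k X_k^{j+\nu} \left(1 + \Lambda_1 X_k^{-1} + \Lambda_2 X_k^{-2} + \cdots + \Lambda_\nu X_k^{-\nu}\right) = 0$$

$$Y_k X_k^{j+\nu} + \Lambda_1 Y_k X_k^{j+\nu-1} + \Lambda_2 Y_k X_k^{j+\nu-2} + \cdots + \Lambda_\nu Y_k X_k^{j} = 0$$

Sum for k = 1 to ν and it will still be zero.

$$\sum_{k=1}^{\nu} \left( Y_k X_k^{j+\nu} + \Lambda_1 Y_k X_k^{j+\nu-1} + \Lambda_2 Y_k X_k^{j+\nu-2} + \cdots + \Lambda_\nu Y_k X_k^{j} \right) = 0$$

Collect each term into its own sum.

$$\left(\sum_{k=1}^{\nu} Y_k X_k^{j+\nu}\right) + \left(\sum_{k=1}^{\nu} \Lambda_1 Y_k X_k^{j+\nu-1}\right) + \cdots + \left(\sum_{k=1}^{\nu} \Lambda_\nu Y_k X_k^{j}\right) = 0$$

Extract the constant values of $\Lambda$ that are unaffected by the summation.

$$\left(\sum_{k=1}^{\nu} Y_k X_k^{j+\nu}\right) + \Lambda_1 \left(\sum_{k=1}^{\nu} Y_k X_k^{j+\nu-1}\right) + \cdots + \Lambda_\nu \left(\sum_{k=1}^{\nu} Y_k X_k^{j}\right) = 0$$

These summations are now equivalent to the syndrome values, which are known and can be substituted in. This therefore reduces to

$$S_{j+\nu} + \Lambda_1 S_{j+\nu-1} + \cdots + \Lambda_{\nu-1} S_{j+1} + \Lambda_\nu S_j = 0$$

Subtracting $S_{j+\nu}$ from both sides yields

$$S_j \Lambda_\nu + S_{j+1} \Lambda_{\nu-1} + \cdots + S_{j+\nu-1} \Lambda_1 = -S_{j+\nu}$$

Recall that j was chosen to be any integer between 1 and ν inclusive, and this equivalence is true for all such values. Therefore, there are ν linear equations, not just one. This system of linear equations can be solved for the coefficients $\Lambda_i$ of the error locator polynomial:

$$\begin{bmatrix} S_1 & S_2 & \cdots & S_\nu \\ S_2 & S_3 & \cdots & S_{\nu+1} \\ \vdots & \vdots & \ddots & \vdots \\ S_\nu & S_{\nu+1} & \cdots & S_{2\nu-1} \end{bmatrix} \begin{bmatrix} \Lambda_\nu \\ \Lambda_{\nu-1} \\ \vdots \\ \Lambda_1 \end{bmatrix} = \begin{bmatrix} -S_{\nu+1} \\ -S_{\nu+2} \\ \vdots \\ -S_{\nu+\nu} \end{bmatrix}$$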

The above assumes the decoder knows the number of errors ν, but that number has not been determined yet. The PGZ decoder does not determine ν directly but rather searches for it by trying successive values. The decoder first assumes the largest value for a trial ν and sets up the linear system for that value. If the equations can be solved (i.e., the matrix determinant is nonzero), then that trial value is the number of errors. If the linear system cannot be solved, then the trial ν is reduced by one and the next smaller system is examined. (Gill n.d., p. 35)
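The trial-and-reduce search for ν might look like the sketch below. It assumes a prime field (GF(929) here) so that ordinary modular arithmetic applies, and it solves the syndrome system by Gauss–Jordan elimination. The function names are hypothetical, and the syndrome list is 0-indexed, so `synd[0]` holds S₁.

```python
P = 929  # prime modulus (illustrative choice)


def solve_mod(matrix, rhs, p=P):
    """Solve a square linear system mod prime p; return None if it is singular."""
    n = len(rhs)
    aug = [list(row) + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] % p), None)
        if pivot is None:
            return None
        aug[col], aug[pivot] = aug[pivot], aug[col]
        inv = pow(aug[col][col], -1, p)
        aug[col] = [v * inv % p for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col]:
                f = aug[r][col]
                aug[r] = [(a - f * b) % p for a, b in zip(aug[r], aug[col])]
    return [row[n] for row in aug]


def pgz_locator(synd, p=P):
    """Return [1, Lambda_1, ..., Lambda_nu], trying the largest nu first."""
    for nu in range(len(synd) // 2, 0, -1):
        matrix = [[synd[j + i] for i in range(nu)] for j in range(nu)]
        rhs = [(-synd[j + nu]) % p for j in range(nu)]
        sol = solve_mod(matrix, rhs, p)      # [Lambda_nu, ..., Lambda_1]
        if sol is not None:
            return [1] + sol[::-1]
    return None


print(pgz_locator([732, 637, 762, 925]))  # [1, 821, 329]
```

With the four syndromes 732, 637, 762, 925 the 2 × 2 system is solvable, so ν = 2 and Λ(x) = 329x² + 821x + 1. For a single-error syndrome set the 2 × 2 matrix is singular and the search falls back to ν = 1.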

Find the roots of the error locator polynomial

Use the coefficients $\Lambda_i$ found in the last step to build the error locator polynomial. The roots of the error locator polynomial can be found by exhaustive search. The error locators $X_k$ are the reciprocals of those roots. The order of coefficients of the error locator polynomial can be reversed, in which case the roots of that reversed polynomial are the error locators $X_k$ themselves (not their reciprocals $X_k^{-1}$).

Calculate the error values

Once the error locators Xk are known, the error values can be determined. This can be done by direct solution for Yk in the error equations matrix given above, or using the Forney algorithm.
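A hedged sketch of the Forney approach follows. It assumes a prime field (GF(929)), syndromes S₁..S_t taken at α¹..α^t, and a locator polynomial stored lowest-degree first; under those assumptions each error value is −Ω(r)/Λ′(r) evaluated at the corresponding root r of Λ. The function name is hypothetical.

```python
P = 929  # prime modulus (illustrative choice)


def forney(synd, lam, roots, p=P):
    """Error values for each root of the locator polynomial.

    synd  : [S_1, ..., S_t]
    lam   : [1, Lambda_1, ..., Lambda_nu] (lowest degree first)
    roots : roots of lam, i.e. the reciprocals of the error locators
    """
    t = len(synd)
    # Omega(x) = S(x) * Lambda(x) mod x^t, with S(x) = S_1 + S_2 x + ...
    omega = [0] * t
    for i, s in enumerate(synd):
        for j, l in enumerate(lam):
            if i + j < t:
                omega[i + j] = (omega[i + j] + s * l) % p
    # Formal derivative of Lambda
    dlam = [(i * c) % p for i, c in enumerate(lam)][1:]

    def ev(poly, x):
        return sum(c * pow(x, i, p) for i, c in enumerate(poly)) % p

    return [(-ev(omega, r) * pow(ev(dlam, r), -1, p)) % p for r in roots]


print(forney([732, 637, 762, 925], [1, 821, 329], [757, 562]))  # [74, 122]
```

With the sample syndromes and locator polynomial, the two roots 757 and 562 yield the error values 74 and 122.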

Calculate the error locations

Calculate $i_k$ by taking the log base $\alpha$ of $X_k$. This is generally done using a precomputed lookup table.
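The root search and the logarithm lookup can be combined in a short sketch. It assumes the prime field GF(929) with α = 3 and a block length of 7, tries every non-zero field element as a root (practical decoders use faster methods), and uses a small table of powers of α as the discrete logarithm. The function names are hypothetical.

```python
P, ALPHA = 929, 3  # prime field and primitive element (illustrative parameters)


def locator_roots(lam, p=P):
    """Roots of Lambda by exhaustive search; lam is lowest-degree first."""
    def value(x):
        return sum(c * pow(x, i, p) for i, c in enumerate(lam)) % p
    return [x for x in range(1, p) if value(x) == 0]


def error_positions(lam, n, alpha=ALPHA, p=P):
    """Positions i_k such that X_k = alpha**i_k, where X_k = 1 / root."""
    log_table = {pow(alpha, i, p): i for i in range(n)}  # precomputed logs
    locators = [pow(r, -1, p) for r in locator_roots(lam, p)]
    return sorted(log_table[x] for x in locators if x in log_table)


lam = [1, 821, 329]              # Lambda(x) = 1 + 821x + 329x^2 (sample data)
print(locator_roots(lam))        # [562, 757]
print(error_positions(lam, 7))   # [3, 4]
```

The roots 562 and 757 are the reciprocals of 81 = 3⁴ and 27 = 3³, so the errors sit at the coefficients of x⁴ and x³.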

Fix the errors

Finally, e(x) is generated from ik and eik and then is subtracted from r(x) to get the originally sent message s(x), with errors corrected.

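Example

Consider the Reed–Solomon code defined in GF(929) with α = 3 and t = 4 (this is used in PDF417 barcodes) for a RS(7,3) code. The generator polynomial is

$$g(x) = (x - 3)(x - 3^2)(x - 3^3)(x - 3^4) = x^4 + 809 x^3 + 723 x^2 + 568 x + 522$$

If the message polynomial is p(x) = 3 x² + 2 x + 1, then a systematic codeword is encoded as follows.

$$s_r(x) = p(x)\, x^t \bmod g(x) = 547 x^3 + 738 x^2 + 442 x + 455$$

$$s(x) = p(x)\, x^t - s_r(x) = 3 x^6 + 2 x^5 + 1 x^4 + 382 x^3 + 191 x^2 + 487 x + 474$$

Errors in transmission might cause this to be received instead.

$$r(x) = s(x) + e(x) = 3 x^6 + 2 x^5 + 123 x^4 + 456 x^3 + 191 x^2 + 487 x + 474$$

The syndromes are calculated by evaluating r at powers of α.

$$S_1 = r(3^1) = 732, \quad S_2 = r(3^2) = 637, \quad S_3 = r(3^3) = 762, \quad S_4 = r(3^4) = 925$$

$$\begin{bmatrix} 732 & 637 \\ 637 & 762 \end{bmatrix} \begin{bmatrix} \Lambda_2 \\ \Lambda_1 \end{bmatrix} = \begin{bmatrix} -762 \\ -925 \end{bmatrix} = \begin{bmatrix} 167 \\ 004 \end{bmatrix}$$

Using Gaussian elimination:

$$\begin{bmatrix} 001 & 000 \\ 000 & 001 \end{bmatrix} \begin{bmatrix} \Lambda_2 \\ \Lambda_1 \end{bmatrix} = \begin{bmatrix} 329 \\ 821 \end{bmatrix}$$

Λ(x) = 329 x² + 821 x + 001, with roots x₁ = 757 = 3⁻³ and x₂ = 562 = 3⁻⁴

The coefficients can be reversed to produce roots with positive exponents, but typically this isn't used:

R(x) = 001 x² + 821 x + 329, with roots 27 = 3³ and 81 = 3⁴

with the log of the roots corresponding to the error locations (right to left, location 0 is the last term in the codeword).

To calculate the error values, apply the Forney algorithm, with S(x) = 925 x³ + 762 x² + 637 x + 732.

Ω(x) = S(x) Λ(x) mod x⁴ = 546 x + 732

Λ'(x) = 658 x + 821

e₁ = −Ω(x₁)/Λ'(x₁) = 074

e₂ = −Ω(x₂)/Λ'(x₂) = 122

Subtracting $e_1 x^3 + e_2 x^4 = 74 x^3 + 122 x^4$ from the received polynomial r(x) reproduces the original codeword s(x).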

Berlekamp–Massey decoder

The Berlekamp–Massey algorithm is an alternate iterative procedure for finding the error locator polynomial. During each iteration, it calculates a discrepancy based on a current instance of Λ(x) with an assumed number of errors e:

$$\Delta = S_i + \Lambda_1 S_{i-1} + \cdots + \Lambda_e S_{i-e}$$

and then adjusts Λ(x) and e so that a recalculated Δ would be zero. The article Berlekamp–Massey algorithm has a detailed description of the procedure. In the following example, C(x) is used to represent Λ(x).

Example

Using the same data as the Peterson–Gorenstein–Zierler example above:

| n | S_{n+1} | d | C | B | b | m |
|---|---|---|---|---|---|---|
| 0 | 732 | 732 | 197 x + 1 | 1 | 732 | 1 |
| 1 | 637 | 846 | 173 x + 1 | 1 | 732 | 2 |
| 2 | 762 | 412 | 634 x² + 173 x + 1 | 173 x + 1 | 412 | 1 |
| 3 | 925 | 576 | 329 x² + 821 x + 1 | 173 x + 1 | 412 | 2 |

The final value of C is the error locator polynomial, Λ(x).
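The iteration can be sketched in Python as follows, assuming a prime field (GF(929)) and using the same variable names as the table (C, B, b, m, with L tracking the assumed number of errors). This follows the common textbook formulation of the algorithm and is meant only as an illustration; polynomials are stored lowest-degree first.

```python
def berlekamp_massey(synd, p=929):
    """Return C(x) = Lambda(x) as [1, Lambda_1, ...] for syndromes [S_1, ...]."""
    C, B = [1], [1]      # current and previous connection polynomials
    L, m, b = 0, 1, 1    # assumed error count, shift, previous discrepancy
    for n, s in enumerate(synd):
        # Discrepancy d = S_{n+1} + sum_i C_i * S_{n+1-i}
        d = (s + sum(C[i] * synd[n - i]
                     for i in range(1, len(C)) if n - i >= 0)) % p
        if d == 0:
            m += 1
            continue
        coef = d * pow(b, -1, p) % p
        T = list(C)
        # C(x) := C(x) - (d / b) * x^m * B(x)
        C = C + [0] * (len(B) + m - len(C))
        for i, bi in enumerate(B):
            C[i + m] = (C[i + m] - coef * bi) % p
        if 2 * L <= n:
            L, B, b, m = n + 1 - L, T, d, 1
        else:
            m += 1
    return C


print(berlekamp_massey([732, 637, 762, 925]))  # [1, 821, 329]
```

Stepping through the loop with the four syndromes reproduces the discrepancies 732, 846, 412, 576 and the intermediate polynomials listed in the table.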

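Euclidean decoder

Another iterative method for calculating both the error locator polynomial and the error value polynomial is based on Sugiyama's adaptation of the extended Euclidean algorithm.

Define S(x), Λ(x), and Ω(x) for t syndromes and e errors:

$$S(x) = S_t x^{t-1} + S_{t-1} x^{t-2} + \cdots + S_2 x + S_1$$

$$\Lambda(x) = \Lambda_e x^e + \Lambda_{e-1} x^{e-1} + \cdots + \Lambda_1 x + 1$$

$$\Omega(x) = \Omega_{e-1} x^{e-1} + \cdots + \Omega_1 x + \Omega_0$$

The key equation is:

$$\Lambda(x) S(x) = Q(x) x^t + \Omega(x)$$

For t = 6 and e = 3:

$$\left[\Lambda_3 x^3 + \Lambda_2 x^2 + \Lambda_1 x + 1\right]\left[S_6 x^5 + S_5 x^4 + S_4 x^3 + S_3 x^2 + S_2 x + S_1\right] = \left[Q_2 x^2 + Q_1 x + Q_0\right] x^6 + 0 x^5 + 0 x^4 + 0 x^3 + \Omega_2 x^2 + \Omega_1 x + \Omega_0$$

The middle terms are zero due to the relationship between Λ and syndromes.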

The extended Euclidean algorithm can find a series of polynomials of the form

$$A_i(x) S(x) + B_i(x) x^t = R_i(x)$$

where the degree of R decreases as i increases. Once the degree of $R_i(x) < t/2$, then

$$A_i(x) = \Lambda(x), \qquad B_i(x) = -Q(x), \qquad R_i(x) = \Omega(x)$$

B(x) and Q(x) don't need to be saved, so the algorithm becomes:

```
R_{-1} := x^t
R_0    := S(x)
A_{-1} := 0
A_0    := 1
i := 0
while degree of R_i ≥ t/2
    i := i + 1
    Q   := R_{i-2} / R_{i-1}
    R_i := R_{i-2} - Q R_{i-1}
    A_i := A_{i-2} - Q A_{i-1}
```

To set the low order term of Λ(x) to 1, divide Λ(x) and Ω(x) by $A_i(0)$:

$$\Lambda(x) = A_i / A_i(0)$$

$$\Omega(x) = R_i / A_i(0)$$

$A_i(0)$ is the constant (low order) term of $A_i$.
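The loop above might be written in Python as in the sketch below. It assumes a prime field (GF(929)), stores polynomials lowest-degree first, and uses hypothetical helper names (`trim`, `poly_divmod`, `poly_sub_mul`, `sugiyama`).

```python
P = 929  # prime modulus (illustrative choice)


def trim(a):
    """Drop zero high-order coefficients (lists are lowest-degree first)."""
    while len(a) > 1 and a[-1] == 0:
        a = a[:-1]
    return a


def poly_divmod(a, b, p=P):
    """Quotient and remainder of a / b over GF(p)."""
    a, b = trim(list(a)), trim(list(b))
    q = [0] * max(1, len(a) - len(b) + 1)
    inv = pow(b[-1], -1, p)
    while len(a) >= len(b) and any(a):
        shift = len(a) - len(b)
        c = a[-1] * inv % p
        q[shift] = c
        for i, bi in enumerate(b):
            a[i + shift] = (a[i + shift] - c * bi) % p
        a = trim(a)
    return q, a


def poly_sub_mul(a, q, b, p=P):
    """Return a - q * b over GF(p)."""
    out = list(a) + [0] * max(0, len(q) + len(b) - 1 - len(a))
    for i, qi in enumerate(q):
        for j, bj in enumerate(b):
            out[i + j] = (out[i + j] - qi * bj) % p
    return trim(out)


def sugiyama(synd, p=P):
    """Return (Lambda, Omega) from syndromes [S_1, ..., S_t]."""
    t = len(synd)
    r_prev, r_cur = [0] * t + [1], trim(list(synd))  # x^t and S(x)
    a_prev, a_cur = [0], [1]
    while len(r_cur) - 1 >= t / 2 and any(r_cur):
        q, rem = poly_divmod(r_prev, r_cur, p)
        r_prev, r_cur = r_cur, rem
        a_prev, a_cur = a_cur, poly_sub_mul(a_prev, q, a_cur, p)
    inv = pow(a_cur[0], -1, p)                       # normalise so Lambda(0) = 1
    return [c * inv % p for c in a_cur], [c * inv % p for c in r_cur]


print(sugiyama([732, 637, 762, 925]))  # ([1, 821, 329], [732, 546])
```

For the sample syndromes the loop runs twice and, after normalisation, gives Λ(x) = 329x² + 821x + 1 and Ω(x) = 546x + 732.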

Example

Using the same data as the Peterson–Gorenstein–Zierler example above:

| i | R_i | A_i |
|---|---|---|
| −1 | 001 x⁴ + 000 x³ + 000 x² + 000 x + 000 | 000 |
| 0 | 925 x³ + 762 x² + 637 x + 732 | 001 |
| 1 | 683 x² + 676 x + 024 | 697 x + 396 |
| 2 | 673 x + 596 | 608 x² + 704 x + 544 |

Λ(x) = A₂ / 544 = 329 x² + 821 x + 001

Ω(x) = R₂ / 544 = 546 x + 732

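Decoder using discrete Fourier transform

A discrete Fourier transform can be used for decoding.[15] To avoid conflict with syndrome names, let c(x) = s(x) be the encoded codeword. r(x) and e(x) are the same as above. Define C(x), E(x), and R(x) as the discrete Fourier transforms of c(x), e(x), and r(x). Since r(x) = c(x) + e(x), and since a discrete Fourier transform is a linear operator, R(x) = C(x) + E(x).

Transform r(x) to R(x) using the discrete Fourier transform. Since the calculation for a discrete Fourier transform is the same as the calculation for syndromes, t coefficients of R(x) and E(x) are the same as the syndromes:

$$R_j = E_j = S_j = r(\alpha^j) \quad \text{for } 1 \le j \le t$$

Use $R_1$ through $R_t$ as syndromes (they are the same) and generate the error locator polynomial using the methods from any of the above decoders.

Let v = number of errors. Generate E(x) using the known coefficients $E_1$ to $E_t$, the error locator polynomial, and these formulas:

$$E_0 = -\frac{1}{\Lambda_v}\left(E_v + \Lambda_1 E_{v-1} + \cdots + \Lambda_{v-1} E_1\right)$$

$$E_j = -\left(\Lambda_1 E_{j-1} + \Lambda_2 E_{j-2} + \cdots + \Lambda_v E_{j-v}\right) \quad \text{for } t < j < n$$

Then calculate C(x) = R(x) − E(x) and take the inverse transform (polynomial interpolation) of C(x) to produce c(x).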

Decoding beyond the error-correction bound

The Singleton bound states that the minimum distance d of a linear block code of size (n,k) is upper-bounded by n − k + 1. The distance d was usually understood to limit the error-correction capability to ⌊(d−1) / 2⌋. The Reed–Solomon code achieves this bound with equality, and can thus correct up to ⌊(n−k) / 2⌋ errors. However, this error-correction bound is not exact.

In 1999, Madhu Sudan and Venkatesan Guruswami at MIT published "Improved Decoding of Reed–Solomon and Algebraic-Geometry Codes", introducing an algorithm that allowed for the correction of errors beyond half the minimum distance of the code.[16] It applies to Reed–Solomon codes and more generally to algebraic geometric codes. This algorithm produces a list of codewords (it is a list-decoding algorithm) and is based on interpolation and factorization of polynomials over $GF(2^m)$ and its extensions.

Soft-decoding

The algebraic decoding methods described above are hard-decision methods, which means that for every symbol a hard decision is made about its value. A soft-decision decoder, by contrast, could associate with each symbol an additional value corresponding to the channel demodulator's confidence in the correctness of the symbol. The advent of LDPC and turbo codes, which employ iterated soft-decision belief propagation decoding methods to achieve error-correction performance close to the theoretical limit, has spurred interest in applying soft-decision decoding to conventional algebraic codes. In 2003, Ralf Koetter and Alexander Vardy presented a polynomial-time soft-decision algebraic list-decoding algorithm for Reed–Solomon codes, which was based upon the work by Sudan and Guruswami.[17]

In 2016, Steven J. Franke and Joseph H. Taylor published a novel soft-decision decoder.[18]

MATLAB example

Encoder

Here we present a simple MATLAB implementation for an encoder.

```matlab
function encoded = rsEncoder(msg, m, prim_poly, n, k)
    % RSENCODER Encode message with the Reed-Solomon algorithm
    % m is the number of bits per symbol
    % prim_poly: Primitive polynomial p(x). Ie for DM is 301
    % k is the size of the message
    % n is the total size (k+redundant)
    % Example: msg = uint8('Test')
    % enc_msg = rsEncoder(msg, 8, 301, 12, numel(msg));

    % Get the alpha
    alpha = gf(2, m, prim_poly);

    % Get the Reed-Solomon generating polynomial g(x)
    g_x = genpoly(k, n, alpha);

    % Multiply the information by X^(n-k), or just pad with zeros at the end to
    % get space to add the redundant information
    msg_padded = gf([msg zeros(1, n - k)], m, prim_poly);

    % Get the remainder of the division of the extended message by the
    % Reed-Solomon generating polynomial g(x)
    [~, remainder] = deconv(msg_padded, g_x);

    % Now return the message with the redundant information
    encoded = msg_padded - remainder;
end

% Find the Reed-Solomon generating polynomial g(x), by the way this is the
% same as the rsgenpoly function on matlab
function g = genpoly(k, n, alpha)
    g = 1;
    % A multiplication on the galois field is just a convolution
    for k = mod(1 : n - k, n)
        g = conv(g, [1 alpha .^ (k)]);
    end
end
```

Decoder

Now the decoding part:

```matlab
function [decoded, error_pos, error_mag, g, S] = rsDecoder(encoded, m, prim_poly, n, k)
    % RSDECODER Decode a Reed-Solomon encoded message
    %   Example:
    %   [dec, ~, ~, ~, ~] = rsDecoder(enc_msg, 8, 301, 12, numel(msg))
    max_errors = floor((n - k) / 2);
    orig_vals = encoded.x;
    % Initialize the error vector
    errors = zeros(1, n);
    g = [];
    S = [];

    % Get the alpha
    alpha = gf(2, m, prim_poly);

    % Find the syndromes (Check if dividing the message by the generator
    % polynomial the result is zero)
    Synd = polyval(encoded, alpha .^ (1:n - k));
    Syndromes = trim(Synd);

    % If all syndromes are zeros (perfectly divisible) there are no errors
    if isempty(Syndromes.x)
        decoded = orig_vals(1:k);
        error_pos = [];
        error_mag = [];
        g = [];
        S = Synd;
        return;
    end

    % Prepare for the euclidean algorithm (Used to find the error locating
    % polynomials)
    r0 = [1, zeros(1, 2 * max_errors)]; r0 = gf(r0, m, prim_poly); r0 = trim(r0);
    size_r0 = length(r0);
    r1 = Syndromes;
    f0 = gf([zeros(1, size_r0 - 1) 1], m, prim_poly);
    f1 = gf(zeros(1, size_r0), m, prim_poly);
    g0 = f1; g1 = f0;

    % Do the euclidean algorithm on the polynomials r0(x) and Syndromes(x) in
    % order to find the error locating polynomial
    while true
        % Do a long division
        [quotient, remainder] = deconv(r0, r1);
        % Add some zeros
        quotient = pad(quotient, length(g1));

        % Find quotient*g1 and pad
        c = conv(quotient, g1);
        c = trim(c);
        c = pad(c, length(g0));

        % Update g as g0-quotient*g1
        g = g0 - c;

        % Check if the degree of remainder(x) is less than max_errors
        if all(remainder(1:end - max_errors) == 0)
            break;
        end

        % Update r0, r1, g0, g1 and remove leading zeros
        r0 = trim(r1); r1 = trim(remainder);
        g0 = g1; g1 = g;
    end

    % Remove leading zeros
    g = trim(g);

    % Find the zeros of the error polynomial on this galois field
    evalPoly = polyval(g, alpha .^ (n - 1 : - 1 : 0));
    error_pos = gf(find(evalPoly == 0), m);

    % If no error position is found, nothing more can be done, so return
    % the received message unchanged
    if isempty(error_pos)
        decoded = orig_vals(1:k);
        error_mag = [];
        return;
    end

    % Prepare a linear system to solve the error polynomial and find the error
    % magnitudes
    size_error = length(error_pos);
    Syndrome_Vals = Syndromes.x;
    b(:, 1) = Syndrome_Vals(1:size_error);
    for idx = 1 : size_error
        e = alpha .^ (idx * (n - error_pos.x));
        err = e.x;
        er(idx, :) = err;
    end

    % Solve the linear system (left division; the backslash operator was
    % missing from the flattened listing)
    error_mag = (gf(er, m, prim_poly) \ gf(b, m, prim_poly))';
    % Put the error magnitude on the error vector
    errors(error_pos.x) = error_mag.x;
    % Bring this vector to the galois field
    errors_gf = gf(errors, m, prim_poly);

    % Now to fix the errors just add with the encoded code
    decoded_gf = encoded(1:k) + errors_gf(1:k);
    decoded = decoded_gf.x;
end

% Remove leading zeros from Galois array
function gt = trim(g)
    gx = g.x;
    gt = gf(gx(find(gx, 1) : end), g.m, g.prim_poly);
end

% Add leading zeros
function xpad = pad(x, k)
    len = length(x);
    if len < k
        xpad = [zeros(1, k - len) x];
    else
        % Already long enough: return the input unchanged so that the
        % output is always assigned
        xpad = x;
    end
end
```

Reed–Solomon original view decoders

The decoders described in this section use the Reed–Solomon original view of a codeword as a sequence of polynomial values, where the polynomial is based on the message to be encoded. The same set of fixed values is used by the encoder and decoder, and the decoder recovers the encoding polynomial (and optionally an error locating polynomial) from the received message.

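Theoretical decoder

Reed & Solomon (1960) described a theoretical decoder that corrected errors by finding the most popular message polynomial. The decoder only knows the set of evaluation points a_1 to a_n and the encoding method that was used to generate the codeword; the original message, the polynomial, and any errors are unknown.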
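The "most popular polynomial" idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the procedure from the 1960 paper: it assumes a prime field GF(929), evaluation points 0 to 6, and k = 3 (the same parameters as the worked example further down), and the helper names `poly_mul`, `lagrange`, and `majority_decode` are made up for this sketch. It interpolates a polynomial through every subset of k received values and keeps the one that occurs most often.

```python
from collections import Counter
from itertools import combinations

PRIME = 929  # assumed prime field GF(929)

def poly_mul(a, b):
    """Multiply two polynomials (coefficients low order first) modulo PRIME."""
    out = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] = (out[i + j] + ai * bj) % PRIME
    return out

def lagrange(points):
    """Unique polynomial of degree < len(points) through the given (x, y) points."""
    result = [0] * len(points)
    for i, (xi, yi) in enumerate(points):
        basis, denom = [1], 1
        for j, (xj, _) in enumerate(points):
            if j != i:
                basis = poly_mul(basis, [-xj % PRIME, 1])   # times (x - xj)
                denom = denom * (xi - xj) % PRIME
        scale = yi * pow(denom, PRIME - 2, PRIME) % PRIME   # Fermat inverse
        for d, coef in enumerate(basis):
            result[d] = (result[d] + scale * coef) % PRIME
    return tuple(result)

def majority_decode(a, received, k):
    """Interpolate every k-subset and return (polynomial, votes) for the winner."""
    votes = Counter(
        lagrange([(a[i], received[i]) for i in subset])
        for subset in combinations(range(len(a)), k)
    )
    return votes.most_common(1)[0]

a = [0, 1, 2, 3, 4, 5, 6]
b = [1, 6, 123, 456, 57, 86, 121]   # values of 3x^2 + 2x + 1 with two errors
poly, count = majority_decode(a, b, 3)
print(poly, count)
```

Even for this tiny code there are 35 subsets to interpolate; the count grows as n choose k, which is why the approach is impractical for realistic block lengths.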

Berlekamp–Welch decoder

In 1986, the Berlekamp–Welch algorithm was developed. It recovers the original message polynomial as well as an error "locator" polynomial that produces zeroes for the input values that correspond to errors, with time complexity O(n^3), where n is the number of values in a message.

Example

Using RS(7,3), GF(929), and the set of evaluation points a_i = i − 1:

a = {0, 1, 2, 3, 4, 5, 6}

If the message polynomial is

p(x) = 003 x^2 + 002 x + 001

The codeword is

c = {001, 006, 017, 034, 057, 086, 121}

Errors in transmission might cause this to be received instead.

b = c + e = {001, 006, 123, 456, 057, 086, 121}

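The key equations are:

$$b_i E(a_i) - Q(a_i) = 0, \qquad i = 1, \ldots, n$$

Assume maximum number of errors: e = 2, so that E(x) = x^2 + e_1 x + e_0 is monic of degree 2 and Q(x) has degree e + k − 1 = 4. The key equations become:

$$b_i (e_0 + e_1 a_i) - (q_0 + q_1 a_i + q_2 a_i^2 + q_3 a_i^3 + q_4 a_i^4) = -b_i a_i^2$$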

Using Gaussian elimination:

Q(x) = 003 x^4 + 916 x^3 + 009 x^2 + 007 x + 006

E(x) = 001 x^2 + 924 x + 006

Q(x) / E(x) = P(x) = 003 x^2 + 002 x + 001

Recalculate P(x) where E(x) = 0 : {2, 3} to correct b, resulting in the corrected codeword:

c = {001, 006, 017, 034, 057, 086, 121}
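The example above can be checked numerically. The following Python sketch is one possible way to do it, not a reference implementation: it builds the linear system b_i E(a_i) = Q(a_i) with E(x) monic of degree e, solves it by Gauss-Jordan elimination over GF(929), and divides Q(x) by E(x). The function names are invented for this sketch, and it assumes the received word contains exactly e errors, so that the system has a unique solution.

```python
PRIME = 929  # GF(929)

def solve_mod(A, rhs):
    """Solve A x = rhs over GF(PRIME); A is assumed square and invertible."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] % PRIME)
        M[col], M[pivot] = M[pivot], M[col]
        inv = pow(M[col][col], PRIME - 2, PRIME)
        M[col] = [v * inv % PRIME for v in M[col]]
        for r in range(n):
            if r != col and M[r][col]:
                f = M[r][col]
                M[r] = [(v - f * w) % PRIME for v, w in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]

def poly_divmod(num, den):
    """Polynomial division over GF(PRIME); coefficients are low order first."""
    num = num[:]
    quot = [0] * (len(num) - len(den) + 1)
    inv = pow(den[-1], PRIME - 2, PRIME)
    for shift in range(len(quot) - 1, -1, -1):
        c = num[shift + len(den) - 1] * inv % PRIME
        quot[shift] = c
        for i, d in enumerate(den):
            num[shift + i] = (num[shift + i] - c * d) % PRIME
    return quot, num[:len(den) - 1]

def poly_eval(p, x):
    acc = 0
    for c in reversed(p):
        acc = (acc * x + c) % PRIME
    return acc

def berlekamp_welch(a, b, k, e):
    """Return (Q, E, message polynomial, remainder) for an assumed error count e."""
    rows, rhs = [], []
    for ai, bi in zip(a, b):
        # b_i (e_0 + ... + e_{e-1} a_i^{e-1}) - (q_0 + ... + q_{e+k-1} a_i^{e+k-1})
        #     = -b_i a_i^e
        e_part = [bi * pow(ai, j, PRIME) % PRIME for j in range(e)]
        q_part = [-pow(ai, j, PRIME) % PRIME for j in range(e + k)]
        rows.append(e_part + q_part)
        rhs.append(-bi * pow(ai, e, PRIME) % PRIME)
    sol = solve_mod(rows, rhs)
    E = sol[:e] + [1]          # monic error locator
    Q = sol[e:]
    msg, rem = poly_divmod(Q, E)
    return Q, E, msg, rem

a = [0, 1, 2, 3, 4, 5, 6]
b = [1, 6, 123, 456, 57, 86, 121]
Q, E, msg, rem = berlekamp_welch(a, b, k=3, e=2)
corrected = [poly_eval(msg, x) for x in a]
print(Q, E, msg, corrected)
```

Coefficients are listed low order first, so `E == [6, 924, 1]` corresponds to E(x) = x^2 + 924 x + 6. A fuller decoder would retry with a smaller e when the system turns out to be singular.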

Gao decoder

In 2002, an improved decoder was developed by Shuhong Gao, based on the extended Euclidean algorithm.[6]

Example

Using the same data as the Berlekamp–Welch example above:

| i | R_i | A_i |
|---|---|---|
| −1 | 001 x^7 + 908 x^6 + 175 x^5 + 194 x^4 + 695 x^3 + 094 x^2 + 720 x + 000 | 000 |
| 0 | 055 x^6 + 440 x^5 + 497 x^4 + 904 x^3 + 424 x^2 + 472 x + 001 | 001 |
| 1 | 702 x^5 + 845 x^4 + 691 x^3 + 461 x^2 + 327 x + 237 | 152 x + 237 |
| 2 | 266 x^4 + 086 x^3 + 798 x^2 + 311 x + 532 | 708 x^2 + 176 x + 532 |

Q(x) = R_2 = 266 x^4 + 086 x^3 + 798 x^2 + 311 x + 532

E(x) = A_2 = 708 x^2 + 176 x + 532

Divide Q(x) and E(x) by the most significant coefficient of E(x), which is 708 (optional):

Q(x) = 003 x^4 + 916 x^3 + 009 x^2 + 007 x + 006

E(x) = 001 x^2 + 924 x + 006

Q(x) / E(x) = P(x) = 003 x^2 + 002 x + 001

Recalculate P(x) where E(x) = 0 : {2, 3} to correct b, resulting in the corrected codeword:

c = {001, 006, 017, 034, 057, 086, 121}
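The table can be reproduced with a short Python sketch of the extended Euclidean iteration. It is written under assumptions rather than taken from Gao's paper: polynomials are lists of coefficients over GF(929) with the low-order term first, all helper names are made up here, R_{-1} is the product of (x − a_i), R_0 is the Lagrange interpolation of the received values, and the loop stops once the degree of R_i drops below (n + k)/2.

```python
PRIME = 929

def trim(p):
    """Drop high-order zero coefficients (always keep at least one term)."""
    while len(p) > 1 and p[-1] == 0:
        p = p[:-1]
    return p

def add(a, b):
    n = max(len(a), len(b))
    a, b = a + [0] * (n - len(a)), b + [0] * (n - len(b))
    return trim([(x + y) % PRIME for x, y in zip(a, b)])

def scale(a, c):
    return trim([x * c % PRIME for x in a])

def mul(a, b):
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] = (out[i + j] + x * y) % PRIME
    return trim(out)

def divmod_poly(num, den):
    num = num[:]
    if len(num) < len(den):
        return [0], trim(num)
    quot = [0] * (len(num) - len(den) + 1)
    inv = pow(den[-1], PRIME - 2, PRIME)
    for s in range(len(quot) - 1, -1, -1):
        c = num[s + len(den) - 1] * inv % PRIME
        quot[s] = c
        for i, d in enumerate(den):
            num[s + i] = (num[s + i] - c * d) % PRIME
    return trim(quot), trim(num[:len(den) - 1] or [0])

def interpolate(xs, ys):
    """Lagrange interpolation through the points (xs[i], ys[i])."""
    total = [0]
    for i, xi in enumerate(xs):
        basis, denom = [1], 1
        for j, xj in enumerate(xs):
            if j != i:
                basis = mul(basis, [-xj % PRIME, 1])
                denom = denom * (xi - xj) % PRIME
        total = add(total, scale(basis, ys[i] * pow(denom, PRIME - 2, PRIME) % PRIME))
    return total

def gao_decode(xs, received, k):
    n = len(xs)
    r_prev = [1]
    for x in xs:
        r_prev = mul(r_prev, [-x % PRIME, 1])          # R_{-1} = prod (x - a_i)
    r_cur = interpolate(xs, received)                  # R_0
    a_prev, a_cur = [0], [1]                           # A_{-1}, A_0
    while 2 * (len(r_cur) - 1) >= n + k:               # until deg R_i < (n + k) / 2
        q, rem = divmod_poly(r_prev, r_cur)
        r_prev, r_cur = r_cur, rem
        a_prev, a_cur = a_cur, add(a_prev, scale(mul(q, a_cur), PRIME - 1))
    lead_inv = pow(a_cur[-1], PRIME - 2, PRIME)        # optional normalisation
    Q, E = scale(r_cur, lead_inv), scale(a_cur, lead_inv)
    msg, rem = divmod_poly(Q, E)
    return Q, E, msg, rem

xs = [0, 1, 2, 3, 4, 5, 6]
b = [1, 6, 123, 456, 57, 86, 121]
Q, E, msg, rem = gao_decode(xs, b, 3)
print(Q, E, msg, rem)
```

A nonzero remainder from the final division would indicate more errors than the code can correct; the sketch returns it so that a caller can check.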

See also

- BCH code

- Cyclic code

- Chien search

- Berlekamp–Massey algorithm

- Forward error correction

- Berlekamp–Welch algorithm

- Folded Reed–Solomon code

Notes

- Andrews et al. (2007) provide simulation results showing that, for the same code rate (1/6), turbo codes outperform Reed–Solomon concatenated codes by up to 2 dB (bit error rate).[9]

References

1. Reed & Solomon (1960)

2. D. Gorenstein and N. Zierler, "A class of cyclic linear error-correcting codes in p^m symbols", J. SIAM, vol. 9, pp. 207–214, June 1961

3. Error Correcting Codes by W. Wesley Peterson, 1961

4. Error Correcting Codes by W. Wesley Peterson, second edition, 1972

5. Yasuo Sugiyama, Masao Kasahara, Shigeichi Hirasawa, and Toshihiko Namekawa. A method for solving key equation for decoding Goppa codes. Information and Control, 27:87–99, 1975.

6. Gao, Shuhong (January 2002), New Algorithm For Decoding Reed-Solomon Codes (PDF), Clemson

7. Immink, K. A. S. (1994), "Reed–Solomon Codes and the Compact Disc", in Wicker, Stephen B.; Bhargava, Vijay K. (eds.), Reed–Solomon Codes and Their Applications, IEEE Press, ISBN 978-0-7803-1025-4

8. J. Hagenauer, E. Offer, and L. Papke, Reed Solomon Codes and Their Applications. New York: IEEE Press, 1994, p. 433

9. Andrews, Kenneth S., et al. "The development of turbo and LDPC codes for deep-space applications." Proceedings of the IEEE 95.11 (2007): 2142-2156.

10. See Lin & Costello (1983, p. 171), for example.

11. "Analytical Expressions Used in bercoding and BERTool". Archived from the original on 2019-02-01. Retrieved 2019-02-01.

12. Pfender, Florian; Ziegler, Günter M. (September 2004), "Kissing Numbers, Sphere Packings, and Some Unexpected Proofs" (PDF), Notices of the American Mathematical Society, 51 (8): 873–883, archived (PDF) from the original on 2008-05-09, retrieved 2009-09-28. Explains the Delsarte-Goethals-Seidel theorem as used in the context of the error correcting code for compact disc.

13. D. Gorenstein and N. Zierler, "A class of cyclic linear error-correcting codes in p^m symbols", J. SIAM, vol. 9, pp. 207–214, June 1961

14. Error Correcting Codes by W. Wesley Peterson, 1961

15. Shu Lin and Daniel J. Costello Jr, "Error Control Coding", second edition, pp. 255–262, 1982, 2004

16. Guruswami, V.; Sudan, M. (September 1999), "Improved decoding of Reed–Solomon codes and algebraic geometry codes", IEEE Transactions on Information Theory, 45 (6): 1757–1767, CiteSeerX 10.1.1.115.292, doi:10.1109/18.782097

17. Koetter, Ralf; Vardy, Alexander (2003). "Algebraic soft-decision decoding of Reed–Solomon codes". IEEE Transactions on Information Theory. 49 (11): 2809–2825. CiteSeerX 10.1.1.13.2021. doi:10.1109/TIT.2003.819332.

18. Franke, Steven J.; Taylor, Joseph H. (2016). "Open Source Soft-Decision Decoder for the JT65 (63,12) Reed–Solomon Code" (PDF). QEX (May/June): 8–17. Archived (PDF) from the original on 2017-03-09. Retrieved 2017-06-07.

Further reading

- Gill, John (n.d.), EE387 Notes #7, Handout #28 (PDF), Stanford University, archived from the original (PDF) on June 30, 2014, retrieved April 21, 2010

- Hong, Jonathan; Vetterli, Martin (August 1995), «Simple Algorithms for BCH Decoding» (PDF), IEEE Transactions on Communications, 43 (8): 2324–2333, doi:10.1109/26.403765

- Lin, Shu; Costello, Jr., Daniel J. (1983), Error Control Coding: Fundamentals and Applications, New Jersey, NJ: Prentice-Hall, ISBN 978-0-13-283796-5

- Massey, J. L. (1969), «Shift-register synthesis and BCH decoding» (PDF), IEEE Transactions on Information Theory, IT-15 (1): 122–127, doi:10.1109/tit.1969.1054260

- Peterson, Wesley W. (1960), «Encoding and Error Correction Procedures for the Bose-Chaudhuri Codes», IRE Transactions on Information Theory, IT-6 (4): 459–470, doi:10.1109/TIT.1960.1057586

- Reed, Irving S.; Solomon, Gustave (1960), «Polynomial Codes over Certain Finite Fields», Journal of the Society for Industrial and Applied Mathematics, 8 (2): 300–304, doi:10.1137/0108018

- Welch, L. R. (1997), The Original View of Reed–Solomon Codes (PDF), Lecture Notes

- Berlekamp, Elwyn R. (1967), Nonbinary BCH decoding, International Symposium on Information Theory, San Remo, Italy

- Berlekamp, Elwyn R. (1984) [1968], Algebraic Coding Theory (Revised ed.), Laguna Hills, CA: Aegean Park Press, ISBN 978-0-89412-063-3

- Cipra, Barry Arthur (1993), «The Ubiquitous Reed–Solomon Codes», SIAM News, 26 (1)

- Forney, Jr., G. (October 1965), «On Decoding BCH Codes», IEEE Transactions on Information Theory, 11 (4): 549–557, doi:10.1109/TIT.1965.1053825

- Koetter, Ralf (2005), Reed–Solomon Codes, MIT Lecture Notes 6.451 (Video), archived from the original on 2013-03-13

- MacWilliams, F. J.; Sloane, N. J. A. (1977), The Theory of Error-Correcting Codes, New York, NY: North-Holland Publishing Company

- Reed, Irving S.; Chen, Xuemin (1999), Error-Control Coding for Data Networks, Boston, MA: Kluwer Academic Publishers

External links

Information and tutorials

- Introduction to Reed–Solomon codes: principles, architecture and implementation (CMU)

- A Tutorial on Reed–Solomon Coding for Fault-Tolerance in RAID-like Systems

- Algebraic soft-decoding of Reed–Solomon codes

- Wikiversity:Reed–Solomon codes for coders

- BBC R&D White Paper WHP031

- Geisel, William A. (August 1990), Tutorial on Reed–Solomon Error Correction Coding, Technical Memorandum, NASA, TM-102162

- Concatenated codes by Dr. Dave Forney (scholarpedia.org).

- Reid, Jeff A. (April 1995), CRC and Reed Solomon ECC (PDF)

Implementations

- FEC library in C by Phil Karn (aka KA9Q) includes Reed–Solomon codec, both arbitrary and optimized (255,223) version

- Schifra Open Source C++ Reed–Solomon Codec

- Henry Minsky’s RSCode library, Reed–Solomon encoder/decoder

- Open Source C++ Reed–Solomon Soft Decoding library

- Matlab implementation of errors-and-erasures Reed–Solomon decoding

- Octave implementation in communications package

- Pure-Python implementation of a Reed–Solomon codec

4.2. Introduction to Reed–Solomon codes: principles, architecture, and implementation

Reed–Solomon codes were proposed in 1960 by Irving S. Reed and Gustave Solomon, who were staff members of MIT Lincoln Laboratory. The key to putting this technology to use was the invention of an efficient decoding algorithm by Elwyn Berlekamp (http://en.wikipedia.org/wiki/Berlekamp-Massey_algorithm), a professor at the University of California, Berkeley. Reed–Solomon codes (see also http://www.4i2i.com/reed_solomon_codes.htm) are block-based error-correcting codes and are used in a huge number of applications in digital telecommunications and storage. Reed–Solomon codes are used to correct errors in many systems:

- storage devices (including magnetic tape, CD, DVD, barcodes, etc.);

- wireless or mobile communications (including cellular telephones, microwave links, etc.);

- satellite communications;

- digital television / DVB (digital video broadcast);

- high-speed modems such as ADSL, xDSL, etc.

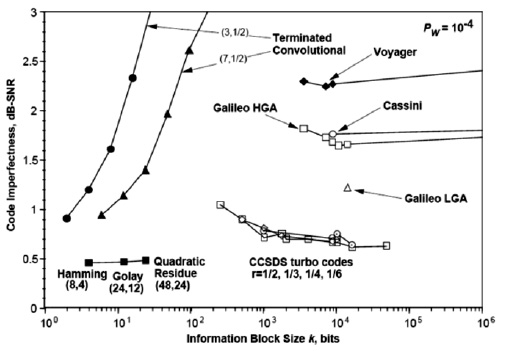

Figure 4.3 shows practical applications (deep-space projects) of error correction using various algorithms (Hamming, convolutional codes, Reed–Solomon, etc.). The data and the figure itself are taken from http://en.wikipedia.org/wiki/Reed-Solomon_error_correction.

Figure 4.3. Code imperfectness as a function of information block size for various missions and algorithms

A typical system is shown below (see http://www.4i2i.com/reed_solomon_codes.htm)

Figure 4.4. Reed–Solomon error-correction scheme

The Reed–Solomon encoder takes a block of digital data and adds extra "redundant" bits. Errors occur during transmission over communication channels or, for various reasons, during storage (for example, because of noise or interference, scratches on a CD, etc.). The Reed–Solomon decoder processes each block, attempts to correct the errors, and recovers the original data. The number and types of errors that can be corrected depend on the characteristics of the Reed–Solomon code.

Properties of Reed–Solomon codes

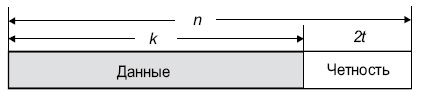

Reed–Solomon codes are a subset of BCH codes and are linear block codes. A Reed–Solomon code is specified as RS(n,k) with s-bit symbols.

This means that the encoder takes k data symbols of s bits each and adds parity symbols to form an n-symbol codeword. There are n − k parity symbols of s bits each. A Reed–Solomon decoder can correct up to t symbols that contain errors in a codeword, where 2t = n − k.

The diagram below shows a typical Reed–Solomon codeword:

Figure 4.5. Structure of an R-S codeword

Example. A popular Reed–Solomon code is RS(255, 223) with 8-bit symbols. Each codeword contains 255 bytes, of which 223 are data bytes and 32 are parity bytes. For this code

n = 255, k = 223, s = 8

2t = 32, t = 16

The decoder can correct any 16 symbol errors in the codeword: that is, errors can be corrected as long as no more than 16 bytes are corrupted.

For a symbol size s, the maximum codeword length (n) of a Reed–Solomon code is n = 2^s − 1.

For example, the maximum length of a code with 8-bit symbols (s = 8) is 255 bytes.

Reed–Solomon codes can in principle be shortened by setting some number of data symbols to zero at the encoder input (these symbols need not be transmitted). When the data is passed to the decoder, the zeros are reinserted.

Example. The (255, 223) code described above can be shortened to (200, 168). The encoder takes a block of 168 data bytes, adds 55 zero bytes, forms a (255, 223) codeword, and transmits only the 168 data bytes and 32 parity bytes.
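The arithmetic in these two examples is simple enough to capture in a small helper. The function below is a hypothetical illustration (its name and the dictionary keys are not from any library): given n, k, and the symbol size s, it reports the number of parity symbols, the correctable error count t, the maximum block length 2^s − 1, and how many zero symbols a shortened code implicitly pads.

```python
def rs_parameters(n, k, s):
    """Summarise an RS(n, k) code with s-bit symbols (illustrative helper)."""
    n_max = 2 ** s - 1                     # longest codeword for s-bit symbols
    if not 0 < k < n <= n_max:
        raise ValueError("need 0 < k < n <= 2**s - 1")
    parity = n - k                         # n - k = 2t parity symbols
    return {
        "parity_symbols": parity,
        "t": parity // 2,                  # correctable symbol errors
        "max_length": n_max,
        "zero_padding": n_max - n,         # implied zero symbols when shortened
    }

full = rs_parameters(255, 223, 8)
short = rs_parameters(200, 168, 8)
print(full)
print(short)
```

Both codes have the same 32 parity bytes and the same t = 16; the shortened code differs only in the 55 implied zero bytes.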

The amount of processing power required to encode and decode Reed–Solomon codes depends on the number of parity symbols. A large value of t means that more errors can be corrected, but it requires more processing power than a smaller value of t.

Symbol errors

A single symbol error occurs when one bit in a symbol is wrong or when all the bits in a symbol are wrong.

Example. RS(255,223) can correct up to 16 symbol errors. In the worst case, 16 bit errors occur, each in a different symbol (byte), so only 16 bit errors are corrected. In the best case, 16 completely wrong bytes are corrected, which fixes 16 x 8 = 128 bit errors.

Reed–Solomon codes are particularly well suited to correcting burst errors (where large groups of consecutive bits in the codeword are wrong).

Decoding

Algebraic Reed–Solomon decoding procedures can correct errors and erasures. An erasure is a case where the position of the wrong symbol is known. A decoder can correct up to t errors or up to 2t erasures. Erasure information can be supplied by the demodulator of a digital communication system, i.e. the demodulator flags received symbols that are likely to contain errors.

When a codeword is decoded, there are three possible outcomes.

- If 2s + r ≤ 2t (s errors, r erasures), the original transmitted codeword will always be recovered. Otherwise:

- the decoder detects that it cannot recover the original codeword, or

- the decoder mis-decodes and recovers an incorrect codeword without any indication of this.

The probability of each of these outcomes depends on the particular Reed–Solomon code used and on the number and distribution of errors.
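As a quick check of the first outcome, the condition can be written as a one-line predicate. This is a sketch with a made-up function name; it uses the bound 2·errors + erasures ≤ n − k, which is the form consistent with "up to t errors or up to 2t erasures" stated above.

```python
def within_guaranteed_capacity(errors, erasures, n, k):
    """True when an RS(n, k) decoder is guaranteed to recover the codeword."""
    return 2 * errors + erasures <= n - k

# RS(255, 223): n - k = 32
checks = [
    within_guaranteed_capacity(16, 0, 255, 223),    # 16 errors
    within_guaranteed_capacity(0, 32, 255, 223),    # 32 erasures
    within_guaranteed_capacity(10, 12, 255, 223),   # mixed, exactly at the bound
    within_guaranteed_capacity(10, 13, 255, 223),   # one erasure too many
]
print(checks)
```

Beyond the bound, the decoder either reports a failure or silently mis-decodes, as listed above.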

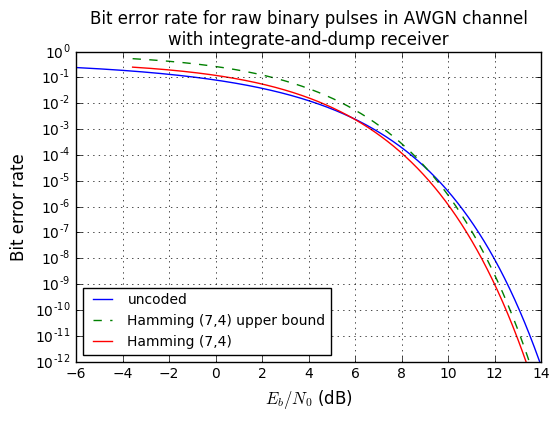

Last time, we talked about error correction and detection, covering some basics like Hamming distance, CRCs, and Hamming codes. If you are new to this topic, I would strongly suggest going back to read that article before this one.

This time we are going to cover Reed-Solomon codes. (I had meant to cover this topic in Part XV, but the article was getting to be too long, so I’ve split it roughly in half.) These are one of the workhorses of error-correction, and they are used in overwhelming abundance to correct short bursts of errors. We’ll see that encoding a message using Reed-Solomon is fairly simple, so easy that we can even do it in C on an embedded system.

We’re also going to talk about some performance metrics of error-correcting codes.

Reed-Solomon Codes

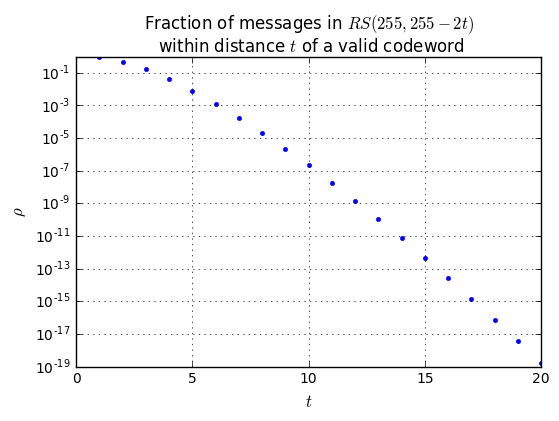

Reed-Solomon codes, like many other error-correcting codes, are parameterized. A Reed-Solomon \( (n,k) \) code, where \( n=2^m-1 \) and \( k=n-2t \), can correct up to \( t \) errors. They are not binary codes; each symbol must have \( 2^m \) possible values, and is typically an element of \( GF(2^m) \).

The Reed-Solomon codes with \( n=255 \) are very common, since then each symbol is an element of \( GF(2^8) \) and can be represented as a single byte. A \( (255, 247) \) RS code would be able to correct 4 errors, and a \( (255,223) \) RS code would be able to correct 16 errors, with each error being an arbitrary error in one transmitted symbol.

You may have noticed a pattern in some of the coding techniques discussed in the previous article:

- If we compute the parity of a string of bits, and concatenate a parity bit, the resulting message has a parity of zero modulo 2

- If we compute the checksum of a string of bytes, and concatenate a negated checksum, the resulting message has a checksum of zero modulo 256

- If we compute the CRC of a message (with no initial/final bit flips), and concatenate the CRC, the resulting message has a remainder of zero modulo the CRC polynomial

- If we compute parity bits of a Hamming code, and concatenate them to the data bits, the resulting message has a remainder of zero modulo the Hamming code polynomial

In all cases, we have this magic technique of taking data bits, using a division algorithm to compute the remainder, and concatenating the remainder, such that the resulting message has zero remainder. If we receive a message which does have a nonzero remainder, we can detect this, and in some cases use the nonzero remainder to determine where the errors occurred and correct them.

Parity and checksum are poor methods because they are unable to detect transpositions. It’s easy to cancel out a parity flip; it’s much harder to cancel out a CRC error by accidentally flipping another bit elsewhere.

What if there was an encoding algorithm that was somewhat similar to a checksum, in that it operated on a byte-by-byte basis, but had robustness to swapped bytes? Reed-Solomon is such an algorithm. And it amounts to doing the same thing as all the other linear coding techniques we’ve talked about to encode a message:

- take data bits

- use a polynomial division algorithm to compute a remainder

- create a codeword by concatenating the data bits and the remainder bits

- a valid codeword now has a zero remainder

In this article, I am going to describe Reed-Solomon encoding, but not the typical decoding methods, which are somewhat complicated, and include fancy-sounding steps like the Berlekamp-Massey algorithm (yes, this is the same one we saw in part VI, only it’s done in \( GF(2^m) \) instead of the binary \( GF(2) \) version), the Chien search, and the Forney algorithm. If you want to learn more, I would recommend reading the BBC white paper WHP031, in the list of references, which does go into just the right level of detail, and has lots of examples.

In addition to being more complicated to understand, decoding is also more computationally intensive. Reed-Solomon encoders are straightforward to implement in hardware — which we’ll see a bit later — and are simple to implement in software in an embedded system. Decoders are trickier, and you’d probably want something more powerful than an 8-bit microcontroller to execute them, or they’ll be very slow. The first use of Reed-Solomon in space communications was on the Voyager missions, where an encoder was included without certainty that the received signals could be decoded back on earth. From a NASA Tutorial on Reed-Solomon Error Correction Coding by William A. Geisel:

The Reed-Solomon (RS) codes have been finding widespread applications ever since the 1977 Voyager’s deep space communications system. At the time of Voyager’s launch, efficient encoders existed, but accurate decoding methods were not even available! The Jet Propulsion Laboratory (JPL) scientists and engineers gambled that by the time Voyager II would reach Uranus in 1986, decoding algorithms and equipment would be both available and perfected. They were correct! Voyager’s communications system was able to obtain a data rate of 21,600 bits per second from 2 billion miles away with a received signal energy 100 billion times weaker than a common wrist watch battery!

The statement “accurate decoding methods were not even available” in 1977 is a bit suspicious, although this general idea of faith-that-a-decoder-could-be-constructed is in several other accounts:

Lahmeyer, the sole engineer on the project, and his team of technicians and builders worked tirelessly to get the decoder working by the time Voyager reached Uranus. Otherwise, they would have had to slow down the rate of data received to decrease noise interference. “Each chip had to be hand wired,” Lahmeyer says. “Thankfully, we got it working on time.”

(Jefferson City Magazine, June 2015)

In order to make the extended mission to Uranus and Neptune possible, some new and never-before-tried technologies had to be designed into the spacecraft and novel new techniques for using the hardware to its fullest had to be validated for use. One notable requirement was the need to improve error correction coding of the data stream over the very inefficient method which had been used for all previous missions (and for the Voyagers at Jupiter and Saturn), because the much lower maximum data rates from Uranus and Neptune (due to distance) would have greatly compromised the number of pictures and other data that could have been obtained. A then-new and highly efficient data coding method called Reed-Solomon (RS) coding was planned for Voyager at Uranus, and the coding device was integrated into the spacecraft design. The problem was that although RS coding is easy to do and the encoding device was relatively simple to make, decoding the data after receipt on the ground is so mathematically intensive that no computer on Earth was able to handle the decoding task at the time Voyager was designed – or even launched. The increase of data throughput using the RS coder was about a factor of three over the old, standard Golay coding, so including the capability was considered highly worthwhile. It was assumed that a decoder could be developed by the time Voyager needed it at Uranus. Such was the case, and today RS coding is routinely used in CD players and many other common household electronic devices.

(Sirius Astronomer, August 2017)

Geisel probably meant “efficient” rather than “accurate”; decoding algorithms were well-known before a NASA report in 1978 which states

These codes were first described by I. S. Reed and G. Solomon in 1960. A systematic decoding algorithm was not discovered until 1968 by E. Berlekamp. Because of their complexity, the RS codes are not widely used except when no other codes are suitable.

Details of Reed-Solomon Encoding

At any rate, with Reed-Solomon, we have a polynomial, but instead of the coefficients being 0 or 1 like our usual polynomials in \( GF(2)[x] \), this time the coefficients are elements of \( GF(2^m) \). A byte-oriented Reed-Solomon encoding uses \( GF(2^8) \). The polynomial used in Reed-Solomon is usually written as \( g(x) \), the generator polynomial, with

$$g(x) = (x+\lambda^b)(x+\lambda^{b+1})(x+\lambda^{b+2})\ldots (x+\lambda^{b+n-k-1})$$

where \( \lambda \) is a primitive element of \( GF(2^m) \) and \( b \) is some constant, usually 0 or 1 or \( -t \). This means the polynomial’s roots are successive powers of \( \lambda \), and that makes the math work out just fine.

All we do is treat the message as a polynomial \( M(x) \), calculate a remainder \( r(x) = x^{n-k} M(x) \bmod g(x) \), and then concatenate it to the message; the resulting codeword \( C(x) = x^{n-k}M(x) + r(x) \) is guaranteed to be a multiple of the generator polynomial \( g(x) \). At the receiving end, we calculate \( C(x) \bmod g(x) \) and if it’s zero, everything’s great; otherwise we have to do some gory math, but this allows us to find and correct errors in up to \( t \) symbols.

That probably sounds rather abstract, so let’s work through an example.

Reed-Solomon in Python

We’re going to encode some messages using Reed-Solomon in two ways, first using the unireedsolomon library, and then from scratch using libgf2. If you’re going to use a library, you’ll need to be really careful; the library should be universal, meaning you can encode or decode any particular flavor of Reed-Solomon. Like the CRC, there are many possible variants, and you need to be able to specify the polynomial of the Galois field used for each symbol, along with the generator element \( \lambda \) and the initial power \( b \). If anyone tells you they’re using the Reed-Solomon \( (255,223) \) code, they’re smoking something funny, because there’s more than one, and you need to know which particular variant in order to communicate properly.

There are two examples in the BBC white paper:

- one using \( RS(15,11) \) for 4-bit symbols, with symbol field \( GF(2)[y]/(y^4 + y + 1) \); for the generator, \( \lambda = y = {\tt 2} \) and \( b=0 \), forming the generator polynomial

$$\begin{align}
g(x) &= (x+1)(x+\lambda)(x+\lambda^2)(x+\lambda^3) \cr
&= x^4 + \lambda^{12}x^3 + \lambda^{4}x^2 + x + \lambda^6 \cr
&= x^4 + 15x^3 + 3x^2 + x + 12 \cr
&= x^4 + {\tt F} x^3 + {\tt 3}x^2 + {\tt 1}x + {\tt C}
\end{align}$$

- another using the DVB-T specification that uses \( RS(255,239) \) for 8-bit symbols, with symbol field \( GF(2)[y]/(y^8 + y^4 + y^3 + y^2 + 1) \) (hex representation 11d) and for the generator, \( \lambda = y = {\tt 2} \) and \( b=0 \), forming the generator polynomial

$$\begin{align}
g(x) &= (x+1)(x+\lambda)(x+\lambda^2)(x+\lambda^3)\ldots(x+\lambda^{15}) \cr
&= x^{16} + 59x^{15} + 13x^{14} + 104x^{13} + 189x^{12} + 68x^{11} + 209x^{10} + 30x^9 + 8x^8 \cr
&\qquad + 163x^7 + 65x^6 + 41x^5 + 229x^4 + 98x^3 + 50x^2 + 36x + 59 \cr
&= x^{16} + {\tt 3B} x^{15} + {\tt 0D}x^{14} + {\tt 68}x^{13} + {\tt BD}x^{12} + {\tt 44}x^{11} + {\tt D1}x^{10} + {\tt 1E}x^9 + {\tt 08}x^8 \cr
&\qquad + {\tt A3}x^7 + {\tt 41}x^6 + {\tt 29}x^5 + {\tt E5}x^4 + {\tt 62}x^3 + {\tt 32}x^2 + {\tt 24}x + {\tt 3B}
\end{align}$$

In unireedsolomon, the RSCoder class is used, with these parameters:

- c_exp: this is the number of bits per symbol, 4 and 8 for our two examples

- prim: this is the symbol field polynomial, 0x13 and 0x11d for our two examples

- generator: this is the generator element in the symbol field, 0x2 for both examples

- fcr: this is the “first consecutive root” \( b \), with \( b=0 \) for both examples

You can verify the generator polynomial by encoding the message \( M(x) = 1 \), all zero symbols with a trailing one symbol at the end; this will create a codeword \( C(x) = x^{n-k} + r(x) = g(x) \).

Let’s do it!

    import unireedsolomon as rs

    def codeword_symbols(msg):
        return [ord(c) for c in msg]

    def find_generator(rscoder):
        """ Returns the generator polynomial of an RSCoder class """
        msg = [0]*(rscoder.k - 1) + [1]
        degree = rscoder.n - rscoder.k
        codeword = rscoder.encode(msg)
        # look at the trailing elements of the codeword
        return codeword_symbols(codeword[(-degree-1):])

    rs4 = rs.RSCoder(15,11,generator=2,prim=0x13,fcr=0,c_exp=4)
    print "g4(x) = %s" % find_generator(rs4)

    rs8 = rs.RSCoder(255,239,generator=2,prim=0x11d,fcr=0,c_exp=8)
    print "g8(x) = %s" % find_generator(rs8)

Output:

    g4(x) = [1, 15, 3, 1, 12]
    g8(x) = [1, 59, 13, 104, 189, 68, 209, 30, 8, 163, 65, 41, 229, 98, 50, 36, 59]

Easy as pie!

Encoding messages is just a matter of using either a string (byte array) or a list of message symbols, and we get a string back by default. (You can use encode(msg, return_string=False) but then you get a bunch of GF2Int objects back rather than integer coefficients.)

(Warning: the unireedsolomon package uses a global singleton to manage Galois field arithmetic, so if you want to have multiple RSCoder objects out there, they’ll only work if they share the same field generator polynomial. This is why each time I want to switch back between the 4-bit and the 8-bit RSCoder, I have to create a new object, so that it reconfigures the way Galois field arithmetic works.)

The BBC white paper has a sample encoding only for the 4-bit \( RS(15,11) \) example, using \( [1,2,3,4,5,6,7,8,9,10,11] \) which produces remainder \( [3,3,12,12] \):

    rs4 = rs.RSCoder(15,11,generator=2,prim=0x13,fcr=0,c_exp=4)
    encmsg15 = codeword_symbols(rs4.encode([1,2,3,4,5,6,7,8,9,10,11]))
    print "RS(15,11) encoded example message from BBC WHP031:\n", encmsg15

Output:

    RS(15,11) encoded example message from BBC WHP031:
    [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 3, 3, 12, 12]
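The same numbers can be reproduced without a library, which makes the "remainder of a polynomial division" description concrete. The sketch below is a minimal pure-Python version, not how unireedsolomon or libgf2 do it internally: the helper names `gf_mul`, `generator_poly`, and `rs_remainder` are made up here, the field is hard-coded to \( GF(2^4) \) with polynomial 0x13, and only a non-negative first root power \( b \) is handled.

```python
PRIM = 0x13      # symbol field polynomial y^4 + y + 1
M_BITS = 4       # bits per symbol

def gf_mul(a, b):
    """Multiply in GF(2^4): shift-and-add with reduction by PRIM."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & (1 << M_BITS):
            a ^= PRIM
        b >>= 1
    return result

def generator_poly(nroots, lam=2, b=0):
    """g(x) = prod_{i=0}^{nroots-1} (x + lam^(b+i)), highest-order coefficient first."""
    root = 1
    for _ in range(b):
        root = gf_mul(root, lam)
    g = [1]
    for _ in range(nroots):
        # g(x) * (x + root) = x*g(x) + root*g(x)
        g = [hi ^ gf_mul(lo, root) for hi, lo in zip(g + [0], [0] + g)]
        root = gf_mul(root, lam)
    return g

def rs_remainder(msg, g):
    """Remainder of x^(n-k) * M(x) divided by g(x), computed like an LFSR."""
    rem = [0] * (len(g) - 1)
    for sym in msg:
        feedback = sym ^ rem[0]
        rem = rem[1:] + [0]
        for i, coef in enumerate(g[1:]):
            rem[i] ^= gf_mul(feedback, coef)
    return rem

g4 = generator_poly(4)
msg = list(range(1, 12))                 # [1, 2, ..., 11]
remainder = rs_remainder(msg, g4)
codeword = msg + remainder
print(g4, remainder)
```

The inner loop of `rs_remainder` is the software equivalent of the shift-register encoder: one feedback symbol per message symbol, multiplied by each generator coefficient and XORed into the register.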

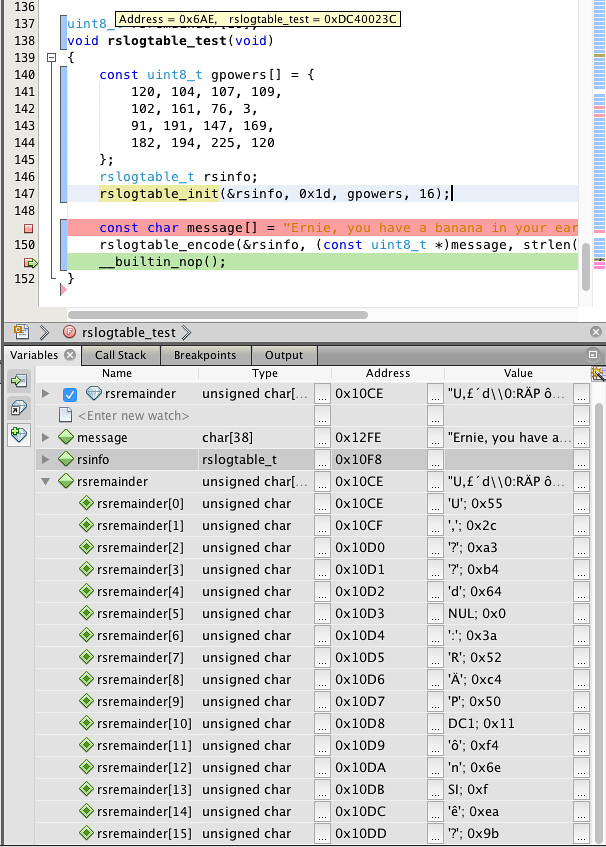

The real fun begins when we can start encoding actual messages, like Ernie, you have a banana in your ear! Since this has length 37, let’s use a shortened version of the code, \( RS(53,37) \).

Mama’s Little Baby Loves Shortnin’, Shortnin’, Mama’s Little Baby Loves Shortnin’ Bread

Oh, we haven’t talked about shortened codes yet: this is where we just omit the initial symbols in a codeword, and both transmitter and receiver agree to treat them as implied zeros. An eight-bit \( RS(53,37) \) transmitter encodes as codeword polynomials of degree 52, and the receiver just zero-extends them to degree 254 (with 202 implied zero bytes added at the beginning) for the purposes of decoding just like any other \( RS(255,239) \) code.

    rs8s = rs.RSCoder(53,37,generator=2,prim=0x11d,fcr=0,c_exp=8)
    msg = 'Ernie, you have a banana in your ear!'
    codeword = rs8s.encode(msg)
    print ('codeword=%r' % codeword)
    remainder = codeword[-16:]
    print ('remainder(hex) = %s' % remainder.encode('hex'))

Output:

    codeword='Ernie, you have a banana in your ear!U,\xa3\xb4d\x00:R\xc4P\x11\xf4n\x0f\xea\x9b'
    remainder(hex) = 552ca3b464003a52c45011f46e0fea9b

That binary stuff after the message is the remainder. Now we can decode all sorts of messages with errors:

    for init_msg in [
        'Ernie, you have a banana in your ear!',
        'Billy! You have a banana in your ear!',
        'Arnie! You have a potato in your ear!',
        'Eddie? You hate a banana in your car?',
        '01234567ou have a banana in your ear!',
        '012345678u have a banana in your ear!',
    ]:
        decoded_msg, decoded_remainder = rs8s.decode(init_msg+remainder)
        print "%s -> %s" % (init_msg, decoded_msg)

    Ernie, you have a banana in your ear! -> Ernie, you have a banana in your ear!
    Billy! You have a banana in your ear! -> Ernie, you have a banana in your ear!
    Arnie! You have a potato in your ear! -> Ernie, you have a banana in your ear!
    Eddie? You hate a banana in your car? -> Ernie, you have a banana in your ear!
    01234567ou have a banana in your ear! -> Ernie, you have a banana in your ear!

    ---------------------------------------------------------------------------
    RSCodecError                              Traceback (most recent call last)
    in ()
          7     '012345678u have a banana in your ear!',
          8 ]:
    ----> 9     decoded_msg, decoded_remainder = rs8s.decode(init_msg+remainder)
         10     print "%s -> %s" % (init_msg, decoded_msg)

    /Users/jmsachs/anaconda/lib/python2.7/site-packages/unireedsolomon/rs.pyc in decode(self, r, nostrip, k, erasures_pos, only_erasures, return_string)
        321         # being the rightmost byte
        322         # X is a corresponding array of GF(2^8) values where X_i = alpha^(j_i)
    --> 323         X, j = self._chien_search(sigma)
        324
        325         # Sanity check: Cannot guarantee correct decoding of more than n-k errata (Singleton Bound, n-k being the minimum distance), and we cannot even check if it's correct (the syndrome will always be all 0 if we try to decode above the bound), thus it's better to just return the input as-is.

    /Users/jmsachs/anaconda/lib/python2.7/site-packages/unireedsolomon/rs.pyc in _chien_search(self, sigma)
        822         errs_nb = len(sigma) - 1 # compute the exact number of errors/errata that this error locator should find
        823         if len(j) != errs_nb:
    --> 824             raise RSCodecError("Too many (or few) errors found by Chien Search for the errata locator polynomial!")
        825
        826         return X, j

    RSCodecError: Too many (or few) errors found by Chien Search for the errata locator polynomial!

This last message failed to decode because there were 9 errors and \( RS(53,37) \) or \( RS(255,239) \) can only correct 8 errors.

But if there are few enough errors, Reed-Solomon will fix them all.

Why does it work?

The reason why Reed-Solomon encoding and decoding works is a bit hard to grasp. There are quite a few good explanations of how encoding and decoding works, my favorite being the BBC whitepaper, but none of them really dwells upon why it works. We can handwave about redundancy and the mysteriousness of finite fields spreading that redundancy evenly throughout the remainder bits, or a spectral interpretation of the parity-check matrix where the roots of the generator polynomial can be considered as “frequencies”, but I don’t have a good explanation. Here’s the best I can do:

Let’s say we have some number of errors where the locations are known. This is called an erasure, in contrast to a true error, where the location is not known ahead of time. Think of a bunch of symbols on a piece of paper, and some idiot erases one of them or spills vegetable soup, and you’ve lost one of the symbols, but you know where it is. The message received could be 45 72 6e 69 65 2c 20 ?? 6f 75 where the ?? represents an erasure. Of course, binary computers don’t have a way to compute arithmetic on ??, so what we would do instead is just pick an arbitrary pattern like 00 or 42 to replace the erasure, and mark its position (in this case, byte 7) for later processing.

Anyway, in a Reed-Solomon code, the error values are linearly independent. This is really the key here. We can have up to \( 2t \) erasures (as opposed to \( t \) true errors) and still maintain this linear independence property. Linear independence means that for a system of equations, there is only one solution. An error in bytes 3 and 28, for example, can’t be mistaken for an error in bytes 9 and 14. Once you exceed the maximum number of errors or erasures, the linear independence property falls apart and there are many possible ways to correct received errors, so we can’t be sure which is the right one.

To get a little more technical: we have a transmitted coded message \( C_t(x) = x^{n-k}M(x) + r(x) \) where \( M(x) \) is the unencoded message and \( r(x) \) is the remainder of \( x^{n-k}M(x) \) modulo the generator polynomial \( g(x) \), and a received message \( C_r(x) = C_t(x) + e(x) \) where the error polynomial \( e(x) \) represents the difference between transmitted and received messages. The receiver can compute \( e(x) \bmod g(x) \) by calculating the remainder \( C_r(x) \bmod g(x) \). If there are no errors, then \( e(x) \bmod g(x) = 0 \). With erasures, we know the locations of the errors. Let’s say we had \( n=255 \), \( 2t=16 \), and we had three errors at known locations \( i_1, i_2, \) and \( i_3, \) in which case \( e(x) = e_{i_1}x^{i_1} + e_{i_2}x^{i_2} + e_{i_3}x^{i_3} \). These terms \( e_{i_1}x^{i_1}, e_{i_2}x^{i_2}, \) etc. are the error values that form a linearly independent set. For example, if the original message had 42 in byte 13, but we received 59 instead, then the error coefficient in that location would be 42 + 59 = 1B (hexadecimal values, where addition in \( GF(256) \) is bitwise XOR), so \( i_1 = 13 \) and the error value would be \( {\tt 1B}x^{13} \).
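To make that bookkeeping concrete, here is a minimal standalone sketch. It is written for Python 3 and does not use libgf2, and it uses the small RS(15,11) code over \( GF(16) \) from the BBC whitepaper instead of RS(255,239) so the numbers stay readable. The helper names `gf_mul` and `poly_mod` are made up for this illustration, and the error position and value are arbitrary choices. It checks that a valid codeword leaves a zero remainder, and that the remainder of a corrupted codeword depends only on the error polynomial.

```python
def gf_mul(a, b, poly=0x13, degree=4):
    """Multiply two elements of GF(2^degree); poly is the field polynomial."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        b >>= 1
        a <<= 1
        if a >> degree:              # reduce when a overflows the field
            a ^= poly
    return result

def poly_mod(dividend, divisor):
    """Remainder of dividend / divisor (ascending coefficients, monic divisor)."""
    rem = list(dividend)
    d = len(divisor) - 1
    for i in range(len(rem) - 1, d - 1, -1):
        coef = rem[i]
        if coef:
            for j, g_j in enumerate(divisor):
                rem[i - d + j] ^= gf_mul(coef, g_j)
    return rem[:d]

# The error value from the text: addition in GF(2^m) is bitwise XOR.
print(hex(0x42 ^ 0x59))

g = [12, 1, 3, 15, 1]                            # RS(15,11) generator, ascending
sent = [12, 12, 3, 3] + list(range(11, 0, -1))   # remainder, then message 1..11

# Corrupt the coefficient of x^13 (an arbitrary choice of position and value).
error = [0] * 13 + [9]
received = list(sent)
received[13] ^= 9

print("remainder of valid codeword:", poly_mod(sent, g))
print("remainder of received word: ", poly_mod(received, g))
print("remainder of e(x) alone:    ", poly_mod(error, g))
```

The last two lines should print the same list, which is the point: the receiver never sees \( e(x) \) directly, but it can always compute \( e(x) \bmod g(x) \).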

What the algebra of finite fields assures us, is that if we have a suitable choice of generator polynomial \( g(x) \) of degree \( 2t \) (and the one used for Reed-Solomon, \( g(x) = \prod\limits_{i=0}^{2t-1}(x-\lambda^{b+i}) \), is suitable) then any selection of up to \( 2t \) distinct powers of \( x \) from \( x^0 \) to \( x^{n-1} \) are linearly independent. If \( 2t = 16 \), for example, then we cannot write \( x^{15} = ax^{13} + bx^{8} + c \) for some choices of \( a,b,c \); otherwise it would mean that \( x^{15}, x^{13}, x^8, \) and \( 1 \) do not form a linearly independent set. This means that if we have up to \( 2t \) erasures at known locations, then we can figure out the coefficients at each location, and find the unknown values.

In our 3-erasures example above, if \( 2t=16, i_1 = 13, i_2 = 8, i_3 = 0 \), then we have a really easy case. All the errors are in positions below the degree of the remainder, which means all the errors are in the remainder, and the message symbols arrived intact. In this case, the error polynomial looks like \( e(x) = e_{13}x^{13} + e_8x^8 + e_0 \) for some error coefficients \( e_{13}, e_8, e_0 \) in \( GF(256) \). The received remainder \( e(x) \bmod g(x) = e(x) \) and we can just find the error coefficients directly.

On the other hand, let’s say we knew the errors were in positions \( i_1 = 200, i_2 = 180, i_3 = 105 \). In this case we could calculate \( x^{200} \bmod g(x) \), \( x^{180} \bmod g(x) \), and \( x^{105} \bmod g(x) \). These each have a bunch of coefficients from degree 0 to 15, but we know that the received remainder \( e(x) \bmod g(x) \) must be a linear combination of the three polynomials, \( (e_{200}x^{200} + e_{180}x^{180} + e_{105}x^{105}) \bmod g(x) \) and we could solve for \( e_{200} \), \( e_{180} \), and \( e_{105} \) using linear algebra.
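Here is a rough sketch of that linear-algebra step, written as standalone Python 3 (no libgf2) over \( GF(256) \) with the field polynomial 0x11d and a degree-16 generator whose roots are \( 2^0 \) through \( 2^{15} \). The three error values are invented for the demonstration, the helper names are hypothetical, and the Gauss-Jordan elimination is just one straightforward way to solve the system, not how a production decoder does it.

```python
def gf_mul(a, b, poly=0x11d):
    """Multiply two elements of GF(256) defined by the polynomial 0x11d."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        b >>= 1
        a <<= 1
        if a & 0x100:
            a ^= poly
    return result

def gf_inv(a):
    """Inverse of a nonzero element: a^254, since a^255 = 1."""
    result = 1
    for _ in range(254):
        result = gf_mul(result, a)
    return result

def poly_mod(dividend, divisor):
    """Remainder of dividend / divisor (ascending coefficients, monic divisor)."""
    rem = list(dividend)
    d = len(divisor) - 1
    for i in range(len(rem) - 1, d - 1, -1):
        coef = rem[i]
        if coef:
            for j, g_j in enumerate(divisor):
                rem[i - d + j] ^= gf_mul(coef, g_j)
    return rem[:d]

# Generator polynomial: product of (x + 2^i) for i = 0..15, ascending order.
g, root = [1], 1
for _ in range(16):
    shifted = [0] + g                              # x * g(x)
    scaled = [gf_mul(root, c) for c in g] + [0]    # root * g(x)
    g = [s ^ t for s, t in zip(shifted, scaled)]
    root = gf_mul(root, 2)

positions = [200, 180, 105]
true_values = [0x1B, 0x07, 0xC4]                   # invented error values

# x^i mod g(x) for each known position: 16 coefficients apiece.
basis = [poly_mod([0] * i + [1], g) for i in positions]

# What the receiver can compute: e(x) mod g(x).
observed = [0] * 16
for value, column in zip(true_values, basis):
    for k in range(16):
        observed[k] ^= gf_mul(value, column[k])

# Solve the 16-equation, 3-unknown system by Gauss-Jordan elimination.
rows = [[column[k] for column in basis] + [observed[k]] for k in range(16)]
for col in range(3):
    pivot = next(r for r in range(col, 16) if rows[r][col])
    rows[col], rows[pivot] = rows[pivot], rows[col]
    inv = gf_inv(rows[col][col])
    rows[col] = [gf_mul(inv, v) for v in rows[col]]
    for r in range(16):
        if r != col and rows[r][col]:
            factor = rows[r][col]
            rows[r] = [v ^ gf_mul(factor, w) for v, w in zip(rows[r], rows[col])]

recovered = [rows[j][-1] for j in range(3)]
print([hex(v) for v in recovered])
```

If the linear independence property holds, the recovered values match the invented ones exactly, because no other combination of the three basis remainders can produce the same observed remainder.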

A similar situation applies if we have true errors where we don’t know their positions ahead of time. In this case we can correct only \( t \) errors, but the algebra works out so that we can write \( 2t \) equations with \( 2t \) unknowns (an error location and value for each of the \( t \) errors) and solve them.

All we need is for someone to prove this linear independence property. That part I won’t be able to explain intuitively. If you look at the typical decoder algorithms, they will show constructively how to find the unique solution, but not why it works. Reed-Solomon decoders make use of the so-called “key equation”; for Euclidean algorithm decoders (as opposed to Berlekamp-Massey decoders), the key equation is \( \Omega(x) = S(x)\Lambda(x) \bmod x^{2t} \) and you’re supposed to know what that means and how to use it… sigh. A bit of blind faith sometimes helps.

Which is why I won’t have anything more to say on decoding. Read the BBC whitepaper.

Reed-Solomon Encoding in libgf2

We don’t have to use the unireedsolomon library as a black box. We can do the encoding step in libgf2 using libgf2.lfsr.PolyRingOverField. This is a helper class that the undecimate() function uses. I talked a little bit about this in part XI, along with some of the details of this “two-variable” kind of mathematical thing, a polynomial ring over a finite field. Let’s forget about the abstract math for now, and just start computing. The PolyRingOverField just defines a particular arithmetic of polynomials, represented as lists of coefficients in ascending order (so \( x^2 + 2 \) is represented as [2, 0, 1]):

    from libgf2.gf2 import GF2QuotientRing, GF2Polynomial
    from libgf2.lfsr import PolyRingOverField
    import numpy as np

    f16 = GF2QuotientRing(0x13)
    f256 = GF2QuotientRing(0x11d)
    rsprof16 = PolyRingOverField(f16)
    rsprof256 = PolyRingOverField(f256)
    p1 = [1,4]
    p2 = [2,8]
    rsprof256.mul(p1, p2)

    def compute_generator_polynomial(rsprof, elementfield, k):
        # Multiply together n-k factors (x + el), where el steps through
        # successive powers of 2, starting at 2^0 = 1
        el = 1
        g = [1]
        n = (1 << elementfield.degree) - 1
        for _ in xrange(n-k):
            g = rsprof.mul(g, [el, 1])
            el = elementfield.mulraw(el, 2)
        return g

    g16 = compute_generator_polynomial(rsprof16, f16, 11)
    g256 = compute_generator_polynomial(rsprof256, f256, 239)
    print "generator polynomials (in ascending order of coefficients)"
    print "RS(15,11): g16=", g16
    print "RS(255,239): g256=", g256

generator polynomials (in ascending order of coefficients)

RS(15,11): g16= [12, 1, 3, 15, 1]

RS(255,239): g256= [59, 36, 50, 98, 229, 41, 65, 163, 8, 30, 209, 68, 189, 104, 13, 59, 1]
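For readers without libgf2 installed, the same generator polynomials can be reproduced with a few lines of plain Python 3. This is only a sketch: `gf_mul`, `poly_mul`, and `generator_polynomial` are names invented here, not part of libgf2, and the shift-and-XOR multiply is a simple stand-in for whatever `mulraw` does internally.

```python
def gf_mul(a, b, poly, degree):
    """Multiply two elements of GF(2^degree); poly is the field polynomial."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        b >>= 1
        a <<= 1
        if a >> degree:              # reduce when a overflows the field
            a ^= poly
    return result

def poly_mul(p, q, poly, degree):
    """Multiply two polynomials given as ascending coefficient lists."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] ^= gf_mul(a, b, poly, degree)
    return out

def generator_polynomial(num_check_symbols, poly, degree):
    """Product of (x + 2^i) for i = 0 .. num_check_symbols-1."""
    g = [1]
    root = 1
    for _ in range(num_check_symbols):
        g = poly_mul(g, [root, 1], poly, degree)
        root = gf_mul(root, 2, poly, degree)
    return g

g16 = generator_polynomial(4, 0x13, 4)        # RS(15,11)
g256 = generator_polynomial(16, 0x11d, 8)     # RS(255,239)
print("RS(15,11):   g16 =", g16)              # [12, 1, 3, 15, 1]
print("RS(255,239): g256 =", g256)
```

Because subtraction and addition are the same operation in a field of characteristic 2, each factor \( (x - \lambda^i) \) is written as the list `[root, 1]`.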

Now, to encode a message, we just have to convert binary data to polynomials and then take the remainder \( \bmod g(x) \):

    print "BBC WHP031 example for RS(15,11):"
    msg_bbc = [1,2,3,4,5,6,7,8,9,10,11]
    # multiply by x^4 (four leading zeros in ascending order), message reversed
    msgpoly = [0]*4 + [c for c in reversed(msg_bbc)]
    print "message=%r" % msg_bbc
    q,r = rsprof16.divmod(msgpoly, g16)
    print "remainder=", r

    print "\nReal-world example for RS(255,239):"
    print "message=%r" % msg
    msgpoly = [0]*16 + [ord(c) for c in reversed(msg)]
    q,r = rsprof256.divmod(msgpoly, g256)
    print "remainder=", r
    print "in hex: ", ''.join('%02x' % b for b in reversed(r))
    print "remainder calculated earlier:\n %s" % remainder.encode('hex')

BBC WHP031 example for RS(15,11):

message=[1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]

remainder= [12, 12, 3, 3]

Real-world example for RS(255,239):

message='Ernie, you have a banana in your ear!'

remainder= [155, 234, 15, 110, 244, 17, 80, 196, 82, 58, 0, 100, 180, 163, 44, 85]

in hex: 552ca3b464003a52c45011f46e0fea9b

remainder calculated earlier:

552ca3b464003a52c45011f46e0fea9b

See how easy that is?

Well… you probably didn’t, because PolyRingOverField hides the implementation of the abstract algebra. We can make the encoding step even easier to understand if we take the perspective of an LFSR.

Chocolate Strawberry Peach Vanilla Banana Pistachio Peppermint Lemon Orange Butterscotch

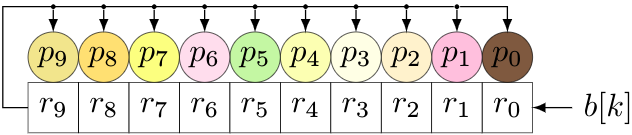

We can handle Reed-Solomon encoding in either software or hardware (and at least the remainder-calculating part of the decoder) using LFSRs. Now, we have to loosen up what we mean by an LFSR. So far, all the LFSRs have dealt directly with binary bits, and each shift cell either has a tap, or doesn’t, depending on the coefficients of the polynomial associated with the LFSR. For Reed-Solomon, the units of computation are elements of \( GF(256) \), represented as 8-bit bytes for a particular degree-8 primitive polynomial. So the shift cells, instead of containing bits, will contain bytes, and the taps will change from simple taps to multipliers over \( GF(256) \). (This gets a little hairy in hardware, but it’s not that bad.) A 10-byte LFSR over \( GF(256) \) implementing Reed-Solomon encoding would look something like this:

We would feed the bytes \( b[k] \) of the message in at the right; bytes \( r_0 \) through \( r_9 \) represent a remainder, and coefficients \( p_0 \) through \( p_9 \) represent the non-leading coefficients of the RS code’s generator polynomial. In this case, we would use a 10th-degree generator polynomial with a leading coefficient 1.

For each new byte, we do two things:

- Shift all cells left, shifting in one byte of the message

- Take the byte shifted out, and multiply it by the generator polynomial, then add (XOR) with the contents of the shift register.

We also have to remember to do this another \( n-k \) times (the length of the shift register), feeding in zero bytes, to cover the \( x^{n-k} \) factor. (Remember, we’re trying to calculate \( r(x) = x^{n-k}M(x) \bmod g(x) \).)

    f256 = GF2QuotientRing(0x11d)

    def rsencode_lfsr1(field, message, gtaps, remainder_only=False):
        """ encode a message in Reed-Solomon using LFSR
        field: symbol field
        message: string of bytes
        gtaps: generator polynomial coefficients, in ascending order
        remainder_only: whether to return the remainder only
        """
        glength = len(gtaps)
        remainder = np.zeros(glength, int)
        # pad with glength zero bytes to cover the x^(n-k) factor
        for char in message + '\0'*glength:
            # shift by one cell; the byte shifted out wraps around to index 0
            remainder = np.roll(remainder, 1)
            out = remainder[0]
            remainder[0] = ord(char)
            for k, c in enumerate(gtaps):
                remainder[k] ^= field.mulraw(c, out)
        remainder = ''.join(chr(b) for b in reversed(remainder))
        return remainder if remainder_only else message+remainder

    print rsencode_lfsr1(f256, msg, g[:-1], remainder_only=True).encode('hex')
    print "calculated earlier:"
    print remainder.encode('hex')

552ca3b464003a52c45011f46e0fea9b

calculated earlier:

552ca3b464003a52c45011f46e0fea9b

That worked, but we still have to handle multiplication in the symbol field, which is annoying and cumbersome for embedded processors. There are two table-driven methods that make this step easier, relegating the finite field stuff either to initialization steps only, or something that can be done at design time if you’re willing to generate a table in source code. (For example, using Python to generate the table coefficients in C.)
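As a rough idea of what that design-time step could look like, the sketch below uses Python 3 to print C source for a table holding every byte value multiplied by each non-leading generator coefficient (highest degree first). It is standalone rather than built on libgf2, and the C array name `rs_table`, the helper names, and the output layout are all assumptions made for this example.

```python
def gf_mul(a, b, poly=0x11d):
    """Multiply two elements of GF(256) defined by the polynomial 0x11d."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        b >>= 1
        a <<= 1
        if a & 0x100:
            a ^= poly
    return result

def generator_taps_descending(num_check_symbols):
    """Non-leading generator coefficients, highest degree first."""
    g, root = [1], 1
    for _ in range(num_check_symbols):
        shifted = [0] + g                              # x * g(x)
        scaled = [gf_mul(root, c) for c in g] + [0]    # root * g(x)
        g = [s ^ t for s, t in zip(shifted, scaled)]
        root = gf_mul(root, 2)
    return g[-2::-1]                                   # drop leading 1, reverse

def c_table_source(taps, name="rs_table"):
    """Return C source text for a 256-row multiplication table."""
    lines = ["#include <stdint.h>", "",
             "static const uint8_t %s[256][%d] = {" % (name, len(taps))]
    for byte in range(256):
        row = ", ".join("0x%02x" % gf_mul(byte, c) for c in taps)
        lines.append("    { %s }," % row)
    lines.append("};")
    return "\n".join(lines)

taps = generator_taps_descending(16)
source = c_table_source(taps)
print("\n".join(source.splitlines()[:5]))              # preview the first rows
```

With a table like this compiled in, the embedded code needs only array lookups and XOR at run time; all of the finite-field multiplication happens on the development machine.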

Also we have that call to np.roll(), which is too high-level for a language like C, so let’s look at things from this low-level, table-driven approach.

One table method is to take the generator polynomial and multiply it by each of the 256 possible coefficients, so that we get a table of 256 × \( n-k \) bytes.

    def rsencode_lfsr2_table(field, gtaps):
        """
        Create a table for Reed-Solomon encoding using a field
        This time the taps are in *descending* order
        """
        return [[field.mulraw(b,c) for c in gtaps] for b in xrange(256)]

    def rsencode_lfsr2(table, message, remainder_only=False):
        """ encode a message in Reed-Solomon using LFSR
        table: table[i][j] is the multiplication of
               generator coefficient of x^{n-k-1-j} by incoming byte i
        message: string of bytes
        remainder_only: whether to return the remainder only
        """
        glength = len(table[0])
        remainder = [0]*glength
        # pad with glength zero bytes to cover the x^(n-k) factor
        for char in message + '\0'*glength:
            out = remainder[0]
            table_row = table[out]
            # shift left by one cell while adding in the table row
            for k in xrange(glength-1):
                remainder[k] = remainder[k+1] ^ table_row[k]
            remainder[glength-1] = ord(char) ^ table_row[glength-1]
        remainder = ''.join(chr(b) for b in remainder)
        return remainder if remainder_only else message+remainder

    table = rsencode_lfsr2_table(f256, g[-2::-1])
    print "table:"
    for k, row in enumerate(table):
        print "%02x: %s" % (k, ' '.join('%02x' % c for c in row))