From Wikipedia, the free encyclopedia

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) [1] is a function that maps an event or values of one or more variables onto a real number intuitively representing some «cost» associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy.

In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century.[2] In the context of economics, for example, this is usually economic cost or regret. In classification, it is the penalty for an incorrect classification of an example. In actuarial science, it is used in an insurance context to model benefits paid over premiums, particularly since the works of Harald Cramér in the 1920s.[3] In optimal control, the loss is the penalty for failing to achieve a desired value. In financial risk management, the function is mapped to a monetary loss.

Examples

Regret

Leonard J. Savage argued that when using non-Bayesian methods such as minimax, the loss function should be based on the idea of regret, i.e., the loss associated with a decision should be the difference between the consequences of the best decision that could have been made had the underlying circumstances been known and the decision that was in fact taken before they were known.

Quadratic loss function

The use of a quadratic loss function is common, for example when using least squares techniques. It is often more mathematically tractable than other loss functions because of the properties of variances, as well as being symmetric: an error above the target causes the same loss as the same magnitude of error below the target. If the target is t, then a quadratic loss function is

[latex]\lambda(x) = C (t - x)^2[/latex]

for some constant C; the value of the constant makes no difference to a decision, and can be ignored by setting it equal to 1. This is also known as the squared error loss (SEL).[1]
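As an illustration, the following minimal Python sketch evaluates a quadratic loss for a few candidate outcomes. The function name `quadratic_loss` and the candidate values are assumptions made for this example only. It shows the two properties stated above: the loss is symmetric around the target, and the constant C does not change which candidate is chosen.

```python
def quadratic_loss(x, target, c=1.0):
    """Quadratic loss C * (t - x)^2 for outcome x and target t."""
    return c * (target - x) ** 2


target = 2.0
candidates = [0.5, 1.75, 2.5, 4.0]  # hypothetical outcomes to choose from

# Symmetry: errors of equal size above and below the target cost the same.
print(quadratic_loss(1.5, target), quadratic_loss(2.5, target))

# The constant C rescales every loss but does not change the best choice.
best_with_c1 = min(candidates, key=lambda x: quadratic_loss(x, target, c=1.0))
best_with_c7 = min(candidates, key=lambda x: quadratic_loss(x, target, c=7.0))
print(best_with_c1, best_with_c7)
```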

Many common statistics, including t-tests, regression models, design of experiments, and much else, use least squares methods applied using linear regression theory, which is based on the quadratic loss function.

The quadratic loss function is also used in linear-quadratic optimal control problems. In these problems, even in the absence of uncertainty, it may not be possible to achieve the desired values of all target variables. Often loss is expressed as a quadratic form in the deviations of the variables of interest from their desired values; this approach is tractable because it results in linear first-order conditions. In the context of stochastic control, the expected value of the quadratic form is used.

0-1 loss function

In statistics and decision theory, a frequently used loss function is the 0-1 loss function

[latex]L(\hat{y}, y) = I(\hat{y} \neq y),[/latex]

where I is the indicator function: if its argument evaluates to true, the output is 1; if it evaluates to false, the output is 0.
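A minimal Python sketch of the 0-1 loss is given below. The function names and the example labels are illustrative assumptions. Averaging the 0-1 loss over a set of predictions gives the misclassification rate.

```python
def zero_one_loss(y_pred, y_true):
    """Return 1 when the prediction differs from the true value, else 0."""
    return int(y_pred != y_true)


def mean_zero_one_loss(preds, truths):
    """Average 0-1 loss over paired predictions (the misclassification rate)."""
    if len(preds) != len(truths):
        raise ValueError("preds and truths must have the same length")
    return sum(zero_one_loss(p, t) for p, t in zip(preds, truths)) / len(preds)


preds = ["cat", "dog", "dog", "cat"]
truths = ["cat", "dog", "cat", "cat"]
print(mean_zero_one_loss(preds, truths))  # one mismatch out of four: 0.25
```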

Constructing loss and objective functions

In many applications, objective functions, including loss functions as a particular case, are determined by the problem formulation. In other situations, the decision maker’s preference must be elicited and represented by a scalar-valued function (also called a utility function) in a form suitable for optimization, a problem that Ragnar Frisch highlighted in his Nobel Prize lecture.[4]

The existing methods for constructing objective functions are collected in the proceedings of two dedicated conferences.[5][6]

In particular, Andranik Tangian showed that the most usable objective functions — quadratic and additive — are determined by a few indifference points. He used this property in the models for constructing these objective functions from either ordinal or cardinal data that were elicited through computer-assisted interviews with decision makers.[7][8]

Among other things, he constructed objective functions to optimally distribute budgets for 16 Westphalian universities[9] and the European subsidies for equalizing unemployment rates among 271 German regions.[10]

Expected loss

In some contexts, the value of the loss function itself is a random quantity because it depends on the outcome of a random variable X.

Statistics

Both frequentist and Bayesian statistical theory involve making a decision based on the expected value of the loss function; however, this quantity is defined differently under the two paradigms.

Frequentist expected loss

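We first define the expected loss in the frequentist context. It is obtained by taking the expected value with respect to the probability distribution, Pθ, of the observed data, X. This is also referred to as the risk function[11][12][13][14] of the decision rule δ and the parameter θ. Here the decision rule depends on the outcome of X. The risk function is given by:

[latex]R(\theta, \delta) = \operatorname{E}_\theta L(\theta, \delta(X)) = \int_X L(\theta, \delta(x)) \, \mathrm{d}P_\theta(x).[/latex]

Here, θ is a fixed but possibly unknown state of nature, X is a vector of observations stochastically drawn from a population, E_θ is the expectation over all population values of X, dP_θ is a probability measure over the event space of X (parametrized by θ), and the integral is evaluated over the entire support of X.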
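The frequentist risk can be approximated by simulation: draw many data sets from the distribution indexed by θ, apply the decision rule to each, and average the resulting losses. The sketch below is one way to do this under stated assumptions: the data are normal with unit variance, the decision rule is the sample mean, and the loss is squared error. All names and parameter values are illustrative. Under these assumptions the exact risk is σ²/n, which the estimate should approach.

```python
import random


def squared_error(theta, estimate):
    """Squared-error loss between the true parameter and an estimate."""
    return (theta - estimate) ** 2


def sample_mean(xs):
    """Decision rule: estimate theta by the average of the observations."""
    return sum(xs) / len(xs)


def monte_carlo_risk(theta, rule, loss, n_obs=10, n_trials=20000, seed=0):
    """Approximate R(theta, rule) by averaging the loss over simulated data sets."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_trials):
        # Assumption: observations are i.i.d. normal with mean theta, variance 1.
        xs = [rng.gauss(theta, 1.0) for _ in range(n_obs)]
        total += loss(theta, rule(xs))
    return total / n_trials


risk = monte_carlo_risk(theta=2.0, rule=sample_mean, loss=squared_error)
print(risk)  # should be close to sigma^2 / n_obs = 0.1
```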

Bayesian expected loss

In a Bayesian approach, the expectation is calculated using the posterior distribution π* of the parameter θ:

[latex]\rho(\pi^*, a) = \int_\Theta L(\theta, a) \, \mathrm{d}\pi^*(\theta).[/latex]

One then should choose the action a* which minimises the expected loss. Although this will result in choosing the same action as would be chosen using the frequentist risk, the emphasis of the Bayesian approach is that one is only interested in choosing the optimal action under the actual observed data, whereas choosing the actual frequentist optimal decision rule, which is a function of all possible observations, is a much more difficult problem.
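For a discrete posterior the integral reduces to a weighted sum, so the optimal action can be found by direct enumeration. The following sketch assumes a hypothetical three-point posterior and a small set of candidate actions; the names are chosen for this example only. With squared-error loss the selected action coincides with the posterior mean (here 1.1).

```python
def posterior_expected_loss(action, posterior, loss):
    """Weighted sum of the loss over a discrete posterior {theta: probability}."""
    return sum(prob * loss(theta, action) for theta, prob in posterior.items())


def bayes_action(actions, posterior, loss):
    """Return the candidate action with the smallest posterior expected loss."""
    return min(actions, key=lambda a: posterior_expected_loss(a, posterior, loss))


def squared_error(theta, action):
    return (theta - action) ** 2


# Hypothetical posterior over theta after observing the data.
posterior = {0.0: 0.2, 1.0: 0.5, 2.0: 0.3}
actions = [0.0, 0.5, 1.0, 1.1, 1.5, 2.0]

best = bayes_action(actions, posterior, squared_error)
print(best)  # the candidate equal to the posterior mean
```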

Examples in statistics

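- For a scalar parameter θ, a decision function whose output [latex]\hat{\theta}[/latex] is an estimate of θ, and a quadratic loss function (squared error loss)

[latex]L(\theta, \hat{\theta}) = (\theta - \hat{\theta})^2,[/latex]

the risk function becomes the mean squared error of the estimate,

[latex]R(\theta, \hat{\theta}) = \operatorname{E}_\theta\left[ (\theta - \hat{\theta})^2 \right].[/latex]

An estimator found by minimizing the mean squared error estimates the posterior distribution’s mean.

- In density estimation, the unknown parameter is the probability density itself. The loss function is typically chosen to be a norm in an appropriate function space. For example, for the L2 norm,

[latex]L(f, \hat{f}) = \| f - \hat{f} \|_2^2,[/latex]

the risk function becomes the mean integrated squared error

[latex]R(f, \hat{f}) = \operatorname{E}\left( \| f - \hat{f} \|^2 \right).[/latex]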

Economic choice under uncertainty

In economics, decision-making under uncertainty is often modelled using the von Neumann–Morgenstern utility function of the uncertain variable of interest, such as end-of-period wealth. Since the value of this variable is uncertain, so is the value of the utility function; it is the expected value of utility that is maximized.

Decision rules

A decision rule makes a choice using an optimality criterion. Some commonly used criteria are:

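- Minimax: Choose the decision rule with the lowest worst loss, that is, minimize the worst-case (maximum possible) loss:

[latex]\underset{\delta}{\operatorname{arg\,min}} \ \max_{\theta \in \Theta} \ R(\theta, \delta).[/latex]

- Invariance: Choose the decision rule which satisfies an invariance requirement.

- Choose the decision rule with the lowest average loss (i.e. minimize the expected value of the loss function):

[latex]\underset{\delta}{\operatorname{arg\,min}} \ \operatorname{E}_{\theta \in \Theta}[R(\theta, \delta)] = \underset{\delta}{\operatorname{arg\,min}} \ \int_{\theta \in \Theta} R(\theta, \delta) \, p(\theta) \, d\theta.[/latex]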
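The minimax and average-loss criteria can lead to different choices. The sketch below compares them on a small, hypothetical risk table with two states of nature and three decision rules; the table, the prior and the function names are assumptions made for illustration.

```python
# Hypothetical risk R(theta, rule) for three rules and two states of nature.
risk_table = {
    "rule_a": {"theta_1": 1.0, "theta_2": 4.0},
    "rule_b": {"theta_1": 2.5, "theta_2": 2.5},
    "rule_c": {"theta_1": 0.5, "theta_2": 7.0},
}
# Hypothetical prior weights p(theta) used for the average-loss criterion.
prior = {"theta_1": 0.8, "theta_2": 0.2}


def minimax_rule(table):
    """Pick the rule whose worst-case risk is smallest."""
    return min(table, key=lambda rule: max(table[rule].values()))


def average_loss_rule(table, weights):
    """Pick the rule whose prior-weighted average risk is smallest."""
    return min(
        table,
        key=lambda rule: sum(weights[t] * table[rule][t] for t in weights),
    )


print(minimax_rule(risk_table))              # rule_b: worst case 2.5
print(average_loss_rule(risk_table, prior))  # rule_a: weighted average 1.6
```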

Selecting a loss function

Sound statistical practice requires selecting an estimator consistent with the actual acceptable variation experienced in the context of a particular applied problem. Thus, in the applied use of loss functions, selecting which statistical method to use to model an applied problem depends on knowing the losses that will be experienced from being wrong under the problem’s particular circumstances.[15]

A common example involves estimating «location». Under typical statistical assumptions, the mean or average is the statistic for estimating location that minimizes the expected loss experienced under the squared-error loss function, while the median is the estimator that minimizes expected loss experienced under the absolute-difference loss function. Still different estimators would be optimal under other, less common circumstances.
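This relationship can be checked numerically. The sketch below searches a grid of candidate location values for a small, made-up sample and reports which candidate minimizes the total squared loss and which minimizes the total absolute loss. The data and grid resolution are assumptions chosen so that the mean (4.4) and the median (2.0) lie on the grid.

```python
import statistics

data = [1.0, 2.0, 2.0, 3.0, 14.0]  # made-up sample with one large value


def total_loss(center, xs, loss):
    """Sum of per-observation losses when `center` is used as the location."""
    return sum(loss(x - center) for x in xs)


def squared(error):
    return error * error


# Candidate locations 0.00, 0.01, ..., 15.00.
grid = [i / 100 for i in range(0, 1501)]

best_squared = min(grid, key=lambda c: total_loss(c, data, squared))
best_absolute = min(grid, key=lambda c: total_loss(c, data, abs))

print(best_squared, statistics.mean(data))     # both are the mean
print(best_absolute, statistics.median(data))  # both are the median
```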

In economics, when an agent is risk neutral, the objective function is simply expressed as the expected value of a monetary quantity, such as profit, income, or end-of-period wealth. For risk-averse or risk-loving agents, loss is measured as the negative of a utility function, and the objective function to be optimized is the expected value of utility.

Other measures of cost are possible, for example mortality or morbidity in the field of public health or safety engineering.

For most optimization algorithms, it is desirable to have a loss function that is globally continuous and differentiable.

Two very commonly used loss functions are the squared loss, [latex]L(a) = a^2[/latex], and the absolute loss, [latex]L(a) = |a|[/latex].

The choice of a loss function is not arbitrary. It is very restrictive and sometimes the loss function may be characterized by its desirable properties.[16] Among the choice principles are, for example, the requirement of completeness of the class of symmetric statistics in the case of i.i.d. observations, the principle of complete information, and some others.

W. Edwards Deming and Nassim Nicholas Taleb argue that empirical reality, not nice mathematical properties, should be the sole basis for selecting loss functions, and real losses often are not mathematically nice and are not differentiable, continuous, symmetric, etc. For example, a person who arrives before a plane gate closure can still make the plane, but a person who arrives after cannot, a discontinuity and asymmetry which makes arriving slightly late much more costly than arriving slightly early. In drug dosing, the cost of too little drug may be lack of efficacy, while the cost of too much may be tolerable toxicity, another example of asymmetry. Traffic, pipes, beams, ecologies, climates, etc. may tolerate increased load or stress with little noticeable change up to a point, then become backed up or break catastrophically. These situations, Deming and Taleb argue, are common in real-life problems, perhaps more common than classical smooth, continuous, symmetric, differentiable cases.[17]
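The plane-gate example can be written as a loss function that is both asymmetric and discontinuous. The sketch below is purely illustrative: the cost figures and the function name `gate_loss` are assumptions, not values taken from the cited authors.

```python
def gate_loss(minutes_after_closure, wait_cost_per_minute=1.0, missed_flight_cost=500.0):
    """Loss as a function of arrival time relative to gate closure.

    Negative values mean arriving early, positive values mean arriving late.
    All cost figures are hypothetical.
    """
    if minutes_after_closure > 0:
        # Any lateness, however small, means the flight is missed.
        return missed_flight_cost
    # Arriving early only costs waiting time.
    return wait_cost_per_minute * abs(minutes_after_closure)


print(gate_loss(-1))  # one minute early: small waiting cost
print(gate_loss(1))   # one minute late: the full cost of a missed flight
```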

See also

- Bayesian regret

- Loss functions for classification

- Discounted maximum loss

- Hinge loss

- Scoring rule

- Statistical risk

References

1. Hastie, Trevor; Tibshirani, Robert; Friedman, Jerome H. (2001). The Elements of Statistical Learning. Springer. p. 18. ISBN 0-387-95284-5.

2. Wald, A. (1950). Statistical Decision Functions. Wiley.

3. Cramér, H. (1930). On the mathematical theory of risk. Centraltryckeriet.

4. Frisch, Ragnar (1969). «From utopian theory to practical applications: the case of econometrics». The Nobel Prize–Prize Lecture. Retrieved 15 February 2021.

5. Tangian, Andranik; Gruber, Josef (1997). Constructing Scalar-Valued Objective Functions. Proceedings of the Third International Conference on Econometric Decision Models: Constructing Scalar-Valued Objective Functions, University of Hagen, held in Katholische Akademie Schwerte September 5–8, 1995. Lecture Notes in Economics and Mathematical Systems. Vol. 453. Berlin: Springer. doi:10.1007/978-3-642-48773-6. ISBN 978-3-540-63061-6.

6. Tangian, Andranik; Gruber, Josef (2002). Constructing and Applying Objective Functions. Proceedings of the Fourth International Conference on Econometric Decision Models Constructing and Applying Objective Functions, University of Hagen, held in Haus Nordhelle, August 28–31, 2000. Lecture Notes in Economics and Mathematical Systems. Vol. 510. Berlin: Springer. doi:10.1007/978-3-642-56038-5. ISBN 978-3-540-42669-1.

7. Tangian, Andranik (2002). «Constructing a quasi-concave quadratic objective function from interviewing a decision maker». European Journal of Operational Research. 141 (3): 608–640. doi:10.1016/S0377-2217(01)00185-0. S2CID 39623350.

8. Tangian, Andranik (2004). «A model for ordinally constructing additive objective functions». European Journal of Operational Research. 159 (2): 476–512. doi:10.1016/S0377-2217(03)00413-2. S2CID 31019036.

9. Tangian, Andranik (2004). «Redistribution of university budgets with respect to the status quo». European Journal of Operational Research. 157 (2): 409–428. doi:10.1016/S0377-2217(03)00271-6.

10. Tangian, Andranik (2008). «Multi-criteria optimization of regional employment policy: A simulation analysis for Germany». Review of Urban and Regional Development. 20 (2): 103–122. doi:10.1111/j.1467-940X.2008.00144.x.

11. Nikulin, M.S. (2001) [1994], «Risk of a statistical procedure», Encyclopedia of Mathematics, EMS Press

12. Berger, James O. (1985). Statistical decision theory and Bayesian Analysis (2nd ed.). New York: Springer-Verlag. Bibcode:1985sdtb.book…..B. ISBN 978-0-387-96098-2. MR 0804611.

13. DeGroot, Morris (2004) [1970]. Optimal Statistical Decisions. Wiley Classics Library. ISBN 978-0-471-68029-1. MR 2288194.

14. Robert, Christian P. (2007). The Bayesian Choice. Springer Texts in Statistics (2nd ed.). New York: Springer. doi:10.1007/0-387-71599-1. ISBN 978-0-387-95231-4. MR 1835885.

15. Pfanzagl, J. (1994). Parametric Statistical Theory. Berlin: Walter de Gruyter. ISBN 978-3-11-013863-4.

16. Detailed information on mathematical principles of the loss function choice is given in Chapter 2 of the book Klebanov, B.; Rachev, Svetlozar T.; Fabozzi, Frank J. (2009). Robust and Non-Robust Models in Statistics. New York: Nova Scientific Publishers, Inc. (and references there).

17. Deming, W. Edwards (2000). Out of the Crisis. The MIT Press. ISBN 9780262541152.

Further reading

- Aretz, Kevin; Bartram, Söhnke M.; Pope, Peter F. (April–June 2011). «Asymmetric Loss Functions and the Rationality of Expected Stock Returns» (PDF). International Journal of Forecasting. 27 (2): 413–437. doi:10.1016/j.ijforecast.2009.10.008. SSRN 889323.

- Berger, James O. (1985). Statistical decision theory and Bayesian Analysis (2nd ed.). New York: Springer-Verlag. Bibcode:1985sdtb.book…..B. ISBN 978-0-387-96098-2. MR 0804611.

- Cecchetti, S. (2000). «Making monetary policy: Objectives and rules». Oxford Review of Economic Policy. 16 (4): 43–59. doi:10.1093/oxrep/16.4.43.

- Horowitz, Ann R. (1987). «Loss functions and public policy». Journal of Macroeconomics. 9 (4): 489–504. doi:10.1016/0164-0704(87)90016-4.

- Waud, Roger N. (1976). «Asymmetric Policymaker Utility Functions and Optimal Policy under Uncertainty». Econometrica. 44 (1): 53–66. doi:10.2307/1911380. JSTOR 1911380.

About loss and loss functions

2019-10-04

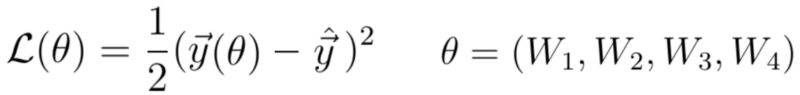

When you’re training supervised machine learning models, you often hear about a loss function that is minimized; that must be chosen, and so on.

The term cost function is also used equivalently.

But what is loss? And what is a loss function?

I’ll answer these two questions in this blog, which focuses on this optimization aspect of machine learning. We’ll first cover the high-level supervised learning process, to set the stage. This includes the role of training, validation and testing data when training supervised models.

Once we’re up to speed with those, we’ll introduce loss and answer the first question: what is loss? We won’t forget the second one either: what is a loss function? We’ll even look into some commonly used loss functions.

Let’s go! 😎


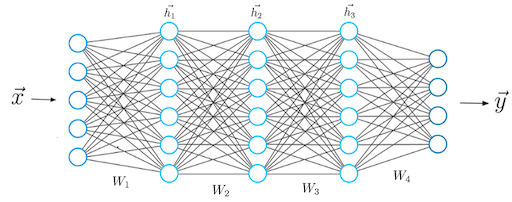

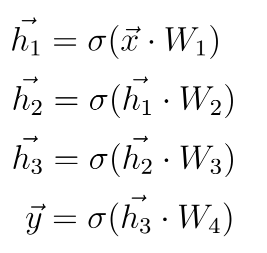

The high-level supervised learning process

Before we can actually introduce the concept of loss, we’ll have to take a look at the high-level supervised machine learning process. All supervised training approaches fall under this process, which means that it is equal for deep neural networks such as MLPs or ConvNets, but also for SVMs.

Let’s take a look at this training process, which is cyclical in nature.

Forward pass

We start with our features and targets, which together form your dataset. This dataset is split into three parts before the training process starts: training data, validation data and testing data. The training data is used during the training process; more specifically, to generate predictions during the forward pass. However, after each training cycle, the predictive performance of the model must be tested. This is what the validation data is used for: it helps during model optimization.

Then there is testing data left. Assume that the validation data, which is essentially a statistical sample, does not fully match the population it describes in statistical terms. That is, the sample does not represent it fully and by consequence the mean and variance of the sample are (hopefully only) slightly different from the actual population mean and variance. Hence, a little bias is introduced into the model every time you optimize it with your validation data. While it may thus still work very well in terms of predictive power, it may be the case that it will lose its power to generalize. In that case, it would no longer work for data it has never seen before, e.g. data from a different sample. The testing data is used to test the model once the entire training process has finished (i.e., only after the last cycle), and allows us to tell something about the generalization power of our machine learning model.

The training data is fed into the machine learning model in what is called the forward pass. The origin of this name is really easy: the data is simply fed to the network, which means that it passes through it in a forward fashion. The end result is a set of predictions, one per sample. This means that when my training set consists of 1000 feature vectors (or rows with features) that are accompanied by 1000 targets, I will have 1000 predictions after my forward pass.
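To make the three-way split described above a bit more tangible, here is a minimal sketch in plain Python. The 70/15/15 proportions, the seed and the helper name `split_dataset` are my own assumptions for illustration; in practice you would probably use the splitting utilities of your ML framework.

```python
import random


def split_dataset(samples, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle (feature, target) pairs and split them into train/val/test lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


# A toy dataset of 1000 (feature, target) pairs.
samples = [(x, 2 * x + 1) for x in range(1000)]
train, val, test = split_dataset(samples)
print(len(train), len(val), len(test))  # 700 150 150
```

The testing part is set aside and only touched once training has finished.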


Loss

You do however want to know how well the model performs with respect to the targets originally set. A well-performing model would be interesting for production usage, whereas an ill-performing model must be optimized before it can be actually used.

This is where the concept of loss enters the equation.

Most generally speaking, the loss allows us to compare between some actual targets and predicted targets. It does so by imposing a «cost» (or, using a different term, a «loss») on each prediction if it deviates from the actual targets.

It’s relatively easy to compute the loss conceptually: we agree on some cost for our machine learning predictions, compare the 1000 targets with the 1000 predictions and compute the 1000 costs, then add everything together and present the global loss.
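In code, that recipe could look roughly like the sketch below. It uses three samples instead of 1000 to keep things readable, and the absolute difference as the agreed-upon cost; both choices, as well as the function names, are assumptions for this example.

```python
def absolute_cost(target, prediction):
    """The cost we agree on for one prediction: how far off it is."""
    return abs(target - prediction)


def total_loss(targets, predictions, cost):
    """Compute one cost per (target, prediction) pair and add them together."""
    if len(targets) != len(predictions):
        raise ValueError("targets and predictions must have the same length")
    return sum(cost(t, p) for t, p in zip(targets, predictions))


targets = [3.0, 5.0, 2.0]
predictions = [2.5, 5.0, 4.0]
print(total_loss(targets, predictions, absolute_cost))  # 0.5 + 0.0 + 2.0 = 2.5
```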

Our goal when training a machine learning model?

To minimize the loss.

The reason why is simple: the lower the loss, the more the set of targets and the set of predictions resemble each other.

And the more they resemble each other, the better the machine learning model performs.

As you can see in the machine learning process depicted above, arrows are flowing backwards towards the machine learning model. Their goal: to optimize the internals of your model only slightly, so that it will perform better during the next cycle (or iteration, or epoch, as they are also called).

Backwards pass

When loss is computed, the model must be improved. This is done by propagating the error backwards to the model structure, such as the model’s weights. This closes the learning cycle between feeding data forward, generating predictions, and improving it — by adapting the weights, the model likely improves (sometimes much, sometimes slightly) and hence learning takes place.

Depending on the model type used, there are many ways of optimizing the model, i.e. propagating the error backwards. In neural networks, a combination of backpropagation and gradient descent based methods is often used: backpropagation computes the gradient of the loss with respect to each weight, and a gradient descent like optimizer uses that gradient to decide in which direction, and by how much, to update the weights.
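Here is a minimal sketch of that cycle for the simplest model I could think of: a line with one weight and one bias, trained with plain gradient descent on the mean squared error. The gradients are written out by hand instead of using backpropagation through a network, and the learning rate, epoch count and toy data are assumptions for this example.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by repeating forward pass, loss gradient and update."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: one prediction per sample.
        predictions = [w * x + b for x in xs]
        errors = [p - y for p, y in zip(predictions, ys)]
        # Backward pass: gradients of the mean squared error w.r.t. w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Optimizer step: move the weights a little against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]  # toy targets generated by y = 2x + 1
w, b = train_linear_model(xs, ys)
print(w, b)  # should end up very close to 2 and 1
```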

In other model types, such as Support Vector Machines, we do not actually propagate the error backward, strictly speaking. However, we use methods such as quadratic optimization to find the mathematical optimum, which given linear separability of your data (whether in regular space or kernel space) must exist. However, visualizing it as «adapting the weights by computing some error» benefits understanding. Next up — the loss functions we can actually use for computing the error! 😄


Loss functions

Here, we’ll cover a wide array of loss functions: some of them for regression, others for classification.

Loss functions for regression

There are two main types of supervised learning problems: classification and regression. In the first, your aim is to classify a sample into the correct bucket, e.g. into one of the buckets ‘diabetes’ or ‘no diabetes’. In the latter case, however, you don’t classify but rather estimate some real valued number. What you’re trying to do is regress a mathematical function from some input data, and hence it’s called regression. For regression problems, there are many loss functions available.

Mean Absolute Error (L1 Loss)

Mean Absolute Error (MAE) is one of them. This is what it looks like:

[latex]MAE = \frac{1}{n} \sum_{i=1}^{n} | E_i |[/latex]

Don’t worry about the maths, we’ll introduce the MAE intuitively now.

That weird E-like sign you see in the formula is what is called a Sigma sign, and it sums up what’s behind it: |Ei|, in our case, where Ei is the error (the difference between prediction and actual value) and the | signs mean that you’re taking the absolute value, or convert -3 into 3 and 3 remains 3.

The summation, in this case, means that we sum all the errors, for all the n samples that were used for training the model. After doing so, we end up with one total error, which can become a very large number. We divide this number by n, the number of samples used, to find the mean, or the average Absolute Error: the Mean Absolute Error or MAE.

It’s very well possible to use the MAE in a multitude of regression scenarios (Rich, n.d.). However, if your average error is very small, it may be better to use the Mean Squared Error that we will introduce next.

What’s more, and this is important: when you use the MAE in optimizations that use gradient descent, you’ll face the fact that the gradient keeps the same magnitude however small the error gets (Grover, 2019). Since the gradient is therefore still large when the loss is low (and hence, you would only need to move a tiny bit), this is bad for learning: it’s easy to keep overshooting the minimum, ending up with a suboptimal model. Consider Huber loss (more below) if you face this problem. If you face larger errors and don’t care (yet?) about this issue with gradients, or if you’re here to learn, let’s move on to Mean Squared Error!
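A small sketch may help here. It computes the MAE for a few made-up numbers, and shows the gradient issue: the (sub)gradient of the absolute error with respect to the prediction is +1 or -1 no matter how close you already are. The function names are my own, not taken from any framework.

```python
def mean_absolute_error(targets, predictions):
    """Average of the absolute errors |E_i| over all samples."""
    return sum(abs(t - p) for t, p in zip(targets, predictions)) / len(targets)


def mae_gradient(target, prediction):
    """Subgradient of |prediction - target| with respect to the prediction."""
    error = prediction - target
    return (error > 0) - (error < 0)  # the sign of the error: -1, 0 or 1


print(mean_absolute_error([3.0, 5.0, 2.0], [2.5, 5.0, 4.0]))  # 2.5 / 3

# The gradient is just as large for a tiny error as for a huge one.
print(mae_gradient(0.0, 10.0), mae_gradient(0.0, 0.001))
```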

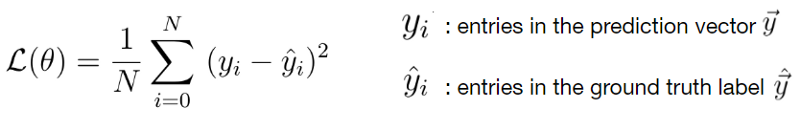

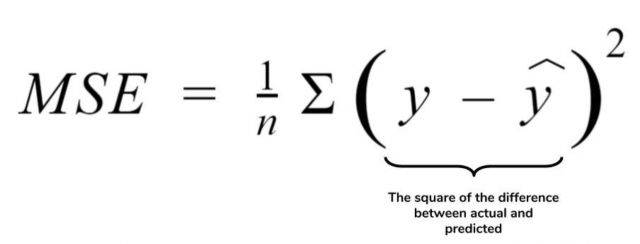

Mean Squared Error

Another loss function used often in regression is Mean Squared Error (MSE). It sounds really difficult, especially when you look at the formula (Binieli, 2018):

[latex]MSE = \frac{1}{n} \sum_{i=1}^{n} (Y_i - Y'_i)^2[/latex]

… but fear not. It’s actually really easy to understand what MSE is and what it does!

We’ll break the formula above into three parts, which allows us to understand each element and subsequently how they work together to produce the MSE.

The primary part of the MSE is the middle part, being the Sigma symbol or the summation sign. What it does is really simple: it counts from i = 1 to n, and on every count evaluates what’s written behind it. In this case, that’s the third part: the square of (Yi - Y’i).

In our case, i starts at 1 and n is not a fixed number. Rather, n is the number of samples in our training set and hence the number of predictions that have been made. In the scenario sketched above, n would be 1000.

Then, the third part. It’s actually mathematical notation for what we already intuitively learnt earlier: it’s the difference between the actual target for the sample (Yi) and the predicted target (Y’i), the latter of which is subtracted from the first.

With one minor difference: the end result of this computation is squared. This property introduces some mathematical benefits during optimization (Rich, n.d.). Particularly, the MSE is continuously differentiable whereas the MAE is not (at x = 0). This means that optimizing the MSE is easier than optimizing the MAE.

Additionally, large errors introduce a much larger cost than smaller errors (because the differences are squared and larger errors produce much larger squares than smaller errors). This is both good and bad at the same time (Rich, n.d.). This is a good property when your errors are small, because optimization is then advanced (Quora, n.d.). However, using MSE rather than e.g. MAE will open your ML model up to outliers, which will severely disturb training (by means of introducing large errors).

Although the conclusion may be rather unsatisfactory, choosing between MAE and MSE is thus often heavily dependent on the dataset you’re using, introducing the need for some a priori inspection before starting your training process.

Finally, when we have the sum of the squared errors, we divide it by n — producing the mean squared error.
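To see the outlier sensitivity in numbers, here is a small sketch that computes both the MSE and the MAE for the same made-up predictions, once without and once with a single wildly wrong prediction. The helper names and data are assumptions for this example.

```python
def mean_squared_error(targets, predictions):
    """Average of the squared differences (Y_i - Y'_i)^2."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)


def mean_absolute_error(targets, predictions):
    """Average of the absolute differences, for comparison."""
    return sum(abs(t - p) for t, p in zip(targets, predictions)) / len(targets)


targets = [10.0, 10.0, 10.0, 10.0]
clean = [11.0, 9.0, 11.0, 9.0]          # every prediction is off by 1
with_outlier = [11.0, 9.0, 11.0, 30.0]  # one prediction is off by 20

print(mean_squared_error(targets, clean), mean_absolute_error(targets, clean))
# 1.0 1.0
print(mean_squared_error(targets, with_outlier), mean_absolute_error(targets, with_outlier))
# 100.75 5.75
```

One bad prediction multiplies the MSE by about a hundred, while the MAE grows by less than a factor of six.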

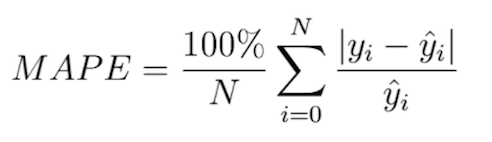

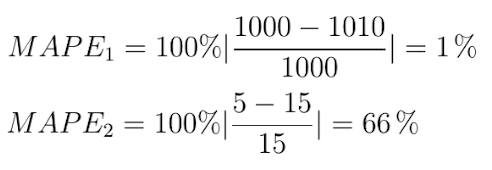

Mean Absolute Percentage Error

The Mean Absolute Percentage Error, or MAPE, really looks like the MAE, even though the formula looks somewhat different:

[latex]MAPE = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{Y_i - Y'_i}{Y_i} \right|[/latex]

When using the MAPE, we don’t compute the absolute error, but rather, the mean error percentage with respect to the actual values. That is, suppose that my prediction is 12 while the actual target is 10, the error ratio for this prediction is [latex]| (10 - 12) / 10 | = 0.2[/latex].

Similar to the MAE, we sum the error over all the samples, but subsequently face a different computation: [latex]100\% / n[/latex]. This looks difficult, but we can once again separate this computation into more easily understandable parts. More specifically, we can write it as a multiplication of [latex]100\%[/latex] and [latex]1 / n[/latex] instead. When multiplying the latter with the sum, you’ll find the same result as dividing it by n, which we did with the MAE. That’s great.

The only thing left now is multiplying the whole with 100%. Why do we do that? Simple: because our computed error is a ratio and not a percentage. Like the example above, in which our error was 0.2, we don’t want to find the ratio, but the percentage instead. [latex]0.2 \times 100\%[/latex] is … unsurprisingly … [latex]20\%[/latex]! Hence, we multiply the mean ratio error with the percentage to find the MAPE!
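Here is the same computation as a small Python sketch, using the example of a prediction of 12 for a target of 10. The function name is my own, and note the assumption that no target equals zero, since you would otherwise divide by zero.

```python
def mean_absolute_percentage_error(targets, predictions):
    """Mean of |(target - prediction) / target|, expressed as a percentage."""
    if any(t == 0 for t in targets):
        raise ValueError("MAPE is undefined when a target equals zero")
    ratios = [abs((t - p) / t) for t, p in zip(targets, predictions)]
    return 100.0 * sum(ratios) / len(ratios)


print(mean_absolute_percentage_error([10.0], [12.0]))  # about 20 (percent)
print(mean_absolute_percentage_error([1000000.0], [1000100.0]))  # about 0.01 (percent)
```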

Why use MAPE if you can also use MAE?


Very good question.

Firstly, it is a very intuitive value. Contrary to the absolute error, we have a sense of how well-performing the model is or how bad it performs when we can express the error in terms of a percentage. An error of 100 may seem large, but if the actual target is 1000000 while the estimate is 1000100, well, you get the point.

Secondly, it allows us to compare the performance of regression models on different datasets (Watson, 2019). Suppose that our goal is to train a regression model on the NASDAQ ETF and the Dutch AEX ETF. Since their absolute values are quite different, using MAE won’t help us much in comparing the performance of our model. MAPE, on the other hand, demonstrates the error in terms of a percentage — and a percentage is a percentage, whether you apply it to NASDAQ or to AEX. This way, it’s possible to compare model performance across statistically varying datasets.

Root Mean Squared Error (L2 Loss)

Remember the MSE?

There’s also something called the RMSE, or the Root Mean Squared Error or Root Mean Squared Deviation (RMSD). It goes like this:

[latex]RMSE = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (Y_i - Y'_i)^2}[/latex]

Simple, hey? It’s just the square root of the MSE.

How does this help us?

The errors of the MSE are squared — hey, what’s in a name.

The RMSE or RMSD takes the square root of the mean of those squares, and hence is back at the scale of the original targets (Dragos, 2018). This gives you much better intuition for the error in terms of the targets.
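A tiny sketch shows the difference in scale. With made-up errors of 3 and 4, the MSE is 12.5 (in squared units), while the RMSE is about 3.54, which sits nicely between the two errors. Function names are my own.

```python
import math


def mean_squared_error(targets, predictions):
    """Average of the squared differences."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)


def root_mean_squared_error(targets, predictions):
    """Square root of the MSE, back on the scale of the targets."""
    return math.sqrt(mean_squared_error(targets, predictions))


targets = [0.0, 0.0]
predictions = [3.0, 4.0]
print(mean_squared_error(targets, predictions))       # 12.5
print(root_mean_squared_error(targets, predictions))  # roughly 3.54
```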

Logcosh

«Log-cosh is the logarithm of the hyperbolic cosine of the prediction error.» (Grover, 2019).

Well, how’s that for a starter.

This is the mathematical formula:

[latex]Logcosh(t, p) = \sum_{i=1}^{n} \log(\cosh(p_i - t_i))[/latex]

And this is the plot:

Okay, now let’s introduce some intuitive explanation.

The TensorFlow docs write this about Logcosh loss:

`log(cosh(x))` is approximately equal to `(x ** 2) / 2` for small `x` and to `abs(x) - log(2)` for large `x`. This means that ‘logcosh’ works mostly like the mean squared error, but will not be so strongly affected by the occasional wildly incorrect prediction.

Well, that’s great. It seems to be an improvement over MSE, or L2 loss. Recall that MSE is an improvement over MAE (L1 Loss) if your data set contains quite large errors, as it captures these better. However, this also means that it is much more sensitive to outliers than the MAE. Logcosh helps against this problem:

- For relatively small errors (including the small-but-not-tiny errors that can make MSE a better fit for your ML problem than MAE), it outputs approximately [latex]x^2 / 2[/latex], which has the same quadratic shape as the [latex]x^2[/latex] output of the MSE.

- For larger errors, i.e. outliers, where MSE would produce extremely large losses ([latex](10^6)^2 = 10^{12}[/latex]), the Logcosh approaches [latex]|x| - \log(2)[/latex]. It’s like (as well as unlike) the MAE: it grows linearly with the error, but is shifted by the constant [latex]\log(2)[/latex].

Hence: indeed, if you have both larger errors that must be detected as well as outliers, which you perhaps cannot remove from your dataset, consider using Logcosh! It’s available in many frameworks like TensorFlow as we saw above, but also in Keras.
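If you want to verify the two approximations from the TensorFlow docs yourself, here is a sketch in plain Python. It does not use the Keras implementation; instead it relies on the identity log(cosh(x)) = |x| + log(1 + exp(-2|x|)) - log(2), which I chose because calling `math.cosh` directly overflows for large inputs.

```python
import math


def log_cosh(error):
    """Numerically stable log(cosh(error)) for a single prediction error."""
    a = abs(error)
    # cosh(a) = exp(a) * (1 + exp(-2a)) / 2, so take the log term by term.
    return a + math.log1p(math.exp(-2.0 * a)) - math.log(2.0)


small, large = 0.01, 1e6
print(log_cosh(small), small ** 2 / 2)           # nearly identical
print(log_cosh(large), large - math.log(2.0))    # nearly identical
```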


Huber loss

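Let’s move on to Huber loss, which we already hinted about in the section about the MAE:

[latex]L_{\delta}(t, p) = \begin{cases} \frac{1}{2} (t - p)^2 & \text{if } |t - p| \leq \delta \\ \delta \times |t - p| - \frac{\delta^2}{2} & \text{otherwise} \end{cases}[/latex]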

Or, visually:

When interpreting the formula, we see two parts:

- [latex]1/2 \times (t-p)^2[/latex], when [latex]|t-p| \leq \delta[/latex]. This sounds very complicated, but we can break it into parts easily.

  - [latex]|t-p|[/latex] is the absolute error: the difference between target [latex]t[/latex] and prediction [latex]p[/latex].

  - We square it and divide it by two.

  - We however only do so when the absolute error is smaller than or equal to some [latex]\delta[/latex], also called delta, which you can configure! We’ll see next why this is nice.

- When the absolute error is larger than [latex]\delta[/latex], we compute the error as follows: [latex]\delta \times |t-p| - (\delta^2 / 2)[/latex].

  - Let’s break this apart again. We multiply the delta with the absolute error and subtract half of delta squared.
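The two parts translate almost literally into code. Below is a minimal sketch in plain Python (not the Keras implementation); the function name and the default delta of 1.0 are assumptions for this example.

```python
def huber_loss(target, prediction, delta=1.0):
    """Huber loss for one sample: quadratic for small errors, linear for large ones."""
    abs_error = abs(target - prediction)
    if abs_error <= delta:
        # First part: half of the squared error.
        return 0.5 * abs_error ** 2
    # Second part: delta times the absolute error, minus half of delta squared.
    return delta * abs_error - 0.5 * delta ** 2


print(huber_loss(0.0, 0.5))              # small error, quadratic part: 0.125
print(huber_loss(0.0, 3.0))              # large error, linear part: 2.5
print(huber_loss(0.0, 3.0, delta=0.25))  # smaller delta, flatter loss: 0.71875
```

Both parts give the same value when the absolute error equals delta, so the loss has no jump at the switch-over point.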

What is the effect of all this mathematical juggling?

Look at the visualization above.

For relatively small deltas (in our case, with [latex]\delta = 0.25[/latex]), you’ll see that the loss function becomes relatively flat. It takes quite a long time before loss increases, even when errors are getting larger and larger.

For larger deltas, the slope of the function increases. As you can see, the larger the delta, the slower the increase of this slope: eventually, for really large [latex]\delta[/latex] the slope of the loss tends to converge to some maximum.

If you look closely, you’ll notice the following:

- With small [latex]\delta[/latex], the loss becomes relatively insensitive to larger errors and outliers. This might be good if you have them, but bad if on average your errors are small.

- With large [latex]\delta[/latex], the loss becomes increasingly sensitive to larger errors and outliers. That might be good if your errors are small, but you’ll face trouble when your dataset contains outliers.

Hey, haven’t we seen that before?

Yep: in our discussions about the MAE (insensitivity to larger errors) and the MSE (fixes this, but facing sensitivity to outliers).

Grover (2019) writes about this nicely:

Huber loss approaches MAE when 𝛿 ~ 0 and MSE when 𝛿 ~ ∞ (large numbers.)

That’s what this [latex]\delta[/latex] is for! You are now in control of the ‘degree’ of MAE vs MSE-ness you’ll introduce in your loss function. When you face large errors due to outliers, you can try again with a lower [latex]\delta[/latex]; if your errors are too small to be picked up by your Huber loss, you can increase the delta instead.

And there’s another thing, which we also mentioned when discussing the MAE: it produces large gradients when you optimize your model by means of gradient descent, even when your errors are small (Grover, 2019). This is bad for model performance, as you will likely overshoot the mathematical optimum for your model. You don’t face this problem with MSE, as it tends to decrease towards the actual minimum (Grover, 2019). If you switch to Huber loss from MAE, you might find it to be an additional benefit.

Here’s why: Huber loss, like MSE, decreases as well when it approaches the mathematical optimum (Grover, 2019). This means that you can combine the best of both worlds: the insensitivity to larger errors from MAE with the sensitivity of the MSE and its suitability for gradient descent. Hooray for Huber loss! And like always, it’s also available when you train models with Keras.
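To make the gradient argument a bit more tangible, here is a small sketch that compares the derivatives of the three losses with respect to the error for a single sample. The function names are made up for this illustration, and the MSE derivative is written for the plain squared error of one sample.

```python
def mae_grad(error):
    # Derivative of |error|: only the sign survives, so the magnitude is 1
    # no matter how small the error is (0 is used at error == 0).
    return (error > 0) - (error < 0)


def mse_grad(error):
    # Derivative of error^2: proportional to the error, so it shrinks
    # as the prediction approaches the target.
    return 2.0 * error


def huber_grad(error, delta=1.0):
    # Derivative of the Huber loss: equal to the error inside [-delta, delta]
    # and clipped to -delta or +delta outside of it.
    return max(-delta, min(delta, error))


for error in (0.01, 0.5, 5.0):
    print(error, mae_grad(error), mse_grad(error), huber_grad(error))
```

For the tiny error of 0.01 the MAE gradient still has magnitude 1, while the MSE and Huber gradients are close to zero; for the large error of 5.0 the Huber gradient is capped at delta, unlike the MSE gradient.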

Then why isn’t this the perfect loss function?

Because the benefit of the [latex]\delta[/latex] is also becoming your bottleneck (Grover, 2019). As you have to configure it manually (or perhaps using some automated tooling), you’ll have to spend time and resources on finding the best [latex]\delta[/latex] for your dataset. This is an iterative problem that, in the extreme case, may become impractical at best and costly at worst. However, in most cases, it’s best just to experiment: perhaps you’ll find better results!

Loss functions for classification

Loss functions are also applied in classifiers. I already discussed in another post what classification is all about, so I’m going to repeat it here:

Suppose that you work in the field of separating non-ripe tomatoes from the ripe ones. It’s an important job, one can argue, because we don’t want to sell customers tomatoes they can’t process into dinner. It’s the perfect job to illustrate what a human classifier would do.

Humans have a perfect eye to spot tomatoes that are not ripe or that have any other defect, such as being rotten. They derive certain characteristics for those tomatoes, e.g. based on color, smell and shape:

- If it’s green, it’s likely to be unripe (or: not sellable);

- If it smells, it is likely to be unsellable;

- The same goes for when it’s white or when fungus is visible on top of it.

If none of those occur, it’s likely that the tomato can be sold. We now have two classes: sellable tomatoes and non-sellable tomatoes. Human classifiers decide about which class an object (a tomato) belongs to.

The same principle occurs again in machine learning and deep learning.

Only then, we replace the human with a machine learning model. We’re then using machine learning for classification, or for deciding about some “model input” to “which class” it belongs.

Source: How to create a CNN classifier with Keras?

We’ll now cover loss functions that are used for classification.

Hinge

The hinge loss is defined as follows (Wikipedia, 2011):

[latex]\ell(y) = \max(0, 1 - t \times y)[/latex]

It simply takes the maximum of either 0 or the computation [latex]1 - t \times y[/latex], where [latex]t[/latex] is the true target (-1 or +1) and [latex]y[/latex] is the output value of the machine learning model (a real-valued score, typically near the range from -1 to +1).

When the target equals the prediction, the computation [latex]t \times y[/latex] is always one: [latex]1 \times 1 = -1 \times -1 = 1[/latex]. Because then [latex]1 - t \times y = 1 - 1 = 0[/latex], the max function takes the maximum [latex]\max(0, 0)[/latex], which of course is 0.

That is: when the actual target meets the prediction, the loss is zero. Negative loss doesn’t exist. When the target != the prediction, the loss value increases.

For [latex]t = 1[/latex], i.e. when 1 is your target, hinge loss looks like this:

Let’s now consider three scenarios which can occur, given our target [latex]t = 1[/latex] (Kompella, 2017; Wikipedia, 2011):

- The prediction is correct, which occurs when [latex]y \geq 1.0[/latex].

- The prediction is very incorrect, which occurs when [latex]y < 0.0[/latex] (because the sign swaps, in our case from positive to negative).

- The prediction is not correct, but we’re getting there ([latex]0.0 \leq y < 1.0[/latex]).

In the first case, e.g. when [latex]y = 1.2[/latex], the output of [latex]1 - t \times y[/latex] will be [latex]1 - (1 \times 1.2) = 1 - 1.2 = -0.2[/latex]. Loss, then, will be [latex]\max(0, -0.2) = 0[/latex]. Hence, for all correct predictions, even if they are too correct, loss is zero. In the too correct situation, the classifier is simply very sure that the prediction is correct (Peltarion, n.d.).

In the second case, e.g. when [latex]y = -0.5[/latex], the output of the loss equation will be [latex]1 - (1 \times -0.5) = 1 - (-0.5) = 1.5[/latex], and hence the loss will be [latex]\max(0, 1.5) = 1.5[/latex]. Very wrong predictions are hence penalized significantly by the hinge loss function.

In the third case, e.g. when [latex]y = 0.9[/latex], loss output function will be [latex]1 - (1 \times 0.9) = 1 - 0.9 = 0.1[/latex]. Loss will be [latex]\max(0, 0.1) = 0.1[/latex]. We’re getting there, and that’s also indicated by the small but nonzero loss.
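The three scenarios can be checked with a tiny sketch. The helper name `hinge_loss` is just chosen for this illustration; it evaluates the hinge loss for one target and one model output.

```python
def hinge_loss(t, y):
    """Hinge loss for a target t (-1 or +1) and a raw model output y."""
    return max(0.0, 1.0 - t * y)


# Target t = 1 with the three example outputs discussed above.
for y in (1.2, -0.5, 0.9):
    print(y, hinge_loss(1, y))
```

The printed losses are zero for the (too) correct prediction, 1.5 for the very wrong one, and roughly 0.1 (up to floating point rounding) for the one that is getting there.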

What this essentially sketches is a margin that you try to maximize: when the prediction is correct or even too correct, it doesn’t matter much, but when it’s not, we’re trying to correct. The correction process keeps going until the prediction is fully correct (or when the human tells the improvement to stop). We’re thus finding the most optimum decision boundary and are hence performing a maximum-margin operation.

It is therefore not surprising that hinge loss is one of the most commonly used loss functions in Support Vector Machines (Kompella, 2017). There is a catch, however: hinge loss is not differentiable everywhere, more precisely at the ‘boundary’ between no loss and nonzero loss (where [latex]t \times y = 1[/latex]), so gradient descent like optimizers, those with which (deep) neural networks are trained, have no gradient to work with at that point. Fortunately, a subgradient of the hinge loss function can be used instead, so it can (albeit in a different form) still be used in today’s deep learning models (Wikipedia, 2011). For example, hinge loss is available as a loss function in Keras.

Squared hinge

The squared hinge loss is like the hinge formula displayed above, but then the [latex]max()[/latex] function output is squared.

This helps achieve two things:

- Firstly, it makes the loss value more sensitive to outliers, just as we saw with MSE vs MAE. Large errors will add to the loss more significantly than smaller errors. Note that similarly, this may also mean that you’ll need to inspect your dataset for the presence of such outliers first.

- Secondly, squared hinge loss is differentiable whereas hinge loss is not (Tay, n.d.). The way the hinge loss is defined makes it not differentiable at the ‘boundary’ point of the chart — also see this perfect answer that illustrates it. Squared hinge loss, on the other hand, is differentiable, simply because of the square and the mathematical benefits it introduces during differentiation. This makes it easier for us to use a hinge-like loss in gradient based optimization — we’ll simply take squared hinge.
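The difference in differentiability can be made visible with a short sketch that evaluates both derivatives just left and right of the boundary point. All function names are chosen for this illustration only, and the value used for the hinge derivative at the boundary itself (0) is just one valid subgradient.

```python
def squared_hinge(t, y):
    return max(0.0, 1.0 - t * y) ** 2


def hinge_subgrad(t, y):
    # Derivative of max(0, 1 - t*y) with respect to y: -t while there is loss,
    # 0 once the margin is reached. It jumps at t*y == 1.
    return -t if t * y < 1 else 0.0


def squared_hinge_grad(t, y):
    # Derivative of max(0, 1 - t*y)^2 with respect to y: it shrinks smoothly
    # to 0 as t*y approaches 1, so there is no jump.
    return -2.0 * t * max(0.0, 1.0 - t * y)


for y in (0.999, 1.0, 1.001):
    print(y, hinge_subgrad(1, y), squared_hinge_grad(1, y))
```

Around y = 1 the hinge derivative switches abruptly from -1 to 0, whereas the squared hinge derivative passes through values close to zero on both sides.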


Categorical / multiclass hinge

Both normal hinge and squared hinge loss work only for binary classification problems in which the actual target value is either +1 or -1. Although that’s perfectly fine for when you have such problems (e.g. the diabetes yes/no problem that we looked at previously), there are many other problems which cannot be solved in a binary fashion.

(Note that one approach to create a multiclass classifier, especially with SVMs, is to create many binary ones, feeding the data to each of them and counting classes, eventually taking the most-chosen class as output — it goes without saying that this is not very efficient.)

However, in neural networks and hence gradient based optimization problems, we’re not interested in doing that. It would mean that we have to train many networks, which significantly increases the time needed for training. Instead, we can use the multiclass hinge that has been introduced by researchers Weston and Watkins (Wikipedia, 2011):

[latex]\ell(y) = \sum_{y \neq t} \max(0, 1 + \mathbf{w}_y \mathbf{x} - \mathbf{w}_t \mathbf{x})[/latex]

What this means in plain English is this:

For all classes [latex]y[/latex] unequal to the target class [latex]t[/latex], compare the model output for that class, [latex]\mathbf{w}_y \mathbf{x}[/latex], with the model output for the target class, [latex]\mathbf{w}_t \mathbf{x}[/latex], and compute a hinge term. Eventually, sum the terms together to find the multiclass hinge loss.

Note that this does not mean that you sum over all possible real-valued numbers except [latex]t[/latex]; instead, you compute the sum over the outputs generated by your ML model during the forward pass, one per class. The output for the target class itself is left out of the sum: for [latex]y = t[/latex], the term would be the constant [latex]\max(0, 1) = 1[/latex], which says nothing about the quality of the prediction.

Keras implements the multiclass hinge loss as categorical hinge loss, which requires you to convert your targets into categorical format (one-hot encoded format) first by means of to_categorical.
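As a rough sketch of the sum formulation by Weston and Watkins, the following plain Python function computes the loss for one sample from a list of per-class model outputs. The function name and the example scores are assumptions for this illustration; it is not meant to reproduce what the Keras categorical hinge computes internally.

```python
def multiclass_hinge(scores, target):
    """Sum-over-classes hinge loss for one sample.

    scores: one raw model output per class; target: index of the true class.
    """
    target_score = scores[target]
    return sum(
        max(0.0, 1.0 + score - target_score)
        for cls, score in enumerate(scores)
        if cls != target  # the true class itself is left out of the sum
    )


scores = [2.0, 0.5, 1.5]
print(multiclass_hinge(scores, target=0))  # 0.5
print(multiclass_hinge(scores, target=1))  # 4.5
```

When the true class has the highest output (target 0), only the class that comes within a margin of 1 contributes; when the true class has the lowest output (target 1), every other class adds to the loss.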

Binary crossentropy

A loss function that’s used quite often in today’s neural networks is binary crossentropy. As you can guess, it’s a loss function for binary classification problems, i.e. where there exist two classes. Primarily, it can be used where the output of the neural network is somewhere between 0 and 1, e.g. by means of the Sigmoid layer.

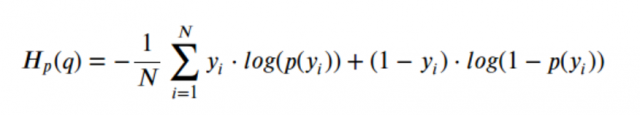

This is its formula:

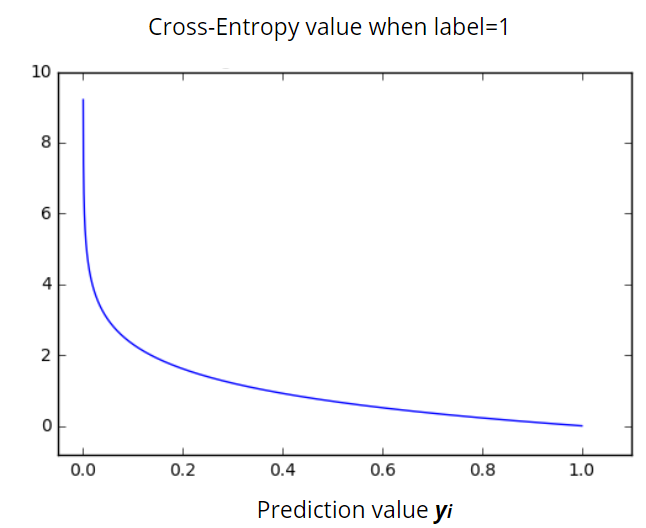

It can be visualized in this way:

And, like before, let’s now explain it in more intuitive ways.

The [latex]t[/latex] in the formula is the target (0 or 1) and the [latex]p[/latex] is the prediction (a real-valued number between 0 and 1, for example 0.12326).

When you input both into the formula, loss will be computed related to the target and the prediction. In the visualization above, where the target is 1, it becomes clear that loss is 0 when the prediction is 1 as well. However, when moving to the left, loss tends to increase (ML Cheatsheet documentation, n.d.). What’s more, it increases increasingly fast. Hence, it not only tends to punish wrong predictions, but also wrong predictions that are extremely confident (i.e., if the model is very confident that it’s 0 while it’s 1, it gets punished much harder than when it thinks it’s somewhere in between, e.g. 0.5). This latter property makes the binary cross entropy a valued loss function in classification problems.

When the target is 0, you can see that the loss is mirrored — which is exactly what we want:

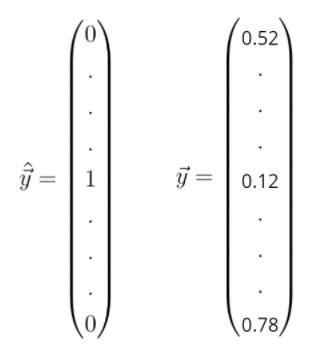
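To get a feeling for these numbers, here is a minimal sketch of binary crossentropy for a single target [latex]t[/latex] and prediction [latex]p[/latex], written in the usual form of minus the sum of t times log(p) and (1 - t) times log(1 - p). The function name and the small clipping constant `eps` are assumptions added for this sketch.

```python
import math


def binary_crossentropy(t, p, eps=1e-12):
    """Binary crossentropy for a target t (0 or 1) and a prediction p in [0, 1]."""
    # Clip p so that log(0) can never occur (eps is an assumed safety margin).
    p = min(max(p, eps), 1.0 - eps)
    return -(t * math.log(p) + (1 - t) * math.log(1.0 - p))


# First loss column: target 1, second loss column: target 0.
for p in (0.99, 0.5, 0.01):
    print(p, binary_crossentropy(1, p), binary_crossentropy(0, p))
```

With target 1, the loss grows quickly as the prediction moves towards 0; with target 0 the same values appear in mirrored order.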

Categorical crossentropy

Now what if you have no binary classification problem, but instead a multiclass one?

Thus: one where your output can belong to one of more than two classes.

The CNN that we created with Keras using the MNIST dataset is a good example of this problem. As you can find in the blog (see the link), we used a different loss function there — categorical crossentropy. It’s still crossentropy, but then adapted to multiclass problems.

This is the formula with which we compute categorical crossentropy:

[latex]CCE = -\sum_{c=1}^{C} t_c \log(p_c)[/latex]

Put very simply, we sum over all [latex]C[/latex] classes that we have in our system, look up the target [latex]t_c[/latex] and the prediction [latex]p_c[/latex] of the observation for each class, and multiply the observation target with the natural log of the observation prediction.

It took me some time to understand what was meant with a prediction, though, but thanks to Peltarion (n.d.), I got it.

The answer lies in the fact that the crossentropy is categorical and that hence categorical data is used, with one-hot encoding.

Suppose that we have a dataset that presents what the odds are of getting diabetes after five years, just like the Pima Indians dataset we used before. However, this time another class is added, being «Possibly diabetic», rendering us three classes for one’s condition after five years given current measurements:

- 0: no diabetes

- 1: possibly diabetic

- 2: diabetic

That dataset would look like this:

| Features | Target |
| --- | --- |
| { … } | 1 |
| { … } | 2 |
| { … } | 0 |
| { … } | 0 |
| { … } | 2 |
| …and so on | …and so on |

However, categorical crossentropy cannot simply use integers as targets, because its formula doesn’t support this. Instead, we must apply one-hot encoding, which transforms the integer targets into categorical vectors, which are just vectors that display all categories and whether the sample belongs to each class or not:

- 0: [latex][1, 0, 0][/latex]

- 1: [latex][0, 1, 0][/latex]

- 2: [latex][0, 0, 1][/latex]


That’s what we always do with to_categorical in Keras.

Our dataset then looks as follows:

| Features | Target |
| --- | --- |
| { … } | [latex][0, 1, 0][/latex] |
| { … } | [latex][0, 0, 1][/latex] |
| { … } | [latex][1, 0, 0][/latex] |
| { … } | [latex][1, 0, 0][/latex] |
| { … } | [latex][0, 0, 1][/latex] |
| …and so on | …and so on |
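The conversion from the integer targets to the one-hot vectors in the table above can be sketched in plain Python. The helper `one_hot` is hypothetical and only written for this illustration; it is not the Keras to_categorical function, although it produces the same kind of vectors for this small example.

```python
def one_hot(labels, num_classes):
    """Turn integer class labels into one-hot vectors (hypothetical helper)."""
    return [
        [1 if cls == label else 0 for cls in range(num_classes)]
        for label in labels
    ]


targets = [1, 2, 0, 0, 2]  # the integer targets from the first table
for row in one_hot(targets, num_classes=3):
    print(row)
```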

Now, we can explain what is meant by an observation.

Let’s look at the formula again and recall that we iterate over all the possible output classes — once for every prediction made, with some true target:

Now suppose that our trained model outputs for the set of features [latex]{ … }[/latex] or a very similar one that has target [latex][0, 1, 0][/latex] a probability distribution of [latex][0.25, 0.50, 0.25][/latex] — that’s what these models do, they pick no class, but instead compute the probability that it’s a particular class in the categorical vector.

Computing the loss, for [latex]c = 1[/latex] (the first class, which is class 0), what is the target value? It’s 0: in [latex]\textbf{t} = [0, 1, 0][/latex], the target value for class 0 is 0.

What is the prediction? Well, following the same logic, the prediction is 0.25.

We call these two observations with respect to the total prediction. By looking at all observations, merging them together, we can find the loss value for the entire prediction.

We multiply the target value with the log. But wait! We multiply the log with 0 — so the loss value for this target is 0.

It doesn’t surprise you that this happens for all targets except for one — where the target value is 1: in the prediction above, that would be for the second one.

Note that when the sum is complete, you’ll multiply it with -1 to find the true categorical crossentropy loss.

Hence, loss is driven by the actual target observation of your sample instead of all the non-targets. The structure of the formula however allows us to perform multiclass machine learning training with crossentropy. There we go, we learnt another loss function.
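The walkthrough above can be reproduced with a few lines of Python, using the example target [0, 1, 0] and the predicted distribution [0.25, 0.50, 0.25]. The function name is chosen for this sketch only.

```python
import math


def categorical_crossentropy(target, prediction):
    """Categorical crossentropy for a one-hot target and predicted probabilities."""
    # Terms with a target value of 0 contribute nothing, so they are skipped;
    # this also avoids evaluating log(0) for classes predicted with probability 0.
    return -sum(t * math.log(p) for t, p in zip(target, prediction) if t != 0)


target = [0, 1, 0]
prediction = [0.25, 0.50, 0.25]
loss = categorical_crossentropy(target, prediction)
print(loss)  # -log(0.5), roughly 0.693
```

Only the second class, where the target value is 1, contributes to the result, exactly as described above.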

Sparse categorical crossentropy

But what if we don’t want to convert our integer targets into categorical format? We can use sparse categorical crossentropy instead (Lin, 2019).

It performs in pretty much similar ways to regular categorical crossentropy loss, but instead allows you to use integer targets! That’s nice.

| Features | Target |
| --- | --- |
| { … } | 1 |
| { … } | 2 |
| { … } | 0 |
| { … } | 0 |
| { … } | 2 |
| …and so on | …and so on |
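A small sketch shows why the two variants give the same value: with an integer target, the loss simply picks the predicted probability of that class. Both function names are made up for this illustration and only mimic the idea behind the corresponding Keras losses.

```python
import math


def categorical_crossentropy(one_hot_target, prediction):
    # Dense variant: the target is a one-hot vector.
    return -sum(
        t * math.log(p) for t, p in zip(one_hot_target, prediction) if t != 0
    )


def sparse_categorical_crossentropy(target_index, prediction):
    # Sparse variant: with an integer target, only the predicted probability
    # of that one class matters.
    return -math.log(prediction[target_index])


prediction = [0.25, 0.50, 0.25]
dense = categorical_crossentropy([0, 1, 0], prediction)
sparse = sparse_categorical_crossentropy(1, prediction)
print(dense, sparse)
```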

Kullback-Leibler divergence

Sometimes, machine learning problems involve the comparison between two probability distributions. An example comparison is the situation below, in which the question is how much the uniform distribution differs from the Binomial(10, 0.2) distribution.

When you wish to compare two probability distributions, you can use the Kullback-Leibler divergence, a.k.a. KL divergence (Wikipedia, 2004):

\begin{equation} KL (P || Q) = \sum p(X) \log \left( \frac{p(X)}{q(X)} \right) \end{equation}

KL divergence is an adaptation of entropy, which is a common metric in the field of information theory (Wikipedia, 2004; Wikipedia, 2001; Count Bayesie, 2017). While intuitively, entropy tells you something about «the quantity of your information», KL divergence tells you something about «the change of quantity when distributions are changed».

Your goal in machine learning problems is to ensure that [latex]\text{change} \approx 0[/latex].

Is KL divergence used in practice? Yes! Generative machine learning models work by drawing a sample from encoded, latent space, which effectively represents a latent probability distribution. In other scenarios, you might wish to perform multiclass classification with neural networks that use Softmax activation in their output layer, effectively generating a probability distribution across the classes. And so on. In those cases, you can use KL divergence loss during training. It compares the probability distribution represented by your training data with the probability distribution generated during your forward pass, and computes the divergence (the difference, although when you swap distributions, the value changes due to non-symmetry of KL divergence — hence it’s not entirely the difference) between the two probability distributions. This is your loss value. Minimizing the loss value thus essentially steers your neural network towards the probability distribution represented in your training set, which is what you want.
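As a minimal sketch of the formula, the snippet below compares the Binomial(10, 0.2) distribution with a uniform distribution. The assumption made here is that the uniform distribution covers the same 11 outcomes (0 to 10 successes); the function name is chosen for this illustration, and `math.comb` requires Python 3.8 or newer.

```python
import math


def kl_divergence(p, q):
    """KL(P || Q) for two discrete distributions given as lists of probabilities."""
    # Outcomes with p(x) = 0 contribute nothing to the sum.
    return sum(px * math.log(px / qx) for px, qx in zip(p, q) if px > 0)


n, prob = 10, 0.2
# Binomial(10, 0.2) probabilities for 0..10 successes.
binomial = [
    math.comb(n, k) * prob ** k * (1 - prob) ** (n - k) for k in range(n + 1)
]
# Uniform distribution over the same 11 outcomes.
uniform = [1 / (n + 1)] * (n + 1)

print(kl_divergence(binomial, uniform))
print(kl_divergence(uniform, binomial))  # differs: KL divergence is not symmetric
```

Swapping the two arguments changes the value, which is the non-symmetry mentioned above.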

Summary

In this blog, we’ve looked at the concept of loss functions, also known as cost functions. We showed why they are necessary by means of illustrating the high-level machine learning process and (at a high level) what happens during optimization. Additionally, we covered a wide range of loss functions, some of them for classification, others for regression. Although we introduced some maths, we also tried to explain them intuitively.

I hope you’ve learnt something from my blog! If you have any questions, remarks, comments or other forms of feedback, please feel free to leave a comment below! 👇 I’d also appreciate a comment telling me if you learnt something and if so, what you learnt. I’ll gladly improve my blog if mistakes are made. Thanks and happy engineering! 😎

References

Chollet, F. (2017). Deep Learning with Python. New York, NY: Manning Publications.

Keras. (n.d.). Losses. Retrieved from https://keras.io/losses/

Binieli, M. (2018, October 8). Machine learning: an introduction to mean squared error and regression lines. Retrieved from https://www.freecodecamp.org/news/machine-learning-mean-squared-error-regression-line-c7dde9a26b93/

Rich. (n.d.). Why square the difference instead of taking the absolute value in standard deviation? Retrieved from https://stats.stackexchange.com/a/121

Quora. (n.d.). What is the difference between squared error and absolute error? Retrieved from https://www.quora.com/What-is-the-difference-between-squared-error-and-absolute-error

Watson, N. (2019, June 14). Using Mean Absolute Error to Forecast Accuracy. Retrieved from https://canworksmart.com/using-mean-absolute-error-forecast-accuracy/

Drakos, G. (2018, December 5). How to select the Right Evaluation Metric for Machine Learning Models: Part 1 Regression Metrics. Retrieved from https://towardsdatascience.com/how-to-select-the-right-evaluation-metric-for-machine-learning-models-part-1-regrression-metrics-3606e25beae0

Wikipedia. (2011, September 16). Hinge loss. Retrieved from https://en.wikipedia.org/wiki/Hinge_loss

Kompella, R. (2017, October 19). Support vector machines ( intuitive understanding ) ? Part#1. Retrieved from https://towardsdatascience.com/support-vector-machines-intuitive-understanding-part-1-3fb049df4ba1

Peltarion. (n.d.). Squared hinge. Retrieved from https://peltarion.com/knowledge-center/documentation/modeling-view/build-an-ai-model/loss-functions/squared-hinge

Tay, J. (n.d.). Why is squared hinge loss differentiable? Retrieved from https://www.quora.com/Why-is-squared-hinge-loss-differentiable

Rakhlin, A. (n.d.). Online Methods in Machine Learning. Retrieved from http://www.mit.edu/~rakhlin/6.883/lectures/lecture05.pdf

Grover, P. (2019, September 25). 5 Regression Loss Functions All Machine Learners Should Know. Retrieved from https://heartbeat.fritz.ai/5-regression-loss-functions-all-machine-learners-should-know-4fb140e9d4b0

TensorFlow. (n.d.). tf.keras.losses.logcosh. Retrieved from https://www.tensorflow.org/api_docs/python/tf/keras/losses/logcosh

ML Cheatsheet documentation. (n.d.). Loss Functions. Retrieved from https://ml-cheatsheet.readthedocs.io/en/latest/loss_functions.html

Peltarion. (n.d.). Categorical crossentropy. Retrieved from https://peltarion.com/knowledge-center/documentation/modeling-view/build-an-ai-model/loss-functions/categorical-crossentropy

Lin, J. (2019, September 17). categorical_crossentropy VS. sparse_categorical_crossentropy. Retrieved from https://jovianlin.io/cat-crossentropy-vs-sparse-cat-crossentropy/

Wikipedia. (2004, February 13). Kullback–Leibler divergence. Retrieved from https://en.wikipedia.org/wiki/Kullback%E2%80%93Leibler_divergence

Wikipedia. (2001, July 9). Entropy (information theory). Retrieved from https://en.wikipedia.org/wiki/Entropy_(information_theory)

Count Bayesie. (2017, May 10). Kullback-Leibler Divergence Explained. Retrieved from https://www.countbayesie.com/blog/2017/5/9/kullback-leibler-divergence-explained

The article contains a brief on various loss functions used in Neural networks.

What is a Loss function?

When you train Deep learning models, you feed data to the network, generate predictions, compare them with the actual values (the targets) and then compute what is known as a loss. This loss essentially tells you something about the performance of the network: the higher it is, the worse your network performs overall.

Loss functions are mainly classified into two different categories: classification loss and regression loss. Classification loss is used when the aim is to predict one of several categorical values. For example, if we have a dataset of images of handwritten digits and the digit (0–9) is to be predicted, classification loss is used.

Regression loss is used when the problem is to predict continuous values, for example when we need to predict weather conditions or the prices of houses on the basis of some features.

In this article, we will focus on the most widely used loss functions in Neural networks.

- Mean Absolute Error (L1 Loss)

- Mean Squared Error (L2 Loss)

- Huber Loss

- Cross-Entropy (a.k.a. Log loss)

- Relative Entropy (a.k.a. Kullback–Leibler divergence)

- Squared Hinge

Mean Absolute Error (MAE)

Mean absolute error (MAE), also called L1 Loss, is a loss function used for regression problems. It measures the difference between the original and predicted values by averaging the absolute differences over the data set.

MAE is not sensitive towards outliers, and given several examples with the same input feature values, the optimal prediction will be their median target value. This should be compared with Mean Squared Error, where the optimal prediction is the mean. A disadvantage of MAE is that the gradient magnitude does not depend on the error size, only on the sign of y - ŷ. As a result, the gradient magnitude will be large even when the error is small, which in turn can lead to convergence problems.

When to use it?

Use Mean absolute error when you are doing regression and don’t want outliers to play a big role. It can also be useful if you know that your distribution is multimodal, and it’s desirable to have predictions at one of the modes, rather than at the mean of them.

Example: When doing image reconstruction, MAE encourages less blurry images compared to MSE. This is used for example in the paper Image-to-Image Translation with Conditional Adversarial Networks by Isola et al.

Mean Squared Error (MSE)

Mean Squared Error (MSE), also called L2 Loss, is also a loss function used for regression. It measures the difference between the original and predicted values by averaging the squared differences over the data set.

MSE is sensitive towards outliers and given several examples with the same input feature values, the optimal prediction will be their mean target value. This should be compared with Mean Absolute Error, where the optimal prediction is the median. MSE is thus good to use if you believe that your target data, conditioned on the input, is normally distributed around a mean value, and when it’s important to penalize outliers extra much.
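The median versus mean claim can be checked on a toy example. The sketch below assumes five made-up target values that belong to identical inputs, one of them an outlier, and compares a constant prediction at the median with one at the mean; the helper names are chosen for this illustration.

```python
from statistics import mean, median


def mae(targets, prediction):
    return mean(abs(t - prediction) for t in targets)


def mse(targets, prediction):
    return mean((t - prediction) ** 2 for t in targets)


# Targets observed for identical inputs; 100 is an outlier.
targets = [1, 2, 3, 4, 100]
for name, guess in (("median", median(targets)), ("mean", mean(targets))):
    print(name, guess, mae(targets, guess), mse(targets, guess))
```

Predicting the median (3) gives the lower MAE, while predicting the mean (22) gives the lower MSE, and the mean is pulled far towards the outlier.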

When to use it?

Use MSE when doing regression, believing that your target, conditioned on the input, is normally distributed, and want large errors to be significantly (quadratically) more penalized than small ones.

Example: You want to predict future house prices. The price is a continuous value, and therefore we want to do regression. MSE can here be used as the loss function.

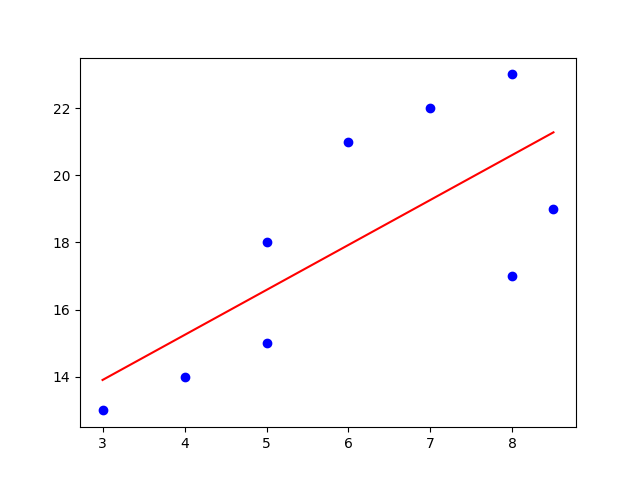

Calculate MAE and MSE using Python

The original target data is denoted by y and the predicted values by yhat (Ŷ); together they are the main inputs for evaluating the model.

import math

import numpy as np

import matplotlib.pyplot as plt

y = np.array([-3, -1, -2, 1, -1, 1, 2, 1, 3, 4, 3, 5])

yhat = np.array([-2, 1, -1, 0, -1, 1, 2, 2, 3, 3, 3, 5])

x = list(range(len(y)))

#We can visualize them in a plot to check the difference visually.

plt.figure(figsize=(9, 5))

plt.scatter(x, y, color="red", label="original")

plt.plot(x, yhat, color="green", label="predicted")

plt.legend()

plt.show()

# calculate MSE

d = y - yhat

mse_f = np.mean(d**2)

print("Mean square error:",mse_f)

Mean square error: 0.75

# calculate MAE

mae_f = np.mean(abs(d))

print("Mean absolute error:",mae_f)

Mean absolute error: 0.5833333333333334

Huber Loss

Huber Loss is typically used in regression problems. It’s less sensitive to outliers than the MSE as it treats error as square only inside an interval.

Consider an example where we have a dataset of 100 values we would like our model to be trained to predict. Out of all that data, 25% of the expected values are 5 while the other 75% are 10.

An MSE loss wouldn’t quite do the trick, since we don’t really have “outliers”; 25% is by no means a small fraction. On the other hand, we don’t necessarily want to weigh that 25% too low with an MAE. Those values of 5 aren’t close to the median (10 — since 75% of the points have a value of 10), but they’re also not really outliers.

This is where the Huber Loss Function comes into play.

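The Huber Loss offers the best of both worlds by balancing the MSE and MAE together. We can define it using the following piecewise function, where [latex]a[/latex] is the residual (the difference between target and prediction):

[latex]L_{\delta}(a) = \begin{cases} \frac{1}{2} a^2 & \text{for } |a| \leq \delta \\ \delta \left( |a| - \frac{1}{2} \delta \right) & \text{otherwise} \end{cases}[/latex]

Here, delta (𝛿) is a hyperparameter that defines the ranges in which the loss behaves like the MSE and like the MAE.

In simple terms: for residuals whose absolute value is less than delta, use the MSE; for residuals whose absolute value is greater than delta, use the MAE. This way Huber loss provides the best of both MAE and MSE.

Set delta to the value of the residual for the data points you trust.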

import numpy as np

import matplotlib.pyplot as plt

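def huber(a, delta):

    # Loss value: quadratic for small residuals, linear for large ones

    value = np.where(np.abs(a)<delta, .5*a**2, delta*(np.abs(a) - .5*delta))

    # Derivative: the residual itself inside the interval, +/- delta outside

    deriv = np.where(np.abs(a)<delta, a, np.sign(a)*delta)

    return value, deriv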

h, d = huber(np.arange(-1, 1, .01), delta=0.2)

fig, ax = plt.subplots(1)

ax.plot(h, label='loss value')

ax.plot(d, label='loss derivative')

ax.grid(True)

ax.legend()

In the above figure, you can see how the derivative is a constant for abs(a)>delta

In TensorFlow 2 and Keras, Huber loss can be added to the compile step of your model.

model.compile(loss=tensorflow.keras.losses.Huber(delta=1.5), optimizer='adam', metrics=['mean_absolute_error'])

When to use Huber Loss?

As we already know, Huber loss combines MAE and MSE. So when we think that outliers should not be given a higher weight, we set the loss function to Huber loss. The one thing we need to define manually is the delta (𝛿) value. Generally, some iterations with the respective algorithm are needed to find a good delta value.

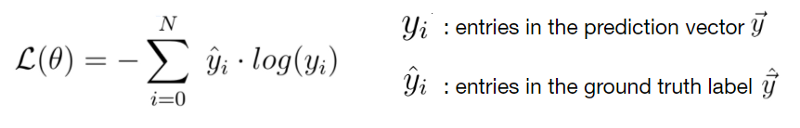

Cross-Entropy Loss(a.k.a Log loss)

The concept of cross-entropy traces back into the field of Information Theory where Claude Shannon introduced the concept of entropy in 1948. Before diving into the Cross-Entropy loss function, let us talk about Entropy.

Entropy has roots in physics — it is a measure of disorder, or unpredictability, in a system.

For instance, consider two gases in a box (figure below): initially, the system has low entropy, in that the two gases are completely separable (skewed distribution); after some time, however, the gases blend (distribution where events have equal probability), so the system’s entropy increases. It is said that in an isolated system, the entropy never decreases: the chaos never dims down without external influence.

Entropy

For a random variable X with probability distribution p(x), entropy is defined as follows:

[latex]H(X) = -\sum_{x} p(x) \log p(x)[/latex]

Reason for the negative sign: log(p(x)) < 0 for all p(x) in (0, 1). p(x) is a probability distribution and therefore its values must range between 0 and 1.

A plot of log(x). For x values between 0 and 1, log(x) <0 (is negative).
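As a small sketch of the definition, assuming the usual form of entropy as the negative sum of p(x) times log(p(x)) with the natural log, the following function computes it for a few simple distributions. The function name and the example distributions are chosen for this illustration.

```python
import math


def entropy(probabilities):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    # Outcomes with probability 0 contribute nothing, so they are skipped.
    return sum(-p * math.log(p) for p in probabilities if p > 0)


print(entropy([0.5, 0.5]))  # equal probabilities: highest entropy for two outcomes
print(entropy([0.9, 0.1]))  # skewed distribution: lower entropy
print(entropy([1.0, 0.0]))  # a certain outcome: zero entropy
```

Because every log(p) is negative or zero, the minus sign makes each term, and hence the entropy, non-negative.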

Cross-Entropy loss is also called logarithmic loss, log loss, or logistic loss. Each predicted class probability is compared to the actual class desired output 0 or 1 and a score/loss is calculated that penalizes the probability based on how far it is from the actual expected value. The penalty is logarithmic in nature yielding a large score for large differences close to 1 and small score for small differences tending to 0.

Cross-Entropy is expressed by the equation:

[latex]H(p, q) = -\sum_{x} p(x) \log q(x)[/latex]

Where x ranges over the possible outcomes (the classes), p(x) is the probability distribution of the “true” label from the training samples and q(x) is the distribution estimated by the ML algorithm.

Cross-entropy loss measures the performance of a classification model whose output is a probability value between 0 and 1. Cross-entropy loss increases as the predicted probability diverges from the actual label. So predicting a probability of .012 when the actual observation label is 1 would be bad and result in a high loss value. A perfect model would have a log loss of 0.

The graph above shows the range of possible loss values given a true observation. As the predicted probability approaches 1, log loss slowly decreases. As the predicted probability decreases, however, the log loss increases rapidly. Log loss penalizes both types of errors, but especially those predictions that are confident and wrong!

Note that the cross-entropy method, a Monte Carlo technique for importance sampling and optimization, is a different concept that merely shares the name.

Binary Cross-Entropy

Binary cross-entropy is a loss function that is used in binary classification tasks. These are tasks that answer a question with only two choices (yes or no, A or B, 0 or 1, left or right).

In binary classification, where the number of classes M equals 2, cross-entropy can be calculated as:

[latex]-\left( y \log(p) + (1 - y) \log(1 - p) \right)[/latex]

Sigmoid is the only activation function compatible with the binary cross-entropy loss function. You must use it on the last block before the target block.

The binary cross-entropy needs to compute the logarithms of Ŷi and (1-Ŷi), where Ŷi is the predicted probability (p in the formula above). These logarithms only exist if Ŷi is between 0 and 1. The sigmoid activation function guarantees that the output is within this range.
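Why the sigmoid matters here can be sketched as follows: it squashes any raw model output into a value between 0 and 1, so both logarithms exist. The function names and the raw outputs -3, 0 and 3 are assumptions made for this illustration; for raw outputs of very large magnitude, a numerically safer formulation would be needed.

```python
import math


def sigmoid(z):
    """Squash a raw model output z into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def binary_crossentropy(y, y_hat):
    # Both logarithms exist because y_hat lies strictly between 0 and 1.
    return -(y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat))


for z in (-3.0, 0.0, 3.0):
    y_hat = sigmoid(z)
    print(z, y_hat, binary_crossentropy(1, y_hat))
```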

Categorical Cross-Entropy

Categorical cross-entropy is a loss function that is used in multi-class classification tasks. These are tasks where an example can only belong to one out of many possible categories, and the model must decide which one.

Formally, it is designed to quantify the difference between two probability distributions.

If 𝑀>2 (i.e. multiclass classification), we calculate a separate loss for each class label per observation and sum the result:

[latex]-\sum_{c=1}^{M} y_{o,c} \log(p_{o,c})[/latex]

- M: number of classes (dog, cat, fish)

- log: the natural log

- y: binary indicator (0 or 1) if class label c is the correct classification for observation o

- p: predicted probability that observation o is of class c

Softmax is the only activation function recommended to use with the categorical cross-entropy loss function.

Strictly speaking, the output of the model only needs to be positive so that the logarithm of every output value Ŷi exists. However, the main appeal of this loss function is for comparing two probability distributions. The softmax activation rescales the model output so that it has the right properties.
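The rescaling described above can be sketched as follows. This is an illustrative, pure-Python version under the assumption of a single example with three classes; `softmax` and `categorical_cross_entropy` are hypothetical helper names, not a library API.

```python
from math import exp, log

def softmax(scores):
    """Turn arbitrary real scores into positive values that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_cross_entropy(y_one_hot, probs):
    """Cross-entropy between a one-hot target and predicted probabilities."""
    return -sum(y * log(p) for y, p in zip(y_one_hot, probs))

# Hypothetical raw model outputs for one example; the true class is the first one
scores = [2.0, 1.0, 0.1]
target = [1, 0, 0]

probs = softmax(scores)
loss = categorical_cross_entropy(target, probs)
print('probabilities:', ['%.3f' % p for p in probs])
print('categorical cross-entropy: %.3f' % loss)
```

The softmax output is strictly positive and sums to 1, so it can be compared with the one-hot target as a probability distribution.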

Sparse Categorical Cross-Entropy

Sparse categorical cross-entropy computes the same loss as the categorical cross-entropy described above. The only difference is the format in which the true labels 𝑌𝑖 are supplied.

If your Yi’s are one-hot encoded, use categorical_crossentropy. Examples for a 3-class classification: [1,0,0], [0,1,0], [0,0,1]

But if your Yi’s are integers, use sparse_categorical_crossentropy. Examples for the same 3-class classification problem, using zero-based class indices: [0], [1], [2]

The choice depends entirely on how you load your dataset. One advantage of sparse categorical cross-entropy is that it saves memory as well as computation time, because it uses a single integer per class rather than a whole vector.
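To make the equivalence concrete, here is a small sketch, assuming a single example with three classes and zero-based integer labels. The two helper functions are hypothetical stand-ins written for illustration; they are not the Keras implementations.

```python
from math import log

def categorical_cross_entropy(one_hot, probs):
    """Loss when the label is a one-hot vector."""
    return -sum(y * log(p) for y, p in zip(one_hot, probs))

def sparse_categorical_cross_entropy(class_index, probs):
    """Loss when the label is a single integer class index."""
    return -log(probs[class_index])

# Hypothetical predicted probabilities for one example
probs = [0.7, 0.2, 0.1]

one_hot_label = [0, 1, 0]  # class 1, one-hot encoded
integer_label = 1          # the same class as an integer

dense_loss = categorical_cross_entropy(one_hot_label, probs)
sparse_loss = sparse_categorical_cross_entropy(integer_label, probs)
print('one-hot label loss: %.3f' % dense_loss)
print('integer label loss: %.3f' % sparse_loss)
```

Both calls pick out the log-probability of the true class, so the two losses agree; only the label format differs.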

Calculate Cross-Entropy Between Class Labels and Probabilities

Cross-entropy used for classification is often given different specific names depending on the number of classes.

Consider a two-class classification task with the following 10 actual class labels (P) and predicted probabilities of the positive class (Q).

# calculate cross entropy for a classification problem
from math import log
from numpy import mean

# cross entropy between a target distribution p and a predicted distribution q
def cross_entropy_funct(p, q):
    return -sum(p[i] * log(q[i]) for i in range(len(p)))

# define classification data: true labels p and predicted probabilities q
p = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
q = [0.7, 0.9, 0.8, 0.8, 0.6, 0.2, 0.1, 0.4, 0.1, 0.3]

# calculate cross entropy for each example
results = list()
for i in range(len(p)):
    # create the distribution for each event {0, 1}
    expected = [1.0 - p[i], p[i]]
    predicted = [1.0 - q[i], q[i]]
    # calculate cross entropy for the two events
    cross = cross_entropy_funct(expected, predicted)
    print('>[y=%.1f, yhat=%.1f] cross entropy: %.3f' % (p[i], q[i], cross))
    results.append(cross)

# calculate the average cross entropy
mean_cross_entropy = mean(results)
print('\nAverage Cross Entropy: %.3f' % mean_cross_entropy)

Running the example prints the actual label and predicted probability for each example, along with its cross-entropy. The final average cross-entropy loss across all examples is reported, in this case, as 0.272.

>[y=1.0, yhat=0.7] cross entropy: 0.357
>[y=1.0, yhat=0.9] cross entropy: 0.105
>[y=1.0, yhat=0.8] cross entropy: 0.223
>[y=1.0, yhat=0.8] cross entropy: 0.223
>[y=1.0, yhat=0.6] cross entropy: 0.511
>[y=0.0, yhat=0.2] cross entropy: 0.223
>[y=0.0, yhat=0.1] cross entropy: 0.105
>[y=0.0, yhat=0.4] cross entropy: 0.511
>[y=0.0, yhat=0.1] cross entropy: 0.105
>[y=0.0, yhat=0.3] cross entropy: 0.357

Average Cross Entropy: 0.272

Relative Entropy (Kullback–Leibler divergence)

Relative entropy (also called Kullback–Leibler divergence) is a method for measuring how much one probability distribution differs from another. It was introduced by Solomon Kullback and Richard Leibler in 1951. KL divergence aims to quantify the divergence (separation) of a probability distribution from a baseline distribution. That is, for a target distribution, P, we compare a competing distribution, Q, by computing the expected value, under P, of the log-ratio of the two distributions:

$$D_{KL}(P \parallel Q) = \mathbb{E}_{x \sim P}\left[\log \frac{P(x)}{Q(x)}\right]$$

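For distributions P and Q of a continuous random variable, with densities p(x) and q(x), the Kullback-Leibler divergence is computed as an integral:

$$D_{KL}(P \parallel Q) = \int_{-\infty}^{\infty} p(x) \log \frac{p(x)}{q(x)}\,dx$$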

If P and Q represent the probability distribution of a discrete random variable, the Kullback-Leibler divergence is calculated as a summation:

$$D_{KL}(P \parallel Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}$$

Also, with a little bit of work, we can show that the KL divergence is non-negative. This means that the smallest possible value is zero (the distributions are equal) and that there is no upper bound. The divergence becomes infinite when P assigns positive probability to a region where Q is zero. Therefore, it is a common assumption that both distributions are defined on the same support.
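These three cases (zero, positive, infinite) can be checked with a small sketch. The function below is a hypothetical pure-Python helper written for illustration; it assumes the usual convention that a term with P(x) = 0 contributes nothing to the sum.

```python
from math import log, inf

def kl_divergence(p, q):
    """Discrete KL(P || Q) in nats, with explicit handling of zero probabilities."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue    # by convention, 0 * log(0 / q) contributes 0
        if qi == 0:
            return inf  # P has mass where Q has none
        total += pi * log(pi / qi)
    return total

# Hypothetical distributions over three events
p = [0.5, 0.5, 0.0]
q = [0.25, 0.25, 0.5]
r = [1.0, 0.0, 0.0]

kl_pp = kl_divergence(p, p)  # identical distributions
kl_pq = kl_divergence(p, q)  # different distributions, q covers the support of p
kl_pr = kl_divergence(p, r)  # p puts mass on an event that r rules out
print('KL(P || P):', kl_pp)
print('KL(P || Q): %.3f' % kl_pq)
print('KL(P || R):', kl_pr)
```

The last case is why both distributions are usually assumed to share the same support.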

The closer two distributions get to each other, the lower the loss becomes. In the following graph, the blue distribution is trying to model the green distribution. As the blue distribution comes closer and closer to the green one, the KL divergence loss will get closer to zero.

The lower the KL divergence value, the better we have matched the true distribution with our approximation.

Comparison of Blue and green distribution

The applications of KL divergence:

- Primarily, it is used in variational autoencoders. These autoencoders learn to encode samples into a latent probability distribution, and from this latent distribution a sample can be drawn that can be fed to a decoder which outputs e.g. an image.

- KL divergence can also be used in multiclass classification scenarios. These problems, which traditionally use the Softmax function and use one-hot encoded target data, are naturally suitable to KL divergence since Softmax “normalizes data into a probability distribution consisting of K probabilities proportional to the exponentials of the input numbers”

- Delineating the relative (Shannon) entropy in information systems.

- Randomness in continuous time-series.
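The multiclass classification point above can be checked numerically: for a one-hot target the target's own entropy is zero, so KL divergence and cross-entropy give the same number. The sketch below assumes one example with three classes; the helper names and values are illustrative only.

```python
from math import log

def cross_entropy(target, predicted):
    """Cross-entropy H(target, predicted) in nats, skipping zero-probability targets."""
    return -sum(t * log(q) for t, q in zip(target, predicted) if t > 0)

def kl_divergence(target, predicted):
    """KL(target || predicted) in nats, skipping zero-probability targets."""
    return sum(t * log(t / q) for t, q in zip(target, predicted) if t > 0)

# Hypothetical one-hot target and softmax-style prediction
target = [0.0, 1.0, 0.0]
predicted = [0.2, 0.7, 0.1]

ce = cross_entropy(target, predicted)
kl = kl_divergence(target, predicted)
print('cross-entropy: %.3f' % ce)
print('KL divergence: %.3f' % kl)
```

With soft (non one-hot) targets the two values differ by the entropy of the target distribution, which does not depend on the model.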

Calculate KL-Divergence using Python

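Consider a random variable with six events, each a different color. We may have two different probability distributions for this variable, for example the p and q below. Note that these example values do not sum to 1, so strictly speaking they are unnormalized weights rather than probability distributions; divide each list by its sum to obtain valid probabilities.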

import numpy as np
import matplotlib.pyplot as plt

events = ['red', 'green', 'blue', 'black', 'yellow', 'orange']
p = [0.10, 0.30, 0.05, 0.90, 0.65, 0.21]
q = [0.70, 0.55, 0.15, 0.04, 0.25, 0.45]

Plot a bar chart for each distribution, allowing the values for each event to be directly compared.

# plot first distribution
plt.figure(figsize=(9, 5))
plt.subplot(2, 1, 1)
plt.bar(events, p, color='green', align='center')

# plot second distribution
plt.subplot(2, 1, 2)
plt.bar(events, q, color='green', align='center')

# show the plot
plt.show()

We can see that indeed the distributions are different.

Next, we can develop a function to calculate the KL divergence between the two distributions.

def kl_divergence(p, q):
    # sum of p(x) * ln(p(x) / q(x)); np.log is the natural log, so the result is in nats
    return sum(p[i] * np.log(p[i] / q[i]) for i in range(len(p)))

# calculate (P || Q)
kl_pq = kl_divergence(p, q)
print('KL(P || Q): %.3f nats' % kl_pq)

# calculate (Q || P)
kl_qp = kl_divergence(q, p)
print('KL(Q || P): %.3f nats' % kl_qp)

Running this prints:

KL(P || Q): 2.832 nats
KL(Q || P): 1.840 nats

We can also calculate the KL divergence using the rel_entr() SciPy function and confirm that our manual calculation is correct.

The rel_entr() function takes the probabilities across all events from each distribution as arguments and returns the element-wise divergence terms, one per event. These can be summed to give the KL divergence.

from scipy.special import rel_entr

print("Using Scipy rel_entr function")
bo_1 = np.array(p)
bo_2 = np.array(q)

# rel_entr uses the natural log, so the summed result is in nats
print('KL(P || Q): %.3f nats' % sum(rel_entr(bo_1, bo_2)))
print('KL(Q || P): %.3f nats' % sum(rel_entr(bo_2, bo_1)))

Running this prints:

Using Scipy rel_entr function
KL(P || Q): 2.832 nats
KL(Q || P): 1.840 nats
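The values printed above are computed with the natural logarithm on vectors that do not sum to 1. As a hedged variant, the sketch below first normalizes each vector and uses the base-2 logarithm, so the result is a KL divergence in bits between valid probability distributions. The helper names `normalize` and `kl_divergence_bits` are illustrative assumptions and not part of the original example.

```python
from math import log2

def normalize(weights):
    """Scale non-negative weights so that they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]

def kl_divergence_bits(p, q):
    """Discrete KL(P || Q) in bits (base-2 logarithm)."""
    return sum(pi * log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Same raw values as in the example above
p_raw = [0.10, 0.30, 0.05, 0.90, 0.65, 0.21]
q_raw = [0.70, 0.55, 0.15, 0.04, 0.25, 0.45]

p_norm = normalize(p_raw)
q_norm = normalize(q_raw)

kl_pq_bits = kl_divergence_bits(p_norm, q_norm)
kl_qp_bits = kl_divergence_bits(q_norm, p_norm)
print('KL(P || Q): %.3f bits' % kl_pq_bits)
print('KL(Q || P): %.3f bits' % kl_qp_bits)
```

The two directions still give different values, which reflects that KL divergence is not symmetric.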

Let us see how KL divergence can be used with Keras. It is simple: specify it as the loss function during the model compilation step:

# Compile the model
model.compile(loss=keras.losses.kullback_leibler_divergence, optimizer=keras.optimizers.Adam(), metrics=['accuracy'])

Squared Hinge

The squared hinge loss is a loss function used for “maximum margin” binary classification problems. Mathematically it is defined as:

$$L(y, \hat{y}) = \max(0,\ 1 - y \cdot \hat{y})^2$$

where ŷ is the predicted value and y is either 1 or -1.

Thus, the squared hinge loss is 0 when the true and predicted labels have the same sign and y·ŷ ≥ 1 (which is an indication that the classifier is sure that it’s the correct label).

The squared hinge loss increases quadratically with the error when the true and predicted labels are not the same, and also when y·ŷ < 1 even though the labels are the same (which is an indication that the classifier is not sure that it’s the correct label).

Compared with the traditional hinge loss (used in SVMs), larger errors are punished more heavily, whereas smaller errors (where the hinge term is below 1) are punished slightly less.
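A short sketch comparing the two losses for a positive example (y = 1) at several predicted values. The function names and the chosen predictions are illustrative assumptions.

```python
def hinge(y, y_hat):
    """Hinge loss for a label y in {-1, 1} and a real-valued prediction."""
    return max(0.0, 1.0 - y * y_hat)

def squared_hinge(y, y_hat):
    """Squared hinge loss: the hinge loss squared."""
    return hinge(y, y_hat) ** 2

# Hypothetical predictions for an example whose true label is +1
y = 1
predictions = [2.0, 1.0, 0.5, 0.0, -1.0, -2.0]

rows = [(y_hat, hinge(y, y_hat), squared_hinge(y, y_hat)) for y_hat in predictions]
for y_hat, h, sh in rows:
    print('y_hat=%5.1f  hinge=%.2f  squared hinge=%.2f' % (y_hat, h, sh))
```

For a prediction of 0.5 the hinge term is 0.5 and squaring reduces it to 0.25, while for a prediction of -1.0 the hinge term is 2.0 and squaring raises it to 4.0.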

Comparison between Hinge and Squared hinge loss

When to use Squared Hinge?

Use the squared hinge loss function on problems involving yes/no (binary) decisions, especially when you’re not interested in knowing how certain the classifier is about the classification, that is, when you don’t care about the classification probabilities. Use it in combination with the tanh() activation function in the last layer of the neural network, so that the outputs lie between -1 and 1.

A typical application can be classifying email into ‘spam’ and ‘not spam’ and you’re only interested in the classification accuracy.

Let us see how squared hinge can be used with Keras. It is simple: specify it as the loss function during the model compilation step:

# Compile the model (assumes `model` is an already-built Keras model)
import tensorflow

model.compile(loss='squared_hinge', optimizer=tensorflow.keras.optimizers.Adam(learning_rate=0.03), metrics=['accuracy'])


An in-depth explanation for widely used regression loss functions like mean squared error, mean absolute error, and Huber loss.

Loss function in supervised machine learning is like a compass that gives algorithms a sense of direction while learning parameters or weights.

This blog explains the what, why, how, and when of loss functions, including the mathematical intuition behind them. So without wasting further time, let’s dive into the concepts.

What is Loss Function?

Every supervised learning algorithm is trained to make predictions. These predictions should be as close as possible to the label (ground-truth) values. The loss function measures how near or far the predicted values are from the original label values.

Loss functions are also referred to as error functions, as they give an idea of the prediction error.

Loss functions, in simple terms, are equations that give the error between the actual value and the predicted value.

The simplest approach would be to use the difference between actual and predicted values as the error, but academics, researchers, and engineers don’t use it in practice.

For example, in linear regression the squared error is used as the loss function to determine how well the algorithm fits your data.

But why not just use the difference as the error function?

The intuition is that if you take just the difference as the error, its sign distorts the overall result. For example, if one data point has an error of 2 and another has an error of -2, the errors cancel and the overall error is zero, even though both predictions are off.

Another option is to take the unsigned difference instead of the signed one, i.e. the modulus |x|. The unsigned difference is called the absolute error. This works in some cases but not always.
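The cancellation described above is easy to reproduce. The sketch below uses two made-up data points whose errors are 2 and -2; the variable names and values are illustrative only.

```python
# Hypothetical actual and predicted values for two data points
actual = [10.0, 20.0]
predicted = [12.0, 18.0]

# Signed errors: +2 for the first point, -2 for the second
errors = [p - a for a, p in zip(actual, predicted)]

mean_signed_error = sum(errors) / len(errors)
mean_absolute_error = sum(abs(e) for e in errors) / len(errors)
mean_squared_error = sum(e * e for e in errors) / len(errors)

print('mean signed error:   %.1f' % mean_signed_error)
print('mean absolute error: %.1f' % mean_absolute_error)
print('mean squared error:  %.1f' % mean_squared_error)
```

The signed errors average to zero even though both predictions miss by 2, while the absolute and squared versions report a non-zero error.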

But again, why can’t we use the absolute error for all cases? Why do we have different types of loss functions? To answer those questions, let’s dive into the types of loss functions and the what, why, how, and when of each.

Types of loss functions

Researchers have studied loss functions for years in search of a perfect loss function that fits all problems.

During this process, many loss functions have emerged. Broadly, loss functions can be classified into two types.

- Loss Functions for Regression Tasks

- Loss Functions for Classification Tasks

In this article, we will focus on loss functions for regression algorithms and will cover classification algorithms in another article.

Loss Functions for Regression

We will discuss the widely used loss functions for regression algorithms to get a good understanding of loss function concepts.

Algorithms like linear regression, decision trees, and neural networks mostly use the functions below for regression problems.

- Mean Squared Loss (Error)

- Mean Absolute Loss (Error)

- Huber Loss

Mean Squared Error

Mean squared error (MSE) is computed by taking the actual and predicted values as inputs and returning the mean of their squared differences, via the equation below (mean squared error equation).